Abstract

In smart cities, large numbers of optical cameras are deployed and used; closed-circuit television (CCTV) cameras, unmanned aerial vehicles (UAVs), and smartphones are some examples. However, additional information about these devices, such as their 3D position, orientation, and principal distance, is often not provided. To address this problem, this study used a structured mobile mapping system (MMS) point cloud to investigate methods for estimating the principal distance, position, and orientation of optical sensors without given initial values. The principal distance was calculated using two direct linear transformation (DLT) models and a perspective projection model. Methods for estimating position and orientation were discussed, and their stability was tested using real-world sensors. The perspective projection model gave the best estimates of camera position and orientation, whereas the original DLT model produced a significant error in orientation estimation; the correlation between the DLT model parameters is thought to have influenced this result. With the perspective projection model, the position and orientation errors were 0.80 m and 2.55°, respectively. However, for a fixed-wing UAV, proper estimates could not be obtained because of problems with ground control point placement.

1. Introduction

Cities are increasingly being transformed into smart cities to increase their survivability and improve their residents' quality of life. The goals of smart cities are achieved by using various sensors to collect and analyze data [1]. Cameras are one example of the optical sensors used in smart cities, where they support real-time tasks such as traffic control, public safety, and disaster response [2,3]. For example, closed-circuit television (CCTV) cameras are already installed in many cities and play an important role. In Korea, the number of installed CCTV cameras is steadily increasing for purposes such as crime prevention and disaster monitoring.

However, little attention has been paid to the precise three-dimensional position of optical sensors. For CCTV, only approximate position information is provided: latitude and longitude can be checked, but orientation information is unavailable [4]. Furthermore, the cameras used in smart cities are not standardized, so detailed camera information is not easily accessible. Determining the specifications and locations of these numerous sensors takes time, incurs administrative costs, and is impossible without the cooperation of various organizations. In addition, the public officials in charge tend to underestimate the significance of camera information. In summary, the interior orientation parameters (IOPs) and exterior orientation parameters (EOPs), which are the most important information about a sensor, are difficult to obtain.

Various photogrammetric methods can be used to extract EOPs from cameras. Single-photo resection (SPR), based on the collinearity equation, is the most representative EOP estimation algorithm. In SPR, a solution is obtained by iterative adjustment using three or more control points, and several studies have aimed to improve its efficiency [5,6,7]. However, because SPR is sensitive to the initial EOP values, it is difficult to use when those values are not available [8,9]. A quaternion-based SPR algorithm was proposed to solve the initial-value problem and the gimbal lock phenomenon [8]; however, it can use only ground control points (GCPs) that meet certain criteria [10], which is a limitation. SPR algorithms based on the law of cosines [11] and the Procrustes algorithm [12,13,14] have also been proposed to estimate camera EOPs. The PnP algorithm, proposed by Fischler and Bolles, estimates the position and orientation of a camera from correspondences between 3D object points and 2D image points [15]. It estimates the camera EOPs with a perspective projection model and is used in a variety of fields, including indoor positioning and robotics [16,17,18]. Nonetheless, these methods can be used only when the camera's interior orientation is known. The objective of this study is to develop a method for estimating the camera's IOPs (especially the focal length) and EOPs in situations where the vendor has not performed camera calibration and new calibration is difficult to perform.

The direct linear transformation (DLT) model can be used to estimate the camera's IOPs and EOPs simultaneously. Seedahmed and Schenk compared the DLT model with the collinearity model and the perspective model [19]; in this approach, the EOPs are expressed in terms of the DLT parameters. The least-squares solution (LESS) is commonly used to estimate the DLT parameters [20]. Because it estimates the camera's IOPs/EOPs with a simple formula, this method is widely used in photogrammetry and computer vision [21,22]. However, the accuracy of the estimated IOPs/EOPs is inferior to that of physical models such as the collinearity or coplanarity equations [23].

EOP estimation using the perspective projection model also produces good results. For example, the perspective projection model has been used to estimate radial distortion values, principal distances, and EOPs [24,25,26,27], with solutions obtained using Gröbner bases or the Sylvester matrix.

Several algorithms have been developed to estimate camera EOPs and to calibrate cameras. Table 1 summarizes the features of each algorithm and whether it requires initial values. Algorithms that produce results without prior camera information are useful for extracting position and camera parameter information from the many optical sensors in urban areas. Although these algorithms perform well, few studies have examined the reliability of their results or compared their performance.

Table 1.

Characteristics of each camera position and orientation estimation algorithm.

Some studies have also estimated the positions of various sensors using deep learning [28,29,30,31], but from a surveying standpoint, it is difficult to accept such estimates as close to the true values.

Although many such studies have been conducted, they have focused on theory rather than on application to actual data. Moreover, to the best of our knowledge, no study has analyzed these algorithms using the same data. This study performed absolute position and orientation estimation, as well as camera calibration, for cameras with no initial information. We proposed and compared standardized algorithms that can be applied without initial information to a variety of camera sensors, such as smartphones, drones, and CCTV. This study focuses on estimating IOP (principal distance) and EOP information. The main objectives were:

- Investigation and comparative analysis of IOP/EOP estimation models;

- Stability and accuracy analysis of IOP/EOP estimation models;

- Analysis of estimation results using practical optical sensor data.

2. Methodology

2.1. Camera Geometric Model

2.1.1. DLT Model

A DLT model relates points in 3D object space to points on the 2D image plane through a set of parameters. Because of their simplicity and low computational cost, DLT models are widely used in close-range photogrammetry, computer vision, and robotics. The mathematical model relating object space and image space in homogeneous coordinates is given as

where x and y are the image coordinates; X, Y, and Z are the object space coordinates; and Ln are the DLT parameters. This model can be written as follows:

At least six well-distributed GCPs are required to calculate the DLT parameters in Equation (2), because each GCP provides two equations. The best-fitting DLT parameters can be determined by LESS. Lens distortion parameters can also be incorporated into the DLT model using Equation (3) [32]:

The DLT parameters can be obtained under a variety of constraints. The most common DLT condition is (ordinary DLT, ODLT). A norm criterion (, norm criterion DLT, NDLT) has also been presented [33].
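The linear estimation described above can be sketched as follows. This is a minimal sketch that assumes the common 12-parameter form x = (L1X + L2Y + L3Z + L4)/(L9X + L10Y + L11Z + L12) and y = (L5X + L6Y + L7Z + L8)/(L9X + L10Y + L11Z + L12), with each GCP contributing two linear equations. Two normalizations are shown for illustration:

- fixing L12 = 1 and solving by ordinary least squares;
- constraining the parameter vector to unit norm and solving by SVD.

Whether these match the exact ODLT and NDLT conditions of [33] is an assumption, and all function names are hypothetical.

```python
import numpy as np

def dlt_design_matrix(XYZ, xy):
    """Two rows per GCP so that A @ L = 0, with L = [L1, ..., L12] and
    x = (L1 X + L2 Y + L3 Z + L4) / (L9 X + L10 Y + L11 Z + L12),
    y = (L5 X + L6 Y + L7 Z + L8) / (L9 X + L10 Y + L11 Z + L12)."""
    rows = []
    for (X, Y, Z), (x, y) in zip(XYZ, xy):
        rows.append([X, Y, Z, 1, 0, 0, 0, 0, -x * X, -x * Y, -x * Z, -x])
        rows.append([0, 0, 0, 0, X, Y, Z, 1, -y * X, -y * Y, -y * Z, -y])
    return np.asarray(rows, dtype=float)

def dlt_fix_last(XYZ, xy):
    """Constraint L12 = 1: solve the other 11 parameters by ordinary least squares."""
    A = dlt_design_matrix(XYZ, xy)
    L, *_ = np.linalg.lstsq(A[:, :11], -A[:, 11], rcond=None)
    return np.append(L, 1.0).reshape(3, 4)

def dlt_unit_norm(XYZ, xy):
    """Constraint ||L|| = 1: right singular vector with the smallest singular value."""
    A = dlt_design_matrix(XYZ, xy)
    _, _, Vt = np.linalg.svd(A)
    return Vt[-1].reshape(3, 4)

def project(P, XYZ):
    """Project 3D points with a 3x4 matrix and dehomogenise to (x, y)."""
    Xh = np.hstack([np.asarray(XYZ, float), np.ones((len(XYZ), 1))])
    h = Xh @ P.T
    return h[:, :2] / h[:, 2:3]
```

With noise-free synthetic GCPs, both variants recover the same matrix up to scale. With real measurements, the choice of constraint changes the solution, which is one reason DLT variants can give different results.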

2.1.2. Perspective Projection Model

Equation (4) is a perspective projection camera model expressed by a homogeneous vector:

where is the 4 × 1 world point homogeneous vector (), is the 3 × 1 image point homogeneous vector (), and is the 3 × 4 homogeneous camera projection matrix. Principal point offset, pixel ratio, and skew can be applied using Equation (5):

where represents the coordinates of the principal point; are the pixel ratios; and s is the skew parameter [20].

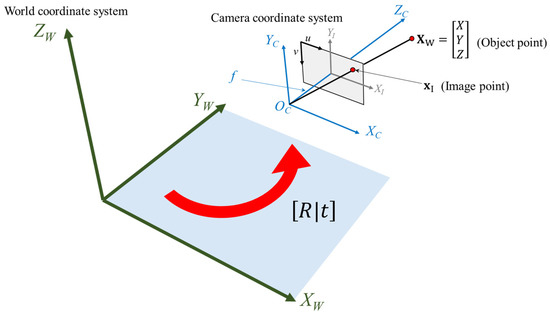

Figure 1 shows how points are transformed between the two coordinate systems (the world coordinate system and the camera coordinate system) by rotation and translation. The geometric camera model including camera rotation and translation is as follows [20]:

Figure 1.

World coordinate system and perspective projection camera coordinate system.
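The camera model can be assembled and applied as in the minimal sketch below. It assumes the common form x ≃ K R [I | −C] X, where C is the camera position. It also uses the computer-vision convention in which the camera z axis points toward the scene; a photogrammetric convention with the camera looking along −z would change signs. The rotation matrix is taken as given, since its angle convention is not specified here. The helper names and the pixel-ratio parameterization of K are hypothetical.

```python
import numpy as np

def calibration_matrix(f, x0=0.0, y0=0.0, ax=1.0, ay=1.0, s=0.0):
    """Upper-triangular K from principal distance f (pixels), principal point
    (x0, y0), pixel-ratio factors (ax, ay) and skew s."""
    return np.array([[f * ax, s, x0],
                     [0.0, f * ay, y0],
                     [0.0, 0.0, 1.0]])

def projection_matrix(K, R, C):
    """P = K R [I | -C]: translate world points to the camera position,
    rotate them into the camera frame, then apply the calibration matrix."""
    C = np.asarray(C, float).reshape(3, 1)
    return K @ R @ np.hstack([np.eye(3), -C])

def project(P, XYZ):
    """Project 3D points with a 3x4 matrix and dehomogenise to pixels."""
    Xh = np.hstack([np.asarray(XYZ, float), np.ones((len(XYZ), 1))])
    h = Xh @ P.T
    return h[:, :2] / h[:, 2:3]
```

A point on the optical axis, X = C + Rᵀ[0, 0, d]ᵀ for any d > 0, projects onto the principal point. This gives a quick sanity check of the sign and order conventions.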

2.2. Absolute Position/Orientation Estimation and Calibration Using Camera Models

2.2.1. DLT Model

The camera position and parameters can be calculated using the DLT model [19]. Equations (7) and (8) show the DLT and perspective projection models, respectively.

where is the homogeneous image coordinates vector, is the calibration matrix, is the rotation matrix, is the camera position vector, is the homogeneous object point vector, and is the identity matrix.

From Equations (7) and (8), Equation (9) can be derived:

Equation (9) can be rewritten as follows:

On the basis of Equations (9) and (10), the camera position and rotation matrix can be computed as follows:

Here, matrix represents the camera position, and matrix represents the camera orientation. The camera calibration matrix K can then be obtained from Equation (10) by Cholesky factorization:
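One way to carry out this decomposition is sketched below. It assumes P ≃ K R [I | −C], with K upper triangular and positive on its diagonal, and proceeds in three steps:

- The camera position is the null vector of P, so M C + p4 = 0, where M is the left 3 × 3 block and p4 the last column.
- Because M Mᵀ is proportional to K Kᵀ, a Cholesky factorization of the row- and column-flipped matrix yields an upper-triangular K.
- R then follows as K⁻¹ M.

This is an illustrative sketch, not necessarily the exact form of the equations above, and the function name is hypothetical.

```python
import numpy as np

def decompose_projection(P):
    """Split a 3x4 projection matrix P ~ K R [I | -C] into K, R and C."""
    M, p4 = P[:, :3], P[:, 3]
    C = -np.linalg.solve(M, p4)               # camera position: M C + p4 = 0
    J = np.eye(3)[::-1]                        # exchange (flip) matrix, J @ J = I
    # M M^T = s^2 K K^T. Flipping rows and columns turns the upper-triangular
    # factor into a lower-triangular one, which np.linalg.cholesky returns.
    Lc = np.linalg.cholesky(J @ (M @ M.T) @ J)
    K = J @ Lc @ J                             # flip back: upper triangular, positive diagonal
    R = np.linalg.solve(K, M)                  # R = K^-1 M, still carrying the sign of s
    if np.linalg.det(R) < 0:                   # P is defined only up to a (possibly negative) scale
        R = -R
    K = K / K[2, 2]                            # fix the scale so that K[2, 2] = 1
    return K, R, C
```

K[0, 0] then gives the principal distance in pixels. The determinant check is needed because P is known only up to an arbitrary, possibly negative, scale factor.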

2.2.2. Perspective Projection Model

Equation (14) is the geometric model of the perspective projection:

where denotes the coordinates of the ith image point and denotes those of the ith world point. We assume a typical camera (skew parameter ≈ 0 and pixel ratio ≈ 1). The camera calibration matrix K then becomes for . With these assumptions, Equation (14) can be written as follows:

The relationship between undistorted and distorted image coordinates is expressed by Equation (16), following Fitzgibbon's division model of radial distortion [34].

where k is the radial distortion parameter, is an undistorted image point, is a distorted image point, and is the radius of measured from the distortion center. The image point can then be written as follows:

In this step, we will use the properties of the skew-symmetric matrix. The skew-symmetric matrix of vector is defined as:

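Because the homogeneous image point is parallel to the projected world point, their cross product vanishes; using this property of the skew-symmetric matrix, can be obtained as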

The third row of Equation (19) can be rewritten as follows:

The seven parameters, , are unknown. With seven GCPs, the seven equations can be expressed in matrix form as

By decomposing the matrix through SVD, the last column of matrix can be selected as the solution. Because the norm of this solution is fixed at 1, a scale factor is needed to obtain the actual solution. Equation (22) shows the solution vector taken from the last column vector of matrix .

Because are elements of the rotation matrix, Equations (23)–(25) hold:

Using Equations (22) and (25), the elements of the first two rows of matrix P, that is, two-thirds of its entries, can be obtained.
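The linear step above can be sketched as follows. The sketch assumes image coordinates measured from the principal point (taken as the distortion center), zero skew, and unit pixel ratio. Each GCP contributes one equation from the third row of the cross-product constraint, x(p2·X) − y(p1·X) = 0, which does not contain the third homogeneous image coordinate. The unknown 8-vector [p1, p2] has seven degrees of freedom, matching the seven GCPs. The SVD null vector is then rescaled so that the rotation part of the first row has unit norm. Function and variable names are hypothetical.

```python
import numpy as np

def first_two_rows(XYZ, xy):
    """Estimate the first two rows of P (up to a common sign) from n >= 7 GCPs.

    Each GCP gives one equation from the third row of [x]_x P X = 0:
        x * (p2 . X) - y * (p1 . X) = 0,
    which does not involve the third image coordinate, so radial distortion
    (division model) and the focal length drop out of this step.
    xy must be measured from the principal point (assumed distortion centre).
    """
    XYZ, xy = np.asarray(XYZ, float), np.asarray(xy, float)
    Xh = np.hstack([XYZ, np.ones((len(XYZ), 1))])     # homogeneous world points, n x 4
    x, y = xy[:, :1], xy[:, 1:2]
    A = np.hstack([-y * Xh, x * Xh])                   # n x 8, unknowns v = [p1, p2]
    _, _, Vt = np.linalg.svd(A)
    v = Vt[-1]                                         # unit-norm null vector (||v|| = 1)
    v = v / np.linalg.norm(v[:3])                      # rescale: first rotation row has unit norm
    return v[:4], v[4:]
```

Because this equation never involves the third image coordinate, rescaling an image point along its radial direction leaves this step unaffected. That rescaling is all the division model does to an image point.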

Let a 3 × 3 submatrix of matrix be . Matrix can be written as Equation (26):

Herein, is the rotation matrix of the camera. The three rows of matrix are mutually perpendicular because matrix is a diagonal matrix. In addition, the first and second row vectors of matrix have the same norm; thus, Equations (27)–(30) are established:

Let . Subsequently, and can be parameterized for by using Equations (27) and (28). The results are given as follows:

where . The remaining unknown parameters are . These five unknown parameters can be obtained using the second row of Equation (20):

where

Here, and have dimensions of 7 × 5 and 7 × 1, respectively, because seven GCPs are used. As a result, can be calculated using LESS as Equation (37):

The elements of matrix P and the camera distortion parameter can be obtained using the equations described above. The principal distance is the final unknown parameter; it can be calculated from the relationship between the first and last rows of matrix P. Based on Equation (14), Equations (38) and (39) are obtained by multiplying the first row of P by w:

Finally, the focal length is obtained from Equation (39):
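A simplified linear variant of the remaining steps is sketched below. It is not the exact parameterization of the text. It does not impose the orthogonality constraints (27)–(30). Instead, it treats the division-model coefficient k and the four entries of the third row of P as five unknowns in the second-row equation (1 + k r²)(p1·X) − x(p3·X) = 0.

The sketch assumes zero skew, unit pixel ratio, coordinates measured from the principal point, and p1 already scaled so that its rotation part has unit norm. Under these assumptions, the third row equals [r3, t3]/f, so the principal distance follows as f = 1/‖p3[:3]‖. Names are hypothetical.

```python
import numpy as np

def third_row_k_and_focal(XYZ, xy, p1):
    """Given p1 = [r11, r12, r13, t1] (first row of P, rotation part of unit norm),
    estimate the division-model coefficient k and the third row p3 by linear
    least squares from the second row of [x]_x P X = 0:
        (1 + k r^2) (p1 . X) - x (p3 . X) = 0.
    With zero skew and unit pixel ratio, p3 = [r3, t3] / f, so f = 1 / ||p3[:3]||."""
    XYZ, xy = np.asarray(XYZ, float), np.asarray(xy, float)
    Xh = np.hstack([XYZ, np.ones((len(XYZ), 1))])
    x = xy[:, 0]
    r2 = np.sum(xy ** 2, axis=1)                      # squared radius of each distorted point
    a = Xh @ p1                                       # p1 . X for each GCP
    A = np.hstack([(r2 * a)[:, None], -x[:, None] * Xh])  # n x 5, unknowns [k, p3]
    b = -a
    col = np.linalg.norm(A, axis=0)                   # column scaling improves conditioning
    sol, *_ = np.linalg.lstsq(A / col, b, rcond=None)
    sol = sol / col                                   # undo the column scaling
    k, p3 = sol[0], sol[1:]
    return k, p3, 1.0 / np.linalg.norm(p3[:3])
```

The column scaling matters because the k column (r² times p1·X) is several orders of magnitude larger than the others when pixel coordinates are used.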

2.3. Equipment and Dataset

CCTV, unmanned aerial vehicles (UAVs), and smartphones, which can be used in smart cities, were used as target sensor platforms. Images were captured in a variety of environments with each sensor, and the estimation results were compared. EOPs were estimated and compared to a total station surveying result (position parameters, ) and the SPR result (orientation parameters ). The process using the DLT models and the perspective projection model is illustrated in Figure 2. Figure 3 shows the camera platforms used in the experiments. Each sensor platform was calibrated using a checkerboard and a camera geometric model.

Figure 2.

Process of estimating IOPs/EOPs using DLT model and perspective model.

Figure 3.

Camera sensor platforms.

Figure 4 shows images from each sensor platform. The CCTV images were obtained at locations with conditions similar to those in a smart city. Two drones were used to capture images: a rotary-wing UAV in an oblique direction and a fixed-wing UAV in a nadir direction. The effect of the image acquisition conditions was investigated by comparing the results from these two images. The smartphone images were acquired without any specific photographic conditions. The GCP positions in the images are denoted by yellow X marks.

Figure 4.

Images from each sensor platform.

Each image dataset has distinct position and orientation properties. Although the platform heights in Figure 4a,b differ clearly, both images have similar orientations, looking obliquely downward. Figure 4b,c were captured by UAV-mounted cameras; their Z positions are similar, but their orientations differ noticeably. Figure 4b is an oblique view in which GCPs with a wide range of heights can be selected, whereas in the nadir view of Figure 4c the GCPs lie almost on a single plane. The height variation of the GCPs is particularly small in the park that forms the study area. Finally, the smartphone image was captured by a user holding the phone and facing sideways, so its orientation can differ significantly from those of Figure 4a–c.

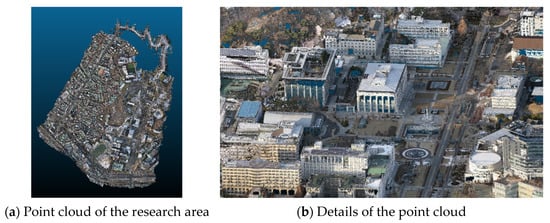

MMS + UAV hybrid point cloud data were used in this study to obtain the 3D coordinates of the GCPs and checkpoints (CKPs). A smart city point cloud was used because GCPs can be obtained easily without direct surveying. Specifically, the georeferenced point cloud generated in Mohammad's study [35] was used (Figure 5).

Figure 5.

Hybrid point cloud for GCPs.

3. Experimental Results

3.1. Simulation Experiments

Before the experiments with real sensors, simulation experiments were performed on a 10 × 10 × 10 virtual grid to compare the performance of each algorithm. Thirteen of the 1000 virtual grid points were chosen at random as GCPs. The camera parameters, position, and rotation were calculated for 100,000 GCP combinations drawn from the C(1000, 13) possible combinations. The camera parameters were set close to those of the actual cameras, and the camera IOPs/EOPs and the coordinates of the virtual points were defined in the actual TM coordinate system. The camera parameters and IOP/EOP values are listed in Table 2. The estimated values were compared directly with the true values, and the reprojection error was calculated using the remaining 987 virtual points. Figure 6a depicts the virtual grid and the camera position, Figure 6b depicts the virtual grid, and Figure 6c depicts the virtual image generated from the virtual grid. The simulations were run in MATLAB R2022b on Windows 11.
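The sampling design above can be sketched as follows. The grid spacing and origin are hypothetical (the study placed its grid in TM coordinates). Only 100 sets are drawn here instead of 100,000 to keep the example light.

```python
import itertools
import math
import random

def virtual_grid(n=10, spacing=1.0, origin=(0.0, 0.0, 0.0)):
    """Return the n*n*n points of a regular virtual grid as (X, Y, Z) tuples."""
    ox, oy, oz = origin
    return [(ox + i * spacing, oy + j * spacing, oz + k * spacing)
            for i, j, k in itertools.product(range(n), repeat=3)]

def sample_gcp_sets(num_points, set_size, num_sets, seed=0):
    """Draw num_sets random index sets of size set_size (no repeats within a set)."""
    rng = random.Random(seed)
    return [sorted(rng.sample(range(num_points), set_size)) for _ in range(num_sets)]

grid = virtual_grid()                              # 1000 virtual points
total = math.comb(len(grid), 13)                   # number of possible 13-point GCP sets
gcp_sets = sample_gcp_sets(len(grid), 13, 100)     # the study drew 100,000 sets
chosen = set(gcp_sets[0])
check_idx = [i for i in range(len(grid)) if i not in chosen]   # 987 points for reprojection error
```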

Table 2.

Camera parameters, IOPs, and EOPs.

Figure 6.

Virtual grid and virtual image.

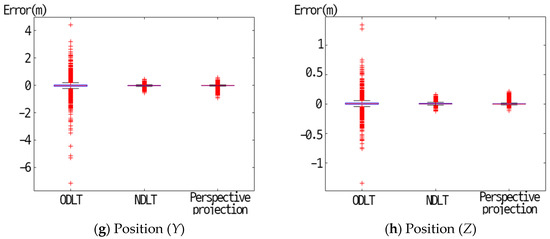

Figure 7 compares the principal distances and EOPs estimated by each algorithm. Based on the median values, all three algorithms estimated the principal distance with an accuracy of 0.1 pixels or better. However, in terms of maximum error, NDLT performed best. Figure 7c–e shows the camera orientation estimation results: the perspective projection model performed best, followed by NDLT and ODLT, and all three algorithms had errors of less than 1°. Figure 7f–h shows the camera position estimation results. The NDLT and perspective projection models performed well, and the ODLT model was also satisfactory. The maximum error of NDLT and the perspective projection model did not exceed 1 m, whereas that of ODLT was relatively large.

Figure 7.

Accuracy assessment results using a 10 × 10 × 10 virtual grid.

Figure 8 shows box plots of the mean reprojection error of each algorithm. In terms of mean reprojection error, ODLT performed best; its maximum error did not exceed 0.5 pixels. The NDLT and perspective projection models also performed well, but their maximum errors were 1.71 pixels and 3.72 pixels, respectively.

Figure 8.

Box plots of mean reprojection error results.

The X, Y, and Z distribution of the GCPs also affects the quality of the estimation results. In addition to the distribution of GCPs on the image plane, an even distribution of GCPs in 3D object space is critical [36,37]. GCPs were randomly selected on a single plane, as shown in Figure 9a, and on multiple planes, as shown in Figure 9b, and the results were compared.

Figure 9.

Three-dimensional distribution of GCPs.

When GCPs were selected on only one plane, IOP/EOP estimation failed. Figure 10 shows the magnitude and direction of the resulting reprojection errors, which are visibly unacceptable. None of the three algorithms produced meaningful estimates: in addition to the reprojection errors, the estimated camera IOPs and EOPs were also unacceptable. As with many camera models, the 3D distribution of GCPs is clearly critical.
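For the DLT models at least, the failure with coplanar GCPs can be seen directly in the linear system. This illustration assumes the 12-parameter DLT with two equations per GCP and a normalized camera (K = I); the names are hypothetical. Points spread in 3D leave a one-dimensional null space, which is just the overall scale. Points on the plane Z = 0 zero out the Z columns and leave a four-dimensional null space, so the parameters are no longer determined.

```python
import numpy as np

def dlt_design_matrix(XYZ, xy):
    """Two DLT rows per GCP (unknowns L1..L12)."""
    rows = []
    for (X, Y, Z), (x, y) in zip(XYZ, xy):
        rows.append([X, Y, Z, 1, 0, 0, 0, 0, -x * X, -x * Y, -x * Z, -x])
        rows.append([0, 0, 0, 0, X, Y, Z, 1, -y * X, -y * Y, -y * Z, -y])
    return np.asarray(rows, dtype=float)

def null_space_dimension(A, rtol=1e-9):
    """Number of singular values that are negligible relative to the largest one."""
    s = np.linalg.svd(A, compute_uv=False)
    return A.shape[1] - int(np.count_nonzero(s > rtol * s[0]))

def project(P, XYZ):
    """Project 3D points with a 3x4 matrix and dehomogenise."""
    Xh = np.hstack([np.asarray(XYZ, float), np.ones((len(XYZ), 1))])
    h = Xh @ P.T
    return h[:, :2] / h[:, 2:3]
```

With exactly coplanar points, the data only determine a plane-to-image homography (8 degrees of freedom) instead of the 11 needed for a full DLT. Nearly coplanar points give a nearly rank-deficient system with the same practical effect.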

Figure 10.

Reprojection error when using GCPs on one plane.

Table 3 shows the estimated camera EOP errors and mean reprojection errors obtained with GCPs on multiple planes. Both the camera parameter estimates and the reprojection results improved markedly. The X, Y, and Z errors of all three models were less than 50 cm. In particular, the position error of the perspective projection model was less than half that of the other models, and its orientation error and mean reprojection error were also the smallest.

Table 3.

Estimated camera EOP errors and mean reprojection errors.

Whether a GCP distribution is effectively three-dimensional also depends on the distance between the camera and the object. Consider close-range photogrammetry with an object-to-sensor distance of about 20 m and aerial photogrammetry with a flight altitude of 200 m or more: a GCP distribution with the same depth range may be adequate in the former but is effectively near-planar in the latter [38]. Therefore, GCPs must be chosen carefully according to the sensor platform.
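A rough way to see this is the ratio of the GCP height range ΔZ to the camera-to-object distance D. This ratio is a simple indicator used here for illustration, not a criterion from [38]. As an example, take the 4.09 m GCP height range reported for the UAV site in Section 3.2.2:

$$
\frac{\Delta Z}{D} = \frac{4.09\ \mathrm{m}}{20\ \mathrm{m}} \approx 0.20
\qquad\text{versus}\qquad
\frac{\Delta Z}{D} = \frac{4.09\ \mathrm{m}}{200\ \mathrm{m}} \approx 0.020
$$

Relative to the viewing distance, the same height spread is ten times smaller in the aerial case, so the GCP set behaves much more like a plane as seen by the camera.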

3.2. Practical Experiments

This section describes experiments in which the ODLT, NDLT, and perspective projection models were used to estimate the position, orientation, and principal distance of actual sensors. Sensor calibration was performed before the experiments to determine the IOPs of each sensor. However, IOPs can change for a variety of reasons. For example, the principal point shifts with lens group perturbation and may also vary with aperture and focus changes [39,40,41]. The radial distortion parameter changes with the principal distance, which makes generalized modeling difficult [40], and its estimated value can also vary with the distance to the control points [42,43]. The cameras were set to manual mode to control these factors; however, the micromechanism that moves the lens group could not be controlled. Therefore, a direct comparative analysis was performed only for the camera EOPs. The focal length is reported to show the trend of the estimates, but the principal point location and camera distortion parameters are not reported.

To examine the accuracy of the estimated sensor positions, a virtual reference station (VRS) GPS survey was performed. As reference values for the EOPs, SPR results computed with the sensor measurements and camera calibration results as initial values can be used. The accuracy of the estimated orientation can be checked indirectly using the mean reprojection error (MRE) and comparison with the SPR results. In this paper, both the orientation parameters estimated by SPR and the reprojection results are presented. The locations of the GCPs are marked in Figure 4. Pixel coordinates and ground coordinates of GCPs were applied to and to estimate to in Equation (7). Based on to , the and matrices were estimated to obtain the camera position, orientation, and principal distance. In addition, pixel coordinates of GCPs were applied to and , and ground coordinates of GCPs were applied to , , and of Equation (20) to estimate to for camera position, orientation, and principal distance.
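This sketch assumes the MRE is the average Euclidean distance between the measured CKP image coordinates and their reprojections; the formula is not spelled out in this section. Lens distortion is ignored, and the function names are hypothetical.

```python
import numpy as np

def reprojection_errors(P, XYZ, xy):
    """Euclidean distance (pixels) between measured image points xy and the
    reprojections of their 3D coordinates XYZ through the 3x4 matrix P."""
    Xh = np.hstack([np.asarray(XYZ, float), np.ones((len(XYZ), 1))])
    h = Xh @ P.T
    reproj = h[:, :2] / h[:, 2:3]
    return np.linalg.norm(reproj - np.asarray(xy, float), axis=1)

def mean_reprojection_error(P, XYZ, xy):
    """MRE: average of the per-point reprojection errors."""
    return float(np.mean(reprojection_errors(P, XYZ, xy)))
```

As noted in Section 3.1, a small MRE alone does not guarantee correct EOPs when the CKPs share the degenerate geometry of the GCPs.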

3.2.1. CCTV

The CCTV image was used to estimate the camera principal distance and EOPs. The reprojection error was calculated for each image using the estimated IOPs/EOPs and 10 CKPs. Table 4 shows the estimated principal distance, whereas Table 5 shows the EOPs of the camera based on the CCTV image. The estimated camera position error for each method is shown in Figure 11a, and the rotation angle error is shown in Figure 11b. The MRE for each model is depicted in Figure 11c.

Table 4.

Calibration result and estimated principal distance of CCTV.

Table 5.

Estimated EOP and reprojection errors of CCTV.

Figure 11.

Estimated EOPs error (a,b) and mean reprojection error (c) for CCTV.

For position estimation, the perspective projection model produced the most accurate results, with an error of 0.6336 m, whereas the position errors of the ODLT and NDLT models were 1.9856 m and 1.3951 m, respectively. For rotation angle estimation, the perspective projection model also produced the best results, whereas ODLT produced a large rotation angle error. However, the reprojection results were similar for all three models.
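The single-value position error is presumably the 3D Euclidean distance between the estimated and reference camera positions; that is an assumption here. For orientation, the sketch also gives one common single-number summary, the angle of the relative rotation between estimate and reference. This is not necessarily how the per-angle errors in Figure 11b were computed. Function names are hypothetical.

```python
import numpy as np

def position_error(C_est, C_ref):
    """Euclidean distance between estimated and reference camera positions (metres)."""
    return float(np.linalg.norm(np.asarray(C_est, float) - np.asarray(C_ref, float)))

def rotation_error_deg(R_est, R_ref):
    """Angle (degrees) of the relative rotation R_ref^T R_est, from its trace."""
    cos_theta = (np.trace(R_ref.T @ R_est) - 1.0) / 2.0
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))
```

The clip guards against rounding pushing the cosine slightly outside [−1, 1].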

3.2.2. UAV

Table 6 shows the calibrated and estimated principal distances of the UAV camera sensors, and Table 7 shows the estimated EOP errors and reprojection errors. With the image taken in the nadir direction (fixed-wing UAV), unacceptably large errors occurred in IOP and EOP estimation: the estimated principal distance differed greatly from the calibration result, and large rotation and position errors occurred in the EOPs as well. In contrast, the experiment using the oblique image taken with the rotary-wing UAV gave acceptable results. This is related to the GCP distribution discussed in Section 3.1. The image was taken at a high altitude (>200 m), but the GCP heights spanned only 4.09 m. Because all GCPs and CKPs lay on nearly the same plane, the reprojection errors were small, but the IOPs/EOPs could not be estimated properly.

Table 6.

Calibration result and estimated principal distance of UAVs.

Table 7.

Estimated EOP and reprojection errors of UAVs.

Next, the results obtained using the rotary-wing UAV image are shown in Figure 12: Figure 12a,b depicts the camera position and rotation angle errors, respectively, and Figure 12c displays the MRE. In terms of camera position error, the algorithm based on the perspective projection model performed best, with an error within 0.7966 m. The DLT-based algorithms also produced acceptable results, with errors of less than 1.6960 m and 2.1053 m, respectively. The ODLT model had a maximum rotation angle error of 7.15°, whereas the other two algorithms generally estimated the rotation angles accurately. All three algorithms had an MRE of less than 5 pixels.

Figure 12.

Estimated EOP error (a,b) and mean reprojection error (c) for UAVs.

3.2.3. Smartphone

Table 8 shows the calibrated and estimated principal distance of the smartphone camera, and Table 9 displays the estimated EOP errors and reprojection errors. Figure 13a compares the camera position errors of each algorithm, Figure 13b shows the orientation angle errors, and Figure 13c depicts the MRE. All three models produced accurate camera position estimates, and the same pattern was observed for orientation. However, ODLT performed noticeably worse than the other two models in both position and orientation estimation.

Table 8.

Calibration result and estimated principal distance of a smartphone.

Table 9.

Estimated EOP and reprojection errors of a smartphone.

Figure 13.

Estimated EOP error (a,b) and mean reprojection error (c) for a smartphone.

Overall, the perspective projection model gave good results. This is likely because the correlation between parameters degraded the quality of the estimates obtained with the DLT models. The results of the three algorithms were less reliable than those of the widely used camera calibration and SPR. However, they did not differ greatly from the camera calibration and SPR results and are sufficient as initial parameter values. Therefore, the IOPs/EOPs of a sensor can be estimated precisely by combining these algorithms with camera calibration and SPR.

4. Discussion

4.1. Simulation Experiments

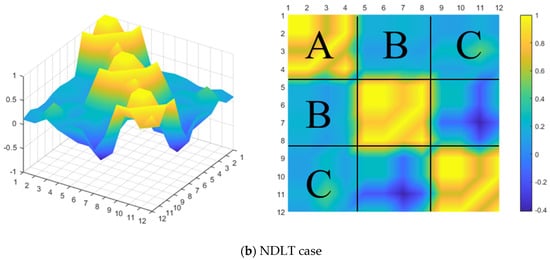

When the IOPs (principal distance and principal point) were estimated with the two DLT models, the A and C components were used for the x component of the IOPs, and the B and C components for the y component. All of the A, B, and C components were used to estimate the EOPs. Figure 14 shows the correlation between the ODLT parameters calculated with total least squares.

Figure 14.

Visualizing the correlation of the DLT parameters.

Overall, the ODLT parameters were correlated with one another, whereas for NDLT the correlation between the parameters of the A, B, and C block components was high. Strong correlation between parameters can reduce estimation precision and increase error [44,45]. Because the DLT parameters are highly correlated, errors in some parameters can be compensated by others, so that the errors propagate into the IOP/EOP estimates [46]. Therefore, when IOPs/EOPs are estimated using ODLT and NDLT, the correlation between parameters influences the result and may lower the estimation accuracy. In particular, ODLT, whose parameters are correlated across the board, is expected to be less accurate than NDLT.
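Parameter correlations like those visualized in Figure 14 can be approximated from the normal equations. This sketch departs from Figure 14 in three ways:

- It assumes the ODLT parameterization with L12 fixed to 1.
- It uses the ordinary least-squares cofactor matrix (AᵀA)⁻¹ rather than the total least squares used for Figure 14.
- It ignores the fact that the design matrix itself contains measured image coordinates.

The function name is hypothetical.

```python
import numpy as np

def dlt_parameter_correlation(XYZ, xy):
    """Correlation matrix of the 11 DLT parameters (L12 fixed to 1), from the
    least-squares cofactor matrix (A^T A)^-1 of the linear DLT system."""
    rows = []
    for (X, Y, Z), (x, y) in zip(XYZ, xy):
        rows.append([X, Y, Z, 1, 0, 0, 0, 0, -x * X, -x * Y, -x * Z])
        rows.append([0, 0, 0, 0, X, Y, Z, 1, -y * X, -y * Y, -y * Z])
    A = np.asarray(rows, dtype=float)
    A = A / np.linalg.norm(A, axis=0)       # column scaling leaves correlations unchanged
    _, s, Vt = np.linalg.svd(A, full_matrices=False)
    Q = (Vt.T / s ** 2) @ Vt                # (A^T A)^-1; the variance factor cancels below
    d = np.sqrt(np.diag(Q))
    return Q / np.outer(d, d)
```

Entries close to ±1 indicate parameter pairs whose errors can trade off against each other. This is the mechanism the paragraph above describes.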

4.2. Practical Experiments

In the experiments using CCTV, UAV, and smartphone images, the camera EOP estimates were best with the point-based perspective projection model, followed by the NDLT and ODLT models. For camera position estimation, ODLT and NDLT showed similar results, although the position error was slightly larger with ODLT. For rotation angle estimation, NDLT and the perspective projection model performed significantly better than ODLT. The reprojection errors of the three models were similar. We attribute these results to the high correlation between the DLT model parameters, as analyzed in Section 4.1. In addition, because the DLT parameters are estimated to relate 3D points directly to image points, their reprojection error is smaller than that of the perspective projection model, whereas their camera position and orientation estimates are inferior.

In general, the three models could estimate IOPs/EOPs, but good results were not obtained from the nadir images acquired with a UAV. The position errors exceeded 100 m, and the estimated rotation angles were unreasonable. The estimated camera IOPs were also less reliable. This is because the GCPs lie almost on the same plane owing to the high imaging altitude. The reprojection results seem reasonable, but only because the CKPs also lie on that plane, as discussed in Section 3.1. Neither the DLT models nor the perspective projection model is suitable in this situation; the classic SPR is more appropriate.

4.3. Contribution and Limitations

In this paper, the IOPs/EOPs of optical sensors were estimated in the absence of initial values. Sensor positioning was performed using three different algorithms, and their results were found to differ. Experiments were carried out using both simulated and real data from CCTV, UAV, and smartphone cameras, and the three algorithms were found to be difficult to apply when sufficient diversity in the GCP distribution could not be secured.

The limitations of this study are as follows. First, the results depend on the quality of the point cloud from which the GCPs are acquired. Many MMS devices currently acquire city point clouds, but their quality varies. When uncalibrated MMS equipment is used, the positional accuracy of the point cloud is greatly reduced, which directly affects the estimated orientation and position of the optical sensor. Second, the camera distortion model was simplified: the tangential distortion parameters were ignored, and the radial distortion parameter was assumed to be small. Cases with large lens distortion, such as fisheye lenses, were not included. Future research should therefore investigate how the positional accuracy of the point cloud propagates into the estimation results, and a position and orientation estimation process for lenses with large distortion must be developed. However, where sufficient GCPs can be secured, for example, in an indoor space where a point cloud is acquired with terrestrial LiDAR, the methods can estimate the position and orientation of the sensor effectively. The authors are conducting further research on point cloud registration that exploits this property and expect to obtain interesting results.

5. Conclusions

In this study, the IOPs/EOPs of various smart city sensors were estimated using ODLT, NDLT, and the perspective projection models. MMS + UAV hybrid point cloud data were used to collect GCPs and CKPs. We tested two different images for each platform. In this study, camera IOPs were not used as true values because calibration results could vary depending on the experimental conditions and fine optical adjustment of the instrument was not possible. Instead, the obtained calibration and estimation results were presented in tables to confirm the trend of IOPs.

In general, the EOP estimates ranked from best to worst as follows: the perspective projection model, the NDLT model, and the ODLT model. For camera position estimation, ODLT and NDLT produce similar results, but ODLT produces slightly larger errors. The maximum position error of the perspective projection model was 0.7966 m, and its average error was 0.6331 m; the ODLT and NDLT models had average errors of 1.6992 m and 1.2047 m, respectively. For rotation angle estimation, NDLT and the perspective projection model significantly outperform ODLT: the average orientation errors of the perspective projection model and the NDLT model were 0.88° and 0.76°, respectively, compared with 3.07° for ODLT. The three models produce similar reprojection errors; the average reprojection errors of the models were 3.67, 2.14, and 2.93 pixels, respectively.

The three models were also applied to UAV-acquired nadir images, but the results were poor: the position errors exceeded 100 m, the estimated rotation angles were unreasonable, and the estimated camera IOPs were less reliable. Because the images were captured from a high altitude, the GCPs are almost coplanar relative to the imaging distance, a configuration under which the estimation becomes ill-conditioned. The reprojection errors appear reasonable only because the CKPs also lie on nearly the same plane. Table 10 summarizes the errors for each sensor platform.
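The effect of nearly coplanar GCPs can be illustrated with a small numerical experiment. The sketch below builds the standard 2n x 12 homogeneous DLT system for a simplified nadir geometry (camera at the origin, depth along +Z) and compares its numerical rank for GCPs at varying depths with GCPs all at one depth, as for flat terrain seen from high altitude. The geometry, the rank tolerance, and the function names are hypothetical.

```python
import numpy as np


def dlt_design_matrix(world_pts, img_pts):
    """Stack the 2n x 12 homogeneous DLT equations A p = 0 for a 3x4 matrix P."""
    rows = []
    for (X, Y, Z), (u, v) in zip(world_pts, img_pts):
        Xh = np.array([X, Y, Z, 1.0])
        rows.append(np.concatenate([Xh, np.zeros(4), -u * Xh]))
        rows.append(np.concatenate([np.zeros(4), Xh, -v * Xh]))
    return np.asarray(rows)


def project(P, world_pts):
    """Project 3D points with a 3x4 matrix P and dehomogenize."""
    Xh = np.column_stack([world_pts, np.ones(len(world_pts))])
    x = Xh @ P.T
    return x[:, :2] / x[:, 2:3]


def numerical_rank(A, rel_tol=1e-8):
    """Count singular values above a tolerance relative to the largest one."""
    s = np.linalg.svd(A, compute_uv=False)
    return int(np.sum(s > rel_tol * s[0]))


rng = np.random.default_rng(0)
P = np.hstack([np.eye(3), np.zeros((3, 1))])  # normalized camera looking along +Z
n = 12
xy = rng.uniform(-1.0, 1.0, size=(n, 2))
spread = np.column_stack([xy, rng.uniform(4.0, 6.0, n)])  # GCPs at varying depth
planar = np.column_stack([xy, np.full(n, 5.0)])  # GCPs all at one depth

rank_spread = numerical_rank(dlt_design_matrix(spread, project(P, spread)))
rank_planar = numerical_rank(dlt_design_matrix(planar, project(P, planar)))
print(rank_spread, rank_planar)  # 11 8
```

With spread-out GCPs the system has rank 11, so P is determined up to scale; with coplanar GCPs the rank drops to 8, leaving a four-dimensional null space and no unique solution. Nearly coplanar GCPs make the extra singular values small rather than exactly zero, so the solution becomes very sensitive to noise.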

Table 10. Error summary for each sensor platform.

This study enables quick estimation of the camera parameters, position, and orientation of the various optical sensors distributed throughout a smart city. Because the method relies on the geometric characteristics of a frame camera, it can be applied not only to visible-light optical sensors but also to infrared cameras. In addition, it can compute the absolute or relative coordinates of various sensor platforms. In particular, the results are applicable to indoor and underground spaces, where positioning systems such as global navigation satellite systems (GNSS) cannot be used. Our research team plans to apply these findings to the coarse registration of indoor point clouds in a future study.

Author Contributions

Conceptualization, N.K.; methodology, N.K.; software, N.K.; validation, N.K.; formal analysis, N.K.; investigation, N.K.; resources, N.K.; data curation, N.K.; writing—original draft preparation, N.K.; writing—review and editing, S.B. and G.K.; visualization, N.K.; supervision, G.K.; project administration, N.K.; funding acquisition, N.K. and G.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF), funded by the Ministry of Science and ICT (MSIT) and the Ministry of Education (No. 2022R1G1A1005391, No. 2021R1A6A1A03044326). This work was also supported by the research fund of the Korea Military Academy (Hwarangdae Research Institute).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data are not publicly available owing to Korean government law (Act on the Establishment and Management of Spatial Data).

Conflicts of Interest

The authors declare no conflict of interest.

References

- The World Bank. World Development Report 2016: Digital Dividends; The World Bank: Bretton Woods, NH, USA, 2016.

- National League of Cities. Trends in Smart City Development; National League of Cities: Washington, DC, USA, 2016.

- Rausch, S.L. The Impact of City Surveillance and Smart Cities. Available online: https://www.securitymagazine.com/articles/90109-the-impact-of-surveillance-smart-cities (accessed on 8 January 2023).

- MOHW CCTV. Installation and Operation in Public Institutions. Available online: http://www.index.go.kr/potal/main/EachDtlPageDetail.do?idx_cd=2855#quick_02 (accessed on 8 November 2022).

- Habib, A.; Kelley, D. Single-photo resection using the modified Hough transform. Photogramm. Eng. Remote Sens. 2001, 67, 909–914.

- Habib, A.F.; Lin, H.T.; Morgan, M.F. Line-based modified iterated Hough transform for autonomous single-photo resection. Photogramm. Eng. Remote Sens. 2003, 69, 1351–1357.

- Seedahmed, G.H. On the Suitability of Conic Sections in a Single-Photo Resection, Camera Calibration, and Photogrammetric Triangulation; The Ohio State University: Columbus, OH, USA, 2004.

- Habib, A.; Mazaheri, M. Quaternion-based solutions for the single photo resection problem. Photogramm. Eng. Remote Sens. 2015, 81, 209–217.

- Luhmann, T.; Robson, S.; Kyle, S.; Boehm, J. Close-Range Photogrammetry and 3D Imaging; Walter de Gruyter: Berlin, Germany, 2013; ISBN 3110302780.

- Kim, N.; Lee, J.-S.; Bae, J.-S.; Sohn, H.-G. Comparative analysis of exterior orientation parameters of smartphone images using quaternion-based SPR and PnP algorithms. J. Korean Soc. Surv. Geod. Photogramm. Cartogr. 2019, 37, 465–472.

- Hong, S.P.; Choi, H.S.; Kim, E.M. Single photo resection using cosine law and three-dimensional coordinate transformation. J. Korean Soc. Surv. Geod. Photogramm. Cartogr. 2019, 37, 189–198.

- Crosilla, F.; Beinat, A.; Fusiello, A.; Maset, E.; Visintini, D. Advanced Procrustes Analysis Models in Photogrammetric Computer Vision; Springer: Berlin, Germany, 2019; ISBN 303011760X.

- Fusiello, A.; Crosilla, F.; Malapelle, F. Procrustean point-line registration and the NPnP problem. In Proceedings of the 2015 International Conference on 3D Vision, Lyon, France, 19–22 October 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 250–255.

- Garro, V.; Crosilla, F.; Fusiello, A. Solving the PnP problem with anisotropic orthogonal Procrustes analysis. In Proceedings of the 2012 Second International Conference on 3D Imaging, Modeling, Processing, Visualization & Transmission, Zurich, Switzerland, 13–15 October 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 262–269.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Albl, C.; Kukelova, Z.; Larsson, V.; Pajdla, T. Rolling shutter camera absolute pose. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 1439–1452.

- Wang, Q.; Shi, L. Pose estimation based on PnP algorithm for the racket of table tennis robot. In Proceedings of the 2013 25th Chinese Control and Decision Conference (CCDC), Guiyang, China, 25–27 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 2642–2647.

- Xu, D.; Li, Y.F.; Tan, M. A general recursive linear method and unique solution pattern design for the perspective-n-point problem. Image Vis. Comput. 2008, 26, 740–750.

- Seedahmed, G.; Schenk, T. Comparative study of two approaches for deriving the camera parameters from direct linear transformation. In Proceedings of the Annual Conference of ASPRS, St. Louis, MO, USA, 23–27 April 2001.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2004; ISBN 9780521540513.

- Přibyl, B.; Zemčík, P.; Čadík, M. Absolute pose estimation from line correspondences using direct linear transformation. Comput. Vis. Image Underst. 2017, 161, 130–144.

- Silva, M.; Ferreira, R.; Gaspar, J. Camera calibration using a color-depth camera: Points and lines based DLT including radial distortion. In Proceedings of the IROS Workshop in Color-Depth Camera Fusion in Robotics, Vilamoura, Portugal, 7 October 2012.

- El-Ashmawy, K.L.A. A comparison study between collinearity condition, coplanarity condition, and direct linear transformation (DLT) method for camera exterior orientation parameters determination. Geod. Cartogr. 2015, 41, 66–73.

- Bujnak, M.; Kukelova, Z.; Pajdla, T. New efficient solution to the absolute pose problem for camera with unknown focal length and radial distortion. In Asian Conference on Computer Vision; Springer: Berlin, Germany, 2011; pp. 11–24.

- Josephson, K.; Byrod, M. Pose estimation with radial distortion and unknown focal length. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 2419–2426.

- Kukelova, Z.; Bujnak, M.; Pajdla, T. Real-time solution to the absolute pose problem with unknown radial distortion and focal length. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 2816–2823.

- Larsson, V.; Kukelova, Z.; Zheng, Y. Camera pose estimation with unknown principal point. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2984–2992.

- Park, S.-K.; Park, J.-K.; Won, H.-I.; Choi, S.-H.; Kim, C.-H.; Lee, S.; Kim, M.Y. Three-dimensional foot position estimation based on footprint shadow image processing and deep learning for smart trampoline fitness system. Sensors 2022, 22, 6922.

- Khalili, B.; Ali Abbaspour, R.; Chehreghan, A.; Vesali, N. A context-aware smartphone-based 3D indoor positioning using pedestrian dead reckoning. Sensors 2022, 22, 9968.

- Wang, L.; Shang, S.; Wu, Z. Research on indoor 3D positioning algorithm based on WiFi fingerprint. Sensors 2023, 23, 153.

- Chen, X.-Y.; Wen, C.-Y.; Sethares, W.A. Multi-target PIR indoor localization and tracking system with artificial intelligence. Sensors 2022, 22, 9450.

- Challis, J.H.; Kerwin, D.G. Accuracy assessment and control point configuration when using the DLT for photogrammetry. J. Biomech. 1992, 25, 1053–1058.

- Seedahmed, G.; Schenk, T. Direct linear transformation in the context of different scaling criteria. In Proceedings of the Annual Conference of the American Society for Photogrammetry and Remote Sensing, St. Louis, MO, USA, 23–27 April 2001.

- Fitzgibbon, A.W. Simultaneous linear estimation of multiple view geometry and lens distortion. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001, Kauai, HI, USA, 8–14 December 2001; IEEE Computer Society: Washington, DC, USA, 2001; Volume 1, pp. I-125–I-132.

- Mohammad, G.F. Data Fusion Technique for Laser Scanning Data and UAV Images to Construct Dense Geospatial Dataset. Master’s Thesis, Yonsei University, Seoul, Korea, 2018.

- Fawzy, H.E.-D. The accuracy of mobile phone camera instead of high resolution camera in digital close range photogrammetry. Int. J. Civ. Eng. Technol. 2015, 6, 76–85.

- Jacobsen, K. Satellite image orientation. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 703–709.

- Kim, P.; Chen, J.; Cho, Y.K. Automated point cloud registration using visual and planar features for construction environments. J. Comput. Civ. Eng. 2018, 32, 4017076.

- Chen, Y.-S.; Shih, S.-W.; Hung, Y.-P.; Fuh, C.-S. Simple and efficient method of calibrating a motorized zoom lens. Image Vis. Comput. 2001, 19, 1099–1110.

- Jeong, S.S.; Joon, H.; Kyu, W.S. Empirical modeling of lens distortion in change of focal length. J. Korean Soc. Surv. Geod. Photogramm. Cartogr. 2008, 26, 93–100.

- Willson, R.G. Modeling and calibration of automated zoom lenses. In Proceedings of the Videometrics III, Boston, MA, USA, 6 October 1994; International Society for Optics and Photonics: Bellingham, WA, USA, 1994; Volume 2350, pp. 170–186.

- Bräuer-Burchardt, C. The Influence of Target Distance to Lens Distortion Variation. In Proceedings of the Modeling Aspects in Optical Metrology, Munich, Germany, 18 June 2007; International Society for Optics and Photonics: Bellingham, WA, USA, 2007; Volume 6617, p. 661708.

- Bräuer-Burchardt, C.; Heinze, M.; Munkelt, C.; Kühmstedt, P.; Notni, G.; Fraunhofer, I.O.F. Distance dependent lens distortion variation in 3D measuring systems using fringe projection. In Proceedings of the BMVC, Edinburgh, UK, 4–7 September 2006; p. 327.

- Bu-Abbud, G.H.; Bashara, N.M. Parameter correlation and precision in multiple-angle ellipsometry. Appl. Opt. 1981, 20, 3020–3026.

- Ibrahim, M.M.; Bashara, N.M. Parameter-correlation and computational considerations in multiple-angle ellipsometry. J. Opt. Soc. Am. A 1971, 61, 1622–1629.

- Tang, R.; Fritsch, D. Correlation analysis of camera self-calibration in close range photogrammetry. Photogramm. Rec. 2013, 28, 86–95.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).