Abstract

Fetal brain tissue segmentation is essential for quantifying the presence of congenital disorders in the developing fetus. Manual segmentation of fetal brain tissue is cumbersome and time-consuming, so an automatic segmentation method can greatly simplify the process. In addition, the fetal brain undergoes a variety of changes throughout pregnancy, such as increased brain volume, neuronal migration, and synaptogenesis. As a result, the contrast between tissues, especially between gray matter and white matter, changes constantly throughout pregnancy, which increases the complexity and difficulty of segmentation. To reduce the burden of manual refinement of segmentation, we proposed a new deep learning-based segmentation method. Our approach utilized a novel attentional structural block, the contextual transformer block (CoT-Block), which was applied in the encoder–decoder backbone network to guide the learning of dynamic attention matrices and enhance image feature extraction. Additionally, in the last layer of the decoder, we introduced a hybrid dilated convolution module, which expands the receptive field and retains detailed spatial information, effectively extracting the global contextual information in fetal brain MRI. We quantitatively evaluated our method with several performance measures, including Dice, precision, sensitivity, and specificity. In 80 fetal brain MRI scans with gestational ages ranging from 20 to 35 weeks, we obtained an average Dice similarity coefficient (DSC) of 83.79%, an average Volume Similarity (VS) of 84.84%, and an average Hausdorff95 Distance (HD95) of 35.66 mm. We also compared our method with several advanced deep learning segmentation models under equivalent conditions, and the results showed that our method was superior to the other methods and exhibited excellent segmentation performance.

1. Introduction

Congenital diseases are one of the leading causes of neonatal death worldwide. To comprehensively understand the neurodevelopment of normal fetuses and fetuses with congenital disorders and realize early detection and treatment of congenital disorders, prenatal maternal and infant health screening and quantitative analyses of the developing human fetal brain are essential. Ultrasonography is an important tool for prenatal identification and screening of fetal malformations, but detecting abnormal development of some tissues, such as the cerebellar vermis, is often limited due to the influence of fetal position and cranial acoustic attenuation on ultrasonography. In recent years, fetal magnetic resonance imaging (MRI) has become an important auxiliary tool that can provide more information on fetal development in the case of unclear ultrasound images to improve the possibility and accuracy of congenital disease diagnoses. Evidence has demonstrated that fetal MRI, especially brain MRI, can be a useful diagnostic tool for many congenital disorders, such as spina bifida, intrauterine growth retardation, congenital heart disease, anencephaly, corpus callosum anomalies, and more [1,2].

Important neurodevelopmental changes occur in the last trimester of pregnancy, between 30 and 40 weeks of gestation, and include volume growth, myelination, and cortical gyrification. Fetal MR images allow clinicians to monitor fetal brain development in utero at an early developmental stage and detect brain abnormalities early. Fetal brain MRI analysis typically begins by segmenting the brain into different tissue categories, followed by volumetric and morphological analyses. This is because many congenital diseases cause subtle changes in these tissues [3]. Congenital heart disease (CHD) affects approximately 5–8% of all live births every year; it is the most common congenital malformation and an important cause of neonatal death. Licht et al. [4] examined 25 neonates with CHD using MRI and found that 53% had brain damage, including microcephaly (24%), incomplete fontanel closure (16%), and periventricular leukomalacia (PVL) (28%). White matter damage was characteristic of brain damage in premature infants. Hypoplastic left heart syndrome (HLHS) is one of the most severe forms of CHD. In fetuses with HLHS, the volumes of cortical gray matter, white matter, and subcortical gray matter gradually decrease in the third trimester of pregnancy. In addition to white matter (WM), gray matter (GM), and cerebrospinal fluid (CSF), other tissues, such as the cerebellum (CB) and brainstem (BS), are equally important for understanding and predicting healthy or abnormal brain development in preterm infants of similar gestational age. The cerebellum is particularly clinically important because it is one of the fastest-growing brain regions in the last trimester of pregnancy. The Dandy–Walker malformation, for example, corresponds to changes in brain tissue with hypoplasia or absence of the cerebellar vermis, hypoplasia of the cerebellar hemispheres, and enlargement of the posterior cranial fossa and fourth ventricle. 
The enlargement of the posterior fossa results in an elevation of the torcula that is clearly demonstrated on sagittal images, as is the associated hypoplasia of the brainstem. Prenatal ultrasound can show some of the features of Dandy–Walker malformation but is limited in its ability to assess vermian hypoplasia. Fetal MRI therefore allows for a better evaluation of these anomalies. In contrast, spina bifida is associated with the brainstem and ventricles, and research [5] has shown that in fetuses with open spina bifida (OSB), the brainstem diameter is larger than in normal fetuses, which may be the result of caudal displacement of the brainstem and compression of the fourth ventricle-cisterna magna complex within the confined space between the sphenoid and occipital bones. Ghi et al. [6] studied 66 fetuses with isolated spina bifida, with a mean gestational age at diagnosis of 21 weeks (range 16–34 weeks) and 56 cases diagnosed before 24 weeks. In 59 cases the pregnancy was terminated, and 7 fetuses were delivered alive and survived. Fifty-seven cases representing the study group were followed up in detail. Fifty-three (93.0%) of the postnatal defects were classified as open, and 4 (7.0%) cases had closed defects (three lipohypophysis, one meningocele). At mid-gestation, ventricular enlargement was present in 34/53 cases (64.2%). The detection of morphological changes is therefore of great value for the diagnosis of congenital disorders.

Fetal MRI is a challenging imaging modality because the fetus is not sedated and tends to move freely, causing artifacts. The imaging technique uses ultrafast MRI sequences, such as T2-weighted single-shot fast spin echo (ssFSE), which allow very rapid acquisition of low-resolution images; super-resolution reconstruction algorithms can then be applied to merge several low-resolution images into a single high-resolution volume. Traditionally, physicians manually segmented these images into several tissues. However, manual segmentation is tedious and time-consuming. The small fetal brain volume and the partial volume effects between tissue classes make it error-prone, so it must be performed by highly specialized clinicians. Due to the difficulty of the segmentation task, many automated methods have been developed to detect and segment brain tissues to aid in diagnosis.

Automated fetal brain tissue segmentation is challenging for two reasons. First, spontaneous movements of the fetus and the mother during scanning cause artifacts, such as uneven intensity, that make it difficult to distinguish between tissue types. Second, the complex shapes of the different tissues make their boundaries hard to delineate.

Atlas-based segmentation techniques rely on an image registration process that looks for voxel-to-voxel alignment of the two images. One image is an atlas from which a labeled map of the structure of interest can be obtained, and the other is an image of the target subject to be segmented. For the automatic segmentation of fetal brain tissue in reconstructed MR volumes, Habas et al. [7] proposed an atlas-based approach for automatic segmentation. For 14 fetal MR scans with a gestational age distribution between 20.57 and 22.86 weeks, a probabilistic map of the tissue distribution was constructed to segment white matter, gray matter, germinal matrix, and right cerebrospinal fluid using an expectation maximization (EM) model approach. Before segmentation, a bias field correction was performed using another EM model. Later, considering the extension of this framework to a wider range of gestational ages, the authors proposed another temporal model to construct a spatiotemporal atlas of the fetal brain [8], which was created using polynomial simulations of the changes in MR intensity, tissue probability and shape from a group of fetal MRIs between 20.57 and 24.71 weeks of gestational age. Serag et al. [9] proposed a method to construct a 4D multichannel atlas of the fetal brain that utilized the nonrigid registration of MR brain images and included the average intensity template of multiple modalities and tissue probability maps. Compared with the probability maps generated by the affine registration method, the tissue probability map generated by this method improved the automatic segmentation process based on the atlas. The data used in the study were collected from MR images of 80 fetuses aged between 23 and 37 weeks of gestational age (GA). Wright et al. 
[10] used MR images of 80 developing fetuses in the gestational age range of 21.7 to 38.9 weeks, took the spatiotemporal atlas as tissue priors, and adopted the Markov random field regularized expectation maximization (EM-MRF) method proposed by Ledig et al. [11] to improve tissue segmentation accuracy. The technique of atlas fusion was used, while the SVR technique was employed before extracting the brain to alleviate the sensitivity of motion-impaired fetal images to alignment.

In recent years, deep learning methods, particularly convolutional neural networks (CNNs), have gained popularity for many target recognition and biological image segmentation challenges [12,13,14]. Unlike traditional classification methods, CNNs automatically learn complex and representative features directly from the data, eliminating the need to first extract a set of handcrafted features from the image as input to a classifier or model [15]. Due to this property, research on CNN-based fetal brain tissue segmentation has mainly focused on the design of network architectures rather than on feature extraction through image processing. As a result, deep learning methods tend to achieve better performance than traditional machine learning methods. Kelly et al. first trained three 2D U-Nets, one for each direction (axial, sagittal, and coronal), as three separate networks and then trained a 3D U-Net with the same structure as the 2D U-Net. Data augmentation, epochs, loss functions, and optimizers were the same. Finally, they evaluated each of these four networks. Khalili et al. [16] first used a CNN to extract intracranial volumes by automatically cropping the image to the region of interest, after which a CNN with the same structure was used to segment the extracted volumes into seven brain tissue categories. Iqbal et al. [17] transformed 3D brain stacks into 2D brain images (256 × 256) covering the top-down axial plane to make the dataset compatible with SeBRe. Transfer learning was used during their training process: the model was initialized with weights pretrained on the Microsoft COCO dataset before being trained on the FeTA brain dataset.

Different congenital diseases lead to various changes in fetal brain tissues, so this research aimed to help doctors obtain more intelligent and rapid structural segmentation to provide quantitative information for the auxiliary diagnosis of congenital diseases. The fetal brain is small, with irregular and interconnected tissues. Automatic segmentation algorithms can reduce the burden of manual segmentation, improve segmentation efficiency, and quickly identify various tissues, which benefits the assessment of fetal congenital diseases. In medical image processing, automatic segmentation algorithms usually use the U-Net model and differ mainly in image pre-processing and post-processing, as well as in fine-tuning and parameter settings during training. However, the traditional U-Net architecture has several drawbacks, such as (1) insufficient feature extraction capability and (2) the inability to use global contextual information. Therefore, focusing on these two problems, the main contribution of this work is a new network structure that combines a CNN and a transformer. The convolution layer in a CNN obtains the output features through the convolution kernel, but the receptive field of the kernel is small, and simply stacking convolution layers is not an efficient way to enlarge it. The self-attention mechanism in the transformer facilitates capturing global information and thus obtains a larger receptive field. Therefore, we combined CNN and self-attention to design a feature pyramid model based on the contextual transformer block (CoT-Block). It integrated context information mining and self-attention learning into a unified architecture to guide the learning of the dynamic attention matrix, thus enhancing the ability of image feature extraction. 
At the same time, the introduction of the hybrid dilated convolution module can expand the receptive field and retain detailed spatial information, effectively extracting the global context information of fetal brain MRI tissues. In this way, the defects of the traditional U-Net model structure mentioned above can be compensated to a certain extent. Comparative experiments with several state-of-the-art models showed that our trained model was less computationally intensive, faster, and had the best segmentation performance overall.

2. Materials and Methods

2.1. Fetal MRI Data

This study included T2-weighted MRI scans of 80 fetuses at a gestational age between 20 and 35 weeks. These data [18] were acquired on 1.5 T and 3 T GE clinical whole-body scanners (Signa Discovery MR450 and MR750) using either an 8-channel cardiac coil or a body coil. Several T2-weighted single-shot fast spin echo (ssFSE) images with a resolution of 0.5 mm × 0.5 mm × 3 mm were acquired in all three planes (at least one image each in the axial, sagittal, and coronal planes) for each subject and reconstructed as a single high-resolution volume of 0.5 mm × 0.5 mm × 0.5 mm using the MIAL Superresolution Toolkit [19,20] and Simple IRTK [21]. The repetition time (TR) was set to 2000–3500 ms, the echo time (TE) was set to 120 ms (minimum), the flip angle was set to 90 degrees, and the sampling percentage was 55%. Field of view (200–240 mm) and image matrix (1.5 T: 256 × 224; 3 T: 320 × 224) were adjusted depending on the gestational age and fetal size. The imaging plane was oriented relative to the fetal brain, and axial, coronal and sagittal images were acquired.

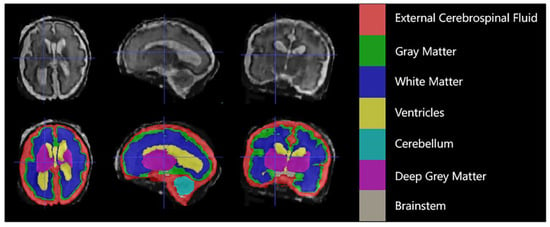

Each case consisted of a 3D superresolution reconstruction of the fetal brain (256 × 256 × 256 voxels). Training cases had an annotated label map corresponding to 7 different brain tissue types: external cerebrospinal fluid (eCSF), gray matter (GM), white matter (WM), lateral ventricles (LV), cerebellum (CB), deep gray matter (DGM), and brainstem (BS), as shown in Figure 1.

Figure 1.

An example of manual segmentation (red: external cerebrospinal fluid; green: GM; dark blue: WM; yellow: ventricles; cyan: cerebellum; maroon: deep GM; gray: brainstem).

2.2. Model Architecture

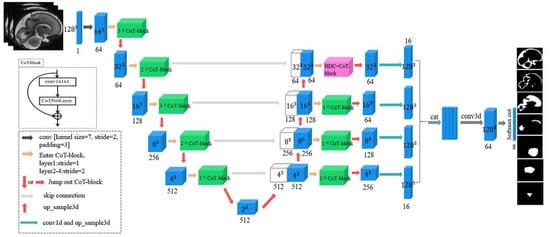

We combined a CNN with a transformer to design the network model shown in Figure 2. The backbone network of this model consisted of four parts: encoder, decoder, skip connection, and feature combination. The successive layers of the encoder contained 3, 2, 3, 2, and 1 self-attention blocks, respectively; each layer of the decoder contained one self-attention block, and a hybrid dilated convolution was added in the last decoder layer. For localization, each feature map in the decoder was connected with the corresponding feature map from the encoder through a skip connection. This structure linked the low-level features to the high-level features and recovered the detailed information lost in the encoder, thus obtaining more accurate spatial information and improving the quality of the final tissue contour prediction.

Figure 2.

Network architecture: the network consists of a backbone network, which is composed of an encoder–decoder architecture, skip connection, and feature combination.

2.2.1. Backbone Network

Part of the encoder followed the new architecture of the contextual transformer block (CoT-Block), which is explained in detail in Section 2.2.3. The network started by extracting a series of feature maps at different resolutions. The shallower feature maps contained the high-resolution detailed information needed to correctly depict the tissue boundaries, and the deeper feature maps contained coarse, high-level information that helps predict the overall profile of the tissue. The input was first processed by a 7 × 7 × 7 convolution layer with a stride of 2 and padding of 3. Then, batch normalization (BN) and a rectified linear unit (ReLU) were used, followed by a 3 × 3 × 3 max pooling operation with stride 2 for downsampling. The number of feature maps in the encoder part of the network was 64, 128, 256, 512, and 512; that is, the number of feature channels was doubled at each downsampling step until it reached 512.
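The effect of the stem on spatial resolution can be checked with the usual output-size formula for strided convolution and pooling. The following is a minimal sketch, not the authors' code: it assumes that a full 256-voxel axis is fed to the network and that the max pooling layer uses a padding of 1, neither of which is stated in the text; the function name `conv_output_size` is hypothetical.

```python
def conv_output_size(size: int, kernel: int, stride: int, padding: int,
                     dilation: int = 1) -> int:
    """Output length along one axis of a convolution or pooling layer (floor mode)."""
    effective_kernel = dilation * (kernel - 1) + 1
    return (size + 2 * padding - effective_kernel) // stride + 1


# Assumption: one axis of the 256 x 256 x 256 reconstruction is used as input.
side = 256

# 7 x 7 x 7 convolution, stride 2, padding 3 (values given in the text).
after_conv = conv_output_size(side, kernel=7, stride=2, padding=3)

# 3 x 3 x 3 max pooling, stride 2; padding=1 is an assumption, not from the text.
after_pool = conv_output_size(after_conv, kernel=3, stride=2, padding=1)

print(after_conv, after_pool)
```

Under these assumptions, each axis is halved twice before the first CoT-Block stage, so the stem reduces the spatial size by a factor of 4 per axis.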

Similarly, the decoder was also composed of a CoT-block. Each step consisted of up-sampling the feature maps and converting them to the size of the input image using trilinear interpolation, then halving the number of feature channels and concatenating them with the corresponding feature maps in the encoder. This large number of feature channels in the up-sampling section allowed the network to propagate contextual information to the higher resolution layers. Then, a convolution operation with a kernel size of 3 × 3 × 3 was applied to each layer of the output feature map to change its channel, and trilinear interpolation was applied to alter its size, creating 16 features in each feature map. Finally, the resultant feature maps were merged via concatenation, and these feature maps went through 3 × 3 × 3 convolutional layers followed by BN and PReLU to generate the probability maps of seven brain tissues.

2.2.2. Hybrid Dilated Convolution

In the last layer of the decoder, a hybrid dilated convolution module [22] was introduced, which consisted of three 3D dilated convolution branches with dilation rates of d = 1, 2, and 4. This module expanded the receptive field of the convolution kernel while keeping the number of parameters constant so that each convolution output contained a large range of information; at the same time, it ensured that the size of the output feature map remained unchanged.
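The claim that dilation enlarges the receptive field without adding parameters, while leaving the feature map size unchanged, follows from simple arithmetic. The sketch below assumes 3 × 3 × 3 kernels and stride 1 in every branch (the kernel size of the dilated branches is not stated in the text); the helper names are hypothetical.

```python
def effective_kernel(kernel: int, dilation: int) -> int:
    """Span (in voxels, along one axis) covered by a dilated kernel."""
    return dilation * (kernel - 1) + 1


def same_padding(kernel: int, dilation: int) -> int:
    """Padding that keeps the output size equal to the input size at stride 1."""
    return dilation * (kernel - 1) // 2


KERNEL = 3          # assumed kernel size per axis
RATES = (1, 2, 4)   # dilation rates given in the text

spans = [effective_kernel(KERNEL, d) for d in RATES]
pads = [same_padding(KERNEL, d) for d in RATES]
weights_per_filter = KERNEL ** 3  # identical for every branch, whatever the dilation

for d, span, pad in zip(RATES, spans, pads):
    print(f"d={d}: covers {span} voxels per axis, padding {pad}, "
          f"{weights_per_filter} weights per filter")
```

Each branch keeps 27 weights per filter, while the span it covers along each axis grows from 3 to 5 to 9 voxels.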

2.2.3. CoT-Block

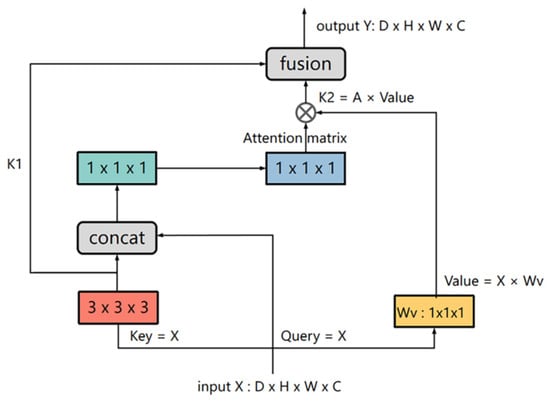

The contextual transformer block (CoT-Block) is a novel attention structure that makes full use of the context information of the key to guide the learning of the dynamic attention matrix to enhance the ability of image feature representation. Its structure is shown in Figure 3.

Figure 3.

Contextual Transformer block (CoT-Block), a novel attention structure [23].

The conventional self-attention block transformed the input feature map $X$ into queries $Q = XW_q$, keys $K = XW_k$, and values $V = XW_v$ via the embedding matrices $(W_q, W_k, W_v)$, respectively. When the image was 3D, each embedding matrix was implemented as a 1 × 1 × 1 convolution in space. Then, the keys $K$ and queries $Q$ were multiplied to obtain the local relation matrix $R$, which was expressed as $R = K \circledast Q$, where $\circledast$ denotes the local matrix multiplication of [23]. $R$ was further enriched with the position information $P$: $\hat{R} = R + P \circledast Q$. However, this traditional self-attention mechanism has a defect: when we study a pixel, the keys $K$ from the surrounding pixels are correlated with this pixel's query $Q$ one at a time, so all Q-K relationships are learned independently, without making use of the rich context information between pixels. This severely limits the capacity of self-attention learning over the feature map during training. For this reason, Li et al. [23] proposed the contextual transformer block (CoT-Block), a novel module that integrated contextual information mining and self-attention learning into a unified architecture. Instead of directly converting the input feature map through the embedding matrix, it first used a 3 × 3 × 3 convolution to encode the keys $K$ of the surrounding pixels and obtain the static context information $K^1$ of local adjacent positions. Then, $K^1$ and the query $Q$ of the pixel being studied were concatenated and passed through two consecutive 1 × 1 × 1 convolutions to learn the dynamic multi-head attention matrix; we denote these two convolutions as $W_\theta$ and $W_\delta$. The attention matrix $A$ was expressed as $A = [K^1, Q]W_\theta W_\delta$. Instead of obtaining isolated Q-K pairs, this process obtained a rich contextual representation between pixels and learned more about the details of the tissue. Then, the attention matrix $A$ was multiplied by the values $V$ to obtain $K^2 = V \circledast A$, which implemented the dynamic context representation of the input. Finally, the module fused the static context $K^1$ and the dynamic context $K^2$ to produce the output.

2.3. Loss Function

We used a combination of Dice loss and cross-entropy loss to train our network. The Dice coefficient is a set-similarity measure named after Lee Raymond Dice, which is commonly used to calculate the similarity between two samples. Its value ranges from 0 to 1. The Dice coefficient $D$ between two binary volumes was denoted by

$$D = \frac{2\sum_{i=1}^{N} p_i g_i}{\sum_{i=1}^{N} p_i + \sum_{i=1}^{N} g_i}$$

where the sums run over the $N$ voxels of the predicted binary segmentation volume $p_i$ and the ground truth binary volume $g_i$. The Dice loss was expressed as $L_{Dice} = 1 - D$.

In binary classification models, such as logistic regression and neural networks, the ground truth is labeled 0 or 1, representing the negative and positive classes, respectively. The model usually ends with a sigmoid function (a softmax function in the multi-class case), which outputs a probability value reflecting the likelihood of the positive class. The sigmoid function was expressed as follows: $\sigma(x) = \frac{1}{1 + e^{-x}}$. The cross-entropy loss function was:

$$L_{CE} = -\frac{1}{N}\sum_{i=1}^{N}\left[g_i \log p_i + (1 - g_i)\log(1 - p_i)\right]$$

In this equation, $g_i$ and $p_i$ denote the ground truth brain tissue map and the predicted brain tissue probability map at voxel $i$.
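A combined Dice and cross-entropy loss for a single binary tissue map can be sketched as follows. This is an illustration on flattened lists rather than the authors' PyTorch implementation: it uses the common soft Dice form $2\sum p g / (\sum p + \sum g)$ and the binary cross-entropy, and the unweighted sum of the two terms, the smoothing constant `eps`, and all function names are assumptions.

```python
import math


def dice_coefficient(pred, truth, eps=1e-7):
    """Soft Dice between predicted probabilities and a binary ground truth."""
    intersection = sum(p * g for p, g in zip(pred, truth))
    return (2.0 * intersection + eps) / (sum(pred) + sum(truth) + eps)


def dice_loss(pred, truth):
    return 1.0 - dice_coefficient(pred, truth)


def binary_cross_entropy(pred, truth, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clamped to avoid log(0)."""
    total = 0.0
    for p, g in zip(pred, truth):
        p = min(max(p, eps), 1.0 - eps)
        total += g * math.log(p) + (1 - g) * math.log(1.0 - p)
    return -total / len(pred)


def combined_loss(pred, truth):
    # Assumption: the two terms are simply added with equal weight.
    return dice_loss(pred, truth) + binary_cross_entropy(pred, truth)


truth = [1, 0, 1, 0]                  # toy ground truth for four voxels
perfect = [1.0, 0.0, 1.0, 0.0]        # prediction identical to the ground truth
uncertain = [0.5, 0.5, 0.5, 0.5]      # uninformative prediction

print(combined_loss(perfect, truth), combined_loss(uncertain, truth))
```

For the seven-class problem in this work, one plausible extension is to compute the Dice term per tissue and average it, with a softmax cross-entropy over classes; the text does not specify which variant was used.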

2.4. Implementation and Training

We implemented our network in PyTorch [24] and trained it in an end-to-end manner using the Adam optimizer [25] with a weight decay of 1 × 10⁻⁴. We used an initial learning rate of 1 × 10⁻⁴ and reduced it by a factor of 0.7 after 30 training epochs. Learning stopped after 300 epochs. All the training and experiments were run with a batch size of 2 on a standard workstation equipped with 32 GB of memory, an Intel(R) Core(TM) i7-10700 CPU working at 2.90 GHz, and an Nvidia GeForce RTX 3090 GPU.

2.5. Data Augmentation

We applied strong random data augmentation to our training data: random rotation between −15° and 15°, random horizontal flipping, translation (10 voxels), and random 3D elastic transformation. Training ran for 300 epochs. At each epoch, a newly sampled random augmentation was applied, so the network saw 300 different representations of the data.
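The per-epoch sampling of augmentation parameters can be sketched as follows. This only draws the parameters and does not transform any image; the 50% flip probability, the symmetric per-axis translation range of ±10 voxels, and the function name `sample_augmentation` are assumptions, and the elastic transformation is omitted because its settings are not given in the text.

```python
import random


def sample_augmentation(rng: random.Random) -> dict:
    """Draw one set of augmentation parameters for a training volume."""
    return {
        # Rotation angle in degrees, within the range stated in the text.
        "rotation_deg": rng.uniform(-15.0, 15.0),
        # Assumption: horizontal flip applied with probability 0.5.
        "flip_horizontal": rng.random() < 0.5,
        # Assumption: independent shift of up to 10 voxels along each axis.
        "translation_vox": tuple(rng.randint(-10, 10) for _ in range(3)),
    }


rng = random.Random(0)  # fixed seed so the sketch is reproducible
params_per_epoch = [sample_augmentation(rng) for _ in range(300)]

print(params_per_epoch[0])
```

Drawing a fresh parameter set at every epoch is what makes each of the 300 epochs see a different version of the same training volume.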

3. Experiments and Results

3.1. Alternative Techniques

To verify the segmentation performance of our proposed method, we compared the model with three existing deep neural networks, namely, 3D U-Net [26], V-Net [27], and 3D DMFNet [28]. All three are 3D network structures used to process medical images.

3D U-Net extends the U-Net architecture proposed by Ronneberger et al. [29]. The highlight of the current architecture is that it can be trained from scratch on sparsely annotated volumes and works on arbitrarily large volumes due to its seamless tiling strategy. It has been shown to be a powerful segmentation CNN with success in multiple segmentation tasks [30,31,32].

V-Net is a variant of U-Net. The left part of the network is a compression path, while the right part decompresses the signal until its original size is reached. All convolutions are applied with appropriate padding. The main difference from U-Net, and the principal improvement of V-Net, is that each stage learns a residual function, which allows convergence in a fraction of the time required by a similar network that does not learn residual functions.

The 3D dilated multifiber network (DMFNet) builds upon the multifiber unit [33], which uses efficient group convolution and introduces a weighted 3D dilated convolution operation to gain multiscale image representation for segmentation.

3.2. Gestational Age Analysis

We analyzed the effect of different gestational ages on algorithm performance. The fetal brain undergoes a variety of changes throughout development, such as increased brain volume, neuronal migration, and synaptogenesis. As a result, tissue contrast changes dramatically, particularly in the cortex. For example, as gestational age increases, the brain gyrus in the group 28–35 GW becomes narrower and the border between white matter and gray matter becomes blurred. Partial volume effects caused by tall and narrow gyri result in poor gray matter/white matter border visibility in certain regions [34]. Therefore, the random error in segmentation of gray matter and white matter between 28–35 GW may increase. The lateral ventricles change from the fetal type (vesicular and bicornuate) to the adult type with increasing gestational age, and the volume ratio of the lateral ventricles to brain increases between 10 and 13 GW, corresponding to the blistering phase of the ventricular system. At 28–35 weeks, the thickness of the hemispheric mantle increases exponentially, resulting in a decrease in the volume ratio of the lateral ventricles to brain [35]. For our study, we used 80 fetal MR scans with gestational ages ranging from 20 to 35 weeks. We divided these images into two age groups (20–27 weeks and 28–35 weeks) and analyzed the impact of different gestational ages on the performance of the segmentation algorithm through comparison experiments between these two groups.

3.3. Evaluation Metrics

The dataset was divided into a training set, a validation set, and a test set at a ratio of 6:1:1. On the training and validation sets, we adopted ten-fold cross-validation to ensure the reliability of the results. We used six evaluation metrics to evaluate the performance of the models: (1) the Dice similarity coefficient (DSC), which measures the similarity between Ground Truth and Prediction; (2) the segmentation precision (PRE); (3) the segmentation sensitivity (SEN); (4) the segmentation specificity (SPC); (5) the Volume Similarity (VS), which compares the volumes of the two segmentations; and (6) the Hausdorff95 Distance (HD95), defined as the 95th percentile of the surface distances between Ground Truth and Prediction. All the evaluation metrics were calculated volume by volume, and each volume represented a specific tissue region of each patient.

In the above equations, TP, FP, TN, and FN represent the numbers of true positives, false positives, true negatives, and false negatives, respectively. X and Y represent the Prediction and Ground Truth, respectively. The lower the HD95 value, the higher the segmentation accuracy. For the other metrics, larger values indicate better segmentation performance. In addition, we also reported the number of parameters and floating point operations (FLOPs) of each model. The smaller the number of parameters and FLOPs, the fewer computational resources the model requires.
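The overlap-based metrics can be computed directly from the four confusion counts. The sketch below uses the standard definitions (DSC = 2TP / (2TP + FP + FN), PRE = TP / (TP + FP), SEN = TP / (TP + FN), SPC = TN / (TN + FP), VS = 1 − |FN − FP| / (2TP + FP + FN)); these are the usual conventions and are assumed, not confirmed, to match the formulas used in this work. HD95 is omitted because it requires surface distances, and the function names are hypothetical.

```python
def confusion_counts(pred, truth):
    """Count TP, FP, TN, FN for two binary label sequences of equal length."""
    tp = sum(1 for p, g in zip(pred, truth) if p == 1 and g == 1)
    fp = sum(1 for p, g in zip(pred, truth) if p == 1 and g == 0)
    tn = sum(1 for p, g in zip(pred, truth) if p == 0 and g == 0)
    fn = sum(1 for p, g in zip(pred, truth) if p == 0 and g == 1)
    return tp, fp, tn, fn


def overlap_metrics(pred, truth):
    """Standard overlap metrics; assumes the tissue is present in both volumes."""
    tp, fp, tn, fn = confusion_counts(pred, truth)
    return {
        "DSC": 2 * tp / (2 * tp + fp + fn),
        "PRE": tp / (tp + fp),
        "SEN": tp / (tp + fn),
        "SPC": tn / (tn + fp),
        "VS": 1 - abs(fn - fp) / (2 * tp + fp + fn),
    }


# Toy example: eight voxels of one tissue, flattened.
truth = [1, 1, 1, 0, 0, 0, 0, 0]
pred = [1, 1, 0, 1, 0, 0, 0, 0]

metrics = overlap_metrics(pred, truth)
print(metrics)
```

In this toy case the predicted and true volumes are equal in size but only partly overlap, so VS is 1 while DSC is about 0.67, which illustrates why VS is reported alongside the overlap metrics rather than instead of them.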

3.4. Evaluation Results

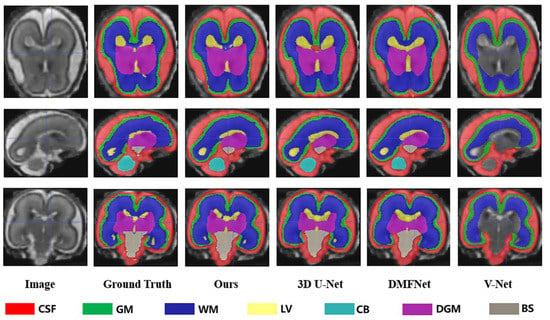

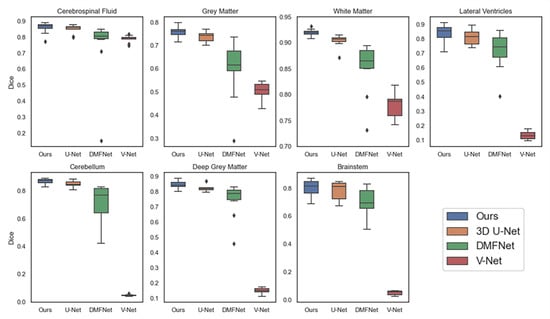

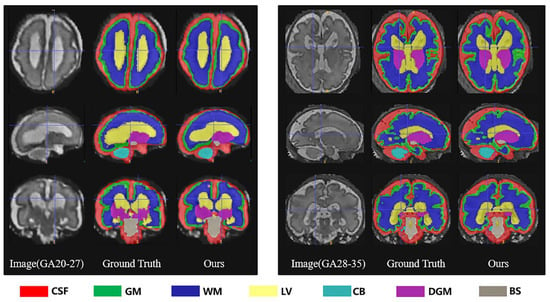

As demonstrated in Table 1, our method outperformed the other methods overall, with the best average segmentation across the 7 tissues on all evaluation metrics. DSC, VS, PRE, SEN, and SPC all achieved the highest values, at (83.79 ± 3.36)%, (84.84 ± 3.23)%, (85.73 ± 3.75)%, (84.32 ± 5.22)%, and (99.33 ± 0.15)%, respectively, and HD95 was the smallest, at (35.66 ± 2.07) mm. In terms of individual tissues, our method achieved better evaluation metrics for cerebrospinal fluid, gray matter, ventricles, cerebellum, and brainstem, whereas the best segmentation of white matter and deep gray matter was obtained with 3D U-Net. Unexpectedly, the ventricles, cerebellum, deep gray matter, and brainstem were not fully segmented by V-Net. Examples for visual comparison are shown in Figure 4. Taking the ventricle as an example, only our method clearly identified the boundary between it and other tissues, such as white matter and deep gray matter, giving the result closest to the ground truth, whereas the other methods either blurred the boundary or failed to find the ventricle. Figure 5 shows box plots that further visualize the differences in the Dice results. All four models segmented CSF relatively well, with Dice values concentrated in a high range (0.8–0.9). For gray matter and white matter segmentation, the interquartile range (IQR) of DMFNet and V-Net was large, indicating a more dispersed distribution of Dice values. Table 2 shows the number of parameters and FLOPs for the four models. Our model had 24.21 M fewer parameters than 3D U-Net, and its FLOPs were 85.94% lower. DMFNet had the lowest number of parameters and FLOPs, but its segmentation performance was much lower than that of our method and 3D U-Net. Taken together, the results in Table 1 and Table 2 demonstrated that our network was the better model when segmentation performance and computing resource utilization were evaluated jointly.

Table 1.

Comparison of segmentation results of different methods (V-Net, DMFNet, 3D U-Net and Ours) on different tissues based on quantitative performance metrics (DSC, VS, HD95, PRE, SEN, SPC). The best value for each metric in each tissue is marked in bold.

Figure 4.

Segmentation results of different methods (Ours, 3D U-Net, DMFNet and V-Net) in the axial, sagittal and coronal planes, respectively.

Figure 5.

Analysis of Dice values of four different methods (Ours, 3D U-Net, DMFNet and V-Net) on seven tissues. Diamonds indicate outliers.

Table 2.

Comparison of the number of parameters and FLOPs of different methods (V-Net, DMFNet, 3D U-Net and Ours).

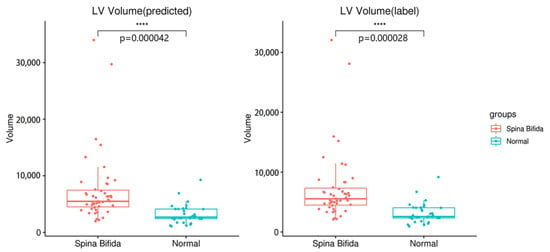

As noted in the Introduction, congenital disorders such as spina bifida may lead to enlarged ventricles. Here, we divided the data into a normal group and a pathological group (with spina bifida), then segmented the two groups with our network and calculated the volume of the ventricles. The volumes of the two groups are shown in Figure 6. The comparison between groups showed that the ventricles in the pathological group were significantly enlarged compared with the normal group (p < 0.0001), suggesting that changes in ventricular volume measured through fetal brain MRI tissue segmentation can serve as an important reference for diagnosing related congenital diseases.

Figure 6.

Analysis of ventricular volumes in the normal group and pathological group (spina bifida). **** represents p value less than 0.0001.

Table 3 shows the evaluation results of several metrics for two gestational age groups (20–27 GW and 28–35 GW), which we used to analyze the effect of gestational age on the performance of our segmentation algorithm. In the 28–35 GW group, the segmentation performance of both gray matter and white matter became worse, with a significant decrease in DSC, VS, PRE, and SEN and an increase in HD95. The segmentation of the lateral ventricles was also poorer in the 28–35 GW group. This supported our hypothesis that segmentation was more difficult due to the decrease in the ventricle-to-brain volume ratio with increasing gestational age. Overall, the 28–35 GW group showed a slight increase in segmentation difficulty compared to the 20–27 GW group, with a 2.15% relative decrease in mean DSC (%) from 89.88 to 87.95 and a 1.98% relative decrease in VS (%) from 91.58 to 89.77. We also provide a few examples for visual comparison of the two age groups in Figure 7. The cerebral cortex expanded with increasing gestational age, and the morphology of the cerebral gyri and sulci became more pronounced. The discernibility of the gray/white matter boundary became variable in some regions due to partial volume effects produced by the gyri. The boundaries of the gray matter (green) segmentation became harder to distinguish from cerebrospinal fluid (red) and white matter (dark blue). In the 28–35 GW group, the volume of the ventricles (yellow) became significantly smaller and their shape changed so that it was no longer simply vesicular or bicornuate, which also led to an increase in the random error of segmentation.
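The two percentages quoted for the drop in mean DSC and VS are relative decreases rather than differences in percentage points, which a short calculation on the values in the text confirms. The function name `relative_decrease` is hypothetical.

```python
def relative_decrease(before: float, after: float) -> float:
    """Relative decrease from `before` to `after`, in percent of `before`."""
    return 100.0 * (before - after) / before


# Mean DSC (%) and VS (%) for the 20-27 GW and 28-35 GW groups, as quoted in the text.
dsc_drop = relative_decrease(89.88, 87.95)
vs_drop = relative_decrease(91.58, 89.77)

print(f"DSC: {dsc_drop:.2f}%  VS: {vs_drop:.2f}%")
```

In absolute terms, the corresponding differences are 1.93 and 1.81 percentage points.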

Table 3.

Comparison of segmentation results of different gestational age groups (20–27 weeks and 28–35 weeks) based on quantitative performance metrics (DSC, VS, HD95, PRE, SEN, SPC).

Figure 7.

Segmentation results of different gestational age groups (20–27 weeks and 28–35 weeks) in axial, sagittal and coronal planes respectively.
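For readers who want to reproduce the overlap metrics in Table 3, the sketch below uses the commonly cited confusion-matrix definitions of DSC, VS, PRE, SEN, and SPC for a single binary tissue mask; the authoritative definitions are those given in the methods of this paper, and HD95 is omitted because it requires surface distances. The function names are illustrative. The last helper reproduces the relative decreases quoted above.

```python
def _ratio(num, den):
    """Safe division that returns NaN for an empty denominator."""
    return num / den if den else float("nan")


def confusion_counts(pred, truth):
    """Count TP, FP, FN, TN for two equal-length binary (0/1) sequences."""
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    tn = len(pred) - tp - fp - fn
    return tp, fp, fn, tn


def overlap_metrics(pred, truth):
    """Standard overlap metrics for one tissue class (flattened masks)."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return {
        "DSC": _ratio(2 * tp, denom),               # Dice similarity coefficient
        "VS": 1.0 - _ratio(abs(fn - fp), denom),    # volume similarity
        "PRE": _ratio(tp, tp + fp),                 # precision
        "SEN": _ratio(tp, tp + fn),                 # sensitivity (recall)
        "SPC": _ratio(tn, tn + fp),                 # specificity
    }


def relative_decrease(old, new):
    """Relative decrease from old to new, in percent of the old value."""
    return (old - new) / old * 100.0


# Relative decreases between the two gestational age groups, as quoted in the text.
dsc_drop = round(relative_decrease(89.88, 87.95), 2)
vs_drop = round(relative_decrease(91.58, 89.77), 2)
```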

4. Discussion

Congenital diseases arise during the fetal period and are caused by abnormal fetal development due to external or internal adverse factors during growth in the womb. Once they appear, they place a serious burden on families and society. Timely detection and treatment of fetal congenital diseases help to reduce mortality. Therefore, it is essential to conduct regular health analyses of the developing fetus before delivery. Doctors usually assess fetal health through changes in fetal brain tissues, and fetal MRI has become an important auxiliary tool for this purpose.

However, the fetal brain is small and has a complex tissue structure, and the intensity of different tissues is not uniform but varies gradually across the image space. Higher field strength scanners result in greater intensity variation. The partial volume (PV) effect, in which different tissues mix within a single voxel, poses additional difficulties for accurately delineating tissue boundaries. For example, the mixing of CSF and GM at the CSF-CGM (cortical GM) boundary results in an intensity similar to that of WM, so voxels on this boundary can be mislabeled as WM. In addition to lower contrast, smaller structures such as the cerebellum and brainstem occupy few voxels, so a network architecture may not fully extract their features. Therefore, to help physicians analyze tissue changes more quickly and accurately to determine congenital diseases, it is necessary to design a network structure with stronger feature extraction capability that can analyze global contextual information of tissues and perform accurate segmentation in a short period of time.
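The partial volume effect described above can be illustrated with a simple linear mixing model. The intensity values below are hypothetical, normalised numbers chosen only to make the effect visible, and the nearest-intensity classifier is a deliberately naive stand-in for a real segmentation method.

```python
# Hypothetical, normalised T2-weighted intensities chosen only for illustration.
CSF, CGM, WM = 1.00, 0.30, 0.65


def mixed_intensity(fraction_csf, i_csf=CSF, i_cgm=CGM):
    """Linear partial-volume model: the voxel signal is the volume-weighted mean."""
    if not 0.0 <= fraction_csf <= 1.0:
        raise ValueError("fraction_csf must lie in [0, 1]")
    return fraction_csf * i_csf + (1.0 - fraction_csf) * i_cgm


def nearest_tissue(intensity):
    """Label a voxel by the closest pure-tissue intensity (naive classifier)."""
    refs = {"CSF": CSF, "CGM": CGM, "WM": WM}
    return min(refs, key=lambda name: abs(refs[name] - intensity))


# A boundary voxel that is half CSF and half cortical GM looks like WM.
boundary_label = nearest_tissue(mixed_intensity(0.5))
```

With these assumed values, a voxel containing equal parts CSF and cortical GM has an intensity of about 0.65 and is therefore assigned to WM by an intensity-only rule, which is why contextual information is needed at tissue boundaries.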

V-Net, a network modified from U-Net, was first proposed specifically for medical image analysis. Although it demonstrated good segmentation performance on prostate MR images, it did not perform well in our fetal MRI tissue segmentation task, where it completely failed to segment the cerebellum, deep gray matter, and brainstem. A possible reason is that it did not make full use of the global context information of the various tissue structures, so its feature extraction was not strong enough. Compared with V-Net, DMFNet segmented these three brain tissues better because its 3D dilated convolution can build multiscale feature representations and help extract smaller tissue patches; this module was also used in our network. In previous studies on fetal MRI brain tissue segmentation, U-Net, the most popular convolutional neural network, was commonly applied either as 2D networks along three directions or directly as a 3D network. The 3D U-Net is a powerful model and segmented all seven tissues better than V-Net and DMFNet in our task. However, it has too many parameters and takes too long to run for a quick diagnosis. Moreover, medical imaging datasets are typically small. Therefore, if the network structure is too complex and has too many parameters, the trained model may suffer from overfitting and bias.
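To make the multiscale argument for dilated convolution concrete, the sketch below computes how far a dilated kernel reaches along one axis and how the receptive field grows when stride-1 dilated layers are stacked. The dilation rates and channel counts used here are illustrative assumptions, not the settings of DMFNet or of our network.

```python
def effective_kernel_size(kernel_size, dilation):
    """Span (in voxels, per axis) covered by one dilated kernel."""
    return 1 + (kernel_size - 1) * dilation


def stacked_receptive_field(kernel_size, dilations):
    """Per-axis receptive field of stacked stride-1 dilated convolutions."""
    rf = 1
    for d in dilations:
        rf += (kernel_size - 1) * d
    return rf


def conv3d_weight_count(kernel_size, in_channels, out_channels):
    """Weights of a dense 3D convolution (bias excluded); independent of dilation."""
    return kernel_size ** 3 * in_channels * out_channels


# Parallel branches with assumed rates 1, 2, 3 see 3, 5 and 7 voxels per axis,
# which yields features at several scales for the same number of weights.
branch_spans = [effective_kernel_size(3, d) for d in (1, 2, 3)]
```

The point of the comparison is that dilation enlarges the region a kernel covers without adding weights, which matters when the parameter budget is tight and the training set is small.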

Therefore, to meet three requirements, namely (1) better feature extraction capability, (2) use of global contextual information, and (3) fewer parameters and shorter training time, we designed a lightweight tissue segmentation model that combined a CNN with the self-attention mechanism by introducing the contextual transformer block (CoT-Block), a novel attentional structure block, at each layer of the encoder and decoder. Previous studies have proposed different attention mechanisms for applications such as image classification and image segmentation. These mechanisms take different forms, such as spatial attention, channel attention, and self-attention; the module introduced in the proposed model is a self-attention module. The original self-attention mechanism in the NLP field mainly solves the long-distance dependence problem by computing the interactions between words. In computer vision, the inputs are not sequences but large pixel matrices, yet both share a common feature: each input sample contains multiple elements that are not independent but related to varying degrees, so that they serve as context for one another. In the vision domain, a simple migration of self-attention from NLP to CV is to perform self-attention directly over the feature vectors at different spatial locations within an image, letting each element of the input extract useful information from the others before the result is passed to the subsequent network. Most existing techniques directly use this traditional self-attention mechanism and ignore the explicit modeling of rich contexts among neighboring keys, which severely limits the capacity of self-attention learning over feature maps during training. In contrast, we first used a 3 × 3 × 3 convolution to encode the context of surrounding voxels, obtaining static context information from local adjacent positions to guide the learning of the dynamic attention matrix. We used this block to replace a 3 × 3 × 3 convolution in each layer of the encoder and decoder, which improved the representational properties of the deep network and enhanced the ability of the model to extract features from different fetal tissues. In addition, a hybrid dilated convolution module was introduced in the last layer of the decoder to expand the receptive field, obtain global contextual information in fetal MRI, and capture the multi-scale 3D spatial correlation of tissues, which facilitated the segmentation of smaller tissues such as the cerebellum and brainstem and improved segmentation accuracy. The overall parameter size of our model was 66.09 M and the time per training epoch was 33 s, compared with 90.3 M and 90 s for 3D U-Net, indicating lower space and time complexity.
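The idea of a static context guiding a dynamic attention matrix can be sketched in PyTorch as below. This is a simplified 3D variant written for illustration, not the implementation used in our network: the class name, the grouped convolution, the channel reduction factor, and the use of a softmax over all spatial positions in place of local matrix multiplication are all assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class CoTBlock3D(nn.Module):
    """Simplified contextual-transformer-style block for 5D tensors (B, C, D, H, W)."""

    def __init__(self, channels, kernel_size=3, groups=4, reduction=4):
        super().__init__()
        pad = kernel_size // 2
        # Static context: a k x k x k convolution over neighbouring keys.
        self.key_embed = nn.Sequential(
            nn.Conv3d(channels, channels, kernel_size, padding=pad,
                      groups=groups, bias=False),
            nn.BatchNorm3d(channels),
            nn.ReLU(inplace=True),
        )
        # Values: a pointwise (1 x 1 x 1) embedding.
        self.value_embed = nn.Sequential(
            nn.Conv3d(channels, channels, 1, bias=False),
            nn.BatchNorm3d(channels),
        )
        mid = max(2 * channels // reduction, 1)
        # Attention from the concatenation [static keys, queries] via two 1x1x1 convs.
        self.attention_embed = nn.Sequential(
            nn.Conv3d(2 * channels, mid, 1, bias=False),
            nn.BatchNorm3d(mid),
            nn.ReLU(inplace=True),
            nn.Conv3d(mid, channels, 1),
        )

    def forward(self, x):
        b, c, d, h, w = x.shape
        k_static = self.key_embed(x)                       # static context
        v = self.value_embed(x).reshape(b, c, -1)
        att = self.attention_embed(torch.cat([k_static, x], dim=1))
        att = F.softmax(att.reshape(b, c, -1), dim=-1)     # dynamic attention
        k_dynamic = (att * v).reshape(b, c, d, h, w)       # dynamic context
        return k_static + k_dynamic                        # fuse both contexts
```

Because the block preserves the input shape, it can stand in for a 3 × 3 × 3 convolution with equal input and output channels; `channels` must be divisible by `groups`.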

We also analyzed the effect of gestational age on algorithm performance. The fetal brain undergoes various changes throughout development. As gestational age increases, the cerebral cortex expands, the morphology of the gyri and sulci becomes more pronounced, and the narrowed gyri make it more difficult to distinguish the boundary between white matter and gray matter, increasing random errors. Increasing gestational age also causes the ventricles to change from the fetal type (vesicular and bicornuate) to the adult type and to become smaller, while the thickness of the hemispheric mantle increases exponentially, so their volume ratio decreases and segmentation becomes more difficult. To test the hypothesis that congenital diseases cause tissue changes, we conducted a verification experiment comparing the tissue characteristics of normal and pathological brains. We divided the data into a normal group and a pathological group with spina bifida and calculated the ventricular volumes from the segmentation results of the two groups. The comparison showed a significant difference in ventricular volume between the pathological group and the normal group, suggesting that tissue changes measured on fetal brain MRI can serve as a reference for diagnosing congenital diseases.

We used 3D convolutional kernels to build our 3D network. To reduce the computational cost as much as possible, we cropped each image, removing only the excess background so that the fetal brain remained intact, and rescaled it to 128 × 128 × 128. We also tried batch sizes of 2, 4, 5, and 8, and the best segmentation result was obtained with a batch size of 2.
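The cropping and rescaling step can be sketched with NumPy as follows. The background threshold, the function names, and the choice of nearest-neighbour resampling are assumptions made for this illustration; the interpolation actually used is not specified in this section.

```python
import numpy as np


def crop_to_foreground(volume, threshold=0.0):
    """Crop a 3D array to the bounding box of voxels above the threshold."""
    mask = volume > threshold
    if not mask.any():
        return volume  # nothing to crop in an empty volume
    coords = np.argwhere(mask)
    lo = coords.min(axis=0)
    hi = coords.max(axis=0) + 1
    return volume[lo[0]:hi[0], lo[1]:hi[1], lo[2]:hi[2]]


def resize_nearest(volume, out_shape=(128, 128, 128)):
    """Nearest-neighbour rescaling of a 3D array to out_shape."""
    idx = [
        np.round(np.linspace(0, n - 1, m)).astype(int)
        for n, m in zip(volume.shape, out_shape)
    ]
    return volume[np.ix_(*idx)]


def preprocess(volume, out_shape=(128, 128, 128)):
    """Remove excess background, then rescale to the network input size."""
    return resize_nearest(crop_to_foreground(volume), out_shape)
```

Nearest-neighbour resampling keeps label maps valid because it never creates new label values; for intensity images, a smoother interpolation would usually be preferred.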

5. Conclusions

In this work, we proposed an automatic segmentation algorithm for fetal brain MRI that segments seven tissues of the fetal brain to help diagnose congenital diseases or evaluate the effect of an intervention or treatment. We introduced a novel network structure, a feature pyramid model based on the contextual transformer block, with the CoT-Block placed in both the encoder and decoder. The CoT-Block integrates contextual information mining and self-attention learning into a unified architecture to guide the learning of the dynamic attention matrix, thus enhancing image feature representation. In addition, a hybrid dilated convolution module was introduced in the last layer of the decoder to expand the receptive field and retain detailed spatial information, so that global contextual information in medical images is extracted effectively. Comparison experiments showed that our model outperformed several advanced deep learning network models.

Because the amount of data in this work was small, in subsequent studies we will collect as much fetal brain data as possible from different institutions for a more detailed analysis, or introduce transfer learning to compensate for the limited training samples, to further verify the performance of our algorithm. In addition, during data acquisition the fetal position in the maternal body changes constantly and irregularly, so motion artifacts are inevitable. To address artifacts such as intensity nonuniformity generated during scanning, an adversarial data augmentation technique can be used to train the neural network by simulating the intensity inhomogeneity (bias field) caused by common MRI artifacts, which can improve the generalization ability and robustness of the model and alleviate the problem of data scarcity. Because our method segments the brain into seven tissue structures, it allows a detailed brain tissue analysis for a specific congenital disease and may help to better predict surgical outcomes, for example in fetal patients with spina bifida. We can reconstruct the fetal brain at pre- and post-treatment time points, use our model to automatically segment the tissues, and register the post-treatment image to the pre-treatment image to determine tissue changes between the two time points. Analyzing the lesion size and type and the effect of gestational age at the time of treatment on tissue morphological changes could then help to better predict surgical outcomes and provide guidance for expectant parents.
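As a rough illustration of bias-field augmentation, the sketch below multiplies a volume by a smooth, low-order polynomial field with randomly sampled coefficients. It is a plain random augmentation and not the adversarial variant mentioned above, in which the field parameters would be optimised against the network; the polynomial form, the `strength` parameter, and the function names are assumptions.

```python
import numpy as np


def random_bias_field(shape, strength=0.3, seed=None):
    """Smooth multiplicative field exp(p(x, y, z)) with a random low-order polynomial p."""
    rng = np.random.default_rng(seed)
    axes = [np.linspace(-1.0, 1.0, n) for n in shape]
    x, y, z = np.meshgrid(*axes, indexing="ij")
    c = rng.uniform(-strength, strength, size=6)
    log_field = (c[0] * x + c[1] * y + c[2] * z
                 + c[3] * x * y + c[4] * y * z + c[5] * x * z)
    return np.exp(log_field)  # strictly positive, equal to 1 where p is 0


def apply_bias_field(volume, field):
    """Simulate intensity inhomogeneity by voxel-wise multiplication."""
    return volume * field
```

Since the field only changes intensities, the tissue labels of the original volume can be reused unchanged for the augmented sample.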

Author Contributions

Conceptualization, X.H., Y.L. (Yuhan Li), K.Q., A.G., B.Z. and X.L.; data curation, X.H. and Y.L. (Yang Liu); formal analysis, X.H., Y.L. (Yuhan Li), K.Q., A.G. and B.Z.; funding acquisition, D.L. and X.L.; investigation, X.H. and Y.L. (Yang Liu); methodology, X.H., A.G. and B.Z.; project administration, X.H., D.L. and X.L.; resources, X.L.; software, X.H., A.G. and B.Z.; supervision, X.H., D.L. and X.L.; validation, X.H. and Y.L. (Yuhan Li); visualization, X.H. and K.Q.; writing—original draft, X.H. and X.L.; writing—review and editing, X.H. and X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Shenzhen Overseas High-level Talent Innovation and Entrepreneurship Special Fund (Peacock Plan) (KQTD20180413181834876), Shenzhen Science and Technology Program (KCXFZ20211020163408012), and Key Laboratory for Magnetic Resonance and Multimodality Imaging of Guangdong Province (2020B1212060051).

Institutional Review Board Statement

The ethical committee of the Canton of Zurich, Switzerland approved the prospective and retrospective studies that collected and analyzed the MRI data (Decision numbers: 2017-00885, 2016-01019, 2017-00167), and a waiver for an ethical approval was acquired for the release of an anonymous dataset for non-medical research purposes.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The fetal data used to support the findings of this study were provided by the University Children’s Hospital Zurich and are available at https://doi.org/10.7303/syn23747212 (accessed on 5 September 2021). The dataset is cited at relevant places within the text as reference [18].

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

References

1. Gholipour, A.; Akhondi-Asl, A.; Estroff, J.A.; Warfield, S.K. Multi-Atlas Multi-Shape Segmentation of Fetal Brain MRI for Volumetric and Morphometric Analysis of Ventriculomegaly. NeuroImage 2012, 60, 1819–1831.

2. Payette, K.; Moehrlen, U.; Mazzone, L.; Ochsenbein-Kölble, N.; Tuura, R.; Kottke, R.; Meuli, M.; Jakab, A. Longitudinal Analysis of Fetal MRI in Patients with Prenatal Spina Bifida Repair. In Smart Ultrasound Imaging and Perinatal, Preterm and Paediatric Image Analysis; Springer: Cham, Switzerland, 2019; Volume 11798, pp. 161–170.

3. Payette, K.; Kottke, R.; Jakab, A. Efficient Multi-Class Fetal Brain Segmentation in High Resolution MRI Reconstructions with Noisy Labels. In Medical Ultrasound, and Preterm, Perinatal and Paediatric Image Analysis; Springer: Cham, Switzerland, 2020; Volume 12437, pp. 295–304.

4. Licht, D.J.; Wang, J.; Silvestre, D.W.; Nicolson, S.C.; Montenegro, L.M.; Wernovsky, G.; Tabbutt, S.; Durning, S.M.; Shera, D.M.; Gaynor, J.W.; et al. Preoperative Cerebral Blood Flow Is Diminished in Neonates with Severe Congenital Heart Defects. J. Thorac. Cardiovasc. Surg. 2004, 128, 841–849.

5. Lachmann, R.; Chaoui, R.; Moratalla, J.; Picciarelli, G.; Nicolaides, K.H. Posterior Brain in Fetuses with Open Spina Bifida at 11 to 13 Weeks. Prenat. Diagn. 2011, 31, 103–106.

6. Ghi, T.; Pilu, G.; Falco, P.; Segata, M.; Carletti, A.; Cocchi, G.; Santini, D.; Bonasoni, P.; Tani, G.; Rizzo, N. Prenatal Diagnosis of Open and Closed Spina Bifida. Ultrasound Obstet. Gynecol. 2006, 28, 899–903.

7. Habas, P.A.; Kim, K.; Rousseau, F.; Glenn, O.A.; Barkovich, A.J.; Studholme, C. Atlas-Based Segmentation of Developing Tissues in the Human Brain with Quantitative Validation in Young Fetuses. Hum. Brain Mapp. 2010, 31, 1348–1358.

8. Habas, P.A.; Kim, K.; Corbett-Detig, J.M.; Rousseau, F.; Glenn, O.A.; Barkovich, A.J.; Studholme, C. A Spatiotemporal Atlas of MR Intensity, Tissue Probability and Shape of the Fetal Brain with Application to Segmentation. NeuroImage 2010, 53, 460–470.

9. Serag, A.; Kyriakopoulou, V.; Rutherford, M.A.; Edwards, A.D.; Hajnal, J.V.; Aljabar, P.; Counsell, S.J.; Boardman, J.P.; Rueckert, D. A Multi-Channel 4D Probabilistic Atlas of the Developing Brain: Application to Fetuses and Neonates. Ann. BMVA 2012, 2012, 1–14.

10. Wright, R.; Kyriakopoulou, V.; Ledig, C.; Rutherford, M.A.; Hajnal, J.V.; Rueckert, D.; Aljabar, P. Automatic Quantification of Normal Cortical Folding Patterns from Fetal Brain MRI. NeuroImage 2014, 91, 21–32.

11. Ledig, C.; Wright, R.; Serag, A.; Aljabar, P. Neonatal Brain Segmentation Using Second Order Neighborhood Information. In Proceedings of the Workshop on Perinatal and Paediatric Imaging: PaPI, Medical Image Computing and Computer-Assisted Intervention: MICCAI, Nice, France, 1–5 October 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 33–40.

12. Li, S.; Sui, X.; Luo, X.; Xu, X.; Liu, Y.; Goh, R. Medical Image Segmentation Using Squeeze-and-Expansion Transformers. arXiv 2021, arXiv:2105.09511.

13. Gu, Z.; Cheng, J.; Fu, H.; Zhou, K.; Hao, H.; Zhao, Y.; Zhang, T.; Gao, S.; Liu, J. CE-Net: Context Encoder Network for 2D Medical Image Segmentation. IEEE Trans. Med. Imaging 2019, 38, 2281–2292.

14. Dou, H.; Karimi, D.; Rollins, C.K.; Ortinau, C.M.; Vasung, L.; Velasco-Annis, C.; Ouaalam, A.; Yang, X.; Ni, D.; Gholipour, A. A Deep Attentive Convolutional Neural Network for Automatic Cortical Plate Segmentation in Fetal MRI. IEEE Trans. Med. Imaging 2021, 40, 1123–1133.

15. Lei, Z.; Qi, L.; Wei, Y.; Zhou, Y. Infant Brain MRI Segmentation with Dilated Convolution Pyramid Downsampling and Self-Attention. arXiv 2019, arXiv:1912.12570.

16. Khalili, N.; Lessmann, N.; Turk, E.; Claessens, N.; de Heus, R.; Kolk, T.; Viergever, M.A.; Benders, M.J.N.L.; Išgum, I. Automatic Brain Tissue Segmentation in Fetal MRI Using Convolutional Neural Networks. Magn. Reson. Imaging 2019, 64, 77–89.

17. Iqbal, A.; Khan, R.; Karayannis, T. Developing Brain Atlas through Deep Learning. Nat. Mach. Intell. 2019, 1, 277–287.

18. Payette, K.; de Dumast, P.; Kebiri, H.; Ezhov, I.; Paetzold, J.C.; Shit, S.; Iqbal, A.; Khan, R.; Kottke, R.; Grehten, P.; et al. An Automatic Multi-Tissue Human Fetal Brain Segmentation Benchmark Using the Fetal Tissue Annotation Dataset. Sci. Data 2021, 8, 167.

19. Tourbier, S.; Bresson, X.; Hagmann, P.; Thiran, J.-P.; Meuli, R.; Cuadra, M.B. An Efficient Total Variation Algorithm for Super-Resolution in Fetal Brain MRI with Adaptive Regularization. NeuroImage 2015, 118, 584–597.

20. Gholipour, A.; Warfield, S.K. Super-Resolution Reconstruction of Fetal Brain MRI. In Proceedings of the MICCAI Workshop on Image Analysis for the Developing Brain (IADB’ 2009), London, UK, 24 September 2009.

21. Kuklisova-Murgasova, M.; Quaghebeur, G.; Rutherford, M.A.; Hajnal, J.V.; Schnabel, J.A. Reconstruction of Fetal Brain MRI with Intensity Matching and Complete Outlier Removal. Med. Image Anal. 2012, 16, 1550–1564.

22. Yu, F.; Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. arXiv 2015, arXiv:1511.07122.

23. Li, Y.; Yao, T.; Pan, Y.; Mei, T. Contextual Transformer Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 1–11.

24. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. Adv. Neural Inf. Process. Syst. 2019, 32, 8026–8037.

25. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

26. Çiçek, Ö.; Abdulkadir, A.; Lienkamp, S.S.; Brox, T.; Ronneberger, O. 3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation. arXiv 2016, arXiv:1606.06650.

27. Milletari, F.; Navab, N.; Ahmadi, S.-A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; IEEE Press: New York, NY, USA, 2016; pp. 565–571.

28. Chen, C.; Liu, X.; Ding, M.; Zheng, J.; Li, J. 3D Dilated Multi-Fiber Network for Real-Time Brain Tumor Segmentation in MRI. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2019, Shenzhen, China, 13–17 October 2019; Volume 11766, pp. 184–192.

29. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; Volume 9351, pp. 234–241.

30. Hänsch, A.; Schwier, M.; Gass, T.; Morgas, T. Evaluation of Deep Learning Methods for Parotid Gland Segmentation from CT Images. J. Med. Imaging 2018, 6, 011005.

31. Huang, Q.; Sun, J.; Ding, H.; Wang, X.; Wang, G. Robust Liver Vessel Extraction Using 3D U-Net with Variant Dice Loss Function. Comput. Biol. Med. 2018, 101, 153–162.

32. An, L.; Zhang, P.; Adeli, E.; Wang, Y.; Ma, G.; Shi, F.; Lalush, D.S.; Lin, W.; Shen, D. Multi-Level Canonical Correlation Analysis for Standard-Dose PET Image Estimation. IEEE Trans. Image Process. 2016, 25, 3303–3315.

33. Chen, Y.; Kalantidis, Y.; Li, J.; Yan, S.; Feng, J. Multi-Fiber Networks for Video Recognition. In Proceedings of the 2018 Conference on Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; Volume 11205, pp. 364–380.

34. Prayer, D.; Kasprian, G.; Krampl, E.; Ulm, B.; Witzani, L.; Prayer, L.; Brugger, P.C. MRI of Normal Fetal Brain Development. Eur. J. Radiol. 2006, 57, 199–216.

35. Kinoshita, Y.; Okudera, T.; Tsuru, E.; Yokota, A. Volumetric Analysis of the Germinal Matrix and Lateral Ventricles Performed Using MR Images of Postmortem Fetuses. Am. J. Neuroradiol. 2001, 22, 382–388.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).