Classification of Acoustic Influences Registered with Phase-Sensitive OTDR Using Pattern Recognition Methods

Abstract

1. Introduction

- The possibility of detecting multiple influences at several locations simultaneously, with a small error in determining their coordinates;

- The possibility of obtaining high information content about the source of acoustic influences.

- A softmax layer takes the output of a fully connected layer;

- A support vector machine (SVM) algorithm takes the output of a fully connected layer;

- The SVM algorithm takes the same input data that are fed to the fully connected layer.
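As a minimal illustration of the first variant (a softmax layer applied to the output of the fully connected layer), the sketch below converts a vector of raw scores (logits) into class probabilities and picks the most probable class. The two-class setup, the logit values and the function names are hypothetical; the SVM variants would replace this step with a trained SVM classifier, which is not shown here.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Convert raw scores from a fully connected layer into class probabilities."""
    # Subtracting the maximum logit improves numerical stability without changing the result.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_class(logits: List[float]) -> int:
    """Return the index of the class with the highest softmax probability."""
    probs = softmax(logits)
    return max(range(len(probs)), key=probs.__getitem__)


# Hypothetical fully connected layer output for two classes (e.g. "no event" vs. "event").
fc_output = [0.3, 2.1]
print([round(p, 3) for p in softmax(fc_output)])
print(predict_class(fc_output))
```

Because softmax is monotonic, the predicted class is simply the index of the largest logit; the probabilities matter mainly for training (cross-entropy loss) and for thresholding.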

2. Materials and Methods

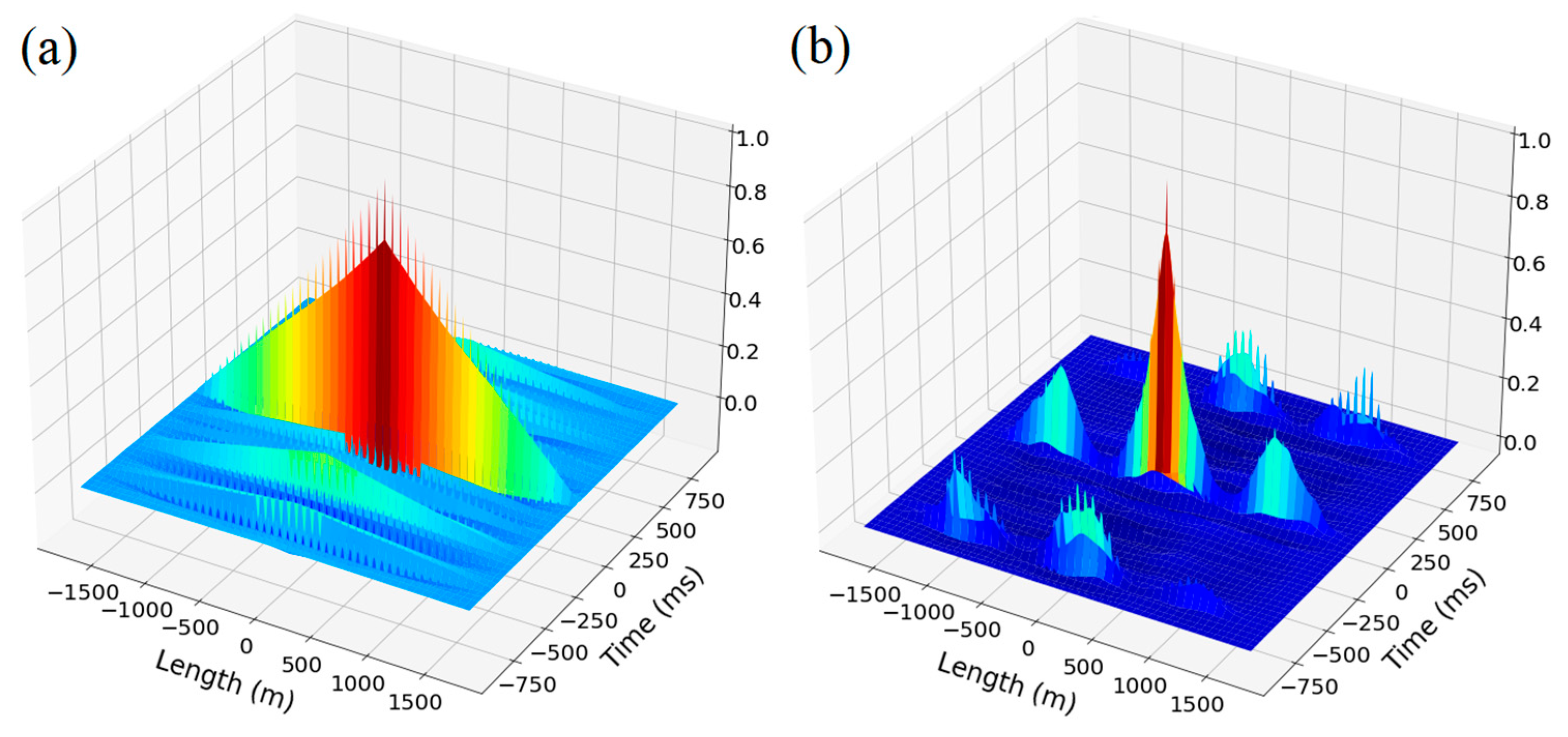

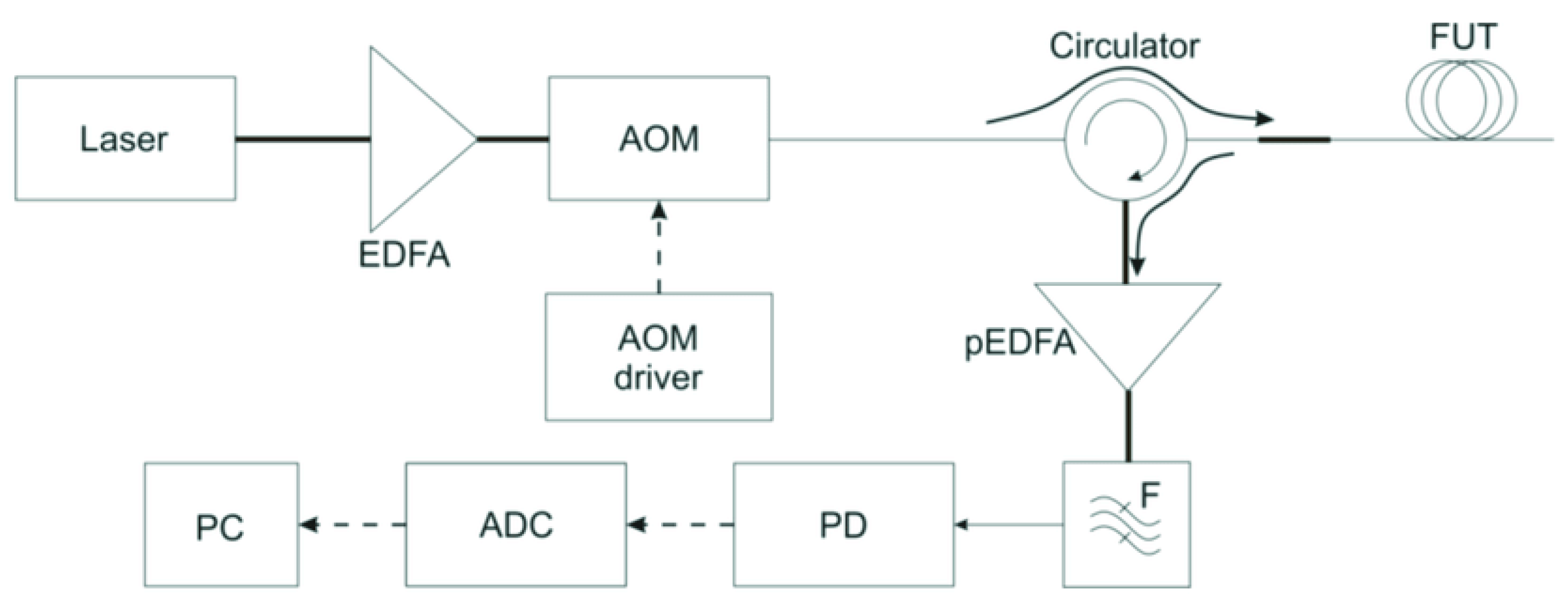

2.1. Input Data

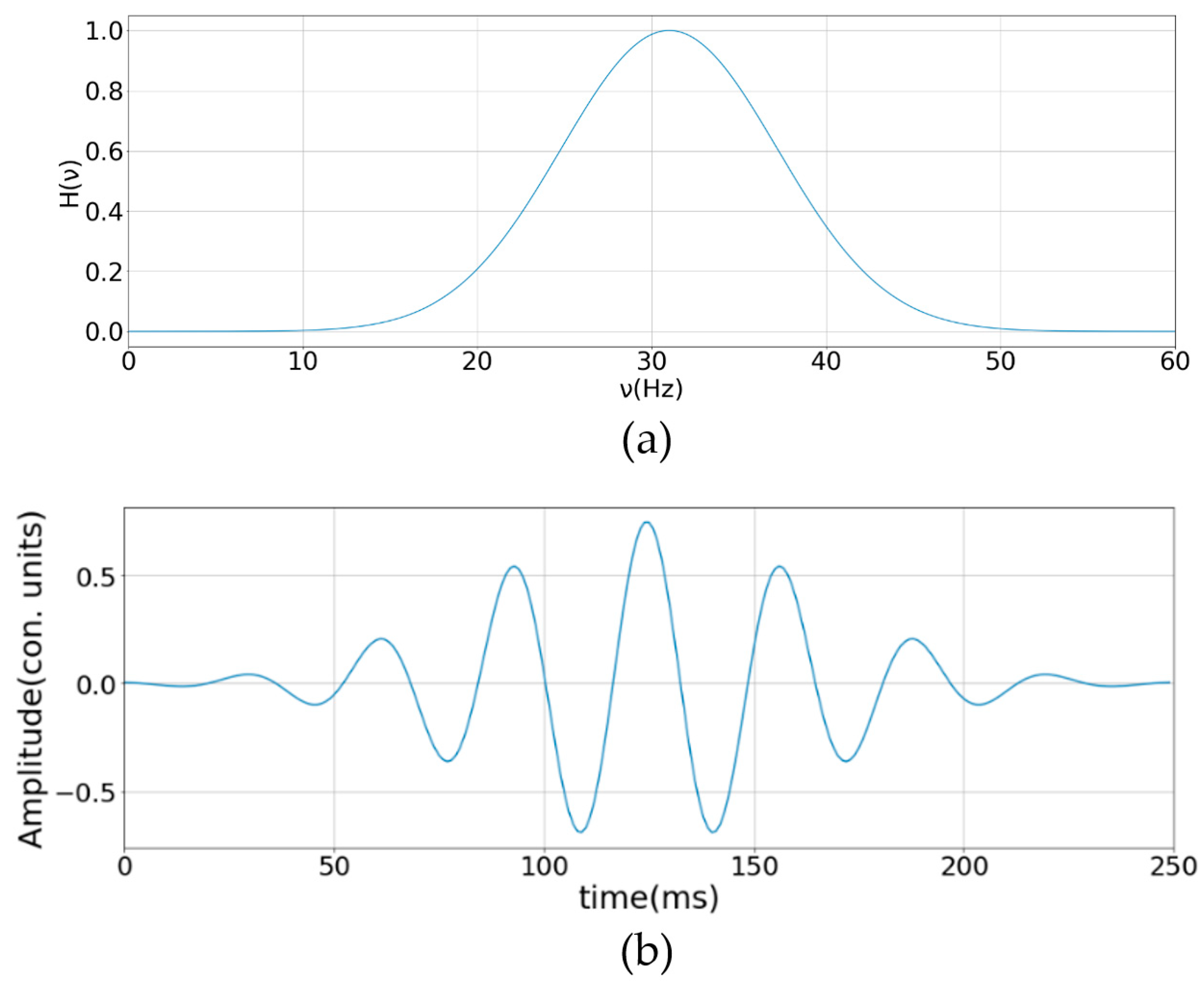

2.2. Filtering

- is a harmonic function;

- H(t) is the filter function (kernel);

- t denotes time;

- B denotes the bandwidth;

- C is the center frequency;

- is a normalizing factor.
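The symbols listed above (a harmonic term, a normalizing factor, a bandwidth B and a center frequency C) coincide with those of the complex Morlet wavelet as parameterized, for example, by the `cmorB-C` family in PyWavelets: H(t) = (πB)^(-1/2) · exp(-t²/B) · exp(j2πCt). Assuming the filter kernel has this form (the original equation is not reproduced in this text), the sketch below samples the kernel and applies it to a signal by direct convolution, returning the magnitude of the complex output. The function names and all numeric values (sampling rate, B, C, kernel half-width) are hypothetical illustrations, not the authors' settings.

```python
import cmath
import math
from typing import List


def morlet_kernel(B: float, C: float, fs: float, half_width: float) -> List[complex]:
    """Sample H(t) = (pi*B)^-0.5 * exp(-t^2/B) * exp(j*2*pi*C*t) on [-half_width, half_width].

    Assumed form (complex Morlet). B is the bandwidth parameter (s^2 here, so that
    t^2/B is dimensionless), C the center frequency in Hz, fs the sampling rate in Hz.
    """
    n = int(round(half_width * fs))
    norm = 1.0 / math.sqrt(math.pi * B)  # normalizing factor
    kernel = []
    for k in range(-n, n + 1):
        t = k / fs
        envelope = math.exp(-t * t / B)  # Gaussian envelope
        harmonic = cmath.exp(2j * math.pi * C * t)  # harmonic (carrier) term
        kernel.append(norm * envelope * harmonic)
    return kernel


def filter_signal(x: List[float], kernel: List[complex]) -> List[float]:
    """Convolve x with the kernel ('same'-length output) and return |y|."""
    n = len(kernel) // 2
    out = []
    for i in range(len(x)):
        acc = 0j
        for k, h in enumerate(kernel):
            j = i + n - k  # y[i] = sum_k h[k] * x[i + n - k]
            if 0 <= j < len(x):
                acc += h * x[j]
        out.append(abs(acc))
    return out


# Hypothetical example: 1 s of data at 1 kHz, kernel tuned to 50 Hz.
fs = 1000.0
B, C = 0.001, 50.0
kernel = morlet_kernel(B, C, fs, half_width=0.1)
t = [i / fs for i in range(1000)]
y_in = filter_signal([math.sin(2 * math.pi * 50.0 * ti) for ti in t], kernel)
y_out = filter_signal([math.sin(2 * math.pi * 200.0 * ti) for ti in t], kernel)
# Compare responses away from the edges: the in-band tone passes, the 200 Hz tone is suppressed.
print(max(y_in[200:800]), max(y_out[200:800]))
```

In this parameterization a larger B widens the Gaussian envelope in time and therefore narrows the passband around C; direct convolution is used only for clarity, and an FFT-based convolution would be the usual choice for long traces.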

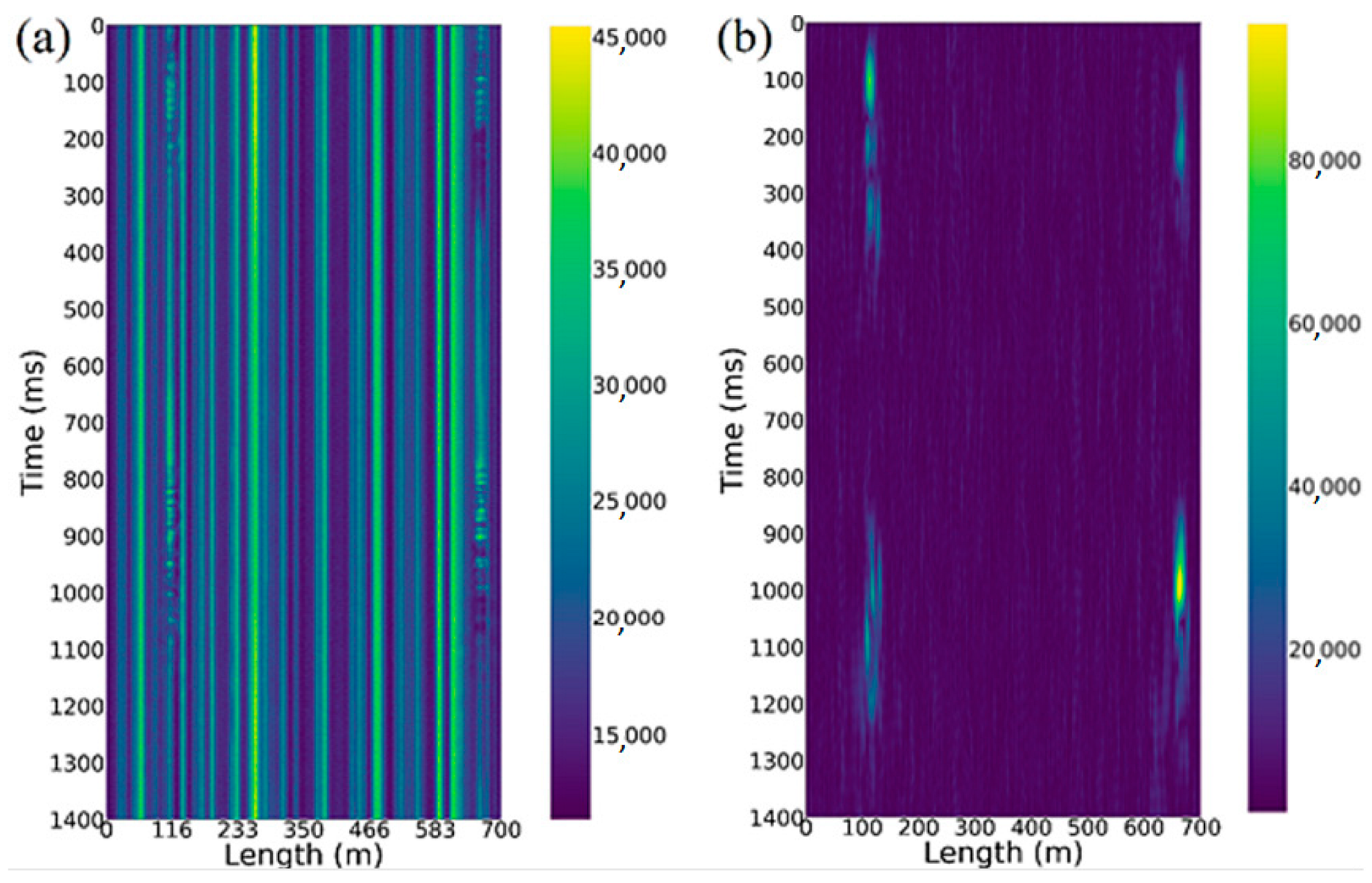

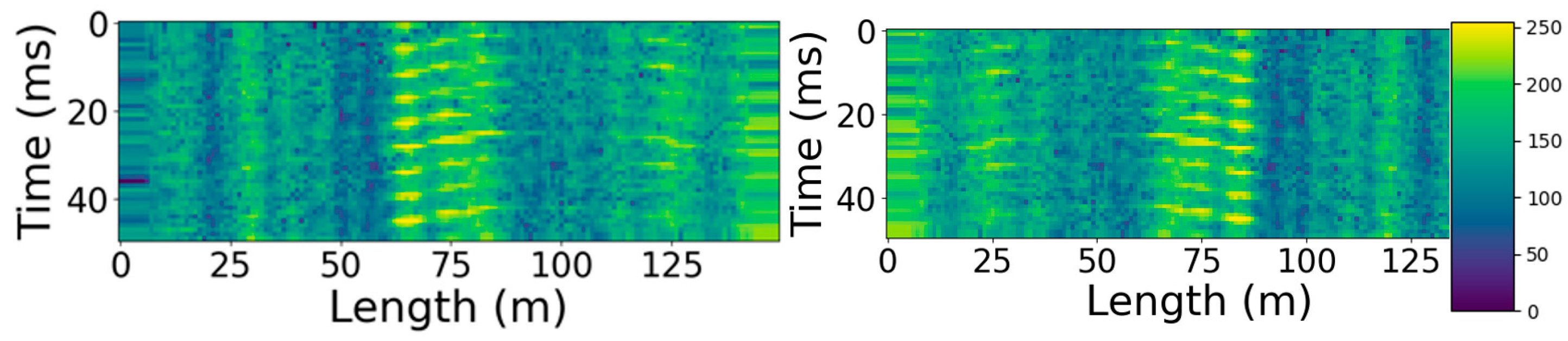

2.3. Pre-Processing

2.4. Model Synthesis

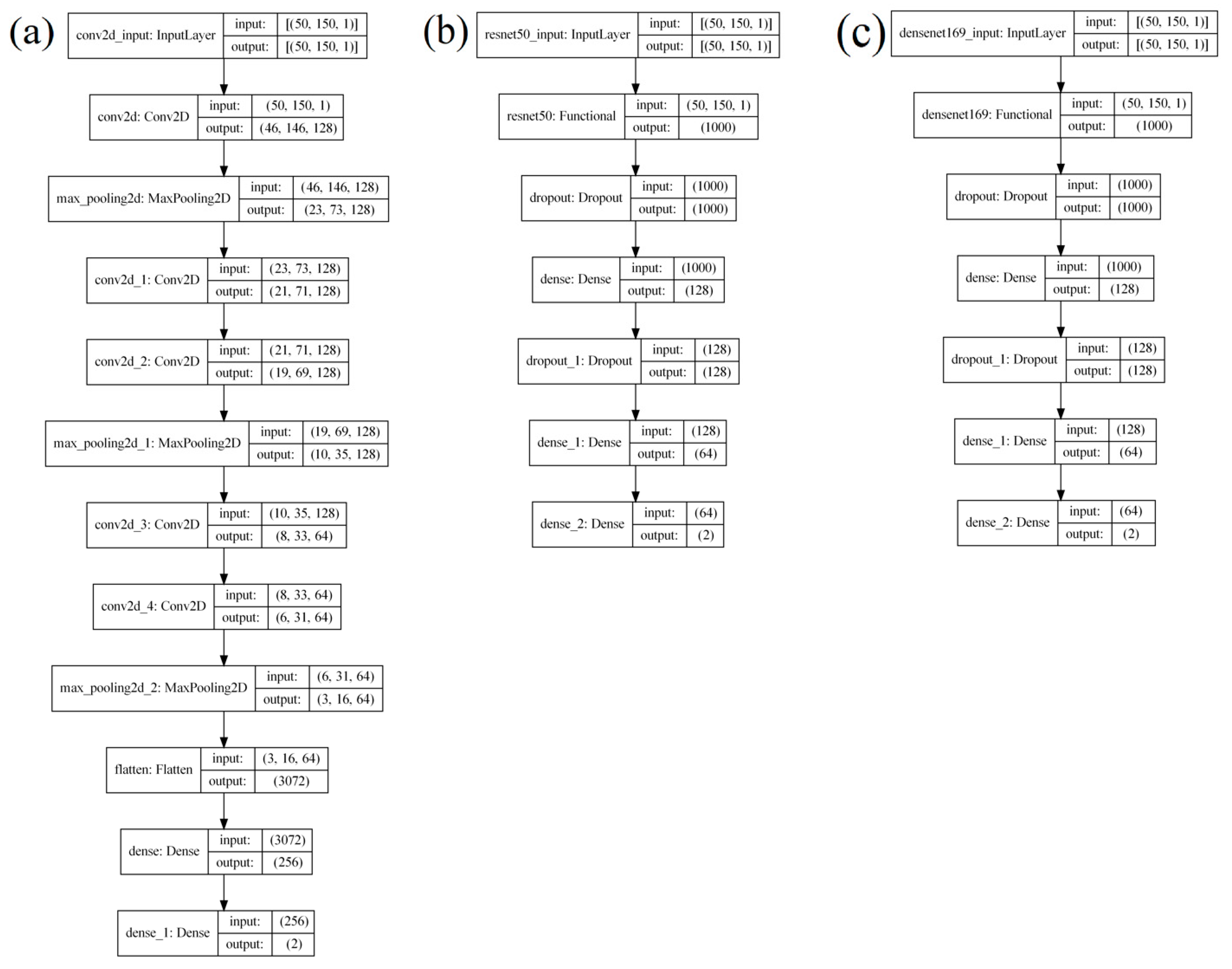

2.4.1. CNN-Based Architecture

2.4.2. Neural Network Training

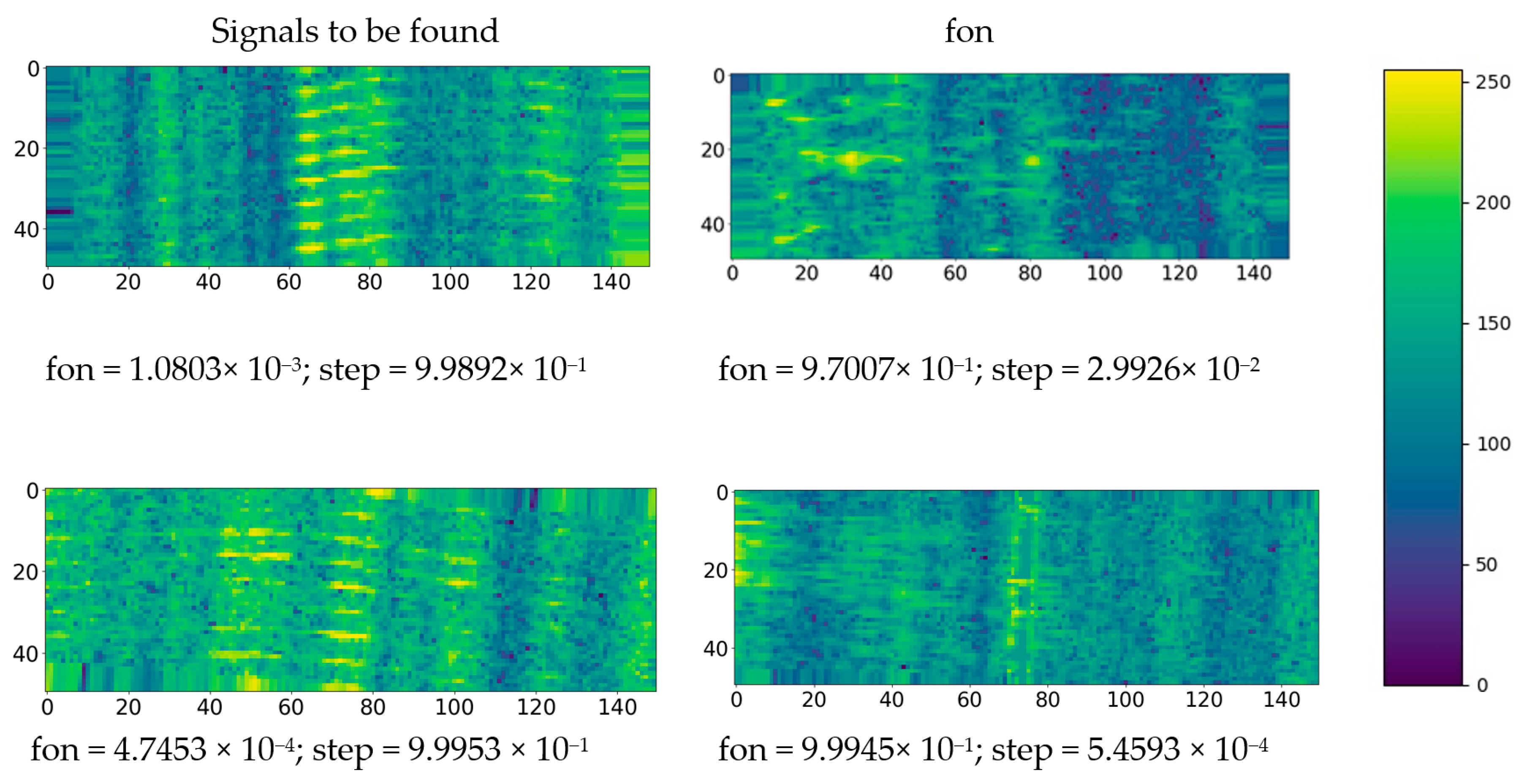

3. Experimental Study and Discussion

- (1)

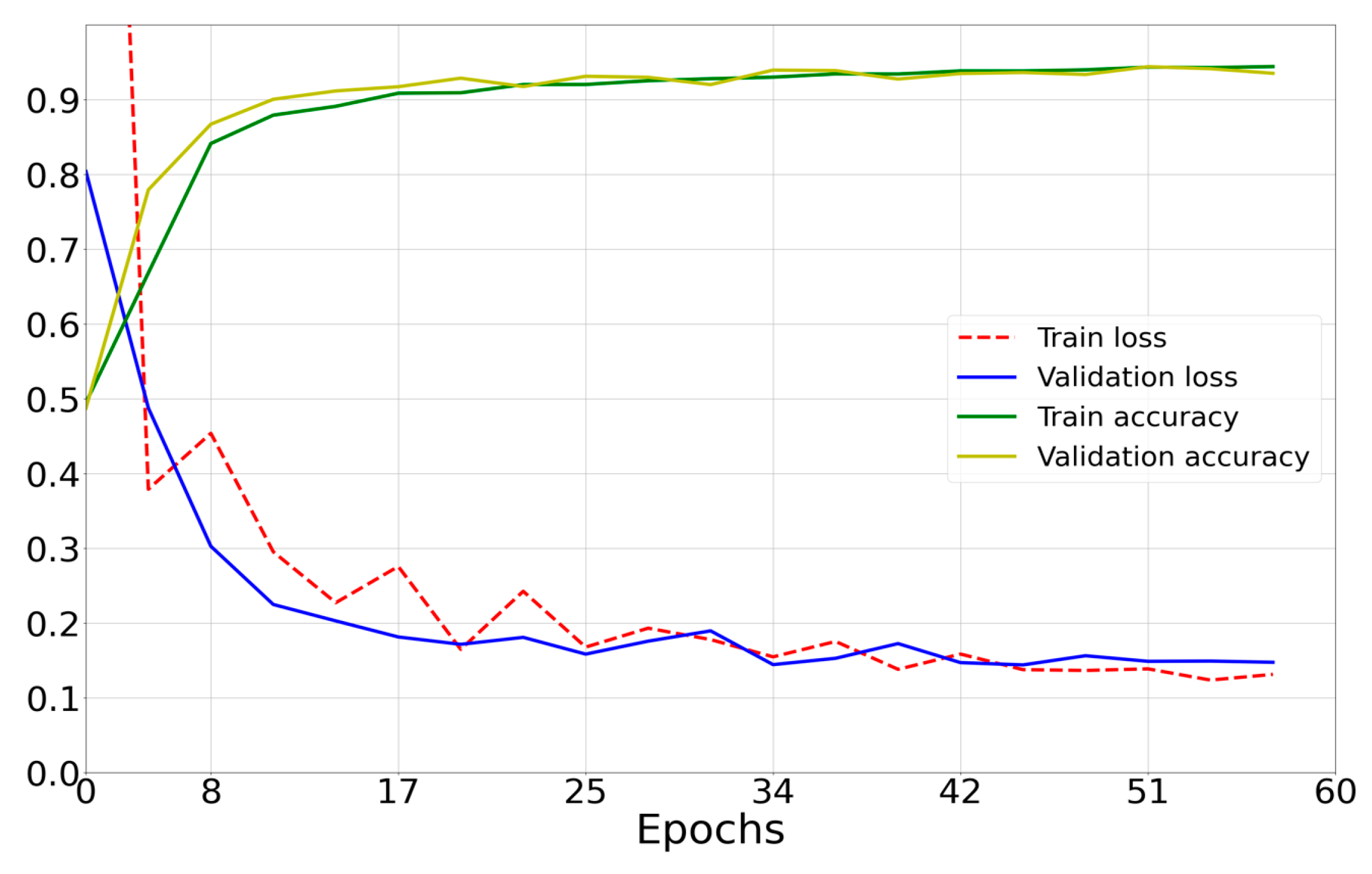

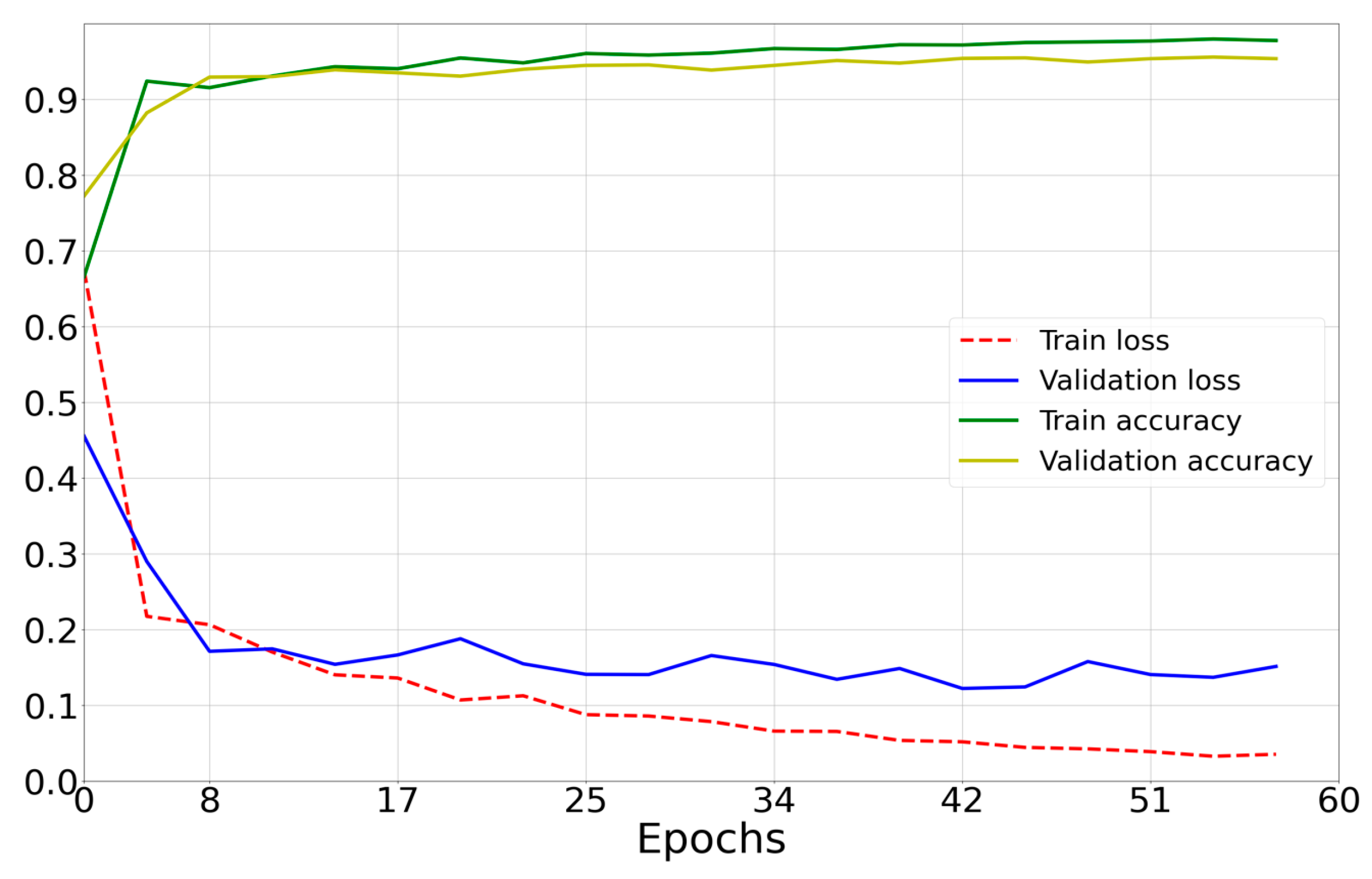

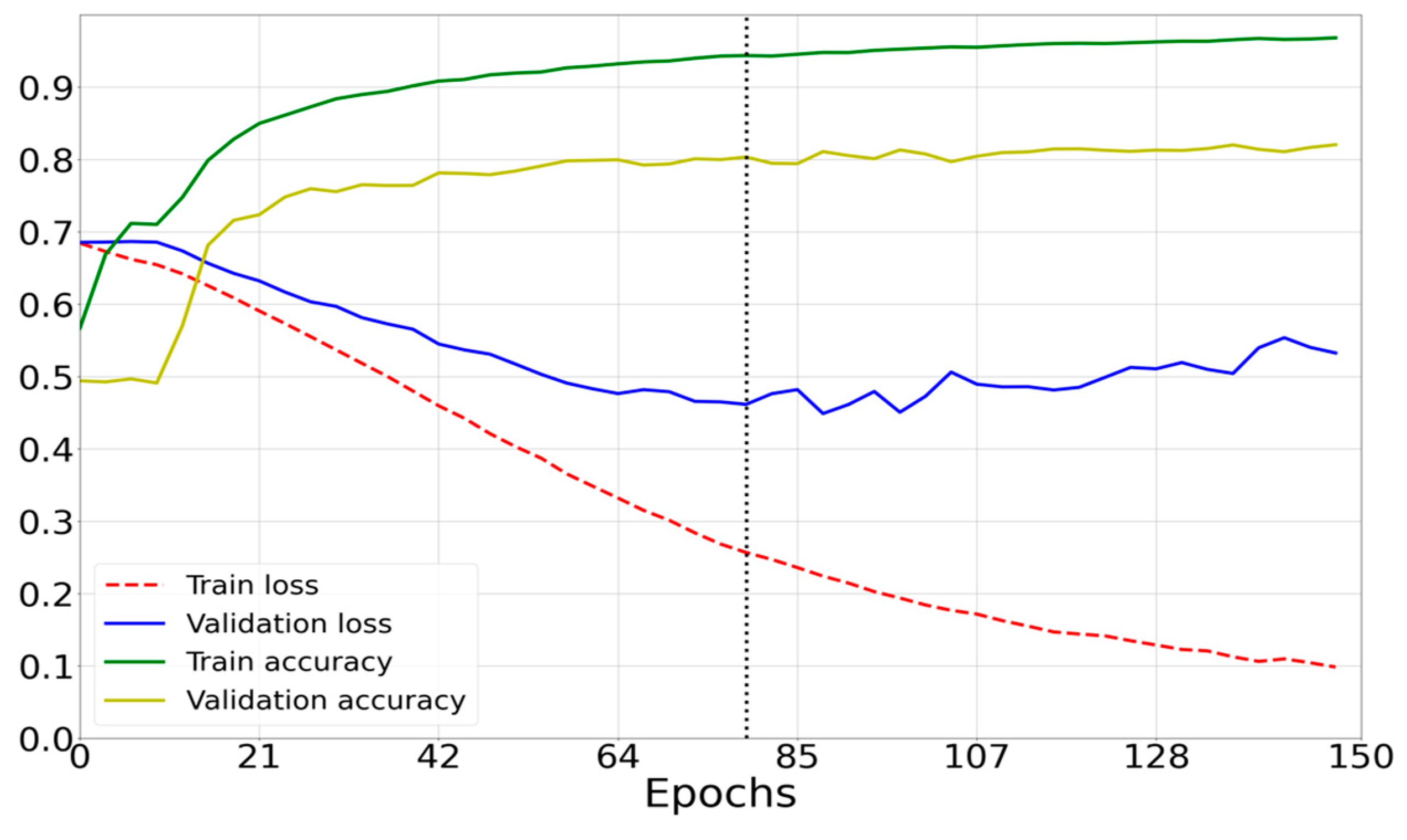

- Results for a neural network model with an architecture based on AlexNet [27] (Figure 10a) are presented in Figure 11.

- Number of epochs: 60;

- Optimizer: Adam;

- Mean prediction computation time: 0.037 s;

- Mean power consumption to perform one prediction: 600 mW;

- Percentage of correct predictions: 95.55%.

- (2)

- -

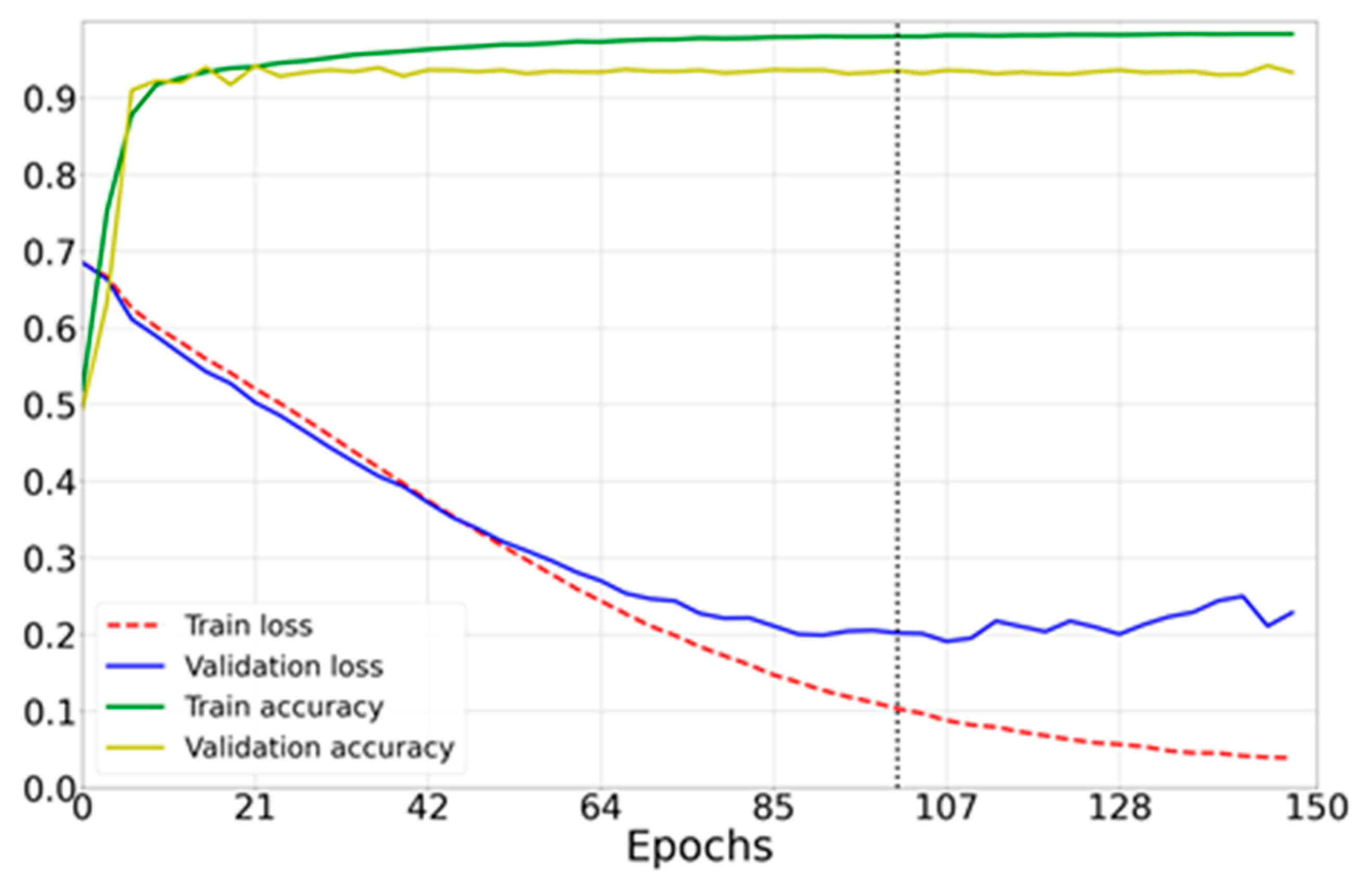

- Number of epochs: 90;

- -

- Optimizer: Adam;

- -

- Mean power consumption to perform one prediction: 3000 mW;

- -

- Mean prediction computation time: 0.086 s;

- -

- Percentage of correct predictions: 84.65%.

- (3)

- Number of epochs: 100;

- Optimizer: Adam;

- Mean prediction computation time: 0.108 s;

- Mean power consumption to perform one prediction: 1700 mW;

- Percentage of correct predictions: 88.85%.
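Multiplying the reported mean power by the reported mean prediction time gives a rough per-prediction energy estimate (mW × s = mJ). This is only an approximation under the assumption that power is roughly constant during a prediction, since the mean of a product need not equal the product of the means; the dictionary keys simply mirror the item labels (1) to (3) above, and the function name is hypothetical.

```python
from typing import Dict, Tuple

# Mean values reported in items (1)-(3): (power in mW, prediction time in s).
reported: Dict[str, Tuple[float, float]] = {
    "(1)": (600.0, 0.037),
    "(2)": (3000.0, 0.086),
    "(3)": (1700.0, 0.108),
}


def energy_per_prediction_mj(power_mw: float, time_s: float) -> float:
    """Rough energy estimate: mean power x mean time (mW * s = mJ)."""
    return power_mw * time_s


estimates = {name: energy_per_prediction_mj(p, t) for name, (p, t) in reported.items()}
for name, e in estimates.items():
    print(f"{name}: ~{e:.1f} mJ per prediction")
```

On these figures, model (1) needs roughly an order of magnitude less energy per prediction than models (2) and (3), because it is both the fastest and the least power-hungry of the three.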

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Pnev, A.B.; Zhirnov, A.A.; Stepanov, K.V.; Nesterov, E.T.; Shelestov, D.A.; Karasik, V.E. Mathematical analysis of marine pipeline leakage monitoring system based on coherent OTDR with improved sensor length and sampling frequency. J. Phys. Conf. Series 2015, 584, 012016.

- Svelto, C.; Pniov, A.; Zhirnov, A.; Nesterov, E.; Stepanov, K.; Karassik, V.; Laporta, P. Online Monitoring of Gas & Oil Pipeline by Distributed Optical Fiber Sensors. In Proceedings of the Offshore Mediterranean Conference and Exhibition, Ravenna, Italy, 27–29 March 2019.

- Kowarik, S.; Hussels, M.T.; Chruscicki, S.; Münzenberger, S.; Lämmerhirt, A.; Pohl, P.; Schubert, M. Fiber optic train monitoring with distributed acoustic sensing: Conventional and neural network data analysis. Sensors 2020, 20, 450.

- Merlo, S.; Malcovati, P.; Norgia, M.; Pesatori, A.; Svelto, C.; Pniov, A.; Karassik, V. Runways Ground Monitoring System by Phase-Sensitive Optical-Fiber OTDR. In Proceedings of the 2017 IEEE International Workshop on Metrology for AeroSpace (MetroAeroSpace), Padua, Italy, 21–23 June 2017; pp. 523–529.

- Fouda, B.M.T.; Yang, B.; Han, D.; An, B. Pattern recognition of optical fiber vibration signal of the submarine cable for its safety. IEEE Sens. J. 2020, 21, 6510–6519.

- Shi, Y.; Wang, Y.; Zhao, L.; Fan, Z. An event recognition method for Φ-OTDR sensing system based on deep learning. Sensors 2019, 19, 3421.

- Shi, Y.; Dai, S.; Jiang, T.; Fan, Z. A Recognition Method for Multi-Radial-Distance Event of Φ-OTDR System Based on CNN. IEEE Access 2021, 9, 143473–143480.

- Wen, H.; Peng, Z.; Jian, J.; Wang, M.; Liu, H.; Mao, Z.H.; Chen, K.P. Artificial Intelligent Pattern Recognition for Optical Fiber Distributed Acoustic Sensing Systems Based on Phase-OTDR. In Proceedings of the Asia Communications and Photonics Conference, Hangzhou, China, 26–29 October 2018; Optica Publishing Group: Washington, DC, USA, 2018; p. Su4B-1.

- Li, S.; Peng, R.; Liu, Z. A surveillance system for urban buried pipeline subject to third-party threats based on fiber optic sensing and convolutional neural network. Struct. Health Monit. 2021, 20, 1704–1715.

- Xu, C.; Guan, J.; Bao, M.; Lu, J.; Ye, W. Pattern recognition based on time-frequency analysis and convolutional neural networks for vibrational events in φ-OTDR. Opt. Eng. 2018, 57, 016103.

- Sun, Q.; Feng, H.; Yan, X.; Zeng, Z. Recognition of a phase-sensitivity OTDR sensing system based on morphologic feature extraction. Sensors 2015, 15, 15179–15197.

- Wu, H.; Yang, M.; Yang, S.; Lu, H.; Wang, C.; Rao, Y. A novel DAS signal recognition method based on spatiotemporal information extraction with 1DCNNs-BiLSTM network. IEEE Access 2020, 8, 119448–119457.

- Wang, M.; Feng, H.; Qi, D.; Du, L.; Sha, Z. φ-OTDR Pattern Recognition Based on CNN-LSTM. Optik 2022, 272, 170380.

- Ruan, S.; Mo, J.; Xu, L.; Zhou, G.; Liu, Y.; Zhang, X. Use AF-CNN for End-to-End Fiber Vibration Signal Recognition. IEEE Access 2021, 9, 6713–6720.

- Shiloh, L.; Eyal, A.; Giryes, R. Deep Learning Approach for Processing Fiber-Optic DAS Seismic Data. In Proceedings of the Optical Fiber Sensors, Lausanne, Switzerland, 24–28 September 2018; Optica Publishing Group: Washington, DC, USA, 2018; p. ThE22.

- Junnan, Z.; Shuqin, L.; Sheng, L. Study of pattern recognition based on SVM algorithm for φ-OTDR distributed optical fiber disturbance sensing system. Infrared Laser Eng. 2017, 46, 422003.

- Huang, Y.; Zhao, H.; Zhao, X.; Lin, B.; Meng, F.; Ding, J.; Liang, S. Pattern recognition using self-reference feature extraction for φ-OTDR. Appl. Opt. 2022, 61, 10507–10518.

- Rahman, S.; Ali, F.; Muhammad, F.; Irfan, M.; Glowacz, A.; Shahed Akond, M.; Armghan, A.; Faraj Mursal, S.N.; Ali, A.; Al-kahtani, F.S. Analyzing Distributed Vibrating Sensing Technologies in Optical Meshes. Micromachines 2022, 13, 85.

- Fedorov, A.K.; Anufriev, M.N.; Zhirnov, A.A.; Stepanov, K.V.; Nesterov, E.T.; Namiot, D.E.; Karasik, V.E.; Pnev, A.B. Note: Gaussian mixture model for event recognition in optical time-domain reflectometry-based sensing systems. Rev. Sci. Instrum. 2016, 87, 036107.

- Qin, Z.; Chen, L.; Bao, X. Continuous wavelet transform for non-stationary vibration detection with phase-OTDR. Opt. Express 2012, 20, 20459–20465.

- Rao, Y.; Wang, Z.; Wu, H.; Ran, Z.; Han, B. Recent advances in phase-sensitive optical time domain reflectometry (Φ-OTDR). Photonic Sens. 2021, 11, 1–30.

- Russell, B.; Han, J. Jean Morlet and the continuous wavelet transform. CREWES Res. Rep. 2016, 28, 115.

- Neto, A.M.; Victorino, A.C.; Fantoni, I.; Zampieri, D.E.; Ferreira, J.V.; Lima, D.A. Image Processing Using Pearson’s Correlation Coefficient: Applications on Autonomous Robotics. In Proceedings of the 2013 13th International Conference on Autonomous Robot Systems, Lisbon, Portugal, 24 April 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1–6.

- Stavrou, V.N.; Tsoulos, I.G.; Mastorakis, N.E. Transformations for FIR and IIR filters’ design. Symmetry 2021, 13, 533.

- Shi, Y.; Dai, S.; Liu, X.; Zhang, Y.; Wu, X.; Jiang, T. Event recognition method based on dual-augmentation for an Φ-OTDR system with a few training samples. Opt. Express 2022, 30, 31232–31243.

- Thakur, R.; Shailesh, S. Importance of Spatial Hierarchy in Convolution Neural Networks. In Deep Learning Experiments; 2020; Available online: https://www.researchgate.net/publication/346017211_IMPORTANCE_OF_SPATIAL_HIERARCHY_IN_CONVOLUTION_NEURAL_NETWORKS (accessed on 12 December 2022).

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Bieder, F.; Sandkühler, R.; Cattin, P.C. Comparison of methods generalizing max- and average-pooling. arXiv 2021, arXiv:2103.01746.

- Stepanov, K.V.; Zhirnov, A.A.; Koshelev, K.I.; Chernutsky, A.O.; Khan, R.I.; Pnev, A.B. Sensitivity Improvement of Phi-OTDR by Fiber Cable Coils. Sensors 2021, 21, 7077.

- Vrigazova, B. The proportion for splitting data into training and test set for the bootstrap in classification problems. Bus. Syst. Res. J. Soc. Adv. Innov. Res. Econ. 2021, 12, 228–242.

- Ranjan GS, K.; Verma, A.K.; Radhika, S. K-Nearest Neighbors and Grid Search cv Based Real Time Fault Monitoring System for Industries. In Proceedings of the 2019 IEEE 5th International Conference for Convergence in Technology (I2CT), Bombay, India, 29–31 March 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–5.

- Tynchenko, V.S.; Petrovsky, E.A.; Tynchenko, V.V. The Parallel Genetic Algorithm for Construction of Technological Objects Neural Network Models. In Proceedings of the 2016 2nd International Conference on Industrial Engineering, Applications and Manufacturing (ICIEAM), Chelyabinsk, Russia, 19–20 May 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–4.

- Tynchenko, V.S.; Tynchenko, V.V.; Bukhtoyarov, V.V.; Tynchenko, S.V.; Petrovskyi, E.A. The multi-objective optimization of complex objects neural network models. Indian J. Sci. Technol. 2016, 9, 99467.

| Optimizer | Adam | Adagrad | RMSprop |
|---|---|---|---|
| Number of filters in first layer | 64 | 32 | 16 |
| Number of filters in second layer | 64 | 32 | 16 |
| Number of filters in third layer | 64 | 32 | 16 |
| Number of neurons in the hidden fully connected layer | 128 | 64 | - |
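If each row of the table above is read as an independent set of candidate values for a grid search (an assumption: the columns could instead describe three fixed configurations, one per optimizer), the resulting search space can be enumerated as sketched below. The dictionary keys and the `grid` helper are hypothetical names; only the values come from the table.

```python
from itertools import product
from typing import Dict, Iterator, List, Union

Value = Union[str, int]

# Candidate values copied from the table; treating the rows as independent is an assumption.
search_space: Dict[str, List[Value]] = {
    "optimizer": ["Adam", "Adagrad", "RMSprop"],
    "filters_layer1": [64, 32, 16],
    "filters_layer2": [64, 32, 16],
    "filters_layer3": [64, 32, 16],
    "dense_units": [128, 64],  # only two values are listed for this row
}


def grid(space: Dict[str, List[Value]]) -> Iterator[Dict[str, Value]]:
    """Yield every combination of hyperparameter values as a dict (Cartesian product)."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


configs = list(grid(search_space))
print(len(configs))  # 162 = 3 * 3 * 3 * 3 * 2
print(configs[0])
```

Each configuration would then be trained and scored on held-out data; the exhaustive product grows multiplicatively with every added hyperparameter, which is why genetic or other heuristic searches are sometimes preferred for larger spaces.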

| Designed Model (Figure 6) | Actually Negative | Actually Positive |
|---|---|---|
| Predicted negative | 98.46% | 1.54% |
| Predicted positive | 4.64% | 95.36% |

| AlexNet (Figure 9a) | Actually Negative | Actually Positive |
|---|---|---|
| Predicted negative | 91.92% | 8.08% |
| Predicted positive | 1.78% | 98.22% |

| ResNet50 (Figure 9b) | Actually Negative | Actually Positive |
|---|---|---|
| Predicted negative | 82.7% | 17.3% |
| Predicted positive | 13.4% | 86.6% |

| DenseNet169 (Figure 9c) | Actually Negative | Actually Positive |
|---|---|---|
| Predicted negative | 95% | 5% |
| Predicted positive | 17.3% | 82.7% |
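Assuming both classes carry equal weight, the mean of the two diagonal entries of each confusion matrix above can be compared with the "percentage of correct predictions" reported in Section 3. For ResNet50 and DenseNet169 this mean equals the reported 84.65% and 88.85%; for AlexNet it gives 95.07% rather than the reported 95.55%, so that figure may have been computed with a different weighting. The sketch below only performs this arithmetic; the function name is hypothetical.

```python
from typing import Dict, Tuple

# Diagonal entries (percent) copied from the confusion matrices:
# (predicted negative & actually negative, predicted positive & actually positive).
diagonals: Dict[str, Tuple[float, float]] = {
    "Designed model": (98.46, 95.36),
    "AlexNet": (91.92, 98.22),
    "ResNet50": (82.7, 86.6),
    "DenseNet169": (95.0, 82.7),
}


def balanced_accuracy(diag: Tuple[float, float]) -> float:
    """Mean of the per-class correct rates; assumes equal class weighting."""
    return sum(diag) / len(diag)


for name, diag in diagonals.items():
    print(f"{name}: {balanced_accuracy(diag):.2f}%")
```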

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Barantsov, I.A.; Pnev, A.B.; Koshelev, K.I.; Tynchenko, V.S.; Nelyub, V.A.; Borodulin, A.S. Classification of Acoustic Influences Registered with Phase-Sensitive OTDR Using Pattern Recognition Methods. Sensors 2023, 23, 582. https://doi.org/10.3390/s23020582
