PhysioKit: An Open-Source, Low-Cost Physiological Computing Toolkit for Single- and Multi-User Studies

Abstract

1. Introduction

- A novel open-source physiological computing toolkit (GitHub repository: https://github.com/PhysiologicAILab/PhysioKit, accessed on 28 September 2023) that offers a one-stop physiological computing pipeline, spanning data collection, processing, and a wide range of analysis functions, including a new machine learning module for physiological signal quality assessment;

- A report on the results of a validation study, together with user reports on PhysioKit’s usability and example use cases demonstrating its applicability across diverse applications.

2. Related Work

2.1. Physiological Computing and HCI Applications

2.2. Physiological Computing Sensors, Devices and Toolkits

2.2.1. Sensing and Data Acquisition Devices

Support for Passive and Interventional HCI Studies

Real-Time Signal Quality Assessment

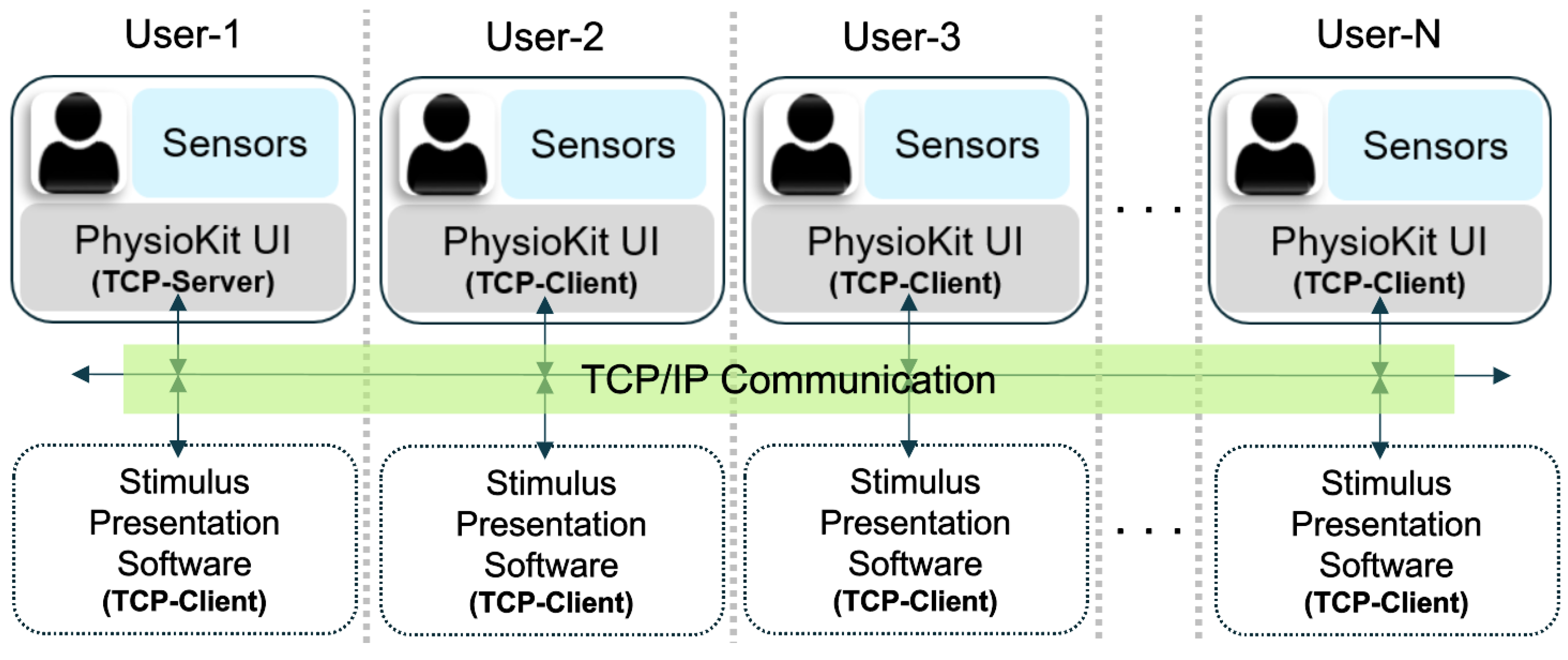

Support for Remote Multi-User Studies

Validation Studies

2.2.2. Data Analysis Toolkits

3. The Proposed Physiological Computing Toolkit: PhysioKit

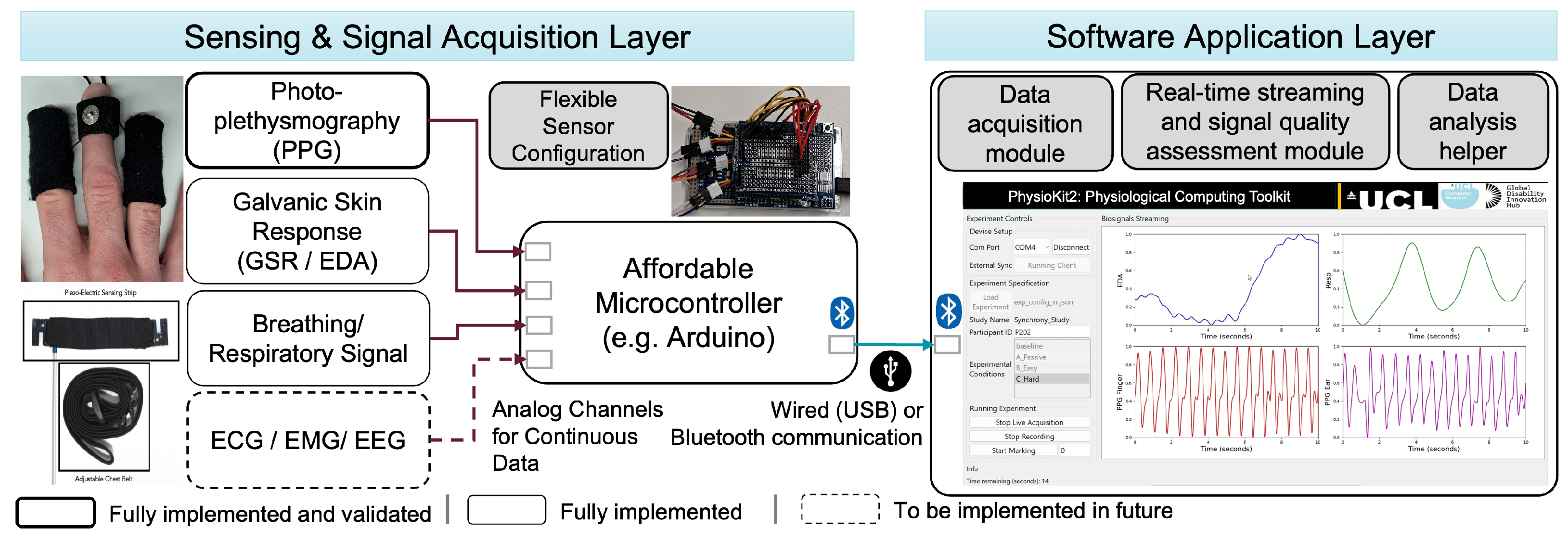

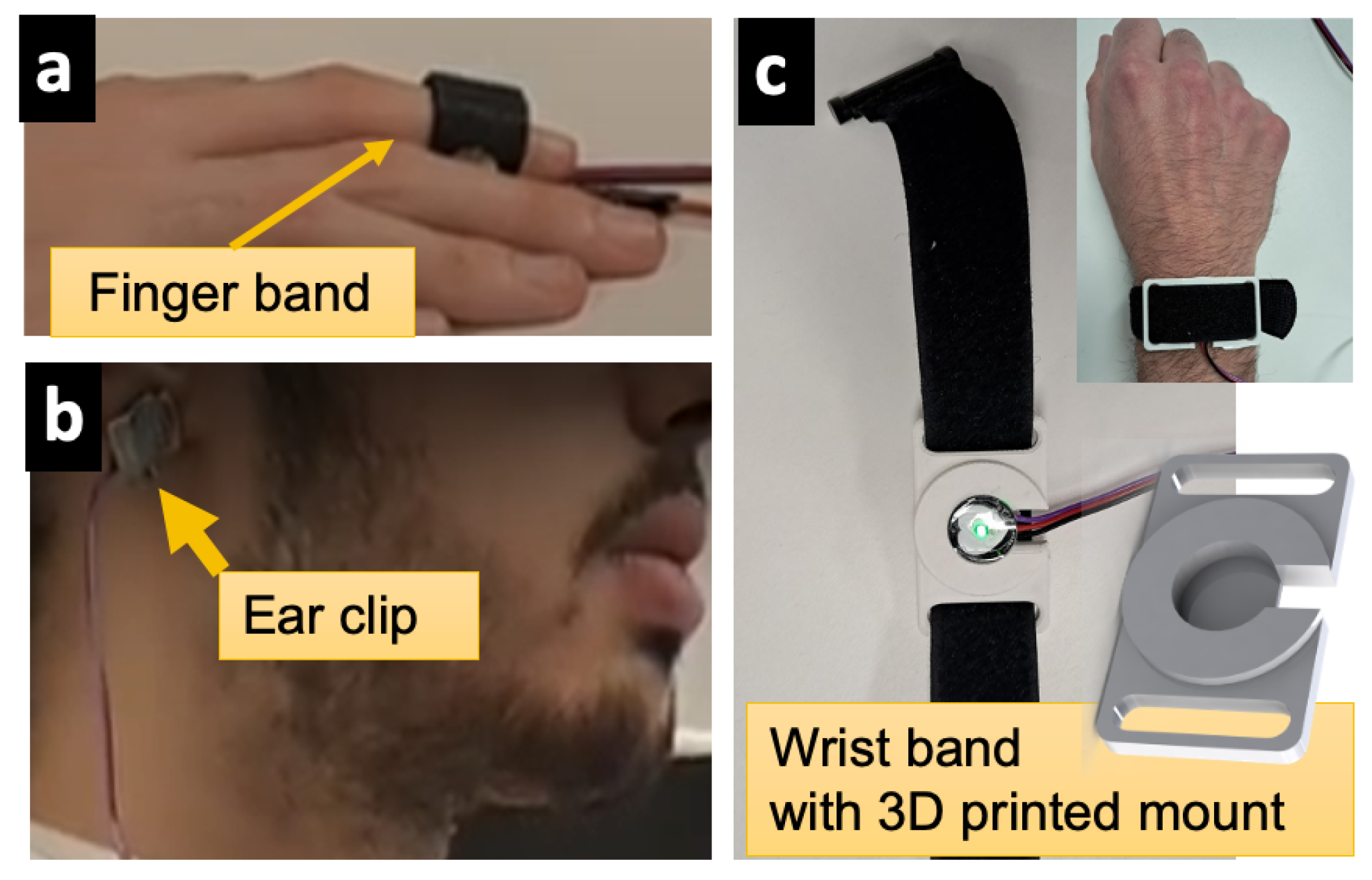

3.1. Sensing and Signal Acquisition Layer

3.2. Software Application Layer

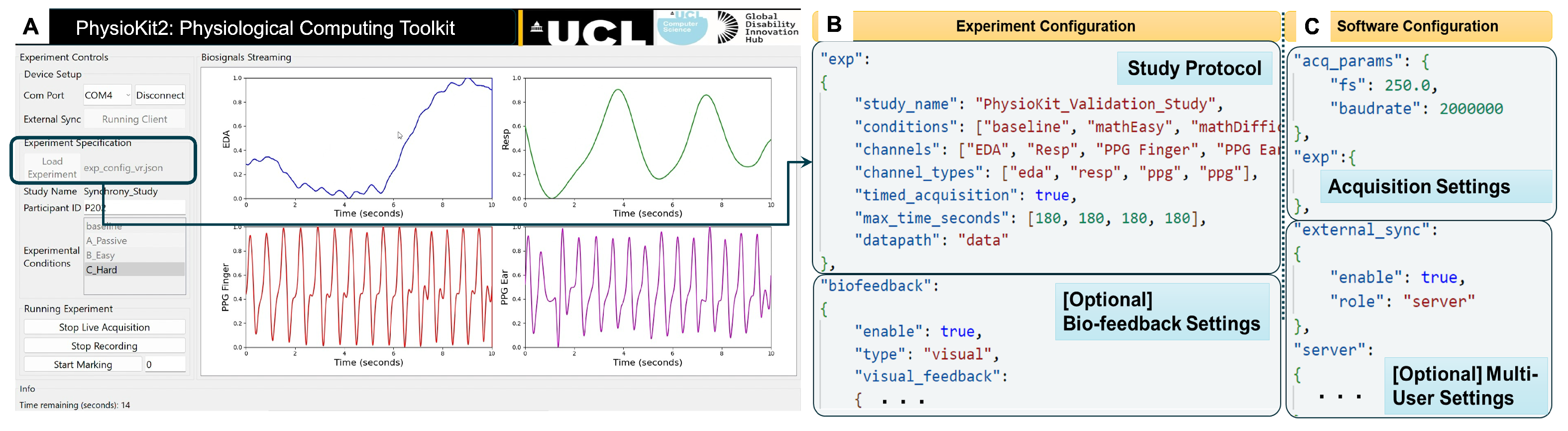

3.2.1. User Interface

3.2.2. Signal Quality Assessment Module for PPG

3.2.3. Configuring the UI for Experimental Studies

3.2.4. Support for Interventional Studies and Biofeedback

3.2.5. Data Analysis Helper

4. Evaluation

4.1. Study 1: Performance Evaluation

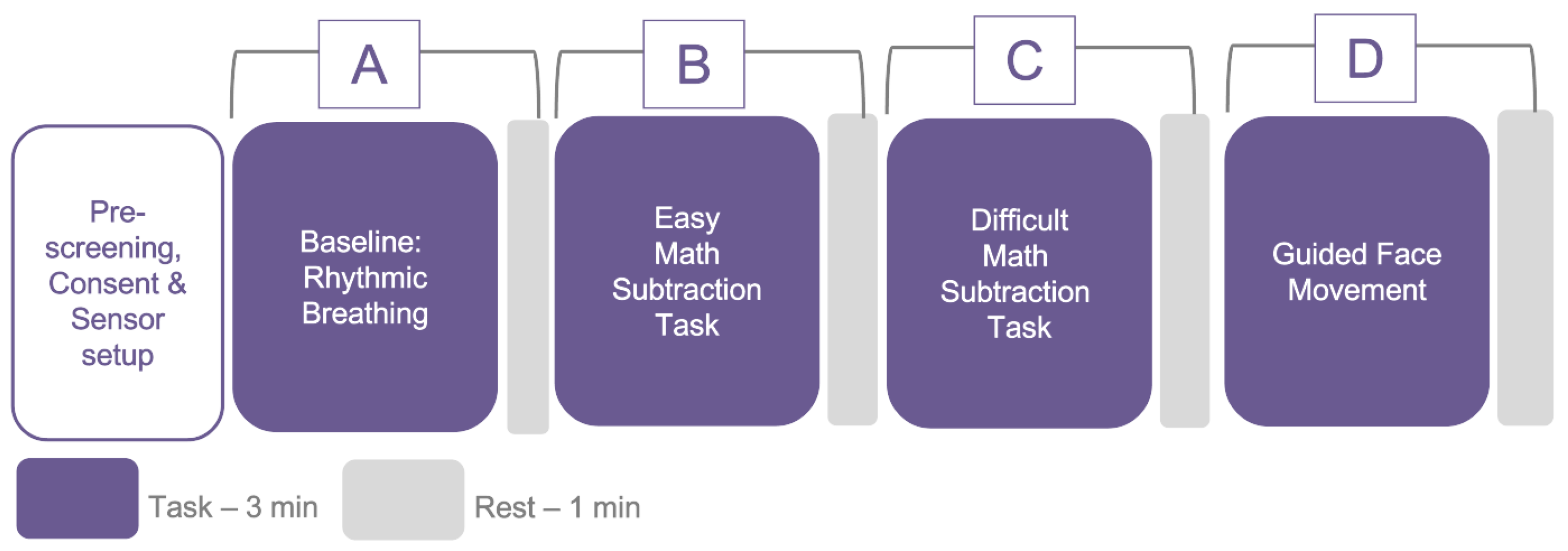

4.1.1. Data Collection Protocol

4.1.2. Participants and Study Preparation

4.1.3. Data Analysis

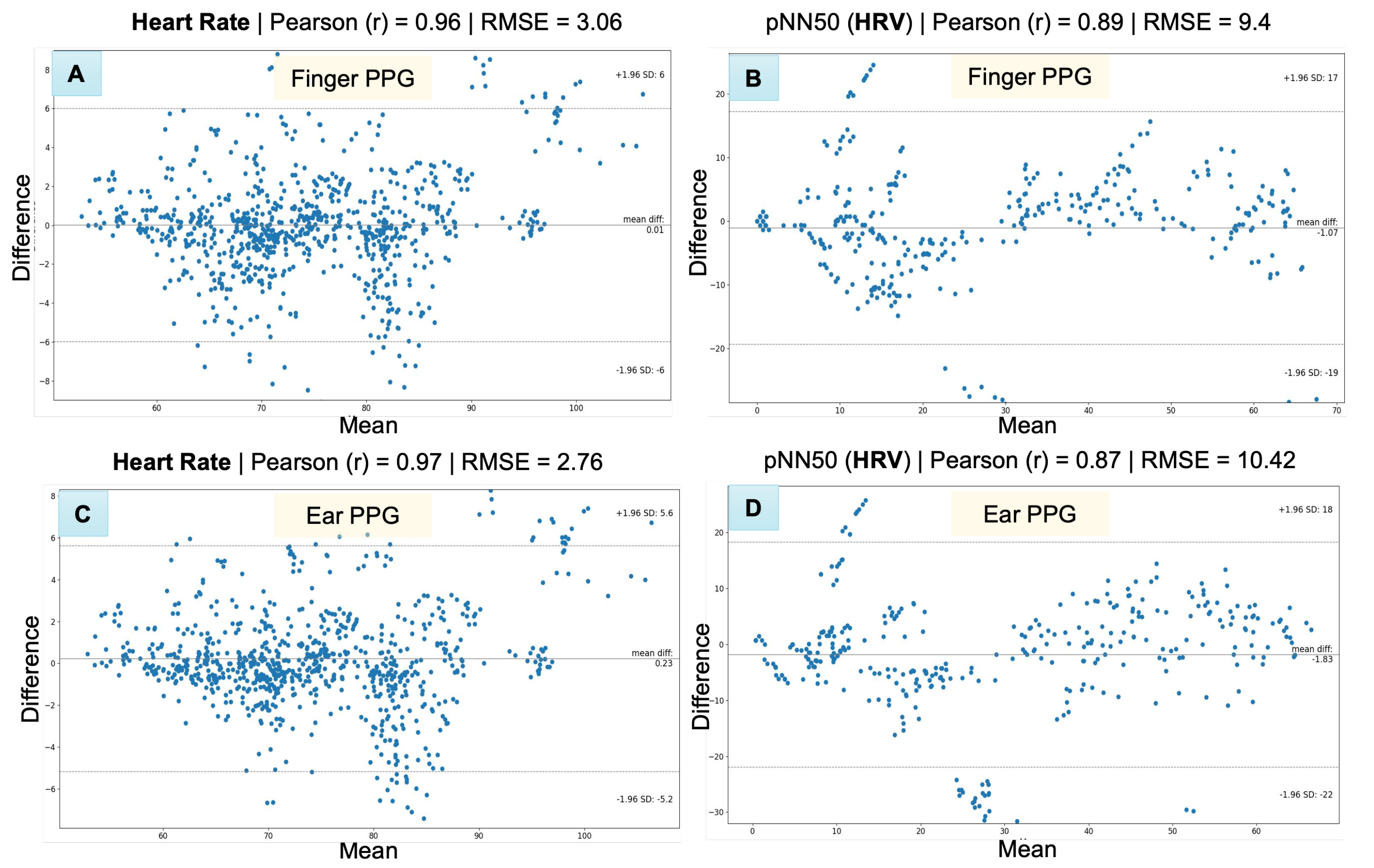

4.1.4. Results

4.2. Study 2: Usability Analysis

4.2.1. Use Cases

PhysioKit for Interventional Applications

PhysioKit for Passive Applications

4.2.2. Data Collection

4.2.3. Results

Usefulness and Ease of Use

Learning Process

Satisfaction

Open-Ended Questions

5. Discussion

5.1. Unique Propositions of PhysioKit

5.2. Evaluating the Validation of PhysioKit

5.3. Assessing the Usability of PhysioKit

5.4. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 1D-CNN | 1-dimensional convolutional neural network |
| ADC | Analog-to-digital converter |
| API | Application programming interface |
| BPM | Beats per minute |
| BVP | Blood volume pulse |
| CAD | Computer-aided design |
| ECG | Electrocardiogram |
| EDA | Electrodermal activity |
| EMG | Electromyography |
| GSR | Galvanic skin response |
| HCI | Human–computer interface |
| HR | Heart rate |
| HRV | Heart rate variability |
| LSTM | Long short-term memory |
| PPG | Photoplethysmography |
| PRV | Pulse rate variability |
| RGB | Color images with red, green and blue frames |
| RSP | Respiration or breathing |
| SOTA | State-of-the-art |
| SQI | Signal quality index |
| SVM | Support vector machine |
| TCP/IP | Transmission control protocol/Internet protocol |
| UI | User interface |

Appendix A. Validation Studies of Physiological Computing Systems

| Device | Device Category | Cost (USD) | Access to Raw Physiological Signals | Support for Interventional Studies | Support for Multi-User Studies | Validation Results for HR (bpm) | Validation Results for HRV |
|---|---|---|---|---|---|---|---|
| AliveCor KardiaMobile | C | 79.00 | No | No | No | 0.96 [53] | N.A. |
| Apple Watch 4 | C | 399.00 * | No | No | No | MAE = 4.4 ± 2.7 [67]; r = 0.99 [66] | N.A. |
| Apple Watch 6 | C | 399.99 * | No | No | No | 0.96 [14,53] | RMSSD: 0.67 [14] |
| Fitbit Charge 2 | C | 149.95 * | No | No | No | MAE = 7.3 ± 4.2 [67] | N.A. |
| Fitbit Sense | C | 159.99 * | No | No | No | 0.88 [53] | N.A. |
| Garmin Vivosmart 3 | C | 139.99 * | No | No | No | MAE = 7.0 ± 5.0 [67] | N.A. |
| Garmin Fenix 5 | C | 599.00 * | No | No | No | r = 0.89 [66] | N.A. |
| Garmin Forerunner 245 Music | C | 349.00 * | No | No | No | 0.41 | RMSSD: 0.24 [14] |
| Garmin Vivosmart HR+ | C | 219.99 * | No | No | No | MAE = 2.98, 0.90 [102] | N.A. |
| Oura Gen 2 | C | 299.00 | No | No | No | 0.85 | RMSSD: 0.63 [14] |
| Polar H10 † | C | 89.95 | No | No | No | N.A. | HRV (RR, ms) Spearman r = 1.00 [100] |
| Polar Vantage V | C | 499.95 | No | No | No | 0.93; r = 0.99 [66] | RMSSD: 0.65 [14] |
| Samsung Galaxy Watch3 | C | 399.99 * | No | No | No | 0.96 [53] | N.A. |
| Withings Scanwatch | C | 299.95 | No | No | No | 0.95 [53] | N.A. |
| Empatica E4 | R | 1690.00 * | Yes (filtered signals obtained from company servers) | No | No | MAE = 11.3 ± 8.0 [67] | N.A. |
| BITalino (PsychoBIT) | R | 459.78 | Yes | Using third-party software | Yes, only co-located | Morphology-based validation with ECG signals: 0.83 [104] | N.A. |
| OpenBCI (EmotiBit) | R | 499.97 | Yes | Using third-party software | No | N.A. | N.A. |

References

1. Wang, T.; Zhang, H. Using Wearable Devices for Emotion Recognition in Mobile Human–Computer Interaction: A Review. In HCII 2022: HCI International 2022—Late Breaking Papers. Multimodality in Advanced Interaction Environments; Kurosu, M., Yamamoto, S., Mori, H., Schmorrow, D.D., Fidopiastis, C.M., Streitz, N.A., Konomi, S., Eds.; Lecture Notes in Computer Science; Springer Nature Switzerland: Cham, Switzerland, 2022; pp. 205–227.
2. Malasinghe, L.P.; Ramzan, N.; Dahal, K. Remote Patient Monitoring: A Comprehensive Study. J. Ambient Intell. Humaniz. Comput. 2019, 10, 57–76.
3. Chanel, G.; Mühl, C. Connecting Brains and Bodies: Applying Physiological Computing to Support Social Interaction. Interact. Comput. 2015, 27, 534–550.
4. Moge, C.; Wang, K.; Cho, Y. Shared User Interfaces of Physiological Data: Systematic Review of Social Biofeedback Systems and Contexts in HCI. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, CHI ’22, New York, NY, USA, 29 April 2022; pp. 1–16.
5. Cho, Y. Rethinking Eye-blink: Assessing Task Difficulty through Physiological Representation of Spontaneous Blinking. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, CHI ’21, New York, NY, USA, 7 May 2021; pp. 1–12.
6. Ayobi, A.; Sonne, T.; Marshall, P.; Cox, A.L. Flexible and Mindful Self-Tracking: Design Implications from Paper Bullet Journals. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, CHI ’18, New York, NY, USA, 19 April 2018; pp. 1–14.
7. Koydemir, H.C.; Ozcan, A. Wearable and Implantable Sensors for Biomedical Applications. Annu. Rev. Anal. Chem. 2018, 11, 127–146.
8. Peake, J.M.; Kerr, G.; Sullivan, J.P. A Critical Review of Consumer Wearables, Mobile Applications, and Equipment for Providing Biofeedback, Monitoring Stress, and Sleep in Physically Active Populations. Front. Physiol. 2018, 9, 743.
9. Cho, Y.; Julier, S.J.; Marquardt, N.; Bianchi-Berthouze, N. Robust Tracking of Respiratory Rate in High-Dynamic Range Scenes Using Mobile Thermal Imaging. Biomed. Opt. Express 2017, 8, 4480–4503.
10. Cho, Y.; Julier, S.J.; Bianchi-Berthouze, N. Instant Stress: Detection of Perceived Mental Stress Through Smartphone Photoplethysmography and Thermal Imaging. JMIR Ment. Health 2019, 6, e10140.
11. Ometov, A.; Shubina, V.; Klus, L.; Skibińska, J.; Saafi, S.; Pascacio, P.; Flueratoru, L.; Gaibor, D.Q.; Chukhno, N.; Chukhno, O.; et al. A Survey on Wearable Technology: History, State-of-the-Art and Current Challenges. Comput. Netw. 2021, 193, 108074.
12. Baron, K.G.; Duffecy, J.; Berendsen, M.A.; Cheung Mason, I.; Lattie, E.G.; Manalo, N.C. Feeling Validated yet? A Scoping Review of the Use of Consumer-Targeted Wearable and Mobile Technology to Measure and Improve Sleep. Sleep Med. Rev. 2018, 40, 151–159.
13. Arias, O.; Wurm, J.; Hoang, K.; Jin, Y. Privacy and Security in Internet of Things and Wearable Devices. IEEE Trans. Multi-Scale Comput. Syst. 2015, 1, 99–109.
14. Miller, D.J.; Sargent, C.; Roach, G.D. A Validation of Six Wearable Devices for Estimating Sleep, Heart Rate and Heart Rate Variability in Healthy Adults. Sensors 2022, 22, 6317.
15. Sato, M.; Puri, R.S.; Olwal, A.; Chandra, D.; Poupyrev, I.; Raskar, R. Zensei: Augmenting Objects with Effortless User Recognition Capabilities through Bioimpedance Sensing. In Proceedings of the Adjunct Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology, Daegu, Republic of Korea, 8–11 November 2015; pp. 41–42.
16. Shibata, T.; Peck, E.M.; Afergan, D.; Hincks, S.W.; Yuksel, B.F.; Jacob, R.J. Building Implicit Interfaces for Wearable Computers with Physiological Inputs: Zero Shutter Camera and Phylter. In Proceedings of the Adjunct Publication of the 27th Annual ACM Symposium on User Interface Software and Technology—UIST’14 Adjunct, Honolulu, HI, USA, 5 October 2014; pp. 89–90.
17. Yamamura, H.; Baldauf, H.; Kunze, K. Pleasant Locomotion—Towards Reducing Cybersickness Using fNIRS during Walking Events in VR. In Proceedings of the Adjunct Publication of the 33rd Annual ACM Symposium on User Interface Software and Technology, Virtual Event, USA, 20 October 2020; pp. 56–58.
18. Pai, Y.S. Physiological Signal-Driven Virtual Reality in Social Spaces. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, 16 October 2016; pp. 25–28.
19. Baldini, A.; Garofalo, R.; Scilingo, E.P.; Greco, A. A Real-Time, Open-Source, IoT-like, Wearable Monitoring Platform. Electronics 2023, 12, 1498.
20. Pradhan, N.; Rajan, S.; Adler, A. Evaluation of the Signal Quality of Wrist-Based Photoplethysmography. Physiol. Meas. 2019, 40, 065008.
21. Park, J.; Seok, H.S.; Kim, S.S.; Shin, H. Photoplethysmogram Analysis and Applications: An Integrative Review. Front. Physiol. 2022, 12, 808451.
22. Moscato, S.; Lo Giudice, S.; Massaro, G.; Chiari, L. Wrist Photoplethysmography Signal Quality Assessment for Reliable Heart Rate Estimate and Morphological Analysis. Sensors 2022, 22, 5831.
23. Lee, J.; Natarrajan, R.; Rodriguez, S.S.; Panda, P.; Ofek, E. RemoteLab: A VR Remote Study Toolkit. In Proceedings of the 35th Annual ACM Symposium on User Interface Software and Technology, UIST ’22, New York, NY, USA, 28 October 2022; pp. 1–9.
24. Lee, J.H.; Gamper, H.; Tashev, I.; Dong, S.; Ma, S.; Remaley, J.; Holbery, J.D.; Yoon, S.H. Stress Monitoring Using Multimodal Bio-sensing Headset. In Proceedings of the Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25 April 2020; pp. 1–7.
25. Maritsch, M.; Föll, S.; Lehmann, V.; Bérubé, C.; Kraus, M.; Feuerriegel, S.; Kowatsch, T.; Züger, T.; Stettler, C.; Fleisch, E.; et al. Towards Wearable-based Hypoglycemia Detection and Warning in Diabetes. In Proceedings of the Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25 April 2020; pp. 1–8.
26. Liang, R.H.; Yu, B.; Xue, M.; Hu, J.; Feijs, L.M.G. BioFidget: Biofeedback for Respiration Training Using an Augmented Fidget Spinner. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21 April 2018; pp. 1–12.
27. Clegg, T.; Norooz, L.; Kang, S.; Byrne, V.; Katzen, M.; Velez, R.; Plane, A.; Oguamanam, V.; Outing, T.; Yip, J.; et al. Live Physiological Sensing and Visualization Ecosystems: An Activity Theory Analysis. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 2 May 2017; pp. 2029–2041.
28. Wang, H.; Prendinger, H.; Igarashi, T. Communicating Emotions in Online Chat Using Physiological Sensors and Animated Text. In Proceedings of the CHI ’04 Extended Abstracts on Human Factors in Computing Systems, Vienna, Austria, 24 April 2004; pp. 1171–1174.
29. Dey, A.; Piumsomboon, T.; Lee, Y.; Billinghurst, M. Effects of Sharing Physiological States of Players in a Collaborative Virtual Reality Gameplay. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, CHI ’17, New York, NY, USA, 2 May 2017; pp. 4045–4056.
30. Olugbade, T.; Cho, Y.; Morgan, Z.; El Ghani, M.A.; Bianchi-Berthouze, N. Toward Intelligent Car Comfort Sensing: New Dataset and Analysis of Annotated Physiological Metrics. In Proceedings of the 2021 9th International Conference on Affective Computing and Intelligent Interaction (ACII), Nara, Japan, 28 September 2021; pp. 1–8.
31. Dillen, N.; Ilievski, M.; Law, E.; Nacke, L.E.; Czarnecki, K.; Schneider, O. Keep Calm and Ride Along: Passenger Comfort and Anxiety as Physiological Responses to Autonomous Driving Styles. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 21 April 2020; pp. 1–13.
32. Frey, J.; Ostrin, G.; Grabli, M.; Cauchard, J.R. Physiologically Driven Storytelling: Concept and Software Tool. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 21 April 2020; pp. 1–13.
33. Karran, A.J.; Fairclough, S.H.; Gilleade, K. Towards an Adaptive Cultural Heritage Experience Using Physiological Computing. In Proceedings of the CHI ’13 Extended Abstracts on Human Factors in Computing Systems, Paris, France, 27 April 2013; pp. 1683–1688.
34. Robinson, R.B.; Reid, E.; Depping, A.E.; Mandryk, R.; Fey, J.C.; Isbister, K. ‘In the Same Boat’: A Game of Mirroring Emotions for Enhancing Social Play. In Proceedings of the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, Scotland, UK, 2 May 2019; pp. 1–4.
35. Schneegass, S.; Pfleging, B.; Broy, N.; Heinrich, F.; Schmidt, A. A Data Set of Real World Driving to Assess Driver Workload. In Proceedings of the 5th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI ’13, New York, NY, USA, 28 October 2013; pp. 150–157.
36. Wang, K.; Julier, S.J.; Cho, Y. Attention-Based Applications in Extended Reality to Support Autistic Users: A Systematic Review. IEEE Access 2022, 10, 15574–15593.
37. Fairclough, S.H.; Karran, A.J.; Gilleade, K. Classification Accuracy from the Perspective of the User: Real-Time Interaction with Physiological Computing. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, 18 April 2015; pp. 3029–3038.
38. Oh, S.Y.; Kim, S. Does Social Endorsement Influence Physiological Arousal? In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, San Jose, CA, USA, 7 May 2016; pp. 2900–2905.
39. Sato, Y.; Ueoka, R. Investigating Haptic Perception of and Physiological Responses to Air Vortex Rings on a User’s Cheek. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 2 May 2017; pp. 3083–3094.
40. Frey, J.; Daniel, M.; Castet, J.; Hachet, M.; Lotte, F. Framework for Electroencephalography-based Evaluation of User Experience. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, CHI ’16, New York, NY, USA, 7 May 2016; pp. 2283–2294.
41. Nukarinen, T.; Istance, H.O.; Rantala, J.; Mäkelä, J.; Korpela, K.; Ronkainen, K.; Surakka, V.; Raisamo, R. Physiological and Psychological Restoration in Matched Real and Virtual Natural Environments. In Proceedings of the Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25 April 2020; pp. 1–8.
42. Grassmann, M.; Vlemincx, E.; von Leupoldt, A.; Mittelstädt, J.M.; Van den Bergh, O. Respiratory Changes in Response to Cognitive Load: A Systematic Review. Neural Plast. 2016, 2016, e8146809.
43. Vlemincx, E.; Taelman, J.; De Peuter, S.; Van Diest, I.; Van Den Bergh, O. Sigh Rate and Respiratory Variability during Mental Load and Sustained Attention. Psychophysiology 2011, 48, 117–120.
44. Xiao, X.; Pham, P.; Wang, J. AttentiveLearner: Adaptive Mobile MOOC Learning via Implicit Cognitive States Inference. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, Seattle, WA, USA, 9 November 2015; pp. 373–374.
45. DiSalvo, B.; Bandaru, D.; Wang, Q.; Li, H.; Plötz, T. Reading the Room: Automated, Momentary Assessment of Student Engagement in the Classroom: Are We There Yet? Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2022, 6, 1–26.
46. Huynh, S.; Kim, S.; Ko, J.; Balan, R.K.; Lee, Y. EngageMon: Multi-Modal Engagement Sensing for Mobile Games. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 1–27.
47. Qin, C.Y.; Choi, J.H.; Constantinides, M.; Aiello, L.M.; Quercia, D. Having a Heart Time? A Wearable-based Biofeedback System. In Proceedings of the 22nd International Conference on Human–Computer Interaction with Mobile Devices and Services, MobileHCI ’20, New York, NY, USA, 5 October 2020; pp. 1–4.
48. Howell, N.; Niemeyer, G.; Ryokai, K. Life-Affirming Biosensing in Public: Sounding Heartbeats on a Red Bench. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, CHI ’19, Glasgow, Scotland, UK, 2 May 2019; pp. 1–16.
49. Curran, M.T.; Gordon, J.R.; Lin, L.; Sridhar, P.K.; Chuang, J. Understanding Digitally Mediated Empathy: An Exploration of Visual, Narrative, and Biosensory Informational Cues. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, Scotland, UK, 2 May 2019; pp. 1–13.
50. Moraveji, N.; Olson, B.; Nguyen, T.; Saadat, M.; Khalighi, Y.; Pea, R.; Heer, J. Peripheral Paced Respiration: Influencing User Physiology during Information Work. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, Santa Barbara, CA, USA, 16 October 2011; pp. 423–428.
51. Wongsuphasawat, K.; Gamburg, A.; Moraveji, N. You Can’t Force Calm: Designing and Evaluating Respiratory Regulating Interfaces for Calming Technology. In Proceedings of the Adjunct Proceedings of the 25th Annual ACM Symposium on User Interface Software and Technology, Cambridge, MA, USA, 7 October 2012; pp. 69–70.
52. Frey, J.; Grabli, M.; Slyper, R.; Cauchard, J.R. Breeze: Sharing Biofeedback through Wearable Technologies. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, CHI ’18, New York, NY, USA, 21 April 2018; pp. 1–12.
53. Mannhart, D.; Lischer, M.; Knecht, S.; du Fay de Lavallaz, J.; Strebel, I.; Serban, T.; Vögeli, D.; Schaer, B.; Osswald, S.; Mueller, C.; et al. Clinical Validation of 5 Direct-to-Consumer Wearable Smart Devices to Detect Atrial Fibrillation: BASEL Wearable Study. JACC Clin. Electrophysiol. 2023, 9, 232–242.
54. Schneeberger, T.; Sauerwein, N.; Anglet, M.S.; Gebhard, P. Stress Management Training Using Biofeedback Guided by Social Agents. In Proceedings of the 26th International Conference on Intelligent User Interfaces, IUI ’21, New York, NY, USA, 14 April 2021; pp. 564–574.
55. Sweeney, K.T.; Ward, T.E.; McLoone, S.F. Artifact Removal in Physiological Signals—Practices and Possibilities. IEEE Trans. Inf. Technol. Biomed. 2012, 16, 488–500.
56. Akbar, F.; Mark, G.; Pavlidis, I.; Gutierrez-Osuna, R. An Empirical Study Comparing Unobtrusive Physiological Sensors for Stress Detection in Computer Work. Sensors 2019, 19, 3766.
57. Shin, H. Deep Convolutional Neural Network-Based Signal Quality Assessment for Photoplethysmogram. Comput. Biol. Med. 2022, 145, 105430.
58. Desquins, T.; Bousefsaf, F.; Pruski, A.; Maaoui, C. A Survey of Photoplethysmography and Imaging Photoplethysmography Quality Assessment Methods. Appl. Sci. 2022, 12, 9582.
59. Gao, H.; Wu, X.; Shi, C.; Gao, Q.; Geng, J. A LSTM-Based Realtime Signal Quality Assessment for Photoplethysmogram and Remote Photoplethysmogram. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19 June 2021; pp. 3831–3840.
60. Pereira, T.; Gadhoumi, K.; Ma, M.; Liu, X.; Xiao, R.; Colorado, R.A.; Keenan, K.J.; Meisel, K.; Hu, X. A Supervised Approach to Robust Photoplethysmography Quality Assessment. IEEE J. Biomed. Health Inform. 2020, 24, 649–657.
61. Elgendi, M. Optimal Signal Quality Index for Photoplethysmogram Signals. Bioengineering 2016, 3, 21.
62. Ahmed, T.; Rahman, M.M.; Nemati, E.; Ahmed, M.Y.; Kuang, J.; Gao, A.J. Remote Breathing Rate Tracking in Stationary Position Using the Motion and Acoustic Sensors of Earables. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, CHI ’23, Hamburg, Germany, 19 April 2023; pp. 1–22.
63. Gashi, S.; Di Lascio, E.; Santini, S. Using Unobtrusive Wearable Sensors to Measure the Physiological Synchrony Between Presenters and Audience Members. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2019, 3, 1–19.
64. Milstein, N.; Gordon, I. Validating Measures of Electrodermal Activity and Heart Rate Variability Derived From the Empatica E4 Utilized in Research Settings That Involve Interactive Dyadic States. Front. Behav. Neurosci. 2020, 14, 148.
65. Stroop, J.R. Studies of Interference in Serial Verbal Reactions. J. Exp. Psychol. 1935, 18, 643–662.
66. Düking, P.; Giessing, L.; Frenkel, M.O.; Koehler, K.; Holmberg, H.C.; Sperlich, B. Wrist-Worn Wearables for Monitoring Heart Rate and Energy Expenditure While Sitting or Performing Light-to-Vigorous Physical Activity: Validation Study. JMIR MHealth UHealth 2020, 8, e16716.
67. Bent, B.; Goldstein, B.A.; Kibbe, W.A.; Dunn, J.P. Investigating Sources of Inaccuracy in Wearable Optical Heart Rate Sensors. Npj Digit. Med. 2020, 3, 1–9.
68. BIOPAC | Data Acquisition, Loggers, Amplifiers, Transducers, Electrodes. Available online: https://www.biopac.com/ (accessed on 23 June 2023).
69. Shimmer Wearable Sensor Technology | Wireless IMU | ECG | EMG | GSR. Available online: https://shimmersensing.com/ (accessed on 9 August 2023).
70. BITalino. Available online: https://www.pluxbiosignals.com/collections/bitalino (accessed on 28 May 2023).
71. ThoughtTech—Unlock Human Potential. Available online: https://thoughttechnology.com/# (accessed on 9 August 2023).
72. Makowski, D.; Pham, T.; Lau, Z.J.; Brammer, J.C.; Lespinasse, F.; Pham, H.; Schölzel, C.; Chen, S.H.A. NeuroKit2: A Python Toolbox for Neurophysiological Signal Processing. Behav. Res. Methods 2021, 53, 1689–1696.
73. van Gent, P.; Farah, H.; Nes, N.; Arem, B. Heart Rate Analysis for Human Factors: Development and Validation of an Open Source Toolkit for Noisy Naturalistic Heart Rate Data. In Proceedings of the 6th HUMANIST Conference, The Hague, The Netherlands, 13–14 June 2018.
74. Föll, S.; Maritsch, M.; Spinola, F.; Mishra, V.; Barata, F.; Kowatsch, T.; Fleisch, E.; Wortmann, F. FLIRT: A Feature Generation Toolkit for Wearable Data. Comput. Methods Programs Biomed. 2021, 212, 106461.
75. Bizzego, A.; Battisti, A.; Gabrieli, G.; Esposito, G.; Furlanello, C. Pyphysio: A Physiological Signal Processing Library for Data Science Approaches in Physiology. SoftwareX 2019, 10, 100287.
76. Guo, Z.; Ding, C.; Hu, X.; Rudin, C. A Supervised Machine Learning Semantic Segmentation Approach for Detecting Artifacts in Plethysmography Signals from Wearables. Physiol. Meas. 2021, 42, 125003.
77. Lehrer, P.M.; Gevirtz, R. Heart Rate Variability Biofeedback: How and Why Does It Work? Front. Psychol. 2014, 5, 756.
78. Giggins, O.M.; Persson, U.M.; Caulfield, B. Biofeedback in Rehabilitation. J. Neuroeng. Rehabil. 2013, 10, 60.
79. Harris, C.R.; Millman, K.J.; van der Walt, S.J.; Gommers, R.; Virtanen, P.; Cournapeau, D.; Wieser, E.; Taylor, J.; Berg, S.; Smith, N.J.; et al. Array Programming with NumPy. Nature 2020, 585, 357–362.
80. Tonacci, A.; Billeci, L.; Burrai, E.; Sansone, F.; Conte, R. Comparative Evaluation of the Autonomic Response to Cognitive and Sensory Stimulations through Wearable Sensors. Sensors 2019, 19, 4661.
81. Birkett, M.A. The Trier Social Stress Test Protocol for Inducing Psychological Stress. J. Vis. Exp. JoVE 2011, 56, e3238.
82. Johnson, K.T.; Narain, J.; Ferguson, C.; Picard, R.; Maes, P. The ECHOS Platform to Enhance Communication for Nonverbal Children with Autism: A Case Study. In Proceedings of the Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, CHI EA ’20, Honolulu, HI, USA, 25 April 2020; pp. 1–8.
83. Alfonso, C.; Garcia-Gonzalez, M.A.; Parrado, E.; Gil-Rojas, J.; Ramos-Castro, J.; Capdevila, L. Agreement between Two Photoplethysmography-Based Wearable Devices for Monitoring Heart Rate during Different Physical Activity Situations: A New Analysis Methodology. Sci. Rep. 2022, 12, 15448.
84. Zanon, M.; Kriara, L.; Lipsmeier, F.; Nobbs, D.; Chatham, C.; Hipp, J.; Lindemann, M. A Quality Metric for Heart Rate Variability from Photoplethysmogram Sensor Data. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 706–709.
85. Altman, D.G.; Bland, J.M. Measurement in Medicine: The Analysis of Method Comparison Studies. J. R. Stat. Society. Ser. D Stat. 1983, 32, 307–317.
86. Giavarina, D. Understanding Bland–Altman Analysis. Biochem. Medica 2015, 25, 141–151.
87. Georgiou, K.; Larentzakis, A.V.; Khamis, N.N.; Alsuhaibani, G.I.; Alaska, Y.A.; Giallafos, E.J. Can Wearable Devices Accurately Measure Heart Rate Variability? A Systematic Review. Folia Medica 2018, 60, 7–20.
88. Lund, A. Measuring Usability with the USE Questionnaire. Usability User Exp. Newsl. STC Usability SIG 2001, 8, 3–6.
89. Gao, M.; Kortum, P.; Oswald, F. Psychometric Evaluation of the USE (Usefulness, Satisfaction, and Ease of Use) Questionnaire for Reliability and Validity. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2018, 62, 1414–1418.
90. Speer, K.E.; Semple, S.; Naumovski, N.; McKune, A.J. Measuring Heart Rate Variability Using Commercially Available Devices in Healthy Children: A Validity and Reliability Study. Eur. J. Investig. Heal. Psychol. Educ. 2020, 10, 390–404.
91. Cowley, B.; Filetti, M.; Lukander, K.; Torniainen, J.; Henelius, A.; Ahonen, L.; Barral, O.; Kosunen, I.; Valtonen, T.; Huotilainen, M.; et al. The Psychophysiology Primer: A Guide to Methods and a Broad Review with a Focus on Human–Computer Interaction. Found. Trends® Hum.–Comput. Interact. 2016, 9, 151–308.
92. van Lier, H.G.; Pieterse, M.E.; Garde, A.; Postel, M.G.; de Haan, H.A.; Vollenbroek-Hutten, M.M.R.; Schraagen, J.M.; Noordzij, M.L. A Standardized Validity Assessment Protocol for Physiological Signals from Wearable Technology: Methodological Underpinnings and an Application to the E4 Biosensor. Behav. Res. Methods 2020, 52, 607–629.
93. Shiri, S.; Feintuch, U.; Weiss, N.; Pustilnik, A.; Geffen, T.; Kay, B.; Meiner, Z.; Berger, I. A Virtual Reality System Combined with Biofeedback for Treating Pediatric Chronic Headache—A Pilot Study. Pain Med. 2013, 14, 621–627.
94. Reali, P.; Tacchino, G.; Rocco, G.; Cerutti, S.; Bianchi, A.M. Heart Rate Variability from Wearables: A Comparative Analysis Among Standard ECG, a Smart Shirt and a Wristband. Stud. Health Technol. Informatics 2019, 261, 128–133.
95. Wang, C.; Pun, T.; Chanel, G. A Comparative Survey of Methods for Remote Heart Rate Detection From Frontal Face Videos. Front. Bioeng. Biotechnol. 2018, 6, 33.
96. Yu, Z.; Li, X.; Zhao, G. Facial-Video-Based Physiological Signal Measurement: Recent Advances and Affective Applications. IEEE Signal Process. Mag. 2021, 38, 50–58.
97. Joshi, J.; Bianchi-Berthouze, N.; Cho, Y. Self-Adversarial Multi-scale Contrastive Learning for Semantic Segmentation of Thermal Facial Images. arXiv 2022, arXiv:2209.10700.
98. Manullang, M.C.T.; Lin, Y.H.; Lai, S.J.; Chou, N.K. Implementation of Thermal Camera for Non-Contact Physiological Measurement: A Systematic Review. Sensors 2021, 21, 7777.
99. Gault, T.; Farag, A. A Fully Automatic Method to Extract the Heart Rate from Thermal Video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Portland, OR, USA, 25–27 June 2013; pp. 336–341.
100. Gilgen-Ammann, R.; Schweizer, T.; Wyss, T. RR Interval Signal Quality of a Heart Rate Monitor and an ECG Holter at Rest and during Exercise. Eur. J. Appl. Physiol. 2019, 119, 1525–1532.
101. Cassirame, J.; Vanhaesebrouck, R.; Chevrolat, S.; Mourot, L. Accuracy of the Garmin 920 XT HRM to Perform HRV Analysis. Australas. Phys. Eng. Sci. Med. 2017, 40, 831–839.
102. Chow, H.W.; Yang, C.C. Accuracy of Optical Heart Rate Sensing Technology in Wearable Fitness Trackers for Young and Older Adults: Validation and Comparison Study. JMIR MHealth UHealth 2020, 8, e14707.
103. Müller, A.M.; Wang, N.X.; Yao, J.; Tan, C.S.; Low, I.C.C.; Lim, N.; Tan, J.; Tan, A.; Müller-Riemenschneider, F. Heart Rate Measures From Wrist-Worn Activity Trackers in a Laboratory and Free-Living Setting: Validation Study. JMIR MHealth UHealth 2019, 7, e14120.
104. Batista, D.; Plácido da Silva, H.; Fred, A.; Moreira, C.; Reis, M.; Ferreira, H.A. Benchmarking of the BITalino Biomedical Toolkit against an Established Gold Standard. Healthc. Technol. Lett. 2019, 6, 32–36.

| Sensing and Signal Acquisition Layer Specifications | |
|---|---|
| Parameter | Specifications |
| Sampling rate | 10–10,000 samples per second |
| ADC | 10/12 bit |
| Vref | 3.3 V/5 V |
| Baudrate | 9600–2,000,000 |
| Data transmission mode | USB/Bluetooth |
| No. of channels | 1 to the maximum supported by the specific Arduino board |
| Installation and package contents | |
| Installation of the interface | pip install PhysioKit2 |
| GitHub repository | https://github.com/PhysiologicAILab/PhysioKit (accessed on 28 September 2023) |
| Configuration files | https://github.com/PhysiologicAILab/PhysioKit/tree/main/configs (accessed on 28 September 2023) |
| Codes to program Arduino | https://github.com/PhysiologicAILab/PhysioKit/tree/main/arduino (accessed on 28 September 2023) |
| 3D-printable model for a wristband case | https://github.com/PhysiologicAILab/PhysioKit/tree/main/CAD_Models (accessed on 28 September 2023) |
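
As a rough illustration of how the acquisition parameters above relate to one another, the following Python sketch converts a raw ADC count to volts and estimates the serial baud rate a given sampling configuration would need. It is not taken from the PhysioKit code base: the function names, the linear unipolar ADC model (full scale at 2^bits − 1), the two-bytes-per-sample payload, and the 10-bits-per-byte serial framing (8 data bits plus start and stop bits, no packet overhead) are all assumptions made for this example.

```python
def adc_to_volts(count: int, bits: int = 10, vref: float = 5.0) -> float:
    """Convert a raw ADC count to volts.

    Assumes a linear, unipolar ADC whose full-scale count (2**bits - 1)
    corresponds to vref. This is an illustrative model, not PhysioKit's code.
    """
    max_count = (1 << bits) - 1
    if not 0 <= count <= max_count:
        raise ValueError(f"count must be within 0..{max_count}")
    return count * vref / max_count


def min_baud(sample_rate: int, channels: int, bytes_per_sample: int = 2) -> int:
    """Estimate the lowest baud rate that can carry the raw sample stream.

    Assumes 10 bits on the wire per payload byte (8N1 framing) and ignores
    any packet headers or delimiters, so real requirements will be higher.
    """
    bits_per_byte_on_wire = 10  # 1 start bit + 8 data bits + 1 stop bit
    return sample_rate * channels * bytes_per_sample * bits_per_byte_on_wire


if __name__ == "__main__":
    # Mid-scale reading on a 12-bit ADC with a 3.3 V reference.
    print(adc_to_volts(2048, bits=12, vref=3.3))
    # One channel at the top of the listed sampling-rate range.
    print(min_baud(sample_rate=10_000, channels=1))
```

Under these assumptions, a single channel sampled at 10,000 samples per second fits within the listed baud-rate range, whereas adding channels at that rate quickly approaches the upper limit.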

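| Metric | PPG Site | Experimental Condition | RMSE | MAE | SD | Pearson (r) |
|---|---|---|---|---|---|---|
| Heart Rate (beats per minute) | Finger | Baseline | 3.63 | 2.43 | 3.38 | 0.96 |
| Heart Rate (beats per minute) | Finger | Math (Easy) | 2.08 | 1.44 | 2.02 | 0.98 |
| Heart Rate (beats per minute) | Finger | Math (Difficult) | 2.41 | 1.75 | 2.41 | 0.98 |
| Heart Rate (beats per minute) | Finger | Face Movement | 2.17 | 1.49 | 2.09 | 0.98 |
| Heart Rate (beats per minute) | Finger | All sessions combined | 2.65 | 1.78 | 2.65 | 0.97 |
| Heart Rate (beats per minute) | Ear | Baseline | 3.71 | 2.46 | 3.41 | 0.95 |
| Heart Rate (beats per minute) | Ear | Math (Easy) | 1.0 | 0.73 | 0.92 | 1.0 |
| Heart Rate (beats per minute) | Ear | Math (Difficult) | 2.08 | 1.46 | 2.07 | 0.98 |
| Heart Rate (beats per minute) | Ear | Face Movement | 2.11 | 1.40 | 2.05 | 0.98 |
| Heart Rate (beats per minute) | Ear | All sessions combined | 2.45 | 1.53 | 2.43 | 0.97 |
| Pulse Rate Variability (pNN50) | Finger | Baseline | 4.31 | 3.46 | 4.28 | 0.98 |
| Pulse Rate Variability (pNN50) | Finger | Math (Easy) | 11.33 | 6.54 | 10.6 | 0.91 |
| Pulse Rate Variability (pNN50) | Finger | Math (Difficult) | 12.11 | 9.53 | 12.09 | 0.75 |
| Pulse Rate Variability (pNN50) | Finger | Face Movement | 8.26 | 5.66 | 8.25 | 0.92 |
| Pulse Rate Variability (pNN50) | Finger | All sessions combined | 9.4 | 6.26 | 9.34 | 0.89 |
| Pulse Rate Variability (pNN50) | Ear | Baseline | 4.36 | 3.78 | 4.21 | 0.99 |
| Pulse Rate Variability (pNN50) | Ear | Math (Easy) | 9.85 | 5.75 | 9.27 | 0.91 |
| Pulse Rate Variability (pNN50) | Ear | Math (Difficult) | 15.26 | 11.13 | 15.12 | 0.64 |
| Pulse Rate Variability (pNN50) | Ear | Face Movement | 9.38 | 7.27 | 9.32 | 0.88 |
| Pulse Rate Variability (pNN50) | Ear | All sessions combined | 10.42 | 7.02 | 10.26 | 0.87 |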
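
For readers who want to compute comparable agreement statistics on their own recordings, the sketch below shows one conventional way to obtain RMSE, MAE, the standard deviation of the error, and Pearson's r from paired reference and test values. The exact definitions used for the table above are not restated here, so this code rests on assumptions: the error is taken as test minus reference, SD is the sample standard deviation of that error, and the function name `agreement_metrics` is hypothetical. The input values in the usage example are made up for illustration and are not study data.

```python
import math
from statistics import mean, stdev


def agreement_metrics(reference, estimate):
    """Return RMSE, MAE, SD of error, and Pearson's r for paired series.

    Assumptions (not confirmed by the paper): error = estimate - reference,
    and SD is the sample standard deviation of that error.
    """
    if len(reference) != len(estimate) or len(reference) < 2:
        raise ValueError("need two equally long series with at least 2 values")

    errors = [e - r for r, e in zip(reference, estimate)]
    rmse = math.sqrt(mean([err ** 2 for err in errors]))
    mae = mean([abs(err) for err in errors])
    sd = stdev(errors)

    # Pearson correlation computed from deviations about each series mean.
    ref_mean, est_mean = mean(reference), mean(estimate)
    ref_dev = [r - ref_mean for r in reference]
    est_dev = [e - est_mean for e in estimate]
    denom = math.sqrt(sum(d * d for d in ref_dev) * sum(d * d for d in est_dev))
    if denom == 0:
        raise ValueError("Pearson's r is undefined for a constant series")
    pearson_r = sum(a * b for a, b in zip(ref_dev, est_dev)) / denom

    return {"rmse": rmse, "mae": mae, "sd": sd, "pearson_r": pearson_r}


if __name__ == "__main__":
    # Illustrative heart-rate values in beats per minute (not study data).
    reference_hr = [60, 62, 64, 66]
    toolkit_hr = [61, 61, 65, 67]
    print(agreement_metrics(reference_hr, toolkit_hr))
```

Reporting RMSE alongside the SD of the error helps separate systematic offset from random scatter: when the two are close, as in most rows of the table, the mean error is small relative to the spread.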

| Application | Application Type | No. of Members | Project Duration |
|---|---|---|---|
| Using physiological reactions as emotional responses to music | Interventional | 6 | 4 weeks |
| Emotion recognition during watching of videos | Interventional | 6 | 4 weeks |
| Using artistic biofeedback to encourage mindfulness | Interventional | 1 | 6 weeks |
| Using acute stress response to determine game difficulty | Interventional | 7 | 4 weeks |
| Generating a dataset for an affective music recommendation system | Passive | 7 | 4 weeks |
| Adapting an endless runner game to player stress levels | Interventional | 8 7 | 4 weeks |
| Influencing presentation experience with social biofeedback | Interventional | 1 | 6 weeks |
| Mapping stress in virtual reality | Passive | 1 | 6 weeks |
| Assessing synchronous heartbeats during a virtual reality game | Passive | 2 | 6 weeks |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Joshi, J.; Wang, K.; Cho, Y. PhysioKit: An Open-Source, Low-Cost Physiological Computing Toolkit for Single- and Multi-User Studies. Sensors 2023, 23, 8244. https://doi.org/10.3390/s23198244
