Abstract

Spatial frequency domain imaging (SFDI) is well established in biology and medicine for non-contact, wide-field imaging of optical properties and 3D topography. Especially for turbid media with displaced, tilted or irregularly shaped surfaces, the reliable quantitative measurement of diffuse reflectance requires efficient calibration and correction methods. In this work, we present the implementation of a generic and hardware independent calibration routine for SFDI setups based on the so-called pinhole camera model for both projection and detection. Using a two-step geometric and intensity calibration, we obtain an imaging model that efficiently and accurately determines 3D topography and diffuse reflectance for subsequently measured samples, taking into account their relative distance and orientation to the camera and projector, as well as the distortions of the optical system. Derived correction procedures for position- and orientation-dependent changes in spatial frequency and intensity allow the determination of the effective scattering coefficient and the absorption coefficient when measuring a spherical optical phantom at three different measurement positions and at nine wavelengths with an average error of 5% and 12%, respectively. Model-based calibration allows the characterization of the imaging properties of the entire SFDI system without prior knowledge, enabling the future development of a digital twin for synthetic data generation or more robust evaluation methods.

1. Introduction

Spatial frequency domain imaging (SFDI) is a non-contact and fast measurement method to determine both the 3D shape and optical properties of scattering samples. A major advantage is the high lateral resolution that can be achieved to quantitatively map, for example, the effective volume scattering coefficient , the absorption coefficient , the phase function parameter or the surface scattering parameter [1,2]. The technology has considerable applications in biology and medicine, where the separation of scattering and absorption can provide valuable information for imaging different tissue types [3,4,5]. The measurement principle is based on the projection of periodic patterns onto a sample, usually sinusoidally modulated along one spatial direction, and the detection of the diffusely scattered light with a camera. A frequently used method is phase shifting, i.e., subsequently recording a sequence of phase-shifted sinusoidal patterns to calculate the amplitude modulation (AC), phase and offset (DC) using a single-pixel demodulation algorithm [6]. Other methods aim to reduce the number of projections to ideally only a single snapshot to enable real-time measurements based on multi-pixel demodulation [7,8]. In both cases, the individual modulation transfer function (MTF) describing the response of the optical system at different spatial frequencies must be taken into account. The system’s MTF can be determined, for example, by measuring a reference object with known optical properties. In subsequent measurements, the MTF of the system can then be separated from the amplitude modulation emanating from the sample, i.e., the sample’s MTF, also called the diffuse reflection [9]. Based on this characteristic quantity, the optical properties of the sample, e.g., and , can be determined by the regression of a suitable light propagation model such as the diffusion equation or an analytical solution of the radiative transfer equation [10,11]. In addition, approaches based on machine learning and deep learning are gaining importance [12,13,14]. However, regardless of the evaluation method, the determination of diffuse reflectance is limited in accuracy and susceptible to various sources of error, especially when measuring samples with irregularly shaped or inclined surfaces and varying working distances [15]. This raises the question of how to achieve robust and repeatable system calibration and data acquisition for the typical measurement conditions encountered in the study of biological tissue.

In the simplified case of a flat sample, the diffuse reflectance can be corrected by measuring a reference sample with known optical properties at exactly the same position [10]. For more complex sample surfaces, various profile corrections have been reported based on phase profilometry [16,17,18,19]. In addition to triangulation-based approaches, which require knowledge of the system parameters, e.g., the relative position between the projector and camera, multi-height calibration, i.e., measuring a reference sample at known relative heights, is well established [20,21]. In both cases, a linear relationship between phase and height change relative to a defined reference plane is assumed. For additional angular corrections, the positions and optical axes of the camera and projector relative to the sample must be known or sufficiently accurately estimated. Both the calibration effort and the required prior knowledge can be reduced by an improved modeling of the system. In this context, the pinhole camera model introduced by Zhang [22] is noteworthy, originally developed for camera calibration and later extended for projector-camera systems focusing on improved 3D data determination [23,24,25]. The advantages of an easy-to-use calibration routine combined with the comprehensive characterization of imaging properties motivated the application and extension of the pinhole model in the field of structured illumination.

In this paper, we present a two-step calibration routine for SFDI systems: geometric calibration involves measuring a calibration target with a circular grid at different positions to determine the imaging characteristics and distortion of the projector and camera using the pinhole camera model. After transformation to a uniform coordinate system, the geometry of the projector-camera system can be described consistently, which is used to calculate a 3D map of the sample surface using a nonlinear phase distance model. Thus, for each 3D point, the relative distance to the camera and the projector as well as the projection and acquisition angle can be specified in cylindrical coordinates. Knowledge of the distortion parameters allows for their correction after image acquisition and the calculation of pre-distorted phase images, which produce sinusoidal patterns of high homogeneity when projected onto a reference plane for a given working distance. If the sample surface deviates from the reference surface, the resulting local change in spatial frequency can be quantified geometrically. The second calibration step uses a calibration sample with known optical properties to characterize the MTF of the system and the heterogeneous intensity distribution within the calibrated volume. By parameterization and interpolation within the entire calibrated volume, the model provides the system MTF and reference intensity as a look-up table for subsequent measurements. Optical phantoms were measured for validation, showing how the information from both calibration steps can be efficiently used for post-processing the data without the need for further measurements. In particular, and could be determined for a spherical phantom in three different positions with an average deviation of 5% and 12%, respectively, at nine different wavelengths.

The aim of this work was to develop a generic and hardware-independent calibration routine for SFDI setups based on the so-called pinhole camera model for projection and detection. Through a two-step geometric and intensity calibration, we obtain an imaging model that can be used to accurately determine the diffuse reflectance of turbid media with displaced or irregular surfaces. In particular, we present correction methods to account for position- and orientation-dependent changes in the spatial frequency and intensity, which are validated by measuring and of a spherical optical phantom at different positions and wavelengths.

2. Materials and Methods

2.1. Multispectral SFDI Setup

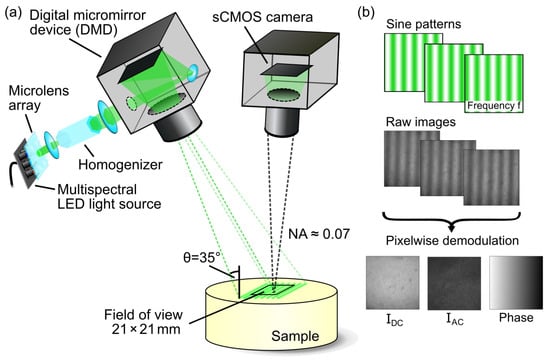

Calibration and subsequent measurements were performed using a multispectral SFDI system schematically shown in Figure 1a. The projection unit consists of a digital micromirror device (DLP LightCrafter 6500, Texas Instruments, Dallas, TX, USA) in combination with a self-configured LED light source that provides subsequent illumination based on nine switchable LEDs (XLamp XP-E and XQ-E series, CreeLED, Durham, NC, USA and LUXEON SunPlus series, Lumileds, San Jose, CA, USA) with peak emissions between 447 nm and 945 nm and a narrow bandwidth of about 10 nm each. The light emitted by the LEDs is collimated by a custom microlens array, which, in combination with a light guide homogenization rod, provides virtually homogeneous illumination. The DLP projects sinusoidal intensity patterns at an oblique angle of ° onto the sample and the diffusely reflected light from a 21 mm × 21 mm area is detected by a vertically mounted, cooled sCMOS camera (Zyla 4.2 sCMOS, Andor Technology, Belfast, UK) with a numerical aperture of about 0.07. For the measurement, different spatial frequencies between and are recorded with at least three phase shifts of 0, and , respectively. The exposure time is about 20 ms per image, resulting in a total measurement time of about 330 ms for five spatial frequencies with a complete sequence of 16 patterns (15 phase patterns + 1 dark pattern) per wavelength. Thus, the multispectral data acquisition takes less than 3 s in total. As shown in Figure 1b, the pixel-wise demodulation provides the offset , the modulation amplitude and the phase. A detailed description of the additional phase unwrapping used to avoid phase jumps can be found in Geiger et al. [26].

Figure 1.

Schematic drawing of (a) the multispectral spatial frequency domain imaging (SFDI) setup consisting of a projection unit with a digital micromirror device (DMD), a tunable LED lightsource and a sCMOS camera to detect the diffuse reflect light. (b) Pixel-wise demodulation for spatial frequency yields the offset , the modulation amplitude and the unwrapped phase.

2.2. Calibration Model

The basis for calibration is an appropriate model that describes the imaging process, in the most general case as a mapping of a ray from 3D space to a 2D pixel. Depending on the imaging system, there are both parameterized and non-parameterized models, e.g., for pinhole cameras, stereo cameras, fisheye cameras or catadioptric cameras, as shown in detail by Ramalingam [27] and Grossberg [28]. SFDI systems with commercial camera objectives of a low numerical aperture (NA), i.e., high f-number, can usually be approximated by a pinhole camera, for which Zhang [22] has presented a parameterized calibration model taking distortion into account. This so-called pinhole camera model assumes collinear mapping of object points along straight lines with a common intersection point in the projection center (pinhole) onto an image plane [22]. Mathematically, this corresponds to the transformation of a 3D point to its 2D projection on the camera chip given by

with the scale factor s, the camera intrinsic matrix A and the extrinsic rotation-translation matrix . The extrinsic parameters transform the global coordinates of an object by means of translation t and rotation R into the local camera coordinate system. Especially for systems consisting of several cameras or projectors, this allows the corresponding coordinates of the subsystems to be correlated. The camera intrinsic matrix

contains the focal lengths and and the principal points (, ), which together with the scale factor are called intrinsic parameters. In addition, the model can be extended to take into account radial distortions that occur in real systems approximated by

with the radial distortion coefficients and , as well as lateral distortions approximated by

with the lateral distortion coefficients and [29].

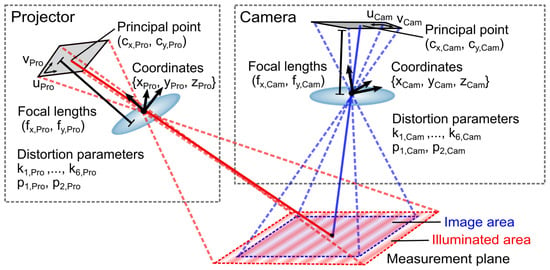

Figure 2 shows a schematic illustration of the pinhole camera model for a camera–projector system typically used for SFDI. In this case, both the camera and the projector are described individually by the pinhole camera model with its own set of parameters and coordinates.

Figure 2.

Schematic illustration of the pinhole camera model for a projector–camera system with intrinsic and extrinsic parameters.

There are several implementations of this parameterized pinhole camera model for general calibration purposes, such as the Python module Camera Calibration and 3D Reconstruction from the open source computer vision library (openCV) [30], which was used in this work. For Matlab, an implementation with comparable functionality has recently become available as part of the Camera Calibration Toolbox [31].

2.3. Calibration Routine

The calibration of the SFDI system is divided into two parts: the first calibration routine uses a circular grid to characterize the imaging properties of the camera and projector based on the pinhole camera model (referred to as geometric calibration), while afterwards, a calibration standard with known reflectance properties is used for intensity calibration.

2.3.1. Geometric Calibration

The aim of geometric calibration is to determine the extrinsic and intrinsic parameters using the pinhole camera model for both the camera and the projection system. If we first restrict ourselves to camera calibration, according to Zhang [22], it is sufficient to take snapshots of a calibration target in at least three different orientations and distances. The calibration target is essentially a two-dimensional marker structure consisting of regularly and grid-like arranged circles, points or a checkerboard. The two main axes of the grid span a local coordinate system in which each marker point can be uniquely assigned a 2D coordinate (in units of the grid constant a), which will be referred to as object points in the following. After capturing the calibration target in different positions, an image processing algorithm recognizes the individual marker structures in each of the resulting camera images. Their pixel coordinates are referred to as image points in the following and, together with the corresponding object points, serve as input parameters for the actual modeling. Estimating the pose of the camera based on a set of 2D points and their corresponding 3D points is also known as the perspective-n-point problem [32]. The solution approach followed in this study, called P3P [33], uses a Levenberg–Marquardt optimization algorithm to determine the rotation, translation and intrinsic parameters according to Equation (1), which minimizes the reprojection error of the 3D–2D point correspondences for all acquired positions of the calibration target. Accordingly, the camera calibration is valid only within the volume originally sampled by the calibration target, hereafter referred to as the calibrated volume. The extent of the calibrated volume is hardware limited, laterally by the field of view and in the z-direction by the depth of field of the camera.

The procedure described for a single camera can be extended to the calibration of a projector using the same pinhole camera model, but with reversed conceptualization. The main difference is the determination of the image points, which are not directly accessible with the projector in contrast to the camera. Instead, phase images are projected onto the calibration target, allowing the pixel rows and columns of the projector chip to be encoded onto the measurement plane. By evaluating the unique phase at each marker structure, the required correspondence can thus be found indirectly. In this work, the phase is determined using the phase shift method, which is based on the demodulation of at least three sequentially recorded sinusoidal patterns, each shifted by . The phase patterns are adapted to the resolution of the DMD chip and are projected both along the direction of the pixel columns and rows, while the pixel-based spatial frequencies and have to be specified in each case. Under this condition, the measured phase and for patterns along rows and columns, respectively, can be uniquely associated with a particular pixel coordinate on the DMD chip, according to

Once the two phase values and thus the corresponding image point for the projector calibration are found for each marker point of the calibration target, the subsequent modeling based on the pinhole camera model follows the approach already described for the camera.

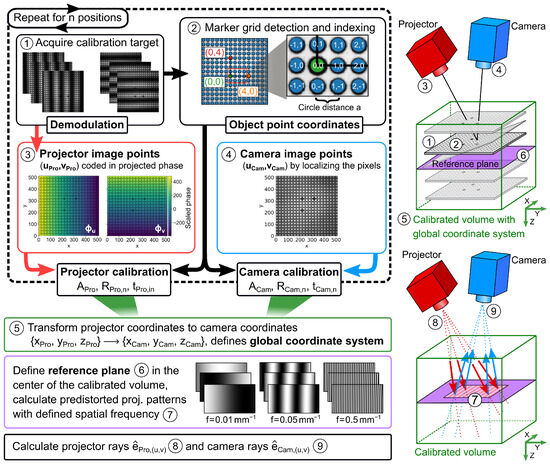

To better illustrate the combined calibration of the camera and projector, an overview of the processing steps for the geometric calibration is shown in Figure 3. In step ➀, the calibration target is positioned in the measurement field and three phase patterns are recorded along the columns and rows of the projector. After demodulation, in step ➁, we use the DC images (corresponding to images under homogeneous illumination) to detect the marker circles using the computer vision functionality of the openCV. Three points with a thicker outline, thus distinguishable from the other points, define the origin and the main axes of the object points with known distance a. In step ➂, the pixel positions in the camera image, i.e., the camera image points, are determined for each marker. In step ➃, and are determined for each marker circle from the demodulated and unwrapped phase images. Equation (5) yields the projector image points. Steps ➀ to ➃ are then repeated for n different positions, but at least three times. The camera pinhole model is then determined separately for the camera and projector image points of all n positions with their respective object points, resulting in their intrinsic and extrinsic parameters. Although this technically completes the geometric calibration, there are some useful post-processing steps. In step ➄, we define, without a loss of generality, a global coordinate system by transforming the projector coordinates into the camera coordinate system. The transformation matrix is obtained by transforming to . In step ➅, we define a reference plane in the center of the previously calibrated volume, aligned parallel to the camera chip. Using the imaging model of the projector and the distortion parameters, step ➆ computes a series of predistorted phase patterns that, when projected onto the reference plane, yield equidistant sinusoidal fringes with selectable spatial frequencies in units of , ranging from to . Finally, in steps ➇ and ➈, a normalized direction vector , according to the imaging model is assigned to each pixel of the projector and camera chip, respectively, which will be referred to as projector and camera rays in the following.

Figure 3.

Schematic illustration with an overview of the processing steps for the geometric calibration.

2.3.2. Parametrized 3D Point Estimation

Geometric calibration allows a 3D point cloud to be determined by triangulation using the phase information. This means that for a given camera pixel, the measured global phase is directly linked to a unique 3D coordinate. Instead of a strictly geometric determination, in this work, we applied a parameterized approach using the presented pinhole camera model, as reported by Lu [34]. For this purpose, each camera ray defines a vector within the calibrated volume, which is sampled with a certain number of 3D points with known distances from the camera center. Using the inverted imaging model, each of these 3D points can be associated with the corresponding 2D image point of the projector (i.e., DMD pixel) and thus, with the corresponding global phase . An efficient mapping between the scalars and is obtained by the regression of a cubic polynomial for each camera ray according to

Once the coefficients are determined, the distances for all camera pixels of a measured phase image can be computed efficiently and very quickly in the following, using a hyperbolic solution [35], which we call the phase-distance conversion in the following. Additional multiplication with the camera rays , i.e., the direction vectors, yields the corresponding 3D coordinate:

2.3.3. Calculating Normals and Angles for Spatial Frequency Correction

For the further post-processing of the point cloud, the open source library Open3D [36] was used to calculate a normal vector for each 3D point considering its nearest neighbors. The normal vectors , together with the corresponding projector rays and the direction of the fringe maxima (i.e., the direction of the constant phase in the reference plane), span a local coordinate system for every 3D point with the direction vectors given by

as shown schematically in Figure 4a. After nominating the direction vectors via

we can calculate the rotation matrix

which transforms the camera and projections rays from global to local coordinates according to

After the transformation from cartesian to spherical coordinates according to

we obtain the projection angles and detection angles for each 3D point, respectively.

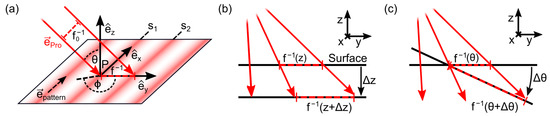

Figure 4.

(a) Schematic representation of a sinusoidal pattern with spatial frequency projected obliquely onto a plane surface in the local coordinate system of a point . (b) Both a height shift of Δz and (c) an inclination of the sample relative to the reference plane by lead to scaling of the local spatial frequency f observed on the sample surface.

As shown in Figure 4a, the oblique projection of a fringe pattern with a spatial period onto the sample surface results in a scaled spatial period , i.e., a changed distance between two fringe maxima, denoted by and . The scaling factor can be described geometrically for a tilt parallel to the fringes as

and thus

The scaling factor for a tilt perpendicular to the fringes is calculated similarly by

Knowing the spatial frequency at a point in the reference plane illuminated at and , the spatial frequency at the point of a relatively shifted and tilted surface is given as

with the magnification factor

2.3.4. Intensity Calibration

When determining the absolute diffuse reflectance, the inhomogeneous intensity distribution and the MTF of the system, which were neglected so far during the geometric calibration, must also be taken into account. For this purpose, the intensity calibration is performed in a second step using a reference standard of known diffuse reflectance measured at (usually 5 to 6) positions evenly distributed over the calibrated volume. At each position, spatial frequencies (usually 8 to 9) are uniformly acquired between and . After demodulation, as part of the data post-processing, we apply all demonstrated tools of geometric calibration to obtain pixel-wise 3D coordinates, normal vectors, angles and corrected spatial frequencies in addition to the AC and DC images. Since the detected intensity depends not only on the absolute position of the reference target, but also on its relative position to the projector and camera, we use a Lambert correction model:

assuming Lambertian reflection of the reference target as an approximation and thus

To achieve an efficient assignment and later on, the retrieval of the reference intensities despite the large parameter set, we describe the corrected intensities pixelwise as a function of both their 3D position (e.g., distance from the camera) and their corrected spatial frequency f. Thus, for each camera pixel (u,v), we obtain grid-like arranged reference intensities, which correspond to a 2D hypersurface given by

With additional 2D interpolation, the intensity reference can be approximated within the entire calibrated volume and stored in the form of a look-up table.

2.4. Phantom Measurements

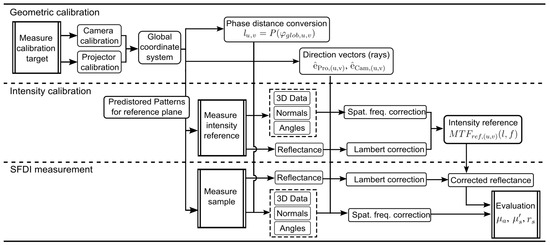

After measuring a sample such as an optical phantom, we repeat the evaluation steps shown so far. The geometric calibration provides a 3D model of the sample surface with corrected spatial frequencies and angles and . The diffuse reflectance is obtained by

As a summary, both the calibration routines and the subsequent calculation steps for the actual measurement are shown in Figure 5 as a flowchart.

Figure 5.

Flowchart showing an overview of the calibration routines and processing steps with spatial frequency and intensity correction applied to an SFDI measurement.

To evaluate the optical properties of the measured sample, we use an analytical solution of the radiative transfer equation based on the approximation for semi-infinite media to model light propagation [11,37,38]. The model was applied to the post-processed data using a nonlinear least squares algorithm with a computational accuracy of order , resulting in , and the surface roughness parameter for each pixel. Additional binning reduced both evaluation time and data volume.

Optical phantoms made of epoxy resin with additional scattering particles (titanium dioxide) and a mineral absorber (hematite) were used for the validation measurements. The geometry and surface properties of the phantoms can be controlled by molding or mechanical finishing. In the present case, flat cylindrical phantoms and spherical caps of different sizes were used. The reference values of their optical properties were determined with an integrating sphere [39].

3. Results and Discussion

3.1. Geometric Model of the SFDI Setup in Global Coordinates

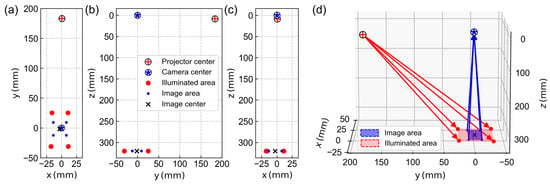

For the geometric calibration of the SFDI device, an aluminum plate with a printed grid of circles with a 1 mm diameter and 2 mm spacing was used. The depth of field of the camera limited the volume for calibration to a lateral with a height of centered around the focus position. Since the grid spacing enters Equation (1) via the scaling factor, the extrinsic parameters shown in Figure 6 result directly in millimeter units. The origin of the global coordinate system was chosen at the position of the virtual camera center, the reference plane is at a distance of below and the virtual projector center is at . The model predicts a camera field of view in the reference plane (hereafter called the image area) with a lateral extent of 21.6 mm, while the projector field of view (hereafter called the illuminated area) has a lateral extent of . The camera image center in the reference plane is at , i.e., the optical axis of the camera appears to be aligned almost exactly perpendicular to the reference plane.

Figure 6.

(a–c) 2D representation of the virtual camera and projector center, the illuminated area and the image area in the global coordinate system as they result from the geometric calibration. (d) The corresponding 3D illustration with the outer corner rays of both the camera (blue) and projector (red).

Compared to the real SFDI setup, some of these parameters can be directly validated, e.g., the size of the camera field of view in the reference plane can be easily measured and checked with a ruler. Regarding the illumination field, it should be noted that the DMD chip may not be fully illuminated and its margins are usually obscured by parts of the projection optics. The fact that the illumination field is significantly larger than the camera image serves to avoid marginal light propagation effects. The relative position of the camera, projector and reference plane could also be roughly confirmed with a tape measure, although the model parameters do not necessarily reflect the exact setup geometry. In particular, the virtual camera and projector centers are not characteristic or distinctive points of the real optical system. Strictly speaking, their specification is only meaningful if the entire set of model parameters is taken into account.

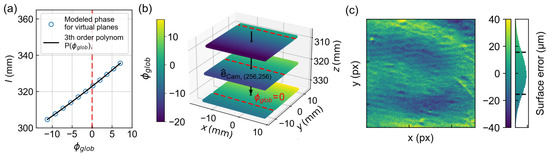

3.2. Phase-Distance Conversion

The geometric calibration allows the translation of the measured and unwrapped phase directly into a distance according to Equation (7). To determine this correspondence, ten virtual planes were defined in the calibrated volume, having been shifted in the z-direction. The projected phase as seen from the camera was then calculated for each plane using the known imaging model, shown exemplarily in Figure 7a for the pixel in the center of the camera. By fitting a third-degree polynomial according to Equation (7), the unique phase–distance relationship can be determined and stored for each pixel. In the subsequent measurement, it is sufficient to specify the camera pixel index, the phase value and the camera ray to calculate or, according to Equation (8), the corresponding 3D coordinate directly. Figure 7b shows three depicted planes in a 3D representation with color-coded , which can be assigned to a specific column of the DMD chip according to Equation (5). Due to the oblique projection direction, appears in the camera image at a different position in each plane. The black arrow again represents the camera ray of the center pixel. For validation, a flat reference target was measured in the reference plane and its 3D topography was determined using the phase–distance conversion. Figure 7c shows the surface error of the obtained point cloud determined by the regression of a plane to an average deviation of about .

Figure 7.

For a single pixel, e.g., in the center of the CCD, can be described as a function of the distance between the sample surface and the camera (blue dots). Fitting a third degree polynomial gives the analytical phase–distance conversion (black line). (b) shows three planes with a distance of 10 mm, centered around the reference plane, with color coding showing . The black arrow corresponds to the profile shown in (a) while the red dashed line marks in each case. (c) For validation, we measured the 3D topography of a flat reference target using the presented phase–distance conversion. The mean surface deviation was determined by the regression of a plane as .

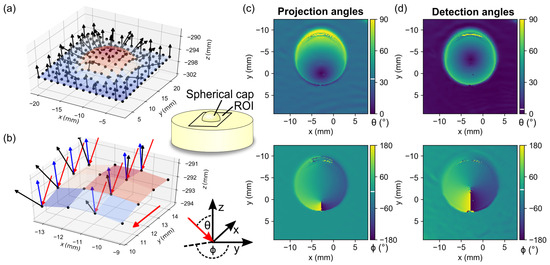

3.3. Calculating the Projection and Detection Angles

To illustrate the determination of the projection and detection angles, a custom-made planar phantom with a central spherical cap with a diameter of approximately 10 mm was measured. Figure 8a shows the 3D point cloud obtained by the phase–distance conversion, with pixel binning chosen for clarity. The black arrows illustrate the normal vectors computed for each pixel, while the color map codes are . Figure 8b shows an enlarged section of the point cloud with the additional representation of the camera rays (blue) and projector rays (red) as well as the propagation direction of the fringe pattern (red). Figure 8c,d show the polar angles calculated according to Equation (14) for projection and detection, respectively. The incidence angle of the projection is on average 35°, the detection angle of the camera is about 4° and and are on average 0°.

Figure 8.

(a) 3D topography of an optical phantom with a centered spherical cap showing the normal vectors (black arrows) and color-coded z-coordinate. (b) Detailed view with the additional marking of the incident projector direction (red arrows) and the camera detection (blue arrows). (c,d) show the polar angles and , respectively, with the mean angles indicated by a white bar in the colorbar.

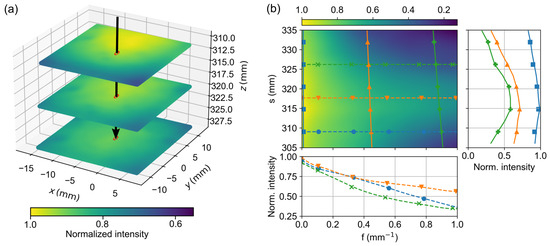

3.4. Look-Up Table for Intensity Reference

For the intensity calibration, a white coated, highly scattered aluminum plate with known reflectance properties was used as a reference target for further intensity calibration. It was measured at six different positions in the calibrated volume, each at nine spatial frequencies ranging from to . Figure 9a shows the corresponding DC images for measurements at three positions with a respective distance of 10 mm. It can be clearly seen that the intensity distribution is not homogeneous and changes laterally as well as for different heights. The black arrow marks the camera ray associated with the pixel at the center of the CCD chip. Figure 9b shows, for this pixel, the corresponding reflectance reference map obtained by an 2D interpolation of the acquired measurements. It shows the color-coded reflectance for this pixel as a function of distance from the camera along and as a function of the spatial frequency f. The outset on the right shows three intensity profiles as a function of but constant spatial frequencies at , and , corresponding to the vertical lines in Figure 9a. In all three cases, the intensity decreases at short and long distances due to the limited depth of field of the camera. The intensity maximum at 316 mm indicates the distance of the camera focal plane. The outset below shows the change in intensity as a function of the spatial frequency for three fixed distances at about , and . These curves correspond to the horizontal profiles in Figure 9a. Fringes with a high spatial frequency can obviously only be resolved properly near the focal plane, at distances from approx. ; the decrease of the MTF indicates blurring.

Figure 9.

(a) Three-dimensional representation of the DC images for three intensity reference measurements, each shifted by in the z-direction, with an exemplary camera ray marked in the center of the image (black arrow). (b) shows for this selected ray the reflectance resulting from the intensity calibration, which is plotted in 2D, color-coded against spatial frequency and distance. The right plot shows an example of the change in reflectance at , , and for different distances as solid lines, and the bottom plot shows the MTF in the range between and for distances 310 mm, 320 mm and 325 mm as dashed lines.

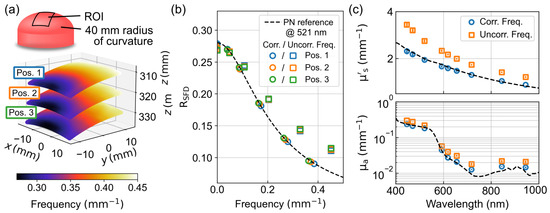

3.5. Determining Multispectral Optical Properties of a Hemispherical Phantom

To evaluate the influence of frequency and intensity corrections on the determination of optical properties, another custom-made hemispherical phantom with a radius of curvature of 40 mm was measured at three different positions in the calibrated volume. The SFD measurement was performed at all nine available wavelengths recording six spatial frequencies between and , with respect to the reference plane, and three phase patterns each. Figure 10a shows the three measurement positions where Pos. 2 was approximately at the reference plane and Pos. 1 and Pos. 3 were 10 mm above and below it, respectively. The false colors show the geometrically corrected spatial frequency due to the offset and curvature of the sample for a spatial frequency of . A clear gradient between and becomes apparent, which increases with shallower angles between the illumination direction and normal surface. Averaged over the entire field of view, the curvature causes about 15% deviation of the spatial frequency relative to the reference plane, and the height difference between Pos. 1 and Pos. 3 causes an additional variation of about 6%. Figure 10b shows at a wavelength of compared for the post-processing with and without consideration of both the spatial frequency correction and intensity correction. For comparison, the dashed line shows a forward calculation with a semi-infinite solution of the RTE. As a reference, the optical properties were determined by means of an integrating sphere as and , assuming the anisotropy factor and refractive index . While the measured is shifted to higher spatial frequencies and intensities without corrections, it agrees well with the prediction for all three positions with corrections. The deviations at low spatial frequencies probably result from the deviation from the assumed semi-infinite model. Figure 10c shows the corresponding optical properties for all wavelengths compared with and without correction, with the results of an integrating sphere measurement given as a reference. Without corrections, the deviations for and average 35% and 45%, respectively, over all wavelengths, while the deviations with a frequency correction are 5% and 12%, respectively.

Figure 10.

(a) Measurement of a hemispherical optical phantom with a radius of curvature of 40 mm in three different z-positions using false colors to display the geometrically corrected spatial frequency for a pattern with in the reference plane. (b) Averaged reflectances measured at a wavelength of 521 nm after post-processing considering (circles) and neglecting (squares) frequency and intensity correction and compared to a forward calculation. (c) Spectrally resolved effective scattering coefficient and absorption coefficient determined after post-processing, taking into account (circles) and neglecting (squares) the frequency and intensity correction compared to an integrating sphere measurement.

4. Summary and Outlook

The presented approach of a two-step geometry and intensity calibration allows a comprehensive and coherent description of the entire SFDI system with a large set of internal and external parameters obtained both for the projector and camera. In combination with the 3D topography of the sample, available through the phase information, local changes in spatial frequency or intensity can be determined directly from the displacement or tilt of the sample surface and taken into account in a further analysis. This allows the investigation and further development of correction methods that require virtually no prior knowledge of the setup. The parameterized approach to phase–distance conversion and intensity correction using rays associated with an individual camera and projector pixels provides an efficient and insightful representation of the measurement process and subsequent data processing. A general drawback, however, is the extensive algorithms that must be developed and implemented once for post-processing.

Overall, the results of the calibration, i.e., the characterization of the geometric imaging properties, including realistic distortions and inhomogeneous intensity distributions, can be further used to establish a digital twin of the setup. A future goal is therefore to simulate the entire measurement process with forward calculations using numerical methods such as the Monte Carlo method [40,41]. This would make it possible, for example, to determine the mutual error contribution in the simultaneous determination of the optical properties and the 3D topography of turbid samples or to enable new possibilities for data evaluation. The first progress with a similar approach for the corrected determination of 3D topography of teeth was recently shown by Geiger et al. [26]. In particular, for such complex sample geometries where analytical solutions of the radiative transfer equation do not exist, a regression algorithm to determine the optical properties by solving the inverse problem for virtually any 3D surface would be conceivable.

From an application perspective, the calibration model can provide advantages in various fields, such as medical imaging. After the one-time calibration, which does not require any prior knowledge of the specific hardware used in the application, comprehensive information on the distance and relative orientation of different image areas of the examined specimen is available in addition to the diffuse reflectance. A major advantage is that no additional image acquisition is required for their determination, i.e., the measurement time remains unchanged. In addition to the presented quantitative approach for the determination of scattering and absorption properties, applications in the field of machine learning are becoming more and more important. For example, in the automated classification of tissue types based on reflectance properties, additional knowledge of their relative position and distance to the camera and projector could enable more stable prediction models.

Author Contributions

Conceptualization, S.A.L. and S.N.; software, S.A.L. and S.N.; validation, S.A.L.; formal analysis, S.A.L.; writing—original draft preparation, S.A.L.; writing—review and editing, S.A.L., S.N. and A.K.; funding acquisition, A.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Bundesministerium für Bildung und Forschung (BMBF).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data underlying the results presented in this paper are not publicly available at this time but may be obtained from the authors upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bodenschatz, N.; Krauter, P.; Nothelfer, S.; Foschum, F.; Bergmann, F.; Liemert, A.; Kienle, A. Detecting structural information of scatterers using spatial frequency domain imaging. J. Biomed. Opt. 2015, 20, 116006. [Google Scholar] [CrossRef] [PubMed]

- Nothelfer, S.; Bergmann, F.; Liemert, A.; Reitzle, D.; Kienle, A. Spatial frequency domain imaging using an analytical model for separation of surface and volume scattering. J. Biomed. Opt. 2018, 24, 071604. [Google Scholar] [CrossRef]

- Phan, T.; Rowland, R.; Ponticorvo, A.; Le, B.C.; Wilson, R.H.; Sharif, S.A.; Kennedy, G.T.; Bernal, N.P.; Durkin, A.J. Characterizing reduced scattering coefficient of normal human skin across different anatomic locations and Fitzpatrick skin types using spatial frequency domain imaging. J. Biomed. Opt. 2021, 26, 026001. [Google Scholar] [CrossRef]

- Nguyen, J.Q.; Crouzet, C.; Mai, T.; Riola, K.; Uchitel, D.; Liaw, L.H.; Bernal, N.; Ponticorvo, A.; Choi, B.; Durkin, A.J. Spatial frequency domain imaging of burn wounds in a preclinical model of graded burn severity. J. Biomed. Opt. 2013, 18, 066010. [Google Scholar] [CrossRef]

- Lohner, S.A.; Biegert, K.; Nothelfer, S.; Hohmann, A.; McCormick, R.; Kienle, A. Determining the optical properties of apple tissue and their dependence on physiological and morphological characteristics during maturation. Part 1: Spatial frequency domain imaging. Postharvest Biol. Technol. 2021, 181, 111647. [Google Scholar] [CrossRef]

- Lu, Y.; Li, R.; Lu, R. Fast demodulation of pattern images by spiral phase transform in structured-illumination reflectance imaging for detection of bruises in apples. Comput. Electron. Agric. 2016, 127, 652–658. [Google Scholar] [CrossRef]

- Vervandier, J.; Gioux, S. Single snapshot imaging of optical properties. Biomed. Opt. Express 2013, 4, 2938. [Google Scholar] [CrossRef]

- Ghijsen, M.; Choi, B.; Durkin, A.J.; Gioux, S.; Tromberg, B.J. Real-time simultaneous single snapshot of optical properties and blood flow using coherent spatial frequency domain imaging (cSFDI). Biomed. Opt. Express 2016, 7, 870. [Google Scholar] [CrossRef]

- Gioux, S.; Mazhar, A.; Cuccia, D.J. Spatial frequency domain imaging in 2019: Principles, applications, and perspectives. J. Biomed. Opt. 2019, 24, 071613. [Google Scholar] [CrossRef]

- Cuccia, D.J.; Bevilacqua, F.; Durkin, A.J.; Ayers, F.R.; Tromberg, B.J. Quantitation and mapping of tissue optical properties using modulated imaging. J. Biomed. Opt. 2009, 14, 024012. [Google Scholar] [CrossRef]

- Liemert, A.; Kienle, A. Spatially modulated light source obliquely incident on a semi-infinite scattering medium. Opt. Lett. 2012, 37, 4158. [Google Scholar] [CrossRef]

- Sun, Z.; Xie, L.; Hu, D.; Ying, Y. An artificial neural network model for accurate and efficient optical property mapping from spatial-frequency domain images. Comput. Electron. Agric. 2021, 188, 106340. [Google Scholar] [CrossRef]

- Naglic, P.; Zelinskyi, Y.; Likar, B.; Pernuš, F.; Bürmen, M. OpenCL Framework for Fast Estimation of Optical Properties from Spatial Frequency Domain Images; SPIE: Bellingham, WA, USA, 2019; p. 45. [Google Scholar] [CrossRef]

- Stier, A.C.; Goth, W.; Zhang, Y.; Fox, M.C.; Reichenberg, J.S.; Lopes, F.C.; Sebastian, K.R.; Markey, M.K.; Tunnell, J.W. A Machine Learning Approach to Determining Sub-Diffuse Optical Properties; Optica Publishing Group: Washington, DC, USA, 2020; p. SM2D.6. [Google Scholar] [CrossRef]

- Bodenschatz, N.; Brandes, A.; Liemert, A.; Kienle, A. Sources of errors in spatial frequency domain imaging of scattering media. J. Biomed. Opt. 2014, 19, 071405. [Google Scholar] [CrossRef] [PubMed]

- Gioux, S.; Mazhar, A.; Cuccia, D.J.; Durkin, A.J.; Tromberg, B.J.; Frangioni, J.V. Three-dimensional surface profile intensity correction for spatially modulated imaging. J. Biomed. Opt. 2009, 14, 034045. [Google Scholar] [CrossRef] [PubMed]

- van de Giessen, M.; Angelo, J.P.; Gioux, S. Real-time, profile-corrected single snapshot imaging of optical properties. Biomed. Opt. Express 2015, 6, 4051. [Google Scholar] [CrossRef] [PubMed]

- Zhao, Y.; Tabassum, S.; Piracha, S.; Nandhu, M.S.; Viapiano, M.; Roblyer, D. Angle correction for small animal tumor imaging with spatial frequency domain imaging (SFDI). Biomed. Opt. Express 2016, 7, 2373. [Google Scholar] [CrossRef] [PubMed]

- Dan, M.; Liu, M.; Bai, W.; Gao, F. Profile-based intensity and frequency corrections for single-snapshot spatial frequency domain imaging. Opt. Express 2021, 29, 12833. [Google Scholar] [CrossRef]

- Srinivasan, V.; Liu, H.C.; Halioua, M. Automated phase-measuring profilometry: A phase mapping approach. Appl. Opt. 1985, 24, 185. [Google Scholar] [CrossRef]

- Zhou, W.S.; Su, X.Y. A direct mapping algorithm for phase-measuring profilometry. J. Mod. Opt. 1994, 41, 89–94. [Google Scholar] [CrossRef]

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334. [Google Scholar] [CrossRef]

- Zhang, S.; Huang, P.S. Novel method for structured light system calibration. Opt. Eng. 2006, 45, 083601. [Google Scholar] [CrossRef]

- Chen, X.; Xi, J.; Jin, Y.; Sun, J. Accurate calibration for a camera-projector measurement system based on structured light projection. Opt. Lasers Eng. 2009, 47, 310–319. [Google Scholar] [CrossRef]

- Zhang, S. Flexible and high-accuracy method for uni-directional structured light system calibration. Opt. Lasers Eng. 2021, 143, 106637. [Google Scholar] [CrossRef]

- Geiger, S.; Hank, P.; Kienle, A. Improved topography reconstruction of volume scattering objects using structured light. J. Opt. Soc. Am. A 2022, 39, 1823. [Google Scholar] [CrossRef] [PubMed]

- Ramalingam, S.; Sturm, P. A Unifying Model for Camera Calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1309–1319. [Google Scholar] [CrossRef]

- Grossberg, M.; Nayar, S. A general imaging model and a method for finding its parameters. In Proceedings of the IEEE International Conference on Computer Vision, ICCV 2001, Vancouver, BC, Canada, 7–14 July 2001; Volume 2, pp. 108–115. [Google Scholar] [CrossRef]

- Heikkila, J.; Silven, O. A four-step camera calibration procedure with implicit image correction. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Juan, PR, USA, 17–19 June 1997; pp. 1106–1112. [Google Scholar] [CrossRef]

- Bradski, G. Dr. Dobb’s Journal of Software Tools; The OpenCV Library, UBM Technology Group: San Francisco, CA, USA, 2000. [Google Scholar]

- Bouguet, J.Y. Camera Calibration Toolbox for Matlab (1.0); CaltechDATA: San Francisco, CA, USA, 2022. [Google Scholar] [CrossRef]

- Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395. [Google Scholar] [CrossRef]

- Marchand, E.; Uchiyama, H.; Spindler, F. Pose Estimation for Augmented Reality: A Hands-On Survey. IEEE Trans. Vis. Comput. Graph. 2016, 22, 2633–2651. [Google Scholar] [CrossRef]

- Lu, X.; Wu, Q.; Huang, H. Calibration based on ray-tracing for multi-line structured light projection system. Opt. Express 2019, 27, 35884. [Google Scholar] [CrossRef]

- Holmes, G.C. The use of hyperbolic cosines in solving cubic polynomials. Math. Gaz. 2002, 86, 473–477. [Google Scholar] [CrossRef]

- Zhou, Q.Y.; Park, J.; Koltun, V. Open3D: A Modern Library for 3D Data Processing. arXiv 2018, arXiv:1801.09847. [Google Scholar]

- Liemert, A.; Kienle, A. Analytical approach for solving the radiative transfer equation in two-dimensional layered media. J. Quant. Spectrosc. Radiat. Transf. 2012, 113, 559–564. [Google Scholar] [CrossRef]

- Liemert, A.; Kienle, A. Exact and efficient solution of the radiative transport equation for the semi-infinite medium. Sci. Rep. 2013, 3, 3–9. [Google Scholar] [CrossRef] [PubMed]

- Bergmann, F.; Foschum, F.; Zuber, R.; Kienle, A. Precise determination of the optical properties of turbid media using an optimized integrating sphere and advanced Monte Carlo simulations. Part 2: Experiments. Appl. Opt. 2020, 59, 3216–3226. [Google Scholar] [CrossRef] [PubMed]

- Crowley, J.; Gordon, G.S. Simulating Medical Applications of Tissue Optical Property and Shape Imaging Using Open-Source Ray Tracing Software; SPIE: Bellingham, WA, USA, 2021; p. 14. [Google Scholar] [CrossRef]

- Naglic, P.; Zelinskyi, Y.; Likar, B.; Pernuš, F.; Bürmen, M. From Monte Carlo Simulations to Efficient Estimation of Optical Properties for Spatial Frequency Domain Imaging; SPIE: Bellingham, WA, USA, 2019; p. 8. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).