An Adaptive Multi-Scale Network Based on Depth Information for Crowd Counting †

Abstract

1. Introduction

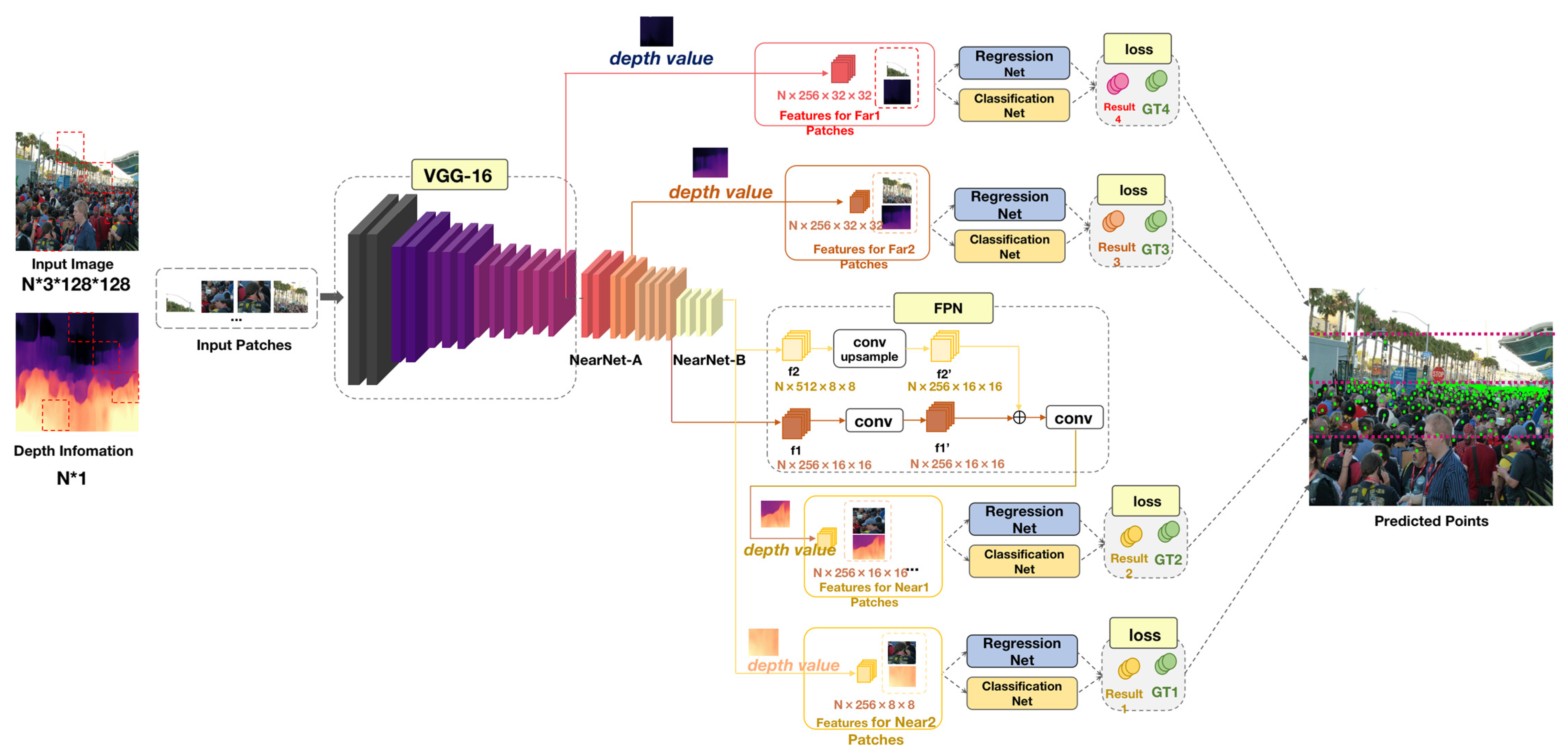

- We introduce a simple end-to-end crowd counting network, the Near and Far Network (NF-Net). We modify the original VGG16 feature extraction network by deepening its layers so that it better captures differences in local feature information. To allocate different receptive fields to crowds of different densities, we propose NearNet, which provides branches of different depths: NearNet-A stacks four consecutive convolutional layers, while NearNet-B stacks eight. The structures of NearNet-A, NearNet-B, and the head network are shown in Figure 3. The more layers a branch stacks, the larger the receptive field it allocates to the crowd. Distinguishing the features of distant and nearby targets in this way further improves the accuracy and robustness of the crowd counting model.

- We design an adaptive distance adjustment module based on depth information. Depth-information weight parameters represent the distance between people and the camera, so that crowds are divided by density according to that distance. Fusing features from different distances guides the model to explicitly extract features from multiple receptive fields and to learn the importance of each feature at each image location. In other words, our method adaptively encodes the receptive field size that the local crowd density requires.

- The patch selection module introduces the idea of local information. Specifically, the original image is evenly divided into four patches, each corresponding to a different crowd scale. Given the camera's viewing angle, the farther a region is from the camera, the denser the crowd appears in it. Therefore, the top two patches of the image are labeled dense and sub-dense from top to bottom, while the bottom two are labeled sub-sparse and sparse from top to bottom. The resulting four levels of crowd distribution information spatially differentiate the crowd, improve the recognition of nearby targets, and reduce interference from complex backgrounds.
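To make the receptive-field argument concrete, the sketch below computes the receptive field of a stack of convolutions with the standard recurrence r_out = r_in + (k_eff − 1) · j_in and j_out = j_in · s, where k_eff = d · (k − 1) + 1 for kernel size k, stride s, and dilation d. The text does not state NearNet's kernel sizes, strides, or dilations, so the configuration used here (plain 3 × 3, stride-1, undilated convolutions) is an assumption, and `receptive_field` is a hypothetical helper.

```python
from typing import Sequence, Tuple

# Each layer is described as (kernel_size, stride, dilation).
Layer = Tuple[int, int, int]


def receptive_field(layers: Sequence[Layer]) -> Tuple[int, int]:
    """Receptive field and cumulative stride of a conv stack, in cells of its input map."""
    rf, jump = 1, 1
    for kernel, stride, dilation in layers:
        effective_kernel = dilation * (kernel - 1) + 1
        rf += (effective_kernel - 1) * jump  # each layer widens the view by k_eff - 1 steps
        jump *= stride  # later layers step over more input cells
    return rf, jump


# Hypothetical NearNet configurations: plain 3x3, stride-1, undilated convolutions.
near_net_a = [(3, 1, 1)] * 4  # four stacked layers
near_net_b = [(3, 1, 1)] * 8  # eight stacked layers

print(receptive_field(near_net_a))  # (9, 1)
print(receptive_field(near_net_b))  # (17, 1)
```

Under these assumptions, the eight-layer branch sees a 17 × 17 window of the feature map it is applied to, against 9 × 9 for the four-layer branch. If that feature map has stride s with respect to the input image, each additional cell of receptive field spans s more input pixels.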
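The depth-guided fusion idea can be illustrated with a deliberately simplified sketch in which two branch outputs are blended pixel by pixel using a weight derived from a depth map. In NF-Net the per-location importance is learned; here, purely as an assumption for illustration, the weight is the min-max-normalized depth, with larger depth values meaning farther from the camera. Which branch serves which distance is also an assumption: distant, small heads lean on the small-receptive-field branch and nearby, large heads on the large-receptive-field branch. The function names are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def normalize_depth(depth: Grid) -> Grid:
    """Min-max normalize a depth map to [0, 1]; 1 marks the farthest location."""
    values = [v for row in depth for v in row]
    lo, hi = min(values), max(values)
    if hi == lo:  # flat depth: give both branches equal weight
        return [[0.5 for _ in row] for row in depth]
    return [[(v - lo) / (hi - lo) for v in row] for row in depth]


def depth_weighted_fusion(feat_small_rf: Grid, feat_large_rf: Grid, depth: Grid) -> Grid:
    """Blend two branch outputs per pixel: far pixels lean on the small-RF branch,
    near pixels on the large-RF branch (fixed depth weights, not learned ones)."""
    weights = normalize_depth(depth)
    return [
        [w * small + (1.0 - w) * large for w, small, large in zip(w_row, s_row, l_row)]
        for w_row, s_row, l_row in zip(weights, feat_small_rf, feat_large_rf)
    ]


# Top row is far from the camera, bottom row is near (hypothetical values).
depth_map = [[8.0, 8.0], [0.0, 4.0]]
small_rf = [[2.0, 2.0], [2.0, 2.0]]
large_rf = [[0.0, 0.0], [0.0, 0.0]]
print(depth_weighted_fusion(small_rf, large_rf, depth_map))  # [[2.0, 2.0], [0.0, 1.0]]
```

A learned module would replace `normalize_depth` with trainable weights conditioned on depth; the fixed mapping here only shows how a per-pixel weight can route each location between the two receptive fields.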

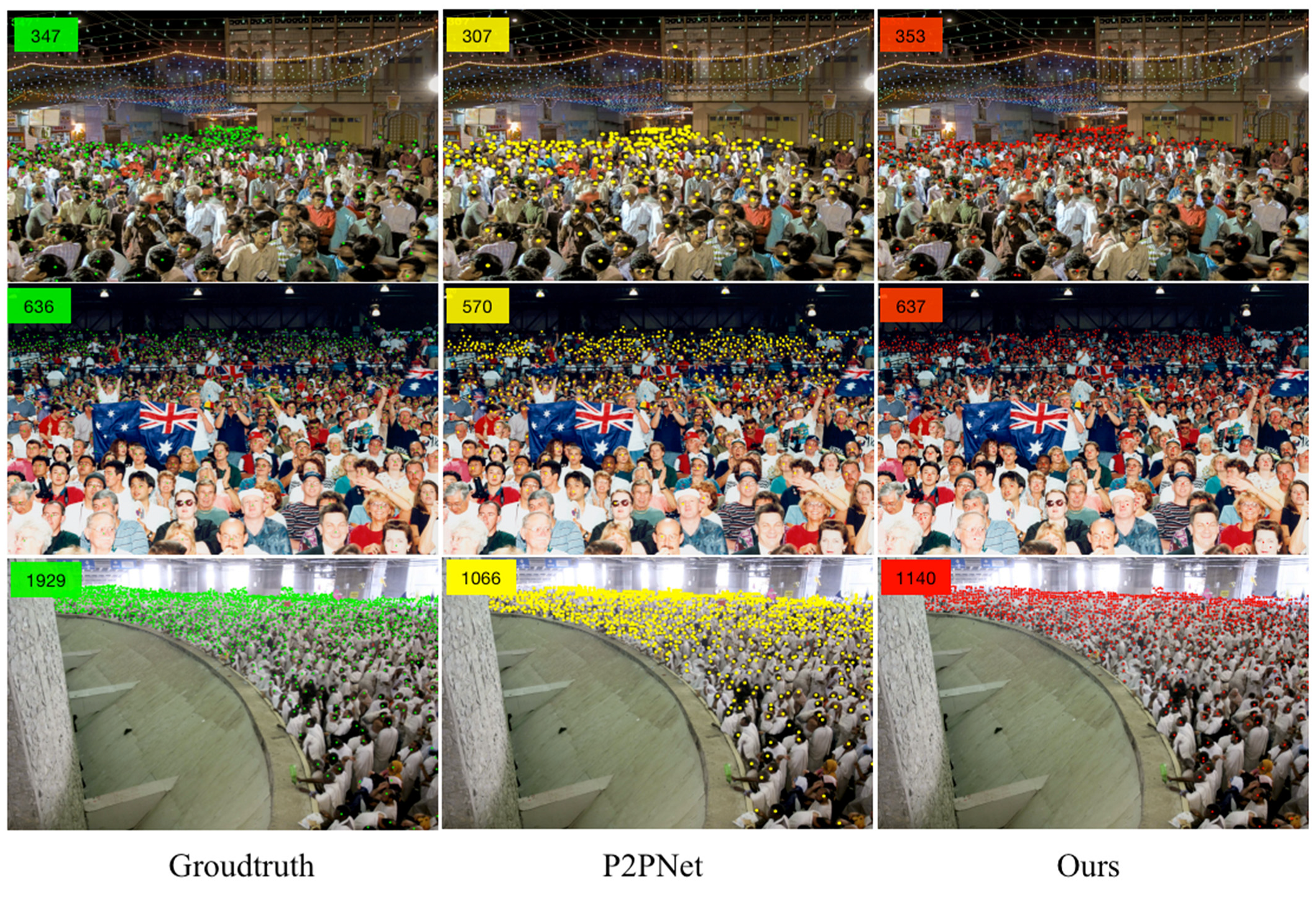

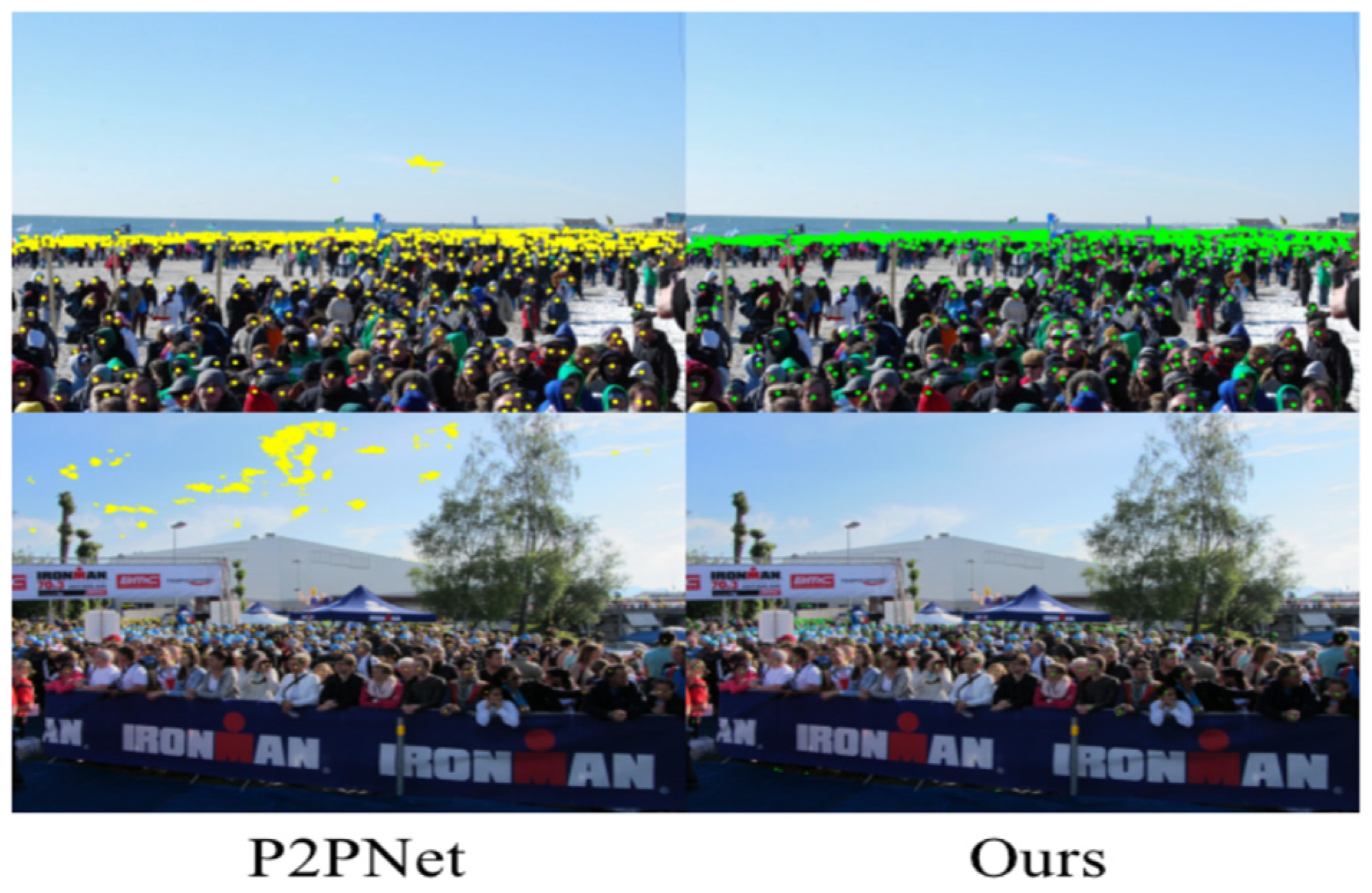

- Experiments show that NF-Net is effective. NF-Net achieves the lowest MAE among the compared methods on the ShanghaiTech Part A, ShanghaiTech Part B, and UCF_CC_50 datasets, and competitive results on UCF-QNRF. It surpasses the state-of-the-art P2PNet with substantially lower MAE and RMSE. Ablation experiments and comparative analysis verify the effectiveness of our method on crowd counting benchmarks.

2. Materials and Methods

2.1. Detection-Based Methods

2.2. Regression-Based Methods

2.3. Density Map-Based Methods

2.4. Transformer-Based Methods

3. Methodology

3.1. Near and Far Network

3.1.1. Backbone

3.1.2. Regression Head

3.1.3. Classification Head

3.1.4. NearNet

3.1.5. Feature Fusion Layer

3.2. Depth Information Module

3.3. Patch Selection Module

- First, divide the original image into four patches according to coordinates;

- Second, calculate the maximum and minimum values of each patch according to Formula (1);

- Third, when splitting the image into the far and near branches, compute the division factor as follows:

- We first compared τ = 2, 4, and 8 experimentally on the UCF_CC_50 dataset. The results show that the MAE is smallest, and the model is therefore most accurate, when τ = 4 with the accompanying parameter set to 0.15.
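The first two steps of the patch selection procedure can be sketched as follows. Formula (1) and the division-factor formula are not reproduced in this text, so the sketch only computes the plain minimum and maximum of a depth map inside each patch. It also assumes the four patches are equal-height horizontal strips labeled dense, sub-dense, sub-sparse, and sparse from top to bottom, following the ordering described in the Introduction. All names are hypothetical.

```python
from typing import Dict, List, Tuple

# Density labels from the top of the image (far) to the bottom (near).
DENSITY_LEVELS = ("dense", "sub-dense", "sub-sparse", "sparse")


def split_rows(height: int, n: int = 4) -> List[Tuple[int, int]]:
    """Row ranges [start, end) of n horizontal strips of (almost) equal height."""
    if height < n:
        raise ValueError("image must have at least one row per patch")
    bounds = [i * height // n for i in range(n + 1)]
    return [(bounds[i], bounds[i + 1]) for i in range(n)]


def patch_depth_range(depth: List[List[float]]) -> Dict[str, Tuple[float, float]]:
    """Step 1: split the map into four strips by row; step 2: (min, max) depth per strip."""
    ranges = {}
    strips = split_rows(len(depth), len(DENSITY_LEVELS))
    for level, (top, bottom) in zip(DENSITY_LEVELS, strips):
        values = [v for row in depth[top:bottom] for v in row]
        ranges[level] = (min(values), max(values))
    return ranges


depth_map = [[9.0, 8.5], [7.0, 6.0], [5.0, 4.5], [3.0, 2.0]]
print(patch_depth_range(depth_map))
# {'dense': (8.5, 9.0), 'sub-dense': (6.0, 7.0), 'sub-sparse': (4.5, 5.0), 'sparse': (2.0, 3.0)}
```

Integer division keeps the strip boundaries deterministic when the height is not a multiple of four; the last strip absorbs any remainder rows.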

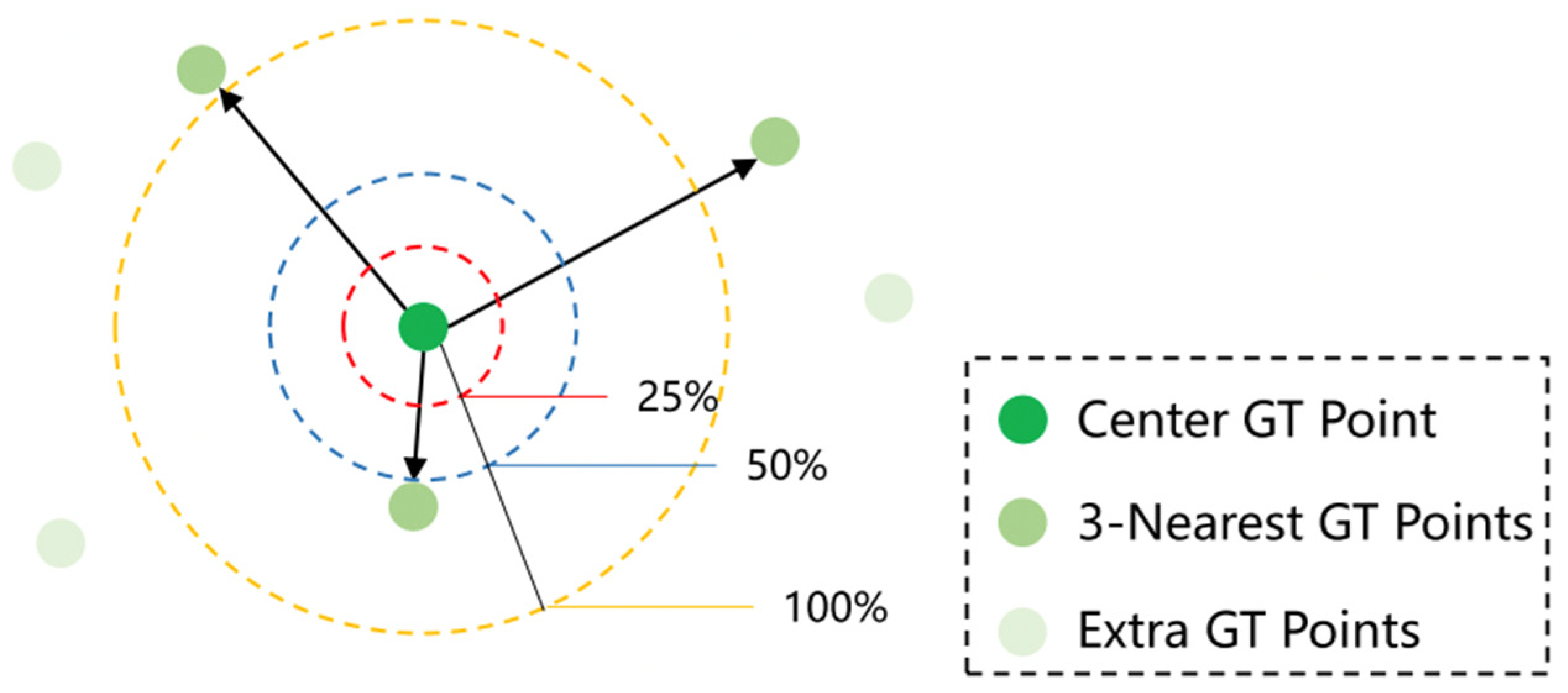

3.4. Loss Design

4. Experiment

4.1. Datasets

4.2. Evaluations

5. Discussion

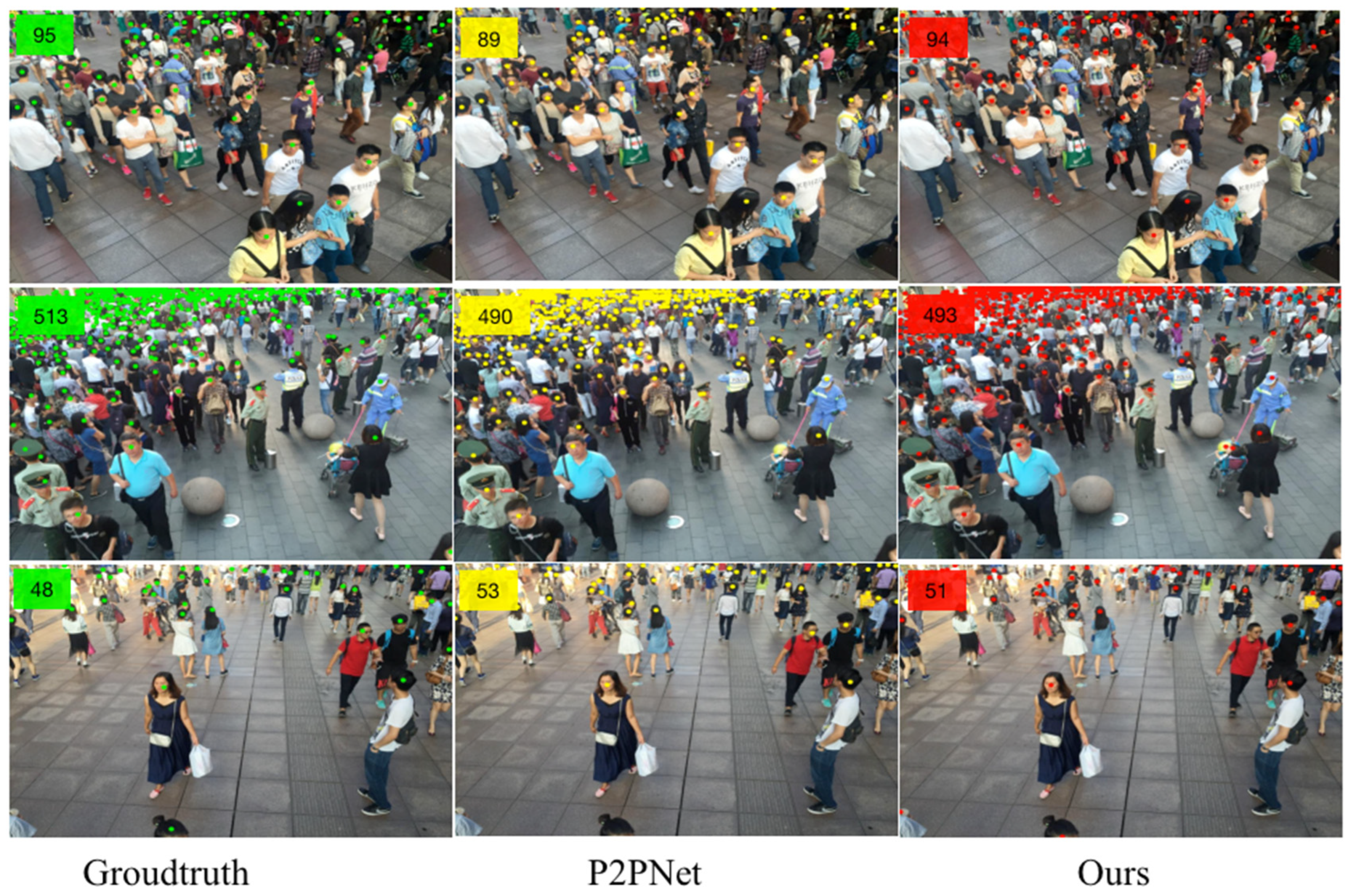

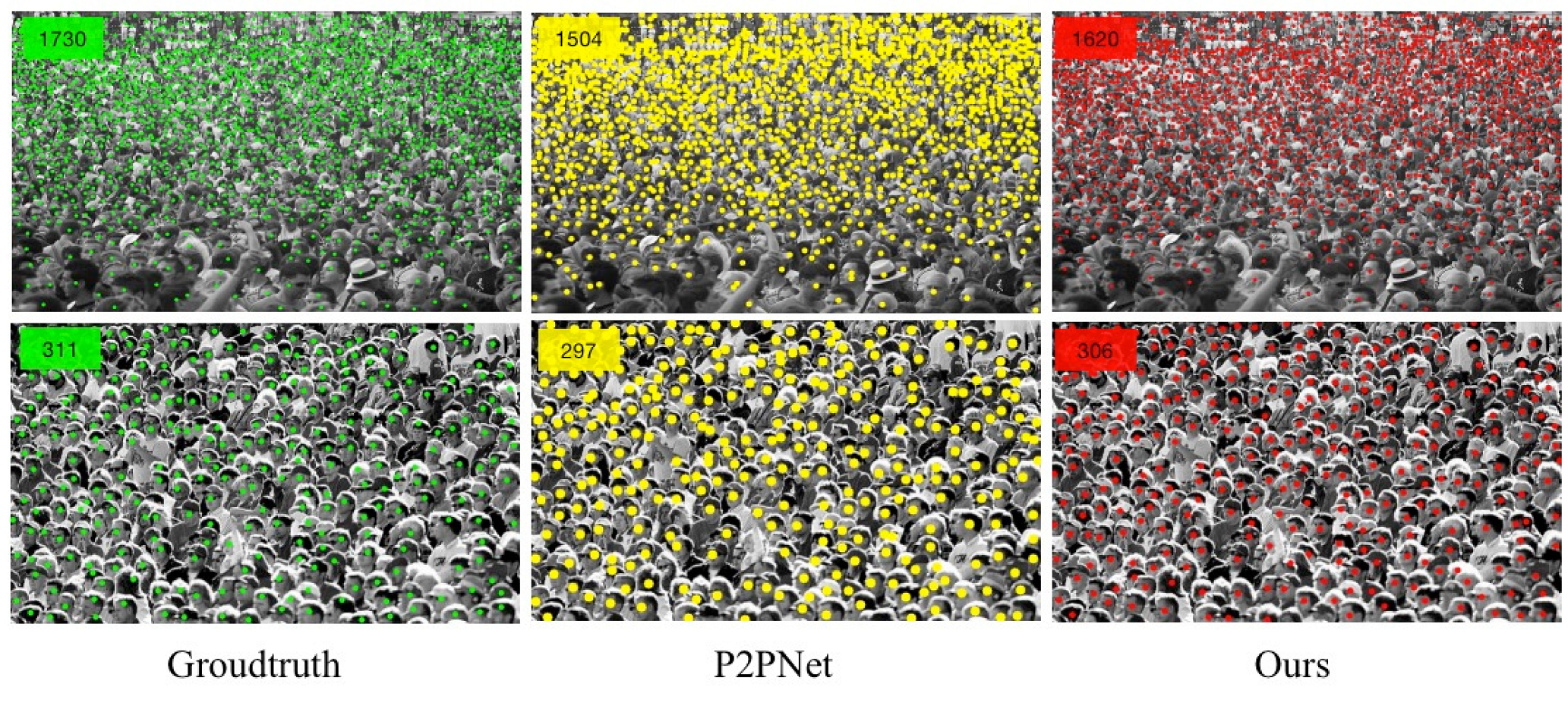

5.1. Comparison with State-of-the-Art Methods

5.2. Ablation Study

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Mundhenk, T.N.; Konjevod, G.; Sakla, W.A.; Boakye, K. A Large Contextual Dataset for Classification, Detection and Counting of Cars with Deep Learning. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 10–16 October 2016; pp. 785–800.

2. Zhang, S.; Wu, G.; Costeira, J.P.; Moura, J.M. Understanding traffic density from large-scale web camera data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 June 2017; pp. 5898–5907.

3. Xue, Y.; Ray, N.; Hugh, J.; Bigras, G. Cell Counting by Regression Using Convolutional Neural Network. In Computer Vision–ECCV 2016 Workshops: Amsterdam, The Netherlands, 8–10 and 15–16 October 2016; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 274–290.

4. Arteta, C.; Lempitsky, V.; Zisserman, A. Counting in the wild. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 483–498.

5. Li, W.; Mahadevan, V.; Vasconcelos, N. Anomaly detection and localization in crowded scenes. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 18–32.

6. Chan, A.B.; Liang, Z.-S.J.; Vasconcelos, N. Privacy preserving crowd monitoring: Counting people without people models or tracking. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–7.

7. Xiong, F.; Shi, X.; Yeung, D.-Y. Spatiotemporal modeling for crowd counting in videos. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5151–5159.

8. Walach, E.; Wolf, L. Learning to count with cnn boosting. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 660–676.

9. Lempitsky, V.; Zisserman, A. Learning to count objects in images. In Proceedings of the Advances in Neural Information Processing Systems 23: 24th Annual Conference on Neural Information Processing Systems 2010, Vancouver, BC, Canada, 6–9 December 2010; pp. 1324–1332.

10. Gao, G.; Gao, J.; Liu, Q.; Wang, Q.; Wang, Y. Cnn-based density estimation and crowd counting: A survey. arXiv 2020, arXiv:2003.12783.

11. Zhang, Y.; Zhou, D.; Chen, S.; Gao, S.; Ma, Y. Single-image crowd counting via multi-column convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 589–597.

12. Babu Sam, D.; Surya, S.; Venkatesh Babu, R. Switching convolutional neural network for crowd counting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 June 2017; pp. 5744–5752.

13. Sindagi, V.A.; Patel, V.M. Cnn-based cascaded multi-task learning of high-level prior and density estimation for crowd counting. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6.

14. Onoro-Rubio, D.; López-Sastre, R.J. Towards perspective-free object counting with deep learning. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 615–629.

15. Sindagi, V.A.; Patel, V.M. Generating high-quality crowd density maps using contextual pyramid cnns. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1861–1870.

16. Hossain, M.; Hosseinzadeh, M.; Chanda, O.; Wang, Y. Crowd counting using scale-aware attention networks. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 June 2019; pp. 1280–1288.

17. Li, Y.; Zhang, X.; Chen, D. Csrnet: Dilated convolutional neural networks for understanding the highly congested scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1091–1100.

18. Bai, S.; He, Z.; Qiao, Y.; Hu, H.; Wu, W.; Yan, J. Adaptive dilated network with self-correction supervision for counting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 4594–4603.

19. Liu, N.; Long, Y.; Zou, C.; Niu, Q.; Pan, L.; Wu, H. Adcrowdnet: An attention-injective deformable convolutional network for crowd understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 3225–3234.

20. Sindagi, V.A.; Patel, V.M. Ha-ccn: Hierarchical attention-based crowd counting network. IEEE Trans. Image Process. 2019, 29, 323–335.

21. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

22. Song, Q.; Wang, C.; Jiang, Z.; Wang, Y.; Tai, Y.; Wang, C.; Li, J.; Huang, F.; Wu, Y. Rethinking counting and localization in crowds: A purely point-based framework. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 3365–3374.

23. Zhang, P.; Lei, W.; Zhao, X.; Dong, L.; Lin, Z. NF-Net: Near and Far Network for Crowd Counting. In Proceedings of the 2023 8th International Conference on Computer and Communication Systems (ICCCS), Guangzhou, China, 20–24 April 2023; pp. 475–481.

24. Wu, B.; Nevatia, R. Detection of multiple, partially occluded humans in a single image by bayesian combination of edgelet part detectors. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05) Volume 1, Beijing, China, 17–21 October 2005; pp. 90–97.

25. Li, M.; Zhang, Z.; Huang, K.; Tan, T. Estimating the number of people in crowded scenes by mid based foreground segmentation and head-shoulder detection. In Proceedings of the 2008 19th International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008; pp. 1–4.

26. Leibe, B.; Seemann, E.; Schiele, B. Pedestrian detection in crowded scenes. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; pp. 878–885.

27. Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 1627–1645.

28. Zhao, T.; Nevatia, R. Bayesian human segmentation in crowded situations. In Proceedings of the 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 18–20 June 2003; p. II-459.

29. Ge, W.; Collins, R.T. Marked point processes for crowd counting. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–26 June 2009; pp. 2913–2920.

30. Liu, B.; Vasconcelos, N. Bayesian model adaptation for crowd counts. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 13–16 December 2015; pp. 4175–4183.

31. Chen, K.; Gong, S.; Xiang, T.; Change Loy, C. Cumulative attribute space for age and crowd density estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–27 June 2013; pp. 2467–2474.

32. Idrees, H.; Saleemi, I.; Seibert, C.; Shah, M. Multi-source multi-scale counting in extremely dense crowd images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–27 June 2013; pp. 2547–2554.

33. Idrees, H.; Tayyab, M.; Athrey, K.; Zhang, D.; Al-Maadeed, S.; Rajpoot, N.; Shah, M. Composition loss for counting, density map estimation and localization in dense crowds. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 532–546.

34. Liu, Y.; Shi, M.; Zhao, Q.; Wang, X. Point in, box out: Beyond counting persons in crowds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 6469–6478.

35. Liu, W.; Salzmann, M.; Fua, P. Context-aware crowd counting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 5099–5108.

36. Liu, X.; Van De Weijer, J.; Bagdanov, A.D. Leveraging unlabeled data for crowd counting by learning to rank. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7661–7669.

37. Yan, Z.; Yuan, Y.; Zuo, W.; Tan, X.; Wang, Y.; Wen, S.; Ding, E. Perspective-guided convolution networks for crowd counting. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 952–961.

38. Ranjan, V.; Le, H.; Hoai, M. Iterative crowd counting. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 270–285.

39. Shi, M.; Yang, Z.; Xu, C.; Chen, Q. Revisiting perspective information for efficient crowd counting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7279–7288.

40. Wang, Q.; Gao, J.; Lin, W.; Yuan, Y. Learning from synthetic data for crowd counting in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8198–8207.

41. Zhang, A.; Shen, J.; Xiao, Z.; Zhu, F.; Zhen, X.; Cao, X.; Shao, L. Relational attention network for crowd counting. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6788–6797.

42. Zhang, A.; Yue, L.; Shen, J.; Zhu, F.; Zhen, X.; Cao, X.; Shao, L. Attentional neural fields for crowd counting. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5714–5723.

43. Cao, X.; Wang, Z.; Zhao, Y.; Su, F. Scale aggregation network for accurate and efficient crowd counting. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

44. Liu, C.; Weng, X.; Mu, Y. Recurrent attentive zooming for joint crowd counting and precise localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 1217–1226.

45. Jiang, X.; Xiao, Z.; Zhang, B.; Zhen, X.; Cao, X.; Doermann, D.; Shao, L. Crowd counting and density estimation by trellis encoder-decoder networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 6133–6142.

46. Shi, Z.; Mettes, P.; Snoek, C.G. Counting with focus for free. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4200–4209.

47. Ma, Z.; Wei, X.; Hong, X.; Gong, Y. Bayesian loss for crowd count estimation with point supervision. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6142–6151.

48. Wang, B.; Liu, H.; Samaras, D.; Nguyen, M.H. Distribution matching for crowd counting. Adv. Neural Inf. Process. Syst. 2020, 33, 1595–1607.

49. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

50. Liang, D.; Xu, W.; Bai, X. An end-to-end transformer model for crowd localization. In Proceedings of the European Conference on Computer Vision, Tel-Aviv, Israel, 23–27 October 2022; pp. 38–54.

51. Sun, G.; Liu, Y.; Probst, T.; Paudel, D.P.; Popovic, N.; Van Gool, L. Boosting crowd counting with transformers. arXiv 2021, arXiv:2105.10926.

52. Liang, D.; Chen, X.; Xu, W.; Zhou, Y.; Bai, X. Transcrowd: Weakly-supervised crowd counting with transformers. Sci. China Inf. Sci. 2022, 65, 160104.

53. Tian, Y.; Chu, X.; Wang, H. CCTrans: Simplifying and improving crowd counting with transformer. arXiv 2021, arXiv:2109.14483.

54. Chu, X.; Tian, Z.; Wang, Y.; Zhang, B.; Ren, H.; Wei, X.; Xia, H.; Shen, C. Twins: Revisiting the design of spatial attention in vision transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 9355–9366.

55. Yang, S.; Guo, W.; Ren, Y. CrowdFormer: An overlap patching vision transformer for top-down crowd counting. In Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, Vienna, Austria, 23–29 July 2022; pp. 23–29.

56. Liu, L.; Qiu, Z.; Li, G.; Liu, S.; Ouyang, W.; Lin, L. Crowd counting with deep structured scale integration network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1774–1783.

57. Miao, Y.; Lin, Z.; Ding, G.; Han, J. Shallow feature based dense attention network for crowd counting. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 11765–11772.

58. Liu, X.; Yang, J.; Ding, W.; Wang, T.; Wang, Z.; Xiong, J. Adaptive mixture regression network with local counting map for crowd counting. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 241–257.

59. Jiang, X.; Zhang, L.; Xu, M.; Zhang, T.; Lv, P.; Zhou, B.; Yang, X.; Pang, Y. Attention scaling for crowd counting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 4706–4715.

60. Liu, X.; Li, G.; Han, Z.; Zhang, W.; Yang, Y.; Huang, Q.; Sebe, N. Exploiting sample correlation for crowd counting with multi-expert network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3215–3224.

61. Wan, J.; Liu, Z.; Chan, A.B. A generalized loss function for crowd counting and localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 1974–1983.

62. Zhang, C.; Li, H.; Wang, X.; Yang, X. Cross-scene crowd counting via deep convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 833–841.

63. Sam, D.B.; Sajjan, N.N.; Babu, R.V.; Srinivasan, M. Divide and grow: Capturing huge diversity in crowd images with incrementally growing cnn. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3618–3626.

64. Shi, Z.; Zhang, L.; Liu, Y.; Cao, X.; Ye, Y.; Cheng, M.-M.; Zheng, G. Crowd counting with deep negative correlation learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5382–5390.

65. Liu, L.; Wang, H.; Li, G.; Ouyang, W.; Lin, L. Crowd counting using deep recurrent spatial-aware network. arXiv 2018, arXiv:1807.00601.

66. Xiong, H.; Lu, H.; Liu, C.; Liu, L.; Cao, Z.; Shen, C. From open set to closed set: Counting objects by spatial divide-and-conquer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8362–8371.

| Methods | Venue | ShanghaiTech Part A MAE | ShanghaiTech Part A RMSE | ShanghaiTech Part B MAE | ShanghaiTech Part B RMSE |
|---|---|---|---|---|---|
| TEDNet [45] | CVPR2019 | 64.2 | 109.1 | 8.2 | 12.8 |
| CAN [35] | CVPR2019 | 62.3 | 100.0 | 7.8 | 12.2 |
| BL [47] | ICCV2019 | 62.8 | 101.8 | 7.7 | 12.7 |
| DSSI-Net [56] | ICCV2019 | 60.6 | 96.0 | 6.8 | 10.3 |
| SDANet [57] | AAAI2020 | 63.6 | 101.8 | 7.8 | 10.2 |
| AMRNet [58] | ECCV2020 | 61.59 | 98.36 | 7.02 | 11.0 |
| DM-Count [48] | NeurIPS2020 | 59.7 | 95.7 | 7.4 | 11.8 |
| ASNet [59] | CVPR2020 | 57.78 | 90.13 | - | - |
| FDC [60] | ICCV2021 | 65.4 | 109.2 | 11.4 | 19.1 |
| GL [61] | CVPR2021 | 61.3 | 95.4 | 7.3 | 11.7 |
| P2PNet [22] | ICCV2021 | 58.8 | 97.47 | - | - |
| CCTrans [53] | CVPR2022 | 64.4 | 95.4 | 7.0 | 11.5 |
| CrowdFormer [55] | IJCAI2022 | 62.1 | 94.8 | 8.5 | 13.6 |
| Ours | - | 56.26 | 93.24 | 6.6 | 11.0 |
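The comparison tables report MAE and RMSE. The evaluation subsection's text is not included here, so the sketch below assumes the usual crowd-counting definitions over N test images: per-image count errors e_i = ŷ_i − y_i, MAE = (1/N) Σ |e_i|, and RMSE = sqrt((1/N) Σ e_i²). The function name `mae_rmse` is hypothetical.

```python
import math
from typing import Sequence, Tuple


def mae_rmse(pred_counts: Sequence[float], gt_counts: Sequence[float]) -> Tuple[float, float]:
    """Mean absolute error and root mean squared error of per-image crowd counts."""
    if len(pred_counts) != len(gt_counts) or not pred_counts:
        raise ValueError("need equal-length, non-empty count lists")
    errors = [p - g for p, g in zip(pred_counts, gt_counts)]
    mae = sum(abs(e) for e in errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return mae, rmse


# Three hypothetical test images: errors of -2, 0 and +3 people.
mae, rmse = mae_rmse([10, 20, 30], [12, 20, 27])
print(round(mae, 3), round(rmse, 3))  # 1.667 2.082
```

Because RMSE squares each error before averaging, it is never smaller than MAE on the same set of images, and it penalizes a few large per-image errors more heavily than many small ones.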

| Methods | Venue | UCF_CC_50 MAE | UCF_CC_50 RMSE |
|---|---|---|---|
| Crowd-CNN [62] | CVPR2015 | 467.0 | 498.5 |
| IG-CNN [63] | CVPR2018 | 291.4 | 349.4 |
| D-ConvNet [64] | CVPR2018 | 288.4 | 404.7 |
| CSRNet [17] | CVPR2018 | 266.1 | 397.5 |
| SANet [43] | ECCV2018 | 258.4 | 334.9 |
| DRSAN [65] | IJCAI2018 | 219.2 | 250.2 |
| CAN [35] | CVPR2019 | 212.2 | 243.7 |
| BL [47] | ICCV2019 | 229.3 | 308.2 |
| DSSI-Net [56] | ICCV2019 | 216.9 | 302.4 |
| S-DCNet [66] | ICCV2019 | 204.2 | 301.3 |
| AMRNet [58] | ECCV2020 | 184.0 | 265.8 |
| DM-Count [48] | NeurIPS2020 | 211.0 | 291.5 |
| ASNet [59] | CVPR2020 | 174.8 | 251.6 |
| P2PNet [22] | ICCV2021 | 181.6 | 249.39 |
| CCTrans [53] | CVPR2022 | 245.0 | 343.6 |
| CrowdFormer [55] | IJCAI2022 | 229.6 | 360.3 |
| Ours | - | 112.7 | 214.33 |

| Methods | Venue | UCF-QNRF MAE | UCF-QNRF RMSE |
|---|---|---|---|
| Idrees et al. [32] | CVPR2013 | 315 | 508 |
| MCNN [11] | CVPR2016 | 277 | 426 |
| CMTL [13] | AVSS2017 | 252 | 514 |
| CL [33] | ECCV2018 | 132 | 191 |
| CSRNet [17] | CVPR2018 | 120.3 | 208.5 |
| CAN [35] | CVPR2019 | 107.0 | 183.0 |
| S-DCNet [66] | ICCV2019 | 104.4 | 176.1 |
| DSSI-Net [56] | ICCV2019 | 99.1 | 159.2 |
| BL [47] | ICCV2019 | 88.7 | 154.8 |
| AMRNet [58] | ECCV2020 | 86.6 | 152.2 |
| HA-CCN [20] | IEEE TIP2020 | 118.1 | 180.4 |
| P2PNet [22] | ICCV2021 | 178.78 | 402.39 |
| Ours | - | 104.06 | 178.80 |

| Methods | ShanghaiTech Part A MAE | ShanghaiTech Part A RMSE | ShanghaiTech Part B MAE | ShanghaiTech Part B RMSE |
|---|---|---|---|---|
| P2PNet (patch A) | 24.50 | 49.27 | - | - |
| P2PNet (patch B) | 28.14 | 50.45 | - | - |
| P2PNet (patch C) | 17.34 | 48.69 | - | - |
| P2PNet (patch D) | 4.66 | 10.00 | - | - |
| Ours (patch A) | 32.54 | 70.11 | 6.17 | 4.0 |
| Ours (patch B) | 14.96 | 47.31 | 1.41 | 1.0 |
| Ours (patch C) | 11.75 | 38.91 | 0.80 | 2.0 |
| Ours (patch D) | 3.07 | 10.00 | 0.59 | 1.0 |

| nAP threshold | UCF_CC_50 (P2PNet) | UCF_CC_50 (Ours) | UCF-QNRF (P2PNet) | UCF-QNRF (Ours) |
|---|---|---|---|---|
| 0.50 | 39.87% | 56.99% | 52.7% | 54.34% |
| 0.25 | 17.68% | 21.60% | 23.31% | 24.55% |
| 0.05 | 1.29% | 0.92% | 1.65% | 2.11% |
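The nAP comparison uses P2PNet's localization metric. As we understand the P2PNet formulation, a prediction counts as a true positive when it lies within δ · d_kNN of a ground-truth point it is paired with, where d_kNN is that point's mean distance to its k nearest ground-truth neighbours; the values 0.50, 0.25, and 0.05 in the table are the thresholds δ. The sketch below is a simplified illustration under stated assumptions (k = 3, greedy nearest-point matching in descending score order, non-interpolated AP). It is not the official evaluation code, and its numbers should not be compared with the table. Function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def knn_scale(gts: Sequence[Point], k: int = 3) -> List[float]:
    """Mean distance from each ground-truth point to its k nearest ground-truth neighbours."""
    scales = []
    for i, g in enumerate(gts):
        dists = sorted(math.dist(g, h) for j, h in enumerate(gts) if j != i)[:k]
        # A lone ground-truth point has no neighbour scale; treat its radius as unbounded.
        scales.append(sum(dists) / len(dists) if dists else math.inf)
    return scales


def normalized_ap(preds: Sequence[Tuple[Point, float]], gts: Sequence[Point],
                  delta: float, k: int = 3) -> float:
    """Simplified nAP: greedy matching of score-ranked predictions, non-interpolated AP."""
    if not gts:
        return 0.0
    scales = knn_scale(gts, k)
    matched = [False] * len(gts)
    true_positives = 0
    precision_sum = 0.0
    ranked = sorted(preds, key=lambda item: item[1], reverse=True)
    for rank, (point, _score) in enumerate(ranked, start=1):
        # Pick the nearest unmatched ground truth within delta * its kNN scale.
        best, best_dist = None, math.inf
        for i, g in enumerate(gts):
            d = math.dist(point, g)
            if not matched[i] and d < delta * scales[i] and d < best_dist:
                best, best_dist = i, d
        if best is not None:
            matched[best] = True
            true_positives += 1
            precision_sum += true_positives / rank  # precision at this recall step
    return precision_sum / len(gts)


gts = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
preds = [((50.0, 50.0), 0.9), ((0.5, 0.0), 0.8)]
print(normalized_ap(preds, gts, delta=0.5))  # 0.125
```

In the example, the highest-scoring prediction is a false positive and the second one is a true positive at rank 2, so only one of four ground-truth points is recovered at precision 1/2. Smaller δ shrinks every matching radius, which is why nAP drops as the threshold tightens.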

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, P.; Lei, W.; Zhao, X.; Dong, L.; Lin, Z. An Adaptive Multi-Scale Network Based on Depth Information for Crowd Counting. Sensors 2023, 23, 7805. https://doi.org/10.3390/s23187805
