Abstract

Despite the continuous advancement of intelligent power substations, inspecting the terminal block components inside equipment cabinets still requires considerable manpower. The repetitive documentation work is not only inefficient but also susceptible to inaccuracies introduced by substation personnel. To shorten these time-consuming inspections, a terminal block component detection and identification method is presented in this paper. The identification method is a multi-stage system that incorporates a streamlined version of You Only Look Once version 7 (YOLOv7), a fusion of YOLOv7 and differentiable binarization (DB), and PaddleOCR. Firstly, the YOLOv7 Area-Oriented (YOLOv7-AO) model is developed to precisely locate the complete region of terminal blocks within substation scene images. This compact area extraction model rapidly crops out the valid portion of the input image. Furthermore, the DB segmentation head is integrated into the YOLOv7 model to effectively handle the densely arranged, irregularly shaped block components. To detect all the components within a target electrical cabinet of substation equipment, the YOLOv7 model with a differentiable binarization attention head (YOLOv7-DBAH) is proposed, integrating spatial and channel attention mechanisms. Finally, a general OCR algorithm is applied to the cropped-out instances after image distortion correction to match and record each component’s identity information. The experimental results show that the YOLOv7-AO model reaches high detection accuracy with good portability while running 4.45 times faster. Moreover, the terminal block component detection results show that the YOLOv7-DBAH model achieves the highest evaluation metrics, increasing the F1-score from 0.83 to 0.89 and boosting the precision to over 0.91. The proposed method achieves the goal of terminal block component identification and can be applied in practical situations.

1. Introduction

Against the background of low-carbon development in the energy industry [], the operation and maintenance of power substations are becoming increasingly digital and intelligent [,]. Modern power grids, which involve smart grids, microgrids, and intelligent substations, require more digitized solutions and data-driven applications to improve operational flexibility and economic performance [,]. Although intelligent substations have automated much of their operation, many construction and operation inspection tasks have for decades relied on extensive manual documentation of unstructured drawings, documents, and other data carriers produced during manufacturing. Solutions that reduce this repetitive and inefficient work are therefore needed.

The advancement of computer science and information technology has led to a significant improvement in artificial intelligence, particularly in the field of deep learning [,]. This progress has opened up new avenues for the automation of online monitoring and fault diagnosis at substations. Oliveira et al. [] designed a remote monitoring system for substation bay construction with the goal of automated monitoring and management. Similarly, Zhao et al. [] proposed a personal safety protective equipment detection model that reminds employees who violate safety regulations during operations to take action, protecting them from electrical injuries. Yan et al. [] compared six variants of the You Only Look Once (YOLO) [,,] model as object and violation detectors across different scenarios at real substation construction sites. Because these algorithms are open source, researchers face fewer obstacles in reproducing and applying the detection models and can focus on adapting and improving them for concrete problems.

Huang et al. [] identified the working state of isolation switches based on YOLO version 4 (YOLOv4) [] through robot inspection images, even in cases of foggy or rainy weather. Similarly, Lu et al. [] proposed a segmentation-based network to recognize the working state of the isolating switch in the traction substation; their experiment results suggest the pixel-level segmentation approach exhibits more fine-grained feature extraction capability. Huang et al. [] also utilized image segmentation methods to separate the damper from its complex background to accurately recognize the damper rust status. Nassu et al. [] designed a vision-based monitoring system for disconnect switches in distribution substations with multiple machine-learning techniques. In addition to scene images, infrared images also make contributions to equipment status recognition and fault diagnosis. Zheng et al. [] proposed an improved Single Shot MultiBox Detector (SSD) [] model to detect insulation status in substations, making full use of the thermographic diagnostics of insulators. To reduce the background interference in infrared images from substation scenes, the detection model is replaced by CenterNet with an Iresgroup structure to gain higher accuracy and better robustness in [].

The inspection tasks associated with secondary system equipment, which are repetitive and meticulous and have previously relied on substantial manpower, can also benefit from technical support and automation. A recognition framework for knob gears in unattended substations is described in [], in which key point extraction is an important part of calculating the correct angle under image noise and oblique views. Liu et al. [] proposed a versatile pointer meter recognition system that uses Faster Regions with Convolutional Neural Networks features (Faster R-CNN) [] as the meter detector, image post-processing methods to revise the cropped image, and the Hough transform as the pointer meter reader. The post-processing includes feature correspondence algorithms and perspective transformation to increase the image quality. Deng et al. [] selected the YOLO version 5 (YOLOv5) model for its faster running speed and applied a segmentation network, DeepLabv3+ [], during the meter reading process to achieve better segmentation results on fine objects. Fan et al. [] constructed their meter reader system on a self-built power meter dataset and further extended the work to dual-pointer meters by means of improved segmentation models and a full meter recognition workflow, which largely expands its scope of application. Wang et al. [] integrated the mobile-friendly Vision Transformer (MobileViT) [] module into the YOLOv7-tiny model to improve feature extraction and fusion ability for bounce lock status inspection tasks in substations. Despite the improvements to the backbone network, the tested images of cabinet bounce locks all have plain backgrounds, and the target bounce locks are sparsely distributed in the cabinet.

Despite the notable advancements achieved through the integration of AI techniques in power substations, the use of AI in the digital modeling of secondary system data remains limited. The current method of inspecting the physical wiring of protection screen cabinets in substations relies on workers who manually inspect the terminal block components and compare them with the original design. This process is hardly adequate for the efficient construction of intelligent power substations, which are integral to modern power systems, or for their advanced applications. The reason is that digital modeling of physical devices, such as electrical wiring connection identification, contains several sub-tasks, including element detection, text recognition, and connection recognition. Furthermore, detecting tiny element targets in complex scenarios presents significant challenges for the digital modeling of secondary systems in substations. Therefore, it is of great value to devise a terminal block component detection and recognition system for the digital modeling of secondary systems in substations.

In this paper, we propose an approach for the detection and identification of irregularly shaped physical components within terminal blocks located in power substation equipment cabinets. Our approach uses the YOLOv7 model as the initial network structure for both block area extraction and terminal block component detection. As the no-free-lunch theorems [,] indicate, no single model performs best on every task, so the general-purpose YOLOv7 model is not necessarily optimal for our downstream tasks. Therefore, it is necessary to improve the detection algorithm for better performance in terminal block component detection tasks. In the block area extraction task, we developed a streamlined version of YOLOv7 to swiftly locate complete terminal block regions at a higher processing speed. For the task of component detection, we retain the backbone network and feature fusion modules of YOLOv7, with primary enhancements focused on the prediction head via differentiable binarization segmentation. To achieve comprehensive component identity recognition, we employ a recent general-purpose OCR tool, PaddleOCR [], as the final step to match and consolidate identification results within our designed system.

The main contributions of this paper are as follows:

- To address the challenge of identifying minute, irregularly shaped component objects, we devise a three-stage system leveraging deep learning techniques. This system comprises terminal block area extraction, the detection of three categories of terminal block components, and element identity text recognition.

- In response to the distinctive regional characteristics of terminal blocks, we developed the YOLOv7 Area-Oriented (YOLOv7-AO) model for area extraction. This model omits the prediction branches and corresponding feature fusion modules that offer limited value. The streamlined regional detection model efficiently extracts block regions with reduced computational demands.

- To accommodate the densely arranged tags and cable markers within terminal blocks, we integrate differentiable binarization (DB) and an attention mechanism into the segmentation heads. This redesigned YOLOv7 model with a differentiable binarization attention head (YOLOv7-DBAH) significantly enhances element detection accuracy by producing results with precise boundaries.

The remainder of this paper is structured as follows. Section 2 provides a brief overview of the used terminal block components dataset. Section 3 explicitly describes the overall framework of block component detection and identification systems and introduces the model architecture of YOLOv7-AO and YOLOv7-DBH/DBAH models. Section 4 presents the corresponding model training process and the comparative experiments with other methods. Section 5 concludes this paper.

2. Materials

2.1. Datasets

2.1.1. Dataset Acquisition

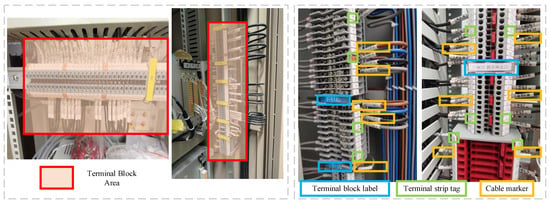

At present, no publicly accessible image dataset is available for the task of identifying terminal block components. A dataset of both high quality and substantial volume therefore has to be established for the subsequent detection models. The image dataset is collected with high-resolution cameras and manually annotated with the LabelImg tool. To ensure the clarity of each component instance, the filming distance from the target terminal block varies with the block’s actual size. Figure 1 shows the appearance of the key components considered in this paper, using image samples taken from various power substations in our compiled dataset.

Figure 1.

The overview of terminal block components appearances.

2.1.2. Data Enhancement

Deep learning models require a large volume of data to make effective use of their automatic feature extraction capabilities. Beyond the scale of the dataset, the variety and proportion of the categories also influence the outcome of model training in a multi-class detection problem. The dataset statistics are detailed in Table 1. Instances of poor quality or with an oblique perspective are removed during dataset generation to avoid propagating misleading labels. The number of terminal block labels is noticeably lower than the counts for the other two components because of the way sub-area markings are used in practice.

Table 1.

The statistics of terminal block components dataset.

To cope with the challenge from the data source, a series of data enhancement operations are applied. Along with the subdivision of the training, validation, and testing datasets, numerous basic online enhancement operations (color distortion, image flipping and rotation, and noise addition) [] are used during the training stage. Moreover, a mosaic data enhancement strategy involving image slicing, cropping, and combination [] is utilized for the block area location model, which allows the area detection model to function well in datasets of a smaller scale.

3. Methods

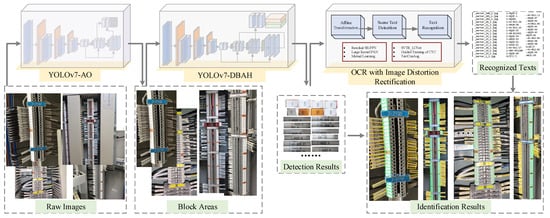

The comprehensive process of the terminal block component identification method is visually depicted in Figure 2. The identification method consists of the following steps. The initial step involves the location and extraction of the terminal block region in images by the YOLOv7-AO model to filter out unrelated backgrounds. Subsequently, three kinds of terminal components, namely terminal block labels, terminal strip tags, and cable markers, are detected within the terminal block area based on the YOLOv7-DBAH model and then cropped out for text recognition. A conventional box formation method is applied for segmentation output. To refine the detection results for each individual instance, an image distortion rectification technique founded on affine transformation is employed. Moreover, the label text associated with each element is recognized via OCR algorithms, thereby achieving the terminal component identification task. The following section introduces the details of the proposed model’s structure and procedure.
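The order of these steps can be summarized in a short Python sketch. It is only an illustration of the data flow described above: the `Component` record and the four callables (`locate_area`, `detect_components`, `rectify`, `recognize_text`) are hypothetical placeholders for the YOLOv7-AO model, the YOLOv7-DBAH model, the affine rectification step, and the OCR engine, not interfaces taken from this work.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Component:
    category: str      # e.g. "terminal_block_label", "terminal_strip_tag", "cable_marker"
    quad: Sequence     # corner points of the detected instance
    text: str = ""     # identity text read by the OCR stage


def identify_components(image, locate_area, detect_components, rectify, recognize_text):
    """Run the three stages in order; every callable is a hypothetical stand-in."""
    area = locate_area(image)                        # stage 1: crop the terminal block area
    results = []
    for category, quad in detect_components(area):   # stage 2: detect the three component types
        patch = rectify(area, quad)                  # correct distortion before recognition
        results.append(Component(category, quad, recognize_text(patch)))  # stage 3: OCR
    return results
```

Keeping each stage behind a plain callable makes it possible to swap a model or test the flow with stubs without touching the other stages.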

Figure 2.

The overall framework of terminal block components identification method. Blue box indicates Terminal block label; green box indicates Terminal strip tag; yellow box indicates Cable marker; red box indicates Terminal Block Area.

3.1. Terminal Block Area Extraction

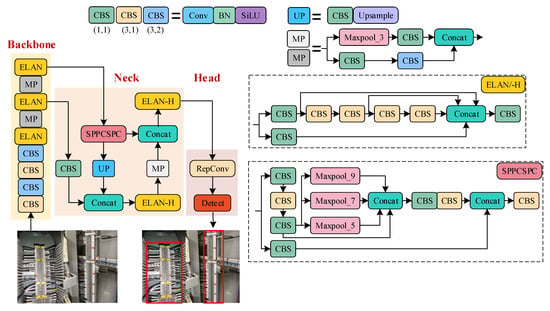

Given the physical layout of terminal blocks within on-site equipment cabinets, most images capture a single terminal block in its entirety. Consequently, the number of regions of interest (ROI) is relatively limited. Locating the terminal block area is a typical large-scale object detection problem, and it also removes the image portions showing unrelated devices and the cabinet background before the subsequent tasks. Therefore, building upon the conventional YOLOv7 model, this section develops a YOLOv7-AO model that is specifically designed to detect large-scale terminal block areas. The model’s structure is shown in Figure 3.

Figure 3.

The structure of YOLOv7-AO model. The red boxes represent the targets detected by the YOLOv7-AO model.

By leveraging a backbone network, the YOLOv7-AO model is able to extract features of different scales from input images, with a main focus on high-level features for the purpose of locating terminal blocks. The streamlined YOLOv7-AO model is capable of quickly locating large targets and filtering irrelevant background noise, thereby enhancing the overall efficiency of the identification process.

The training loss of YOLOv7-AO comprises the boundary regression loss $L_{box}$, the classification loss $L_{cls}$, and the object loss $L_{obj}$, as shown in Equation (1).

Both the classification loss and object loss adopt the binary cross-entropy (BCE) loss function, as shown in Equation (2).

where $\hat{y}$ indicates the predicted outputs and $y$ indicates the ground-truth labels.
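For reference, the two losses can be written in their standard form. This is a sketch under assumptions: the unweighted sum in the first line, the sample count $N$, and the symbols $L_{box}$, $L_{cls}$, $L_{obj}$, $\hat{y}_i$, and $y_i$ are chosen here for illustration, and any per-term weighting used in training is not specified in the text.

```latex
\begin{aligned}
L &= L_{box} + L_{cls} + L_{obj} \\
L_{BCE} &= -\frac{1}{N}\sum_{i=1}^{N}\left[\, y_i \log \hat{y}_i + (1 - y_i)\log\left(1 - \hat{y}_i\right) \right]
\end{aligned}
```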

The boundary regression loss is calculated with the complete intersection over union (CIoU) loss, which adds center-distance and aspect-ratio penalties to the IoU term to obtain accurate target boundaries, as expressed in Equation (3).

where $b$ and $b^{gt}$ indicate the predicted boundary box and its ground-truth label, respectively; $w$, $h$ and $w^{gt}$, $h^{gt}$ indicate the width and height of the predicted boundary box and of its ground-truth label, respectively; $\rho$ indicates the distance between the centers of the predicted and labelled boxes; $c$ indicates the largest distance between the boundaries of the predicted and labelled boxes, i.e., the diagonal of their smallest enclosing box; and $\alpha v$ indicates the penalty for the aspect-ratio gap.
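The CIoU terms described above can be made concrete with a small Python sketch for a single pair of boxes. It follows the commonly published CIoU form, $1 - IoU + \rho^2 / c^2 + \alpha v$; the corner-coordinate box format, the function name `ciou_loss`, and the `eps` stabilizer are assumptions for illustration and are not taken from the YOLOv7-AO implementation.

```python
import math


def ciou_loss(pred, target, eps=1e-9):
    """CIoU loss for two boxes given as (x_min, y_min, x_max, y_max)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Intersection over union
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    union = pw * ph + tw * th - inter + eps
    iou = inter / union

    # Squared center distance and squared diagonal of the smallest enclosing box
    rho2 = ((px1 + px2) - (tx1 + tx2)) ** 2 / 4 + ((py1 + py2) - (ty1 + ty2)) ** 2 / 4
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency term v and its trade-off weight alpha
    v = (4 / math.pi ** 2) * (math.atan(tw / (th + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / (1 - iou + v + eps)
    return 1 - iou + rho2 / c2 + alpha * v
```

Unlike plain IoU, the center-distance term still provides a useful signal when the two boxes do not overlap at all, which is why the loss exceeds 1 for disjoint boxes.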

3.2. Terminal Block Components Detection

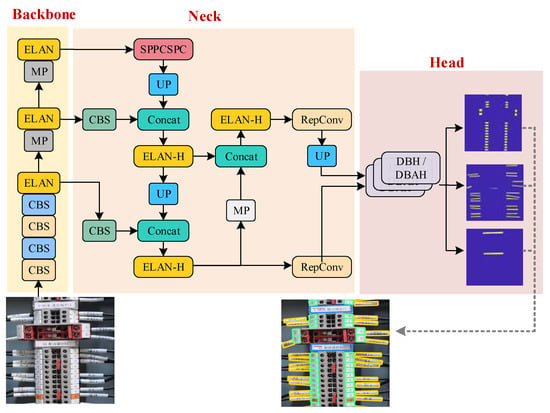

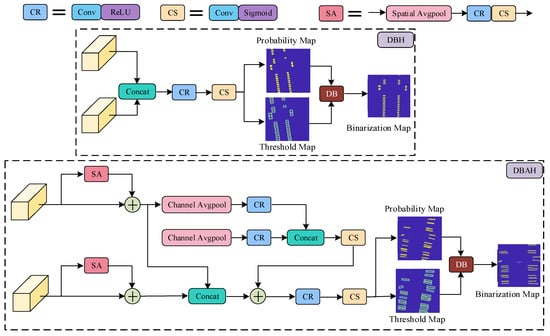

This section presents the YOLOv7-DBAH model as a novel approach for detecting terminal block components. The proposed model adapts the YOLOv7 model specifically to the detection of small-sized targets. The original detection head of the YOLOv7 model is replaced with a segmentation head that supports differentiable binarization [] for detecting multiple target components with irregular shapes simultaneously. To further enhance the performance of the segmentation head, channel attention and spatial attention modules are integrated, leading to the DBAH segmentation head. The YOLOv7-DBAH model is constructed to detect all three categories of terminal block components, and the overall network structure is shown in Figure 4.

Figure 4.

The structure of YOLOv7-DBH/DBAH model. Blue box indicates Terminal block label; green box indicates Terminal strip tag; yellow box indicates Cable marker; red box indicates Terminal Block Area.

The backbone and feature fusion module of the YOLOv7-DBAH model are consistent with the conventional YOLOv7 model. The multi-channel features of different targets can be extracted from the terminal block region. Because terminal strip tags and cable markers are small and densely packed, the original high-level prediction branch is deemed insufficient and is removed, leaving only the mid-level and low-level prediction branches. Unlike the YOLOv7 model, where the two prediction branches operate independently to produce detection results, the DBH/DBAH segmentation heads receive the mid-level and low-level feature maps together after their sizes have been aligned.

The YOLOv7-DBAH model also employs BCE loss as its training loss, which is a commonly used loss function in classification tasks. The terminal block components, namely terminal block labels, terminal strip tags, and cable markers, correspond to three groups of approximate binarization maps, probability maps, and threshold maps. The BCE loss is applied to the approximate binarization map loss and probability map loss, while the L1 loss function is used for the threshold map. Each group of outcome maps is supervised and contributes to the calculation of its respective component training loss. These component losses are then aggregated for gradient descent backpropagation.

where $\hat{P}$, $\hat{B}$, and $\hat{T}$ indicate the predicted outputs of probability maps, approximate binarization maps, and threshold maps for each target category, respectively; $P$, $B$, and $T$ indicate the ground-truth labels of the probability maps, approximate binarization maps, and threshold maps for each target category, respectively; $\alpha$ denotes the scale factor of the binarization maps, set to 1; and $\beta$ denotes the scale factor of the threshold maps, set to 10.
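As a rough illustration of how these terms combine, the following Python sketch computes the aggregated loss on flattened maps. Several points are assumptions made for the example: the per-category losses are simply summed, the L1 term is a plain mean over all pixels, and the dictionary layout and function names (`bce`, `l1`, `db_loss`) are hypothetical. Only the BCE/L1 split and the scale factors 1 and 10 come from the description above.

```python
import math


def bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy over two flattened maps."""
    total = 0.0
    for p, y in zip(pred, target):
        p = min(max(p, eps), 1 - eps)  # clamp to keep log() finite
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(pred)


def l1(pred, target):
    """Mean absolute error over two flattened maps."""
    return sum(abs(p - y) for p, y in zip(pred, target)) / len(pred)


def db_loss(outputs, labels, alpha=1.0, beta=10.0):
    """Sum the per-category losses.

    outputs / labels: dict mapping a category name to a dict with the
    flattened 'prob', 'binary', and 'thresh' maps (assumed layout).
    """
    total = 0.0
    for category, pred in outputs.items():
        gt = labels[category]
        total += (
            bce(pred["prob"], gt["prob"])                 # probability map loss
            + alpha * bce(pred["binary"], gt["binary"])   # approximate binarization map loss
            + beta * l1(pred["thresh"], gt["thresh"])     # threshold map loss
        )
    return total
```

With $\beta = 10$, a uniform threshold-map error of 0.1 contributes as much to the total as a BCE term of 1.0, which shows how strongly the threshold branch is weighted.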

The differentiable binarization technique involves the initial prediction of probability and threshold maps, which are subsequently utilized to produce a binarization map output. The model used in this process is equipped with three DBH/DBAH heads, which correspond to the three terminal block component categories that require identification.

The DB segmentation head converts the boundary box regression problem into a segmentation problem, resulting in a more precise contour of the actual boundary of terminal block components. This approach effectively resolves the problem of image distortion in target object detection. To further improve the detection of multiple classes simultaneously, a DBAH segmentation head is developed by integrating spatial and channel attention mechanisms into the basic DBH segmentation head structure. The structure of both DBH and DBAH segmentation heads is illustrated in Figure 5.

Figure 5.

The structure of DBH/DBAH segmentation head.

The DBH segmentation head has a straightforward structure. It directly concatenates two sets of feature maps, which are subsequently fused by a 3 × 3 convolutional layer with ReLU activation and a 1 × 1 convolutional layer with Sigmoid activation. The probability map and threshold map are predicted from the fused features and are then used to produce a binarization map via a preset differentiable binarization function. From the binarization results, the boundary of each instance is easily obtained and approximated by a rotated rectangular box, yielding a unified and organized format for the detection results.

By adding Spatial Attention (SA) and Channel Attention (CA) modules to the DBH segmentation head, the DBAH segmentation head strengthens the relevant features for each target category. The DBAH segmentation head uses independent spatial attention mechanisms to predict the spatial attention weights related to the target class before concatenating the sets of feature maps. The CA module concatenates the attention vectors of the two sets of feature maps and further calculates the global channel attention vector. The feature maps are then concatenated and fused with the channel attention vectors. The enhanced feature maps are passed through two convolutional layers, generating the target probability map, the threshold map, and the binarization map. These outcome maps are then used to obtain detection results in the same way as in the DBH heads.

The standard binarization process is expressed in Equation (8). While this standard binarization operation is intuitive and fast, its non-differentiable nature renders it unsuitable for joint optimization within the neural network.

where $P_{i,j}$ is the value of the probability map at coordinate point $(i, j)$ and $t$ is the binarization threshold.

The differentiable binarization process is expressed in Equation (9). Compared with the standard binarization operation, the introduction of the exponential function imparts differentiability to the binarization operation. Furthermore, scale factors are integrated to make the function curve approach the step function, i.e., the standard binarization function, so as to reach the goal of the binarization operation.

where $T_{i,j}$ is the value of the threshold map at coordinate point $(i, j)$ and $k$ indicates the scale factor for differentiable binarization, empirically set to 50.
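The difference between the two operations is easy to see numerically. The sketch below assumes the usual sigmoid form of differentiable binarization with scale factor 50; the function names and the scalar, per-pixel interface are illustrative choices rather than code from this work.

```python
import math


def standard_binarize(p, t=0.5):
    """Hard threshold: 1 if the probability reaches t, otherwise 0."""
    return 1.0 if p >= t else 0.0


def differentiable_binarize(p, t, k=50.0):
    """Sigmoid of the scaled margin k * (p - t); smooth, so gradients can flow."""
    return 1.0 / (1.0 + math.exp(-k * (p - t)))
```

For example, with a threshold of 0.5, a probability of 0.7 gives a margin of 0.2 and an output within about 5e-5 of 1, so the smooth function behaves almost like the step function away from the boundary while remaining differentiable at it.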

The differential of a differentiable binarization operation can be calculated through the BCE loss function for binarization maps. With the positive labels in the foreground and the negative labels in the background, where the loss is $l_{+}$ for positive labels and $l_{-}$ for negative labels, the corresponding differential of the losses for probability maps can be expressed in Equation (10). The differential for threshold maps can be obtained in a similar manner.
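A worked form of these differentials, assuming the sigmoid-type binarization function with scale factor $k$ and writing $x = P_{i,j} - T_{i,j}$ for the margin between the probability and threshold maps (the symbols $f$, $x$, $l_{+}$, and $l_{-}$ are notation chosen here for illustration), is:

```latex
\begin{aligned}
f(x) &= \frac{1}{1 + e^{-kx}}, \qquad x = P_{i,j} - T_{i,j} \\
l_{+} &= -\log f(x), \qquad \frac{\partial l_{+}}{\partial x} = -k\, f(x)\, e^{-kx} \\
l_{-} &= -\log\bigl(1 - f(x)\bigr), \qquad \frac{\partial l_{-}}{\partial x} = k\, f(x)
\end{aligned}
```

Both derivatives are scaled by $k$, so the gradient is amplified for points near the decision boundary and for misclassified points, while its magnitude never exceeds $k$.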

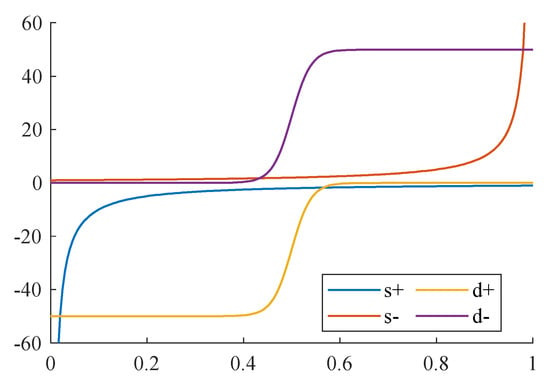

Accordingly, the differentials of the BCE losses of standard binarization and differentiable binarization are also shown in Figure 6. From the comparison, it can be perceived that the differential of training losses for boundary points or ambiguous points is amplified, guiding the network to correct the wrong outputs during the gradient descent backpropagation. In most cases where points are misclassified, their derivatives are greater, which can give effective feedback for backpropagation. Moreover, both the least upper bound and the greatest lower bound of the differential are constrained by the scale factor and binarization output, reducing the impact of extremely large or small values.

Figure 6.

The differential of losses of standard and differentiable binarization. The ‘s+’ and ‘s−’ are respectively the differential of positive and negative labels of standard binarization; The ‘d+’ and ‘d−’ are respectively the differential of positive and negative labels of differentiable binarization.

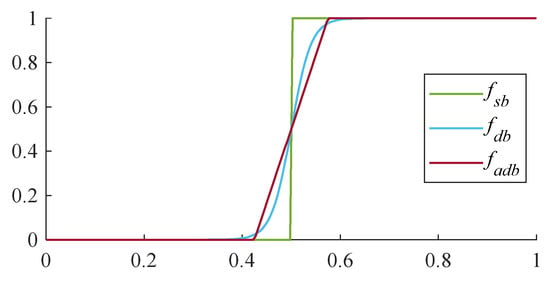

To alleviate the computational load associated with exponential operations during the inference stage, a new binarization function is designed as an approximate substitute for the original differentiable binarization function (with the scale factor set to 50), as shown in Equation (11).

The comparison of the three above-mentioned binarization functions is presented in Figure 7. As shown in the figure, the differentiable binarization function effectively mimics the standard binarization function. The approximate differentiable binarization function, designed to accelerate computation, can also accomplish the segmentation of probability maps and obtain discriminating instance boundaries with lower computational complexity.

Figure 7.

The comparison of binarization methods.

3.3. Text Recognition with Image Distortion Correction

In this section, we make use of the PaddleOCR tool, which is comprised of text detection, direction rectification, and text recognition modules. The OCR system is characterized by its lightweight network structure while still providing a complete set of recognition functions. It is noteworthy that the PaddleOCR system can accurately recognize input text even when it is misaligned or relatively small compared with the component area. To address the issue of perspective distortion, a correction operation based on affine transformation is implemented individually for each instance before being fed into the OCR system. Considering the adoption of a standardized and uniform text font, the PaddleOCR system with distortion correction is deemed sufficient for the scene-text recognition task.
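The affine correction step can be illustrated with a minimal Python sketch. It assumes that three corners of a detected (possibly skewed) instance are mapped onto the corresponding corners of an upright rectangle; the exact rectification procedure used with PaddleOCR is not detailed here, and the function names `affine_from_points` and `apply_affine` are hypothetical.

```python
def affine_from_points(src, dst):
    """Return (a, b, c, d, e, f) with x' = a*x + b*y + c and y' = d*x + e*y + f.

    src and dst each hold three (x, y) points; src must not be collinear.
    """
    (x0, y0), (x1, y1), (x2, y2) = src
    det = x0 * (y1 - y2) - y0 * (x1 - x2) + (x1 * y2 - x2 * y1)
    if abs(det) < 1e-12:
        raise ValueError("source points are collinear")

    def solve(u0, u1, u2):
        # Cramer's rule for the 3 x 3 system [x y 1] . [p q r]^T = u
        p = (u0 * (y1 - y2) - y0 * (u1 - u2) + (u1 * y2 - u2 * y1)) / det
        q = (x0 * (u1 - u2) - u0 * (x1 - x2) + (x1 * u2 - x2 * u1)) / det
        r = (x0 * (y1 * u2 - y2 * u1) - y0 * (x1 * u2 - x2 * u1)
             + u0 * (x1 * y2 - x2 * y1)) / det
        return p, q, r

    a, b, c = solve(*(pt[0] for pt in dst))
    d, e, f = solve(*(pt[1] for pt in dst))
    return a, b, c, d, e, f


def apply_affine(m, point):
    """Map one (x, y) point through the affine parameters m."""
    a, b, c, d, e, f = m
    x, y = point
    return a * x + b * y + c, d * x + e * y + f
```

Because an affine map preserves parallelism, fixing three corners of a parallelogram-shaped instance also sends its fourth corner to the remaining corner of the target rectangle. Distortion that is truly perspective rather than affine would need a four-point homography instead.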

The marking texts on terminal block components encompass the device type, ID tag, and other relevant detailed information, which serve to convey electrical wiring contexts in an intuitive manner. The text recognition for detected components constitutes the conclusive stage in the identification method for terminal block components and is of vital importance for the documentation of on-site devices and wiring connections.

4. Results and Discussion

4.1. Experimental Setup

4.1.1. Experiment Platform

The experimental environment is as follows: Windows 10, Python 3.8, PyTorch 1.9, and PaddleOCR 2.6. The computing workstation for model training is configured with a CPU (AMD Ryzen 9 5900X 12-Core), a GPU (NVIDIA GeForce RTX 3080), and RAM (32 GB). This platform is sufficient for model training under the settings described below. Computational environments with similar or higher GPU configurations should be suitable for the proposed methods. Training the networks on a GPU with less than 4 GB of memory is not recommended. The computing device for inference speed tests is the NVIDIA Xavier NX. Testing the trained models is less demanding and can be carried out on a much wider range of devices.

4.1.2. Experiment Settings

For the task of terminal block area extraction, stochastic gradient descent (SGD) with a momentum of 0.9 is employed for network optimization. The learning rate is initially set to $1 \times 10^{-2}$ with a weight decay of $5 \times 10^{-4}$. The total number of epochs is set to 270 with a batch size of 16.

For the task of terminal block component detection, the optimizer is still SGD with a momentum of 0.9. The initial learning rate is decreased to $1 \times 10^{-3}$ with the same weight decay of $5 \times 10^{-4}$. The total number of training epochs is increased to 500 with a larger batch size of 32.
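For convenience, the two sets of hyperparameters can be collected in one place. The sketch below only restates the values given above; the `TrainConfig` class and its field names are illustrative and do not correspond to any configuration format used in the experiments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    optimizer: str
    momentum: float
    lr: float            # initial learning rate
    weight_decay: float
    epochs: int
    batch_size: int


# Terminal block area extraction (YOLOv7-AO)
AREA_EXTRACTION = TrainConfig("SGD", 0.9, 1e-2, 5e-4, 270, 16)

# Terminal block component detection (YOLOv7-DBH/DBAH)
COMPONENT_DETECTION = TrainConfig("SGD", 0.9, 1e-3, 5e-4, 500, 32)
```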

4.1.3. Evaluation Metrics

Various evaluation metrics are employed in this paper to comprehensively assess the detection performance of different models. For the detection tasks, precision, recall, and the F1-score are the classic metrics, which are derived from the basic statistical indicators of true positive (TP), true negative (TN), false positive (FP), and false negative (FN). Precision measures the proportion of detected samples that are correct, while recall measures the proportion of actual positive samples that are correctly detected. As the harmonic mean of precision and recall, the F1-score reflects both in a single value.

In addition to these three indicators, mean average precision (mAP) and frames per second (FPS) are commonly used in object detection problems. The mAP measures the average performance over all target categories at different confidence levels. The FPS indicator measures the running speed of a deep learning model, which reflects the complexity of the network.

where $N$ is the number of categories, set to 3 (the terminal block labels, cable markers, and terminal strip tags) in this paper, and $P(R)$ is the Precision Recall Curve (PRC) of a category.
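These metrics can be computed with a few lines of Python. The sketch assumes the TP, FP, and FN counts are already available and approximates each category's average precision by trapezoidal integration of its PR curve; detection toolkits often use an interpolated variant instead, so the numbers may differ slightly from those reported by such tools. The function names are illustrative.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1-score from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def average_precision(recalls, precisions):
    """Area under one PR curve (trapezoidal rule); recalls must be increasing."""
    area = 0.0
    for i in range(1, len(recalls)):
        area += (recalls[i] - recalls[i - 1]) * (precisions[i] + precisions[i - 1]) / 2
    return area


def mean_average_precision(curves):
    """Average the per-category AP values; curves is a list of (recalls, precisions)."""
    return sum(average_precision(r, p) for r, p in curves) / len(curves)
```

For instance, 8 true positives with 2 false positives and 2 false negatives give a precision, recall, and F1-score of 0.8 each.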

4.2. Experimental Results

4.2.1. Terminal Block Area Extraction Results

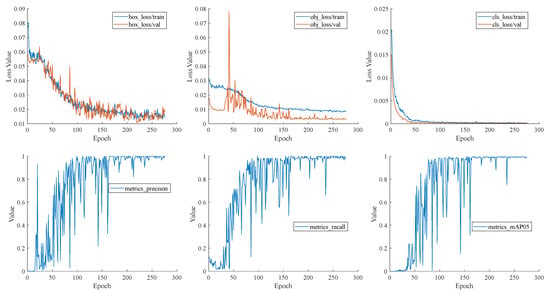

Figure 8 illustrates the training process of the YOLOv7-AO model, highlighting the differing convergence behaviors of the loss components. The classification loss (cls_loss) exhibits the fastest convergence speed and the smoothest loss curve, owing to the limited number of target categories in the terminal block areas. The object loss (obj_loss) fluctuates the most in the early stage of training but gradually converges after 180 rounds. In contrast, the boundary regression loss (box_loss) curve remains comparatively stable.

Figure 8.

Training process of YOLOv7-AO.

The training loss converges in the later stages of training, within the expected number of rounds. This observation is further supported by the mAP and other metric curves, which exhibit a similar pattern. All the evaluation metrics approach the expected level after 160 rounds. Some oscillations appear around 200–250 rounds, but they subside and the model converges.

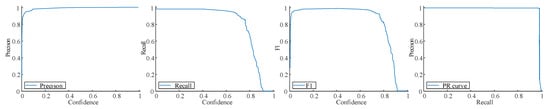

After training, the YOLOv7-AO model is evaluated in terms of its precision, recall, F1-score, and PR curves, as presented in Figure 9. The evaluation results indicate that all target curves meet the expected standards. The precision curve surpasses 0.8 before the confidence level of 0.1, while the recall curve exhibits a sharp decline only after the confidence level of 0.75. Moreover, the PR curve is positioned close to the upper right corner of the plot, which confirms the effectiveness of the YOLOv7-AO model in accurately locating terminal block areas.

Figure 9.

The metrics of Precision, Recall, F1-score, and PR-curve.

To validate the YOLOv7-AO model, a comparative experiment is carried out against the standard YOLOv7 model and the YOLOv7-tiny model on identical test data, assessing both detection effectiveness and inference speed. The comparative experimental results are presented in Table 2. The results indicate that the YOLOv7-AO model outperforms the standard YOLOv7 model in inference speed, which makes it better suited to deployment. Moreover, the YOLOv7-AO model exhibits a regional detection precision that is comparable to that of the standard YOLOv7 model and superior to that of the simplified YOLOv7-tiny model.

Table 2.

The comparison of area location models.

4.2.2. Terminal Block Components Detection Results

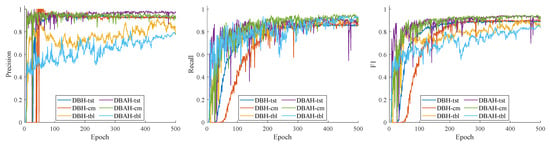

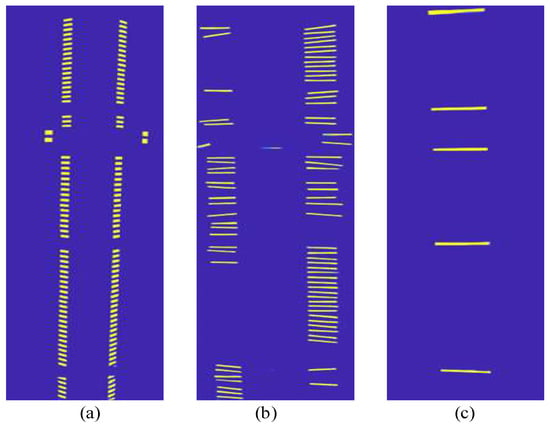

The training procedure of the YOLOv7-DBH/DBAH model is evaluated based on precision, recall, and F1-score, as shown in Figure 10. The target components analyzed are terminal strip tags (tst), cable markers (cm), and terminal block labels (tbl). The results indicate that, for both the DBH and DBAH segmentation heads, the terminal block label exhibits significantly larger fluctuations than the other two components.

Figure 10.

Training process of YOLOv7-DBH and YOLOv7-DBAH model.

The fluctuations observed during training can be attributed to the relatively small number of terminal block labels compared with the other components. In addition, terminal block labels are longer and wider than terminal strip tags and cable markers. Unlike the other, densely packed components, terminal block labels are rarely or never closely arranged, which may account for the slightly lower precision and F1-score. Nevertheless, the performance of the model remains adequate for practical applications.

A comparison of the training procedures for the DBAH and DBH segmentation heads reveals that the evaluation metrics for terminal strip tags and cable markers are comparable. The DBAH model exhibits marginally superior recall and precision for cable markers, while the DBH model displays nearly identical values for both metrics. The supplementary attention mechanisms in the DBAH segmentation head lead to greater fluctuations in the evaluation metrics during the initial stages of training. However, as training progresses towards convergence, the fluctuations gradually diminish and the metrics settle at a higher level.

The DBH segmentation head benefits from its simpler model structure and exhibits stable performance during the training stage, with only minor fluctuations. Specifically, the precision curve drops suddenly before 50 rounds, and the recall curve drops suddenly around 300 rounds. In both cases, the curve oscillates and quickly recovers to its original level. After convergence, the precision of the DBAH segmentation head is comparable to that of the DBH segmentation head. On the other hand, the DBAH segmentation head outperforms the DBH segmentation head in terms of recall, particularly for terminal strip tags and cable markers. This advantage is also reflected in the F1-score.

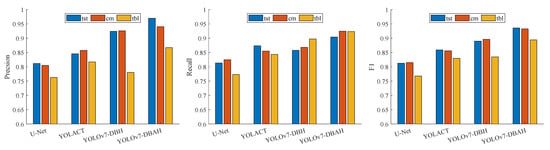

To assess the segmentation performance of the YOLOv7-DBAH model, comparative experiments with different segmentation networks are carried out using the same test data. The experimental results are presented in Table 3. The proposed YOLOv7-DBAH model obtains better accuracy but incurs a higher time cost, because the added attention module requires additional computation. The comparative results for each subcategory are illustrated in Figure 11.

Table 3.

The comparison of average results of segmentation models.

Figure 11.

The comparison of segmentation networks.

The experimental results above show that the YOLOv7-DBAH model achieves the best average evaluation results across the three types of components. Even its lowest metric value exceeds 0.91. The YOLOv7-DBH model and the YOLACT model rank in the second tier, with evaluation metrics of around 0.85. The classic segmentation model, U-Net, achieves evaluation results of approximately 0.80.

The YOLOv7-DBH model outperforms the benchmark segmentation models on terminal block components on average. The YOLOv7-DBAH model, with its integrated spatial and channel attention mechanisms, further enhances the extraction of features relevant to the target components, leading to the best segmentation performance. The DBAH segmentation head, which is built on attention mechanisms, also has the potential to improve feature extraction when positive and negative samples are unbalanced. The YOLOv7-DBH model, however, does not attain a higher F1-score than the YOLACT and U-Net models for the terminal block label target. Despite this, it outperforms the YOLACT model in the average evaluation metrics, mainly due to its superior performance on terminal strip tags and cable markers.

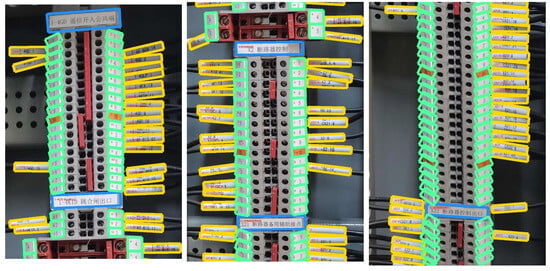

4.2.3. Visualization Analysis of Terminal Block Components Identification

In this section, the essential steps in the identification methodology are presented through a typical terminal block image. The main process and key intermediate feature maps of terminal block components are illustrated in Figure 12, Figure 13, Figure 14 and Figure 15.

Figure 12.

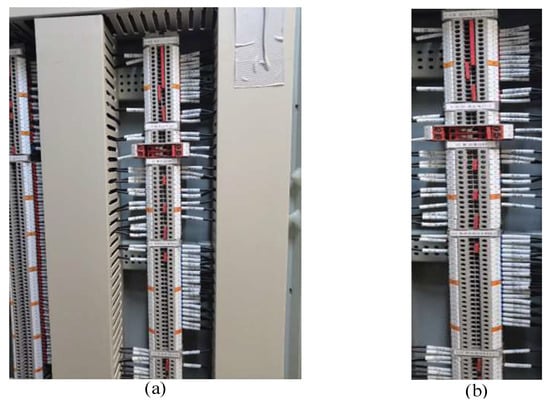

The input image and area location results. (a) is the raw image; (b) is the extracted terminal block area for the subsequent detection task.

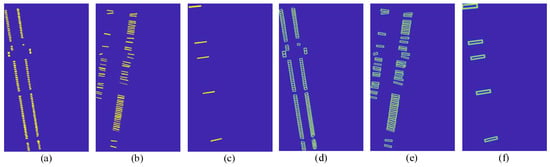

Figure 13.

The outcome probability maps and threshold maps. (a–c) are the probability maps; (d–f) are the threshold maps; (a,d) belong to terminal strip tags, (b,e) belong to cable markers, (c,f) belong to terminal block labels.

Figure 14.

The outcome binarization maps. (a) belongs to terminal strip tags, (b) belongs to cable markers, (c) belongs to terminal block labels.

Figure 15.

Display of the identification results. Blue boxes indicate terminal block labels; green boxes indicate terminal strip tags; yellow boxes indicate cable markers; the red box indicates the terminal block area.

The outcome of the positioning task performed by the YOLOv7-AO model on the target terminal block region is presented in Figure 12. The model successfully excludes the irrelevant background region and accurately identifies the terminal block of interest. The model effectively handles the occlusion and distortion issues present in the terminal block located on the left side of the image.

Figure 13 and Figure 14 present probability maps, threshold maps, and binarization maps of terminal strip tags, cable markers, and terminal block labels processed by the DBAH head. The transformation of probability and threshold maps involves scaling and alignment operations that may introduce a slight asymmetry in the image. The results of the differentiable binarization process are adjusted to align with the input image.

A comparison of the probability maps and binarization maps shows that the gaps between adjacent instances are vital for identifying the densely arranged terminal strip tags and cable markers. The adaptive thresholding applied to the probability maps makes such gaps clearly identifiable. In addition, the threshold map prediction for terminal block labels can be omitted in the inference stage, because such labels are few and widely spaced. For these labels, the probability maps can directly replace the binarization maps without significant loss in precision.
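The effect of the threshold map on gaps between adjacent instances can be illustrated with a small sketch of the differentiable binarization function from the DB literature, B = 1 / (1 + exp(-k (P - T))), where P is the probability map, T is the threshold map, and k is an amplifying factor (50 in the original DB formulation). The one-dimensional map values below are hypothetical and only mimic a slice across two adjacent tags; they are not outputs of the YOLOv7-DBAH model.

```python
import math
from typing import List


def differentiable_binarization(
    prob: List[float], thresh: List[float], k: float = 50.0
) -> List[float]:
    """Approximate binary map B = 1 / (1 + exp(-k * (P - T))), element-wise."""
    return [1.0 / (1.0 + math.exp(-k * (p - t))) for p, t in zip(prob, thresh)]


def fixed_binarization(prob: List[float], t: float = 0.3) -> List[int]:
    """Plain binarization with one global threshold, for comparison."""
    return [1 if p >= t else 0 for p in prob]


# Hypothetical 1-D slice across two adjacent tags separated by a narrow gap.
prob = [0.9, 0.9, 0.8, 0.5, 0.8, 0.9, 0.9]
# Assumed threshold map: higher values near instance borders and in the gap.
thresh = [0.3, 0.3, 0.5, 0.7, 0.5, 0.3, 0.3]

fixed = fixed_binarization(prob, t=0.3)
adaptive = [1 if b >= 0.5 else 0
            for b in differentiable_binarization(prob, thresh)]

print("fixed   :", fixed)     # the gap pixel is kept, so the two tags merge
print("adaptive:", adaptive)  # the gap pixel is suppressed, so the tags separate
```

With a single global threshold the moderately confident gap pixel stays foreground and the two instances merge, whereas the locally raised threshold suppresses it. For widely spaced instances, where no such gap has to be resolved, thresholding the probability map alone gives the same separation.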

Figure 15 depicts the detection bounding boxes for the target objects, with the identification information of each instance annotated in red text. The detection of terminal block labels, terminal strip tags, and cable markers achieves satisfactory precision and recall, and the bounding boxes closely follow the actual target shapes, which benefits the subsequent text recognition task. Nevertheless, due to the curved shape of cable markers and the shooting perspective, the final text recognition results still require correction with electrical background knowledge during the implementation stage to obtain more precise text information.
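One simple form such knowledge-based correction could take is a rule that maps characters commonly confused by OCR onto the characters a label is expected to contain. The sketch below is purely illustrative: the confusion table, the assumed naming rule (a terminal strip tag is a numeric index of one to three digits), and the function name `correct_numeric_tag` are all hypothetical and are not taken from this paper or from PaddleOCR.

```python
import re
from typing import Optional

# Hypothetical confusion pairs; a real system would derive them from
# observed OCR errors on the target labels.
TO_DIGIT = str.maketrans(
    {"O": "0", "o": "0", "I": "1", "l": "1", "S": "5", "B": "8"}
)

# Hypothetical naming rule: a terminal strip tag is a 1-3 digit index.
NUMERIC_TAG = re.compile(r"\d{1,3}")


def correct_numeric_tag(raw: str) -> Optional[str]:
    """Map look-alike letters to digits; return None if the rule still fails."""
    candidate = raw.strip().translate(TO_DIGIT)
    return candidate if NUMERIC_TAG.fullmatch(candidate) else None


for text in ("l2", "1O", " 7 ", "X1-?"):
    print(repr(text), "->", correct_numeric_tag(text))
```

Strings that still violate the rule after correction return `None`, so they can be flagged for manual review instead of being recorded with a guessed value.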

5. Conclusions

In this paper, a multi-step identification method utilizing various deep learning techniques is proposed to address the detection challenges posed by small and densely arranged terminal block components. The method uses a modified YOLOv7-AO area extraction model in the first stage and an enhanced YOLOv7-DBAH detection model in the second stage. The YOLOv7-AO model achieves accuracy comparable to that of the standard YOLOv7 algorithm with a 4.45 times faster inference speed, dramatically decreasing the computation time of terminal block location. The area extraction results suggest that the YOLOv7-AO model is well suited to real-time applications, such as terminal block area positioning in video streams. The YOLOv7-DBAH model demonstrates significant improvements in all evaluation metrics for the three terminal block components. Precision and recall, already higher than those of the YOLACT model, increase to over 0.91. Moreover, the F1-score of the terminal block label rises from 0.83 to more than 0.89.

Future work will focus on improving the accuracy and speed of terminal block component identification. Scene video of the terminal blocks can provide more complete information about their components. Analyzing the content of adjacent video frames can potentially enhance the identification accuracy of targets and their wiring connections. This advancement could significantly improve the efficiency of substation inspection work by providing a more complete and contextual understanding of the terminal block components.

Author Contributions

Conceptualization and methodology, W.C. and Z.C.; investigation, X.D.; writing—original draft preparation, W.C. and C.W.; writing—review and editing, W.C. and T.L. All authors contributed substantially to the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the State Grid Headquarters Science and Technology Project “Research on Unified and Shared Modelling and Intelligent Application Technology for the Whole Secondary System of Substation” (SGHEDK00JYJS2200012).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).