Joint Calibration Method for Robot Measurement Systems

Abstract

1. Introduction

2. Method

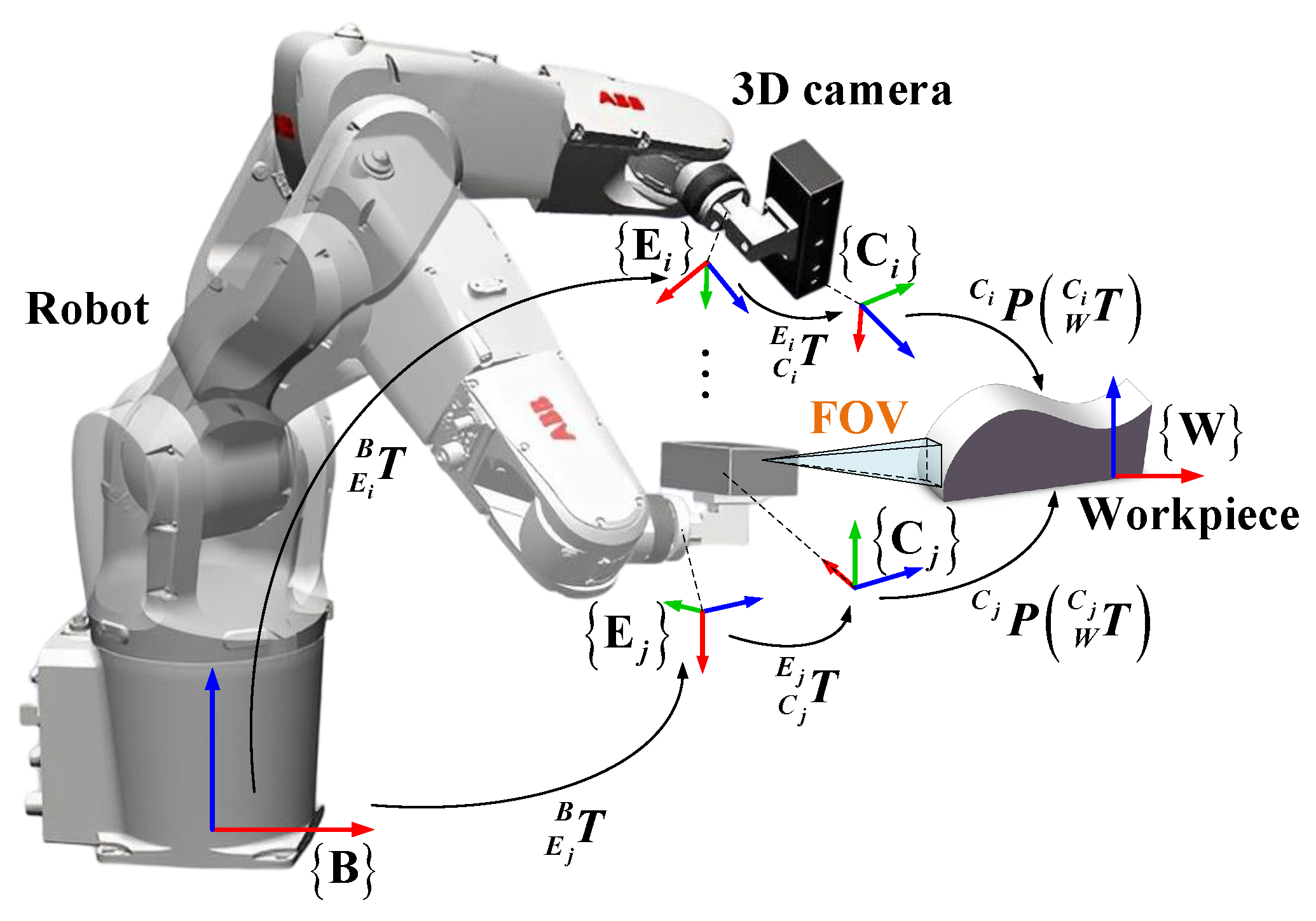

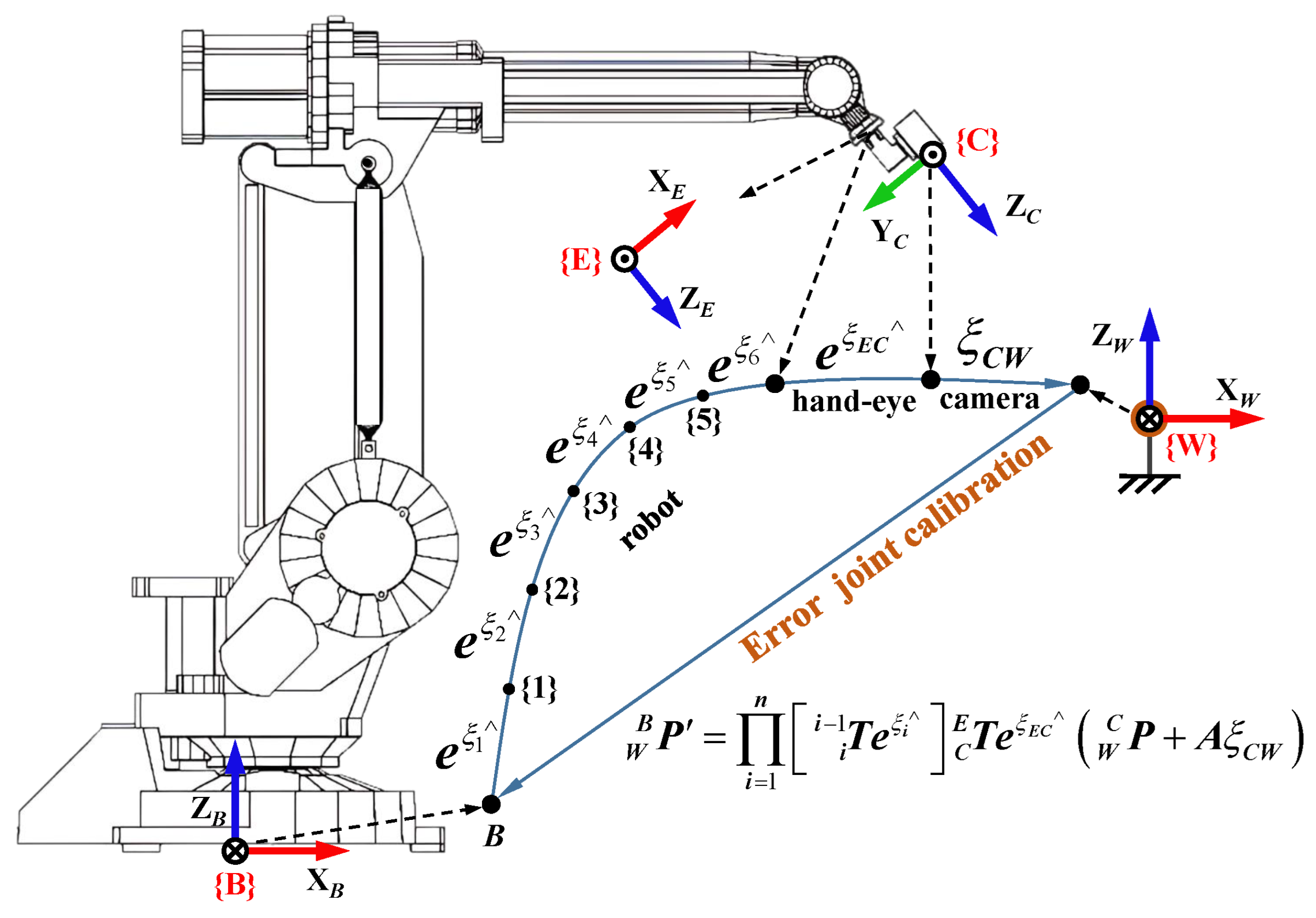

2.1. Problem Statement

2.2. Joint Calibration Method

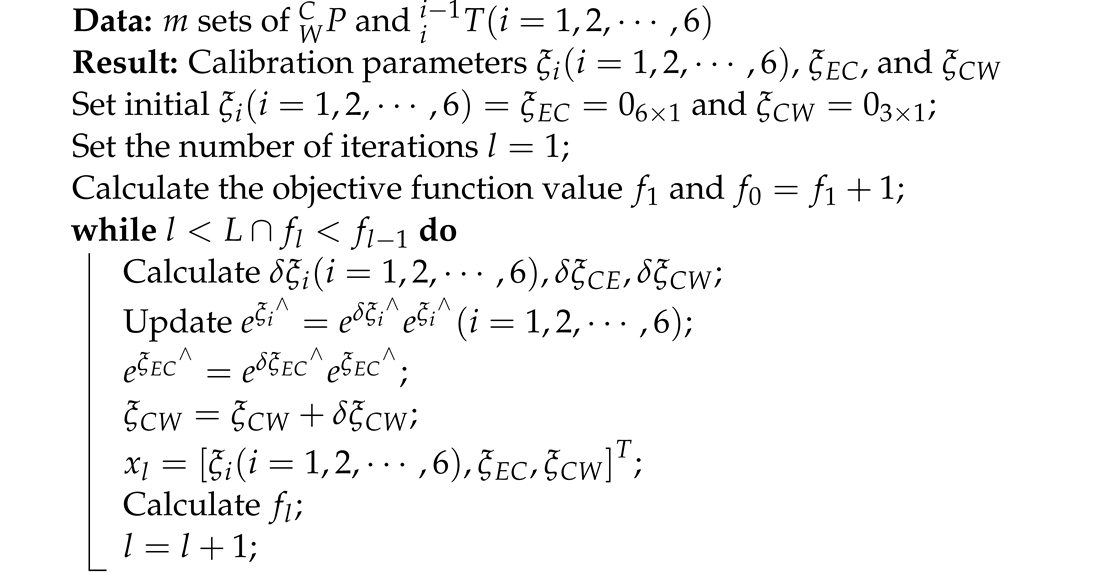

Algorithm 1: The pseudo-code of calibration process.

3. Simulation

3.1. Calibration Accuracy

3.2. The Impact of Random Noise

3.3. The Impact of Camera Error

4. Experiment

4.1. Calibration Accuracy

4.2. Performance in Practical Applications

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Joint Number | (mm) | (rad) | (mm) | (rad) |
|---|---|---|---|---|
| 1 | 0 (0.0016) | 0 (1.5 × 10) | 475 (0.0018) | 0 (3.0 × 10) |
| 2 | 150 (0.0023) | 0.5 (7.5 × 10) | 0 (0.0033) | 0.5 (4.5 × 10) |
| 3 | 600 (0.0390) | 0 (3.0 × 10) | 0 (0.0071) | 0 (−1.5 × 10) |
| 4 | 120 (−0.030) | 0.5 (9.0 × 10) | 720 (0.0075) | 0 (3.0 × 10) |
| 5 | 0 (0.0240) | −0.5 (7.5 × 10) | 0 (0.0032) | 0 (−0.0011) |
| 6 | 0 (−0.0510) | 0.5 (−4.5 × 10) | 85 (0.0018) | 0 (1.5 × 10) |
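
The joint table lists four nominal kinematic parameters per joint, each followed by a bracketed value that appears to be the corresponding parameter deviation. The column symbols did not survive extraction, so the sketch below rests on two loudly labelled assumptions: that the columns are modified (Craig) Denavit–Hartenberg parameters a_{i−1}, α_{i−1}, d_i and θ_i, and that the printed 0.5 and −0.5 angular entries stand for ±0.5π. Under those assumptions, a minimal NumPy forward-kinematics model built from the nominal values could look like this (the names `NOMINAL_MDH`, `mdh_transform` and `forward_kinematics` are hypothetical, not taken from the paper):

```python
import numpy as np

# ASSUMPTION: columns read as modified (Craig) DH parameters
# a_{i-1} [mm], alpha_{i-1} [rad], d_i [mm], theta offset_i [rad],
# with the printed 0.5 / -0.5 angular entries taken to mean 0.5*pi / -0.5*pi.
NOMINAL_MDH = [
    # (a_{i-1}, alpha_{i-1},   d_i,   theta_offset_i)
    (0.0,   0.0,          475.0, 0.0),
    (150.0, 0.5 * np.pi,    0.0, 0.5 * np.pi),
    (600.0, 0.0,            0.0, 0.0),
    (120.0, 0.5 * np.pi,  720.0, 0.0),
    (0.0,  -0.5 * np.pi,    0.0, 0.0),
    (0.0,   0.5 * np.pi,   85.0, 0.0),
]


def mdh_transform(a, alpha, d, theta):
    """Link transform Rot_x(alpha) @ Trans_x(a) @ Rot_z(theta) @ Trans_z(d)."""
    ca, sa = np.cos(alpha), np.sin(alpha)
    ct, st = np.cos(theta), np.sin(theta)
    return np.array([
        [ct,      -st,      0.0, a],
        [st * ca,  ct * ca, -sa, -sa * d],
        [st * sa,  ct * sa,  ca,  ca * d],
        [0.0,      0.0,     0.0, 1.0],
    ])


def forward_kinematics(q, params=NOMINAL_MDH):
    """Base-to-flange 4x4 transform for joint angles q (rad), chaining the links in order."""
    if len(q) != len(params):
        raise ValueError("need one joint angle per parameter row")
    T = np.eye(4)
    for (a, alpha, d, offset), qi in zip(params, q):
        T = T @ mdh_transform(a, alpha, d, qi + offset)
    return T
```

Under these assumptions the zero joint configuration places the flange at approximately (955, 0, 1195) mm in the base frame, that is 150 + 720 + 85 along x and 475 + 600 + 120 along z. A calibrated model would replace each nominal entry with the nominal value plus its identified deviation.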

| (mm) | (mm) | (mm) | (rad) | (rad) | (rad) |
|---|---|---|---|---|---|
| −0.0386 | 0.0141 | −0.0320 | 0.0250 | 0.0505 | −0.2077 |
| −0.4040 | 0.4460 | 0.5121 | −0.4951 | −0.0021 | 0.0545 |
| 5.62 × 10 | −0.1397 | −0.1761 | 0.4880 | 0.5026 | −0.0640 |
| −9.91 × 10 | −3.04 × 10 | −8.92 × 10 | −7.50 × 10 | −4.54 × 10 | −0.0014 |
| −8.97 × 10 | −4.84 × 10 | 4.42 × 10 | 8.53 × 10 | 0.0034 | −1.31 × 10 |
| 8.75 × 10 | 3.72 × 10 | −6.04 × 10 | 1.24 × 10 | 1.98 × 10 | 0.0031 |
| −1.2709 | 0.2462 | −1.2593 | 0.0033 | 0.0048 | 0.0049 |
| −9.60 × 10 | −7.32 × 10 | −4.94 × 10 |
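
Each full row of this table holds three translational components (mm) and three rotational components (rad), which is the usual form of a pose error between a nominal and an actual rigid transform. The row labels were lost, so what each row refers to cannot be recovered here. Purely as an illustration, a first-order (small-angle) error model that maps such a six-vector to a homogeneous transform and recovers it again can be sketched as follows; `skew`, `error_transform` and `pose_error` are hypothetical helper names, and the right-multiplied error convention is an assumption:

```python
import numpy as np


def skew(w):
    """3x3 skew-symmetric matrix such that skew(w) @ v == np.cross(w, v)."""
    wx, wy, wz = w
    return np.array([[0.0, -wz,  wy],
                     [wz,  0.0, -wx],
                     [-wy, wx,  0.0]])


def error_transform(delta):
    """First-order transform for delta = [dx, dy, dz, rx, ry, rz] (mm, mm, mm, rad, rad, rad).

    The rotation block is I + skew(r), which is only approximately orthonormal,
    so this model is meaningful for small angles only.
    """
    delta = np.asarray(delta, dtype=float)
    T = np.eye(4)
    T[:3, :3] += skew(delta[3:])
    T[:3, 3] = delta[:3]
    return T


def pose_error(T_nominal, T_actual):
    """Recover delta such that T_actual ~= T_nominal @ error_transform(delta)."""
    D = np.linalg.inv(T_nominal) @ T_actual
    S = 0.5 * (D[:3, :3] - D[:3, :3].T)        # skew-symmetric part ~= skew(r)
    r = np.array([S[2, 1], S[0, 2], S[1, 0]])   # "vee" operator: skew(r) -> r
    return np.concatenate([D[:3, 3], r])
```

The recovery in `pose_error` is exact for transforms built with `error_transform`; for a genuine rotation matrix it holds only to first order in the angles, so it is adequate only when the angular components are small.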

| Method | Robot | Hand–Eye Matrix | Camera | (mm) | (mm) | (mm) |
|---|---|---|---|---|---|---|
| 1 | √ | √ | √ | 0.2629 | 0.2918 | 0.0112 |
| 2 | √ | √ | × | 5.6545 | 5.9715 | 0.1831 |
| 3 | × | × | × | 2.5228 | 3.5615 | 0.5317 |
| 4 | √ | √ | × | 134.8228 | 140.9125 | 0.6630 |
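
The comparison table marks which components (robot, hand–eye matrix, camera) each method calibrates and reports three error figures in millimetres whose labels are missing. If they are summary statistics of point-position error, for example mean, maximum and standard deviation (an assumption not confirmed by the text), they could be computed from corresponding measured and reference points as in this sketch; the function name `position_error_stats` is hypothetical:

```python
import numpy as np


def position_error_stats(measured, reference):
    """Return (mean, max, std) of Euclidean point errors, in the input units (e.g. mm).

    measured, reference: arrays of shape (N, 3) whose rows correspond.
    Reporting exactly these three statistics is an illustrative assumption.
    """
    measured = np.asarray(measured, dtype=float)
    reference = np.asarray(reference, dtype=float)
    if measured.shape != reference.shape or measured.ndim != 2 or measured.shape[1] != 3:
        raise ValueError("expected two (N, 3) arrays of corresponding points")
    errors = np.linalg.norm(measured - reference, axis=1)  # per-point distance
    return errors.mean(), errors.max(), errors.std()       # population std (ddof=0)
```

Whether the original figures use the population or the sample standard deviation is not stated; `errors.std(ddof=1)` would give the sample version.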

| (mm) | (mm) | (mm) | (rad) | (rad) | (rad) |
|---|---|---|---|---|---|
| 0.1416 | −0.2921 | −1.35 × 10 | −1.56 × 10 | 6.66 × 10 | 3.26 × 10 |
| 0.5372 | −0.1758 | −0.0512 | 2.32 × 10 | −1.58 × 10 | 4.73 × 10 |
| 0.3549 | 0.1113 | −0.0251 | 2.46 × 10 | −1.28 × 10 | 1.25 × 10 |
| −0.1963 | 0.2188 | 0.6091 | 4.84 × 10 | −5.40 × 10 | 9.96 × 10 |
| 0.1579 | −0.0294 | −0.2141 | 8.81 × 10 | 6.02 × 10 | 1.73 × 10 |
| 0.0977 | 0.0743 | 0.0969 | −1.78 × 10 | 3.44 × 10 | 4.60 × 10 |
| −0.1723 | −0.1967 | 0.3857 | −0.0121 | 0.0245 | 0.0157 |
| 7.02 × 10 | 4.32 × 10 | 7.58 × 10 |

| Method | Robot | Hand–Eye Matrix | Camera | (mm) | (mm) | (mm) |
|---|---|---|---|---|---|---|
| 1 | √ | √ | √ | 0.1232 | 0.3137 | 0.0957 |
| 2 | √ | √ | × | 0.1488 | 0.3675 | 0.1045 |
| 3 | × | × | × | 1.5457 | 4.6076 | 1.3503 |
| 4 | √ | √ | × | 0.1933 | 0.4703 | 0.1414 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, L.; Zang, X.; Ding, G.; Wang, C.; Zhang, X.; Liu, Y.; Zhao, J. Joint Calibration Method for Robot Measurement Systems. Sensors 2023, 23, 7447. https://doi.org/10.3390/s23177447

Chicago/Turabian Style: Wu, Lei, Xizhe Zang, Guanwen Ding, Chao Wang, Xuehe Zhang, Yubin Liu, and Jie Zhao. 2023. "Joint Calibration Method for Robot Measurement Systems." Sensors 23, no. 17: 7447. https://doi.org/10.3390/s23177447
