Abstract

A global health emergency resulted from the COVID-19 pandemic. Image recognition techniques are a useful tool for limiting the spread of the pandemic; indeed, the World Health Organization (WHO) recommends the use of face masks in public places as a form of protection against contagion. Hence, innovative systems and algorithms were deployed to rapidly screen a large number of people with faces covered by masks. In this article, we analyze the current state of research and future directions in algorithms and systems for masked-face recognition. First, the paper discusses the importance and applications of facial and face mask recognition, introducing the main approaches. Afterward, we review the recent facial recognition frameworks and systems based on Convolutional Neural Networks, deep learning, machine learning, and MobileNet techniques. In detail, we analyze and critically discuss recent scientific works and systems which employ machine learning (ML) and deep learning (DL) tools for promptly recognizing masked faces. Also, Internet of Things (IoT)-based sensors implementing ML and DL algorithms are described, which keep track of the number of persons wearing face masks and notify the proper authorities. Afterward, the main challenges and open issues that should be solved in future studies and systems are discussed. Finally, comparative analysis and discussion are reported, providing useful insights for outlining the next generation of face recognition systems.

1. Introduction

Since the 1990s, image recognition has become a prominent topic, exploiting artificial intelligence (AI) and technological advancements. Face and object recognition is a common technique in computer vision and arguably its most fundamental aspect [1,2].

Most facial and object detection systems rely on traditional machine learning (ML) techniques; a balance learning framework was proposed in [3] to enhance the training of the networks, resulting in better performance than previous approaches.

Public and private organizations utilize facial recognition technology to identify people and regulate admission to airports, schools, and offices. Because of their apparent accuracy in identifying people, face recognition systems have been used in healthcare to improve the security of patient condition information, sanitation through contactless applications, staff access point security, and patient and worker data collection [4].

In the last year, the applicability of masked-face recognition has expanded thanks to Internet of Things (IoT) devices that recognize and identify individuals wearing masks. With the widespread adoption of face masks in response to the COVID-19 pandemic, traditional facial recognition systems that rely on full-face visibility have faced challenges in accurately identifying individuals [5]. Masked-face recognition in IoT involves combining IoT devices, such as surveillance cameras or smart doorbells, with specialized algorithms and technologies to recognize and verify individuals even when wearing masks [6]. Furthermore, different face mask recognition systems were deployed during the COVID-19 health emergency to verify whether individuals correctly wore masks in public places.

Challenges in masked-face recognition include dealing with variations in mask types, lighting conditions, and occlusions caused by masks. Technological advancements, such as improved algorithms and hardware, can help overcome these challenges and enhance the accuracy of masked-face recognition in IoT applications. However, it is important to consider privacy and ethical concerns associated with facial recognition technology and ensure proper safeguards are in place to protect individuals’ rights and data.

The development of numerous digital technologies over the past decade has made it possible to employ them to fight the COVID-19 pandemic [7]. Artificial intelligence (AI) has recently been used to improve the identification of infection levels, as well as to locate and diagnose illnesses quickly.

In the late 1990s, the Bochum system, which used a Gabor filter to store face data and generated a grid of the face structure to link the characteristics, supplanted purely feature-based systems for facial recognition [8]. Elastic Bunch Graph Matching was developed in the mid-1990s to recover a face from an image using skin segmentation [9]. The algorithm was stable enough to identify individuals from less-than-ideal face views. It could also cope with obstacles to identification, such as mustaches, beards, new hairstyles, spectacles, and even sunglasses [9].

Real-time face identification from video became possible with the release of the Viola-Jones object detection framework for faces in 2001 [10]. Paul Viola and Michael Jones presented the first real-time frontal-view face detector by combining Haar-like features for object recognition in digital images with AdaBoost-based classifier training [11]. In 2015, the Viola-Jones algorithm was implemented on mobile devices and embedded systems using small, low-power detectors. As a result, the Viola-Jones method has enabled new possibilities in user interfaces and teleconferencing, as well as expanded the practical use of facial recognition systems [12].

Ukraine is identifying dead Russian personnel using the Clearview AI facial recognition platform developed in the United States. Ukraine has identified the relatives of 582 slain Russian servicemen after conducting 8600 searches. The Ukrainian army’s information technology volunteer unit uses such software to inform the families of dead soldiers about Russian actions in Ukraine [13]. A new generation of AI software tools has been implemented in the surveillance cameras of the Paris Metro to ensure that passengers are wearing masks.

Deep learning and computer vision techniques for detecting COVID-19 face masks can help predict pandemic incidence based on anonymized and unidentifiable statistics data. The face mask recognition models provide the following advantages [14]:

- Reduce the propagation of the COVID-19 pandemic.

- Precise improvement of the detection performance.

- High processing speed and seamless integration with surveillance cameras.

- They can be applied in schools, universities, and other institutions that monitor attendance using facial recognition. Consequently, administrators can readily determine if students, employees, and other visitors are wearing masks.

Research papers [15,16] show that significant advances in deep learning toward entity detection and recognition have occurred over time in numerous application domains. The detection of objects with deep learning and the early detection of a congestion control warning system on a bridge’s footsteps were extensively discussed.

Image reconstruction and facial recognition are now the subject of several investigations. However, the classification process based on machine learning (ML) and deep learning (DL) includes several steps that result in a final prediction to infer the input class based on extracted features [17].

Classification methods may be categorized into two types: supervised methods and unsupervised methods. ML learns to classify new inputs using supervised methods based on original labeled data, typically used to construct prediction models. According to [18], unsupervised ML uses unlabeled data to develop a model that predicts incoming inputs based on clustering algorithms.

The DeepMasknet framework was proposed in [19]; it can be applied for face mask recognition and masked-face detection. Furthermore, as shown in Figure 1, the authors created a large and distinct integrated mask detection and masked facial recognition (MDMFR) dataset to evaluate the effectiveness of the proposed method.

Figure 1.

Sample images contained in the MDMFR dataset [19].

The main contributions and novelties of the presented paper are as follows:

- A comprehensive overview of ML and DL algorithms and methods for face mask recognition and masked-face detection; in particular, operating modalities, advantages, and shortcomings of each method/algorithm are reported, along with application examples proposed in the scientific literature.

- A description of mobile networks, like MobileNetV1 and MobileNetV2, for face mask detection and masked-face recognition applications developed during the COVID-19 pandemic to quickly detect whether or not people are wearing a mask.

- A description of the main challenges and open issues for developing face mask detection systems, including precision, privacy, and improper use of private information.

- In-depth comparative analyses of algorithms and models reported in the scientific literature to determine features and perspectives of innovative ML and DL tools for recognizing people wearing masks to fight future pandemics.

The remainder of the paper is organized as follows: Section 2 discusses ML systems to recognize masked faces; then, Section 3 introduces DL methods for identifying masked faces, briefly discussing several scientific works and systems based on each algorithm and method. Furthermore, Section 4 presents examples of mobile networks developed for masked-face detection, like MobileNetV1 and MobileNetV2. Moreover, Section 5 presents IoT-based sensors for masked-face recognition developed during the COVID-19 pandemic. Section 6 reports challenges and open issues in developing systems and algorithms for masked-face recognition. Finally, Section 7 presents comparative analyses of systems and models reported in the scientific literature, comparing them from the performance perspective.

Paper Selection and Bibliometric Indexes

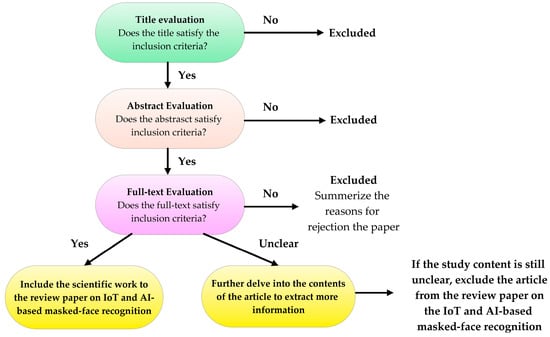

An essential step in writing this review concerns the selection of the discussed and analyzed papers according to set inclusion and exclusion rules. In defining these rules, numerous characteristics of the candidate documents were considered in detail, such as their applicability to the themes they address, their relevance, their publication date, and whether they overlapped with other chosen papers. The selection was carried out according to the procedure shown in Figure 2: a three-step analysis starting with the title, moving on to the abstract, and finishing with a reading of the whole text.

Figure 2.

Workflow representing the inclusion/exclusion process for selecting the papers included in the presented review work.

In this way, 67 scientific works were selected and included in the presented review paper, organized into 20 review papers, 42 articles, 4 book chapters, and 1 website.

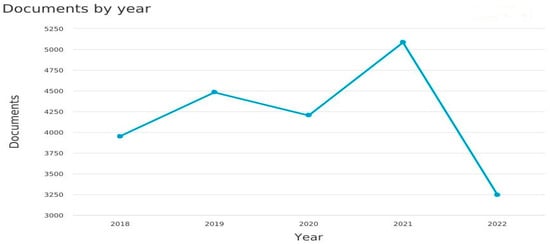

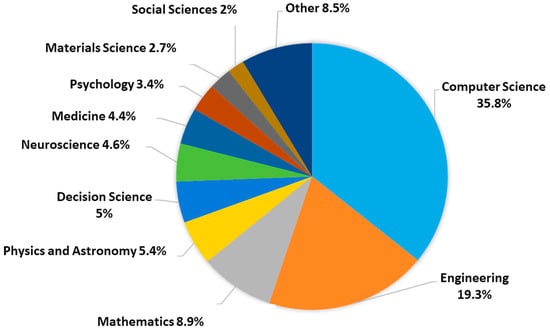

Exploratory and bibliometric analysis was performed, using Scopus as a data source to investigate statistics related to scientific works on AI-based face recognition applications. Using the analysis tools provided by the Scopus platform, the obtained data were plotted in Figure 3 to illustrate that these topics have received more attention recently, particularly from 2018 to 2022, while Figure 4 shows how the publications are distributed across computer science, engineering, and other subject areas.

Figure 3.

Trend of the face recognition-related documents by year.

Figure 4.

Face recognition-related documents classified by area.

Referring to Figure 3, the number of documents started at 3950 in 2018, peaked in 2021 (approximately 5084), and dropped to its lowest value in 2022 (about 3247).

As shown in Figure 4, more than 35.8% of documents are in the computer sciences field, 19.35% are in the engineering field, and 44.9% are distributed across other disciplines.

2. Machine Learning Prototypes Used to Identify Face Masks

Machine learning is a branch of artificial intelligence (AI) and computer science that uses data and algorithms to mimic human learning processes and progressively increase accuracy. It can be used to identify relationships in a dataset through unsupervised, supervised, or hybrid learning, with supervised and unsupervised learning being the two basic approaches. Supervised learning involves training a machine-learning model on labeled data, whereas unsupervised learning involves training a model on unlabeled data without predefined target labels or output values, aiming to discover patterns, structures, or relationships within the data without explicit guidance. The two strategies differ in various ways, and there are some situations when one works better than the other. Using open-source and local data, ref. [20] summarizes the ML models and how they were trained.
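To make the distinction concrete, the following minimal sketch contrasts the two strategies on a toy one-dimensional feature. The feature, the data values, and the function names are assumptions introduced only for illustration; they are not taken from [20] or from any of the reviewed systems.

```python
# Toy 1-D feature per image, e.g. the fraction of the lower face that is
# covered (a hypothetical feature chosen only for illustration).
features = [0.1, 0.2, 0.3, 0.8, 0.9, 1.0]
labels = ["without mask"] * 3 + ["with mask"] * 3


def fit_centroids(points, targets):
    """Supervised: use the labels to compute one mean value per class."""
    grouped = {}
    for x, y in zip(points, targets):
        grouped.setdefault(y, []).append(x)
    return {y: sum(xs) / len(xs) for y, xs in grouped.items()}


def predict(centroids, x):
    """Assign x to the class whose centroid is closest."""
    return min(centroids, key=lambda y: abs(x - centroids[y]))


def two_means(points, iterations=10):
    """Unsupervised: 1-D k-means with k = 2; the labels are never used."""
    c0, c1 = min(points), max(points)
    for _ in range(iterations):
        g0 = [x for x in points if abs(x - c0) <= abs(x - c1)]
        g1 = [x for x in points if abs(x - c0) > abs(x - c1)]
        c0, c1 = sum(g0) / len(g0), sum(g1) / len(g1)
    return c0, c1


centroids = fit_centroids(features, labels)
print(predict(centroids, 0.75))  # class assigned to a new, unseen sample
print(two_means(features))       # two cluster centers found without labels
```

In this toy case both strategies recover the same two groups, but only the supervised model can name them, because the cluster centers carry no class label.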

Machine-learning algorithms can be used to identify face masks in various ways. Common approaches followed are [21,22]:

- Image classification: ML models can be trained to classify images based on whether a person is wearing a face mask or not. This approach involves collecting a dataset of labeled images, where each image is categorized as either “with mask” or “without mask”. Using this dataset, a model can be trained to recognize patterns and features that distinguish between the two categories. Common algorithms for image classification are CNNs, SVM, Random Forest (RF), K-Nearest Neighbors (KNN), Naïve Bayes, etc.

- Object Detection: Object detection techniques can be employed to locate and classify face masks within an image or video. These models can identify the presence and position of face masks in real-time applications. Currently, for this application, several deep-learning techniques are applicable, like CNNs (e.g., R-CNN (Region-based CNN), Fast R-CNN, Faster R-CNN, YOLO (You Only Look Once), SSD (Single Shot MultiBox Detector), EfficientDet, etc.), which are discussed in Section 3.

- Facial Landmark Detection: Machine-learning models can also be trained to detect facial landmarks, such as the nose, mouth, and eyes, to determine if a face mask is properly worn. By analyzing the spatial relationships between these landmarks, the model can infer the presence and alignment of a face mask. Techniques like the Histogram of Oriented Gradients (HOG) combined with SVM or more modern methods like facial landmark detectors based on deep learning architectures (e.g., OpenPose, DLIB) can be utilized.

In [23], the authors presented a hybrid deep transfer-learning model for identifying face masks that combines various ML models, including Support Vector Machines (SVM), decision trees, and ensemble techniques. The model used ResNet-50 for feature extraction and three classifiers (i.e., SVM, decision trees, and ensemble approaches). Logistic regression, the K-Nearest Neighbors algorithm, and linear regression are examples of base learners used by ensemble methods, which construct M classifiers and train each before combining and averaging their outputs. The accuracy was 99.64% on the Real-World Masked Face Dataset (RMFD), 99.48% on the simulated masked-face dataset, and 100% on the Labeled Faces in the Wild (LFW) dataset.

SVM is a common classifier for medical applications; it is a supervised machine-learning technique for classification and regression problems. This algorithm suits linear or nonlinear classification, regression, and outlier detection. In detail, SVMs can be used for various tasks, including text classification, image classification, spam detection, handwriting identification, gene expression analysis, face detection, and anomaly detection. SVM is adaptable and powerful in various applications because it can handle high-dimensional data and nonlinear relationships. Nevertheless, its primary application is for classification problems, particularly those involving two classes (binary classification). It employs a separating line (for two-dimensional data) or, more generally, a hyperplane to determine to which class new unlabeled data belongs during testing [24]. SVM uses the training subset of the data and the corresponding labels to build the line or hyperplane that best separates the classes.
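As an illustration of how a separating hyperplane can be learned, the sketch below trains a linear SVM on a toy two-dimensional dataset by subgradient descent on the regularized hinge loss. This is only one simple training scheme, chosen here for brevity and not attributed to [24]; the data points, the hyperparameters, and the function names are assumptions.

```python
def train_linear_svm(samples, labels, epochs=300, lr=0.05, reg=0.01):
    """Fit the hyperplane w.x + b = 0 by subgradient descent on the hinge loss."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):  # y is +1 or -1
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            # Regularization step: shrink the weights slightly.
            w = [wi * (1.0 - lr * reg) for wi in w]
            if margin < 1.0:
                # The sample is inside the margin or misclassified:
                # move the hyperplane toward classifying it correctly.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b


def classify(w, b, x):
    """Return +1 or -1 depending on the side of the hyperplane x falls on."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


# Hypothetical, linearly separable 2-D features (+1: mask, -1: no mask).
samples = [[2.0, 2.0], [3.0, 3.0], [2.5, 3.0], [0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
labels = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(samples, labels)
print([classify(w, b, x) for x in samples])
```

For nonlinear problems, practical SVM implementations replace the dot product with a kernel function; the linear case is shown here because it makes the role of the hyperplane explicit.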

In [25], the authors introduced a hybrid face mask identification model that combines deep learning, handcrafted feature extractors, and traditional machine learning classifiers. In particular, the proposed approach combines a Random Forest classifier on a hybrid feature set created by CNN and a handcrafted feature extractor from the input pictures to distinguish masks from faces. Principal component analysis, or PCA, is additionally employed for feature selection. Although the system has a test accuracy of about 62% for a random forest with 100 trees, this accuracy could be improved by expanding the training data set and adding historical data sets that contain localized information that may be useful concerning a specific geographic location.

Ensemble methods in machine learning are techniques that combine multiple models to improve overall prediction performance and generalization. The idea behind ensemble methods is that by combining diverse models, their weaknesses can be compensated, resulting in a more robust and accurate prediction. Ensemble methods are widely used in various machine learning tasks and have shown to be highly effective in many real-world applications. Popular ensemble methods include Bagging (Bootstrap Aggregating), Random Forest, Boosting, and Stacking. There are three phases of the ensemble method:

- Create M classifiers.

- Train each individual classifier.

- Combine the M classifiers and calculate their average output.
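The three phases listed above can be sketched as follows. The example is a deliberately small, deterministic variant of bagging: each of the M classifiers is a one-threshold decision stump trained on a different subset of a toy one-dimensional dataset. The data, the subset rule, and all names are assumptions made for illustration only.

```python
def fit_stump(xs, ys):
    """Return the threshold t with the fewest training errors for 'x > t -> 1'."""
    best_t, best_err = None, None
    for t in sorted(set(xs)):
        err = sum((x > t) != bool(y) for x, y in zip(xs, ys))
        if best_err is None or err < best_err:
            best_t, best_err = t, err
    return best_t


def build_ensemble(data, m):
    """Phases 1 and 2: create M stumps and train each on its own subset."""
    thresholds = []
    for i in range(m):
        # Deterministic stand-in for bootstrap sampling: drop every m-th sample.
        subset = [(x, y) for j, (x, y) in enumerate(data) if j % m != i]
        xs, ys = zip(*subset)
        thresholds.append(fit_stump(xs, ys))
    return thresholds


def ensemble_predict(thresholds, x):
    """Phase 3: average the M outputs and threshold the average at 0.5."""
    average = sum(1 if x > t else 0 for t in thresholds) / len(thresholds)
    return 1 if average >= 0.5 else 0


# Hypothetical toy data: (feature value, label), label 1 meaning "with mask".
data = [(0.1, 0), (0.2, 0), (0.3, 0), (0.4, 0),
        (0.6, 1), (0.7, 1), (0.8, 1), (0.9, 1)]

stumps = build_ensemble(data, m=3)
print(stumps)
print(ensemble_predict(stumps, 0.65), ensemble_predict(stumps, 0.15))
```

Real bagging draws the subsets at random with replacement; the fixed subsets used here only keep the example reproducible.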

Datasets for Developing Face Mask Recognition Algorithms

Datasets play a crucial role in developing and training face mask recognition algorithms. It is important to note that the dataset’s quality, diversity, and size directly influence face mask recognition algorithms’ performance and generalization capabilities. Collecting and curating high-quality datasets encompassing a wide range of real-world scenarios is crucial for developing effective and reliable algorithms. Additionally, ongoing efforts to ensure the inclusiveness and fairness of datasets help minimize biases and disparities in algorithm performance. The main publicly available datasets for developing face mask recognition algorithms are summarized in Table 1.

Table 1.

Table summarizing the main datasets to develop face mask recognition algorithms.

In addition to what was previously reported, Masked Facial Recognition includes bounding boxes in the PASCAL VOC format for its 853 photos. The pictures are classified into three different classes: “with a mask”, “without a mask”, and “wrongly worn”. Similarly, the Moxa3K dataset comprises images taken during the epidemic in Russia, Italy, China, and India. The dataset contains 3000 photos in total, showing 9161 faces without masks and 3015 faces with masks. Furthermore, the Real-World Masked Face Dataset contains two data sets: (I) A dataset for masked-face recognition in the real world, obtained by crawling images from the web; after cleaning and labeling, it has 90,000 regular faces and 5000 masked faces representing 525 individuals. (II) A simulated masked-face recognition dataset comprising 500,000 masked faces from 10,000 people.
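For readers unfamiliar with the PASCAL VOC annotation format mentioned above, the following sketch reads the class label and bounding box of each annotated object from a VOC-style XML string using only the Python standard library. The sample annotation, its file name, and the class names `with_mask` and `without_mask` are illustrative assumptions, not entries copied from any of the datasets.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation in the PASCAL VOC layout (values are made up).
SAMPLE = """<annotation>
  <filename>example.png</filename>
  <object>
    <name>with_mask</name>
    <bndbox><xmin>79</xmin><ymin>105</ymin><xmax>109</xmax><ymax>142</ymax></bndbox>
  </object>
  <object>
    <name>without_mask</name>
    <bndbox><xmin>185</xmin><ymin>100</ymin><xmax>226</xmax><ymax>144</ymax></bndbox>
  </object>
</annotation>"""


def read_voc_boxes(xml_text):
    """Return a list of (label, (xmin, ymin, xmax, ymax)) tuples."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.findall("object"):
        label = obj.findtext("name")
        bndbox = obj.find("bndbox")
        coords = tuple(int(bndbox.findtext(tag))
                       for tag in ("xmin", "ymin", "xmax", "ymax"))
        boxes.append((label, coords))
    return boxes


boxes = read_voc_boxes(SAMPLE)
for label, coords in boxes:
    print(label, coords)
```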

Also, the LFW (Labeled Faces in the Wild) Simulated Masked Face Dataset is derived from the LFW dataset; the latter consists of images of famous people gathered from the web. The SMFRD includes the same photos as the LFW dataset, to which simulated masks have been applied. The dataset consists of 13,117 faces of 5713 people.

As the previous table shows, the MaskedFace-Net dataset is one of the largest datasets reported in the scientific literature, including images of people with and without face masks of different genders, ages, and ethnicities [26]. This dataset enables the accurate training and testing of machine-learning algorithms, ensuring a high degree of generalization.

3. Deep Learning (DL) Techniques Used to Identify Face Masks

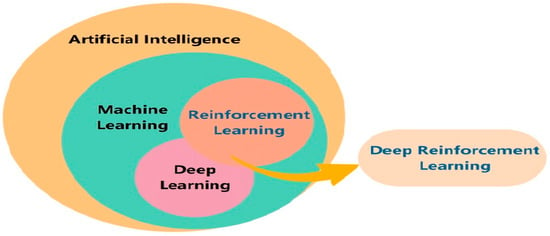

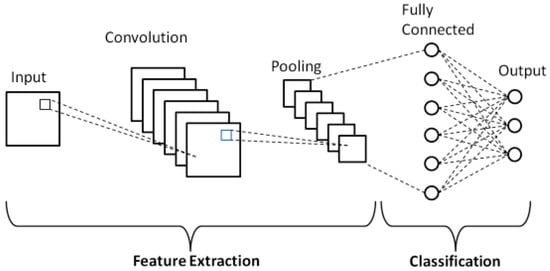

AI comprises a variety of technologies designed to mimic the reasoning functions and intelligent behavior of humans. In recent years, DL has been gaining popularity; DL is a subfield of machine learning that focuses on using Artificial Neural Networks to model and solve complex problems. For instance, DL models can identify intricate patterns in images, text, audio, and other data types to generate precise analyses and forecasts. DL techniques can be used to automate processes that ordinarily require human intellect, such as transcribing audio into text or describing photographs. As a subfield of AI, ML relies on training algorithms to acquire knowledge and insight from a dataset (Figure 5) [37]. Traditional ML models perform well on well-structured problems but are limited when confronting unstructured or complex ones, which DL models are able to address. Drawing inspiration from biological neurons, DL models use numerous levels of representation to uncover the multidimensional and intrinsic relationships in data. DL techniques can extract significant relationships and dependencies from unstructured or unlabeled information by developing deep hierarchical features from the dataset [38].

Figure 5.

The relation between AI, ML, and DL [37].

The term deep neural networks (DNNs) is occasionally used to describe technologies such as SE-YOLOv3, a multi-scale object detection network that uses a feature extraction network and multiple detection heads to make predictions at multiple scales. DL techniques comprise multilayered neural networks in which one or more hidden layers are linked together to form a network capable of learning complex structures at a high level of abstraction [39,40].

You Only Look Once (YOLO), ResNet-50, CNN (illustrated in the subsection “CNN for Face Mask Recognition”), and Region-based CNN (R-CNN), which extracts a significant number of region proposals from the input image and identifies their classes and bounding boxes, were considered promising in identifying face masks [41,42]. Also, in [42], DL and ML models were effectively applied to identify COVID-19 from raw data obtained from medical IoT devices. These are only a few examples of the many areas where DL has been successfully applied.

Facial recognition technologies are an attractive alternative to systems that require physical contact, such as contact-based biometrics or entering a password via a keyboard, because they do not require physical interaction. Nevertheless, using face masks in these systems has presented a significant challenge for artificial vision [43], as half of the face is obscured during facial identification, losing vital information. This issue justifies the need for algorithms that can identify a person wearing a face mask [44].

CNN for Face Mask Recognition

Convolutional neural networks, often called CNNs or ConvNets, are a subclass of neural networks that are especially effective in processing input with a grid-like architecture, like images [45]. A digital image is a binary representation of visual data. Each pixel, arranged in a grid-like pattern, has a pixel value that specifies how bright and colorful it should be. Similarly to the biological vision system, each neuron in a CNN processes data only in its receptive field. The CNN arranges layers to detect simpler patterns (e.g., lines, curves, etc.) first, followed by complex patterns, like faces and objects. CNNs are made up of a variety of building blocks, including convolution layers, pooling layers, and fully connected layers. The convolution layer is the core component of the CNN. This layer computes a dot product between two matrices: the restricted region of the receptive field and the kernel, a set of learnable parameters. The weight sharing used by CNNs significantly reduces the number of parameters that must be learned. Translation invariance is also promoted by pooling layers and by the increasing receptive field sizes of neurons in subsequent convolutional layers. The backpropagation process iteratively optimizes network weights and biases, using the gradient of the loss function with respect to the network’s parameters to minimize the loss [45,46]. In [47], a framework for acquiring distinct features associated with face mask-wearing conditions was proposed. As previously introduced, CNNs are a subset of so-called DL approaches specialized for different problem typologies, like action recognition, inverse imaging problems, and image classification [48,49,50,51].
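The dot product between a receptive field and a kernel can be illustrated with a few lines of plain Python. The sketch below slides a 2 × 2 kernel over a 4 × 4 image with stride 1 and no padding (strictly speaking a cross-correlation, which is what most DL libraries compute under the name convolution). The image and the hand-picked vertical-edge kernel are assumptions chosen for illustration; in a CNN the kernel values would be learned.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Dot product between the receptive field at (i, j) and the kernel.
            row.append(sum(image[i + a][j + b] * kernel[a][b]
                           for a in range(kh) for b in range(kw)))
        output.append(row)
    return output


# Toy image: dark left half, bright right half.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
# Hand-picked kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = conv2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The same four kernel weights are reused at every position, which is the weight sharing that keeps the number of learnable parameters small.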

According to [52,53,54], CNN technologies have been adapted to the needs of humanity over time, resulting in applications in numerous application fields, ranging from agriculture to the military and medicine.

In research settings, neural network analysis has also been applied to dental images for diagnostic purposes. The usefulness and safety of these methods should be demonstrated using more precise, replicable, and comparable methods [55].

Although medical imaging modalities such as computed tomography (CT) scans and thoracic X-rays have been used for a long time, they have recently been focused on COVID-19-related applications. A review and discussion of CNN for identifying infected tissues in COVID-19 patients using images obtained from various medical imaging systems can be found in [56,57,58].

Due to recent advancements in CNN architectures for object detection, numerous CNN-based models have demonstrated exceptional performance in detecting face masks. CNN-based models follow the design of Artificial Neural Networks (ANNs). The classifier accumulates and processes hierarchical features extracted from image data. Thus, labels are assigned to the input images, and then automatic training is performed, as described in [44,59].

A CNN takes the input image as its first layer, followed by convolutional layers, pooling layers, and fully connected layers. The central component is the convolutional layer, which convolves the input image with learnable filters and extracts the resulting feature maps. Each filter is made up of neurons designated to identify features in the layer inputs. Convolution learns visual attributes from small squares of input data, preserving the spatial relationship between pixels. The pooling layer reduces the number of neurons by summarizing each small rectangular receptive area of the preceding convolutional layer. As proposed in [60], pooling and convolutional layers are responsible for feature extraction (Figure 6).

Figure 6.

General structure of Convolutional Neural Networks [60].
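As a small illustration of the pooling step, the sketch below applies 2 × 2 max pooling with stride 2 to a 4 × 4 feature map, keeping only the strongest response in each block. Max pooling is one common choice among several, and the feature-map values are made up for the example.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each block."""
    return [[max(feature_map[i + a][j + b]
                 for a in range(size) for b in range(size))
             for j in range(0, len(feature_map[0]) - size + 1, size)]
            for i in range(0, len(feature_map) - size + 1, size)]


# Hypothetical 4 x 4 feature map produced by a convolutional layer.
fmap = [[1, 3, 2, 0],
        [4, 2, 1, 1],
        [0, 1, 5, 6],
        [2, 2, 7, 8]]

pooled = max_pool2d(fmap)
print(pooled)  # [[4, 2], [2, 8]]
```

The 16 input values are reduced to 4, which is how pooling shrinks the number of neurons passed to the following layers.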

As previously discussed, CNNs are widely applied in image recognition applications; for instance, the research paper [60] presents a comprehensive solution for image recognition based on CNN, ensuring good classification accuracy (99.5%).

In Ref. [61], a drone was designed for mask detection and social distance monitoring, using a Raspberry Pi 4 to capture images and a convolutional neural network (Faster R-CNN) model to detect unmasked persons. It then alerts people via a speaker to maintain social distance and sends the details of the detected people to the authorities and the nearest police station.

Using the VGG-16 CNN model, Ref. [62] implements a detection method with a 96% accuracy rate. Similarly, in [63], the authors presented the SSDMNV2 model based on the MobileNetV2 architecture, with a 92.64% experimental accuracy.

In [64], a 5 × 5 convolution was factorized into two 3 × 3 convolutions. InceptionV3 is a pre-trained model that utilizes transfer learning, transferring the knowledge of the trained neural network to the new model in the form of its parameter weights. It employs a 48-layer CNN design. Concerning COVID-19 face mask recognition, Ref. [65] proposed a model employing InceptionV3 transfer learning. The proposed algorithm reached a training accuracy of 99.92% and a test accuracy of 100% using the Simulated Masked Face Dataset (SMFD).
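The benefit of replacing one 5 × 5 filter with two stacked 3 × 3 filters can be checked with simple arithmetic: both options cover the same 5 × 5 receptive field, but the stacked version needs 18 weights instead of 25 per input/output channel pair. The helper names below are assumptions, and the count ignores biases and assumes stride 1 with the same number of channels in every layer.

```python
def stacked_receptive_field(kernel_sizes):
    """Receptive field of stacked stride-1 convolutions."""
    field = 1
    for k in kernel_sizes:
        field += k - 1
    return field


def conv_weights(kernel_sizes, channels=1):
    """Weights needed (biases ignored) when every layer keeps `channels` channels."""
    return sum(k * k * channels * channels for k in kernel_sizes)


single = conv_weights([5])        # one 5 x 5 convolution
stacked = conv_weights([3, 3])    # two stacked 3 x 3 convolutions
print(stacked_receptive_field([5]), stacked_receptive_field([3, 3]))  # 5 5
print(single, stacked)            # 25 18
print(1 - stacked / single)       # relative saving, about 0.28
```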

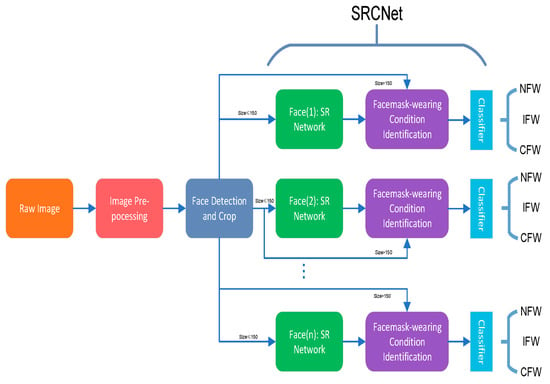

The authors of [66] propose an automatic face mask recognition method constituted by an image Super-Resolution and Classifier Network (SRCNet), evaluating the performance of a three-category classification algorithm using unrestricted 2D face pictures. The SRCNet was used to identify if masks were worn (Figure 7). The obtained test results demonstrated that the proposed method reached an improved accuracy (98.70%) compared to conventional image classification techniques.

Figure 7.

Block diagram of the face recognition algorithm presented in [66], which relies on the SRCNet and face mask-wearing condition identification process.

In [67], a face mask identification system employing ocular data and ImageNet-trained CNN was presented. The accuracy obtained ranged between 90 and 95%.

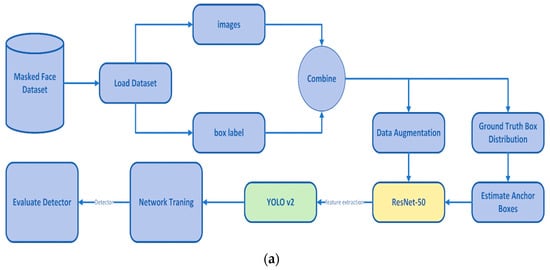

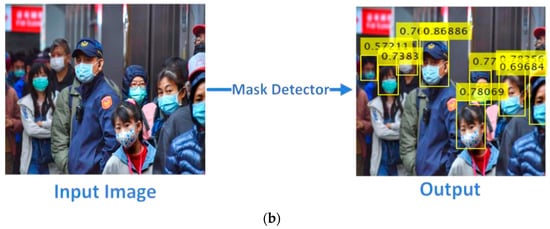

ResNet is an acronym for Residual Networks. ResNet’s benefit was that it enabled the successful training of DNNs with 150 or more layers. Before ResNet, the vanishing gradient problem made training very deep DNNs extremely difficult. The Residual Network employs the concept of skip connections to address the vanishing gradient problem. This accomplishment was made possible by ResNet’s skip connection feature; as a result, the gradients do not shrink to a negligible level as they propagate back through the layers [68].
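A toy calculation can show why the skip connection helps. In the sketch below, each layer is reduced to the scalar function F(x) = w·x, so the gradient through a plain stack is the product of the w values, while a residual layer y = x + F(x) contributes a factor 1 + w instead. The scalar model, the weight value, and the depth are simplifying assumptions made for illustration and are not taken from [68].

```python
def residual_block(x, f):
    """Forward pass of a residual block: output = input + F(input)."""
    return x + f(x)


def gradient_through_stack(layer_weight, depth, residual):
    """Gradient of the output w.r.t. the input for `depth` scalar layers F(x) = w * x."""
    grad = 1.0
    for _ in range(depth):
        local = layer_weight  # derivative of F(x) = w * x
        # With a skip connection the local derivative becomes 1 + w.
        grad *= (1.0 + local) if residual else local
    return grad


plain = gradient_through_stack(0.01, depth=50, residual=False)
skip = gradient_through_stack(0.01, depth=50, residual=True)
print(plain)  # vanishingly small
print(skip)   # stays on the order of 1
print(residual_block(2.0, lambda x: 0.0))  # with F = 0 the block is the identity
```

The identity path guarantees that some gradient always reaches the early layers, even when the learned transformation F contributes very little.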

ResNet-50 is a 50-layer CNN applied to extract features. This architecture has been successfully implemented in various disciplines, such as image classification and object recognition [69]. The authors of [70] employed the ResNet-50 model for feature extraction, while YOLOv2 was used to detect medical face masks, achieving an 81% detection precision (Figure 8a); also, Figure 8b reports the outcomes of the proposed face mask detector, showing a bounding box for each detected mask and the corresponding score.

Figure 8.

Face recognition model including ResNet50 and YOLO v2 model presented in [70] (a); the outcome of the proposed masked-face detector (b).

A detection system for recognizing unmasked individuals was proposed in [71]. The proposed method comprises three components: the first (ResNet-50) used a Feature Pyramid Network (FPN), the second included a Multi-Task CNN (MT-CNN), and the third was a CNN classifier to identify masked and unmasked faces. The proposed method was implemented on a mobile robot (Thor) and evaluated using a dataset of recordings captured by the robot in public spaces. The tests demonstrated that the proposed system obtained an F1 score of 99.2%.
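Since several of the reviewed works report F1 scores rather than plain accuracy, the following sketch recalls how the F1 score is computed from the counts of true positives, false positives, and false negatives. The counts used in the example are hypothetical and are not results from [71].

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and their harmonic mean (the F1 score)."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical detector: 90 masks found correctly, 10 false alarms, 30 missed.
precision, recall, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
print(precision, recall, f1)
```

Unlike accuracy, the F1 score ignores true negatives, which makes it more informative when one class (for example, unmasked faces) is rare.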

In [72], an effective method based on computer vision was proposed, with the authors focusing on real-time automated surveillance of both social distance and face mask perspectives in public spaces. If these conditions are violated, the system will send an alert signal to the authorities.

A model that can detect people without masks using a facial detection system was proposed in [73]; the collected data were integrated with a public recognition database to compile information about the suspect, and a text message was sent to their mobile phone.

In [74], a system was proposed that can identify individuals not wearing masks in a smart city network equipped with Closed-Circuit Television (CCTV) cameras to monitor public areas. The corresponding authority is notified via the city’s network when an unmasked person is detected. The authors trained a deep learning architecture using images of persons wearing and not wearing masks. The accuracy of the trained architecture was 98.7%.

In [75], a CNN-based architecture with multiple stages was proposed to identify the individuals not wearing masks. The model’s detection accuracy was 91.2%. A near real-time technique for automatically distinguishing face masks was proposed in [76]. The proposed architecture, in conjunction with CNN, obtained a 95.8% accuracy in recognition.

The dual-stage CNN model proposed in [77] could differentiate between mask-wearing and mask-free individuals and be integrated with pre-installed CCTV cameras. The detection precision of this model is 99.98%.

4. Mobile Networks for Face Mask Detection (MobileNetV1 and MobileNetV2)

In computer vision and deep learning, face mask recognition algorithms have seen a tremendous increase in popularity. The method proposed in [78] employed deep learning frameworks and libraries, such as TensorFlow, Keras, and OpenCV, to identify face masks in real time. The trained MobileNet model achieved an accuracy and an F1 score of 99.9%.

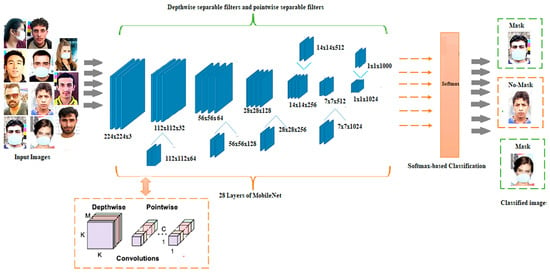

Convolutional neural networks such as MobileNet are specialized for embedded and mobile vision applications. They are built on depthwise separable convolutions, which yield lightweight deep neural networks with low latency on embedded and mobile devices.
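The saving brought by depthwise separable convolutions can be illustrated by counting weights. The following minimal sketch compares a standard convolution with its depthwise separable counterpart. The function names and the layer sizes (3 × 3 kernel, 32 input channels, 64 output channels) are illustrative assumptions, not values taken from the cited works, and bias terms are ignored.

```python
def standard_conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a standard 2D convolution (bias ignored)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise separable convolution (bias ignored)."""
    depthwise = kernel * kernel * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out            # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    k, c_in, c_out = 3, 32, 64  # hypothetical layer sizes
    std = standard_conv_params(k, c_in, c_out)
    dws = depthwise_separable_params(k, c_in, c_out)
    # prints: standard: 18432, depthwise separable: 2336, ratio: 0.127
    print(f"standard: {std}, depthwise separable: {dws}, ratio: {dws / std:.3f}")
```

For these sizes, the separable layer needs roughly one eighth of the weights, which is consistent with the lightweight behavior described above.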

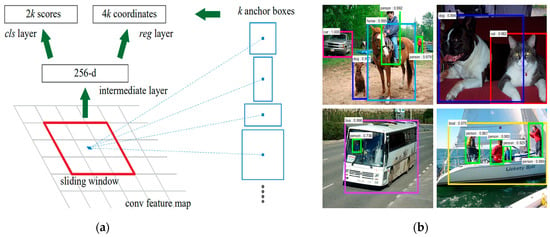

The MobileNets architecture proposed in [79] used depthwise separable convolutions to construct Lightweight Region Proposal Networks (RPNs), resulting in 73% accuracy on the PASCAL VOC 2007 trainval set (Figure 9).

Figure 9.

Diagram depicting the operating modalities of the proposed Region Proposal Network (RPN) (a) and example of application on PASCAL VOC 2007 test (b) [79].

The smaller footprint and faster performance of MobileNets made them suitable candidates for deep-learning models. However, MobileNets lacked adequate accuracy compared to other models, such as Accelerated R-CNN and InceptionV2 [80], which is a drawback of this type of framework.

In [81], a detection architecture employing MobileNetV2 for face mask recognition was proposed; the system prototype was developed and tested, demonstrating its correct operation in determining whether or not a person was wearing a mask. Since the proposed model is a lightweight CNN, it can be deployed in both mobile and general computer vision applications.

5. Face Mask Detection Sensors

Sensors play an essential role in the fight against COVID-19, as they are used in various ways, including detecting whether people wear face masks and screening for potential COVID-19 cases by measuring body temperature.

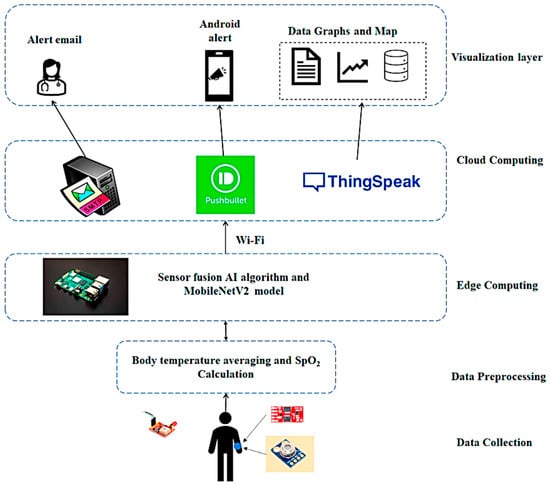

In [82], a novel Sensor Fusion (SF) method for detecting COVID-19 suspects was proposed. The proposed system combines the SF algorithm with the MobileNetV2 model for face mask detection, improving the prediction accuracy. An Arduino board is interfaced with an IR temperature sensor and a PPG (photoplethysmography) sensor to acquire body temperature and SpO2. The Arduino forwards the biophysical data to a Raspberry Pi board that runs the SF algorithm to detect COVID-19 suspects. MobileNetV2 also runs on the Raspberry Pi board to determine whether the mask is correctly positioned, using images acquired by a camera module (Figure 10). The MobileNetV2 model reached a 99.26% accuracy. Health data are continuously monitored and stored on a cloud server (ThingSpeak). When a COVID-19 suspect is identified, healthcare authorities are notified via email with the person’s GPS location.

Figure 10.

Architecture of the Intelligent IoT sensor presented in [82].
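The idea of fusing temperature and SpO2 readings into a single decision can be sketched as follows. The fusion rule of [82] is not detailed in this review, so the sketch substitutes a simple threshold rule. The class name, function name, and both thresholds are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Vitals:
    temperature_c: float  # body temperature from the IR sensor, in Celsius
    spo2_percent: float   # blood oxygen saturation from the PPG sensor


# Hypothetical thresholds, not taken from [82].
FEVER_THRESHOLD_C = 38.0
LOW_SPO2_THRESHOLD = 94.0


def is_suspect(v: Vitals) -> bool:
    """Flag a person when either reading crosses its threshold."""
    fever = v.temperature_c >= FEVER_THRESHOLD_C
    low_oxygen = v.spo2_percent < LOW_SPO2_THRESHOLD
    return fever or low_oxygen


if __name__ == "__main__":
    print(is_suspect(Vitals(temperature_c=36.6, spo2_percent=98.0)))
    print(is_suspect(Vitals(temperature_c=38.4, spo2_percent=97.0)))
```

A deployed system would likely weight or calibrate the two signals rather than apply fixed cut-offs; the sketch only shows where the two sensor streams meet.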

The Smart Screening and Disinfection Walkthrough Gate (SSDWG) was proposed in [83] to control the entrances to public buildings. The SSDWG is designed to perform rapid screening, which includes measuring temperature with a contactless sensor and preserving the record of infected individuals for increased control and monitoring. The proposed system utilized real-time deep-learning models to detect and classify face masks. This module implemented transfer learning with VGG-16, MobileNetV2, Inception v3, ResNet-50, and CNN models. The presented system achieved a precision of 99.81%.

In [84], the authors proposed an IoT-based system for COVID-19 indoor safety monitoring. It comprises a sensor-based temperature measurement subsystem, a computer vision subsystem for mask detection, and a Raspberry Pi-based social distancing controller. In detail, the non-contact sensor measures the person’s temperature; if the body temperature is higher than normal, the door is locked, and a message comprising the temperature value and location is sent to the server. The subsequent phase is mask detection, for which a CNN and a deep learning technique are combined. The face frame is resized, converted into an array, and pre-processed using the MobileNetV2 pre-processing routine. The trained model then classifies the pre-processed image, and the video frame is labeled with the subject’s mask-wearing status along with the prediction confidence as a percentage. The test results demonstrated that the computer vision subsystem reached 91% accuracy.
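The screening steps described above can be sketched in a few lines. This is an illustration under stated assumptions, not the implementation of [84]: the temperature limit, the 0.5 decision threshold, and all function names are hypothetical, and the scaling to [-1, 1] follows the convention of the Keras MobileNetV2 pre-processing, which is assumed here to match the one used by the authors.

```python
NORMAL_TEMP_LIMIT_C = 37.5  # hypothetical limit for "higher than normal"


def door_should_lock(temperature_c: float) -> bool:
    """Lock the door when the measured temperature exceeds the limit."""
    return temperature_c > NORMAL_TEMP_LIMIT_C


def scale_pixel(value: int) -> float:
    """Map an 8-bit pixel value from [0, 255] to [-1.0, 1.0]."""
    return value / 127.5 - 1.0


def label_frame(mask_probability: float) -> str:
    """Build the overlay text: predicted class plus confidence in percent."""
    if mask_probability >= 0.5:
        return f"Mask: {mask_probability * 100:.1f}%"
    return f"No Mask: {(1.0 - mask_probability) * 100:.1f}%"


if __name__ == "__main__":
    if door_should_lock(36.7):
        print("Door locked, temperature reported to server")
    else:
        # mask_probability would come from the classifier; 0.973 is made up
        print(label_frame(0.973))
```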

In [85], B. Varshini et al. proposed an IoT-enabled smart door that uses a machine-learning model for body temperature monitoring and face mask recognition. In detail, a real-time deep learning model running on a Raspberry Pi was used to detect face masks and count the individuals present at any given time. This model was based on a CNN built with the TensorFlow software library. The pictures used to train and test the model were collected from the internet; the dataset comprises 690 pictures with masks and 686 pictures without masks. The trained face mask detection model obtained a 97% accuracy.

Similarly, in [86], the authors introduced a face mask detection system operating on pictures and videos to fight the spread of the COVID-19 pandemic; furthermore, the system could monitor body temperatures to detect potentially infected people and automatically spray disinfectant. They explored many classifiers, including the Symbolic Classifier and Support Vector Machine (SVM). In detail, three approaches are used to classify histopathological pictures. The first technique, nuclei segmentation, denotes cellular alterations. The second technique deals with textural characteristics; the last technique relies on variations in color densities. The main feature that characterizes mitotic behavior is its shape. The feature set and cellular structure of each blob, namely the area, perimeter, solidity, and circularity, are used to extract the morphological differences. Then, the shape features that best characterize the behavior of mitosis are extracted. Detected nuclei are used to extract texture characteristics. Also, a custom CNN classification algorithm including several Neuro-Fuzzy layers was proposed for face mask detection. Fifty different image datasets were tested in various experiments to evaluate their performance. The test results demonstrated that the proposed CNN method reached 91.11% accuracy with a 7.24 s inference time.

Finally, a DWS-based MobileNet, a Depthwise Separable Convolution Neural Network, was introduced in [87] (Figure 11). Instead of using 2D convolution layers, the suggested network uses depth-wise separable convolution layers, ensuring fast training with fewer parameters.

Figure 11.

Architecture of masked-face recognition algorithm based on DWS-based MobileNet proposed in [87].

It comprises a 1 × 1 convolution output node, where the spatial convolution of each channel is carried out separately. A one-dimensional max pooling was applied to the output of the Rectified Linear Unit (ReLU) activation function, which is in turn fed by the output of the convolution layer. While the pooling layer’s filter size is fixed at 20 with a stride of 2, the first convolutional layer’s filter size and depth are both set to 60. The convolution layer’s output is flattened, with a stride of six, to form the input of the fully connected layer. A dropout method, in which neurons are randomly turned off during training, is used to prevent overfitting. The Moxa3K dataset was employed for this investigation, comprising 3000 photos, of which 2800 were used for training and 200 for testing. The test results indicated that the DWS-based model has a greater accuracy (93.21%) than SVM and CNN, using the AIZOO FACE MASKS dataset.
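The ReLU and one-dimensional max pooling stage can be sketched in plain Python. The window of 20 and the stride of 2 are the values quoted above; the input length of 60 and the sample values are assumptions made only to have something to run, and the function names are hypothetical.

```python
from typing import List


def relu(values: List[float]) -> List[float]:
    """Rectified Linear Unit: negative activations are set to zero."""
    return [max(0.0, v) for v in values]


def max_pool_1d(values: List[float], window: int, stride: int) -> List[float]:
    """Slide a window over the sequence and keep the maximum of each window."""
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    last_start = len(values) - window
    return [max(values[i:i + window]) for i in range(0, last_start + 1, stride)]


if __name__ == "__main__":
    # Hypothetical convolution output of length 60 with values from -30 to 29.
    feature_map = [float(i - 30) for i in range(60)]
    pooled = max_pool_1d(relu(feature_map), window=20, stride=2)
    print(len(pooled))  # (60 - 20) // 2 + 1 = 21 pooled values
```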

Table 2 summarizes the main research lines investigated in this review, reporting the main advantages and weaknesses of each, as well as the current approaches to solving the problems.

Table 2.

Summarizing table of the main discussed research lines.

6. Challenges in Face Mask Recognition Systems

Face mask recognition has been criticized for its precision, reliability, and improper use of private information. In detail, listed below are some of the obstacles associated with masked-face recognition [88,89]:

- Accuracy: Face mask recognition technology should be developed and tested rigorously to ensure high accuracy rates in identifying both masked and unmasked individuals. False positives, where individuals are incorrectly identified as not wearing masks, can have severe consequences, such as denying access to essential services or causing unnecessary alarm. No face mask recognition algorithm reaches 100% accuracy, even with the most sophisticated software. However, the technology is generally considered satisfactory when it reaches accuracy rates of at least 98%.

- Mask Variability: Face masks come in various shapes, sizes, colors, and designs. Recognizing and accommodating the diverse range of masks can be challenging for the algorithms. Each mask type may introduce unique textures, patterns, or features that must be considered for accurate recognition.

- Lighting and Environmental Factors: Variations in lighting conditions, such as shadows, reflections, or poor illumination, can affect the visibility of facial features and the overall performance of face mask recognition algorithms. Challenging lighting conditions can decrease accuracy and introduce additional variability.

- Rapid Deployment and Adaptation: The need for face mask recognition arose rapidly during the COVID-19 pandemic, requiring quick deployment of technology. Developing robust algorithms and adapting them to different scenarios and environments can be challenging due to the limited research, testing, and optimization time.

- Computational Resources: Implementing real-time face mask recognition systems that quickly process large amounts of data can be computationally demanding. High-speed processing and response times are crucial for applications where real-time identification is required.

- Database Necessity: Training accurate and unbiased face mask recognition models requires diverse and representative datasets, including individuals wearing different types of masks. The availability of such datasets, as well as potential biases present in the data, can impact the performance and fairness of the algorithms.

- Ethical and Privacy Concerns: Face mask recognition involves capturing and processing personal biometric data, raising concerns about privacy, consent, and potential misuse of the collected information. Ensuring robust data protection measures, transparency, and addressing privacy concerns are important for the ethical use of the technology.

- User Acceptance and Cooperation: Face mask recognition systems often require user cooperation, such as proper positioning of masks, removing obstructions, or following specific guidelines. Achieving widespread user acceptance and compliance can be challenging, impacting the overall effectiveness of the technology.

Image variations due to the factors discussed above (i.e., mask variability, positioning, lighting, poses) make it more difficult for the face mask recognition algorithm to detect the presence of a face mask. Indeed, it can be more difficult to compare two images if there are significant differences in head position, light orientation and intensity, mask typology, etc. There are two options for addressing these issues:

- Train on diverse training sets that cover these variations.

- Use deep learning techniques, which help compensate for these differences.
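One common way to widen a training set toward such variations is data augmentation. The sketch below, which is not drawn from any of the surveyed works, applies a random horizontal flip and a random brightness change to a grayscale image stored as nested lists. The function names, the flip probability, and the brightness range are all hypothetical choices.

```python
import random
from typing import List

Image = List[List[int]]  # grayscale image as rows of 8-bit pixel values


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right (simulates a different head pose)."""
    return [row[::-1] for row in img]


def adjust_brightness(img: Image, factor: float) -> Image:
    """Scale every pixel by `factor` and clamp to the valid range [0, 255]."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in img]


def augment(img: Image, rng: random.Random) -> Image:
    """Randomly flip and re-light one image (hypothetical parameter ranges)."""
    out = flip_horizontal(img) if rng.random() < 0.5 else img
    return adjust_brightness(out, rng.uniform(0.6, 1.4))


if __name__ == "__main__":
    sample = [[10, 20, 30], [40, 50, 60]]
    rng = random.Random(0)  # fixed seed so that runs are repeatable
    for _ in range(3):
        print(augment(sample, rng))
```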

Addressing these challenges through ongoing research, advancements in computer vision, machine-learning techniques, and contemplating ethical implications can contribute to developing more accurate, reliable, and responsible face mask recognition systems.

7. Results and Discussions

In this section, the previous scientific works are compared and further analyzed to bring out trends and guidelines for developing modern face recognition systems.

As discussed above, face recognition employs numerous classification and processing methods, including but not limited to DL and ML algorithms, as well as Mobile Networks (V1 and V2). Table 3 summarizes the prototypes discussed in the previous sections, classifying them by employed technique, implemented methodology, application purpose, and considered domain.

Table 3.

Face recognition techniques and prototypes.

As shown in Table 3, CNNs are the most widespread tool for face mask detection and masked-face recognition, given the advantages they offer, such as spatial invariance, parameter sharing, translation invariance, and scalability [90,91,92]. As discussed above, CNNs offer a powerful framework for extracting and learning discriminative features from images, making them well-suited for masked-face recognition, face mask detection, and other computer vision tasks. Their ability to learn hierarchical representations, handle spatial variations, and scale to large datasets justifies their effectiveness and widespread use in computer vision applications.

Furthermore, MobileNets have gained ground in recent years thanks to their lightness and efficiency, which make them ideal for implementation on mobile and embedded devices with limited computational resources. MobileNet architectures can independently process and capture unique features from sensor data, identifying complex relationships and dependencies, thus improving performance and reducing latency [93,94]. Also, deep learning, machine learning, and mixed MobileNet-sensor algorithms are common solutions for deploying masked-face recognition and face mask detection.

Next, the performance of the techniques previously discussed is evaluated to determine the most promising solution for developing future masked-face recognition systems. Table 4 summarizes the research works on facial mask detection using deep learning and CNN, as well as the accuracy of the proposed techniques.

Table 4.

Achieved accuracy for COVID-19 facial mask detection techniques.

As shown in Table 4, the surveyed algorithms differ in prototype model and application domain. The deep learning approaches rely on tools such as a public recognition database for compiling information, a hybrid deep transfer learning model, TensorFlow, Keras, and OpenCV, and their reported accuracy ranges from 95% to 99.64%. On the other hand, CNNs combined with deep learning or arranged in multiple stages achieved a higher figure than DL, reaching 99.98%. Sensor-based face recognition networks achieved accuracies ranging from 91% to 99.81%. The lowest value (73%) was reported for the Lightweight Region Proposal Networks (RPNs), well below the ranges of the other techniques.
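The works surveyed above report their results variously as accuracy, precision, or F1 score, and these quantities are not interchangeable. As a reminder of how they relate, the following sketch derives all of them from the four confusion-matrix counts of a binary mask/no-mask classifier. The function name and the example counts are made up for illustration and do not correspond to any surveyed system.

```python
from typing import Dict


def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Hypothetical counts: 90 true positives, 10 false positives,
    # 5 false negatives, 95 true negatives.
    for name, value in classification_metrics(90, 10, 5, 95).items():
        print(f"{name}: {value:.4f}")
```

With these counts, accuracy (0.925), precision (0.900), and F1 (about 0.923) already differ, so figures reported under different metrics should be compared with care.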

MobileNetV2 is known for its improved accuracy compared to the original MobileNetV1 architecture. The accuracy of MobileNetV2 can vary depending on the specific dataset, task, and training configuration. However, in general, MobileNetV2 has demonstrated competitive performance on various computer vision tasks, including image classification [77,81].

Similarly, hybrid approaches that combine DL and classical ML classifiers [23] are valuable solutions in terms of performance (99.64% accuracy) but suffer in terms of processing (i.e., training and inference) time and required resources. This result is expected, since stacking different algorithms increases the computational requirements.

8. Conclusions

Although the effects of the COVID-19 pandemic have diminished recently, it still affects various regions of the globe. According to the World Health Organization, social isolation and the use of face masks are two of the most essential methods to contain the pandemic’s spread. Recently, facial recognition has been the subject of several international studies. The presented review focuses on the most recent findings reported in the scientific literature regarding methodologies and systems for masked-face recognition and face mask detection developed to fight the COVID-19 pandemic. First, classical ML algorithms and models for masked-face recognition and face mask detection are reviewed and discussed, with emphasis on solutions combining multiple ML models to improve their performance. Next, DL techniques used to identify face masks are presented, focusing on CNN applications. Furthermore, modern tools, like Mobile Networks (MobileNetV1 and MobileNetV2), are introduced for facial recognition and face mask detection applications. Then, IoT-based sensors for fighting the spread of the COVID-19 pandemic are reviewed; based on ML and DL algorithms, these prototypes enable rapid screening of numerous people, assessing whether they wear masks and measuring their body temperature. Finally, challenges in facial recognition are reported, along with a comprehensive comparative analysis and discussion for outlining the features of face recognition systems.

In conclusion, in recent years, there has been a rise in the use of computer vision for masked-face recognition and face mask detection; to investigate their perspectives, we analyzed and compared modern facial recognition techniques, focusing on their performance. From the presented analysis, MobileNetV2 is the best candidate for designing the next generation of face recognition systems, given its performance, memory requirements, training time, etc. [95]. Likewise, hybrid approaches combining DL and classical ML classifiers are worthy solutions in terms of performance (99.64% accuracy) [23]; however, they experience issues in terms of processing (i.e., training and inference) time and required resources. Finally, we analyzed IoT-based sensors for face mask detection, demonstrating that they are effective tools for fighting future pandemics, given their ubiquity and performance.

Author Contributions

Conceptualization, J.A.-N., B.A.-N., P.V. and R.D.F.; methodology, P.V., R.D.F., N.T., B.A.-N. and H.A.O.; validation, P.V. and B.A.-N.; formal analysis, J.A.-N., H.A.O., R.D.F., N.T. and B.A.-N.; investigation, J.A.-N., P.V., R.D.F. and B.A.-N.; resources, P.V. and B.A.-N.; data curation, H.A.O., P.V., N.T. and R.D.F.; writing—original draft preparation, J.A.-N., H.A.O. and R.D.F.; writing—review and editing, R.D.F., P.V. and B.A.-N.; visualization, P.V.; supervision, P.V., N.T. and B.A.-N.; funding acquisition, P.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data from our study are available upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zou, X. A Review of Object Detection Techniques. In Proceedings of the 2019 International Conference on Smart Grid and Electrical Automation (ICSGEA), Xiangtan, China, 10–11 August 2019; pp. 251–254.

- Calabrese, B.; Velázquez, R.; Del-Valle-Soto, C.; de Fazio, R.; Giannoccaro, N.I.; Visconti, P. Solar-Powered Deep Learning-Based Recognition System of Daily Used Objects and Human Faces for Assistance of the Visually Impaired. Energies 2020, 13, 6104.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Talahua, J.S.; Buele, J.; Calvopiña, P.; Varela-Aldás, J. Facial recognition system for people with and without face mask in times of the COVID-19 pandemic. Sustainability 2021, 13, 6900.

- De Fazio, R.; Giannoccaro, N.I.; Carrasco, M.; Velazquez, R.; Visconti, P. Wearable Devices and IoT Applications for Detecting Symptoms, Infected Tracking, and Diffusion Containment of the COVID-19 Pandemic: A Survey. Front. Inf. Technol. Electron. Eng. 2021, 22, 1413–1442.

- Visconti, P.; de Fazio, R.; Costantini, P.; Miccoli, S.; Cafagna, D. Innovative Complete Solution for Health Safety of Children Unintentionally Forgotten in a Car: A Smart Arduino-Based System with User App for Remote Control. IET Sci. Meas. Technol. 2020, 14, 665–675.

- Ting, D.W.; Carin, L.; Dzau, V.; Wong, T.Y. Digital technology and COVID-19. Nat. Med. 2020, 26, 459–461.

- Wechsler, H. Reliable Face Recognition Methods: System Design, Implementation and Evaluation, 2007th ed.; Springer: New York, NY, USA, 2006; ISBN 978-0-387-22372-8.

- Kundu, M.K.; Mitra, S.; Mazumdar, D.; Pal, S.K. Perception and Machine Intelligence. In Proceedings of the First Indo-Japan Conference, PerMIn 2012, Kolkata, India, 12–13 January 2011; Springer: Berlin/Heidelberg, Germany, 2012. ISBN 978-3-642-27386-5.

- Wechsler, H. Face in a Crowd. In Reliable Face Recognition Methods: System Design, Implementation and Evaluation; Springer: Boston, MA, USA, 2007; pp. 121–153. ISBN 978-0-387-38464-1.

- Li, S.Z.; Jain, A.K. (Eds.) Introduction. In Handbook of Face Recognition; Springer: London, UK, 2011; pp. 1–15. ISBN 978-0-85729-932-1.

- Fouquet, H. Paris Tests Facemask Recognition Software on Metro Riders—Bloomberg. Available online: https://www.bloomberg.com/news/articles/2020-05-07/paris-tests-face-mask-recognition-software-on-metro-riders#xj4y7vzkg (accessed on 15 June 2023).

- Graf, J.-P.; Neumann, J. Between Accuracy and Dignity: Legal Implications of Facial Recognition for Dead Combatants. Völkerrechtsblog 2022, 1–3.

- Suganthalakshmi, R.; Hafeeza, A.; Abinaya, P.; Devi, A.G. COVID-19 facemask detection with deep learning and computer vision. Int. J. Eng. Res. Tech. (IJERT) ICRADL 2021, 9, 73–75.

- Zhao, Z.-Q.; Zheng, P.; Xu, S.-t.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

- Punn, N.S.; Agarwal, S. Crowd Analysis for Congestion Control Early Warning System on Foot Over Bridge. In Proceedings of the 2019 Twelfth International Conference on Contemporary Computing (IC3), Noida, India, 8–10 August 2019; pp. 1–6.

- Parkhi, O.M.; Vedaldi, A.; Zisserman, A. Deep Face Recognition. In Proceedings of the British Machine Vision Conference 2015; British Machine Vision Association: Swansea, UK, 2015; pp. 41.1–41.12.

- Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A Unified Embedding for Face Recognition and Clustering. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; IEEE: Manhattan, NY, USA; pp. 815–823.

- Ullah, N.; Javed, A.; Ghazanfar, M.A.; Alsufyani, A.; Bourouis, S. A novel DeepMaskNet model for face mask detection and masked facial recognition. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 9905–9914.

- Agbehadji, I.E.; Awuzie, B.O.; Ngowi, A.B.; Millham, R.C. Review of big data analytics, artificial intelligence and nature-inspired computing models towards accurate detection of COVID-19 pandemic cases and contact tracing. Int. J. Environ. Res. Public Health 2020, 17, 5330.

- Singhal, P.; Srivastava, P.K.; Tiwari, A.K.; Shukla, R.K. A Survey: Approaches to Facial Detection and Recognition with Machine Learning Techniques. In Proceedings of the Second Doctoral Symposium on Computational Intelligence; Gupta, D., Khanna, A., Kansal, V., Fortino, G., Hassanien, A.E., Eds.; Springer: Singapore, 2022; pp. 103–125.

- Ramík, D.M.; Sabourin, C.; Moreno, R.; Madani, K. A Machine Learning Based Intelligent Vision System for Autonomous Object Detection and Recognition. Appl. Intell. 2014, 40, 358–375.

- Loey, M.; Manogaran, G.; Taha, M.H.N.; Khalifa, N.E.M. A hybrid deep transfer learning model with machine learning methods for face mask detection in the era of the COVID-19 pandemic. Measurement 2021, 167, 108288.

- Adjed, F.; Faye, I.; Ababsa, F.; Gardezi, S.J.; Dass, S.C. Classification of Skin Cancer Images Using Local Binary Pattern and SVM Classifier. In Proceedings of the AIP Conference Proceedings, Kuala Lumpur, Malaysia, 15–17 August 2016; Volume 1787, pp. 1–5.

- Sadhukhan, M.; Bhattacharya, I. HybridFaceMaskNet: A Novel Facemask Detection Framework Using Hybrid Approach. Res. Sq. 2021, 1–7.

- Cabani, A.; Hammoudi, K.; Benhabiles, H.; Melkemi, M. MaskedFace-Net—A Dataset of Correctly/Incorrectly Masked Face Images in the Context of COVID-19. Smart Health Amst. Neth. 2021, 19, 100144.

- Hammoudi, K.; Cabani, A.; Benhabiles, H.; Melkemi, M. Validating the Correct Wearing of Protection Mask by Taking a Selfie: Design of a Mobile Application “CheckYourMask” to Limit the Spread of COVID-19. Comput. Model. Eng. Sci. 2020, 124, 1049–1059.

- Batagelj, B.; Peer, P.; Štruc, V.; Dobrišek, S. How to Correctly Detect Facemasks for COVID-19 from Visual Information? Appl. Sci. 2021, 11, 2070.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

- DeepInsight RetinaFace Anti Cov Face Detector. Available online: https://github.com/deepinsight/insightface/tree/master/detection/retinaface_anticov (accessed on 15 May 2023).

- Eyiokur, F.I.; Ekenel, H.K.; Waibel, A. Unconstrained Face Mask and Face-Hand Interaction Datasets: Building a Computer Vision System to Help Prevent the Transmission of COVID-19. Signal Image Video Process. 2023, 17, 1027–1034.

- Face Mask Detection Dataset 2020. Available online: https://www.kaggle.com/datasets/omkargurav/face-mask-dataset (accessed on 16 May 2023).

- Goyal, H.; Sidana, K.; Singh, C.; Jain, A.; Jindal, S. A Real Time Face Mask Detection System Using Convolutional Neural Network. Multimed. Tools Appl. 2022, 81, 14999–15015.

- Ullah, N.; Javed, A. Face Mask Detection and Masked Facial Recognition Dataset (MDMFR Dataset) 2022. Available online: https://zenodo.org/record/6408603 (accessed on 16 May 2023).

- Kantarcı, A.; Ofli, F.; Imran, M.; Ekenel, H.K. BAFMD (Bias-Aware Face Mask Detection Dataset). arXiv 2022, arXiv:2211.01207.

- Ge, S.; Li, J.; Ye, Q.; Luo, Z. Detecting Masked Faces in the Wild with LLE-CNNs. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 426–434.

- Ji, H.; Alfarraj, O.; Tolba, A. Artificial intelligence-empowered edge of vehicles: Architecture, enabling technologies, and applications. IEEE Access 2020, 8, 61020–61034.

- Mbunge, E.; Fashoto, S.G.; Bimha, H. Prediction of Box-Office Success: A Review of Trends and Machine Learning Computational Models. Int. J. Bus. Intell. Data Min. 2022, 20, 192–207.

- Jiang, X.; Gao, T.; Zhu, Z.; Zhao, Y. Real-time face mask detection method based on YOLOv3. Electronics 2021, 10, 837.

- De Fazio, R.; Mastronardi, V.M.; Petruzzi, M.; De Vittorio, M.; Visconti, P. Human–Machine Interaction through Advanced Haptic Sensors: A Piezoelectric Sensory Glove with Edge Machine Learning for Gesture and Object Recognition. Future Internet 2023, 15, 14.

- Khan, A.; Sohail, A.; Zahoora, U.; Qureshi, A.S. A survey of the recent architectures of deep convolutional neural networks. Artif. Intell. Rev. 2020, 53, 5455–5516.

- Rahman, A.; Hossain, M.S.; Alrajeh, N.A.; Alsolami, F. Adversarial examples—Security threats to COVID-19 deep learning systems in medical IoT devices. IEEE Internet Things J. 2020, 8, 9603–9610.

- Mundial, I.Q.; Hassan, M.S.U.; Tiwana, M.I.; Qureshi, W.S.; Alanazi, E. Towards facial recognition problem in COVID-19 pandemic. In Proceedings of the 2020 4rd International Conference on Electrical, Telecommunication and Computer Engineering (ELTICOM), Medan, Indonesia, 3–4 September 2020; pp. 210–214.

- Fan, X.; Jiang, M. RetinaFaceMask: A Single Stage Face Mask Detector for Assisting Control of the COVID-19 Pandemic. arXiv 2021, arXiv:2005.03950.

- Cun, Y.L.; Boser, B.; Denker, J.S.; Howard, R.E.; Habbard, W.; Jackel, L.D.; Henderson, D. Handwritten Digit Recognition with a Backpropagation Network. In Advances in Neural Information Processing Systems 2; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1990; pp. 396–404. ISBN 978-1-55860-100-0. [Google Scholar]

- Arora, D.; Garg, M.; Gupta, M. Diving Deep in Deep Convolutional Neural Network. In Proceedings of the 2020 2nd International Conference on Advances in Computing, Communication Control and Networking (ICACCCN), Greater Noida, India, 18–19 December 2020; pp. 749–751. [Google Scholar]

- Yao, G.; Lei, T.; Zhong, J. A review of convolutional-neural-network-based action recognition. Pattern Recognit. Lett. 2019, 118, 14–22. [Google Scholar] [CrossRef]

- McCann, M.T.; Jin, K.H.; Unser, M. Convolutional neural networks for inverse problems in imaging: A review. IEEE Signal Process. Mag. 2017, 34, 85–95. [Google Scholar] [CrossRef]

- Rawat, W.; Wang, Z. Deep convolutional neural networks for image classification: A comprehensive review. Neural Comput. 2017, 29, 2352–2449. [Google Scholar] [CrossRef]

- Aloysius, N.; Geetha, M. A Review on Deep Convolutional Neural Networks. In Proceedings of the 2017 International Conference on Communication and Signal Processing (ICCSP), Chennai, India, 6–8 April 2017; pp. 0588–0592. [Google Scholar]

- Al-Saffar, A.A.M.; Tao, H.; Talab, M.A. Review of Deep Convolution Neural Network in Image Classification. In Proceedings of the 2017 International Conference on Radar, Antenna, Microwave, Electronics, and Telecommunications (ICRAMET), Jakarta, Indonesia, 23–24 October 2017; pp. 26–31. [Google Scholar]

- Kamilaris, A.; Prenafeta-Boldú, F.X. A review of the use of convolutional neural networks in agriculture. J. Agric. Sci. 2018, 156, 312–322. [Google Scholar] [CrossRef]

- Yang, Z.; Yu, W.; Liang, P.; Guo, H.; Xia, L.; Zhang, F.; Ma, Y.; Ma, J. Deep transfer learning for military object recognition under small training set condition. Neural Comput. Appl. 2019, 31, 6469–6478. [Google Scholar] [CrossRef]

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Imaging 2018, 9, 611–629. [Google Scholar] [CrossRef] [PubMed]

- Schwendicke, F.; Golla, T.; Dreher, M.; Krois, J. Convolutional neural networks for dental image diagnostics: A scoping review. J. Dent. 2019, 91, 103226. [Google Scholar] [CrossRef] [PubMed]

- Hassantabar, S.; Ahmadi, M.; Sharifi, A. Diagnosis and detection of infected tissue of COVID-19 patients based on lung X-ray image using convolutional neural network approaches. Chaos Solitons Fractals 2020, 140, 110170. [Google Scholar] [CrossRef] [PubMed]

- Lalmuanawma, S.; Hussain, J.; Chhakchhuak, L. Applications of machine learning and artificial intelligence for COVID-19 (SARS-CoV-2) pandemic: A review. Chaos Solitons Fractals 2020, 139, 110059.

- Mukherjee, H.; Ghosh, S.; Dhar, A.; Obaidullah, S.M.; Santosh, K.; Roy, K. Deep neural network to detect COVID-19: One architecture for both CT Scans and Chest X-rays. Appl. Intell. 2021, 51, 2777–2789.

- Al-Naami, B.; Badr, B.E.A.; Rawash, Y.Z.; Owida, H.A.; De Fazio, R.; Visconti, P. Social Media Devices’ Influence on User Neck Pain during the COVID-19 Pandemic: Collaborating Vertebral-GLCM Extracted Features with a Decision Tree. J. Imaging 2023, 9, 14.

- Phung, V.H.; Rhee, E.J. A High-Accuracy Model Average Ensemble of Convolutional Neural Networks for Classification of Cloud Image Patches on Small Datasets. Appl. Sci. 2019, 9, 4500.

- Meivel, S.; Sindhwani, N.; Anand, R.; Pandey, D.; Alnuaim, A.A.; Altheneyan, A.S.; Jabarulla, M.Y.; Lelisho, M.E. Mask Detection and Social Distance Identification using Internet of Things and Faster R-CNN Algorithm. Comput. Intell. Neurosci. 2022, 2022, 2103975.

- Militante, S.V.; Dionisio, N.V. Real-Time Facemask Recognition with Alarm System Using Deep Learning. In Proceedings of the 2020 11th IEEE Control and System Graduate Research Colloquium (ICSGRC), Shah Alam, Malaysia, 8 August 2020; pp. 106–110.

- Nagrath, P.; Jain, R.; Madan, A.; Arora, R.; Kataria, P.; Hemanth, J. SSDMNV2: A real time DNN-based face mask detection system using single shot multibox detector and MobileNetV2. Sustain. Cities Soc. 2021, 66, 102692.

- Liu, Z.; Yang, C.; Huang, J.; Liu, S.; Zhuo, Y.; Lu, X. Deep learning framework based on integration of S-Mask R-CNN and Inception-v3 for ultrasound image-aided diagnosis of prostate cancer. Future Gener. Comput. Syst. 2021, 114, 358–367.

- Jignesh Chowdary, G.; Punn, N.S.; Sonbhadra, S.K.; Agarwal, S. Face Mask Detection Using Transfer Learning of InceptionV3. In Proceedings of the Big Data Analytics; Bellatreche, L., Goyal, V., Fujita, H., Mondal, A., Reddy, P.K., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 81–90.

- Qin, B.; Li, D. Identifying facemask-wearing condition using image super-resolution with classification network to prevent COVID-19. Sensors 2020, 20, 5236.

- Alonso-Fernandez, F.; Hernandez-Diaz, K.; Ramis, S.; Perales, F.J.; Bigun, J. Facial masks and soft-biometrics: Leveraging face recognition CNNs for age and gender prediction on mobile ocular images. IET Biom. 2021, 10, 562–580.

- Liu, J.-J.; Hou, Q.; Cheng, M.-M.; Wang, C.; Feng, J. Improving Convolutional Networks with Self-Calibrated Convolutions. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10093–10102.

- Ramachandran, P.; Parmar, N.; Vaswani, A.; Bello, I.; Levskaya, A.; Shlens, J. Stand-Alone Self-Attention in Vision Models. In Proceedings of the 33rd International Conference on Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2019; pp. 68–80.

- Loey, M.; Manogaran, G.; Taha, M.H.N.; Khalifa, N.E.M. Fighting against COVID-19: A novel deep learning model based on YOLO-v2 with ResNet-50 for medical face mask detection. Sustain. Cities Soc. 2021, 65, 102600.

- Snyder, S.E.; Husari, G. Thor: A deep learning approach for face mask detection to prevent the COVID-19 pandemic. In Proceedings of the SoutheastCon 2021, Atlanta, GA, USA, 10–13 March 2021; pp. 1–8.

- Yadav, S. Deep learning based safe social distancing and face mask detection in public areas for COVID-19 safety guidelines adherence. Int. J. Res. Appl. Sci. Eng. Technol. 2020, 8, 1368–1375.

- Inamdar, M.; Mehendale, N. Real-time face mask identification using facemasknet deep learning network. SSRN Electron. J. 2020.

- Rahman, M.M.; Manik, M.M.H.; Islam, M.M.; Mahmud, S.; Kim, J.-H. An automated system to limit COVID-19 using facial mask detection in smart city network. In Proceedings of the 2020 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), Vancouver, BC, Canada, 9–12 September 2020; pp. 1–5.

- Rao, T.S.; Devi, S.A.; Dileep, P.; Ram, M.S. A novel approach to detect face mask to control Covid using deep learning. Eur. J. Mol. Clin. Med. 2020, 7, 658–668.

- Lin, H.; Tse, R.; Tang, S.-K.; Chen, Y.; Ke, W.; Pau, G. Near-realtime face mask wearing recognition based on deep learning. In Proceedings of the 18th IEEE Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 9–12 January 2021; pp. 1–7.

- Chavda, A.; Dsouza, J.; Badgujar, S.; Damani, A. Multi-stage CNN architecture for face mask detection. In Proceedings of the 6th International Conference for Convergence in Technology (I2CT), Maharashtra, India, 2–4 April 2021; pp. 1–8.

- Christa, G.H.; Jesica, J.; Anisha, K.; Sagayam, K.M. CNN-based mask detection system using OpenCV and MobileNetV2. In Proceedings of the 3rd International Conference on Signal Processing and Communication (ICPSC), Coimbatore, India, 13–14 May 2021; pp. 115–119.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7 December 2015; Volume 28, pp. 1–13.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Taneja, S.; Nayyar, A.; Vividha; Nagrath, P. Face Mask Detection Using Deep Learning During COVID-19. In Proceedings of the Second International Conference on Computing, Communications, and Cyber-Security; Singh, P.K., Wierzchoń, S.T., Tanwar, S., Ganzha, M., Rodrigues, J.J.P.C., Eds.; Springer: Singapore, 2021; pp. 39–51.

- Shinde, R.K.; Alam, M.S.; Park, S.G.; Park, S.M.; Kim, N. Intelligent IoT (IIoT) Device to Identifying Suspected COVID-19 Infections Using Sensor Fusion Algorithm and Real-Time Mask Detection Based on the Enhanced MobileNetV2 Model. Healthcare 2022, 10, 454.

- Hussain, S.; Yu, Y.; Ayoub, M.; Khan, A.; Rehman, R.; Wahid, J.A.; Hou, W. IoT and deep learning based approach for rapid screening and face mask detection for infection spread control of COVID-19. Appl. Sci. 2021, 11, 3495.

- Petrović, N.; Kocić, Đ. IoT-based system for COVID-19 indoor safety monitoring. In Proceedings of the 7th International Conference on Electrical, Electronic and Computing Engineering IcETRAN, Belgrade, Serbia, 28–30 September 2020.

- Varshini, B.; Yogesh, H.; Pasha, S.D.; Suhail, M.; Madhumitha, V.; Sasi, A. IoT-Enabled smart doors for monitoring body temperature and face mask detection. Glob. Transit. Proc. 2021, 2, 246–254.

- Hussain, G.K.J.; Priya, R.; Rajarajeswari, S.; Prasanth, P.; Niyazuddeen, N. The Face Mask Detection Technology for Image Analysis in the COVID-19 Surveillance System. In Proceedings of the Journal of Physics: Conference Series, Thessaloniki, Greece, 16–19 June 2021; Volume 1916, p. 012084.

- Asghar, M.Z.; Albogamy, F.R.; Al-Rakhami, M.S.; Asghar, J.; Rahmat, M.K.; Alam, M.M.; Lajis, A.; Nasir, H.M. Facial Mask Detection Using Depthwise Separable Convolutional Neural Network Model During COVID-19 Pandemic. Front. Public Health 2022, 10, 855254.

- Arbib, M.A. The Handbook of Brain Theory and Neural Networks, 2nd ed.; A Bradford Book: Cambridge, MA, USA, 2002; ISBN 978-0-262-01197-6.

- Shadin, N.S.; Sanjana, S.; Ibrahim, D. Face Mask Detection Using Deep Learning and Transfer Learning Models. In Proceedings of the 2022 International Conference on Innovations in Science, Engineering and Technology (ICISET), Chittagong, Bangladesh, 26 February 2022; pp. 196–201.

- Lu, P.; Song, B.; Xu, L. Human Face Recognition Based on Convolutional Neural Network and Augmented Dataset. Syst. Sci. Control. Eng. 2020, 9, 29–37. Available online: http://mc.manuscriptcentral.com/tssc (accessed on 22 May 2023).

- Khan, S.; Javed, M.H.; Ahmed, E.; Shah, S.A.A.; Ali, S.U. Facial Recognition Using Convolutional Neural Networks and Implementation on Smart Glasses. In Proceedings of the 2019 International Conference on Information Science and Communication Technology, ICISCT 2019, Karachi, Pakistan, 1 March 2019; pp. 1–15.

- Wang, J.; Li, Z. Research on Face Recognition Based on CNN. In Proceedings of the IOP Conference Series: Earth and Environmental Science, Banda Aceh, Indonesia, 26–27 September 2018; Volume 170, p. 032110.

- Sinha, D.; El-Sharkawy, M. Thin MobileNet: An Enhanced MobileNet Architecture. In Proceedings of the 2019 IEEE 10th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), New York, NY, USA, 10–12 October 2019; pp. 280–285.

- Liu, Y.; Miao, C.; Ji, J.; Li, X. MMF: A Multi-Scale MobileNet Based Fusion Method for Infrared and Visible Image. Infrared Phys. Technol. 2021, 119, 103894.

- Dong, K.; Zhou, C.; Ruan, Y.; Li, Y. MobileNetV2 Model for Image Classification. In Proceedings of the 2020 2nd International Conference on Information Technology and Computer Application (ITCA), Guangzhou, China, 18–20 December 2020; pp. 476–480.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).