1. Introduction

The face is the most commonly used characteristic for expression recognition [1] and personal identification [2]. In unobtrusive sensing, cameras are among the most widely used sensors, and they are commonly employed to capture and record facial information. A key advantage of cameras is that they can acquire face information without attracting the attention of the monitored subjects, which avoids causing them discomfort or interfering with their behavior. In addition, cameras can operate for long periods and cover multiple scenarios and time periods, providing a large amount of face data [3].

As one of the most fundamental tasks in face analysis, facial expression recognition (FER) plays an important role in understanding emotional states and intentions. FER also supports a variety of important societal applications [4,5,6], including intelligent security, fatigue surveillance, medical treatment, and consumer acceptance prediction. FER methods attempt to classify facial images according to their emotional content. For example, based on a cross-cultural study, Ekman and Friesen [7] defined six basic emotions: happiness, disgust, surprise, anger, fear, and sadness. Various FER methods have been developed in machine learning and computer vision.

Over the past decade, image-level classification has made remarkable progress in computer vision. Much of this progress can be attributed to deep learning and, in particular, to the breakthrough of deep convolutional neural networks (CNNs) in 2012. The success of CNNs inspired a wave of further advances in computer vision [8]. However, although deep CNNs have become the state-of-the-art solution for FER, they also have obvious limitations. A major disadvantage is their low sample efficiency: they require a large amount of labeled data, which rules out many applications in which data are expensive or inherently sparse.

Annotating facial expressions is particularly complicated. It is extremely time-consuming and challenging for psychologists to annotate individual facial expressions, so several databases rely on crowdsourcing for annotation [9]. For example, datasets collected from the web in uncontrolled environments, such as FER2013 and RAF-DB, have improved their reliability through crowdsourced annotations, but the number of annotated images is only about 30,000. The FER2013 database contains 35,887 facial expression images of different subjects, of which only 547 show disgust. In contrast, deep face recognition models are typically trained on millions of reliably annotated face images. Although FER datasets are growing, they remain small from the perspective of data-hungry deep learning, and training deep networks directly on them yields low accuracy. We refer to this problem as the limited labeled data obstacle; it hinders the development of deep learning-based FER algorithms. Therefore, how to recognize facial expressions efficiently and effectively from limited labeled data has become a critical problem.

For FER with limited labeled data, there are two important strategies: transfer learning based on a face recognition model and semi-supervised learning based on large-scale unlabeled facial image data. One research stream applies transfer learning to FER, i.e., deep networks pre-trained on face recognition datasets are fine-tuned to adapt them to the FER task [10]. Another research stream applies semi-supervised deep convolutional networks to recognize facial expressions [11,12]. Two observations indicate the potential of semi-supervised learning for FER: (1) existing large-scale face recognition databases (such as the MS-Celeb-1M dataset [13]) contain abundant facial expressions; and (2) large numbers of facial expression images are not labeled in databases such as AffectNet and EmotioNet.

Motivated by these observations, and to take full advantage of both the limited labeled data and the large amounts of unlabeled data, we study FER with limited labeled data from two perspectives: the network structure and the training data. (1) Traditional CNNs generally have an excessive number of parameters and floating-point operations (FLOPs). The large number of parameters makes them susceptible to over-fitting, especially when only limited labeled data are available. To improve performance while reducing the number of parameters, we first propose an efficient network designed for the limited labeled data setting. (2) Since large-scale face recognition databases contain an extensive number of facial expressions, the most feasible way to exploit these samples is to assign pseudo-labels to them and use these pseudo-labels as expression labels. Therefore, improving the efficiency and correctness of the pseudo-labeling algorithm is another challenge addressed in this paper. Training on the pseudo-labeled set together with the limited labeled data makes it possible to improve the accuracy of deep networks.

To address these problems, we propose an efficient FER approach based on a deep joint learning framework. In summary, this study primarily contributes in the following four ways.

We develop an effective deep joint learning framework for FER with limited labeled data that combines a deep CNN with deep clustering to learn the network parameters and cluster deep features jointly.

We propose a novel efficient network, the affinity convolution-based neural network (ACNN), which greatly reduces the computational cost while maintaining recognition accuracy. The network first uses convolution weighted by affinity maps to generate a small number of intrinsic feature maps and then applies cheap linear transformations to generate a large number of additional feature maps. This design is better suited to limited training data.

We propose a new expression-guided deep facial clustering method to cluster deep facial expression features in face recognition datasets. Owing to its low computational complexity, the method is efficient and well suited to large-scale clustering.

The experimental results of both intra-database and cross-database evaluations demonstrate that our framework surpasses existing state-of-the-art approaches. We also investigate the factors that affect performance, including the network overhead and the clustering parameters, and we visualize the learned deep features.

The rest of the paper is organized as follows. Section 2 reviews relevant state-of-the-art FER methods and introduces FER with limited data. Section 3 introduces the proposed deep joint learning framework for FER. Sections 4 and 5 present and discuss experimental results on four benchmark databases. Finally, Section 6 summarizes the work and recommends future research directions.

2. Related Work

In this section, we first briefly review the development of facial expression recognition models, from traditional methods to deep-learning-based methods, and then we introduce FER with limited labeled data in more detail.

2.1. Efficient Network for Facial Expression Recognition

Existing FER methods fall into two categories: traditional FER and deep-learning-based FER. Traditional FER methods rely on handcrafted features extracted with filters designed from prior knowledge, such as local phase quantization (LPQ) [14], histograms of oriented gradients (HOGs) [15], Gabor features [16], and the scale-invariant feature transform (SIFT) [17]. For example, Ref. [14] employed robust local descriptors to account for local distortions in facial images and then deployed various machine learning algorithms, such as support vector machines, multiple kernel learning, and dictionary learning, to classify the discriminative features. However, handcrafted features are generally considered to have limited representation power, and designing appropriate handcrafted features is a challenging process.

Over the past decade, deep learning has proven highly effective in various fields, outperforming both handcrafted features and shallow classifiers. It has driven great progress in computer vision and inspired a large body of research on image recognition, especially FER [18,19]. For example, CNNs and their extensions were first applied to FER by Mollahosseini et al. [20] and Khorrami et al. [21]. Zhao et al. [22] adopted a graph convolutional network to fully explore the structural information of the facial components behind different expressions. In recent years, attention-based deep models have been proposed for FER and have achieved promising results [23,24].

Although CNNs have been very successful, their large number of internal parameters leads to high computing and memory requirements. Several efficient neural network architectures, such as MobileNet [25] and ShuffleNet [26], were designed to address these problems; they build efficient deep networks with fewer computations and parameters and have been applied to FER in recent years. For instance, Hewitt and Gunes [27] designed three types of lightweight FER models for mobile devices. Barros et al. [28] proposed a lightweight FER model called FaceChannel, which includes an inhibitory layer connected to the final layer of the network to help shape facial feature learning. Zhao et al. [29] proposed an efficient lightweight network called EfficientFace, which is designed around both feature extraction and training and requires few parameters and FLOPs.

Nevertheless, efficient networks are limited in feature learning, because low computational budgets constrain both their depth and their width. Given the pose variation and occlusion that characterize FER in the wild, applying efficient networks directly to FER may result in poor accuracy and robustness. Furthermore, in conventional lightweight networks such as MobileNet, pointwise convolution accounts for a large portion of the overall computation, consuming a considerable amount of memory and FLOPs. In light of these observations, we develop an efficient neural network architecture based on the proposed affinity convolution that provides more discriminative features with fewer parameters for FER.

2.2. The Small-Sample Problem in Facial Expression Recognition

To mitigate the requirement for large amounts of labeled data, several different techniques have been proposed to improve the recognition results. In the following, we briefly review these approaches.

Table 1 provides a comparison of the representative techniques for the small-sample problem.

(1) Data augmentation for facial expression recognition.

A straightforward way to mitigate insufficient training data is to enlarge the database with data augmentation. Data augmentation techniques are typically based on geometric transformations or on oversampling (e.g., with GANs). Geometric transformation techniques generate new samples by applying label-preserving transformations, such as color transformations and geometric transformations (e.g., translation, rotation, and scaling) [35]. Oversampling techniques synthesize facial images with a GAN [30]. Although data augmentation is effective, it has a significant drawback, namely the high computational cost of learning a large number of possible transformations for augmented data.
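As a rough illustration of label-preserving geometric augmentation, the NumPy sketch below mirrors and shifts a grayscale face crop while leaving its expression label untouched. The function `augment`, the 48 × 48 crop size, and the shift range are assumptions chosen for illustration; rotation, scaling, color transforms, and GAN-based oversampling are not shown.

```python
import numpy as np

def augment(image, label, rng, max_shift=4):
    """Return a randomly flipped and shifted copy of a 2-D grayscale image, label unchanged."""
    out = image[:, ::-1] if rng.random() < 0.5 else image    # horizontal (left-right) flip
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)  # random translation offsets
    # np.roll wraps pixels around the border; a real pipeline would usually pad or crop instead.
    out = np.roll(out, shift=(int(dy), int(dx)), axis=(0, 1))
    return out, label                                         # geometry changes, expression label does not

rng = np.random.default_rng(0)
face = np.arange(48 * 48, dtype=np.float32).reshape(48, 48)  # stand-in for a 48 x 48 face crop
aug_face, aug_label = augment(face, "happy", rng)
```

Because flips and shifts only rearrange pixels, the augmented crop contains exactly the same pixel values as the original, which is what makes these transformations cheap compared with learned (GAN-based) augmentation.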

(2) Deep ensemble learning for facial expression recognition.

In deep ensemble learning, i.e., the use of multiple classifiers, different networks are integrated at the feature level or the decision level so that their respective advantages are combined; this strategy has been applied in emotion recognition contests to improve performance on small-sample problems [36]. Siqueira et al. [31] proposed an ensemble learning algorithm based on shared representations of convolutional networks and demonstrated its data processing efficiency and scalability on facial expression datasets. However, ensemble learning requires additional computing time and storage, because multiple networks, rather than a single network, are used for the same task.
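Decision-level fusion can be as simple as averaging the class probabilities of several independently trained models. The NumPy sketch below assumes each model's logits are already computed; the function name `ensemble_predict` and the optional per-model weights are hypothetical, and this is not the shared-representation ensemble of Siqueira et al. [31].

```python
import numpy as np

def softmax(logits):
    z = logits - logits.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def ensemble_predict(logits_per_model, weights=None):
    """Decision-level fusion: (weighted) mean of each model's class probabilities.
    logits_per_model: array of shape (num_models, batch, num_classes)."""
    probs = softmax(np.asarray(logits_per_model, dtype=float))
    avg = np.average(probs, axis=0, weights=weights)  # fuse over the model axis
    return avg.argmax(axis=-1), avg

logits = np.array([[[2.0, 0.0, 0.0]],
                   [[0.0, 3.0, 0.0]],
                   [[0.0, 2.5, 0.0]]])  # 3 models, 1 image, 3 classes
pred, probs = ensemble_predict(logits)  # pred -> array([1]): two of three models favor class 1
```

The cost noted above is visible here: every model must be stored and evaluated before the probabilities can be averaged.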

(3) Deep transfer learning for facial expression recognition.

Transfer learning is an effective way to address the small-sample problem [37]; it transfers knowledge from one domain to another.

Fine-tuning is a general method used in transfer learning. Many previous studies have used face recognition datasets such as MS-Celeb-1M [13], VGGFace2 [38], and CASIA-WebFace [39] to pre-train networks such as ResNet [8], AlexNet [40], VGG [41], and GoogLeNet [42] for expression recognition. These networks can then be fine-tuned on expression datasets such as CK+, JAFFE, SFEW, or any other FER dataset to predict emotions accurately. For example, Ding et al. presented FaceNet2ExpNet [10], which is first trained on a face recognition database and then trained on facial expressions with guidance from the face recognition network; this fine-tuning on facial expressions reduces the model's reliance on face identity information. Despite the advantages of pre-training FER networks on face-related datasets, the identity information retained in the pre-trained models may negatively affect their accuracy.

Deep domain adaptation is another commonly used transfer learning method. It uses labeled data from one or more relevant source domains to perform new tasks in the target domain [43]. To reduce dataset bias, Li et al. [1] introduced the maximum mean discrepancy (MMD) into a deep network for the first time. Owing to the strong performance of GANs, adversarial domain adaptation models [32] rapidly became popular in deep domain adaptation.
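The MMD criterion compares two feature distributions through a kernel. A minimal NumPy sketch of the biased squared-MMD estimate with a Gaussian kernel follows; the kernel choice, the bandwidth `sigma`, and the function names are assumptions for illustration, and [1] may use a different kernel or estimator.

```python
import numpy as np

def rbf_kernel(a, b, sigma):
    # Pairwise squared Euclidean distances between the rows of a and the rows of b.
    sq = (a ** 2).sum(1)[:, None] + (b ** 2).sum(1)[None, :] - 2.0 * a @ b.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma ** 2))

def mmd2(source, target, sigma=1.0):
    """Biased estimate of the squared maximum mean discrepancy with a Gaussian kernel."""
    k_ss = rbf_kernel(source, source, sigma).mean()
    k_tt = rbf_kernel(target, target, sigma).mean()
    k_st = rbf_kernel(source, target, sigma).mean()
    return k_ss + k_tt - 2.0 * k_st

rng = np.random.default_rng(0)
src = rng.normal(size=(200, 2))
tgt_near = rng.normal(size=(200, 2))           # same distribution as src
tgt_far = rng.normal(loc=3.0, size=(200, 2))   # shifted distribution
print(mmd2(src, tgt_near), mmd2(src, tgt_far))  # the shifted domain gives the larger value
```

In a deep domain adaptation model, a term of this kind would typically be computed on source and target features from the same layer and added to the training loss, so that minimizing it pulls the two feature distributions together.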

(4) Deep semi-supervised learning for facial expression recognition.

Semi-supervised learning (SSL) exploits labeled and unlabeled data simultaneously to mitigate the requirement for large amounts of labeled data. Many SSL models have shown excellent performance in FER, including self-training models [33] and generative models [34]. Many SSL methods achieve high performance through regularization; however, they rely heavily on domain-specific data augmentations that are difficult to design for most data modalities. Building on pseudo-label-based semi-supervised learning, we propose a deep joint learning model that alternates between learning the parameters of an efficient neural network and efficiently clustering and labeling facial expressions.

A study closely related to ours [12] suggested selecting high-confidence facial expression images to assist network training with limited labeled data. Although both our work and [12] label face recognition datasets and add them to the training data, our work differs in two key aspects. First, compared with [12], our method not only addresses the limited training data problem but also introduces a more efficient network structure with fewer parameters and computations, which is better suited to small amounts of facial training data and improves FER performance. Second, to label new data, Ref. [12] used knowledge distillation to compress the entire dataset into a sparse set of synthetic images. In contrast, the proposed expression-guided deep facial clustering method preserves the original features of the face data, labels the data efficiently, and controls both the number of pseudo-labeled samples and their labeling accuracy.

3. Methodology

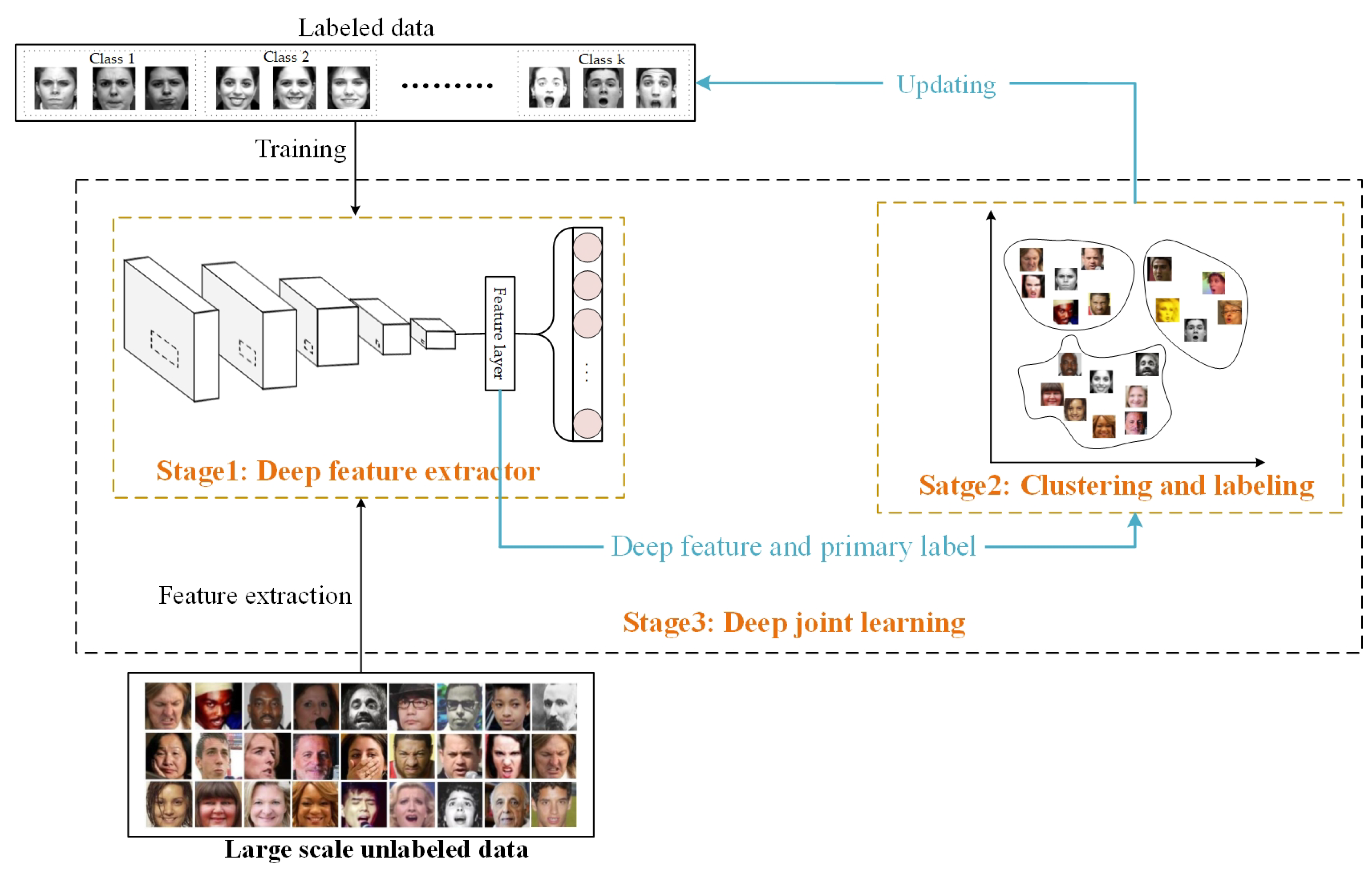

3.1. Overall Framework

In this paper, we propose an FER algorithm based on deep joint learning that uses a small amount of labeled data and a large unlabeled face recognition dataset. The proposed framework alternates between learning the parameters of an efficient network built from affinity convolution (AC) modules (denoted as ACNN) and clustering faces (denoted as EC) with an expression-guided deep feature clustering assignment method. We call the whole deep joint learning framework ACNN-EC. It consists of a three-stage iterative learning procedure: a deep feature extraction stage, a clustering and labeling stage, and a deep joint learning stage. The general procedure of ACNN-EC is shown in Figure 1.

Before discussing the framework in detail, we formally outline some preliminaries. We are given a labeled facial expression dataset $D_l$ and an unlabeled face recognition dataset $D_u$. (1) First, we pre-train the efficient network ACNN for facial expression recognition on $D_l$. The pre-trained ACNN then serves as a deep feature extractor and classifies both $D_l$ and $D_u$, so that every sample in $D_l$ and $D_u$ has an initial label and a corresponding deep feature representation. (2) In the second stage, the deep features of all training data ($D_l$ and $D_u$) are clustered with expression-guided deep facial clustering, and high-confidence data and their pseudo-labels are selected. The selected data are added to the training set in the next iteration. (3) In the last stage, the pre-trained ACNN is fine-tuned on the selected pseudo-labeled data together with the labeled facial expression data, and a combined loss function is used to train the entire network. The process iterates until the maximum number of iterations is reached or the error falls below a preset value.

3.2. Proposed ACNN for Facial Expression Recognition

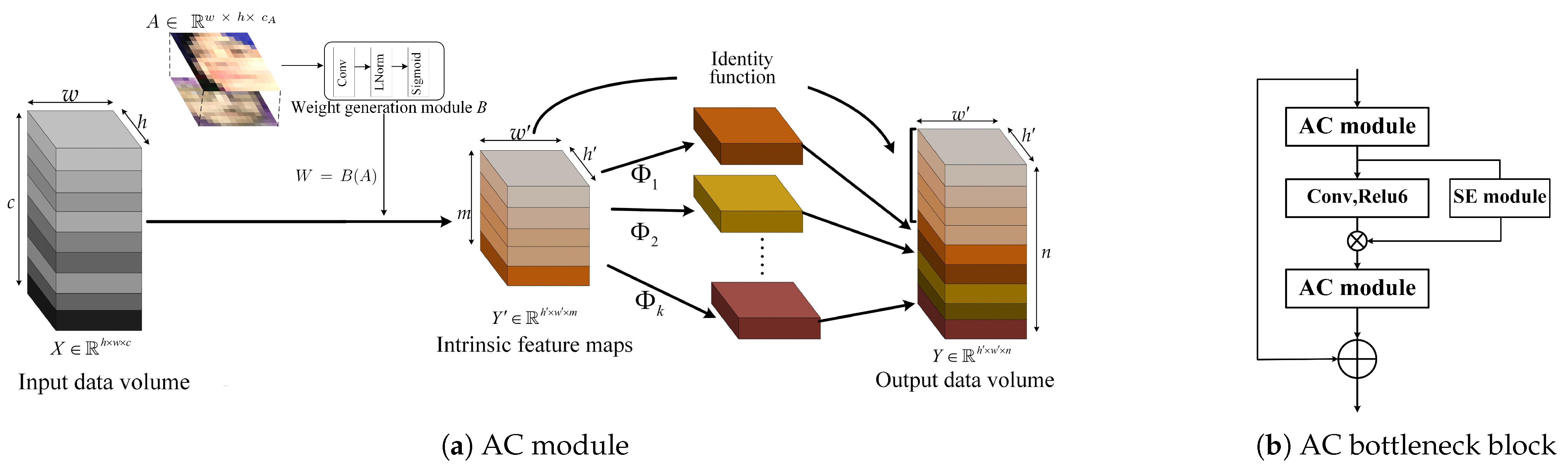

3.2.1. Proposed AC Module

Deep learning-based approaches typically involve a large number of parameters, so the network requires a lot of training data to avoid problems such as over-fitting. Although some recent studies have constructed efficient CNNs using shuffle operations [26] or depthwise convolution [25], the 1 × 1 pointwise convolution layers still consume most of the memory and FLOPs. To generate more feature maps with cheap operations, we propose an efficient convolutional structure called the affinity convolution (AC) module. To reduce computing and storage requirements, the AC module first applies weights generated from affinity maps in the primary convolution to produce a small number of discriminative intrinsic feature maps, and then it uses cheap linear operations to augment these features and increase the number of channels.

First, let $X \in \mathbb{R}^{c \times h \times w}$ denote the input feature map, where $h$ and $w$ are its height and width, respectively, and $c$ is the number of input channels. The generated affinity maps $A$ are fed into the weight generation module $B$ to produce the weight $W$:

$$W = B(A) \tag{1}$$

Inspired by [44], the weight generation module $B$ consists of a standard convolutional layer, a normalization layer [45], and an activation layer (e.g., sigmoid). The number of channels $c_a$ of the affinity maps is set to 4.

Second, the weight $W$ is inserted into the primary convolutional layer to generate the intrinsic feature maps:

$$Y' = (X \times W) * f' \tag{2}$$

where $Y' \in \mathbb{R}^{m \times h' \times w'}$ represents the intrinsic feature maps, $h'$ and $w'$ are their height and width, and $m$ is the number of channels. The operation $\times$ between the input feature map $X$ and the weight $W$ denotes element-wise multiplication, and $*$ denotes convolution. $f' \in \mathbb{R}^{c \times k \times k \times m}$ represents the convolution filters, whose kernel size is $k \times k$. In practice, the primary convolution in the AC module is a pointwise convolution ($k = 1$) for efficiency.

Last, to obtain $n$ feature maps, $s$ features are generated from each intrinsic feature map $y'_i$ by a series of cheap linear operations:

$$y_{ij} = \Phi_{i,j}(y'_i), \quad \forall\, i = 1, \ldots, m, \; j = 1, \ldots, s \tag{3}$$

where $\Phi_{i,j}$ is the $j$-th linear operation, used to generate the $j$-th feature map $y_{ij}$, and $y'_i$ is the $i$-th intrinsic feature map in $Y'$. The last operation $\Phi_{i,s}$ is the identity mapping, which preserves the intrinsic feature maps, as shown in Figure 2a. The linear operations are implemented as a group convolution. The AC module thus obtains $n = m \cdot s$ feature maps $Y = [y_{11}, y_{12}, \ldots, y_{ms}]$. From a small set of intrinsic feature maps, the AC module generates a large number of feature maps at low cost through a series of parallel linear transformations.
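The three steps of Equations (1)–(3) can be sketched in NumPy as follows. This is a minimal illustration under several assumptions that the text does not fix: the affinity maps $A$ are taken as given input (their construction is not reproduced), $B$ is modeled as a 1 × 1 convolution followed by per-channel standardization and a sigmoid, $W$ has the same shape as $X$, and each cheap linear operation is a 3 × 3 depthwise convolution. All function names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def pointwise(x, weight):
    """1 x 1 convolution: x (c_in, h, w), weight (c_out, c_in) -> (c_out, h, w)."""
    return np.einsum("oc,chw->ohw", weight, x)

def depthwise3x3(x, kernels):
    """Zero-padded 'same' 3 x 3 depthwise convolution: x (m, h, w), kernels (m, 3, 3)."""
    _, h, w = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros_like(x)
    for dy in range(3):
        for dx in range(3):
            out += kernels[:, dy, dx][:, None, None] * xp[:, dy:dy + h, dx:dx + w]
    return out

def weight_module(a, w_b, eps=1e-5):
    """Hypothetical stand-in for B: 1 x 1 conv -> per-channel standardization -> sigmoid."""
    z = pointwise(a, w_b)
    z = (z - z.mean(axis=(1, 2), keepdims=True)) / np.sqrt(z.var(axis=(1, 2), keepdims=True) + eps)
    return sigmoid(z)

def ac_module(x, a, w_b, w_primary, k_cheap):
    """x: (c, h, w); a: (c_a, h, w) affinity maps; w_b: (c, c_a);
    w_primary: (m, c); k_cheap: (s - 1, m, 3, 3). Returns n = m * s maps."""
    weight = weight_module(a, w_b)                         # Eq. (1): W = B(A), same shape as x here
    intrinsic = pointwise(x * weight, w_primary)           # Eq. (2): pointwise primary conv on X x W
    cheap = [depthwise3x3(intrinsic, k) for k in k_cheap]  # Eq. (3): cheap ops Phi_{i,j}, j < s
    return np.concatenate(cheap + [intrinsic], axis=0)     # Phi_{i,s}: identity keeps y'_i

rng = np.random.default_rng(0)
c, c_a, h, w, m, s = 8, 4, 6, 6, 4, 2
x = rng.normal(size=(c, h, w))
a = rng.normal(size=(c_a, h, w))
w_b = rng.normal(size=(c, c_a))
w_p = rng.normal(size=(m, c))
k_cheap = rng.normal(size=(s - 1, m, 3, 3))
y = ac_module(x, a, w_b, w_p, k_cheap)
print(y.shape)  # (8, 6, 6): n = m * s output maps
```

Only the $m \times c$ pointwise weights and the small depthwise kernels are learned for the output maps, which is where the savings over a full $k \times k$ convolution producing all $n$ channels come from. The channel ordering of the concatenation is an arbitrary choice in this sketch.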

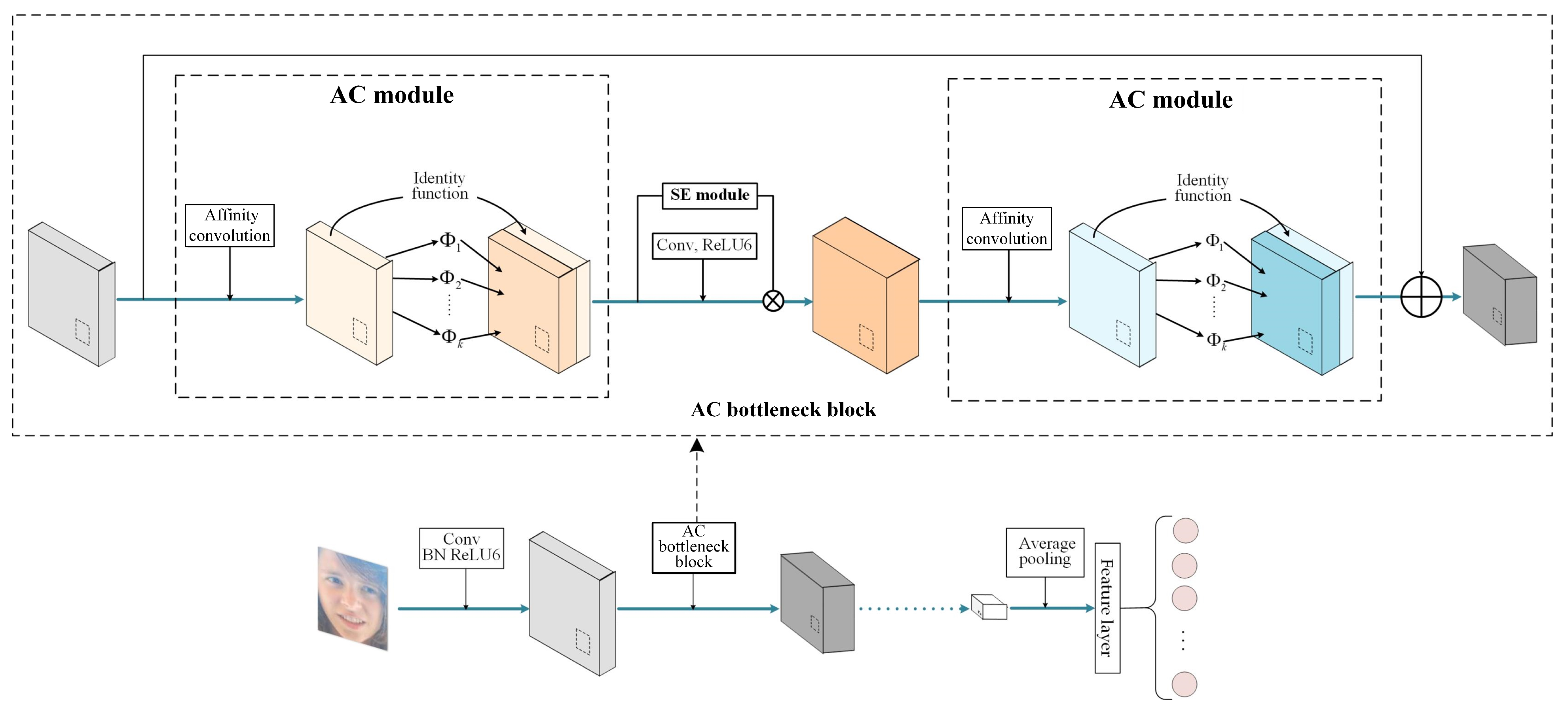

3.2.2. Proposed ACNN

Based on affinity convolution (AC), we propose an efficient neural architecture for FER built from AC bottleneck blocks. The AC bottleneck block architecture is shown in Figure 2b. Unlike a conventional bottleneck block, it inserts a squeeze-and-excitation (SE) block [46] between the AC modules. The SE network, the winner of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2017, has shown significant performance improvements for state-of-the-art deep architectures at a slightly higher computational cost. The SE block [44] is an architectural unit designed to enhance the representational capacity of a network through dynamic channel-wise feature recalibration [46]. The proposed AC bottleneck block reduces the share of pointwise convolution in the overall computation of the network. Furthermore, the AC bottleneck block can perform multiple depthwise convolutions at different scales to extract spatial features from the input feature map, thereby revealing more diverse and abstract information about it.
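The channel recalibration performed by the SE block can be sketched in a few lines of NumPy: global average pooling ("squeeze"), two fully connected layers with a ReLU and a sigmoid ("excitation"), and per-channel rescaling. The weight names `w1`, `b1`, `w2`, `b2` and the reduction ratio `r = 4` are illustrative assumptions, not the settings used in ACNN.

```python
import numpy as np

def se_block(x, w1, b1, w2, b2):
    """Squeeze-and-excitation on x of shape (c, h, w).
    w1: (c // r, c), b1: (c // r,), w2: (c, c // r), b2: (c,) for a reduction ratio r."""
    z = x.mean(axis=(1, 2))                            # squeeze: global average pooling -> (c,)
    hidden = np.maximum(w1 @ z + b1, 0.0)              # excitation, step 1: FC + ReLU
    gates = 1.0 / (1.0 + np.exp(-(w2 @ hidden + b2)))  # step 2: FC + sigmoid, gates in (0, 1)
    return x * gates[:, None, None]                    # rescale each channel by its gate

rng = np.random.default_rng(0)
c, r = 16, 4
x = rng.normal(size=(c, 7, 7))
out = se_block(x, rng.normal(size=(c // r, c)), np.zeros(c // r),
               rng.normal(size=(c, c // r)), np.zeros(c))
```

The extra cost is two small matrix-vector products per feature map, which is why the SE block adds only slightly to the computation of the bottleneck.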

Based on the above AC bottleneck block, the state-of-the-art lightweight network MobileNet-V2 is used as the backbone of our efficient CNN to reduce the computational overhead of the FER model; the resulting architecture is shown in Figure 3.

3.2.3. Analysis of ACNN

In this part, we analyze the network in terms of its number of parameters and FLOPs. Consider the standard convolution process. Given the input feature map $X \in \mathbb{R}^{c \times h \times w}$, where $h$ and $w$ are the height and width of the input feature map, respectively, and $c$ is the number of input channels, the standard convolution can be written as

$$Y = X * f + b \tag{4}$$

where $f \in \mathbb{R}^{c \times k \times k \times n}$ represents the convolution filters, $Y \in \mathbb{R}^{n \times h' \times w'}$ represents the output feature map, $h'$ and $w'$ are the height and width of the output, $n$ is the number of output channels, and $*$ denotes the standard convolution operation. The kernel size of the convolution filters $f$ is $k \times k$, and $b$ is the bias term. Ignoring the bias, the number of parameters and FLOPs of a standard convolution can be calculated as follows:

$$\text{Params}_{\text{conv}} = c \cdot k \cdot k \cdot n \tag{5}$$

$$\text{FLOPs}_{\text{conv}} = n \cdot h' \cdot w' \cdot c \cdot k \cdot k \tag{6}$$

The number of parameters and FLOPs of the AC module can be calculated as follows, disregarding the computational cost of the weight generation module. The primary layer applies the element-wise weighting $X \times W$ followed by a pointwise ($1 \times 1$) convolution that produces $m = n/s$ intrinsic feature maps, and the linear operations are implemented as a group convolution in which each group convolves a single intrinsic feature map with a $d \times d$ kernel:

$$\text{Params}_{\text{AC}} = m \cdot c + (s-1) \cdot m \cdot d \cdot d \tag{7}$$

$$\text{FLOPs}_{\text{AC}} = m \cdot h' \cdot w' \cdot c + (s-1) \cdot m \cdot h' \cdot w' \cdot d \cdot d \tag{8}$$

Take a standard convolutional layer as an example. For the input and output volumes considered in this example, the standard convolution requires 43,352,064 FLOPs. Replacing it with an AC module, which consists of a primary layer and a grouped convolutional layer, reduces the cost to only 3,763,200 FLOPs. The AC module thus requires roughly 11.5 times fewer FLOPs than the standard convolution (43,352,064 / 3,763,200 ≈ 11.5).
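The cost comparison above can be checked numerically. The sketch below assumes a GhostNet-style cost model for the AC module (a 1 × 1 primary convolution to $m = n/s$ intrinsic maps, followed by $s - 1$ cheap $d \times d$ depthwise operations per intrinsic map, with the weight generation branch and the $X \times W$ product ignored). The layer shape used here is hypothetical: under this model it reproduces both FLOP totals quoted in the text, but so do other shapes (for example, 384 output channels at 28 × 28), so it should not be read as the configuration used in the paper.

```python
def conv_cost(c, n, k, h_out, w_out):
    """Parameters and FLOPs of a standard k x k convolution, bias ignored."""
    params = c * k * k * n
    flops = n * h_out * w_out * c * k * k
    return params, flops

def ac_cost(c, n, h_out, w_out, s=2, d=3):
    """Assumed AC cost model: 1 x 1 primary conv to m = n / s intrinsic maps,
    then (s - 1) cheap d x d depthwise ops per intrinsic map."""
    if n % s:
        raise ValueError("n must be divisible by s")
    m = n // s
    params = m * c + (s - 1) * m * d * d
    flops = m * h_out * w_out * c + (s - 1) * m * h_out * w_out * d * d
    return params, flops

# Hypothetical layer: 16 input channels, 96 output channels, 56 x 56 output, 3 x 3 kernel.
std = conv_cost(c=16, n=96, k=3, h_out=56, w_out=56)
ac = ac_cost(c=16, n=96, h_out=56, w_out=56)
print(std, ac, round(std[1] / ac[1], 2))  # (13824, 43352064) (1200, 3763200) 11.52
```

With $s = 2$ and $d = 3$, the saving comes mostly from the primary layer, which uses $1 \times 1$ kernels and produces only half of the output channels.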

3.3. Expression-Guided Deep Face Clustering

To eliminate the need for manual data annotation, facial expression clustering has received increasing attention. Existing centroid-based clustering methods, such as k-means and fuzzy c-means, are widely used and easy to apply. However, they are sensitive to random initialization, which makes their results difficult to reproduce. Furthermore, clustering large-scale deep facial features requires a more efficient algorithm.

In recent research, the approximate rank-order clustering algorithm [47] has been shown to overcome the challenges of large-scale clustering. The authors of [47] proposed an approximate rank-order metric that links image pairs to define clusters. The key idea of approximate rank-order clustering is to compute distances using only the top-$k$ nearest neighbors of each face. As a result, its computational complexity is much lower than that of other clustering methods, and it is far more efficient. However, because approximate rank-order clustering is unsupervised, facial expression clustering is easily affected by face identity features, and it cannot use prior labeled facial expression information to guide the learning process. Based on this analysis, we developed an expression-guided approximate rank-order clustering method that effectively addresses large-scale facial expression clustering.

For facial expression recognition, we propose an expression-guided semi-supervised deep clustering method based on the approximate rank-order metric. Specifically, in the learned deep feature space, we develop a semi-supervised approximate rank-order clustering method to identify groups of visually and semantically similar facial expression images. We then re-annotate these groups using the initial labels obtained by ACNN and use them to train FER classifiers. The expression-guided deep facial clustering algorithm proceeds as follows.

First, we extract the deep features of the facial expression data with the deep feature extractor, as shown in Figure 4a. We measure the distance between two samples according to their ranks in each other's neighborhoods, i.e., the rank-order distance, as shown in Figure 4b. Second, for the labeled expression data, we compute the top-$k$ nearest neighbors among the facial expression data, as shown in Figure 4c. Depending on whether two samples share nearest neighbors, the distance between samples $a$ and $b$ is computed as follows:

$$d(a,b) = \sum_{i=0}^{\min(O_a(b),\,k)} I_b\big(O_b(f_a(i)),\,k\big) \tag{9}$$

where $O_b(f_a(i))$ is the rank of sample $f_a(i)$ in $b$'s neighbor list, $f_a(i)$ is the $i$-th sample in the neighbor list of $a$ (with $f_a(0) = a$), and $O_a(b)$ is the rank of $b$ in $a$'s neighbor list.

$$I_b(x,k) = \begin{cases} 0, & x \le k \\ 1, & \text{otherwise} \end{cases} \tag{10}$$

where $I_b$ is an indicator function that equals 0 when the sample is among $b$'s top-$k$ nearest neighbors. Because $d(a,b)$ is asymmetric, it is used to define a symmetric distance $D(a,b)$ between two faces:

$$D(a,b) = \frac{d(a,b) + d(b,a)}{\min\big(O_a(b),\,O_b(a)\big)} \tag{11}$$

The pairwise distance between each pair of samples can be computed using Equation (11). An illustration of expression-guided deep facial clustering is shown in Figure 4. In Figure 4c, there are two samples, $a$ and $b$. Using the Euclidean distance, we generate their neighbor lists $O_a$ and $O_b$ by sorting their six closest points, where $f_a(i)$ denotes the $i$-th closest sample to $a$. From Figure 4c and the above equations, the ranks of $a$ and $b$ in each other's neighbor lists and the resulting asymmetric and symmetric distances between them can be derived.
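The approximate rank-order distance defined above can be sketched directly in NumPy. This is an illustrative sketch, not the authors' implementation: it builds neighbor lists with a brute-force distance matrix (large-scale implementations would use approximate nearest-neighbor search), it follows the convention that each list starts with the sample itself at rank 0, and it omits the expression guidance, i.e., the restriction of the neighbor search to labeled expression data. The function names are hypothetical.

```python
import numpy as np

def knn_lists(feats, k):
    """Neighbor lists f_a(0..k) by Euclidean distance; f_a(0) is the sample itself."""
    dist = np.linalg.norm(feats[:, None, :] - feats[None, :, :], axis=-1)
    return np.argsort(dist, axis=1, kind="stable")[:, : k + 1]

def rank(lists, a, b, k):
    """O_a(b): position of b in a's list, or k + 1 if b is not among a's top-k."""
    hits = np.flatnonzero(lists[a] == b)
    return int(hits[0]) if hits.size else k + 1

def asym_dist(lists, a, b, k):
    """Asymmetric distance d(a, b): neighbors of a (up to b's rank) missing from b's top-k."""
    total = 0
    for i in range(min(rank(lists, a, b, k), k) + 1):  # i = 0, ..., min(O_a(b), k)
        total += 0 if rank(lists, b, lists[a][i], k) <= k else 1  # indicator I_b
    return total

def sym_dist(lists, a, b, k):
    """Symmetric distance D(a, b) of Equation (11); assumes a != b so the denominator is >= 1."""
    denom = min(rank(lists, a, b, k), rank(lists, b, a, k))
    return (asym_dist(lists, a, b, k) + asym_dist(lists, b, a, k)) / denom

feats = np.array([[0.0], [1.0], [2.0], [10.0], [11.0], [12.0]])  # two well-separated groups
lists = knn_lists(feats, k=2)
print(sym_dist(lists, 0, 1, 2), sym_dist(lists, 0, 3, 2))  # 0.0 2.0
```

Samples in the same group share all of their top-$k$ neighbors and get distance 0, while samples from different groups share none and get a large distance, which is what the merging threshold in the next step exploits.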

In the third step, we merge all pairs of samples whose distance is below a given threshold. Finally, following the majority rule, all samples within a cluster are assigned the label held by the majority of its members.
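The merging and majority-vote step can be sketched with a union-find structure. The helper below is hypothetical: the threshold value, the use of union-find, and the choice of votes (e.g., ground-truth labels for labeled samples and initial ACNN predictions otherwise) are assumptions for illustration. Ties are broken by whichever label `Counter.most_common` returns first.

```python
from collections import Counter

def merge_and_label(num_samples, pairs, threshold, votes):
    """Merge every pair (a, b, dist) with dist < threshold using union-find, then give
    each cluster the label held by the majority of its members (votes[i] per sample)."""
    parent = list(range(num_samples))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps the trees shallow
            i = parent[i]
        return i

    for a, b, dist in pairs:
        if dist < threshold:
            parent[find(a)] = find(b)

    clusters = {}
    for i in range(num_samples):
        clusters.setdefault(find(i), []).append(i)

    labels = [None] * num_samples
    for members in clusters.values():
        majority = Counter(votes[i] for i in members).most_common(1)[0][0]
        for i in members:
            labels[i] = majority
    return labels

pairs = [(0, 1, 0.5), (1, 2, 0.8), (3, 4, 0.2), (2, 3, 5.0)]
votes = ["happy", "happy", "sad", "angry", "angry"]
print(merge_and_label(5, pairs, threshold=1.0, votes=votes))
# ['happy', 'happy', 'happy', 'angry', 'angry']
```

Here sample 2 is relabeled from "sad" to "happy" because it was merged into a cluster dominated by "happy" votes, while the pair (2, 3) is not merged because its distance exceeds the threshold.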

3.4. Deep Joint Learning Based on Combined Loss Function

Although two-stage methods can learn deep feature representations and perform classification and clustering, their performance may be degraded by errors accumulated during the alternation process. Examining the expression labels assigned by clustering deep facial features, we observed that clusters of different classes overlapped; for instance, 'disgust' and 'anger' share similar facial features, which leads to inter-class similarity. In addition, facial expression intensities within each class vary from low to high, which leads to intra-class variation. The results of [48] demonstrate that expression datasets exhibit high inter-class similarity and large intra-class variation. Moreover, Wen et al. [49] showed that the commonly used softmax loss is not sufficiently discriminative: it cannot ensure high intra-class compactness or inter-class separability.

Based on this analysis, to better classify and cluster the deep facial expression features learned by ACNN, we propose a combined loss function in which the center loss is combined with the additive angular margin loss [50]. The center loss is defined as

$$L_C = \frac{1}{2} \sum_{i=1}^{m} \lVert x_i - c_{y_i} \rVert_2^2 \tag{12}$$

where $x_i$ denotes the $i$-th deep feature, which belongs to the $y_i$-th class, and $c_{y_i}$ denotes the $y_i$-th class center, obtained by averaging the deep features of the $y_i$-th class. $m$ is the size of the training mini-batch, and the class centers are updated on each mini-batch. This loss pulls training samples toward their corresponding class centers.
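A minimal NumPy sketch of the center loss and a mini-batch center update is shown below. The update rule follows the form proposed by Wen et al. [49] (each center of a class present in the batch moves toward that class's features at rate `alpha`); the value `alpha = 0.5` and the function names are illustrative assumptions, not the settings used in ACNN-EC.

```python
import numpy as np

def center_loss(feats, labels, centers):
    """L_C = 1/2 * sum_i ||x_i - c_{y_i}||^2 over a mini-batch of m features."""
    diff = feats - centers[labels]
    return 0.5 * float(np.sum(diff ** 2))

def update_centers(feats, labels, centers, alpha=0.5):
    """Mini-batch center update in the style of Wen et al.: each center of a class
    present in the batch moves toward that class's features at rate alpha."""
    new = centers.copy()
    for j in np.unique(labels):
        mask = labels == j
        delta = (centers[j] - feats[mask]).sum(axis=0) / (1.0 + mask.sum())
        new[j] = centers[j] - alpha * delta
    return new

feats = np.array([[1.0, 0.0], [3.0, 0.0], [0.0, 2.0]])
labels = np.array([0, 0, 1])
centers = np.zeros((2, 2))
print(center_loss(feats, labels, centers))  # 7.0
centers = update_centers(feats, labels, centers)
```

Updating the centers on mini-batches, rather than averaging over the whole training set after every step, keeps the cost of the loss proportional to the batch size.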

The additive angular margin loss was introduced by Deng et al. [50]; it improves the conventional softmax loss by optimizing the feature embedding on a hypersphere manifold, where the learned expression representation is more discriminative. Suppose that $x_i$ denotes the embedding feature computed from the last fully connected layer of the network and $y_i$ is its class label. Defining the angle $\theta_j$ between $x_i$ and the $j$-th class center $W_j$ through $W_j^{\top} x_i = \lVert W_j \rVert \lVert x_i \rVert \cos\theta_j$, the additive angular margin loss is

$$L_A = -\frac{1}{m} \sum_{i=1}^{m} \log \frac{e^{s \cos(\theta_{y_i} + a)}}{e^{s \cos(\theta_{y_i} + a)} + \sum_{j=1, j \ne y_i}^{K} e^{s \cos\theta_j}} \tag{13}$$

where $a$ is the additive angular margin, $s$ is the scaling parameter, and $K$ is the number of expression classes. The loss parameters are set to the values recommended in [50], where more details about the optimization process can be found.
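The additive angular margin loss can be sketched in NumPy as below: features and class centers are L2-normalized, the margin $a$ is added to the angle of the target class only, and a scaled softmax cross-entropy is applied. The defaults `s = 64.0` and `a = 0.5` are the values commonly used with ArcFace for face recognition and are an assumption here; the safeguards that practical implementations add for $\theta_{y_i} + a > \pi$ are omitted.

```python
import numpy as np

def arcface_loss(feats, labels, class_weights, s=64.0, a=0.5):
    """Additive angular margin loss averaged over a mini-batch.
    feats: (m, d) embeddings; class_weights: (K, d) class centers W_j; labels: (m,) ints."""
    x = feats / np.linalg.norm(feats, axis=1, keepdims=True)
    w = class_weights / np.linalg.norm(class_weights, axis=1, keepdims=True)
    cos = np.clip(x @ w.T, -1.0, 1.0)                  # cos(theta_j), shape (m, K)
    rows = np.arange(len(labels))
    logits = s * cos
    # Add the angular margin a to the target-class angle only.
    logits[rows, labels] = s * np.cos(np.arccos(cos[rows, labels]) + a)
    logits -= logits.max(axis=1, keepdims=True)        # numerical stability
    log_probs = logits[rows, labels] - np.log(np.exp(logits).sum(axis=1))
    return float(-log_probs.mean())

class_centers = np.eye(3)
feats = 2.0 * np.eye(3)        # each feature points exactly at its class center
labels = np.array([0, 1, 2])
print(arcface_loss(feats, labels, class_centers, s=10.0, a=0.0),
      arcface_loss(feats, labels, class_centers, s=10.0, a=0.5))  # the margin raises the loss
```

Even for perfectly aligned features, the margin keeps the loss above its margin-free value, so training continues to push each feature further toward its class center in angle.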

The combined loss is

$$L = L_A + \lambda L_C \tag{14}$$

where $L_C$ and $L_A$ denote the center loss and the additive angular margin loss, respectively, and $\lambda$ is a parameter that balances $L_C$ and $L_A$.

3.5. The Pseudocode of the ACNN-EC Framework

This section elaborates on the details of the proposed ACNN-EC for facial expression recognition. The specifics of our algorithm can be found in Algorithm 1. ACNN-EC comprises three main modules: deep feature extractor, clustering and labeling, and deep joint learning.

Algorithm 1. ACNN-EC framework.

Require: labeled data $\mathcal{D}_L$, unlabeled data (a face recognition dataset) $\mathcal{D}_U$, test data $\mathcal{D}_T$, and the maximum number of iterations $N$.

Ensure: label assignments for the test data $\mathcal{D}_T$.

1: Input the labeled data $\mathcal{D}_L$; obtain the AC module by Equations (1), (2), and (3), and construct the ACNN network on the basis of the AC module.

2: Pre-train ACNN with the combined loss by Equations (12)–(14).

3: for $t = 1$ to $N$ do

4: /* deep feature extractor */

5: pre-train (first iteration) or fine-tune (later iterations) DENet on $\mathcal{D}_L$

6: obtain deep feature representations and initial labels for $\mathcal{D}_U$

7: /* clustering and labeling */

8: cluster the deep features of all training data ($\mathcal{D}_L$ and $\mathcal{D}_U$) by Equations (10) and (11)

9: obtain high-confidence data and the corresponding pseudo-labels by label comparison

10: add the high-confidence data and their pseudo-labels to $\mathcal{D}_L$

11: end for

12: obtain a label assignment on the test data $\mathcal{D}_T$
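The control flow of Algorithm 1 can be illustrated with a toy, runnable sketch on 2D points. Everything model-specific is replaced by a hypothetical stand-in: a nearest-centroid classifier plays the role of the (pre-)trained DENet, single-linkage threshold clustering stands in for Equations (10) and (11), and "label comparison" is interpreted as keeping an unlabeled sample only when its initial label agrees with the majority label of the labeled samples in its cluster. These choices are assumptions for illustration, not the ACNN-EC implementation.

```python
import math
from collections import Counter
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]
Sample = Tuple[Point, int]


def fit_centroids(labeled: List[Sample]) -> Dict[int, Point]:
    """Hypothetical stand-in for (pre-)training DENet: one mean feature per class."""
    sums: Dict[int, List[float]] = {}
    counts: Counter = Counter()
    for (px, py), y in labeled:
        acc = sums.setdefault(y, [0.0, 0.0])
        acc[0] += px
        acc[1] += py
        counts[y] += 1
    return {y: (acc[0] / counts[y], acc[1] / counts[y]) for y, acc in sums.items()}


def predict(centroids: Dict[int, Point], p: Point) -> int:
    """Initial label = class of the nearest centroid."""
    return min(centroids, key=lambda y: math.dist(p, centroids[y]))


def threshold_clusters(points: List[Point], d: float) -> List[int]:
    """Hypothetical stand-in for Eqs. (10)-(11): link points closer than d (single linkage)."""
    parent = list(range(len(points)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) < d:
                parent[find(i)] = find(j)
    return [find(i) for i in range(len(points))]


def self_train(labeled: List[Sample], unlabeled: List[Point],
               d: float = 1.0, max_iter: int = 3) -> Tuple[List[Sample], List[Point]]:
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(max_iter):
        centroids = fit_centroids(labeled)                            # deep feature extractor
        initial = [predict(centroids, p) for p in pool]               # initial labels for D_U
        cid = threshold_clusters([p for p, _ in labeled] + pool, d)   # cluster D_L and D_U
        votes: Dict[int, Counter] = {}
        for k, (_, y) in enumerate(labeled):
            votes.setdefault(cid[k], Counter())[y] += 1
        keep: List[Sample] = []
        rest: List[Point] = []
        for k, (p, y0) in enumerate(zip(pool, initial)):
            c = cid[len(labeled) + k]
            majority: Optional[int] = votes[c].most_common(1)[0][0] if c in votes else None
            if majority == y0:  # label comparison: cluster and classifier agree
                keep.append((p, y0))
            else:
                rest.append(p)
        if not keep:
            break
        labeled += keep  # add high-confidence pseudo-labeled data to D_L
        pool = rest
    return labeled, pool
```

Samples whose cluster contains no labeled data, or whose two label sources disagree, stay in the unlabeled pool for later iterations.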

4. Experiments

To demonstrate the validity of the proposed ACNN-EC, we perform extensive experiments on two in-the-wild FER benchmarks, one lab-controlled dataset, and our self-collected dataset. Cross-dataset experiments are then performed on the CK+ and MMI datasets. The MS-Celeb-1M-v1c database is used as the facial expression clustering data, and the samples labeled through clustering are used as pseudo-labeled data to augment the training data of the network. The FER datasets used in our experiments and the details of our implementation are described in the following subsections. ACNN-EC is then compared with several state-of-the-art approaches. In addition, experiments are performed to investigate the influence of each component of the proposed model.

4.1. Description of Databases

The datasets used to evaluate ACNN-EC are described below: four FER datasets are used for inner-database evaluation, MMI is used for cross-database evaluation, and MS-Celeb-1M-v1c provides the unlabeled face data for clustering. We obtained permission to use and display images from the face datasets described below.

(1) The RAF-DB database [9] contains 29,672 real-world facial images. RAF-DB has two emotion subsets: single-label and multi-label. In this study, we adopt the single-label subset, which contains 15,339 images: 12,271 training images and 3068 test images.

(2) The FER2013 database [51] was collected through the Google search engine. FER2013 includes 35,887 images with expression labels: 28,709 training images, 3589 validation images, and 3589 test images.

(3) The extended Cohn–Kanade (CK+) database [52] consists of 593 video sequences from 123 subjects and is one of the standard benchmarks for FER. Only 327 of these sequences are labeled with the seven basic expression labels. From each labeled sequence, the last three frames (peak expression) and the first frame (neutral face) are extracted, yielding 1308 images labeled with seven basic expressions. These 1308 images are then divided into 10 groups for 10-fold cross-validation experiments.

(4) The MMI database [53] is a collection of 326 sequences, 213 of which have basic expression labels; it was constructed in a controlled laboratory environment. Compared with CK+, MMI includes onset-apex-offset labels.

(5) The MS-Celeb-1M-v1c database [54] is a celebrity recognition database and a cleaned version of MS-Celeb-1M [13]. Because the original MS-Celeb-1M contains a large number of noisy faces, we use MS-Celeb-1M-v1c, which is clean while preserving the completeness of the facial images. MS-Celeb-1M-v1c contains 86,876 identities and 3,923,399 aligned images. In this experiment, to reduce the influence of face identity information, we sample 10 images for each of the 86,876 identities in the database, i.e., 868,760 images in total.

(6) Our self-collected dataset was collected in an online learning environment, where we gathered facial expression data from learners. We recruited 10 university students (five males and five females). Data collection was conducted in a laboratory environment; to ensure that the learners' online learning process was not disturbed, we provided them with a free and independent learning environment. The lighting was not specially controlled: natural light from indoor fluorescent lamps and outdoor sunlight was used. Each student watched three ten-minute teaching videos, yielding a total of 30 videos of learners' online learning. Unlike datasets such as CK+ and MMI, in which facial expressions are deliberately induced, our dataset contains spontaneous emotional data recorded in a natural environment. The videos were recorded with a camera (Logitech C1000e), capturing 2D facial motion data at 30 frames per second with a resolution of 512 × 512 pixels.

After filtering out data that did not meet the experimental requirements, we obtained 1067 valid video segments, as shown in Figure 5. Following the processing method for the CK+ dataset, the last three frames (peak expression) and the first frame (neutral face) of each sequence are extracted, yielding 71,185 images. These images were labeled in two ways: for the seven basic expressions, and for three levels of engagement (very engaged, nominally engaged, and not engaged) used to detect involvement. In this experiment, we use only the labels for the seven basic expressions. The labels were obtained through crowdsourcing. We used 56,947 images as training data, 7119 images as validation data, and another 7119 images as test data. The distribution of images among the seven emotions is shown in Table 2.

As can be seen from Table 2, there is a serious class imbalance in our self-collected dataset. In our experiments, we applied random flipping, random rotation, and random scaling to achieve an approximate balance among the classes. In addition, because the number of subjects is relatively small and many training samples are drawn from each subject's data, the dataset exhibits small intra-class distances and large inter-class distances.
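The preprocessing steps described for the self-collected dataset (CK+-style frame selection, a train/validation/test split, and augmentation-based class balancing) can be sketched as follows. This is a hypothetical sketch: the function names, the fixed random seeds, the per-image random split, the ceiling rounding that reproduces the reported 56,947/7119/7119 sizes, and the rotation (±15°) and scale (0.9 to 1.1) ranges are all assumptions, not details given in the text. Applying the planned augmentations to actual images is left out.

```python
import random
from collections import Counter
from typing import Dict, List, NamedTuple, Sequence, Tuple, TypeVar

T = TypeVar("T")


def select_frames(sequence: Sequence[T], expression: str) -> List[Tuple[T, str]]:
    """CK+-style selection: first frame as neutral, last three frames with the sequence label."""
    if len(sequence) < 4:
        raise ValueError("expected at least four frames per sequence")
    return [(sequence[0], "neutral")] + [(f, expression) for f in sequence[-3:]]


def split_train_val_test(items: Sequence[T], seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle, then hold out ceil(n / 10) items each for validation and test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_hold = -(-len(shuffled) // 10)  # integer ceiling of n / 10
    return shuffled[2 * n_hold:], shuffled[:n_hold], shuffled[n_hold:2 * n_hold]


class Augmentation(NamedTuple):
    hflip: bool
    rotation_deg: float
    scale: float


def plan_balanced_augmentation(labels: Sequence[str], seed: int = 0,
                               max_rotation: float = 15.0,
                               scale_range: Tuple[float, float] = (0.9, 1.1),
                               ) -> List[Tuple[int, Augmentation]]:
    """(source index, random augmentation) pairs that top every class up to the largest class."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    indices_by_class: Dict[str, List[int]] = {}
    for idx, y in enumerate(labels):
        indices_by_class.setdefault(y, []).append(idx)
    plan: List[Tuple[int, Augmentation]] = []
    for y, indices in indices_by_class.items():
        for _ in range(target - counts[y]):
            aug = Augmentation(hflip=rng.random() < 0.5,
                               rotation_deg=rng.uniform(-max_rotation, max_rotation),
                               scale=rng.uniform(*scale_range))
            plan.append((rng.choice(indices), aug))
    return plan
```

For CK+, applying `select_frames` to each of the 327 labeled sequences gives 327 × 4 = 1308 images, matching the count in the dataset description.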

4.2. Implementation Details

For all of the datasets used in our experiments, consistent with EfficientFace [29] and MA-Net [55], the face images are detected and aligned using RetinaFace [56], which is robust to occlusions and non-frontal poses. The faces are then resized to 112 × 112 pixels. We initialize the proposed network, ACNN, with weights pre-trained on MS-Celeb-1M. SGD is used as the optimizer, with a mini-batch size of 128, a momentum of 0.9, and a weight decay of 0.0005. The initial learning rate is 0.001 and is multiplied by 0.1 every ten epochs. For the combined loss, we set the balancing parameter $\lambda$ to 0.05. For clustering, we fix the distance threshold $D$ at 2.2, 1.9, 1.7, and 1.7 for the RAF-DB, FER2013, CK+, and self-collected datasets, respectively, and we set the number of nearest neighbors per node for these four datasets to 8, 9, 5, and 7, respectively. Our reported results are the average of five-fold cross-validation experiments. Our method is implemented in PyTorch and runs on two NVIDIA GeForce RTX 3080 Ti GPUs.
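The learning-rate schedule above is a step decay; in PyTorch it corresponds to `torch.optim.SGD(params, lr=0.001, momentum=0.9, weight_decay=5e-4)` combined with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)`. The framework-free sketch below writes out the per-epoch learning rate and gathers the reported hyperparameters in one place; the constant names and dictionary layout are illustrative assumptions.

```python
# Hyperparameters reported in the implementation details (names and layout are illustrative).
BASE_LR = 0.001
GAMMA = 0.1
STEP_EPOCHS = 10
MOMENTUM = 0.9
WEIGHT_DECAY = 5e-4
BATCH_SIZE = 128
LAMBDA = 0.05  # balancing parameter of the combined loss

# Distance threshold D and number of nearest neighbors k per dataset.
CLUSTERING = {
    "RAF-DB": {"D": 2.2, "k": 8},
    "FER2013": {"D": 1.9, "k": 9},
    "CK+": {"D": 1.7, "k": 5},
    "self-collected": {"D": 1.7, "k": 7},
}


def learning_rate(epoch: int) -> float:
    """Step decay: multiply BASE_LR by GAMMA every STEP_EPOCHS epochs (epoch is 0-based)."""
    return BASE_LR * GAMMA ** (epoch // STEP_EPOCHS)
```

Epochs 0 to 9 use 0.001, epochs 10 to 19 use 0.0001, and so on, which matches stepping `StepLR` once per epoch.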

4.3. Results and Discussion

To evaluate the effectiveness of ACNN-EC, we adopt the two most common FER settings: inner-database evaluation and cross-database evaluation. ACNN-EC is compared with various state-of-the-art deep learning methods, using the average classification accuracy as the evaluation metric. In the inner-database evaluation, we evaluate our method on four FER benchmarks, namely RAF-DB, FER2013, CK+, and our self-collected dataset. Table 3, Table 4, Table 5, and Table 6 summarize the FER accuracy on these four datasets, respectively.

Table 3 reports the performance of different models on the RAF-DB dataset. Among the competing models in Table 3, RAN [23], gACNN [24], and OADN [60] aim to disentangle the disturbing factors in facial expression images; RAN and gACNN design regional attention branch networks that assign different weights to facial regions according to the amount of occlusion. SCN [61] was proposed to address the problem of noisy labels. DAS [12] and PAT [59] introduce more manual labels into the training data; compared with such algorithms, our algorithm achieves better results. MobileNet-V2 is employed as the baseline in our experiments, and we also consider efficient CNNs such as EfficientFace [29]. Compared with these FER algorithms based on efficient CNN structures, the proposed ACNN-EC also achieves higher recognition accuracy.

The performance of the models on the FER2013 database is reported in Table 4. Our method achieves an average recognition accuracy of 75.42%. We also compare it with DNNRL [64] and ECNN [65]: DNNRL uses InceptionNet as the backbone network and updates the model parameters according to sample importance, whereas ECNN proposes a probability-based fusion method that combines multiple convolutional neural networks for FER. As Table 4 shows, ACNN-EC obtains the highest recognition accuracy among the compared methods, demonstrating its effectiveness and robustness for FER. On CK+ (Table 5), ACNN-EC also achieves the highest recognition accuracy among all the approaches.

Table 6 presents the inner-dataset results on the self-collected dataset, on which our algorithm achieves excellent performance. Our self-collected dataset was used solely for inner-dataset experiments; to mitigate potential optimistic bias and obtain a more robust evaluation of the proposed method, we conducted five independent repetitions of the experiments. Note that because the number of subjects is relatively small and many training samples are drawn from each subject's data, the intra-class distance is small and the inter-class distance is large, so the overall accuracies of all algorithms are high on the self-collected dataset. In Section 5, we conduct a more detailed quantitative evaluation on the self-collected dataset.

Cross-database experiments were carried out to test the generalizability of our model. For the cross-database evaluation, we trained the network on the RAF-DB database and tested it on two lab-controlled datasets, CK+ and MMI. The results are shown in Table 7 and Table 8; the results of previous studies are taken from [12]. As Table 5 and Table 6 (ACNN-EC vs. WS-LGRN) and Table 8 (ACNN-EC vs. ECANN) show, the performance gains of our proposed algorithm are moderate. However, our algorithm offers the advantage of a reduced computational cost, which is another significant aspect of our experimental analysis; in particular, we examine ACNN-EC in terms of the number of parameters and FLOPs. By highlighting this advantage, we aim to provide a comprehensive understanding of the trade-off between performance gains and computational cost when applying our algorithm.

4.4. Empirical Analysis

In this subsection, the ACNN module is analyzed on the RAF-DB dataset with ResNet-50 and MobileNet-V2 backbones in terms of the number of parameters and FLOPs. We then investigate the effect of expression-guided deep facial clustering and the impact of its hyperparameters. Finally, we perform a visualization analysis of each iteration of the proposed deep joint learning model.

4.4.1. Computational Overhead of Networks

In our experiments, the backbone network is either ResNet-50 [8] or MobileNet-V2 [63], to which expression-guided deep facial clustering is added. Both ResNet-50 and MobileNet-V2 are pre-trained on MS-Celeb-1M. Table 9 compares the number of parameters and FLOPs of our model with those of the baseline ResNet-50 and MobileNet-V2 models on the RAF-DB dataset; the last two rows give the proposed model trained with the two backbone networks. As we can see, MobileNet-V2 benefits the model more than ResNet-50. We attribute this to our algorithm being better suited to small-sample data, whereas the large number of parameters in ResNet-50 may lead to overfitting. In addition, compared with the backbone network, the AC module structure in the lightweight network obtains a higher recognition rate while significantly reducing both the number of parameters and the computational complexity. Our method achieves high accuracy with a small amount of computation, exceeding the baseline by a large margin, from 83.96% to 90.98%.

Compared with the networks used in previous works, which often adopt ResNet or VGG-16 as the backbone, our network is relatively small. Furthermore, compared with FER algorithms based on efficient CNN structures, such as EfficientFace [29] and MobileNet-V2 [63], the proposed ACNN model achieves better recognition accuracy, although it requires more computational resources and memory than EfficientFace. This is one of the main advantages of this method.
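Parameter and FLOP counts such as those in Table 9 are sums of per-layer formulas. As an illustration only (not the ACNN architecture itself), the sketch below counts the weights and multiply-accumulate operations (MACs) of a standard k × k convolution and of the depthwise-separable factorization used in MobileNet-style networks, ignoring biases and batch normalization. The function names and the example layer shape are hypothetical; FLOPs are reported either as MACs or as 2 × MACs depending on the convention.

```python
from typing import Tuple


def conv_cost(k: int, c_in: int, c_out: int, h_out: int, w_out: int) -> Tuple[int, int]:
    """Standard k x k convolution: (parameters, multiply-accumulates), biases ignored."""
    params = k * k * c_in * c_out
    return params, params * h_out * w_out


def separable_cost(k: int, c_in: int, c_out: int, h_out: int, w_out: int) -> Tuple[int, int]:
    """Depthwise k x k convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution mixing channels
    params = depthwise + pointwise
    return params, params * h_out * w_out
```

For a 3 × 3 layer mapping 32 to 64 channels, the standard convolution has 18,432 weights, whereas the separable version has 288 + 2048 = 2336, roughly 7.9 times fewer, with the same ratio for MACs at a fixed output size.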

4.4.2. Quantitative Evaluation of Expression-Guided Deep Face Clustering

To verify the effect of expression-guided deep facial clustering on network training, we first investigate its performance within the deep joint learning model on four FER datasets in Table 10.

As can be seen from Table 10, the number of initially labeled training images is relatively small, and so is the number of images selected and labeled through clustering, resulting in poor initial accuracy. However, iteratively fine-tuning the network with the available labeled training data significantly improves the accuracy. Specifically, starting from the second iteration, we fine-tune the network with the newly labeled training data, and the accuracy on the RAF-DB dataset improves to 87.29% and 90.98%. This shows that expression-guided deep facial clustering in ACNN-EC effectively improves network training and accuracy.

4.4.3. Impact of Clustering Hyperparameters

Given the importance of expression-guided deep facial clustering, we investigate the impact of its hyperparameters. We empirically set the clustering hyperparameters for the four datasets, as shown in Table 11; the distance thresholds are 2.2, 1.9, 1.7, and 1.7, respectively. There is no formal method for selecting the most effective distance threshold, so it is determined empirically. For extremely large datasets such as MS-Celeb-1M-v1c, a fundamental concern is time cost, which is therefore also an important evaluation metric. Table 11 shows that, on the deep feature spaces generated for the four FER datasets, the running time of expression-guided deep facial clustering is satisfactory for large-scale face clustering, which confirms our primary motivation for adopting this clustering algorithm.
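The roles of the two clustering hyperparameters can be illustrated with a generic k-nearest-neighbor graph clustering, used here only as a hypothetical stand-in because Equations (10) and (11) are not reproduced in this section: each node is linked to its k nearest neighbors, edges at least as long as the distance threshold D are discarded, and connected components become clusters. Smaller D or k yields more, purer clusters; larger values merge more samples. The brute-force neighbor search is O(n²) and only meant for illustration; large-scale face clustering would normally use an approximate nearest-neighbor index.

```python
import math
from typing import List, Sequence


def knn_threshold_clusters(features: Sequence[Sequence[float]], k: int, d: float) -> List[int]:
    """Cluster ids from a kNN graph whose edges are kept only if shorter than d."""
    n = len(features)
    parent = list(range(n))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(n):
        # Brute-force k nearest neighbors of node i.
        neighbors = sorted((math.dist(features[i], features[j]), j) for j in range(n) if j != i)
        for dist, j in neighbors[:k]:
            if dist < d:  # distance threshold D prunes long edges
                parent[find(i)] = find(j)
    return [find(i) for i in range(n)]
```

The returned ids are union-find roots, so only equality between ids is meaningful, not their values.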

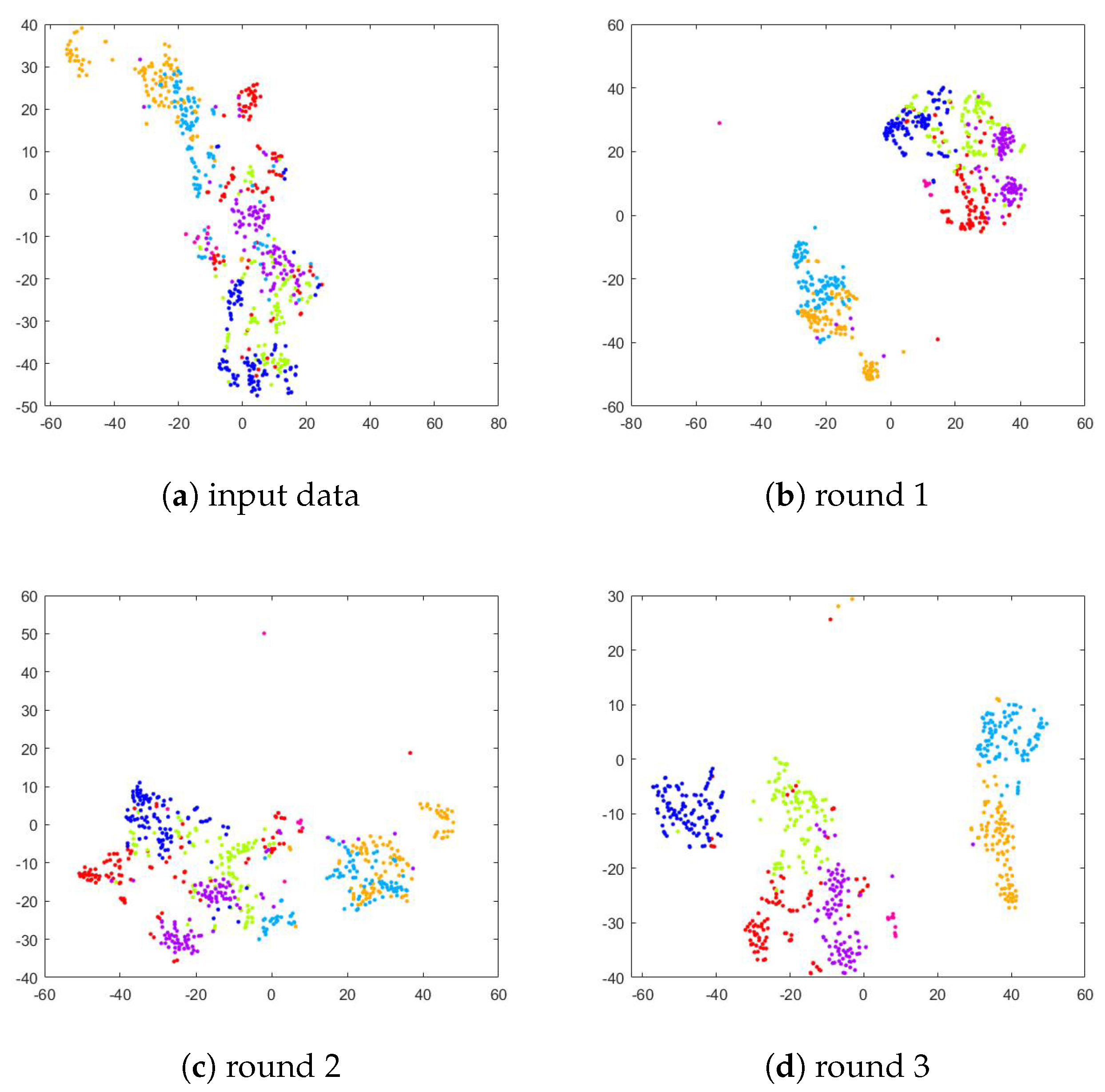

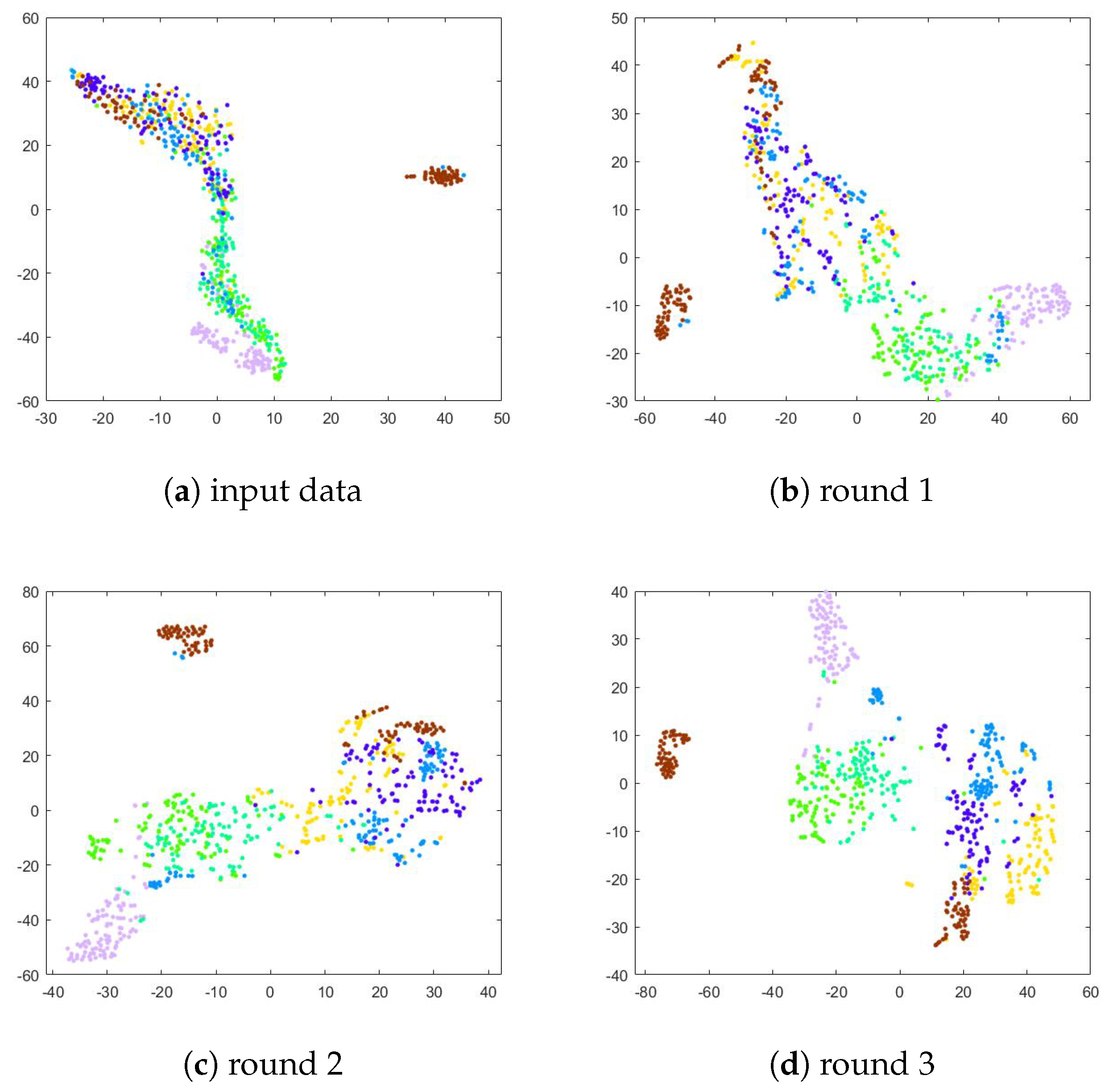

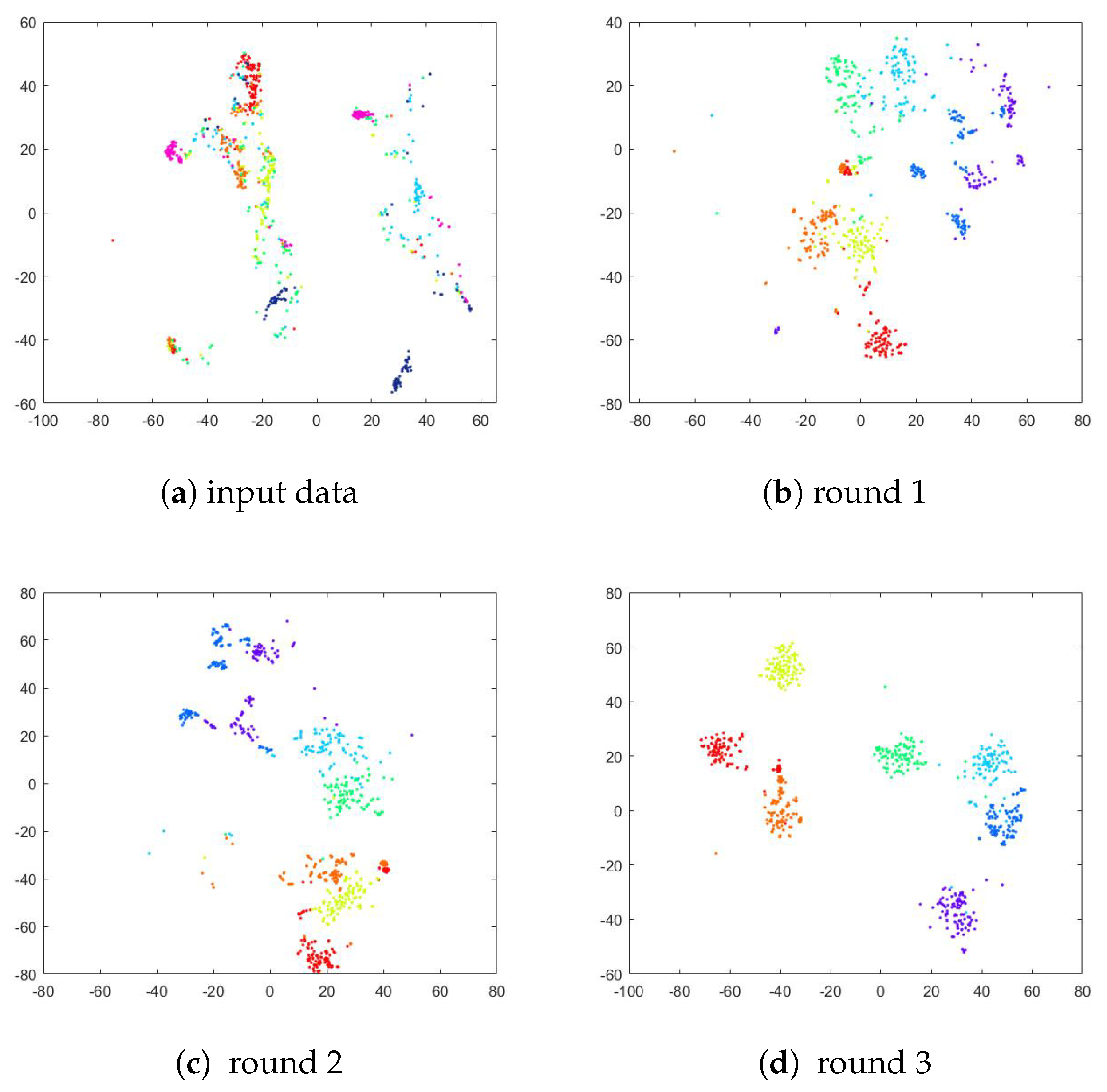

4.4.4. Deep Feature Visualization and Analysis

To verify the effect of the deep joint learning framework on network training, we compare t-SNE [70] visualizations of the extracted features in the original feature space and after each iteration. Specifically, after training, we extract features from the images using the trained representation-learning models and apply t-SNE to visualize their projections. As shown in Figure 6, Figure 7 and Figure 8, the separability between classes in the original feature space is poor because different face images share similar facial features. In subsequent iterations, samples of the same class become increasingly concentrated. This more discriminative feature space not only improves the clustering performance but also improves the classification accuracy. Hence, ACNN-EC better clusters data of the same class, indicating that it learns more discriminative features for FER.

5. Quantitative Evaluation

5.1. Quantitative Evaluation Using Self-Collected Dataset

In this section, to validate the effectiveness of the proposed method in practical applications, we evaluate various classification methods on the self-collected dataset. Following metrics widely adopted in previous research on object detection and classification, namely accuracy, precision, recall, and F1 score, we conduct a comprehensive quantitative evaluation of the proposed algorithm. True positives (TP), false positives (FP), true negatives (TN), and false negatives (FN) denote the numbers of samples in each of these categories. Accuracy is the proportion of correctly classified samples among all samples, i.e., (TP + TN)/(TP + FP + TN + FN). Precision is TP/(TP + FP), recall is TP/(TP + FN), and the F1 score is the harmonic mean of precision and recall.
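The definitions above translate directly into code. In the multi-class setting, each emotion is treated one-vs-rest to obtain its TP, FP, TN, and FN counts. Returning 0.0 when a ratio is undefined is a convention assumed by this sketch, and the function name is hypothetical.

```python
from typing import Dict


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 from one-vs-rest counts (0.0 when undefined)."""
    total = tp + fp + tn + fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }
```

For example, with TP = 8, FP = 2, TN = 85, and FN = 5, precision is 0.8, recall is 8/13, accuracy is 0.93, and F1 is 16/23.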

In Section 4.3 (Table 6), we compared our proposed algorithm with other popular methods for facial expression recognition. Overall, our algorithm achieved the highest classification performance, with an accuracy of 95.87% on the self-collected dataset. Algorithms that use ViT as the backbone, such as MVT [66], VTFF [67], and TransFER [71], also obtained competitive results, although they did not surpass our method.

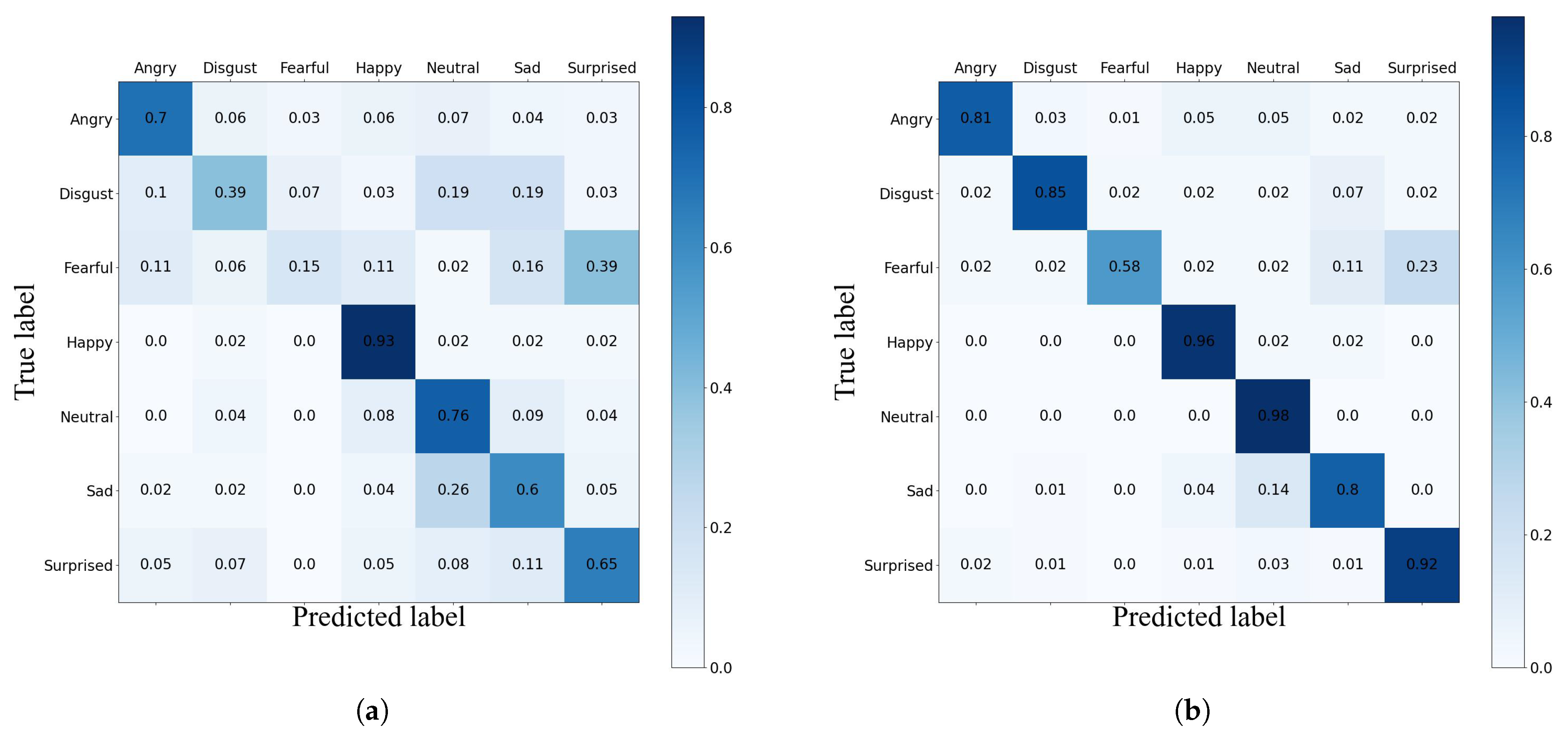

Table 12 presents the precision, recall, F1 score, and accuracy of our method on the self-collected dataset for each of the seven emotion categories. The recognition accuracy is highest for the “Neutral” and “Happy” categories, at 98.52% and 95.96%, respectively. We attribute the former to the large and diverse set of “Neutral” training samples, and the latter to the distinct facial features of happiness, which tend to be consistent across individuals. Although we applied data augmentation during training to address the class imbalance in the self-collected dataset, conventional data augmentation affected the “Disgust” and “Fearful” categories differently: the recognition rate for “Disgust” reached 84.75%, whereas that for “Fearful” was only 57.58%. Next, we analyze the underlying reasons using the confusion matrices from the initial and final iterations.

5.2. Evaluation Based on Confusion Matrix

To understand the patterns of misclassification within individual categories of the self-collected dataset, we present the confusion matrices of the initial and final iterations on the test set. Figure 9 shows that, despite the data augmentation applied to the categories with fewer samples, the ‘Fearful’ category had low accuracy in the initial iteration, which can be attributed to its lack of sample diversity and the difficulty of identifying it. However, the accuracy for this category improved significantly after new samples were added. The newly added samples had little impact on the classification of ‘Happy’ expressions. In contrast, the ‘Disgusted’ and ‘Surprised’ categories, which also had low accuracy in the initial iteration, improved significantly.
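A confusion matrix of the kind shown in Figure 9 can be built from true and predicted labels; dividing each diagonal entry by its row total gives the per-class recall, which is how the change for a category such as ‘Fearful’ between iterations can be read off. The layout (rows index the true class) and the function names are assumptions of this sketch.

```python
from typing import Dict, List, Sequence


def confusion_matrix(y_true: Sequence[str], y_pred: Sequence[str],
                     classes: Sequence[str]) -> List[List[int]]:
    """Rows index the true class, columns the predicted class."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def per_class_recall(matrix: List[List[int]], classes: Sequence[str]) -> Dict[str, float]:
    """Diagonal entry divided by the row total (0.0 for classes absent from y_true)."""
    return {c: (row[i] / sum(row) if sum(row) else 0.0)
            for i, (c, row) in enumerate(zip(classes, matrix))}
```

Off-diagonal entries in a row show which classes absorb the misclassified samples of that true class.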

5.3. Ablation Study on Using Self-Collected Dataset

To assess the contribution of each component in a real-world application, we conducted an ablation study of the individual modules.

Table 13 shows the importance of each module of our method on the self-collected dataset. Model A directly uses the MobileNet-V2 network in place of the proposed ACNN network. Model B omits the clustering-labeled samples (EC) and performs only one iteration. Model C uses the softmax loss in place of the combined loss. Model D is the full ACNN-EC algorithm. Table 13 shows that all components are helpful: Models A, B, and C achieve accuracies of 89.97%, 75.08%, and 93.91%, respectively, whereas our algorithm achieves 95.87%, outperforming them by margins of 5.90, 20.79, and 1.96 percentage points. The clustering-based sample labeling contributes the most to performance, mainly because it provides a substantial number of labeled samples, which greatly benefits network training.

5.4. Limitations and Discussion

In the context of facial expression recognition applied to education, our proposed method achieved an accuracy of 95.87% on the self-collected dataset, surpassing the other compared methods. This level of accuracy demonstrates the potential of our algorithm to enhance emotion recognition systems for educational applications, paving the way for more effective and personalized learning experiences for students. Although our method performs well in the inner-dataset setting on our self-collected dataset, we acknowledge the challenge of generalizing to previously unseen face data, especially data with limited samples. Fine-tuning the model on new datasets may not always yield optimal results because of domain shift and data scarcity. Additionally, the clustering accuracy may decrease when certain emotions are underrepresented or when there is wide variation within an expression category.

In real-world applications, face images are typically not cropped, and there may be elements around the face such as hair, hats, or hands. Handling such uncropped, high-resolution images warrants further applied research and is an area we will focus on in future work. In addition, it will be crucial to evaluate the proposed method on real-world datasets with diverse environmental conditions, lighting, and facial expressions to validate its effectiveness in practical applications. Accurate emotion recognition is of paramount importance in education, as it enables timely and tailored support for learners, ultimately improving educational outcomes.