Abstract

Coal is an important resource that is closely related to people’s lives and plays an irreplaceable role. However, coal mine safety accidents occur from time to time in the process of working underground. Therefore, this paper proposes a coal mine environmental safety early warning model to detect abnormalities and ensure worker safety in a timely manner by assessing the underground climate environment. In this paper, support vector machine (SVM) parameters are optimized using an improved artificial hummingbird algorithm (IAHA), and its safety level is classified by combining various environmental parameters. To address the problems of insufficient global exploration capability and slow convergence of the artificial hummingbird algorithm during iterations, a strategy incorporating Tent chaos mapping and backward learning is used to initialize the population, a Levy flight strategy is introduced to improve the search capability during the guided foraging phase, and a simplex method is introduced to replace the worst value before the end of each iteration of the algorithm. The IAHA-SVM safety warning model is established using the improved algorithm to classify and predict the safety of the coal mine environment as one of four classes. Finally, the performance of the IAHA algorithm and the IAHA-SVM model are simulated separately. The simulation results show that the convergence speed and the search accuracy of the IAHA algorithm are improved and that the performance of the IAHA-SVM model is significantly improved.

1. Introduction

Coal, as a precious resource on Earth, has played a significant role in the economic development of many countries, leading to increased surface and underground mining activities in countries with abundant coal reserves [1]. However, most coal mines are prone to safety hazards due to the unpredictable nature of coal seams and the inherent risks associated with underground mining [2]. Various types of mining disasters have occurred, such as roof accidents, gas explosions, fires, flooding, and gas poisoning [3]. These disasters not only pose a serious threat to the safety of miners but also result in substantial property damage for coal mining enterprises. Safety accidents are closely related to the mining environment. For example, methane is the primary component of mine gas, and timely monitoring of methane concentration in the mining environment, coupled with appropriate protective measures, can greatly reduce the risk of gas explosions. In the event of a fire, the temperature rises rapidly, but real-time monitoring of temperature changes in the mine and prompt firefighting measures can help reduce casualties. Toxic gases in underground mines can harm workers' health, so timely monitoring of toxic gas concentrations in the current area helps safeguard miners' well-being. Therefore, strengthening coal mine environmental monitoring and early warning systems is an effective means to reduce accidents and holds great significance for ensuring coal mine safety and production.

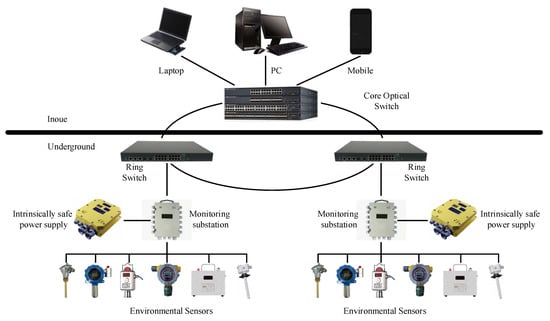

Figure 1 illustrates a commonly used framework for underground environmental monitoring systems, consisting of two main parts: surface (above ground) and underground components. The surface part primarily comprises a switch and user terminals, while the underground part consists of monitoring substations, ring network switches, power supplies, and sensors. Various sensors are employed to monitor the actual underground environment, and the data are transmitted in real time to the surface, enabling users to observe the underground conditions at any given time. With the continuous advancement of Internet of Things (IoT) technology, wireless sensor networks (WSNs) have become vital tools for underground environmental monitoring. Many experts utilize WSNs to collect underground environmental data for further research [4,5,6,7]. The data used in this study were also collected in real-time through WSNs.

Figure 1.

Framework of underground coal mine environmental monitoring system.

With the development of computer technology, machine learning has gradually become integrated into our daily lives [8], and its applications in coal mine safety are extensive. Kumari et al. proposed a deep learning model combining uniform manifold approximation and projection (UMAP) with long short-term memory (LSTM) in their paper [9]. They used this model to predict the fire status in sealed areas of coal mines, aiding in the early implementation of fire control strategies to prevent further losses caused by mine fires. Slezak et al., in their publication [10], combined machine learning techniques with sensor technology to develop a decision support system, which they applied to predict the risk level of coal mine methane. The system demonstrated good predictive accuracy. In another study by Jo et al. [11], the authors proposed an underground mine air quality prediction system based on Azure machine learning. This system allows for the rapid assessment and prediction of air quality in coal mines, providing a safety guarantee for the underground environment. These examples demonstrate the necessity of applying machine learning to analyze various coal mine safety data for risk assessment, ensuring efficiency and accuracy.

Intelligent optimization algorithms are an important research area within machine learning. Based on heuristic principles, these algorithms can cope with the uncertainty and complexity of problems during the optimization process, making them suitable for various types of optimization problems [12]. Well-known algorithms in this field include particle swarm optimization (PSO) [13], the grey wolf optimizer (GWO) [14], and the whale optimization algorithm (WOA) [15]. In recent years, researchers worldwide have conducted in-depth studies on these algorithms and applied them to complex optimization problems. The artificial hummingbird algorithm (AHA) is an intelligent optimization algorithm proposed by Zhao et al. in 2022 [16]. It simulates the unique flight skills and intelligent foraging strategies of hummingbirds in nature for optimization purposes. Compared to other intelligent optimization algorithms, AHA has the advantages of having fewer parameters, a stable convergence speed, strong optimization capabilities, and high implementation efficiency. However, when solving complex problems, AHA may encounter issues such as becoming trapped in local optima, insufficient global exploration capability, and slow convergence speed. To address these limitations, experts have proposed various methods to improve the algorithm, making it more suitable for a wide range of optimization problems.

Support vector machine (SVM) is a type of generalized linear classifier used for binary classification in supervised learning [17]. Compared to other classification algorithms, SVM offers several advantages, including simplicity, robustness, and generality. As a result, many researchers have applied SVM to various application domains for classification tasks. SVM relies on two important parameters: the penalty factor (C) and the kernel function parameter (g), which determine the performance of SVM. However, these parameters are often selected manually, and choosing inappropriate values can lead to overfitting or underfitting. To ensure the proper selection of the penalty factor (C) and the kernel function parameter (g), researchers frequently employ intelligent optimization algorithms to optimize these parameters [18,19,20].

Inspired by the aforementioned research, we propose using an intelligent optimization algorithm combined with SVM to construct a coal mine environmental safety warning model. To improve the model’s performance, we employ an enhanced version of the artificial hummingbird algorithm (AHA) to optimize the penalty factor (C) and the kernel function parameter (g). In this study, we introduce an improved version called IAHA, which incorporates the following modifications: (1) initialization of the population using a fusion of the Tent chaotic map and reverse learning strategy, (2) introduction of the Levy flight strategy during the guided foraging phase, and (3) incorporation of the simplex method at the end of each iteration to replace the worst solution. We evaluate the effectiveness and accuracy of the improved IAHA algorithm by conducting simulation experiments on eight benchmark test functions. We compare IAHA with four basic algorithms, three single-stage improved AHA algorithms, and two existing improved artificial hummingbird algorithms. The results demonstrate the effectiveness and accuracy of the proposed improvements. Furthermore, we combine IAHA with SVM to predict the safety level of coal mine environments and conduct simulation experiments to evaluate the performance of the improved model in this context.

The organization of this paper is as follows:

In Section 2, we provide an introduction to the basic theories of the artificial hummingbird algorithm and support vector machine.

In Section 3, we propose an improved artificial hummingbird algorithm, namely IAHA, and analyze its performance.

In Section 4, we utilize the improved algorithm to optimize the parameters of SVM and construct the IAHA-SVM coal mine environmental safety warning model. We compare the performance of this model with that of the AHA-SVM model optimized by the basic AHA algorithm.

In Section 5, we summarize the findings of this study and provide an outlook for future work.

By following this organizational structure, we aim to present a comprehensive study on the application of the IAHA algorithm combined with SVM in the prediction of coal mine environmental safety.

2. Related Work

In this section, we provide an introduction to the fundamental theories of the artificial hummingbird algorithm (AHA) and support vector machine (SVM). This foundation is crucial for the subsequent discussions on algorithm improvements and model construction.

2.1. Artificial Hummingbird Algorithm

AHA is an algorithm that simulates the flight skills and foraging behavior of hummingbirds. It consists of four main phases: initialization, food guiding, area foraging, and migration foraging. In the AHA algorithm, a memory function is implemented by introducing an access table. This table records the quality level of different food sources chosen by hummingbirds. The access level is determined by the time interval since the last visit to the food source. A longer time interval corresponds to a higher access level, and hummingbirds prioritize higher-access-level food sources. If two food source levels are the same, hummingbirds choose the one with the highest nectar replenishment rate, which is determined by the current fitness value. By incorporating these mechanisms, the AHA algorithm allows hummingbirds (representing solutions in the algorithm) to efficiently search for optimal solutions in the search space. The memory function and selection criteria based on the nectar replenishment rate help guide the exploration and exploitation of the search space, enabling the algorithm to find high-quality solutions.

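The three flight skills of the hummingbird are axial flight, diagonal flight, and omnidirectional flight, which are used to control the direction vector in d-dimensional space. Axial flight is described as follows:

$$D^{(i)} = \begin{cases} 1, & i = \mathrm{randi}([1, d]) \\ 0, & \text{otherwise} \end{cases} \qquad i = 1, \ldots, d \tag{1}$$

Diagonal flight is described as follows:

$$D^{(i)} = \begin{cases} 1, & i = P(j),\ j \in [1, k],\ P = \mathrm{randperm}(k),\ k \in [2, \lceil r_1 (d - 2) \rceil + 1] \\ 0, & \text{otherwise} \end{cases} \qquad i = 1, \ldots, d \tag{2}$$

Omnidirectional flight is defined as follows:

$$D^{(i)} = 1, \qquad i = 1, \ldots, d \tag{3}$$

where $D^{(i)}$ indicates flight skills, randi([1,d]) indicates the generation of random integers from 1 to d, $\mathrm{randperm}(k)$ denotes a random integer arrangement from 1 to k, and $r_1$ denotes a random number in the range of [0, 1].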
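As an illustration, the three flight skills can be sketched as functions that return a 0/1 direction vector. This is a minimal sketch rather than the authors' implementation: the function names are hypothetical, and the diagonal flight takes the number of active dimensions at the upper end of its allowed range, which is an assumption.

```python
import math
import random


def axial_flight(d, rng):
    """Direction vector with exactly one randomly chosen active dimension."""
    direction = [0] * d
    direction[rng.randrange(d)] = 1
    return direction


def diagonal_flight(d, rng):
    """Direction vector with k active dimensions, 2 <= k <= d - 1 (requires d >= 2)."""
    r1 = rng.random()
    # Assumption: k is taken at the upper end of [2, ceil(r1 * (d - 2)) + 1].
    k = max(2, math.ceil(r1 * (d - 2)) + 1)
    direction = [0] * d
    for index in rng.sample(range(d), k):
        direction[index] = 1
    return direction


def omnidirectional_flight(d):
    """Direction vector in which every dimension is active."""
    return [1] * d
```

A hummingbird would pick one of these three vectors at random in each iteration and use it to mask the dimensions along which its position is changed.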

2.1.1. Initialization

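A population of n hummingbirds is placed on n food sources, and the food source locations are initialized as follows:

$$x_i = Low + r \cdot (Up - Low), \qquad i = 1, \ldots, n \tag{4}$$

where $x_i$ denotes the ith food source location; $Up$ and $Low$ are the upper and lower limits of the search space, respectively; r is a random number in the range of [0, 1]; and n is the population size.

The food source access table is initialized as follows:

$$VT_{i,j} = \begin{cases} 0, & i \neq j \\ \text{null}, & i = j \end{cases} \qquad i, j = 1, \ldots, n \tag{5}$$

where $VT_{i,j} = \text{null}$ (for $i = j$) indicates that the hummingbird is foraging at its specific food source, and $VT_{i,j} = 0$ (for $i \neq j$) indicates that the jth food source is currently visited by the ith hummingbird.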
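The initialization step can be sketched as follows. This is an illustrative sketch under two assumptions: a separate random number is drawn for every dimension, and Python's `None` stands in for the null entry of the access table. The function names are hypothetical.

```python
import random


def init_food_sources(n, low, up, rng):
    """Place n food sources uniformly at random inside the box [low, up]."""
    d = len(low)
    return [
        [low[j] + rng.random() * (up[j] - low[j]) for j in range(d)]
        for _ in range(n)
    ]


def init_access_table(n):
    """n x n access table: None (null) on the diagonal, 0 everywhere else."""
    return [[None if i == j else 0 for j in range(n)] for i in range(n)]
```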

2.1.2. Guided Foraging

With a probability of 50%, hummingbirds perform guided foraging: following their current flight skill, they visit the target food source, thus obtaining the candidate food source. The candidate food source location is updated as follows:

$$v_i(t+1) = x_{i,tar}(t) + a \cdot D \cdot \left( x_i(t) - x_{i,tar}(t) \right) \tag{6}$$

where $v_i(t+1)$ denotes the position of the ith candidate food source in iteration $t+1$, $x_i(t)$ denotes the position of the ith food source in the tth iteration, $x_{i,tar}(t)$ denotes the position of the target food source that the ith hummingbird intends to visit, and a is a guiding factor obeying a normal distribution (mean, 0; standard deviation, 1). The food source locations are updated as follows:

$$x_i(t+1) = \begin{cases} x_i(t), & f(x_i(t)) \le f(v_i(t+1)) \\ v_i(t+1), & f(x_i(t)) > f(v_i(t+1)) \end{cases} \tag{7}$$

where $x_i(t+1)$ denotes the position of the ith food source in iteration $t+1$, and $f(\cdot)$ denotes the fitness value of the function.
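A sketch of guided foraging for a minimization problem is given below. It picks the target with the highest access level, breaks ties by the best fitness, builds the candidate from the target and the current position, and keeps the candidate only if it improves the fitness. The function names are hypothetical, and the access table update that follows foraging is omitted for brevity.

```python
import random


def select_target(i, access_table, fitness):
    """Highest access level wins; ties are broken by the lowest fitness."""
    levels = [(level, j) for j, level in enumerate(access_table[i]) if j != i]
    top = max(level for level, _ in levels)
    tied = [j for level, j in levels if level == top]
    return min(tied, key=lambda j: fitness[j])


def guided_foraging(i, positions, fitness, access_table, direction, objective, rng):
    """Move hummingbird i toward its target source; greedy replacement."""
    tar = select_target(i, access_table, fitness)
    a = rng.gauss(0.0, 1.0)  # guiding factor drawn from N(0, 1)
    x_i, x_tar = positions[i], positions[tar]
    candidate = [
        x_tar[k] + a * direction[k] * (x_i[k] - x_tar[k])
        for k in range(len(x_i))
    ]
    candidate_fitness = objective(candidate)
    if candidate_fitness < fitness[i]:
        positions[i], fitness[i] = candidate, candidate_fitness
    return tar
```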

2.1.3. Territorial Foraging

In territorial foraging, which is also selected with a probability of 50%, hummingbirds search for new food sources near their own territory according to their current flight skill, with locations updated as follows:

$$v_i(t+1) = x_i(t) + b \cdot D \cdot x_i(t) \tag{8}$$

where b is a territorial factor that follows a normal distribution (mean, 0; standard deviation, 1).

2.1.4. Migration Foraging

When the migration condition is met, migratory foraging is conducted: the food source with the worst nectar replenishment rate is replaced with a new, randomly generated food source. The food source location is updated as follows:

$$x_{wor}(t+1) = Low + r \cdot (Up - Low) \tag{9}$$

where $x_{wor}$ indicates the location of the food source with the worst nectar replenishment rate in the population.
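Territorial and migration foraging can be sketched in the same style for a minimization problem. The function names are hypothetical, the migration condition itself is left to the caller, and drawing one random number per dimension is an assumption.

```python
import random


def territorial_foraging(i, positions, fitness, direction, objective, rng):
    """Perturb hummingbird i around its own position; greedy replacement."""
    b = rng.gauss(0.0, 1.0)  # territorial factor drawn from N(0, 1)
    x_i = positions[i]
    candidate = [x_i[k] + b * direction[k] * x_i[k] for k in range(len(x_i))]
    candidate_fitness = objective(candidate)
    if candidate_fitness < fitness[i]:
        positions[i], fitness[i] = candidate, candidate_fitness


def migration_foraging(positions, fitness, low, up, objective, rng):
    """Replace the worst food source with a random point inside [low, up]."""
    worst = max(range(len(fitness)), key=lambda j: fitness[j])
    positions[worst] = [
        low[k] + rng.random() * (up[k] - low[k]) for k in range(len(low))
    ]
    fitness[worst] = objective(positions[worst])
    return worst
```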

2.2. Support Vector Machine

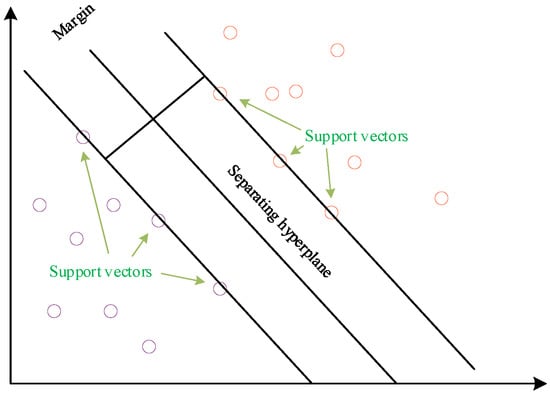

Support vector machine (SVM) is a powerful and widely used machine learning algorithm for classification and regression problems. It offers numerous advantages, including the use of a small number of training samples, a short training time, and a simple structure [21]. The fundamental idea behind SVM is to find an optimal hyperplane that separates samples from different classes. In binary classification problems, the hyperplane can be viewed as an (n-1)-dimensional decision boundary, where n represents the number of features. The goal of the algorithm is to identify a hyperplane with the largest possible margin, thereby maximizing the separation between samples from different classes. The samples that are closest to the hyperplane are referred to as support vectors, as depicted in Figure 2.

Figure 2.

Optimal partition plane.

The core idea of SVM is to address nonlinear problems by mapping the samples to a high-dimensional space and finding a linear hyperplane in that space. To this end, Lagrange multipliers are introduced to solve the quadratic optimization problem subject to inequality constraints. Solving this problem yields a classification hyperplane that maximizes the margin between different classes. Finally, the classification hyperplane is mapped back to the original input space, achieving nonlinear classification in the original input space. The classification function can be expressed as:

$$f(x) = \operatorname{sgn}\left( \sum_{i=1}^{l} \alpha_i y_i K(x_i, x) + b \right), \qquad 0 \le \alpha_i \le C \tag{10}$$

where the training set is $T = \{(x_i, y_i)\}_{i=1}^{l}$; $\operatorname{sgn}(\cdot)$ returns the sign (+ or -) taken by the expression; $\alpha_i$ denotes the Lagrange multipliers; and b is the threshold determined after training the data. C represents the penalty factor, which controls the degree of punishment for misclassified samples and balances the tradeoff between maximizing the margin and minimizing the classification errors. A high value of C indicates low tolerance for errors and may lead to overfitting, whereas a low value of C may result in underfitting. In either case, a value of C that is too large or too small decreases the model’s generalization ability. $K(x_i, x)$ represents the kernel function. In this study, the RBF kernel function is selected as the nonlinear mapping function for the SVM model. The expression for the RBF kernel function is as follows:

$$K(x_i, x) = \exp\left( -g \lVert x_i - x \rVert^2 \right) \tag{11}$$

where g is the kernel function parameter, and the value of g affects the speed and results of SVM model training and prediction.
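To make the roles of the kernel parameter g and the trained quantities concrete, the following sketch evaluates an RBF kernel and a kernelized decision function in plain Python. It assumes the common form of the RBF kernel in which g multiplies the squared distance, and it takes the support vectors, labels, multipliers, and threshold as given; it does not train an SVM. The function names are hypothetical.

```python
import math


def rbf_kernel(x, z, g):
    """RBF kernel: exp(-g * squared Euclidean distance between x and z)."""
    return math.exp(-g * sum((a - b) ** 2 for a, b in zip(x, z)))


def svm_decision(x, support_vectors, labels, alphas, b, g):
    """Sign of the kernel expansion over the support vectors plus threshold b."""
    score = b + sum(
        alpha * y * rbf_kernel(sv, x, g)
        for sv, y, alpha in zip(support_vectors, labels, alphas)
    )
    return 1 if score >= 0 else -1
```

A larger g makes the kernel decay faster with distance, so each support vector influences a smaller neighborhood; this is one reason why g and C have to be tuned together.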

3. Our Proposed IAHA Algorithm

In this section, we propose an improved version of the artificial hummingbird algorithm (AHA) called IAHA, which incorporates multiple enhancement strategies. We then compare IAHA with four basic algorithms, three single-stage improved AHA algorithms, and two existing improved artificial hummingbird algorithms. The comparison is conducted through simulation experiments on eight benchmark test functions and evaluated using the Wilcoxon rank-sum test. The experimental results demonstrate that IAHA outperforms all of the compared algorithms, exhibiting a faster convergence speed, stronger global optimization capability, and better overall performance.

3.1. Improvement Strategies

3.1.1. Combining Tent Chaos Mapping with Backward Learning to Initialize Populations

The standard initialization strategy of AHA adopts a random initialization approach, which often leads to an uneven distribution of individuals in the population and insufficient population diversity. Previous research has shown that an effective population initialization strategy plays a crucial role in improving the optimization accuracy and convergence speed of the algorithm [22]. Chaotic mapping is frequently used to enhance the population initialization of intelligent optimization algorithms. Among them, Tent and logistic mapping are the most common chaotic models. However, Tent chaotic mapping exhibits better exploration capability and convergence speed compared to logistic chaotic mapping [23]. Therefore, in this study, we select Tent chaotic mapping as the foundation for population initialization. The formula for Tent chaotic mapping is as follows:

where . The initialized population () is obtained according to Tent chaos mapping. The inverse solution () for the initialized population () is calculated as:

where and are the maximum and minimum values of the d dimension in the ith individual corresponding to , respectively. Then, the fitness values of each individual within and are calculated, and the most outstanding individual is selected as the initial population individual after comparison using the following equation:

where and denote the fitness values of individuals in and , respectively.
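The initialization idea can be sketched as follows for a minimization problem. Several details here are assumptions made for illustration and are not taken from the paper: the particular Tent map form with breakpoint 0.7, the seed value, the use of the search bounds (rather than per-dimension population extremes) to build the opposite solution, and the pairwise selection between each individual and its opposite. The function names are hypothetical.

```python
def tent_sequence(length, z0=0.37, alpha=0.7):
    """Chaotic sequence in [0, 1] from a Tent map with breakpoint alpha."""
    z, values = z0, []
    for _ in range(length):
        z = z / alpha if z < alpha else (1.0 - z) / (1.0 - alpha)
        values.append(z)
    return values


def tent_opposition_init(n, low, up, objective, z0=0.37):
    """Tent-map population plus opposite solutions; keep the fitter of each pair."""
    d = len(low)
    chaos = tent_sequence(n * d, z0)
    population = []
    for i in range(n):
        x = [low[j] + chaos[i * d + j] * (up[j] - low[j]) for j in range(d)]
        x_opposite = [low[j] + up[j] - x[j] for j in range(d)]
        population.append(min(x, x_opposite, key=objective))
    return population
```

The chaotic sequence spreads the initial points more evenly than independent uniform draws tend to, and the comparison with the opposite solution gives each individual a second chance to start closer to the optimum.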

3.1.2. Levy Flight

Levy flight is a random flight simulated using a heavy-tailed distribution, where the individual takes small steps for a long time and occasionally takes large steps [24]. Introducing Levy flight into the algorithm ensures that the algorithm can perform a local search during small steps and disrupt the population positions during large steps, helping to escape local optima. In this study, the Levy flight strategy is incorporated into the food source updating process described in Equation (6). The improved formula is as follows:

where and are described as follows:

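Equation (16) is the standard food source update method, and Equation (17) is the Levy flight-based food source update method. The fitness values of the two are compared, and the better one is selected. In the Levy flight-based update, $\mathrm{Levy}(\lambda)$ is a random search path that satisfies:

$$\mathrm{Levy}(\lambda) \sim u = t^{-\lambda}, \qquad 1 < \lambda \le 3$$

Its step size (s), which obeys the Levy distribution, is calculated as follows:

$$s = \frac{\mu}{|v|^{1/\beta}}$$

where $\mu$ and v are normally distributed and are defined as:

$$\mu \sim N(0, \sigma_\mu^2), \qquad v \sim N(0, \sigma_v^2)$$

where

$$\sigma_\mu = \left\{ \frac{\Gamma(1 + \beta) \sin(\pi \beta / 2)}{\Gamma\left[(1 + \beta)/2\right] \, \beta \, 2^{(\beta - 1)/2}} \right\}^{1/\beta}, \qquad \sigma_v = 1$$

where $\beta$ is 1.5.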
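The Levy step size is commonly generated with Mantegna's method, which matches the description above: a normally distributed numerator with a scale that depends on the exponent, divided by a power of a standard normal draw. The sketch below uses the exponent 1.5 as its default; the function names are hypothetical.

```python
import math
import random


def levy_sigma(beta=1.5):
    """Scale of the numerator distribution in Mantegna's method."""
    numerator = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    denominator = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (numerator / denominator) ** (1 / beta)


def levy_step(rng, beta=1.5):
    """One heavy-tailed step: mostly small values, occasionally a large jump."""
    mu = rng.gauss(0.0, levy_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    return mu / abs(v) ** (1 / beta)
```

For an exponent of 1.5, `levy_sigma` evaluates to roughly 0.697. Because the denominator can be very close to zero, a small fraction of the generated steps are very large, which is what lets the search jump out of a local optimum.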

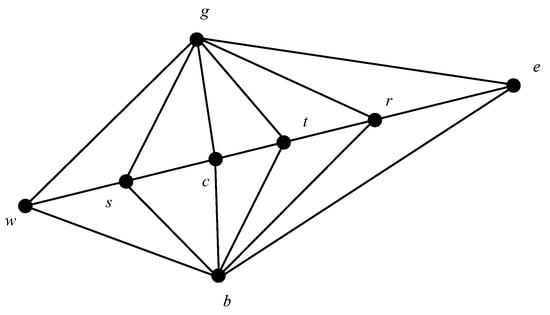

3.1.3. Simplex Method for Optimization

The simplex method is a fast and simple polytope search algorithm [25] that applies geometric transformations (reflection, expansion, and compression) to the worst-performing individuals in a problem. This process generates better-quality individuals to replace them. The search points in the simplex method are illustrated in Figure 3.

Figure 3.

Search points of the simplex method.

The simplex method first determines the center point $c = (g + b)/2$ from the best point (g) and the second-best point (b) and then transforms the worst point (w). In the following, $f(\cdot)$ denotes the fitness value. The basic operations of the simplex method are as follows:

(1) Reflection operation:

The reflection point is $r = c + \alpha (c - w)$, where $\alpha$ is the reflection coefficient.

(2) Expansion operation:

When $f(r) < f(g)$, the expansion point is calculated ($e = c + \gamma (r - c)$, where $\gamma$ is the expansion factor). When $f(e) < f(g)$, the expansion point (e) is used instead of the worst point (w); otherwise, the reflection point (r) is used instead of the worst point (w).

(3) Compression operation:

When $f(r) > f(w)$, the reflection direction is incorrect; then, the inward compression operation is performed ($t = c + \beta (w - c)$, where $\beta$ is the compression coefficient). When $f(t) < f(w)$, the compression point (t) is used instead of the worst point (w). When $f(g) < f(r) < f(w)$, the outward compression operation ($s = c - \beta (w - c)$) is performed. When $f(s) < f(w)$, the contraction point (s) is used instead of the worst point (w); otherwise, the reflection point (r) is used instead of the worst point (w).

In this paper, by using the simplex method, the algorithm optimizes the worst-quality individuals in the process of each iteration so as to improve the overall quality of the population, which can effectively solve the problem of the algorithm falling into a local optimum and improve the local search and optimality-seeking abilities of the algorithm.
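One simplex update of the worst individual can be sketched as below for a minimization problem. The center point is taken as the midpoint of the best and second-best points, and the coefficient values (reflection 1, expansion 2, compression 0.5) are conventional choices assumed here, not values stated in the paper. The function name is hypothetical.

```python
def simplex_replace_worst(g, b, w, objective, alpha=1.0, gamma=2.0, beta=0.5):
    """Return a replacement for the worst point w (never worse than w).

    g, b, w are the best, second-best, and worst points; lower fitness is better.
    """
    f = objective
    c = [(gi + bi) / 2 for gi, bi in zip(g, b)]            # center point
    r = [ci + alpha * (ci - wi) for ci, wi in zip(c, w)]   # reflection
    if f(r) < f(g):
        e = [ci + gamma * (ri - ci) for ci, ri in zip(c, r)]  # expansion
        return e if f(e) < f(g) else r
    if f(r) > f(w):
        t = [ci + beta * (wi - ci) for ci, wi in zip(c, w)]   # inward compression
        return t if f(t) < f(w) else w
    if f(g) < f(r) < f(w):
        s = [ci - beta * (wi - ci) for ci, wi in zip(c, w)]   # outward compression
        return s if f(s) < f(w) else r
    return r  # boundary cases: r is no worse than w
```

In the full algorithm this routine would be called once per iteration, with the returned point overwriting the worst food source.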

3.1.4. IAHA Execution Steps

After AHA is improved by the above three strategies, the specific implementation process of IAHA is shown in Algorithm 1.

| Algorithm 1 IAHA implementation steps. |
| --- |
| Step 1: Parameters such as population size, dimensionality, number of iterations, and upper and lower limits of the search space are set; |
| Step 2: The food source locations are initialized using the fused Tent chaos mapping and backward learning strategy, and the corresponding fitness values are calculated. The access table is also initialized; |
| Step 3: Flight skills are randomly selected; |
| Step 4: Guided foraging (with the Levy flight strategy) or territorial foraging is performed, each with a probability of 50%. The access table is updated after the foraging behavior; |
| Step 5: When the migratory foraging condition is met, hummingbirds perform migratory foraging, randomly replacing the worst food source location. The access table is updated after the foraging behavior; |
| Step 6: The location of the worst food source is optimized using the simplex method; |
| Step 7: The algorithm is terminated when the termination condition is met; otherwise, return to Step 3. |

3.2. Experimental Results

3.2.1. Experimental Environment

The experiments were conducted on an Intel Core i5 CPU with a clock speed of 2.50 GHz and 8 GB of memory, in a Windows 10 64-bit environment using MATLAB R2022a. The proposed IAHA algorithm was validated using eight classical test functions, as shown in Table 1. Among them, $f_1$–$f_5$ are unimodal functions with a single extremum within the search interval, which are used to test the algorithm’s exploitation capability, and $f_6$–$f_8$ are multimodal functions with multiple extrema within the search interval, which are used to test the algorithm’s ability to escape local optima. To keep the comparison fair, the algorithm parameters are kept consistent across all experimental groups: the population size is set to 30, the number of iterations is set to 500, and the function dimension is set to 30. For each function, 30 independent experiments are conducted, and the average value and standard deviation are recorded to evaluate the algorithm’s performance.

Table 1.

Test functions.
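The evaluation protocol used in these experiments (repeated independent runs per test function, reporting the mean and standard deviation of the best value found) can be sketched as follows. The sphere function, the search range of [-100, 100], and the random-search optimizer are placeholders assumed for illustration; any optimizer with the same call signature could be substituted.

```python
import random
import statistics


def sphere(x):
    """Placeholder unimodal test function with optimum 0 at the origin."""
    return sum(v * v for v in x)


def random_search(objective, dim, low, up, evaluations, rng):
    """Placeholder optimizer: best of `evaluations` uniform random samples."""
    best = float("inf")
    for _ in range(evaluations):
        candidate = [rng.uniform(low, up) for _ in range(dim)]
        best = min(best, objective(candidate))
    return best


def evaluate(optimizer, objective, runs=30, dim=30, evaluations=30 * 500, seed=0):
    """Mean and sample standard deviation of the best value over independent runs."""
    results = []
    for run in range(runs):
        rng = random.Random(seed + run)  # a distinct seed per independent run
        results.append(optimizer(objective, dim, -100.0, 100.0, evaluations, rng))
    return statistics.mean(results), statistics.stdev(results)
```

The default budget of 30 x 500 evaluations mirrors a population of 30 run for 500 iterations; smaller values can be passed for a quick check.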

3.2.2. Comparison with Other Intelligent Optimization Algorithms

(1) Simulation results

To validate the effectiveness and feasibility of the algorithm proposed in this study, a comparative analysis is performed between the proposed IAHA algorithm and other algorithms, including PSO, WOA, GWO, and AHA. The analysis is conducted using the eight aforementioned test functions. The average values and standard deviations obtained by each algorithm are calculated and presented in Table 2. This comparison aims to assess the performance of the algorithms and provide insights into the superiority of the IAHA algorithm over the other methods.

Table 2.

Comparison of IAHA and basic algorithm experimental results.

Based on the analysis presented in Table 2, it can be observed that in unimodal functions $f_1$–$f_4$, the IAHA algorithm achieves a mean value equal to the theoretical optimum with a standard deviation of 0. In unimodal function $f_5$, the IAHA algorithm’s mean value is closer to the theoretical optimum than that of the other algorithms, and its standard deviation is the smallest. From this, it can be inferred that the IAHA algorithm exhibits a stronger exploration capability and stability during the optimization process.

For multimodal functions $f_6$ and $f_8$, the IAHA algorithm achieves an average value equal to the theoretical optimum, and the standard deviation is 0. In multimodal function $f_7$, the IAHA algorithm’s average value is closer to the theoretical optimum, and the standard deviation is 0, indicating consistent optimization results across multiple experiments. Therefore, it can be concluded that the IAHA algorithm exhibits strong optimization capabilities and can quickly escape local optima in multimodal test functions with multiple extreme points.

In conclusion, the IAHA algorithm demonstrates strong search capability and high stability in both unimodal and multimodal functions. It exhibits adaptability to different types of functions.

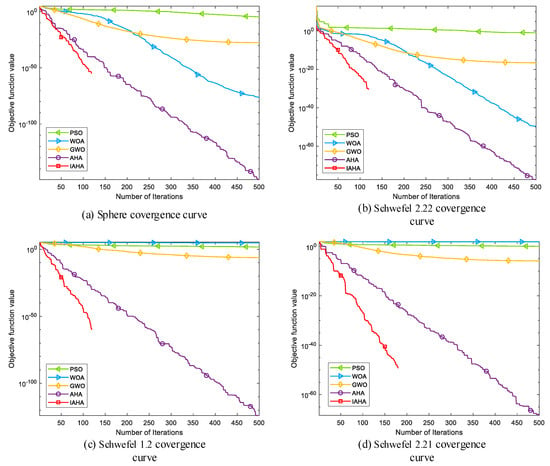

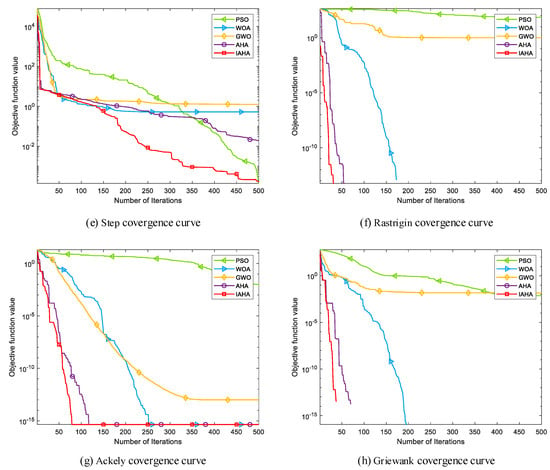

(2) Comparative analysis of convergence curves

Figure 4 depicts the convergence curves comparing IAHA with other basic intelligent optimization algorithms on various test functions. From Figure 4a–d,f,h, it is evident that the IAHA algorithm can rapidly converge to the theoretical optimum of the respective test functions. Even in the case of multimodal functions $f_6$ and $f_8$ in Figure 4f,h, where other algorithms also find the theoretical optimum, their convergence speed is significantly slower compared to that of the IAHA algorithm.

Figure 4.

Comparison of IAHA and basic algorithm convergence curves.

From Figure 4e,g, it is apparent that the IAHA algorithm does not find the theoretical optimum for test functions $f_5$ and $f_7$. However, in the case of the $f_5$ test function, although the theoretical optimum is not reached, the IAHA algorithm exhibits faster convergence and higher optimization accuracy compared to the other algorithms. In the $f_7$ test function, the IAHA algorithm achieves high optimization accuracy and demonstrates significantly faster convergence, reaching a stable function value at around 80 iterations, whereas the AHA algorithm, despite achieving the same accuracy, requires approximately 115 iterations to reach a stable function value.

In summary, compared to the other four basic algorithms, IAHA demonstrates excellent performance in terms of convergence speed and optimization accuracy. The convergence curves of IAHA consistently remain below those of the other algorithms, indicating higher optimization accuracy and faster convergence speed.

3.2.3. Comparative Analysis with a Single-Improvement-Stage AHA Algorithm

(1) Simulation results

To verify the effectiveness of the different improvement strategies proposed in this paper, a comparison is conducted between IAHA, the original AHA algorithm, and the AHA algorithms with single-strategy improvements. The single-strategy improved algorithms are TAHA, which incorporates the fusion of Tent chaotic mapping and a reverse learning strategy; LAHA, which solely incorporates the Levy flight strategy; and DAHA, which solely incorporates the simplex method. The comparison is conducted on the eight test functions presented in Table 1 to evaluate the effects of different improvement strategies and the performance of the multi-strategy improved artificial hummingbird algorithm. The experimental results are summarized in Table 3.

Table 3.

Comparison of IAHA and single-improvement-phase experimental results.

From the analysis in Table 3, it can be observed that in unimodal functions $f_1$–$f_5$, TAHA does not show a significant improvement in optimization accuracy compared to standard AHA. However, it consistently exhibits a smaller standard deviation, indicating better stability. Therefore, by combining Tent chaotic mapping and the reverse learning strategy for population initialization, the algorithm can achieve a more stable optimization performance. In comparison to standard AHA, LAHA shows a significant improvement in optimization accuracy. This is due to the introduction of the Levy flight strategy in the algorithm, which enhances both local search accuracy and the ability to quickly escape local optima. While DAHA can find the theoretical optimum in the $f_1$–$f_4$ test functions, its optimization accuracy is not as good as that of other improvement strategies in the $f_5$ function. On the other hand, IAHA not only achieves the theoretical optimum in the $f_1$–$f_4$ functions but also maintains high optimization accuracy in the $f_5$ function. In multimodal functions $f_6$–$f_8$, all the improvement strategies yield the same optimization values. Additionally, in the $f_6$ and $f_8$ functions, all algorithms are able to find the theoretical optimum.

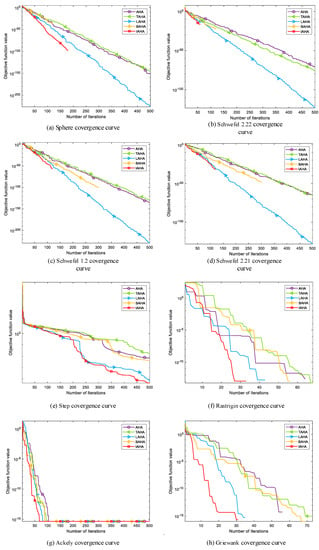

(2) Comparative analysis of convergence curves

Figure 5 shows the convergence curves of IAHA and the AHA algorithm with single-stage improvements in various test functions. Overall, TAHA consistently exhibits higher optimization accuracy than AHA and maintains algorithm stability. LAHA demonstrates significantly faster convergence compared to the other two single-stage improvement algorithms, enabling faster optimization. DAHA reaches the theoretical optimum of the corresponding function with fewer iterations, as shown in Figure 5a–d. IAHA combines the advantages of these three improvement strategies. It ensures better algorithm stability, faster convergence during optimization, and the ability to reach the theoretical optimum in fewer iterations. In conclusion, integrating these three improvement strategies into the AHA algorithm effectively enhances its capabilities.

Figure 5.

Comparison of IAHA and single-improvement-phase AHA convergence curves.

3.2.4. Comparative Analysis with Other Improved AHA Algorithms

(1) Simulation results

To further demonstrate the performance of the IAHA algorithm, we conduct comparative experiments with two recent improvement algorithms: CLAHA proposed by Wang et al. [26] and AOAHA proposed by Ramadan et al. [27]. The experimental results are presented in Table 4.

Table 4.

Comparison of IAHA and other improved AHA experimental results.

From Table 4, it can be observed that in the case of unimodal functions $f_1$–$f_5$, the IAHA algorithm proposed in this study outperforms the other two improvement algorithms in terms of optimization accuracy. However, in the case of multimodal functions $f_6$–$f_8$, the three improvement algorithms achieve similar optimization accuracy. Overall, the IAHA algorithm demonstrates superior optimization performance compared to the other two improvement algorithms.

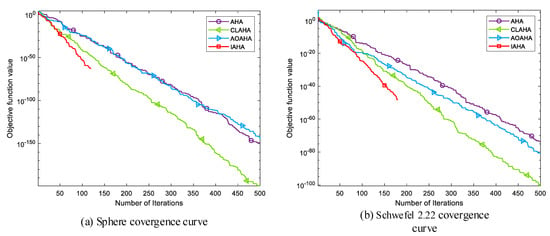

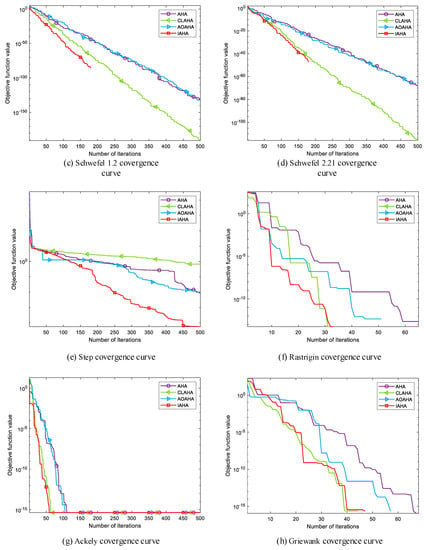

(2) Comparative analysis of convergence curves

Figure 6 shows the convergence curves of IAHA and other improved versions of AHA in various test functions. Overall, it can be seen that the AOAHA algorithm only slightly improves the optimization accuracy and convergence speed compared to the original AHA algorithm. Although the CLAHA algorithm exhibits a noticeable improvement in convergence speed, its optimization accuracy still has room for improvement. On the other hand, the proposed IAHA algorithm demonstrates faster convergence speed in both unimodal functions ($f_1$–$f_5$) and multimodal functions ($f_6$–$f_8$). Overall, the IAHA algorithm’s convergence performance is superior to that of the other two improvement algorithms.

Figure 6.

Comparison of IAHA with other improved AHA convergence curves.

3.2.5. Significance Analysis

To further elucidate the performance differences between the IAHA algorithm proposed in this study and other algorithms, such as PSO, WOA, GWO, AHA, TAHA, LAHA, BAHA, CLAHA, and AOAHA, the Wilcoxon rank-sum test is conducted. The experimental parameters are kept consistent with the previous settings, and the 30 independent runs are subjected to the rank-sum test. The results of the test are presented in Table 5.

Table 5.

Wilcoxon rank-sum test results for IAHA and the compared algorithms.

a. indicates that there is no difference in the solution results of the comparison algorithms.

b. indicates a difference in the solution results of the comparison algorithms.

In the Wilcoxon rank-sum test, the significance level is typically set to 0.05. When the calculated p-value is less than the significance level (p < 0.05), we reject the null hypothesis (H0) in favor of the alternative hypothesis (H1) and conclude that there is a significant difference between the performance of IAHA and that of the compared algorithm on the corresponding test function. When the calculated p-value is greater than or equal to the significance level (p ≥ 0.05), we cannot reject the null hypothesis (H0) and conclude that there is no significant difference between IAHA and the compared algorithm on that test function; that is, the two algorithms exhibit consistent solution accuracy. With the significance level fixed at 0.05, the Wilcoxon rank-sum test therefore indicates, function by function, whether the differences observed between IAHA and the other algorithms are statistically significant.
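The decision rule above can be illustrated with a minimal sketch. It assumes the two-sided rank-sum test with the normal approximation and average ranks for ties; the paper does not state which implementation was used, and the function name `rank_sum_test` and the sample values are hypothetical.

```python
import math

def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation).

    x, y: best fitness values from independent runs of two algorithms.
    Returns (z, p). Ties receive average ranks; no tie correction is
    applied to the variance (an assumption of this sketch).
    """
    n1, n2 = len(x), len(y)
    # Pool both samples, remembering which group each value came from.
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of the tied block
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    # Rank sum of the first sample and its moments under H0.
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mean = n1 * (n1 + n2 + 1) / 2
    std = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / std
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, p

# Hypothetical results of 30 runs each (illustrative values only).
runs_a = [1e-8 * (i + 1) for i in range(30)]
runs_b = [1e-3 * (i + 1) for i in range(30)]
z, p = rank_sum_test(runs_a, runs_b)
print("significant difference" if p < 0.05 else "no significant difference")
```

When both algorithms reach the same value in every run, the rank sums coincide, z is 0 and the p-value is 1, which corresponds to the "no difference" case in Table 5.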

4. Our Proposed IAHA-SVM Coal Mine Environmental Safety Warning Model

In this section, we utilize the IAHA algorithm to optimize the penalty factor (C) and the kernel function parameter (g) of the SVM model, resulting in the development of the IAHA-SVM coal mine environmental safety level warning model. Based on the safety regulations of coal mines, the model classifies the safety levels into four categories. We then apply the model to classify coal mine safety data that we collected. By comparing the classification accuracy of the IAHA-SVM model with that of the AHA-SVM model, we aim to demonstrate the advantages of the improved algorithm integrated with the SVM model in this study.

The IAHA-SVM coal mine environmental safety level warning model is designed to provide accurate and reliable safety-level predictions for coal mine environments. By optimizing the SVM parameters with the IAHA algorithm, the model can better adapt to the complexities and variations in coal mine safety data. The classification accuracy of the AHA-SVM model serves as the baseline: if the IAHA-SVM model predicts the safety levels more accurately than the AHA-SVM model, the improvements made to the AHA algorithm have translated into better SVM performance for coal mine safety classification.

4.1. Our Proposed IAHA-SVM Model

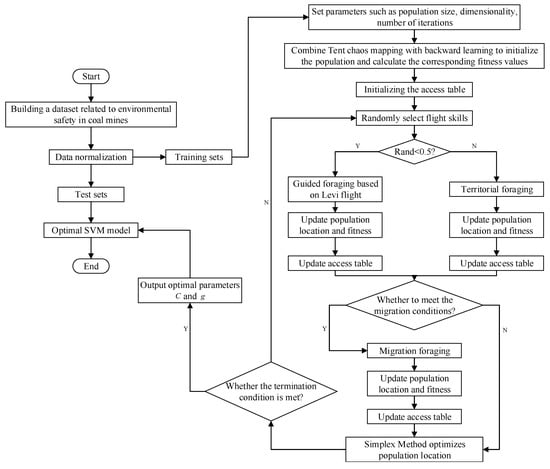

In the SVM model, the penalty factor (C) and the kernel function parameter (g) have a significant impact on the prediction accuracy. These parameters are usually chosen empirically, so the prediction results depend heavily on that choice. Therefore, in this study, we employ the IAHA algorithm to optimize these parameters and select the values that lead to the best performance of the model. A flow chart of the proposed model is shown in Figure 7. The detailed implementation process of IAHA-SVM is shown in Algorithm 2.

| Algorithm 2 IAHA-SVM Execution Steps. |

| Step 1: The collected coal mine safety-related data are divided into training and test sets and normalized;

Step 2: The SVM penalty factor (C), the kernel function parameter (g), and the IAHA-related parameters, including the population size and the maximum number of iterations, are initialized;

Step 3: The food source locations are initialized using a strategy that fuses Tent chaos mapping with backward learning, and the training-set samples are classified, with the SVM coal mine environmental safety classification accuracy as the individual fitness value;

Step 4: Flight skills are randomly selected;

Step 5: Levy-flight-based guided foraging or area foraging is performed, with a 50% probability for each of the two foraging behaviors. The visit table is updated after the foraging behavior;

Step 6: When the migratory foraging conditions are met, hummingbirds perform migratory foraging, randomly replacing the worst food source location. The visit table is updated after the foraging behavior;

Step 7: The locations of poor food sources are optimized using the simplex method;

Step 8: If the IAHA termination condition is met, the algorithm terminates and outputs the optimal C and g parameter values; otherwise, it returns to Step 4;

Step 9: The IAHA-SVM coal mine environmental safety warning model is established. |
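Step 3 of Algorithm 2 can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses a skew Tent map with parameter `a = 0.7`, takes the backward (opposite) point of `x` in `[lower, upper]` as `lower + upper - x`, and keeps the fittest half of the combined candidates. The function names, the seed `x0`, and the stand-in fitness `toy_fitness` are hypothetical; in the model, the fitness would be the SVM classification accuracy obtained with the candidate (C, g).

```python
def tent_sequence(x0, n, a=0.7):
    """Generate n values in [0, 1] with a skew Tent map (a is an assumed parameter)."""
    values, x = [], x0
    for _ in range(n):
        x = x / a if x < a else (1.0 - x) / (1.0 - a)
        values.append(x)
    return values

def init_population(fitness, n_pop, lower, upper, x0=0.37):
    """Tent-chaos initialization followed by backward (opposition-based) learning."""
    dim = len(lower)
    chaos = tent_sequence(x0, n_pop * dim)
    # Map the chaotic sequence onto the search range of each dimension.
    population = [
        [lower[d] + chaos[i * dim + d] * (upper[d] - lower[d]) for d in range(dim)]
        for i in range(n_pop)
    ]
    # Backward solution of each individual: reflect it within the bounds.
    opposite = [
        [lower[d] + upper[d] - ind[d] for d in range(dim)] for ind in population
    ]
    # Keep the n_pop candidates with the highest fitness (accuracy is maximized).
    candidates = sorted(population + opposite, key=fitness, reverse=True)
    return candidates[:n_pop]

def toy_fitness(ind):
    """Hypothetical stand-in for the SVM accuracy of a candidate (C, g)."""
    c, g = ind
    return -((c - 100.0) ** 2 + (g - 1.0) ** 2)

# Population size 20 and range [0, 1000] for C and g, as in Section 4.2.
food_sources = init_population(toy_fitness, 20, [0.0, 0.0], [1000.0, 1000.0])
print(food_sources[0])
```

Evaluating both the chaotic points and their reflections doubles the number of fitness evaluations at initialization, which in the real model means training the SVM 2 × 20 times before the first iteration.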

Figure 7.

IAHA-SVM flow chart.

4.2. Experimental Results

In this section of the simulation experiment, the population size is set to 20, and the maximum number of iterations is set to 50. The experimental data were obtained from a coal mine in Ningdong. Due to the scarcity of data in some extreme situations, in order to better meet the requirements of the experiment, part of the data are simulated based on real surface environmental data to form the dataset. This study classifies the coal mine environmental safety conditions into four levels according to the coal mine safety regulations, as shown in Table 6.

Table 6.

Coal mine environmental safety warning levels.

A D-level warning indicates a safe state in which all parameters of the current environment are within the normal range, allowing normal operation. A C-level warning indicates the occurrence of abnormal conditions, such as an increase in the concentration of flammable or explosive gases or a leakage of toxic gases. The situation should be promptly investigated to eliminate hazards. A B-level warning triggers the underground alarm device when there are significant anomalies in the underground gas parameters. Personnel should be evacuated, and the situation should be investigated promptly. An A-level warning is the highest level of warning, indicating the occurrence of dangerous situations such as fire or massive leakage of toxic gases. Immediate evacuation and power cutoff should be carried out, and the situation should be investigated. Corresponding measures should be taken to resolve the issue when the parameters decrease to a B-level warning. To better ensure the safety of workers, this study determines the overall warning level from the most severe level reached by any single parameter. For example, if the value of one parameter is 5%, which corresponds to the A level, while the other parameters are not in the A-level range, we still consider the situation an overall A-level warning.
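The rule for combining per-parameter levels can be written as a short sketch. The per-parameter thresholds come from Table 6 and are not reproduced here; the parameter names and the function `overall_level` are hypothetical.

```python
# Severity order of the four warning levels: D is safe, A is the most severe.
SEVERITY = {"D": 0, "C": 1, "B": 2, "A": 3}

def overall_level(parameter_levels):
    """Return the most severe warning level among all monitored parameters.

    parameter_levels: dict mapping a parameter name to its level ("A".."D").
    """
    if not parameter_levels:
        raise ValueError("at least one parameter level is required")
    return max(parameter_levels.values(), key=SEVERITY.__getitem__)

# Hypothetical reading: one parameter at level A, the others lower.
reading = {"gas_1": "A", "gas_2": "D", "temperature": "C"}
print(overall_level(reading))
```

Taking the maximum severity is the conservative choice: a single parameter in a dangerous range is enough to raise the overall warning.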

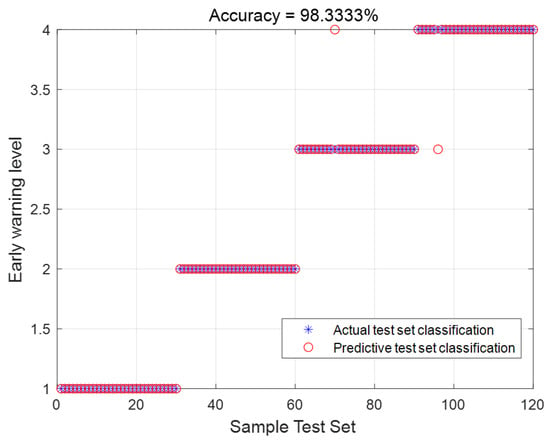

In this section, a total of 4120 data samples are selected for the simulation experiment. Among them, 4000 samples are used for training, with 1000 samples for each warning level. The remaining 120 samples are used for testing, with 30 samples for each warning level. The purpose of the experiment is to find the penalty factor (C) and kernel function parameter (g) that give the best classification performance, using the classification accuracy on the training data as the fitness value. The search range of both parameters is set from 0 to 1000. The experimental results are shown in Figure 8. From the figure, it can be observed that only 2 of the 120 test samples were misclassified, resulting in a classification accuracy of 98.3333%.
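The reported accuracy follows directly from the counts: 118 correct predictions out of 120 test samples. A minimal check is sketched below; the label lists are synthetic, and which two samples are misclassified is an arbitrary assumption made only to reproduce the count.

```python
def accuracy_percent(y_true, y_pred):
    """Share of correctly classified samples, in percent."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return 100.0 * correct / len(y_true)

# Synthetic test set: 30 samples for each of the four warning levels.
y_true = [level for level in "ABCD" for _ in range(30)]
y_pred = list(y_true)
# Assumed misclassifications (positions chosen arbitrarily for illustration).
y_pred[0] = "B"
y_pred[45] = "C"

print(f"{accuracy_percent(y_true, y_pred):.4f}%")
```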

Figure 8.

Actual and predicted classification plots for the test set.

To demonstrate the improved performance of the proposed model, a comparative experiment is conducted by combining the basic AHA algorithm with SVM. The experimental results are presented in Table 7. From the table, it can be observed that the accuracy of the IAHA-SVM model is significantly higher than that of the AHA-SVM model. This indicates that the use of the IAHA algorithm to optimize the SVM model improves the precision of classification and reduces the impact of boundary values. As a result, the overall performance of the model is further enhanced.

Table 7.

Experimental results.

5. Conclusions and Future Work

In this paper, we propose the IAHA-SVM coal mine environmental safety warning model. First, to address the limited global exploration capability and slow convergence speed of the artificial hummingbird algorithm (AHA), we employ a strategy that combines Tent chaotic mapping with backward learning to initialize the population. In the guided foraging phase, the Levy flight strategy is introduced to enhance the search ability. Additionally, the simplex method is applied at the end of each iteration to replace the worst solution. Comparative experiments are conducted to demonstrate the effectiveness of the IAHA algorithm. Next, we combine the IAHA algorithm with the support vector machine (SVM): the IAHA algorithm searches for the optimal penalty factor and kernel function parameter, and a coal mine environmental safety level warning model is established. The effectiveness of the proposed model is validated using a dataset generated from actual measurements in a coal mine in Ningdong Town, Ningxia. Compared to the SVM model optimized by the basic AHA algorithm, the IAHA-SVM model shows superior performance.

Integrating intelligent optimization algorithms with real-world application problems can enhance work efficiency. The IAHA-SVM model proposed in this paper can also be applied to classification problems in various other domains. In our future work, we will continue to explore the application of intelligent optimization algorithms in different aspects of coal mine safety. Some potential directions include optimizing the deployment of wireless sensors underground, addressing underground positioning problems, and solving three-dimensional path planning for underground drones.

Author Contributions

Writing—original draft, Z.L. and F.F.; Writing—review and editing, Z.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Ningxia Key Research and Development project grant number 2022BEG02016 and Ningxia Natural Science Foundation Key Project grant number 2021AAC02004.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The corresponding author can provide data supporting the findings of this study upon request. Due to ethical or privacy concerns, the data are not publicly available.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Mulumba, D.M.; Liu, J.; Hao, J.; Zheng, Y.; Liu, H. Application of an Optimized PSO-BP Neural Network to the Assessment and Prediction of Underground Coal Mine Safety Risk Factors. Appl. Sci. 2023, 13, 5317.

- Senapati, A.; Bhattacherjee, A.; Chatterjee, S. Causal relationship of some personal and impersonal variates to occupational injuries at continuous miner worksites in underground coal mines. Saf. Sci. 2022, 146, 105562.

- Petsonk, E.L.; Rose, C.; Cohen, R. Coal mine dust lung disease. New lessons from an old exposure. Am. J. Respir. Crit. Care Med. 2013, 187, 1178–1185.

- Alfonso, I.; Gómez, C.; Garcés, K.; Chavarriaga, J. Lifetime optimization of Wireless Sensor Networks for gas monitoring in underground coal mining. In Proceedings of the 7th International Conference on Computers Communications and Control, Oradea, Romania, 8–12 May 2018; pp. 224–230.

- Krishnan, A.; Shalu, R.; Sandeep, S.; Jithin, S.; Thomas, L.; Panicker, S.T. A Need-To-Basis Dust Suppression System using Wireless Sensor Network. In Proceedings of the IEEE Recent Advances in Intelligent Computational Systems (RAICS), Thiruvananthapuram, India, 3–5 December 2020; pp. 207–212.

- Porselvi, T.; Ganesh, S.; Janaki, B.; Priyadarshini, K. IoT based coal mine safety and health monitoring system using LoRaWAN. In Proceedings of the 2021 3rd International Conference on Signal Processing and Communication (ICPSC), Coimbatore, India, 13–14 May 2021; pp. 49–53.

- Zhu, Y.; You, G. Monitoring system for coal mine safety based on wireless sensor network. In Proceedings of the 2019 Cross Strait Quad-Regional Radio Science and Wireless Technology Conference (CSQRWC), Taiyuan, China, 18–21 July 2019; pp. 1–2.

- Mitchell, T.M. Machine Learning; McGraw-Hill: New York, NY, USA, 2007; Volume 1.

- Kumari, K.; Dey, P.; Kumar, C.; Pandit, D.; Mishra, S.; Kisku, V.; Chaulya, S.; Ray, S.; Prasad, G. UMAP and LSTM based fire status and explosibility prediction for sealed-off area in underground coal mine. Process Saf. Environ. Prot. 2021, 146, 837–852.

- Ślęzak, D.; Grzegorowski, M.; Janusz, A.; Kozielski, M.; Nguyen, S.H.; Sikora, M.; Stawicki, S.; Wróbel, Ł. A framework for learning and embedding multi-sensor forecasting models into a decision support system: A case study of methane concentration in coal mines. Inf. Sci. 2018, 451, 112–133.

- Jo, B.W.; Khan, R.M.A. An internet of things system for underground mine air quality pollutant prediction based on azure machine learning. Sensors 2018, 18, 930.

- Wang, L. Intelligent Optimization Algorithms with Applications; Tsinghua University Press: Beijing, China, 2021.

- Kennedy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; IEEE: New York, NY, USA, 1995; Volume 4, pp. 1942–1948.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Zhao, W.; Wang, L.; Mirjalili, S. Artificial hummingbird algorithm: A new bio-inspired optimizer with its engineering applications. Comput. Methods Appl. Mech. Eng. 2022, 388, 114194.

- Joachims, T. Making Large-Scale SVM Learning Practical; Technical Report; University of Dortmund: Dortmund, Germany, 1998.

- Li, C.; Li, S.; Liu, Y. A least squares support vector machine model optimized by moth-flame optimization algorithm for annual power load forecasting. Appl. Intell. 2016, 45, 1166–1178.

- Cong, Y.; Wang, J.; Li, X. Traffic flow forecasting by a least squares support vector machine with a fruit fly optimization algorithm. Procedia Eng. 2016, 137, 59–68.

- Tuerxun, W.; Chang, X.; Hongyu, G.; Zhijie, J.; Huajian, Z. Fault diagnosis of wind turbines based on a support vector machine optimized by the sparrow search algorithm. IEEE Access 2021, 9, 69307–69315.

- Xue, Y.; Dou, D.; Yang, J. Multi-fault diagnosis of rotating machinery based on deep convolution neural network and support vector machine. Measurement 2020, 156, 107571.

- Haupt, R.L.; Haupt, S.E. Practical Genetic Algorithms; John Wiley & Sons: Hoboken, NJ, USA, 2004.

- Shan, L.; Qiang, H.; Li, J.; Wang, Z. Chaotic optimization algorithm based on Tent map. Control Decis. 2005, 20, 179–182.

- Iacca, G.; dos Santos Junior, V.C.; de Melo, V.V. An improved Jaya optimization algorithm with Levy flight. Expert Syst. Appl. 2021, 165, 113902.

- Lamming, M.G.; Rhodes, W.L. A simple method for improved color printing of monitor images. TOG 1990, 9, 345–375.

- Wang, L.; Zhang, L.; Zhao, W.; Liu, X. Parameter Identification of a Governing System in a Pumped Storage Unit Based on an Improved Artificial Hummingbird Algorithm. Energies 2022, 15, 6966.

- Ramadan, A.; Kamel, S.; Hassan, M.H.; Ahmed, E.M.; Hasanien, H.M. Accurate photovoltaic models based on an adaptive opposition artificial hummingbird algorithm. Electronics 2022, 11, 318.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).