Automated Categorization of Multiclass Welding Defects Using the X-ray Image Augmentation and Convolutional Neural Network

Abstract

1. Introduction

- The automated and precise multi-class categorization of welding defects in X-ray images.

- Creation of a new dataset by segmenting and augmenting the original “GDXray” dataset, the Grima Database of X-ray images that contains multiple welding defects [14]. The newly generated dataset serves as the foundation for our automated categorization approach. It can also be reused and explored by other researchers in future work.

- Developing a CNN model that directly processes the augmented dataset for the automated multiclass categorization of welding defects in X-ray images.

2. Related Works

3. Methodology

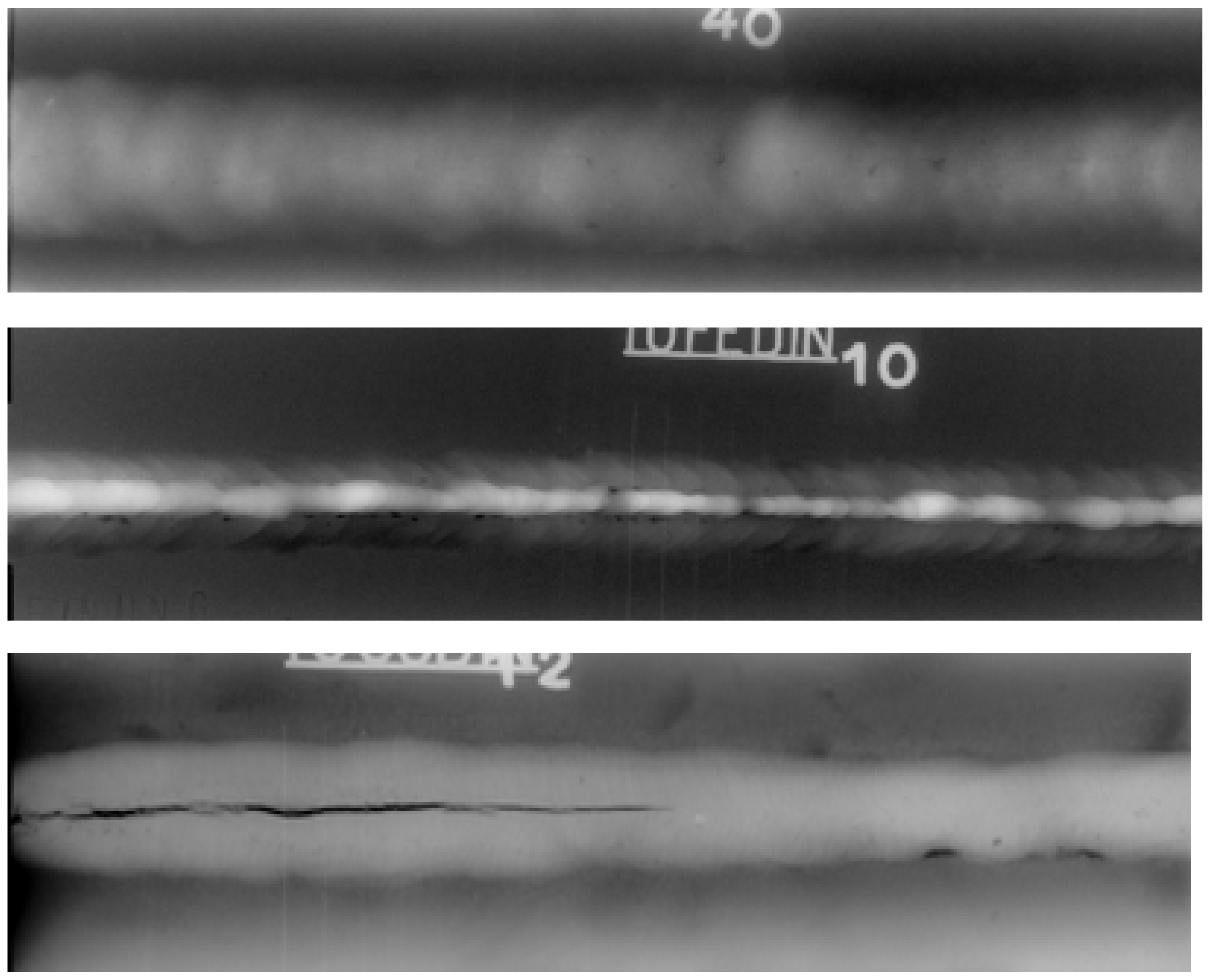

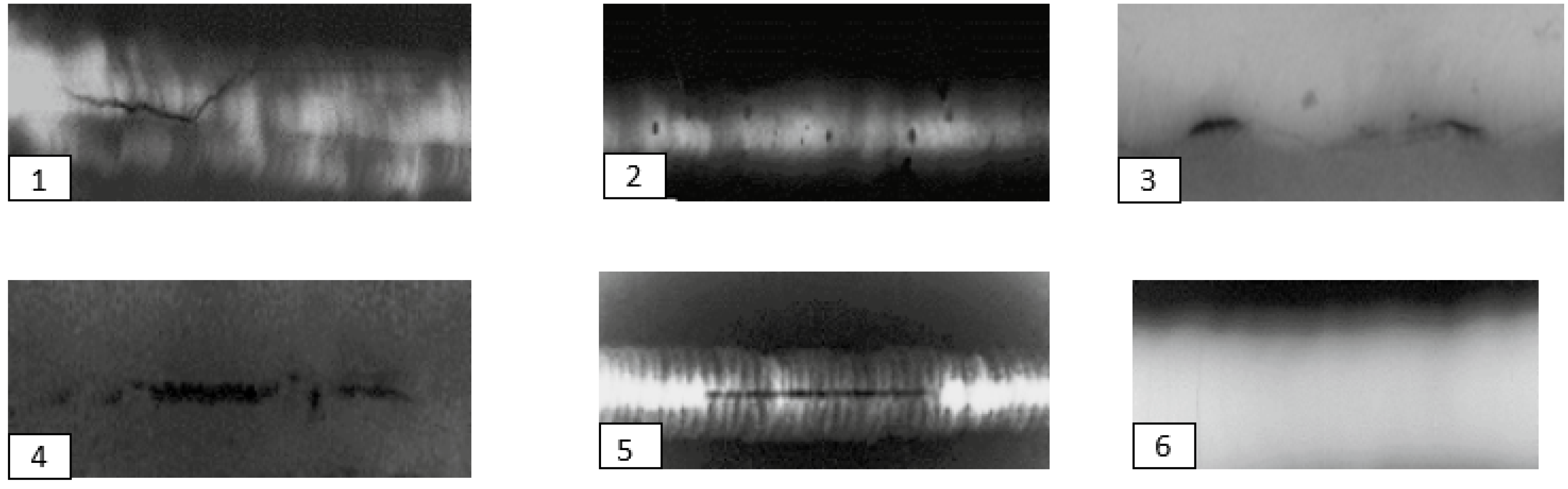

3.1. Dataset

3.2. Data Augmentation

- Random rotation: This technique rotates an image by a given number of degrees around its center point. The rotation can be clockwise or counterclockwise and can range from 1 to 359 degrees. In many cases, the rotation, denoted $R$ for an angle $\theta$, is combined with zero padding to fill any missing pixels, as shown in Equation (1). We apply a random rotation range of 40 degrees:

  $$R = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \tag{1}$$

- Shearing (shear range): Shearing applies a transformation, denoted $S$, to an image. This transformation moves each point in the image in a particular direction, and the distance of the movement depends on the point’s distance from a line that runs parallel to the selected direction and passes through the origin, as shown in Equation (2):

  $$S = \begin{bmatrix} 1 & s_x \\ s_y & 1 \end{bmatrix} \tag{2}$$

  The symbols $s_x$ and $s_y$ represent the shear coefficients along the $x$ and $y$ axes, respectively. In our case, the shear range is 20%.

- Zooming: This technique changes the image’s size to enhance its visibility or to focus on a specific part of the image. It involves enlarging or reducing the image’s dimensions. The process is given by Equation (3), where $z_x$ and $z_y$ are the zoom factors along the $x$ and $y$ axes, and it can be applied independently in each direction. We apply a zoom range of 20%:

  $$Z = \begin{bmatrix} z_x & 0 \\ 0 & z_y \end{bmatrix} \tag{3}$$

- Brightness: Brightness is a quality of an image that indicates how light or dark it is. It can refer to specific areas within the image or to the overall illumination of the scene. The luminosity of an image can be changed, for example, by adding a constant such as 0.2 to all pixel values. In our case, we apply a brightness range between 0.5 and 1.5.

- Flips: Flipping produces a reflection of the original image. In a two-dimensional image, the positions of the pixels are mirrored along one of the axes, either horizontally or vertically. In our case, we use only horizontal flipping.

Even though these small changes are made to the images, their semantic content remains the same, and each augmented image keeps the label of the original training image it was derived from. Figure 3 presents the different data augmentation techniques.
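
As a rough illustration of the transformations listed above, the following sketch builds the 2×2 rotation, shear, and zoom matrices in plain Python and applies them to a pixel coordinate, alongside simple flip and brightness helpers. All function names are hypothetical. Using the quoted settings (40 degrees, 20% shear, 20% zoom, brightness 0.5 to 1.5) as symmetric sampling bounds, and treating brightness as a multiplicative factor, are assumptions made for illustration. This is not the authors' augmentation pipeline.

```python
import math
import random


def rotation_matrix(theta_deg):
    """2x2 matrix that rotates a point counterclockwise by theta_deg degrees."""
    t = math.radians(theta_deg)
    return [[math.cos(t), -math.sin(t)],
            [math.sin(t), math.cos(t)]]


def shear_matrix(sx, sy):
    """2x2 shear matrix with coefficients sx (along x) and sy (along y)."""
    return [[1.0, sx],
            [sy, 1.0]]


def zoom_matrix(zx, zy):
    """2x2 scaling matrix with independent zoom factors along x and y."""
    return [[zx, 0.0],
            [0.0, zy]]


def transform_point(matrix, point):
    """Apply a 2x2 matrix to an (x, y) coordinate."""
    x, y = point
    return (matrix[0][0] * x + matrix[0][1] * y,
            matrix[1][0] * x + matrix[1][1] * y)


def flip_horizontal(image):
    """Mirror an image, stored as a list of pixel rows, left to right."""
    return [row[::-1] for row in image]


def scale_brightness(image, factor, max_value=255):
    """Multiply every pixel by factor and clip to [0, max_value].

    Treating the brightness range as a multiplicative factor is an assumption.
    """
    return [[min(max_value, max(0, round(p * factor))) for p in row]
            for row in image]


def sample_parameters(rng):
    """Draw one random parameter set within the assumed ranges."""
    return {
        "theta_deg": rng.uniform(-40.0, 40.0),  # rotation range of 40 degrees
        "shear": rng.uniform(-0.2, 0.2),        # 20% shear
        "zoom": rng.uniform(0.8, 1.2),          # 20% zoom
        "brightness": rng.uniform(0.5, 1.5),    # brightness range 0.5 to 1.5
        "flip": rng.random() < 0.5,             # horizontal flip only
    }


if __name__ == "__main__":
    params = sample_parameters(random.Random(0))
    print(transform_point(rotation_matrix(params["theta_deg"]), (1.0, 0.0)))
    print(transform_point(shear_matrix(params["shear"], 0.0), (1.0, 1.0)))
    print(flip_horizontal([[1, 2, 3]]))
```

In a full pipeline the matrices would be applied to every pixel coordinate, with zero padding where the transformed image leaves gaps. The label attached to the image is left unchanged.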

3.3. Convolutional Neural Network (CNN) Architecture

3.4. The Evaluation Metrics

- True positive (TP): In a confusion matrix, the “true positive” is the number of instances for which the model correctly predicts the positive class or event.

- True negative (TN): In a confusion matrix, the “true negative” is the number of instances for which the model correctly predicts the negative class.

- False positive (FP): The “false positive” is the number of instances for which the model wrongly predicts the presence of a specific condition or event that is not present in reality. This type of error is also known as a Type I error, and it can lead to incorrect decisions if not properly managed.

- False negative (FN): The “false negative” is the number of instances for which the model wrongly predicts the absence of an event that is actually present. It measures the model’s tendency to miss positive cases, that is, to make Type II errors.
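
The four counts above can be read off a multiclass confusion matrix one class at a time, treating that class as "positive" and all others as "negative". The sketch below shows this one-vs-rest bookkeeping together with the usual accuracy, precision, recall, and F1 formulas. The function names and the small 3×3 matrix are hypothetical and are not taken from the paper's results.

```python
def per_class_counts(confusion, k):
    """Return (TP, TN, FP, FN) for class k.

    confusion[i][j] is the number of samples of true class i predicted as j.
    """
    n = len(confusion)
    tp = confusion[k][k]
    fn = sum(confusion[k]) - tp                       # class k missed
    fp = sum(confusion[i][k] for i in range(n)) - tp  # others called k
    tn = sum(sum(row) for row in confusion) - tp - fn - fp
    return tp, tn, fp, fn


def metrics(tp, tn, fp, fn):
    """Standard metrics derived from the four confusion-matrix counts."""
    total = tp + tn + fp + fn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    accuracy = (tp + tn) / total if total else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Hypothetical 3-class confusion matrix, for illustration only.
    example = [[5, 1, 0],
               [2, 6, 1],
               [0, 1, 4]]
    for k in range(len(example)):
        print(k, metrics(*per_class_counts(example, k)))
```

Precision penalizes false positives (Type I errors) and recall penalizes false negatives (Type II errors), so the two are usually reported together.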

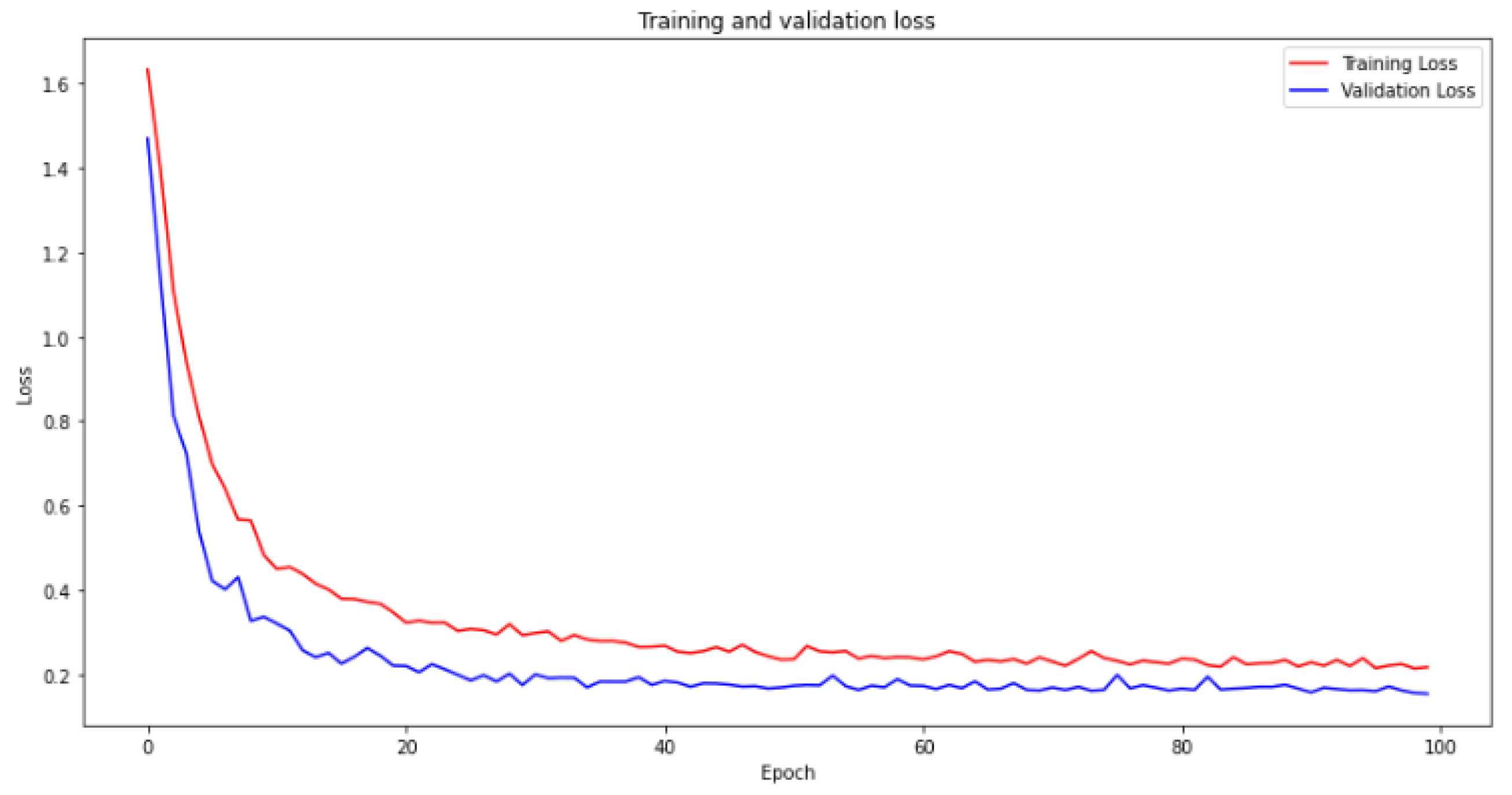

4. Results and Discussion

4.1. The Dataset Preparation

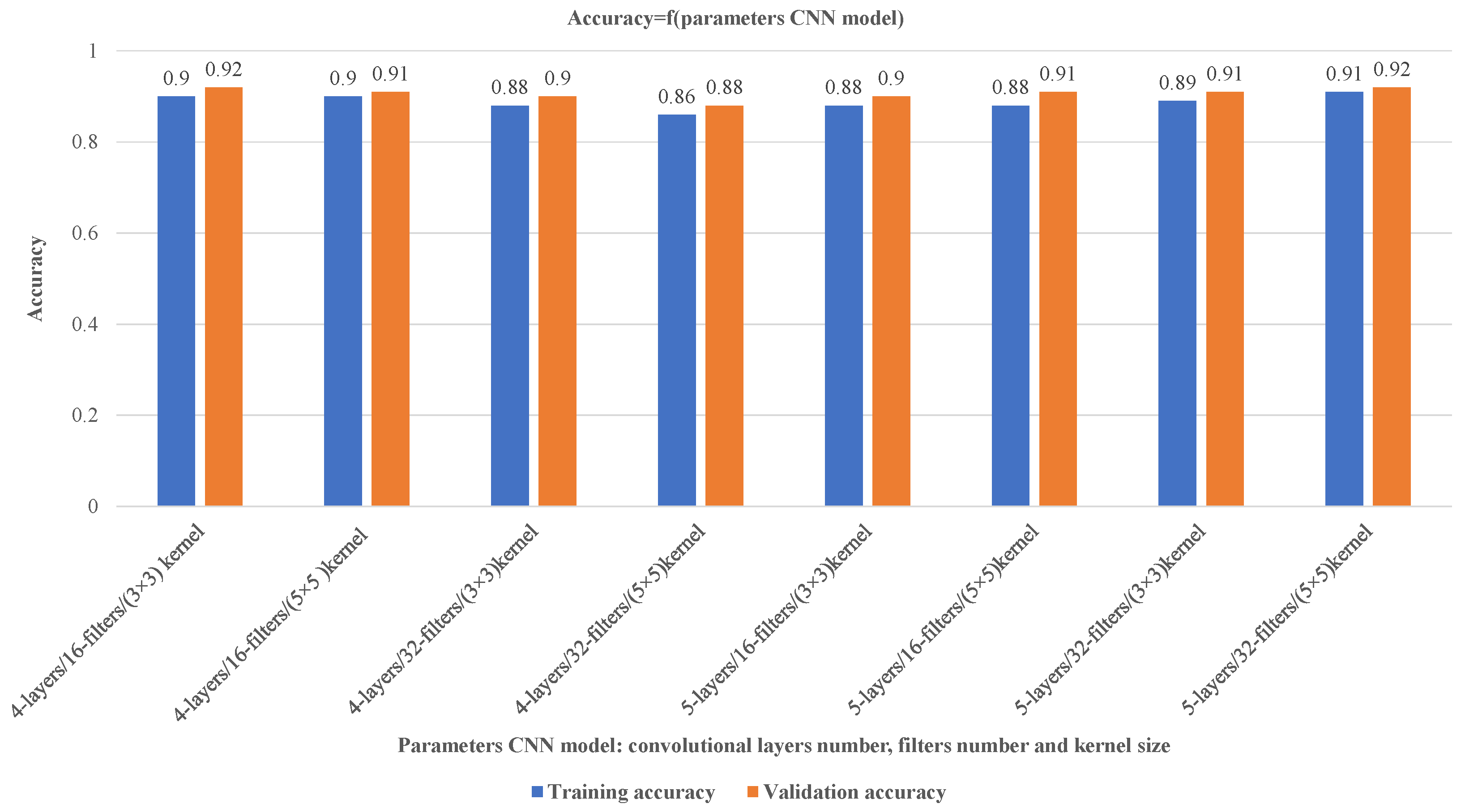

4.2. Proposed CNN Model

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| RF | Random Forest |
| ND | Normal Defect |
| CR | Cracks |
| C | Cavity |
| I | Inclusion |
| LOF | Lack Of Fusion |
| SD | Shape Defect |
| NDT | Non-Destructive Testing |
| BAM | Federal Institute for Materials Research and Testing |
| HOG | Histogram of Oriented Gradients |
| DNN | Deep Neural Network |
| SVM | Support Vector Machines |
| SAE | Stacked Auto-Encoder |

References

1. Hou, W.; Rao, L.; Zhu, A.; Zhang, D. Feature Fusion for Weld Defect Classification with Small Dataset. J. Sens. 2022, 2022, 8088202.
2. Liu, W.; Shan, S.; Chen, H.; Wang, R.; Sun, J.; Zhou, Z. X-ray weld defect detection based on AF-RCNN. Weld. World 2022, 66, 1165–1177.
3. Yang, L.; Wang, H.; Huo, B.; Li, F.; Liu, Y. An automatic welding defect location algorithm based on deep learning. NDT E Int. 2021, 120, 102435.
4. Provencal, E.; Laperrière, L. Identification of weld geometry from ultrasound scan data using deep learning. Procedia CIRP 2021, 104, 122–127.
5. Yan, Y.; Liu, D.; Gao, B.; Tian, G.; Cai, Z. A deep learning-based ultrasonic pattern recognition method for inspecting girth weld cracking of gas pipeline. IEEE Sens. J. 2020, 20, 7997–8006.
6. Droubi, M.G.; Faisal, N.H.; Orr, F.; Steel, J.A.; El-Shaib, M. Acoustic emission method for defect detection and identification in carbon steel welded joints. J. Constr. Steel Res. 2017, 134, 28–37.
7. Kumaresan, S.; Aultrin, K.J.; Kumar, S.; Anand, M.D. Transfer learning with CNN for classification of weld defect. IEEE Access 2021, 9, 95097–95108.
8. Hu, A.; Wu, L.; Huang, J.; Fan, D.; Xu, Z. Recognition of weld defects from X-ray images based on improved convolutional neural network. Multimed. Tools Appl. 2022, 81, 15085–15102.
9. Ajmi, C.; Zapata, J.; Elferchichi, S.; Zaafouri, A.; Laabidi, K. Deep learning technology for weld defects classification based on transfer learning and activation features. Adv. Mater. Sci. Eng. 2020, 2020, 1574350.
10. Zhu, H.; Ge, W.; Liu, Z. Deep learning-based classification of weld surface defects. Appl. Sci. 2019, 9, 3312.
11. Baek, D.; Moon, H.S.; Park, S.H. In-process prediction of weld penetration depth using machine learning-based molten pool extraction technique in tungsten arc welding. J. Intell. Manuf. 2022, 1–17.
12. Cardellicchio, A.; Nitti, M.; Patruno, C.; Mosca, N.; di Summa, M.; Stella, E.; Renò, V. Automatic quality control of aluminium parts welds based on 3D data and artificial intelligence. J. Intell. Manuf. 2023, 1–20.
13. Shevchik, S.; Le-Quang, T.; Meylan, B.; Farahani, F.V.; Olbinado, M.P.; Rack, A.; Masinelli, G.; Leinenbach, C.; Wasmer, K. Supervised deep learning for real-time quality monitoring of laser welding with X-ray radiographic guidance. Sci. Rep. 2020, 10, 3389.
14. Mery, D.; Riffo, V.; Zscherpel, U.; Mondragón, G.; Lillo, I.; Zuccar, I.; Lobel, H.; Carrasco, M. GDXray: The database of X-ray images for nondestructive testing. J. Nondestruct. Eval. 2015, 34, 42.
15. Yang, L.; Jiang, H. Weld defect classification in radiographic images using unified deep neural network with multi-level features. J. Intell. Manuf. 2021, 32, 459–469.
16. Ajmi, C.; Zapata, J.; Martínez-Álvarez, J.J.; Doménech, G.; Ruiz, R. Using deep learning for defect classification on a small weld X-ray image dataset. J. Nondestruct. Eval. 2020, 39, 68.
17. Hou, W.; Wei, Y.; Jin, Y.; Zhu, C. Deep features based on a DCNN model for classifying imbalanced weld flaw types. Measurement 2019, 131, 482–489.
18. Nacereddine, N.; Goumeidane, A.B.; Ziou, D. Unsupervised weld defect classification in radiographic images using multivariate generalized Gaussian mixture model with exact computation of mean and shape parameters. Comput. Ind. 2019, 108, 132–149.
19. Su, L.; Wang, L.; Li, K.; Wu, J.; Liao, G.; Shi, T.; Lin, T. Automated X-ray recognition of solder bump defects based on ensemble-ELM. Sci. China Technol. Sci. 2019, 62, 1512–1519.
20. Zhang, H.; Chen, Z.; Zhang, C.; Xi, J.; Le, X. Weld defect detection based on deep learning method. In Proceedings of the 2019 IEEE 15th International Conference on Automation Science and Engineering (CASE), Vancouver, BC, Canada, 22–26 August 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1574–1579.
21. Zhang, Z.; Wen, G.; Chen, S. Weld image deep learning-based on-line defects detection using convolutional neural networks for Al alloy in robotic arc welding. J. Manuf. Process. 2019, 45, 208–216.
22. Jiang, H.; Hu, Q.; Zhi, Z.; Gao, J.; Gao, Z.; Wang, R.; He, S.; Li, H. Convolution neural network model with improved pooling strategy and feature selection for weld defect recognition. Weld. World 2021, 65, 731–744.
23. Thakkallapally, B.C. Defect classification from weld radiographic images using VGG-19 based convolutional neural network. In Proceedings of the NDE 2019—Conference & Exhibition, Bengaluru, India, 5–7 December 2019; Volume 18.
24. Jiang, H.; Zhao, Y.; Gao, J.; Wang, Z. Weld defect classification based on texture features and principal component analysis. Insight-Non-Destr. Test. Cond. Monit. 2016, 58, 194–200.
25. Kumaresan, S.; Aultrin, K.J.; Kumar, S.; Anand, M.D. Deep Learning Based Simple CNN Weld Defects Classification Using Optimization Technique. Russ. J. Nondestruct. Test. 2022, 58, 499–509.
26. Stephen, D.; Lalu, P. Development of Radiographic Image Classification System for Weld Defect Identification using Deep Learning Technique. Int. J. Sci. Eng. Res. 2021, 12, 390–394.
27. Khelifi, L.; Mignotte, M. Deep learning for change detection in remote sensing images: Comprehensive review and meta-analysis. IEEE Access 2020, 8, 126385–126400.
28. Li, Y. Research and application of deep learning in image recognition. In Proceedings of the 2022 IEEE 2nd International Conference on Power, Electronics and Computer Applications (ICPECA), Shenyang, China, 21–23 January 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 994–999.
29. Bohra, P.; Campos, J.; Gupta, H.; Aziznejad, S.; Unser, M. Learning activation functions in deep (spline) neural networks. IEEE Open J. Signal Process. 2020, 1, 295–309.
30. Rahmat, T.; Ismail, A.; Aliman, S. Chest X-ray image classification using faster R-CNN. Malays. J. Comput. MJoC 2019, 4, 225–236.
31. Chang, Y.; Wang, W. A Deep Learning-Based Weld Defect Classification Method Using Radiographic Images with a Cylindrical Projection. IEEE Trans. Instrum. Meas. 2021, 70, 5018911.
32. Fioravanti, C.C.B.; Centeno, T.M.; Da Silva, M.R.D.B. A deep artificial immune system to detect weld defects in DWDI radiographic images of petroleum pipes. IEEE Access 2019, 7, 180947–180964.
33. Yang, D.; Cui, Y.; Yu, Z.; Yuan, H. Deep learning based steel pipe weld defect detection. Appl. Artif. Intell. 2021, 35, 1237–1249.
34. Yun, J.P.; Shin, W.C.; Koo, G.; Kim, M.S.; Lee, C.; Lee, S.J. Automated defect inspection system for metal surfaces based on deep learning and data augmentation. J. Manuf. Syst. 2020, 55, 317–324.
35. Saraiva, A.A.; Santos, D.; Costa, N.J.C.; Sousa, J.V.M.; Ferreira, N.M.F.; Valente, A.; Soares, S. Models of Learning to Classify X-ray Images for the Detection of Pneumonia using Neural Networks. In Proceedings of the Bioimaging, Prague, Czech Republic, 22–24 February 2019; pp. 76–83.
36. Stephen, D.; Lalu, P.; Sudheesh, R. X-ray Weld Defect Recognition Using Deep Learning Technique. Int. Res. J. Eng. Technol. 2021, 8, 818–823.
37. Dong, X.; Taylor, C.J.; Cootes, T.F. A random forest-based automatic inspection system for aerospace welds in X-ray images. IEEE Trans. Autom. Sci. Eng. 2020, 18, 2128–2141.
38. Qaisar, S.M. Efficient mobile systems based on adaptive rate signal processing. Comput. Electr. Eng. 2019, 79, 106462.
39. Qaisar, S.M.; Khan, S.I.; Srinivasan, K.; Krichen, M. Arrhythmia classification using multirate processing metaheuristic optimization and variational mode decomposition. J. King Saud-Univ.-Comput. Inf. Sci. 2023, 35, 26–37.

| Layer (Type) | Output Shape | Param # |
|---|---|---|
| conv2d (Conv2D) | (None, 124, 124, 32) | 2,432 |
| max_pooling (MaxPooling2D) | (None, 62, 62, 32) | 0 |
| conv2d_1 (Conv2D) | (None, 60, 60, 64) | 18,496 |
| max_pooling2d_1 (MaxPooling2D) | (None, 30, 30, 64) | 0 |
| conv2d_2 (Conv2D) | (None, 28, 28, 128) | 73,856 |
| max_pooling2d_2 (MaxPooling2D) | (None, 14, 14, 128) | 0 |
| conv2d_3 (Conv2D) | (None, 12, 12, 128) | 147,584 |
| max_pooling2d_3 (MaxPooling2D) | (None, 6, 6, 128) | 0 |
| conv2d_4 (Conv2D) | (None, 4, 4, 256) | 295,168 |
| max_pooling2d_4 (MaxPooling2D) | (None, 2, 2, 256) | 0 |
| dropout (Dropout) | (None, 2, 2, 256) | 0 |
| Flatten (Flatten) | (None, 1024) | 0 |
| Dense (Dense) | (None, 512) | 524,800 |
| Dense_1 (Dense) | (None, 6) | 3,078 |
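
The output shapes and parameter counts in the layer table can be cross-checked with a few lines of arithmetic. The sketch below assumes a 128×128×3 input, a 5×5 kernel in the first convolution and 3×3 kernels afterwards, unpadded ("valid") convolutions with stride 1, and 2×2 max pooling. These settings are inferred from the numbers in the table, not stated in the text, and the function names are hypothetical. The dropout layer is omitted because it changes neither the shape nor the parameter count.

```python
def conv2d_params(kernel, in_channels, filters):
    """Weights (kernel * kernel * in_channels per filter) plus one bias per filter."""
    return kernel * kernel * in_channels * filters + filters


def dense_params(in_units, out_units):
    """Fully connected weights plus one bias per output unit."""
    return in_units * out_units + out_units


def trace_model(input_size=128, in_channels=3,
                conv_layers=((5, 32), (3, 64), (3, 128), (3, 128), (3, 256)),
                dense_units=(512, 6)):
    """Return (layer, output shape, parameter count) rows for the assumed model."""
    rows = []
    size, channels = input_size, in_channels
    for kernel, filters in conv_layers:
        size = size - kernel + 1          # unpadded convolution, stride 1
        rows.append(("conv2d", (size, size, filters),
                     conv2d_params(kernel, channels, filters)))
        size //= 2                        # 2x2 max pooling
        rows.append(("max_pooling2d", (size, size, filters), 0))
        channels = filters
    units = size * size * channels
    rows.append(("flatten", (units,), 0))
    for out_units in dense_units:
        rows.append(("dense", (out_units,), dense_params(units, out_units)))
        units = out_units
    return rows


if __name__ == "__main__":
    rows = trace_model()
    for name, shape, params in rows:
        print(f"{name:14s} {str(shape):16s} {params:>9,d}")
    print("total parameters:", sum(params for _, _, params in rows))
```

Under these assumptions the per-layer counts match the table, and they sum to 1,065,414 trainable parameters, about half of which sit in the first dense layer.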

A Model Trained and Compiled Using Adam

| Class | Precision | Recall | F1-score | Support |
|---|---|---|---|---|
| C | 0.97 | 0.79 | 0.87 | 98 |
| CR | 0.99 | 0.93 | 0.96 | 163 |
| I | 0.85 | 0.87 | 0.86 | 162 |
| LOF | 0.89 | 0.93 | 0.91 | 163 |
| ND | 0.99 | 0.99 | 0.99 | 120 |
| SD | 0.87 | 0.97 | 0.91 | 161 |
| Accuracy | | | 0.92 | 867 |
| Macro avg | 0.93 | 0.91 | 0.92 | 867 |
| Weighted avg | 0.92 | 0.92 | 0.92 | 867 |
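
The two summary rows of the classification report differ only in how the per-class scores are combined: the macro average weights every class equally, while the weighted average weights each class by its support. The sketch below recomputes both from the per-class values in the report. The helper names are hypothetical, and because the inputs are already rounded to two decimals the results are approximate.

```python
# Per-class values copied from the classification report:
# class -> (precision, recall, f1, support)
REPORT = {
    "C":   (0.97, 0.79, 0.87, 98),
    "CR":  (0.99, 0.93, 0.96, 163),
    "I":   (0.85, 0.87, 0.86, 162),
    "LOF": (0.89, 0.93, 0.91, 163),
    "ND":  (0.99, 0.99, 0.99, 120),
    "SD":  (0.87, 0.97, 0.91, 161),
}
SUPPORT = 3  # index of the support column


def macro_average(report, index):
    """Unweighted mean of one metric column over all classes."""
    values = [row[index] for row in report.values()]
    return sum(values) / len(values)


def weighted_average(report, index):
    """Mean of one metric column, weighted by each class's support."""
    total = sum(row[SUPPORT] for row in report.values())
    return sum(row[index] * row[SUPPORT] for row in report.values()) / total


if __name__ == "__main__":
    print("total support:", sum(row[SUPPORT] for row in REPORT.values()))
    for index, name in enumerate(("precision", "recall", "f1")):
        print(name,
              "macro:", round(macro_average(REPORT, index), 2),
              "weighted:", round(weighted_average(REPORT, index), 2))
```

Rounded to two decimals, the recomputed macro averages are 0.93, 0.91, and 0.92 and the weighted averages are all 0.92, which agrees with the summary rows, and the supports sum to 867.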

| Model | Accuracy |
|---|---|
| InceptionV3 | 88.58% |
| RF | 86% |
| LeNet | 85% |
| CNN | 92% |

| Optimizer | Filters | Kernel_Size | Units | Dropout_Rate | Batch_Size | Accuracy |
|---|---|---|---|---|---|---|
| Optuna optimizer | 32 | 4 | 448 | 0.28 | 32 | 91% |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Say, D.; Zidi, S.; Qaisar, S.M.; Krichen, M. Automated Categorization of Multiclass Welding Defects Using the X-ray Image Augmentation and Convolutional Neural Network. Sensors 2023, 23, 6422. https://doi.org/10.3390/s23146422
