Abstract

Good data feature representation and high precision classifiers are the key steps for pattern recognition. However, when the data distributions between testing samples and training samples do not match, the traditional feature extraction methods and classification models usually degrade. In this paper, we propose a domain adaptation approach to handle this problem. In our method, we first introduce cross-domain mean approximation (CDMA) into semi-supervised discriminative analysis (SDA) and design semi-supervised cross-domain mean discriminative analysis (SCDMDA) to extract shared features across domains. Secondly, a kernel extreme learning machine (KELM) is applied as a subsequent classifier for the classification task. Moreover, we design a cross-domain mean constraint term on the source domain into KELM and construct a kernel transfer extreme learning machine (KTELM) to further promote knowledge transfer. Finally, the experimental results from four real-world cross-domain visual datasets prove that the proposed method is more competitive than many other state-of-the-art methods.

1. Introduction

Traditional classification tasks deal with situations where the distribution of source domain samples and target domain samples are the same, and a classifier trained from source domain samples can be directly applied to the target domain samples. Theoretical studies on classifiers are also based on this assumption [1]. However, in real-world environments, the distribution of source domain samples and target domain samples is often different due to factors such as lighting, viewpoints, weather conditions, and cameras [2]. The most advanced classifiers trained on source domain samples may dramatically degrade when applied to target domain samples. One possible solution is to annotate the new data and retrain the model. Unfortunately, labeling a large number of samples is costly and time-consuming. Another promising solution is domain adaptation (DA), which aims to minimize the distribution gap between the two domains and learn a fairly accurate shared classifier for both domains, where the target domain samples do not have available labels.

One strategy of DA is to find invariant feature representation subspaces between domains to minimize distribution divergence. A large number of existing methods applying this strategy learn a shared feature subspace where the distribution mismatch between the two domains is reduced, and then employ standard classification methods in this subspace [3]. Metrics that measure the mismatch in distribution between domains include maximum mean discrepancy (MMD) [4], Kullback–Leibler (KL) divergence [5], Bregman divergence [6], and Wasserstein distance [7], among others. Due to the fact that MMD is a nonparametric estimation criterion for distance, researchers have proposed various adaptive models commonly with this metric. Pan et al. [8] proposed a classical DA method maximum mean difference embedding (MMDE) by defining a semidefinite program (SDP) and introducing the MMD criterion to match marginal distribution. To further reduce the computational burden of the MMDE and to preserve the key features of the data, [9] proposed an improved method, transfer component analysis (TCA), which reduces the differences in marginal distribution between domains by projecting the data into a latent feature space. Gong et al. [10] developed geodesic flow kernel (GFK), which is another classical method. GFK establishes connections between domains based on a series of intermediate subspaces instead of using only one subspace. In addition to marginal distributions, the joint distribution adaptive (JDA) [11] approach also considers conditional distribution matching, where target samples are iteratively assigned pseudo-labels. Based on JDA, various variants have been developed. Lu et al. [12] introduced weight adaptation into JDA, paid attention to the feature representation of a single sample, emphasized the difference between samples in the target domain learning, and proposed the weights adaptation joint distribution adaptation (W-JDA). A joint distribution adaptation based transfer network with diverse feature aggregation (JDFA) is proposed by Jia et al. [13], which enhances feature extraction capability across large domain gaps through the diverse feature aggregation module; then, MMD was employed to reduce distribution differences.

Huang et al. [14] proposed the extreme learning machine (ELM) model, which is a “generalized” single-hidden-layer feedforward neural network that has been employed to address classification and regression problems. The hidden layer nodes of the ELM are randomly initialized and without tuning, and the output weights between the hidden and output layers can be analytically determined [15]. Many researchers have extended it in theory and application, and proposed numerous new ELMs, such as AdaBoost ELM [16], kernel-based multilayer ELM (ML-KELM) [17], voting-based ELM (V-ELM) [18], Kalman filter ELM (KA-ELM) [19], and others. However, the above algorithms require a prerequisite assumption that there is consistency in the distribution of labeled training samples and testing samples, and therefore these classical ELM algorithms cannot handle domain adaptation problem. To address this issue, some improvements have been made to ELM. Zang et al. [20] proposed a two-stage transfer limit learning machine (TSTELM) framework. The framework uses MMD to reduce the distribution differences of output layers between domains in the statistical matching stage. In the subspace alignment stage, the source and target model parameters are aligned, and output weight approximation is added to further promote knowledge transfer across domains. Chen et al. [21] developed a DST-ELM method to learn a new feature space. With the target domain discrimination information fixed in the feature space, the source domain samples are adapted to the target domain and the MMD distance between them is minimized. The MMD metric has been used in the above methods to measure the distribution differences between the source domain samples and the target domain samples as a whole, but they ignore the impact of individual samples on the distribution differences.

To sum up, there are several challenges to DA. The first issue is the similarity between source domain and target domain. To cope with this issue, suitable distribution distance measurement criteria are found to eliminate this dissimilarity. Secondly, there is class separability and shared feature extraction between source and target domains in the feature subspace. The third challenge is the knowledge transfer ability of the classifier.

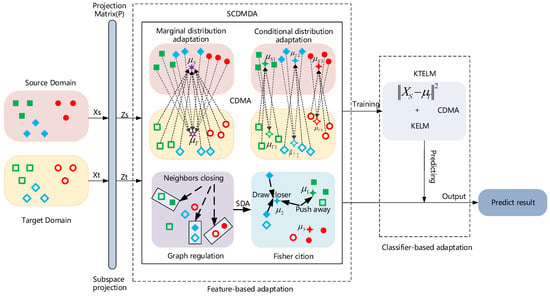

We believe that a well-developed DA algorithm is able to address these three challenges. Therefore, we want to tackle all these problems simultaneously. We propose a domain adaptation approach which consists of two stages: feature-based adaptation and classifier-based adaptation. At the former stage, we develop semi-supervised cross-domain mean discriminative analysis (SCDMDA). It introduces cross-domain mean approximation (CDMA) [17] into SDA to minimize the marginal and conditional interdomain distribution discrepancy. Meanwhile, the category separability of LDA and the original structure information of graph regularizers are used together to capture shared features between the source and target domains. At the latter stage, we first choose KELM as the classification model. Then, a kernel transfer extreme learning machine (KTELM) is designed by adding a cross-domain mean constraint term into KELM to further enhance the knowledge transfer ability of our method, as shown in Figure 1. Finally, we investigate the performance of our approach on public domain adaptation datasets, and the experimental results show that it can learn better domain-invariant features with high accuracy and outperform other existing shallow and deep domain adaptation methods.

Figure 1.

Samples of the same color are of the same class in the source and target domains. An illustration of our method: (1) SCDMDA is designed by introducing CDMA into SDA for shared feature extraction across domains. (2) KTELM is constructed by adding a cross-domain mean approximation constraint into a kernel extreme learning machine for classification. (3) SCDMDA and KTELM are combined for cross-domain feature extraction and classification.

In this paper, we make the following contributions:

- We introduce CDMA into SDA and then propose SCDMDA. It extracts shared discriminative features across domains by using CDMA to minimize the marginal and conditional discrepancies between domains and applying SDA to exploit the label information and original structure information.

- We present KTELM by designing a cross-domain mean approximation constraint into KELM for classification in domain adaptation.

- We obtain a classifier with the ability of knowledge transfer by combining SCDMDA and KTELM and implement a classification task on public image datasets. The results show the superiority of our approach.

2. Preliminary

In this section, we will briefly introduce SDA, ELM, KELM, and DA.

2.1. Semi-Supervised Discriminant Analysis (SDA)

As a classical semi-supervised feature extraction algorithm, SDA extends LDA by adding a geometrical regularizer to prevent overfitting. Given a sample set , it is divided into two parts: a labeled subset and an unlabeled subset . is a labeled sample with a label , and is an unlabeled sample. and are the number of labeled and unlabeled samples, respectively, . The dimension of the sample is . We can define the objective function of SDA as follows:

where is the projection matrix; is Laplacian matrix. is the graph neighbor similarity matrix of . , where and are among nearest neighbors of each other, and denotes the set of nearest neighbors of . is a diagonal matrix; its entries are a column (or a row, since is symmetric) sum of , . is the parameter balancing the graph regularization. and represent the interclass scatter matrix and between-class scatter matrix, respectively, which are calculated as follows:

where , and . is the number of class, is the -th sample belonging to class , represents the number , and is the mean value of samples of class .

2.2. Extreme Learning Machine (ELM) and Kernel Extreme Learning Machine (KELM)

As a high-performance classifier, ELM with a single hidden layer has a fast training process and high classification performance. Given a dataset with samples and its label , we could construct an ELM network with hidden nodes as follows:

where is the output prediction result of sample , and is the output weight connecting the hidden layer and output layer. is an activation function to improve the nonlinear fitting ability of the network. and are input weight and bias, between the input layer and the hidden layer, which can be set randomly. It can be seen that is the key parameter of an ELM network. If we want to obtain an optimal result, the following objective function needs to be solved:

In Equation (5), , , and . is a regularizer to avoid model overfitting, and is the training error. is a tradeoff parameter between minimizing training errors and the regularizer.

We consider Equation (5) to be a regular least square problem and set . The optimal solution of is calculated as follows:

Then, the output of the trained ELM model corresponding to test sample is

where .

If the activation function is unknown, we let , , is a kernel function. The output of KELM can be defined as:

where .

2.3. Domain Adaptation (DA)

In practical application scenarios, it is difficult to collect enough training samples with the same distribution as the testing samples. For example, in photography, it is hard to avoid changes in pixel distribution caused by changes in lighting, posture, weather, and background. If a large number of samples with the same distribution are selected and manually marked for model training, the cost will be high [22]. The above distribution changes will generate a domain bias between the training dataset and the testing dataset, which could degrade the performance of traditional machine learning methods. DA can not only apply knowledge from other domains (source domains) that contain substantial labeled samples and are related to the target domain for model training, but also eliminate domain bias. Existing DA methods are mainly divided into instance-based adaptation, feature-based adaptation, and classifier-based adaptation.

The instance-based adaptation methods seek a strategy which selects “good” samples in the source domain to participate in model training and suppresses “bad” samples to prevent negative transfer. Kernel mean matching (KMM) [23] minimizes the maximum mean difference [24] and weighs the source data and target data in the reproducing kernel Hilbert space (RKHS), which can correct the inconsistent distribution between domains. As a classic instance-based adaptation method, transfer adaptive boosting (TrAdaBoost) [25] extends the AdaBoost algorithm to weigh source-labeled samples and target-labeled samples to match the distributions between domains. In the work [26], a simple two-stage algorithm was proposed to reweight the results of testing data from the training classifier using their signed distance to the domain separator. Moreover, it applied manifold regularization to propagate the labels of target instances with larger weights to those with smaller weights. Instance-based adaptation methods are more efficient for knowledge transfer, but negative transfer [27] can easily occur when there are no shared samples between domains.

The feature-based adaptation methods attempt to learn a subspace with a better shared features representation, in which the marginal or conditional distribution divergences between domains are minimized to facilitate knowledge transfer. Transfer component analysis (TCA), joint distribution adaptation (JDA), balanced distribution adaptation (BDA), and multiple kernel variant of maximum mean discrepancy (MK-MMD) apply the maximum mean discrepancy (MMD) strategy to perform distribution matching, thus minimizing the marginal or conditional distribution divergences between domains [28]. In [29], Wang et al. use the locality preserving projection (LPP) to learn a joint subspace from the source and target domains.

In recent years, deep neural networks (DNNs) have performed well in high-level semantic feature extraction with strong expression ability, and have broad application prospects in domain adaptation. Domain adaptation networks (DAN) [30], faster domain adaptation networks (FDAN) [31], residual transfer networks (RTN) [32], domain adversarial neural networks (DANN) [33], and collaborative and adversarial networks (CAN) [34] use MMD to align distribution discrepancies in an end-to-end learning paradigm for feature extraction across domains. However, DNNs also have their shortcomings, for example, the models contain huge parameters and require a large amount of data for training, which is not suitable for small sample data. DNNs have a strong ability to fit data and can approximate any complex objective function, but the computational cost is high. Our method in this paper has a small number of parameters and fast calculation speed, and it is suitable for small sample data classification.

The goal of the classifier-based adaptation methods is to adjust the parameters of the classifier so that the classifier trained on the source domain has good performance in the target task. Adaptive support vector machine (Adapt-SVM) [35] introduced a regularizer into SVM to minimize the classification error and the discrepancy between two classifiers trained on the source- and target-labeled samples. Based on LS-SVM, Tommasi et al. [36] presented an adaptation model called multi-model knowledge transfer (Multi-KT), in which the objective function imposed the requirement that the target classifier and a linear combination of the source classifiers be close to each other for knowledge transfer. Considering the simplicity and efficiency of ELM, many adaptation models based on ELM have been proposed for domain adaptation, such as joint domain adaptation semi-supervised extreme learning machine (JDA-S2ELM) [37], cross-domain ELM (CDELM) [38], domain space transfer ELM (DST-ELM) [21], domain adaptation extreme learning machine (DAELM) [39], and so on.

In addition, the adaptation model based on the generative adversarial network (adversarial-based adaptation) [34,40,41,42] has recently shown exceptional performance. Many target samples with transferable and domain-invariant features are produced through the generator of adversarial learning and then applied to confuse the domain discriminator training on the source domain and optimize the generator. It can effectively reduce the distribution differences between domains and transfer knowledge efficiently. Since generating samples with domain-invariant features is the main task of adversarial-based adaptation, it is commonly attributed to feature-based adaptation.

In this paper, our method is a combination of feature- and classifier-based adaptation. SCDMDA belongs to a shallow feature-based adaptation method, and KTELM is a classifier-based adaptation method. At the feature-based adaptation stage, SCDMDA finds domain-invariant features. It not only applies SDA to maximize between-class scatter, minimize within-class scatter, and keep the original structure information by pseudo-labeled target samples and labeled source samples, but it also reduces the distribution differences between domains using CDMA. At the classifier-based adaptation stage, a cross-domain mean constraint term is added to KELM to further enhance its knowledge transfer ability.

3. Proposed Method

In a domain adaptation environment, given a source domain with labeled samples and a target domain with unlabeled samples , where the distributions between and are not the same but are related and the label space is shared, the number of categories is denoted by . To address the domain adaptation problem, our proposed method is divided into two stages: feature-based adaptation and classifier-based adaptation.

At the feature-based adaptation stage, we design SCDMDA, and its goal is to find an optimal transformation matrix , which embeds and into low-dimensional subspaces and , where is the subspace dimension. In this subspace, the distributions between and are closer than those between and .

At the classifier-based adaptation stage, KTELM is constructed by adding a cross-domain mean constraint term into KELM, which improves the knowledge transfer ability of KELM.

3.1. Feature-Based Adaptation of SCDMDA

3.1.1. Cross-Domain Mean Approximation (CDMA)

From Equation (1) in Section 2.1, we can see that although SDA has better feature extraction performance than LDA, it can obtain bad feature representation when the training samples and testing samples have inconsistent data distributions. To address this issue, we need to reduce the distribution discrepancy of training samples and testing samples with the help of domain adaptation technology based on CDMA. It is expressed as follows:

where and . is the mean vector of the target (source) domain samples, and is the mean vector of the target (source) domain with category. and are the number of samples in and . , , , and . is the center matrix, where is the column vector with element 1, . In Equation (9), CDMA adapts both the marginal distribution in the first term and the conditional distribution in the second term.

3.1.2. Semi-supervised Cross-Domain Mean Discriminative Analysis (SCDMDA)

To enhance the knowledge transfer ability of SDA, we combine Equation (9) and Equation (1) and design the objective function of SCDMDA for shared feature extraction across domains as follows:

Furthermore, the above formula is equivalent to:

where with tradeoff parameter is introduced into the objective function to ensure the sparsity of matrix . and balance the influence of the graph regularizer and LDA, respectively.

We set , and Equation (11) becomes a generalized eigen-decomposition problem:

Finally, Equation (12) is used to find , the smallest eigenvectors for the optimal adaptation matrix , to reduce the difference distribution between domains.

To address the issue of the nonlinear data, we transform Equations (11) and (12) into a high-dimensional space by kernel mapping, namely . The kernel matrix corresponding to the dataset is , then we obtain:

where and are the nonlinear forms of and under the kernel map, respectively, which can be obtained through Ref. [43]. A complete procedure of SCDMDA is summarized in Algorithm 1.

| Algorithm 1. SCDMDA |

| Input: Dataset and , subspace dimension , parameters , , , , and , classifier KTELM, maximum number of iterations . Output: Projection matrix and target prediction . Step1: According to Equations (1) and (9), construct , , and , and set and to 0. Step2: Let . Step3: Solve Equation (12) or Equation (14) to obtain the projection matrix . Step4: Project and by into -dimensional subspace to obtain and . Step5: Learn a KTELM on , and classify to obtain the label set of the target domain data . Step6: Use and , construct , and solve and ( and in the nonlinear case) according to Equations (2) and (3). Step7: Let . Step8: If or does not change, output , otherwise, go to Step3. |

3.2. Classifier-Based Adaptation of KTELM

After feature adaptation of SCDMADA, we can obtain and obtain and . Then, it can be seen from Section 2.2 that we can train a KELM on and effectively predict . However, we can see from Equation (5) that KELM has no capacity for knowledge transfer. In this section, we design a cross-domain mean constraint on the source domain as follows:

where is the samples dataset with category in , and denotes the mean of the samples dataset with category in . Then, we add Equation (15) into Equation (5) and obtain

where and balance the influence of training errors and cross-domain mean constraint on the source domain, respectively.

Similar to ELM, we set and obtain

We kernelize Equation (17) and let , , is a kernel function. The output of KTELM can be defined as:

where , , and is the mean of . , and is the mean of .

3.3. Discussion

In this paper, our method is proposed for domain adaptation, which includes feature adaptation based on SCDMDA and classifier adaptation based on KTELM.

- From Equation (11) and Equation (18), it can be seen that, compared with SDA, SCDMDA adopts CDMA to reduce the distribution discrepancy between domains, which is better for domain adaptation than SDA. Moreover, as a semi-supervised feature extraction method, SCDMDA focuses more attention on individual information with the help of the category separability of LDA and the original structure information of graph regularizers.

- Compared with MMD, CDMA reflects individual differences. In our method, CDMA mines individual information through and , which is a more effective interdomain distribution difference measurement mechanism than MMD. In addition, we verify the improved method on k-NN, KELM, and KTELM classifiers.

- In the classical ELM, solving the output weight that connects the hidden layer and the output layer is the key, and the optimal solution is obtained by solving Equation (5). However, for samples with interdomain distribution differences, the solution obtained by Equation (5) is not the optimal. The domain adaptation is added to the ELM to obtain Equation (16), and the optimal can be obtained. By adopting the cross-domain mean constraint on the source domain to achieve the cross-domain transfer of knowledge, the interdomain distribution difference can be reduced, which shows that KTELM has higher domain adaptation accuracy.

4. Experiments and Analysis

In this section, extensive experiments were conducted on four widely adopted datasets, including Office+Caltech [10], Office-31 [44] object recognition, USPS [45] and MNIST [46] digital handwriting, and PIE face [47], to test SCDMDA+KETLM. Table 1 shows some descriptions of these. All the experiments were conducted on a PC equipped with Win10, an Intel i5 10400F, 2.9 GHz CPU, and 8 GB RAM, with software MATLAB 2017b. In the experiment, we repeated the experiment 20 times and recorded the average results.

Table 1.

Description of image datasets.

4.1. Dataset Description

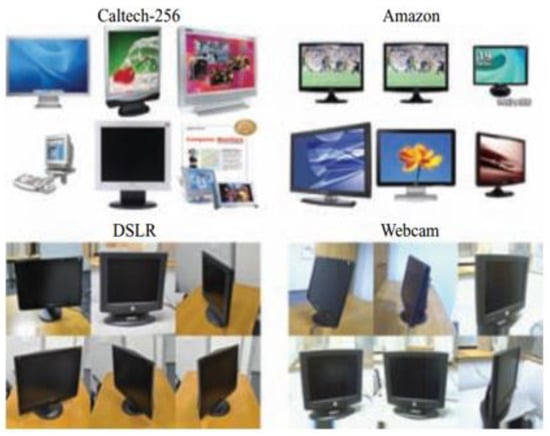

Office + Caltech: It is a visual domain adaptation benchmark dataset, which contains two sub-datasets of Office and Caltech (C), shown in Figure 2. Office contains a total of 4652 pictures with 31 categories from 3 different domain datasets: Amazon (A), DSLR (D), and Webcam (W). In Amazon, each class typically contains an average of 90 images, with an average of 30 images in DSLR or Webcam. Additionally, Caltech is a benchmark dataset for target recognition, containing 30,607 images in 256 categories. In our experiments, we selected 10 shared categories in 4 domains with a total of 2533 images with 800 SURF features. During the experiment, two different domains were selected from the four databases as source and target domain datasets. Thus, we had 12 cross-domain tasks, i.e., C vs. A, C vs. W, C vs. D... and D vs. W.

Figure 2.

Office + Caltech dataset.

Office-31: This is a public dataset commonly used to investigate domain adaptation algorithms, which includes three distinct domains: amazon, webcam, and dslr. It contains 4652 images with 31 categories. In our experiments, we carried out a classification task in a 2048-dimensional ResNet-50 feature space and performed six groups of experiments: amazon vs. dslr, amazon vs. webcam, dslr vs. amazon, dslr vs. webcam, webcam vs. amazon, and webcam vs. dslr.

PIE: It is a standard facial dataset collected by Carnegie Mellon University, which contains 41,368 face images with a pixel resolution of 32 × 32 from 68 people. These images depict facial postures, lighting, and expressions (as shown in Figure 3a). To verify the performance of the domain adaptation algorithm, we selected five subsets from the dataset: PIE1 (left pose), PIE2 (upward pose), PIE3 (downward pose), PIE4 (frontal pose), and PIE5 (right pose). In each subset (posture), all face images with different expressions and illuminations are changed. In this paper, we selected the source data and the target data, respectively, and constructed 20 cross-domain tasks: PIE1 vs. PIE2, PIE1 vs. PIE3... PIE5 vs. PIE4.

Figure 3.

Datasets PIE, USPS, and MNIST. (a) PIE; (b) USPS; (c) MNIST.

USPS+MNIST: USPS and MNIST are two closely correlated, yet differently distributed, handwritten datasets, sharing 10 numeric categories of 0–9 (as shown in Figure 3b,c). The USPS dataset contains 7291 training samples and 2007 test samples, each with 16 × 16 pixels. There are 60,000 training images and 10,000 test images with 28 × 28 pixels in the MNIST database. During this experiment, 1800 pictures from USPS and 2000 pictures from MNIST were selected to construct source and target domain datasets. All the pictures were converted into grayscale images with 16 × 16 pixels. We constructed two recognition tasks, i.e., USPS as the source domain and MNIST as the target domain (USPS vs. MNIST) and vice versa (MNIST vs. USPS).

4.2. Experiment Setting

To evaluate the performance of our algorithm, we compared our approach with several state-of-the-art domain adaptation approaches categorized into three classes: the traditional methods, e.g., 1-NN [48], KELM, and SDA; the shallow feature adaptation methods, e.g., GFK, JDA, STDA-CMC [49], W-JDA, and JDA-CDMAW [50]; and the deep transfer methods, e.g., DAN, DANN, and CAN. In addition, our method is divided into three cases: SCDMDA0 represents SCDMDA+1NN, SCDMDA1 represents SCDMDA+KELM, and SCDMDA2 represents SCDMDA+KTELM. In addition to KELM, SCDMDA1, and SCDMDA2, other algorithms all choose 1-NN as the basic classifier. We applied classification accuracy as the evaluation metric for each algorithm and its formula is as follows:

To achieve optimal functionality of SCDMDA and KTELM, we set on Office+Caltech, USPS+MNIST, PIE, and Office-31 datasets, respectively, , , , , , and for all datasets. For comprehensive comparison, the results of some methods are provided in their original papers or their publicly available codes. We tuned parameters according to their default parameters in released codes or their respective literature.

4.3. Results and Analysis

We applied our method, compared algorithms, and performed classification experiments on the Office+Caltech, USPS+MNIST, PIE, and Office-31 datasets. The results are presented in Table 2 and Table 3. From Table 2, conclusions can be obtained as follows:

Table 2.

Accuracy (%) comparison of different algorithms on Office+Caltech, PIE, and USPS+MNIST datasets.

Table 3.

Accuracy (%) comparison of different algorithms on Office-31 (Resnet-50).

- Table 2 summarizes the accuracies of all methods on Office+Caltech, PIE, and USPS+MNIST datasets with shallow feature representation, and the optimal result of each row in the table among all the methods is presented in bold. Our method SCDMDA (0–2) outperforms any other compared methods. The total average accuracy of SCDMDA2 on the 34 tasks is 74.9%, which achieves a 28.75% improvement compared with the baseline SDA. This verifies that CDMA is effective in reducing the between-domain distribution discrepancy and improving the knowledge transfer ability of SDA.

- SCDMDA2 outperforms SCDMDA0 and SCDMDA1, indicating that KTELM and KELM have higher accuracy than 1-NN, and that the cross-domain mean constraint on the source domain is effective for domain adaptation.

- Similarly, the semi-supervised feature extraction method SCDMDA0 works better than SWTDA-CMC. An explanation is that CDMA is a better distribution discrepancy measurement criterion than MMD, so our method works quite well in reducing domain bias. SCDMDA0 and SWTDA-CMC are better than JDA, JDA-CDMAW, and W-JDA. This illustrates that the category separability of LDA and the original structure information of graph regularizers are important for the classification task.

- The accuracy of SDA, GFK, JDA, STDA-CMC, W-JDA, JDA-CDMAW, and SCDMDA (0–2) outperforms 1-NN in most cases, showing the importance of feature extraction in classification tasks. Most domain adaptation techniques such as JDA, STDA-CMC, W-JDA, JDA-CDMAW, and SCDMDA (0–2) achieve higher accuracy than SDA, due to cross-domain shared feature extraction with few or no same distribution samples in the target domain. The accuracy of JDA, STDA-CMC, W-JDA, JDA-CDMAW, and SCDMDA (0–2) generally improves shared feature extraction through joint distribution adaptation.

In addition, results on the Office-31 dataset with ResNet-50 features are shown in Table 3. We observe the classification results obtained by 1-NN, KELM, JDA, STDA-CMC, JDA-CDMAW, and SCDMDA (0–2) and some deep domain adaptation methods such as DAN, DANN, and CAN to verify the effectiveness of our approach on this more complex dataset. SCDMDA2 outperforms other methods on all six groups of domain adaptation tasks. In particular, for the amazon vs. dslr domain adaptation task, it achieves a higher accuracy (91.16%). It further validates that our method, equipped with deep generic features, can further reduce the cross-domain distribution discrepancy and achieve the best adaptation performance, demonstrating the potential of our method.

Table 4 shows the running-time comparisons of SCDMDA (0–2) with 1-NN, KELM, JDA, STDA-CMC, and JDA-CDMAW on the PIE1 vs. PIE2 dataset. The following can be seen: (1) Because JDA, STDA-CMC, JDA-CDMAW, and SCDMDA (0–2) require 20 iterations for label refinement, these approaches consume more time than 1-NN, and KELM. (2) JDA-CDMAW needs less running time than JDA due to no requirement for the construction of the interdomain distribution divergence matrix. (3) SCDMDA (0–2) and STDA-CMC take more time than JDA-CDMAW to obtain the within- and between-class scatter matrices and graph Laplacian matrix (4) The time cost of SCDMDA2 requires more time than SCDMDA1 to compute the cross-domain mean constraint term for domain adaptation. Because KELM is faster than 1-NN, SCDMDA1 needs less time than SCDMDA0.

Table 4.

Consuming time of different approaches on PIE1 vs. PIE 2.

4.4. Sensitivity Analysis

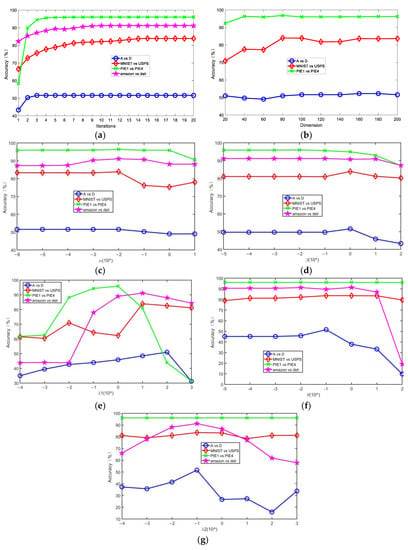

In this section, we conducted some experiments to investigate the influence on the accuracy of SCDMDA2, including the number of dimensions of the subspace, the graph tradeoff parameter , the SDA tradeoff parameter , and the sparse regularization parameter in SCDMDA; and the cross-domain mean constraint control parameter and the model regular tradeoff parameter of KETLM. In addition, we needed to observe the convergence of the designed algorithm. Then, we carried out experimental analysis on four cross-domain tasks, including A vs. D, MNIST vs. USPS, PIE1 vs. PIE2, and amazon vs. dslr datasets, and the results are shown in Figure 4.

Figure 4.

Effect of number of iterations, dimensions of subspace, α, β, , θ, and on accuracy.

In Figure 4a, we observe that the accuracy of SCDMDA2 grows with the increasing number of iterations on the four sets of datasets and gradually stabilizes after the 8th iteration, verifying its good convergence.

Figure 4b illustrates the experiments with the subspace dimension on 3 datasets, which indicated the impact of the subspace dimension on our method. It can be observed that SCDMDA2 obtains strong robustness when . Moreover, we did not perform this experiment on the amazon vs. dslr datasets, because the Office-31 dataset with ResNet-50 feature is robust with regard to different dimensions for feature transfer learning.

We ran SCDMDA2 with other parameters fixed . From Figure 4c, we can see that the accuracy rises first and then falls with increasing and obtains optimal performance when on 4 datasets. When is too small, the original structure information cannot be exploited. On the contrary, if it is too large, other terms in Equation (13) will not work and hurt the proposed algorithm.

Similar situations also occur in Figure 4d–g. The accuracy curve increases first and then decreases with the increase of , , , and . When , ,

, and , SCDMDA2 works well. If , , , and are too small, label information will be lost, the sparsity of the projection matrix fails to be controlled to reach the optimum, the cross-domain mean constraint term does not’ work, and KTELM will decline. In other words, the control of these parameters is beneficial to our approach when the parameter values are reasonable.

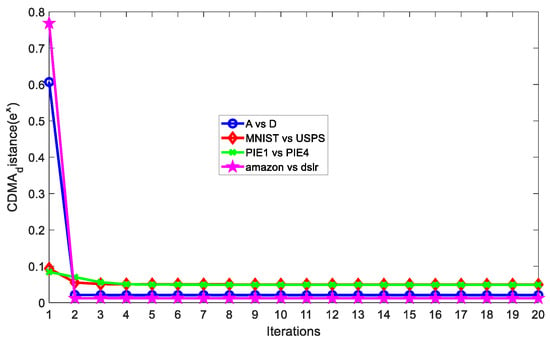

We ran SCDMDA with KTELM on A vs. D, MNIST vs. USPS, PIE1 vs. PIE2, and amazon vs. dslr datasets with the optimal parameter settings, and computed the CDMA distance (shown in Figure 5) according to its definition as follows:

Figure 5.

CDMA distances versus number of iterations.

We can see that as the iteration number increases, SCDMDA reduces the between-domain marginal and conditional distribution difference, leading to a smaller CDMA distance and higher accuracy. Therefore, SCDMDA can extract better shared features across domains.

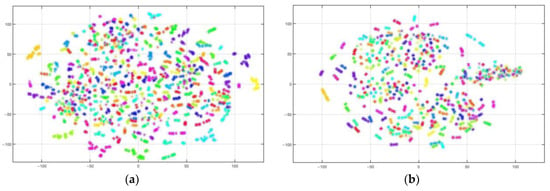

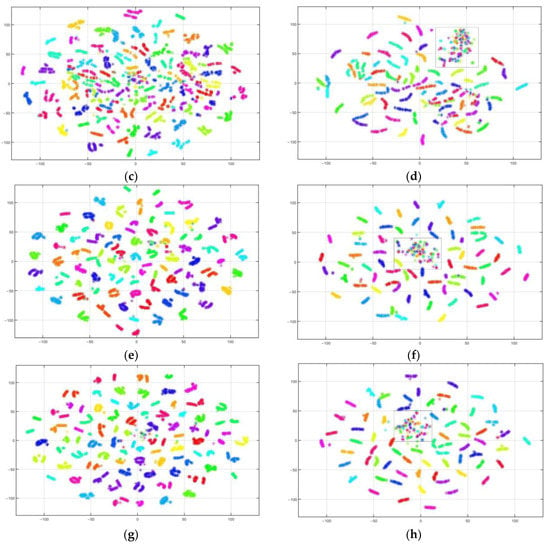

4.5. Visualization Analysis

We further display the t-distributed stochastic neighbor embedding (t-SNE) visualization plots of feature embedding for the PIE1 vs. PIE2 classification task. t-SNE, as a dimension-reduction method, transforms high-dimensional data into a low-dimensional space for visualization. In t-SNE visualization plots, clusters of the same color are closer, clusters of different colors are more distant, and the extracted features are more discriminative. The visualized source and target features derived from raw data, JDA, STDA-CMC, and SCDMA were drawn in the feature scatterplot Figure 6a–h, in which each color represents one of the 68 categories.

Figure 6.

Scatter diagram of feature visualization on PIE1 vs. PIE2. (a) Source domain feature. (b) Target domain feature. (c) Source domain JDA feature. (d) Target domain JDA feature. (e) Source domain STDA-CMC feature. (f) Target domain STDA-CMC feature. (g) Source domain SCDMDA feature. (h) Target domain SCDMDA feature.

As we can see from Figure 6a,b, the original data of the source and target domains before adaptation are highly mixed and difficult to distinguish. At the moment, we can easily obtain a poor classifier trained on the source domain. It can be seen from Figure 6c–f that JDA and STDA-CMC can unite samples with the same category and partly separate samples with different categories. Compared with JDA and STDA-CMC from Figure 6d,f, the small number of easily misclassified samples of SCDMDA in the boxes of Figure 6h can be especially observed, showing that SCDMDA can effectively obtain more discriminative and domain-invariant feature representation.

5. Conclusions

In this paper, we develop a semi-supervised domain adaptation approach which contains feature-based adaptation and classifier-based adaptation. At the feature-based adaptation stage, we introduce CDMA into SDA to reduce the cross-domain distribution discrepancy and develop SCDMDA for domain-invariant feature extraction. At the classifier-based adaptation stage, we select KELM as the baseline classifier. To further enhance knowledge transfer ability of the proposed approach, we design a cross-domain mean constraint term and add it to KELM to construct KTELM for domain adaptation. Through feature and classifier adaptation, we can learn more discriminatory feature representations and obtain higher accuracy. Comprehensive experiments on several visual cross-domain datasets show that SCDMDA with KTELM significantly outperforms many other state-of-the-art shallow and deep domain adaptation methods.

In the future, we plan to explore the interdomain metric criterion in more depth. At present, CDMA is superior to MMD in reducing cross-domain distribution differences; however, its computational complexity is greater than that of MMD. To solve this problem, better metrics can be developed to improve the distribution differences between domains.

Author Contributions

Methodology, software, writing—original draft preparation, X.L.; validation, writing—review and editing, funding acquisition, J.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under Grants 62073124 and 51805149, in part by the Key Scientific Research Projects of Universities in Henan Province under Grant 22A120005, the National Aviation Fund Projects under Grant 201701420002, and Henan Province Key Scientific and Technological Projects under Grant 222102210095.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wang, X.Z.; Xing, H.J.; Li, Y.; Hua, Y.L.; Dong, C.R.; Pedrycz, W. A study on relationship between generalization abilities and fuzziness of base classifiers in ensemble learning. IEEE Trans. Fuzzy Syst. 2014, 23, 1638–1654. [Google Scholar] [CrossRef]

- Li, S.; Huang, J.Q.; Hua, X.S.; Zhang, L. Category dictionary guided unsupervised domain adaptation for object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Palo Alto, CA, USA, 2–9 February 2021; Volume 35, pp. 1949–1957. [Google Scholar]

- Zhang, B.Y.; Liu, Y.Y.; Yuan, H.W.; Sun, L.J.; Ma, Z. A joint unsupervised cross-domain model via scalable discriminative extreme learning machine. Cogn. Comput. 2018, 10, 577–590. [Google Scholar] [CrossRef]

- Gretton, A.; Borgwardt, K.M.; Rasch, M.J.; Schölkopf, B.; Smola, A. A kernel two-sample test. J. Mach. Learn. Res. 2012, 13, 723–773. [Google Scholar]

- Zhuang, F.; Cheng, X.; Luo, P. Supervised representation learning with double encoding-layer autoencoder for transfer learning. ACM Trans. Intell. Syst. Technol. (TIST) 2017, 9, 1–17. [Google Scholar] [CrossRef]

- Shi, Q.; Zhang, Y.; Liu, X. Regularised transfer learning for hyperspectral image classification. IET Comput. Vis. 2019, 13, 188–193. [Google Scholar] [CrossRef]

- Lee, C.Y.; Batra, T.; Baig, M.H. Sliced wasserstein discrepancy for unsupervised domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 10285–10295. [Google Scholar]

- Pan, S.J.; Kwok, J.T.; Yang, Q. Transfer learning via dimensionality reduction. In Proceedings of the Twenty-Third AAAI Conference on Artificial Intelligence, Chicago, IL, USA, 13–17 July 2008; Volume 8, pp. 677–682. [Google Scholar]

- Pan, S.J.; Tsang, I.W.; Kwok, J.T.; Yang, Q. Domain adaptation via transfer component analysis. IEEE Trans. Neural Netw. 2010, 22, 199–210. [Google Scholar] [CrossRef] [PubMed]

- Gong, B.Q.; Shi, Y.; Sha, F.; Grauman, K. Geodesic flow kernel for unsupervised domain adaptation. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2066–2073. [Google Scholar]

- Long, M.S.; Wang, J.M.; Ding, G.G.; Sun, J.G.; Yu, P. Transfer feature learning with joint distribution adaptation. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 3–6 December 2013; pp. 2200–2207. [Google Scholar]

- Lu, N.N.; Chu, F.; Qi, H.R.; Xia, S. A new domain adaption algorithm based on weights adaption from the source domain. IEEJ Trans. Electr. Electron. Eng. 2018, 13, 1769–1776. [Google Scholar] [CrossRef]

- Jia, S.; Deng, Y.F.; Lv, J.; Du, S.C.; Xie, Z.Y. Joint distribution adaptation with diverse feature aggregation: A new transfer learning framework for bearing diagnosis across different machines. Measurement 2022, 187, 110332. [Google Scholar] [CrossRef]

- Huang, G.B.; Zhu, Q.Y.; Siew, C.K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501. [Google Scholar] [CrossRef]

- Huang, G.; Song, S.J.; Gupta, J.N.D.; Wu, C. Semi-supervised and unsupervised extreme learning machines. IEEE Trans. Cybern. 2014, 44, 2405–2417. [Google Scholar] [CrossRef]

- Sharifmoghadam, M.; Jazayeriy, H. Breast cancer classification using AdaBoost-extreme learning machine. In Proceedings of the 5th Iranian Conference on Signal Processing and Intelligent Systems (ICSPIS), IEEE, Shahrood, Iran, 18–19 December 2019; pp. 1–5. [Google Scholar]

- Zhang, J.; Li, Y.J.; Xiao, W.D.; Zhang, Z.Q. Non-iterative and fast deep learning: Multilayer extreme learning machines. J. Frankl. Inst. 2020, 357, 8925–8955. [Google Scholar] [CrossRef]

- Min, M.C.; Chen, X.F.; Xie, Y.F. Constrained voting extreme learning machine and its application. J. Syst. Eng. Electron. 2021, 32, 209–219. [Google Scholar]

- Li, D.Z.; Li, S.; Zhang, S.B.; Sun, J.R.; Wang, L.C.; Wang, K. Aging state prediction for supercapacitors based on heuristic kalman filter optimization extreme learning machine. Energy 2022, 250, 123773. [Google Scholar] [CrossRef]

- Zang, S.F.; Li, X.H.; Ma, J.W.; Yan, Y.Y.; Gao, J.W.; Wei, Y. TSTELM: Two-stage transfer extreme learning machine for unsupervised domain adaptation. Comput. Intell. Neurosci. 2022, 2022, 1582624. [Google Scholar] [CrossRef] [PubMed]

- Chen, Y.M.; Song, S.J.; Li, S.; Yang, L.; Wu, C. Domain space transfer extreme learning machine for domain adaptation. IEEE Trans. Cybern. 2018, 49, 1909–1922. [Google Scholar] [CrossRef]

- Li, X.; Zhang, W.; Ding, Q.; Sun, J.Q. Multi-layer domain adaptation method for rolling bearing fault diagnosis. Signal Process. 2019, 157, 180–197. [Google Scholar] [CrossRef]

- Cheng, M.; You, X. Adaptive matching of kernel means. In Proceedings of the 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 13–18 September 2021; pp. 2498–2505. [Google Scholar]

- Wang, W.; Li, H.J.; Ding, Z.M.; Nie, F.P.; Chen, J.Y.; Dong, X.; Wang, Z.H. Rethinking maximum mean discrepancy for visual domain adaptation. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 264–277. [Google Scholar] [CrossRef]

- Yan, L.; Zhu, R.X.; Liu, Y.; Mo, N. TrAdaBoost based on improved particle swarm optimization for cross-domain scene classification with limited samples. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 3235–3251. [Google Scholar] [CrossRef]

- Li, S.; Song, S.; Huang, G. Prediction reweighting for domain adaptation. IEEE Trans. Neural Netw. Learn. Syst. 2016, 28, 1682–1695. [Google Scholar] [CrossRef] [PubMed]

- Li, J.Q.; Sun, T.; Lin, Q.Z.; Jiang, M.; Tan, K.C. Reducing negative transfer learning via clustering for dynamic multiobjective optimization. IEEE Trans. Evol. Comput. 2022, 26, 1102–1116. [Google Scholar] [CrossRef]

- Farahani, A.; Voghoei, S.; Rasheed, K.; Arabnia, H.R. A brief review of domain adaptation. In Advances in Data Science and Information Engineering, Transactions on Science and Computational Intelligence; Springer: Cham, Switzerland, 2021; pp. 877–894. [Google Scholar]

- Wang, Q.; Breckon, T.P. Cross-domain structure preserving projection for heterogeneous domain adaptation. Pattern Recognit. 2022, 123, 108362. [Google Scholar] [CrossRef]

- Wei, D.D.; Han, T.; Chu, F.L.; Zuo, M.J. Weighted domain adaptation networks for machinery fault diagnosis. Mech. Syst. Signal Process. 2021, 158, 107744. [Google Scholar] [CrossRef]

- Li, J.J.; Jing, M.M.; Su, H.Z.; Lu, K.; Zhu, L.; Shen, H.T. Faster domain adaptation networks. IEEE Trans. Knowl. Data Eng. 2021, 34, 5770–5783. [Google Scholar] [CrossRef]

- Fang, X.H.; Bai, H.L.; Guo, Z.Y.; Shen, B.; Hoi, S.; Xu, Z.L. DART: Domain-adversarial residual-transfer networks for unsupervised cross-domain image classification. Neural Netw. 2020, 127, 182–192. [Google Scholar] [CrossRef]

- Sicilia, A.; Zhao, X.C.; Hwang, S.J. Domain adversarial neural networks for domain generalization: When it works and how to improve. Mach. Learn. 2023, 1–37. [Google Scholar] [CrossRef]

- Zhang, W.C.; Ouyang, W.L.; Li, W.; Xu, D. Collaborative and adversarial network for unsupervised domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3801–3809. [Google Scholar]

- Yang, J.; Yan, R.; Hauptmann, A.G. Adapting SVM classifiers to data with shifted distributions. In Proceedings of the Seventh IEEE International Conference on Data Mining Workshops (ICDMW 2007), Omaha, NE, USA, 28–31 October 2007; pp. 69–76. [Google Scholar]

- Tommasi, T.; Orabona, F.; Caputo, B. Learning categories from few examples with multi model knowledge transfer. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 928–941. [Google Scholar] [CrossRef] [PubMed]

- Wu, Y.P.; Lv, W.J.; Li, Z.R.; Chang, J.; Li, X.C.; Liu, S. Unsupervised domain adaptation for vibration-based robotic ground classification in dynamic environments. Mech. Syst. Signal Process. 2022, 169, 108648. [Google Scholar] [CrossRef]

- Li, S.; Song, S.J.; Huang, G.; Wu, C. Cross-domain extreme learning machines for domain adaptation. IEEE Trans. Syst. Man Cybern. Syst. 2019, 49, 1194–1207. [Google Scholar] [CrossRef]

- Liu, Y.; Yang, C.; Liu, K.X.; Chen, B.C.; Yao, Y. Domain adaptation transfer learning soft sensor for product quality prediction. Chemom. Intell. Lab. Syst. 2019, 192, 103813. [Google Scholar] [CrossRef]

- Tang, H.; Jia, K. Discriminative adversarial domain adaptation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 5940–5947. [Google Scholar]

- Li, S.; Liu, C.H.; Xie, B.H.; Su, L.M.; Ding, Z.M.; Huang, G. Joint adversarial domain adaptation. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 729–737. [Google Scholar]

- Xu., M.H.; Zhang, J.; Ni, B.B.; Li, T.; Wang, C.J.; Tian, Q.; Zhang, W.J. Adversarial domain adaptation with domain mixup. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 6502–6509. [Google Scholar]

- Cai, D.; He, X.; Han, J. Speed up kernel discriminant analysis. VLDB J. 2011, 20, 21–33. [Google Scholar] [CrossRef]

- Saenko, K.; Kulis, B.; Fritz, M.; Darrell, T. Adapting visual category models to new domains. In Proceedings of the Computer Vision–ECCV 2010: 11th European Conference on Computer Vision, Heraklion, Greece, 5–11 September 2010; pp. 213–226. [Google Scholar]

- Gentile, C. A new approximate maximal margin classification algorithm. In Advances in Neural Information Processing Systems 13, Proceedings of the Neural Information Processing Systems (NIPS) 2000, Denver, USA, 1 January 2000; MIT Press: Cambridge, MA, USA, 2001; pp. 479–485. [Google Scholar]

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffber, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Gross, R.; Matthews, I.; Cohn, J.; Kanade, T.; Baker, S. Multi-pie. Image Vis. Comput. 2010, 28, 807–813. [Google Scholar] [CrossRef] [PubMed]

- Guo, G.; Wang, H.; Bell, D.; Bi, Y.; Greer, K. KNN model-based approach in classification. In Proceedings of the On the Move to Meaningful Internet Systems 2003: CoopIS, DOA, and ODBASE: OTM Confederated In-ternational Conferences, Catania, Italy, 3–7 November 2003; pp. 986–996. [Google Scholar]

- Zang, S.F.; Cheng, Y.H.; Wang, X.S.; Yu, Q. Semi-supervised transfer discriminant analysis based on cross-domain mean constraint. Artif. Intell. Rev. 2018, 49, 581–595. [Google Scholar] [CrossRef]

- Zang, S.F.; Cheng, Y.H.; Wang, X.; Yu, Q.; Xie, G.S. Cross domain mean approximation for unsupervised domain adaptation. IEEE Access 2020, 8, 139052–139069. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).