Scalability of Cyber-Physical Systems with Real and Virtual Robots in ROS 2

Abstract

1. Introduction

2. Materials and Methods

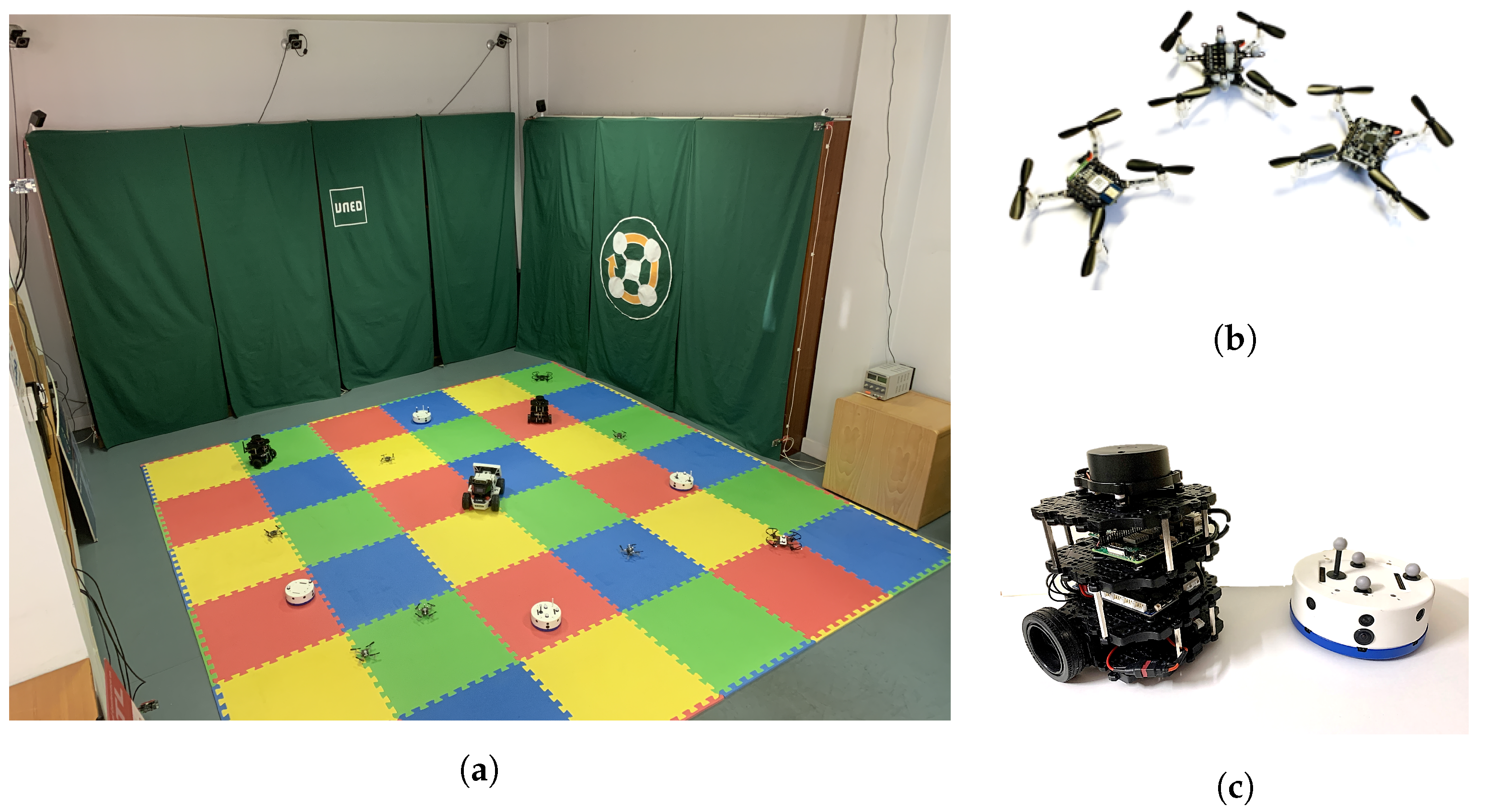

2.1. Experimental Platform

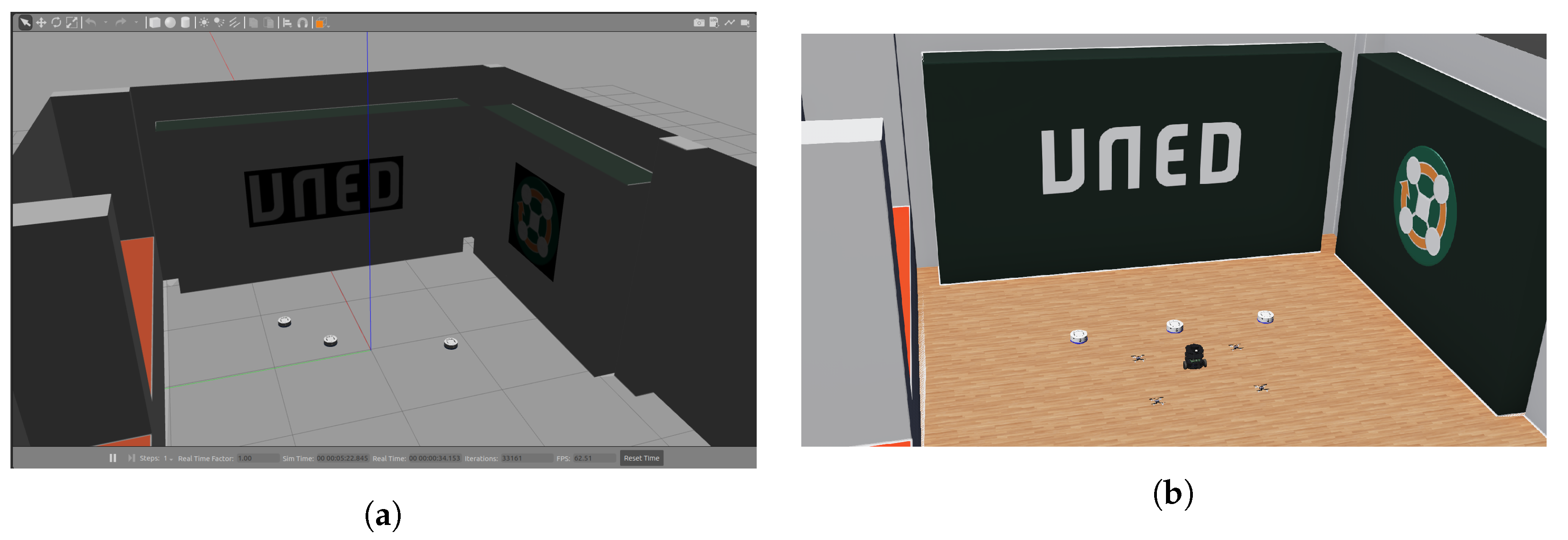

2.2. Simulators

2.3. ROS 2

2.4. Computational Resources

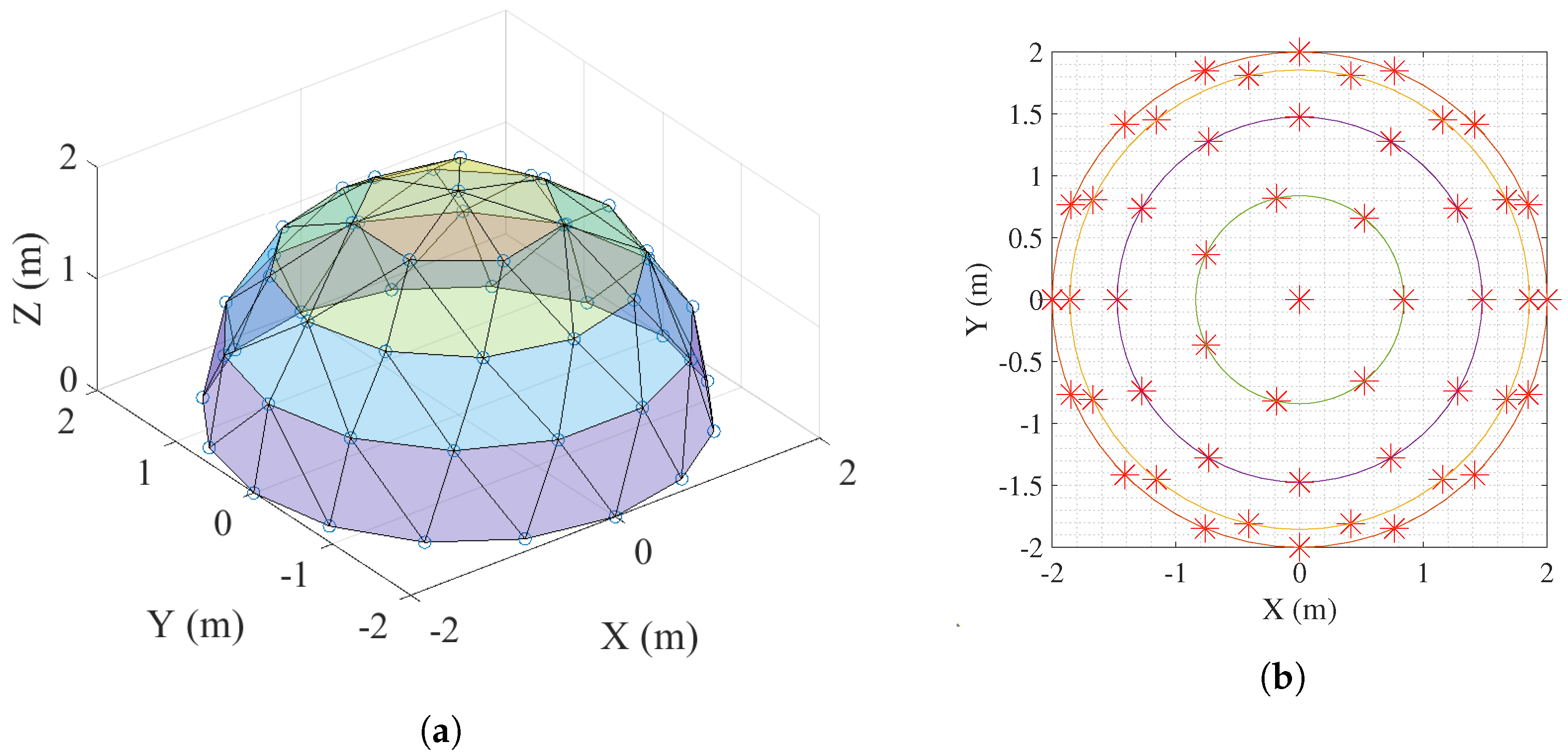

3. Problem Formulation and Experiments

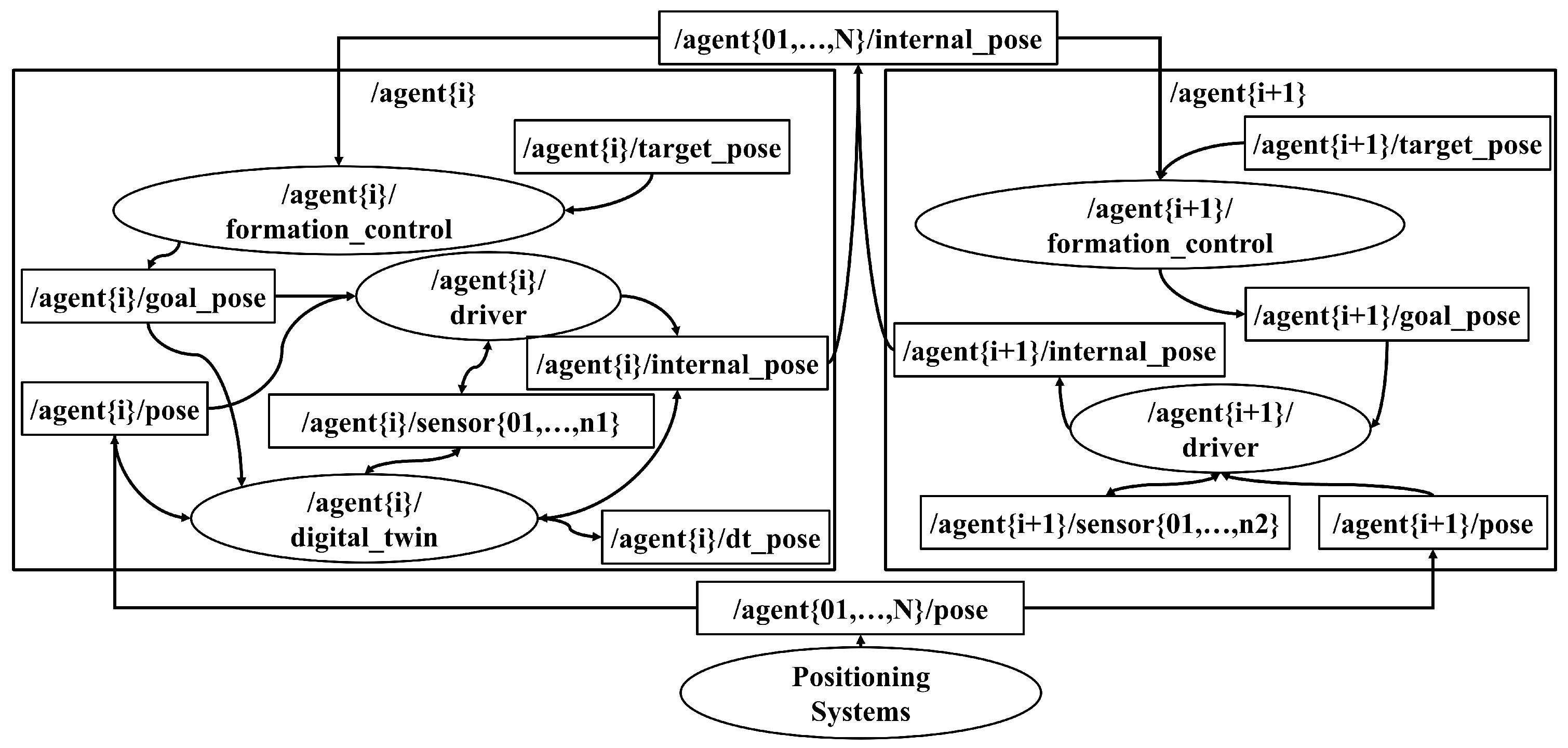

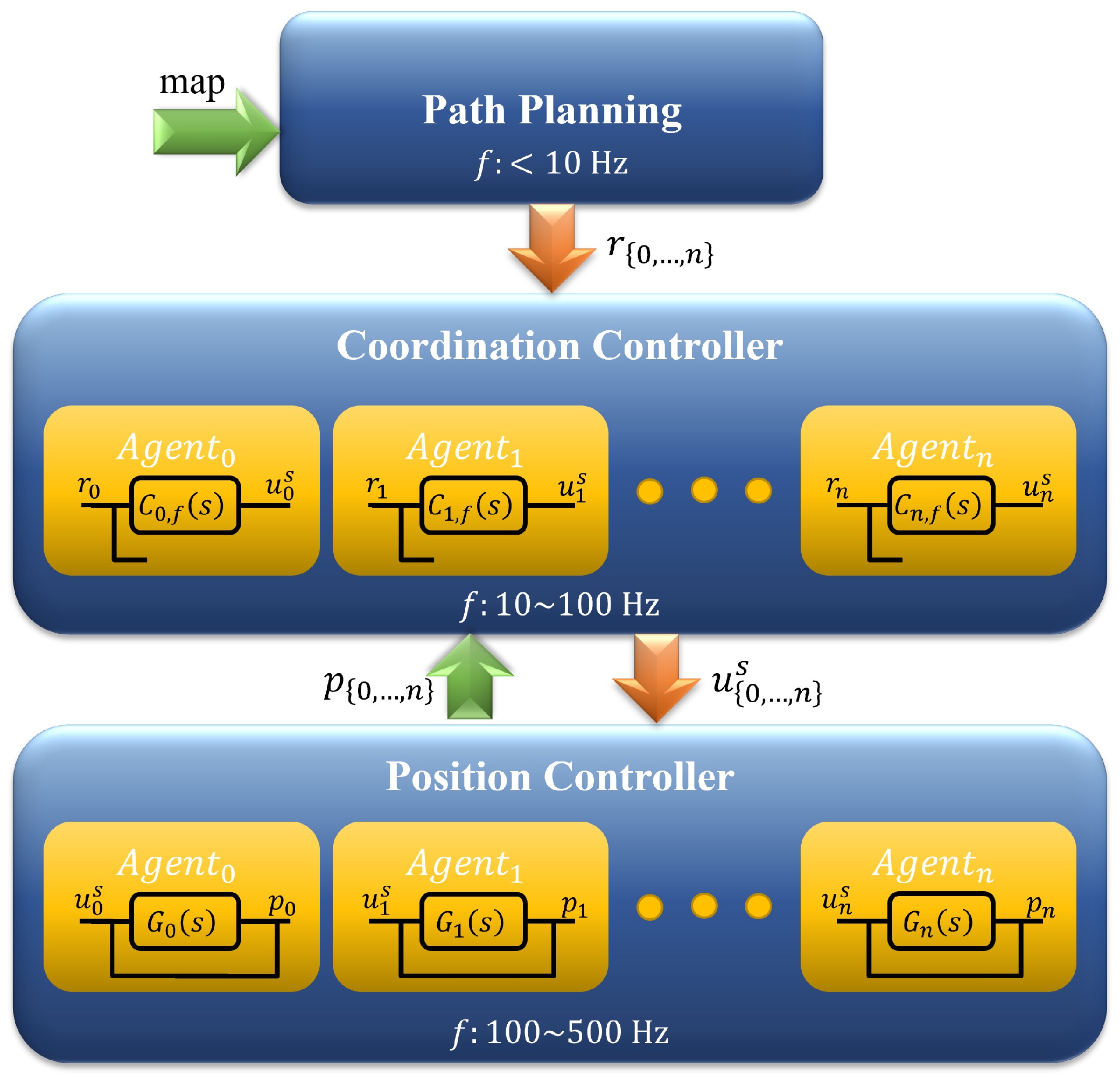

3.1. Control Architecture

3.2. Experiments Description

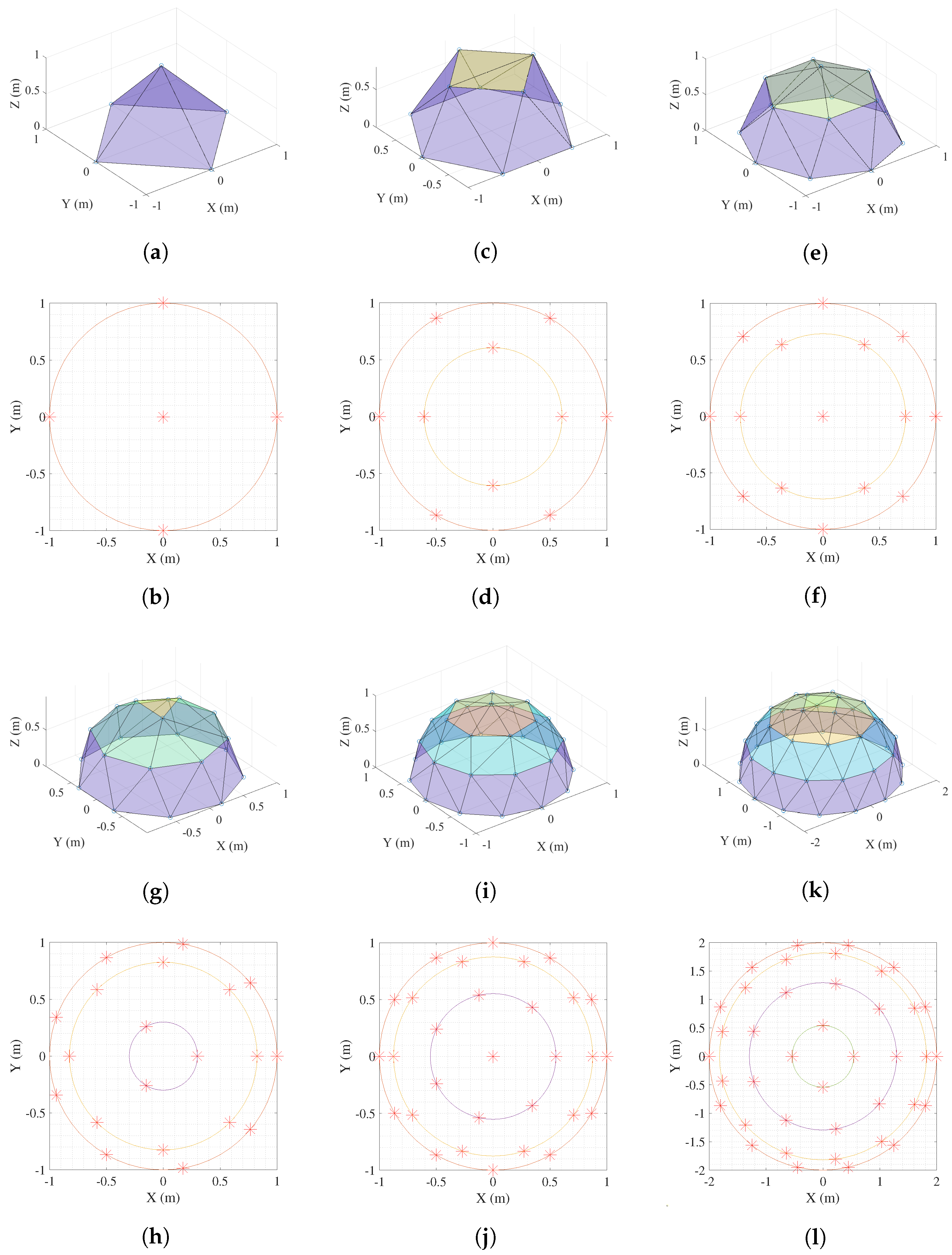

- The MRS of experiment A (see Figure 6a,b) consists of five agents: four Khepera IV robots and one Crazyflie. All the robots are real, and only their corresponding digital twins (DTs) run in the virtual environment.

- In experiment B (see Figure 6c,d), the MRS is composed of 10 agents: four Crazyflies and six Kheperas. Four real Crazyflies and four real Kheperas are used. In the virtual environment, two purely virtual Kheperas run in addition to the digital twins of the real robots.

- In experiment C (see Figure 6e,f), the MRS is composed of 15 agents: seven Crazyflies and eight Kheperas. Five real Crazyflies and four real Kheperas are used. The remaining six agents are purely digital.

- The fourth experiment, D (see Figure 6g,h), employs a total of 20 agents, 11 of which are Kheperas and 9 are Crazyflies. In this experiment, six Crazyflies and four Kheperas are real. The remaining 10 agents are purely digital.

- The MRS in experiment E (see Figure 6i,j) is composed of 30 agents: 18 Crazyflies and 12 Kheperas.

- The last experiment, F (see Figure 6k,l), uses 40 robots: 26 Crazyflies and 14 Kheperas.

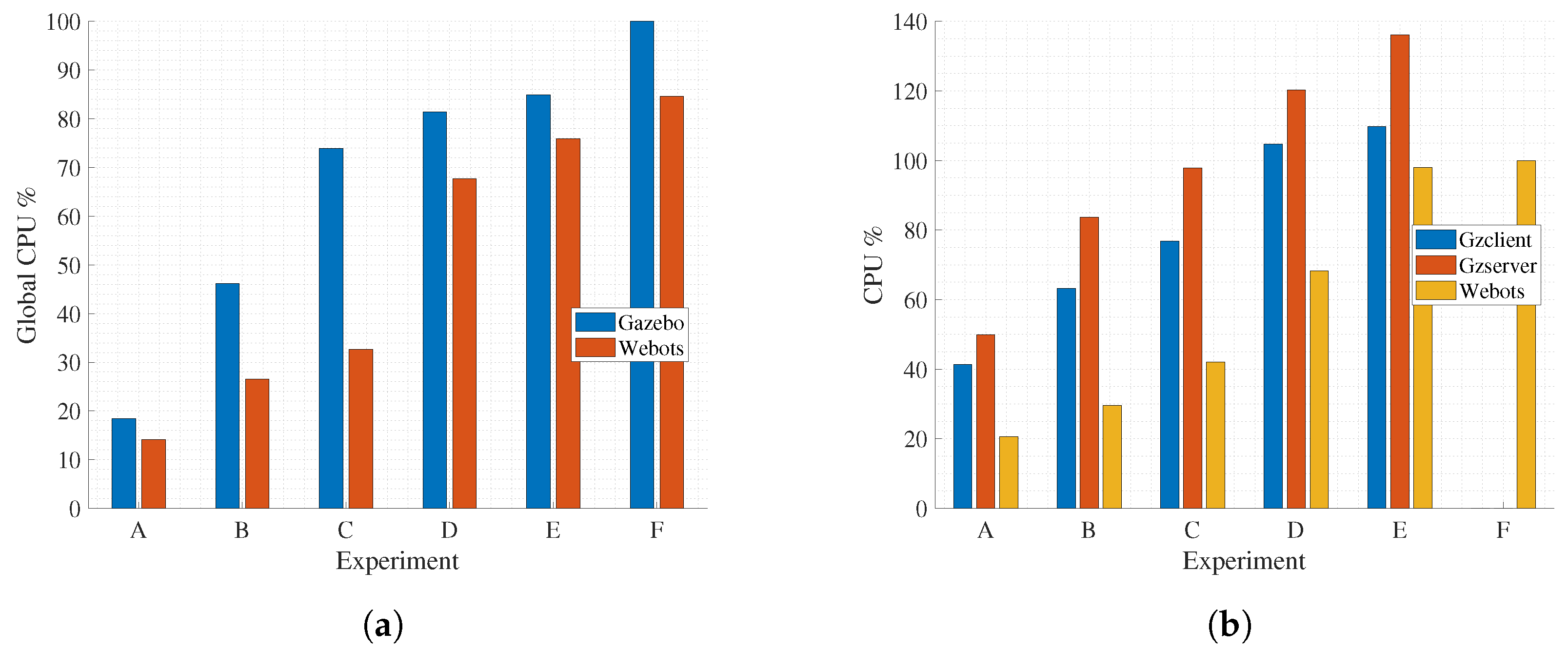

- Global CPU percentage. This value represents the current system-wide CPU utilization as a percentage.

- CPU percentage. This value represents the CPU utilization of an individual process as a percentage. It can exceed 100% when a process runs multiple threads on different CPU cores.

- Real-Time Factor (RTF). This is the ratio of the time elapsed within the simulation (simulation time) to the execution time (real time). An RTF of 1 means the simulation keeps pace with real time; values below 1 mean it runs slower than real time.
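The per-process CPU percentage and the RTF defined above are both ratios of two time intervals, which the following sketch makes explicit. It uses only the Python standard library and is not the measurement code used in the experiments; the function names `process_cpu_percent` and `real_time_factor` are illustrative assumptions. In a ROS 2 setup, the simulation timestamps would typically come from the simulator's `/clock` topic, which is not shown here.

```python
import time


def process_cpu_percent(cpu_t0, cpu_t1, wall_t0, wall_t1):
    """CPU time used by a process as a percentage of elapsed wall-clock time.

    The result can exceed 100.0 when the process accumulates CPU time on
    several cores at once.
    """
    elapsed = wall_t1 - wall_t0
    if elapsed <= 0:
        raise ValueError("wall-clock interval must be positive")
    return 100.0 * (cpu_t1 - cpu_t0) / elapsed


def real_time_factor(sim_t0, sim_t1, wall_t0, wall_t1):
    """Ratio of simulated time to wall-clock time over the same interval."""
    elapsed = wall_t1 - wall_t0
    if elapsed <= 0:
        raise ValueError("wall-clock interval must be positive")
    return (sim_t1 - sim_t0) / elapsed


# Hypothetical numbers: 3 s of CPU time consumed during 2 s of wall time.
print(process_cpu_percent(0.0, 3.0, 10.0, 12.0))  # 150.0

# Hypothetical numbers: the simulator advanced 4 s while 5 s passed.
print(real_time_factor(0.0, 4.0, 0.0, 5.0))  # 0.8

# Live measurement of the current process around a small busy loop.
cpu0, wall0 = time.process_time(), time.perf_counter()
sum(i * i for i in range(200_000))
cpu1, wall1 = time.process_time(), time.perf_counter()
print(process_cpu_percent(cpu0, cpu1, wall0, wall1))
```

Sampling both clocks at the same instants keeps the two intervals comparable; the live value depends on the machine and is therefore left without an expected output.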

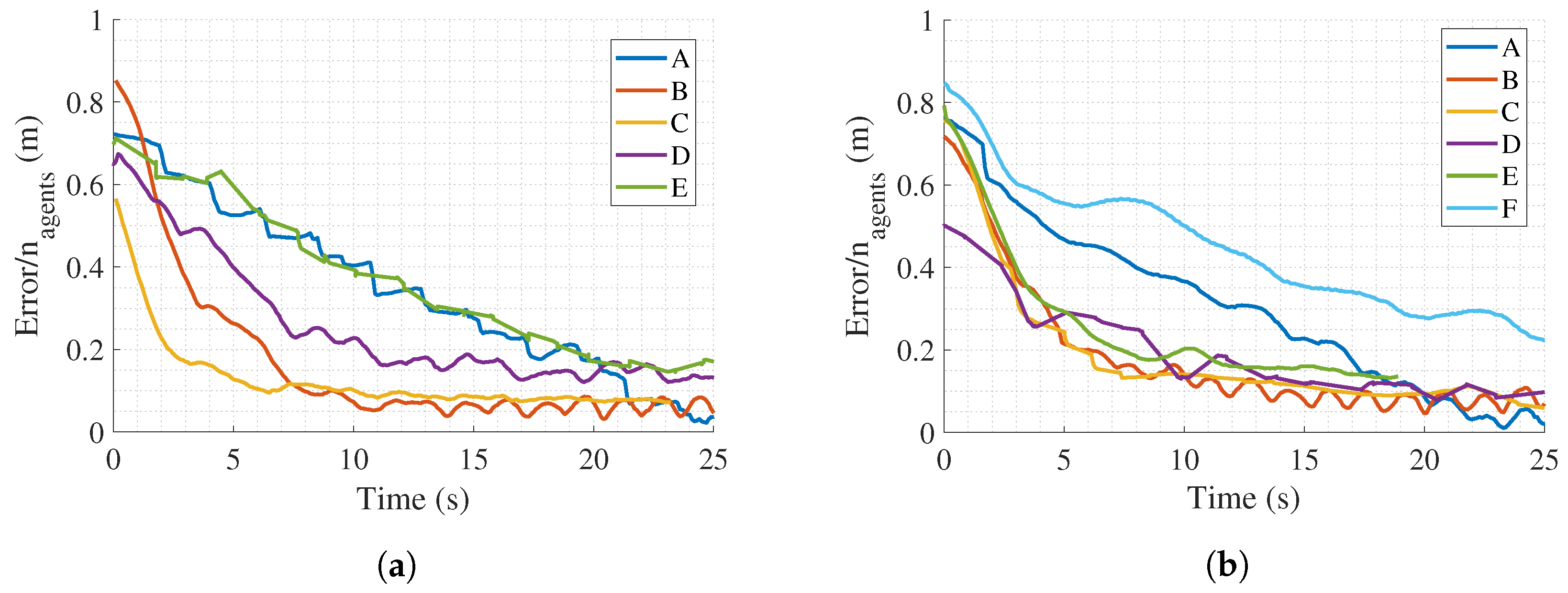

- Integral Absolute Error (IAE). This index weights all errors equally over time. It gives a global measure of the error accumulated by the agents.

- Integral of Time-weighted Absolute Error (ITAE). In systems that use step inputs, the initial error is always high. Consequently, to make a fair comparison between systems, errors maintained over time should have a greater weight than the initial errors. ITAE therefore gives little weight to the error during the initial transient response and penalizes errors that persist for longer.
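Both indices follow their standard definitions, IAE as the integral of |e(t)| and ITAE as the integral of t·|e(t)|. The sketch below approximates them from sampled data with the trapezoidal rule. The function names, the sample times, and the two error traces are illustrative assumptions, not data from the experiments.

```python
def _check(times, errors):
    """Validate that the two sample sequences can be integrated together."""
    if len(times) != len(errors):
        raise ValueError("times and errors must have the same length")
    if len(times) < 2:
        raise ValueError("at least two samples are required")


def iae(times, errors):
    """Integral of |e(t)| dt, approximated with the trapezoidal rule."""
    _check(times, errors)
    total = 0.0
    for k in range(1, len(times)):
        dt = times[k] - times[k - 1]
        total += 0.5 * (abs(errors[k]) + abs(errors[k - 1])) * dt
    return total


def itae(times, errors):
    """Integral of t * |e(t)| dt, approximated with the trapezoidal rule."""
    _check(times, errors)
    total = 0.0
    for k in range(1, len(times)):
        dt = times[k] - times[k - 1]
        now = times[k] * abs(errors[k])
        before = times[k - 1] * abs(errors[k - 1])
        total += 0.5 * (now + before) * dt
    return total


# Two hypothetical traces with the same amount of error, early versus late.
t = [0.0, 1.0, 2.0, 3.0, 4.0]
early = [1.0, 0.0, 0.0, 0.0, 0.0]  # error only at the start (transient)
late = [0.0, 0.0, 0.0, 0.0, 1.0]   # error only at the end (persistent)

print(iae(t, early), iae(t, late))    # 0.5 0.5
print(itae(t, early), itae(t, late))  # 0.0 2.0
```

The two traces are indistinguishable under IAE, while ITAE ignores the error at t = 0 and penalizes the late one, which is the behavior described in the list above.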

4. Results

4.1. CPU Consumption

4.2. Real-Time Factor

4.3. System Performance

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AR | Augmented reality |
| CPS | Cyber-physical system |
| CPU | Central processing unit |
| DART | Dynamic Animation and Robotics Toolkit |
| GUI | Graphical user interface |
| HiLCPS | Human-in-the-Loop Cyber-Physical System |
| IAE | Integral Absolute Error |
| IMU | Inertial Measurement Unit |
| ITAE | Integral of Time-weighted Absolute Error |
| MDPI | Multidisciplinary Digital Publishing Institute |
| MR | Mixed reality |
| MRS | Multi-robot system |
| ODE | Open Dynamics Engine |
| PID | Proportional–Integral–Derivative |
| ROS | Robot Operating System |
| RTF | Real-Time Factor |
| SDF | Simulation Description Format |
| URDF | Unified Robot Description Format |
| UWB | Ultra-wideband |
| VR | Virtual reality |


| Experiment | Figure | Size | Real Crazyflie 2.1 | Real Khepera IV | Virtual Crazyflie 2.1 | Virtual Khepera IV |
|---|---|---|---|---|---|---|
| A | Figure 6a,b | 5 | 1 | 4 | 1 | 4 |
| B | Figure 6c,d | 10 | 4 | 4 | 4 | 6 |
| C | Figure 6e,f | 15 | 5 | 4 | 7 | 8 |
| D | Figure 6g,h | 20 | 6 | 4 | 11 | 9 |
| E | Figure 6i,j | 30 | 6 | 4 | 18 | 12 |
| F | Figure 6k,l | 40 | 6 | 4 | 26 | 14 |
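The composition table can be checked with a few lines of code. The sketch below copies the counts from the table and assumes that the virtual-robot columns count every model running in the simulator, digital twins included, so that they sum to the experiment size. The `Experiment` class and its field names are hypothetical and serve only as an illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Experiment:
    name: str
    size: int
    real_crazyflie: int
    real_khepera: int
    virtual_crazyflie: int  # assumed to include digital twins of real robots
    virtual_khepera: int    # assumed to include digital twins of real robots

    @property
    def purely_virtual(self) -> int:
        """Agents that exist only in the simulator, with no real counterpart."""
        return self.size - (self.real_crazyflie + self.real_khepera)


# Counts copied row by row from the table above.
EXPERIMENTS = [
    Experiment("A", 5, 1, 4, 1, 4),
    Experiment("B", 10, 4, 4, 4, 6),
    Experiment("C", 15, 5, 4, 7, 8),
    Experiment("D", 20, 6, 4, 11, 9),
    Experiment("E", 30, 6, 4, 18, 12),
    Experiment("F", 40, 6, 4, 26, 14),
]

for exp in EXPERIMENTS:
    # Under the stated assumption, the simulator hosts one model per agent.
    assert exp.virtual_crazyflie + exp.virtual_khepera == exp.size
    print(exp.name, exp.purely_virtual)
```

The number of real robots stops growing after experiment D, so the scaling from 20 to 40 agents is carried entirely by purely virtual agents (10, 20, and 30 in experiments D, E, and F).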

| Experiment | Size | Gazebo | Webots |
|---|---|---|---|
| A | 5 agents | | |
| B | 10 agents | | |
| C | 15 agents | | |
| D | 20 agents | | |
| E | 30 agents | | |
| F | 40 agents | - | |

| Experiment | IAE (m/s): Gazebo | IAE (m/s): Webots | ITAE (m): Gazebo | ITAE (m): Webots |
|---|---|---|---|---|
| A | | | | |
| B | | | | |
| C | | | | |
| D | | | | |
| E | | | | |
| F | - | - | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mañas-Álvarez, F.J.; Guinaldo, M.; Dormido, R.; Dormido-Canto, S. Scalability of Cyber-Physical Systems with Real and Virtual Robots in ROS 2. Sensors 2023, 23, 6073. https://doi.org/10.3390/s23136073
