A Novel Calibration Method of Line Structured Light Plane Using Spatial Geometry

Abstract

1. Introduction

2. Methods for Light Plane Calibration

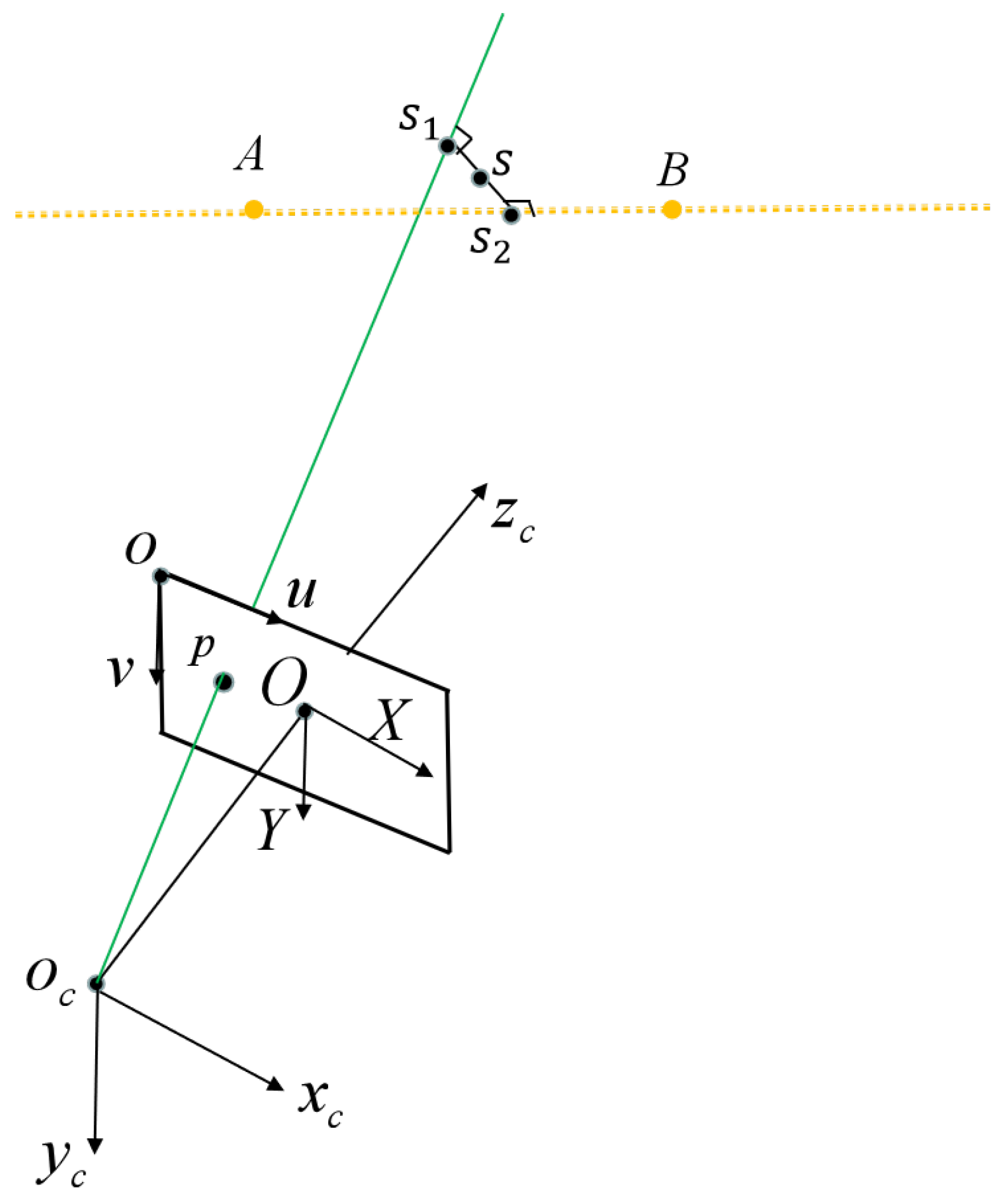

2.1. Model of the Line Structured Light Vision Sensor

2.2. The Proposed Line Structured Light Plane Calibration Method

- (1) Correcting the distortion of the calibration images.

- (2) Extracting the light stripe centers in all calibration images.

- (3) Fitting a line to the extracted light stripe centers.

- (4) Fitting a line to the image coordinates of the horizontal collinear points on the checkerboard.

- (5) Computing the intersection point of the above two lines in each image.

- (6) Computing the line that links the intersection point on the image and the camera optical center in the camera coordinate frame (CCF).

- (7) Computing, in the CCF, the line on the checkerboard along which the horizontal collinear points lie.

- (8) Computing the common perpendicular of the two lines from (6) and (7).

- (9) Taking the midpoint of the common perpendicular as a calibrated point.

- (10) Estimating the equation of the light plane in the CCF by fitting a plane to the non-collinear calibrated points.
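The geometric core of steps (3) to (10) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: all function names, the intrinsic matrix `K`, and the choice of SVD-based total least squares for the line and plane fits are introduced here for illustration. Image points are assumed to be already undistorted, and the checkerboard line is assumed to be already expressed in the CCF (for example, through the board's extrinsic pose).

```python
import numpy as np

def fit_line_2d(points):
    """Total-least-squares image line; returns (a, b, c) with a*x + b*y + c = 0."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    # The right singular vector with the smallest singular value is the line normal.
    _, _, vt = np.linalg.svd(pts - centroid)
    normal = vt[-1]
    return np.array([normal[0], normal[1], -normal @ centroid])

def intersect_lines_2d(l1, l2):
    """Intersection of two homogeneous image lines (step 5)."""
    p = np.cross(l1, l2)
    if abs(p[2]) < 1e-12:
        raise ValueError("image lines are parallel")
    return p[:2] / p[2]

def pixel_ray(K, pixel):
    """Unit direction of the ray from the optical center (CCF origin)
    through an undistorted pixel (step 6). K is an assumed 3x3 intrinsic matrix."""
    d = np.linalg.solve(K, np.array([pixel[0], pixel[1], 1.0]))
    return d / np.linalg.norm(d)

def common_perpendicular_midpoint(p1, d1, p2, d2):
    """Midpoint of the shortest segment between the 3D lines
    p1 + s*d1 and p2 + t*d2 (steps 8 and 9)."""
    w = p1 - p2
    a, b, c = d1 @ d1, d1 @ d2, d2 @ d2
    d, e = d1 @ w, d2 @ w
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("3D lines are parallel")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    return 0.5 * ((p1 + s * d1) + (p2 + t * d2))

def fit_plane(points):
    """Least-squares plane through 3D points (step 10).
    Returns (n, d) with unit normal n and offset d such that n @ X + d = 0."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    _, _, vt = np.linalg.svd(pts - centroid)
    n = vt[-1]
    return n, -n @ centroid
```

In this sketch, each calibration image contributes one calibrated point: the ray from `pixel_ray` starts at the CCF origin, so `p1` is the zero vector, while `p2` and `d2` describe the checkerboard line. With noisy measurements the two 3D lines are generally skew rather than intersecting, which is presumably why the midpoint of the common perpendicular is used instead of an exact intersection.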

3. Experiment Results and Discussion

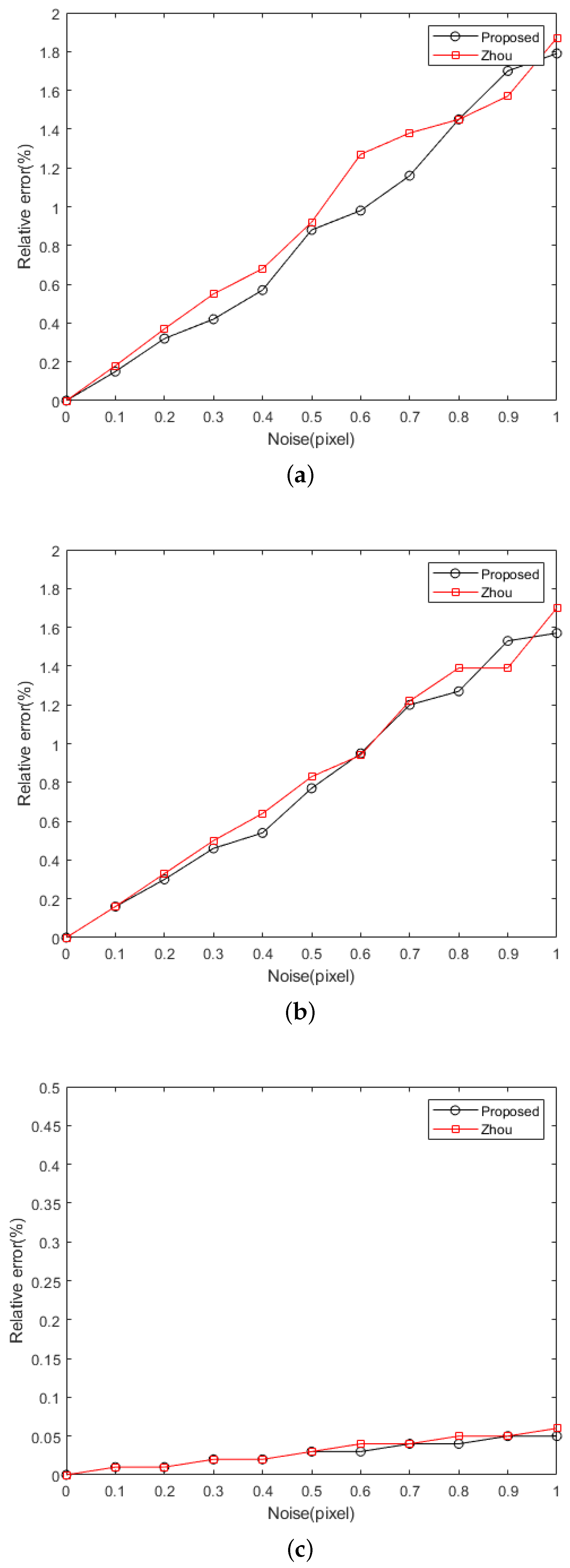

3.1. Simulation Experiment

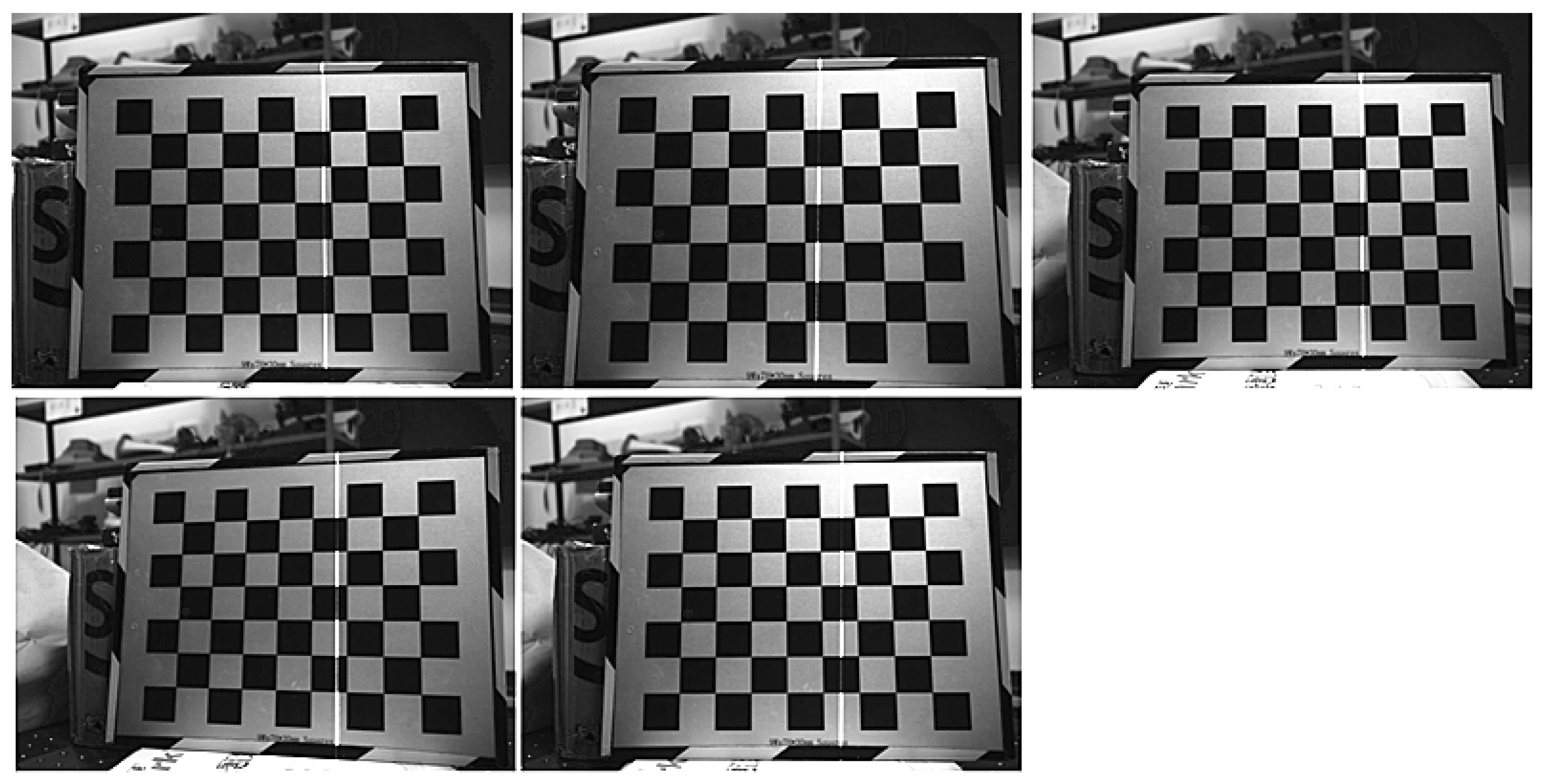

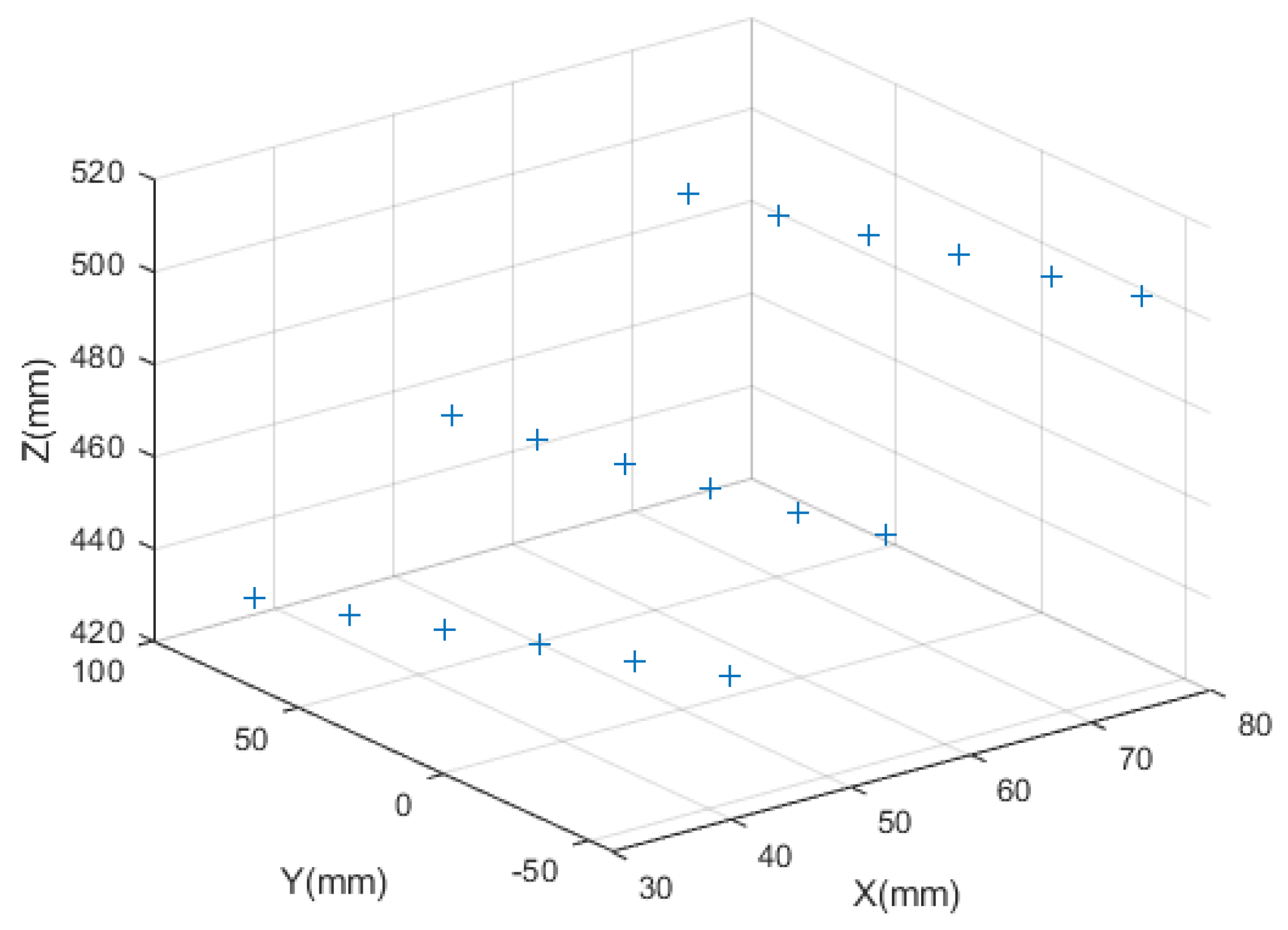

3.2. Physical Experiments

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| CCF | Camera coordinate frame |

References

- Frauel, Y.; Tajahuerce, E.; Matoba, O.; Castro, A.; Javidi, B. Comparison of passive ranging integral imaging and active imaging digital holography for three-dimensional object recognition. Appl. Opt. 2004, 43, 452–462.

- Lu, K.; Wang, W. A multi-sensor approach for rapid and precise digitization of free-form surface in reverse engineering. Int. J. Adv. Manuf. Technol. 2015, 79, 1983–1994.

- Kapłonek, W.; Nadolny, K. Laser methods based on an analysis of scattered light for automated, in-process inspection of machined surfaces: A review. Optik 2015, 126, 2764–2770.

- Mavrinac, A.; Chen, X.; Alarcon-Herrera, J.L. Semiautomatic model-based view planning for active triangulation 3-D inspection systems. IEEE/ASME Trans. Mechatron. 2014, 20, 799–811.

- Pan, X.; Liu, Z.; Zhang, G. Line structured-light vision sensor calibration based on multi-tooth free-moving target and its application in railway fields. IEEE Trans. Intell. Transp. Syst. 2020, 22, 5762–5771.

- Miao, J.; Tan, Q.; Wang, S.; Liu, S.; Chai, B.; Li, X. A Vision Measurement Method for the Gear Shaft Radial Runout with Line Structured Light. IEEE Access 2020, 9, 5097–5104.

- Song, Z.; Jiang, H.; Lin, H.; Tang, S. A high dynamic range structured light means for the 3D measurement of specular surface. Opt. Lasers Eng. 2017, 95, 8–16.

- Muhammad, J.; Altun, H.; Abo-Serie, E. Welding seam profiling techniques based on active vision sensing for intelligent robotic welding. Int. J. Adv. Manuf. Technol. 2017, 88, 127–145.

- Wang, Z.; Fan, J.; Jing, F.; Deng, S.; Zheng, M.; Tan, M. An efficient calibration method of line structured light vision sensor in robotic eye-in-hand system. IEEE Sens. J. 2020, 20, 6200–6208.

- Nguyen, T.T.; Slaughter, D.C.; Max, N.; Maloof, J.N.; Sinha, N. Structured light-based 3D reconstruction system for plants. Sensors 2015, 15, 18587–18612.

- Salvi, J.; Fernandez, S.; Pribanic, T.; Llado, X. A state of the art in structured light patterns for surface profilometry. Pattern Recognit. 2010, 43, 2666–2680.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Zhang, Z. Camera calibration with one-dimensional objects. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 892–899.

- Liu, Z.; Wu, Q.; Wu, S.; Pan, X. Flexible and accurate camera calibration using grid spherical images. Opt. Express 2017, 25, 15269–15285.

- Sun, J.; Cheng, X.; Fan, Q. Camera calibration based on two-cylinder target. Opt. Express 2019, 27, 29319–29331.

- Chuang, J.H.; Ho, C.H.; Umam, A.; Chen, H.Y.; Hwang, J.N.; Chen, T.A. Geometry-Based Camera Calibration Using Closed-Form Solution of Principal Line. IEEE Trans. Image Process. 2021, 30, 2599–2610.

- Huynh, D.Q.; Owens, R.A.; Hartmann, P. Calibrating a structured light stripe system: A novel approach. Int. J. Comput. Vis. 1999, 33, 73–86.

- Liu, Z.; Li, X.; Li, F.; Zhang, G. Calibration method for line-structured light vision sensor based on a single ball target. Opt. Lasers Eng. 2015, 69, 20–28.

- Liu, Z.; Li, X.; Yin, Y. On-site calibration of line-structured light vision sensor in complex light environments. Opt. Express 2015, 23, 29896–29911.

- Zhu, Z.; Wang, X.; Zhou, F.; Cen, Y. Calibration method for a line-structured light vision sensor based on a single cylindrical target. Appl. Opt. 2020, 59, 1376–1382.

- Wu, X.; Tang, N.; Liu, B.; Long, Z. A novel high precise laser 3D profile scanning method with flexible calibration. Opt. Lasers Eng. 2020, 132, 105938.

- Wei, Z.; Cao, L.; Zhang, G. A novel 1D target-based calibration method with unknown orientation for structured light vision sensor. Opt. Laser Technol. 2010, 42, 570–574.

- Zhou, F.; Zhang, G. Complete calibration of a structured light stripe vision sensor through planar target of unknown orientations. Image Vis. Comput. 2005, 23, 59–67.

- Longlong, Y.; Yanwen, L.; Yingbao, L. Line structured light calibrating based on two-dimensional planar target. Chin. J. Sci. Instrum. 2020, 41, 124–131.

- Zou, W.; Wei, Z.; Liu, F. High-accuracy calibration of line-structured light vision sensors using a plane mirror. Opt. Express 2019, 27, 34681–34704.

| Position Number | Point Pair (Point 1 Point 2) | Reference Distance (mm) | Zhou’s Method: Measured (mm) | Zhou’s Method: Error (mm) | Yu’s Method: Measured (mm) | Yu’s Method: Error (mm) | Proposed Method: Measured (mm) | Proposed Method: Error (mm) |
|---|---|---|---|---|---|---|---|---|
| No. 1 | (0 1) | 30.001 | 29.980 | −0.021 | 29.978 | −0.023 | 30.011 | 0.010 |
| | (0 2) | 60.000 | 59.966 | −0.034 | 59.953 | −0.047 | 60.019 | 0.019 |
| | (0 3) | 90.001 | 89.955 | −0.046 | 89.923 | −0.078 | 90.021 | 0.020 |
| | (0 4) | 120.000 | 119.949 | −0.051 | 119.891 | −0.109 | 120.019 | 0.019 |
| | (0 5) | 150.000 | 149.959 | −0.041 | 149.866 | −0.134 | 150.024 | 0.024 |
| | (1 2) | 30.000 | 29.985 | −0.015 | 29.975 | −0.025 | 30.007 | 0.007 |
| | (1 3) | 60.001 | 59.974 | −0.027 | 59.945 | −0.056 | 60.009 | 0.008 |
| | (1 4) | 90.001 | 89.969 | −0.032 | 89.913 | −0.088 | 90.008 | 0.007 |
| | (1 5) | 120.001 | 119.979 | −0.022 | 119.888 | −0.113 | 120.012 | 0.011 |
| | (2 3) | 30.001 | 29.989 | −0.012 | 29.970 | −0.031 | 30.002 | 0.001 |
| | (2 4) | 60.002 | 59.984 | −0.018 | 59.938 | −0.064 | 60.001 | −0.001 |
| | (2 5) | 90.001 | 89.993 | −0.008 | 89.913 | −0.088 | 90.005 | 0.004 |
| | (3 4) | 30.009 | 29.995 | −0.014 | 29.968 | −0.041 | 29.999 | −0.010 |
| | (3 5) | 60.004 | 60.004 | 0.000 | 59.942 | −0.062 | 60.003 | −0.001 |
| | (4 5) | 30.000 | 30.010 | 0.010 | 29.974 | −0.026 | 30.004 | 0.004 |
| No. 2 | (0 1) | 30.007 | 29.992 | −0.015 | 29.991 | −0.016 | 30.023 | 0.016 |
| | (0 2) | 60.027 | 59.990 | −0.037 | 59.980 | −0.047 | 60.043 | 0.016 |
| | (0 3) | 90.037 | 89.985 | −0.052 | 89.958 | −0.079 | 90.051 | 0.014 |
| | (0 4) | 120.056 | 119.995 | −0.061 | 119.942 | −0.114 | 120.064 | 0.008 |
| | (0 5) | 150.068 | 150.022 | −0.046 | 149.936 | −0.132 | 150.086 | 0.018 |
| | (1 2) | 30.022 | 29.998 | −0.024 | 29.989 | −0.033 | 30.020 | −0.002 |
| | (1 3) | 60.031 | 59.993 | −0.038 | 59.967 | −0.064 | 60.028 | −0.003 |
| | (1 4) | 90.050 | 90.003 | −0.047 | 89.951 | −0.099 | 90.041 | −0.009 |
| | (1 5) | 120.062 | 120.030 | −0.032 | 119.944 | −0.118 | 120.063 | 0.001 |
| | (2 3) | 30.010 | 29.995 | −0.015 | 29.978 | −0.032 | 30.008 | −0.002 |
| | (2 4) | 60.028 | 60.004 | −0.024 | 59.962 | −0.066 | 60.021 | −0.007 |
| | (2 5) | 90.041 | 90.031 | −0.010 | 89.955 | −0.086 | 90.043 | 0.002 |
| | (3 4) | 30.020 | 30.010 | −0.010 | 29.984 | −0.036 | 30.013 | −0.007 |
| | (3 5) | 60.032 | 60.037 | 0.005 | 59.978 | −0.054 | 60.035 | 0.003 |
| | (4 5) | 30.012 | 30.027 | 0.015 | 29.994 | −0.018 | 30.022 | −0.010 |
| RMS error | | | | 0.031 | | 0.075 | | 0.011 |
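The RMS error row can be checked against the per-pair error columns. The sketch below assumes the RMS is taken over all 30 point pairs from both positions, which is an assumption that happens to agree with the reported 0.031 mm and 0.011 mm to within rounding; the function and variable names are illustrative only.

```python
import math

def rms(errors):
    """Root-mean-square of a list of signed errors (mm)."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))

# Signed distance errors (mm) copied from the table, positions No. 1 then No. 2.
zhou_errors = [
    -0.021, -0.034, -0.046, -0.051, -0.041, -0.015, -0.027, -0.032,
    -0.022, -0.012, -0.018, -0.008, -0.014, 0.000, 0.010,
    -0.015, -0.037, -0.052, -0.061, -0.046, -0.024, -0.038, -0.047,
    -0.032, -0.015, -0.024, -0.010, -0.010, 0.005, 0.015,
]
proposed_errors = [
    0.010, 0.019, 0.020, 0.019, 0.024, 0.007, 0.008, 0.007,
    0.011, 0.001, -0.001, 0.004, -0.010, -0.001, 0.004,
    0.016, 0.016, 0.014, 0.008, 0.018, -0.002, -0.003, -0.009,
    0.001, -0.002, -0.007, 0.002, -0.007, 0.003, -0.010,
]

print(f"Zhou's method RMS error:   {rms(zhou_errors):.4f} mm")
print(f"Proposed method RMS error: {rms(proposed_errors):.4f} mm")
```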

| Position Number | Point Index | Zhou’s Method x | Zhou’s Method y | Zhou’s Method z | Proposed Method x | Proposed Method y | Proposed Method z | Yu’s Method x | Yu’s Method y | Yu’s Method z |
|---|---|---|---|---|---|---|---|---|---|---|
| No. 1 | 1 | 65.287 | −58.002 | 493.947 | 65.321 | −58.032 | 494.205 | 65.252 | −57.971 | 493.680 |
| | 2 | 64.597 | −28.357 | 489.528 | 64.621 | −28.368 | 489.710 | 64.553 | −28.338 | 489.197 |
| | 3 | 63.906 | 1.293 | 485.109 | 63.921 | 1.293 | 485.216 | 63.854 | 1.292 | 484.714 |
| | 4 | 63.216 | 30.946 | 480.689 | 63.220 | 30.948 | 480.723 | 63.156 | 30.917 | 480.233 |
| | 5 | 62.525 | 60.605 | 476.269 | 62.520 | 60.600 | 476.231 | 62.457 | 60.539 | 475.751 |
| | 6 | 61.834 | 90.279 | 471.846 | 61.820 | 90.258 | 471.737 | 61.758 | 90.169 | 471.269 |
| No. 2 | 1 | 63.865 | −57.918 | 491.485 | 63.897 | −57.947 | 491.737 | 63.833 | −57.889 | 491.239 |
| | 2 | 63.118 | −28.276 | 486.970 | 63.141 | −28.287 | 487.147 | 63.078 | −28.259 | 486.662 |
| | 3 | 62.372 | 1.371 | 482.455 | 62.385 | 1.371 | 482.557 | 62.324 | 1.370 | 482.084 |
| | 4 | 61.626 | 31.014 | 477.941 | 61.629 | 31.016 | 477.969 | 61.570 | 30.986 | 477.508 |
| | 5 | 60.879 | 60.673 | 473.424 | 60.874 | 60.667 | 473.380 | 60.816 | 60.610 | 472.932 |
| | 6 | 60.132 | 90.348 | 468.904 | 60.117 | 90.326 | 468.790 | 60.061 | 90.242 | 468.353 |
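The reconstructed coordinates above and the measured distances in the distance table appear to be linked by the ordinary Euclidean distance between two reconstructed points. The sketch below checks this for the first point pair at position No. 1. It assumes that point index 1 and 2 in this table correspond to points 0 and 1 in the distance table and that the coordinates are in millimetres; both are inferences from the numbers, not statements from the text.

```python
import math

# Reconstructed 3D coordinates at position No. 1, point indices 1 and 2.
zhou_p1 = (65.287, -58.002, 493.947)
zhou_p2 = (64.597, -28.357, 489.528)
proposed_p1 = (65.321, -58.032, 494.205)
proposed_p2 = (64.621, -28.368, 489.710)

# math.dist (Python 3.8+) returns the Euclidean distance between two points.
zhou_distance = math.dist(zhou_p1, zhou_p2)
proposed_distance = math.dist(proposed_p1, proposed_p2)

# Values reported for pair (0 1), position No. 1, in the distance table.
reported = {"zhou": 29.980, "proposed": 30.011}

print(f"Zhou:     computed {zhou_distance:.3f} mm, reported {reported['zhou']:.3f} mm")
print(f"Proposed: computed {proposed_distance:.3f} mm, reported {reported['proposed']:.3f} mm")
```

The computed values agree with the reported ones to within the rounding of the tabulated coordinates, which supports the assumed index correspondence.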


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, H.; Xu, G.; Ma, Z. A Novel Calibration Method of Line Structured Light Plane Using Spatial Geometry. Sensors 2023, 23, 5929. https://doi.org/10.3390/s23135929
