Machine Learning-Based Sensor Data Fusion for Animal Monitoring: Scoping Review

Abstract

1. Introduction

1.1. Multi-Modal Animal Monitoring

1.2. Sensor Fusion

1.3. Sensor Fusion in Animal Monitoring

1.4. Machine Learning and Sensor Fusion in Animal Monitoring

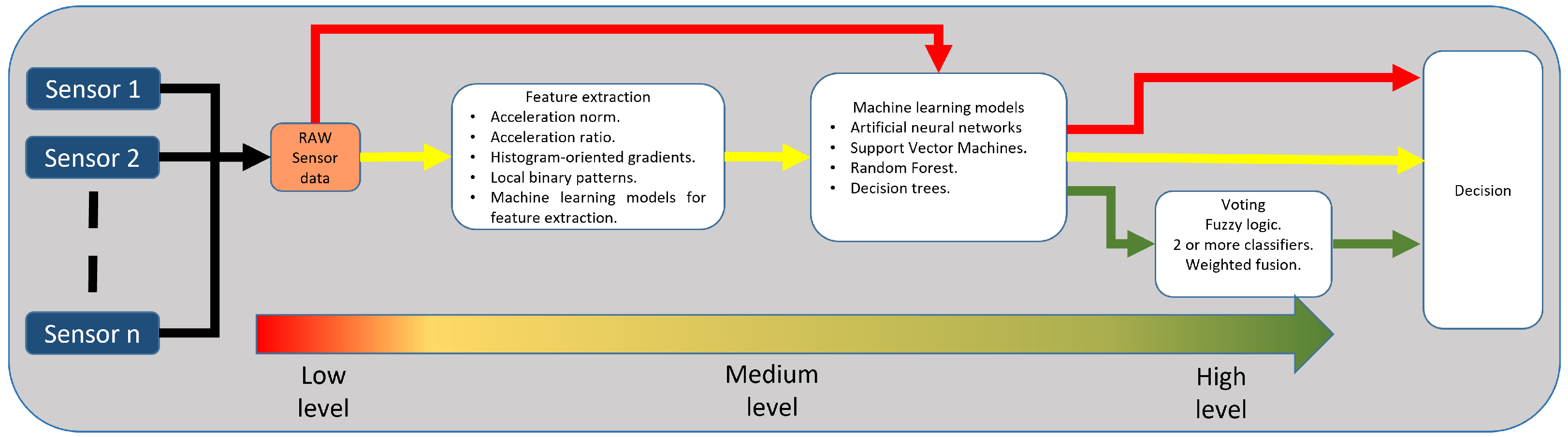

1.5. Workflow of Machine Learning-Based Sensor Fusion

2. Objectives

- What specific challenges or problems have been addressed through the application of machine learning-based sensor fusion techniques in the field of animal monitoring?

- Which animal species have been the primary subjects of studies exploring the application of machine learning-based sensor fusion techniques in the field of animal monitoring?

- What are the applications of machine learning-based sensor fusion systems for animal monitoring?

- What sensing technologies are utilized in machine learning-based sensor fusion applications for animal monitoring?

- How have machine learning-based fusion techniques been utilized for animal monitoring in sensor fusion applications?

- What are the documented performance metrics and achieved results in machine learning-based sensor fusion applications for animal monitoring?

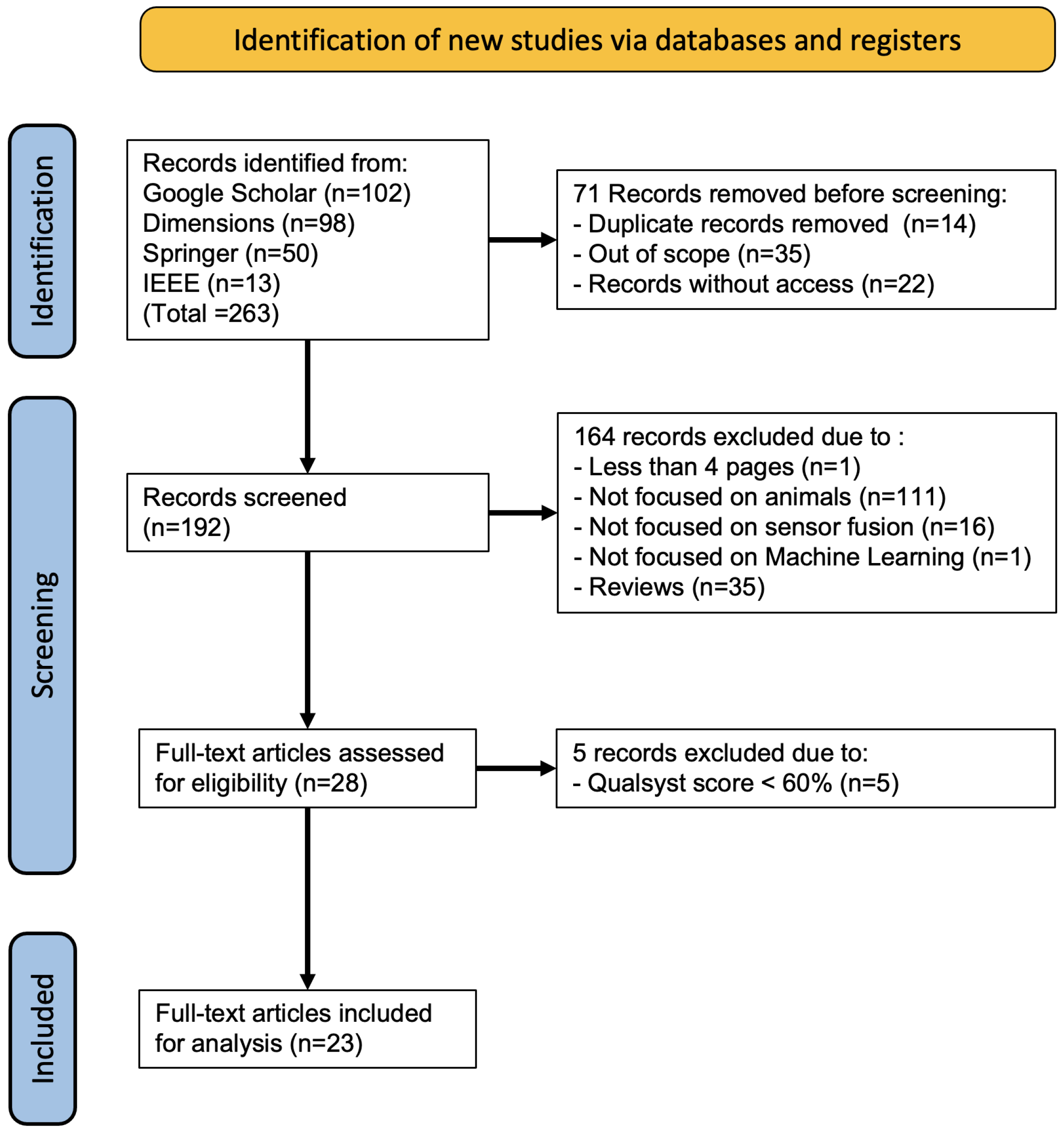

3. Methods

3.1. Eligibility Criteria

3.2. Exclusion Criteria

3.3. Information Sources

3.4. Study Selection

3.5. Data Charting and Synthesis of Results

- Related to the study.
  - Year of publication (2011–2022).
  - Qualsyst score.

- Related to animal monitoring.
  - Problem addressed (e.g., increasing production, monitoring, and welfare).
  - Target species.
  - Sensor fusion target.
  - Animal activities.
  - Animal postures.
  - Number of animals included in the experiment.

- Related to monitoring technology.
  - Animal-computer interface (e.g., collars, vests, ear tags, and girth straps).
  - Sensor technology (e.g., accelerometer, gyroscope, magnetometer, optic flow sensor, Global Positioning System (GPS), and microphone).
  - The sensor sampling rate.

- Related to sensor fusion.
  - Sensor fusion type (e.g., single fusion, uni-modal switching, and mixing). Sensor fusion can be performed by fusing raw data from different sources, extrapolated features, or even decisions made by single nodes.
  - Data alignment techniques (e.g., re-sampling, interpolation, and timestamps). Machine learning models generally assume regularly structured input, such as windows of fixed duration, when identifying a target pattern (for example, detecting a bark in audio). Differences in timing, frame rate, or sensor type (audio, video) can disrupt this regularity, so data alignment techniques are needed to establish consistent time steps in the dataset.
  - Feature extraction techniques. These techniques condense the valuable information in raw data using mathematical models. They are often also employed to reduce the number of extracted features and optimize dataset size. A current trend is to use pre-trained machine learning models for feature extraction and reduction, leveraging knowledge from large-scale datasets for improved efficiency.
  - Feature type. Commonly, feature extraction techniques produce a compact and interpretable dataset by applying mathematical domain transformations to the raw data. The resulting dataset can be assigned a type according to the domain, for example, time, frequency, or time-frequency. Raw data are collected as time series in real time. In the time domain, representative features are traditional descriptive statistics such as mean, variance, and skewness. Frequency-domain information can be recovered using the Fast Fourier Transform (FFT) or power spectrum analysis. For time-frequency features, the short-time Fourier transform (STFT) is the most straightforward method, but wavelets are also employed.
  - Machine Learning (ML) algorithms. According to Goodfellow et al. [25], ML is essentially a form of applied statistics with an increased emphasis on the use of computers to statistically estimate complicated functions and a decreased emphasis on proving confidence intervals around these functions. Such methods are typically classified as supervised or unsupervised learning, depending on the presence or absence of a labeled dataset, respectively. Examples of relevant ML algorithms are [26]: Gradient Descent, Logistic Regression, Support Vector Machine (SVM), K-Nearest Neighbor (KNN), Artificial Neural Networks (ANNs), Decision Tree, Back Propagation Algorithm, Bayesian Learning, and Naïve Bayes.

- Related to performance metrics and results.
  - Assessment techniques used to measure model performance (e.g., accuracy, F-score, recall, and sensitivity). The metrics used in model evaluation should be aligned with the specific task, such as classification, regression, translation, or anomaly detection. For instance, accuracy is commonly employed in classification tasks to gauge the proportion of correct model outputs. Performance metrics are valuable for assessing model effectiveness during experiments. However, model performance in real scenarios can be inconsistent because it depends on the available training data. This review recorded the best-reported performance metric from each article in the corresponding table.
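
As an illustration of the data alignment and feature type items charted above, the following is a minimal sketch rather than a method taken from any reviewed study. It assumes two invented streams (a 20 Hz accelerometer trace and a 10 Hz gyroscope trace), aligns them by linear interpolation onto a common time grid, and then extracts time-domain statistics and one frequency-domain feature. All function names (`resample_linear`, `time_features`, `dominant_frequency`) and the synthetic signals are hypothetical, and a naive discrete Fourier transform stands in for an FFT library to keep the example dependency-free.

```python
import cmath
import math
from statistics import fmean, pvariance


def resample_linear(times, values, new_times):
    """Linearly interpolate a series onto new_times (both ascending)."""
    out, j = [], 0
    for t in new_times:
        # Move to the source segment [times[j], times[j + 1]] containing t.
        while j < len(times) - 2 and times[j + 1] < t:
            j += 1
        w = (t - times[j]) / (times[j + 1] - times[j])
        out.append(values[j] + w * (values[j + 1] - values[j]))
    return out


def time_features(x):
    """Time-domain descriptive statistics: mean, variance, skewness."""
    mu = fmean(x)
    var = pvariance(x, mu)
    skew = 0.0 if var == 0 else fmean([(v - mu) ** 3 for v in x]) / var ** 1.5
    return [mu, var, skew]


def dominant_frequency(x, rate_hz):
    """Frequency-domain feature: strongest non-DC bin of a naive DFT, in Hz."""
    n = len(x)
    mags = [
        abs(sum(x[i] * cmath.exp(-2j * math.pi * k * i / n) for i in range(n)))
        for k in range(1, n // 2 + 1)
    ]
    return (mags.index(max(mags)) + 1) * rate_hz / n


# Synthetic example data (assumed, not from the reviewed studies).
ACC_HZ, GYRO_HZ, SECONDS = 20, 10, 2
acc_t = [i / ACC_HZ for i in range(ACC_HZ * SECONDS)]
gyro_t = [i / GYRO_HZ for i in range(GYRO_HZ * SECONDS + 1)]
acc = [math.sin(2 * math.pi * 2.0 * t) for t in acc_t]  # 2 Hz periodic motion
gyro = [0.5 * t for t in gyro_t]                         # slow linear drift

# Data alignment: bring the gyroscope onto the accelerometer time grid.
gyro_aligned = resample_linear(gyro_t, gyro, acc_t)

# Feature extraction per modality, concatenated into one feature vector.
fused = (
    time_features(acc)
    + [dominant_frequency(acc, ACC_HZ)]
    + time_features(gyro_aligned)
)
```

After alignment both streams share the same 40 time steps, so a window taken from one stream corresponds sample by sample to the same window in the other. Interpolating the slower stream up, as done here, is only one option; downsampling the faster stream is the alternative that several of the charted studies report.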

4. Results

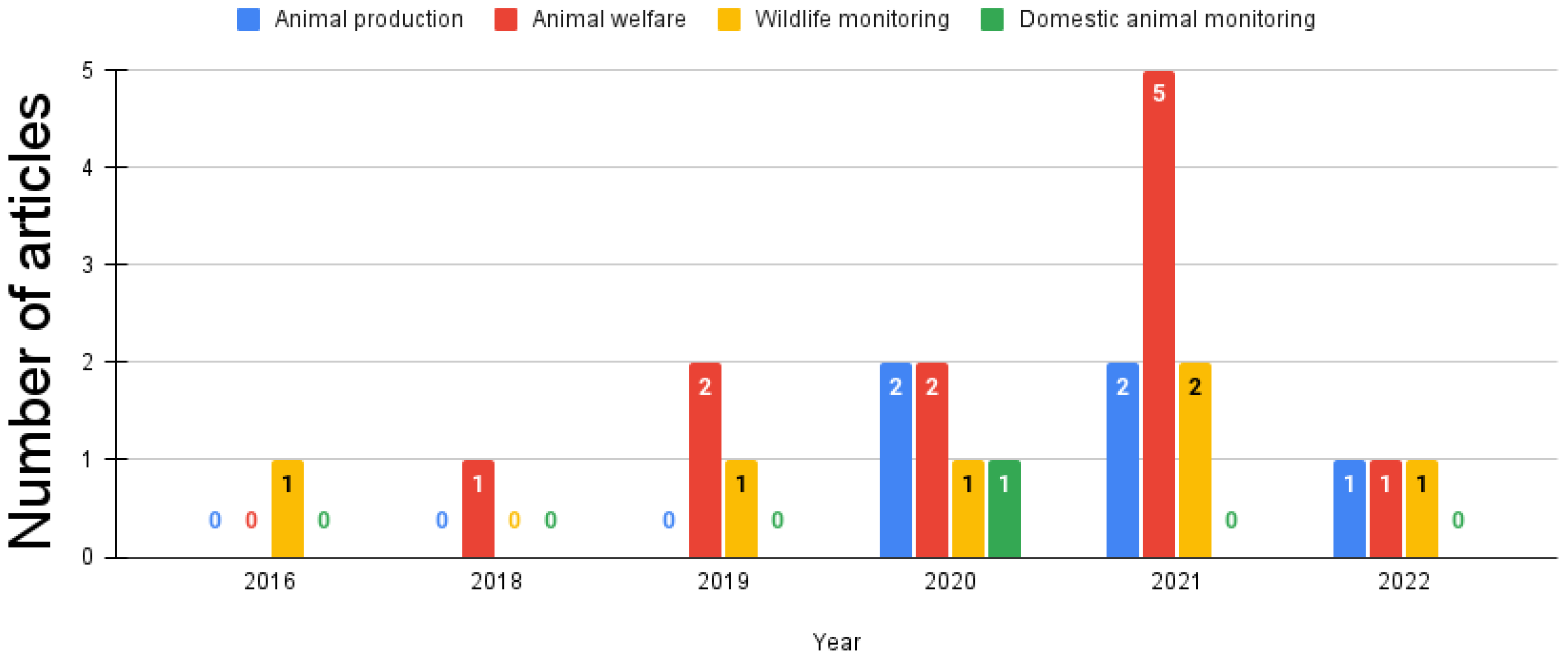

4.1. Overview

4.2. Problems Addressed and Target Species

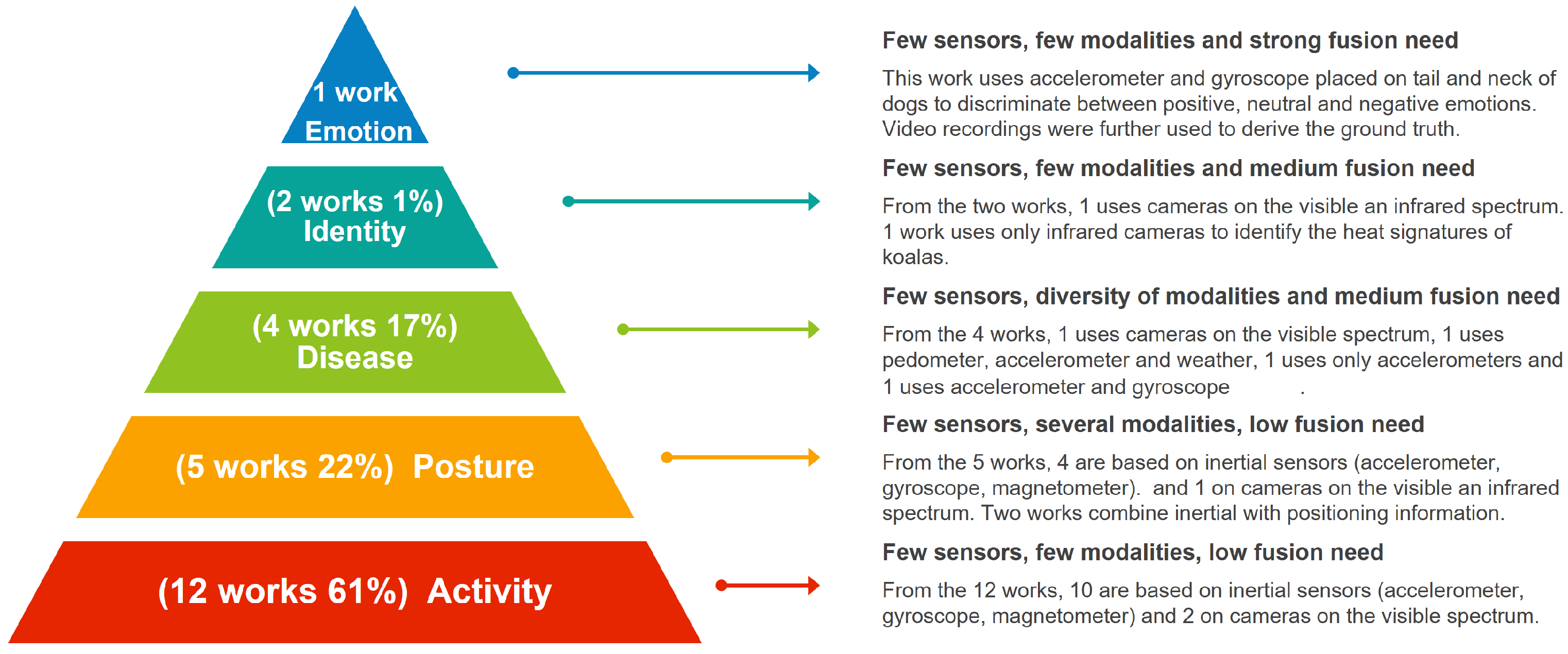

4.3. Sensor Fusion Application

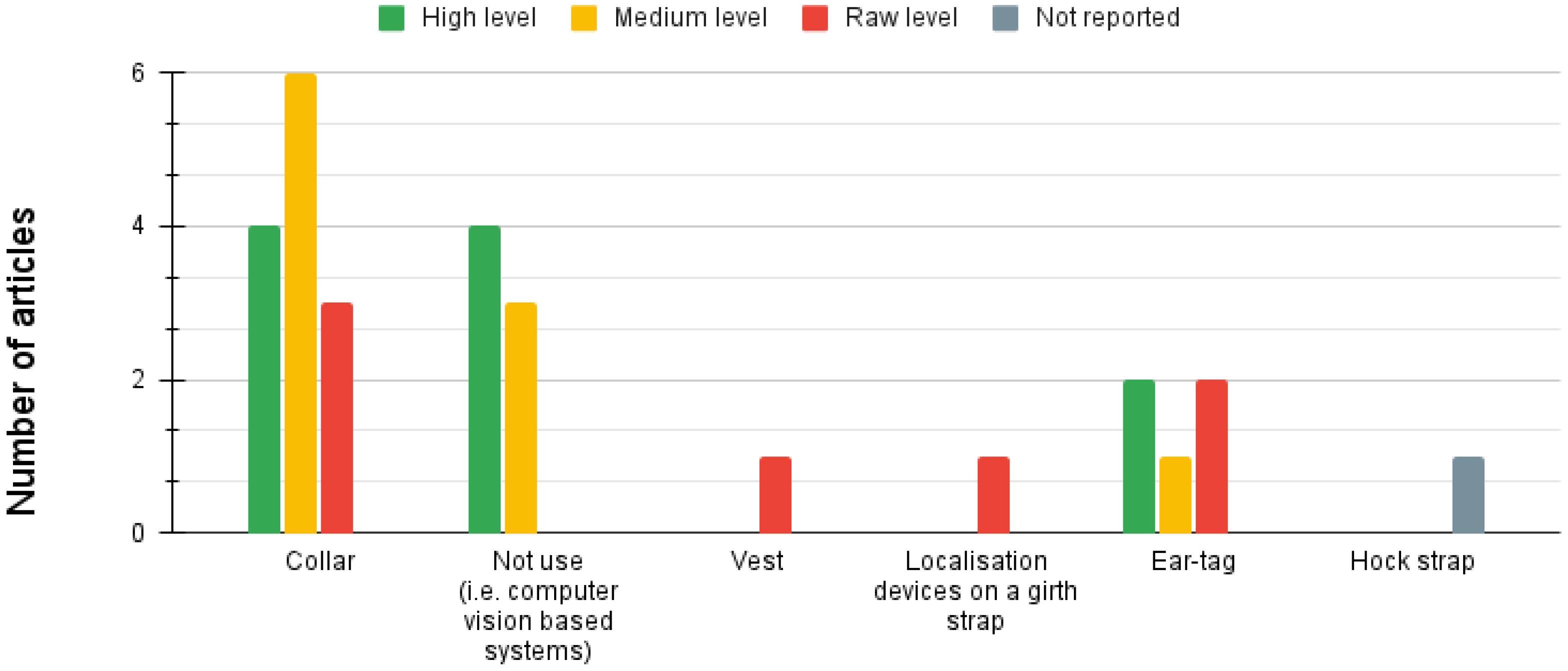

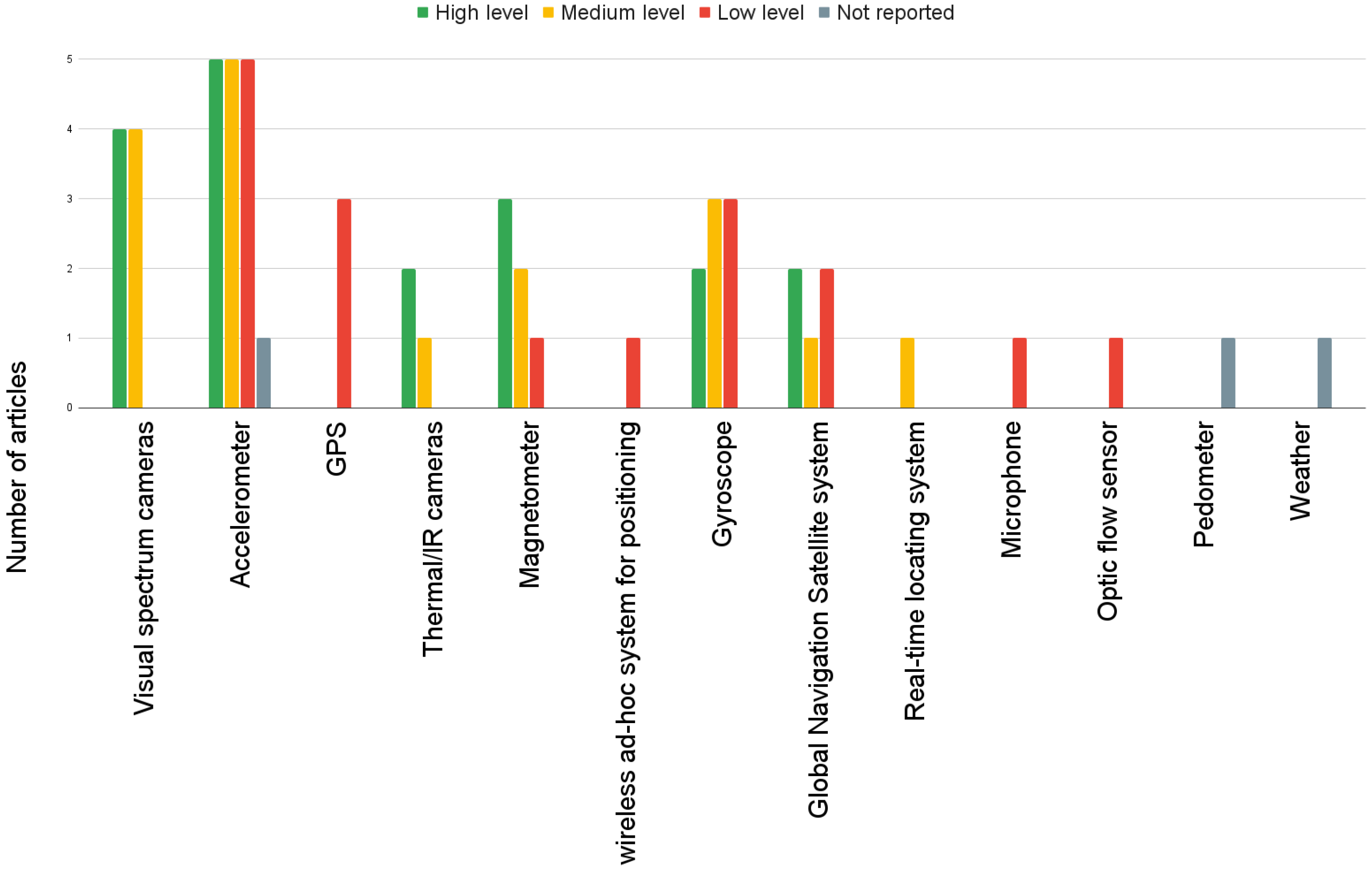

4.4. Sensing Technology for Animal Monitoring Using Sensor Fusion

4.5. Sensor Fusion Techniques

4.6. Machine Learning Algorithms Used for Sensor Fusion

4.7. Performance Metrics and Reported Results

4.8. Analysis by Sensor Fusion Level

5. Discussion

5.1. Overview

5.2. Problems Addressed and Target Species

5.3. Sensor Fusion Application

5.4. Sensing Technology

5.5. Levels of Sensor Fusion

5.6. Sensor Fusion Techniques

5.7. Machine Learning Algorithms

5.8. Performance Metrics and Reported Results

5.9. Limitation of the Review

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Allam, Z.; Dhunny, Z.A. On big data, artificial intelligence and smart cities. Cities 2019, 89, 80–91.
2. Andronie, M.; Lăzăroiu, G.; Iatagan, M.; Uță, C.; Ștefănescu, R.; Cocoșatu, M. Artificial Intelligence-Based Decision-Making Algorithms, Internet of Things Sensing Networks, and Deep Learning-Assisted Smart Process Management in Cyber-Physical Production Systems. Electronics 2021, 10, 2497.
3. Chui, K.T.; Lytras, M.D.; Visvizi, A. Energy Sustainability in Smart Cities: Artificial Intelligence, Smart Monitoring, and Optimization of Energy Consumption. Energies 2018, 11, 2869.
4. Misra, N.N.; Dixit, Y.; Al-Mallahi, A.; Bhullar, M.S.; Upadhyay, R.; Martynenko, A. IoT, Big Data, and Artificial Intelligence in Agriculture and Food Industry. IEEE Internet Things J. 2022, 9, 6305–6324.
5. Zhang, Z.; Wen, F.; Sun, Z.; Guo, X.; He, T.; Lee, C. Artificial Intelligence-Enabled Sensing Technologies in the 5G/Internet of Things Era: From Virtual Reality/Augmented Reality to the Digital Twin. Adv. Intell. Syst. 2022, 4, 2100228.
6. Cabezas, J.; Yubero, R.; Visitación, B.; Navarro-García, J.; Algar, M.J.; Cano, E.L.; Ortega, F. Analysis of accelerometer and GPS data for cattle behaviour identification and anomalous events detection. Entropy 2022, 24, 336.
7. Kasnesis, P.; Doulgerakis, V.; Uzunidis, D.; Kogias, D.G.; Funcia, S.I.; González, M.B.; Giannousis, C.; Patrikakis, C.Z. Deep Learning Empowered Wearable-Based Behavior Recognition for Search and Rescue Dogs. Sensors 2022, 22, 993.
8. Castanedo, F. A Review of Data Fusion Techniques. Sci. World J. 2013, 2013, 704504.
9. Alberdi, A.; Aztiria, A.; Basarab, A. Towards an automatic early stress recognition system for office environments based on multimodal measurements: A review. J. Biomed. Inform. 2016, 59, 49–75.
10. Novak, D.; Riener, R. A survey of sensor fusion methods in wearable robotics. Robot. Auton. Syst. 2015, 73, 155–170.
11. Meng, T.; Jing, X.; Yan, Z.; Pedrycz, W. A survey on machine learning for data fusion. Inf. Fusion 2020, 57, 115–129.
12. Elmenreich, W. An Introduction to Sensor Fusion. Vienna University of Technology: Vienna, Austria, 2002; pp. 1–28.
13. Feng, L.; Zhao, Y.; Sun, Y.; Zhao, W.; Tang, J. Action recognition using a spatial-temporal network for wild felines. Animals 2021, 11, 485.
14. Jin, Z.; Guo, L.; Shu, H.; Qi, J.; Li, Y.; Xu, B.; Zhang, W.; Wang, K.; Wang, W. Behavior Classification and Analysis of Grazing Sheep on Pasture with Different Sward Surface Heights Using Machine Learning. Animals 2022, 12, 1744.
15. Kaler, J.; Mitsch, J.; Vázquez-Diosdado, J.A.; Bollard, N.; Dottorini, T.; Ellis, K.A. Automated detection of lameness in sheep using machine learning approaches: Novel insights into behavioural differences among lame and non-lame sheep. R. Soc. Open Sci. 2020, 7, 190824.
16. Leoni, J.; Tanelli, M.; Strada, S.C.; Berger-Wolf, T. Data-Driven Collaborative Intelligent System for Automatic Activities Monitoring of Wild Animals. In Proceedings of the 2020 IEEE International Conference on Human-Machine Systems (ICHMS), Rome, Italy, 7–9 September 2020; pp. 1–6.
17. Neethirajan, S. The role of sensors, big data and machine learning in modern animal farming. Sens. Bio-Sens. Res. 2020, 29, 100367.
18. Fuentes, S.; Gonzalez Viejo, C.; Tongson, E.; Dunshea, F.R. The livestock farming digital transformation: Implementation of new and emerging technologies using artificial intelligence. Anim. Health Res. Rev. 2022, 23, 59–71.
19. Isabelle, D.A.; Westerlund, M. A Review and Categorization of Artificial Intelligence-Based Opportunities in Wildlife, Ocean and Land Conservation. Sustainability 2022, 14, 1979.
20. Neethirajan, S. Recent advances in wearable sensors for animal health management. Sens. Bio-Sens. Res. 2017, 12, 15–29.
21. Stygar, A.H.; Gómez, Y.; Berteselli, G.V.; Dalla Costa, E.; Canali, E.; Niemi, J.K.; Llonch, P.; Pastell, M. A systematic review on commercially available and validated sensor technologies for welfare assessment of dairy cattle. Front. Vet. Sci. 2021, 8, 634338.
22. Yaseer, A.; Chen, H. A Review of Sensors and Machine Learning in Animal Farming. In Proceedings of the 2021 IEEE 11th Annual International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Jiaxing, China, 27–31 July 2021; pp. 747–752.
23. Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.J.; Horsley, T.; Weeks, L.; et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. 2018, 169, 467–473.
24. Kmet, L.; Lee, R.; Cook, L. Standard Quality Assessment Criteria for Evaluating Primary Research Papers from a Variety of Fields; Institute of Health Economics: Dhaka, Bangladesh, 2004.
25. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.
26. Ray, S. A Quick Review of Machine Learning Algorithms. In Proceedings of the 2019 International Conference on Machine Learning, Big Data, Cloud and Parallel Computing (COMITCon), Faridabad, India, 14–16 February 2019; pp. 35–39.
27. Aich, S.; Chakraborty, S.; Sim, J.S.; Jang, D.J.; Kim, H.C. The Design of an Automated System for the Analysis of the Activity and Emotional Patterns of Dogs with Wearable Sensors Using Machine Learning. Appl. Sci. 2019, 9, 4938.
28. Arablouei, R.; Wang, Z.; Bishop-Hurley, G.J.; Liu, J. Multimodal sensor data fusion for in-situ classification of animal behavior using accelerometry and GNSS data. arXiv 2022, arXiv:2206.12078.
29. Bocaj, E.; Uzunidis, D.; Kasnesis, P.; Patrikakis, C.Z. On the Benefits of Deep Convolutional Neural Networks on Animal Activity Recognition. In Proceedings of the 2020 International Conference on Smart Systems and Technologies (SST), Osijek, Croatia, 14–16 October 2020; pp. 83–88.
30. Byabazaire, J.; Olariu, C.; Taneja, M.; Davy, A. Lameness Detection as a Service: Application of Machine Learning to an Internet of Cattle. In Proceedings of the 2019 16th IEEE Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 11–14 January 2019; pp. 1–6.
31. Corcoran, E.; Denman, S.; Hanger, J.; Wilson, B.; Hamilton, G. Automated detection of koalas using low-level aerial surveillance and machine learning. Sci. Rep. 2019, 9, 3208.
32. Dziak, D.; Gradolewski, D.; Witkowski, S.; Kaniecki, D.; Jaworski, A.; Skakuj, M.; Kulesza, W.J. Airport Wildlife Hazard Management System. Elektron. Ir Elektrotechnika 2022, 28, 45–53.
33. Hou, J.; Strand-Amundsen, R.; Tronstad, C.; Høgetveit, J.O.; Martinsen, Ø.G.; Tønnessen, T.I. Automatic prediction of ischemia-reperfusion injury of small intestine using convolutional neural networks: A pilot study. Sensors 2021, 21, 6691.
34. Huang, K.; Li, C.; Zhang, J.; Wang, B. Cascade and fusion: A deep learning approach for camouflaged object sensing. Sensors 2021, 21, 5455.
35. Luo, Y.; Zeng, Z.; Lu, H.; Lv, E. Posture Detection of Individual Pigs Based on Lightweight Convolution Neural Networks and Efficient Channel-Wise Attention. Sensors 2021, 21, 8369.
36. Mao, A.; Huang, E.; Gan, H.; Parkes, R.S.; Xu, W.; Liu, K. Cross-modality interaction network for equine activity recognition using imbalanced multi-modal data. Sensors 2021, 21, 5818.
37. Rahman, A.; Smith, D.V.; Little, B.; Ingham, A.B.; Greenwood, P.L.; Bishop-Hurley, G.J. Cattle behaviour classification from collar, halter, and ear tag sensors. Inf. Process. Agric. 2018, 5, 124–133.
38. Ren, K.; Bernes, G.; Hetta, M.; Karlsson, J. Tracking and analysing social interactions in dairy cattle with real-time locating system and machine learning. J. Syst. Archit. 2021, 116, 102139.
39. Rios-Navarro, A.; Dominguez-Morales, J.P.; Tapiador-Morales, R.; Dominguez-Morales, M.; Jimenez-Fernandez, A.; Linares-Barranco, A. A Sensor Fusion Horse Gait Classification by a Spiking Neural Network on SpiNNaker. In Proceedings of the Artificial Neural Networks and Machine Learning—ICANN 2016, Barcelona, Spain, 6–9 September 2016; Villa, A.E.P., Masulli, P., Pons Rivero, A.J., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 36–44.
40. Schmeling, L.; Elmamooz, G.; Hoang, P.T.; Kozar, A.; Nicklas, D.; Sünkel, M.; Thurner, S.; Rauch, E. Training and Validating a Machine Learning Model for the Sensor-Based Monitoring of Lying Behavior in Dairy Cows on Pasture and in the Barn. Animals 2021, 11, 2660.
41. Sturm, V.; Efrosinin, D.; Öhlschuster, M.; Gusterer, E.; Drillich, M.; Iwersen, M. Combination of Sensor Data and Health Monitoring for Early Detection of Subclinical Ketosis in Dairy Cows. Sensors 2020, 20, 1484.
42. Tian, F.; Wang, J.; Xiong, B.; Jiang, L.; Song, Z.; Li, F. Real-Time Behavioral Recognition in Dairy Cows Based on Geomagnetism and Acceleration Information. IEEE Access 2021, 9, 109497–109509.
43. Wang, G.; Muhammad, A.; Liu, C.; Du, L.; Li, D. Automatic recognition of fish behavior with a fusion of RGB and optical flow data based on deep learning. Animals 2021, 11, 2774.
44. Xu, H.; Li, S.; Lee, C.; Ni, W.; Abbott, D.; Johnson, M.; Lea, J.M.; Yuan, J.; Campbell, D.L.M. Analysis of Cattle Social Transitional Behaviour: Attraction and Repulsion. Sensors 2020, 20, 5340.
45. Schollaert Uz, S.; Ames, T.J.; Memarsadeghi, N.; McDonnell, S.M.; Blough, N.V.; Mehta, A.V.; McKay, J.R. Supporting Aquaculture in the Chesapeake Bay Using Artificial Intelligence to Detect Poor Water Quality with Remote Sensing. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 3629–3632.
46. de Chaumont, F.; Ey, E.; Torquet, N.; Lagache, T.; Dallongeville, S.; Imbert, A.; Legou, T.; Le Sourd, A.M.; Faure, P.; Bourgeron, T.; et al. Real-time analysis of the behaviour of groups of mice via a depth-sensing camera and machine learning. Nat. Biomed. Eng. 2019, 3, 930–942.
47. Narayanaswami, R.; Gandhe, A.; Tyurina, A.; Mehra, R.K. Sensor fusion and feature-based human/animal classification for Unattended Ground Sensors. In Proceedings of the 2010 IEEE International Conference on Technologies for Homeland Security (HST), Waltham, MA, USA, 8–10 November 2010; pp. 344–350.
48. Dick, S.; Bracho, C.C. Learning to Detect the Onset of Disease in Cattle from Feedlot Watering Behavior. In Proceedings of the NAFIPS 2007—2007 Annual Meeting of the North American Fuzzy Information Processing Society, San Diego, CA, USA, 24–27 June 2007; pp. 112–116.
49. Roberts, P.L.D.; Jaffe, J.S.; Trivedi, M.M. Multiview, Broadband Acoustic Classification of Marine Fish: A Machine Learning Framework and Comparative Analysis. IEEE J. Ocean. Eng. 2011, 36, 90–104.
50. Jin, X.; Gupta, S.; Ray, A.; Damarla, T. Multimodal sensor fusion for personnel detection. In Proceedings of the 14th International Conference on Information Fusion, Chicago, IL, USA, 5–8 July 2011; pp. 1–8.
51. Tian, Q.; Xia, S.; Cao, M.; Cheng, K. Reliable Sensing Data Fusion Through Robust Multiview Prototype Learning. IEEE Trans. Ind. Inform. 2022, 18, 2665–2673.
52. Jukan, A.; Masip-Bruin, X.; Amla, N. Smart Computing and Sensing Technologies for Animal Welfare: A Systematic Review. ACM Comput. Surv. 2017, 50, 1–27.
53. Tuia, D.; Kellenberger, B.; Beery, S.; Costelloe, B.R.; Zuffi, S.; Risse, B.; Mathis, A.; Mathis, M.W.; van Langevelde, F.; Burghardt, T.; et al. Perspectives in machine learning for wildlife conservation. Nat. Commun. 2022, 13, 792.
54. Asmare, B. A Review of Sensor Technologies Applicable for Domestic Livestock Production and Health Management. Adv. Agric. 2022, 2022, e1599190.

| Question |
|---|
| 1. Is the research question/objective sufficiently described? |
| 2. Is the study design evident and appropriate? |
| 3. Is the selection of subject/comparison group or source of information/input variables described and appropriate? |
| 4. Are the subject (and comparison group, if applicable) characteristics sufficiently described? |
| 5. If it is an interventional study, and random allocation was possible, is it described? |
| 6. If it is an interventional study and blinding of investigators was possible, is it reported? |
| 7. If it is an interventional study, and blinding of subjects was possible, is it reported? |
| 8. Are the outcome and (if applicable) exposure measure(s) well-defined and robust to measurement/misclassification bias? Are the means of assessment reported? |
| 9. Are the analytic methods described/justified and appropriate? |
| 10. Is some estimate of variance reported for the main results? |
| 11. Was confounding controlled for? |
| 12. Are the results reported in sufficient detail? |
| 13. Are the conclusions supported by the results? |

| Characteristic | Studies, n (%) |
|---|---|
| Year of publication | |
| 2022 | 4 (17.4%) |
| 2021 | 9 (39.1%) |
| 2020 | 5 (21.7%) |
| 2019 | 3 (13%) |
| 2018 | 1 (4.3%) |
| 2017 | 0 (0%) |
| 2016 | 1 (4.3%) |
| Problems addressed | |
| Animal welfare | 11 (47.8%) |
| Wildlife monitoring | 6 (26.1%) |
| Animal production | 5 (21.7%) |
| Domestic animal monitoring | 1 (4.3%) |
| Target species | |
| Cows | 8 (34.8%) |
| Horses | 3 (13%) |
| Pigs | 2 (8.7%) |
| Felines | 2 (8.7%) |
| Dogs | 2 (8.7%) |
| Sheep | 2 (8.7%) |
| Fish | 1 (4.3%) |
| Camouflaged animals | 1 (4.3%) |
| Primates | 1 (4.3%) |
| Koalas | 1 (4.3%) |
| Goats | 1 (4.3%) |
| Birds | 1 (4.3%) |
| Sensor fusion application | |
| Activity detection | 12 (52.2%) |
| Posture detection | 11 (47.8%) |
| Health screening | 4 (17.4%) |
| Identity recognition | 2 (8.7%) |
| Camouflaged animal detection | 1 (4.3%) |
| Emotion classification | 1 (4.3%) |
| Personality assessment | 1 (4.3%) |
| Social behavior analysis | 1 (4.3%) |
| Spatial proximity measurement | 1 (4.3%) |
| Animal-computer interface | |
| Collar | 11 (47.8%) |
| Natural (vision, audio) | 7 (30.4%) |
| Ear-tag | 4 (17.4%) |
| Vest | 1 (4.3%) |
| Localization devices on a girth strap | 1 (4.3%) |
| Hock strap | 1 (4.3%) |
| Sensor technology | |
| Accelerometer | 14 (60.9%) |
| Camera (visual spectrum range) | 8 (34.8%) |
| Gyroscope | 7 (30.4%) |
| Magnetometer | 5 (21.7%) |
| Global Positioning System (GPS) | 3 (13%) |
| Camera (IR spectrum range) | 3 (13%) |
| Global Navigation Satellite System | 2 (8.7%) |
| Pedometer | 1 (4.3%) |
| Wireless ad-hoc system for positioning | 1 (4.3%) |
| Real-time locating system | 1 (4.3%) |
| Microphone | 1 (4.3%) |
| Optic flow sensor | 1 (4.3%) |
| Weather sensor | 1 (4.3%) |
| Fusion level | |
| Features/medium level | 9 (39.1%) |
| Classification/regression/late/decision | 8 (34.8%) |
| Raw/Early fusion | 7 (30.4%) |
| Not specified | 1 (4.3%) |
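
The fusion levels tallied in the table above differ in where the modalities are combined: on the raw samples (early), on extracted features (medium), or on per-modality decisions (late). The sketch below illustrates that distinction only. It is not the method of any reviewed study: the toy accelerometer and gyroscope windows are invented, the helper names (`features`, `fit_centroids`, `scores`, `predict`) are hypothetical, and a nearest-centroid rule is assumed purely because it fits in a few lines.

```python
from statistics import fmean


def features(window):
    """Per-modality features: mean and range of one window."""
    return [fmean(window), max(window) - min(window)]


def fit_centroids(rows, labels):
    """Nearest-centroid 'training': average the rows of each class."""
    centroids = {}
    for label in set(labels):
        members = [r for r, l in zip(rows, labels) if l == label]
        centroids[label] = [fmean(col) for col in zip(*members)]
    return centroids


def scores(centroids, row):
    """Squared distance from row to each class centroid (lower is better)."""
    return {
        c: sum((a - b) ** 2 for a, b in zip(row, mu))
        for c, mu in centroids.items()
    }


def predict(centroids, row):
    s = scores(centroids, row)
    return min(s, key=s.get)


# Invented training windows: (accelerometer, gyroscope, label).
train = [
    ([0.0, 0.1, 0.0, 0.1], [0.0, 0.0, 0.1, 0.0], "resting"),
    ([0.1, 0.0, 0.1, 0.0], [0.0, 0.1, 0.0, 0.0], "resting"),
    ([1.0, -1.0, 1.0, -1.0], [0.5, -0.5, 0.5, -0.5], "walking"),
    ([0.8, -1.2, 0.9, -1.0], [0.6, -0.4, 0.4, -0.6], "walking"),
]
labels = [label for _, _, label in train]
new_acc, new_gyro = [0.9, -1.1, 1.0, -0.9], [0.4, -0.6, 0.5, -0.4]

# Raw/early fusion: concatenate the raw samples, then classify once.
early = fit_centroids([a + g for a, g, _ in train], labels)
early_pred = predict(early, new_acc + new_gyro)

# Feature/medium-level fusion: concatenate per-modality features.
mid = fit_centroids([features(a) + features(g) for a, g, _ in train], labels)
feature_pred = predict(mid, features(new_acc) + features(new_gyro))

# Decision/late fusion: one model per modality, then combine their scores.
acc_model = fit_centroids([features(a) for a, _, _ in train], labels)
gyro_model = fit_centroids([features(g) for _, g, _ in train], labels)
acc_s = scores(acc_model, features(new_acc))
gyro_s = scores(gyro_model, features(new_gyro))
late_pred = min(acc_s, key=lambda c: acc_s[c] + gyro_s[c])
```

On this toy data all three variants label the new window as walking. The point of the sketch is the position of the concatenation or combination step, not the classifier, which could be swapped for any of the algorithms charted in this review.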

| Author | Rios-Navarro et al. [39] | Kasnesis et al. [7] | Leoni et al. [16] | Xu et al. [44] | Arablouei et al. [28] | Kaler et al. [15] |
|---|---|---|---|---|---|---|
| Year | 2016 | 2022 | 2020 | 2020 | 2022 | 2020 |
| Qualsyst Score | 76% | 86% | 100% | 100% | 100% | 61% |
| Problem addressed | Wildlife monitoring | Domestic animal monitoring | Wildlife monitoring | Increase production | Increase production | Welfare |
| Fusion application | Activity detection | - Activity detection - Posture detection | Posture detection | - Personality assessment - Influence of individuals | Activity detection | Health detection |
| Target population | Horses | Dogs | Primates | Cows | Cows | Sheep |
| Animal interface | Collar | Vest | Collar | Girth strap | - Collar - Ear-tag | Ear-tag |
| Activities | - Walking - Trotting | - Walking - Trotting - Running - Nose work | - Walking - Running - Feeding - Eating | Leading | - Walking - Resting - Drinking - Grazing | Walking |
| Postures | Standing | Standing | - Standing - Sitting | - Stationary - Non-stationary - Closeness between individuals | - | - Standing - Lying on the belly - Lying on the side |
| Participants | 3 | 9 | 26 | 10 | 8 | 18 |
| Sensors/Sampling rate (Hz, FPS) | - Accelerometer - Magnetometer - Gyroscope - GPS | - Accelerometer (100 Hz) - Magnetometer - Gyroscope (100 Hz) - GPS (10 Hz) | - Accelerometer (12 Hz) - GPS - Optic flow sensor | - GPS (1 Hz) - Wireless ad-hoc system for positioning | - Accelerometer (50 Hz, 62.5 Hz) - GPS - Optic flow sensor | - Accelerometer (16 Hz) - Gyroscope |
| Feature extraction | - | ML models (LSTM-models, CNN-models, etc.) | - Acceleration norm - Acceleration ratios | ML models (LSTM-models, CNN-models, etc.) | - | - |
| Feature type | - | Spatiotemporal | - Spatiotemporal - Motion | - Spatiotemporal - Spatial - Closeness | - Spatiotemporal - Spatial | - Motion - Statistical |
| Data alignment | - | Same data size | Downsampling | Timestamps | Timestamps | Timestamps |
| Machine learning algorithms | Spiking Neural Network | Convolutional Neural Networks | XGBoost | - K-Means - Agglomerative Hierarchical Clustering | Multilayer perceptron | - KNN - Random forest - SVM - AdaBoost |
| Sensor fusion type | Single fusion algorithm | Single fusion algorithm | Single fusion algorithm | Extended Kalman filter (EKF) | - Multimodal switching - Two or more classifiers | Mixing |
| Performance metrics | Accuracy (83.33%) | Accuracy (93%) | - Accuracy (100%) - Sensitivity/Recall (100%) - Specificity (100%) | - Accuracy (15 cm) - Silhouette analysis | Matthews correlation coefficient | - Accuracy (91.67%) - Sensitivity/Recall (≈80%) - Specificity (≈70%) - Precision (≈75%) - F1-score (≈85%) |

| Author | Ren et al. [38] | Mao et al. [36] | Wang et al. [43] | Luo et al. [35] | Huang et al. [34] | Aich et al. [27] | Jin et al. [14] | Tian et al. [42] | Arablouei et al. [28] |
|---|---|---|---|---|---|---|---|---|---|
| Year | 2021 | 2021 | 2021 | 2021 | 2021 | 2019 | 2022 | 2021 | 2022 |
| Qualsyst Score | 69% | 100% | 69% | 69% | 64% | 78% | 100% | 65% | 100% |
| Problem addressed | Increase production | Welfare | Welfare | Welfare | Wildlife monitoring | Welfare | Welfare | Increase production | Increase production |
| Fusion application | Social behavior analysis | - Posture detection - Activity detection | - Activity detection - Health detection | Posture detection | Camouflaged animal detection | - Activity detection - Emotion detection | Activity detection | - Activity detection - Posture detection | Activity detection |
| Target population | Cows | Horses | Fish | Pigs | Camouflaged animals | Dogs | Sheep | Cows | Cows |
| Animal interface | Collar | Collar | - | - | - | Collar | Collar | Collar | - Collar - Ear-tag |
| Activities | - | - Galloping - Trotting - Feeding/eating - Walking | - Normal - Feeding/eating | - | - | - Normal - Feeding/eating - Nose work - Jumping | - Walking - Running - Grazing | - Walking - Running - Feeding - Eating - Resting - Drinking - Head-shaking - Ruminating | - Walking - Resting - Drinking - Grazing |
| Postures | - | Standing | - | - Standing - Lying on belly - Lying on side - Sitting - Mounting | - | - Sitting - Sideways - Stay | - Standing - Lying on belly - Lying on the side - Sitting - Standing | - | - |
| Participants | 120 | 18 | - | 2404 | - | 10 | 3 | 60 | 8 |
| Sensors/Sampling rate (Hz, FPS) | - Visual spectrum camera (25 FPS) - Real-time locating system | - Accelerometer (100 Hz) - Magnetometer - Gyroscope (12 Hz) | Visual spectrum camera (25 FPS) | - Visual spectrum camera (15 FPS) - Infrared thermal camera | Visual spectrum camera | - Accelerometer (33 Hz) - Gyroscope | - Accelerometer (20 Hz) - Gyroscope | - Accelerometer (12.5 Hz) - Magnetometer | - Accelerometer (50 Hz, 62.5 Hz) - GPS - Optic flow sensor |
| Feature extraction | ML models (LSTM-models, CNN-models, etc.) | ML models (LSTM-models, CNN-models, etc.) | ML models (LSTM-models, CNN-models, etc.) | ML models (LSTM-models, CNN-models, etc.) | ML models (LSTM-models, CNN-models, etc.) | - | - | Randomly selected features | - |
| Feature type | Temporal domain | - Spatiotemporal - Temporal domain | - Spatiotemporal - Motion | Spatial | Spatial | - Statistical - Peak-based features | - Frequency domain - Statistical | Temporal domain | - Spatiotemporal - Spatial |
| Data alignment | - Timestamps - Mapping | Concatenation | - Concatenation - Downsampling - Same data size | - Concatenation - Downsampling - Same data size | Same data size | Timestamps | Same data size | - | Timestamps |
| Machine learning algorithms | - Convolutional Neural Networks - Long Short-Term Memory - Long-Term Recurrent Convolutional Networks | Convolutional Neural Networks | DSC3D network | - Light-SPD-YOLO model - YOLO | - Cascade - Feedback Fusion | - SVM - KNN - Naive Bayes - Random forest - Artificial Neural Networks | - ELM - AdaBoost - Stacking | - SVM - KNN - Random forest - KNN-RF Weighted Fusion - Gradient Boosting Decision Tree - Learning Vector Quantization | Multilayer perceptron |
| Sensor fusion type | Single fusion algorithm | Cross-modality interaction | Dual-stream Convolutional Neural Networks | Path aggregation network | - Cascade - Feedback Fusion | Single fusion algorithm | Two or more classifiers | KNN-RF weighted fusion model | Multimodal switching of two or more classifiers |
| Performance metrics | - Accuracy (%) - Confusion matrix (92%) | - Accuracy (%) - Sensitivity/Recall (%) - F1-score (%) | - Accuracy (%) - Sensitivity/Recall (100%) - F1-score - Confusion matrix | - Sensitivity/Recall - Accuracy (%) - Receiver operating characteristic curve - Mean average precision (%) | Accuracy | - Accuracy (%) - Sensitivity/Recall (%) - F1-score (%) - Confusion matrix | - Accuracy (%) - Kappa value () | - Accuracy (%) - Confusion matrix - Recognition error rate - Recognition rate (%) | Matthews correlation coefficient |

| Author | Hou et al. [33] | Feng et al. [13] | Bocaj et al. [29] | Schmeling et al. [40] | Dziak et al. [32] | Sturm et al. [41] | Rahman et al. [37] | Corcoran et al. [31] |
|---|---|---|---|---|---|---|---|---|
| Year | 2021 | 2021 | 2020 | 2021 | 2022 | 2020 | 2018 | 2019 |
| Qualsyst Score | 83% | 71% | 70% | 96% | 75% | 100% | 86% | 67% |
| Problem addressed | Welfare | Wildlife monitoring | Welfare | Welfare | Wildlife monitoring | Increase production | Welfare | Wildlife monitoring |
| Fusion application | Health detection | Activity detection | Activity detection | Activity detection | Individuals recognition | Health detection | Activity detection | Individuals recognition |
| Target species | Pigs | Felines | - Horses - Goats | Cows | - Felines - Birds | Cows | Cows | Koalas |
| Animal interface | - | - | Collar | Collar | - | Ear-tag | - Collar - Ear-tag - Halter | Collar |
| Postures | - | Standing | - | - Standing - Lying | - Standing - Lying on the side | - Walking - Running - Flying postures | - Stationary - Non-Stationary - Lying on belly | Standing |
| Activities | - | - Walking - Running | - Galloping - Walking - Running - Trotting - Feeding/eating - Grazing - Walking (with rider) | - Normal - Walking - Feeding/eating - Resting | - Walking - Running - Trotting - Flying | Ruminating | - Grazing - Ruminating | - |
| Participants | 10 | - | 11 | 7–11 | - | 671 | - | 48 |
| Sensors/Sampling rate (Hz, FPS) | Visual spectrum cameras | Visual spectrum cameras (30 FPS) | - Accelerometer (100 Hz) - Magnetometer (12 Hz) - Gyroscope (100 Hz) | - Accelerometer - Magnetometer - Gyroscope - Visual spectrum cameras (60 FPS) | - Visual spectrum cameras - Infrared thermal camera | Accelerometer (10 Hz) | Accelerometer (30 Hz) | Infrared thermal camera (9 Hz) |
| Feature extraction | - Histogram-oriented gradients - Local binary patterns - ML models (LSTM-models, CNN-models, etc.) | ML models (LSTM-models, CNN-models, etc.) | ML models (LSTM-models, CNN-models, etc.) | ML models (LSTM-models, CNN-models, etc.) | ML models (LSTM-models, CNN-models, etc.) | ML models (LSTM-models, CNN-models, etc.) | - | ML models (LSTM-models, CNN-models, etc.) |
| Feature type | Spatial | Spatiotemporal | - | - Spatiotemporal - Motion | - Spatiotemporal - Motion | - Spatial - Statistical | - Statistical - Frequency domain | - |
| Data alignment | Same data size | Same data size | Same data size | - | Downsampling | Timestamps | Timestamps | Same data size |
| Machine learning algorithms | - Convolutional Neural Networks - Bayesian-CNN | - VGG - LSTMs | Convolutional Neural Networks | - SVM - Naive Bayes - Random forest | - YOLO - Faster R-CNN | - Naive Bayes - Nearest centroid classification | Random forest | - Convolutional Neural Networks - YOLO - Faster R-CNN |
| Sensor fusion type | Two or more classifiers | Single fusion algorithm | Single fusion algorithm | Multimodal switching | Single fusion algorithm | Feature level fusion | Mixing | Two or more classifiers |
| Performance metrics | - Accuracy (%) - Precision | Accuracy (92%) | - Accuracy (%) - F1-score (%) | Accuracy (%) | Accuracy (94%) | - Accuracy (%) - Sensitivity/Recall (%) - Precision (%) - F1-score (%) - Matthews correlation (%) - Youden's index (%) | F1-score (%) | - Probability of detection (87%) - Precision (49%) |
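
Most of the performance metrics listed in the tables above can be derived from the four cells of a binary confusion matrix. The following is a minimal sketch using the standard textbook definitions, with a hypothetical helper name (`binary_metrics`) and invented counts; it assumes every denominator is nonzero and does not reproduce any study's evaluation code.

```python
import math


def binary_metrics(tp, fp, fn, tn):
    """Common classification metrics from binary confusion-matrix counts.

    Assumes each class is present and predicted at least once, so no
    denominator is zero.
    """
    recall = tp / (tp + fn)          # sensitivity, probability of detection
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "recall": recall,
        "specificity": specificity,
        "precision": precision,
        "f1": 2 * precision * recall / (precision + recall),
        "mcc": (tp * tn - fp * fn) / mcc_den,   # Matthews correlation
        "youden_j": recall + specificity - 1,   # Youden's index
    }


# Invented, imbalanced example: 10 positive windows out of 100.
m = binary_metrics(tp=5, fp=5, fn=5, tn=85)
```

With these invented counts the accuracy is 0.9 even though only half of the positive windows are detected (recall 0.5), which is one reason imbalanced tasks such as health screening are often reported with recall, F1-score, or the Matthews correlation coefficient alongside accuracy.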

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Aguilar-Lazcano, C.A.; Espinosa-Curiel, I.E.; Ríos-Martínez, J.A.; Madera-Ramírez, F.A.; Pérez-Espinosa, H. Machine Learning-Based Sensor Data Fusion for Animal Monitoring: Scoping Review. Sensors 2023, 23, 5732. https://doi.org/10.3390/s23125732
