Sensor-Based Classification of Primary and Secondary Car Driver Activities Using Convolutional Neural Networks

Abstract

1. Introduction

- Monitoring correct cognitive and safe driver behaviors using intelligent sensors and IoT that track the face, eyes, or movements of a driver’s entire body for a novel driver education process [17].

- We investigate the use of JINS MEME ES_R (smart glasses) sensor data and develop a state-of-the-art machine learning model that learns patterns related to the primary and secondary activities of drivers and classifies them into their respective classes.

- We perform a comparative analysis of wearable sensor data consisting of nine activities of the first driver and four activities of the second driver.

- We provide a brief review of related approaches.

2. Related Works

2.1. Drowsiness in Drivers

2.2. Wireless Sensor Networks

2.3. Deep Learning and Driver’s Gaze

3. Materials and Methods

3.1. Data Acquisition

- A central unit equipped with:

  - An Intel Core i7 processor;

  - XFX RADEON HD 5770 1 GB graphics card with NVIDIA processor and 3D VISION system;

  - 4 GB memory;

  - Gigabyte’s Ultra Durable 3 motherboard;

- A special construction made of steel;

- A two-way adjustable seat;

- A Logitech set: steering wheel, pedals, and gearbox;

- Three 27-inch LED monitors suitable for long operation;

- A sound system;

- Dedicated software “Nauka jazdy” (English: Driver training).

3.1.1. Scenarios

- Passing through uncontrolled intersections (three- and four-way);

- Passing through intersections marked with signs establishing priority of passage;

- Drive through intersections with traffic lights;

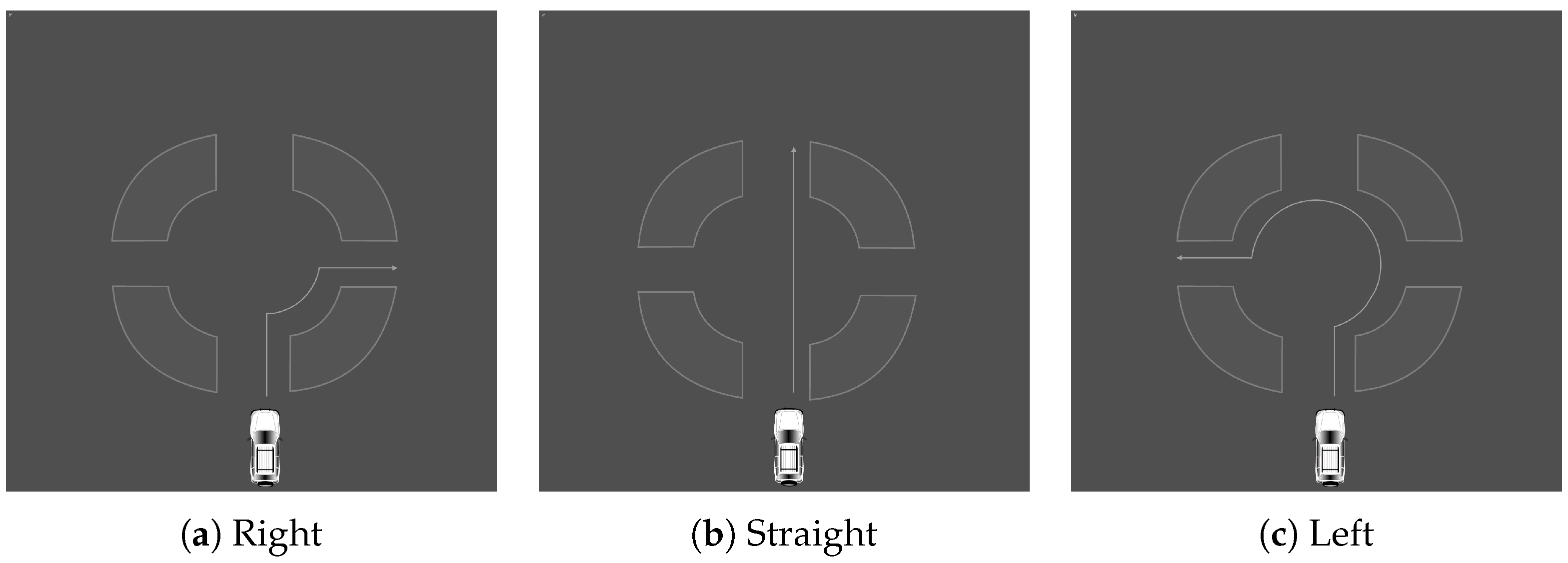

- Drive through intersections where traffic flows around a traffic island;

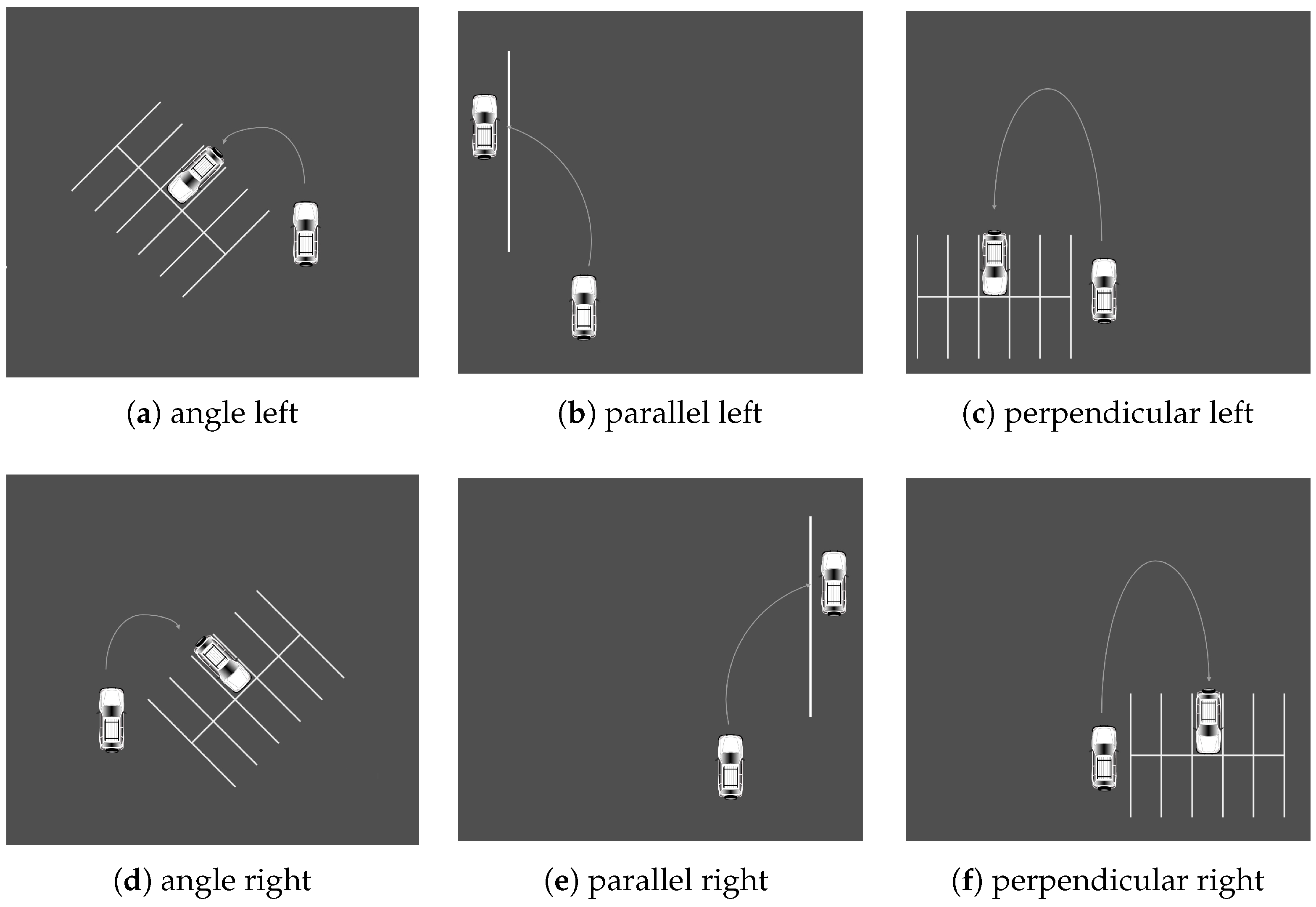

- Perform one of the following parking maneuvers: perpendicular, angle, and parallel.

3.1.2. Basic Driving Activities

3.1.3. Distracting Driving Activities

3.1.4. Data Format and Label Information

- ACC acceleration on the X, Y, and Z axes.

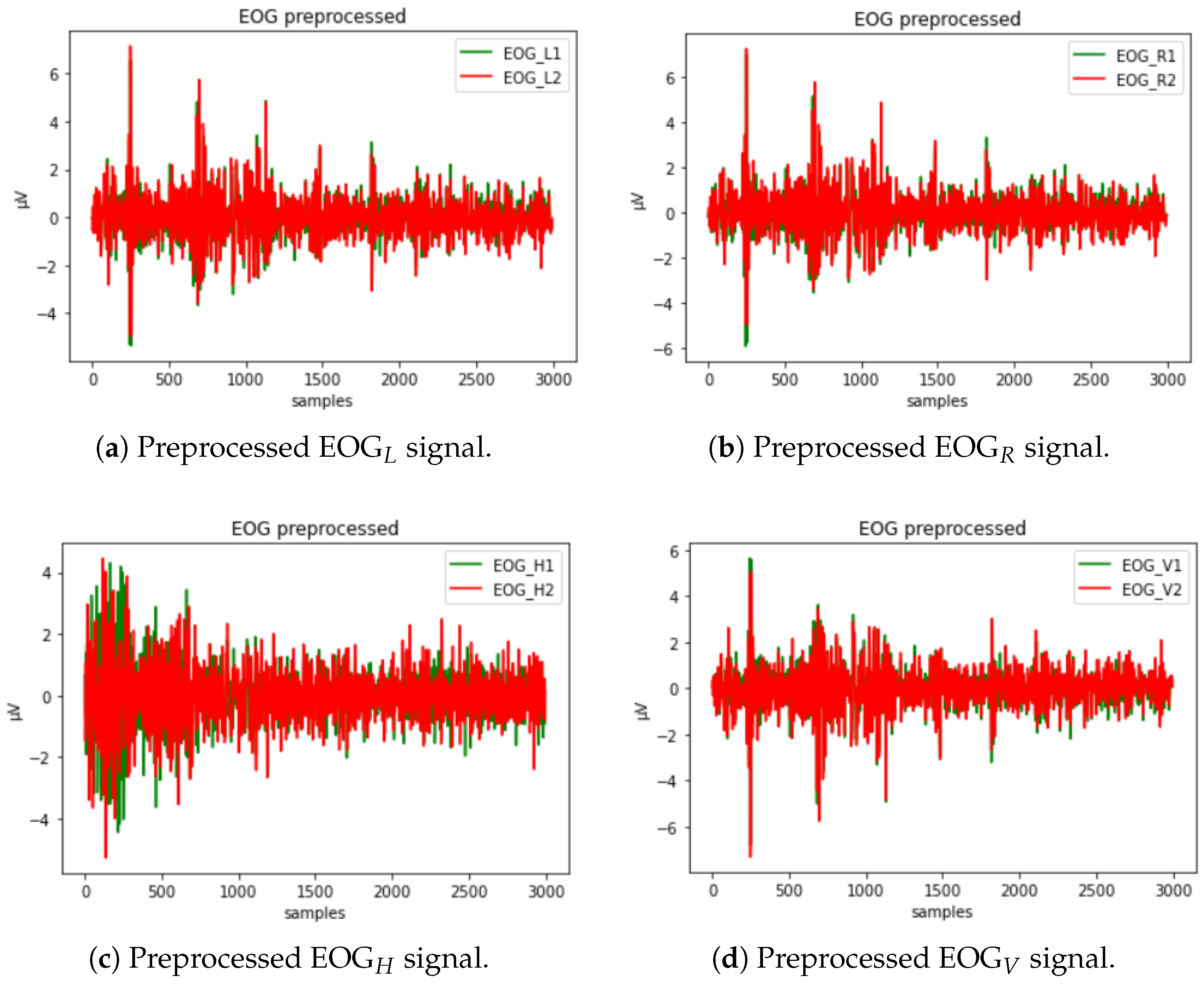

- EOG raw EOG signal from the left and right eye, respectively;

- EOG the difference between the left and right eye potential (EOG− EOG);

- EOG negative arithmetical mean of the left and right eye potential − (EOG + EOG)/2.
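The two derived channels in the list above (the left-minus-right difference and the negative mean of the two eye potentials) can be sketched in a few lines. This is a minimal illustration only: the function name and the variable names `eog_l`, `eog_r`, `eog_h`, and `eog_v` are assumptions made for this example and are not taken from the JINS MEME software.

```python
from typing import List, Sequence, Tuple


def derive_eog_components(
    eog_l: Sequence[float], eog_r: Sequence[float]
) -> Tuple[List[float], List[float]]:
    """Derive the difference and negative-mean channels from raw left/right EOG.

    eog_l, eog_r: raw potentials from the left and right eye, sample by sample.
    Returns (eog_h, eog_v), where
      eog_h[i] = eog_l[i] - eog_r[i]
      eog_v[i] = -(eog_l[i] + eog_r[i]) / 2
    """
    if len(eog_l) != len(eog_r):
        raise ValueError("left and right EOG signals must have the same length")
    eog_h = [left - right for left, right in zip(eog_l, eog_r)]
    eog_v = [-(left + right) / 2 for left, right in zip(eog_l, eog_r)]
    return eog_h, eog_v


# Two made-up samples, purely for illustration.
eog_h, eog_v = derive_eog_components([10.0, 4.0], [6.0, 8.0])
print(eog_h)  # [4.0, -4.0]
print(eog_v)  # [-8.0, -6.0]
```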

3.2. Preprocessing

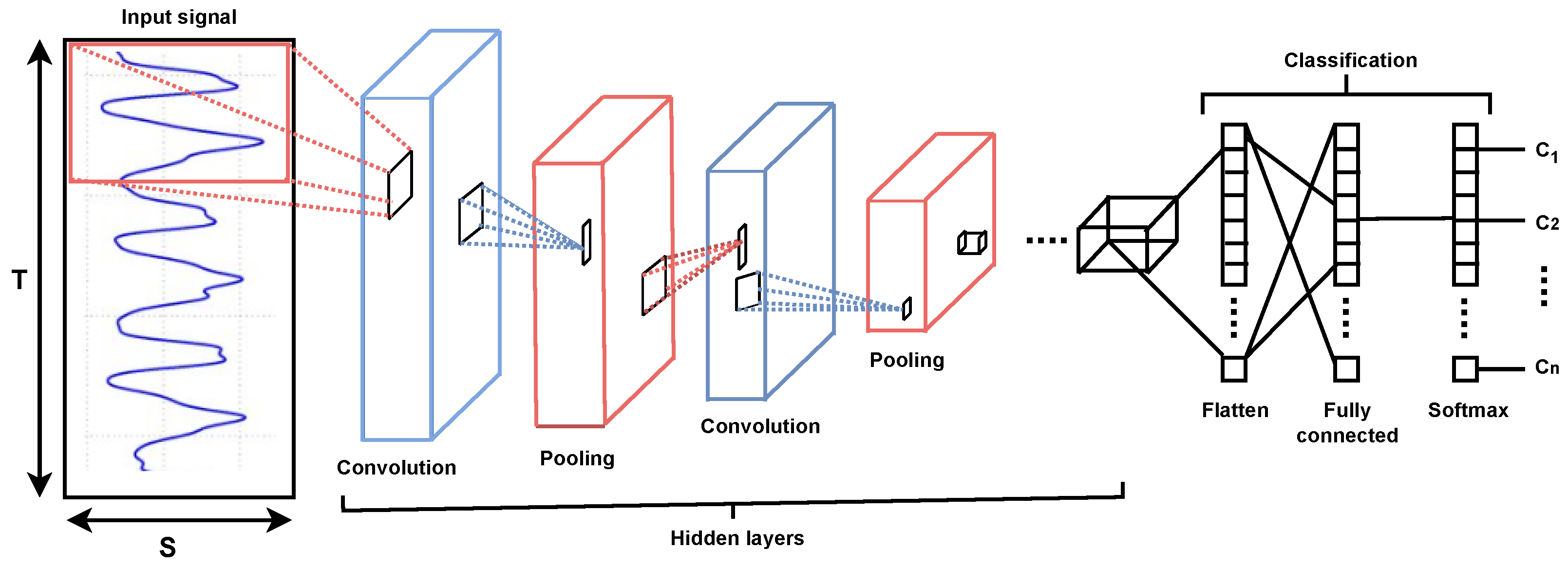

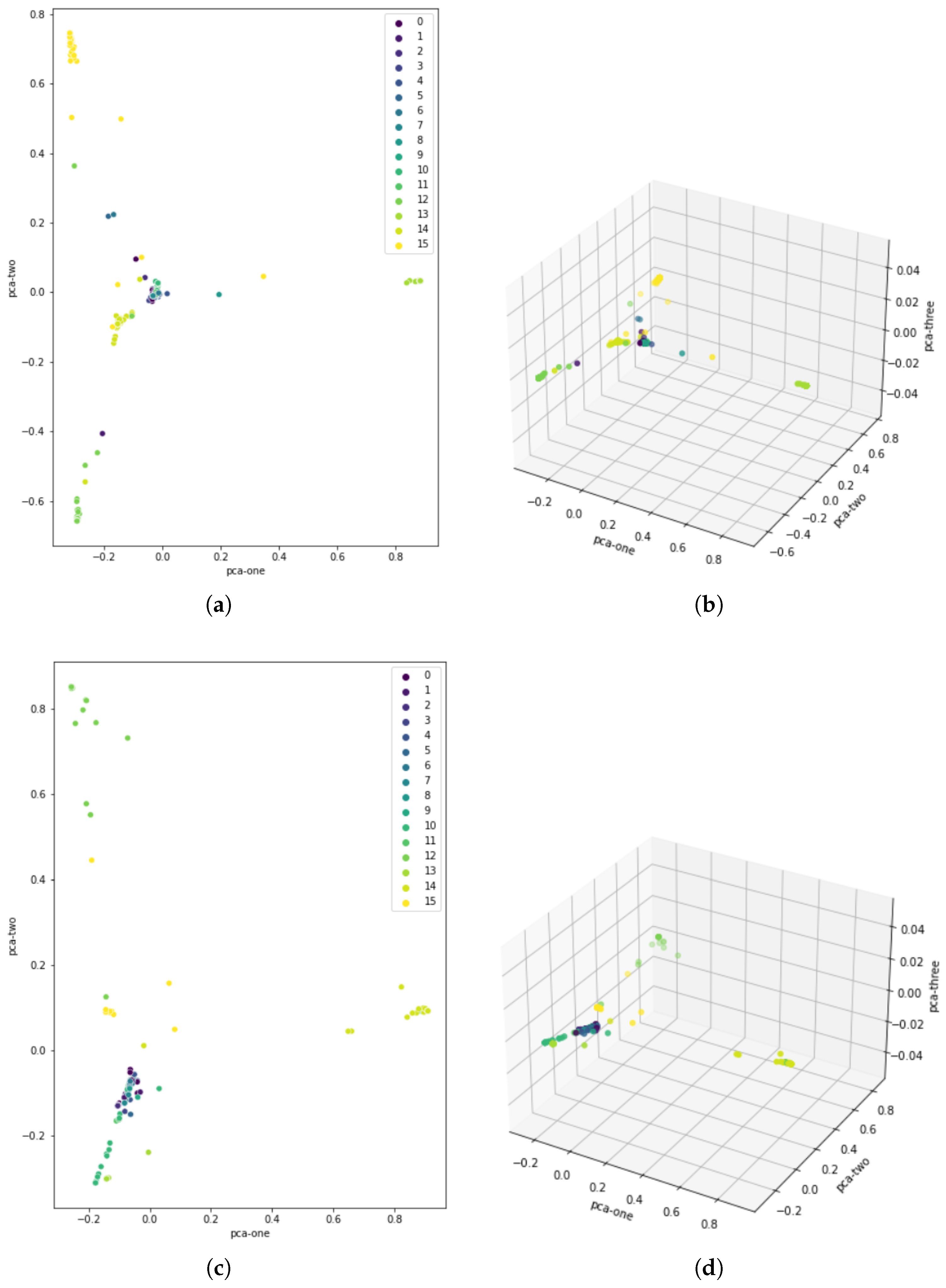

3.3. Classification

3.4. Evaluation

- True positive (TP): correctly classified trials;

- False positive (FP): incorrectly classified trials;

- True negative (TN): correctly classified nonevent trials;

- False negative (FN): incorrectly classified nonevent trials.
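The accuracy, precision, recall, and F1 score reported in the results follow from these four counts. The sketch below uses the standard textbook definitions. The function name and the example counts are hypothetical and are not taken from the experiments.

```python
from typing import Dict


def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Compute standard metrics from true/false positive/negative counts.

    Each ratio falls back to 0.0 when its denominator is zero.
    """
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts for one class, for illustration only.
metrics = classification_metrics(tp=8, fp=2, tn=85, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Because F1 is the harmonic mean of precision and recall, it stays low whenever either of the two is low, which is why it is a useful complement to accuracy on imbalanced classes.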

4. Results

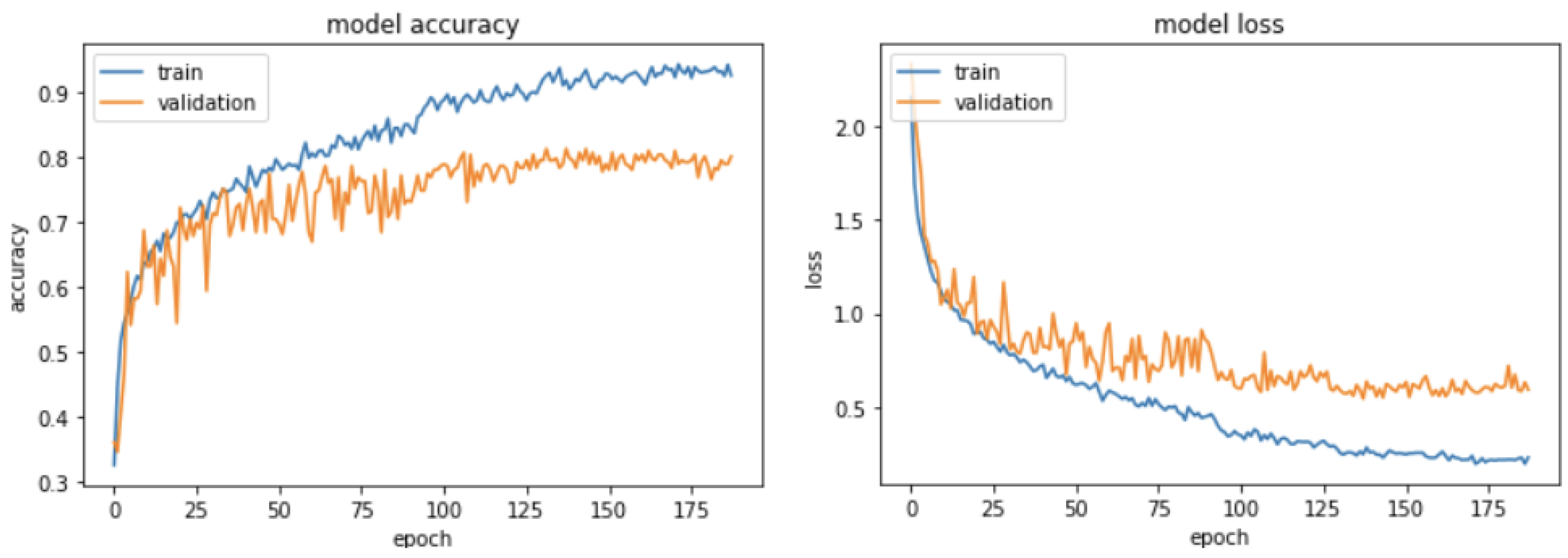

Accuracy and Loss While Training

5. Discussion

6. Conclusions

- We conducted a comparative analysis of wearable sensor data, including nine activities performed by the first driver and four activities performed by the second driver. The proposed system achieves an overall accuracy of 97% (±2) and an average F1 score of 95% (±2) in detecting these activities. The model could also help prevent traffic accidents without requiring expensive safety equipment. To further validate the approach, future studies will acquire additional data under real-world road conditions. Such an application would benefit drivers, particularly older individuals or those with disabilities.

- This research involves a comparative analysis of wearable sensor data obtained from different driving activities in various scenarios. Analyzing the signals collected from these sensors makes it possible to assess the activities performed by drivers and gives insight into driver behavior and activity patterns across driving scenarios. The developed system holds promise in preventing traffic accidents without the need for costly safety equipment.

- Our investigation focuses on the utilization of sensor data from JINS MEME ES_R smart glasses and the development of an advanced machine learning model that can identify and classify primary and secondary activities of drivers. This state-of-the-art model learns the patterns associated with these activities and assigns them to their respective classes.

- The use of JINS MEME ES_R sensor data involves analyzing and recognizing activities and scenarios associated with driving a vehicle. By integrating data from these wearable glasses, we created an efficient machine learning model that can learn activity patterns and accurately classify them into respective classes. This novel approach to the use of wearable sensor data offers valuable insights into driver behavior and activity recognition.

- A notable contribution of this research is the realistic and noninvasive collection of data. The use of JINS MEME ES_R smart glasses provides a user-friendly and noninvasive method for gathering data during experiments. Unlike intrusive methods, these glasses capture data from the driver’s perspective without causing discomfort or interfering with the driving experience. This noninvasive approach ensures that the collected data closely resemble real-world driving scenarios, allowing for more precise analysis and classification of driver activities. By addressing the challenge of obtaining realistic data while prioritizing participant safety and comfort, this research underscores the importance of using such technology.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kinnear, N.; Stevens, A. The Battle for Attention: Driver Distraction—A Review of Recent Research and Knowledge; Technical Report; IAM: London, UK, 2015. [Google Scholar]

- Doniec, R.; Sieciński, S.; Piaseczna, N.; Mocny-Pachońska, K.; Lang, M.; Szymczyk, J. The Classifier Algorithm for Recognition of Basic Driving Scenarios. In Advances in Intelligent Systems and Computing; Springer International Publishing: Cham, Switzerland, 2020; pp. 359–367. [Google Scholar] [CrossRef]

- Doniec, R.J.; Sieciński, S.; Duraj, K.M.; Piaseczna, N.J.; Mocny-Pachońska, K.; Tkacz, E.J. Recognition of Drivers’ Activity Based on 1D Convolutional Neural Network. Electronics 2020, 9, 2002. [Google Scholar] [CrossRef]

- Al-Hussein, W.A.; Kiah, M.L.M.; Yee, P.L.; Zaidan, B.B. A systematic review on sensor-based driver behaviour studies: Coherent taxonomy, motivations, challenges, recommendations, substantial analysis and future directions. PeerJ Comput. Sci. 2021, 7, e632. [Google Scholar] [CrossRef] [PubMed]

- Ping, P.; Qin, W.; Xu, Y.; Miyajima, C.; Kazuya, T. Spectral clustering based approach for evaluating the effect of driving behavior on fuel economy. In Proceedings of the 2018 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Houston, TX, USA, 14–17 May 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Jamroz, K.; Smolarek, L. Driver Fatigue and Road Safety on Poland’s National Roads. Int. J. Occup. Saf. Ergon. 2013, 19, 297–309. [Google Scholar] [CrossRef] [PubMed]

- Kurczynski, D.; Zuska, A. Analysis of the Impact of Invisible Road Icing on Selected Parameters of a Minibus Vehicle. Sensors 2022, 22, 9726. [Google Scholar] [CrossRef]

- National Center for Statistics and Analysis. Distracted Driving 2020; Technical Report; National Highway Traffic Safety Administration: Washington, DC, USA, 2022.

- Arevalo-Tamara, A.; Caicedo, A.; Orozco-Fontalvo, M.; Useche, S.A. Distracted driving in relation to risky road behaviors and traffic crashes in Bogota, Colombia. Saf. Sci. 2022, 153, 105803. [Google Scholar] [CrossRef]

- Brown, I.D. Driver Fatigue. Hum. Factors 1994, 36, 298–314. [Google Scholar] [CrossRef]

- Karthaus, M.; Wascher, E.; Getzmann, S. Distraction in the Driving Simulator: An Event-Related Potential (ERP) Study with Young, Middle-Aged, and Older Drivers. Safety 2021, 7, 36. [Google Scholar] [CrossRef]

- Moslemi, N.; Soryani, M.; Azmi, R. Computer vision-based recognition of driver distraction: A review. Concurr. Comput. Pract. Exp. 2021, 33, e6475. [Google Scholar] [CrossRef]

- Kashevnik, A.; Shchedrin, R.; Kaiser, C.; Stocker, A. Driver Distraction Detection Methods: A Literature Review and Framework. IEEE Access 2021, 9, 60063–60076. [Google Scholar] [CrossRef]

- Yan, Q.; Zhang, W.; Hu, W.; Cui, G.; Wei, D.; Xu, J. Gaze dynamics with spatiotemporal guided feature descriptor for prediction of driver’s maneuver behavior. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2021, 235, 3051–3065. [Google Scholar] [CrossRef]

- Uma, S.; Eswari, R. Accident prevention and safety assistance using IOT and machine learning. J. Reliab. Intell. Environ. 2022, 8, 79–103. [Google Scholar] [CrossRef]

- Hozhabr Pour, H.; Li, F.; Wegmeth, L.; Trense, C.; Doniec, R.; Grzegorzek, M.; Wismuller, R. A Machine Learning Framework for Automated Accident Detection Based on Multimodal Sensors in Cars. Sensors 2022, 22, 3634. [Google Scholar] [CrossRef] [PubMed]

- Sun, W.; Si, Y.; Guo, M.; Li, S. Driver Distraction Recognition Using Wearable IMU Sensor Data. Sustainability 2021, 13, 1342. [Google Scholar] [CrossRef]

- Rizzo, M.; Hurtig, R. Looking but not seeing. Neurology 1987, 37, 1642. [Google Scholar] [CrossRef] [PubMed]

- Eraqi, H.M.; Abouelnaga, Y.; Saad, M.H.; Moustafa, M.N. Driver Distraction Identification with an Ensemble of Convolutional Neural Networks. J. Adv. Transp. 2019, 2019, 4125865. [Google Scholar] [CrossRef]

- Ariansyah, D.; Pardamean, B.; Caruso, G. The effect of visual advanced driver assistance systems on a following human driver in a mixed-traffic condition. Procedia Comput. Sci. 2023, 216, 221–229. [Google Scholar] [CrossRef]

- Böddeker, B.; von Wendorff, W.; Nguyen, N.; Diehl, P.; Meertens, R.; Johannson, R. Automated driving safety—The art of conscious risk taking—Minimum lateral distances to pedestrians. In Proceedings of the 2021 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 1–5 February 2021; pp. 1466–1471, ISSN 1558-1101. [Google Scholar] [CrossRef]

- Lin, P.W.; Hsu, C.M. Innovative Framework for Distracted-Driving Alert System Based on Deep Learning. IEEE Access 2022, 10, 77523–77536. [Google Scholar] [CrossRef]

- Klauer, S.G.; Dingus, T.A.; Neale, V.L.; Sudweeks, J.; Ramsey, D.J. The Impact of Driver Inattention on Near-Crash/Crash Risk: An Analysis Using the 100-Car Naturalistic Driving Study Data; Technical Report; National Traffic Safety Administration, US Department of Transportation: Washington, DC, USA, 2006. [Google Scholar]

- Jomnonkwao, S.; Uttra, S.; Ratanavaraha, V. Analysis of a driving behavior measurement model using a modified driver behavior questionnaire encompassing texting, social media use, and drug and alcohol consumption. Transp. Res. Interdiscip. Perspect. 2021, 9, 100302. [Google Scholar] [CrossRef]

- Klauer, S.G.; Guo, F.; Simons-Morton, B.G.; Ouimet, M.C.; Lee, S.E.; Dingus, T.A. Distracted Driving and Risk of Road Crashes among Novice and Experienced Drivers. N. Engl. J. Med. 2014, 370, 54–59. [Google Scholar] [CrossRef]

- Goodman, M.J.; Tijerina, L.; Bents, F.D.; Wierwille, W.W. Using Cellular Telephones in Vehicles: Safe or Unsafe? Transp. Hum. Factors 1999, 1, 3–42. [Google Scholar] [CrossRef]

- Ishimaru, S.; Hoshika, K.; Kunze, K.; Kise, K.; Dengel, A. Towards reading trackers in the wild: Detecting reading activities by EOG glasses and deep neural networks. In Proceedings of the 2017 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2017 ACM International Symposium on Wearable Computers, Maui, HI, USA, 11–15 September 2017; pp. 704–711. [Google Scholar] [CrossRef]

- Nisar, M.A.; Shirahama, K.; Li, F.; Huang, X.; Grzegorzek, M. Rank Pooling Approach for Wearable Sensor-Based ADLs Recognition. Sensors 2020, 20, 3463. [Google Scholar] [CrossRef] [PubMed]

- Uddin, M.Z.; Soylu, A. Human activity recognition using wearable sensors, discriminant analysis, and long short-term memory-based neural structured learning. Sci. Rep. 2021, 11, 16455. [Google Scholar] [CrossRef]

- Yazdansepas, D.; Niazi, A.H.; Gay, J.L.; Maier, F.W.; Ramaswamy, L.; Rasheed, K.; Buman, M.P. A Multi-featured Approach for Wearable Sensor-Based Human Activity Recognition. In Proceedings of the 2016 IEEE International Conference on Healthcare Informatics (ICHI), Chicago, IL, USA, 4–7 October 2016; pp. 423–431. [Google Scholar] [CrossRef]

- Bao, L.; Intille, S.S. Activity Recognition from User-Annotated Acceleration Data. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2004; pp. 1–17. [Google Scholar] [CrossRef]

- Chen, L.; Hoey, J.; Nugent, C.D.; Cook, D.J.; Yu, Z. Sensor-Based Activity Recognition. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2012, 42, 790–808. [Google Scholar] [CrossRef]

- D’Souza, S.; Natarajan, S. Recognition of EOG based reading task using AR features. In Proceedings of the International Conference on Circuits, Communication, Control and Computing, Bangalore, India, 21–22 November 2014; pp. 113–117. [Google Scholar] [CrossRef]

- Huang, X.; Shirahama, K.; Irshad, M.T.; Nisar, M.A.; Piet, A.; Grzegorzek, M. Sleep Stage Classification in Children Using Self-Attention and Gaussian Noise Data Augmentation. Sensors 2023, 23, 3446. [Google Scholar] [CrossRef]

- Huang, X.; Shirahama, K.; Li, F.; Grzegorzek, M. Sleep stage classification for child patients using DeConvolutional Neural Network. Artif. Intell. Med. 2020, 110, 101981. [Google Scholar] [CrossRef]

- Doniec, R.; Piaseczna, N.; Li, F.; Duraj, K.; Hozhabr Pour, H.; Grzegorzek, M.; Mocny-Pachońska, K.; Tkacz, E. Classification of Roads and Types of Public Roads Using EOG Smart Glasses and an Algorithm Based on Machine Learning While Driving a Car. Electronics 2022, 11, 2960. [Google Scholar] [CrossRef]

- Bulling, A.; Roggen, D.; Tröster, G. Wearable EOG goggles: Seamless sensing and context-awareness in everyday environments. J. Ambient. Intell. Smart Environ. 2009, 1, 157–171. [Google Scholar] [CrossRef]

- Gruenewald, A.; Kroenert, D.; Poehler, J.; Brueck, R.; Li, F.; Littau, J.; Schnieber, K.; Piet, A.; Grzegorzek, M.; Kampling, H.; et al. Biomedical Data Acquisition and Processing to Recognize Emotions for Affective Learning. In Proceedings of the 2018 IEEE 18th International Conference on Bioinformatics and Bioengineering (BIBE), Taichung, Taiwan, 29–31 October 2018. [Google Scholar] [CrossRef]

- Lin, C.T.; Jiang, W.L.; Chen, S.F.; Huang, K.C.; Liao, L.D. Design of a Wearable Eye-Movement Detection System Based on Electrooculography Signals and Its Experimental Validation. Biosensors 2021, 11, 343. [Google Scholar] [CrossRef]

- Irshad, M.T.; Nisar, M.A.; Huang, X.; Hartz, J.; Flak, O.; Li, F.; Gouverneur, P.; Piet, A.; Oltmanns, K.M.; Grzegorzek, M. SenseHunger: Machine Learning Approach to Hunger Detection Using Wearable Sensors. Sensors 2022, 22, 7711. [Google Scholar] [CrossRef]

- Aksjonov, A.; Nedoma, P.; Vodovozov, V.; Petlenkov, E.; Herrmann, M. Detection and Evaluation of Driver Distraction Using Machine Learning and Fuzzy Logic. IEEE Trans. Intell. Transp. Syst. 2019, 20, 2048–2059. [Google Scholar] [CrossRef]

- Alotaibi, M.; Alotaibi, B. Distracted driver classification using deep learning. Signal Image Video Process. 2020, 14, 617–624. [Google Scholar] [CrossRef]

- Wang, J.; Xu, Y.; Tian, J.; Li, H.; Jiao, W.; Sun, Y.; Li, G. Driving Fatigue Detection with Three Non-Hair-Bearing EEG Channels and Modified Transformer Model. Entropy 2022, 24, 1715. [Google Scholar] [CrossRef] [PubMed]

- Braunagel, C.; Geisler, D.; Rosenstiel, W.; Kasneci, E. Online recognition of driver-activity based on visual scanpath classification. IEEE Intell. Transp. Syst. Mag. 2017, 9, 23–36. [Google Scholar] [CrossRef]

- Pansare, D.J.; Deshpande, R.; Shingare, S.; Deokar, H.; Manwar, P. Real-time Driver Drowsiness Detection with Android. Int. J. Res. Appl. Sci. Eng. Technol. 2022, 10, 441–445. [Google Scholar] [CrossRef]

- Mateos-García, N.; Gil-González, A.B.; Reboredo, A.d.L.; Pérez-Lancho, B. Driver Stress Detection in Simulated Driving Scenarios with Photoplethysmography. In Proceedings of the Distributed Computing and Artificial Intelligence, 19th International Conference, L’Aquila, Italy, 13–15 July 2022; Omatu, S., Mehmood, R., Sitek, P., Cicerone, S., Rodríguez, S., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 291–301. [Google Scholar]

- Jahan, I.; Uddin, K.M.A.; Murad, S.A.; Miah, M.S.U.; Khan, T.Z.; Masud, M.; Aljahdali, S.; Bairagi, A.K. 4D: A Real-Time Driver Drowsiness Detector Using Deep Learning. Electronics 2023, 12, 235. [Google Scholar] [CrossRef]

- Galarza, E.E.; Egas, F.D.; Silva, F.M.; Velasco, P.M.; Galarza, E.D. Real Time Driver Drowsiness Detection Based on Driver’s Face Image Behavior Using a System of Human Computer Interaction Implemented in a Smartphone. In Proceedings of the International Conference on Information Technology & Systems (ICITS 2018), Libertad City, Ecuador, 10–12 January 2018; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 563–572. [Google Scholar] [CrossRef]

- Alvaro, P.K.; Burnett, N.M.; Kennedy, G.A.; Min, W.Y.X.; McMahon, M.; Barnes, M.; Jackson, M.; Howard, M.E. Driver education: Enhancing knowledge of sleep, fatigue and risky behaviour to improve decision making in young drivers. Accid. Anal. Prev. 2018, 112, 77–83. [Google Scholar] [CrossRef]

- AlZubi, A.A.; Abugabah, A.; Al-Maitah, M.; Ibrahim AlZobi, F. DL Multi-sensor information fusion service selective information scheme for improving the Internet of Things based user responses. Measurement 2021, 185, 110008. [Google Scholar] [CrossRef]

- Naqvi, R.A.; Arsalan, M.; Batchuluun, G.; Yoon, H.S.; Park, K.R. Deep learning-based gaze detection system for automobile drivers using a NIR camera sensor. Sensors 2018, 18, 456. [Google Scholar] [CrossRef]

- Zhang, Y.; Chen, Y.; Gao, C. Deep unsupervised multi-modal fusion network for detecting driver distraction. Neurocomputing 2021, 421, 26–38. [Google Scholar] [CrossRef]

- Vaegae, N.K.; Pulluri, K.K.; Bagadi, K.; Oyerinde, O.O. Design of an Efficient Distracted Driver Detection System: Deep Learning Approaches. IEEE Access 2022, 10, 116087–116097. [Google Scholar] [CrossRef]

- Cheng, S.; Yang, B.; Wang, Z.; Nakano, K. Spatio-Temporal Image Representation and Deep-Learning-Based Decision Framework for Automated Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 24866–24875. [Google Scholar] [CrossRef]

- Ping, P.; Huang, C.; Ding, W.; Liu, Y.; Chiyomi, M.; Kazuya, T. Distracted driving detection based on the fusion of deep learning and causal reasoning. Inf. Fusion 2023, 89, 121–142. [Google Scholar] [CrossRef]

- Xing, Y.; Lv, C.; Wang, H.; Cao, D.; Velenis, E.; Wang, F.Y. Driver Activity Recognition for Intelligent Vehicles: A Deep Learning Approach. IEEE Trans. Veh. Technol. 2019, 68, 5379–5390. [Google Scholar] [CrossRef]

- Vora, S.; Rangesh, A.; Trivedi, M.M. Driver Gaze Zone Estimation Using Convolutional Neural Networks: A General Framework and Ablative Analysis. arXiv 2018, arXiv:1802.02690. [Google Scholar] [CrossRef]

- Li, F.; Shirahama, K.; Nisar, M.; Köping, L.; Grzegorzek, M. Comparison of Feature Learning Methods for Human Activity Recognition Using Wearable Sensors. Sensors 2018, 18, 679. [Google Scholar] [CrossRef]

- Irshad, M.T.; Nisar, M.A.; Gouverneur, P.; Rapp, M.; Grzegorzek, M. AI Approaches towards Prechtl’s Assessment of General Movements: A Systematic Literature Review. Sensors 2020, 20, 5321. [Google Scholar] [CrossRef]

- Banerjee, S.; Khadem, N.K.; Kabir, M.M.; Jeihani, M. Driver Behavior Post Cannabis Consumption: A Driving Simulator Study in Collaboration with Montgomery County Maryland. arXiv 2021, arXiv:2112.12026. [Google Scholar]

- JINS, Inc. JINS MEME Glasses Specifications. Available online: https://jins-meme.github.io/apdoc/en/ (accessed on 9 June 2023).

- Sun, Q.; Guo, Y.; Liu, Y.; Wang, C.; Gu, M.; Su, Y. Comparing the Effects of Visual Distraction in a High-Fidelity Driving Simulator and on a Real Highway. IEEE Trans. Intell. Transp. Syst. 2021, 24, 3738–3747. [Google Scholar] [CrossRef]

- Ministry of Infrastructure of the Republic of Poland. Rozporządzenie Ministra Infrastruktury z Dnia 28 Czerwca 2019 r. w Sprawie Egzaminowania Osób Ubiegających Się o Uprawnienia do Kierowania Pojazdami, Szkolenia, Egzaminowania i Uzyskiwania Uprawnień przez Egzaminatorów Oraz Wzorów Dokumentów Stosowanych w Tych Sprawach, Dz.U. 2019 poz. 1206. 2019. Available online: https://www.dziennikustaw.gov.pl/D2019000120601.pdf (accessed on 9 June 2023).

- Gu, J.J.; Meng, M.Q.H.; Cook, A.; Faulkner, M.G. A study of natural eye movement detection and ocular implant movement control using processed EOG signals. In Proceedings of the 2001 ICRA. IEEE International Conference on Robotics and Automation (Cat. No.01CH37164), Seoul, Korea, 21–26 May 2001; Volume 2, pp. 1555–1560. [Google Scholar]

- Zhao, Z.; Zhou, N.; Zhang, L.; Yan, H.; Xu, Y.; Zhang, Z. Driver Fatigue Detection Based on Convolutional Neural Networks Using EM-CNN. Comput. Intell. Neurosci. 2020, 2020, 7251280. [Google Scholar] [CrossRef]

- Yan, C.; Coenen, F.; Zhang, B. Driving posture recognition by convolutional neural networks. IET Comput. Vis. 2016, 10, 103–114. [Google Scholar] [CrossRef]

- Shahverdy, M.; Fathy, M.; Berangi, R.; Sabokrou, M. Driver behavior detection and classification using deep convolutional neural networks. Expert Syst. Appl. 2020, 149, 113240. [Google Scholar] [CrossRef]

- Deng, T.; Yan, H.; Qin, L.; Ngo, T.; Manjunath, B.S. How Do Drivers Allocate Their Potential Attention? Driving Fixation Prediction via Convolutional Neural Networks. IEEE Trans. Intell. Transp. Syst. 2020, 21, 2146–2154. [Google Scholar] [CrossRef]

- Dhuliawala, M.; Lee, J.; Shimizu, J.; Bulling, A.; Kunze, K.; Starner, T.; Woo, W. Smooth eye movement interaction using EOG glasses. In Proceedings of the ICMI ’16: Proceedings of the 18th ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016; pp. 307–311. [Google Scholar] [CrossRef]

- Díaz, D.; Yee, N.; Daum, C.; Stroulia, E.; Liu, L. Activity Classification in Independent Living Environment with JINS MEME Eyewear. In Proceedings of the 2018 IEEE International Conference on Pervasive Computing and Communications (PerCom), Athens, Greece, 19–23 March 2018; pp. 1–9. [Google Scholar] [CrossRef]

| Eating | Drinking | Turning | Bending |
|---|---|---|---|
| Taking a bite of any food. | Taking a sip of water. | Turning back and reaching to a passenger’s seat. | Bending and picking up a fallen object. |

| Label | Activity |
|---|---|
| 0 | P_Crossroad_Left |
| 1 | P_Crossroad_Right |
| 2 | P_Crossroad_Straight |
| 3 | P_Parking_Diagonal_Left |
| 4 | P_Parking_Diagonal_Right |
| 5 | P_Parking_Parallel_Left |
| 6 | P_Parking_Parallel_Right |
| 7 | P_Parking_Perpendicular_Left |
| 8 | P_Parking_Perpendicular_Right |
| 9 | P_Roundabout_Left |
| 10 | P_Roundabout_Right |
| 11 | P_Roundabout_Straight |
| 12 | S_Bending |
| 13 | S_Drinking |
| 14 | S_Eating |
| 15 | S_Turning_Back |
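The label names in this table encode the activity type in their prefix (`P_` for primary, `S_` for secondary) and, for primary activities, the scenario in the next token. The sketch below maps each numeric label to one of four groups (Crossroad, Parking, Roundabout, Secondary). The grouping rule and the names `ACTIVITY_LABELS` and `activity_group` are assumptions made for this illustration, not the authors' code.

```python
from typing import Dict

# Numeric label -> activity name, copied from the label table.
ACTIVITY_LABELS: Dict[int, str] = {
    0: "P_Crossroad_Left",
    1: "P_Crossroad_Right",
    2: "P_Crossroad_Straight",
    3: "P_Parking_Diagonal_Left",
    4: "P_Parking_Diagonal_Right",
    5: "P_Parking_Parallel_Left",
    6: "P_Parking_Parallel_Right",
    7: "P_Parking_Perpendicular_Left",
    8: "P_Parking_Perpendicular_Right",
    9: "P_Roundabout_Left",
    10: "P_Roundabout_Right",
    11: "P_Roundabout_Straight",
    12: "S_Bending",
    13: "S_Drinking",
    14: "S_Eating",
    15: "S_Turning_Back",
}


def activity_group(label: int) -> str:
    """Return the coarse group for a label (assumed grouping rule).

    Secondary activities (prefix "S") form one group; primary activities
    (prefix "P") are grouped by the scenario token after the prefix.
    """
    prefix, rest = ACTIVITY_LABELS[label].split("_", 1)
    if prefix == "S":
        return "Secondary"
    return rest.split("_", 1)[0]


print(activity_group(7))   # Parking
print(activity_group(15))  # Secondary
```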

| Layer Name | No. Kernels (Units) | Kernel (Pool) Size | Stride Size | Activation |
|---|---|---|---|---|
| Convolution | 128 | 5 | 1 | ReLU |
| Batch norm | - | - | - | - |
| Max pooling | - | 3 | - | - |
| Convolution | 128 | 5 | 1 | ReLU |
| Batch norm | - | - | - | - |
| Convolution | 128 | 5 | 1 | ReLU |
| Batch norm | - | - | - | - |
| Global avg. pooling | - | - | - | - |
| Dense | 2 | - | - | Softmax |
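To make the layer table easier to follow, the sketch below traces how the temporal length, channel count, and trainable parameter count change through the listed layers. Several details are not given in the table and are assumptions here: no padding in the convolutions, a pooling stride equal to the pool size, batch normalization counted by its trainable scale and shift only, and a hypothetical input window of 200 samples with 6 channels.

```python
from typing import List, Tuple

# Layer sequence from the architecture table: (kind, *settings).
LAYERS = [
    ("conv", 128, 5, 1),   # filters, kernel size, stride
    ("batch_norm",),
    ("max_pool", 3),       # pool size (stride assumed equal to pool size)
    ("conv", 128, 5, 1),
    ("batch_norm",),
    ("conv", 128, 5, 1),
    ("batch_norm",),
    ("global_avg_pool",),
    ("dense", 2),          # output units
]


def trace_shapes(input_len: int, in_channels: int) -> List[Tuple[str, int, int, int]]:
    """Return (layer kind, length, channels, trainable params) per layer."""
    length, channels = input_len, in_channels
    rows = []
    for layer in LAYERS:
        kind, params = layer[0], 0
        if kind == "conv":
            _, filters, kernel, stride = layer
            params = kernel * channels * filters + filters  # weights + biases
            length = (length - kernel) // stride + 1        # no padding (assumed)
            channels = filters
        elif kind == "batch_norm":
            params = 2 * channels                           # scale and shift
        elif kind == "max_pool":
            length = length // layer[1]                     # stride == pool size
        elif kind == "global_avg_pool":
            length = 1                                      # average over time
        elif kind == "dense":
            units = layer[1]
            params = channels * units + units
            channels = units
        if length < 1:
            raise ValueError("input window too short for this layer stack")
        rows.append((kind, length, channels, params))
    return rows


# Hypothetical input: 200 time steps, 6 sensor channels.
for kind, length, channels, params in trace_shapes(200, 6):
    print(f"{kind:16s} length={length:4d} channels={channels:4d} params={params}")
```

Global average pooling removes the dependence on the window length before the dense layer, so the same stack accepts windows of different sizes as long as they survive the three convolutions.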

| Label | Activity | Precision | Recall | F1 Score |
|---|---|---|---|---|
| 0 | P_Crossroad_Left | 1.00 | 0.67 | 0.80 |
| 1 | P_Crossroad_Right | 0.75 | 0.75 | 0.75 |
| 2 | P_Crossroad_Straight | 0.75 | 0.90 | 0.82 |
| 3 | P_Parking_Diagonal_Left | 0.67 | 0.75 | 0.71 |
| 4 | P_Parking_Diagonal_Right | 0.50 | 0.50 | 0.50 |
| 5 | P_Parking_Parallel_Left | 0.44 | 0.40 | 0.42 |
| 6 | P_Parking_Parallel_Right | 0.86 | 0.60 | 0.71 |
| 7 | P_Parking_Perpendicular_Left | 0.38 | 1.00 | 0.55 |
| 8 | P_Parking_Perpendicular_Right | 1.00 | 0.29 | 0.44 |
| 9 | P_Roundabout_Left | 1.00 | 0.88 | 0.93 |
| 10 | P_Roundabout_Right | 0.82 | 0.86 | 0.84 |
| 11 | P_Roundabout_Straight | 0.86 | 0.75 | 0.80 |
| 12 | S_Bending | 0.95 | 0.90 | 0.93 |
| 13 | S_Drinking | 1.00 | 1.00 | 1.00 |
| 14 | S_Eating | 0.90 | 0.95 | 0.93 |
| 15 | S_Turning_Back | 0.88 | 0.94 | 0.91 |

| Activity | Accuracy [%] | Precision | Recall | F1 Score |
|---|---|---|---|---|
| Crossroad | 97.9 | 0.97 | 0.91 | 0.94 |
| Parking | 96.8 | 0.92 | 0.96 | 0.94 |
| Roundabout | 97.4 | 0.94 | 0.92 | 0.93 |
| Secondary | 99.5 | 0.99 | 1.00 | 0.99 |
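The conclusions report an overall accuracy of 97% (±2) and an average F1 score of 95% (±2). As a quick arithmetic check, the sketch below takes the unweighted mean of the four rows of this table. Whether the reported summary was derived this way is an assumption, and the name `SUMMARY` is introduced only for this example.

```python
from statistics import mean

# Accuracy [%] and F1 score per activity group, copied from the table above.
SUMMARY = {
    "Crossroad": {"accuracy": 97.9, "f1": 0.94},
    "Parking": {"accuracy": 96.8, "f1": 0.94},
    "Roundabout": {"accuracy": 97.4, "f1": 0.93},
    "Secondary": {"accuracy": 99.5, "f1": 0.99},
}

# Unweighted (macro) averages over the four groups.
mean_accuracy = mean(row["accuracy"] for row in SUMMARY.values())
mean_f1 = mean(row["f1"] for row in SUMMARY.values())

print(f"mean accuracy: {mean_accuracy:.1f}%")  # 97.9%
print(f"mean F1 score: {mean_f1:.2f}")         # 0.95
```

Both averages fall within the ranges stated in the conclusions.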

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Doniec, R.; Konior, J.; Sieciński, S.; Piet, A.; Irshad, M.T.; Piaseczna, N.; Hasan, M.A.; Li, F.; Nisar, M.A.; Grzegorzek, M. Sensor-Based Classification of Primary and Secondary Car Driver Activities Using Convolutional Neural Networks. Sensors 2023, 23, 5551. https://doi.org/10.3390/s23125551
