1. Introduction

Machine learning algorithms have been used over the last ten years in almost all fields where problems associated with data classification, pattern recognition, non-linear regression, etc., have to be solved. The application of such algorithms has also intensified in the field of queueing theory. While the first steps in the successful application of machine learning to evaluate the performance characteristics of simple and complex queueing systems have already been taken, the total number of works on this topic still remains modest. As for reviews, we can only refer to a recent paper by Vishnevsky and Gorbunova [1], which proposes a systematic introduction to the use of machine learning in the study of queueing systems and networks. Before we formulate our specific problem, we would also like to make a small contribution to the popularisation of machine learning in queueing theory by briefly describing the latest works. In Stintzing and Norrman [2], an artificial neural network was used for predicting the number of busy servers in the queueing system. The papers of Nii et al. [3] and Sherzer et al. [4] answered positively the question of whether machines could be useful for solving problems in general queueing systems. They employed a neural network approach to estimate the mean performance measures of multi-server queues based on the first two moments of the inter-arrival and service time distributions. A machine learning approach was used in the work of Kyritsis and Deriaz [5] to predict the waiting time in queueing scenarios. The combination of simulation and machine learning techniques for assessing the performance characteristics was illustrated in Vishnevsky et al. [6] on a queueing system with $K$ priority classes. Markovian queues were simulated using artificial neural networks in Sivakami et al. [7]. Neural networks were also used in research by Efrosinin and Stepanova [8] to estimate the optimal threshold policy in a heterogeneous queueing system. The combination of the Markov decision problem and neural networks for the heterogeneous queueing model with process sharing was studied by Efrosinin et al. [9]. The performance parameters of a closed queueing network were evaluated by means of a neural network in Gorbunova and Vishnevsky [10]. In addition to these results on the use of neural networks in hypothetical queueing theory models, academic studies in this area with real-world applications have gradually been proposed. For example, the problem of choosing an optimum charging–discharging schedule for electric vehicles with the help of a neural network was addressed by Aljafari et al. [11]. The main conclusion to be drawn from the previous results obtained via the application of machine learning to queueing models is that neural networks cannot be treated as a replacement for classical methods in system performance analysis, but rather as a complement to the capabilities of such an analysis.

Systems with parallel queues and one server are also known as polling systems, which have found wide application in various fields such as computer networks, telecommunications systems, control in manufacturing and road traffic. For analytic and numerical results in various types of polling systems with applications to broadband wireless Wi-Fi and Wi-MAX networks, we refer interested readers to the textbook by Vishnevsky and Semenova [12] and the references therein. The same authors in [13] extended their research on polling systems to systems with correlated arrival flows such as

,

, and the group Poisson arrivals. In Vishnevskiy et al. [14], it was shown that the results obtained by a neural network are close enough to the results of analytical or simulation calculations for the

and

-type polling systems with cyclic polling. Markovian versions of a single-server model with parallel queues have been investigated by a number of authors. The two-queue homogeneous model with equal service rates and holding costs was studied in Hofri and Ross [15], where it was shown that the queues must be serviced exhaustively according to the optimal policy. In research by Liu et al. [16], it was shown that the scheduling policy that routes the server according to the LQF (Longest Queue First) rule is optimal when all queue lengths are known and that the cyclic scheduling policy is optimal in cases where the only information available is the previous decisions. Systems with multiple heterogeneous queues in different settings, also known as asymmetric polling systems, have been studied intensively for the case without switching costs by Buyukkoc et al. [17] and Cox and Smith [18], where the optimality of the static $c\mu$-rule was proved. This policy schedules the server first to the queue $i$ with the maximum weight $c_i\mu_i$, the product of the holding cost and the service rate. In Koole [19], the problem of optimal control in a two-queue system was analysed by means of a continuous-time Markov decision process and the dynamic programming approach. The author found numerically that the optimal policy which minimizes the average cost per unit of time can be quite complex if there are both holding and switching costs. A threshold-based policy for such a queueing system was applied by Avram and Gómez-Corral [20], where expressions for the long-run expected average cost of holding units and switching actions of the server were given. A queueing system with general service times and set-up costs incurred by the instantaneous switch from one queue to another was studied in Duenyas and Van Oyen [21]. The authors proposed a simple heuristic scheduling policy for the system with multiple queues. A rather similar model is described in Matsumoto [22], where the optimal scheduling problem is solved in a system with arbitrary time distributions. Here, instead of switching costs, the corresponding set-up time intervals required for switching are used. The system is controlled by a Learning Vector Quantization (LVQ) network, see Kohonen [23] for details, which classifies the system state by the closest codebook vector of a certain class in terms of the Euclidean metric. The problem with this approach is the large number of parameters associated with the codebook vectors: normally several vectors per class must be estimated for a given control policy using computationally expensive recurrent algorithms.

This paper proposes a fairly universal method for solving the problem of optimal dynamic scheduling or allocation in queueing systems of the general type, i.e., where the times between events are arbitrarily distributed, and in queueing systems with correlated inter-arrival and service times. Furthermore, it can provide a performance analysis of complex controlled systems described by multidimensional random processes, for which finding analytical, approximate or heuristic solutions is a difficult task. The main idea of the paper is to use a multi-layer neural network for server scheduling. The parameters of this neural network, first trained on some arbitrary control policy, are then optimized to minimize a specified average cost function. Such a cost function for systems with arbitrary inter-arrival and service time distributions can only be computed via simulation. We consider this approach, which combines neural networks with simulation, to be quite universal for obtaining an optimal deterministic control policy in complicated queueing systems. The method is exemplified by a version of a single-server system with parallel queues equipped with a controller for scheduling the server. The system under study is assumed to have heterogeneous arrival and service attributes, i.e., unequal arrival and service rates, as well as holding and switching costs. Systems with arbitrary distributions and switching costs have not yet been considered by other authors. It is assumed in our model that the queue currently being served is served exhaustively. The next queue to be served is selected according to a dynamic scheduling policy based on the queue state information, i.e., on the number of customers waiting in each of the parallel queues. Changing the served queue incurs a switching cost, and holding a customer in the system incurs a holding cost.
Clearly, even with some fixed scheduling control policy, calculating any characteristics of the proposed queueing system with arbitrary inter-arrival and service time distributions in explicit form is not a trivial task. It is also difficult to specify the dynamic control policy defining the scheduling in large systems in a standard way, e.g., through a control matrix that would contain the corresponding control action for all possible states of the system. Therefore, in such a case we consider it justified to solve the problem of finding the optimal scheduling policy that minimizes the average cost per unit of time by combining simulation, as a tool to calculate the performance characteristics of the system, with a machine learning technique, where the neural network is responsible for dynamic control. By training a neural network on some initial control policy, we obtain the characteristics of the network in the form of a matrix of weights and a vector of biases. The process of solving the optimal scheduling problem is then reduced to a discrete parametric optimization. The parameters of the neural network must be optimized in such a way that the network minimizes the average cost functional by generating control actions at decision epochs. For this purpose, we have chosen one of the random search methods, namely simulated annealing, see, e.g., Aarts and Korst [24], Ahmed [25]. It is a heuristic method based on the concept of heating and controlled cooling in metallurgy and is normally used for global optimization problems in a large search space without any assumption on the form of the objective function. This algorithm was implemented by Gallo and Capozzi [26] specifically for the probabilistic scheduling problem. The algorithm will be adapted for a non-explicitly defined parametric function with a large number of variables defined on a discrete domain.
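As a rough illustration of the optimization idea described above, the following Python sketch applies simulated annealing to an integer-valued parameter vector whose cost is only available as a black-box function. The function name `simulated_annealing`, the ±1 neighbourhood, the geometric cooling schedule and all default values are assumptions made for this sketch; they are not taken from the algorithm adapted later in the paper.

```python
import math
import random


def simulated_annealing(cost, start, n_iter=2000, t0=10.0, alpha=0.995, seed=0):
    """Minimize a black-box cost over integer vectors (illustrative sketch).

    cost  -- callable taking a list of ints and returning a number
    start -- initial integer parameter vector
    t0, alpha -- hypothetical initial temperature and cooling factor
    """
    rng = random.Random(seed)
    current, current_cost = list(start), cost(start)
    best, best_cost = list(current), current_cost
    temp = t0
    for _ in range(n_iter):
        # Neighbour: change one randomly chosen coordinate by +1 or -1.
        cand = list(current)
        cand[rng.randrange(len(cand))] += rng.choice((-1, 1))
        cand_cost = cost(cand)
        delta = cand_cost - current_cost
        # Always accept improvements; accept a worse candidate
        # with probability exp(-delta / temp).
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            current, current_cost = cand, cand_cost
            if current_cost < best_cost:
                best, best_cost = list(current), current_cost
        temp *= alpha  # geometric cooling
    return best, best_cost
```

In the setting of this paper, `cost` would be replaced by a routine that runs the simulation with the given network parameters and returns the estimated average cost, so each evaluation is noisy and comparatively expensive.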

To verify the quality of the calculated optimal parameters of the neural network, the values of the average cost functional for the Markovian version of the queueing system are compared with the results obtained by solving the Markov decision problem (MDP). The general theory of MDP models is discussed in Puterman [27] and Tijms [28]. Details on the application of MDPs to controlled queueing systems with heterogeneous servers can be found in Efrosinin [29]. The optimal control policy and the corresponding objective function are calculated in the paper via the policy-iteration algorithm proposed in Howard [30] for an arbitrary finite-state Markov decision process. According to the MDP, the router in our system has to find an optimal control action in the state visited at a decision epoch with the aim of minimizing the long-run average cost. Note that for our queueing model under general assumptions a semi-Markov decision problem (SMDP) can be formulated. The SMDP is a more powerful model than the MDP, since the time spent by the system in each state before a transition is taken into account when calculating the objective function. Here, too, the objective function must be calculated by means of simulation. In this case, a reinforcement learning algorithm, e.g., Q-P-Learning, can be applied. The main problem of this approach is that many state-action pairs can remain unobserved under a deterministic control policy, and as a result the control actions in such states cannot be optimized. However, in our opinion, neural networks can also be used to solve this problem, which is a potential task for further research. The SMDP topic is outside the scope of this article, but we refer readers to the work by Gosavi [31], where one can find a very interesting overview of reinforcement learning and a well-designed classification of simulation-based optimization algorithms.

Summarising our research in this paper, we can highlight the following main contributions: (a) We propose a new controlled single-server system with parallel queues where the router uses a trained multi-layer neural network to perform scheduling control; (b) A simulated annealing method is adapted to optimize the weights and biases of the neural network with the aim of minimizing the average cost function, which can be calculated only via simulation; (c) The quality of the resulting optimal scheduling policy is verified by solving a Markov decision problem for the Markovian analog of the queueing system; (d) We provide a detailed numerical analysis of the optimal scheduling policy and discuss its sensitivity to the shape of the inter-arrival and service time distributions; (e) A distinctive feature of our paper is that the algorithms employed are presented in the form of pseudocode with detailed descriptions of the relevant steps.

The rest of the paper is organized as follows. Section 2 presents a formal description of the queueing system and optimization problem. Section 3 describes the Markov decision problem and the policy-iteration algorithm used to calculate the optimal scheduling policy. In Section 4, the event-based simulation procedure of the proposed queueing system is discussed. The neural network architecture, parametrization and training algorithm are summarized in Section 5. Section 6 presents the simulated annealing optimization algorithm. The numerical analysis is shown in Section 7 and concluding remarks are provided in Section 8.

The following notation is used in the sequel. Let $\mathbf{e}_j$ denote the vector of appropriate dimension with 1 in the $j$th position (counting from 0) and 0 elsewhere, and let $1_{\{A\}}$ denote the indicator function, which takes the value 1 if the event $A$ occurs and 0 otherwise. The notations $\min\{a\}$ and $\max\{a\}$ mean the minimum and maximum of the values that $a$ can assume, and $\arg\min\{a\}$, $\arg\max\{a\}$ denote the element index associated, respectively, with the minimum and maximum value.

2. Single-Server System with Parallel Queues

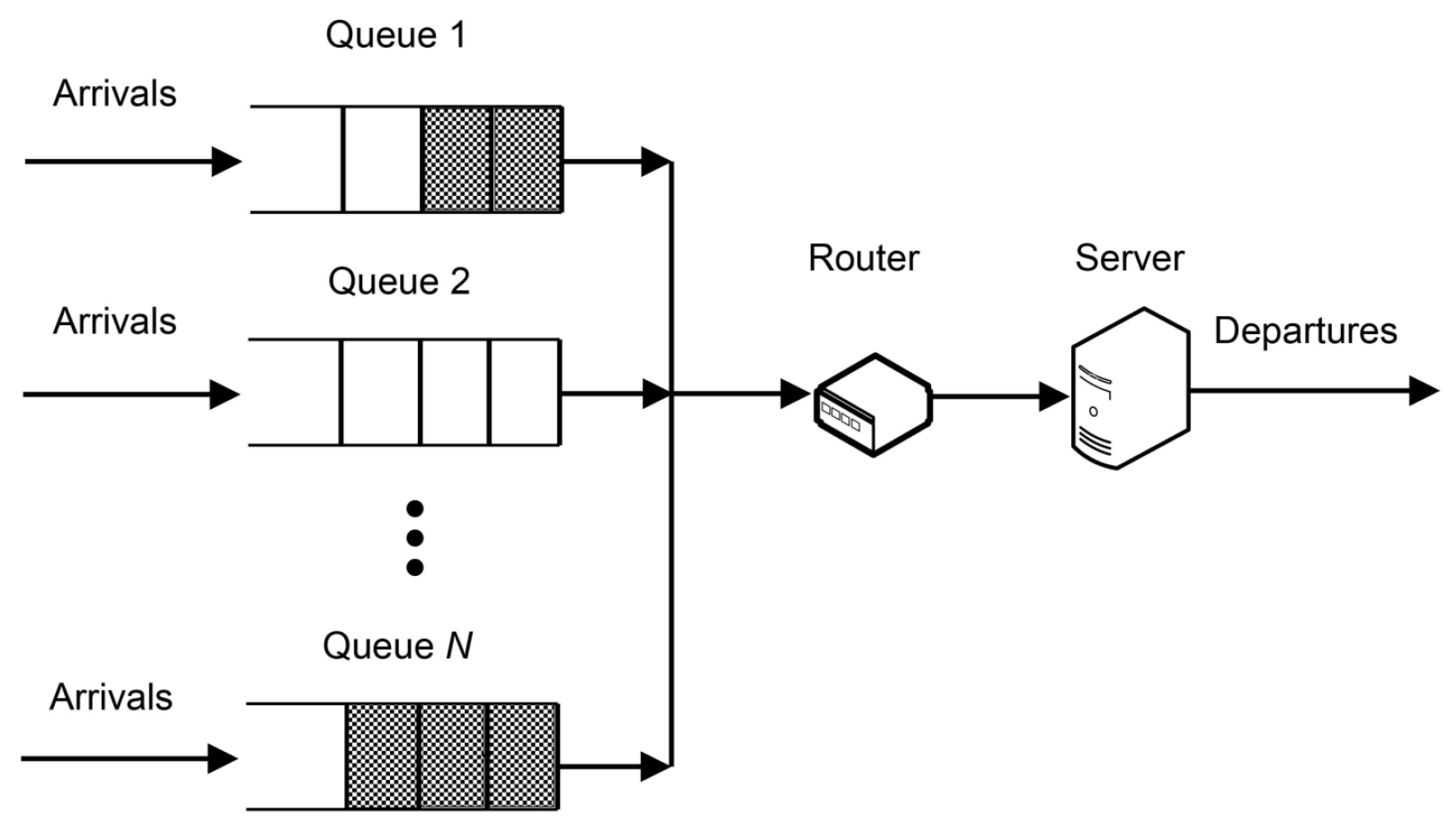

Consider a single-server system with

N parallel heterogeneous queues of the type

and a router for scheduling the server across the queues. Heterogeneity here refers to unequal distributions associated with inter-arrival and service times of customers in different queues, as well as unequal holding and switching costs. The queue that is currently being served is served exhaustively. Denote

as a queue index set. The proposed queueing system is shown schematically in

Figure 1.

Denote

,

as the time instants of arrivals to queue

i and

,

as the sequence of mutually independent and identically distributed inter-arrival times with a CDF

,

. Further denote by

,

, the service time of the

nth customer in the

ith queue. These random variables are also assumed to be mutually independent and generally distributed with CDF

,

. We assume that the random variables

and

have at least two first finite moments

The squared coefficients of variation are defined then, respectively, as

This characteristic will be required to compare the optimal scheduling policy for different types of inter-arrival and service time distributions. From now on it is assumed that the ergodicity condition is fulfilled, i.e., the traffic load

.
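The two quantities just mentioned can be computed from moments as in the small Python sketch below. It assumes the usual definitions: the squared coefficient of variation is the variance divided by the squared mean, and the traffic load of a single server fed by several queues is the sum over queues of mean service time divided by mean inter-arrival time. The function names are hypothetical and the paper's own formulas are not reproduced here.

```python
def squared_cv(m1, m2):
    """Squared coefficient of variation from the first two raw moments."""
    return (m2 - m1 ** 2) / m1 ** 2


def traffic_load(mean_interarrival, mean_service):
    """Total load of one server: sum of E[service] / E[inter-arrival] per queue."""
    return sum(b / a for a, b in zip(mean_interarrival, mean_service))
```

For an exponential distribution the squared coefficient of variation equals 1, and for a deterministic time it equals 0; a load below 1 corresponds to the ergodicity condition assumed in the text.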

Let

indicate the sequence number of the queue currently being serviced by the server at time

t, and

denote the number of customers in the

ith queue at time

t, where

. The states of the system at time

t are then given by a multidimensional random process

with a state space

Further in this section, the notations

and

will be used to identify the corresponding components of the vector state

. The cost structure consists of the holding cost

per unit of time the customer spends in queue

i and the switching cost

to switch the server from queue

i to queue

j.

It is assumed that the system states

are constantly monitored by the router, which determines the index of the queue to be served next after the current queue becomes empty. In the initial state, when the whole system is empty, the server is scheduled to a randomly chosen queue. The moment at which the $i$th queue being served becomes empty is called a decision epoch. At a decision epoch the router decides, by means of the trained neural network, whether to leave the server at the current queue or to dispatch it to another queue. Routing to an idle queue is also possible. Recall that the server allocated by the router to a certain queue serves it exhaustively, i.e., the queue can be changed only when it becomes empty. Denote by

an action space with elements

, where

a indicates the queue index to be served next after the current queue has been emptied. The subsets

of control actions in state

with

coincide with the action space

A. In all other states

x from

the subsets

include only a fictitious control action 0, which has no influence on the system’s behavior.

The router can operate according to some heuristic control policy. One example is the Longest Queue First (LQF) policy, a dynamic policy that prescribes at decision epochs to serve next the queue with the highest number of customers. If more than one queue has the same maximal number of customers, the queue index is selected randomly. Alternatively, the static $c\mu$-rule, which only needs to know whether a given queue is non-empty, can be used for scheduling. According to this control policy, the queue $i$ with the highest factor $c_i\mu_i$, which is the product of the holding cost and the service intensity, must be served next. In a system with totally symmetric queues the former policy is optimal according to [16]. The latter control policy is optimal due to [17] if there are no switching costs, i.e.,

. Otherwise, in case of positive switching costs and asymmetric or heterogeneous queues such policies are not optimal with respect to minimization of the average cost per unit of time.
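The two heuristic policies described above can be sketched in a few lines of Python. The function names, the 0-based queue indices and the choice to keep the server at its current queue when all queues are empty under the $c\mu$-rule are assumptions of this sketch rather than details stated in the text.

```python
import random


def lqf(q, rng=random):
    """Longest Queue First: pick a longest queue, breaking ties at random."""
    longest = max(q)
    return rng.choice([i for i, k in enumerate(q) if k == longest])


def c_mu_rule(q, c, mu, current):
    """Static c-mu rule: among non-empty queues pick the largest c[i] * mu[i].

    If every queue is empty the server stays at `current` (an assumption).
    """
    nonempty = [i for i, k in enumerate(q) if k > 0]
    if not nonempty:
        return current
    return max(nonempty, key=lambda i: c[i] * mu[i])
```

Note that `lqf` uses the queue lengths themselves, whereas `c_mu_rule` only uses the information whether each queue is empty, which is why the former is called dynamic and the latter static.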

The main idea of optimal scheduling in our general model is as follows. We equip the router with a trained neural network which informs it of the index of the next queue to which the server should be routed in order to achieve the stated optimization goal. Obviously, we can only train the neural network on available data sets, i.e., on some heuristic control policy, and then we need to optimize the network parameters, namely the weights and the biases, to find the optimal scheduling policy. Under the average cost criterion, the limit of the expected average cost over finite time intervals is minimized over a set of admissible policies. The control policy

is a stationary policy which prescribes the usage of a control action

whenever at a decision epoch the system state is

. Decision epochs arise whenever the queue being served becomes empty. For the controlled queueing system under study operating under a control policy $f$, the average cost per unit of time for the ergodic system is of the form

where

is the random number of switches from queue

i to queue

j in time interval

. Expectation

must be calculated with respect to the control policy

f. The policy

is said to be optimal when for any admissible policy

f,

Our approach focuses on a combination of simulation and neural network techniques. To verify the quality of the results obtained by solving the optimization problem (4), we formulate an appropriate Markov decision problem. Then we compute the optimal control policy together with the corresponding average cost

using a policy iteration algorithm, see, e.g., Howard [30], Puterman [27], Tijms [28], which will be discussed in detail in a subsequent section.

3. Markov Decision Problem Formulation

Assume that the inter-arrival and service times are exponentially distributed, i.e.,

and

,

. Under the Markovian assumption the process (1) is a continuous-time Markov chain with a state space $E$. The MDP associated with this Markov process is represented as a five-tuple:

where state space

E, action spaces

A and

have been already defined in the previous section.

- –

is a transition rate to go from state

x to state

y by choosing a control action

a is defined as

where

.

- –

is an immediate cost in state

by selecting an action

a,

Here the first summand denotes the total holding cost of customers in all parallel queues in state x which is independent of a control action. Let and if , , we get the number of customers in state x. The second summand includes the fixed cost for switching the server from the current queue j to the next queue with an index a.

The optimal control policy

and the corresponding average cost

are the solutions of the system of Bellman optimality equations,

where

B is a dynamic programming operator acting on value function

.

Proposition 1. The dynamic programming operator $B$ is defined as

Proof. From Markov decision theory, e.g., [27,28], it is known that for a continuous-time Markov chain the operator

B can be defined as

. This equality for the proposed system can be obviously rewritten in form (

8). In this equation, the first term

represents the immediate holding cost of customers in state

x. The second term by

describes the changes in value function due to new arrivals to the system. The third term by

for

stands for the value function by service completion in the queue

j where there are customers waiting for service. The last term by

for

describes also a service completion which leads now to the state with an empty queue when a control action must be performed. Hence only the last term occurs with a min operator. □

Note that the state space of the Markov decision model is countably infinite and the immediate costs are unbounded. The existence of the optimal stationary policy and the convergence of the policy iteration algorithm can be verified for the system under study in a similar way as in Özkan and Kharoufeh [32], where first the convergence of the value iteration algorithm for the equivalent discounted model is proved, and then, using the criteria proposed in Sennott [33], this result is extended to the policy iteration algorithm for the average cost criterion.

To solve Equation (8) in the policy iteration algorithm required to calculate the optimal control policy, we convert the multidimensional state space into a one-dimensional space by mapping

. The buffer sizes of the queues must be obviously truncated, namely

. Thereby the state

can be rewritten in the following form:

where

with

. The notation

will be used for the inverse function. In one-dimensional case the state transitions can be expressed as

The set of states

E in truncated model is finite with a cardinality

. The policy iteration algorithm (Algorithm 1) consists of two main steps: policy evaluation and policy improvement. In the first step, for a given initial control policy (for example, the LQF policy), a system of linear equations with constant coefficients must be solved. To make the system solvable, the value function

for one of the states can be assumed to be an arbitrary constant, e.g.,

in the first state with

and

. In this case we obtain from the optimality Equation (7) the equality

. The remaining equations can be solved numerically. As a solution we get the

values

and the current value of the average cost

$g$. In the policy improvement step, a control action $a$ that minimizes the test value on the right-hand side of Equation (7) must be found. The algorithm generates a sequence of control policies that converges to the optimal one. The algorithm stops when the control actions in two successive iterations coincide in every state. To avoid policy improvement bouncing between equally good control actions in a given state, one can simply keep the previous control action unchanged if its test value is no larger than that of any other action when determining the new policy. As an alternative convergence criterion, one can require that the change in the average cost between successive iterations be less than some given small value.
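The conversion of a multidimensional state into a single index can be illustrated by a mixed-radix encoding. The Python sketch below is one possible choice and is not claimed to coincide with the mapping used in the paper: the ordering of the components, the 0-based server position `d` and the names `encode`, `decode` and `n_states` are all assumptions made for illustration.

```python
def encode(d, q, buffers):
    """Map (d, q_1, ..., q_N) to one integer.

    d       -- index of the served queue, 0 <= d < N (0-based here)
    q       -- queue lengths, 0 <= q[i] <= buffers[i]
    buffers -- truncated buffer sizes
    """
    index = d
    for qi, b in zip(q, buffers):
        index = index * (b + 1) + qi
    return index


def decode(index, buffers):
    """Inverse of encode: recover (d, q) from the integer index."""
    q = []
    for b in reversed(buffers):
        index, qi = divmod(index, b + 1)
        q.append(qi)
    return index, tuple(reversed(q))


def n_states(buffers):
    """Number of states of the truncated model: N * prod(B_i + 1)."""
    total = len(buffers)
    for b in buffers:
        total *= b + 1
    return total
```

The product form of `n_states` shows why the state space grows quickly with the number of queues and with the buffer sizes, which limits the applicability of dynamic programming.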

Example 1. Consider the queueing system with

queues. The buffer sizes are equal to

,

. At these settings the number of states already reaches large values,

, which illustrates one of the significant restrictions on the application of dynamic programming to this type of control problem. The switching costs can be defined, for example, as

. The holding costs

for simplicity are assumed to be equal. The values of system parameters

,

,

and

are summarized in

Table 1 and reflect heterogeneity of the system parameters, i.e.,

and

.

| Algorithm 1 Policy iteration algorithm |

- 1:

procedure PIA() - 2:

▹ Initial policy -

- 3:

- 4:

▹ Policy evaluation - 5:

for do - 6:

-

- 7:

end for - 8:

▹ Policy improvement -

- 9:

if then return - 10:

else , go to step 4 - 11:

end if - 12:

end procedure

|
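The two steps of policy iteration can be sketched in Python for a small finite model. This is a simplified illustration, not a transcription of Algorithm 1: it assumes a discrete-time unichain MDP (for instance, the continuous-time model after uniformization), and the names `policy_iteration`, `P` and `c` as well as the tolerance `1e-12` are hypothetical. The value function is fixed to zero in state 0 to make the evaluation equations solvable, and the previous action is kept unless another one is strictly better.

```python
import numpy as np


def policy_iteration(P, c, policy=None, max_iter=100):
    """Average-cost policy iteration (illustrative sketch).

    P[a] -- (S, S) transition matrix under action a
    c[a] -- (S,) vector of one-step costs under action a
    Returns (policy, average cost g, value function v with v[0] = 0).
    """
    n_actions, n_states = len(P), P[0].shape[0]
    policy = np.zeros(n_states, dtype=int) if policy is None else np.array(policy)
    for _ in range(max_iter):
        # Policy evaluation: v(x) + g = c(x, f(x)) + sum_y P(y | x, f(x)) v(y).
        Pf = np.array([P[policy[x]][x] for x in range(n_states)])
        cf = np.array([c[policy[x]][x] for x in range(n_states)])
        A = np.eye(n_states) - Pf
        A[:, 0] = 1.0  # v[0] is fixed to 0, so column 0 carries the unknown g
        sol = np.linalg.solve(A, cf)
        g, v = sol[0], sol.copy()
        v[0] = 0.0
        # Policy improvement: minimize the test value in every state.
        test = np.array([c[a] + P[a] @ v for a in range(n_actions)])
        new_policy = policy.copy()
        for x in range(n_states):
            best = int(test[:, x].argmin())
            # Keep the old action unless the new one is strictly better.
            if test[best, x] < test[policy[x], x] - 1e-12:
                new_policy[x] = best
        if np.array_equal(new_policy, policy):
            break
        policy = new_policy
    return policy, g, v
```

For a model of the size discussed in Example 1 the dense linear solve used here would be the bottleneck; a sparse or iterative solver would be a natural replacement.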

These values correspond to the system load , that is, the system is stable. This value is chosen so that, on the one hand, the system is sufficiently loaded for states to appear in which all queues are non-empty and, on the other hand, the probability of losing an arriving customer remains small for the given rather small buffer sizes. The solution of the large system of optimality equations is carried out numerically. The optimized average cost is .

Using Algorithm 1, we calculate the optimal scheduling policy. For some of states with fixed number of customers in the third and the fourth queues and varied number of customers in the first two queues the control actions are listed in

Table 2. The first row of the table contains the values of the number of customers

and

in the second or first queue when a decision is made, respectively, when the first or second queue is emptied. The first column contains some selected states of the system for the fixed levels

and

of the third and fourth queues. As we can see, the optimal scheduling policy has a complex structure with a large number of thresholds, making it difficult to obtain any acceptable heuristic solution explicitly. To better visualise the complexity of the structure of the optimal control policy, the background of the table cells changes from darker to lighter grey as the queue index decreases. The $c\mu$-rule, as expected, is not optimal here,

that is almost two and a half times more than the value of the average cost under the optimal policy. When the values

and

are small, the router schedules the server to serve the queues with low service rates. In this case the switching costs are low as well. According to the optimal scheduling policy the initiative to route a server to the queue with a higher service rate and switching costs increases as the length of the first two queues increases.

Example 2. In this example we increase the arrival rates

as given in

Table 3. The other parameters are fixed at the same values as in the previous example. The load factor now is

, and the corresponding optimized average cost is

and

.

Table 4 with the scheduling policy shows that, as the system load increases, the router switches the server to queue 2 or to queue 1, which have higher service rates, at almost all queue lengths

and

, respectively.

4. Event-Based Simulation for General Model

We use an event-based simulation to simulate the proposed queueing system. This technique is suitable for random process evaluation where it is sufficient to have information about the time instants when changes in states occur. Such changes will be referred to as events. Note that although simulation modelling is extensively used in queueing theory, many papers lack explicitly described algorithms that readers can use for independent research. For more information on simulation methods with applications to single- and multi-server queueing systems, we can recommend Ebert et al. [34] and Franzl [35]. In this regard, it will certainly not be superfluous to present and discuss here an algorithm for the system simulation which is not difficult to adapt to other similar systems.

In our case, the events are the arrivals to one of N parallel queues and the departures of customers from the queue d currently being served by the server. The present time is selected as a global time reference.

In Figure 2, on the time axis we mark the moments of arrival of new customers and the moments of their service in a fixed queue with index

d by means of arrows above and below the axis, respectively. The dotted arrows indicate the arrival of new customers in other queues. The successive events are denoted by

and the corresponding time moments by

. In the proposed queue simulation Algorithm 2 all the times are referred to the present time. Suppose that at the present moment of time there is a new arrival to the queue with the number

d, which is serviced by the server, i.e.,

. Denote by

the holding time of the system in state

x up to the occurrence of the event

. According to the time schema the holding time in a previous state is defined as

, where

is a remaining inter-arrival time to the queue

d,

stands for the generated service time after the event

of the previously occurred departure and the dots replace the time intervals associated with arrivals of customers in other queues. The next event is determined then by subtracting the holding time

from all event time intervals. In this case the current event is a new arrival. Thus, the holding time

in state up to the event

of an arrival to some other queue, not equal to

d is calculated by

. The subsequent holding times are calculated as follows,

, i.e., the event

is then the next departure from queue

d,

, where

is the next generated service time,

and

is a remaining inter-arrival time for the next arrival to the queue

d. Continuing the process in a similar manner, all holding times of the system in the corresponding states are evaluated. By summing up the times

we obtain the total simulation running time of the system

. The average cost per unit of time is then obtained by division of the accumulated cost by the time

.

The time instants of arrival events to the queue

are stored in vector variable

and the departure events in the queue with a number

q in

. Algorithm 2 contains the pseudo-code of the main elements of the event-based simulation procedure.

| Algorithm 2 Queue simulation algorithm |

- 1:

procedure QSIM(, , ,) ▹ Initialization - 2:

- 3:

- 4:

while do ▹ State recording - 5:

- 6:

- 7:

for do - 8:

- 9:

end for - 10:

if then - 11:

▹ Simulation time - 12:

▹ Sum up the cost - 13:

end if - 14:

- 15:

for do - 16:

if then return - 17:

▹ Generate interarrival time - 18:

, - 19:

if then return - 20:

▹ Generate service time - 21:

end if - 22:

end if - 23:

if then return - 24:

▹ Index of the current departure - 25:

▹ Remove current departure - 26:

- 27:

if then return - 28:

- 29:

end if - 30:

if then return - 31:

, ▹ New server scheduling - 32:

- 33:

- 34:

if then return - 35:

▹ Generate service time - 36:

end if - 37:

end if - 38:

end if - 39:

if then return - 40:

▹ Generate inter-arrival time - 41:

, - 42:

end if - 43:

end for - 44:

end while - 45:

- 46:

end procedure

|
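A compact Python sketch of such an event-based simulation is given below. It is a simplified illustration and not a line-by-line transcription of Algorithm 2: the function name `simulate`, its argument layout, the 0-based queue indices and the stopping rule by number of events are assumptions of this sketch. As in the model description, decisions are taken only when the served queue becomes empty, and a server routed to an empty queue waits there until a customer arrives.

```python
import math
import random


def simulate(policy, arrival, service, hold_cost, switch_cost, n_events, seed=0):
    """Estimate the average cost per unit of time (illustrative sketch).

    policy(d, q)   -- queue to serve next, called when queue d has been emptied
    arrival[i](r)  -- draws one inter-arrival time for queue i from generator r
    service[i](r)  -- draws one service time for queue i from generator r
    hold_cost[i]   -- holding cost per customer and unit of time in queue i
    switch_cost[i][j] -- cost of moving the server from queue i to queue j
    """
    rng = random.Random(seed)
    n = len(arrival)
    q = [0] * n                      # customers per queue (incl. the one in service)
    d = rng.randrange(n)             # empty system: server starts at a random queue
    next_arrival = [arrival[i](rng) for i in range(n)]
    next_departure = math.inf        # no service in progress
    now, cost = 0.0, 0.0
    for _ in range(n_events):
        i = min(range(n), key=next_arrival.__getitem__)
        t = min(next_arrival[i], next_departure)
        # Holding cost accumulated while the state stayed unchanged.
        cost += (t - now) * sum(c * k for c, k in zip(hold_cost, q))
        now = t
        if next_departure <= next_arrival[i]:        # departure from queue d
            q[d] -= 1
            if q[d] == 0:                            # decision epoch
                a = policy(d, tuple(q))
                cost += switch_cost[d][a]
                d = a
            next_departure = now + service[d](rng) if q[d] > 0 else math.inf
        else:                                        # arrival to queue i
            q[i] += 1
            next_arrival[i] = now + arrival[i](rng)
            if i == d and q[d] == 1:                 # idle server starts service
                next_departure = now + service[d](rng)
    return cost / now
```

Passing the samplers as callables keeps the sketch independent of the distribution type, so exponential, deterministic or any other inter-arrival and service times can be plugged in without changing the event loop.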

5. Neural Network Architecture

In our model, we propose to equip the router with a trained neural network. This network determines the index of the queue that the server will serve next, based on the information about the system state at a decision epoch when the server finishes service of the current queue. We have chosen a simple architecture for the neural network consisting of only two layers in such a way that, on the one hand, it has a small number of parameters for further optimization and, on the other hand, the classification accuracy on some fixed initial control policy is at least 95%. The proposed neural network has one linear layer, which represents an affine transformation, and a softmax normalization layer, as illustrated in Figure 3.

The input includes

neurons according to the system state

, where

. The neuron 0 gets the information on

, the

ith neuron for

gets the information on the state of

ith queue. When the server finishes service at queue

d, then the neural network classifies this state to one of

N classes which defines a current control action

in state

x. The hidden linear layer consists of

N neurons

which are connected with the input neurons via the system of linear equations

or in matrix form

with

and

, where

with

are, respectively, the matrix of weights and the vector of biases of the given neural network which must be estimated by means of the training set. The softmax layer

is a final layer of the multiclass classification. The softmax layer generates as an output the vector of

N estimated probabilities of the input sample

, where the

ith entry is the likelihood that

x belongs to class

i. The vector

y is normalized by the transformation

The class number is then defined as

. Hence, the output

z is a mapping of the form

, where

is the parameter vector of the neural network which includes all entries of the weight matrix

and the bias vector

, i.e.,

The values of the parameter vector

of the initial control policy, which in the next section will be used as a starting solution for optimization procedure, are obtained by training the neural network on some known heuristic control policy. In our case this policy is the LQF. In the training phase the following optimization problem must be solved given the training set

,

where a non-negative loss function

with

takes the value 0 only if the class of the

kth element of a sample is

i, i.e.,

. The problem (

12) can be solved in a usual way by the stochastic gradient descent method, where a single learning rate

to update all parameters is maintained. The corresponding iterative expression is given below,

where

is a Nabla-operator defining the gradient of the function relative to the parameter vector

. In our calculations we use the adaptive moment estimation algorithm (ADAM) to solve the problem (

12). It updates iteratively the parameters of the neural network based on training data. The ADAM calculates independent adaptive learning rates for the elements of

by evaluating the first-moment and second moment estimation of the gradient. The method is simple to implement, computationally efficient, requires little memory and is invariant to diagonal changes in gradients. The further detailed information regarding ADAM algorithm can be found in Kingma and Ba [

36]. Although the ADAM algorithm is described in various sources, we reproduce it here for completeness. The main steps required for iteratively updating the parameter vector

are summarized in the Algorithm 3.

The parameters of Algorithm 3 are fixed to , , , and . The classification accuracy of the proposed neural network trained on the LQF policy is over 97%. The test phases of the trained network were conducted on system states with a queue length of up to 100 customers per queue. Thus, this starting network can be used to generate control actions of the initial control policy for the subsequent optimization of the network parameters.
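The classifier described in this section (an affine layer followed by softmax normalization and selection of the most likely class) can be sketched in a few lines of Python. The sketch assumes that the input is the state vector consisting of the index of the current queue followed by the N queue states, so that the weight matrix has N rows and N + 1 columns. The helper names and the hand-built weights in `lqf_like_parameters` are hypothetical and are not the trained values reported in the paper.

```python
import numpy as np

def softmax(y):
    """Normalize a score vector into probabilities (shifted for stability)."""
    e = np.exp(y - np.max(y))
    return e / e.sum()

def policy_action(W, b, x):
    """Return the 1-based class (queue index) and the class probabilities."""
    y = W @ x + b                 # affine (linear) layer
    z = softmax(y)                # softmax layer
    return int(np.argmax(z)) + 1, z

def pack(W, b):
    """Collect all weights and biases in one parameter vector."""
    return np.concatenate([W.ravel(), b])

def unpack(theta, n):
    """Inverse of pack for n queues: W is n x (n + 1), b has n entries."""
    W = theta[: n * (n + 1)].reshape(n, n + 1)
    b = theta[n * (n + 1):]
    return W, b

def lqf_like_parameters(n):
    """Illustrative weights (assumption): score of queue i equals its length."""
    W = np.hstack([np.zeros((n, 1)), np.eye(n)])
    return W, np.zeros(n)
```

With `W, b = lqf_like_parameters(3)` and the state `x = np.array([1.0, 3.0, 7.0, 2.0])`, the call `policy_action(W, b, x)` selects the second queue, which is the longest one. Packing the weights and biases into a single vector is convenient because the optimization procedure of the next section perturbs individual entries of that vector.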

| Algorithm 3 Adaptive moment estimation algorithm |

- 1: procedure ADAM(,,,,)
- 2: ▹ Initialisation of the moment 1
- 3: ▹ Initialisation of the moment 2
- 4: ▹ Convergence index
- 5:
- 6: while do
- 7:
- 8: ▹ Calculate the gradient at step n
- 9: ▹ Update the biased first moment
- 10: ▹ Update the biased second moment
- 11: ▹ The bias-corrected first moment
- 12: ▹ The bias-corrected second moment
- 13: ▹ Update the parameter vector
- 14: if then return
- 15: ▹ Check the convergence
- 16: end if
- 17: end while
- 18: end procedure
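The update steps listed in Algorithm 3 follow the standard ADAM scheme of Kingma and Ba, which can be sketched as follows. The default values below are the ones suggested in that reference and are not necessarily the settings used in this paper. The stopping rule based on the gradient norm and the names `grad`, `tol` and `max_iter` are assumptions of this sketch.

```python
import numpy as np

def adam(grad, theta0, alpha=0.001, beta1=0.9, beta2=0.999, eps=1e-8,
         tol=1e-8, max_iter=10000):
    """Minimize a function given its gradient `grad` with the ADAM update rule."""
    theta = np.asarray(theta0, dtype=float).copy()
    m = np.zeros_like(theta)          # first moment estimate
    v = np.zeros_like(theta)          # second moment estimate
    for n in range(1, max_iter + 1):
        g = grad(theta)                            # gradient at step n
        if np.linalg.norm(g) < tol:                # assumed convergence check
            break
        m = beta1 * m + (1.0 - beta1) * g          # biased first moment
        v = beta2 * v + (1.0 - beta2) * g * g      # biased second moment
        m_hat = m / (1.0 - beta1 ** n)             # bias-corrected first moment
        v_hat = v / (1.0 - beta2 ** n)             # bias-corrected second moment
        theta = theta - alpha * m_hat / (np.sqrt(v_hat) + eps)
    return theta
```

For example, `adam(lambda th: 2.0 * (th - c), np.zeros(2), alpha=0.01, max_iter=5000)` with `c = np.array([1.0, -2.0])` moves the parameters towards the minimizer `c` of a simple quadratic. In the training problem of this section, `grad` would instead return the gradient of the classification loss on a mini-batch of the training set.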

6. Optimization of the Neural-Network-Based Scheduling Policy

Denote by

the known parameter vector of the trained neural network as was defined in (

11). The function

means the average cost for the queueing system where the router chooses an action obtained from the trained neural network with the parameter vector

. We adapt further a simulated annealing method described in Algorithm 4 for discrete stochastic optimization of the average cost function

with a multidimensional parameter vector

. This algorithm is quite straightforward. It needs some starting solution, and in each iteration it evaluates the objective function at a randomly selected neighbor of the current parameter values. If the neighbor turns out to be better than the current solution with respect to the value of the objective function, the algorithm replaces the current solution with the new one. If the neighbor is worse, the algorithm keeps the current solution with a high probability and accepts the new one with a specified low probability.

Simulated annealing requires a finite discrete space for the parameters of the optimized function. It is assumed that all weights and biases of the neural network summarized in the vector take values in the interval with a lower bound and an upper bound . Moreover, this interval is quantized in such a way that , , takes only discrete values , , where is a quantization level. Note that the domains for the elements of the parameter vector can be specified separately, and the values of the vector obtained by training the neural network on the optimal policy of the Markov model are suitable for determining the possible maximum and minimum bounds. In this case it is possible to achieve faster convergence of Algorithm 4 to the optimal value.
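As an illustration of the discrete parameter space, the sketch below builds an equally spaced grid on a bounded interval, maps an arbitrary parameter vector onto that grid, and perturbs one randomly chosen element. The uniform spacing, the clipping of out-of-range values and the names `grid_values`, `quantize`, `perturb` and `levels` are assumptions of this sketch and may differ from the exact quantization used in the paper.

```python
import random

def grid_values(a, b, levels):
    """Return the levels + 1 equally spaced admissible values in [a, b]."""
    step = (b - a) / levels
    return [a + j * step for j in range(levels + 1)]

def quantize(theta, a, b, levels):
    """Map every element of theta to the nearest admissible grid value."""
    step = (b - a) / levels
    out = []
    for w in theta:
        clipped = min(max(w, a), b)          # keep the value inside [a, b]
        j = round((clipped - a) / step)      # index of the nearest grid point
        out.append(a + j * step)
    return out

def perturb(theta, a, b, levels, rng=random):
    """Replace one randomly chosen element by a different grid value."""
    grid = grid_values(a, b, levels)
    i = rng.randrange(len(theta))
    new = list(theta)
    new[i] = rng.choice([g for g in grid if g != theta[i]])
    return new, i
```

For instance, `quantize([0.3, -2.0, 0.8], -1.0, 1.0, 4)` gives `[0.5, -1.0, 1.0]`. Returning the perturbed index `i` makes it easy to reverse the change if the new vector is rejected.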

| Algorithm 4 Simulated annealing algorithm |

- 1: procedure SA(,,m,,,,,) ▹ Initialisation
- 2:
- 3:
- 4:
- 5: ,
- 6: while do
- 7: ▹ Perturbation
- 8:
- 9:
- 10:
- 11: ▹ Acceptance
- 12: if then return
- 13:
- 14: else
- 15: end if
- 16:
- 17: if then return ,
- 18: else ,
- 19: end if
- 20: end while
- 21: end procedure

Since the average cost function

g cannot be calculated analytically, a simulation technique is used to evaluate it. As shown in Algorithm 4, at each iteration, at the step where the current solution can be accepted with a given probability, we need to calculate the difference between the objective functions. Because this function can only be calculated numerically, it is necessary to check at each iteration of the algorithm whether this difference is statistically significant. The algorithm is modified in such a way that the

t-test for two samples is used to compare the expected values of two normally distributed samples with unknown but equal variances. Denote by

and

, respectively, the current and the modified parameter vector and by

two corresponding first empirical moments of the objective function. According to the

t-test, the null hypothesis, which states that the average cost for the modified vector is statistically smaller than that of the previous solution, is rejected if

where

stands for the

q-quantile of the

t-distribution and statistics

is defined as

with empirical variances

and

.
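For reference, the classical two-sample statistic with pooled variance, which corresponds to the assumption of unknown but equal variances, can be computed as in the following sketch. The sign convention (first sample minus second sample) and the function name are assumptions. The quantile of the t-distribution with the returned degrees of freedom has to be taken from a table or a statistics library.

```python
from math import sqrt
from statistics import mean, variance

def pooled_t_statistic(sample_a, sample_b):
    """Return the two-sample t statistic and its degrees of freedom."""
    m1, m2 = len(sample_a), len(sample_b)
    dof = m1 + m2 - 2
    # Pooled estimate of the common variance from both empirical variances
    s2 = ((m1 - 1) * variance(sample_a) + (m2 - 1) * variance(sample_b)) / dof
    t = (mean(sample_a) - mean(sample_b)) / sqrt(s2 * (1.0 / m1 + 1.0 / m2))
    return t, dof
```

In the optimization loop, `sample_a` and `sample_b` would be the samples of the average cost obtained by simulation for the current and the modified parameter vector.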

Below, we briefly describe the main steps of the Algorithm 4. At the initialisation step of the algorithm, the neural network is trained based on the LQF control policy. The parameter vector is then equal to the initial vector

to be optimized. The simulation Algorithm 2 is then used to calculate the initial sample

with

of the average cost function for a given initial parameter vector

and the corresponding first empirical moment

. These values are set as the current solution

and

to the optimization problem (

13). At the perturbation step, a randomly chosen element of the previous parameter vector

must be randomly perturbed on the specified set

of the admissible discrete domain. For the new parameter vector

the next sample

of average costs must be calculated together with the first empirical moment

. At the acceptance step, a new policy

can be accepted as a current solution with a probability

p defined as

where

is the temperature at the

nth iteration. If a new policy

is accepted, then it is defined together with a corresponding average cost

as a current solution. Otherwise, the last change in the parameter vector

must be reversed, i.e.,

and the sample size

m for calculating the first moments is updated. Then the perturbation step must be repeated. For termination of the algorithm the stopping criteria

or

is used.
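The perturbation and acceptance steps described above can be sketched as follows. This is a simplified illustration and not the exact Algorithm 4: it uses the classical Metropolis acceptance rule, a geometric cooling schedule, a fixed sample size `m` and a stopping rule based on the iteration count and a minimal temperature, all of which are assumptions. It also omits the t-test modification and additionally keeps track of the best solution seen so far.

```python
import math
import random

def simulated_annealing(sample_cost, theta0, grid, m=10, t0=1.0, cooling=0.95,
                        t_min=1e-3, max_iter=1000, seed=0):
    """Minimize the mean of a noisy cost over parameter vectors on a grid.

    sample_cost(theta, rng) returns one (possibly random) cost observation.
    """
    rng = random.Random(seed)

    def mean_cost(theta):
        # First empirical moment of the cost based on m observations
        return sum(sample_cost(theta, rng) for _ in range(m)) / m

    theta = list(theta0)
    g_cur = mean_cost(theta)
    best, g_best = list(theta), g_cur
    temp, n = t0, 0
    while n < max_iter and temp > t_min:
        i = rng.randrange(len(theta))         # perturbation of one element
        old = theta[i]
        theta[i] = rng.choice(grid)
        g_new = mean_cost(theta)
        # Metropolis rule: always accept improvements, sometimes accept worse
        p = 1.0 if g_new <= g_cur else math.exp(-(g_new - g_cur) / temp)
        if rng.random() < p:
            g_cur = g_new
            if g_cur < g_best:
                best, g_best = list(theta), g_cur
        else:
            theta[i] = old                    # reverse the last change
        temp *= cooling                       # assumed geometric cooling
        n += 1
    return best, g_best
```

In the setting of this paper, `sample_cost` would run one simulation of the queueing system with the neural network defined by `theta` and return the resulting average cost per unit of time.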

We note that the classical simulated annealing method generates for some function

a sample

which for the constant temperature

can be interpreted as a realization of a homogeneous Markov chain

with transition probabilities

where

is a uniformly distributed random variable on the interval

. It is easy to show that the modified transition probabilities, where the objective function is calculated numerically, converge to the transition probabilities (

17) which in turn can guarantee the convergence to an optimal solution.

Proposition 2. The acceptance probability satisfies the limit relation

Proof. The probability

can be obviously rewritten as

where

. Then the following relation holds,

due to the strong law of large numbers and the fact that for

the sample size

and hence

□

7. Numerical Analysis

Consider the queueing system with

. We first analyse a Markov model, where the parallel queues are of the type

with

and

,

, the coefficient of variation

. The values of system parameters

and

are fixed as in examples 1 and 2, which we will refer to as Cases 1 and 2. We compare the optimization results obtained by combining the simulation, the neural network and the simulated annealing algorithm with the results evaluated by the policy iteration algorithm. In Cases 1 and 2, the weights and the biases of the neural network trained on the optimal scheduling policy calculated by the PIA take, respectively, the following values

On the basis of these values, we can estimate, for the simulated annealing Algorithm 4, the domain or solution space for each element of the vector . For simplicity, in our experiments we set common boundaries for all elements as and . The length of the increment implies the quantization level . Next, we set , , and . As an initial vector we take the parameter vector obtained by training the neural network on the LQF policy. For the initial control policy, one could also choose the policy obtained by Algorithm 1. However, we would like to check the convergence of the algorithm when the initial solution is not the best one, since in the general case one usually chooses either some heuristic policy or an arbitrary one. The empirical average cost at each iteration step is calculated based on a sample of size . The accumulation of sample data in the QSIM Algorithm 2 starts after 1000 customers have entered the system and is completed after 5000 customers have entered the system.

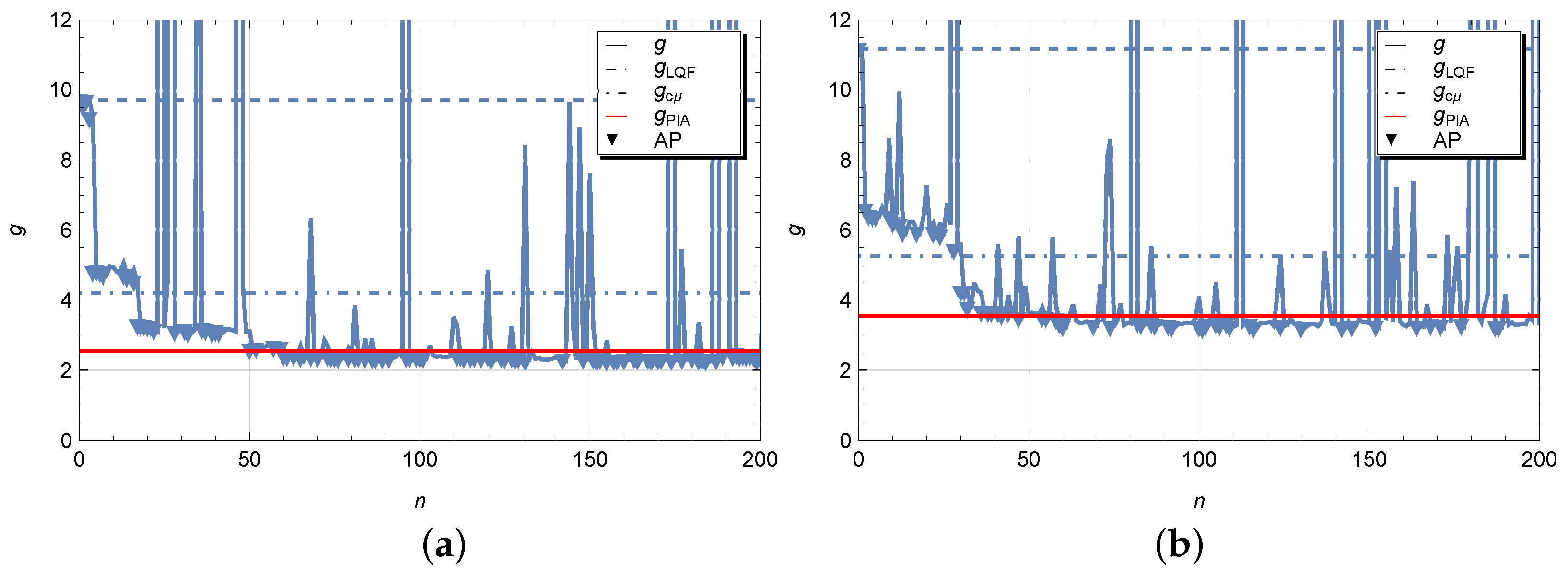

Application of the Algorithm 4 to a Markov model leads to the following optimal solutions:

- Case 1:

Optimal solution is reached at

,

,

- Case 2:

Optimal solution is reached at

,

,

We see that the elements of matrices

and

are different, but they are markedly similar in terms of the elements with dominant values. The optimization process of the scheduling policy is illustrated in

Figure 4. In addition to values of the average cost function obtained at each iteration step of the simulated annealing algorithm, the figures show horizontal dotted and dash-dotted lines, respectively, at level of the average cost

and

in figure labelled by (a) and

and

in figure labelled by (b) for the LQF and

heuristic policies. As expected, the non-optimal LQF control policy leads to a high average cost. The results look much better for policy

, but still the presence of switching costs significantly worsens the performance of this policy. The red horizontal line indicates the average cost

and

obtained by solving the Markov decision problem using the policy iteration Algorithm 1. We can observe that the values are quite close to those obtained by random search. However, some small difference may be due, firstly, to the fact that the simulation is used for calculations and the results have a certain scattering, and, secondly, we do not exclude the influence of boundary states in the Markov model, where a buffer size truncation has been used. Testing the hypothesis for the difference between the optimal average costs

and

at least for our model showed the values to be statistically equivalent. In the figures, we have also marked with triangles those iteration steps with accepted policy (AP) where the perturbed parameter vector has been accepted. The number of accepted points in Cases 1 and 2 is equal to 98 and 110, respectively. From the above results for exponentially distributed times we can make the following observations. If the parameter vector

with elements

and

is used for the initial scheduling policy, then one can expect faster convergence of the simulated annealing algorithm to the optimal solution, which was confirmed numerically. If an optimal policy for a controlled Markov process is not available, e.g., when the number of queues is too large, it is reasonable to use the static

-rule as an initial policy.

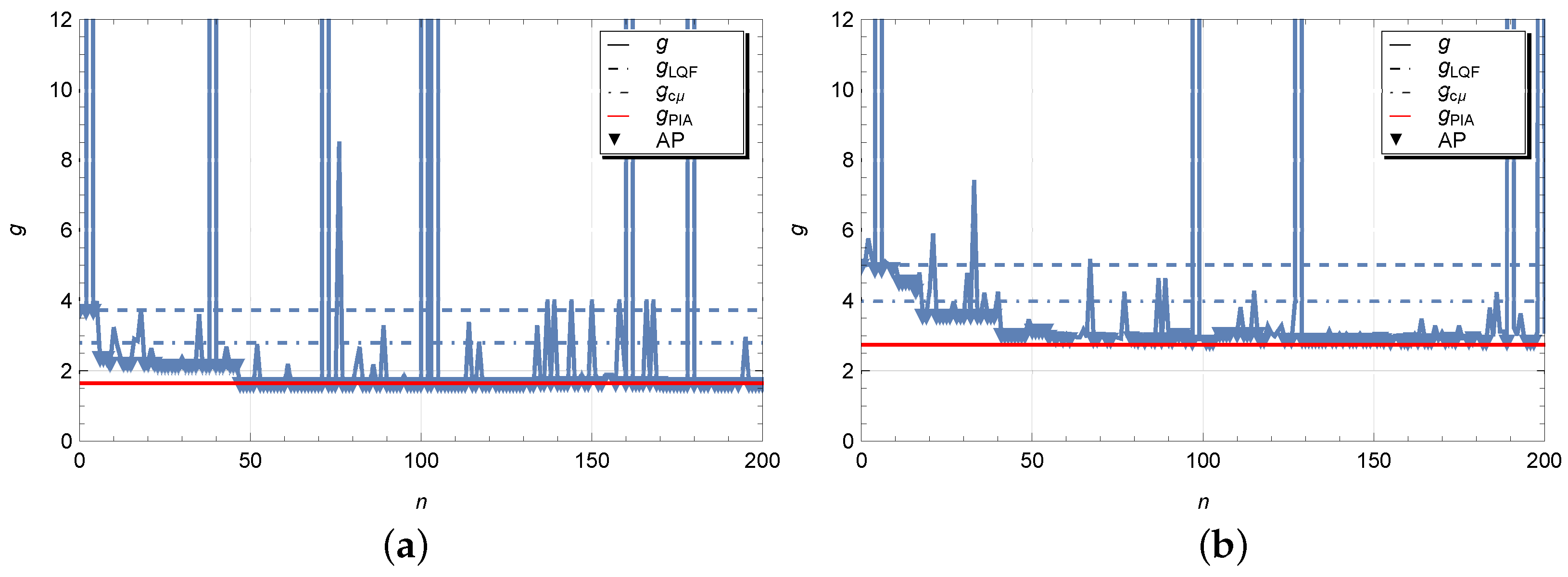

Figure 5 displays experiments realized for the queues of the type

with deterministic inter-arrival and service times which are equal to corresponding mean values

and

of the Markov model. Here the coefficient

. The SA algorithm converges to the values

and

, respectively, for Case 1 and 2 with the following optimal policies,

- Case 1:

- Case 2:

The average costs for heuristic policies take the values , , and , , .

It is observed that the optimal policy obtained by the SA algorithm is quite close to that obtained by the PIA. Nevertheless, certain deviations in the value of the average costs may appear from experiment to experiment. Therefore, it is of interest to check whether such differences are statistically significant.

Next, we analyse how sensitive the optimal policy obtained by the SA algorithm in the exponential case is to the shape of the inter-arrival and service time distributions. The following distributions will be used to calculate the optimal control policy in the non-exponential case: gamma

, log-normal

and Pareto

distributions, where the last two belong to the class of heavy-tailed distributions. The parameters of these distributions are chosen so that their first and second moments coincide. Moreover, the first moments are the same as for the exponential distributions. The distribution parameters are therefore expressed as functions of the corresponding sample moments, as in the method of moments used for parameter estimation. In the following experiments, the first moments of the inter-arrival and service times are fixed at the values of Case 2, and the squared coefficient of variation is varied as

and

. Denote by

a sample random variable

Z distributed according to the proposed distributions with the first two sample moments

,

and squared empirical coefficient of variation

. Then for the gamma distribution

with a PDF

the parameters

and

satisfy the relations,

In case of the lognormal distribution

with a PDF

the parameters

and

are calculated by

In case of a Pareto distribution

with a PDF

the parameters

and

are calculated by relations

Parameters of the proposed probability distributions are listed in

Table 5 and

Table 6, respectively, for inter-arrival and service time distributions.
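The moment-matching step can be illustrated with the standard parametrizations of the three distributions. The sketch assumes a gamma distribution with shape and rate parameters, a log-normal distribution with the parameters of the underlying normal distribution, and a Pareto distribution of the first kind with shape and scale parameters. The parametrizations used in the paper may differ, so the formulas below are a sketch under these assumptions and are not a restatement of the relations given above.

```python
from math import log, sqrt

def gamma_params(mean, cv2):
    """Shape and rate of a gamma law with given mean and squared CV."""
    shape = 1.0 / cv2              # CV^2 = 1 / shape
    rate = shape / mean            # mean = shape / rate
    return shape, rate

def lognormal_params(mean, cv2):
    """Parameters (mu, sigma) of a log-normal law with given mean and CV^2."""
    sigma2 = log(1.0 + cv2)        # CV^2 = exp(sigma^2) - 1
    mu = log(mean) - sigma2 / 2.0  # mean = exp(mu + sigma^2 / 2)
    return mu, sqrt(sigma2)

def pareto_params(mean, cv2):
    """Shape and scale of a Pareto (type I) law with given mean and CV^2."""
    shape = 1.0 + sqrt(1.0 + 1.0 / cv2)   # CV^2 = 1 / (shape * (shape - 2))
    scale = mean * (shape - 1.0) / shape  # mean = shape * scale / (shape - 1)
    return shape, scale
```

For a mean of 2 and a squared coefficient of variation of 4, `gamma_params(2.0, 4.0)` returns the shape 0.25 and the rate 0.125. Note that the Pareto shape obtained this way always exceeds 2, so the second moment is finite.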

The sensitivity of the optimal control policy to the shape of the distributions is tested by means of a two-sided

t-test for samples with unknown but equal variances. Let

and

be the samples of the average cost values obtained for the optimal control policy in the case of exponentially distributed times and for the system with the proposed distributions of the inter-arrival and service times. These samples of size

m are associated with the normally distributed random variables

and

, where

and

. The test is defined then as

where statistics

is calculated by (

16). The results of tests in form of the

p-value, the values of the average costs

and

together with their

confidence intervals are summarized in

Table 7 and

Table 8 for the systems with different inter-arrival and service time distributions with smaller and greater levels of dispersion around the mean, i.e., for

in

Table 7 and

in

Table 8. Each table cell contains two rows with the values for the average costs

and

together with confidence boundaries, and the third row has the

p-value.

From the numerical examples, it is observed that the shape of the distributions, expressed through the coefficient of variation, has a strong influence on the value of the average cost functions and . In almost all cases, the average cost increases significantly when the coefficient of variation increases. Only in the case of the Pareto distribution for the inter-arrival and service times is the change in values not significant. However, an examination of the entries in the last two tables reveals that in all experiments the p-value exceeds the significance level of . Furthermore, it is worth noting that in most cases the margin is sufficiently large. In this regard, the statistical test fails to reject the null hypothesis at the given significance level; in other words, the average cost values do not differ significantly and the corresponding optimal control policies can be regarded as equivalent. Therefore, at least within the framework of the experiments conducted, we can state that the optimal scheduling policy is insensitive to the shape of the inter-arrival and service time distributions given that the first moments are equal. For practical purposes, in general queueing systems one can either apply the proposed optimization method, or use the control policy optimized for the equivalent exponential model as a suboptimal scheduling policy.