Abstract

This paper proposes a near-central camera model and its solution approach. ‘Near-central’ refers to cases in which the rays neither converge to a single point (central cases) nor have severely arbitrary directions (non-central cases). Conventional calibration methods are difficult to apply in such cases. Although the generalized camera model can be applied, it requires dense observation points for accurate calibration and is computationally expensive within an iterative projection framework. To address this problem, we developed a noniterative ray correction method based on sparse observation points. First, we established a smoothed three-dimensional (3D) residual framework built on a backbone model, which avoids the iterative framework. Second, we interpolated the residual by applying local inverse distance weighting to the nearest neighbors of a given point. The smoothed 3D residual vectors prevent the excessive computation and the loss of accuracy that can occur in inverse projection. Moreover, 3D vectors represent ray directions more accurately than 2D entities. Synthetic experiments show that the proposed method achieves fast and accurate calibration: the depth error is reduced by approximately 63% on the bumpy shield dataset, and the proposed approach is two orders of magnitude faster than the iterative methods.

1. Introduction

Various camera models and corresponding calibration methods have been developed to identify the relation between a two-dimensional (2D) image pixel and a three-dimensional (3D) world point. Generally, this relation is defined as a 3D-to-2D perspective projection. Foley et al. [1], Brown et al. [2], and Zhang et al. [3] proposed a pinhole camera model with the radial and tangential distortions of real lenses, which is widely used in camera calibration. Scaramuzza et al. [4] and Kannala et al. [5] modeled fisheye cameras using polynomial terms with additional distortion terms. Usenko et al. [6] proposed a double-sphere model with closed-form projection and back-projection to reduce the computational complexity of the projections. Jin et al. [7] showed that camera calibration generally requires a large number of images, although a point-to-point approach can achieve calibration with dozens of images.

Tezaur et al. [8] analyzed the ray characteristics of a fisheye lens, in which the rays converge on one axis rather than at a single point. The authors extended the fisheye camera models of Scaramuzza et al. [4] and Kannala et al. [5] by adding terms that compensate for the convergence assumption of those models.

Notably, the above-mentioned methods can only be applied to simple camera systems. Other methods have been developed for more complex systems, such as cameras behind transparent shields or reflective mirrors. Treibitz et al. [9] explicitly modeled a flat transparent shield, and Yoon et al. [10] used an explicit model that applies to both flat and curved shields. Pável et al. [11] used radial basis functions to model the uneven outer surface of a shield.

Geyer et al. [12] proposed a unifying model for various configurations of catadioptric cameras, that is, camera systems that combine lenses and mirrors. When the camera and mirror are aligned, the rays converge at one point; otherwise, they do not. However, this model cannot handle misalignment between the camera and lens, which the model proposed by Xiang et al. [13] can address.

Certain other models can implicitly model the distortions caused by a transparent shield or reflective mirrors. For example, the fisheye camera model of Scaramuzza et al. [4] can be used for catadioptric cameras, in which implicit modeling is realized using polynomial terms.

The generalized camera model [14] defines a raxel as a one-to-one mapping between image pixels and rays. Depending on the ray configuration, this model can be categorized as central or non-central; the central camera model assumes that the rays converge at one point. Ramalingam et al. [15] used multiple 2D calibration patterns to alleviate the requirement for dense observations, compensating for the sparsity by interpolation. Rosebrock et al. [16] and Beck et al. [17] used splines to obtain better interpolation results. The models of Nishimura et al. [18], Uhlig et al. [19], and Schöps et al. [20] do not assume ray convergence and are thus non-central camera models.

In extended uses of cameras, when the camera is behind a transparent shield or reflective object, the induced distortion is more complicated and thus more complex models are required.

Compared with 2D observations, 3D observations simplify the calibration process and yield more accurate calibration results. Although 3D observation devices such as LiDAR and Vicon sensors were used only for industrial purposes in the past, their decreasing costs and improving performance have made them widely available to general users.

Several methods use 3D observations. Miraldo et al. [21] improved the generalized camera model, and Verbiest et al. [22] observed objects through a pinhole camera behind a windshield. Both methods are effective when the observations vary smoothly across neighboring points. However, their accuracy may decrease or their computational complexity may increase in the following cases:

- Near-central scenarios;

- Cases in which the distance between the observation points and camera increases;

- Cases in which the number of observation points increases.

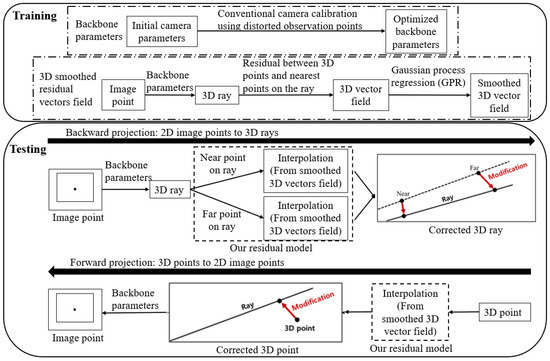

We propose a camera model that can be applied to the above-mentioned cases. As shown in Figure 1, the backbone parameters and 3D residual vectors are calculated in the training process and used for calibration in the testing process. The proposed camera model is divided into a backbone model and a residual model. The backbone can be any central camera model, and the residual model estimates the residual of the backbone model. The objectives of this study can be summarized as follows:

Figure 1.

Process flow of the proposed camera model.

- The development of a near-central camera model;

- The realization of noniterative projection by the 3D residual model;

- The establishment of a simple calibration method suitable for sparse and noisy observations.

The proposed framework was verified by performing experiments in near-central scenarios: (1) when the camera is behind a transparent shield, with noisy observations, and (2) when the lenses or mirrors of fisheye or omnidirectional cameras are misaligned, with noisy observations. The proposed model is particularly suited to two scenarios. First, when the exterior of a shield is subjected to external pressure or heat, the inner surface remains unchanged, but the outer surface deforms by approximately 3–6 mm, making it slightly bumpy. Second, for the same reasons, a slight tilt or a 1 mm shift can misalign the components of fisheye or omnidirectional camera systems.

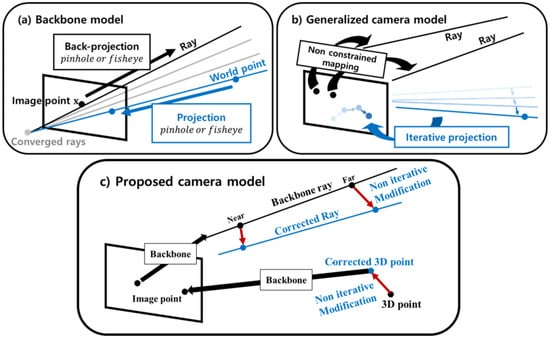

In the above-mentioned cases, we can use a simple central camera model as the backbone. This choice facilitates optimization and allows fast computation of the forward and backward projections. Moreover, as shown in Figure 2c, we can perform noniterative calibration simply by correcting the ray through the 3D residual framework.

Figure 2.

Differences between the existing and proposed camera models.

2. Proposed Camera Model

We define the two sub-models and describe the calibration process. Moreover, we describe the application of the proposed camera model to the forward and backward projection processes.

2.1. Definition

The proposed camera model consists of two sub-models: the backbone model and the residual model. The backbone model may be any central camera model, such as the simple pinhole or fisheye camera model. The forward and backward projections of the backbone model can be expressed as follows:

where the forward projection of the backbone model maps a world point to an image point, and the backward projection is its inverse, which maps an image point to a ray represented by two 3D points through which the ray passes.
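For a pinhole backbone, both projections are simple to compute. The Python sketch below is an illustration only, not the authors' implementation; it assumes world points are already expressed in the camera frame (no extrinsics) and no lens distortion, and the function names `project` and `back_project` and the default sampling depths are hypothetical. As in the text, the ray is returned as two 3D points through which it passes.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]
Pixel = Tuple[float, float]


def project(X: Vec3, f: float, cx: float, cy: float) -> Pixel:
    """Forward projection of a pinhole backbone: 3D point (camera frame) -> pixel."""
    x, y, z = X
    if z <= 0:
        raise ValueError("the point must lie in front of the camera (z > 0)")
    return (f * x / z + cx, f * y / z + cy)


def back_project(px: Pixel, f: float, cx: float, cy: float,
                 near: float = 1.0, far: float = 2.0) -> Tuple[Vec3, Vec3]:
    """Backward projection: pixel -> ray, represented by its points at depths z = near and z = far."""
    u, v = px
    d = ((u - cx) / f, (v - cy) / f, 1.0)  # direction scaled so that its z component is 1
    p_near = (near * d[0], near * d[1], near * d[2])
    p_far = (far * d[0], far * d[1], far * d[2])
    return p_near, p_far


# Intrinsics of the simulated camera in Section 3.1 (f = 1000, principal point (960, 540)).
px = project((120.0, -60.0, 2000.0), 1000.0, 960.0, 540.0)
p_near, p_far = back_project(px, 1000.0, 960.0, 540.0, near=2000.0, far=4000.0)
```

Both directions are closed-form here, which is why a central backbone keeps the overall model cheap.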

The forward and backward projections of generalized camera models can be represented in the same way:

In the generalized camera model, the projection process is intricate and results in an iterative procedure whose complexity increases as the mapping between pixels and rays becomes less smooth. Because we use a pinhole or fisheye camera model as the backbone, we can instead rely on the simple forward and backward projections of the backbone model.

The residual model compensates for the error of the backbone model. The backbone model combined with a 2D residual model can be represented as follows:

where the 2D residual model corrects the projection result of the backbone model in the image plane. The backward projection is the inverse of Equation (5), and this inverse is iterative because the model must find two world points that project onto the same image point. A representative example is the model proposed by Verbiest et al. [22], which uses cubic splines and therefore evaluates four spline functions for a single point. Because these evaluations must be repeated at every iteration step of the forward projection, the process is computationally expensive.

Instead of a 2D residual, we used a 3D residual, which is defined as follows:

As with the 2D residual, the inverse of this model is intricate: it requires an iterative search for two world points that project onto one image point. To make the model simple and noniterative, we introduced the following backward process:

where the backward residual model corrects points in 3D. We replaced the computationally expensive iterative backward projection with two simple evaluations of the backward residual model: the residual model modifies two points on the ray of the backbone model, and the corrected ray is the one passing through the two modified points.
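The two-point correction can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the residual field is passed in as an arbitrary callable (`toy_residual` is a hypothetical stand-in for the interpolated field of Section 2.4), and the corrected ray is returned as an origin and a unit direction.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def correct_ray(p_near: Vec3, p_far: Vec3,
                residual: Callable[[Vec3], Vec3]) -> Tuple[Vec3, Vec3]:
    """Noniterative backward correction: shift two points of the backbone ray by the
    residual field and return the corrected ray as (origin, unit direction)."""
    q_near = _add(p_near, residual(p_near))  # first modified point
    q_far = _add(p_far, residual(p_far))     # second modified point
    d = _sub(q_far, q_near)
    n = math.sqrt(d[0] ** 2 + d[1] ** 2 + d[2] ** 2)
    if n == 0.0:
        raise ValueError("the two modified points coincide; the ray is undefined")
    return q_near, (d[0] / n, d[1] / n, d[2] / n)


# Hypothetical residual field: a lateral offset that grows linearly with depth.
def toy_residual(p: Vec3) -> Vec3:
    return (0.001 * p[2], 0.0, 0.0)


origin, direction = correct_ray((0.0, 0.0, 1000.0), (0.0, 0.0, 2000.0), toy_residual)
```

Only two residual evaluations are needed per pixel, so the cost per ray is constant and no iterative search is involved.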

The residual model for the backward projection is simply the inverse of the forward residual, represented as follows:

where the sparse 3D error vector field of the backbone model is computed from the calibration data. Because the calibration data are not dense, the residual must be estimated by interpolation. The determination of this field is described in Section 2.3.

In the following sections, we describe the calibration of the backbone and residual models. Although the proposed camera model can jointly optimize both models, adequate performance can be achieved with two simple, separate calibration procedures, as described below.

2.2. Calibration of the Backbone Model

Verbiest et al. [22] proposed a backbone model that corresponds to the real camera behind a windshield. In contrast, our backbone model is a simple central camera model whose parameters are optimized on the training data. For example, when a transparent shield is present in front of the camera, we use the pinhole camera model as the backbone and optimize its parameters as if the shield were absent. We use a simple central camera model so that the residual is smooth and can be estimated easily, even from sparse calibration data. Moreover, we fit the parameters to the calibration data to maximize the standalone performance of the backbone model.

Before optimizing the parameters of the backbone model, we assumed that the initial estimates of the parameters are available. This assumption is reasonable because the manufacturers’ specifications for a camera can be easily obtained. From the initial parameters of the pinhole or fisheye camera, we updated the parameters using gradient descent to minimize the reprojection error.
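A minimal sketch of this refinement for a pinhole backbone is shown below. It assumes that only (f, cx, cy) are refined, that the 3D points are in the camera frame, and that a fixed step size `lr` is used; the step size and iteration count are hypothetical values that work for this small, well-scaled toy problem, and real data would typically need parameter scaling or a line search. A fisheye backbone would follow the same pattern with its own projection function.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]
Pixel = Tuple[float, float]


def fit_pinhole(points: List[Point3], pixels: List[Pixel],
                f: float, cx: float, cy: float,
                lr: float = 0.5, iters: int = 500) -> Tuple[float, float, float]:
    """Refine pinhole intrinsics (f, cx, cy) from initial estimates by gradient
    descent on the mean squared reprojection error."""
    n = len(points)
    for _ in range(iters):
        gf = gcx = gcy = 0.0
        for (x, y, z), (u, v) in zip(points, pixels):
            a, b = x / z, y / z   # normalized image coordinates
            eu = f * a + cx - u   # horizontal reprojection residual
            ev = f * b + cy - v   # vertical reprojection residual
            gf += 2.0 * (eu * a + ev * b) / n
            gcx += 2.0 * eu / n
            gcy += 2.0 * ev / n
        f, cx, cy = f - lr * gf, cx - lr * gcx, cy - lr * gcy
    return f, cx, cy


# Synthetic, noise-free check: true intrinsics (1000, 960, 540), perturbed initial guess.
pts = [(x, y, z) for x in (-800.0, -400.0, 400.0, 800.0)
       for y in (-400.0, 400.0) for z in (2000.0, 4000.0)]
obs = [(1000.0 * x / z + 960.0, 1000.0 * y / z + 540.0) for x, y, z in pts]
f_hat, cx_hat, cy_hat = fit_pinhole(pts, obs, f=950.0, cx=950.0, cy=530.0)
```

For a pinhole model the reprojection error is quadratic in (f, cx, cy), so plain gradient descent converges from the manufacturer-based initial guess without local minima.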

2.3. Calibration of the Residual Model

The calibration of the residual model is reduced to finding the following sparse 3D error vector field:

where each element of the sparse vector field starts at the point on the backbone ray nearest to a calibration 3D point and ends at that 3D point. In backward projection, a reversed version of this field is used, in which the start and end points of each vector are swapped and the vector is negated.
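One element of the sparse field, and its reversed counterpart, can be computed as below. This is a sketch under assumptions: the backbone ray is given by an origin `o` and direction `d` (for example, from back-projecting the observed pixel) and is treated as an infinite line, and `reversed_field` encodes our reading of the reversed version described above. All names are illustrative.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def nearest_point_on_ray(o: Vec3, d: Vec3, X: Vec3) -> Vec3:
    """Orthogonal projection of X onto the line o + t * d."""
    w = (X[0] - o[0], X[1] - o[1], X[2] - o[2])
    t = _dot(w, d) / _dot(d, d)
    return (o[0] + t * d[0], o[1] + t * d[1], o[2] + t * d[2])


def residual_sample(o: Vec3, d: Vec3, X: Vec3) -> Tuple[Vec3, Vec3]:
    """One element of the sparse field: (start point on the backbone ray, vector to X)."""
    p = nearest_point_on_ray(o, d, X)
    return p, (X[0] - p[0], X[1] - p[1], X[2] - p[2])


def reversed_field(field: List[Tuple[Vec3, Vec3]]) -> List[Tuple[Vec3, Vec3]]:
    """Swap start and end points: each vector starts at the 3D point and is negated."""
    out = []
    for p, e in field:
        end = (p[0] + e[0], p[1] + e[1], p[2] + e[2])
        out.append((end, (-e[0], -e[1], -e[2])))
    return out


start, vec = residual_sample((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (3.0, 4.0, 10.0))
```

Repeating `residual_sample` over all training pairs yields the sparse 3D error vector field.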

2.4. Methodology

2.4.1. Smoothing of the Sparse 3D Vector Field

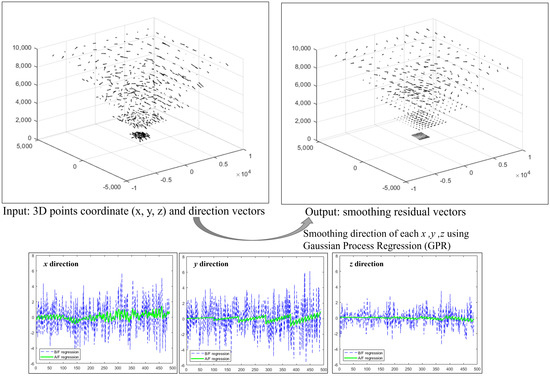

Because the observation data are noisy, the sparse vector field should be smoothed to ensure robustness against noise. As shown in Figure 3, we used three independent Gaussian process regressions, one for each component of the 3D residual vector.

Figure 3.

Generating the smoothed 3D vector field.
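The smoothing step can be sketched with posterior-mean Gaussian process regression. This illustration relies on assumptions not stated in the text: a squared-exponential kernel over the start points shared by all three components, fixed hyperparameters (`length`, `var`, `noise`, chosen arbitrarily here rather than fitted), and an empirical constant mean per component. A small Gaussian-elimination solver keeps the sketch dependency-free.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def rbf(a: Sequence[float], b: Sequence[float], length: float, var: float) -> float:
    """Squared-exponential (RBF) covariance between two 3D locations."""
    d2 = sum((p - q) ** 2 for p, q in zip(a, b))
    return var * math.exp(-0.5 * d2 / length ** 2)


def solve(A: List[List[float]], y: List[float]) -> List[float]:
    """Solve A x = y by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [A[i][:] + [y[i]] for i in range(n)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            m = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= m * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def smooth_field(starts: List[Vec3], vectors: List[Vec3], length: float = 500.0,
                 var: float = 1.0, noise: float = 0.1) -> List[Vec3]:
    """Posterior-mean smoothing with three independent GP regressions (one per component)."""
    n = len(starts)
    K = [[rbf(starts[i], starts[j], length, var) for j in range(n)] for i in range(n)]
    K_noisy = [[K[i][j] + (noise if i == j else 0.0) for j in range(n)] for i in range(n)]
    columns = []
    for c in range(3):
        y = [v[c] for v in vectors]
        mean = sum(y) / n                      # constant prior mean (empirical)
        alpha = solve(K_noisy, [yi - mean for yi in y])
        columns.append([mean + sum(K[i][j] * alpha[j] for j in range(n)) for i in range(n)])
    return [(columns[0][i], columns[1][i], columns[2][i]) for i in range(n)]


# Noisy toy field: a constant offset of 1.0 along x with alternating +/-0.2 noise.
starts = [(0.0, 0.0, 1000.0 + 100.0 * i) for i in range(11)]
vectors = [(1.0 + (0.2 if i % 2 == 0 else -0.2), 0.0, 0.0) for i in range(11)]
smoothed = smooth_field(starts, vectors)
```

The alternating noise is strongly damped, while a genuinely constant field would be returned unchanged.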

2.4.2. Interpolation

To keep the forward and backward processes fast, we used simple interpolation to obtain a dense vector field from the smoothed sparse vector field. To capture the local error structure in the training data, we applied inverse distance weighting on the nearest neighbors:

where the normalizing constant and the number of neighbors are defined for each query point. The dense residual was obtained by interpolating the smoothed sparse field in this way.

In addition, we calculated the magnitudes of the 3D residual vectors to make the interpolation adaptive: where the residual vectors are large, fewer neighboring vectors are used for the interpolation, and where they are small, more are used.
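The interpolation and its adaptive neighbor count can be sketched as follows. Several details are assumptions rather than the paper's specification: the distance power (`power = 2`), the use of the nearest sample's residual magnitude as the adaptivity criterion, and the hypothetical `threshold`, `k_small`, and `k_large` values. A production version would use a spatial index instead of sorting all samples.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def idw(query: Vec3, starts: List[Vec3], vectors: List[Vec3], k: int,
        power: float = 2.0, eps: float = 1e-12) -> Vec3:
    """Inverse-distance-weighted average of the residual vectors of the k nearest samples."""
    nearest = sorted(range(len(starts)), key=lambda i: math.dist(query, starts[i]))[:k]
    acc = [0.0, 0.0, 0.0]
    norm = 0.0                                  # normalizing constant (sum of weights)
    for i in nearest:
        d = math.dist(query, starts[i])
        if d < eps:
            return vectors[i]                   # query coincides with a sample
        w = 1.0 / d ** power
        norm += w
        for c in range(3):
            acc[c] += w * vectors[i][c]
    return (acc[0] / norm, acc[1] / norm, acc[2] / norm)


def adaptive_k(query: Vec3, starts: List[Vec3], vectors: List[Vec3],
               threshold: float = 2.0, k_small: int = 3, k_large: int = 8) -> int:
    """Hypothetical rule: few neighbors where the nearest residual is large, more where it is small."""
    i = min(range(len(starts)), key=lambda j: math.dist(query, starts[j]))
    magnitude = math.sqrt(sum(c * c for c in vectors[i]))
    return k_small if magnitude > threshold else k_large


def interpolate(query: Vec3, starts: List[Vec3], vectors: List[Vec3]) -> Vec3:
    return idw(query, starts, vectors, adaptive_k(query, starts, vectors))


starts = [(0.0, 0.0, 1000.0), (2.0, 0.0, 1000.0)]
vectors = [(1.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
mid = interpolate((1.0, 0.0, 1000.0), starts, vectors)
```

Using few neighbors where residuals are large keeps sharp local errors (such as shield bumps) from being averaged away.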

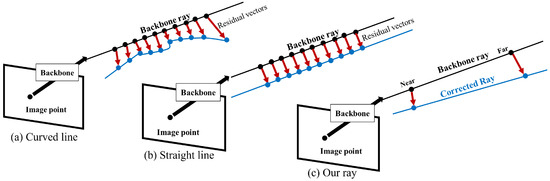

2.4.3. Straightness of the Back-Projected Ray

Given the 3D error vector field, the most intuitive strategy for correcting the backbone ray is to modify every point on the ray. However, as shown in Figure 4a, the modified ray is then no longer a straight line, because the magnitudes of the residual vectors vary along the ray. This modification is invalid because the world points corresponding to one pixel should lie on a straight line. As shown in Figure 4b, this problem can be solved by constraining the residual vectors along the ray to have the same size.

Figure 4.

Our ray calibration method.

However, we did not use this constraint because it would prevent the model from capturing the local error of the backbone model. Instead, we introduced a relaxed constraint that captures the local error effectively while accounting for the noise in the calibration data.

Thus, using the 3D vector field, we prepared a depth-dependent backward model. This backward model outputs not a ray but a curve in 3D; at each depth, the residual vector compensates for the error at a specific 3D point.

However, many existing computer-vision algorithms require the camera model to yield a ray. Therefore, to ensure the usability of the camera model, we designed a camera model that outputs a ray from the backward projection.

As shown in Figure 4c, we constructed the ray from two modified points, obtained from a near point and a far point sampled on the backbone ray within the range covered by the calibration data. In the proposed camera model, the estimated ray therefore refers to this corrected ray. To evaluate the estimated ray, we measured its distance from the 3D ground truth (GT) point, that is, the distance between the GT point and the point on the ray closest to it.
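The evaluation distance can be computed as in the sketch below, which assumes the estimated ray is given by an origin and a direction (for instance, the first modified point and the direction toward the second) and is treated as an infinite line. The function name is illustrative.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def point_to_ray_distance(origin: Vec3, direction: Vec3, gt: Vec3) -> float:
    """Distance between a GT 3D point and the closest point on the estimated ray."""
    w = tuple(g - o for g, o in zip(gt, origin))
    dd = sum(c * c for c in direction)
    t = sum(a * b for a, b in zip(w, direction)) / dd   # parameter of the closest point
    closest = tuple(o + t * c for o, c in zip(origin, direction))
    return math.dist(gt, closest)
```

For example, a GT point at (3, 4, 10) lies 5 units from a ray along the z-axis.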

3. Experiments

The proposed framework was verified by performing experiments involving near-central cases: a pinhole camera behind a transparent shield, and fisheye and catadioptric cameras with misaligned lenses.

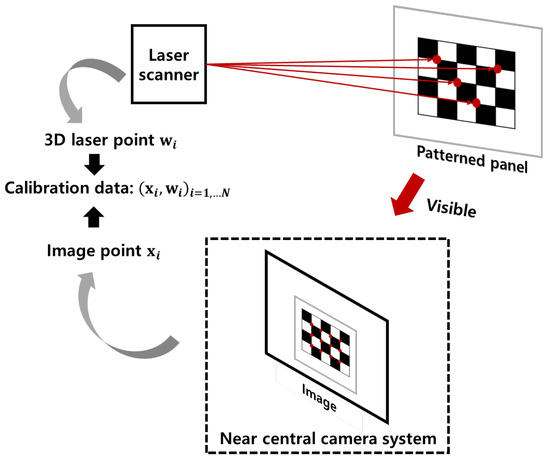

To ensure accurate acquisition of the 3D points, we assumed that the data are obtained with a laser scanner, and we simulated the laser-scanner acquisition process of Verbiest et al. [22], shown in Figure 5. The laser scanner is placed outside the near-central camera system (e.g., outside the transparent shield) so that it is not affected by the shield. The scanner shoots a laser at the patterned panels and measures the 3D positions of the laser points on the panels. Because each laser point on a panel is visible to the near-central camera, the 2D image point corresponding to each 3D laser point can also be obtained. These pairs of 2D image points and 3D laser points form the calibration (training) data, and we simulated this process to generate them.

Figure 5.

The acquisition process of the calibration data. The laser scanner shoots a laser at the patterned panel and measures the positions of the 3D points. The 2D image points corresponding to the 3D points on the panel are obtained from the image of the near-central camera system. When the panel is far from the camera, the laser point on a non-patterned panel may be invisible to the near-central camera system. To overcome this issue, a patterned panel can be used instead: by directing the laser at a corner point of the pattern, the 2D image point corresponding to the 3D laser point can be obtained.

Because the proposed camera model compensates for the error of the backbone model by interpolating a sparse 3D residual vector field built from the training 3D points, it operates within the range spanned by those points. For this reason, we evaluated its performance at 3D points within the same range as the training points, but sampled more densely than the training data.

We evaluated the calibration methods using the reprojection error, the distance between the estimated ray and the ground truth (GT) point, and the depth error. We calculated the mean absolute error (MAE) of each of the three metrics, which are measured as follows:

- Reprojection error is a metric used to assess the accuracy of the forward projection by measuring the Euclidean distance between the reprojected estimation of a model and its corresponding true projection.

- The Euclidean distance between the estimated ray and the ground truth (GT) point serves as a metric for evaluating the accuracy of the backward projection. Given the GT point, we locate the nearest point on the estimated ray and calculate the distance between these two points.

- For the depth error, we computed the shortest line segment connecting the ray estimated from the left camera and the ray estimated from the right camera. The midpoint of this segment is the estimated 3D point, and the depth error is the distance between this point and the GT point.

- Lastly, to compare the methods, we calculated the relative error rate of the proposed method with respect to each existing method.
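The depth metric and the relative error rate can be sketched as follows. The midpoint construction is the standard closest-points solution for two lines. The relative-error formula is assumed here to take the common form (E_existing - E_proposed) / E_existing × 100; this is an assumption rather than the paper's stated definition.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def midpoint_triangulation(p1: Vec3, d1: Vec3, p2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the shortest segment between the rays p1 + s*d1 and p2 + t*d2."""
    w0 = tuple(a - b for a, b in zip(p1, p2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12 * a * c:
        raise ValueError("rays are (nearly) parallel")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    q1 = tuple(p + s * u for p, u in zip(p1, d1))   # closest point on the left ray
    q2 = tuple(p + t * u for p, u in zip(p2, d2))   # closest point on the right ray
    return tuple((x + y) / 2.0 for x, y in zip(q1, q2))


def relative_error(err_existing: float, err_proposed: float) -> float:
    """Assumed form: percentage reduction of the error by the proposed method."""
    return 100.0 * (err_existing - err_proposed) / err_existing
```

With a 120 mm baseline (cameras at x = ±60 mm), rays aimed at (0, 0, 5000) triangulate back to that point; the depth error is then the Euclidean distance between the triangulated point and the GT point.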

To bring the experimental environment closer to real conditions, the evaluations were performed at different noise levels, with random noise added to both the image points and the 3D laser points in the training data. To account for the randomness of the noise, ten experiments were performed at each noise level, and the MAE over these runs is reported as the final value.

3.1. Camera behind a Transparent Shield

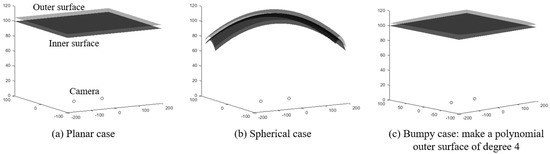

Three shield shapes were considered in this study: a plane, a sphere, and a hybrid shape with an inner plane surface and a slightly bumpy outer surface (approximately 3–6 mm). These shields have a uniform thickness of 5 mm and represent real-world scenarios, such as car windshields or camera shields for submarines or spaceships. Figure 6c illustrates the third shape.

Figure 6.

Three cases involving a transparent shield.

The camera used in the experiment has a focal length f of 1000 pixels and a principal point at (cx, cy) = (960, 540) pixels, with no radial or tangential distortion. The image is 1920 pixels wide and 1080 pixels high.

Depth estimation was performed using a stereo camera setup for all three shield types. The baseline distance between the left and right cameras is 120 mm, and both cameras share the same intrinsic parameters.

3.1.1. Calibration Data

Three-dimensional points were sampled from 1000 mm to 10,000 mm, at 1000 mm intervals for the test dataset and at 2000 mm intervals for the training dataset. Consequently, the training data comprised approximately 10% of the test data. Normally distributed random noise was added to the image points and to the 3D laser points at several increasing levels.

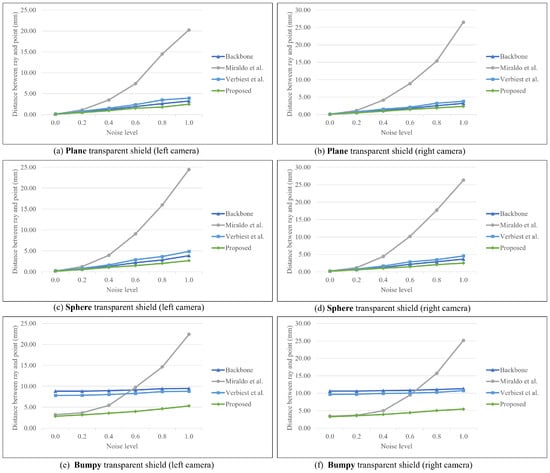

3.1.2. Results for the Transparent Shield Case

The experimental results for the shields with different shapes are presented in Table 1 and Figure 7. The errors for the planar and spherical shields are lower than those for the bumpy shield, and the error increases with the noise level. The bumpy shield exhibits minimal sensitivity to noise, possibly because the backbone parameters are optimized for the shield's characteristics; because refraction causes substantial distortion in this case, the effect of noise is difficult to determine. The advantage of the proposed method grows as the noise level increases, since most existing approaches minimize the reprojection error in 2D space, whereas the proposed method calibrates directly in 3D space.

Table 1.

Results for transparent shields with different shapes.

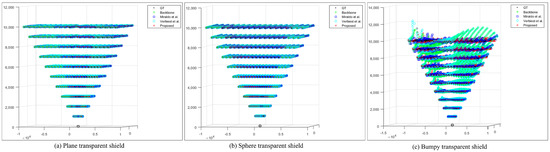

Figure 7.

Distance results for planar, spherical, and bumpy shields [21,22].

The key hyperparameters of the two comparison methods, the number of control points and the patch size, were set to 10 and 4 × 4, respectively, because these values yielded the lowest error. The method proposed by Miraldo et al. [21] performs excellently at low noise levels. However, at high noise levels, its error is considerably larger than those of the other methods. This approach parameterizes the ray with Plücker coordinates, composed of two orthogonal vectors: the moment and the direction. The moment and direction are optimized to minimize the distance between the 3D point and the corrected ray.

However, this objective is valid only when the estimated moment and direction vectors are orthogonal. After optimization, we checked the orthogonality of the two vectors, as presented in Table 2. At high noise levels, the average angle between the two vectors deviates substantially from 90°, and significant errors are induced when noise is added.
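The orthogonality check amounts to measuring how far the angle between the estimated moment and direction departs from 90°. A small sketch, assuming the convention m = p × d for a line through point p with direction d; the function names are illustrative.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def plucker_from_points(p: Vec3, q: Vec3) -> Tuple[Vec3, Vec3]:
    """Plücker coordinates (moment, direction) of the line through p and q."""
    d = (q[0] - p[0], q[1] - p[1], q[2] - p[2])
    return cross(p, d), d


def orthogonality_deviation_deg(m: Vec3, d: Vec3) -> float:
    """|angle(m, d) - 90 deg|; zero for a valid Plücker line with a nonzero moment."""
    nm = math.sqrt(sum(c * c for c in m))
    nd = math.sqrt(sum(c * c for c in d))
    if nm == 0.0 or nd == 0.0:
        raise ValueError("angle undefined for a zero moment (line through origin) or direction")
    cos = sum(x * y for x, y in zip(m, d)) / (nm * nd)
    return abs(math.degrees(math.acos(max(-1.0, min(1.0, cos)))) - 90.0)
```

Coordinates built from two points always give zero deviation; freely optimized (m, d) pairs need not, which is what the deviation measures.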

Table 2.

Average angle deviation of the moment and direction vectors from 90° (unit: degrees).

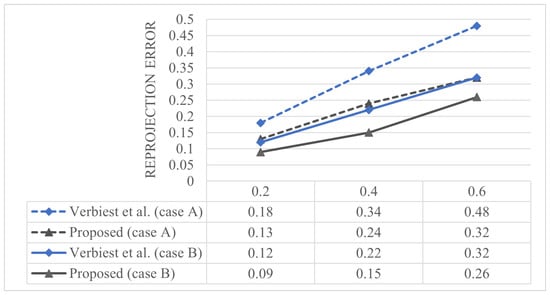

Verbiest et al. [22] modeled the spherical shield and used more training points than test points. In contrast, our test datasets are denser than our training datasets; therefore, for a fair comparison, we also increased the number of training points, as shown in Figure 8. Case A corresponds to the setting of Table 1, with 486 training points and 3913 test points, whereas case B has 1839 training points and 1535 test points. The reprojection error in case B is lower than that in case A; however, this does not change the comparison, because the other method also improved.

Figure 8.

Reprojection error with an increasing number of training points [22].

Figure 9 shows the depth results. To compare the shield shapes, the noise-free results (noise level zero) in Table 1 are visualized. For the planar and spherical shields, the differences between the methods are insignificant. For the bumpy shield, however, the results of the proposed method are the closest to the GT points. The planar and spherical shields produce only minor errors, whereas for the bumpy shield the gap between the rays of the left and right cameras widens, so errors remain even after calibration.

Figure 9.

Depth results for planar, spherical, and bumpy shields [21,22].

3.2. Fisheye Camera with Misaligned Lens

Second, we simulated a fisheye camera with the following internal parameters. The polynomial coefficients of the projection function follow the Taylor model proposed by Scaramuzza et al. [4] and are specified as the vector [348.35, 0, 0, 0]. The center of distortion is specified as [500, 500] pixels.
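Back-projection under the Taylor model can be sketched as below. The coefficient layout is an assumption: we follow the MATLAB `fisheyeIntrinsics` convention (mapping coefficients [a0 a2 a3 a4], f(ρ) = a0 + a2ρ² + a3ρ³ + a4ρ⁴, identity stretch matrix, and ray direction (x, y, f(ρ)) relative to the distortion center), since the text names only the Taylor model. The function name is hypothetical.

```python
import math
from typing import Sequence, Tuple


def taylor_back_project(u: float, v: float, coeffs: Sequence[float],
                        center: Tuple[float, float]) -> Tuple[float, float, float]:
    """Pixel -> unit ray direction for the Taylor model under the assumed convention."""
    a0, a2, a3, a4 = coeffs
    x, y = u - center[0], v - center[1]   # coordinates relative to the distortion center
    rho = math.hypot(x, y)
    z = a0 + a2 * rho ** 2 + a3 * rho ** 3 + a4 * rho ** 4
    n = math.sqrt(x * x + y * y + z * z)
    return (x / n, y / n, z / n)


coeffs = [348.35, 0.0, 0.0, 0.0]   # coefficient vector listed in the text
center = (500.0, 500.0)            # distortion center listed in the text
d0 = taylor_back_project(500.0, 500.0, coeffs, center)
```

The pixel at the distortion center maps to the optical axis, as expected for any coefficient set with a0 > 0.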

3.2.1. Calibration Data

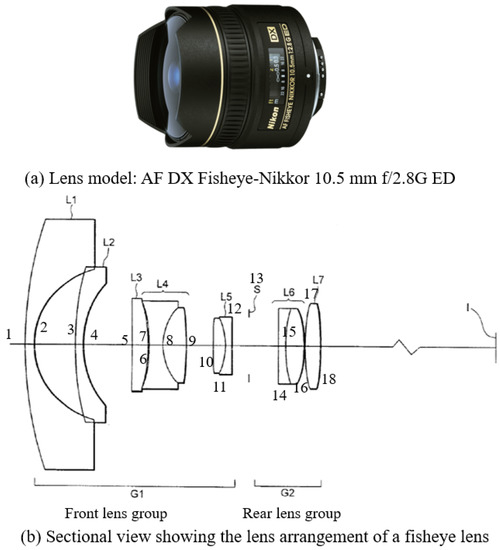

To simulate the fisheye lens, we used Zemax OpticStudio [23], software that is widely used in the optics field. We simulated the lens using the specifications presented in Figure 10a, taken from the fisheye lens patent of Keiko Mizuguchi [24].

Figure 10.

Reference fisheye lens model [24].

In misaligned case #1, we tilted the L2 lens of both the left and right cameras, as shown in Figure 10b. In case #2, for the left camera, the L1 lens was shifted by 1 mm and the L2 lens was tilted; for the right camera, the L1 lens was tilted and the L2 lens was shifted by 1 mm. For both datasets, the observation points lie 1000–4000 mm from the camera. The noise levels are the same as those in the shield case.

3.2.2. Result of Fisheye Camera Case

Tables 3 and 4 and Figures 11 and 12 show the results of each approach for the fisheye camera. As the noise level increases, the error of the approach of Miraldo et al. [21] grows significantly more than those of the other methods, so we exclude this method from further comparison. As in the shield case, the moment and direction in Plücker coordinates should be perpendicular; however, this condition is violated owing to the misaligned optical axis or noise, which is the likely reason for the significant increase in error.

Table 3.

Results for the misaligned #1 case of the fisheye camera.

Table 4.

Results for the misaligned #2 case of the fisheye camera.

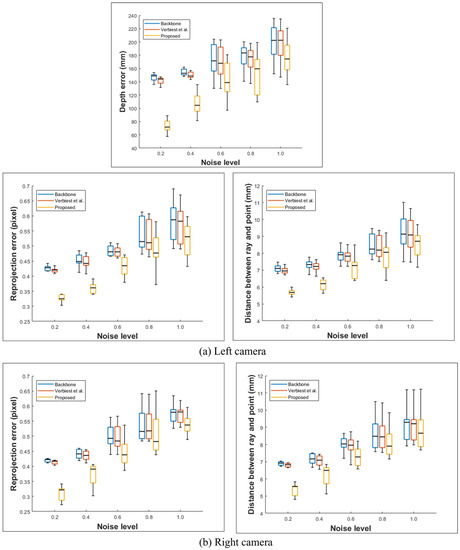

Figure 11.

Statistical results (min, max, and standard deviation) for the fisheye case #1 dataset [22].

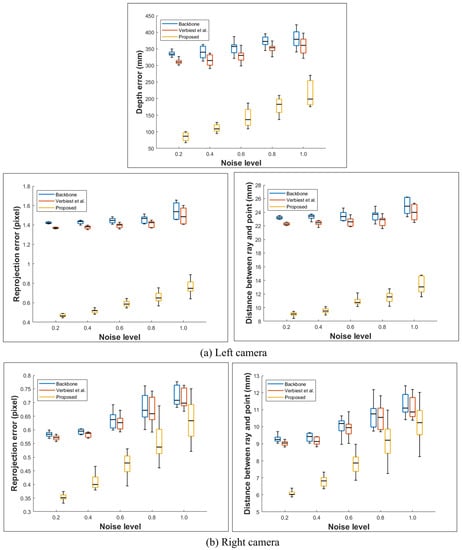

Figure 12.

Statistical results (min, max, and standard deviation) for the fisheye case #2 dataset [22].

Comparing cases #1 and #2, case #2 has a larger error than case #1 because its lenses are both shifted and tilted, which causes greater distortion. As described in the configuration above, the left and right cameras are misaligned differently, and the error of the left camera is larger; this suggests that tilting the L2 lens has a more significant effect than shifting it. The depth error also increases as the distance between the two rays increases. As with the bumpy shield, globally smooth models, such as the backbone and the model proposed by Verbiest et al. [22], are inferior to the proposed approach. Moreover, because the method of Verbiest et al. [22] assumes a pinhole camera system, it cannot be applied to a fisheye camera system with a wide field of view.

As in the previous analysis, we performed ten experiments at each noise level. Figures 11 and 12 show the minimum, maximum, and standard deviation of these runs. Although the gap between the proposed method and the existing methods narrows as the noise level increases, the proposed method still yields lower errors than the other methods. Notably, noise levels above 1.0 are unlikely to occur in real environments.

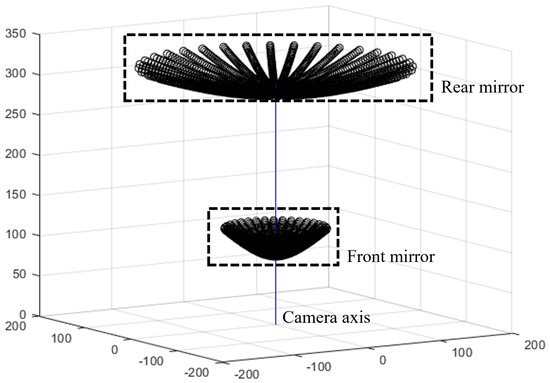

3.3. Catadioptric Camera with Misaligned Lens

The last camera system is a catadioptric system with two hyperboloidal mirrors and one camera observing the reflections of both mirrors, as shown in Figure 13. Although various catadioptric configurations are available, we implemented the one proposed by Luo et al. [25] for its convenience. This configuration couples a common perspective camera with two hyperbolic mirrors. The front mirror has a hole in its center, through which the rear mirror can be seen.

Figure 13.

Configuration of catadioptric stereo camera system.

We evaluated the effects of tilt and shift, as for the previous fisheye system. Unlike in the fisheye case, each mirror of this system plays the role of one camera (left or right) of a pinhole stereo system. As with the fisheye camera, in case #1 the mirrors were tilted randomly, and in case #2 they were randomly tilted and shifted, with shifts of up to 1 mm. The camera has a focal length f = 1000 pixels, a principal point (cx, cy) = (500, 500) pixels, and zero radial and tangential distortion. The image is 1000 × 1000 pixels.

3.3.1. Calibration Data

Because the catadioptric system captures images through reflections on hyperboloidal mirrors, we simulated observation points arranged in a conical shape. The points lie 1000–5000 mm from the camera; this range was determined experimentally. The training and test points were sampled at 500 mm and 200 mm intervals, respectively. In addition, we evaluated the performance at the same noise levels as those in the previous camera systems. This camera system is less reliable than the pinhole and fisheye cameras because it is more complex; therefore, we used more training points than for the previous two camera systems. Normally distributed random noise was added to the image points and to the 3D laser points.

3.3.2. Results for the Catadioptric Camera Case

Tables 5 and 6 present the reprojection error, distance, and depth error. In Figure 13, the front and rear mirrors correspond to the left and right cameras, respectively. Given the field of view of the image sensor, the front mirror is more strongly curved than the rear mirror; therefore, the left camera has a larger error than the right camera.

Table 5.

Results for the misaligned #1 case of the catadioptric camera.

Table 6.

Results for the misaligned #2 case of the catadioptric camera.

Moreover, because this camera system captures images reflected in mirrors, the optimization of the backbone parameters does not converge at higher noise levels, unlike in the other systems. Therefore, the noise levels in these experiments are lower than those used for the other camera systems.

As the noise increases, the relative error rate decreases. As mentioned in the Introduction, the proposed method is designed for near-central camera systems, and we attribute this trend to the system behaving more like a non-central camera as the noise grows. Even though the relative error rate decreases, the proposed method outperforms the comparison methods and requires less computation.

3.4. Performance Analysis of the Proposed Camera Model

We demonstrate the computational efficiency of the proposed camera model by measuring the total computation time of the forward and backward projections for the existing iterative methods and the proposed method. The dataset used for the time measurement had a fixed noise level for the image points and for the 3D laser points. The measurement environment was an AMD Ryzen 9 5900X 12-core processor (3.70 GHz) with 128.0 GB RAM, 64-bit Windows 10, and MATLAB R2022b. Time was measured as the total test time divided by the number of points, and these measured times were converted into a scalar boost factor for relative comparison with the other methods.

The results are summarized in Table 7. The speed improvement of the proposed method over each existing method was determined as the ratio of the computation time of the existing method to that of the proposed method. The proposed method shows significant computational advantages over the other methods.
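The bookkeeping behind the boost factor can be sketched as follows. The original measurements were made in MATLAB; this Python sketch only illustrates the arithmetic, with `forward` and `backward` as hypothetical stand-ins for any model's projection functions.

```python
import time
from typing import Callable, Sequence


def time_per_point(forward: Callable, backward: Callable,
                   points3d: Sequence, pixels: Sequence) -> float:
    """Total forward + backward projection time divided by the number of test points."""
    start = time.perf_counter()
    for X in points3d:
        forward(X)
    for x in pixels:
        backward(x)
    return (time.perf_counter() - start) / len(points3d)


def speedup(t_existing: float, t_proposed: float) -> float:
    """Boost factor: how many times faster the proposed method is than an existing one."""
    return t_existing / t_proposed
```

A boost factor of 251.5, as in Table 7, means the existing method needs 251.5 times the per-point time of the proposed model.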

Table 7.

Relative performance of the proposed method with respect to the existing methods. For example, the entry in the first row and third column indicates that the proposed camera model is 251.5 times faster than the method of Miraldo et al. [21] for the fisheye camera system (unit: scale factor). The computation time is calculated as the sum of the forward and backward projection times.

Because the proposed method interpolates the 3D residual vectors computed during training, it requires less computation than the other methods, which are iterative in nature. Compared with the pinhole camera system, the other two systems have a wider angle of view, which makes ray correction more challenging. In addition, when misalignment and noise are present, the optimization inevitably requires more time. Consequently, the method of Verbiest et al. [22], which requires four spline functions per point, takes the most time regardless of the number of points.

4. Conclusions

We proposed an efficient camera model and calibration method for near-central camera systems, in which the rays converge nearly, but not exactly, to a single point. In this case, the existing central camera model cannot handle the non-central property of the rays. The non-central camera model can represent non-central rays, but it results in slow forward or backward projection and is vulnerable to observation noise. To reduce the computational burden and increase robustness to noise, we adopted a residual formulation in which the camera model consists of a backbone model (any central camera model) and a residual model that compensates for the error of the backbone model.

To effectively capture the error of the backbone model, we used 3D observations as calibration data. With the increasing availability of 3D measurement devices such as 3D laser scanners, 3D points for calibration can be obtained at affordable cost. From the observed 3D points, we calculated the residual of the backbone model.

The error of the backbone ray can be estimated from two points: the training 3D laser point and its nearest point on the backbone ray. The vector from this nearest point to the 3D laser point is the residual vector. The residual vector and its starting point were calculated for all training 3D points, forming a sparse 3D vector field. This vector field is smoothed and converted into a dense 3D vector field by interpolation. We used this dense 3D vector field, which we call the residual model, to compensate for the error of the forward and backward projection of the backbone model.
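The two training-side steps described above can be sketched in Python: computing the residual vector as the offset from the nearest point on the backbone ray to a training 3D laser point, and interpolating the resulting sparse field at an arbitrary 3D location. Following the abstract, interpolation uses local inverse distance weighting over nearest neighbors; the neighbor count k, the exponent power, and all function names are our assumptions, the smoothing step is omitted, and the backbone ray is given simply as an origin and a direction. This is an illustration, not the authors' implementation.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def residual_vector(origin: Vec3, direction: Vec3, laser_point: Vec3) -> Tuple[Vec3, Vec3]:
    """Return (start, residual) for one training point.

    start is the orthogonal projection of the laser point onto the backbone ray
    origin + t * direction; residual points from start to the laser point.
    """
    t = dot(sub(laser_point, origin), direction) / dot(direction, direction)
    start = add(origin, scale(direction, t))
    return start, sub(laser_point, start)


def idw_interpolate(query: Vec3, starts: Sequence[Vec3], residuals: Sequence[Vec3],
                    k: int = 4, power: float = 2.0, eps: float = 1e-12) -> Vec3:
    """Inverse-distance-weighted average of the residuals at the k nearest start points."""
    nearest = sorted((math.dist(query, s), i) for i, s in enumerate(starts))[:k]
    # A query that coincides with a sample simply returns that sample's residual.
    if nearest[0][0] < eps:
        return residuals[nearest[0][1]]
    weights = [1.0 / d ** power for d, _ in nearest]
    total = sum(weights)
    return tuple(
        sum(w * residuals[i][c] for w, (_, i) in zip(weights, nearest)) / total
        for c in range(3)
    )


# Backbone ray along +Z from the camera centre; the laser point lies 0.1 off the ray.
start, r = residual_vector((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.1, 0.0, 2.0))
print(start, r)  # (0.0, 0.0, 2.0) (0.1, 0.0, 0.0)
```

Repeating residual_vector for every training point yields the sparse field (starts, residuals) that idw_interpolate then densifies on demand.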

For forward projection, the 3D point was first modified by the residual model using the dense 3D vector field, and the backbone model then projected the modified 3D point onto the image. For backward projection, the 2D image point was first transformed into a ray using the backbone model, and the near and far points on this backbone ray were then modified using the residual model. The ray through the two modified points is the corrected ray.
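The forward and backward steps above can be sketched in Python under explicit assumptions; this is not the authors' implementation. The backbone is a hypothetical ideal pinhole camera (parameters f, cx, cy), the residual field is interpolated by inverse distance weighting over nearest neighbors as described in the abstract, the near and far depths z_near and z_far are arbitrary choices, and we assume the residual (pointing from the backbone ray to the observed point) is subtracted in forward projection and added in backward projection. In forward projection the residual is queried at the 3D point itself, which is an approximation because the field is anchored at points on the backbone rays.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Pixel = Tuple[float, float]


def idw(query: Vec3, starts: Sequence[Vec3], residuals: Sequence[Vec3],
        k: int = 4, power: float = 2.0, eps: float = 1e-12) -> Vec3:
    """Inverse-distance-weighted residual at query from its k nearest start points."""
    nearest = sorted((math.dist(query, s), i) for i, s in enumerate(starts))[:k]
    if nearest[0][0] < eps:
        return residuals[nearest[0][1]]
    weights = [1.0 / d ** power for d, _ in nearest]
    total = sum(weights)
    return tuple(sum(w * residuals[i][c] for w, (_, i) in zip(weights, nearest)) / total
                 for c in range(3))


def pinhole_project(X: Vec3, f: float, cx: float, cy: float) -> Pixel:
    """Hypothetical backbone: ideal pinhole at the origin looking along +Z."""
    return (f * X[0] / X[2] + cx, f * X[1] / X[2] + cy)


def pinhole_ray(u: Pixel, f: float, cx: float, cy: float) -> Vec3:
    """Direction of the backbone ray through pixel u, scaled so that its Z component is 1."""
    return ((u[0] - cx) / f, (u[1] - cy) / f, 1.0)


def forward_project(X, starts, residuals, f, cx, cy) -> Pixel:
    # Remove the interpolated residual to move X onto the backbone ray (assumed sign),
    # then let the backbone project the modified point.
    r = idw(X, starts, residuals)
    X_mod = (X[0] - r[0], X[1] - r[1], X[2] - r[2])
    return pinhole_project(X_mod, f, cx, cy)


def backward_project(u, starts, residuals, f, cx, cy, z_near=1.0, z_far=5.0):
    """Return (point, unit direction) of the corrected ray for pixel u."""
    d = pinhole_ray(u, f, cx, cy)
    corrected = []
    for z in (z_near, z_far):
        p = (d[0] * z, d[1] * z, d[2] * z)   # point on the backbone ray at depth z
        r = idw(p, starts, residuals)         # residual interpolated at that point
        corrected.append((p[0] + r[0], p[1] + r[1], p[2] + r[2]))
    q_near, q_far = corrected
    diff = tuple(b - a for a, b in zip(q_near, q_far))
    n = math.sqrt(sum(c * c for c in diff))
    return q_near, tuple(c / n for c in diff)


# Toy residual field: a constant 0.1 shift along X at four depths on the optical axis.
starts = [(0.0, 0.0, z) for z in (1.0, 2.0, 3.0, 5.0)]
residuals = [(0.1, 0.0, 0.0)] * 4
u = forward_project((0.1, 0.0, 2.0), starts, residuals, f=500.0, cx=320.0, cy=240.0)
origin, direction = backward_project((320.0, 240.0), starts, residuals, 500.0, 320.0, 240.0)
```

With this toy field, the point (0.1, 0, 2) projects to the principal point, and the corrected ray for the principal point starts at (0.1, 0, 1) and runs along +Z, so it passes back through (0.1, 0, 2). Neither direction requires an iterative solve.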

The experiments were conducted on three camera systems: a camera with a transparent shield in front of it, a fisheye camera with misaligned lenses, and a catadioptric camera whose lens or mirrors are slightly tilted or shifted. For the transparent shield, we additionally constructed a bumpy shield case, in which the outer surface of the shield is deformed, resulting in non-uniform thickness. These camera systems produce non-central rays that the central camera model cannot handle.

The proposed camera model shows promising performance in forward and backward projection, even with large observation noise. In stereo camera settings, the proposed camera model also estimates 3D points well. In addition to the improved accuracy, the computation time is substantially reduced because forward and backward projection are non-iterative.

Because the proposed camera model interpolates a 3D vector field, it has a limited operating range: the model is valid within the range spanned by the training 3D points, and its performance depends on the density of the 3D observations. In future work, we will investigate a near-central camera model that can operate outside the range of the training 3D points. This requires additional modeling to extrapolate the 3D vector field or constraints on the straightness of the rays.
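Making the operating range explicit could, for example, take the form of a validity test applied before a query point is passed to the residual model. The Python sketch below is only one possible interpretation: it approximates the range of the training 3D points by their axis-aligned bounding box and uses the distance to the nearest training point as a crude proxy for observation density. The function name and the max_gap threshold are hypothetical and are not part of the proposed method.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def in_operating_range(query: Vec3, training_points: Sequence[Vec3], max_gap: float) -> bool:
    """True if query lies inside the axis-aligned bounding box of the training 3D points
    and no farther than max_gap from its nearest training point (both criteria assumed)."""
    lo = [min(p[c] for p in training_points) for c in range(3)]
    hi = [max(p[c] for p in training_points) for c in range(3)]
    inside_box = all(lo[c] <= query[c] <= hi[c] for c in range(3))
    nearest = min(math.dist(query, p) for p in training_points)
    return inside_box and nearest <= max_gap


pts = [(0.0, 0.0, 1.0), (1.0, 1.0, 3.0), (0.5, 0.5, 2.0)]
print(in_operating_range((0.5, 0.5, 2.1), pts, max_gap=0.5))  # True
print(in_operating_range((2.0, 0.0, 2.0), pts, max_gap=0.5))  # False: outside the box
print(in_operating_range((0.9, 0.1, 1.1), pts, max_gap=0.5))  # False: too far from any sample
```

Points that fail such a test would fall into the extrapolation regime that the text identifies as future work.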

Author Contributions

Conceptualization, T.C. and S.S.; methodology, T.C. and S.Y.; software, T.C., S.Y. and J.K.; validation, T.C.; formal analysis, T.C.; investigation, J.K.; resources, T.C.; data curation, T.C., S.Y. and J.K.; writing—original draft preparation, T.C. and J.K.; writing—review and editing, T.C., J.K., S.Y. and S.S.; visualization, T.C.; supervision, S.S.; project administration, S.S.; funding acquisition, S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Foley, J.D.; Van, F.D.; Van Dam, A.; Feiner, S.K.; Hughes, J.F. Computer Graphics: Principles and Practice; Addison-Wesley Professional: Boston, MA, USA, 1996; Volume 12110.

- Brown, D.C. Decentering distortion of lenses. Photogramm. Eng. Remote Sens. 1966, 32, 444–462.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Scaramuzza, D.; Martinelli, A.; Siegwart, R. A toolbox for easily calibrating omnidirectional cameras. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Beijing, China, 9–15 October 2006; pp. 5695–5701.

- Kannala, J.; Brandt, S.S. A generic camera model and calibration method for conventional, wide-angle, and fish-eye lenses. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1335–1340.

- Usenko, V.; Demmel, N.; Cremers, D. The double sphere camera model. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 552–560.

- Jin, Z.; Li, Z.; Gan, T.; Fu, Z.; Zhang, C.; He, Z.; Zhang, H.; Wang, P.; Liu, J.; Ye, X. A Novel Central Camera Calibration Method Recording Point-to-Point Distortion for Vision-Based Human Activity Recognition. Sensors 2022, 22, 3524.

- Tezaur, R.; Kumar, A.; Nestares, O. A New Non-Central Model for Fisheye Calibration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 5222–5231.

- Treibitz, T.; Schechner, Y.; Kunz, C.; Singh, H. Flat refractive geometry. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 51–65.

- Yoon, S.; Choi, T.; Sull, S. Depth estimation from stereo cameras through a curved transparent medium. Pattern Recognit. Lett. 2020, 129, 101–107.

- Pável, S.; Sándor, C.; Csató, L. Distortion estimation through explicit modeling of the refractive surface. In Proceedings of the 28th International Conference on Artificial Neural Networks (ICANN), Munich, Germany, 17–19 September 2019; Springer: Cham, Switzerland, 2019; pp. 17–28.

- Geyer, C.; Daniilidis, K. A unifying theory for central panoramic systems and practical implications. In Proceedings of the 6th European Conference on Computer Vision (ECCV), Dublin, Ireland, 26 June–1 July 2000; Springer: Berlin/Heidelberg, Germany, 2000; pp. 445–461.

- Xiang, Z.; Dai, X.; Gong, X. Noncentral catadioptric camera calibration using a generalized unified model. Opt. Lett. 2013, 38, 1367–1369.

- Grossberg, M.D.; Nayar, S.K. A general imaging model and a method for finding its parameters. In Proceedings of the Eighth IEEE International Conference on Computer Vision (ICCV), Vancouver, BC, Canada, 7–14 July 2001; Volume 2, pp. 108–115.

- Sturm, P.; Ramalingam, S. A generic concept for camera calibration. In Proceedings of the 8th European Conference on Computer Vision (ECCV), Prague, Czech Republic, 11–14 May 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 1–13.

- Rosebrock, D.; Wahl, F.M. Generic camera calibration and modeling using spline surfaces. In Proceedings of the 2012 IEEE Intelligent Vehicles Symposium (IV), Madrid, Spain, 3–7 June 2012; pp. 51–56.

- Beck, J.; Stiller, C. Generalized B-spline camera model. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 2137–2142.

- Nishimura, M.; Nobuhara, S.; Matsuyama, T.; Shimizu, S.; Fujii, K. A linear generalized camera calibration from three intersecting reference planes. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 2354–2362.

- Uhlig, D.; Heizmann, M. A calibration method for the generalized imaging model with uncertain calibration target coordinates. In Proceedings of the Asian Conference on Computer Vision (ACCV), Kyoto, Japan, 30 November–4 December 2020; pp. 541–559.

- Schops, T.; Larsson, V.; Pollefeys, M.; Sattler, T. Why having 10,000 parameters in your camera model is better than twelve. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 2535–2544.

- Miraldo, P.; Araujo, H. Calibration of smooth camera models. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 2091–2103.

- Verbiest, F.; Proesmans, M.; Gool, L.V. Modeling the effects of windshield refraction for camera calibration. In Proceedings of the 16th European Conference (ECCV), Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 397–412.

- Zemax. OpticsAcademy (Optics Studio), 2022. Available online: https://www.zemax.com/pages/opticstudio (accessed on 23 April 2023).

- Mizuguchi, K. Fisheye Lens. US Patent 6,844,991, 18 January 2005.

- Luo, C.; Su, L.; Zhu, F. A novel omnidirectional stereo vision system via a single camera. In Scene Reconstruction Pose Estimation and Tracking; IntechOpen: London, UK, 2007; pp. 19–38.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).