Abstract

Autonomous navigation requires multi-sensor fusion to achieve a high level of accuracy in different environments. Global navigation satellite system (GNSS) receivers are the main components in most navigation systems. However, GNSS signals are subject to blockage and multipath effects in challenging areas, e.g., tunnels, underground parking, and downtown or urban areas. Therefore, different sensors, such as inertial navigation systems (INSs) and radar, can be used to compensate for GNSS signal deterioration and to meet continuity requirements. In this paper, a novel algorithm was applied to improve land vehicle navigation in GNSS-challenging environments through radar/INS integration and map matching. Four radar units were utilized in this work. Two units were used to estimate the vehicle’s forward velocity, and the four units were used together to estimate the vehicle’s position. The integrated solution was estimated in two steps. First, the radar solution was fused with an INS through an extended Kalman filter (EKF). Second, map matching was used to correct the radar/INS integrated position using OpenStreetMap (OSM). The developed algorithm was evaluated using real data collected in Calgary’s urban area and downtown Toronto. The results show the efficiency of the proposed method, which had a horizontal position RMS error percentage of less than 1% of the distance traveled for three minutes of a simulated GNSS outage.

1. Introduction

Autonomous navigation relies on a global navigation satellite system (GNSS) to estimate the vehicle’s location in open-sky and suburban environments [1]. However, relying only on the GNSS is inadequate because the GNSS signal can be affected by the surrounding environment. For example, the GNSS signal will be blocked in underground and indoor areas. Moreover, the GNSS signal suffers from multipath errors and loss of lock in GNSS-challenging areas such as downtown [2]. On the other hand, an inertial navigation system (INS) can accurately estimate the vehicle’s relative position, velocity, and attitude but only for a short time, as the INS solution deteriorates with time [3]. Therefore, GNSS/INS integration is employed in various navigation applications to overcome the limitations of both the GNSS and INS. There are two major types of GNSS/INS integration: loosely coupled integration [4] and tightly coupled integration [5].

Although GNSS/INS integration estimates the vehicle’s navigation states with reliable accuracy in the short term, the accuracy will deteriorate during long GNSS outages. During these outages, the system relies only on inertial measurement unit (IMU) sensors (accelerometers and gyroscopes) whose output drifts rapidly with time, especially when utilizing low-cost IMUs [6]. Therefore, in autonomous navigation systems, more sensors, such as vehicle onboard sensors, lidar, vision sensors, and radar, are required to aid the GNSS/INS system. However, each of these sensors has its advantages and disadvantages.

Gao et al. [7] used a vehicle’s chassis sensors to compensate for the GNSS outage. Wheel speed sensors were used to estimate the vehicle’s forward velocity, while the lateral velocity was estimated using the steering wheel angle sensor. The fusion of the kinematic model with the estimated velocity from the onboard sensors improved the accuracy of the vehicle localization during GNSS signal outages.

Lidar has mainly been used to percept the surrounding environment [8]. Recently, lidar has been used to aid IMUs in GNSS-denied environments and for simultaneous localization and mapping (SLAM) [9,10,11]. However, lidar is affected by snowy weather and requires a high level of computational power. Moreover, lidar is a high-cost sensor. On the other hand, a vision sensor is mainly used to detect highway lanes and objects in the surrounding environment, and it can be fused with an INS to limit the IMU’s errors [12,13]. Nevertheless, the drawbacks of vision sensors are that they cannot work under different light conditions and are affected by rain, fog, and snowy weather. An odometer can also aid the INS in autonomous navigation applications by providing the vehicle’s forward velocity; however, it is affected by slippery roads, an unequal diameter, and unequal pressure [14].

In contrast, radar is a low-cost sensor that works in different weather and light conditions. Thus, it is known as an all-weather sensor. Therefore, the research in this paper is based on the fusion of radar and an INS in environments that are challenging for a GNSS. Furthermore, the motion constraints of a land vehicle [15] are also applied for better performance, e.g., the non-holonomic constraint (NHC) [15], zero-velocity update (ZUPT) [16], and the zero-integrated heading rate (ZIHR) [16].

Radar is mainly used in adaptive cruise control (ACC) to detect the ranges and relative speeds of surrounding vehicles and objects to make autonomous driving safe.

There are two types of radar: 360° radar and static radar. The 360° radar rotates 360 degrees to scan the surrounding environment. It is known as imaging radar since it returns only the range, azimuth, and density value for each detected object. In contrast, static radar does not rotate and has a limited field of view. There are two types of static radar. The first type, continuous wave (CW) radar, can measure the Doppler velocity between an object and the radar unit, whereas the second type can measure the range and the Doppler velocity of the objects; this type is known as frequency modulated CW (FMCW) radar. Both 360° radar and static radar are used in autonomous navigation applications.

Elkholy et al. [17,18] applied the oriented fast and rotated brief (ORB) technique to detect and match the objects from 360° radar frames to estimate the transition and rotation between every two frames. The estimated solution was integrated with an IMU through an EKF to improve the navigation solution. In Elkholy et al. [19], FMCW radar was utilized, and a novel algorithm was adopted to estimate the vehicle’s relative position and rotation. With the knowledge of the vehicle’s average speed and radar data rate, the change in the range and azimuth between the objects in any two successive radar frames can be estimated. Then, the corresponding objects can be determined. The corresponding points were used to estimate the rotation and transition between any two radar frames. Then, the radar solution was integrated with an IMU for an ego-motion estimation. Rashed et al. [20] used an FMCW radar fixed at the center of the front bumper to estimate the distance traveled. The radar output was integrated with a reduced inertial sensor system (RISS). A RISS employs only three sensors (one vertical gyroscope and two horizontal accelerometers), whereas the full IMU contains three gyroscopes and three accelerometers. In Abosekeen et al. [21], the FMCW radar was fixed facing the ground to estimate the vehicle’s forward speed from the Doppler frequency of the reflected radar waves. A RISS solution was integrated with an IMU through an EKF to improve the navigation solution in GNSS-denied environments.

The iterative closest point (ICP) method is the most commonly used method for estimating the relative transition and rotation between any two radar frames by minimizing the distance between point clouds [22,23,24]. Normal distribution transform (NDT) is another technique that relies on building a normal distribution model for point clouds in every radar frame. These models are then matched to estimate the vehicle’s ego-motion [25].

To improve the accuracy of the vehicle’s position, a predefined map was utilized to correct the integrated solution by matching the integrated position with map links. The predefined map can be generated using sensors such as cameras, lidar, or radar [26]. However, using these sensors to make the maps is time-consuming due to the high computational cost and the high volume of data to be stored. The best solution is to use navigation maps such as Google Maps or OpenStreetMap (OSM) [27]. OSM was created by volunteers using standard surveying techniques. OSM provides rich information, including road links, buildings, traffic signs, the number of lanes, and other data. Radar frames can be matched with predefined digital maps to estimate the vehicle’s transition and rotation [28].

The first step in map matching is to determine the vehicle’s location on the map. In other words, to select the correct map link where the position will be projected. Different algorithms can be utilized to determine this map link. Geometrical and topological techniques depend on finding the distances between the position and the map links, and then the map link with the minimum distance will be selected [29]. The second technique is the probabilistic algorithm. This algorithm draws a confidence ellipse from the position’s standard deviation and determines which map links intersect with this ellipse [30]. Another technique to determine the map link is to use the fuzzy logic algorithm. The fuzzy logic algorithm takes the inputs from the navigation sensors and returns the map links as likelihoods [31]. The main difference between the previous techniques is the method of selecting the correct map link for matching.

This research focuses on radar and INS integration to meet the continuity requirements, compensate for GNSS outages, and improve the navigation solution for land vehicles. Moreover, the integrated solution is corrected by applying map matching through open access OSM. Section 2 describes the methodology and the integration scheme used in this research. Section 3 shows the experimental work and the results from the proposed method. Finally, Section 4 concludes the presented work.

2. Methodology

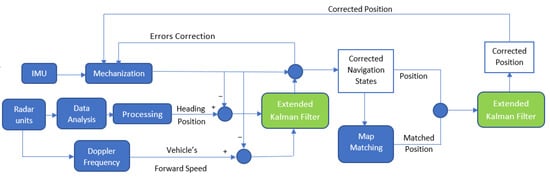

The navigation system proposed in this research relies on four FMCW radar units, an IMU, and OpenStreetMap (OSM). The four radar units were used for the ego-motion estimation, while two of the four units were used to estimate the vehicle’s forward speed. The map-matching technique was applied at the end to correct the integrated position to OSM. A successive EKF was implemented to correct the navigation solution and limit the IMU errors. Figure 1 shows a block diagram of the proposed method. The next subsections describe the techniques applied to use radar for an ego-motion estimation, to estimate the vehicle’s forward velocity, in the EKF prediction and update stages, and in map matching.

Figure 1.

Flow chart of the developed radar/INS/map-matching integration technique during GNSS outage.

2.1. Radar for Ego-Motion Estimation

The four static radar units were fixed on the roof of the vehicle to collect and detect the objects around the vehicle. The four frames from all the radar units were synchronized and combined into one frame representing the environment around the vehicle. Thus, the four static radar units worked as a 360° radar unit.

Before processing the radar data, noisy data and outliers were detected and removed by applying a set of constraints. First, the close and far points were removed because these points were considered ghost points. The Doppler frequency was also used to differentiate between static and moving objects. The moving objects were removed from the radar frames. Finally, a threshold was applied to remove the outliers with intensity values lower than the threshold.

After the data analysis and preprocessing, data association was applied to find the corresponding points between radar frames. The change in the range and azimuth between any two corresponding points in two successive radar frames could be estimated based on the knowledge of the radar data rate (about 8 Hz) and the average vehicle speed.

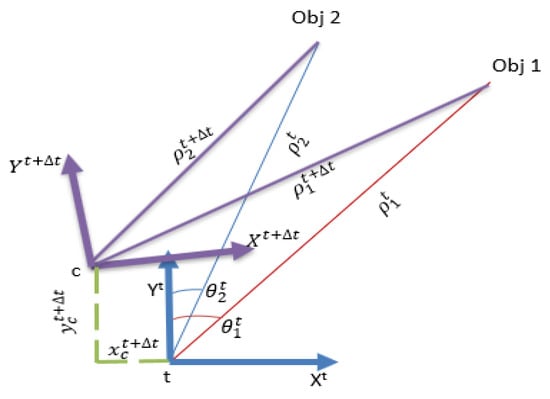

A novel algorithm was implemented to estimate the vehicle’s ego-motion (Figure 2). This algorithm calculates the coordinates of the objects (in the first radar frame. It then uses these calculated coordinates and the range in the second frame to estimate the coordinates of the radar center (, ). The difference between the calculated radar center in the second frame and the one in the first frame represents the transition between the two frames.

Figure 2.

The proposed method for estimating the vehicle’s ego-motion.

The rotation between the two frames can be estimated by calculating the difference between the bearings of the objects at a time () and time (

If we have multiple corresponding objects between any two radar frames, the least squares method is applied to obtain the final transformation and rotation between the two frames.

where = is the vector containing the corrections for the transition (dx and dy) and the rotation ( between any two radar frames.

A = the design matrix.

= the measurement variance-covariance matrix.

= the misclosure vector.

2.2. Estimating the Vehicle’s Forward Speed

FMCW radar provides information about the Doppler frequency between the radar unit and the detected objects. Therefore, the relative velocity between the radar and the objects can be estimated from the Doppler frequency. This relative velocity will be the vehicle’s forward velocity if the detected objects are static.

There were four radar units fixed on the roof of the vehicle. The two radar units at the front right and left of the vehicle were chosen to estimate the vehicle’s forward velocity. Then, the average forward velocity was calculated from the two units. The radar provides the relative velocity of each object based on the Doppler frequency. The relative velocity value could be positive if the objects are moving far from the vehicle and negative if the objects are coming toward the vehicle. The vehicle velocity estimation depends on the static objects, while the moving objects act as outliers and should be excluded. The static objects will appear to approach the vehicle through the two front radar units. Therefore, only objects with a negative relative velocity were kept to exclude the objects moving away from the vehicle.

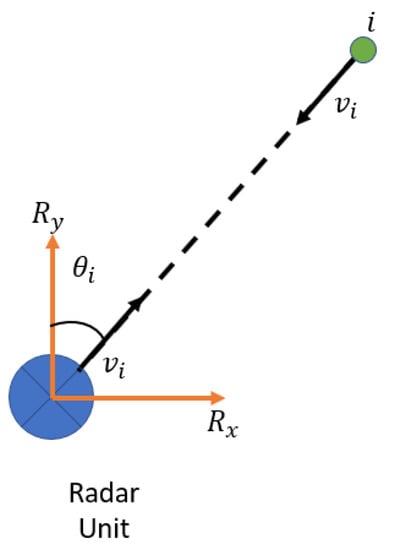

If a static object () is detected by a radar unit, as shown in Figure 3, the relative velocity between this object and the radar ( will represent the absolute radar velocity ( since the static object’s velocity is zero. Using the bearing angle (), the radar velocity can be decomposed into the and components in the radar frame using the equation:

where and are the radar velocity components in the radar frame ( and ).

Figure 3.

The relative motion between the radar unit and a static object ().

Suppose there are multiple objects () in the radar frame. Then, the radar velocities for and in radar local frame can be estimated. The least squares estimation is applied to estimate a reliable solution.

Now, the magnitude () and the orientation angle () can be calculated from and .

The final step is to convert the radar velocity () from the radar frame into the vehicle frame to estimate the vehicle’s forward speed ().

where is the boresight angle between the radar and vehicle frames.

Since the IMU body frame was aligned with the vehicle frame, the vehicle’s estimated forward speed is used to estimate the vehicle’s transition in the east and north directions. Then, the updated position of the vehicle can be estimated.

where and are the transitions in the east and north directions at time , is the time difference between the radar frames, and is the heading angle at time .

2.3. Radar/INS Integration

In open-sky environments, loosely coupled GNSS/INS integration was used to estimate the vehicle’s navigation solution. However, a successive Kalman filter was implemented in case of a GNSS outage. First, the radar solution was integrated with the INS solution through a closed-loop EKF. Then, the corrected position, obtained via map matching, was also integrated through an EKF to aid the IMU and overcome long-time errors. One of the practical aspects of multi-sensor fusion is data synchronization, which can be achieved in various ways, such as interpolation or by searching for the closest values [32]. In our work, due to the high IMU rate compared to the radar rate, the algorithm searched for the closest IMU timestamp for each radar frame.

A KF consists of two phases (the prediction stage and the update stage). There are two main models needed for the KF. The system model is the INS model, and it is the core for the prediction stage to predict the error states and the covariance matrix. The other model is the observation model, which depends on radar to update the navigation filter to estimate the error state to correct the navigation solution. There are 15 error states ().

where is the position error, is the velocity error, is the attitude error, is the accelerometer bias, and is the gyroscope bias.

The system model in the continuous case is described as follows:

where is the time rate of change of the state vector, is the dynamic matrix, is the state vector, is the noise coefficient matrix, and is the system noise.

The system model in the discrete case is as follows:

where is the predicted state vector at the time ), is the transition matrix, is the state vector at the time (t), is the predicted covariance matrix at the time ), is the covariance matrix at the time (), is the system noise at the time (), and is the process noise matrix.

The observation model is the core of the update stage, and it depends on the other available sensors to aid the IMU; in this case, the estimated position and heading from the radar were used to build the observation model.

where represents the measurements, represents the design matrix, and represents the measurement noise.

The updated stage is as follows:

where is the gain matrix, is the covariance matrix of the measurement noise, is the updated state vector, and is the updated covariance matrix of the state vector.

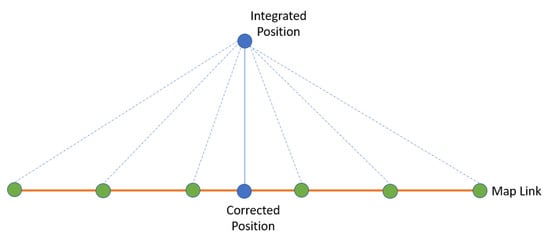

2.4. Map Matching

OSM is an open access map anyone can download, edit, or update. Maps of Calgary and Toronto were downloaded and employed in this research. The map-matching technique was applied in two steps. The first step was to select the correct map link. Geometrical and topological techniques were exploited to determine the correct map link. A map link consists of points or nodes. The distance between the integrated position and the nodes was calculated (Figure 4), and the map link with the minimum distance was selected. Another constraint that can be adapted to ensure the selected map link is correct is the azimuth. The integrated azimuth should be equal to the map link azimuth.

Figure 4.

Map-matching technique.

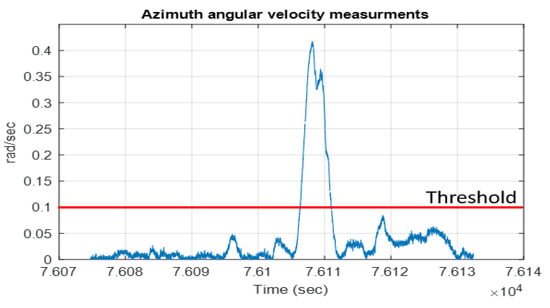

The second step was to project the integrated position onto the selected map link to find the vehicle’s corrected position. These two steps were implemented each time unless the vehicle began to turn. This turn could be detected by checking the azimuth angular velocity measurements. If the absolute azimuth angular velocity measurements were higher than a threshold, it meant that the vehicle had reached an intersection and was making a turn. Moreover, the azimuth angular velocity measurements could be utilized to determine if the vehicle was turning right or left, which aided in selecting the new map link (Figure 5).

Figure 5.

Angular velocity measurements.

3. Experimental Work and Results

Two real datasets were collected. The first one was collected in a suburban area in Calgary, Alberta, Canada, while the second data was collected in downtown Toronto, Ontario, Canada. For the Calgary dataset, four FMCW UMRR-11 Type 132 radar units were mounted on the vehicle’s roof [33]. In addition, the Xsens MTi-G-710 module was used to collect the IMU and GNSS measurements [34], and the reference data were collected by a Novatel SPAN-SE system with an IMU-FSAS (Figure 6 and Figure 7). On the other hand, in the Toronto test, four FMCW UMRR-96 Type 153 radar units were used [35]. In addition, the u-blox ZED-F9R module was used to collect the IMU and GNSS measurements [36]. Finally, the reference data were collected by a Novatel PwrPak7 system with an IMU-KVH1750.

Figure 6.

The four UMRR-11 Type 132 radar units used to collect the data in Calgary.

Figure 7.

The reference system and the Xsens unit in the Calgary test.

The first algorithm applied was to estimate the vehicle’s forward velocity from two of the four radar units. Data from the two radar units at the front right and left of the vehicle were utilized for this purpose. Then, radar odometry was applied to the four radar units. Finally, the radar solution was integrated with the INS, and map matching was applied to the integrated position. The next subsections show the results for the two datasets.

3.1. Calgary Data

For the Calgary data, Figure 8 shows the estimated average forward velocity from the two radar units compared to the forward velocity from the reference system. The RMSE for the estimated average forward velocity is 1.08 m/s, the distance traveled was 2.1 km, and the test duration was 9.98 min.

Figure 8.

The estimated average forward speed from the radar unit versus the reference forward speed for the Calgary data.

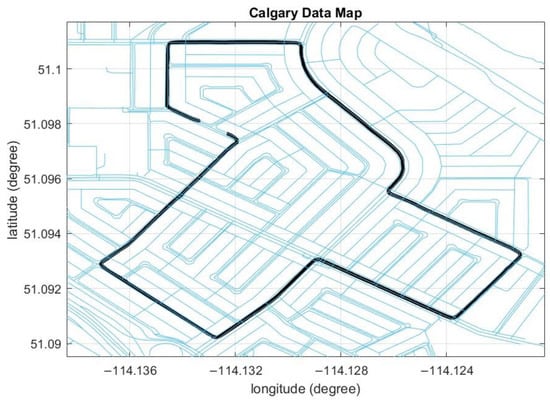

The map on which the data was collected was downloaded from the OSM website for map matching (Figure 9). A simulated GNSS outage was introduced for 90 s, and the corrected position after applying the map-matching technique is shown in Figure 10.

Figure 9.

The OSM for Calgary data. The black line is the reference trajectory.

Figure 10.

The estimated trajectory from radar/INS integration with map matching during a 90 s simulated GNSS outage in Calgary data.

Table 1 shows the position RMSE from the radar/INS integration through a closed-loop EKF with map matching during four simulated GNSS signal outages lasting 30 to 90 s. The position RMSE values are 2.69 m and 22.89 m for the 30 s and 90 s GNSS outages, respectively, while the percentage errors are about 1 % and 2.46 % for the traveled distances of 259.34 m and 929.82 m, respectively. These results indicate that applying the map-matching technique corrected the vehicle’s integrated position since the integrated position was projected onto the nearest point on the map lines. Therefore, the projected position was close to the reference position.

Table 1.

Position RMSE from radar/INS integration with map matching during different simulated GNSS outage durations.

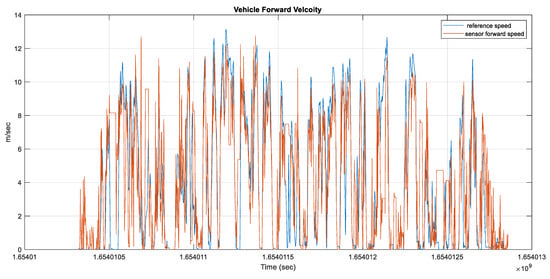

3.2. Toronto Data

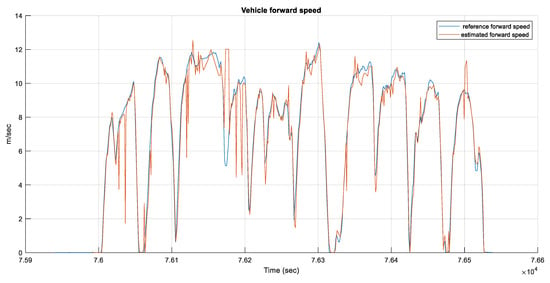

The vehicle’s forward velocity was estimated, as shown in Figure 11. The RMSE for the estimated average forward velocity is 2.65 m/s, the distance traveled was 9.94 km, and the test duration was 42.7 min.

Figure 11.

The estimated average forward speed from the radar unit versus the forward reference speed for Toronto data.

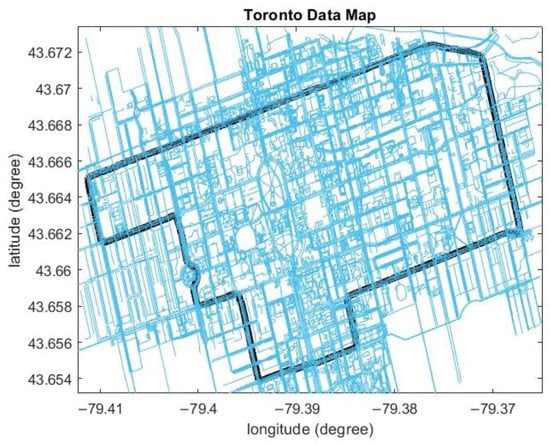

Two different simulated GNSS signal outages were introduced to prove the efficiency of the proposed algorithm. The simulated outage duration ranged from 30 s to 180 s. Finally, the road map was downloaded to apply the map-matching technique (Figure 12).

Figure 12.

The OSM for Toronto data. The black line is the reference trajectory.

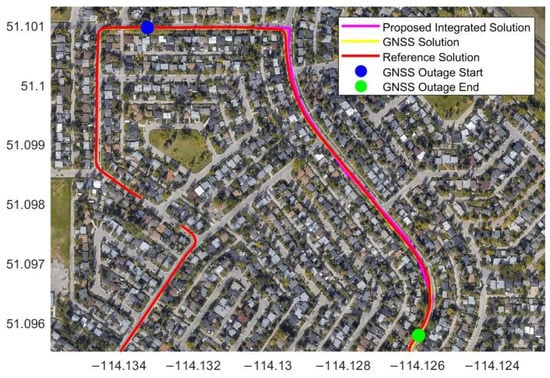

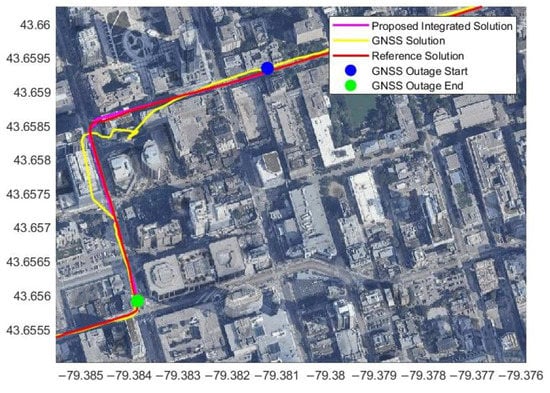

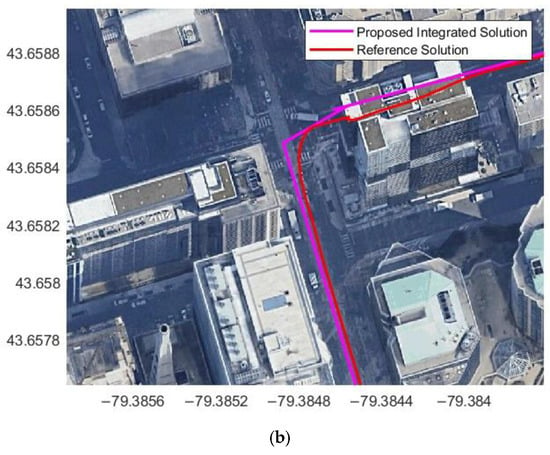

Since these data were collected in the downtown area, the GNSS signal suffered from blockage and multipath errors. However, Figure 13 shows that the radar/INS integrated solution with map matching is better than the GNSS solution, demonstrating the benefit of the proposed integrated solution.

Figure 13.

The estimated trajectory from radar/INS integration with map matching during three minutes of a simulated GNSS signal outage in Toronto data.

From Table 2, the position percentage error is less than 0.5% for the three minutes of the simulated GNSS outage. The position RMSE is 2.72 m, and the distance traveled was 611.69 m for the three-minute outage (Figure 13). The percentage errors are 0.61%, 0.86%, and 1.01% for the 60 s, 90 s, and 120 s GNSS outages, respectively, while the traveled distance ranged from 204 m to 304 m.

Table 2.

Position RMSE from radar/INS integration with map matching during different durations of a simulated GNSS signal outage.

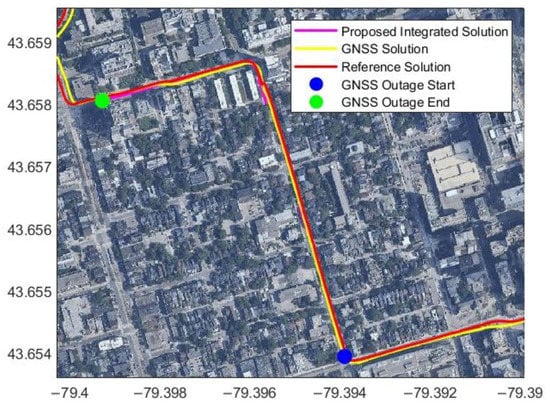

Figure 14 shows the integrated solution with map matching during another simulated GNSS outage. Table 3 shows the results for the position error for this GNSS simulated outage. The GNSS outage duration was from 30 s to 180 s. The position RMSE is 4.47 m for a traveled distance of 845.74 m, and the percentage error during the three-minute GNSS signal outage is 0.53%. For 60 s, 90 s, and 120 s of GNSS outage, the percentage errors are 0.71%, 0.64%, and 0.61%, respectively, and the traveled distance was from 495.16 m to 679.49 m.

Figure 14.

Another example of the estimated trajectory from radar/INS integration with map matching during three minutes of a simulated GNSS signal outage using Toronto data.

Table 3.

Position RMSE from radar/INS integration with map matching during different simulated GNSS signal outage durations.

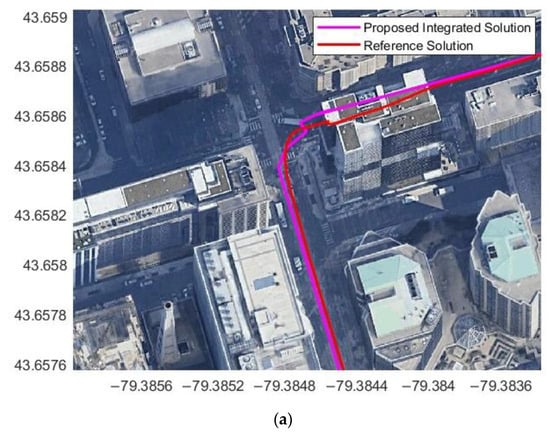

3.3. Enable and Disable Map Matching

The map-matching technique was applied during the GNSS outage. However, at the road intersections, the map-matching algorithm was disabled since the map lines intersected at 90° (straight lines) while the vehicle moved from one line to another line through a curve. Therefore, the map-matching technique was disabled during intersections and enabled when the vehicle left the intersection and moved in a straight line, as shown in Figure 15.

Figure 15.

Radar/INS integrated solution at the intersection (a) with map matching and (b) without map matching.

4. Conclusions

This research proposed a new technique based on radar/INS integration and map matching for robust vehicle navigation in GNSS-challenging environments. The proposed system consists of four static FMCW radar units and OSM for map matching. Two radar units were used to estimate the vehicle’s forward speed, while the four radar units were used to estimate the vehicle’s transition and rotation. The radar solution was first integrated with the INS solution, and then map-matching techniques were employed to correct the integrated position and obtain the final navigation solution. Road intersections were detected from the azimuth angular velocity measurements, and map matching was deactivated at the intersections. Real driving data were collected to test the proposed method, and simulated GNSS signal outages were introduced. The percentage RMSE error was less than 1% of the traveled distance in most cases during two and three minutes of GNSS signal outage.

One of the limitations of the proposed algorithm is that the map accuracy will limit the final accuracy. Additionally, the OSM maps are open source so anyone can edit or update the map. Therefore, it is recommended to use high-definition maps to improve the corrected position accuracy. In future work, a comparison of the estimated forward velocity from the radar units with the forward velocity from the vehicle odometer will be investigated. Furthermore, the use of one EKF or different sensor fusion filters and architectures will be studied. Finally, a smart module will be developed to automatically switch from GNSS/INS integration to radar/INS integration based on factors such as the satellite geometry and the number of visible satellites.

Author Contributions

Conceptualization, M.E. (Mohamed Elkholy), M.E. (Mohamed Elsheikh), and N.E.-S.; methodology, M.E. (Mohamed Elkholy); software, M.E. (Mohamed Elkholy) and M.E. (Mohamed Elsheikh); formal analysis, M.E. (Mohamed Elkholy) and M.E. (Mohamed Elsheikh); investigation, M.E. (Mohamed Elkholy); data curation, M.E. (Mohamed Elkholy); writing—original draft preparation, M.E. (Mohamed Elkholy); writing—review and editing, M.E. (Mohamed Elsheikh) and N.E.-S.; supervision, N.E.-S.; funding acquisition M.E. (Mohamed Elkholy) and N.E.-S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by funding from Prof. Naser El-Sheimy from the Natural Sciences and Engineering Research Council of Canada’s CREATE-MSS Program (Grant no. 10017043) and the Canada Research Chairs program (Grant no. 10012432).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study is available on request from the corresponding author. The data are not publicly available due to its large size.

Acknowledgments

Thanks to the Navigation and Instrumentation Research Lab (NAVINST) at the Royal Military College of Canada for collecting the Toronto data.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rabbou, M.A.; El-Rabbany, A. Integration of GPS Precise Point Positioning and MEMS-Based INS Using Unscented Particle Filter. Sensors 2015, 15, 7228–7245. [Google Scholar] [CrossRef] [PubMed]

- Chiang, K.W.; Duong, T.T.; Liao, J.K. The Performance Analysis of a Real-Time Integrated INS/GPS Vehicle Navigation System with Abnormal GPS Measurement Elimination. Sensors 2013, 13, 10599–10622. [Google Scholar] [CrossRef] [PubMed]

- Iqbal, U.; Georgy, J.; Korenberg, M.J.; Noureldin, A. Augmenting Kalman Filtering with Parallel Cascade Identification for Improved 2D Land Vehicle Navigation. In Proceedings of the 2010 IEEE 72nd Vehicular Technology Conference-Fall, Ottawa, ON, Canada, 6–9 September 2010; pp. 1–5. [Google Scholar]

- Al Bitar, N.; Gavrilov, A.I. Comparative Analysis of Fusion Algorithms in a Loosely-Coupled Integrated Navigation System on the Basis of Real Data Processing. Gyroscopy Navig. 2019, 10, 231–244. [Google Scholar] [CrossRef]

- Li, T.; Zhang, H.; Niu, X.; Gao, Z. Tightly-Coupled Integration of Multi-GNSS Single-Frequency RTK and MEMS-IMU for Enhanced Positioning Performance. Sensors 2017, 17, 2462. [Google Scholar] [CrossRef] [PubMed]

- Falco, G.; Pini, M.; Marucco, G. Loose and Tight GNSS/INS Integrations: Comparison of Performance Assessed in Real Urban Scenarios. Sensors 2017, 17, 255. [Google Scholar] [CrossRef] [PubMed]

- Gao, L.; Xiong, L.; Xia, X.; Lu, Y.; Yu, Z.; Khajepour, A. Improved Vehicle Localization Using On-Board Sensors and Vehicle Lateral Velocity. IEEE Sens. J. 2022, 22, 6818–6831. [Google Scholar] [CrossRef]

- Gao, Y.; Liu, S.; Atia, M.M.; Noureldin, A. INS/GPS/LiDAR Integrated Navigation System for Urban and Indoor Environments Using Hybrid Scan Matching Algorithm. Sensors 2015, 15, 23286–23302. [Google Scholar] [CrossRef] [PubMed]

- Zou, Q.; Sun, Q.; Chen, L.; Nie, B.; Li, Q. A Comparative Analysis of LiDAR SLAM-Based Indoor Navigation for Autonomous Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 6907–6921. [Google Scholar] [CrossRef]

- Chang, L.; Niu, X.; Liu, T.; Tang, J.; Qian, C. GNSS/INS/LiDAR-SLAM Integrated Navigation System Based on Graph Optimization. Remote Sens. 2019, 11, 1009. [Google Scholar] [CrossRef]

- Song, K.-T.; Chiu, Y.-H.; Kang, L.-R.; Song, S.-H.; Yang, C.-A.; Lu, P.-C.; Ou, S.-Q. Navigation Control Design of a Mobile Robot by Integrating Obstacle Avoidance and LiDAR SLAM. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 1833–1838. [Google Scholar]

- Hide, C.; Botterill, T.; Andreotti, M. Low Cost Vision-Aided IMU for Pedestrian Navigation. In Proceedings of the 2010 Ubiquitous Positioning Indoor Navigation and Location Based Service, Kirkkonummi, Finland, 14–15 October 2010; pp. 1–7. [Google Scholar]

- Panahandeh, G.; Jansson, M. Vision-Aided Inertial Navigation Based on Ground Plane Feature Detection. IEEE/ASME Trans. Mechatron. 2014, 19, 1206–1215. [Google Scholar] [CrossRef]

- Borenstein, J.; Feng, L. Measurement and Correction of Systematic Odometry Errors in Mobile Robots. IEEE Trans. Robot. Autom. 1996, 12, 869–880. [Google Scholar] [CrossRef]

- Dissanayake, G.; Sukkarieh, S.; Nebot, E.; Durrant-Whyte, H. The Aiding of a Low-Cost Strapdown Inertial Measurement Unit Using Vehicle Model Constraints for Land Vehicle Applications. IEEE Trans. Robot. Autom. 2001, 17, 731–747. [Google Scholar] [CrossRef]

- Liu, C.Y.; Lin, C.A.; Chiang, K.W.; Huang, S.C.; Chang, C.C.; Cai, J.M. Performance Evaluation of Real-Time MEMS INS/GPS Integration with ZUPT/ZIHR/NHC for Land Navigation. In Proceedings of the 2012 12th International Conference on ITS Telecommunications, ITST 2012, Taipei, Taiwan, 5–8 November 2012; pp. 584–588. [Google Scholar]

- Elkholy, M.; Elsheikh, M.; El-Sheimy, N. Radar-Based Localization Using Visual Feature Matching. In Proceedings of the 34th International Technical Meeting of the Satellite Division of the Institute of Navigation, ION GNSS+ 2021, St. Louis, MO, USA, 20–24 September 2021; pp. 2353–2362. [Google Scholar] [CrossRef]

- Elkholy, M.; Elsheikh, M.; El-Sheimy, N. Radar/IMU Integration Using Visual Feature Matching. In Proceedings of the 35th International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS+ 2022), Denver, Co, USA, 19–23 September 2022; pp. 1999–2010. [Google Scholar] [CrossRef]

- Elkholy, M.; Elsheikh, M.; El-Sheimy, N. Radar/INS Integration for Pose Estimation in GNSS-Denied Environments. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, XLIII-B1-2022, 137–142. [Google Scholar] [CrossRef]

- Rashed, M.A.; Abosekeen, A.; Ragab, H.; Noureldin, A.; Korenberg, M.J. Leveraging FMCW-Radar for Autonomous Positioning Systems: Methodology and Application in Downtown Toronto. In Proceedings of the 32nd International Technical Meeting of the Satellite Division of the Institute of Navigation, ION GNSS+ 2019, Miami, FL, USA, 16–20 September 2019; pp. 2659–2669. [Google Scholar] [CrossRef]

- Abosekeen, A.; Noureldin, A.; Korenberg, M.J. Utilizing the ACC-FMCW Radar for Land Vehicles Navigation. In Proceedings of the 2018 IEEE/ION Position, Location and Navigation Symposium, PLANS 2018-Proceedings, Monterey, CA, USA, 23–26 April 2018; pp. 124–132. [Google Scholar]

- Nießner, M.; Dai, A.; Fisher, M. Combining Inertial Navigation and ICP for Real-Time 3D Surface Reconstruction. Eurographics (Short Pap.) 2014, 13–16. [Google Scholar] [CrossRef]

- Xu, X.; Luo, M.; Tan, Z.; Zhang, M. Improved ICP Matching Algorithm Based on Laser Radar and IMU. In Proceedings of the 2018 5th IEEE International Conference on Cloud Computing and Intelligence Systems (CCIS), Nanjing, China, 23–25 November 2018; pp. 517–520. [Google Scholar]

- Censi, A. An ICP Variant Using a Point-to-Line Metric. In Proceedings of the 2008 IEEE International Conference on Robotics and Automation, Pasadena, CA, USA, 19–23 May 2008; pp. 19–25. [Google Scholar]

- Biber, P.; Strasser, W. The Normal Distributions Transform: A New Approach to Laser Scan Matching. In Proceedings of the Proceedings 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No.03CH37453), Las Vegas, NV, USA, 27–31 October 2003; IEEE: New York, NY, USA; Volume 3, pp. 2743–2748. [Google Scholar]

- Levinson, J.; Thrun, S. Robust Vehicle Localization in Urban Environments Using Probabilistic Maps. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 4372–4378. [Google Scholar]

- Haklay, M.; Weber, P. OpenStreetMap: User-Generated Street Maps. IEEE Pervasive Comput. 2008, 7, 12–18. [Google Scholar] [CrossRef]

- Park, Y.; Kim, J.; Kim, A. Radar Localization and Mapping for Indoor Disaster Environments via Multi-modal Registration to Prior LiDAR Map. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 1307–1314. [Google Scholar] [CrossRef]

- Greenfeld, J.S. Matching GPS Observations to Locations on a Digital Map. Transp. Res. Board 2002, 22, 576–582. [Google Scholar]

- Scott, C. Improved GPS Positioning for Motor Vehicles through Map Matching. Proc. ION GPS 1994, 2, 1391–1400. [Google Scholar]

- Syed, S.; Cannon, M.E. Fuzzy Logic Based-Map Matching Algorithm for Vehicle Navigation System in Urban Canyons. Proc. Natl. Tech. Meet. Inst. Navig. 2004, 2004, 982–993. [Google Scholar]

- Liu, W.; Xia, X.; Xiong, L.; Lu, Y.; Gao, L.; Yu, Z. Automated Vehicle Sideslip Angle Estimation Considering Signal Measurement Characteristic. IEEE Sens. J. 2021, 21, 21675–21687. [Google Scholar] [CrossRef]

- Radar UMRR-11 Type 132 Data Sheet. Available online: https://autonomoustuff.com/products/sms-automotive-radar-umrr-11 (accessed on 24 April 2023).

- Xsens MTi-G-710 Data Sheet. Available online: https://www.xsens.com/hubfs/Downloads/Leaflets/MTi-G-710.pdf (accessed on 24 April 2023).

- Radar UMRR-96 Type 153 Data Sheet. Available online: https://autonomoustuff.com/products/smartmicro-automotive-radar-umrr-96 (accessed on 23 April 2023).

- u-blox ZED-F9R module-Data Sheet. Available online: https://www.u-blox.com/en/product/zed-f9r-module (accessed on 17 May 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).