Abstract

Since its introduction, the Transformer model has dramatically influenced many fields of machine learning. Time series prediction has also been significantly affected: Transformer-family models have flourished, and many variants have been proposed. These models mainly rely on attention mechanisms for feature extraction and use multi-head attention to strengthen it. However, multi-head attention is essentially a simple superposition of identical attention computations, so it does not guarantee that the model captures different features. On the contrary, it may lead to substantial information redundancy and wasted computational resources. To ensure that the Transformer captures information from multiple perspectives and to increase the diversity of the features it captures, this paper proposes, for the first time, a hierarchical attention mechanism that addresses two shortcomings of traditional multi-head attention: the limited diversity of the captured information and the lack of information interaction among heads. Additionally, global feature aggregation with graph networks is used to mitigate inductive bias. Finally, we conducted experiments on four benchmark datasets, and the results show that the proposed model outperforms the baseline models on several metrics.

1. Introduction

Originally designed to solve machine translation problems [,], the Transformer [,] model has been widely introduced into computer vision (CV) [,,], natural language processing (NLP) [], speech processing [,,], audio processing [,], chemistry [], and life sciences [] due to its powerful modelling capabilities and applicability. It has contributed significantly to the development of these fields.

In computer vision, Convolutional Neural Networks (CNNs) [,,] have traditionally been the primary processing tool. Convolution is well suited to regular, high-dimensional data and allows features to be extracted automatically. However, convolution suffers from an obvious locality constraint: it assumes that each point in space is associated only with its neighbouring grid cells, whereas distant cells are not associated with each other. Enlarging the convolution kernel alleviates this limitation to some extent, but it cannot solve the problem fundamentally. After the Transformer was introduced, researchers began to bring its architecture into computer vision. Because the Transformer has a larger receptive field than a CNN, it captures richer global information and can better understand the image as a whole. Ramachandran et al. [] constructed a vision model without convolution, replacing it with full attention to overcome the locality constraint. In addition, the Transformer has shown excellent performance in other CV areas such as image classification [,], object detection [,], semantic segmentation [], image processing [], and video understanding [].

Sequential data are an even more natural fit for the Transformer than images. Traditional time series prediction has mostly relied on Recurrent Neural Network (RNN) [,] models, of which the most influential include the Gated Recurrent Unit (GRU) [] and Long Short-Term Memory (LSTM) [,] networks. For example, Mou et al. [] proposed a Time-Aware LSTM (T-LSTM) that enhances temporal information. Its main idea is to divide the memory state into short-term and long-term memory, adjust the influence of the short-term memory according to the time interval between inputs (the longer the interval, the smaller its influence), and then recombine the adjusted short-term memory with the long-term memory into a new memory state. However, the emergence of the Transformer soon challenged the dominance of RNN-family models in time series prediction, because RNNs face the following bottlenecks in long-term prediction problems.

(1) Parallelism bottleneck: RNN-family models require the input data to be arranged in temporal order and processed sequentially in that order. This serial structure has the advantage of inherently encoding positional relationships, but it also prevents the model from being computed in parallel. Especially for long sequences, the inability to parallelise means more time and cost.

(2) Gradient bottleneck []: RNNs frequently suffer from vanishing or exploding gradients during training. Most neural network models optimise their parameters by computing gradients, and vanishing or exploding gradients can prevent the model from converging or make it converge too slowly. For the RNN family, it is therefore difficult to improve the model by increasing the number of iterations or the size of the network.

(3) Memory bottleneck: At each time step t, an RNN takes a positional input $x_t$ and a hidden input $h_{t-1}$, which are fused according to the model's internal rules to produce a hidden state $h_t$. Therefore, when the sequence is too long, $h_t$ retains almost nothing of the earliest positional inputs; that is, the “forgetting” phenomenon occurs.
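The memory and gradient bottlenecks can be illustrated numerically. The sketch below assumes a hypothetical scalar RNN, $h_t = \tanh(w h_{t-1} + u x_t)$ with $h_0 = 0$ and weights chosen purely for illustration, and estimates by finite differences how strongly the final hidden state depends on the first input. Around an all-zero input this sensitivity is approximately $u\,w^{T-1}$, so for $|w| < 1$ it shrinks geometrically with the sequence length $T$.

```python
import math


def last_hidden_state(xs, w=0.9, u=1.0):
    """Run a hypothetical scalar tanh RNN h_t = tanh(w * h_{t-1} + u * x_t) from h_0 = 0."""
    h = 0.0
    for x in xs:
        h = math.tanh(w * h + u * x)
    return h


def sensitivity_to_first_input(length, w=0.9, u=1.0, eps=1e-6):
    """Finite-difference estimate of d h_T / d x_1 around an all-zero input sequence."""
    xs = [0.0] * length
    base = last_hidden_state(xs, w, u)
    xs[0] += eps  # perturb only the earliest input
    return (last_hidden_state(xs, w, u) - base) / eps


for T in (10, 50, 100):
    print(T, sensitivity_to_first_input(T))
```

For $w = 0.9$, the estimate for $T = 10$ is close to $0.9^9 \approx 0.39$, whereas for $T = 100$ it is on the order of $10^{-5}$: the first input has effectively been forgotten, and gradients flowing back to it vanish.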

Compared with the RNN family, the Transformer represents the positional relationships within a sequence through positional encoding instead of feeding the data recursively. This makes the model more flexible and allows maximal parallelisation over time series data. Because every position is accessed directly rather than through a chain of hidden states, information from early positions is not forgotten; each position has equal status for the Transformer. In addition, using an attention mechanism to extract internal features allows the model to focus on important information and ignore irrelevant and redundant information, and, because positions interact directly rather than through a long recurrent chain, the vanishing and exploding gradients typical of RNNs are avoided. Given these advantages, many researchers are now applying Transformer models to time series tasks.
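The paper does not state which positional encoding Metaformer uses. As an illustration only, the following sketch implements the fixed sinusoidal encoding of the original Transformer, $PE_{(pos,2i)} = \sin(pos/10000^{2i/d})$ and $PE_{(pos,2i+1)} = \cos(pos/10000^{2i/d})$, which gives every timestamp a distinct vector that is added to its token embedding. The function name is our own.

```python
import numpy as np


def sinusoidal_positional_encoding(length: int, d_model: int) -> np.ndarray:
    """Return a (length, d_model) matrix of sinusoidal position encodings."""
    if d_model % 2 != 0:
        raise ValueError("d_model must be even")
    pos = np.arange(length)[:, None]                 # (length, 1) positions
    two_i = np.arange(0, d_model, 2)[None, :]        # even dimension indices 2i
    angles = pos / np.power(10000.0, two_i / d_model)
    pe = np.zeros((length, d_model))
    pe[:, 0::2] = np.sin(angles)                     # even dimensions use sine
    pe[:, 1::2] = np.cos(angles)                     # odd dimensions use cosine
    return pe


pe = sinusoidal_positional_encoding(length=96, d_model=16)
tokens = np.random.default_rng(0).standard_normal((96, 16))
encoded = tokens + pe  # position information is injected additively
```

Because the encoding is added rather than produced step by step, all positions can be processed in parallel.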

2. Research Background

The Transformer is a typical encoder–decoder sequence-to-sequence [] model, a structure well suited to processing sequence data. Several researchers have improved the Transformer to meet the needs of more complex applications. For example, Kitaev et al. [] proposed the Reformer model, which uses Locality-Sensitive Hashing (LSH) attention to reduce the complexity of the original model from $O(L^2)$ to $O(L \log L)$, where $L$ is the sequence length. Zhou et al. [] proposed the Informer model for Long Sequence Time-series Forecasting (LSTF), which accurately captures long-range dependencies between output and input and exhibits high predictive power. Wu et al. [] proposed the Autoformer model, which uses a deep decomposition architecture and an auto-correlation mechanism to improve LSTF accuracy. Autoformer achieves good results even when the prediction horizon is much longer than the input series, i.e., it can predict the longer-term future from limited information. Zhou et al. [] proposed the FEDformer model, which applies the attention mechanism in the frequency domain and serves as an essential complement to time-domain analysis.

The Transformer variants described above focus on reducing time and space complexity but do not address the diversity of the information they capture. The attention mechanism is the core feature extraction component of the Transformer. It is designed to let the model focus on more important information, which inevitably means some information is lost. Multi-head attention can compensate for this. However, because every head uses the same attention computation, there is no way to ensure that each head captures different vital features. Moreover, multi-head attention essentially divides the representation into multiple mutually independent subspaces, which completely cuts off the connections between them and leaves no interaction among the information captured by different heads. To address these problems, this paper proposes a hierarchical attention mechanism in which each layer uses a different attention mechanism to capture features, and higher layers reuse the information captured by lower layers, enhancing the Transformer's ability to perceive deeper information.

3. Research Methodology

3.1. Problem Description

The Transformer model was initially proposed by Vaswani et al. to solve the machine translation problem, so the Vanilla Transformer is better suited to textual data. Its basic processing unit is a word vector, and each word vector is called a token. In time series prediction, by contrast, the basic processing unit is a timestamp. To apply the Transformer to a time series problem, a reasonable approach is to encode the multivariate observations at each timestamp into one token vector. This is also how many mainstream Transformer-like models treat time series.

For convenience, we define the token dimension as d, the input length of the model as I, and the output length as O. The model's input can then be written as $\mathcal{X} \in \mathbb{R}^{I \times d}$ and its output as $\mathcal{Y} \in \mathbb{R}^{O \times d}$. This paper therefore aims to learn a mapping $f: \mathbb{R}^{I \times d} \rightarrow \mathbb{R}^{O \times d}$ from the input space to the output space.
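Concretely, each training sample pairs I consecutive timestamps with the O timestamps that immediately follow them. A minimal sliding-window sketch is shown below; the stride of 1 and the helper name `make_windows` are assumptions, since the paper does not specify how samples are drawn.

```python
import numpy as np


def make_windows(series: np.ndarray, input_len: int, output_len: int):
    """Slice a (T, d) series into input windows (n, I, d) and target windows (n, O, d)."""
    total, _ = series.shape
    n = total - input_len - output_len + 1   # number of windows with stride 1
    if n <= 0:
        raise ValueError("series is shorter than input_len + output_len")
    starts = np.arange(n)[:, None]
    x_idx = starts + np.arange(input_len)[None, :]               # I consecutive steps
    y_idx = starts + input_len + np.arange(output_len)[None, :]  # the O steps that follow
    return series[x_idx], series[y_idx]


series = np.arange(20 * 3, dtype=float).reshape(20, 3)  # toy series: T = 20, d = 3
X, Y = make_windows(series, input_len=8, output_len=4)
```

Each row of `X` is one element of the input space $\mathbb{R}^{I \times d}$ and the matching row of `Y` is its target in $\mathbb{R}^{O \times d}$.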

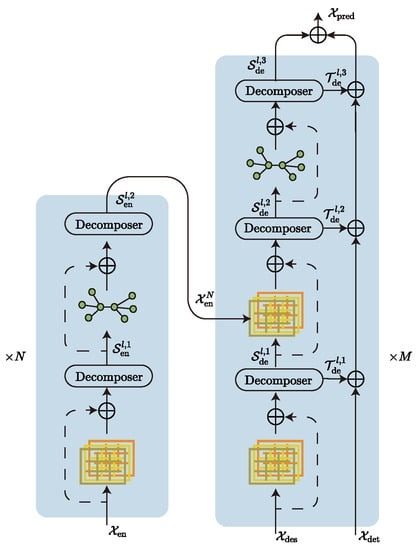

3.2. Model Architecture

The main body of our model (Figure 1) follows the Transformer architecture, and we added a decomposer based on Autoformer's sequence decomposition. The decomposer separates the trend-cyclical and seasonal parts of a series. Removing the trend-cyclical part allows the model to focus on the hidden periodic information of the series, and Wu et al. [] have shown that this decomposition is effective. The model uses an encoder–decoder structure: the encoder maps information from the input space to the feature space, and the decoder maps it from the feature space to the target space. Since both its input and its output are sequences, it is a typical sequence-to-sequence model. Inside the encoder and decoder, we replace the original multi-head attention with a hierarchical attention mechanism and the original feedforward network with a graph network, which, respectively, improve the diversity of the captured information and mitigate the model's token-uniformity inductive bias [,].

Figure 1.

Model Body Structure.

3.2.1. Decomposer

The main difficulty of time series forecasting lies in discovering the hidden trend-cyclical and seasonal information in the historical series. The trend-cyclical part records the overall trend of the series and largely determines its long-term evolution. The seasonal part records the hidden cyclical pattern of the series, which mainly appears as regular short-term fluctuations. It is generally difficult to predict these two components simultaneously. The basic idea is therefore to decompose them: the trend-cyclical part is extracted from the sequence by average pooling, and the seasonal part is obtained by removing the trend-cyclical part from the sequence. Algorithm 1 shows how the decomposer implements this.

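| Algorithm 1 Decomposer |

| Require: input sequence $\mathcal{X} \in \mathbb{R}^{L \times d}$ |

| Ensure: trend-cyclical part $\mathcal{X}_t \in \mathbb{R}^{L \times d}$ and seasonal part $\mathcal{X}_s \in \mathbb{R}^{L \times d}$ |

| 1: $\mathcal{X}_t = \mathrm{AvgPool}(\mathrm{Padding}(\mathcal{X}))$ |

| 2: $\mathcal{X}_s = \mathcal{X} - \mathcal{X}_t$ |

| 3: return $\mathcal{X}_t, \mathcal{X}_s$ |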

Here, $\mathcal{X} \in \mathbb{R}^{L \times d}$ is the input sequence of length L, and $\mathcal{X}_t$ and $\mathcal{X}_s$ are the decomposed trend-cyclical and seasonal parts, respectively. Padding ensures that the decomposed series keep the same dimensions as the input sequence.
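A minimal NumPy sketch of this decomposition follows. It assumes the same replicate-edge padding as Autoformer's series decomposition (the first and last time steps are repeated) and an odd moving-average window; 25 matches the window size reported in Section 4.2.2. The function name `series_decomp` is ours.

```python
import numpy as np


def series_decomp(x: np.ndarray, kernel_size: int = 25):
    """Split a (L, d) series into trend-cyclical and seasonal parts of the same shape."""
    if kernel_size % 2 == 0:
        raise ValueError("kernel_size must be odd so that the output keeps length L")
    pad = (kernel_size - 1) // 2
    # Padding: repeat the first and last time steps so the moving average keeps length L.
    padded = np.concatenate(
        [np.repeat(x[:1], pad, axis=0), x, np.repeat(x[-1:], pad, axis=0)], axis=0
    )
    # AvgPool with stride 1, computed from a cumulative sum over time.
    csum = np.cumsum(np.concatenate([np.zeros((1, x.shape[1])), padded], axis=0), axis=0)
    trend = (csum[kernel_size:] - csum[:-kernel_size]) / kernel_size
    seasonal = x - trend  # seasonal part = input minus trend-cyclical part
    return trend, seasonal


t = np.arange(200, dtype=float)
x = (0.05 * t + np.sin(2 * np.pi * t / 12))[:, None]  # linear trend plus a period-12 cycle
trend, seasonal = series_decomp(x, kernel_size=25)
```

Away from the edges, a linear trend passes through the moving average unchanged, so it ends up in `trend` while the oscillation is left mostly in `seasonal`.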

The decomposer module has a relatively simple structure. However, it can decompose the forecasting task into two subtasks, i.e., mining hidden periodic patterns and forecasting overall trends. This decomposition can reduce the difficulty of prediction to a certain extent and, thus, improve the final prediction results.

3.2.2. Encoder

The encoder encodes the input data, transforming it from the input space to the feature space. In the encoder, the decomposer acts more like a filter, because the encoder focuses on the seasonal part of the sequence and discards the trend-cyclical part. The input data first pass through a hierarchical attention layer for initial key feature extraction. The decomposer then extracts the seasonal features of the sequence, which are fed into the graph network to mitigate inductive bias. After N such layers are stacked, the resulting seasonal features serve as auxiliary inputs to the decoder. Algorithm 2 describes the computation procedure.

| Algorithm 2 Encoder |

| Require: |

| Ensure: |

|

Here, the input is the historical observation sequence, N denotes the number of stacked encoder layers, and the output is that of the N-th encoder layer. The procedure uses three operators: the decomposer, the graph network, and the hierarchical attention mechanism, whose concrete implementations are described later.

3.2.3. Decoder

The structure of the decoder is more complex than that of the encoder. Its internal modules are the same as the encoder's, but it has a multi-input structure and passes through two hierarchical attention computations and three sequence decompositions in turn. If the encoder is regarded as a feature extractor, the decoder is a feature fuser that fuses and corrects inputs from different sources to obtain the prediction sequence. The decoder has three primary input sources: the seasonal and trend-cyclical parts extracted from the original series, and the seasonal features captured by the encoder. The trend-cyclical and seasonal parts are computed relatively independently throughout; only at the final output does a linear layer fuse the two to obtain the final prediction. The computation process is described in Algorithm 3.

| Algorithm 3 Decoder |

| Require: |

| Ensure: |

|

Here, the original sequence, which is also the input to the encoder, is decomposed into trend-cyclical and seasonal parts before being fed into the decoder as its initial input.

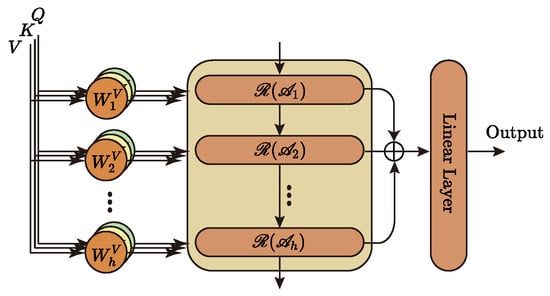

3.3. Hierarchical Attention Mechanism

As the first feature capture unit of Metaformer, the hierarchical attention mechanism is at the core of the model and therefore strongly affects all subsequent processing. Most Transformer-like models use multi-head attention for this first step of feature extraction. However, multi-head attention has significant drawbacks: (1) every head uses the same attention mechanism, which cannot guarantee the diversity of the captured information and may even miss some critical information; (2) each head works in a separate subspace, and the lack of information interaction between heads hinders a deep understanding of the information. We therefore propose, for the first time, a hierarchical attention mechanism. First, it uses a hierarchical structure in which each layer captures features with a different attention mechanism, ensuring the diversity of the information circulating in the network. Second, it uses cascading interaction: the information captured by a lower layer is reused by the layer above, deepening the model's understanding of the information. When humans understand language, we not only grasp the surface meaning of words but can also understand the metaphors behind them. Inspired by this, we use a hierarchical structure to model this phenomenon and thereby improve the network's ability to perceive information at multiple levels.

3.3.1. Traditional Multi-Head Attention Mechanism

Multi-head attention uses only one type of attention computation: scaled dot-product attention. It takes queries, keys, and values of dimension $d_{model}$ as input, and each head projects them to $d_k$, $d_k$, and $d_v$ dimensions, respectively, using linear layers. The attention function is then computed to produce a $d_v$-dimensional output. Finally, the outputs of all heads are concatenated and passed through a linear layer to obtain the final output.

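$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Linear}\left(\coprod_{i=1}^{h} \mathcal{A}\left(QW_i^Q, KW_i^K, VW_i^V\right)\right) \tag{2}$$

Equation (2) defines the multi-head attention mechanism, where $\mathrm{Linear}(\cdot)$ denotes a linear layer, $W_i^Q \in \mathbb{R}^{d_{model} \times d_k}$, $W_i^K \in \mathbb{R}^{d_{model} \times d_k}$, and $W_i^V \in \mathbb{R}^{d_{model} \times d_v}$ are the projection matrices of the i-th head, h denotes the number of attention heads, $\mathcal{A}(\cdot)$ denotes scaled dot-product attention, and ∐ denotes sequential concatenation.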
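To make Equation (2) concrete, the sketch below implements multi-head scaled dot-product attention in NumPy. Randomly initialised projection matrices stand in for learned parameters, which is an assumption for illustration. Every head applies the same attention function and differs only in its projections, which is precisely the limitation the hierarchical attention mechanism targets.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(q, k, v):
    """Softmax(Q K^T / sqrt(d_k)) V."""
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v


def multi_head_attention(q, k, v, w_q, w_k, w_v, w_o):
    """Equation (2). w_q, w_k: (h, d_model, d_k); w_v: (h, d_model, d_v); w_o: (h*d_v, d_model)."""
    heads = [
        scaled_dot_product_attention(q @ w_q[i], k @ w_k[i], v @ w_v[i])
        for i in range(w_q.shape[0])
    ]
    return np.concatenate(heads, axis=-1) @ w_o  # concatenate the heads, then project


rng = np.random.default_rng(0)
length, d_model, h = 12, 16, 4
d_k = d_v = d_model // h
x = rng.standard_normal((length, d_model))
w_q = rng.standard_normal((h, d_model, d_k))
w_k = rng.standard_normal((h, d_model, d_k))
w_v = rng.standard_normal((h, d_model, d_v))
w_o = rng.standard_normal((h * d_v, d_model))
out = multi_head_attention(x, x, x, w_q, w_k, w_v, w_o)  # self-attention: Q = K = V = x
```

With a single head and identity projections, the function reduces to plain scaled dot-product attention.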

3.3.2. Hierarchical Attention Mechanism

We propose a hierarchical attention mechanism to address the shortcomings in the multi-head attention mechanism, aiming to enhance the model’s deep understanding of the information. Figure 2 depicts the central architecture of the hierarchical attention mechanism, and Algorithm 4 describes its implementation.

| Algorithm 4 Hierarchical Attention |

| Require: |

| Ensure: |

|

Figure 2.

Hierarchical attention mechanism.

Here, the linear layer has the same meaning as in Equation (2), and GRU denotes a gated recurrent unit whose state records the information of each layer; this state is finally mapped to the specified dimension by a linear layer to form the output. The attention operator of each layer uses a different attention computation. This paper mainly uses four common mechanisms: Vanilla Attention, ProbSparse Attention, LSH Attention, and AutoCorrelation. Strictly speaking, AutoCorrelation is not a member of the attention family; however, its effect is similar to or even better than that of attention mechanisms, so it is included in our model for feature extraction.
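The description above leaves the exact wiring open, so the sketch below is one plausible reading rather than the authors' implementation. Assumptions: each layer applies its own attention function to the previous layer's output, a GRU cell folds every layer's output into a running state, and a final linear map produces the result. Two simple stand-ins (full softmax attention and a hypothetical top-k sparse attention) replace the four mechanisms used in the paper, and all weights are random and untrained.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def full_attention(x):
    """Stand-in for Vanilla Attention: self-attention with Q = K = V = x."""
    return softmax(x @ x.T / np.sqrt(x.shape[-1])) @ x


def topk_attention(x, k=4):
    """Hypothetical sparse stand-in: each query keeps only its k largest scores."""
    scores = x @ x.T / np.sqrt(x.shape[-1])
    kth_largest = np.sort(scores, axis=-1)[:, -k][:, None]
    return softmax(np.where(scores >= kth_largest, scores, -np.inf)) @ x


class GRUCell:
    """Minimal GRU cell (Cho et al. formulation) with random, untrained weights."""

    def __init__(self, d, rng):
        self.w = rng.normal(scale=d ** -0.5, size=(3, d, d))  # input weights: update, reset, candidate
        self.u = rng.normal(scale=d ** -0.5, size=(3, d, d))  # recurrent weights

    def __call__(self, x, h):
        z = sigmoid(x @ self.w[0] + h @ self.u[0])            # update gate
        r = sigmoid(x @ self.w[1] + h @ self.u[1])            # reset gate
        n = np.tanh(x @ self.w[2] + (r * h) @ self.u[2])      # candidate state
        return (1.0 - z) * n + z * h


def hierarchical_attention(x, attentions, gru, w_out):
    """Hypothetical wiring: stacked, different attentions whose outputs are folded into a GRU state."""
    h = np.zeros_like(x)
    layer_input = x
    for attend in attentions:
        layer_output = attend(layer_input)   # each layer uses its own attention mechanism
        h = gru(layer_output, h)             # the GRU state records information from every layer
        layer_input = layer_output           # the next (higher) layer reuses this output
    return h @ w_out                         # final linear map to the output dimension


rng = np.random.default_rng(0)
x = rng.standard_normal((16, 8))
y = hierarchical_attention(x, [topk_attention, full_attention], GRUCell(8, rng), rng.standard_normal((8, 8)))
```

Unlike the heads of Equation (2), the layers here differ in their attention function and see each other's outputs, which is the property the hierarchical design aims for.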

Attention is the core building block of Transformer and is considered an essential tool for information capture in both CV and NLP domains. Many researchers have worked on designing more efficient attention, so many variants based on Vanilla Attention have been proposed in succession. The following briefly describes the four attention mechanisms used in our model.

3.3.3. Vanilla Attention

Vanilla Attention was first proposed in the Transformer []. Its input consists of queries, keys, and values ($Q$, $K$, $V$) with dimensions $d_k$, $d_k$, and $d_v$, respectively. It is also known as Scaled Dot-Product Attention because it computes the dot products of $Q$ and $K$ and scales them by $1/\sqrt{d_k}$. The calculation is shown in Equation (3).

$$\mathcal{A}(Q, K, V) = \mathrm{Softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V \tag{3}$$

Here, $\mathcal{A}(\cdot)$ denotes the attention or autocorrelation mechanism, and $\mathrm{Softmax}(\cdot)$ denotes the softmax activation function.
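The role of the $1/\sqrt{d_k}$ factor can be checked numerically. Assuming, for illustration, that query and key components are independent with zero mean and unit variance, the dot product $q \cdot k$ has variance $d_k$; dividing by $\sqrt{d_k}$ brings it back to about 1, which keeps the softmax in Equation (3) from saturating. The sizes below are arbitrary.

```python
import numpy as np


def scaled_dot_product_attention(q, k, v):
    """Return Softmax(Q K^T / sqrt(d_k)) V together with the attention weights."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


rng = np.random.default_rng(0)
d_k = 64
q = rng.standard_normal((20000, d_k))
k = rng.standard_normal((20000, d_k))
raw = np.einsum("ij,ij->i", q, k)   # 20000 independent dot products q . k
scaled = raw / np.sqrt(d_k)
print(raw.var(), scaled.var())      # approximately d_k and approximately 1

out, weights = scaled_dot_product_attention(q[:8], k[:10], rng.standard_normal((10, 5)))
```

Each row of `weights` is a probability distribution over the keys, and the output row is the corresponding weighted average of the values.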

3.3.4. ProbSparse Attention

This attention mechanism, first proposed in Informer, exploits the sparsity of the attention coefficients and selects queries using the explicit query sparsity measurement (Algorithm 5). Equation (4) gives the ProbSparse Attention calculation.

$$\mathcal{A}(Q, K, V) = \mathrm{Softmax}\left(\frac{\overline{Q}K^{\top}}{\sqrt{d_k}}\right)V \tag{4}$$

Here, $\overline{Q}$ is a sparse matrix of the same size as $Q$ that contains only the top-u queries under the sparsity measurement $M$. The prototype of $M$ is the Kullback–Leibler (KL) divergence; see Equation (5).

| Algorithm 5 Explicit Query Sparsity Measurement |

| Require: |

| Ensure: |

|
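A minimal sketch of ProbSparse self-attention, using the max-mean form of the sparsity measurement $M$ from Informer. Two simplifications are assumptions: $M$ is computed over all keys rather than a random sample of keys, so this version does not actually save computation, and, as in Informer's self-attention, the queries that are not selected output the mean of $V$. The function names are ours.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def query_sparsity(q, k):
    """Max-mean measurement: M(q_i, K) = max_j s_ij - mean_j s_ij, with s = Q K^T / sqrt(d)."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return scores.max(axis=-1) - scores.mean(axis=-1)


def probsparse_self_attention(q, k, v, u):
    """Only the u most 'active' queries attend; the remaining (lazy) queries output mean(V)."""
    m = query_sparsity(q, k)
    top = np.argsort(m)[-u:]                                          # indices of the top-u queries
    out = np.repeat(v.mean(axis=0, keepdims=True), q.shape[0], axis=0)
    out[top] = softmax(q[top] @ k.T / np.sqrt(q.shape[-1])) @ v       # Equation (4) for the selected rows
    return out


rng = np.random.default_rng(0)
q = rng.standard_normal((32, 8))
k = rng.standard_normal((32, 8))
v = rng.standard_normal((32, 8))
out = probsparse_self_attention(q, k, v, u=8)
```

Queries with a large gap between their maximum and mean scores have a peaked, informative attention distribution; queries with a flat distribution contribute little beyond the average of $V$.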

3.3.5. LSH Attention

Like ProbSparse Attention, LSH Attention reduces the complexity of Vanilla Attention through sparsification. The main idea is that each query attends only to its nearest keys, where the nearest neighbours are found by locality-sensitive hashing. Equation (6) gives the attention computation of LSH Attention, and Equation (7) gives the hash function used:

$$o_i = \sum_{j \in \mathcal{P}_i} \exp\left(q_i \cdot k_j - z(i, \mathcal{P}_i)\right) v_j, \qquad \mathcal{P}_i = \{\, j : h(q_i) = h(k_j) \,\} \tag{6}$$

$$h(x) = \arg\max\left([xR; -xR]\right) \tag{7}$$

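where $\mathcal{P}_i$ denotes the set of keys that the i-th query attends to, $q_i \cdot k_j$ measures the association between positions i and j, $z(i, \mathcal{P}_i)$ is the normalising term (partition function) of the softmax over $\mathcal{P}_i$, and $R$ is a random projection matrix.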
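The sketch below shows the hashing of Equation (7) and attention restricted to each query's bucket $\mathcal{P}_i$. Following Reformer, queries and keys are shared; the number of buckets and the use of a single hash round are illustrative assumptions. For clarity it masks a full $L \times L$ score matrix, so unlike Reformer, which sorts positions by bucket and attends within chunks, it does not reduce the complexity.

```python
import numpy as np


def lsh_hash(x, n_buckets, rng):
    """Angular LSH, Equation (7): h(x) = argmax([xR; -xR]) with a random projection R."""
    if n_buckets % 2 != 0:
        raise ValueError("n_buckets must be even")
    r = rng.standard_normal((x.shape[-1], n_buckets // 2))
    proj = x @ r
    return np.argmax(np.concatenate([proj, -proj], axis=-1), axis=-1)


def lsh_attention(qk, v, n_buckets, rng):
    """Shared-QK attention in which each query only attends to keys in its own hash bucket."""
    buckets = lsh_hash(qk, n_buckets, rng)
    scores = qk @ qk.T / np.sqrt(qk.shape[-1])
    same_bucket = buckets[:, None] == buckets[None, :]
    scores = np.where(same_bucket, scores, -np.inf)        # keys outside P_i are ignored
    scores = scores - scores.max(axis=-1, keepdims=True)   # each row keeps at least itself
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, buckets, weights


rng = np.random.default_rng(0)
qk = rng.standard_normal((64, 16))
v = rng.standard_normal((64, 4))
out, buckets, weights = lsh_attention(qk, v, n_buckets=8, rng=rng)
```

Vectors pointing in similar directions tend to fall into the same bucket, and the hash is unchanged when a vector is scaled by a positive constant.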

3.3.6. AutoCorrelation

The AutoCorrelation mechanism differs from the attention mechanisms above. Whereas the self-attention family focuses on correlations between points, AutoCorrelation focuses on correlations between segments, so it is an excellent complement to self-attention.

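Equation (8) gives the AutoCorrelation computation. Equation (9) measures the correlation between two sequences, where τ denotes the lag order; $\mathrm{Roll}(X, \tau)$ denotes the τ-order lagged version of X obtained by circular shifting; and Equation (10) is the Topk step that selects the k lags with the highest correlation.

$$\mathcal{A}(Q, K, V) = \sum_{i=1}^{k} \mathrm{Roll}(V, \tau_i)\, \widehat{\mathcal{R}}_{Q,K}(\tau_i), \qquad \widehat{\mathcal{R}}_{Q,K}(\tau_1), \ldots, \widehat{\mathcal{R}}_{Q,K}(\tau_k) = \mathrm{Softmax}\left(\mathcal{R}_{Q,K}(\tau_1), \ldots, \mathcal{R}_{Q,K}(\tau_k)\right) \tag{8}$$

$$\mathcal{R}_{Q,K}(\tau) = \lim_{L \to \infty} \frac{1}{L} \sum_{t=1}^{L} Q_t K_{t-\tau} \tag{9}$$

$$\tau_1, \ldots, \tau_k = \underset{\tau \in \{1, \ldots, L\}}{\arg\mathrm{Topk}}\left(\mathcal{R}_{Q,K}(\tau)\right) \tag{10}$$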
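The following NumPy sketch illustrates Equations (8)–(10) for a single head. It computes $\mathcal{R}_{Q,K}(\tau)$ for all lags at once with the FFT (Wiener–Khinchin theorem), as Autoformer does, keeps the k lags with the largest correlation, and aggregates the correspondingly rolled values. Averaging the correlation over channels and the choice of `top_k` are assumptions of this sketch; lags are taken modulo L, so lag L coincides with lag 0.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def auto_correlation(q, k, v, top_k):
    """q, k, v: (L, d). FFT correlation, Top-k lags, and time-delay aggregation."""
    length = q.shape[0]
    # Equation (9) for every lag at once: irfft(Q_f * conj(K_f))[tau] = sum_t q_t k_{t - tau}.
    spectrum = np.fft.rfft(q, axis=0) * np.conj(np.fft.rfft(k, axis=0))
    corr = np.fft.irfft(spectrum, n=length, axis=0).mean(axis=-1) / length
    lags = np.argsort(corr)[-top_k:]                  # Equation (10): k most correlated lags
    weights = softmax(corr[lags])                     # normalise the selected correlations
    # Roll(V, tau): circular shift that aligns v_{t + tau} with position t.
    out = sum(w * np.roll(v, -tau, axis=0) for w, tau in zip(weights, lags))
    return out, lags


t = np.arange(96)
x = np.stack([np.sin(2 * np.pi * t / 12), np.cos(2 * np.pi * t / 12)], axis=1)  # period 12
out, lags = auto_correlation(x, x, x, top_k=3)
```

For this period-12 signal every selected lag is a multiple of 12, so rolling by those lags reproduces the input: the mechanism aggregates whole, similar segments rather than individual points.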

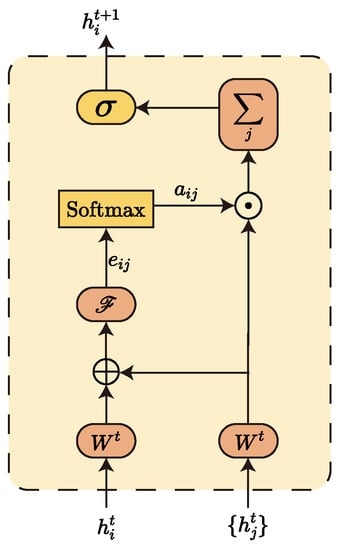

3.4. GAT Network

The Vanilla Transformer places a Feedforward Network (FFN) [] layer at the end of each encoder and decoder layer. The FFN plays a crucial role in mitigating token-uniformity inductive bias. Inductive bias can be regarded as the heuristic, or “value”, that a learning algorithm uses to select hypotheses in a large hypothesis space. For example, convolutional networks assume that information is spatially local, spatially invariant, and translation equivariant, so the parameter space can be reduced by sharing convolutional weights across sliding positions; recurrent neural networks assume that information is sequential and invariant to temporal transformations, which also permits weight sharing. Similarly, the attention mechanism makes its own assumptions, such as that some information is useless. When attention layers are stacked, some critical information is lost, so adding an FFN layer can alleviate the accumulation of inductive bias to some extent and prevent the network from collapsing. The FFN layer is not the only component with this mitigating effect: we find that a Graph Neural Network (GNN) [,,] can achieve a similar effect. Here, we use a two-layer GAT [,] network in place of the original FFN layer. A graph network aggregates the information of neighbouring nodes, i.e., through aggregation each node fuses some features of its neighbours. Additionally, we use random sampling of neighbours to reduce complexity, because our goal is not feature aggregation but mitigating the loss of crucial information. In particular, when the number of sampled neighbours per node is 0, the graph network degenerates into an FFN-like layer with a role similar to that of the original FFN.

Here, we model each token as a node in the graph and mine the dependencies between nodes with the graph attention algorithm. The input to GAT is defined as $\mathbf{h} = \{\vec{h}_1, \vec{h}_2, \ldots, \vec{h}_N\}$, $\vec{h}_i \in \mathbb{R}^{F}$, where $\vec{h}_i$ denotes the input vector of the i-th node, N denotes the number of nodes in the graph, and F denotes the dimensionality of the input vectors. The GAT layer produces a new set of node features $\mathbf{h}' = \{\vec{h}'_1, \vec{h}'_2, \ldots, \vec{h}'_N\}$, $\vec{h}'_i \in \mathbb{R}^{F'}$, where $\vec{h}'_i$ denotes the output vector of the i-th node and $F'$ denotes the dimensionality of the output vectors.

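Figure 3 shows the general flow of information aggregation for a single node. Equation (11) computes the attention coefficient $e_{ij}$ between the i-th node and each of its neighbours j, and Equation (12) normalises it to obtain $\alpha_{ij}$:

$$e_{ij} = a\left(W\vec{h}_i \,\Vert\, W\vec{h}_j\right), \quad j \in \mathcal{N}_i \tag{11}$$

$$\alpha_{ij} = \mathrm{softmax}_j(e_{ij}) = \frac{\exp(e_{ij})}{\sum_{k \in \mathcal{N}_i} \exp(e_{ik})} \tag{12}$$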

Figure 3.

Feature aggregation process for node i in GAT network.

Here, $\mathcal{N}_i$ denotes the set of neighbouring nodes of the i-th node, $W \in \mathbb{R}^{F' \times F}$ is a shared parameter matrix that linearly maps node features, ∥ denotes concatenation, and $a$ is a single-layer feedforward neural network that maps the concatenated high-dimensional features to a real number. $e_{ij}$ is the attention coefficient of node j with respect to node i, and $\alpha_{ij}$ is its normalised value.

Finally, the new feature vector $\vec{h}'_i$ of node i is obtained as the weighted sum of its neighbours' transformed feature vectors, using the computed attention coefficients as weights, so that $\vec{h}'_i$ records the neighbourhood information of node i:

$$\vec{h}'_i = \sigma\left(\sum_{j \in \mathcal{N}_i} \alpha_{ij} W\vec{h}_j\right) \tag{13}$$

Here, σ denotes the non-linear activation applied at the end, namely the logistic sigmoid.

Furthermore, if information aggregation uses K-head attention, the final output vector is obtained by averaging over the heads:

$$\vec{h}'_i = \sigma\left(\frac{1}{K}\sum_{k=1}^{K} \sum_{j \in \mathcal{N}_i} \alpha_{ij}^{k} W^{k} \vec{h}_j\right) \tag{14}$$
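A minimal NumPy sketch of Equations (11)–(14) follows, with node features stored as matrix rows (so the projection is written $hW$ rather than $W\vec{h}$). The LeakyReLU inside the scoring function follows the original GAT and is an assumption here, as are the random weights and the helper names. With a self-loop-only adjacency matrix each node attends only to itself, so $\alpha_{ii} = 1$ and a single-head layer reduces to $\sigma(hW)$, a token-wise feedforward map; this is consistent with the statement that the graph network can degenerate into an FFN-like layer.

```python
import numpy as np


def softmax_rows(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def gat_head(h, adj, w, a, slope=0.2):
    """One head, Equations (11)-(13) before the activation.

    h: (N, F) node features; adj: (N, N) boolean, adj[i, j] True if j is in N_i (each row needs
    at least one True); w: (F, F') shared projection; a: (2F',) attention vector.
    """
    wh = h @ w
    f_out = wh.shape[1]
    e = (wh @ a[:f_out])[:, None] + (wh @ a[f_out:])[None, :]  # a^T [W h_i || W h_j]
    e = np.where(e > 0, e, slope * e)                          # LeakyReLU, as in the original GAT
    alpha = softmax_rows(np.where(adj, e, -np.inf))            # Equation (12), neighbours only
    return alpha @ wh                                          # sum_j alpha_ij W h_j


def gat_layer(h, adj, weights, attn_vectors):
    """Equation (14): average the K heads, then apply the logistic sigmoid."""
    heads = [gat_head(h, adj, w, a) for w, a in zip(weights, attn_vectors)]
    return sigmoid(np.mean(heads, axis=0))


rng = np.random.default_rng(0)
h = rng.standard_normal((10, 6))
weights = [rng.standard_normal((6, 4)) for _ in range(2)]
attn_vectors = [rng.standard_normal(8) for _ in range(2)]
self_loop = np.eye(10, dtype=bool)
out = gat_layer(h, np.ones((10, 10), dtype=bool), weights, attn_vectors)  # fully connected graph
```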

4. Experiment

4.1. Dataset Description

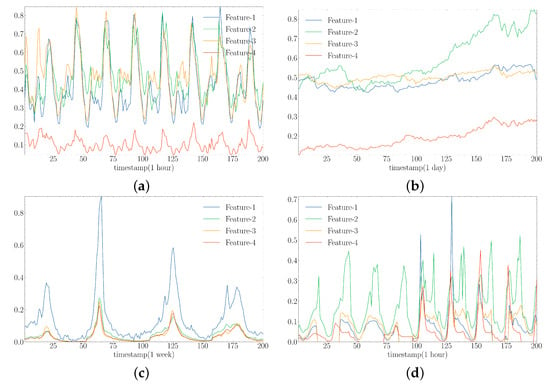

To evaluate the Metaformer model, we conducted experiments on four popular real-world datasets encompassing energy, economy, disease, and transportation domains. The Electricity (https://archive.ics.uci.edu/ml/datasets/ElectricityLoadDiagrams20112014, accessed on 24 February 2023) dataset describes the hourly electricity consumption of 321 customers; the Exchange [] dataset describes the daily exchange rates of eight countries; the Illness (https://gis.cdc.gov/grasp/fluview/fluportaldashboard.html, accessed on 24 February 2023) dataset is the weekly data of influenza-like illnesses recorded by the Centers for Disease Control; and the Traffic (http://pems.dot.ca.gov/, accessed on 24 February 2023) dataset describes the occupancy rate of roads in the San Francisco Bay area.

Table 1 shows detailed dataset statistics, where #Sample is the total number of samples, #Features is the number of features acquired per sampling, Period is the sampling period, and Span is the sampling time span.

Table 1.

Overall statistical indicators for the selected dataset.

Because the features in each dataset are on different scales, we normalise the data before training so that the model treats all features equally. Equations (15) and (16) give the normalisation and denormalisation calculations, respectively, where X denotes the original sampled dataset and $\widetilde{X}$ denotes the normalised dataset. Figure 4 shows the variation of four normalised features randomly selected from the four datasets.
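Equations (15) and (16) are not reproduced here. A common choice for these benchmarks, and the one assumed in this sketch, is per-feature z-score standardisation using statistics computed on the training split only, so that no information leaks from the validation or test data; denormalisation inverts the transform to report predictions on the original scale. The helper names are hypothetical.

```python
import numpy as np


def fit_scaler(train):
    """Per-feature mean and standard deviation estimated on the training split."""
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    std = np.where(std == 0, 1.0, std)  # guard against constant features
    return mean, std


def normalise(x, mean, std):
    return (x - mean) / std


def denormalise(x_norm, mean, std):
    return x_norm * std + mean


rng = np.random.default_rng(0)
data = rng.normal(loc=[10.0, 500.0], scale=[2.0, 80.0], size=(1000, 2))  # two features on different scales
train = data[:700]
mean, std = fit_scaler(train)
train_norm = normalise(train, mean, std)
```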

Figure 4.

Variation of the four randomly selected normalised features in the dataset. (a) Electricity. (b) Exchange. (c) Illness. (d) Traffic.

4.2. Comparison Experiments

4.2.1. Baseline Models

To validate the predictive performance of the proposed model, we compare it with several state-of-the-art time series prediction models: Autoformer [], Informer [], Reformer [], LogTrans [], LSTNet [], LSTM [], and TCN []. Autoformer, Informer, Reformer, and LogTrans are all improved Transformer-based models. Autoformer uses a deep decomposition architecture and an auto-correlation mechanism and handles long sequences well. LogTrans introduces convolutional self-attention and LogSparse attention, which strengthen locality and reduce the memory cost of attention to $O(L(\log L)^2)$. LSTM is a classical recurrent neural network with a gating mechanism that effectively alleviates the forgetting problem in long-sequence prediction. TCN is a convolutional neural network that handles long-term dependencies and nonlinear variation in long series through dilated causal convolutions and residual connections between convolutional layers, with high efficiency, good robustness, and a small memory footprint.

4.2.2. Experimental Setup

To standardise the sequence input length for comparison, we split the Electricity, Exchange, and Traffic datasets into training, validation, and test sets in a 7:1:2 ratio and set the prediction length accordingly. For the ILI dataset, we use a 6:2:2 split and set the prediction length accordingly. We set the dimensionality of the model to and use a four-layer hierarchical attention mechanism that stacks AutoCorrelation, Vanilla Attention, LSH Attention, and ProbSparse Attention from top to bottom. The number of attention heads is set to 2. For comparability, we uniformly set the number of heads to 8 for the multi-head attention in the other Transformer-family models. The GAT network has two layers with a hidden dimension of 1024, and each node has only one edge, pointing to itself (a self-loop graph). The sliding window of the decoder's moving average is set to 25, the number of encoder layers is set to , and the number of decoder layers is set to . We use MSE as the loss function and Adam as the optimiser with a learning rate of 0.0001. We train the model for 20 iterations but apply early stopping with a patience of 3.
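The data split and early-stopping rule can be sketched in plain Python as follows. The split keeps the temporal order, so validation and test data always follow the training data; the default ratios are 7:1:2 (pass `(0.6, 0.2, 0.2)` for ILI). The `chronological_split` function and `EarlyStopping` class are hypothetical helpers, and the loss sequence below is invented solely to exercise the rule.

```python
def chronological_split(n_samples, ratios=(0.7, 0.1, 0.2)):
    """Split indices in time order (no shuffling) into train/validation/test slices."""
    n_train = int(n_samples * ratios[0])
    n_val = int(n_samples * ratios[1])
    return slice(0, n_train), slice(n_train, n_train + n_val), slice(n_train + n_val, n_samples)


class EarlyStopping:
    """Stop when the validation loss has not improved for `patience` consecutive checks."""

    def __init__(self, patience=3):
        self.patience = patience
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience  # True means "stop training"


train_idx, val_idx, test_idx = chronological_split(1000)
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, val_loss in enumerate([1.0, 0.9, 0.95, 0.93, 0.92, 0.5]):  # illustrative losses
    if stopper.step(val_loss):
        stopped_at = epoch
        break
```

In this example training stops at the fifth check, after three consecutive checks fail to beat the best loss of 0.9.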

4.2.3. Experimental Result

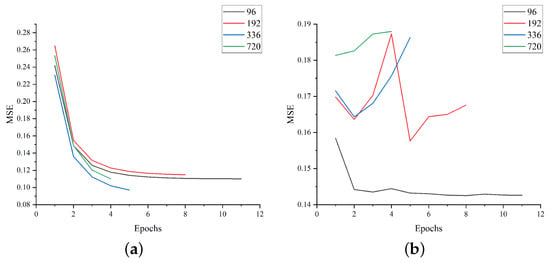

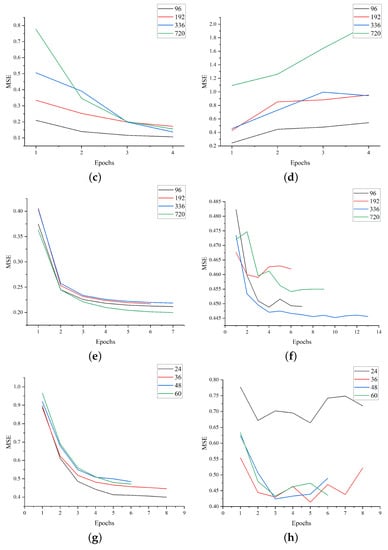

Figure 5 shows how the loss of our model decreases on the training and validation sets during training. Table 2 presents an overall comparison between our model and the baseline models. The Transformer-based models deliver significantly better predictions than the other models. Autoformer performs well on several datasets, with lower MAE and MSE values than most other models. Informer also performs well but falls behind Autoformer on some datasets, while LSTM and TCN generally exhibit higher MAE and MSE values. In contrast, our model achieves the best or second-best accuracy for different prediction lengths on different datasets. Its overall performance is better than that of the other baselines, suggesting that it is suitable for a wide range of sequence prediction tasks.

Figure 5.

The MSE loss plots for the Metaformer model on the training and test sets for the four datasets. (a) Electricity (train), (b) Electricity (vali), (c) Exchange (train), (d) Exchange (vali), (e) Traffic (train), (f) Traffic (vali), (g) ILI (train), (h) ILI (vali).

Table 2.

Multivariate long-term series prediction results for four datasets with an input length of and prediction length of . Lower MSE and MAE values indicate better results, and the best results are highlighted in bold.

4.3. Ablation Experiments

Additional ablation experiments were conducted to further investigate how different graph structures affect the mitigation of inductive bias. Table 3 presents three graph structures: in Meta-v1, all nodes use only self-loops; in Meta-v2, all nodes are fully connected in both directions; and in Meta-v3, every node has a self-loop in addition to the full bi-directional connectivity. Table 4 reports the performance of these three Metaformer variants on the four datasets.

Table 3.

Three variants of Metaformer. ✓ and ✗ indicate that the specified structure was or was not used, respectively.
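The three graph structures of Table 3 can be written as boolean adjacency matrices over the tokens of a sequence, where entry $(i, j)$ is true if node i aggregates from node j. This is an illustrative sketch with a hypothetical helper name; it ignores the random neighbour sampling mentioned in Section 3.4. Such a matrix can serve as the neighbourhood mask $\mathcal{N}_i$ of a GAT layer.

```python
import numpy as np


def variant_adjacency(n_nodes, variant):
    """Boolean adjacency for the ablation variants (adj[i, j] True if node i aggregates from node j)."""
    self_loops = np.eye(n_nodes, dtype=bool)
    full_bidirectional = ~self_loops  # every pair of distinct nodes, in both directions
    if variant == "Meta-v1":
        return self_loops
    if variant == "Meta-v2":
        return full_bidirectional
    if variant == "Meta-v3":
        return self_loops | full_bidirectional
    raise ValueError(f"unknown variant: {variant}")


graphs = {name: variant_adjacency(4, name) for name in ("Meta-v1", "Meta-v2", "Meta-v3")}
```

Note that in Meta-v2 a node never aggregates its own features, whereas Meta-v1 keeps only those.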

Table 4.

Performance of three variants of the Metaformer model on four datasets.

As shown in Table 4, the Meta-v1 variant, which uses only the self-loop graph, generally outperforms the other variants across multiple metrics. This may be because self-loop edges weight each node by itself, which reinforces the features of individual nodes and is therefore more effective in reducing the inductive bias of the attention mechanism in Metaformer. Conversely, adding full connectivity may further exacerbate information perturbation. Owing to limited experimental resources, we could not study this in more depth. In future work, we will investigate how random sampling of neighbouring nodes, the inclusion of more attention mechanisms, and the stacking order of these mechanisms affect the model's performance.

5. Conclusions

This paper presents a redesigned sequence-to-sequence model based on the Transformer architecture. Drawing inspiration from Autoformer's sequence decomposition, we introduce a similar approach to separate the trend and seasonal components. We also propose a hierarchical attention mechanism to address the incomplete and insufficient information mining of the multi-head attention in the Vanilla Transformer. The hierarchical attention mechanism employs several different attention mechanisms simultaneously to ensure diversity in information mining, and its hierarchical structure recursively passes the information captured by lower-level attention upward, enabling interaction between the attention mechanisms and deepening the network's understanding of the information. We expect this mechanism may also help capture metaphorical information in text and images. In addition, we replace the feedforward layer with a graph attention network that aggregates information from a global perspective and mitigates inductive bias. Our experimental results show that the proposed model outperforms the baseline models on multiple datasets and evaluation metrics.

Author Contributions

Conceptualization, B.P. and Y.D.; data curation, B.P. and W.K.; formal analysis, B.P.; funding acquisition, Y.D.; investigation, B.P.; methodology, B.P.; project administration, Y.D.; writing—review and editing, B.P., Y.D. and W.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Science Foundation of China and the General Project Fund in the Field of Equipment Development Department, grant numbers 61901079 and 61403110308. The APC was funded by Dalian University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

- Wang, Q.; Li, B.; Xiao, T.; Zhu, J.; Li, C.; Wong, D.F.; Chao, L.S. Learning deep transformer models for machine translation. arXiv 2019, arXiv:1906.01787.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. 2017. Available online: http://arxiv.org/abs/1706.03762 (accessed on 23 January 2021).

- Lin, T.; Wang, Y.; Liu, X.; Qiu, X. A survey of transformers. AI Open 2022.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Parmar, N.; Vaswani, A.; Uszkoreit, J.; Kaiser, L.; Shazeer, N.; Ku, A.; Tran, D. Image transformer. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 4055–4064.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Chen, X.; Wu, Y.; Wang, Z.; Liu, S.; Li, J. Developing real-time streaming transformer transducer for speech recognition on large-scale dataset. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 July 2021; pp. 5904–5908.

- Dong, L.; Xu, S.; Xu, B. Speech-transformer: A no-recurrence sequence-to-sequence model for speech recognition. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5884–5888.

- Gulati, A.; Qin, J.; Chiu, C.C.; Parmar, N.; Zhang, Y.; Yu, J.; Han, W.; Wang, S.; Zhang, Z.; Wu, Y.; et al. Conformer: Convolution-augmented transformer for speech recognition. arXiv 2020, arXiv:2005.08100.

- Huang, C.Z.A.; Vaswani, A.; Uszkoreit, J.; Shazeer, N.; Simon, I.; Hawthorne, C.; Dai, A.M.; Hoffman, M.D.; Dinculescu, M.; Eck, D. Music transformer. arXiv 2018, arXiv:1809.04281.

- Huang, Y.S.; Yang, Y.H. Pop music transformer: Beat-based modeling and generation of expressive pop piano compositions. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 1180–1188.

- Schwaller, P.; Laino, T.; Gaudin, T.; Bolgar, P.; Hunter, C.A.; Bekas, C.; Lee, A.A. Molecular transformer: A model for uncertainty-calibrated chemical reaction prediction. ACS Cent. Sci. 2019, 5, 1572–1583.

- Rives, A.; Meier, J.; Sercu, T.; Goyal, S.; Lin, Z.; Liu, J.; Guo, D.; Ott, M.; Zitnick, C.L.; Ma, J.; et al. Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences. Proc. Natl. Acad. Sci. USA 2021, 118, e2016239118.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 July 2016; pp. 770–778.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 1, 91–99.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

- Ramachandran, P.; Parmar, N.; Vaswani, A.; Bello, I.; Levskaya, A.; Shlens, J. Stand-alone self-attention in vision models. Adv. Neural Inf. Process. Syst. 2019, 32, 68–80.

- Chen, M.; Radford, A.; Child, R.; Wu, J.; Jun, H.; Luan, D.; Sutskever, I. Generative pretraining from pixels. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 1691–1703.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

- Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.; et al. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6881–6890.

- Van Der Westhuizen, J.; Lasenby, J. The unreasonable effectiveness of the forget gate. arXiv 2018, arXiv:1804.04849.

- Graves, A. Long short-term memory. In Supervised Sequence Labelling with Recurrent Neural Networks; Springer: Berlin/Heidelberg, Germany, 2012; pp. 37–45.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Van Houdt, G.; Mosquera, C.; Nápoles, G. A review on the long short-term memory model. Artif. Intell. Rev. 2020, 53, 5929–5955.

- Mou, L.; Zhao, P.; Xie, H.; Chen, Y. T-LSTM: A long short-term memory neural network enhanced by temporal information for traffic flow prediction. IEEE Access 2019, 7, 98053–98060.

- Pascanu, R.; Mikolov, T.; Bengio, Y. On the difficulty of training recurrent neural networks. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; pp. 1310–1318.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. Adv. Neural Inf. Process. Syst. 2014, 27, 3104–3112.

- Kitaev, N.; Kaiser, Ł.; Levskaya, A. Reformer: The efficient transformer. arXiv 2020, arXiv:2001.04451.

- Zhou, H.; Zhang, S.; Peng, J.; Zhang, S.; Li, J.; Xiong, H.; Zhang, W. Informer: Beyond efficient transformer for long sequence time-series forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 11106–11115.

- Wu, H.; Xu, J.; Wang, J.; Long, M. Autoformer: Decomposition transformers with auto-correlation for long-term series forecasting. Adv. Neural Inf. Process. Syst. 2021, 34, 22419–22430.

- Zhou, T.; Ma, Z.; Wen, Q.; Wang, X.; Sun, L.; Jin, R. FEDformer: Frequency enhanced decomposed transformer for long-term series forecasting. arXiv 2022, arXiv:2201.12740.

- Chollet, F. On the measure of intelligence. arXiv 2019, arXiv:1911.01547.

- Baxter, J. A model of inductive bias learning. J. Artif. Intell. Res. 2000, 12, 149–198.

- Domingos, P. A few useful things to know about machine learning. Commun. ACM 2012, 55, 78–87.

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

- Li, Y.; Tarlow, D.; Brockschmidt, M.; Zemel, R. Gated graph sequence neural networks. arXiv 2015, arXiv:1511.05493.

- Hamilton, W.; Ying, Z.; Leskovec, J. Inductive representation learning on large graphs. Adv. Neural Inf. Process. Syst. 2017, 30, 1025–1035.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

- Chen, H.; Hong, P.; Han, W.; Majumder, N.; Poria, S. Dialogue relation extraction with document-level heterogeneous graph attention networks. Cogn. Comput. 2023, 15, 793–802.

- Lai, G.; Chang, W.C.; Yang, Y.; Liu, H. Modeling long- and short-term temporal patterns with deep neural networks. In Proceedings of the 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, Ann Arbor, MI, USA, 8–12 July 2018; pp. 95–104.

- Li, S.; Jin, X.; Xuan, Y.; Zhou, X.; Chen, W.; Wang, Y.X.; Yan, X. Enhancing the locality and breaking the memory bottleneck of transformer on time series forecasting. Adv. Neural Inf. Process. Syst. 2019, 32, 5243–5253.

- Hao, H.; Wang, Y.; Xia, Y.; Zhao, J.; Shen, F. Temporal convolutional attention-based network for sequence modeling. arXiv 2020, arXiv:2002.12530.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).