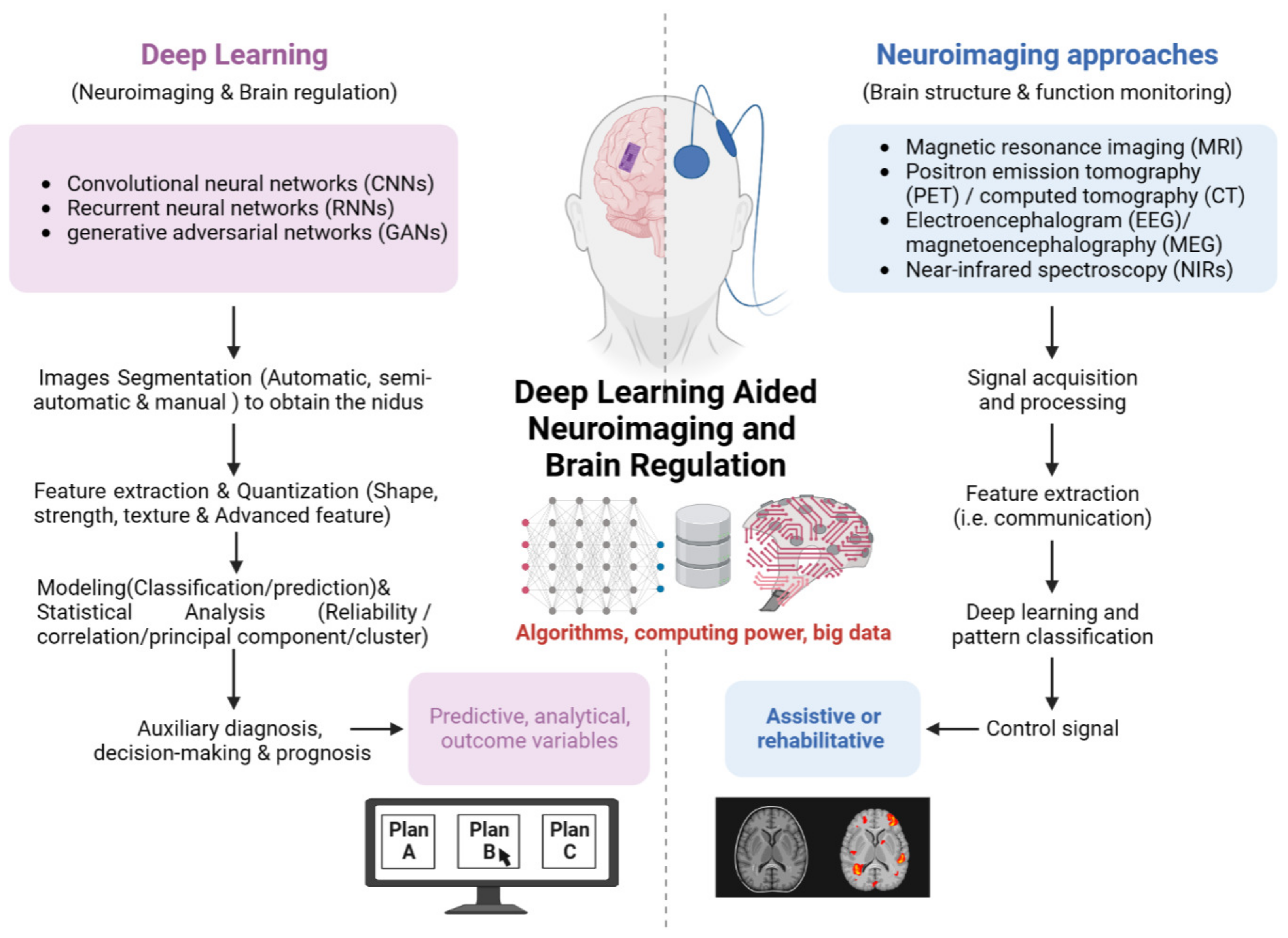

Deep Learning Aided Neuroimaging and Brain Regulation

Abstract

1. Introduction

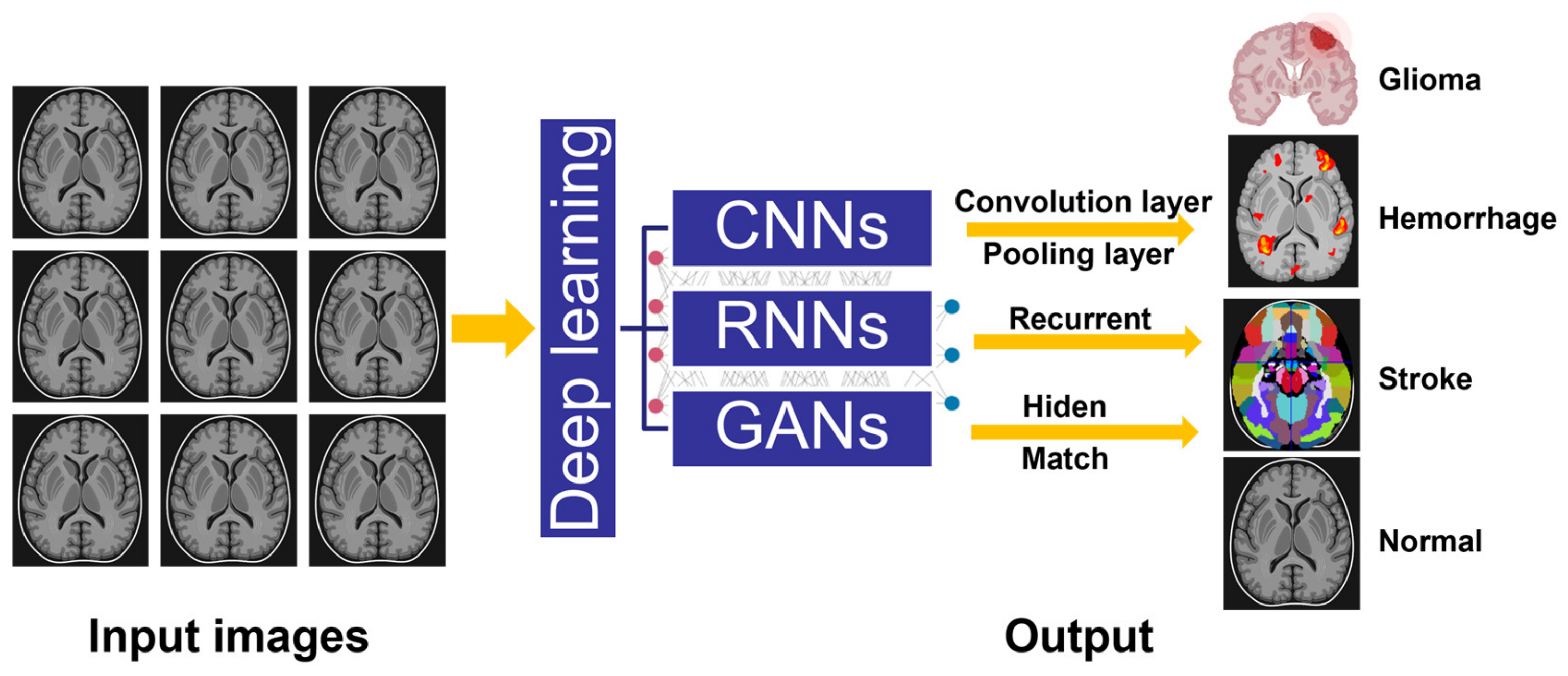

2. Evolution and Classification of Deep Learning Assisted Medical Imaging

2.1. Evolution of Artificial Intelligence in Medical Imaging

2.2. Convolutional Neural Networks (CNNs)
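
A CNN is built from repeated stages: a learned convolution, a nonlinearity, and pooling. The plain-Python sketch below shows one such stage on a toy 2D slice. It is an illustration only, under several assumptions. The function names (`conv2d_valid`, `relu`, `max_pool2x2`) are hypothetical, and the kernel is hand-set rather than learned. As in most deep learning frameworks, the "convolution" is computed as cross-correlation, so the kernel is not flipped. Real neuroimaging models would run these operations inside a framework such as PyTorch, often with 3D kernels over volumetric scans.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` with stride 1 and no padding.

    Like most deep learning frameworks, this computes cross-correlation
    (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the kh x kw patch whose top-left corner is (i, j)
            out[i][j] = float(sum(
                image[i + u][j + v] * kernel[u][v]
                for u in range(kh)
                for v in range(kw)
            ))
    return out


def relu(x: Matrix) -> Matrix:
    """Element-wise rectified linear unit."""
    return [[float(max(0.0, v)) for v in row] for row in x]


def max_pool2x2(x: Matrix) -> Matrix:
    """Non-overlapping 2x2 max pooling; a trailing odd row/column is dropped."""
    return [
        [max(x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1])
         for j in range(0, len(x[0]) - 1, 2)]
        for i in range(0, len(x) - 1, 2)
    ]


if __name__ == "__main__":
    # Toy 5x5 slice: intensity steps from 0 to 1 between columns 1 and 2.
    image = [[0, 0, 1, 1, 1] for _ in range(5)]
    # Hand-set vertical-edge detector (a trained CNN would learn its kernels).
    edge = [[-1, 1], [-1, 1]]
    pooled = max_pool2x2(relu(conv2d_valid(image, edge)))
    print(pooled)  # [[2.0, 0.0], [2.0, 0.0]]
```

The edge kernel responds only at the intensity boundary, and pooling keeps the strongest response in each 2x2 window while halving the spatial size. Stacking many such stages, with learned rather than hand-set kernels, is what lets a CNN build up from edges to larger anatomical patterns.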

2.3. Recurrent Neural Networks (RNNs)
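
An RNN carries a hidden state from one time step to the next. This makes it a natural fit for sequential data such as EEG/MEG recordings. The minimal sketch below implements an Elman-style recurrence, h_t = tanh(W_x x_t + W_h h_{t-1} + b) with h_0 = 0, in plain Python. The function names and the toy weights are hypothetical and untrained. Practical models usually replace this plain cell with gated variants such as LSTM or GRU, which cope better with vanishing gradients over long sequences.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rnn_forward(
    inputs: Sequence[Sequence[float]],
    w_x: Matrix,
    w_h: Matrix,
    b: Sequence[float],
) -> List[Vector]:
    """Run an Elman RNN over `inputs`, returning the hidden state at every step.

    h_t = tanh(W_x x_t + W_h h_{t-1} + b), starting from h_0 = 0.
    """
    h = [0.0] * len(b)
    states = []
    for x_t in inputs:
        # Combine the current input with the previous hidden state
        pre = [a + c + d for a, c, d in zip(matvec(w_x, x_t), matvec(w_h, h), b)]
        h = [math.tanh(p) for p in pre]
        states.append(h)
    return states


if __name__ == "__main__":
    # One input channel, one hidden unit; identical inputs at both steps.
    states = rnn_forward([[0.5], [0.5]], w_x=[[1.0]], w_h=[[1.0]], b=[0.0])
    # The second state differs from the first because it also sees h_1.
    print([round(h[0], 3) for h in states])  # [0.462, 0.745]
```

With the recurrent weight set to zero, both steps would produce the same state. The nonzero W_h is what lets earlier samples in a sequence influence later outputs.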

2.4. Generative Adversarial Networks (GANs)
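
A GAN trains a generator G against a discriminator D. The sketch below computes only the two adversarial objectives from the original formulation by Goodfellow et al. Its inputs are discriminator probabilities, which a real network would produce. The discriminator uses binary cross-entropy. The generator uses the non-saturating loss -log D(G(z)), which that paper recommends over minimizing log(1 - D(G(z))) because it gives stronger gradients early in training. The function names and the `EPS` clamp are assumptions for illustration. GANs for medical image synthesis commonly add further terms, for example an L1 penalty between generated and target images in paired (conditional) setups.

```python
import math
from typing import Sequence

EPS = 1e-12  # guards log(0) when the discriminator saturates at 0 or 1


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Binary cross-entropy: real samples labelled 1, generated samples labelled 0.

    d_real holds D(x) for real images, d_fake holds D(G(z)) for generated ones.
    """
    real_term = sum(math.log(max(p, EPS)) for p in d_real) / len(d_real)
    fake_term = sum(math.log(max(1.0 - p, EPS)) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: -mean(log D(G(z)))."""
    return -sum(math.log(max(p, EPS)) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    # At the theoretical equilibrium D outputs 0.5 everywhere: loss = 2 ln 2.
    print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))
    # The generator loss falls as D is fooled more often.
    print(generator_loss([0.1]), generator_loss([0.9]))
```

In training, the two losses are minimized in alternation, each with respect to its own network's parameters. Only the loss arithmetic is shown here, not the networks or the optimizer.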

3. Deep Learning Aided Neuroimaging for Brain Monitoring and Regulation

3.1. Deep Learning Assisted MRI

3.2. Deep Learning Assisted PET/CT

3.3. Deep Learning Assisted EEG/MEG

3.4. Deep Learning Assisted Optical Neuroimaging and Others

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, M.; Ouyang, Y.; Yuan, Z. Deep Learning Aided Neuroimaging and Brain Regulation. Sensors 2023, 23, 4993. https://doi.org/10.3390/s23114993
