A Systematic Solution for Moving-Target Detection and Tracking While Only Using a Monocular Camera

Abstract

1. Introduction

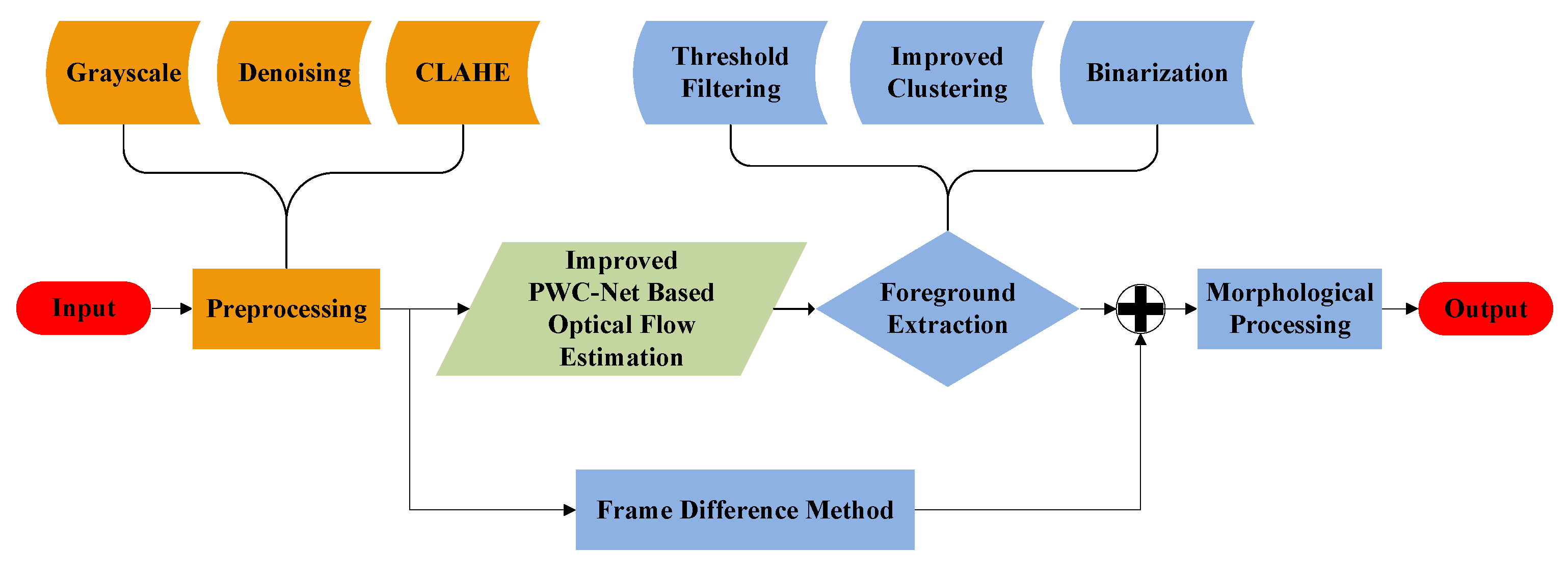

- An improved optical flow method based on a modified PWC-Net is applied, and the K-means and agglomerative clustering algorithms are improved, so that a moving target can be quickly detected and accurately extracted from a noisy background.

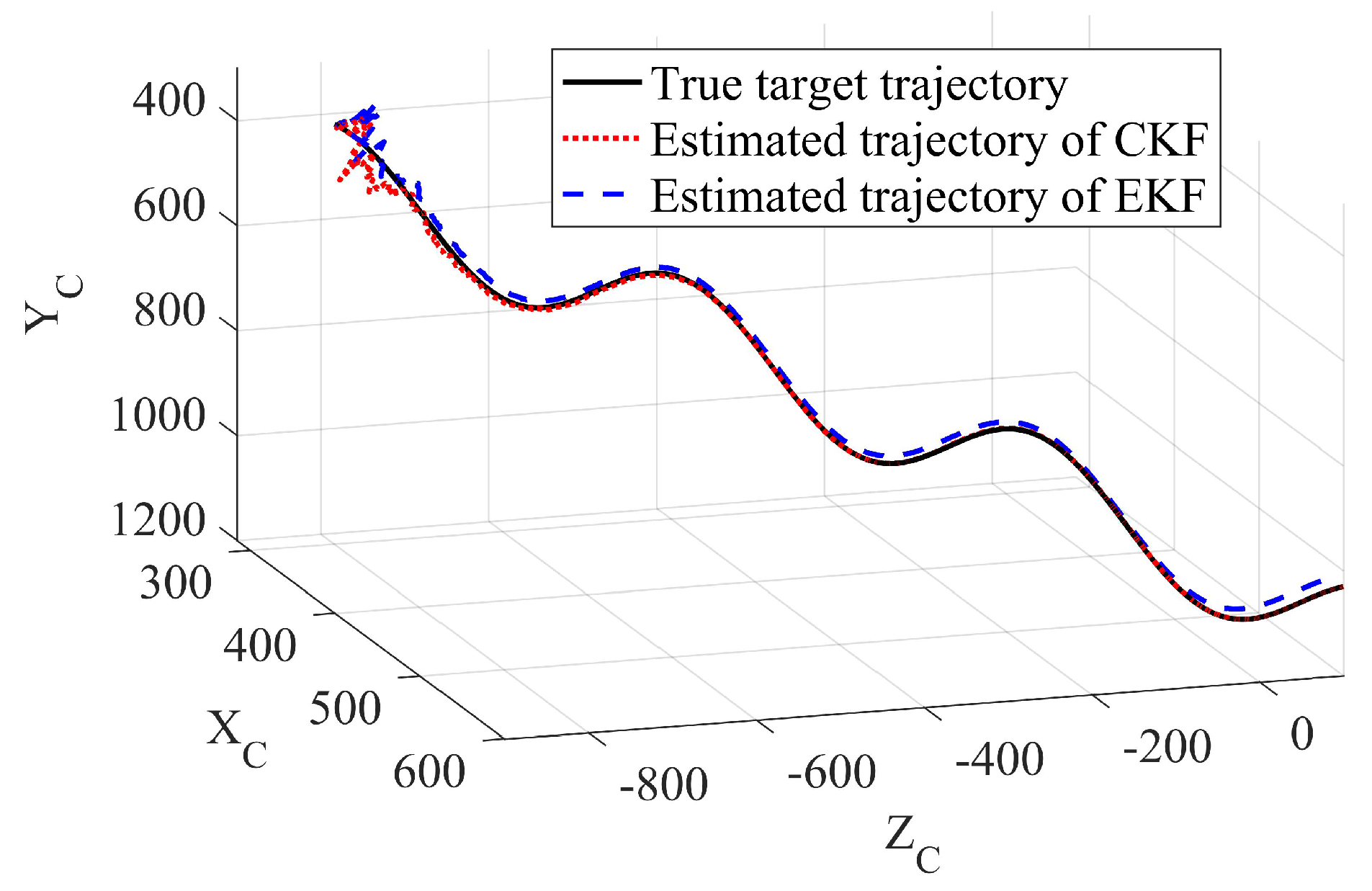

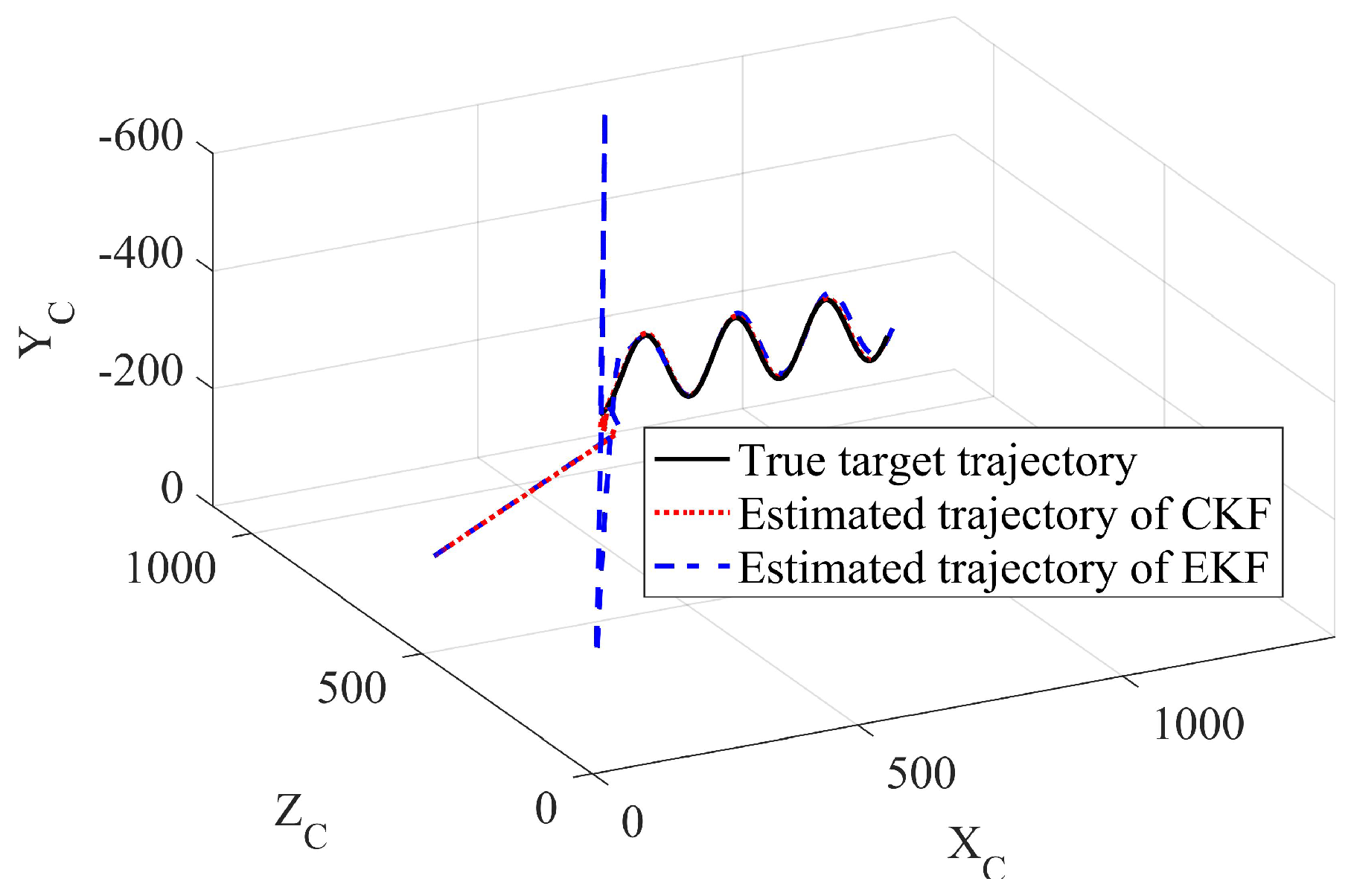

- A geometric solution based on pinhole imaging theory and the cubature Kalman filter (CKF) algorithm is proposed; it has a simple structure and a fast computation speed.

- The proposed method is verified through simulation and experimental examples, which demonstrate its value for practical applications.

2. Preliminaries

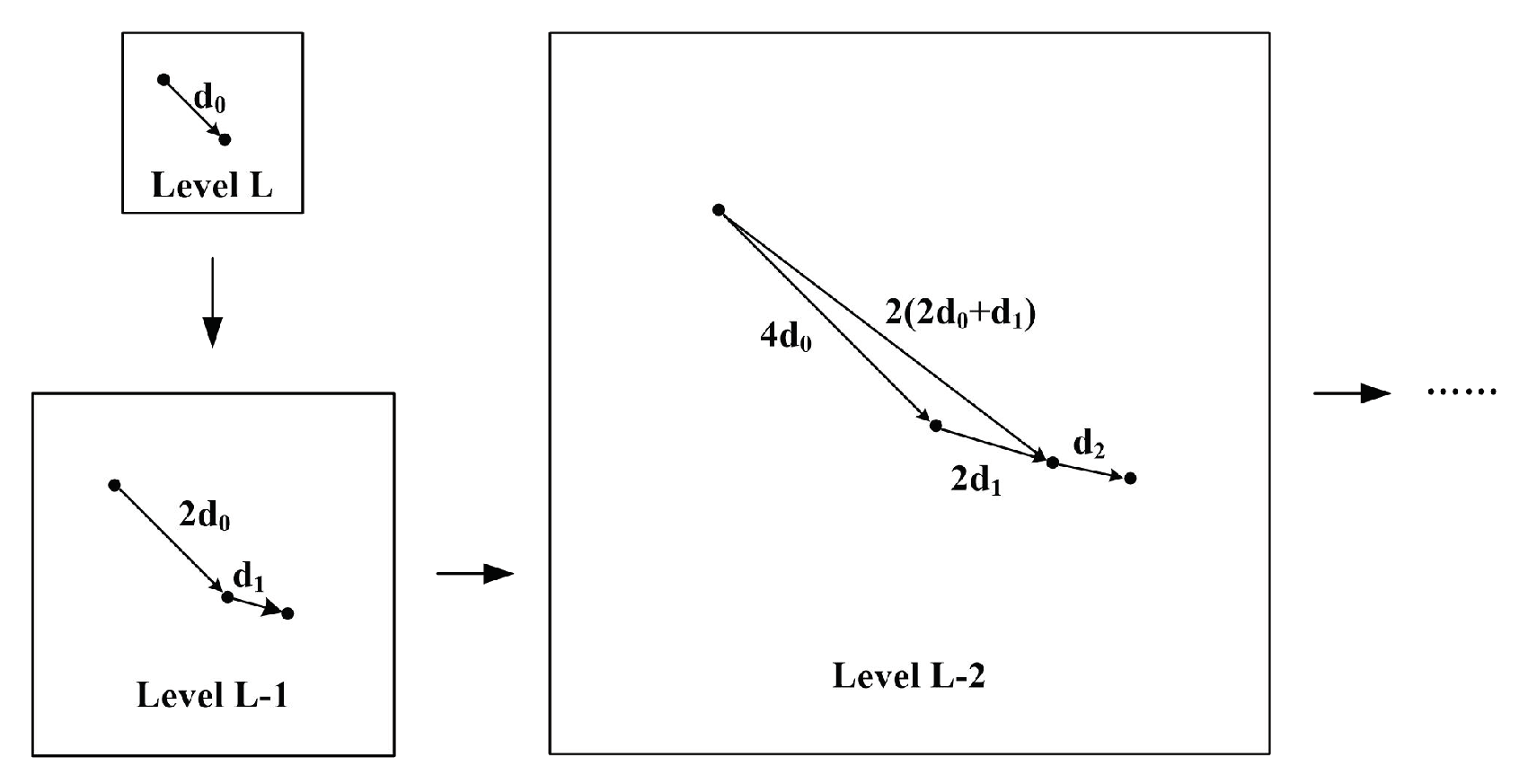

2.1. Pyramid Lucas–Kanade Optical Flow Method

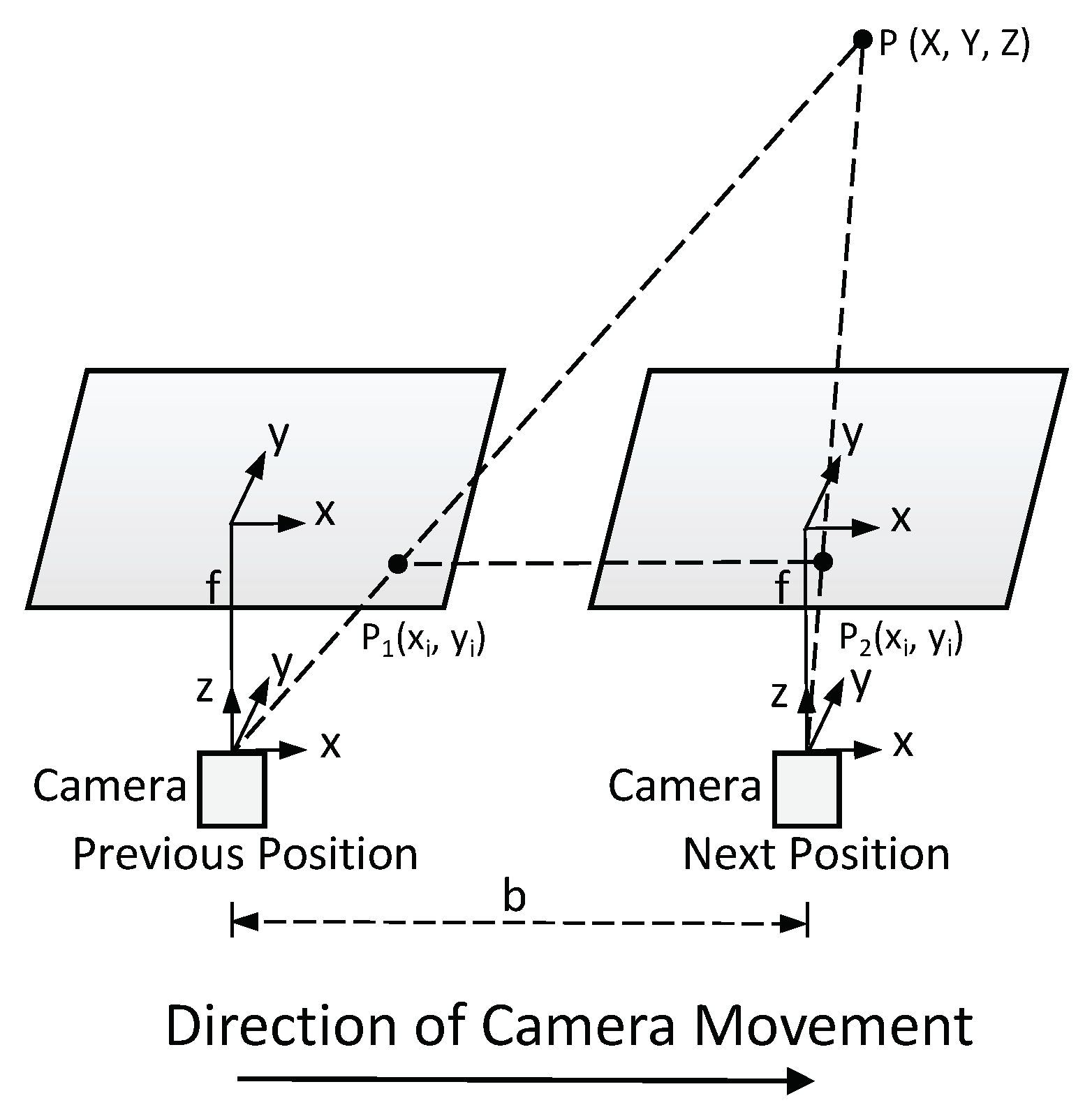

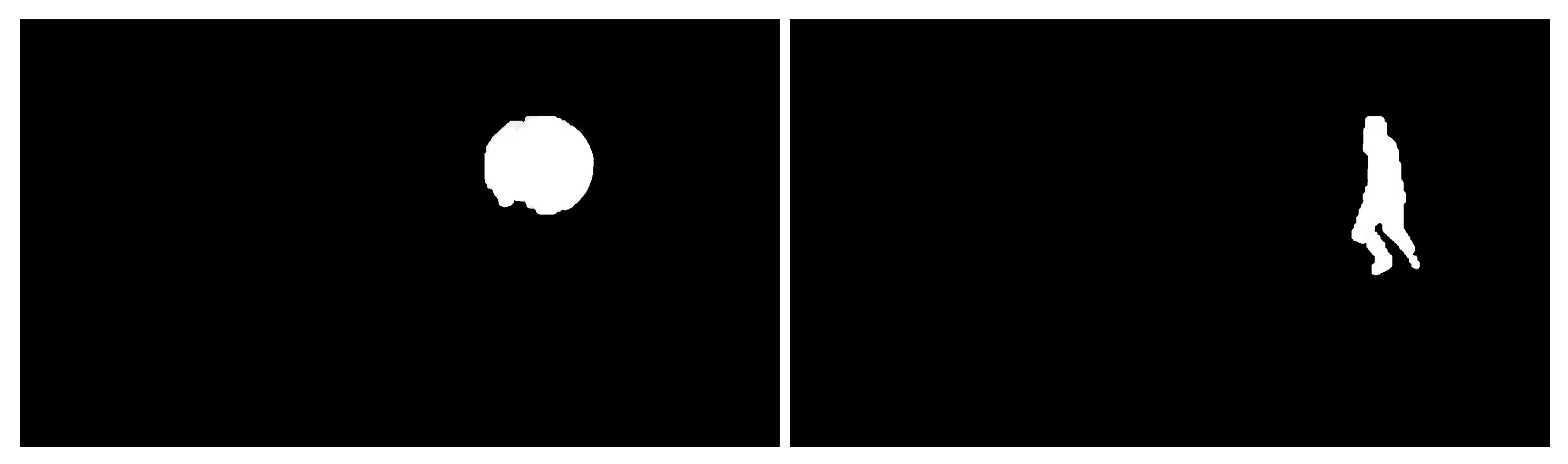

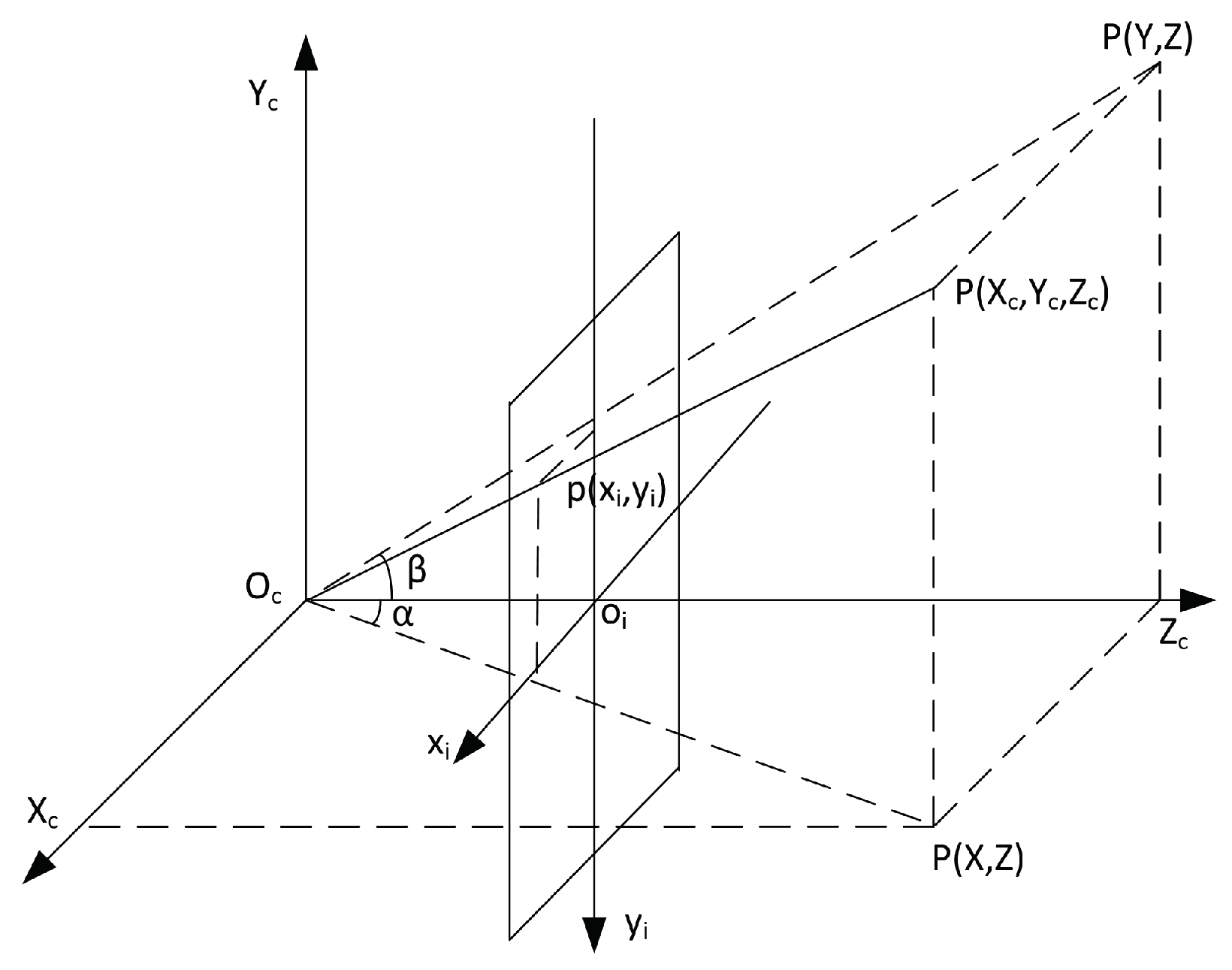

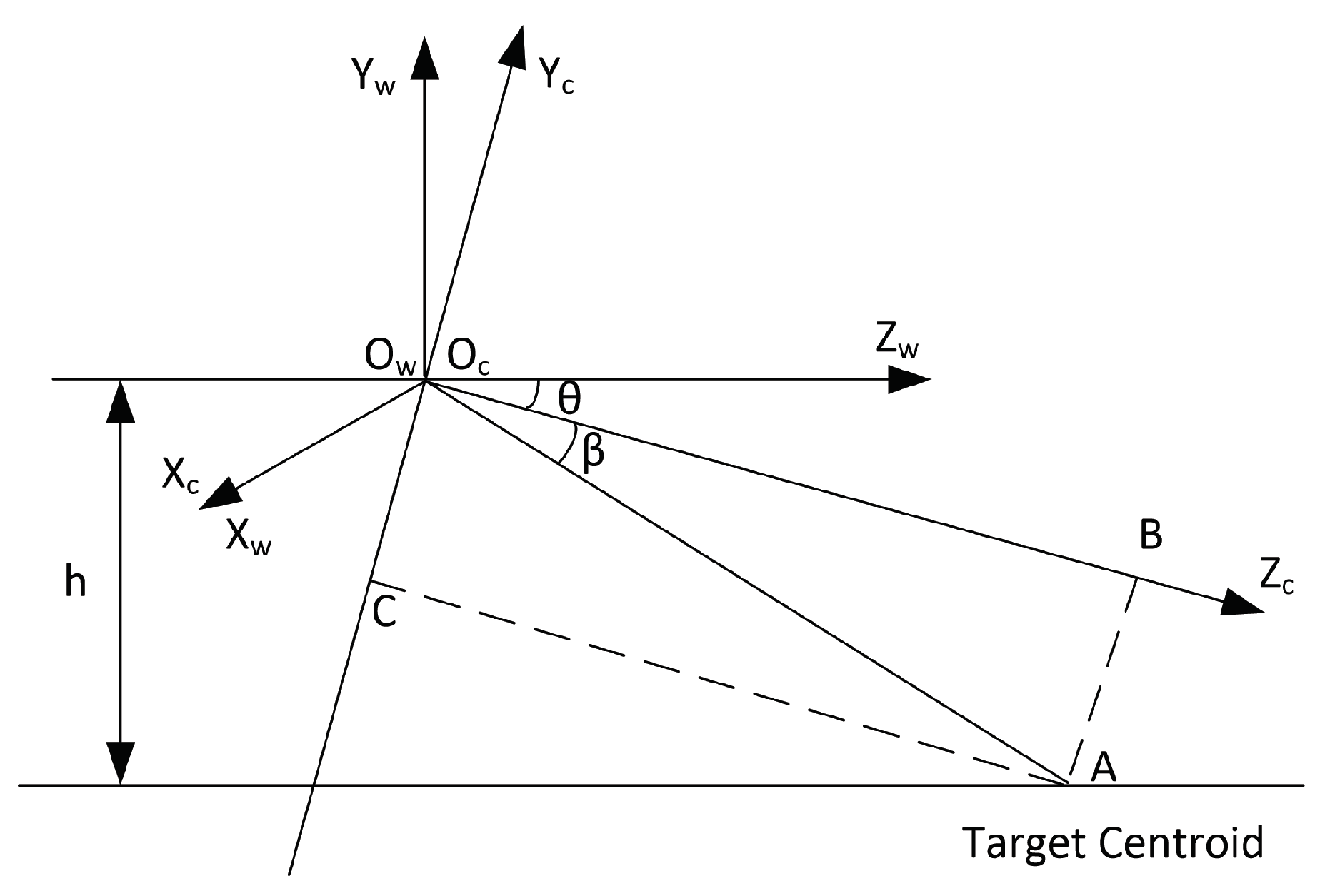
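The core of each pyramid level in the Lucas–Kanade method is a small least-squares problem built from the brightness-constancy constraint Ix·u + Iy·v + It = 0. The Python/NumPy sketch below solves that problem for a single window only. It is an illustrative assumption of how the per-level step works, not the authors' code: it omits the coarse-to-fine pyramid, the iterative warping between levels, and per-feature windows, and the function name `lucas_kanade_window` is hypothetical.

```python
import numpy as np


def lucas_kanade_window(prev: np.ndarray, curr: np.ndarray) -> np.ndarray:
    """Estimate one (u, v) displacement for a whole window (single pyramid level).

    Hypothetical helper: solves the 2x2 normal equations of the
    brightness-constancy constraint Ix*u + Iy*v + It = 0 over every pixel.
    """
    prev = prev.astype(np.float64)
    curr = curr.astype(np.float64)
    # Spatial gradients of the average frame; np.gradient returns d/d(row), d/d(col)
    iy, ix = np.gradient(0.5 * (prev + curr))
    it = curr - prev  # temporal derivative between the two frames
    a = np.array([[np.sum(ix * ix), np.sum(ix * iy)],
                  [np.sum(ix * iy), np.sum(iy * iy)]])
    b = -np.array([np.sum(ix * it), np.sum(iy * it)])
    # A singular matrix here signals the aperture problem (no texture in one direction)
    return np.linalg.solve(a, b)


if __name__ == "__main__":
    ys, xs = np.mgrid[0:64, 0:64].astype(np.float64)

    def blob(dx: float, dy: float) -> np.ndarray:
        # Gaussian blob whose center is shifted by (dx, dy) pixels
        return np.exp(-((xs - 32 - dx) ** 2 + (ys - 32 - dy) ** 2) / (2 * 5.0 ** 2))

    u, v = lucas_kanade_window(blob(0.0, 0.0), blob(0.3, -0.2))
    print(f"u = {u:.3f}, v = {v:.3f}")  # close to the true shift (0.3, -0.2)
```

In a pyramidal implementation, this solve would be repeated at each level, with the estimate from the coarser level used to warp the finer level before solving for the residual motion.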

2.2. Target Localization Using Monocular Vision

2.3. Nonlinear Filter

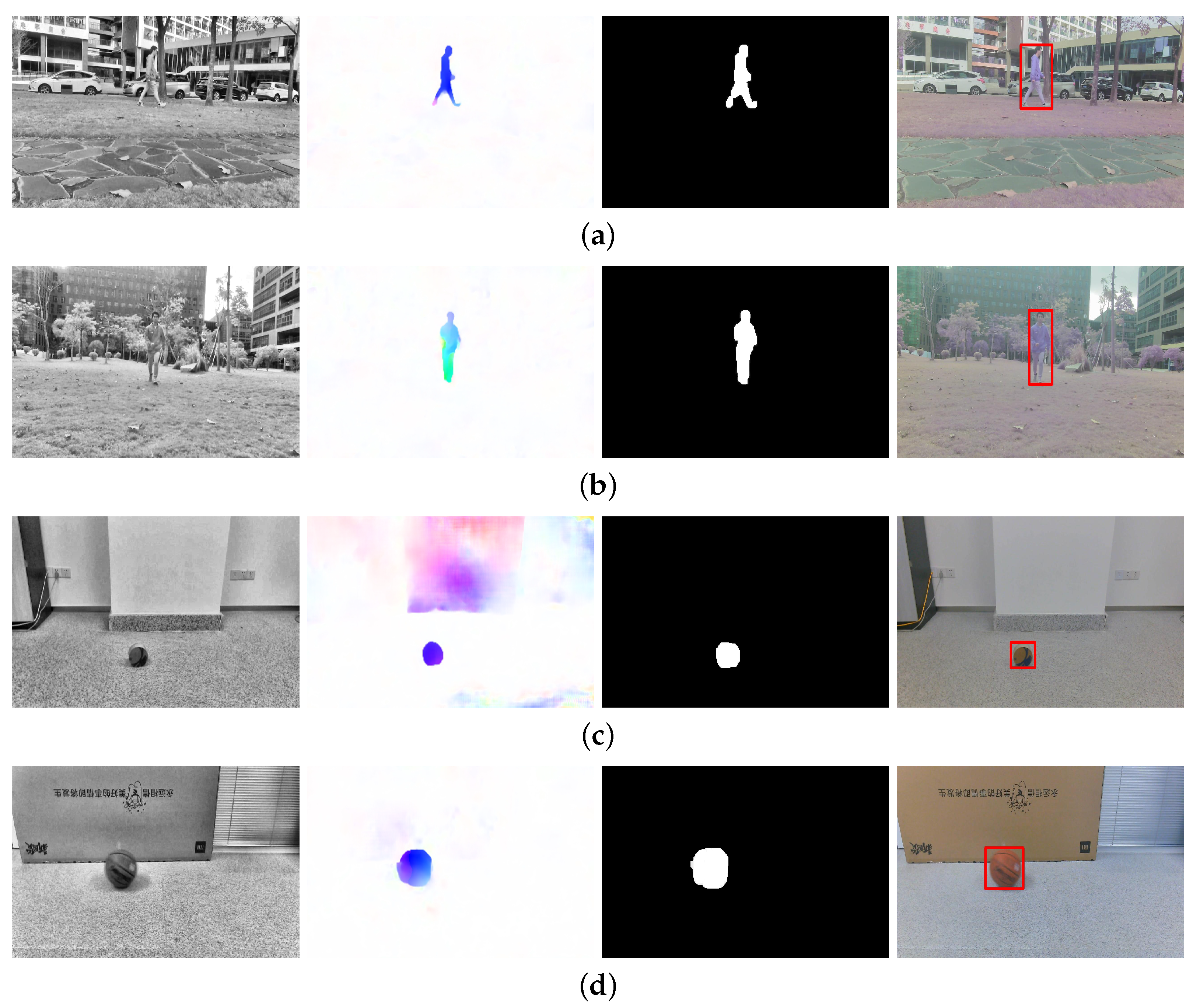

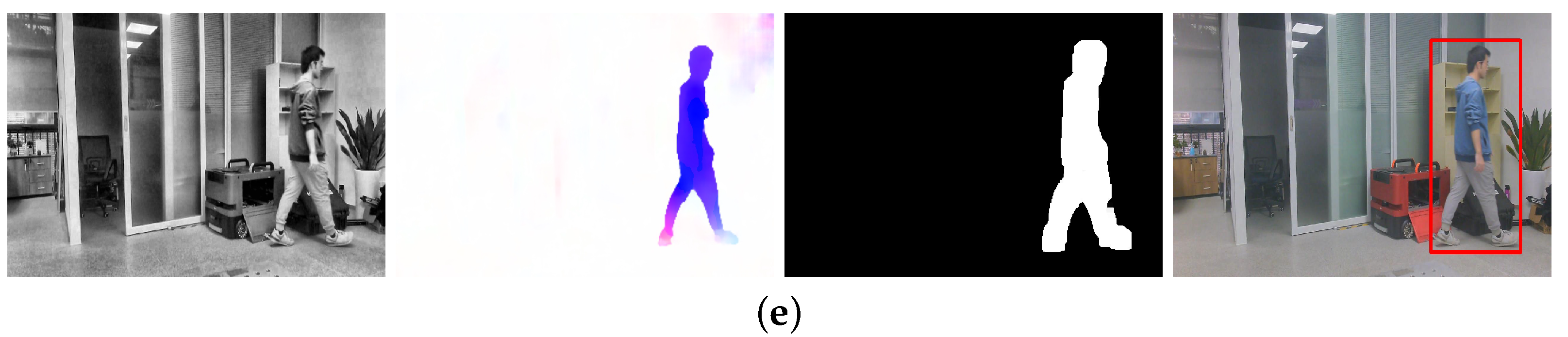

3. Moving-Target Detection and Extraction

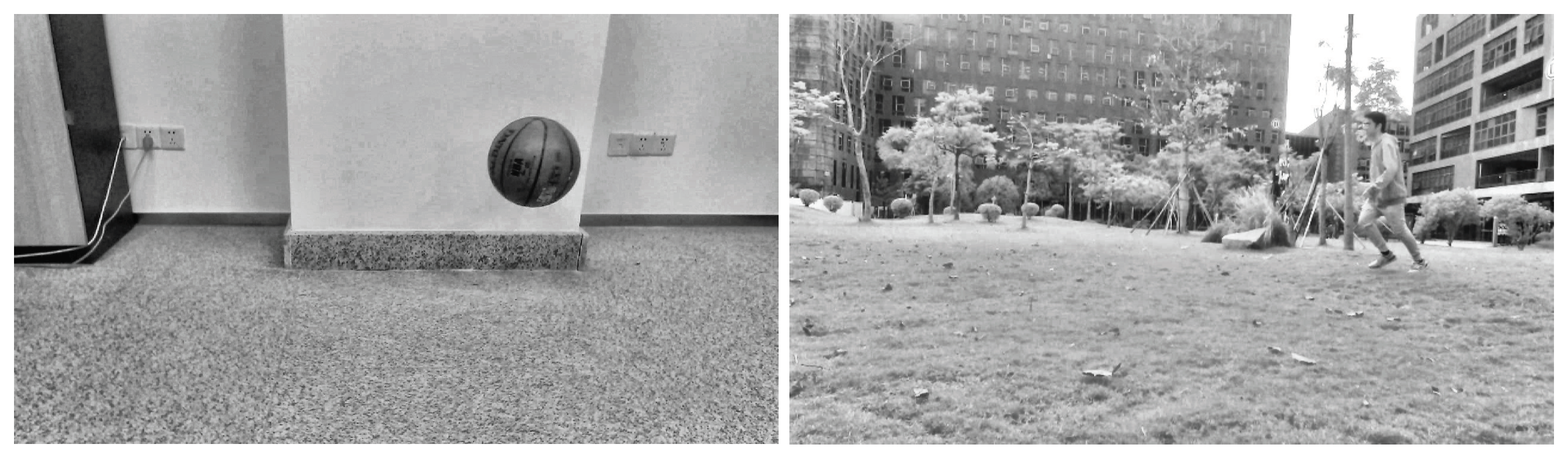

3.1. Video Image Preprocessing

3.1.1. Grayscale Processing

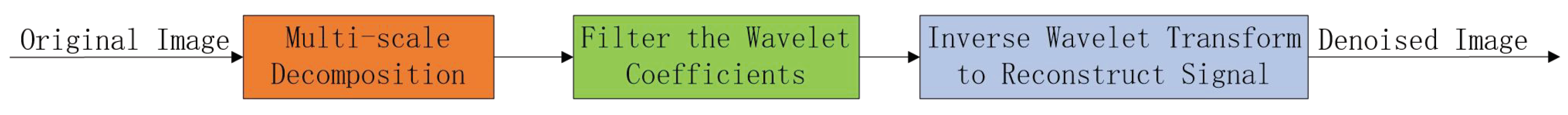
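Grayscale conversion is typically a weighted sum of the red, green, and blue channels. The sketch below assumes the ITU-R BT.601 luma weights (0.299, 0.587, 0.114). This text does not state which coefficients the authors used, so treat the weights and the helper name `to_grayscale` as assumptions.

```python
import numpy as np

# Assumed ITU-R BT.601 luma weights for (R, G, B); not confirmed by the source text
BT601_WEIGHTS = np.array([0.299, 0.587, 0.114])


def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 RGB image (uint8) to an H x W uint8 grayscale image."""
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("expected an H x W x 3 RGB array")
    gray = rgb.astype(np.float64) @ BT601_WEIGHTS  # weighted sum over the channel axis
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)
```

With OpenCV, `cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)` applies the same weights to BGR-ordered frames.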

3.1.2. Wavelet Transform Threshold for Noise Elimination
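Wavelet threshold denoising transforms the image, shrinks the small detail coefficients that are dominated by noise, and then inverts the transform. The sketch below is an assumption-laden illustration, not the authors' configuration. It uses a single-level orthonormal 2D Haar transform, soft thresholding, and Donoho's universal threshold with a median-based noise estimate. The wavelet basis, decomposition depth, and threshold rule used in the paper are not specified in this text, and all function names are hypothetical.

```python
from typing import Optional

import numpy as np


def haar2d(img: np.ndarray):
    """One-level orthonormal 2D Haar transform (height and width must be even)."""
    a, b = img[0::2, 0::2], img[0::2, 1::2]
    c, d = img[1::2, 0::2], img[1::2, 1::2]
    ll = (a + b + c + d) / 2  # approximation
    lh = (a - b + c - d) / 2  # detail: differences between neighboring columns
    hl = (a + b - c - d) / 2  # detail: differences between neighboring rows
    hh = (a - b - c + d) / 2  # detail: diagonal
    return ll, lh, hl, hh


def ihaar2d(ll, lh, hl, hh) -> np.ndarray:
    """Exact inverse of haar2d."""
    out = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    out[0::2, 0::2] = (ll + lh + hl + hh) / 2
    out[0::2, 1::2] = (ll - lh + hl - hh) / 2
    out[1::2, 0::2] = (ll + lh - hl - hh) / 2
    out[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return out


def soft_threshold(x: np.ndarray, t: float) -> np.ndarray:
    # Shrink magnitudes by t and set anything smaller than t to zero
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)


def wavelet_denoise(img: np.ndarray, threshold: Optional[float] = None) -> np.ndarray:
    img = np.asarray(img, dtype=np.float64)
    if img.shape[0] % 2 or img.shape[1] % 2:
        raise ValueError("image height and width must be even")
    ll, lh, hl, hh = haar2d(img)
    if threshold is None:
        # Noise std estimated from the diagonal details, then the universal threshold
        sigma = np.median(np.abs(hh)) / 0.6745
        threshold = sigma * np.sqrt(2.0 * np.log(img.size))
    # Only the detail bands are thresholded; the approximation band is kept as-is
    return ihaar2d(ll, soft_threshold(lh, threshold),
                   soft_threshold(hl, threshold), soft_threshold(hh, threshold))
```

With `threshold=0.0` the function reconstructs its input exactly, which is a quick way to check the transform pair.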

3.1.3. Contrast Limited Adaptive Histogram Equalization

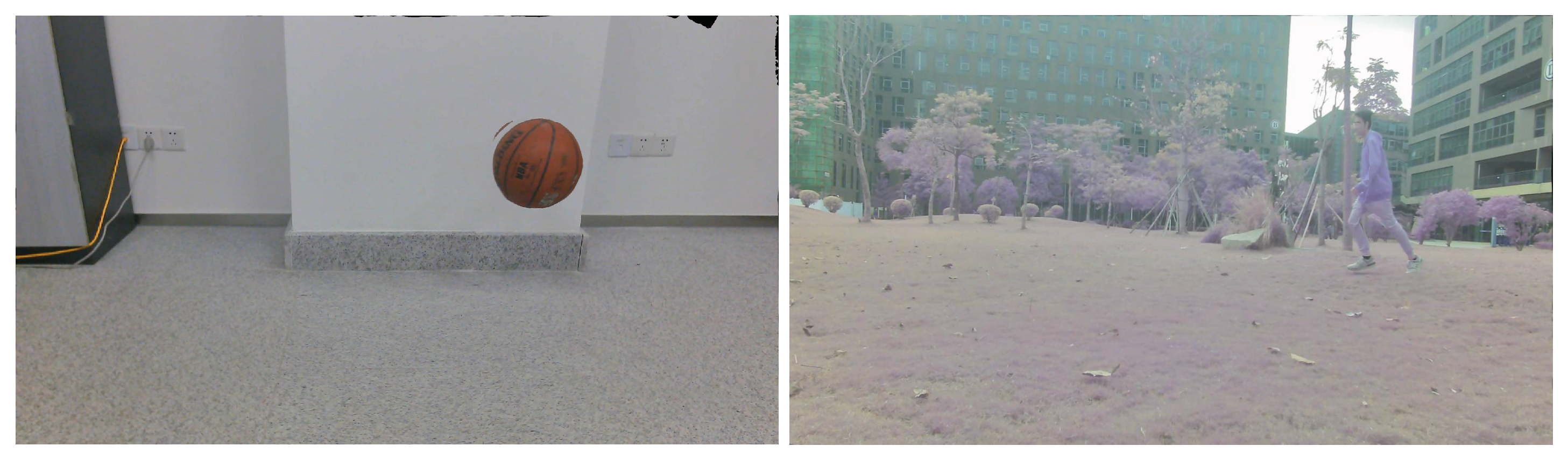
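The "contrast limited" part of CLAHE clips each tile's histogram before equalization and redistributes the clipped excess. The sketch below computes the lookup table for a single tile only. Full CLAHE (Zuiderveld) also splits the image into a grid of tiles and bilinearly interpolates between neighboring tile mappings, which is omitted here. The clip-limit convention (a multiple of the mean bin height) and the function name are assumptions. In practice, OpenCV provides the complete algorithm through `cv2.createCLAHE(clipLimit=..., tileGridSize=...)` and the returned object's `apply` method.

```python
import numpy as np


def clipped_equalization_lut(tile: np.ndarray, clip_limit: float = 2.0) -> np.ndarray:
    """Hypothetical helper: contrast-limited equalization LUT for one uint8 tile."""
    n_bins = 256
    hist = np.bincount(tile.ravel(), minlength=n_bins).astype(np.float64)
    # Clip limit expressed as a multiple of the mean bin height (assumed convention)
    limit = clip_limit * tile.size / n_bins
    excess = np.sum(np.maximum(hist - limit, 0.0))
    # Clip the histogram and spread the excess uniformly over all bins
    hist = np.minimum(hist, limit) + excess / n_bins
    cdf = np.cumsum(hist)
    # Map the cumulative distribution onto the full 0..255 range
    return np.clip(np.rint(cdf * (n_bins - 1) / cdf[-1]), 0, 255).astype(np.uint8)


if __name__ == "__main__":
    flat_tile = np.full((8, 8), 100, dtype=np.uint8)
    lut = clipped_equalization_lut(flat_tile)
    # Plain equalization would send gray level 100 to 255; clipping keeps it moderate
    print(lut[100])
```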

3.1.4. Optical Flow Estimation by Improved PWC-Net

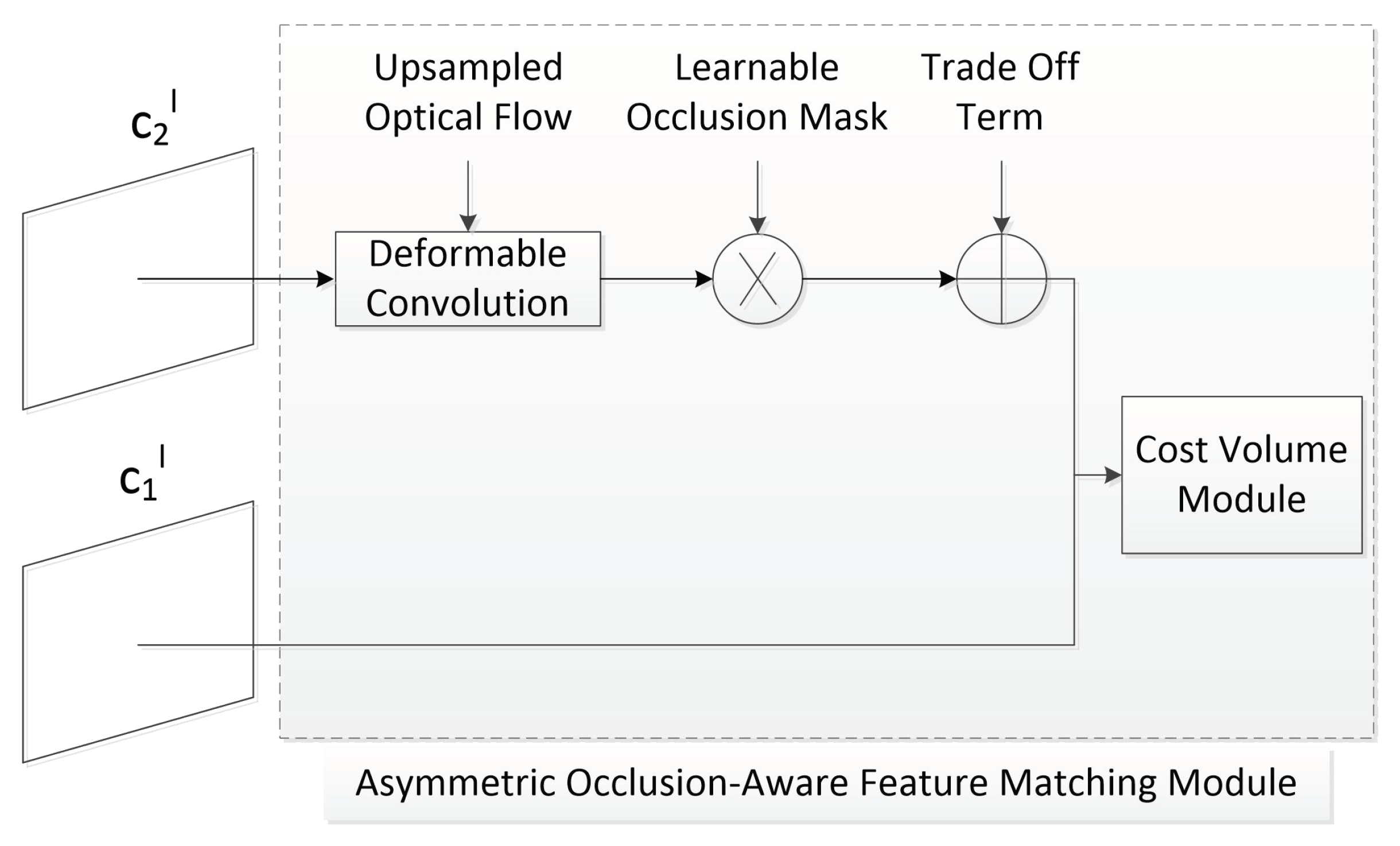

3.2. Target Extraction

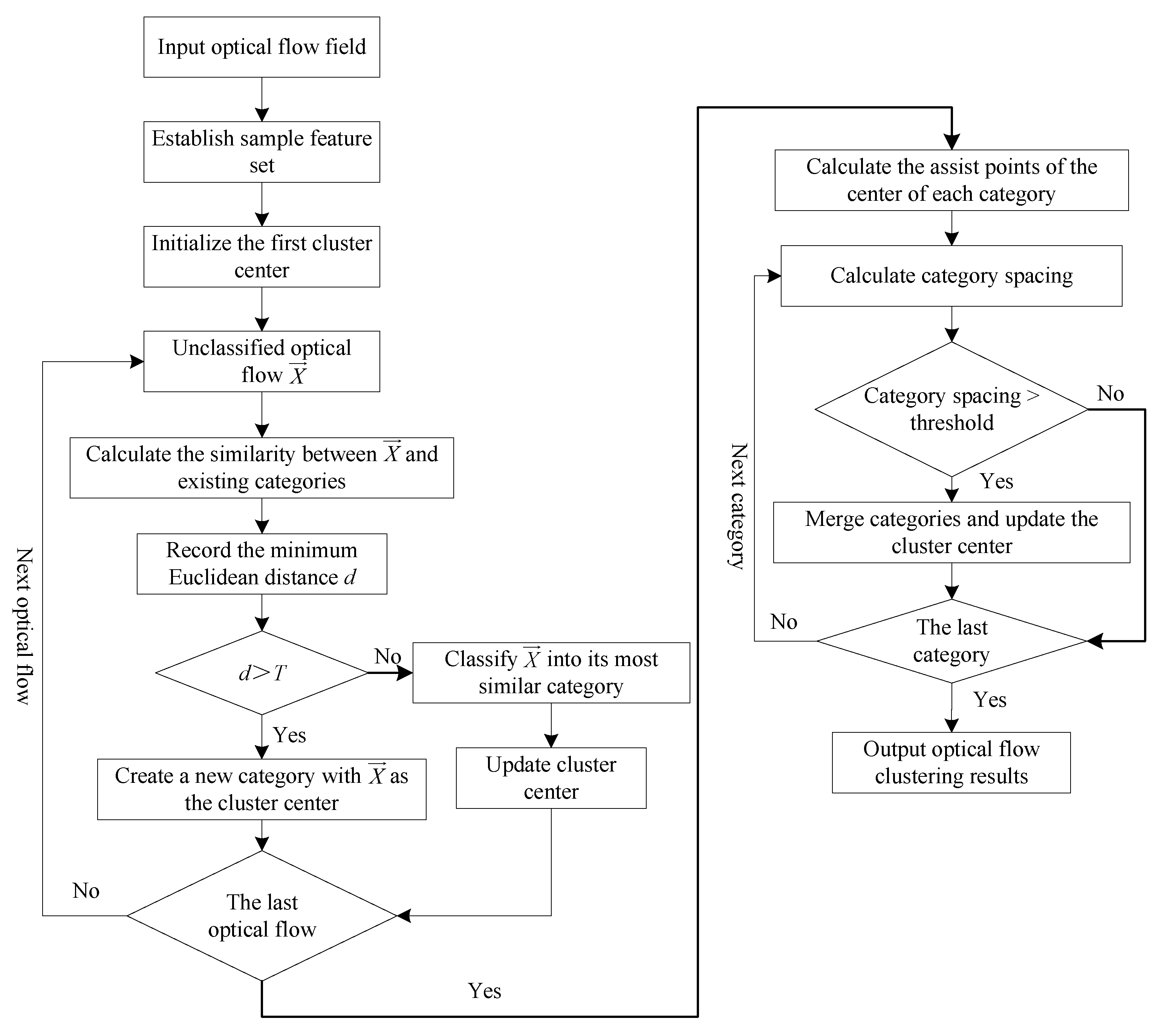

3.2.1. Improved K-Means and Agglomerative Clustering Algorithm

- (1) Initialize the first cluster center. Create the first optical flow class, randomly select a filtered optical flow vector (blue parts in Figure 13), assign it to this class as the class center, and take its sample features as the central sample features of the class.

- (2) Calculate the similarity. Select an unclassified optical flow vector in the optical flow field, calculate its similarity to each existing cluster, and record the Euclidean distance between its sample features and the central sample features of its most similar cluster.

- (3) Classify the optical flow. Set a threshold T. If the recorded distance exceeds T, create a new cluster, assign the optical flow vector to it, and use the vector as the center of the new cluster. Otherwise, assign the vector to its most similar cluster, compute the mean of the sample features of all optical flow vectors in that cluster, and take this mean as the new central sample features of the cluster, which completes the center update.

- (4) Repeat Steps (2) and (3) for every remaining unclassified optical flow vector until the cluster centers no longer change, and output the final K cluster centers and their cluster members.
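Steps (1)-(4) can be sketched as follows. This is a hypothetical Python illustration, not the authors' code. The feature vector of each optical flow sample (any row of a NumPy array, for example (u, v) or (x, y, u, v)) and the threshold value are assumptions, and Step (4) is interpreted here as a single threshold-based pass followed by nearest-center refinement until the centers stop changing.

```python
import numpy as np


def threshold_kmeans(features, threshold: float, max_iter: int = 100, seed: int = 0):
    """Threshold-driven K-means: K is set by `threshold` instead of being fixed in advance."""
    x = np.asarray(features, dtype=np.float64)
    order = np.random.default_rng(seed).permutation(len(x))
    # Step (1): a randomly chosen sample becomes the first cluster center
    centers = [x[order[0]].copy()]
    members = [[order[0]]]
    # Steps (2)-(3): visit the remaining samples one by one
    for idx in order[1:]:
        dist = np.linalg.norm(np.asarray(centers) - x[idx], axis=1)
        j = int(np.argmin(dist))  # most similar existing cluster
        if dist[j] > threshold:
            centers.append(x[idx].copy())  # too far from every center: open a new cluster
            members.append([idx])
        else:
            members[j].append(idx)
            centers[j] = x[members[j]].mean(axis=0)  # center = mean of member features
    c = np.asarray(centers)
    # Step (4), as interpreted here: reassign to the nearest center until centers are stable
    for _ in range(max(1, max_iter)):
        labels = np.argmin(np.linalg.norm(x[:, None, :] - c[None, :, :], axis=2), axis=1)
        new_c = np.array([x[labels == k].mean(axis=0) if np.any(labels == k) else c[k]
                          for k in range(len(c))])
        if np.allclose(new_c, c):
            break
        c = new_c
    return c, labels
```

Unlike standard K-means, the number of clusters K is not an input; it emerges from the threshold T, which suits scenes where the number of moving regions is unknown.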

The resulting clusters are then merged by agglomerative clustering:

- (1) Eight new data points are generated for each cluster center. The eight points are centered on that cluster center and arranged at set angles around it; the position of each new point is computed from the cluster center position and its angle.

- (2) The distances between the newly generated points of two different clusters (for example, the 8 points of Cluster 1 and the 8 points of Cluster 2) are calculated, and the shortest of these distances is taken as the inter-class distance.

- (3) Set a threshold, and merge any two clusters whose inter-class distance is less than this threshold.

- (4) Update and return the final cluster centers and cluster members.
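The merging step can be sketched as follows. Because the equation defining the eight generated points is not reproduced in this text, the sketch assumes they lie on a circle around each cluster center, spaced 45° apart, with a radius equal to the largest member-to-center distance of that cluster. Both choices, and all function names, are hypothetical.

```python
import numpy as np


def ring_points(center, radius: float, n: int = 8) -> np.ndarray:
    """Hypothetical: n points on a circle around `center`, 360/n degrees apart."""
    angles = np.arange(n) * (2.0 * np.pi / n)
    offsets = np.column_stack([np.cos(angles), np.sin(angles)])
    return np.asarray(center, dtype=np.float64) + radius * offsets


def inter_class_distance(c1, r1, c2, r2) -> float:
    # Shortest distance between any generated point of one cluster and any of the other
    p1, p2 = ring_points(c1, r1), ring_points(c2, r2)
    return float(np.min(np.linalg.norm(p1[:, None, :] - p2[None, :, :], axis=2)))


def merge_clusters(points, labels, threshold: float):
    """Repeatedly merge the first pair of clusters whose inter-class distance < threshold."""
    pts = np.asarray(points, dtype=np.float64)
    lab = np.asarray(labels).copy()
    while True:
        ids = np.unique(lab)
        centers = {k: pts[lab == k].mean(axis=0) for k in ids}
        # Assumed radius: distance of the farthest member from its cluster center
        radii = {k: float(np.max(np.linalg.norm(pts[lab == k] - centers[k], axis=1)))
                 for k in ids}
        pair = next(((a, b) for i, a in enumerate(ids) for b in ids[i + 1:]
                     if inter_class_distance(centers[a], radii[a],
                                             centers[b], radii[b]) < threshold), None)
        if pair is None:
            break
        lab[lab == pair[1]] = pair[0]  # absorb cluster b into cluster a
    # Step (4): recompute the final centers from the merged memberships
    ids = np.unique(lab)
    return np.array([pts[lab == k].mean(axis=0) for k in ids]), lab
```

Measuring the gap between the generated boundary points, rather than between the centers themselves, lets two large adjacent fragments of one target merge even when their centers are far apart.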

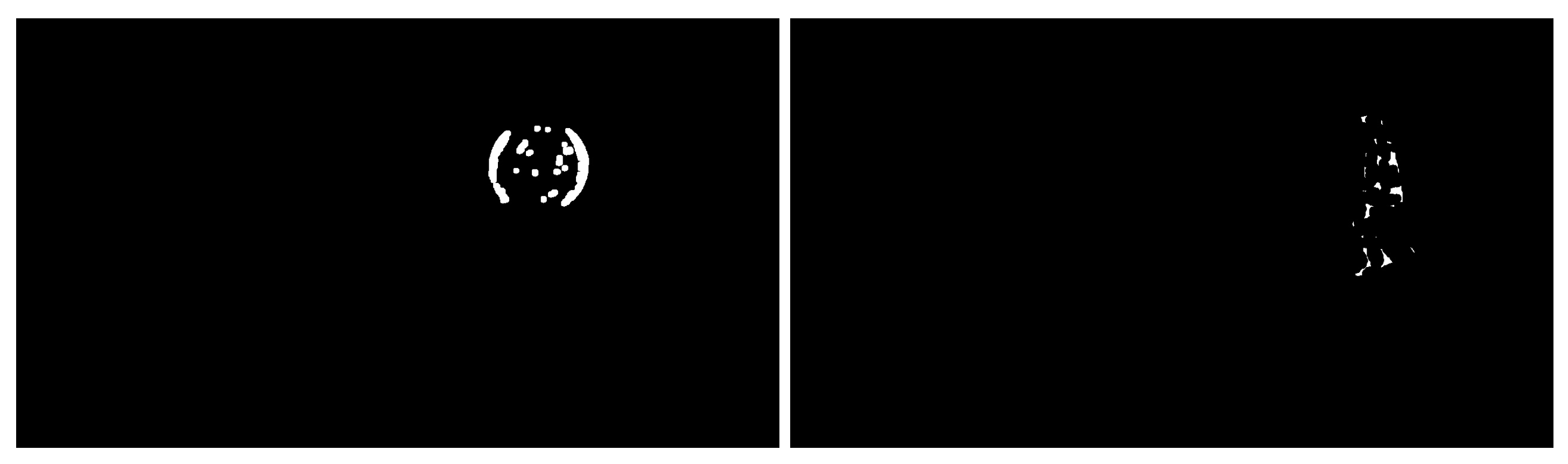

3.2.2. Accurate Extraction Based on Frame Difference Method and Morphological Operation
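A minimal sketch of frame differencing followed by morphological cleanup, assuming a two-frame absolute difference, a fixed binarization threshold, and a 3 × 3 square structuring element. The paper's actual number of frames, threshold, and kernel are not given in this text, and the function names are hypothetical. Opening removes isolated noise pixels, and closing then fills small holes in the target region. `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def erode3x3(mask: np.ndarray) -> np.ndarray:
    # A pixel survives only if its whole 3x3 neighborhood is foreground
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    return sliding_window_view(padded, (3, 3)).all(axis=(2, 3))


def dilate3x3(mask: np.ndarray) -> np.ndarray:
    # A pixel becomes foreground if any pixel in its 3x3 neighborhood is foreground
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    return sliding_window_view(padded, (3, 3)).any(axis=(2, 3))


def frame_difference_mask(prev: np.ndarray, curr: np.ndarray, threshold: int = 25) -> np.ndarray:
    """Binary motion mask from two grayscale uint8 frames (hypothetical parameters)."""
    diff = np.abs(curr.astype(np.int16) - prev.astype(np.int16))  # avoid uint8 wrap-around
    mask = diff > threshold
    opened = dilate3x3(erode3x3(mask))  # opening: remove isolated noise pixels
    return erode3x3(dilate3x3(opened))  # closing: fill small holes
```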

4. Target Localization Based on Pinhole Imaging Theory

4.1. Localization Problem Formulation

4.2. Angle and Distance Measurements
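The azimuth and pitch entries in the experimental table can be reproduced, to the reported two decimals, by a simple pinhole relation: the angle is the arctangent of the pixel offset from the image center multiplied by the pixel size and divided by the focal length. The sketch below takes the camera specification values (1920 × 1080 output, 3 μm pixels, 6 mm focal length) and assumes the principal point lies at the image center (960, 540). It is a reconstruction consistent with the reported numbers, not the authors' published code. The sign convention (negative azimuth left of center, negative pitch above center) is inferred from the table, and the distance row is not modeled.

```python
import math


def pixel_to_angles(u: float, v: float, width: int = 1920, height: int = 1080,
                    pixel_size_mm: float = 0.003, focal_mm: float = 6.0):
    """Azimuth and pitch (degrees) of the ray through pixel (u, v) under a pinhole model.

    Assumes square pixels and a principal point at the image center.
    """
    cx, cy = width / 2, height / 2
    # Offset on the sensor (mm) divided by the focal length is the tangent of the angle
    azimuth = math.degrees(math.atan((u - cx) * pixel_size_mm / focal_mm))
    pitch = math.degrees(math.atan((v - cy) * pixel_size_mm / focal_mm))
    return azimuth, pitch


if __name__ == "__main__":
    # Centroid positions from the experimental table (Pictures 1-6)
    centroids = [(479, 259), (1474, 275), (1476, 843), (439, 829), (340, 181), (293, 967)]
    for i, (u, v) in enumerate(centroids, start=1):
        az, pt = pixel_to_angles(u, v)
        print(f"Picture {i}: azimuth {az:.2f} deg, pitch {pt:.2f} deg")
```

For Picture 1, for example, the horizontal offset is 479 − 960 = −481 pixels, i.e., −1.443 mm on the sensor, and atan(−1.443/6) ≈ −13.52°, matching the tabulated azimuth.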

5. Moving-Target Tracking Using Visual Image and Cubature Kalman Filter

- Prediction update:

- (i) Decompose the estimation error covariance matrix.

- (ii) Calculate the cubature points.

- (iii) Calculate the propagated cubature points of the state transition function, $X^{*}_{i,k|k-1} = f(X_{i,k-1|k-1})$ for $i = 1, \dots, m$, where $X_{i,k-1|k-1}$ and $X^{*}_{i,k|k-1}$ are the cubature points before and after propagation and m is the number of cubature points. Under the third-order spherical-radial cubature rule, the number of cubature points is twice the dimension n of the state vector of the nonlinear system, i.e., $m = 2n$. The cubature point set is $\xi_i = \sqrt{m/2}\,[1]_i$ with equal weights $\omega_i = 1/m$, where $[1] = \{e_1, \dots, e_n, -e_1, \dots, -e_n\}$ is a point set in n-dimensional space and $e_j$ denotes the j-th unit vector.

- (iv) Calculate the predicted state.

- (v) Calculate the prediction covariance matrix.

- Measurement update:

- (i) Decompose the prediction covariance matrix.

- (ii) Calculate the updated cubature points.

- (iii) Calculate the propagated cubature points of the measurement function.

- (iv) Calculate the predicted measurement.

- (v) Calculate the innovation, i.e., the difference between the measurement at the current time step and the predicted measurement.

- (vi) Calculate the innovation covariance matrix.

- (vii) Calculate the cross-covariance matrix.

- (viii) Calculate the cubature Kalman filter gain.

- (ix) Calculate the estimated state at the current time step.

- (x) Calculate the estimation error covariance matrix.

On the basis of the pinhole imaging and localization model described in Section 4, the CKF is used, together with the assumed dynamic models, to estimate the 3D target state.
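The prediction and measurement-update steps above can be sketched in NumPy as follows. The sketch follows the standard third-order cubature rule of Arasaratnam and Haykin (m = 2n points with equal weights 1/m) and uses a Cholesky factor for the covariance decomposition. It is not the authors' implementation: the dynamic model `f`, the measurement model `h`, and the noise covariances `q` and `r` are placeholders to be supplied by the user (in this paper, `h` would presumably map the 3D target state to the pinhole-model measurements of Section 4).

```python
import numpy as np


def cubature_points(mean: np.ndarray, cov: np.ndarray) -> np.ndarray:
    """Return the 2n cubature points (as columns) for a Gaussian N(mean, cov)."""
    n = mean.size
    s = np.linalg.cholesky(cov)  # decomposition: cov = s @ s.T
    # xi_i = sqrt(m/2) * [1]_i with m = 2n, i.e. +/- sqrt(n) along each axis
    xi = np.sqrt(n) * np.hstack([np.eye(n), -np.eye(n)])
    return mean[:, None] + s @ xi


def ckf_predict(x, p, f, q):
    """Time update: propagate the cubature points through the dynamic model f."""
    pts = cubature_points(x, p)
    prop = np.column_stack([f(pts[:, i]) for i in range(pts.shape[1])])
    x_pred = prop.mean(axis=1)  # equal weights 1/m
    dev = prop - x_pred[:, None]
    p_pred = dev @ dev.T / pts.shape[1] + q
    return x_pred, p_pred


def ckf_update(x_pred, p_pred, z, h, r):
    """Measurement update with measurement model h and measurement noise covariance r."""
    pts = cubature_points(x_pred, p_pred)
    m = pts.shape[1]
    zs = np.column_stack([h(pts[:, i]) for i in range(m)])
    z_pred = zs.mean(axis=1)                    # predicted measurement
    dz = zs - z_pred[:, None]
    dx = pts - x_pred[:, None]
    p_zz = dz @ dz.T / m + r                    # innovation covariance
    p_xz = dx @ dz.T / m                        # cross-covariance
    gain = np.linalg.solve(p_zz, p_xz.T).T      # p_xz @ inv(p_zz), as p_zz is symmetric
    x_new = x_pred + gain @ (z - z_pred)        # innovation is z - z_pred
    p_new = p_pred - gain @ p_zz @ gain.T
    return x_new, 0.5 * (p_new + p_new.T)       # symmetrize against round-off
```

For a linear model the cubature rule is exact, so the filter reduces to the ordinary Kalman filter; comparing the two on a constant-velocity model is a convenient sanity check before plugging in a nonlinear measurement function.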

6. Experimental Examples

6.1. Target Detection and Extraction (Using the Proposed Method in Section 3)

6.2. Moving Target Localization (Using the Proposed Methods in Sections 4 and 5)

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Network | Time (s) | Sintel Clean AEPE (Train) | Sintel Clean AEPE (Test) | Sintel Final AEPE (Train) | Sintel Final AEPE (Test) | KITTI 2012 AEPE (Train) | KITTI 2012 AEPE (Test) | KITTI 2015 Fl-all (Train) | KITTI 2015 Fl-all (Test) |
|---|---|---|---|---|---|---|---|---|---|
| PWC-Net | 0.03 | 2.55 | 3.86 | 3.93 | 5.13 | 4.14 | 1.7 | 33.67% | 9.60% |
| Improved PWC-Net | 0.03 | 2.33 | 2.77 | 3.72 | 4.38 | 3.21 | 1.1 | 23.58% | 6.81% |

| Parameter | Specification |
|---|---|
| Sensor specification | Advanced CMOS photosensitive chip, 1/2.7 inch |
| Pixel size | 3 μm × 3 μm |
| Default frame rate | 30 frames/s |
| Camera lens | Infrared, 60°, no distortion |
| Signal-to-noise ratio | 39 dB |
| Hardware | Industrial grade, 2 megapixels |
| Power | 1 W |
| Working voltage | 5 V |
| Output resolution | 1920 × 1080 |
| Interface | USB 2.0, supports the UVC communication protocol |
| Focal length | 6 mm |

| Quantity | Picture 1 | Picture 2 | Picture 3 | Picture 4 | Picture 5 | Picture 6 |
|---|---|---|---|---|---|---|
| Camera height | 87.5 cm | 87.5 cm | 87.5 cm | 87.5 cm | 75.9 cm | 75.9 cm |
| Shooting angle | 45° | 45° | 45° | 45° | 30° | 15° |
| Centroid position (pixels) | (479, 259) | (1474, 275) | (1476, 843) | (439, 829) | (340, 181) | (293, 967) |
| Azimuth | −13.52° | 14.41° | 14.47° | −14.60° | −17.22° | −18.44° |
| Reference azimuth | −15.16° | 20.5° | 21.72° | −15.65° | −18.94° | −20.21° |
| Pitch | −8.00° | −7.55° | 8.61° | 8.22° | −10.18° | 12.05° |
| Reference pitch | −9.85° | −9.54° | 10.92° | 10.79° | −11.69° | 13.49° |
| Distance | 149.5 cm | 148.5 cm | 112.1 cm | 112.8 cm | 234.1 cm | 175.5 cm |
| Reference distance | 157.4 cm | 160.7 cm | 113.4 cm | 109.7 cm | 241.3 cm | 182.6 cm |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, S.; Xu, S.; Ma, Z.; Wang, D.; Li, W. A Systematic Solution for Moving-Target Detection and Tracking While Only Using a Monocular Camera. Sensors 2023, 23, 4862. https://doi.org/10.3390/s23104862
