Abstract

With the increasing deployment of autonomous taxis in different cities around the world, recent studies have stressed the importance of developing new methods, models and tools for intuitive human–autonomous taxis interactions (HATIs). Street hailing is one example, where passengers would hail an autonomous taxi by simply waving a hand, exactly like they do for manned taxis. However, automated taxi street-hailing recognition has been explored to a very limited extent. In order to address this gap, in this paper, we propose a new method for the detection of taxi street hailing based on computer vision techniques. Our method is inspired by a quantitative study that we conducted with 50 experienced taxi drivers in the city of Tunis (Tunisia) in order to understand how they recognize street-hailing cases. Based on the interviews with taxi drivers, we distinguish between explicit and implicit street-hailing cases. Given a traffic scene, explicit street hailing is detected using three elements of visual information: the hailing gesture, the person’s relative position to the road and the person’s head orientation. Any person who is standing close to the road, looking towards the taxi and making a hailing gesture is automatically recognized as a taxi-hailing passenger. If some elements of the visual information are not detected, we use contextual information (such as space, time and weather) in order to evaluate the existence of implicit street-hailing cases. For example, a person who is standing on the roadside in the heat, looking towards the taxi but not waving his hand is still considered a potential passenger. Hence, the new method that we propose integrates both visual and contextual information in a computer-vision pipeline that we designed to detect taxi street-hailing cases from video streams collected by capturing devices mounted on moving taxis. We tested our pipeline using a dataset that we collected with a taxi on the roads of Tunis. 
Considering both explicit and implicit hailing scenarios, our method yields satisfactory results in relatively realistic settings, with an accuracy of 80%, a precision of 84% and a recall of 84%.

1. Introduction

Despite the uncertainty regarding their ability to deal with all real-world challenges, autonomous vehicle (AV) technologies have been receiving a lot of interest from industry, governmental authorities and academia [1]. Autonomous taxis (also called Robotaxis) are a recent example of such interest. Different companies are competing to launch their autonomous taxis, such as Waymo (Google) [2], the Chinese Baidu [3], Hyundai with its Level 4 Ioniq 5s [4], NuTonomy (MIT) and the Japanese Tier IV, to mention a few. Robotaxis are not in the prototyping stage anymore, and different cities have regulated their usage on the roads, such as Seoul [5], Las Vegas [6], San Diego, and recently San Francisco, where California regulators gave Cruise’s robotic taxi service the permission to start driverless rides [7]. Uber and Lyft, two ride e-hailing giants, have partnered with AV technology companies to launch their driverless taxi services [8]. The Chinese DeepRoute.ai is planning to start the mass production of Level 4 autonomous taxis in 2024, to be available for consumer purchase afterwards [9]. In academia, recent research works have concluded that, besides the safety issue, the acceptance of Robotaxis by users is hugely affected by the user experience [10,11,12]. Researchers have identified different autonomous taxi service stages, mainly calling, pick-up, traveling and drop-off stages, and have conducted experiments to study the user experience during these different stages. Flexibility during the pick-up and drop-off stages is one of the issues raised by users. Currently, users use mobile applications to “call” an autonomous taxi, and after boarding they interact either through a display installed in the vehicles or using a messenger app on their smartphones. One of the problems raised by participants is the difficulty of identifying the specific taxi they called, especially if there are many autonomous taxis around [10]. 
Mutual identification using QR code was inconvenient [10], and some participants suggested that other intuitive ways would be more interesting, such as hailing taxis through eye movement using Google glasses [12] and hailing gesture signals using smartwatches [12]. Participants also requested more flexibility with respect to the communication of the pick-up and drop-off locations to autonomous taxis. The current practice is to select pick-up and drop-off locations from a fixed list of stands, but users have the perception that taxis can be everywhere [10], and they expect autonomous taxis to be like traditional manned taxis in this respect [11].

These research findings show that scientists from different disciplines need to join their efforts to propose human–autonomous taxi interaction (HATI) models, frameworks and technologies that allow for developing efficient and intuitive solutions for the different service stages. In this paper, we address the case of one service stage that has been explored to a very limited extent, which is street hailing. The current state of the art reveals that only two works have studied taxi street hailing from an interaction perspective. The first work is the study of Anderson [13], a sociologist who explored traditional taxi street hailing as a social interaction between drivers and hailers. Based on a survey that he distributed to a sample of taxi drivers, he found that the hailing gestures used by passengers largely vary in relation to the visual proximity of the hailer to the taxi and to the speed at which the taxi is passing [13]. The second work, also from a social background, is the conceptual model proposed by [14] to design their vision of what would be humanized social interactions between future autonomous taxis and passengers during street-hailing tasks. Both works model taxi street hailing as a sequence of visually driven interactions using gestures that differ according to the distance between autonomous taxis and hailing passengers. Consequently, we believe that computer-vision techniques are fundamental in the development of automated HATI, particularly for the recognition of street-hailing situations. First, they are not intrusive, as they do not require passengers to use any device or application. Second, they mimic how passengers communicate their requests to taxi drivers in the real world, through visual communication. Third, like for manned taxis, they allow passengers to hail autonomous taxis everywhere without being limited to special stands [14]. 
Finally, they allow for better accessibility of the service, given that passengers who cannot/do not want to use mobile applications—for whatever reason—can still make their requests.

Apart from the work proposed in [15], our review of the state of the art revealed that there are no available automated solutions for taxi street-hailing recognition. In order to address this gap, in this paper, we propose a new method for the recognition of taxi street hailing using computer vision techniques. We report our preliminary results on the exploration of the role of the context in the detection of street-hailing scenes. In addition to the related state of the art, we conducted a survey in order to understand how 50 experienced taxi drivers in Tunis city (Tunisia) use contextual information to detect taxi street hailers. We distinguished between visual information and contextual information. The visual information captures the visual elements that taxi drivers rely on to identify explicit hailing behaviors, such as passenger gestures, relative position to the road and head orientation. Contextual information represents the non-visual information that taxi drivers rely on to recognize implicit hailing situations, such as time, space, etc. Elements of both visual and contextual information are included in a computer-vision pipeline that we designed to recognize taxi street hailers from video streams that were collected by capturing devices mounted on moving taxis. We tested our pipeline on a dataset collected by a taxi on the roads of Tunis, and our results are promising. Consequently, in this paper, we contribute with a new computer-vision-based method that uses visual and contextual information for the detection of both explicit and implicit hailing scenes from video streams.

The paper is structured as follows. In Section 2, we present a discussion of the related state of the art. In Section 3, we summarize the main findings from the taxi drivers’ survey regarding street-hailing detection and the role of visual and contextual information. In Section 4, we present our computer-vision-based approach for the detection of explicit and implicit street-hailing scenes. In Section 5, we present the training and test datasets that we used to experiment with our approach, along with the experimental settings. In Section 6, we present our quantitative and qualitative results and we discuss the strengths and weaknesses of our approach. We conclude in Section 7 by giving a brief overview of our future work.

2. Related Work

The state of the art on taxi street-hailing detection using computer vision techniques revealed the existence of only one work proposed by [15], which recognizes taxi street hailing from individual images based on the person’s pose and head orientation. We discuss this work in more detail in Section 6. Given the sparsity of research works about this specific topic, in the present section, we review the main works in the following related topics: (1) human–autonomous vehicle interaction (HAVI) and gesture recognition, (2) the detection of pedestrian intentions and (3) the identification of taxi street-hailing behavior.

2.1. Human–Autonomous Vehicle Interaction (HAVI) and Body Gesture Recognition

Since its introduction by W. Myron in 1991 [16], gesture detection and recognition have been widely used for the implementation of a variety of human–machine interaction applications, especially in robotics. With the recent technological progress in autonomous vehicles, gestures have become an intuitive choice for the interaction between autonomous vehicles and humans [17,18,19]. From an application perspective, gesture recognition techniques have been used to support both indoor and outdoor human–autonomous vehicle interactions (HAVIs). Indoor interactions are those between the vehicles and the persons inside them (drivers or passengers). Most of the indoor HAVI applications focus on the detection of unsafe driver behavior, such as fatigue [20] and on vehicle control [21]. Outdoor interactions are those between autonomous vehicles and persons outside them, such as pedestrians. Most of the outdoor HAVI applications focus on the car-to-pedestrian interaction [22,23] and car-to-cyclist communication [24] for road safety purposes, but other applications have been explored, such as traffic control gestures recognition [25], where traffic control officers can request an autonomous vehicle to stop or turn with specific hand gestures.

From a technological perspective, a lot of research work has been conducted on the recognition of body gestures from video data using computer-vision techniques. Skeleton-based recognition is one of the most widely used techniques [26], both for static and dynamic gesture recognition. A variety of algorithms have been used. Traditional techniques include Gaussian mixture models [27], recurrent neural networks (RNNs) with bidirectional long short-term memory (LSTM) cells [28], deep learning [29] and CNNs [30]. The current state of the art for indoor and outdoor gesture recognition builds on deep neural networks. Recent reviews of hand gesture recognition techniques in general can be found in [31,32].

2.2. Predicting Intentions of Pedestrians from 2D Scenes

The ability of autonomous vehicles to detect pedestrians’ road-crossing intentions is crucial for pedestrian safety. Approaches to pedestrian intention detection fall into two major categories. The first category formalizes intention detection as a trajectory prediction problem. The second category considers pedestrian intention as a binary decision problem.

Several models and architectures have been developed and deployed, aiming at achieving a high-accuracy prediction of pedestrian intention using a binary classification approach. Unlike other methods, binary classification utilizes different tools and techniques depending on the data source and the data characteristics and features. For instance, the models based on RGB input use either 2D or 3D convolutions. In 2D convolution, a sliding filter is used along the height and width, and in 3D settings, the filter slides along the height, width and temporal depth. Using 2D convolutional networks, the information is propagated across time either via LSTMs or feature aggregation over time [33]. For instance, the authors in [34] proposed a two-stream architecture that takes as input a single excerpt from typical traffic scenes bounding an entity of interest (EoI) corresponding to the pedestrian. The EoI is processed by two independent CNNs producing two feature vectors that are then concatenated for classification. Authors in [35] presented an extension of these models by integrating LSTMs and 3D CNNs, and those in [36] did so by feeding many frames into the future and carrying out the classification using these frames.

Other methods that use the skeletal data extracted from the frames have been proposed. These methods directly operate on the skeleton of the pedestrians. The main advantage of these methods is that the data dimensions are significantly reduced. The yielded models are therefore less prone to overfitting [37]. Recently, a new method was proposed based on the individual keypoints in order to achieve the prediction of pedestrian intentions but from a single frame. Another method proposed by [38] exploits contextual features, such as the distance separating the pedestrian from the vehicle, his lateral motion, and his surroundings as well as the vehicle’s velocity as input to a CRF (conditional random field). The purpose of this model is to predict in an early and accurate fashion pedestrian’s crossing/not-crossing behavior in front of a vehicle.

2.3. Identification of Taxi Street-Hailing Behavior

The topic of recognizing taxi street hailing has been studied by sociologists in order to explore how taxi drivers perceive and culturally interact with their environment, including passengers [39]. One notable study is that of Anderson, who studied gestures, in particular, as a communication channel between taxi drivers and passengers during hailing interactions [13]. Based on a survey that he distributed to a group of taxi drivers in San Francisco, CA, USA, the researcher wanted to explore how taxi drivers evaluate street hails in terms of clarity and propriety. Clarity refers to “the ability of clearly recognizing a hailing behavior and distinguishing it from other one”, such as waving to a friend. Propriety refers to “the ability to identify if the passenger can be trusted morally and socially” so the taxi driver can decide whether or not to accept the hailing request [13]. In the context of our work, it is the clarity aspect that is more relevant, and in this respect, Anderson’s results are interesting. He found that the method of hailing adopted varies largely in relation to the visual proximity of the hailer to the taxi, and to the speed at which the vehicle is passing [13]. When the driver and the hailer are within range of eye contact, the hailer “can use any waving or beckoning gestures to communicate his intention, such as raising one’s hand, standing on the curb while sticking arms out sideways into the view of the oncoming driver” [13], etc. However, if the hailer and the driver are too far from each other, “hailers need to make clear that they are hailing a cab as opposed to waving to a friend, checking their watch, or making any number of other gestures which similarly involve one’s arm” [13]. 
Taxi drivers who participated in the survey specified that the best and clearest gesture, in this case, is the “Statue of Liberty”, where the “hailer stands on the curb, facing oncoming traffic, and sticks the street-side arm stiffly out at an angle of about 100–135 degree” [13]. However, taxi drivers pointed out that there are many other hailing gestures, and they may even depend on the hailers’ cultural backgrounds. Similarly, the conceptual model of human–taxi street-hailing interaction proposed in [14] assumed that, depending on the distance between taxis and passengers, different hailing gestures can be used, such as waving or nodding.

Both works stressed that passengers need to use explicit gestures in order to communicate their desire to ride a taxi to the drivers. The explicit gestures may vary according to the proximity between the passengers and the taxis, as well as according to the cultural background, but hand waving remains the most common hailing gesture. Nevertheless, in many real cases, and for different reasons, passengers may not be able to make explicit distinctive gestures. For example, a person who is standing on the curb of the street, holding bags in both hands and looking towards vehicles could be a potential taxi hailer. This could be confirmed by real-life observations of several cases, where vacant taxi drivers slow down after perceiving pedestrians standing/walking on the roadside, even though these pedestrians did not make any explicit hailing gestures. This is simply because taxi drivers consider these pedestrians to be potential street-hailing passengers, and they slow down to confirm or dispel their intuition. Consequently, there is a need to propose a method that can detect both explicit and implicit taxi street-hailing behavior from traffic scenes. For this purpose, we propose to combine both visual and contextual information in one computer-vision-based approach. To the best of our knowledge, the integration of visual and contextual information for the detection of both explicit and implicit street-hailing behavior has not been the main subject of any previous research work.

3. Visual and Contextual Information

One of the main concerns that motivated our work is how taxi drivers recognize street hailing. To address this, we designed a survey that we used to interview a group of 50 experienced taxi drivers in the city of Tunis, the capital of Tunisia. Our initial results showed that, in general, taxi drivers use two types of information: visual and contextual.

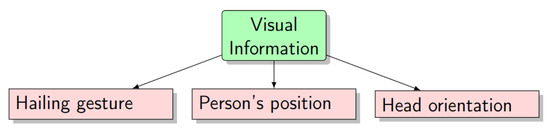

The visual information refers to the aspects that a taxi driver can visually verify to recognize any explicit taxi street-hailing scene while he is cruising the road network. All taxi drivers agreed that an obvious street-hailing scene is composed of a person who is standing on the roadside, looking towards the taxi and waving with his/her hand. Consequently, we decomposed the visual information of a street-hailing scene into three elements: (1) the presence of the hailing gesture, (2) the position of the person relative to the road, and (3) the orientation of the person’s head (Figure 1). The combination of these three elements allows for the estimation of the confidence of street-hailing detection. For example, a person who is standing far from the roadside, waving his/her hand and looking in the opposite direction of the taxi would have a low probability of corresponding to a street-hailing case. However, if the person is looking towards the taxi, the probability of having a street-hailing case will increase.

Figure 1. Visual information.

In case all three visual information elements are present, the scene is automatically interpreted as an explicit street-hailing case, and there is no need to check other types of information. However, if one of the three visual information elements is not detected, there is a need to evaluate other pieces of information (that are not visual) in order to evaluate the presence of street hailing. We collectively refer to these non-visual pieces of information as contextual information.

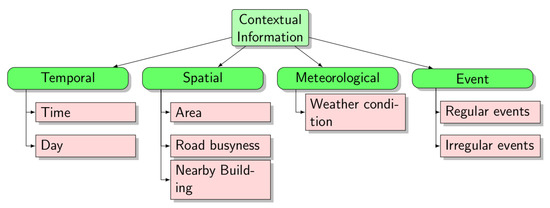

Contextual information corresponds to a kind of external knowledge (external to the visual hailing scenes) that taxi drivers often use to recognize implicit street-hailing scenes, i.e., cases where passengers do not use explicit hailing gestures. In order to identify the list of potential contextual information elements, we started by reviewing related works that studied the strategies used by taxi drivers to search for potential passengers [40,41,42,43,44]. We also reviewed some works about the factors that affect the variability and prediction of taxi demand [45,46,47,48]. We assumed that these factors could be good candidates for contextual information. For example, authors in [46] concluded that there exists a correlation between taxi demand prediction and temporal, spatial, weather and special events factors. The list of contextual information elements that we compiled from the state of the art is illustrated in Figure 2. The taxi drivers that we interviewed collectively confirmed the same aspects.

Figure 2. Contextual information.

Based on our survey, we concluded that taxi drivers recognize street hailing using both visual and contextual information according to a two-step reasoning process. In the first step, they evaluate the existence of the three elements of the visual information, i.e., the hailing gesture, the person’s relative position to the road and the head orientation. If the three elements are present, an explicit street-hailing case is automatically detected, and there is no need to use further information. However, if one or more elements of the visual information is/are not confirmed, a second step is required, which consists of evaluating elements of contextual information to have better judgment. In case enough elements of contextual information are detected, an implicit street-hailing case is detected; otherwise, no street hailing is recognized.

In this paper, we propose to implement a computer-vision-based pipeline for taxi street-hailing detection that mimics the two-step reasoning process used by taxi drivers. We believe that it is important to consider both explicit and implicit street-hailing cases. Explicit cases can be relatively well described using the three elements of information that we identified. Implicit cases are more challenging to detect, and we believe that the use of contextual information could be helpful. This could accommodate real situations, where passengers are not able to make explicit hailing gestures (for any reason) but still desire to hail a taxi. In the next section, we present our proposed computer-vision-based method for the detection of both explicit and implicit taxi street-hailing cases.

4. Proposed Method

In this section, we present the new method that we propose to recognize street-hailing cases from video sequences. The method comprises three stages. The first stage consists of detecting the objects of interest (persons and road segments) from the sequences. The second stage consists of detecting the visual information components presented in the previous section, i.e., the hailing gesture, the position of the detected person(s) relative to the road, and the orientation of the potential passengers’ heads. The third stage consists of the integration of the contextual information, if needed, in order to have a final decision about whether street hailing is detected or not.

4.1. First Stage: Detection of Objects of Interest

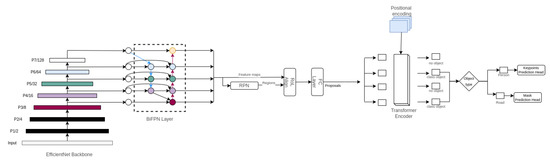

The first stage consists of detecting the persons and the road segments from the video data, and is implemented as a neural network architecture (Figure 3) that takes an image (frame) as input and returns the road segmentation mask and the persons’ keypoints as output. It is an end-to-end model that extracts the keypoint masks for persons and the mask map for the road. The architecture that we used is based on the transformer-based set prediction with RCNN (TSP-RCNN) proposed by [49], which solves the slow convergence problem of the detection transformers (DETR) [50].

Figure 3. Network architecture of the first stage.

In the following, we present each architectural choice of the proposed method TSP-RCNN and we explain how we adapt it to our requirements.

Elharrous and his team [51] presented a comprehensive study comparing the most efficient and accurate feature extractors across deep learning tasks, including computer vision tasks. EfficientNet-B3 + BiFPN [52] and ResNet-50 + FPN are two of the most widely used backbones. Of the two, EfficientNet-B3 + BiFPN performs better in terms of precision, computational cost, and inference latency. Since our project requires detecting both large-scale objects (roads) and small objects (crowded persons), we opted for the EfficientNet-B3 backbone with bi-directional FPN (BiFPN), which provides a feature extraction procedure that extends to both small and large objects.

The proposed method is based on TSP-RCNN [49], which combines an RCNN mechanism with a transformer decoder to generate the object classes and boxes. We change the backbone to EfficientNet-B3 + BiFPN, based on the EfficientDet architecture, and add a separate head for each of the detection and segmentation tasks.

4.2. Second Stage: Extraction of the Visual Information

After extracting the persons and road segments in the first stage, the second stage consists of estimating each person’s position, head direction, and hailing gesture from consecutive frames.

4.2.1. Person’s Position Estimation

In general, a person’s relative position estimation is not trivial. However, after the extraction of the persons and road segments in the first stage, we can estimate if a detected person is located on the side of the road, on the road, or away from both.

Let $K = \{k_1, \dots, k_n\}$ be a set of n keypoints of a given person, where each $k_i = (x_i, y_i)$ represents the point’s position in an image of width w and height h ($0 \le x_i < w$, $0 \le y_i < h$).

The standing position of the person is modeled as a circle with radius r and origin I. The circle’s origin I is the point of the detected person that is closest to the ground. The distance between the person’s two farthest keypoints gives the circle’s diameter 2r, which also serves as an indication of the person’s distance from the camera.

Let us consider $R = \{p_1, \dots, p_m\}$, a set of m points (pixels) of the detected road, where each $p_j = (x_j, y_j)$ represents the pixel position in an image.

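The detected person is then classified as being on the road, on the side of the road, or away from both, according to where the standing circle lies relative to the road pixels in R.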

We assume that a person who is looking for a taxi should be very close to the road. Consequently, we interpret a detected person as a potential street hailer if they are not very far from the road.
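As a rough illustration of this position estimate, the sketch below builds the standing circle from a person's keypoints and compares it with the road pixels. The function names and the decision rule (on the road if the circle's origin is a road pixel, on the roadside if some road pixel lies within one radius of the origin) are assumptions made for illustration; they are not taken from the method described here.

```python
import math


def standing_circle(keypoints):
    """Return (origin, radius) of the standing circle for a list of (x, y) keypoints.

    Image coordinates are assumed, with y growing downward, so the keypoint
    closest to the ground is the one with the largest y.
    """
    origin = max(keypoints, key=lambda p: p[1])
    # The diameter is the distance between the two farthest keypoints.
    diameter = max(math.dist(p, q) for p in keypoints for q in keypoints)
    return origin, diameter / 2.0


def classify_position(keypoints, road_pixels):
    """Classify a person as 'on_road', 'roadside' or 'away' (assumed rule)."""
    origin, radius = standing_circle(keypoints)
    road = set(road_pixels)
    if not road:
        return "away"
    if (round(origin[0]), round(origin[1])) in road:
        return "on_road"
    # Assumption: roadside means a road pixel lies within one radius of the origin.
    nearest = min(math.dist(origin, p) for p in road)
    return "roadside" if nearest <= radius else "away"
```

Because the radius scales with the person's apparent size, this assumed rule tolerates a larger pixel gap for people close to the camera than for people far away.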

4.2.2. Person’s Head Direction

Let us consider the set $\{A, B, C\} \subset K$, with K being the set of n keypoints of a given person defined in Section 4.2.1, where A, B and C respectively correspond to the left eye, right eye, and nose if detected, and otherwise to the right shoulder, the left shoulder, and head if detected.

The head direction is a vector defined as follows:
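For illustration only, one plausible construction of such a vector (an assumption, not necessarily the definition used in this method) takes the direction from the midpoint of A and B to C and normalizes it. The function name `head_direction` is hypothetical.

```python
import math


def head_direction(a, b, c):
    """Unit vector from the midpoint of A and B to C (assumed construction).

    a, b, c are (x, y) keypoints, e.g. left eye, right eye and nose.
    Returns (0.0, 0.0) when C coincides with the midpoint.
    """
    mid_x, mid_y = (a[0] + b[0]) / 2.0, (a[1] + b[1]) / 2.0
    dx, dy = c[0] - mid_x, c[1] - mid_y
    norm = math.hypot(dx, dy)
    if norm == 0:
        return (0.0, 0.0)
    return (dx / norm, dy / norm)
```

Under this assumption, the horizontal component of the returned vector indicates whether the head is turned toward the left or the right of the image.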

4.2.3. Person Tracking and Hailing Gesture Detection

Let us recall that the input of our method is a video sequence composed of a set of frames. Hailing is a dynamic scene in which the same person performs waving gestures over a consecutive set of frames. Consequently, before we detect the waving gesture, we need to track the same person(s) over the sequence of frames using object-tracking techniques. In our method, we implemented object tracking by simply checking whether a person in frame $t$ has a corresponding element in frame $t-1$, using the Hungarian algorithm. The assignment scores for the Hungarian algorithm are given by the IoU (intersection over union), meaning that if a bounding box overlaps with one from the previous frame, we conclude that it probably belongs to the same person.
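The IoU-based matching can be sketched as follows. To keep the example dependency-free, the optimal assignment is found by exhaustive search, which gives the same optimum as the Hungarian algorithm but is only practical for a handful of people; in practice a solver such as `scipy.optimize.linear_sum_assignment` would normally be used. The function names and the `min_iou` threshold are hypothetical.

```python
from itertools import permutations


def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_persons(prev_boxes, curr_boxes, min_iou=0.3):
    """Return {current_index: previous_index} maximizing the total IoU.

    Pairs whose IoU is below min_iou (an assumed threshold) are dropped,
    so unmatched current boxes can be treated as newly appeared persons.
    """
    n_prev, n_curr = len(prev_boxes), len(curr_boxes)
    if n_prev == 0 or n_curr == 0:
        return {}
    scores = [[iou(c, p) for p in prev_boxes] for c in curr_boxes]
    if n_curr <= n_prev:
        candidates = (list(zip(range(n_curr), perm))
                      for perm in permutations(range(n_prev), n_curr))
    else:
        candidates = (list(zip(perm, range(n_prev)))
                      for perm in permutations(range(n_curr), n_prev))
    best_total, best_pairs = -1.0, []
    for pairs in candidates:
        total = sum(scores[c][p] for c, p in pairs)
        if total > best_total:
            best_total, best_pairs = total, pairs
    return {c: p for c, p in best_pairs if scores[c][p] >= min_iou}
```

For example, two people who each shift by one pixel between frames keep their identities even if the detector returns them in a different order.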

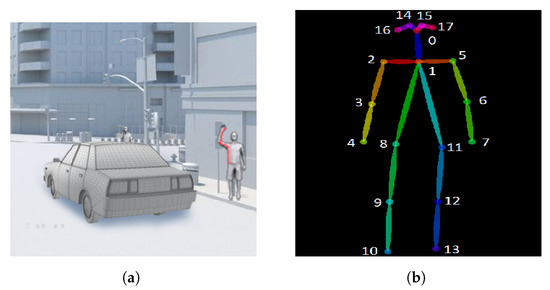

To detect the hailing gesture, we analyzed multiple videos of a person performing hand-hailing movements. After running several hailing examples, we noticed that the following four key points can characterize the specific hand movement: shoulder, elbow, wrist, and hip.

Figure 4a illustrates the area we focus on during the hailing detection, and Figure 4b shows the key points map of a human body and their respective identifiers.

Figure 4. Hailing example showing (a) focus key points and (b) human body key points.

Finally, we proposed a key points angle approach defined as follows:

- Let A, B, C, and D be the key points for the shoulder, elbow, wrist, and hip, which correspond to key points 2, 3, 4, and 8 in Figure 4b for the right side and to key points 5, 6, 7, and 11 for the left side.

- We define $\theta_1$ as the angle between hip, shoulder and elbow, so $\theta_1 = \angle(D, A, B)$.

- We define $\theta_2$ as the angle between shoulder, elbow, and wrist, so $\theta_2 = \angle(A, B, C)$, where the angle between three points $P_1 = (x_1, y_1)$, $P_2 = (x_2, y_2)$ and $P_3 = (x_3, y_3)$ in 2-dimensional space, measured at the vertex $P_2$, is defined as follows:

- $\angle(P_1, P_2, P_3) = \operatorname{arctan2}(y_3 - y_2,\ x_3 - x_2) - \operatorname{arctan2}(y_1 - y_2,\ x_1 - x_2)$, where arctan2 is the element-wise arc tangent of $y/x$ choosing the quadrant correctly.

Following this strategy, we measure the two angles in each frame of a clip of a hailing person twice: once for the right arm and once for the left arm.
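The key points angle approach can be sketched as below, assuming the usual arctan2-based angle at the middle point and a result normalized to the range [0, 360) degrees. The normalization and the function names are assumptions made for illustration.

```python
import math


def angle_between(p1, p2, p3):
    """Angle in degrees at vertex p2 between the rays p2->p1 and p2->p3.

    The result is normalized to [0, 360); points are (x, y) tuples.
    """
    a1 = math.atan2(p1[1] - p2[1], p1[0] - p2[0])
    a3 = math.atan2(p3[1] - p2[1], p3[0] - p2[0])
    return math.degrees(a3 - a1) % 360.0


def arm_angles(shoulder, elbow, wrist, hip):
    """Return the (hip-shoulder-elbow, shoulder-elbow-wrist) angles for one arm."""
    return (angle_between(hip, shoulder, elbow),
            angle_between(shoulder, elbow, wrist))
```

Calling `arm_angles` once with the right-side key points and once with the left-side key points in every frame yields the two angle series per arm whose variation is analyzed next.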

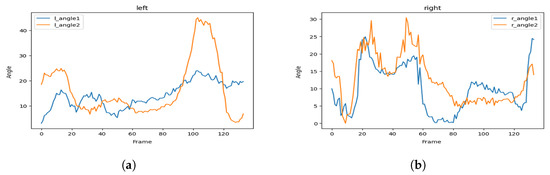

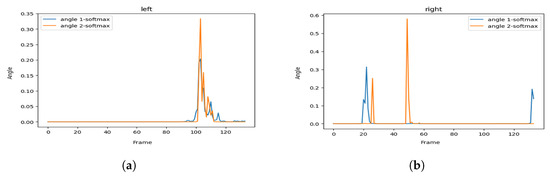

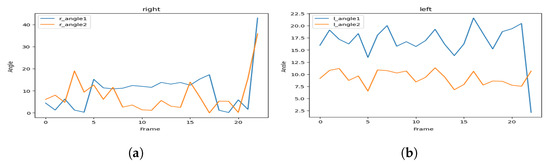

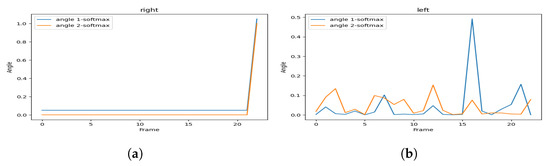

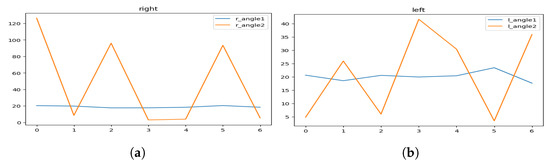

Figure 5 represents the visualization of the variation of the angles, and Figure 6 represents the visualization of the variation of the angles after applying soft-max in order to smooth it.

Figure 5. Hailing example: angles variation of (a) the left side and (b) the right side.

Figure 6. Hailing example: smoothed (soft-max) angles variation for (a) the left side and (b) the right side.

As we can see, the hailing movement becomes very clear once the variation of the angles is smoothed. On the left side (Figure 6a), we can identify a hailing movement between the 90th frame and the 110th frame. By applying the same logic, we can identify a hailing movement on the right side between the 15th frame and the 30th frame.

4.3. Final Stage: Scoring and Hailing Detection

In this section, we explain how street-hailing cases are detected based on a scoring scheme that integrates the extracted visual information with the contextual information.

As we explained in Section 3, the interviews of taxi drivers show that street hailing can be explicit or implicit. Explicit hailing is mainly detected based on the visual information (a person waving on the road to stop a taxi); otherwise, implicit hailing is evaluated relying on contextual information. Consequently, we designed the final stage of street-hailing detection using the following decision flow:

- (1) Elements of the explicit street hailing are evaluated first, and if they are all detected, a street-hailing case is recognized.

- (2) If one or more elements of the visual information is/are not detected, contextual information is used in order to evaluate the existence of implicit street hailing.

The decision flow is implemented using the following scoring scheme relying on two main components: visual and contextual information.

Visual information:

The three components of the visual information are scored as follows:

- Hailing gesture is scored as $s_g$ if detected and zero otherwise.

- The score of the standing position, $s_p$, takes one value if the person is on the side of the road, another value if the person is close to the roadside (e.g., on the road), and zero otherwise.

- The score of the head direction is $s_d$ if the person is looking toward the road or the taxi and zero otherwise.

The variables $s_g$, $s_p$, and $s_d$ represent a scoring system of 100 points, in which, if any one of these components is absent, the score never reaches 90, and hence the contextual information is used for further evaluation.

Contextual information:

- Spatiotemporal information: can go as high as 60 points in the case of a high-demand area and time, and as low as zero otherwise.

- Meteorological information: can go as high as 30 points in the case of very bad weather, and as low as zero otherwise.

- Event information: a fixed score if there is an event at the corresponding place and date, and zero otherwise.

The scoring system works as follows:

- The maximum score is 100 points.

- If the visual information reaches 90 points or more, the person is classified as a street hailer, and no contextual information is used.

- Otherwise, the contextual information is applied as a percentage of the difference between 100 and the visual information points; e.g., if the visual information is 60 points and the contextual information is 50 points, the score is $60 + (100 - 60) \times 50\% = 80$ points.
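The combination rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `combine_scores` and its parameter names are hypothetical, both inputs are assumed to be already expressed on a 0 to 100 scale, and the individual component values are left to the caller.

```python
def combine_scores(visual: float, contextual: float,
                   visual_threshold: float = 90.0) -> float:
    """Combine a visual and a contextual score, both on a 0-100 scale.

    Hypothetical helper: the name and signature are illustrative only.
    """
    if not (0 <= visual <= 100 and 0 <= contextual <= 100):
        raise ValueError("scores must lie in [0, 100]")
    # Explicit hailing: the visual evidence alone is sufficient,
    # so the contextual information is not used.
    if visual >= visual_threshold:
        return visual
    # Implicit hailing: the context fills a percentage of the gap
    # between the visual score and the 100-point maximum.
    return visual + (100.0 - visual) * contextual / 100.0


print(combine_scores(60, 50))  # 80.0
```

Because the contextual score only scales the remaining gap, the combined score can never exceed 100 points, and a zero contextual score leaves the visual score unchanged.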

5. Dataset and Experimental Settings

We implemented the proposed method in Python using the PyTorch deep learning library, the OpenCV computer vision library, and NumPy. Python was selected because it is well suited to deep learning and computer vision tasks, is supported on multiple platforms and is capable of handling real-time computer vision tasks.

5.1. Datasets

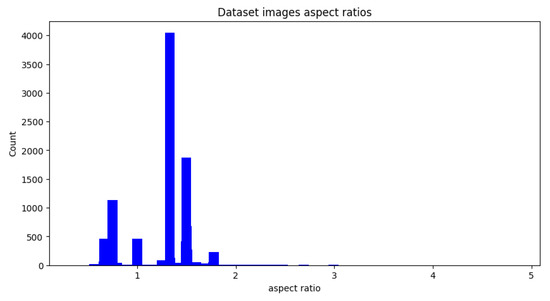

For the training part, we used the Common Objects in Context (COCO) dataset [53], a large-scale object detection, segmentation, and captioning dataset that is the benchmark for most state-of-the-art methods in various computer vision tasks. Since our task is not a general-purpose detection/segmentation task, we sub-sampled the COCO-stuff dataset into 15,402 images containing the road and person categories. We found that the average height and width of the images are 477.23 and 587.85 pixels, respectively (Figure 7). The resulting average aspect ratio (width/height) is 1.23, which is compatible with a non-destructive resize followed by padding to bring our images to the input size expected by our backbone.

Figure 7.

Data set aspect ratio.
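As an illustration of the non-destructive resize with padding mentioned above, the following sketch computes the scale factor and the padding needed to fit an image into a square input while preserving its aspect ratio. The function name `letterbox_params` and the target size of 512 are assumptions made for this example only; the input size actually used by the backbone is not stated here.

```python
def letterbox_params(width: int, height: int, target: int) -> dict:
    """Fit a (width, height) image into a target x target square.

    The image is scaled uniformly (no distortion) and the remaining
    area is split into padding on both sides of the shorter axis.
    """
    scale = min(target / width, target / height)
    new_w = round(width * scale)
    new_h = round(height * scale)
    pad_w = target - new_w
    pad_h = target - new_h
    return {
        "scale": scale,
        "resized": (new_w, new_h),
        # Split the padding as evenly as possible between the two sides.
        "pad_left": pad_w // 2,
        "pad_right": pad_w - pad_w // 2,
        "pad_top": pad_h // 2,
        "pad_bottom": pad_h - pad_h // 2,
    }


# An image close to the average size reported above (588 x 477 pixels),
# fitted into a hypothetical 512 x 512 input.
params = letterbox_params(588, 477, target=512)
print(params["resized"], params["pad_top"], params["pad_bottom"])
```

With an aspect ratio close to 1.23, only a moderate band of padding is needed above and below the image, which is why this approach loses little usable input area.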

For the test dataset, we opted to mix (1) the previously presented COCO dataset, excluding the part we used for the training, and (2) a set of images that we filmed by ourselves on the roads of Tunis city, Tunisia.

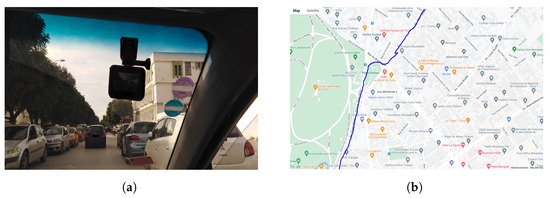

We mounted a GoPro Hero 10 camera on the windshield of a probe taxi, on the passenger side, facing the road and the sidewalk at the same time to capture pedestrians and roads, as shown in Figure 8a. The recording process took place at different times and locations in the Tunisian capital with a constant resolution of 1080 × 1920 at 120 frames per second. The purpose was to capture different scenes at different spatial–temporal moments corresponding to both high and low taxi street demand (crowded vs. uncrowded areas).

Figure 8.

Recording settings showing (a) the camera mounting and (b) an example of position recording.

The data collection trips were tracked using a GPS-tracking mobile application, so all the collected video sequences are time- and GPS-stamped (Figure 8b). The GPS trajectories were then augmented with other contextual information using Google Maps, such as temperature, the busyness of the roads and special events, if any.

Finally, we filtered the collected data, and we selected only the best clips from the recorded videos. We obtained about 3 h of good-quality clips that we used as a test dataset. More data are being collected to build a complete dataset that can be used for training, validation, and testing.

Figure 9 shows a collection of images that we captured: Figure 9a shows an area with high taxi demand (Beb Alioua, Tunis) with many people and cars; Figure 9b shows the same area but at a different location with fewer cars and people; Figure 9c shows an area with high demand (Monplaisir, Tunis) but in a secondary road such that we have many cars and no people; and Figure 9d shows very visible people and far away cars. The objective is to capture different situations that will help us evaluate our model in extracting many anchor sizes for persons and roads and to make sure it will work in various scenarios.

Figure 9.

Examples from the test dataset showing different situations of taxi street-hailing contexts including (a) a crowded area, (b) an area with few cars and people, (c) a secondary road with no people and (d) an area with many people and far-away cars.

5.2. Experiment Settings

For the training parameters, we followed the same training process as the TSP-RCNN method [49], since it is our base model.

As mentioned in Section 4.1, we used the EfficientNet-B3 backbone with a weighted bi-directional feature pyramid network (BiFPN) as described in [52], where the output of the BiFPN is passed to the RPN, which selects 700 scored features. The RoI Align operation and a fully connected layer were applied to extract the proposed features from the RoIs. We used a 6-layer transformer encoder of width 512 with 8 attention heads. The hidden size of the feed-forward network (FFN) in the transformer was set to 2048. Finally, for the detection heads, we used the default training parameters in Detectron2 [54], as we used the default mask head for road segmentation and the pre-trained keypoints head for keypoints detection.

Unlike the multi-task end-to-end networks, e.g., HybridNets [55] and YOLOP [56] which use Dice and Focal losses, we used only Focal loss, as it is an object detection problem for the first stage of the network.

Following TSP-RCNN, we used AdamW to optimize the transformer, and SGD with a momentum of 0.9 to optimize the other parts with a 3× scheduler.

As evaluation metrics, we used the average precision (AP) and recall IoU (%) as primary metrics and FLOPs for the number of computations. All are provided by Detectron2.

6. Experimental Results and Discussion

In order to evaluate the performance of the detection of objects of interest, we followed the same training protocol used in the TSP-RCNN model, which is described in [49], and we utilized a combination of L1 and Generalized IoU losses for regression. Focal loss is used for weighting positive and negative examples in classification for both TSP-FCOS and TSP-RCNN.

To evaluate the performance of our proposed method for street taxi-hailing detection, we calculated the accuracy, precision, recall and specificity metrics based on the calculated values of true positive (TP), true negative (TN), false positive (FP), and false negative (FN) rates, as follows:

- Accuracy: It measures the overall correctness of the model’s predictions and is defined by the following:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

- Precision: It measures the proportion of positive predictions that are correct, and is defined by

$$\text{Precision} = \frac{TP}{TP + FP}$$

- Recall (also known as sensitivity): It measures the proportion of actual positive cases that were correctly identified by the model. It is defined by

$$\text{Recall} = \frac{TP}{TP + FN}$$

- Specificity: It measures the proportion of actual negative cases that were correctly identified by the model. It is defined by the ratio

$$\text{Specificity} = \frac{TN}{TN + FP}$$
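The four metrics listed above follow directly from the raw TP, TN, FP and FN counts. The sketch below shows one way to compute them; the helper name `classification_metrics` and the counts in the usage line are hypothetical and are not the values obtained in our experiments.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Compute accuracy, precision, recall and specificity from counts."""

    def ratio(num: int, den: int) -> float:
        # Return NaN instead of raising when a denominator is empty,
        # e.g. precision when no positive prediction was made.
        return num / den if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }


# Hypothetical counts, used only to exercise the function.
print(classification_metrics(tp=8, tn=6, fp=2, fn=4))
```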

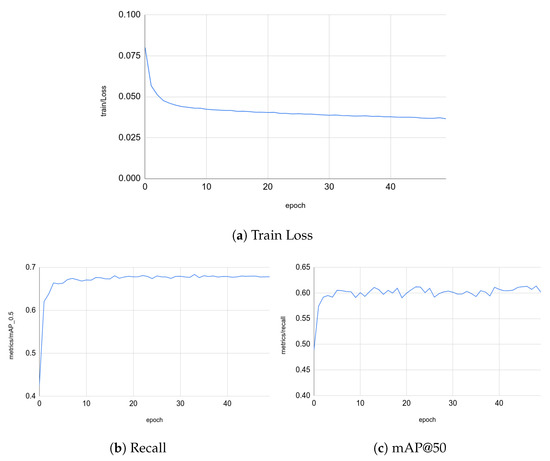

6.1. Detection of Objects of Interest

With respect to the results of this training process, we assessed only the accuracy of the bounding boxes at the end of the transformer encoder since we are using a pre-trained keypoints head. As illustrated in Figure 10, the precision is 68%, and the recall is 60%. These values are acceptable with respect to the COCO dataset [57].

Figure 10.

Training results.

Figure 11 illustrates the results of two different examples of the road-segmentation tasks. The image highlights the pixels of the road and manages to distinguish the road from the sidewalk. However, the segmentation does not extend to the far-away road, which will not affect our detection since the proposed method works on video streams and the far-away road will eventually become the up-front road for the recording camera.

Figure 11.

Road segmentation results of (a) example 1 and (b) example 2.

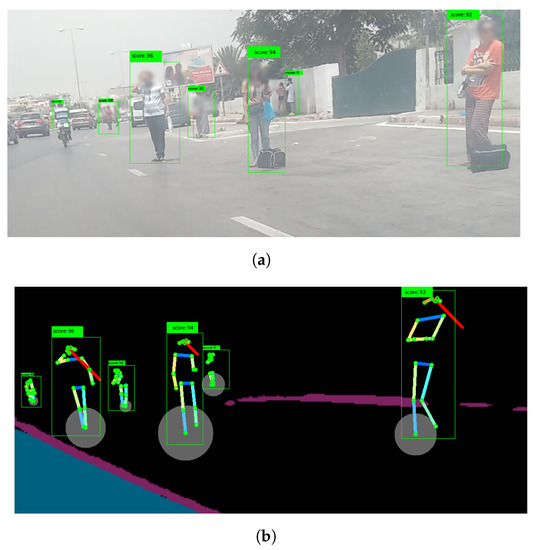

Lastly, Figure 12 illustrates an example of human keypoints detection results. Our model succeeds in detecting the keypoints of a crowded area (people very close to each other) and since the keypoints detection plays a major role in the second stage, we used a higher confidence level of 70%.

Figure 12.

People keypoint detection.

6.2. Detection of Street Hailing

In our proposed architecture, we used an unsupervised method for the detection of street hailing, which does not require a training process. However, given that we used a dataset that we collected as a test set, we evaluated the performance of the overall street-hailing detection process (including both explicit and implicit) using true positive (TP), true negative (TN), false negative (FN) and false positive (FP) metrics. Once a street-hailing detection was carried out, we evaluated the ability of the proposed method to discern the type of hailing (explicit or implicit). In this case, we used only the false negative and true positive metrics since the existence of a street-hailing action was already confirmed. Table 1 reports the accuracy, precision, recall and specificity scores of the obtained results. Our method achieved an accuracy of 80% and, most importantly, a precision and recall of 84%, which implies that the method can correctly, in most cases, identify both explicit and implicit street hailing.

Table 1.

Reported performance results of taxi street-hailing detection.

In the following examples, we depict four scenarios corresponding to explicit street hailing, implicit street hailing, no street hailing, and undetected street hailing, respectively. Each of these examples illustrates qualitatively the performance of the proposed method in various contexts.

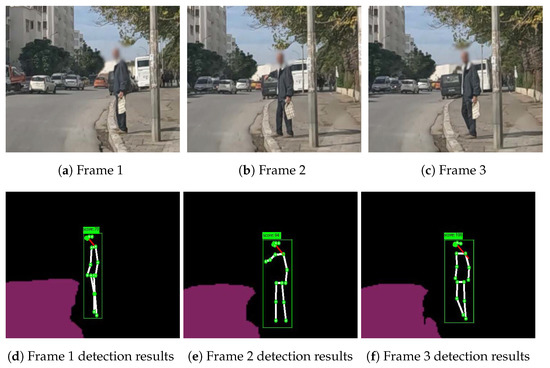

6.2.1. Example 1: Explicit Street Hailing

In Figure 13, we illustrate an example of an explicit hailing scenario captured from a 20-frame scene (we display only 3 due to the page width limit). Figure 14 displays the angle variation of the person performing the hailing without smoothing out the values, and Figure 15 illustrates the same variation with soft-max as the smoothing function.

Figure 13.

Example 1: Explicit hailing.

Figure 14.

Non-smoothed angles variation of (a) the right side and (b) the left side.

Figure 15.

Smoothed (soft-max) angles variation of (a) the right side and (b) the left side.

These visualizations show a simultaneous peak (Figure 15a) in the tracked angles between the shoulder, elbow, wrist, and hip, similar to the one in Figure 6a. In most cases, such a peak corresponds to a hand-waving action (which can be interpreted as a hailing action), and it differs from the pattern observed in the next example.
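To make the notion of a simultaneous peak more concrete, the following sketch computes a joint angle from three 2D keypoints and checks whether two smoothed angle series peak at nearly the same frame. It is a simplified illustration and not the exact procedure of our pipeline: the function names are hypothetical, a centered moving average stands in for the smoothing function, and the `tolerance` and `min_rise` values are arbitrary assumptions.

```python
import math


def joint_angle(a, b, c) -> float:
    """Angle in degrees at keypoint b, formed by segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        return 0.0
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to [-1, 1] to guard against floating-point drift.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def moving_average(values, window: int = 5):
    """Centered moving average (assumed stand-in for the smoothing step)."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        chunk = values[max(0, i - half): i + half + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


def simultaneous_peak(elbow_angles, shoulder_angles,
                      tolerance: int = 3, min_rise: float = 20.0) -> bool:
    """True if both smoothed series rise clearly and peak at close frames."""
    e = moving_average(elbow_angles)
    s = moving_average(shoulder_angles)
    peak_e = max(range(len(e)), key=e.__getitem__)
    peak_s = max(range(len(s)), key=s.__getitem__)
    both_rise = (e[peak_e] - min(e) >= min_rise
                 and s[peak_s] - min(s) >= min_rise)
    return both_rise and abs(peak_e - peak_s) <= tolerance


# Elbow angle from hypothetical shoulder, elbow and wrist keypoints.
print(joint_angle((1.0, 0.0), (0.0, 0.0), (0.0, 1.0)))
```

In this sketch, a raised hand that stays still, such as a hand resting on the head, would typically produce a rise in only one of the two series, so the simultaneity check would fail.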

Table 2 illustrates the score of the visual information corresponding to the explicit hailing case, which is 100 points, given that all the elements of visual information are detected. Consequently, the person is flagged as a street-hailing case and no contextual information is used (Figure 13d–f).

Table 2.

Visual information score of the explicit hailing scene in Figure 13.

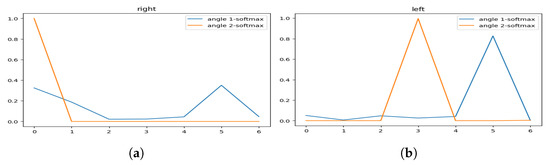

6.2.2. Example 2: Implicit Street Hailing

Figure 16a illustrates an example of a potential implicit street-hailing scene. We can recognize three people close to the taxi’s camera, all of them in an idle position, standing by the roadside. The front two people present no hand movement, but the third shows a hand movement (compared to previous frames) which is not recognized as a hailing action because his hand is on his head. As shown in Figure 17 and Figure 18, for the person resting his hand on his head (left), there is no simultaneous peak in the angle variation, even though the action is similar to hand waving or hand hailing.

Figure 16.

Example 2: Implicit hailing illustrating (a) a scene and (b) its visual detection.

Figure 17.

Example 2: Non-smoothed angles variation of (a) the right side and (b) the left side.

Figure 18.

Example 2: Smoothed (soft-max) angles variation of (a) the right side and (b) the left side.

Table 3 illustrates the obtained visual and contextual information scores corresponding to the case illustrated in Figure 16 (the person at the left). The visual information score does not reach the 90-point threshold, and therefore, contextual information is used. Given that the scene was captured in Beb Alioua (an area with high taxi demand) at 11:00 a.m. (peak time) and with a temperature of 35 °C in the summer, the score of the contextual information is 90 points as shown in the same table. The final score is then computed as described in Section 4.3, and the person is considered a street-hailing passenger.

Table 3.

Visual and contextual information scores of the implicit hailing scene in Figure 16.

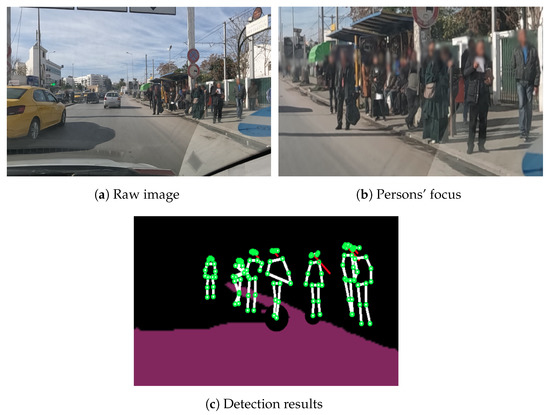

6.2.3. Example 3: No Street Hailing

In the example illustrated in Figure 19, we present a situation of no street hailing. It is a crowded area, but there is no overlap with people. Individuals are accurately detected, and after tracking each one, we can see that few of them make good candidates for taxi street hailing from the visual information perspective (the people are sitting, not looking at the road/taxi, etc.). The contextual information also does not reveal a sufficient score since this is a bus station where passengers are waiting for the bus. Consequently, no street hailing is detected.

Figure 19.

Example 3: No street hailing case illustrating (a) a raw image, (b) the persons’ focus and (c) the corresponding detection results.

Table 4 illustrates the obtained visual and contextual information scores corresponding to the case illustrated in Figure 19. The visual score for the persons in this scene is 30 points for the standing position, 10 points for the head direction, and 0 for the hailing gesture, with a total of 40 points. The contextual information score has 30 points for the spatial–temporal parameter given that the time corresponds to a peak interval but the location corresponds to a low-demand area. The weather information achieves 10 points because of the heat, while no event is detected in the area. The contextual information score is therefore equal to 40 points, and, following the scoring scheme of Section 4.3, the final score is $40 + (100 - 40) \times 40\% = 64$ points. Consequently, no street hailing is detected.

Table 4.

Visual and contextual information scores of the no-street-hailing scene in Figure 19.

6.2.4. Example 4: Undetected Street Hailing

In the example illustrated in Figure 20, we present another scenario, where our method fails to detect a hailing case. As illustrated in Figure 20a,b, the frames in the observed scene show a crowded environment with overlapping between the pedestrians and vehicles, which hides crucial body parts required for the keypoints detection. Therefore, the accurate tracking of keypoints is impeded. This scenario illustrates the limitations of using pure computer vision methods for the detection of street hailing.

Figure 20.

Example 4: Undetected street hailing illustration with 2 frames (a,b).

6.3. Discussion

As we previously mentioned, taxi street hailing has been explored to a very limited extent in the state of the art. To the best of our knowledge, the only automated solution for taxi street-hailing recognition is the computer-vision-based approach proposed in [15] to detect car street-hailing intentions from individual images. Even though we share the common motivation that computer-vision-based car-hailing recognition is more convenient for autonomous taxis than traditional online booking methods, our approach is fundamentally different with respect to two main perspectives. The solution proposed in [15] detects the street-hailing intention from individual images by fusing two main elements: (1) the hailing pose of the person and (2) the attention of the person to the taxi (expressed by the eyes’ orientation towards the taxi). In simple words, a person who is making the hailing pose and looking towards the taxi potentially has the intention of hailing. By considering only individual images, the temporal dynamic hailing scenes (such as waving hands) are not considered, and this is the reason why the authors talk about the hailing pose (the famous “Statue of Liberty” pose) and not hailing gestures. Our solution, in contrast, analyzes a temporal sequence of images (frames) in order to detect both explicit and implicit hailing scenes. Explicit hailing is decomposed into three visual components, which are the person’s hailing gestures, their relative position to the road, and head orientation. Implicit hailing can be recognized using other contextual information (other than the visual elements). Our solution is also different regarding the perception of the hailing concept. While the work in [15] analyzes car hailing from a computer vision perspective only, our work is inspired by previous studies of taxi hailing as a social interaction between taxi drivers and passengers.
This is why we believe that our solution can be easily integrated into a more complete framework of interaction between autonomous taxis and passengers, where hailing is only an aspect of the interaction [14]. Overall, the proposed method yields satisfactory results in relatively realistic settings. However, we noticed that the street-hailing detection fails when the observed scene presents overlapping between vehicles and pedestrians. When this situation occurs, crucial body parts necessary for the accurate detection of hailing gestures are hidden. Therefore, there is still a need for more adjustments of the detection scheme by incorporating more contextual information and by aggregating observations from other monitoring devices, such as other vehicles driving by, on-site CCTV, etc.

7. Conclusions

In this paper, we proposed a new method for street-hailing detection based on computer-vision techniques augmented with contextual information. Besides the originality of its deep-learning-based pipeline, our method allows for the detection of both explicit and implicit street-hailing scenes by the integration of visual and contextual information in a logic flow based on a defined scoring scheme. The design of the pipeline was inspired by the feedback collected from 50 experienced taxi drivers that we interviewed in order to understand the intuitive way of identifying prospective street-hailing passengers. We experimentally studied our proposed method using a dataset of urban road video scenes that we collected from a real taxi in the city of Tunis, Tunisia. The study showed that the proposed method achieves satisfactory results with respect to the detection of explicit and implicit street-hailing cases.

Nevertheless, there is still room for future improvement. First, we are currently working on further developing the concept of context. A formal definition of the context is required in order to better model, represent and use contextual information for the detection of both explicit and implicit street-hailing behaviors. Second, the context is also fundamental for the implementation of a more extended street-hailing service stage as a social interaction between Robotaxis and passengers. As we mentioned earlier, hailing recognition is only one part of the hailing interaction, and there is a gap in the state-of-the-art of human–autonomous taxi interaction (HATI) with respect to this aspect. Third, we are collecting more data in order to build a complete dataset dedicated to taxi street-hailing scenes in urban environments that we will use to test and improve the performance of our approach. The dataset will be used to automatically learn the weights of the parameters of the scoring scheme from the real data and shall also exhibit more complex hailing behaviors, as well as monitoring settings, such as different weather, lighting and crowd/traffic conditions.

Author Contributions

Conceptualization, Z.B., H.H., M.M., L.H. and N.J.; methodology, M.M., Z.B., H.H., L.H. and N.J.; software, M.M.; validation, Z.B., M.M., H.H. and L.H.; formal analysis, Z.B., M.M., H.H. and L.H.; investigation, H.H. and Z.B.; resources, Z.B. and H.H.; data curation, M.M.; writing—original draft preparation, M.M., H.H., Z.B. and L.H.; writing—review and editing, Z.B. and H.H.; visualization, M.M.; supervision, Z.B., H.H. and L.H.; project administration, H.H. and Z.B.; funding acquisition, H.H. and Z.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially funded by TRC of Oman, grant number BFP/RGP/ICT/21/235.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data used in this work are confidential.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Faisal, A.; Kamruzzaman, M.; Yigitcanlar, T.; Currie, G. Understanding autonomous vehicles. J. Transp. Land Use 2019, 12, 45–72. [Google Scholar] [CrossRef]

- McFarland, M. Waymo to Expand Robotaxi Service to Los Angeles. Available online: https://edition.cnn.com/2022/10/19/business/waymo-los-angeles-rides/index.html (accessed on 4 March 2023).

- CBS NEWS. Robotaxis Are Taking over China’s Roads. Here’s How They Stack Up to the Old-Fashioned Version. Available online: https://www.cbsnews.com/news/china-robotaxis-self-driving-cabs-taking-over-cbs-test-ride (accessed on 11 December 2022).

- Hope, G. Hyundai Launches Robotaxi Trial with Its Own AV Tech. Available online: https://www.iotworldtoday.com/2022/06/13/hyundai-launches-robotaxi-trial-with-its-own-av-tech/ (accessed on 11 December 2022).

- Yonhap News. S. Korea to Complete Preparations for Level 4 Autonomous Car by 2024: Minister. Available online: https://en.yna.co.kr/view/AEN20230108002100320 (accessed on 4 March 2023).

- Bellan, R. Uber and Motional Launch Robotaxi Service in Las Vegas. Available online: https://techcrunch.com/2022/12/07/uber-and-motional-launch-robotaxi-service-in-las-vegas/ (accessed on 4 March 2023).

- npr. Driverless Taxis Are Coming to the Streets of San Francisco. Available online: https://www.npr.org/2022/06/03/1102922330/driverless-self-driving-taxis-san-francisco-gm-cruise (accessed on 11 December 2022).

- Bloomberg. Uber Launches Robotaxis But Driverless Fleet Is ‘Long Time’ Away. Available online: https://europe.autonews.com/automakers/uber-launches-robotaxis-us (accessed on 13 December 2022).

- Cozzens, T. DeepRoute.ai Unveils Autonomous ‘Robotaxi’ Fleet. Available online: https://www.gpsworld.com/deeproute-ai-unveils-autonomous-robotaxi-fleet/ (accessed on 11 December 2022).

- Kim, S.; Chang, J.J.E.; Park, H.H.; Song, S.U.; Cha, C.B.; Kim, J.W.; Kang, N. Autonomous taxi service design and user experience. Int. J. Hum.-Interact. 2020, 36, 429–448. [Google Scholar] [CrossRef]

- Lee, S.; Yoo, S.; Kim, S.; Kim, E.; Kang, N. Effect of robo-taxi user experience on user acceptance: Field test data analysis. Transp. Res. Rec. 2022, 2676, 350–366. [Google Scholar] [CrossRef]

- Hallewell, M.; Large, D.; Harvey, C.; Briars, L.; Evans, J.; Coffey, M.; Burnett, G. Deriving UX Dimensions for Future Autonomous Taxi Interface Design. J. Usability Stud. 2022, 17, 140–163. [Google Scholar]

- Anderson, D.N. The taxicab-hailing encounter: The politics of gesture in the interaction order. Semiotica 2014, 2014, 609–629. [Google Scholar] [CrossRef]

- Smith, T.; Vardhan, H.; Cherniavsky, L. Humanising Autonomy: Where are We Going; USTWO: London, UK, 2017. [Google Scholar]

- Wang, Z.; Lian, J.; Li, L.; Zhou, Y. Understanding Pedestrians’ Car-Hailing Intention in Traffic Scenes. Int. J. Automot. Technol. 2022, 23, 1023–1034. [Google Scholar] [CrossRef]

- Krueger, M.W. Artificial Reality II; Addison-Wesley: Boston, MA, USA, 1991. [Google Scholar]

- Ohn-Bar, E.; Trivedi, M.M. Hand gesture recognition in real time for automotive interfaces: A multimodal vision-based approach and evaluations. IEEE Trans. Intell. Transp. Syst. 2014, 15, 2368–2377. [Google Scholar] [CrossRef]

- Rasouli, A.; Tsotsos, J.K. Autonomous vehicles that interact with pedestrians: A survey of theory and practice. IEEE Trans. Intell. Transp. Syst. 2019, 21, 900–918. [Google Scholar] [CrossRef]

- Holzbock, A.; Tsaregorodtsev, A.; Dawoud, Y.; Dietmayer, K.; Belagiannis, V. A Spatio-Temporal Multilayer Perceptron for Gesture Recognition. arXiv 2022, arXiv:2204.11511. [Google Scholar]

- Martin, M.; Roitberg, A.; Haurilet, M.; Horne, M.; Reiß, S.; Voit, M.; Stiefelhagen, R. Drive&act: A multi-modal dataset for fine-grained driver behavior recognition in autonomous vehicles. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 2801–2810. [Google Scholar]

- Meyer, R.; Graf von Spee, R.; Altendorf, E.; Flemisch, F.O. Gesture-based vehicle control in partially and highly automated driving for impaired and non-impaired vehicle operators: A pilot study. In Proceedings of the International Conference on Universal Access in Human-Computer Interaction, Las Vegas, NV, USA, 15–20 July 2018; pp. 216–227. [Google Scholar]

- Rasouli, A.; Kotseruba, I.; Tsotsos, J.K. Towards social autonomous vehicles: Understanding pedestrian-driver interactions. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 729–734. [Google Scholar]

- Shaotran, E.; Cruz, J.J.; Reddi, V.J. Gesture Learning For Self-Driving Cars. In Proceedings of the 2021 IEEE International Conference on Autonomous Systems (ICAS), Montréal, QC, Canada, 11–13 August 2021; pp. 1–5. [Google Scholar]

- Hou, M.; Mahadevan, K.; Somanath, S.; Sharlin, E.; Oehlberg, L. Autonomous vehicle-cyclist interaction: Peril and promise. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–12. [Google Scholar]

- Mishra, A.; Kim, J.; Cha, J.; Kim, D.; Kim, S. Authorized traffic controller hand gesture recognition for situation-aware autonomous driving. Sensors 2021, 21, 7914. [Google Scholar] [CrossRef] [PubMed]

- Li, J.; Li, B.; Gao, M. Skeleton-based Approaches based on Machine Vision: A Survey. arXiv 2020, arXiv:2012.12447. [Google Scholar]

- De Smedt, Q.; Wannous, H.; Vandeborre, J.P. Skeleton-based dynamic hand gesture recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 27–30 June 2016; pp. 1–9. [Google Scholar]

- Brás, A.; Simão, M.; Neto, P. Gesture Recognition from Skeleton Data for Intuitive Human-Machine Interaction. arXiv 2020, arXiv:2008.11497. [Google Scholar]

- Chen, L.; Li, Y.; Liu, Y. Human body gesture recognition method based on deep learning. In Proceedings of the 2020 Chinese Control And Decision Conference (CCDC), Hefei, China, 22–24 August 2020; pp. 587–591. [Google Scholar]

- Nguyen, D.H.; Ly, T.N.; Truong, T.H.; Nguyen, D.D. Multi-column CNNs for skeleton based human gesture recognition. In Proceedings of the 2017 9th International Conference on Knowledge and Systems Engineering (KSE), Hue, Vietnam, 19–21 October 2017; pp. 179–184. [Google Scholar]

- Yuanyuan, S.; Yunan, L.; Xiaolong, F.; Kaibin, M.; Qiguang, M. Review of dynamic gesture recognition. Virtual Real. Intell. Hardw. 2021, 3, 183–206. [Google Scholar]

- Oudah, M.; Al-Naji, A.; Chahl, J. Hand gesture recognition based on computer vision: A review of techniques. J. Imaging 2020, 6, 73. [Google Scholar] [CrossRef]

- Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Fei-Fei, L. Large-scale Video Classification with Convolutional Neural Networks. In Proceedings of the CVPR, Columbus, OH, USA, 23–38 June 2014. [Google Scholar]

- Rasouli, A.; Kotseruba, I.; Tsotsos, J.K. Understanding pedestrian behavior in complex traffic scenes. IEEE Trans. Intell. Veh. 2017, 3, 61–70. [Google Scholar] [CrossRef]

- Saleh, K.; Hossny, M.; Nahavandi, S. Real-time intent prediction of pedestrians for autonomous ground vehicles via spatio-temporal densenet. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 9704–9710. [Google Scholar]

- Gujjar, P.; Vaughan, R. Classifying Pedestrian Actions In Advance Using Predicted Video Of Urban Driving Scenes. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 2097–2103. [Google Scholar] [CrossRef]

- Shahroudy, A.; Liu, J.; Ng, T.T.; Wang, G. Ntu rgb+ d: A large scale dataset for 3d human activity analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1010–1019. [Google Scholar]

- Neogi, S.; Hoy, M.; Chaoqun, W.; Dauwels, J. Context based pedestrian intention prediction using factored latent dynamic conditional random fields. In Proceedings of the 2017 IEEE Symposium Series on Computational Intelligence (SSCI), Honolulu, HI, USA, 27 November–1 December 2017; pp. 1–8. [Google Scholar] [CrossRef]

- Ross, J.I. Taxi driving and street culture. In Routledge Handbook of Street Culture; Routledge: Abingdon, UK, 2020. [Google Scholar]

- Matsubara, Y.; Li, L.; Papalexakis, E.; Lo, D.; Sakurai, Y.; Faloutsos, C. F-trail: Finding patterns in taxi trajectories. In Proceedings of the Advances in Knowledge Discovery and Data Mining: 17th Pacific-Asia Conference, PAKDD 2013, Gold Coast, Australia, 14–17 April 2013; pp. 86–98. [Google Scholar]

- Hu, X.; An, S.; Wang, J. Taxi driver’s operation behavior and passengers’ demand analysis based on GPS data. J. Adv. Transp. 2018, 2018, 6197549. [Google Scholar] [CrossRef]

- Li, B.; Zhang, D.; Sun, L.; Chen, C.; Li, S.; Qi, G.; Yang, Q. Hunting or waiting? Discovering passenger-finding strategies from a large-scale real-world taxi dataset. In Proceedings of the 2011 IEEE International Conference on Pervasive Computing and Communications Workshops (PERCOM Workshops), Kyoto, Japan, 11–15 March 2011; pp. 63–68. [Google Scholar]

- Yuan, J.; Zheng, Y.; Zhang, L.; Xie, X.; Sun, G. Where to find my next passenger. In Proceedings of the 13th International Conference on Ubiquitous Computing, Beijing, China, 17–21 September 2011; pp. 109–118. [Google Scholar]

- Zhang, D.; Sun, L.; Li, B.; Chen, C.; Pan, G.; Li, S.; Wu, Z. Understanding taxi service strategies from taxi GPS traces. IEEE Trans. Intell. Transp. Syst. 2014, 16, 123–135. [Google Scholar] [CrossRef]

- Kamga, C.; Yazici, M.A.; Singhal, A. Hailing in the rain: Temporal and weather-related variations in taxi ridership and taxi demand-supply equilibrium. In Proceedings of the Transportation Research Board 92nd Annual Meeting, Washington, DC, USA, 13–17 January 2013; Volume 1. [Google Scholar]

- Tong, Y.; Chen, Y.; Zhou, Z.; Chen, L.; Wang, J.; Yang, Q.; Ye, J.; Lv, W. The simpler the better: A unified approach to predicting original taxi demands based on large-scale online platforms. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 1653–1662. [Google Scholar]

- Zhang, J.; Zheng, Y.; Qi, D. Deep spatio-temporal residual networks for citywide crowd flows prediction. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017. [Google Scholar]

- Boumeddane, S.; Hamdad, L.; Bouregag, A.A.E.F.; Damene, M.; Sadeg, S. A Model Stacking Approach for Ride-Hailing Demand Forecasting: A Case Study of Algiers. In Proceedings of the 2020 2nd International Workshop on Human-Centric Smart Environments for Health and Well-being (IHSH), Boumerdes, Algeria, 9–10 February 2021; pp. 16–21. [Google Scholar]

- Sun, Z.; Cao, S.; Yang, Y.; Kitani, K.M. Rethinking transformer-based set prediction for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 3611–3620. [Google Scholar]

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 213–229. [Google Scholar]

- Elharrouss, O.; Akbari, Y.; Almaadeed, N.; Al-Maadeed, S. Backbones-review: Feature extraction networks for deep learning and deep reinforcement learning approaches. arXiv 2022, arXiv:2206.08016.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Wu, Y.; Kirillov, A.; Massa, F.; Lo, W.Y.; Girshick, R. Detectron2. Available online: https://github.com/facebookresearch/detectron2 (accessed on 11 April 2023).

- Dai, X. HybridNet: A fast vehicle detection system for autonomous driving. Signal Process. Image Commun. 2019, 70, 79–88.

- Han, C.; Zhao, Q.; Zhang, S.; Chen, Y.; Zhang, Z.; Yuan, J. YOLOPv2: Better, Faster, Stronger for Panoptic Driving Perception. arXiv 2022, arXiv:2208.11434.

- Caesar, H.; Uijlings, J.; Ferrari, V. COCO-Stuff: Thing and stuff classes in context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1209–1218.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).