Heatmap-Guided Selective Feature Attention for Robust Cascaded Face Alignment

Abstract

1. Introduction

- We propose an attention method that selects multi-level features under the guidance of the estimated heatmap.

- We propose backward propagation connections between the heatmap regression network and the coordinate regression network so that multi-task learning improves performance.

- We design a heatmap-cascaded coordinate regression network and verify the performance of the proposed network through ablation studies.

2. Related Work

2.1. Coordinate Regression Methods

2.2. Heatmap Regression Methods

2.3. Hybrid Methods

2.4. Multi-Task Learning

3. Proposed Network

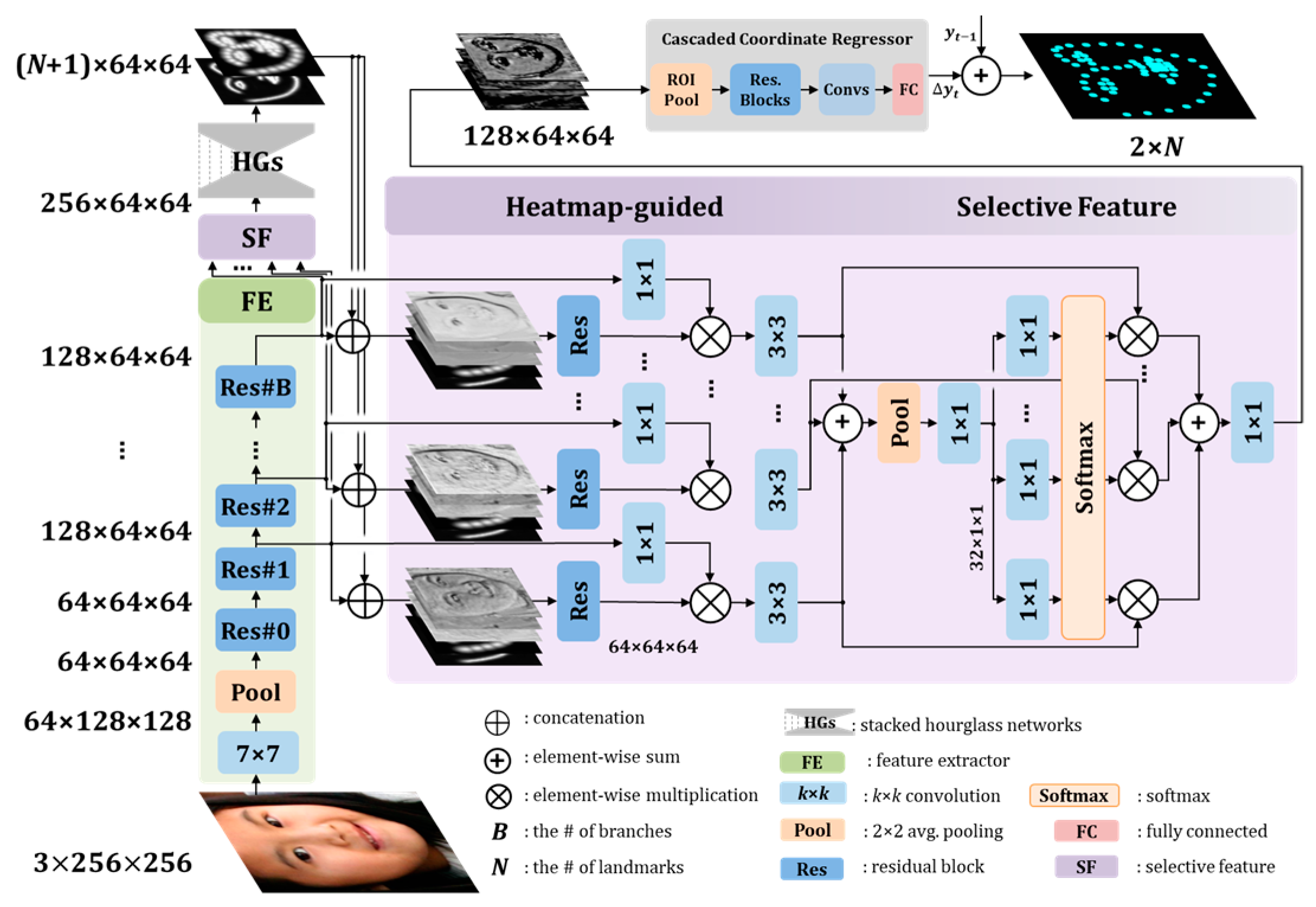

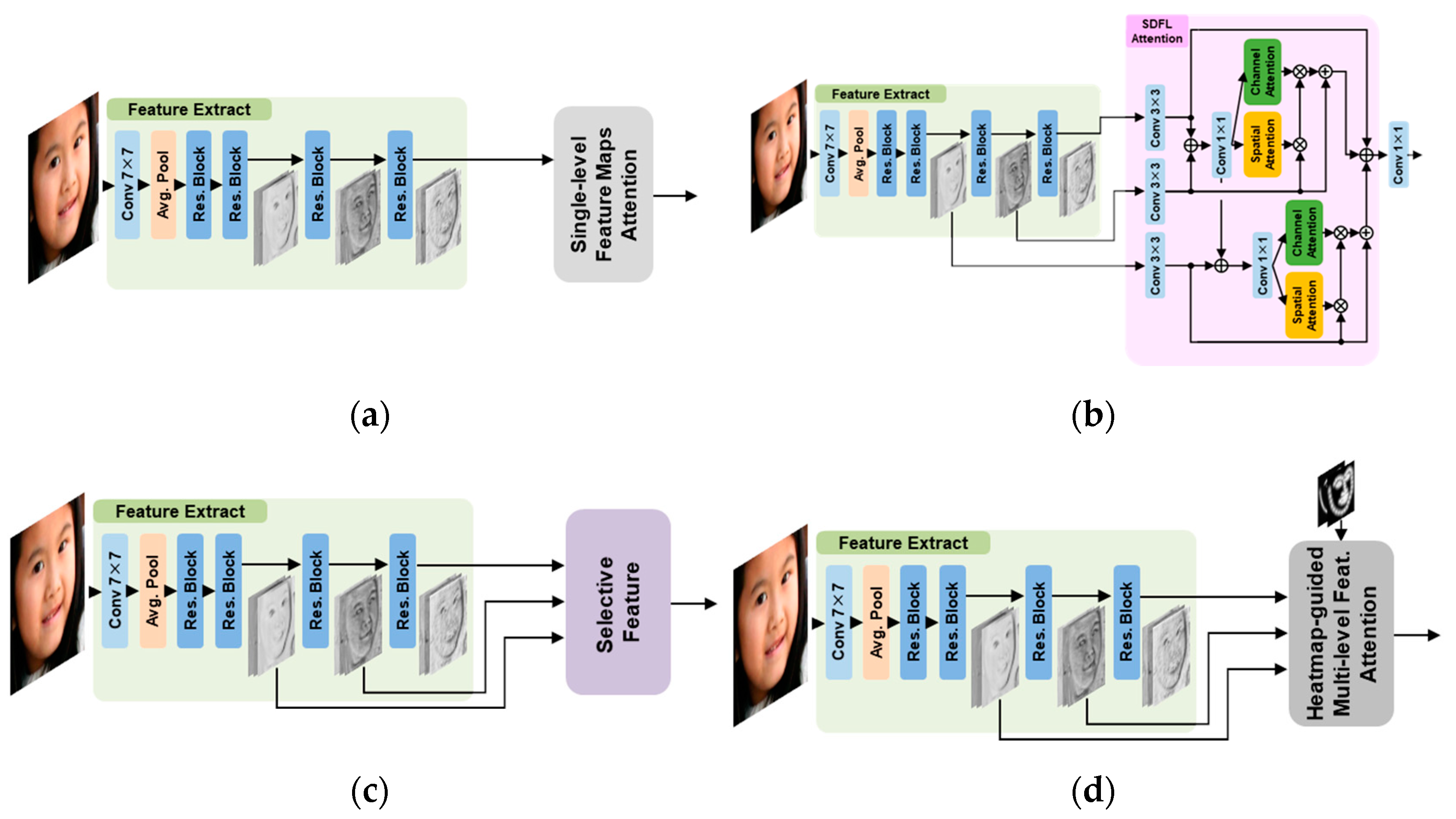

3.1. Heatmap-Guided Selective Feature Attention

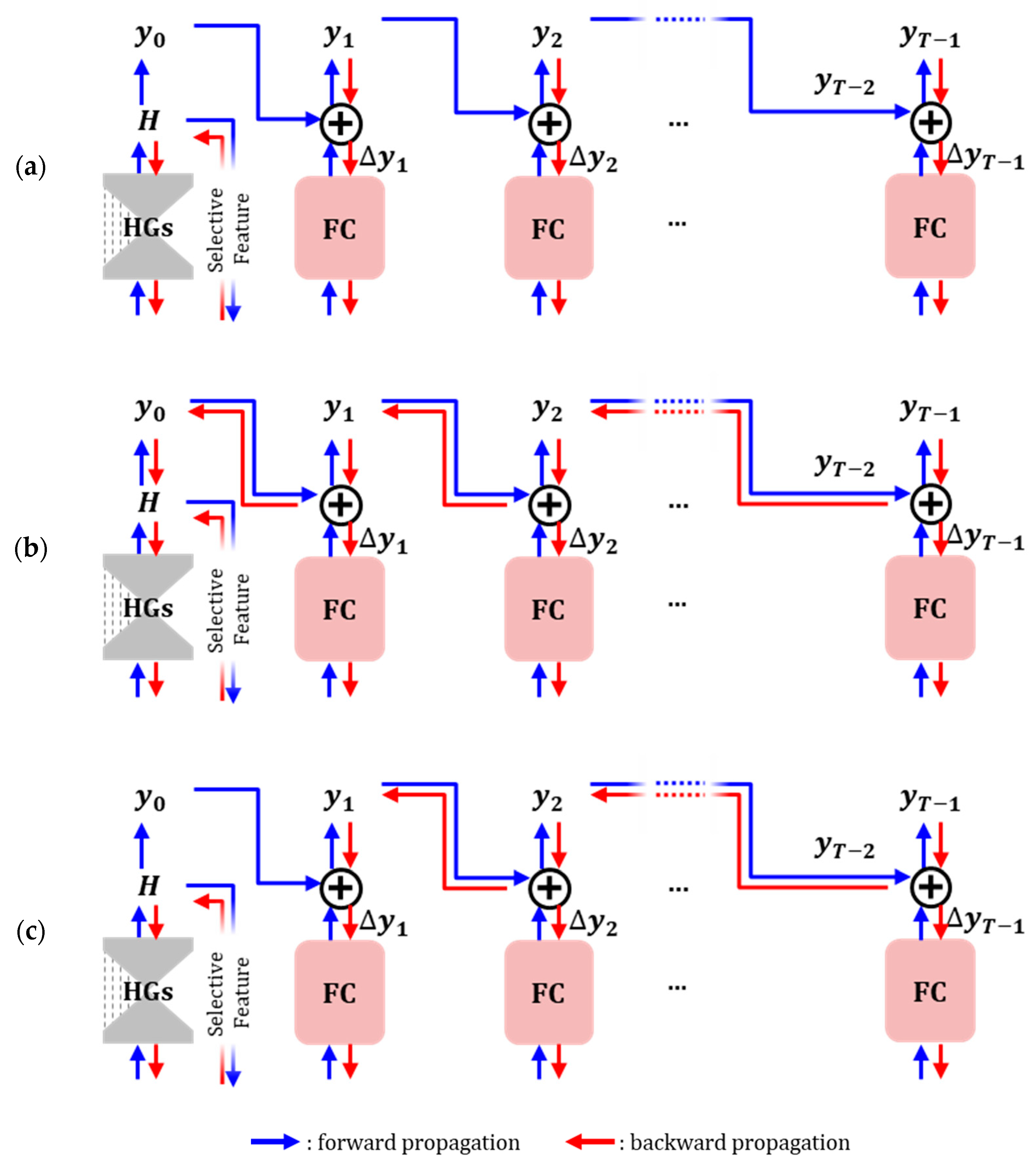

3.2. Designing Backward Propagation Connections

- Task-wise connection: No backward propagation connection for the summation component in any stage task (Figure 5a). This is a common structure for multi-task learning, in which all tasks share the feature extractor module in the early network layers. The shared feature extractor prevents overfitting to a single task type. Because the feature extractor is a front-end module, this connection has only a slight effect on performance.

- Full connection: A backward propagation connection for the summation component in all stage tasks (Figure 5b). The backward information of each task affects not only the feature extractor shared by all tasks but also the task-specific layers. Because the information from the neighboring stage is backward-propagated to the task-specific layers, the backward propagation from the neighboring stage can clearly improve or degrade performance.

- CCR connection: A backward propagation connection for the summation component only between the CCR tasks, excluding the heatmap regression task (Figure 5c). Compared with the full connection, it removes the backward propagation connection between the heatmap regression task and the first CCR task. Because the harmful backward information of the first CCR task is not propagated to the heatmap regression network, the performance of the CCR tasks improves and the training of each task becomes more manageable.
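The three connection schemes differ only in which inter-stage summation links pass gradients during the backward pass. The following is a minimal sketch of that routing, assuming one link between each pair of consecutive stages (heatmap stage, then the CCR stages in order). The function name `backward_edges`, the scheme labels, and the stage names are illustrative and not taken from the paper.

```python
from typing import Dict, Tuple

# Hypothetical labels for the three schemes described above.
SCHEMES = ("task_wise", "full", "ccr")


def backward_edges(scheme: str, num_ccr_stages: int) -> Dict[Tuple[str, str], bool]:
    """Map each inter-stage link to whether it carries gradients.

    Links are keyed as (source_stage, target_stage). The forward pass is
    assumed to use every link; only the backward pass differs per scheme.
    """
    if scheme not in SCHEMES:
        raise ValueError(f"unknown scheme: {scheme!r}")
    stages = ["heatmap"] + [f"ccr{i}" for i in range(1, num_ccr_stages + 1)]
    edges: Dict[Tuple[str, str], bool] = {}
    for src, dst in zip(stages, stages[1:]):
        if scheme == "task_wise":
            carries_grad = False  # no link propagates gradients
        elif scheme == "full":
            carries_grad = True  # every link propagates gradients
        else:
            # "ccr": only CCR-to-CCR links propagate; the link between the
            # heatmap stage and the first CCR stage is cut.
            carries_grad = src != "heatmap"
        edges[(src, dst)] = carries_grad
    return edges


if __name__ == "__main__":
    for name in SCHEMES:
        print(name, backward_edges(name, num_ccr_stages=4))
```

In an autograd framework, a link marked `False` would typically be realized by detaching the tensor passed across that link before the summation, so that the forward values stay the same while the gradient path is removed.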

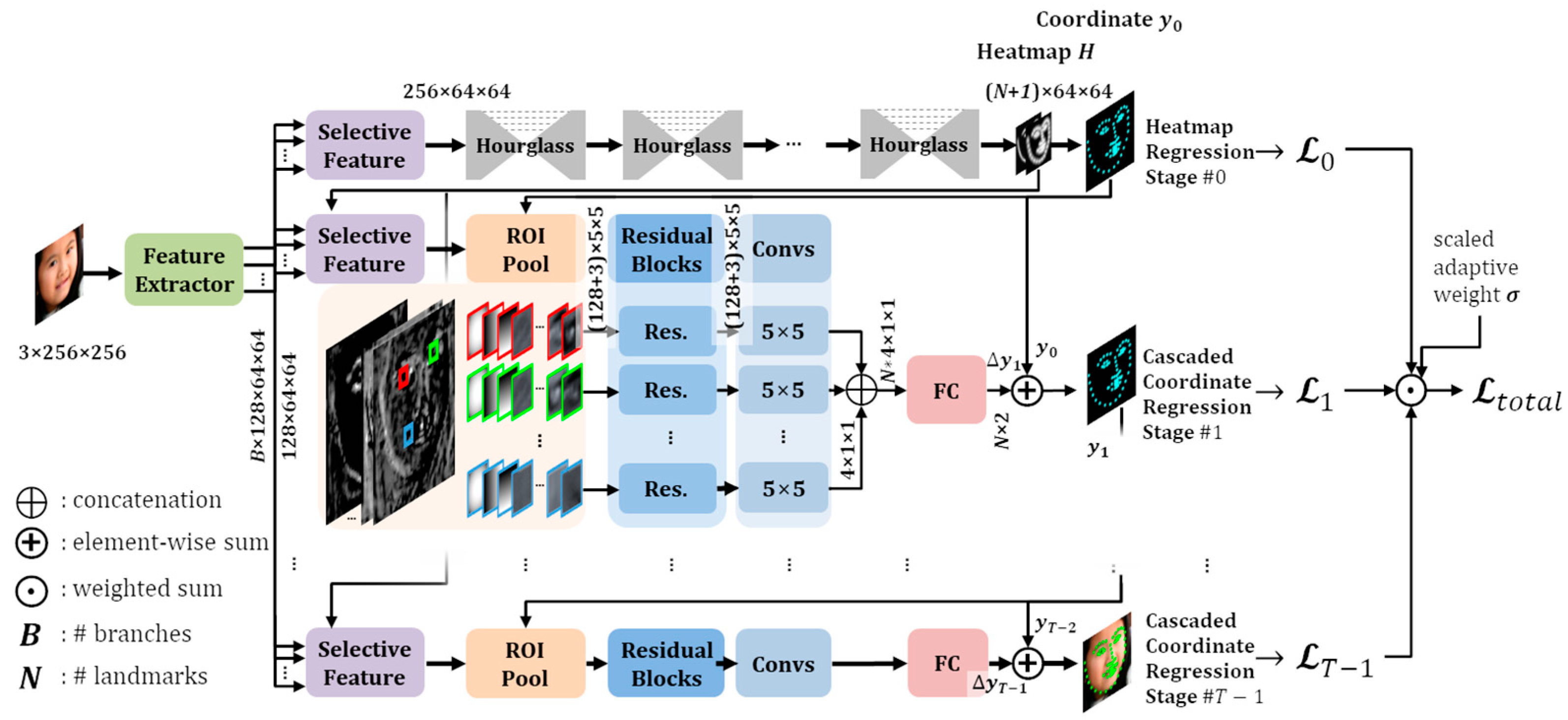

3.3. Cascaded Face Alignment Network with Heatmap-Guided Selective Feature

- Feature extractor: The feature extractor extracts feature maps from an input image; these maps are shared by all tasks. It consists of a convolution layer and B + 1 residual blocks for the B input branches of the selective feature module.

- Selective feature module: The selective feature module selects valid feature maps from the several branches produced by the feature extractor.

- Heatmap regression network: The heatmap regression network estimates landmark heatmaps and a boundary heatmap, in the same way as the stacked hourglass network in AWing [28].

- Cascaded coordinate regression network: The CCR network extracts the ROI feature map for each landmark through the ROI pooling layer and concatenates the coordinate channels [43]. The coordinate channels, which represent the coordinates in the feature map, can improve coordinate regression performance when they are concatenated with the original feature channels [28,43]. In this paper, the coordinate channels are concatenated to the feature map of each landmark to improve CCR performance. The ROI feature map is processed independently for each landmark through a residual block and a convolution layer. The resulting feature maps are concatenated in the last layer and passed to a fully connected layer that estimates the offset coordinates. The global landmark coordinates of the current stage are obtained by adding the estimated offset to the global landmark coordinates of the previous stage.
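Two steps of the CCR description lend themselves to a small illustration: appending coordinate channels to a per-landmark ROI feature map, and updating the global coordinates by adding the estimated offsets. The sketch below uses plain nested lists instead of tensors. Scaling the coordinate values to [-1, 1] is an assumption borrowed from the usual CoordConv convention, and all function names are illustrative rather than the paper's implementation.

```python
from typing import List, Tuple

Channel = List[List[float]]  # one H x W channel
Point = Tuple[float, float]  # (x, y) in global image coordinates


def coordinate_channels(height: int, width: int) -> Tuple[Channel, Channel]:
    """Return (x_channel, y_channel), each H x W, scaled to [-1, 1]."""

    def scale(index: int, size: int) -> float:
        # A single row or column has no extent, so map it to the centre.
        return 0.0 if size == 1 else 2.0 * index / (size - 1) - 1.0

    x_channel = [[scale(c, width) for c in range(width)] for _ in range(height)]
    y_channel = [[scale(r, height) for _ in range(width)] for r in range(height)]
    return x_channel, y_channel


def append_coordinate_channels(feature_map: List[Channel]) -> List[Channel]:
    """Concatenate the two coordinate channels to a C x H x W feature map."""
    height, width = len(feature_map[0]), len(feature_map[0][0])
    x_channel, y_channel = coordinate_channels(height, width)
    return list(feature_map) + [x_channel, y_channel]


def refine_landmarks(previous: List[Point], offsets: List[Point]) -> List[Point]:
    """Current-stage coordinates = previous-stage coordinates + offsets."""
    if len(previous) != len(offsets):
        raise ValueError("one offset is required per landmark")
    return [(px + dx, py + dy) for (px, py), (dx, dy) in zip(previous, offsets)]


if __name__ == "__main__":
    roi = [[[0.5, 0.5, 0.5], [0.5, 0.5, 0.5]]]  # 1 channel, 2 x 3
    print(len(append_coordinate_channels(roi)))  # 3 channels after concatenation
    print(refine_landmarks([(10.0, 20.0)], [(0.5, -1.0)]))
```

Applying `refine_landmarks` once per CCR stage, with each stage's offsets computed from ROIs centered on the previous stage's output, gives the cascaded refinement described above.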

4. Experiments

4.1. Evaluation Metrics

4.1.1. Normalized Mean Error
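
As a point of reference, the normalized mean error is commonly computed as the mean Euclidean distance between predicted and ground-truth landmarks, divided by a normalization distance such as the inter-pupil distance, the inter-ocular distance, or a bounding-box measure. The sketch below assumes 2D landmarks given as (x, y) tuples; the function name `normalized_mean_error` is illustrative and not from the paper.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def normalized_mean_error(
    predicted: Sequence[Point],
    ground_truth: Sequence[Point],
    norm_distance: float,
) -> float:
    """Mean landmark distance for one image, divided by norm_distance."""
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("need the same non-zero number of landmarks")
    if norm_distance <= 0:
        raise ValueError("norm_distance must be positive")
    total = sum(
        math.hypot(px - gx, py - gy)
        for (px, py), (gx, gy) in zip(predicted, ground_truth)
    )
    return total / (len(predicted) * norm_distance)


if __name__ == "__main__":
    pred = [(0.0, 0.0), (3.0, 4.0)]
    gt = [(0.0, 0.0), (0.0, 0.0)]
    # Distances are 0 and 5, so the mean is 2.5; divided by 10 gives 0.25.
    print(normalized_mean_error(pred, gt, norm_distance=10.0))
```

The result is a fraction; multiplying by 100 gives the percentage form in which NME values are usually tabulated.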

4.1.2. Failure Rate
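
The failure rate is usually defined as the fraction of test images whose NME exceeds a threshold; a 10% threshold corresponds to the FR10 notation. A minimal sketch under that assumption follows, with per-image NME values given as fractions. The function name and default threshold are illustrative.

```python
from typing import Sequence


def failure_rate(per_image_nme: Sequence[float], threshold: float = 0.10) -> float:
    """Fraction of images whose NME is strictly above the threshold."""
    if not per_image_nme:
        raise ValueError("at least one image is required")
    failures = sum(1 for error in per_image_nme if error > threshold)
    return failures / len(per_image_nme)


if __name__ == "__main__":
    # Two of the four images exceed 0.10, so the failure rate is 0.5.
    print(failure_rate([0.02, 0.05, 0.12, 0.30]))
```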

4.1.3. Area under the Curve
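
The AUC is typically taken as the area under the cumulative error distribution (CED) curve up to a threshold, normalized so that a perfect model scores 1. The sketch below integrates the empirical CED with the trapezoidal rule. The integration scheme, the number of steps, and the function name `ced_auc` are assumptions for illustration; published evaluation code may integrate differently.

```python
from typing import Sequence


def ced_auc(
    per_image_nme: Sequence[float],
    threshold: float = 0.10,
    steps: int = 1000,
) -> float:
    """Normalized area under the CED curve on the interval [0, threshold]."""
    if not per_image_nme:
        raise ValueError("at least one image is required")
    if threshold <= 0 or steps <= 0:
        raise ValueError("threshold and steps must be positive")
    count = len(per_image_nme)

    def ced(limit: float) -> float:
        # Fraction of images with an error no larger than `limit`.
        return sum(1 for error in per_image_nme if error <= limit) / count

    width = threshold / steps
    area = sum(
        0.5 * (ced(i * width) + ced((i + 1) * width)) * width
        for i in range(steps)
    )
    return area / threshold


if __name__ == "__main__":
    print(ced_auc([0.02, 0.05, 0.12, 0.30]))
```

With a 10% threshold this corresponds to the AUC10 notation, and with a 7% threshold to AUC7.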

4.2. Implementation Details

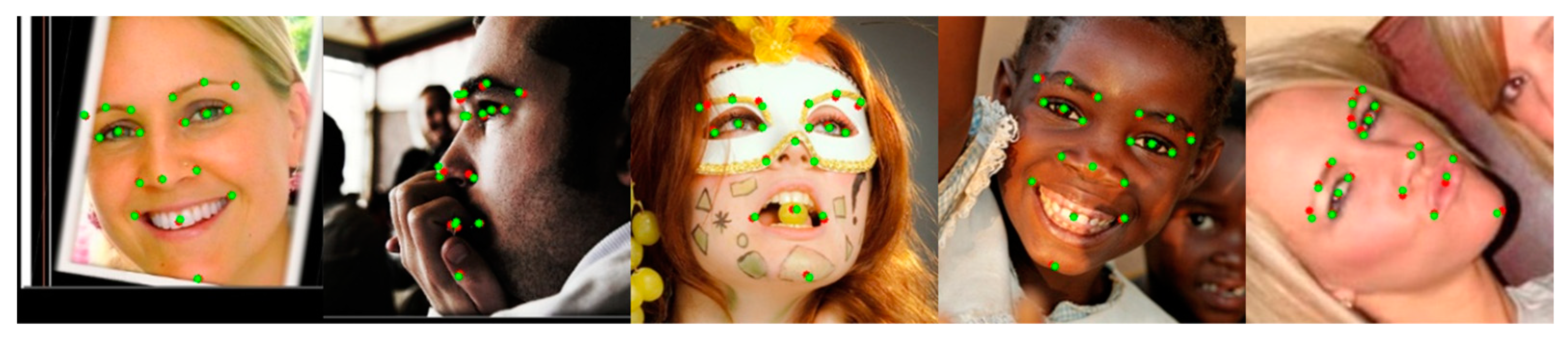

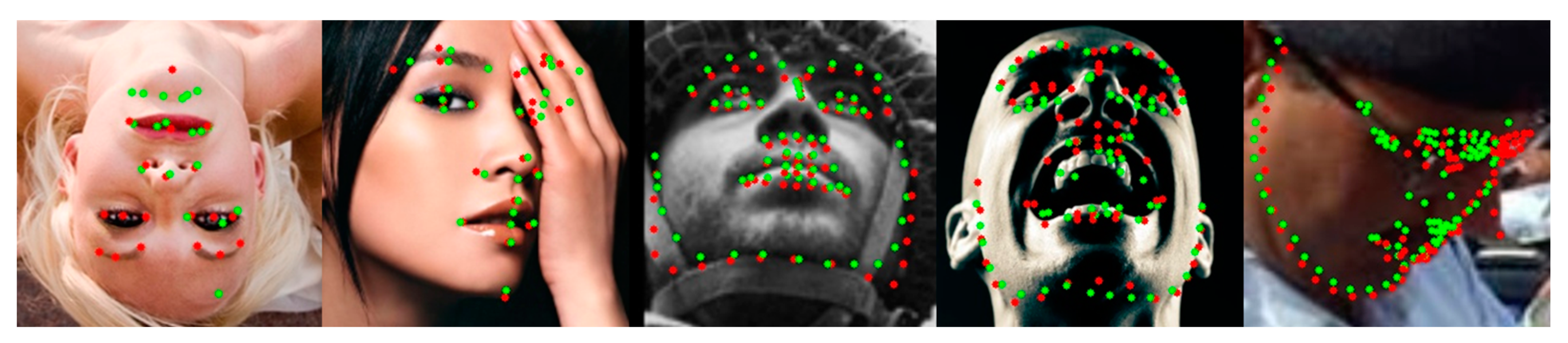

4.3. Evaluation

4.3.1. Evaluation of 300W

4.3.2. Evaluation of AFLW

4.3.3. Evaluation of COFW

4.3.4. Evaluation of WFLW

5. Ablation Study

5.1. Evaluation of Different Components

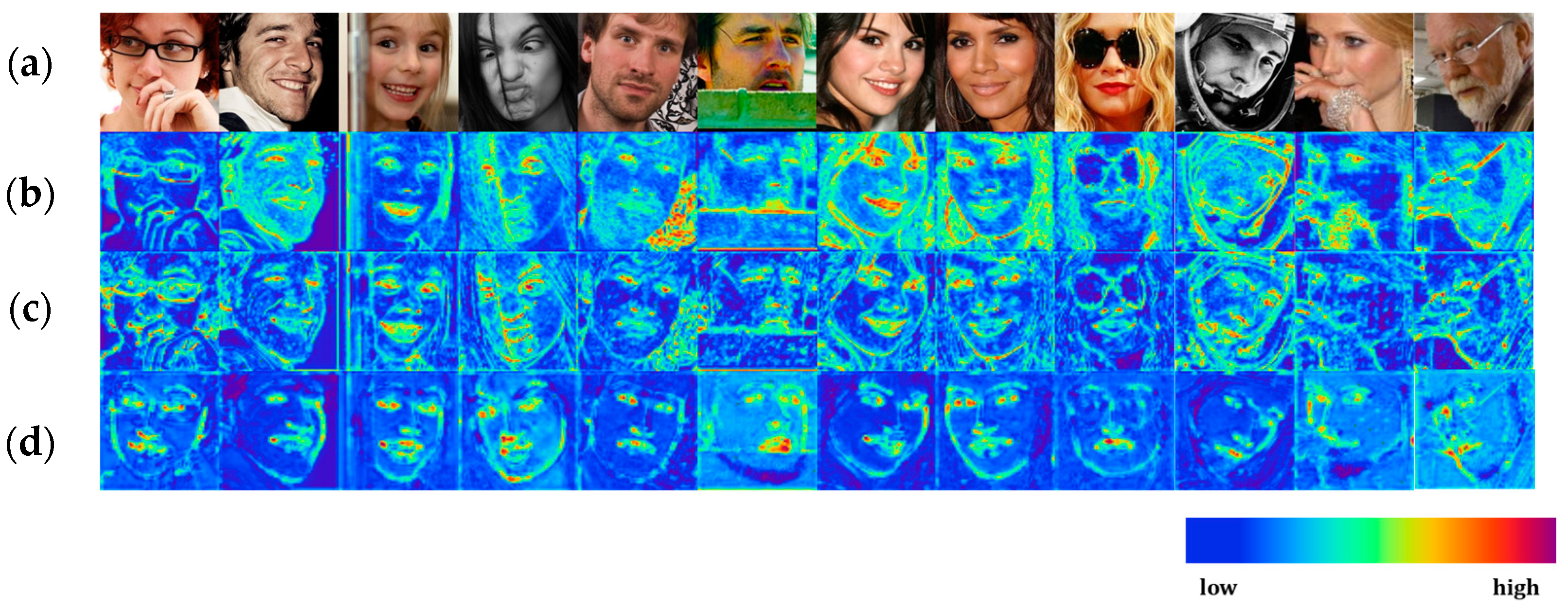

5.2. Comparison of Feature Map Attention Methods

5.3. Evaluation of Different Backward Propagation Settings in the CCR Stage

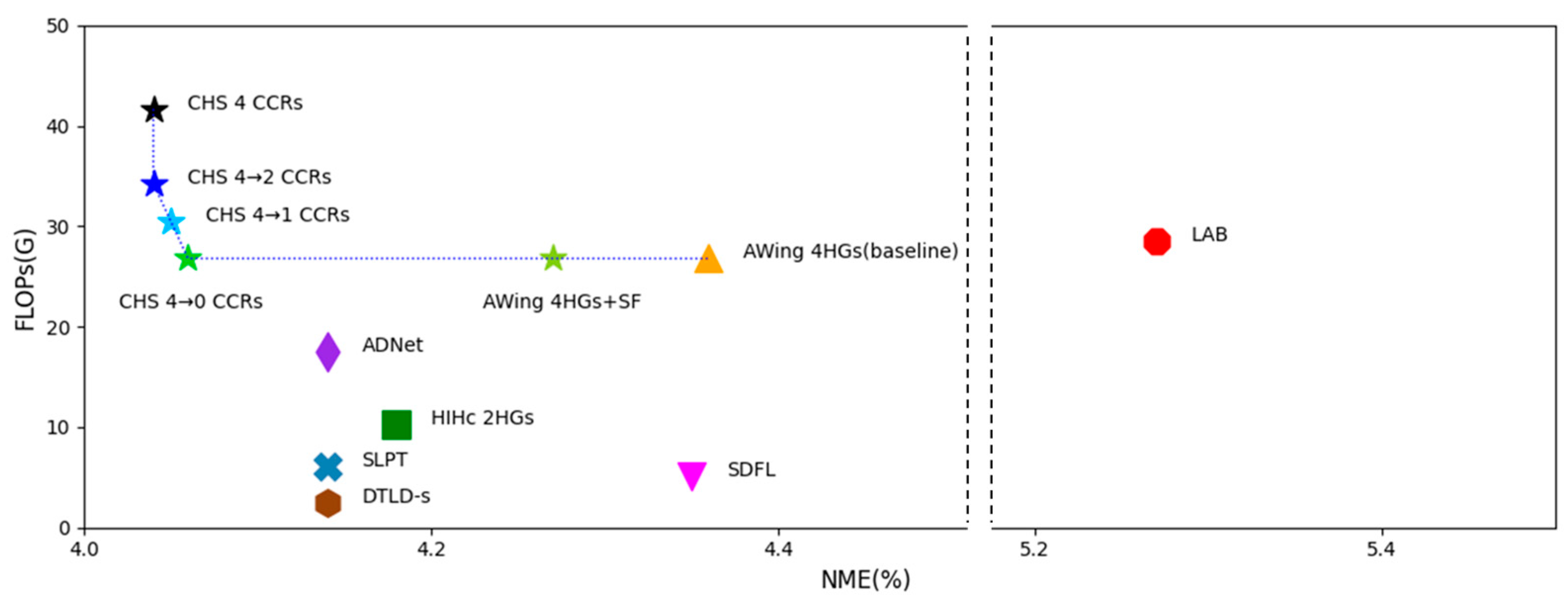

5.4. Model Complexity

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Goodfellow, I.J.; Erhan, D.; Carrier, P.L.; Courville, A.; Mirza, M.; Hamner, B.; Cukierski, W.; Tang, Y.; Thaler, D.; Lee, D.-H.; et al. Challenges in representation learning: A report on three machine learning contests. In International Conference on Neural Information Processing; Springer: Berlin/Heidelberg, Germany, 2013; pp. 117–124.

2. Savchenko, A.V. Facial expression and attributes recognition based on multi-task learning of lightweight neural networks. In Proceedings of the 2021 IEEE 19th International Symposium on Intelligent Systems and Informatics (SISY), Subotica, Serbia, 16–18 September 2021; pp. 119–124.

3. Hempel, T.; Abdelrahman, A.A.; Al-Hamadi, A. 6d rotation representation for unconstrained head pose estimation. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; pp. 2496–2500.

4. Valle, R.; Buenaposada, J.M.; Baumela, L. Multi-task head pose estimation in-the-wild. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2874–2881.

5. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

6. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

7. Newell, A.; Yang, K.; Deng, J. Stacked hourglass networks for human pose estimation. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 483–499.

8. Lan, X.; Hu, Q.; Cheng, J. Revisting quantization error in face alignment. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 1521–1530.

9. Wu, W.; Qian, C.; Yang, S.; Wang, Q.; Cai, Y.; Zhou, Q. Look at boundary: A boundary-aware face alignment algorithm. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2129–2138.

10. Park, H.; Kim, D. Acn: Occlusion-tolerant face alignment by attentional combination of heterogeneous regression networks. Pattern Recognit. 2021, 114, 107761.

11. Sagonas, C.; Tzimiropoulos, G.; Zafeiriou, S.; Pantic, M. 300 faces in-the-wild challenge: The first facial landmark localization challenge. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, Australia, 1–8 December 2013; pp. 397–403.

12. Koestinger, M.; Wohlhart, P.; Roth, P.M.; Bischof, H. Annotated facial landmarks in the wild: A large-scale, real-world database for facial landmark localization. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011; pp. 2144–2151.

13. Burgos-Artizzu, X.P.; Perona, P.; Dollár, P. Robust face landmark estimation under occlusion. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 1513–1520.

14. Cootes, T.F.; Edwards, G.J.; Taylor, C.J. Active appearance models. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 681–685.

15. Cristinacce, D.; Cootes, T.F. Feature detection and tracking with constrained local models. In Proceedings of the British Machine Vision Conference, Edinburgh, Scotland, 4–7 September 2006; Volume 1, p. 3.

16. Ren, S.; Cao, X.; Wei, Y.; Sun, J. Face alignment at 3000 fps via regressing local binary features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1685–1692.

17. Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1867–1874.

18. Feng, Z.-H.; Kittler, J.; Awais, M.; Huber, P.; Wu, X.-J. Wing loss for robust facial landmark localization with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2235–2245.

19. Su, J.; Wang, Z.; Liao, C.; Ling, H. Efficient and accurate face alignment by global regression and cascaded local refinement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–20 June 2019; pp. 267–276.

20. Li, W.; Lu, Y.; Zheng, K.; Liao, H.; Lin, C.; Luo, J.; Cheng, C.-T.; Xiao, J.; Lu, L.; Kuo, C.-F.; et al. Structured landmark detection via topology-adapting deep graph learning. In Computer Vision-ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part IX 16; Springer: Berlin/Heidelberg, Germany, 2020; pp. 266–283.

21. Lin, C.; Zhu, B.; Wang, Q.; Liao, R.; Qian, C.; Lu, J.; Zhou, J. Structure coherent deep feature learning for robust face alignment. IEEE Trans. Image Process. 2021, 30, 5313–5326.

22. Xu, Z.; Li, B.; Geng, M.; Yuan, Y.; Yu, G. Anchorface: An anchor-based facial landmark detector across large poses. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021; pp. 3092–3100.

23. Zheng, Y.; Yang, H.; Zhang, T.; Bao, J.; Chen, D.; Huang, Y.; Yuan, L.; Chen, D.; Zeng, M.; Wen, F. General facial representation learning in a visual-linguistic manner. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 18697–18709.

24. Li, H.; Guo, Z.; Rhee, S.-M.; Han, S.; Han, J.-J. Towards accurate facial landmark detection via cascaded transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 4176–4185.

25. Xia, J.; Qu, W.; Huang, W.; Zhang, J.; Wang, X.; Xu, M. Sparse local patch transformer for robust face alignment and landmarks inherent relation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 4052–4061.

26. Bulat, A.; Tzimiropoulos, G. How far are we from solving the 2d & 3d face alignment problem? (and a dataset of 230,000 3d facial landmarks). In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1021–1030.

27. Yang, J.; Liu, Q.; Zhang, K. Stacked hourglass network for robust facial landmark localisation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 79–87.

28. Wang, X.; Bo, L.; Fuxin, L. Adaptive wing loss for robust face alignment via heatmap regression. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, South Korea, 27 October–2 November 2019; pp. 6971–6981.

29. Zhang, J.; Hu, H.; Feng, S. Robust facial landmark detection via heatmap offset regression. IEEE Trans. Image Process. 2020, 29, 5050–5064.

30. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

31. Huang, X.; Deng, W.; Shen, H.; Zhang, X.; Ye, J. Propagationnet: Propagate points to curve to learn structure information. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 7265–7274.

32. Jin, H.; Liao, S.; Shao, L. Pixel-in-pixel net: Towards efficient facial landmark detection in the wild. Int. J. Comput. Vis. 2021, 129, 3174–3194.

33. Bulat, A.; Sanchez, E.; Tzimiropoulos, G.; Center, S.A. Subpixel heatmap regression for facial landmark localization. arXiv 2021, arXiv:2111.02360. In the British Machine Vision Conference, 22–25 November 2021.

34. Valle, R.; Buenaposada, J.M.; Valdes, A.; Baumela, L. A deeply-initialized coarse-to-fine ensemble of regression trees for face alignment. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 585–601.

35. Valle, R.; Buenaposada, J.M.; Valdes, A.; Baumela, L. Face alignment using a 3d deeply-initialized ensemble of regression trees. Comput. Vis. Image Underst. 2019, 189, 102846.

36. Ruder, S. An overview of multi-task learning in deep neural networks. arXiv 2017, arXiv:1706.05098.

37. Ranjan, R.; Patel, V.M.; Chellappa, R. Hyperface: A deep multi-task learning framework for face detection, landmark localization, pose estimation, and gender recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 41, 121–135.

38. Kumar, A.; Marks, T.K.; Mou, W.; Wang, Y.; Jones, M.; Cherian, A.; Koike-Akino, T.; Liu, X.; Feng, C. Luvli face alignment: Estimating landmarks’ location, uncertainty, and visibility likelihood. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8236–8246.

39. Prados-Torreblanca, A.; Buenaposada, J.M.; Baumela, L. Shape preserving facial landmarks with graph attention networks. In BMVC. arXiv 2022, arXiv:2210.07233v1.

40. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

41. Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

42. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

43. Liu, R.; Lehman, J.; Molino, P.; Such, F.P.; Frank, E.; Sergeev, A.; Yosinski, J. An intriguing failing of convolutional neural networks and the coordconv solution. Adv. Neural Inf. Process. Syst. 2018, 9605–9616.

44. Kendall, A.; Gal, Y.; Cipolla, R. Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7482–7491.

45. Bulat, A.; Tzimiropoulos, G. Binarized convolutional landmark localizers for human pose estimation and face alignment with limited resources. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3706–3714.

46. Ghiasi, G.; Fowlkes, C.C. Occlusion coherence: Detecting and localizing occluded faces. arXiv 2015, arXiv:1506.08347.

47. Huang, Y.; Yang, H.; Li, C.; Kim, J.; Wei, F. Adnet: Leveraging error bias towards normal direction in face alignment. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3080–3090.

48. Iranmanesh, S.M.; Dabouei, A.; Soleymani, S.; Kazemi, H.; Nasrabadi, N. Robust facial landmark detection via aggregation on geometrically manipulated faces. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 330–340.

49. Zhu, S.; Li, C.; Loy, C.-C.; Tang, X. Unconstrained face alignment via cascaded compositional learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3409–3417.

50. Dong, X.; Yan, Y.; Ouyang, W.; Yang, Y. Style aggregated network for facial landmark detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 379–388.

51. Chen, L.; Su, H.; Ji, Q. Face alignment with kernel density deep neural network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, South Korea, 27 October–2 November 2019; pp. 6992–7002.

52. Li, X.; Wang, W.; Hu, X.; Yang, J. Selective kernel networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 510–519.

| Normalization | Method | Common Subset | Challenging Subset | Full Set |
|---|---|---|---|---|
| Inter-Pupil Distance Normalization | 3DDE [35] | 3.73 | 7.10 | 4.39 |
| | Wing [18] | 3.27 | 7.18 | 4.04 |
| | LAB [9] | 3.42 | 6.98 | 4.12 |
| | AWing [28] | 3.77 | 6.52 | 4.31 |
| | LRefNet [19] | 3.76 | 6.89 | 4.37 |
| | SHN-GCN [29] | 3.78 | 6.69 | 4.35 |
| | SLD [20] | 3.64 | 6.88 | 4.27 |
| | ADNet [47] | 3.51 | 6.47 | 4.08 |
| | SPIGA [39] | 3.59 | 6.73 | 4.20 |
| | CHS (Ours) | 3.49 | 6.47 | 4.07 |
| Inter-Ocular Distance Normalization | 3DDE [35] | 2.69 | 4.92 | 3.13 |
| | LAB [9] | 2.98 | 5.19 | 3.49 |
| | LRefNet [19] | 2.71 | 4.78 | 3.12 |
| | AWing [28] | 2.72 | 4.52 | 3.07 |
| | LUVLi [38] | 2.76 | 5.16 | 3.23 |
| | SHN-GCN [29] | 2.73 | 4.64 | 3.10 |
| | GEAN [48] | 2.68 | 4.71 | 3.05 |
| | ACN [10] | 2.56 | 4.81 | 3.00 |
| | HIH [8] | 2.93 | 5.00 | 3.33 |
| | SDFL [21] | 2.88 | 4.93 | 3.28 |
| | SLD [20] | 2.62 | 4.77 | 3.04 |
| | ADNet [47] | 2.53 | 4.58 | 2.93 |
| | FaRL (Scratch) [23] | 2.90 | 5.19 | 3.35 |
| | SLPT [25] | 2.75 | 4.90 | 3.17 |
| | DTLD-s [24] | 2.67 | 4.56 | 3.04 |
| | SPIGA [39] | 2.59 | 4.66 | 2.99 |
| | CHS (Ours) | 2.52 | 4.48 | 2.91 |

| Method | 300W Private | COFW-68 |
|---|---|---|
| SHN [27] | 4.05 | - |
| LAB [9] | - | 4.62 |
| 3DDE [35] | 3.74 | - |
| LRefNet [19] | - | 4.40 |
| AWing [28] | 3.56 | - |
| GEAN [48] | - | 4.24 |
| ACN [10] | 3.55 | 3.83 |
| SLD [20] | - | 4.22 |
| SDFL [21] | - | 4.18 |
| SLPT [25] | - | 4.10 |
| SPIGA [39] | 3.43 | 3.93 |
| CHS (Ours) | 3.35 | 3.78 |

| Bounding Box | Method | NMEdiag (Full) | NMEdiag (Frontal) | NMEbox (Full) | NMEbox (Frontal) | AUC7,box (Full) |
|---|---|---|---|---|---|---|
| Dataset Bounding Box | CCL [49] | - | - | 2.27 | 2.17 | - |
| | LAB [9] | - | - | 1.84 | 1.62 | - |
| | Wing [18] | - | - | 1.65 | - | - |
| | SAN [50] | - | - | 1.91 | 1.85 | - |
| | 3DDE [35] | - | - | 2.01 | - | |
| | SHN-GCN [29] | 2.15 | - | - | | |
| | LRefNet [19] | - | - | 1.63 | 1.46 | - |
| | AWing [28] | - | - | 1.53 | 1.38 | - |
| | GEAN [48] | - | - | 1.59 | 1.34 | - |
| | LUVLi [38] | 1.39 | 1.19 | - | - | - |
| | FaRL (Scratch) [23] | 1.05 | 0.88 | 1.48 | | 79.3 |
| | DTLD-s [24] | - | - | 1.39 | - | - |
| | CHS (Ours) | 0.96 | 0.86 | 1.36 | 1.23 | 81.1 |
| GT Bounding Box | SAN [50] | - | - | 4.04 | - | 54.0 |
| | Wing [18] | - | - | 3.56 | - | 53.5 |
| | KDN [51] | - | - | 2.80 | - | 60.3 |
| | LUVLi [38] | - | - | 2.28 | - | 68.0 |
| | CHS (Ours) | 1.26 | 1.08 | 1.91 | 1.55 | 73.5 |

| Normalization | Method | NME (↓) | FR (↓) | AUC10 (↑) |
|---|---|---|---|---|
| Inter-Pupil Distance Normalization | SHN [27] | 5.60 | - | - |
| | Wing [18] | 5.44 | 3.75 | - |
| | 3DDE [35] | 5.11 | | |
| | AWing [28] | 4.94 | 0.99 | 0.6440 |
| | SHN-GCN [29] | 5.67 | - | - |
| | ADNet [47] | 4.68 | 0.59 | 0.5317 |
| | SLPT [25] | 4.79 | 1.18 | - |
| | CHS (Ours) | 4.56 | 0.39 | 0.5441 |
| Inter-Ocular Distance Normalization | SHN [27] | 4.00 | - | - |
| | LAB (wo/B) [9] | 5.58 | 2.76 | |
| | SDFL [21] | 3.63 | 0.00 | - |
| | HIH [8] | 3.28 | 0.00 | 0.6720 |
| | DTLD-s [24] | 3.18 | - | - |
| | SLPT [25] | 3.32 | 0.00 | - |
| | CHS (Ours) | 3.16 | 0.00 | 0.6833 |

| Method | Test Set | Pose Subset | Expression Subset | Illumination Subset | Make-Up Subset | Occlusion Subset | Blur Subset |
|---|---|---|---|---|---|---|---|
| NME (↓) | | | | | | | |
| LAB [9] | 5.27 | 10.24 | 5.51 | 5.23 | 5.15 | 6.79 | 6.32 |
| 3DDE [35] | 4.68 | 8.62 | 5.21 | 4.65 | 4.60 | 5.77 | 5.41 |
| AWing [28] | 4.36 | 7.38 | 4.58 | 4.32 | 4.27 | 5.19 | 5.32 |
| LUVLi [38] | 4.37 | 7.56 | 4.77 | 4.30 | 4.33 | 5.29 | 4.94 |
| AnchorFace [22] | 4.32 | 7.51 | 4.69 | 4.20 | 4.11 | 4.98 | 4.82 |
| HIH [8] | 4.18 | 7.20 | 4.19 | 4.45 | 3.97 | 5.00 | 4.81 |
| SDFL [21] | 4.35 | 7.42 | 4.63 | 4.29 | 4.22 | 5.19 | 5.08 |
| SLD [20] | 4.21 | 7.36 | 4.49 | 4.12 | 4.05 | 4.98 | 4.82 |
| ADNet [47] | 4.14 | 6.96 | 4.38 | 4.09 | 4.05 | 5.06 | 4.79 |
| FaRL (Scratch) [23] | 4.80 | 8.78 | 5.09 | 4.74 | 4.99 | 6.01 | 5.35 |
| DTLD-s [24] | 4.14 | - | - | - | - | - | - |
| SLPT [25] | 4.14 | 6.96 | 4.45 | 4.05 | 4.00 | 5.06 | 4.79 |
| SPIGA [39] | 4.06 | 7.14 | 4.46 | 4.00 | 3.81 | 4.95 | 4.65 |
| CHS (Ours) | 4.04 | 6.76 | 4.33 | 3.98 | 3.87 | 4.71 | 4.64 |
| FR10 (↓) | | | | | | | |
| LAB [9] | 7.56 | 28.83 | 6.37 | 6.73 | 7.77 | 13.72 | 10.74 |
| 3DDE [35] | 5.04 | 22.39 | 5.41 | 3.86 | 6.79 | 9.37 | 6.72 |
| AWing [28] | 2.84 | 13.50 | 2.23 | 2.58 | 2.91 | 5.98 | 3.75 |
| LUVLi [38] | 3.12 | 15.95 | 3.18 | 2.15 | 3.40 | 6.39 | 3.23 |
| AnchorFace [22] | 2.96 | 16.56 | 2.55 | 2.15 | 2.43 | 5.30 | 3.23 |
| HIH [8] | 2.96 | 15.03 | 1.59 | 2.58 | 1.46 | 6.11 | 3.49 |
| SDFL [21] | 2.72 | 12.88 | 1.59 | 2.58 | 2.43 | 5.71 | 3.62 |
| SLD [20] | 3.04 | 15.95 | 2.86 | 2.72 | 1.45 | 5.29 | 4.01 |
| ADNet [47] | 2.72 | 12.72 | 2.15 | 2.44 | 1.94 | 5.79 | 3.54 |
| FaRL (Scratch) [23] | 5.72 | - | - | - | - | - | - |
| DTLD-s [24] | 3.44 | - | - | - | - | - | - |
| SLPT [25] | 2.76 | 12.27 | 2.23 | 1.86 | 3.40 | 5.98 | 3.88 |
| SPIGA [39] | 2.08 | 11.66 | 2.23 | 1.58 | 1.46 | 4.48 | 2.20 |
| CHS (Ours) | 1.80 | 9.51 | 1.59 | 1.72 | 1.46 | 3.13 | 2.46 |
| AUC10 (↑) | | | | | | | |
| LAB [9] | 0.5323 | 0.2345 | 0.4951 | 0.5433 | 0.5394 | 0.4490 | 0.4630 |
| 3DDE [35] | 0.5544 | 0.2640 | 0.5175 | 0.5602 | 0.5536 | 0.4692 | 0.4957 |
| AWing [28] | 0.5719 | 0.3120 | 0.5149 | 0.5777 | 0.5715 | 0.5022 | 0.5120 |
| LUVLi [38] | 0.5770 | 0.3100 | 0.5490 | 0.5840 | 0.5880 | 0.5050 | 0.5250 |
| AnchorFace [22] | 0.5769 | 0.2923 | 0.5440 | 0.5865 | 0.5914 | 0.5193 | 0.5286 |
| HIH [8] | 0.5970 | 0.3420 | 0.5900 | 0.6060 | 0.6040 | 0.5270 | 0.5490 |
| SDFL [21] | 0.5759 | 0.3152 | 0.5501 | 0.5847 | 0.5831 | 0.5035 | 0.5147 |
| SLD [20] | 0.5893 | 0.3150 | 0.5663 | 0.5953 | 0.6038 | 0.5235 | 0.5329 |
| ADNet [47] | 0.6022 | 0.3441 | 0.5234 | 0.5805 | 0.6007 | 0.5295 | 0.5480 |
| FaRL (Scratch) [23] | 0.5454 | - | - | - | - | - | - |
| SLPT [25] | 0.5950 | 0.3480 | 0.5740 | 0.6010 | 0.6050 | 0.5150 | 0.5350 |
| SPIGA [39] | 0.6056 | 0.3531 | 0.5797 | 0.6131 | 0.6224 | 0.5331 | 0.5531 |
| CHS (Ours) | 0.6015 | 0.3552 | 0.5792 | 0.6080 | 0.6155 | 0.5403 | 0.5462 |

| Component | Choice | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 4-HGs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Selective Feature | - | ✓ | - | - | - | - | ✓ | ✓ | ✓ | ✓ | ✓ |
| 2-CCRs | ✓ | - | | | | | | | | | |
| 4-CCRs | - | - | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | - | ✓ | - |
| 6-CCRs | - | ✓ | | | | | | | | | |
| Adaptive Weight | - | - | - | ✓ | - | ✓ | ✓ | - | ✓ | ✓ | ✓ |
| Fixed Loss Weight | - | - | - | - | ✓ | ✓ | - | ✓ | ✓ | ✓ | ✓ |
| NME (↓) | 4.44 | 4.24 | 4.20 | 4.18 | 4.15 | 4.14 | 4.12 | 4.11 | 4.12 | 4.07 | 4.10 |

| Attention Level | Heatmap Guidance | Method | NME (%) |
|---|---|---|---|
| Without Attention | - | - | 4.14 |
| Single-Level | without Heatmap | CBAM [42] | 4.17 |
| Single-Level | without Heatmap | SK [52] | 4.17 |
| Multi-Level | without Heatmap | SDFL [21] | 4.17 |
| Multi-Level | without Heatmap | SF (Ours) | 4.15 |
| Multi-Level | Heatmap-Guided | SDFL [21] | 4.13 |
| Multi-Level | Heatmap-Guided | SF (Ours) | 4.07 |

| Method | NME, heatmap stage (%) | NME, 4th CCR stage (%) | Difference (heatmap stage minus 4th CCR stage) |
|---|---|---|---|
| Task-Wise Connection | 4.22 | 4.11 | 0.11 |
| Full Connection | 4.24 | 4.11 | 0.13 |
| CCR Connection | 4.21 | 4.07 | 0.14 |

| Method | #Params (M) | FLOPs (G) | NME (↓) |
|---|---|---|---|
| LAB [9] | 32.05 | 28.58 | 5.27 |
| AWing (baseline) [28] | 24.15 | 26.79 | 4.36 |
| SDFL [21] | 24.68 | 5.17 | 4.35 |
| HIHc (2 HGs) [8] | 14.47 | 10.29 | 4.18 |
| ADNet [47] | 13.48 | 17.47 | 4.14 |
| DTLD-s [24] | 13.30 | 2.50 | 4.14 |
| SLPT [25] | 13.19 | 6.12 | 4.14 |
| AWing 4HGs + SF | 24.15 | 26.80 | 4.27 |
| CHS 4 CCRs | 154.04 | 41.69 | 4.04 |
| CHS 4 → 2 CCRs | 89.09 | 34.25 | 4.04 |
| CHS 4 → 1 CCR | 56.62 | 30.52 | 4.05 |
| CHS 4 → 0 CCR | 24.15 | 26.80 | 4.06 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


So, J.; Han, Y. Heatmap-Guided Selective Feature Attention for Robust Cascaded Face Alignment. Sensors 2023, 23, 4731. https://doi.org/10.3390/s23104731
