The experiments use an input image resolution of 416 × 416, a batch size of 4, an initial learning rate of 0.01, 50 training epochs and the SGD optimizer for gradient descent.
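As a rough illustration, the stated settings can be collected in a small configuration object. This is only a sketch: the class and field names are hypothetical, the text does not give momentum, weight decay or a learning-rate schedule (so none are set here), and the step count assumes that the last partial batch of each epoch is kept.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Training settings stated in the text; names are illustrative only."""
    input_size: tuple = (416, 416)  # input image resolution
    batch_size: int = 4
    initial_lr: float = 0.01
    epochs: int = 50
    optimizer: str = "SGD"


def iterations_per_epoch(num_train_images: int, batch_size: int) -> int:
    """Optimizer steps per epoch, assuming the last partial batch is kept."""
    return math.ceil(num_train_images / batch_size)


cfg = TrainConfig()
# 8738 is the number of training images reported in Section 4.1.
steps = iterations_per_epoch(8738, cfg.batch_size)
total_steps = steps * cfg.epochs
print(steps, total_steps)
```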

4.1. Data Set and Evaluation Index

The RFM dataset contains a total of 12,133 images, of which 80% are used as the training and validation set and the remaining 2427 images are used for testing. Of the former, 8738 images are used for training and 968 for validation. The dataset is divided into three categories: the face category, the normative mask category and the irregular mask-wearing category. The images are not entirely ideal: to better match real human activity, some images show faces that are not masked but are otherwise obscured, and these objects are labelled as the face category. In the annotations, the face category has class number 0 and the label face; the irregularly worn mask category has class number 1 and the label WMI; and the normatively worn mask category has class number 2 and the label face_mask.
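The split sizes can be checked with a few lines of arithmetic. The sketch below uses only the counts and labels given in the text; the variable and function names are illustrative.

```python
TOTAL_IMAGES = 12_133
SPLIT = {"train": 8_738, "val": 968, "test": 2_427}

# Class numbers and labels as used in the annotations and detection figures.
CLASS_NAMES = {0: "face", 1: "WMI", 2: "face_mask"}


def split_fractions(split, total):
    """Share of the whole dataset that falls into each subset."""
    return {name: count / total for name, count in split.items()}


# The three subsets should account for every image exactly once.
assert sum(SPLIT.values()) == TOTAL_IMAGES

fractions = split_fractions(SPLIT, TOTAL_IMAGES)
for name, frac in fractions.items():
    print(f"{name}: {SPLIT[name]} images ({frac:.1%})")
```

The training and validation subsets together make up 9706 images, about 80% of the dataset, which matches the stated split.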

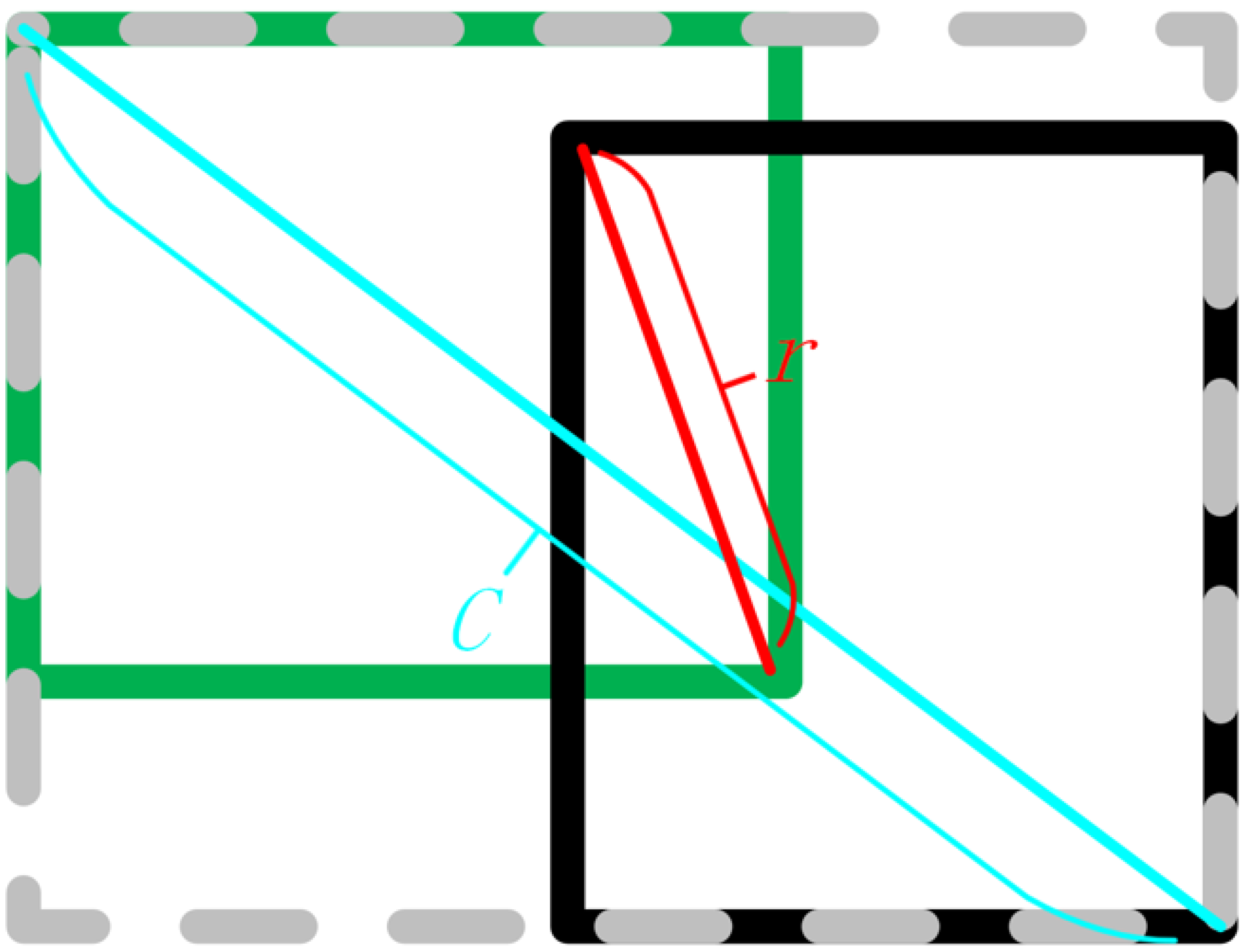

To obtain more accurate detection results, reasonable prior bounding boxes for the multi-scale objects were obtained by clustering, as shown in Table 2 for the RFM dataset.
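The text does not state which clustering algorithm was used. A common choice in the YOLO family is k-means on the (width, height) pairs of the labelled boxes with the distance d = 1 - IoU, and the following is a minimal sketch under that assumption. The function names, the mean-based centre update and the synthetic boxes are illustrative and are not taken from the paper.

```python
import random


def wh_iou(box, anchor):
    """IoU of two boxes given as (width, height), aligned at a common corner."""
    inter = min(box[0], anchor[0]) * min(box[1], anchor[1])
    union = box[0] * box[1] + anchor[0] * anchor[1] - inter
    return inter / union


def kmeans_anchors(boxes, k, iters=100, seed=0):
    """Cluster (w, h) pairs with distance 1 - IoU; return k anchors sorted by area."""
    rng = random.Random(seed)
    anchors = rng.sample(boxes, k)  # initialise from k distinct data points
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            # Highest IoU is the same as smallest 1 - IoU distance.
            best = max(range(k), key=lambda j: wh_iou(box, anchors[j]))
            clusters[best].append(box)
        new_anchors = []
        for j, members in enumerate(clusters):
            if not members:  # keep the anchor of an empty cluster unchanged
                new_anchors.append(anchors[j])
                continue
            mean_w = sum(b[0] for b in members) / len(members)
            mean_h = sum(b[1] for b in members) / len(members)
            new_anchors.append((mean_w, mean_h))
        if new_anchors == anchors:  # assignments no longer change
            break
        anchors = new_anchors
    return sorted(anchors, key=lambda a: a[0] * a[1])


# Synthetic box sizes in pixels (illustrative, not RFM data).
data_rng = random.Random(1)
boxes = (
    [(data_rng.uniform(8, 20), data_rng.uniform(8, 20)) for _ in range(50)]
    + [(data_rng.uniform(60, 90), data_rng.uniform(60, 90)) for _ in range(50)]
    + [(data_rng.uniform(200, 300), data_rng.uniform(200, 300)) for _ in range(50)]
)
anchors = kmeans_anchors(boxes, k=3)
print(anchors)
```

Using IoU rather than Euclidean distance keeps large boxes from dominating the clustering, which matters when object sizes span several scales.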

The following indicators are used to evaluate the performance improvement of the proposed method over YOLOv4. Mean Average Precision (mAP) is the mean of the average precision over all categories:

$$\mathrm{mAP} = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where $N$ is the number of categories and $AP_i$ is the average precision of category $i$, given by:

$$AP = \int_{0}^{1} P(R)\,dR$$

where $P$ (Precision) is the precision for a given category and $R$ (Recall) is the recall rate. They are defined as:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

where $TP$ is the number of positive samples that are correctly classified; $FP$ is the number of samples predicted as positive that are actually negative; and $FN$ is the number of samples predicted as negative that are actually positive.
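In practice the average precision of a category is evaluated numerically from ranked detections. The sketch below assumes all-point interpolation of the precision-recall curve, which is one common convention; the text does not say which interpolation was used. The function names and the example detections are illustrative, and the code assumes at least one ground-truth object per category.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """Area under the precision-recall curve (all-point interpolation)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # walk through detections from most to least confident
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas wherever recall increases.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class):
    """mAP: the mean of the per-category AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# Four detections for one category with four ground-truth objects (made-up data).
ap = average_precision(
    scores=[0.9, 0.8, 0.7, 0.6],
    is_true_positive=[True, False, True, True],
    num_ground_truth=4,
)
print(ap)  # 0.625
```

Metrics such as mAP@.5:.95 repeat this computation for several IoU thresholds used to decide whether a detection counts as a true positive, and then average the results.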

The F1-Score is a common measure for classification problems and is often used as the final indicator for multi-class problems. It is the harmonic mean of Precision and Recall.
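A short sketch of how Precision, Recall and the F1-Score follow from the detection counts. The function name and the counts in the example are made up for illustration.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, Recall and F1 = 2PR / (P + R) from detection counts.

    tp: true positives, fp: false positives, fn: false negatives.
    A ratio with a zero denominator is reported as 0.0.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Illustrative counts: 80 correct detections, 20 false alarms, 40 misses.
p, r, f1 = precision_recall_f1(tp=80, fp=20, fn=40)
print(f"P={p:.3f} R={r:.3f} F1={f1:.3f}")  # P=0.800 R=0.667 F1=0.727
```

Because the harmonic mean is dominated by the smaller of the two values, a model cannot reach a high F1-Score by trading away Recall for Precision or the reverse.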

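The Log-Average Miss Rate (LAMR) summarizes how many objects in the test set are missed. The larger the LAMR, the more objects are missed; the smaller the LAMR, the fewer objects are missed and the better the model performs. The miss rate is expressed as:

$$MR = \frac{FN}{TP + FN} = 1 - R$$

that is, the fraction of actually positive samples that the model fails to detect.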
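The text does not spell out how the miss rate is averaged. A widely used convention, assumed here, samples the curve of miss rate against false positives per image (FPPI) at nine reference points evenly spaced in log space between 10^-2 and 10^0 and takes the geometric mean. The function name and the example curve are illustrative.

```python
import math


def log_average_miss_rate(fppi, miss_rate, num_points=9):
    """Geometric mean of miss rates sampled at log-spaced FPPI reference points.

    fppi and miss_rate describe one curve, ordered by increasing fppi.
    """
    # Prepend a sentinel so every reference point has a sample to its left;
    # before any detection is accepted, every object is missed (miss rate 1).
    fppi = [-1.0] + list(fppi)
    miss_rate = [1.0] + list(miss_rate)
    refs = [10 ** (-2.0 + 2.0 * i / (num_points - 1)) for i in range(num_points)]
    sampled = []
    for ref in refs:
        # Miss rate at the largest FPPI that does not exceed the reference point.
        idx = max(i for i, f in enumerate(fppi) if f <= ref)
        sampled.append(max(miss_rate[idx], 1e-10))  # avoid log(0)
    return math.exp(sum(math.log(m) for m in sampled) / len(sampled))


# A made-up three-point curve: the miss rate falls as more false positives are allowed.
lamr = log_average_miss_rate(fppi=[0.0, 0.05, 0.5], miss_rate=[0.6, 0.4, 0.2])
print(round(lamr, 4))
```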

4.2. Ablation Studies

A combination experiment of different modules is set up to analyze the influence of the NCIoU loss function, the improved confidence function and AM-NFPN on model performance. The results are shown in Table 3.

The first group in the ablation study table is the benchmark model. Compared to the benchmark, each of the three strategies improves all evaluation indicators when used alone. The NCIoU strategy gives the largest improvement: mAP@.5:.95 and AP75 increase by 3.34% and 4.91%, respectively. The confidence scheme gives the smallest improvement, but it still improves every indicator by at least 2% over the benchmark. All three experiments that combine two strategies give better results than a single strategy, and among them the models with the NCIoU strategy perform better than the model without it. NCIoU therefore has the greatest impact on the model and the best improvement effect among the three strategies. When the three strategies are used together, the overall performance of the model is the best, with optimal values on multiple indicators: mAP@.5:.95, AP75 and Recall improve by 3.98%, 5.69% and 5.08%, respectively, and the missed detection rate is reduced by 5.7%. This configuration is the final algorithm used to detect faces and identify whether masks are worn in a standardized manner.

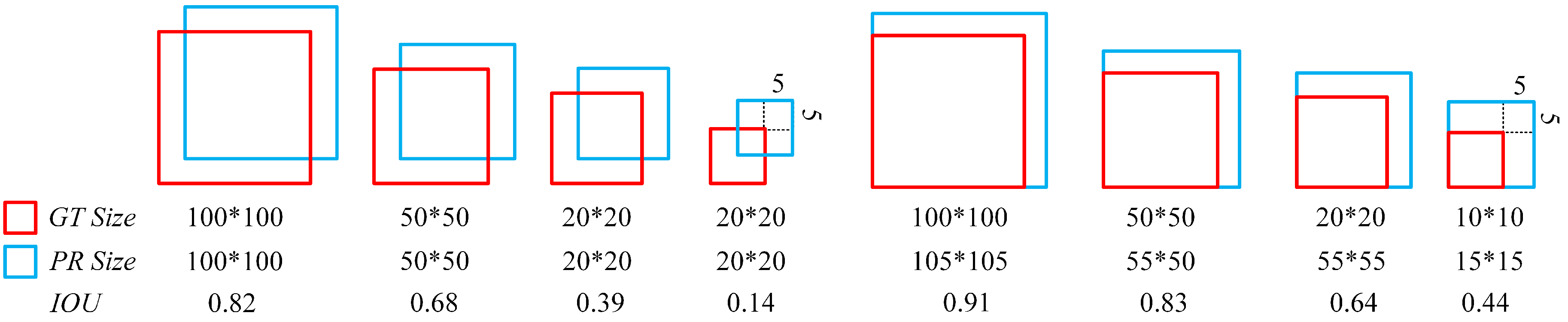

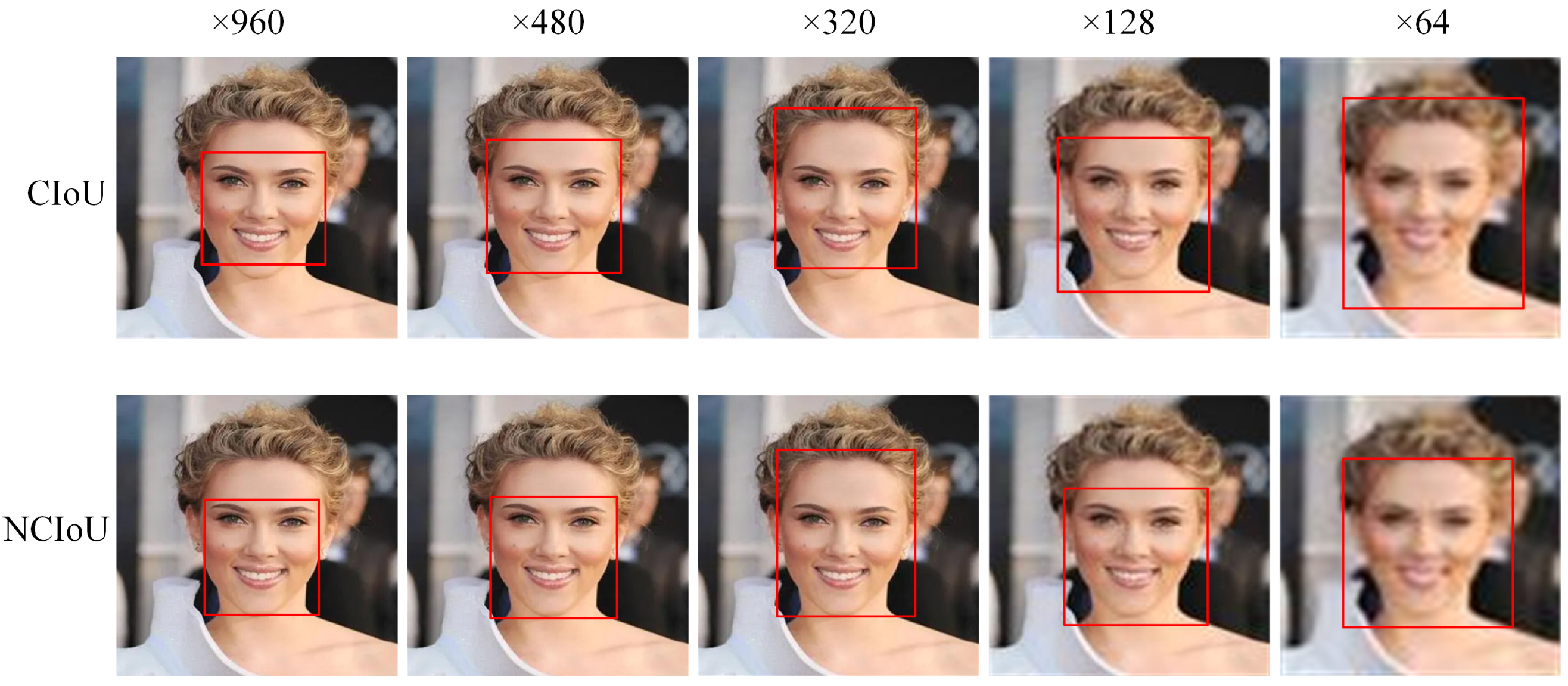

Figure 10 shows test images with sizes from 64 to 960 pixels, with the CIoU and NCIoU functions applied across the image pyramid. The object in the test image is a face. The results show that the lower the resolution, the harder it is for the loss function to handle the object. As discussed in Section 3.1, rich bounding box information can provide more accurate prediction boxes, which helps detect more objects with higher confidence. Moreover, the additional box information not only brings the prediction box closer to the ground-truth box, but also adjusts the aspect ratio of the bounding box to obtain a higher intersection over union and thus more accurate detection, which is clearly demonstrated in the test images. It is worth noting that NCIoU still provides a more reasonable prediction box than CIoU in extreme situations.
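For reference, the CIoU baseline combines three pieces of box information: the overlap, the distance between box centres and the consistency of the aspect ratios. The sketch below follows the published CIoU definition for a single pair of boxes. It does not reproduce NCIoU, whose additional penalty term is defined in Section 3.1, and the function name and box format are illustrative choices.

```python
import math


def ciou_loss(pred, target, eps=1e-9):
    """CIoU loss for two boxes in (x1, y1, x2, y2) format."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Intersection over union.
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Squared centre distance, normalised by the squared diagonal
    # of the smallest box enclosing both inputs.
    rho2 = ((px1 + px2 - tx1 - tx2) ** 2 + (py1 + py2 - ty1 - ty2) ** 2) / 4.0
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency term v and its trade-off weight alpha.
    v = (4.0 / math.pi ** 2) * (
        math.atan(tw / (th + eps)) - math.atan(pw / (ph + eps))
    ) ** 2
    alpha = v / (1.0 - iou + v + eps)

    return 1.0 - iou + rho2 / c2 + alpha * v


print(ciou_loss((0, 0, 10, 10), (0, 0, 10, 10)))    # close to 0 for identical boxes
print(ciou_loss((0, 0, 10, 10), (20, 20, 30, 30)))  # above 1 for distant boxes
```

Unlike plain IoU, the loss still varies for boxes that do not overlap at all, because the centre-distance term remains informative.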

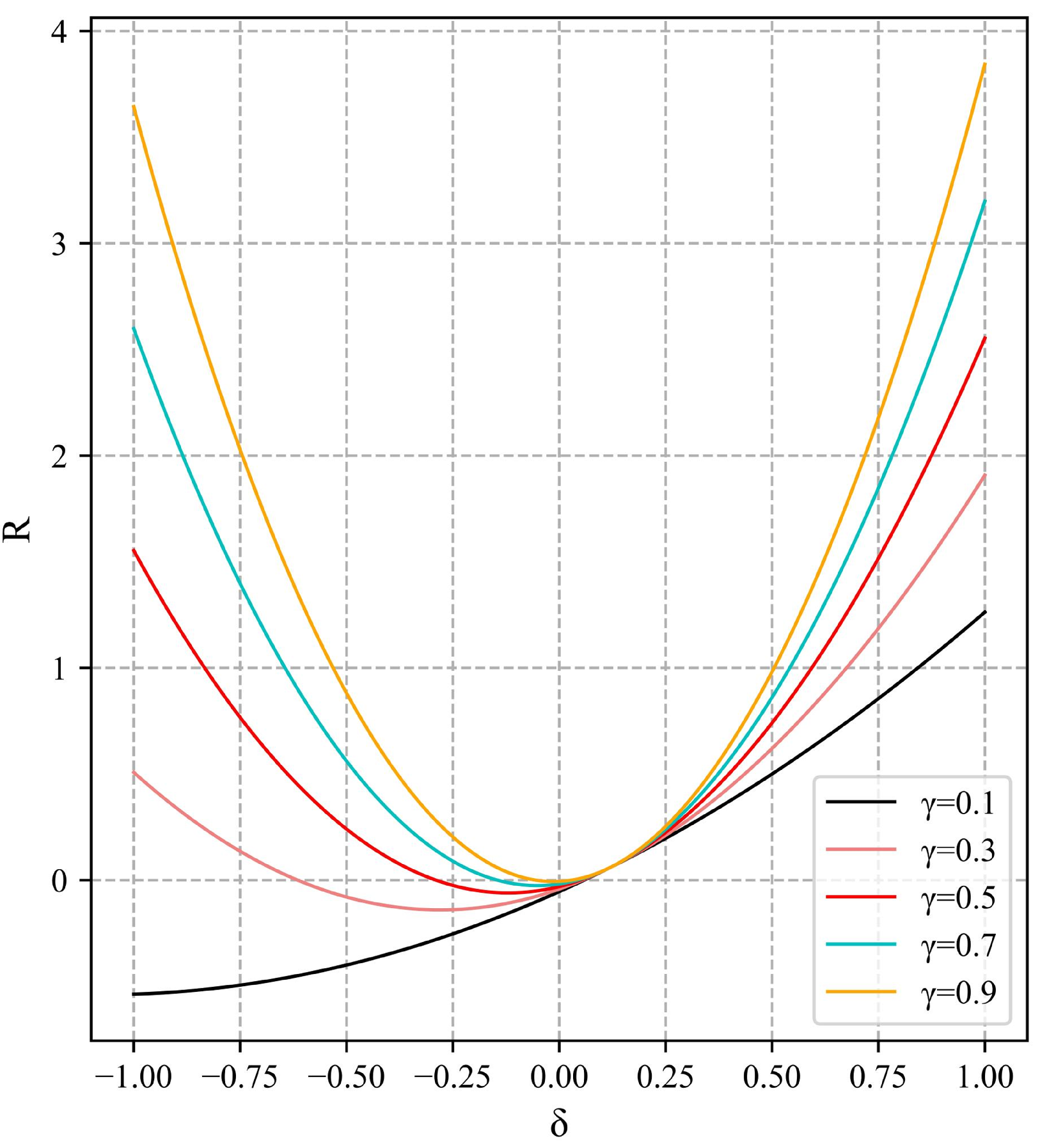

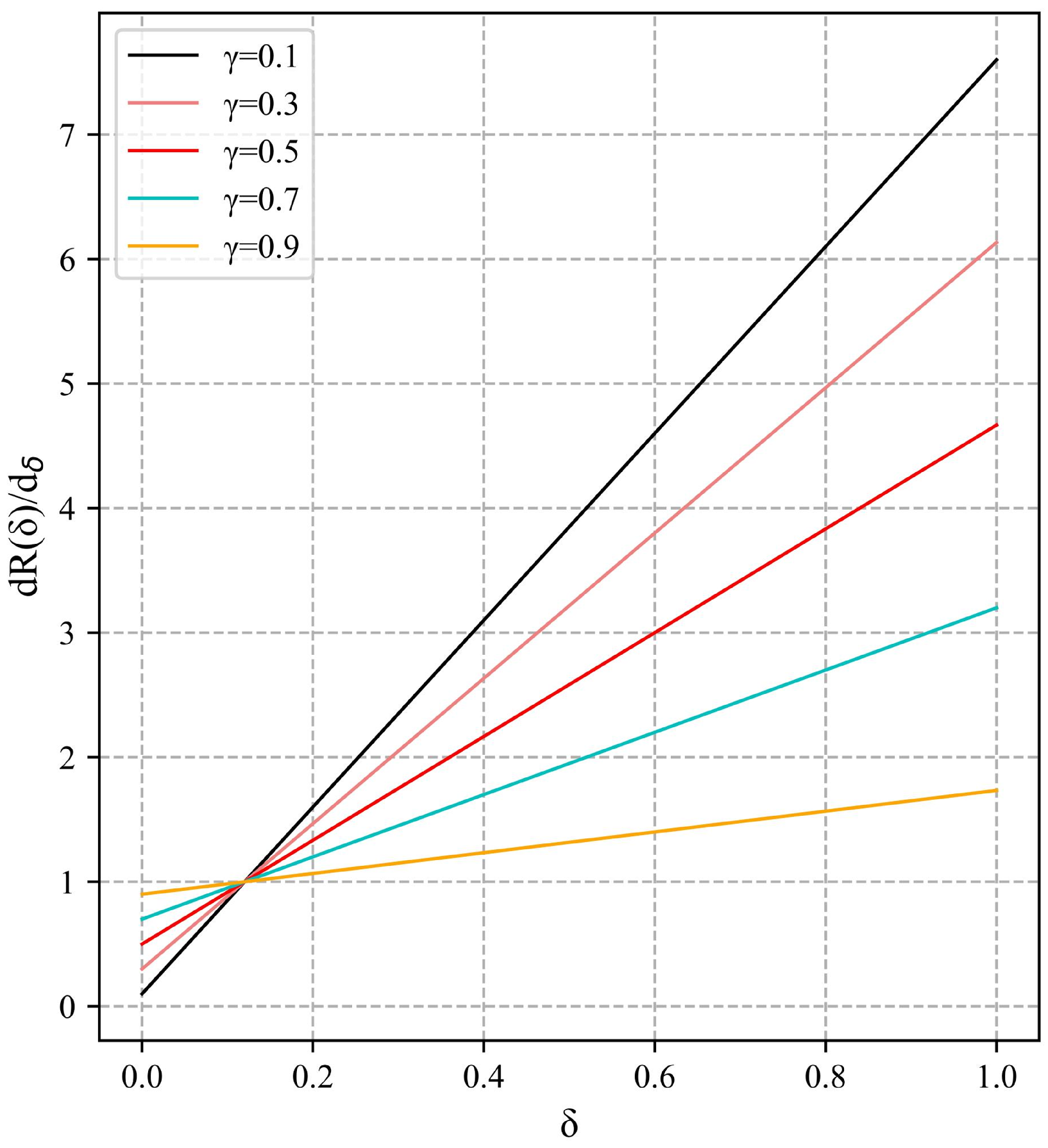

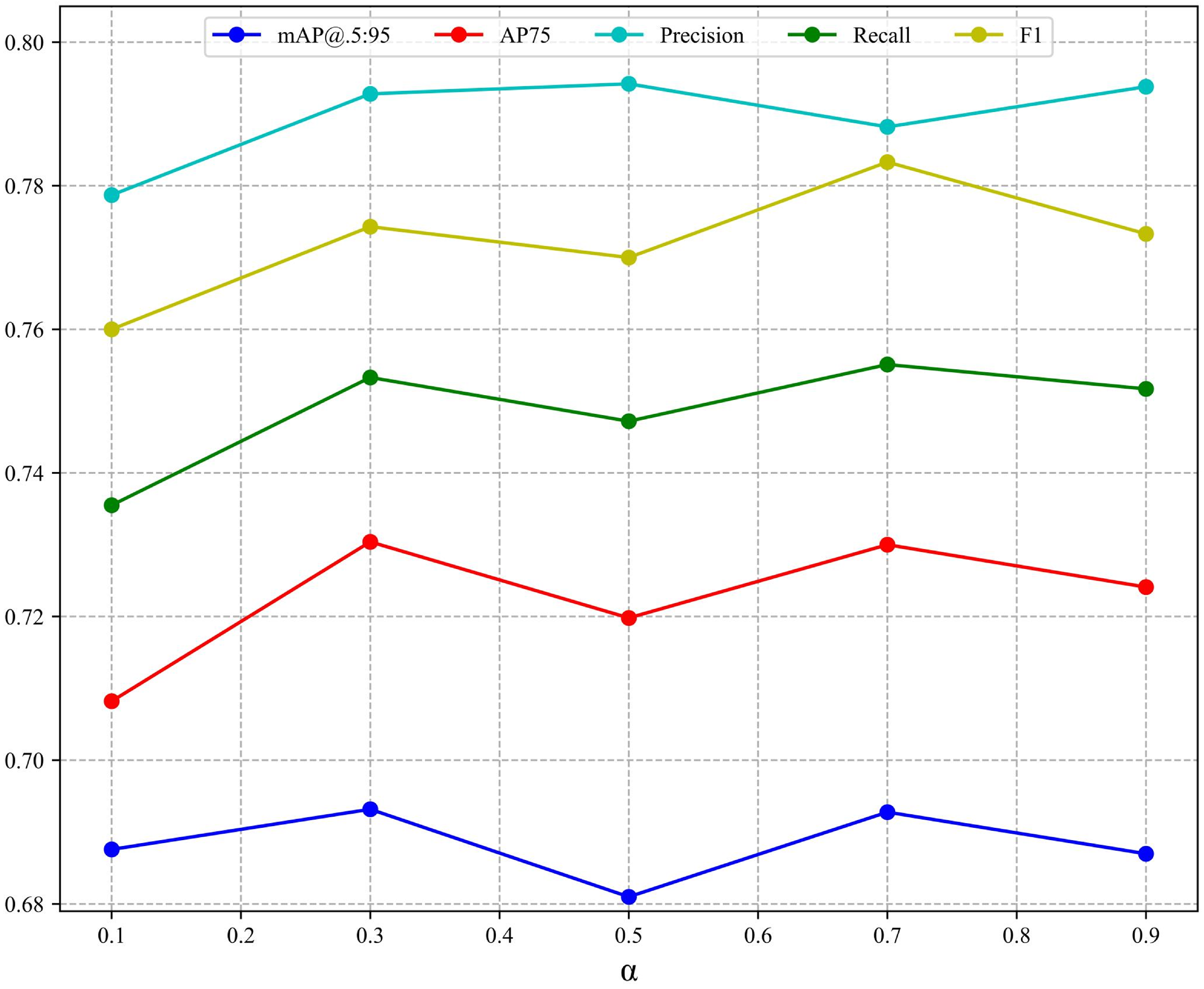

The advantage of NCIoU is that its parameter can be adjusted to adapt the function to changing detection scenarios: varying the parameter gives the function different characteristics to cope with complex scenarios. The variation of each index of NCIoU for different parameter values is shown in Figure 11. There are five groups of experiments, with parameter values of 0.1, 0.3, 0.5, 0.7 and 0.9. The figure shows that the metrics improve markedly when the parameter is 0.3 or 0.7, although the F1 score drops abruptly at 0.7. Overall, model performance is optimal when the parameter is 0.3. When the parameter is 0.5, the improvement is the smallest, but NCIoU still performs better than CIoU.

CIoU has gained a high degree of recognition, and many improved functions based on it have since been proposed [39,40,41]. We conducted comparative experiments on RFM with several bounding box regression functions, as shown in Table 4. EIoU is the most effective of these published methods, but it still trails NCIoU by about 2% in several metrics.

The penalty term applied to CIoU is also applied to the commonly used bounding box regression loss functions DIoU and GIoU to verify its effect on other functions, as shown in Table 5.

All metrics of both loss functions improve after adding the penalty term, most noticeably mAP@.5:.95. Overall, the penalty term improves GIoU more than DIoU. This is because GIoU itself contains less bounding box information than DIoU, so the added box information plays a bigger role; it also shows that the more information the loss function contains, the better the performance. The parameter of the penalty term can be adjusted for each function to obtain better results, and adjusting it for different datasets can improve the generalization of the function and make it more adaptable to different scenarios.
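The difference in box information between GIoU and DIoU can be seen in a small example. The sketch below computes the standard IoU, GIoU and DIoU values for one pair of boxes; it does not include the paper's penalty term, and the function name and example boxes are illustrative.

```python
def iou_giou_diou(a, b):
    """Return (IoU, GIoU, DIoU) for two boxes in (x1, y1, x2, y2) format."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    iou = inter / union

    # Smallest box enclosing both inputs.
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)

    # GIoU penalises the empty part of the enclosing box (area information only).
    enclose_area = cw * ch
    giou = iou - (enclose_area - union) / enclose_area

    # DIoU penalises the normalised squared distance between the box centres.
    rho2 = ((ax1 + ax2 - bx1 - bx2) ** 2 + (ay1 + ay2 - by1 - by2) ** 2) / 4.0
    diou = iou - rho2 / (cw ** 2 + ch ** 2)
    return iou, giou, diou


outer = (0, 0, 10, 10)
centred = iou_giou_diou(outer, (4, 4, 6, 6))  # small box in the middle
corner = iou_giou_diou(outer, (0, 0, 2, 2))   # same small box in a corner
print(centred)
print(corner)
```

When one box lies inside the other, GIoU reduces to IoU and cannot tell the two placements apart, whereas DIoU still prefers the centred box. This is consistent with the observation that a function carrying less box information gains more from an added penalty term.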

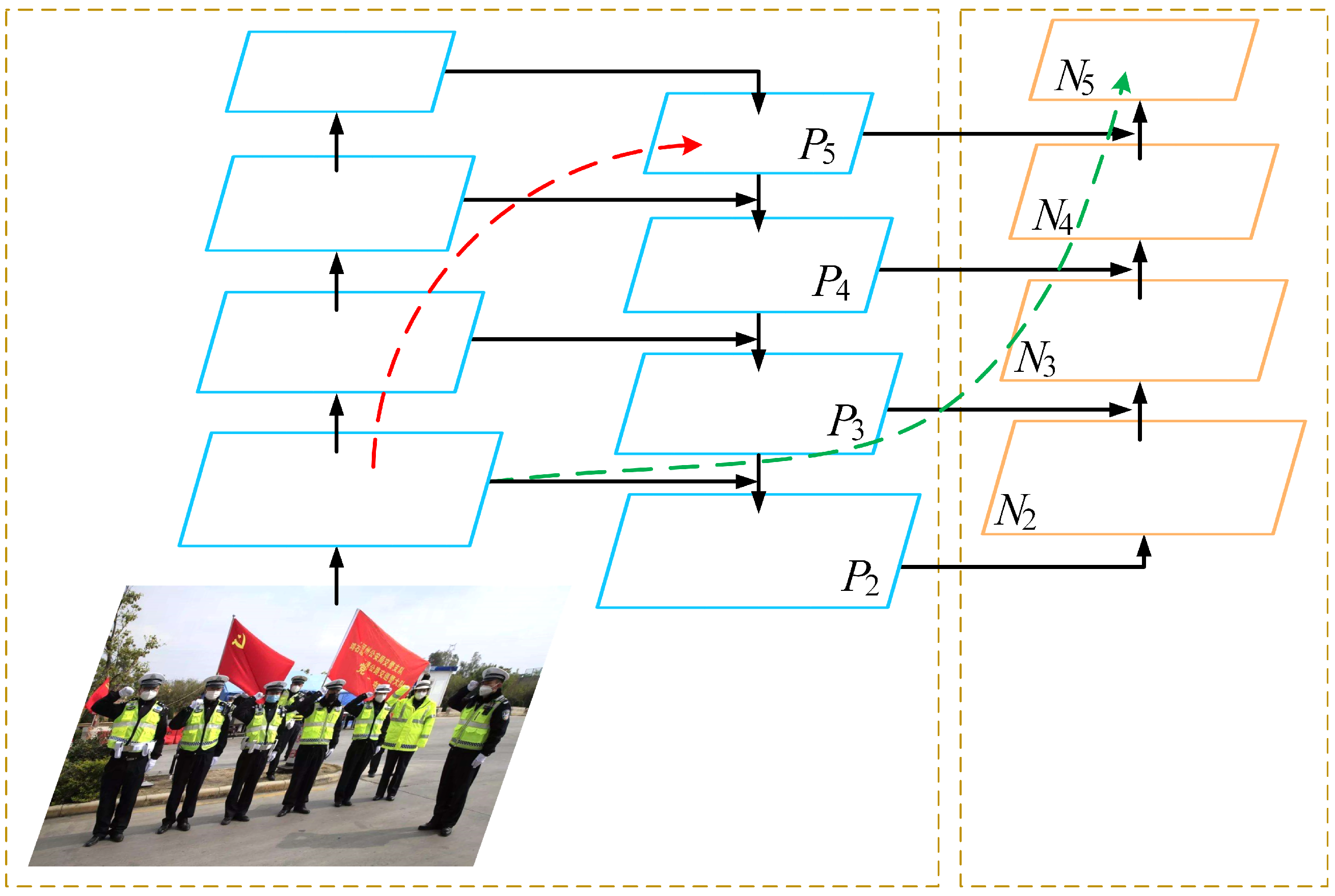

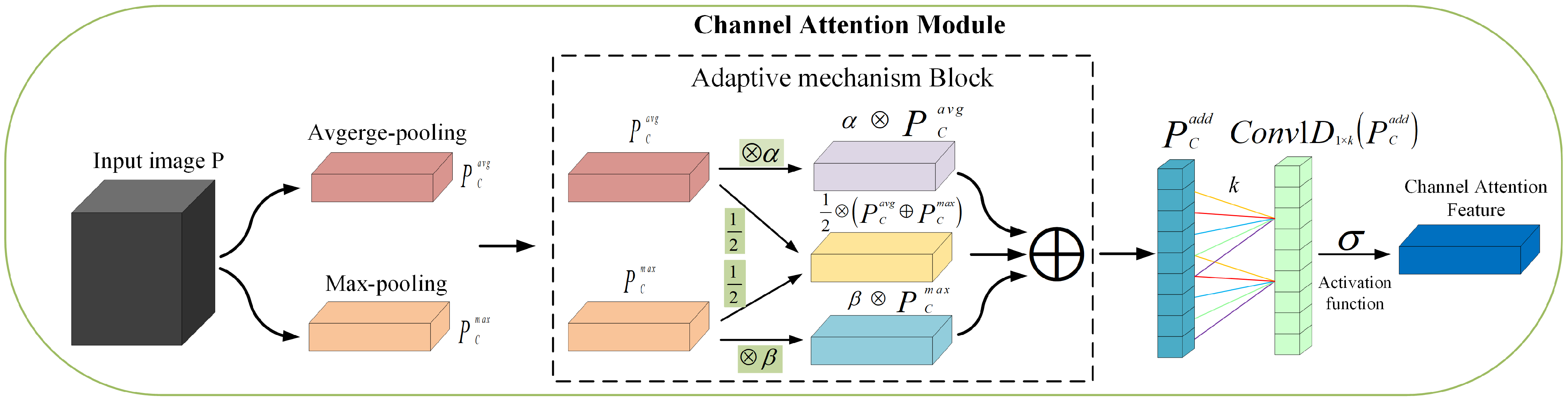

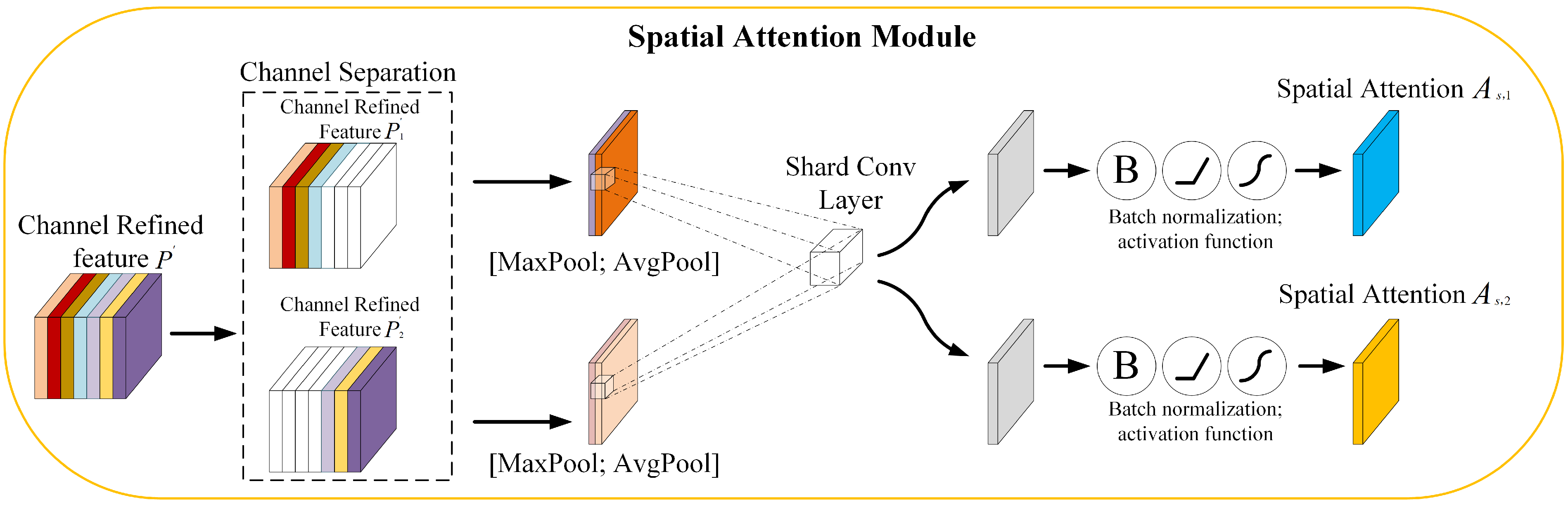

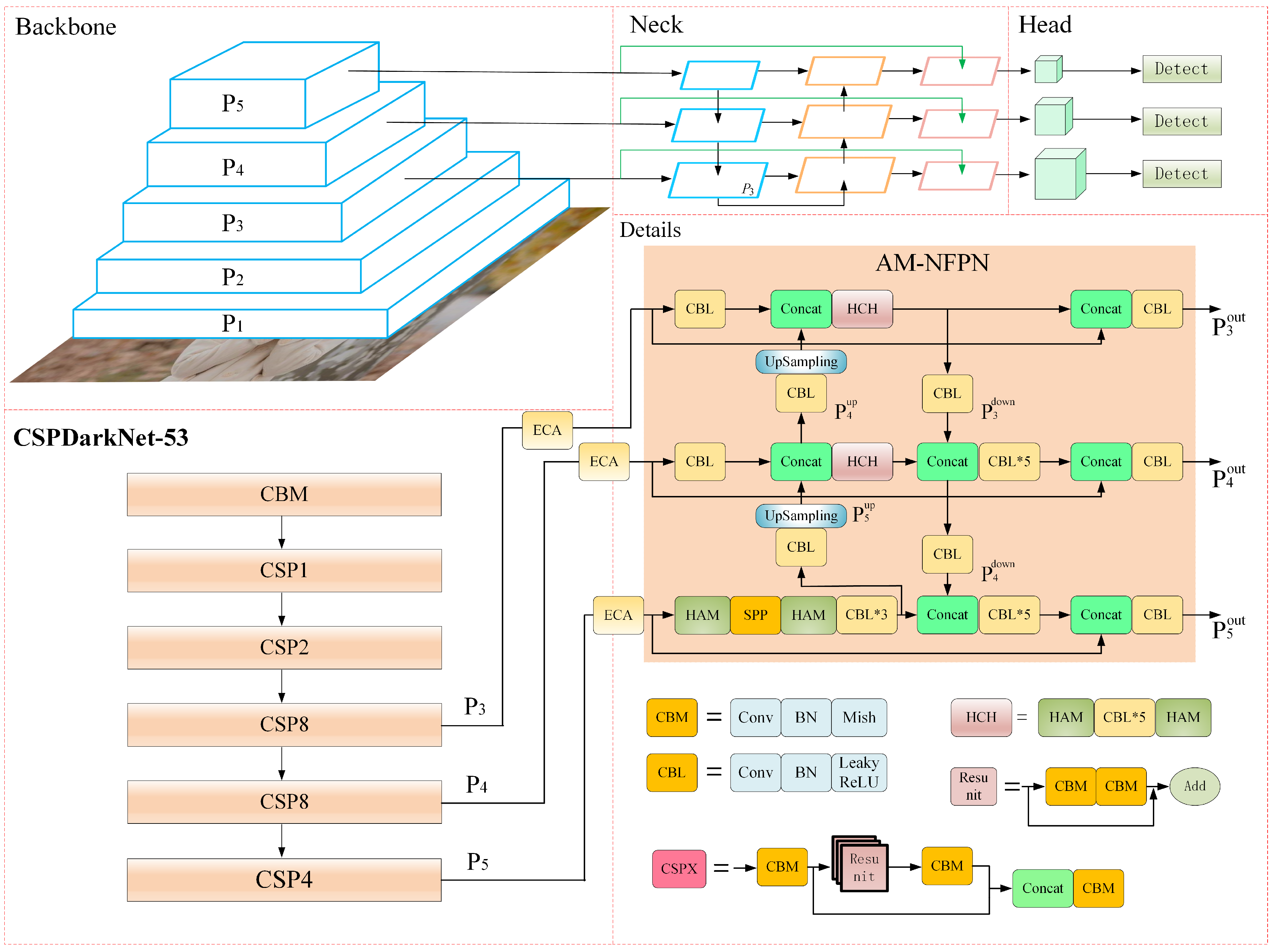

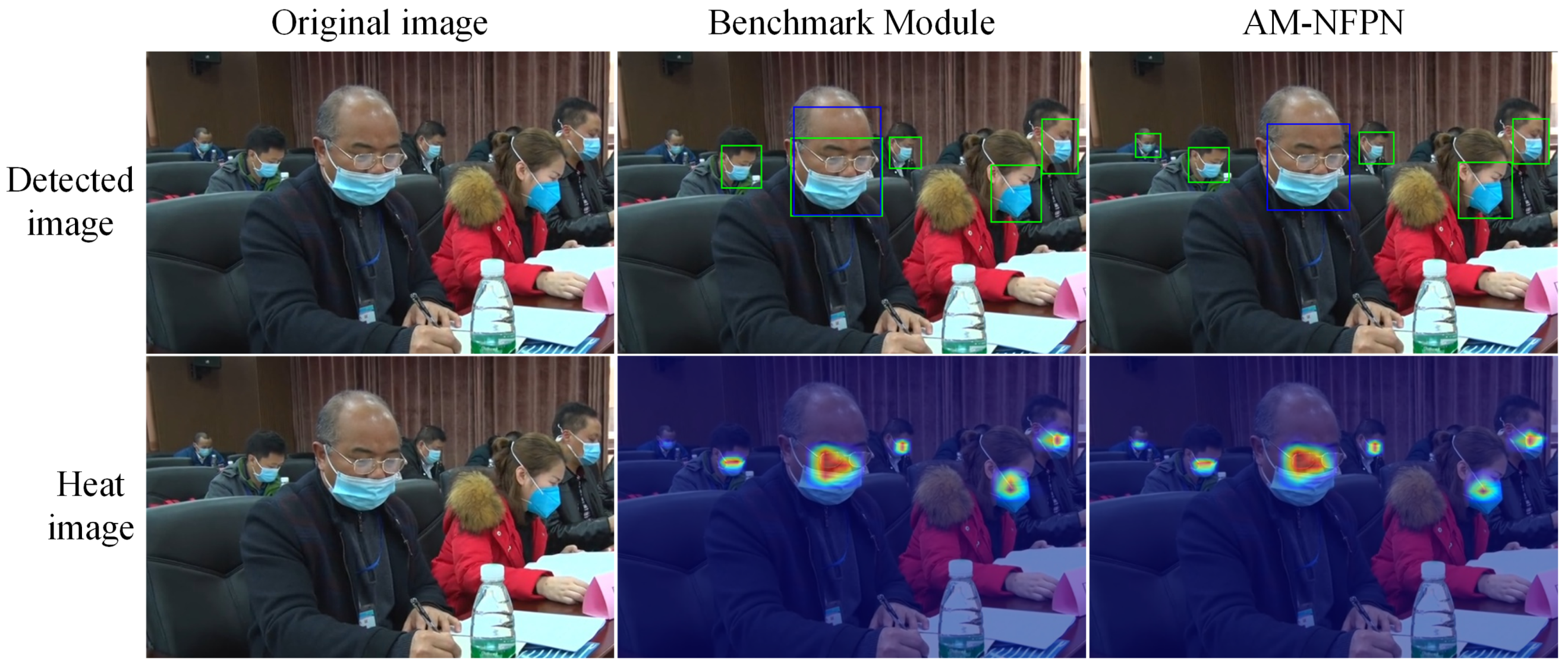

As shown in Figure 12, the actual detection results are visualized together with heat maps; the blue boxes represent irregular mask wear and the green boxes represent regulated mask wear. The heat map shows that the baseline network pays no attention to the smaller objects: with less information, the model assigns a low probability to the presence of an object there, so missed detections are more prominent.

After AM-NFPN compensates for and highlights the object information, the feature map contains enough information, even for smaller objects, for the model to identify the presence of an object, which improves detection accuracy. In addition, the three categories of face, normative mask wear and irregular mask wear share common attributes and features. When the way a mask is worn lies on or close to the boundary between the normative and irregular categories, either description can apply, and the model can easily return two prediction boxes of different categories for the same object. This situation is especially prominent when the information is insufficient. Lacking sufficient detail, the benchmark model predicts an irregular-category object as both normative and irregular mask wear, whereas AM-NFPN, which incorporates more target information, largely avoids this situation and reduces the false detection rate.

Commonly used feature fusion networks, such as the feature fusion module (FFM) of FSSD [42], FPN and PANet, fuse multi-scale feature maps in a certain ratio. We compared these methods and the pyramidal feature hierarchy (PFH) method experimentally on RFM, as shown in Table 6. AM-NFPN clearly achieves better results. This is mainly because less object information is lost during fusion, and the gap shows most clearly in actual detection results for small, blurred and occluded objects.

In addition, several commonly used confidence loss functions are compared experimentally on RFM, as shown in Table 7. The Focal function, which has the worst overall performance, obtains better Precision, but at the cost of Recall. The difference in overall performance between MSE and BCE is very small, so the two functions are unlikely to differ significantly in practical applications. Combining the two functions, with the confidence loss calculated in a targeted way, exploits the strengths of both to some extent and yields better results.
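To make the comparison concrete, the sketch below evaluates the three baseline confidence losses for a single predicted confidence p and a binary label y. It assumes the standard focal loss with its commonly used defaults (gamma = 2, alpha = 0.25), which may differ from the settings used in the experiments, and it does not reproduce the paper's combined MSE and BCE scheme. The function names are illustrative.

```python
import math


def bce(p, y, eps=1e-7):
    """Binary cross-entropy for predicted confidence p and label y in {0, 1}."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def mse(p, y):
    """Squared error between predicted confidence and label."""
    return (p - y) ** 2


def focal(p, y, gamma=2.0, alpha=0.25, eps=1e-7):
    """Focal loss: cross-entropy scaled down for well-classified samples."""
    p = min(max(p, eps), 1.0 - eps)
    p_t = p if y == 1 else 1.0 - p              # probability of the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class balancing weight
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


# An easy positive (p = 0.9) and a hard positive (p = 0.1).
for p in (0.9, 0.1):
    print(f"p={p}: BCE={bce(p, 1):.4f} MSE={mse(p, 1):.4f} Focal={focal(p, 1):.4f}")
```

The focal loss shrinks the contribution of easy samples far more strongly than BCE does, so training concentrates on hard samples. MSE, by contrast, is bounded by 1 for each sample, while BCE grows without bound as a confident prediction becomes wrong.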

4.3. Comparative Experiment

Table 8 presents experimental comparisons of several models with different input image sizes. For each model, the larger the input image, the better the training results: several evaluation metrics improve because a higher-resolution input image contains more detailed information. However, a larger image takes more time to train.

Our algorithm achieves the highest values for AP75 and mAP@.5:.95, about 2% higher than the highly acclaimed YOLOv7-X, which achieves the second-best result in the comparison experiments for the three input image sizes. Compared with the benchmark model YOLOv4, several metrics improve considerably, mainly because the final feature maps used for detection contain more detailed information. For the YOLOX algorithm with its improved CSPDarkNet-53 network, the S, M and L feature extraction networks are relatively small, but their accuracy in detecting faces and masks is insufficient, and the mAP is greatly reduced under different thresholds. For the most stringent metric, mAP@.5:.95, with an input image size of 416 × 416, our algorithm is 2.55% higher than the second-best YOLOv7-X, 12.64% higher than the worst-performing Faster R-CNN, and still 12.23% higher than SSD with a 512 × 512 input image.

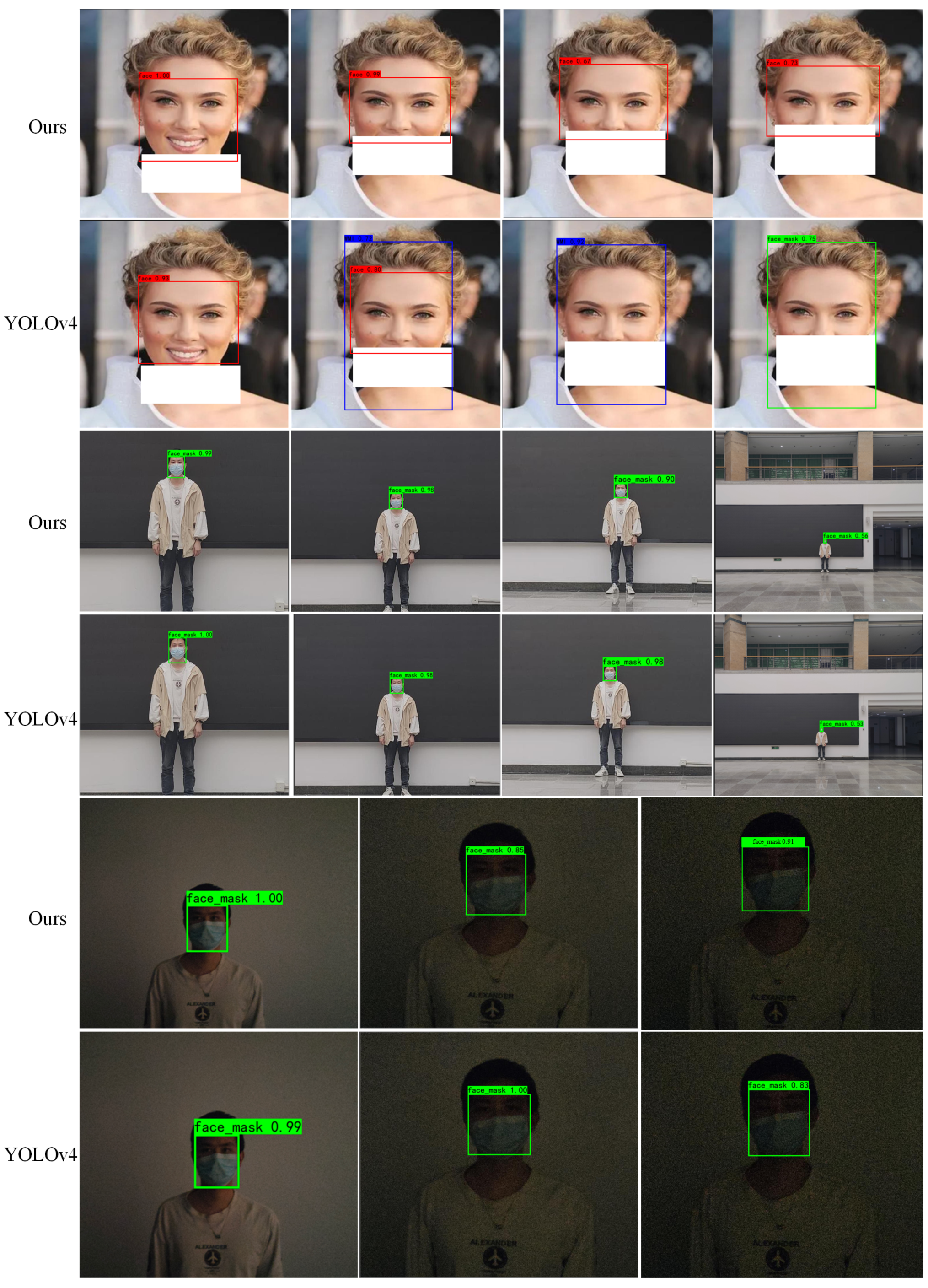

We selected YOLOv4 and YOLOv7-X, which perform better among the compared algorithms, and visualized and analyzed the detection results of each algorithm in the same experimental environment, as shown in Figure 13. Standard mask objects are marked as face_mask in green, followed by the confidence score; irregular mask-wearing objects are marked as WMI in blue; face objects are marked as face in red.

Compared with the other two algorithms, our algorithm gives better detection results: it detects objects missed by the other two algorithms, and objects detected by all algorithms tend to have a higher confidence level. YOLOv4 and YOLOv7-X detect most of the objects, but factors such as object pose, focal distance during imaging and occlusion lead to missed detections, and these factors are precisely the key issues that limit the accuracy of object detection algorithms. Our algorithm copes better with these unfavorable factors and detects small or occluded objects that are difficult for the other algorithms, which reduces the overall missed detection rate and false detection rate and allows it to adapt to more complex scenarios.

The heat map visualization of our algorithm, YOLOv4 and YOLOv7-X is shown in Figure 14, where the darker the color, the higher the confidence level of the object. The heat map of our algorithm has the darkest color in the regions containing objects, indicating a higher confidence level when detecting them, which is confirmed by the detection results in Figure 13. Compared with the other two algorithms, our algorithm attends to more objects, including, to a certain degree, objects missed by the other algorithms, and thus detects more objects with higher detection accuracy. Although the YOLOv4 heat map is lighter than ours, it is darker than that of YOLOv7-X and its focus range is smaller, which means that it has higher confidence but its box positions are not accurate enough.

Assessing an algorithm requires considering not only its detection accuracy but also its detection speed. Detecting faces and masks in public places needs an algorithm with both excellent accuracy and speed; high accuracy with low speed, or vice versa, will not accomplish the task. The accuracy and speed of several algorithms are therefore shown in Figure 15. The detection accuracy of our algorithm is the most competitive. Although its detection speed is not the fastest, it is less than two milliseconds slower than the widely used YOLOv4 algorithm, which achieves a good balance of accuracy and speed, and it is slightly better than YOLOv7-X in both speed and accuracy. The YOLOX algorithm increases detection speed by reducing the complexity of its backbone feature extraction network, but its accuracy decreases, making it difficult to complete the detection task. The YOLOX-X algorithm has good speed and accuracy, but still falls short of our algorithm. Other algorithms such as SSD and EfficientDet meet the requirements for detection speed but still have a certain gap in accuracy. Overall, our algorithm balances detection accuracy and speed and can meet the requirement of detecting faces and masks in real time.

Figure 16 compares the detection results of our algorithm and the YOLOv4 algorithm under different occlusion levels, illumination and distances. For varying degrees of facial occlusion, YOLOv4 again returns two detection boxes for the same object, and its false detections are more serious. Our algorithm handles occlusion better than YOLOv4, but the more difficult facial occlusion cases also produce false detections. YOLOv7-X does not return two predicted boxes, but instead fails to detect objects, as shown in the two images with more occlusion. Our algorithm performs consistently with the YOLOv4 algorithm at different distances and illumination levels, and both detect the objects correctly in the given examples. For other examples with weaker illumination or longer distances, all algorithms fail to detect the object. YOLOv7-X fails to detect the object in both the farthest-distance image and the darkest image given in the figure. Overall, our algorithm outperforms the other algorithms in the comparison experiments, and its combined detection performance is better across different scenarios, which is beneficial for more applications.