Validation of an Algorithm for Measurement of Sedentary Behaviour in Community-Dwelling Older Adults

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Experimental Protocol

2.3. Procedure

2.3.1. Scripted Activities

2.3.2. Non-Scripted Activities

2.3.3. Data Management

2.3.4. Algorithm Implementation

Algorithm 1. Pseudocode for sitting, lying, and upright bouts.

```text
Data: acc = [ax, ay, az] (note 1)
ax_filtered ← butterworth(ax, order = 2, cutoff = 17 Hz)
ay_filtered ← butterworth(ay, order = 2, cutoff = 17 Hz)
az_filtered ← butterworth(az, order = 2, cutoff = 17 Hz)
for (every 0.1 s)
    std_ax_filtered ← stdev(ax_filtered)
    std_ay_filtered ← stdev(ay_filtered)
    std_az_filtered ← stdev(az_filtered)
    tilt_angle_VT ← ·()
    tilt_angle_ML ← ·()
    tilt_angle_AP ← ·()
end
std_sum ← std_ax_filtered + std_ay_filtered + std_az_filtered
std_sum_filtered ← butterworth(std_sum, order = 2, cutoff = 1 Hz)
tilt_angle_VT_filtered ← butterworth(tilt_angle_VT, order = 2, cutoff = 0.25 Hz)
tilt_angle_ML_filtered ← butterworth(tilt_angle_ML, order = 2, cutoff = 0.25 Hz)
tilt_angle_AP_filtered ← butterworth(tilt_angle_AP, order = 2, cutoff = 0.25 Hz)
for (every 0.1 s)
    create empty array to store upright movement
end
if ceiling(mean(tilt_angle_VT_filtered)) ≥ 150 (note 2)
    if ceiling(mean(tilt_angle_ML_filtered)) ≥ 90 (note 2)
        if ceiling(mean(tilt_angle_AP_filtered)) ≥ 90 (note 2)
            for (every 0.1 s)
                if std_sum_filtered ≥ mean(std_sum_filtered)
                    assign 1 to the array (note 3)
                end
            end
        end
    end
else if tilt_angle_VT_filtered ≥ 140 (note 2) and tilt_angle_AP_filtered ≥ 75 (note 2)
    for (every 0.1 s)
        assign 1 to the array (note 3)
    end
end
find start_frame and end_frame of potential upright bouts and store in an array
Result: MoveArray [start_frame, end_frame] (note 4)
for every two consecutive potential upright bouts
    if mean(tilt_angle_AP_filtered) of current potential upright bout < 40 (note 5)
        label current potential upright bout as “Lying”
    else if mean(tilt_angle_AP_filtered) of current potential upright bout < 80 (note 5)
        label current potential upright bout as “Sitting”
    else
        label current potential upright bout as “Upright”
    end
    if mean(tilt_angle_AP_filtered) of current non-upright bout < mean(tilt_angle_AP_filtered) of preceding upright bout / 2.5
        label current non-upright bout as “Lying”
    else if mean(tilt_angle_AP_filtered) of current non-upright bout < mean(tilt_angle_AP_filtered) of preceding upright bout
        label current non-upright bout as “Sitting”
    else
        label current non-upright bout as “Upright”
    end
end
Result: Data_Label = [array of labelled bouts]
```
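The following is a minimal Python sketch of Algorithm 1, intended only to make its control flow concrete; it is not the authors' implementation. It rests on assumptions that this section does not state: a raw sampling rate of 100 Hz (`FS`), accelerometer columns ordered VT, ML, AP in units of g, zero-phase (forward-backward) low-pass Butterworth filtering, and a hypothetical tilt-angle formula (the angle between each axis and the window's mean acceleration vector), since the tilt-angle expressions are not specified above. The `else if` branch is read as a per-window condition, and a non-upright bout with no preceding candidate bout is labelled "Unknown". All function names are illustrative. NumPy and SciPy are required.

```python
import numpy as np
from scipy.signal import butter, filtfilt

FS = 100.0  # ASSUMED raw sampling rate in Hz (must exceed 34 Hz for the 17 Hz cutoff)
WIN = 0.1   # window length in seconds, as in Algorithm 1


def lowpass(x, cutoff, fs, order=2):
    """Order-2 low-pass Butterworth; zero-phase filtfilt is an ASSUMPTION."""
    b, a = butter(order, cutoff, btype="low", fs=fs)
    return filtfilt(b, a, x)


def window_features(acc, fs=FS):
    """acc: (N, 3) array, columns ASSUMED to be VT, ML, AP (g).
    Returns per-0.1 s std_sum and tilt angles (degrees) for VT, ML, AP."""
    filt = np.column_stack([lowpass(acc[:, i], 17.0, fs) for i in range(3)])
    n = int(round(WIN * fs))            # samples per 0.1 s window
    m = len(filt) // n                  # number of complete windows
    win = filt[: m * n].reshape(m, n, 3)
    std_sum = win.std(axis=1).sum(axis=1)  # population std per axis, summed
    # HYPOTHETICAL tilt formula: angle between each axis and the window's
    # mean acceleration (gravity) vector.
    mean = win.mean(axis=1)
    norm = np.maximum(np.linalg.norm(mean, axis=1, keepdims=True), 1e-9)
    tilt = np.degrees(np.arccos(np.clip(mean / norm, -1.0, 1.0)))
    return std_sum, tilt[:, 0], tilt[:, 1], tilt[:, 2]


def upright_candidates(std_f, vt, ml, ap):
    """Return a 0/1 array; 1 marks a window in a potential upright bout."""
    move = np.zeros(len(std_f), dtype=int)
    if np.ceil(vt.mean()) >= 150:
        if np.ceil(ml.mean()) >= 90 and np.ceil(ap.mean()) >= 90:
            move[std_f >= std_f.mean()] = 1
    else:
        # Per-window reading of the else-branch (ASSUMPTION).
        move[(vt >= 140) & (ap >= 75)] = 1
    return move


def bouts(move):
    """Runs of equal values as (start, end_exclusive, value); move must be non-empty."""
    edges = np.flatnonzero(np.diff(move)) + 1
    starts = np.concatenate(([0], edges))
    ends = np.concatenate((edges, [len(move)]))
    return [(int(s), int(e), int(move[s])) for s, e in zip(starts, ends)]


def label_bouts(move, ap):
    """Label candidate bouts by absolute AP tilt, and the bouts between them
    relative to the preceding candidate bout's mean AP tilt."""
    labels, prev_ap = [], None
    for s, e, up in bouts(move):
        m = ap[s:e].mean()
        if up:
            lab = "Lying" if m < 40 else "Sitting" if m < 80 else "Upright"
            prev_ap = m
        elif prev_ap is None:
            lab = "Unknown"  # no preceding candidate bout (ASSUMED fallback)
        else:
            lab = "Lying" if m < prev_ap / 2.5 else "Sitting" if m < prev_ap else "Upright"
        labels.append((s, e, lab))
    return labels


def classify(acc, fs=FS):
    """End-to-end sketch; returns (start_window, end_window, label) tuples."""
    std_sum, vt, ml, ap = window_features(acc, fs)
    fw = 1.0 / WIN  # feature series rate: one value per 0.1 s window = 10 Hz
    std_f = lowpass(std_sum, 1.0, fw)
    vt_f, ml_f, ap_f = (lowpass(x, 0.25, fw) for x in (vt, ml, ap))
    move = upright_candidates(std_f, vt_f, ml_f, ap_f)
    return label_bouts(move, ap_f)
```

Calling `classify(acc)` on a 60 s recording at the assumed 100 Hz yields contiguous `(start_window, end_window, label)` tuples covering all 600 windows of 0.1 s. The angle thresholds (150°, 140°, 90°, 80°, 75°, 40°) are only meaningful if the sensor orientation and tilt convention match those used by the authors.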

2.3.5. Data Analysis

3. Results

3.1. Participants

3.2. Inter-Rater Reliability

3.3. Criterion Validity

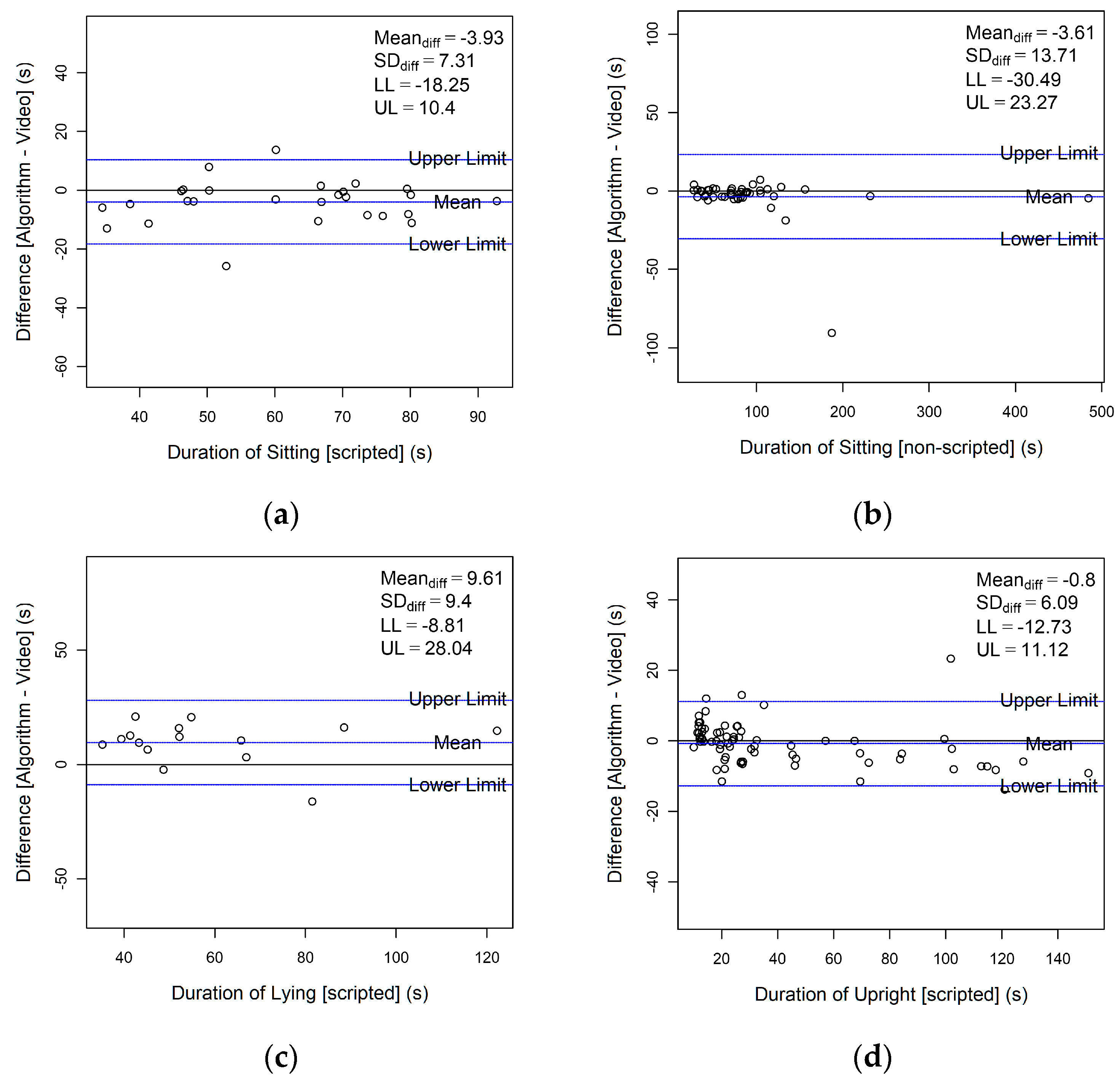

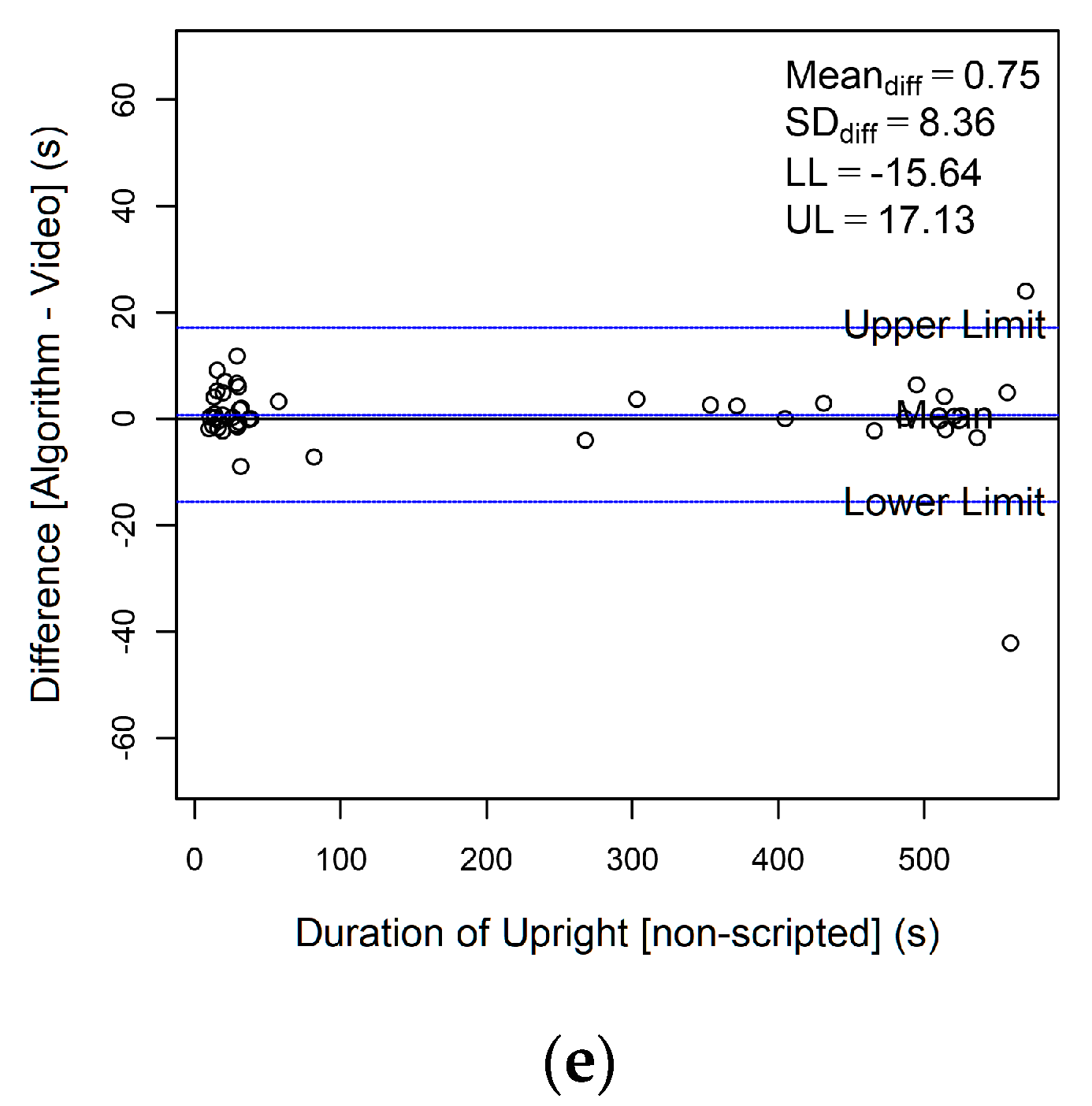

3.4. Limits of Agreement
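Limits of agreement are conventionally obtained with the Bland–Altman method [39]: the mean of the paired differences between two methods (the bias) ± 1.96 standard deviations of those differences. A minimal sketch, assuming paired bout durations in seconds and differences taken as algorithm minus video reference; both choices, and the helper name `limits_of_agreement`, are illustrative rather than taken from the study.

```python
import numpy as np


def limits_of_agreement(reference, method):
    """Bland-Altman bias and 95% limits of agreement.

    ASSUMPTION: differences are computed as method - reference
    (e.g. algorithm minus video reference, in seconds)."""
    d = np.asarray(method, dtype=float) - np.asarray(reference, dtype=float)
    bias = d.mean()
    sd = d.std(ddof=1)  # sample standard deviation of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd
```

For paired durations `[10, 20, 30, 40]` (reference) and `[12, 19, 33, 41]` (method), the bias is 1.25 s.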

4. Discussion

Study Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Tremblay, M.S.; Aubert, S.; Barnes, J.D.; Saunders, T.J.; Carson, V.; Latimer-Cheung, A.E.; Chastin, S.F.; Altenburg, T.M.; Chinapaw, M.J. Sedentary behavior research network (SBRN)–terminology consensus project process and outcome. Int. J. Behav. Nutr. Phys. Act. 2017, 14, 75.
2. Saunders, T.J.; McIsaac, T.; Douillette, K.; Gaulton, N.; Hunter, S.; Rhodes, R.E.; Prince, S.A.; Carson, V.; Chaput, J.-P.; Chastin, S. Sedentary behaviour and health in adults: An overview of systematic reviews. Appl. Physiol. Nutr. Metab. 2020, 45, S197–S217.
3. Mitsutake, S.; Shibata, A.; Ishii, K.; Amagasa, S.; Kikuchi, H.; Fukushima, N.; Inoue, S.; Oka, K. Clustering of domain-specific sedentary behaviors and their association with physical function among community-dwelling older adults. J. Phys. Act. Health 2020, 17, 709–714.
4. Falck, R.S.; Davis, J.C.; Liu-Ambrose, T. What is the association between sedentary behaviour and cognitive function? A systematic review. Br. J. Sport. Med. 2017, 51, 800–811.
5. Lakerveld, J.; Mackenbach, J.D.; Horvath, E.; Rutters, F.; Compernolle, S.; Bardos, H.; De Bourdeaudhuij, I.; Charreire, H.; Rutter, H.; Oppert, J.M.; et al. The relation between sleep duration and sedentary behaviours in European adults. Obes. Rev. 2016, 17 (Suppl. S1), 62–67.
6. Madden, K.M.; Ashe, M.C.; Lockhart, C.; Chase, J.M. Sedentary behavior and sleep efficiency in active community-dwelling older adults. Sleep Sci. 2014, 7, 82–88.
7. Van Holle, V.; Van Cauwenberg, J.; De Bourdeaudhuij, I.; Deforche, B.; Van de Weghe, N.; Van Dyck, D. Interactions between neighborhood social environment and walkability to explain Belgian older adults’ physical activity and sedentary time. Int. J. Environ. Res. Public Health 2016, 13, 569.
8. Vancampfort, D.; Hallgren, M.; Schuch, F.; Stubbs, B.; Smith, L.; Rosenbaum, S.; Firth, J.; Van Damme, T.; Koyanagi, A. Sedentary behavior and depression among community-dwelling adults aged ≥50 years: Results from the Irish Longitudinal Study on Ageing. J. Affect. Disord. 2020, 262, 389–396.
9. Dowd, K.P.; Szeklicki, R.; Minetto, M.A.; Murphy, M.H.; Polito, A.; Ghigo, E.; van der Ploeg, H.; Ekelund, U.; Maciaszek, J.; Stemplewski, R. A systematic literature review of reviews on techniques for physical activity measurement in adults: A DEDIPAC study. Int. J. Behav. Nutr. Phys. Act. 2018, 15, 15.
10. Lord, S.; Teh, R.; Gibson, R.; Smith, M.; Wrapson, W.; Thomson, M.; Rolleston, A.; Neville, S.; McBain, L.; Del Din, S. Optimising function and well-being in older adults: Protocol for an integrated research programme in Aotearoa/New Zealand. BMC Geriatr. 2022, 22, 215.
11. Chastin, S.; McGregor, D.; Palarea-Albaladejo, J.; Diaz, K.M.; Hagströmer, M.; Hallal, P.C.; van Hees, V.T.; Hooker, S.; Howard, V.J.; Lee, I.-M. Joint association between accelerometry-measured daily combination of time spent in physical activity, sedentary behaviour and sleep and all-cause mortality: A pooled analysis of six prospective cohorts using compositional analysis. Br. J. Sport. Med. 2021, 55, 1277–1285.
12. Shephard, R.J. Limits to the measurement of habitual physical activity by questionnaires. Br. J. Sport. Med. 2003, 37, 197–206.
13. Harvey, J.A.; Chastin, S.F.; Skelton, D.A. How sedentary are older people? A systematic review of the amount of sedentary behavior. J. Aging Phys. Act. 2015, 23, 471–487.
14. Heesch, K.C.; Hill, R.L.; Aguilar-Farias, N.; Van Uffelen, J.G.; Pavey, T. Validity of objective methods for measuring sedentary behaviour in older adults: A systematic review. Int. J. Behav. Nutr. Phys. Act. 2018, 15, 119.
15. Sasaki, J.E.; Hickey, A.; Staudenmayer, J.; John, D.; Kent, J.A.; Freedson, P.S. Performance of activity classification algorithms in free-living older adults. Med. Sci. Sport. Exerc. 2016, 48, 941.
16. Shei, R.-J.; Holder, I.G.; Oumsang, A.S.; Paris, B.A.; Paris, H.L. Wearable activity trackers–advanced technology or advanced marketing? Eur. J. Appl. Physiol. 2022, 122, 1975–1990.
17. Taylor, L.M.; Klenk, J.; Maney, A.J.; Kerse, N.; MacDonald, B.M.; Maddison, R. Validation of a body-worn accelerometer to measure activity patterns in octogenarians. Arch. Phys. Med. Rehabil. 2014, 95, 930–934.
18. Dijkstra, B.; Kamsma, Y.P.; Zijlstra, W. Detection of gait and postures using a miniaturized triaxial accelerometer-based system: Accuracy in patients with mild to moderate Parkinson’s disease. Arch. Phys. Med. Rehabil. 2010, 91, 1272–1277.
19. Jung, S.; Michaud, M.; Oudre, L.; Dorveaux, E.; Gorintin, L.; Vayatis, N.; Ricard, D. The use of inertial measurement units for the study of free living environment activity assessment: A literature review. Sensors 2020, 20, 5625.
20. Awais, M.; Chiari, L.; Ihlen, E.A.; Helbostad, J.L.; Palmerini, L. Classical machine learning versus deep learning for the older adults free-living activity classification. Sensors 2021, 21, 4669.
21. Ustad, A.; Logacjov, A.; Trollebø, S.Ø.; Thingstad, P.; Vereijken, B.; Bach, K.; Maroni, N.S. Validation of an Activity Type Recognition Model Classifying Daily Physical Behavior in Older Adults: The HAR70+ Model. Sensors 2023, 23, 2368.
22. Wullems, J.A.; Verschueren, S.M.; Degens, H.; Morse, C.I.; Onambele, G.L. Performance of thigh-mounted triaxial accelerometer algorithms in objective quantification of sedentary behaviour and physical activity in older adults. PLoS ONE 2017, 12, e0188215.
23. Giurgiu, M.; Bussmann, J.B.; Hill, H.; Anedda, B.; Kronenwett, M.; Koch, E.D.; Ebner-Priemer, U.W.; Reichert, M. Validating accelerometers for the assessment of body position and sedentary behavior. J. Meas. Phys. Behav. 2020, 3, 253–263.
24. Soltani, A.; Paraschiv-Ionescu, A.; Dejnabadi, H.; Marques-Vidal, P.; Aminian, K. Real-world gait bout detection using a wrist sensor: An unsupervised real-life validation. IEEE Access 2020, 8, 102883–102896.
25. Chigateri, N.G.; Kerse, N.; Wheeler, L.; MacDonald, B.; Klenk, J. Validation of an accelerometer for measurement of activity in frail older people. Gait Posture 2018, 66, 114–117.
26. Farrahi, V.; Niemelä, M.; Kangas, M.; Korpelainen, R.; Jämsä, T. Calibration and validation of accelerometer-based activity monitors: A systematic review of machine-learning approaches. Gait Posture 2019, 68, 285–299.
27. Del Din, S.; Galna, B.; Godfrey, A.; Bekkers, E.M.; Pelosin, E.; Nieuwhof, F.; Mirelman, A.; Hausdorff, J.M.; Rochester, L. Analysis of free-living gait in older adults with and without Parkinson’s disease and with and without a history of falls: Identifying generic and disease-specific characteristics. J. Gerontol. Ser. A 2019, 74, 500–506.
28. Del Din, S.; Godfrey, A.; Rochester, L. Validation of an accelerometer to quantify a comprehensive battery of gait characteristics in healthy older adults and Parkinson’s disease: Toward clinical and at home use. IEEE J. Biomed. Health Inform. 2015, 20, 838–847.
29. Hickey, A.; Del Din, S.; Rochester, L.; Godfrey, A. Detecting free-living steps and walking bouts: Validating an algorithm for macro gait analysis. Physiol. Meas. 2016, 38, N1.
30. Geraedts, H.A.; Zijlstra, W.; Van Keeken, H.G.; Zhang, W.; Stevens, M. Validation and User Evaluation of a Sensor-Based Method for Detecting Mobility-Related Activities in Older Adults. PLoS ONE 2015, 10, e0137668.
31. Bourke, A.K.; Ihlen, E.A.F.; Bergquist, R.; Wik, P.B.; Vereijken, B.; Helbostad, J.L. A physical activity reference data-set recorded from older adults using body-worn inertial sensors and video technology—The ADAPT study data-set. Sensors 2017, 17, 559.
32. Bourke, A.K.; Ihlen, E.A.F.; Helbostad, J.L. Development of a gold-standard method for the identification of sedentary, light and moderate physical activities in older adults: Definitions for video annotation. J. Sci. Med. Sport 2019, 22, 557–561.
33. Godfrey, A.; Bourke, A.K.; Olaighin, G.M.; van de Ven, P.; Nelson, J. Activity classification using a single chest mounted tri-axial accelerometer. Med. Eng. Phys. 2011, 33, 1127–1135.
34. Lyons, G.; Culhane, K.; Hilton, D.; Grace, P.; Lyons, D. A description of an accelerometer-based mobility monitoring technique. Med. Eng. Phys. 2005, 27, 497–504.
35. Kinoshita, S.; Kiyama, R.; Yoshimoto, Y. Effect of handrail height on sit-to-stand movement. PLoS ONE 2015, 10, e0133747.
36. Ishigaki, N.; Kimura, T.; Usui, Y.; Aoki, K.; Narita, N.; Shimizu, M.; Hara, K.; Ogihara, N.; Nakamura, K.; Kato, H. Analysis of pelvic movement in the elderly during walking using a posture monitoring system equipped with a triaxial accelerometer and a gyroscope. J. Biomech. 2011, 44, 1788–1792.
37. Atrsaei, A.; Dadashi, F.; Hansen, C.; Warmerdam, E.; Mariani, B.; Maetzler, W.; Aminian, K. Postural transitions detection and characterization in healthy and patient populations using a single waist sensor. J. NeuroEngineering Rehabil. 2020, 17, 70.
38. Pham, M.H.; Warmerdam, E.; Elshehabi, M.; Schlenstedt, C.; Bergeest, L.-M.; Heller, M.; Haertner, L.; Ferreira, J.J.; Berg, D.; Schmidt, G. Validation of a lower back “wearable”-based sit-to-stand and stand-to-sit algorithm for patients with Parkinson’s disease and older adults in a home-like environment. Front. Neurol. 2018, 9, 652.
39. Bland, J.M.; Altman, D. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet 1986, 327, 307–310.
40. Fleiss, J.L. Design and Analysis of Clinical Experiments; John Wiley & Sons: Hoboken, NJ, USA, 2011.
41. Rosenberg, D.; Walker, R.; Greenwood-Hickman, M.A.; Bellettiere, J.; Xiang, Y.; Richmire, K.; Higgins, M.; Wing, D.; Larson, E.B.; Crane, P.K. Device-assessed physical activity and sedentary behavior in a community-based cohort of older adults. BMC Public Health 2020, 20, 1256.
42. Zhang, Z.; Xiao, X.; Ma, W.; Li, J. Napping in older adults: A review of current literature. Curr. Sleep Med. Rep. 2020, 6, 129–135.
43. Grant, P.M.; Granat, M.H.; Thow, M.K.; Maclaren, W.M. Analyzing free-living physical activity of older adults in different environments using body-worn activity monitors. J. Aging Phys. Act. 2010, 18, 171–184.
44. Dijkstra, B.; Kamsma, Y.; Zijlstra, W. Detection of gait and postures using a miniaturised triaxial accelerometer-based system: Accuracy in community-dwelling older adults. Age Ageing 2010, 39, 259–262.
45. Trost, S.G.; McIver, K.L.; Pate, R.R. Conducting accelerometer-based activity assessments in field-based research. Med. Sci. Sport. Exerc. 2005, 37, S531–S543.

| Event/Activity | Definitions |
|---|---|
| Sitting | |
| Lying | |
| Upright | |

| | Scripted (n = 18) | Non-Scripted (n = 17) |
|---|---|---|
| Age (years) | 81.1 ± 6.2 | 80.5 ± 5.9 |
| Female, n (%) | 12 (66.7%) | 11 (64.7%) |
| Weight (kg) | 71.2 ± 13.1 | 72.2 ± 12.7 |
| Height (cm) | 163.1 ± 9.4 | 163.8 ± 9.2 |
| BMI (kg/m²) | 26.6 ± 3.3 | 26.7 ± 3.3 |

| Activity | Total Duration, Scripted (s) | Total Duration, Non-Scripted (s) | Total Duration, Overall (s) | Average Duration¹, Scripted (s) | Average Duration¹, Non-Scripted (s) | Average Duration¹, Overall (s) |
|---|---|---|---|---|---|---|
| Sitting | 1654.8 | 4111.2 | 5766.0 | 61.3 ± 16.0 | 89.4 ± 72.5 | 79.0 ± 59.7 |
| Lying | 879.7 | NA² | 879.7 | 58.6 ± 23.4 | NA² | 58.6 ± 23.4 |
| Upright | 2840.9 | 8109.6 | 10,950.4 | 40.0 ± 35.6 | 180.2 ± 217.1 | 94.4 ± 153.3 |

| Activity | Video Reference, Scripted (s) | Video Reference, Non-Scripted (s) | Video Reference, Overall (s) | Algorithm, Scripted (s) | Algorithm, Non-Scripted (s) | Algorithm, Overall (s) | ICC(2,1), Scripted | ICC(2,1), Non-Scripted | ICC(2,1), Overall |
|---|---|---|---|---|---|---|---|---|---|
| Sitting | 61.3 ± 16.0 | 89.4 ± 72.5 | 80.0 ± 59.7 | 57.4 ± 18.1 | 85.8 ± 70.4 | 75.3 ± 58.3 | 0.888 | 0.981 | 0.923 |
| Lying | 58.6 ± 23.4 | NA | 58.6 ± 23.4 | 68.3 ± 24.4 | NA | 68.3 ± 24.4 | 0.858 | NA | 0.858 |
| Upright | 37.4 ± 33.7 | 180.2 ± 217.1 | 102.4 ± 163.9 | 39.4 ± 32.3 | 183.0 ± 219.0 | 104.7 ± 165.1 | 0.946 | 0.997 | 0.997 |
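The ICC(2,1) values above correspond to a two-way random-effects, absolute-agreement, single-measurement intraclass correlation. A minimal NumPy sketch of that standard formula follows. How the rows were formed in this study (per bout or per participant) is not stated in this section, so treating each row as one bout with columns [video reference, algorithm] is an assumption, and `icc_2_1` is a hypothetical helper name.

```python
import numpy as np


def icc_2_1(x):
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    x: (n_targets, k_raters) array, e.g. ASSUMED rows = bouts and
    columns = [video reference, algorithm] durations.
    ICC = (MSR - MSE) / (MSR + (k - 1) MSE + k (MSC - MSE) / n)
    """
    x = np.asarray(x, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_rows = k * np.sum((x.mean(axis=1) - grand) ** 2)    # between targets
    ss_cols = n * np.sum((x.mean(axis=0) - grand) ** 2)    # between raters/methods
    ss_err = np.sum((x - grand) ** 2) - ss_rows - ss_cols  # residual
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_err / ((n - 1) * (k - 1))
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)
```

With the six-target, four-rater data `[[9, 2, 5, 8], [6, 1, 3, 2], [8, 4, 6, 8], [7, 1, 2, 6], [10, 5, 6, 9], [6, 2, 4, 7]]`, `icc_2_1` returns approximately 0.29; two identical columns give exactly 1.0.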

| Activity | Sensitivity, Scripted (%) | Sensitivity, Non-Scripted (%) | Specificity, Scripted (%) | Specificity, Non-Scripted (%) | PPV, Scripted (%) | PPV, Non-Scripted (%) | NPV, Scripted (%) | NPV, Non-Scripted (%) |
|---|---|---|---|---|---|---|---|---|
| Sitting | 76.90 | 92.25 | 94.84 | 99.48 | 89.17 | 98.99 | 88.14 | 95.83 |
| Lying | 70.39 | NA | 95.71 | NA | 83.10 | NA | 91.51 | NA |
| Upright | 77.30 | 96.94 | 92.54 | 98.99 | 93.07 | 99.48 | 75.89 | 94.25 |
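Sensitivity, specificity, PPV and NPV follow the usual one-vs-rest confusion-matrix definitions: sensitivity = TP/(TP + FN), specificity = TN/(TN + FP), PPV = TP/(TP + FP) and NPV = TN/(TN + FN). The sketch below assumes a frame-by-frame comparison of video-reference and algorithm labels sampled at a common epoch (for instance 0.1 s); the comparison unit is an assumption, and `one_vs_rest_metrics` is a hypothetical helper.

```python
import numpy as np


def one_vs_rest_metrics(reference, predicted, cls):
    """Frame-wise sensitivity, specificity, PPV and NPV (%) for one class.

    reference, predicted: equal-length sequences of labels per frame
    (ASSUMED common epoch). Returns nan where a denominator is zero."""
    ref = np.asarray(reference) == cls
    pred = np.asarray(predicted) == cls
    tp = np.sum(ref & pred)
    tn = np.sum(~ref & ~pred)
    fp = np.sum(~ref & pred)
    fn = np.sum(ref & ~pred)

    def pct(num, den):
        return 100.0 * num / den if den else float("nan")

    return {
        "sensitivity": pct(tp, tp + fn),
        "specificity": pct(tn, tn + fp),
        "ppv": pct(tp, tp + fp),
        "npv": pct(tn, tn + fn),
    }
```

For example, with reference frames `["Sitting", "Sitting", "Upright", "Upright", "Lying"]` and algorithm frames `["Sitting", "Upright", "Upright", "Upright", "Sitting"]`, the "Sitting" class has one true positive, one false negative, one false positive and two true negatives, giving 50% sensitivity and PPV and about 66.7% specificity and NPV.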

| Activity | Scripted | Non-Scripted | Scripted | Non-Scripted |
|---|---|---|---|---|
| Sitting | 10.8 ± 11.8 | 5.0 ± 7.6 | 5.9 ± 5.8 | 4.9 ± 13.3 |
| Lying | 22.4 ± 11.9 | NA | 12.1 ± 5.6 | NA |
| Upright | 16.1 ± 16.9 | 10.0 ± 13.5 | 4.5 ± 4.2 | 6.4 ± 14.8 |

| Activity | Current study | Dijkstra et al. [44]¹ | Taylor et al. [17]² |
|---|---|---|---|
| Sitting | 89.2 | 76.8 | 85.2 |
| Lying | 83.1 | 64.6 | 98.0 |
| Upright¹ | 93.1 | 80.2 | 56.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Abdul Jabbar, K.; Sarvestan, J.; Zia Ur Rehman, R.; Lord, S.; Kerse, N.; Teh, R.; Del Din, S. Validation of an Algorithm for Measurement of Sedentary Behaviour in Community-Dwelling Older Adults. Sensors 2023, 23, 4605. https://doi.org/10.3390/s23104605
