Passive Fingerprinting of Same-Model Electrical Devices by Current Consumption

Abstract

1. Introduction

- All devices have exactly the same hardware and software and, therefore, similar consumption profiles.

- A low sampling rate of 50 kHz was applied. This sampling frequency is below the bandwidth commonly used in RF-based fingerprinting [14] and is four times lower than the one used in the magnetic induction fingerprinting study [2]. It is also lower than the switching frequency of common switched-mode power supplies (SMPSs) (see also Section 2 below).

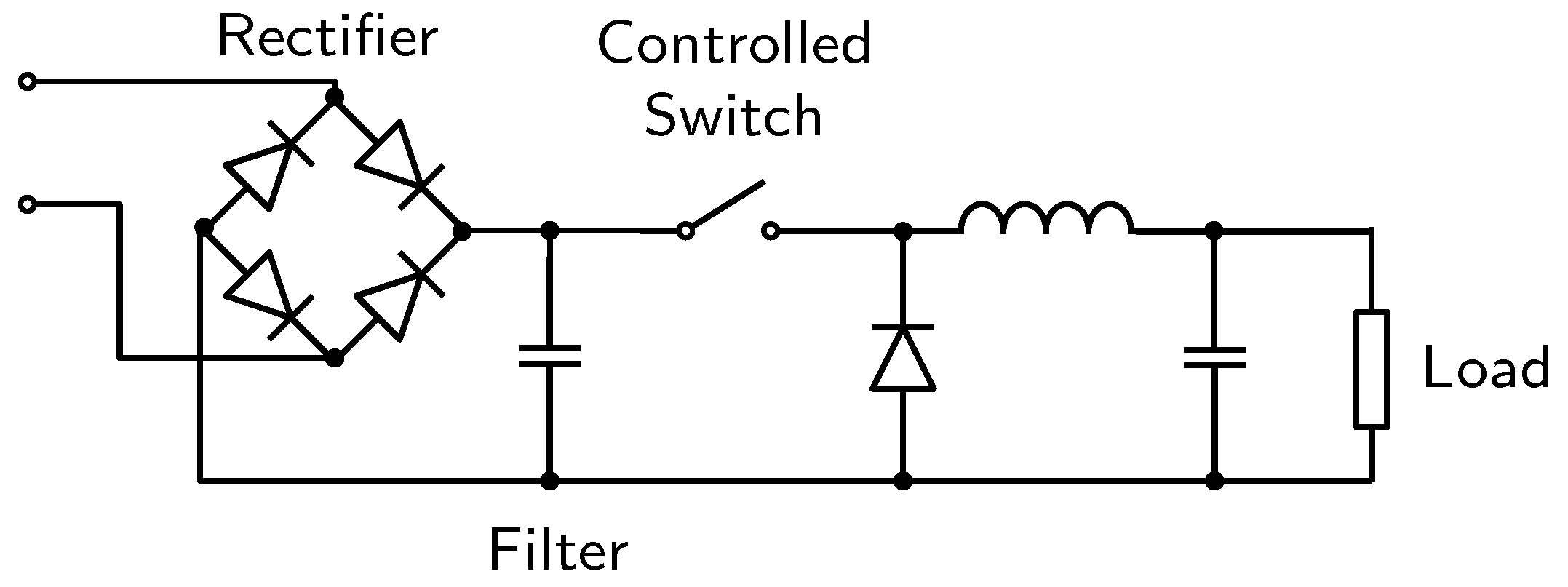

2. Switch-Mode Power Supply Background

2.1. Basic Principles

2.2. Electromagnetic Interference Filtering

3. Time-Series Classification Considerations

3.1. Data-Based Approach

3.2. Feature-Based Approach

- A sequence-dependent feature-extraction (FE) stage that transforms time-series into numerical features that can be processed, while preserving the information in the original data set. It yields better results than applying machine learning directly to the raw data.

- Feature-based classification of the resulting numerical features. This stage can also include the process of reducing the number of features required for effective classification.
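
The two stages above can be sketched as a single pipeline. This is a minimal illustration only: the four summary features, the LDA classifier, the use of scikit-learn and the synthetic data are all assumptions made for the example, not the feature set or data of this study.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import FunctionTransformer, StandardScaler


def extract_features(segments: np.ndarray) -> np.ndarray:
    """Stage 1: map each time-series segment (one row) to a fixed-length feature vector."""
    return np.column_stack([
        segments.mean(axis=1),                            # mean level
        segments.std(axis=1),                             # spread
        np.abs(np.diff(segments, axis=1)).mean(axis=1),   # mean absolute difference
        segments.max(axis=1) - segments.min(axis=1),      # peak-to-peak amplitude
    ])


def build_pipeline():
    """Stage 2: scale the extracted features and classify them."""
    return make_pipeline(
        FunctionTransformer(extract_features),
        StandardScaler(),
        LinearDiscriminantAnalysis(),
    )


# Synthetic stand-in data (hypothetical): two "devices" that differ only in noise level.
rng = np.random.default_rng(0)
n_per_class, length = 40, 256
X = np.vstack([
    rng.normal(0.0, 1.0, size=(n_per_class, length)),
    rng.normal(0.0, 1.5, size=(n_per_class, length)),
])
y = np.array([0] * n_per_class + [1] * n_per_class)

model = build_pipeline().fit(X, y)
train_accuracy = model.score(X, y)
```

Keeping the feature extraction inside the pipeline means that the same transformation is applied at training and at prediction time, so a feature-reduction step could be inserted between the two stages without changing the calling code.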

3.3. Hybrid Approaches

3.4. Deep-Learning-Based Approaches

3.5. Classifier Selection Discussion

4. Experimental Design

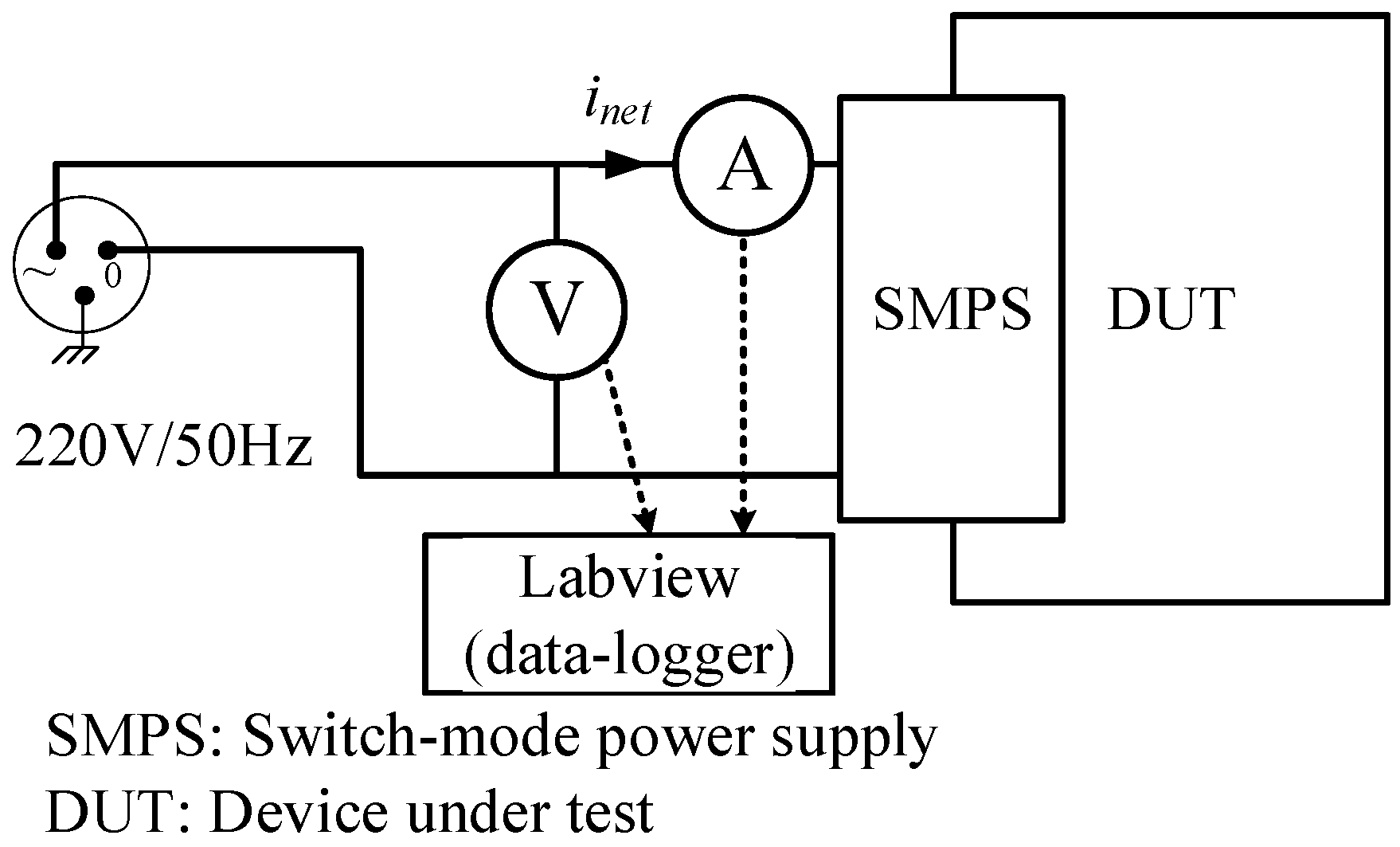

4.1. Electrical Setup

4.2. Data Collection

4.3. Database

4.4. Experimental Assumptions

5. Evaluation

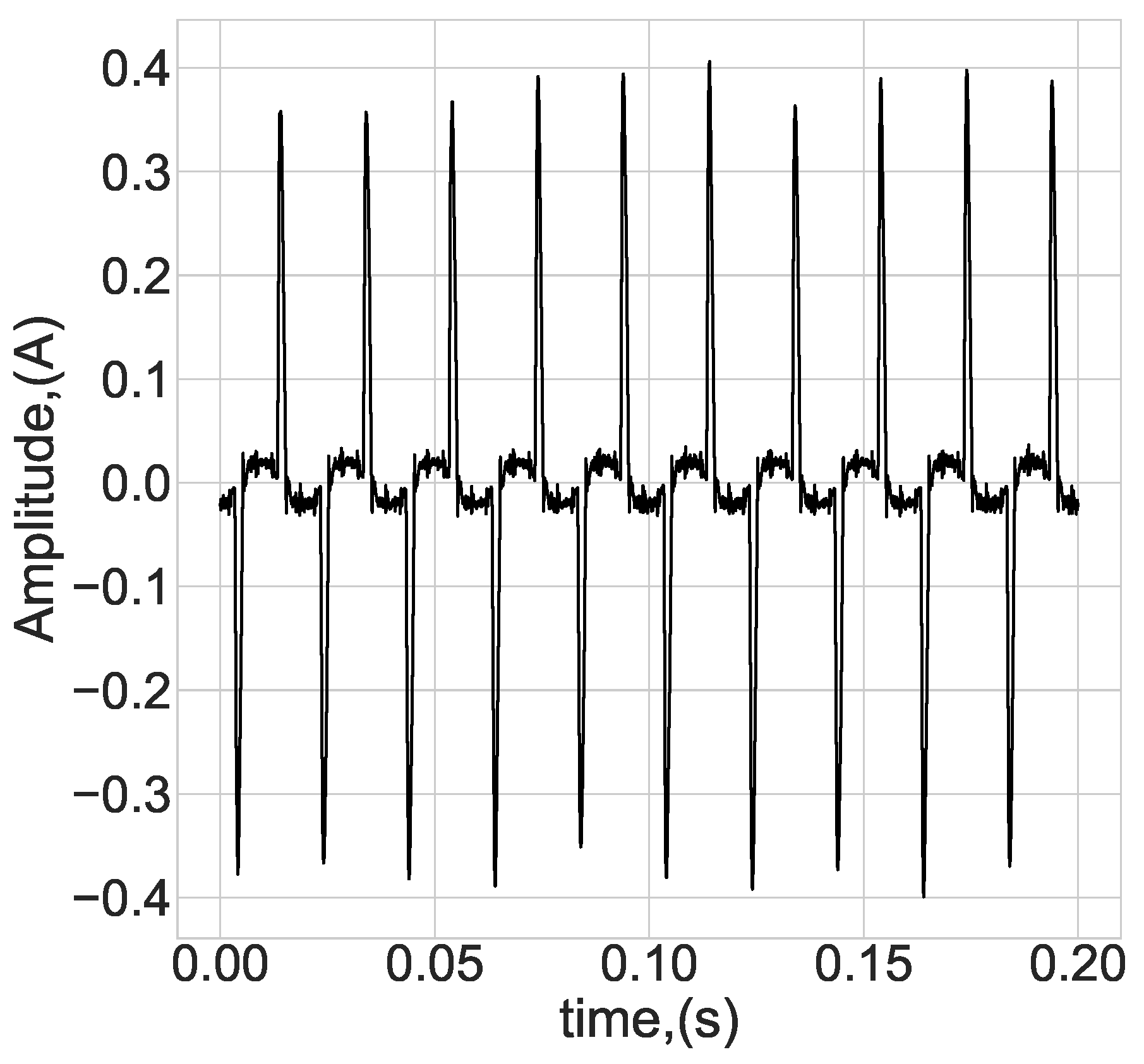

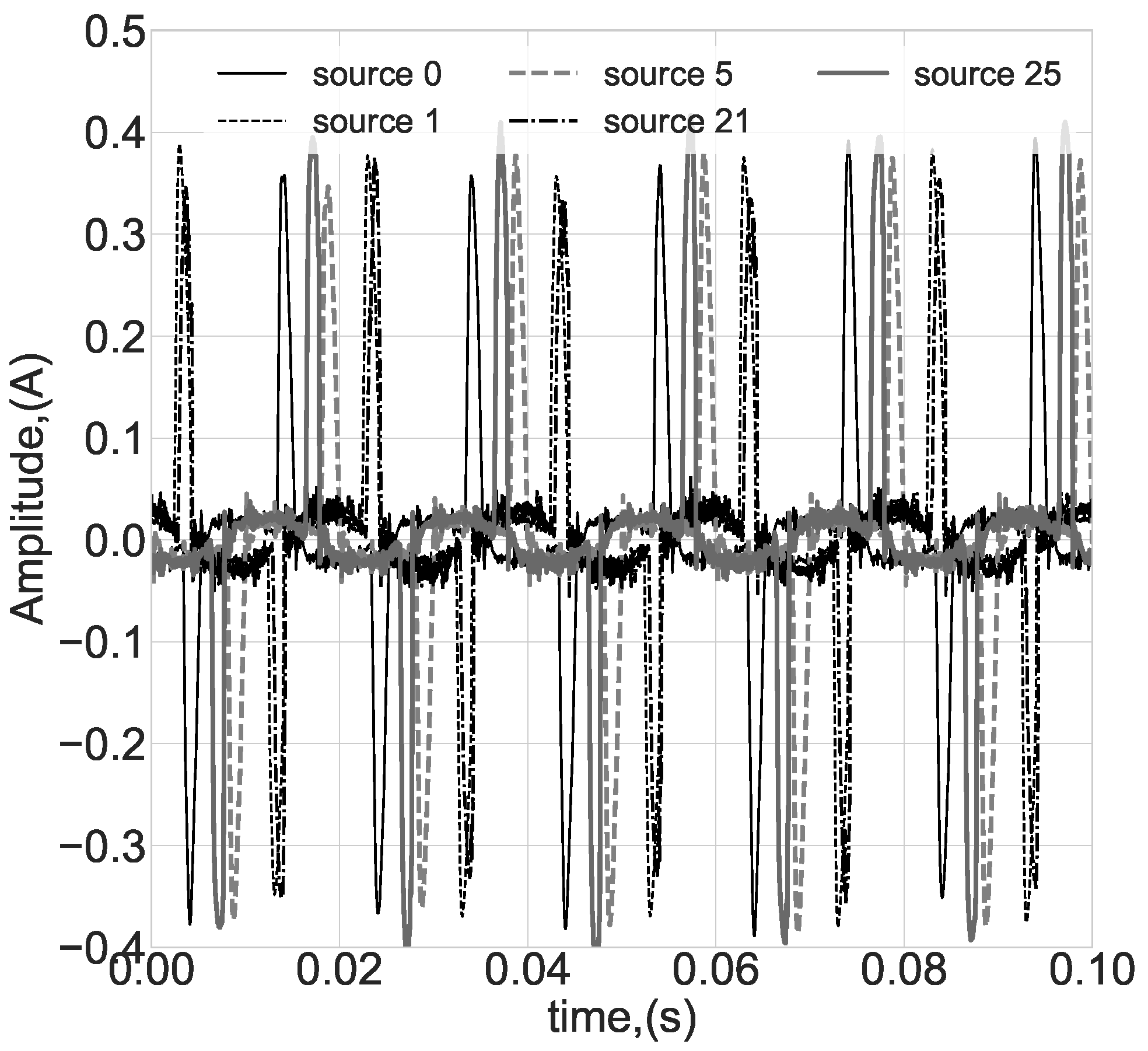

5.1. Preliminary Analysis

5.2. Feature-Extraction

5.2.1. TSFEL

5.2.2. MiniROCKET

5.2.3. Empirical Wavelet Transform (EWT)

5.3. Feature Classification

- Logistic regression (LR) classifier;

- Random forest (RF) classifier with a Gini-index-based splitting criterion, an ensemble of 100 trees and unlimited tree depth;

- LDA classifier with a pre-selected tolerance threshold for the singular values of the singular value decomposition (SVD) of the data. The threshold was selected by a grid search over values up to 1, because we had noticed that the tolerance threshold significantly influences the results;

- Naive Bayes (NB) classifier;

- k-nearest neighbors (kNN) classifier with k = 1 (1-NN). This classifier was used as a baseline due to its relatively high computational time and low classification accuracy.
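
The classifier set listed above could be instantiated as follows. This is a sketch that assumes scikit-learn; the source does not name the library. The Gaussian variant of naive Bayes, `max_iter=1000`, the 3-fold cross-validation and the lower end of the LDA tolerance grid are hypothetical choices (only the upper bound of 1 is given in the text).

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier


def make_classifiers(lda_tol_grid):
    """Return the five classifiers keyed by the abbreviations used in the text."""
    return {
        "LR": LogisticRegression(max_iter=1000),
        # Gini splitting, 100 trees, unlimited depth, as listed above.
        "RF": RandomForestClassifier(
            n_estimators=100, criterion="gini", max_depth=None, random_state=0
        ),
        # `tol` is the singular-value threshold of the SVD solver; it is tuned by grid search.
        "LDA": GridSearchCV(
            LinearDiscriminantAnalysis(solver="svd"),
            param_grid={"tol": list(lda_tol_grid)},
            cv=3,
        ),
        "NB": GaussianNB(),  # assumption: Gaussian naive Bayes
        "1-NN": KNeighborsClassifier(n_neighbors=1),
    }


# Hypothetical tolerance grid: the lower bound 1e-4 is a placeholder, the upper bound 1 is from the text.
tol_grid = np.logspace(-4, 0, 5)

# Synthetic, well-separated two-class data, used only to show the calls.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (30, 5)), rng.normal(3.0, 1.0, (30, 5))])
y = np.array([0] * 30 + [1] * 30)

scores = {name: clf.fit(X, y).score(X, y) for name, clf in make_classifiers(tol_grid).items()}
```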

5.4. Evaluation Results

- Full feature-space of the feature extraction method.

- Reduced feature-space with feature selection by correlation coefficient. Features with a correlation coefficient of 0.95 or higher were removed (cor.select).

- The previous feature subset, further reduced by random-forest feature selection, i.e., selection by feature importance with a threshold of 20% of importance (cor.+rf).

- Reduced feature-space only by random-forest feature selection (rf select).
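
The two selection steps above can be sketched as below. The greedy order of the correlation filter and the reading of "20% of importance" as 20% of the largest feature importance are assumptions of this example, as is the use of scikit-learn; the source does not specify either detail.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def correlation_select(X, threshold=0.95):
    """Keep a feature only if its |correlation| with every already-kept feature is below threshold."""
    corr = np.abs(np.corrcoef(X, rowvar=False))
    keep = []
    for j in range(X.shape[1]):
        if all(corr[j, i] < threshold for i in keep):
            keep.append(j)
    return np.array(keep)


def rf_select(X, y, rel_threshold=0.20, random_state=0):
    """Keep features whose importance is at least rel_threshold times the largest importance.

    Assumption: the 20% threshold is taken relative to the most important feature.
    """
    forest = RandomForestClassifier(n_estimators=100, random_state=random_state).fit(X, y)
    importance = forest.feature_importances_
    return np.flatnonzero(importance >= rel_threshold * importance.max())


# Synthetic example: column 1 nearly duplicates column 0, columns 2 and 3 are pure noise.
rng = np.random.default_rng(0)
y = np.repeat([0, 1], 100)
informative = y + rng.normal(0.0, 0.3, 200)
X = np.column_stack([
    informative,
    2.0 * informative + rng.normal(0.0, 0.01, 200),
    rng.normal(size=200),
    rng.normal(size=200),
])

kept = correlation_select(X)               # "cor.select"
selected = kept[rf_select(X[:, kept], y)]  # "cor.+rf", indices into the original columns
```

Applying `rf_select` directly to `X` corresponds to the "rf select" variant.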

6. Discussion

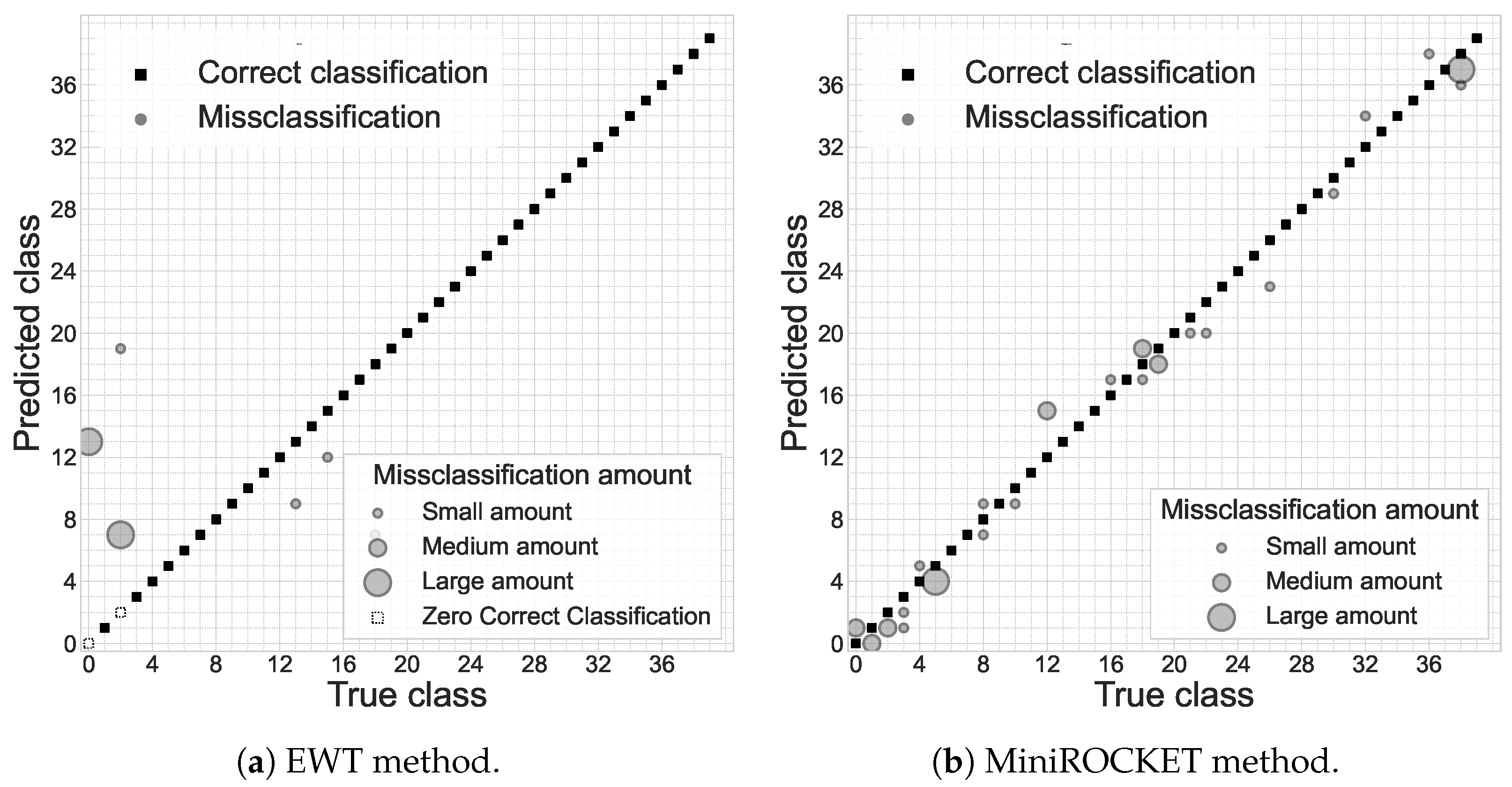

6.1. MiniROCKET

6.2. TSFEL and Empirical Wavelet Transform

6.3. General Aspects

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Configuration Details

Appendix A.1. MiniROCKET Configuration

- kernel size is set to 9;

- kernel weights take the values −1 and 2 in the proportion 2:1, so that the weights of each kernel sum to 0 (84 kernels in total);

- kernel dilation rates range from 1 to 903 with algorithmically increasing steps (23 values in total, of which 21 remain unique at float32 precision);

- kernel padding is calculated as $\lfloor ((k - 1) \cdot d) / 2 \rfloor$, where $k$ is the kernel size, $d$ is the dilation rate and $\lfloor \cdot \rfloor$ is the floor (integer part) operation;

- the bias values are set during training to quantiles of the convolution output of a kernel for one randomly selected example. Either a single quantile or several quantiles can be used.
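
The configuration above can be checked with a short sketch. It enumerates all length-9 kernels with six weights of −1 and three weights of 2, evaluates the padding for a given dilation, and draws a bias as a quantile of a zero-padded dilated convolution. The function names and the quantile value 0.25 are hypothetical; the reference MiniROCKET implementation is more elaborate (for example in how it chooses quantiles and alternates padding).

```python
from itertools import combinations

import numpy as np

KERNEL_SIZE = 9


def minirocket_kernels(kernel_size=KERNEL_SIZE, n_high=3):
    """Enumerate kernels with weight 2 at n_high positions and -1 elsewhere."""
    kernels = []
    for high_positions in combinations(range(kernel_size), n_high):
        weights = -np.ones(kernel_size, dtype=np.float32)
        weights[list(high_positions)] = 2.0
        kernels.append(weights)
    return np.stack(kernels)


def padding(kernel_size, dilation):
    """Padding floor(((k - 1) * d) / 2) for kernel size k and dilation d."""
    return ((kernel_size - 1) * dilation) // 2


def dilated_convolution(x, weights, dilation):
    """Zero-padded dilated convolution; the output has the same length as x for k = 9."""
    k = len(weights)
    xp = np.pad(x, padding(k, dilation))
    n_out = len(xp) - (k - 1) * dilation
    return sum(w * xp[j * dilation: j * dilation + n_out] for j, w in enumerate(weights))


def bias_from_quantile(x, weights, dilation, q=0.25):
    """Bias taken as a quantile of the convolution output for one example (q is hypothetical)."""
    return float(np.quantile(dilated_convolution(x, weights, dilation), q))


kernels = minirocket_kernels()
constant_response = dilated_convolution(np.ones(64), kernels[0], dilation=1)
```

Because each kernel sums to zero, the response to a constant input vanishes away from the padded edges, which is why the bias has to be taken from the data rather than fixed in advance.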

Appendix A.2. EWT-Based FE

- For all segments, the auto-covariance function was calculated and 19 peaks were selected using a common find-peaks routine with an adjusted peak value threshold and peak-peak distance.

- The start and stop cut-off frequencies of the filtering bands were determined as the midpoints between peak positions. From these boundaries, we form bands that include one peak, then two peaks, and so on.

- Filtering is implemented by a rectangular window in the frequency domain. The same filtering, mirrored and shifted by one point to the left, is performed over a second frequency range in order to avoid Hilbert filtering.

- band summary statistics (mean value, standard deviation, kurtosis, skewness, median value);

- absolute values of band summary statistics (mean value, standard deviation, kurtosis, skewness, median value);

- first-order autoregression coefficient for the band;

- variance of the residuals of the first-order autoregression for the band;

- barycenter frequency, i.e., the frequency estimate obtained as the average of the DFT bin frequencies of the segment, weighted by the corresponding DFT values and taken over the bins up to the highest positive frequency of the transform, for a signal sampled at a given sampling frequency;

- correlation-based point-wise frequency, calculated as
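
Some of the band features listed above (the summary statistics, the first-order autoregression terms and the barycenter frequency) can be sketched as follows; the correlation-based point-wise frequency is not covered. Several details are assumptions of this example: the real-input FFT is used so that the mirrored half of the spectrum is handled implicitly, "absolute values of band summary statistics" is read as statistics of the absolute band signal, the autoregression is a least-squares fit on the mean-removed band, and the barycenter is weighted by the squared DFT magnitude. All function names are hypothetical.

```python
import numpy as np
from scipy.stats import kurtosis, skew


def band_filter(segment, f_start, f_stop, fs):
    """Rectangular window in the frequency domain; returns the real-valued band signal."""
    spectrum = np.fft.rfft(segment)
    freqs = np.fft.rfftfreq(len(segment), d=1.0 / fs)
    mask = (freqs >= f_start) & (freqs < f_stop)
    return np.fft.irfft(spectrum * mask, n=len(segment))


def summary_stats(x, prefix=""):
    """Mean, standard deviation, kurtosis, skewness and median of x."""
    return {
        f"{prefix}mean": float(np.mean(x)),
        f"{prefix}std": float(np.std(x)),
        f"{prefix}kurtosis": float(kurtosis(x)),
        f"{prefix}skewness": float(skew(x)),
        f"{prefix}median": float(np.median(x)),
    }


def ar1(band):
    """Least-squares AR(1) coefficient and residual variance of the mean-removed band."""
    x = band - band.mean()
    coef = np.dot(x[1:], x[:-1]) / np.dot(x[:-1], x[:-1])
    residuals = x[1:] - coef * x[:-1]
    return float(coef), float(residuals.var())


def barycenter_frequency(band, fs):
    """Power-weighted mean of the non-negative DFT bin frequencies (weighting is an assumption)."""
    power = np.abs(np.fft.rfft(band)) ** 2
    freqs = np.fft.rfftfreq(len(band), d=1.0 / fs)
    return float(np.sum(freqs * power) / np.sum(power))


def band_features(band, fs):
    """Collect the 13 features of one band into a dictionary."""
    coef, resid_var = ar1(band)
    features = summary_stats(band)
    features.update(summary_stats(np.abs(band), prefix="abs_"))
    features.update(ar1_coef=coef, ar1_resid_var=resid_var,
                    barycenter_freq=barycenter_frequency(band, fs))
    return features


# Synthetic two-tone segment sampled at 50 kHz; the band edges are illustrative only.
fs = 50_000.0
t = np.arange(5000) / fs
segment = np.sin(2 * np.pi * 1000 * t) + 0.5 * np.sin(2 * np.pi * 5000 * t)
band = band_filter(segment, 500.0, 2000.0, fs)
feats = band_features(band, fs)
```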

References

1. Kohno, T.; Broido, A.; Claffy, K. Remote physical device fingerprinting. IEEE Trans. Dependable Secur. Comput. 2005, 2, 93–108.

2. Ji, X.; Cheng, Y.; Zhang, J.; Chi, Y.; Xu, W.; Chen, Y.C. Device Fingerprinting with Magnetic Induction Signals Radiated by CPU Modules. ACM Trans. Sens. Netw. 2022, 18, 1–28.

3. Chen, Y.; Jin, X.; Sun, J.; Zhang, R.; Zhang, Y. POWERFUL: Mobile app fingerprinting via power analysis. In Proceedings of the IEEE INFOCOM 2017 - IEEE Conference on Computer Communications, Atlanta, GA, USA, 1–4 May 2017; pp. 1–9.

4. Hernandez Jimenez, J.; Goseva-Popstojanova, K. Malware Detection Using Power Consumption and Network Traffic Data. In Proceedings of the 2019 2nd International Conference on Data Intelligence and Security (ICDIS), South Padre Island, TX, USA, 28–30 June 2019; pp. 53–59.

5. Kocher, P.; Jaffe, J.; Jun, B. Differential power analysis. In Advances in Cryptology - CRYPTO '99, 19th Annual International Cryptology Conference; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 1999; pp. 388–397.

6. Guri, M.; Zadov, B.; Bykhovsky, D.; Elovici, Y. PowerHammer: Exfiltrating data from air-gapped computers through power lines. IEEE Trans. Inf. Forensics Secur. 2019, 15, 1879–1890.

7. Sehatbakhsh, N.; Yilmaz, B.B.; Zajic, A.; Prvulovic, M. A New Side-Channel Vulnerability on Modern Computers by Exploiting Electromagnetic Emanations from the Power Management Unit. In Proceedings of the 2020 IEEE International Symposium on High Performance Computer Architecture (HPCA), San Diego, CA, USA, 22–26 February 2020; pp. 123–138.

8. Sense Labs. Available online: https://sense.com/ (accessed on 2 January 2023).

9. Formby, D.; Srinivasan, P.; Leonard, A.; Rogers, J.; Beyah, R. Who’s in Control of Your Control System? Device Fingerprinting for Cyber-Physical Systems. In Proceedings of the 2016 Network and Distributed System Security Symposium, San Diego, CA, USA, 21–24 February 2016.

10. Pu, H.; He, L.; Zhao, C.; Yau, D.K.; Cheng, P.; Chen, J. Detecting replay attacks against industrial robots via power fingerprinting. In Proceedings of the 18th ACM Conference on Embedded Networked Sensor Systems, Virtual Event, 16–19 November 2020; pp. 285–297.

11. Aksu, H.; Uluagac, A.S.; Bentley, E.S. Identification of Wearable Devices with Bluetooth. IEEE Trans. Sustain. Comput. 2021, 6, 221–230.

12. Marchal, S.; Miettinen, M.; Nguyen, T.D.; Sadeghi, A.R.; Asokan, N. AuDI: Toward Autonomous IoT Device-Type Identification Using Periodic Communication. IEEE J. Sel. Areas Commun. 2019, 37, 1402–1412.

13. Babun, L.; Aksu, H.; Uluagac, A.S. CPS Device-Class Identification via Behavioral Fingerprinting: From Theory to Practice. IEEE Trans. Inf. Forensics Secur. 2021, 16, 2413–2428.

14. Soltanieh, N.; Norouzi, Y.; Yang, Y.; Karmakar, N.C. A Review of Radio Frequency Fingerprinting Techniques. IEEE J. Radio Freq. Identif. 2020, 4, 222–233.

15. Ronkin, M.; Bykhovsky, D. Electrical Equipment Fingerprinting with Electrical Network Current Consumption. In Proceedings of the 2022 45th International Conference, Virtual Conference, 13–15 July 2022.

16. Bykhovsky, D. Experimental Lognormal Modeling of Harmonics Power of Switched-Mode Power Supplies. Energies 2022, 15, 653.

17. Lines, J.; Taylor, S.; Bagnall, A. HIVE-COTE: The hierarchical vote collective of transformation-based ensembles for time series classification. In Proceedings of the 2016 IEEE 16th International Conference on Data Mining (ICDM), Barcelona, Spain, 12–15 December 2016; pp. 1041–1046.

18. Maharaj, E.; D’Urso, P.; Caiado, J. Time Series Clustering and Classification; Chapman & Hall/CRC Computer Science & Data Analysis; CRC Press: Boca Raton, FL, USA, 2019.

19. Deng, H.; Runger, G.; Tuv, E.; Vladimir, M. A time series forest for classification and feature extraction. Inf. Sci. 2013, 239, 142–153.

20. Dau, H.A.; Bagnall, A.; Kamgar, K.; Yeh, C.C.M.; Zhu, Y.; Gharghabi, S.; Ratanamahatana, C.A.; Keogh, E. The UCR time series archive. IEEE/CAA J. Autom. Sin. 2019, 6, 1293–1305.

21. Cabello, N.; Naghizade, E.; Qi, J.; Kulik, L. Fast and accurate time series classification through supervised interval search. In Proceedings of the 2020 IEEE International Conference on Data Mining (ICDM), Sorrento, Italy, 17–20 November 2020; pp. 948–953.

22. Lubba, C.H.; Sethi, S.S.; Knaute, P.; Schultz, S.R.; Fulcher, B.D.; Jones, N.S. catch22: Canonical time-series characteristics. Data Min. Knowl. Discov. 2019, 33, 1821–1852.

23. Christ, M.; Braun, N.; Neuffer, J.; Kempa-Liehr, A.W. Time series feature extraction on basis of scalable hypothesis tests (tsfresh–a python package). Neurocomputing 2018, 307, 72–77.

24. Barandas, M.; Folgado, D.; Fernandes, L.; Santos, S.; Abreu, M.; Bota, P.; Liu, H.; Schultz, T.; Gamboa, H. TSFEL: Time series feature extraction library. SoftwareX 2020, 11, 100456.

25. Fulcher, B.D.; Jones, N.S. hctsa: A computational framework for automated time-series phenotyping using massive feature extraction. Cell Syst. 2017, 5, 527–531.

26. Middlehurst, M.; Large, J.; Flynn, M.; Lines, J.; Bostrom, A.; Bagnall, A. HIVE-COTE 2.0: A new meta ensemble for time series classification. Mach. Learn. 2021, 110, 3211–3243.

27. Shifaz, A.; Pelletier, C.; Petitjean, F.; Webb, G.I. TS-CHIEF: A scalable and accurate forest algorithm for time series classification. Data Min. Knowl. Discov. 2020, 34, 742–775.

28. Ismail Fawaz, H.; Lucas, B.; Forestier, G.; Pelletier, C.; Schmidt, D.F.; Weber, J.; Webb, G.I.; Idoumghar, L.; Muller, P.A.; Petitjean, F. InceptionTime: Finding AlexNet for time series classification. Data Min. Knowl. Discov. 2020, 34, 1936–1962.

29. Langford, Z.; Eisenbeiser, L.; Vondal, M. Robust signal classification using siamese networks. In Proceedings of the ACM Workshop on Wireless Security and Machine Learning, Miami, FL, USA, 15–17 May 2019; pp. 1–5.

30. Ismail Fawaz, H.; Forestier, G.; Weber, J.; Idoumghar, L.; Muller, P.A. Deep learning for time series classification: A review. Data Min. Knowl. Discov. 2019, 33, 917–963.

31. Lara-Benítez, P.; Carranza-García, M.; Riquelme, J.C. An experimental review on deep learning architectures for time series forecasting. Int. J. Neural Syst. 2021, 31, 2130001.

32. Dempster, A.; Petitjean, F.; Webb, G.I. ROCKET: Exceptionally fast and accurate time series classification using random convolutional kernels. Data Min. Knowl. Discov. 2020, 34, 1454–1495.

33. Dempster, A.; Schmidt, D.F.; Webb, G.I. MINIROCKET: A very fast (almost) deterministic transform for time series classification. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Virtual Event, 14–18 August 2021; pp. 248–257.

34. Anthony, B.; Eamonn, K.; Jason, L.; Aaron, B.; James, L.; Matthew, M. UEA & UCR Time Series Classification. 2022. Available online: https://www.timeseriesclassification.com/ (accessed on 2 January 2023).

35. Löning, M.; Bagnall, A.; Ganesh, S.; Kazakov, V.; Lines, J.; Király, F.J. sktime: A unified interface for machine learning with time series. arXiv 2019, arXiv:1909.07872.

36. Oguiza, I. tsai - A State-of-the-Art Deep Learning Library for Time Series and Sequential Data. Github. 2022. Available online: https://github.com/timeseriesAI/tsai (accessed on 2 January 2023).

37. Bykhovsky, D.; Cohen, A. Electrical network frequency (ENF) maximum-likelihood estimation via a multitone harmonic model. IEEE Trans. Inf. Forensics Secur. 2013, 8, 744–753.

38. tsfel v 0.1.4 Feature List. 2022. Available online: https://tsfel.readthedocs.io/en/latest/descriptions/feature_list.html (accessed on 2 January 2023).

39. Gilles, J. Empirical wavelet transform. IEEE Trans. Signal Process. 2013, 61, 3999–4010.

40. Faouzi, J. Time Series Classification: A review of Algorithms and Implementations. Ketan Kotecha. In Machine Learning (Emerging Trends and Applications); Proud Pen, 2022; in press, hal-03558165; ISBN 978-1-8381524-1-3. Available online: https://hal.inria.fr/hal-03558165/document (accessed on 2 January 2023).

41. Tong, Y.; Liu, J.; Yu, L.; Zhang, L.; Sun, L.; Li, W.; Ning, X.; Xu, J.; Qin, H.; Cai, Q. Technology investigation on time series classification and prediction. Comput. Sci. 2022, 8, e982.

42. Ronkin, M. Dsatools. 2020. Available online: https://github.com/MVRonkin/dsatools (accessed on 2 January 2023).

43. Ronkin, M.V.; Kalmykov, A.A.; Polyakov, S.O.; Nagovicin, V.S. Numerical analysis of adaptive signal decomposition methods applied for ultrasonic gas flowmeters. In AIP Conference Proceedings; AIP Publishing LLC, 2022; Volume 2425, p. 130009.

| Method | Feature-Space | LR | RF | LDA | NB | 1-NN |
|---|---|---|---|---|---|---|
| TSFEL | 390 features | 0.63 | 0.85 | 0.91 | 0.84 | 0.63 |
| TSFEL | cor.select (248) | 0.59 | 0.78 | 0.87 | 0.83 | 0.59 |
| TSFEL | cor.+rf (30) | 0.65 | 0.85 | 0.89 | 0.84 | 0.70 |
| TSFEL | rf select (24) | 0.63 | 0.93 | 0.91 | 0.91 | 0.83 |
| MiniROCKET | 1924 features | 0.67 | 0.84 | 0.81 | 0.72 | 0.69 |
| MiniROCKET | cor.select (435) | 0.59 | 0.76 | 0.80 | 0.70 | 0.67 |
| MiniROCKET | cor.+rf (18) | 0.65 | 0.90 | 0.94 | 0.85 | 0.78 |
| MiniROCKET | rf select (19) | 0.63 | 0.92 | 0.88 | 0.92 | 0.88 |
| EWT | 2658 features | 0.65 | 0.88 | 0.94 | 0.81 | 0.57 |
| EWT | cor.select (781) | 0.57 | 0.64 | 0.92 | 0.57 | 0.54 |
| EWT | cor.+rf (19) | 0.56 | 0.89 | 0.89 | 0.88 | 0.66 |
| EWT | rf select (145) | 0.88 | 0.92 | 0.92 | 0.91 | 0.75 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ronkin, M.; Bykhovsky, D. Passive Fingerprinting of Same-Model Electrical Devices by Current Consumption. Sensors 2023, 23, 533. https://doi.org/10.3390/s23010533