Privacy Preserving Image Encryption with Optimal Deep Transfer Learning Based Accident Severity Classification Model

Abstract

1. Introduction

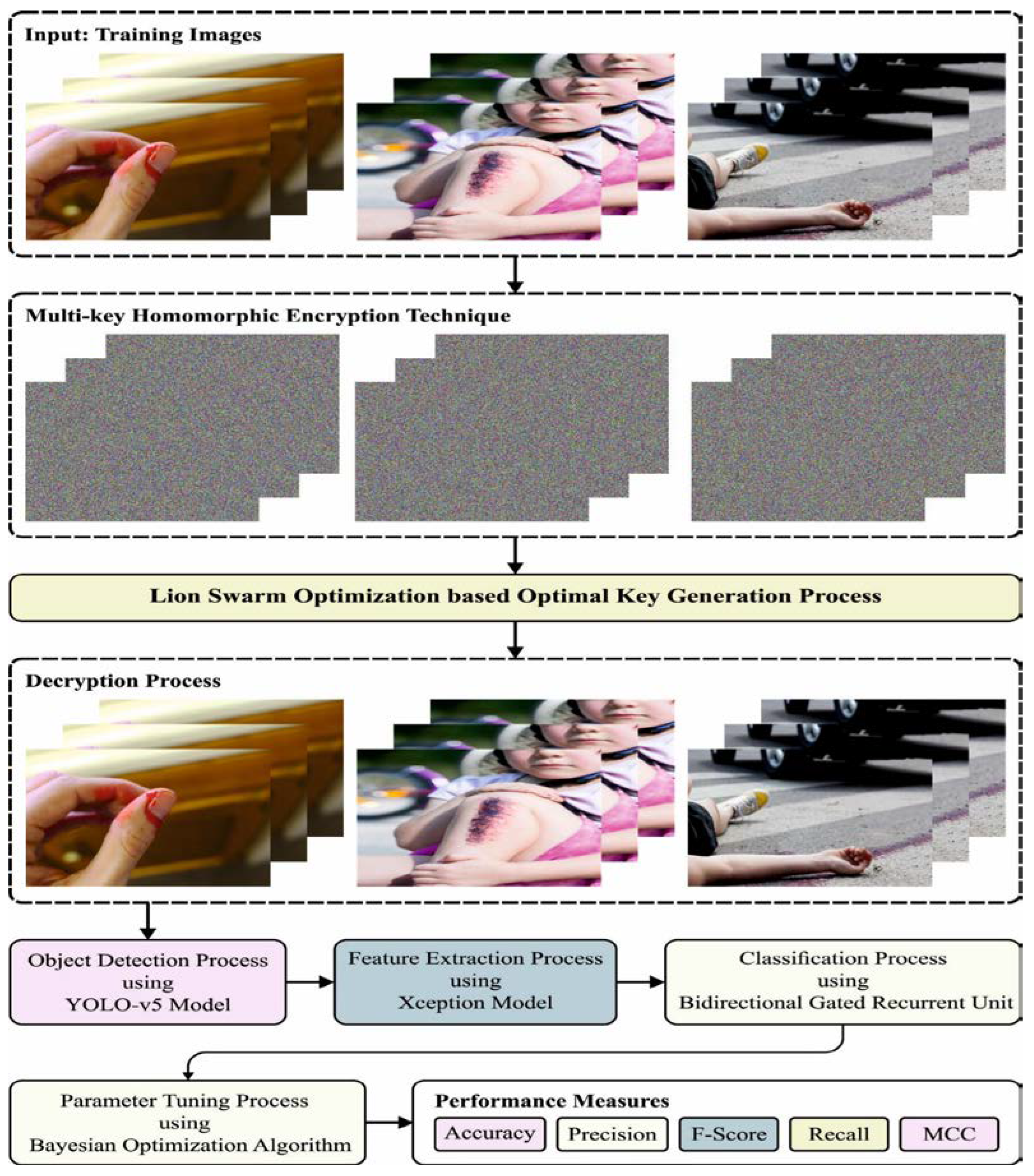

- This article presents a novel Privacy Preserving Image Encryption with Optimal Deep-Learning-based Accident Severity Classification (PPIE-ODLASC) model. The goal of the presented PPIE-ODLASC technique is to accomplish secure image transmission via encryption and accident severity classification (i.e., high, medium, low, and normal).

- For accident image encryption, a multi-key homomorphic encryption (MKHE) technique is applied, with an optimal key generation process based on lion swarm optimization (LSO).

- In addition, the PPIE-ODLASC algorithm uses the YOLO-v5 object detector to identify the region of interest (ROI) in the accident images. The accident severity classification module comprises an Xception feature extractor, a bidirectional gated recurrent unit (BiGRU) classifier, and Bayesian optimization (BO)-based hyperparameter tuning.

- The PPIE-ODLASC technique is validated experimentally on accident images, and the results are examined in terms of several measures.

2. Literature Review

3. The Proposed Model

3.1. Image Encryption Module

- Setup: Takes the security parameter as input and returns the public parameters. All other algorithms are assumed to take these public parameters as an implicit input.

- Key Generation: Outputs a pair of public and secret keys.

- Encryption: Encrypts a plain image and outputs a cipher image.

- Decryption: Given a cipher image and the corresponding sequence of secret keys, outputs the plain image.
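
The four algorithms above can be sketched as a small interface. The block below is a toy stand-in, not the MKHE scheme used in the model: it is a symmetric additive-masking scheme (so key generation returns only a secret key and no public key), it uses a non-cryptographic random generator, and every function and parameter name is hypothetical. It only illustrates two properties: ciphertexts produced under different keys can be added, and the result decrypts only when the keys of all contributing parties are supplied.

```python
import random
import secrets


def setup(modulus=256, num_pixels=16):
    """Setup: return the public parameters shared by every party."""
    return {"modulus": modulus, "num_pixels": num_pixels}


def keygen(pp):
    """Key generation: this symmetric toy returns only a secret key."""
    return secrets.randbits(64)


def _mask(pp, sk):
    # Expand a secret key into one pseudo-random mask value per pixel.
    # random.Random is NOT cryptographically secure; illustration only.
    rng = random.Random(sk)
    return [rng.randrange(pp["modulus"]) for _ in range(pp["num_pixels"])]


def encrypt(pp, sk, image):
    """Encryption: add the key-dependent mask to every pixel, modulo q."""
    q = pp["modulus"]
    return [(p + m) % q for p, m in zip(image, _mask(pp, sk))]


def add(pp, ct_a, ct_b):
    """Homomorphic addition of two ciphertexts (possibly under different keys)."""
    q = pp["modulus"]
    return [(a + b) % q for a, b in zip(ct_a, ct_b)]


def decrypt(pp, sks, ct):
    """Decryption: remove the mask of every secret key that contributed."""
    q = pp["modulus"]
    out = list(ct)
    for sk in sks:
        out = [(c - m) % q for c, m in zip(out, _mask(pp, sk))]
    return out


pp = setup(num_pixels=4)
sk1, sk2 = keygen(pp), keygen(pp)
img1, img2 = [10, 20, 30, 40], [1, 2, 3, 4]

ct_sum = add(pp, encrypt(pp, sk1, img1), encrypt(pp, sk2, img2))
recovered = decrypt(pp, [sk1, sk2], ct_sum)  # pixel-wise sum of img1 and img2
```

A real MKHE scheme additionally provides public keys and security under a well-defined hardness assumption; neither is modeled here.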

3.2. Accident Severity Classification Model

3.2.1. Accident Region Detection Using YOLO-v5

- Backbone: The backbone extracts the main features from the input image. YOLOv5 uses CSP (Cross Stage Partial Network) as its backbone to extract rich, informative features.

- Neck: The neck builds feature pyramids, which help the model generalize across object scales. They support the detection of the same object at different scales and sizes, and they help the model perform well on previously unseen data. Several feature pyramid techniques exist, such as FPN, PANet, and BiFPN; YOLOv5 uses PANet as its neck.

- Head: The head performs the final detection stage. It applies anchor boxes and constructs the final output vectors, which contain the class probabilities, objectness score, and bounding box.
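
As a minimal sketch of how one head output vector can be turned into a region of interest, the block below assumes the vector layout `[cx, cy, w, h, objectness, class probabilities...]` with a center-format box, and scores a detection as objectness times the best class probability. The function name, the threshold value, and the sample numbers are hypothetical and are not taken from the article.

```python
def decode_prediction(vec, conf_threshold=0.25):
    """Decode one head output vector into (box, class_id, score), or None.

    vec layout (assumed): [cx, cy, w, h, objectness, p_class0, p_class1, ...]
    """
    cx, cy, w, h, objectness = vec[:5]
    class_probs = vec[5:]

    # Pick the most probable class and combine it with the objectness score.
    class_id = max(range(len(class_probs)), key=class_probs.__getitem__)
    score = objectness * class_probs[class_id]
    if score < conf_threshold:
        return None  # too uncertain to be used as an accident ROI

    # Convert the center-format box to corner format (x1, y1, x2, y2).
    box = (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
    return box, class_id, score


# Hypothetical output vector for a two-class detector.
detection = decode_prediction([50.0, 40.0, 20.0, 10.0, 0.9, 0.1, 0.8])
```

The corner-format box can then be used to crop the ROI from the image before feature extraction.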

3.2.2. Xception Based Feature Extraction

3.2.3. Severity Classification Using Optimal BiGRU Model

4. Experimental Validation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


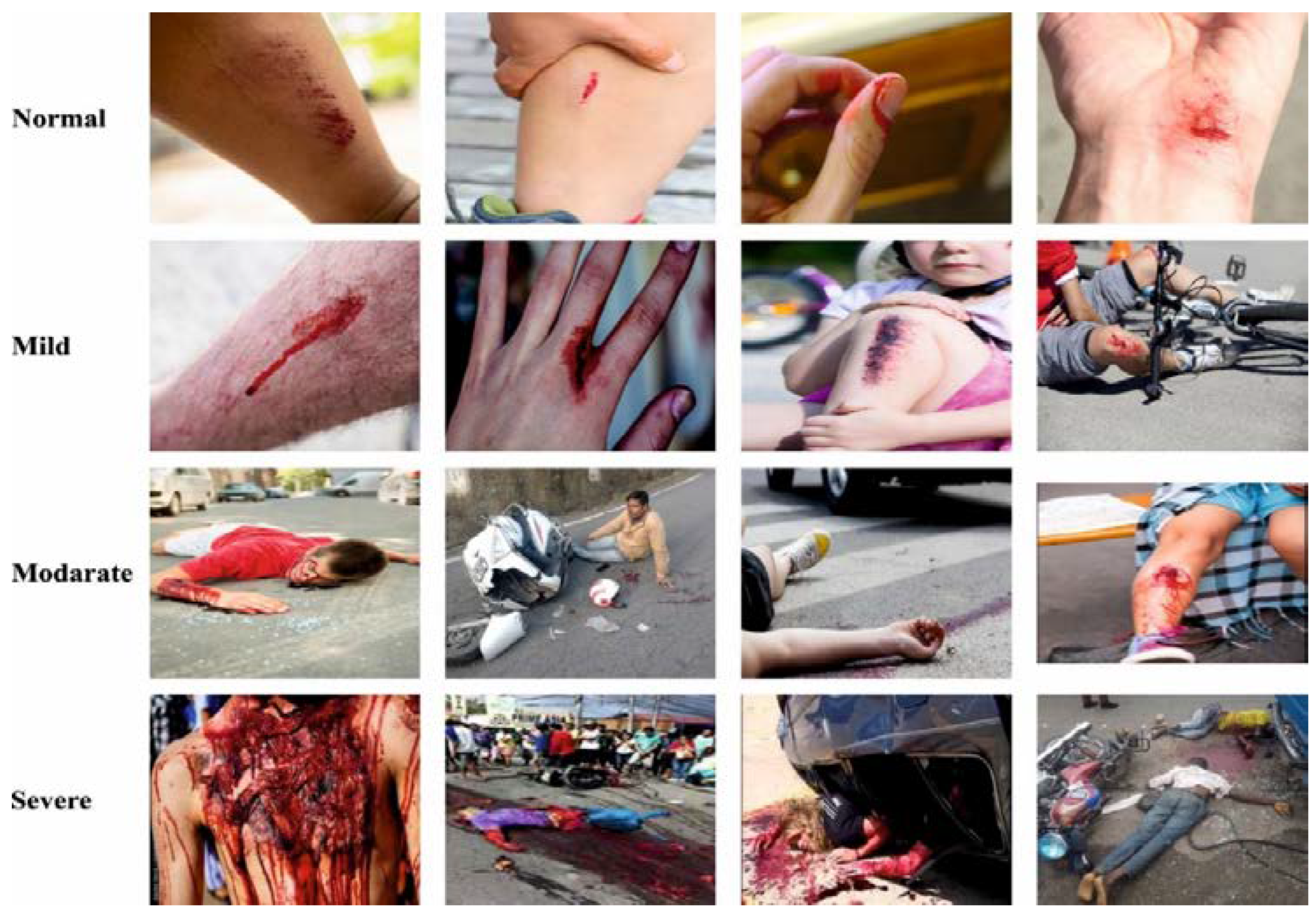

| Class | No. of Instances |
|---|---|
| Normal | 5000 |
| Low | 5000 |
| Medium | 5000 |
| High | 5000 |
| Total Number of Instances | 20,000 |

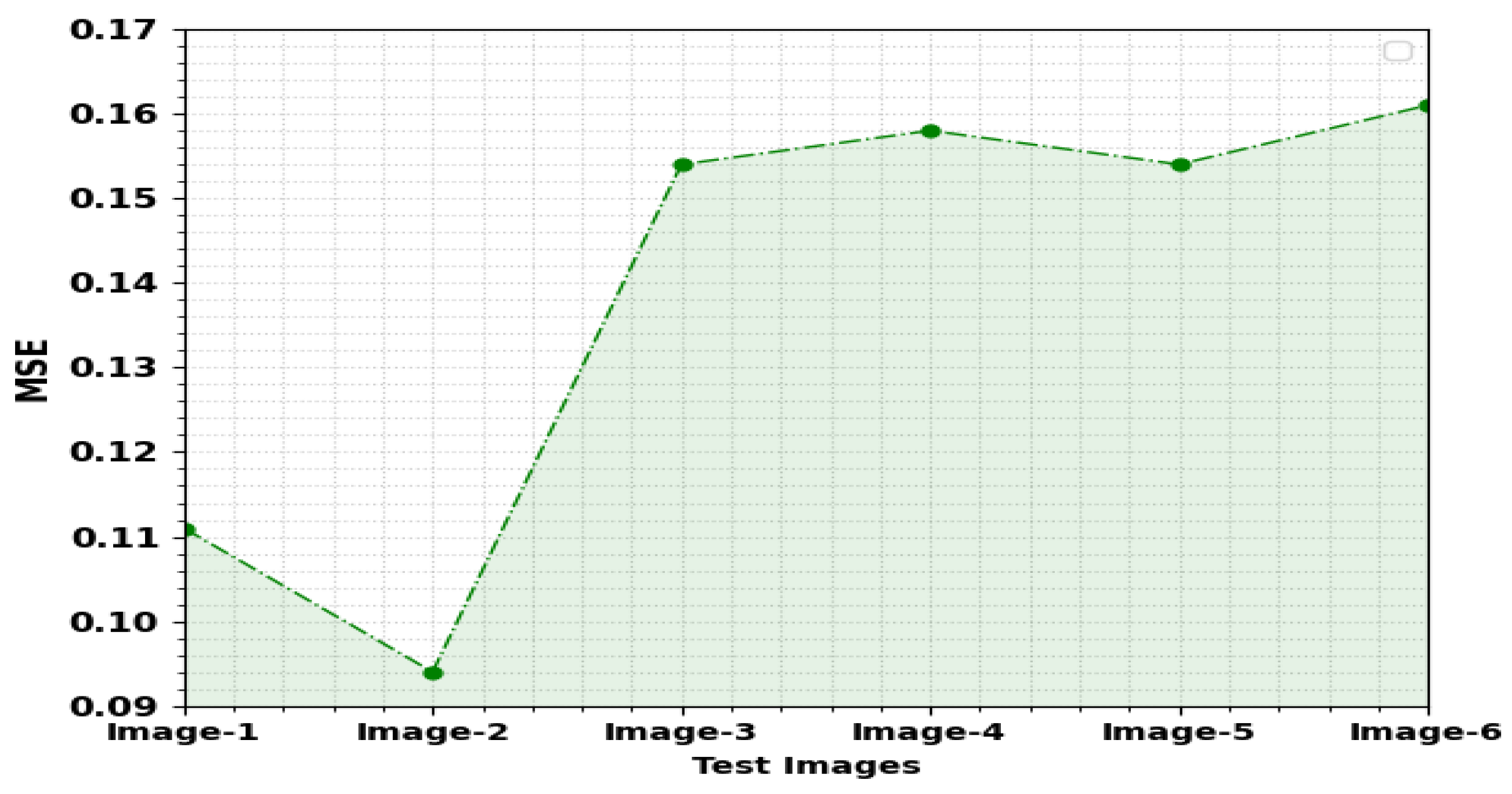

| Test Images | MSE | RMSE | PSNR (dB) | SSIM (%) |
|---|---|---|---|---|
| Image1 | 0.1110 | 0.3332 | 57.68 | 99.81 |
| Image2 | 0.0940 | 0.3066 | 58.40 | 99.95 |
| Image3 | 0.1540 | 0.3924 | 56.26 | 99.95 |
| Image4 | 0.1580 | 0.3975 | 56.14 | 99.86 |
| Image5 | 0.1540 | 0.3924 | 56.26 | 99.80 |
| Image6 | 0.1610 | 0.4012 | 56.06 | 99.87 |
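
The MSE, RMSE, and PSNR columns above are related by the standard definitions RMSE = sqrt(MSE) and PSNR = 10 log10(MAX^2 / MSE). The sketch below assumes 8-bit images (MAX = 255), an assumption under which the tabulated values are reproduced; the function names are illustrative.

```python
import math


def mse(original, reconstructed):
    """Mean squared error between two equally sized pixel sequences."""
    return sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)


def rmse_from_mse(mse_value):
    """Root mean squared error."""
    return math.sqrt(mse_value)


def psnr_from_mse(mse_value, max_pixel=255.0):
    """Peak signal-to-noise ratio in dB; max_pixel=255 assumes 8-bit images."""
    return 10.0 * math.log10(max_pixel ** 2 / mse_value)


# Reproduce the Image1 row from its MSE of 0.1110.
image1_rmse = rmse_from_mse(0.1110)  # about 0.3332
image1_psnr = psnr_from_mse(0.1110)  # about 57.68 dB
```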

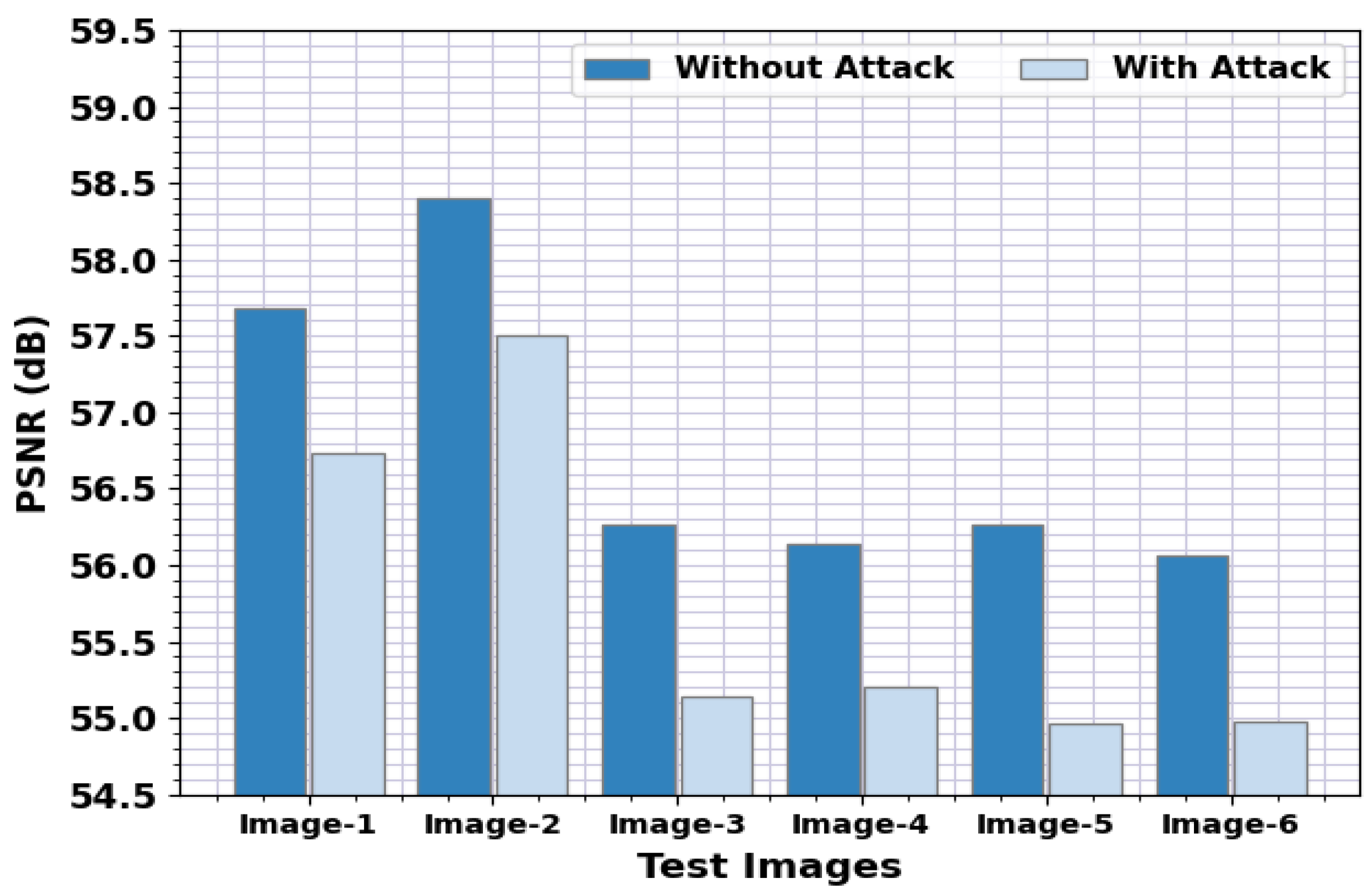

| Test Images | PSNR Without Attack (dB) | PSNR With Attack (dB) |
|---|---|---|
| Image-1 | 57.68 | 56.73 |
| Image-2 | 58.40 | 57.50 |
| Image-3 | 56.26 | 55.14 |
| Image-4 | 56.14 | 55.21 |
| Image-5 | 56.26 | 54.96 |
| Image-6 | 56.06 | 54.98 |

All values are PSNR (dB).

| Test Images | PPIE-ODLASC | MSC-OKG | HSP-ECC | OGWO-ECC | DM-CM |
|---|---|---|---|---|---|
| Image-1 | 57.68 | 55.14 | 51.60 | 48.45 | 45.37 |
| Image-2 | 58.40 | 54.54 | 51.84 | 49.56 | 46.47 |
| Image-3 | 56.26 | 54.02 | 51.77 | 48.26 | 45.88 |
| Image-4 | 56.14 | 52.00 | 48.47 | 45.69 | 43.17 |
| Image-5 | 56.26 | 51.65 | 48.89 | 46.49 | 43.21 |
| Image-6 | 56.06 | 53.86 | 50.36 | 47.72 | 44.69 |

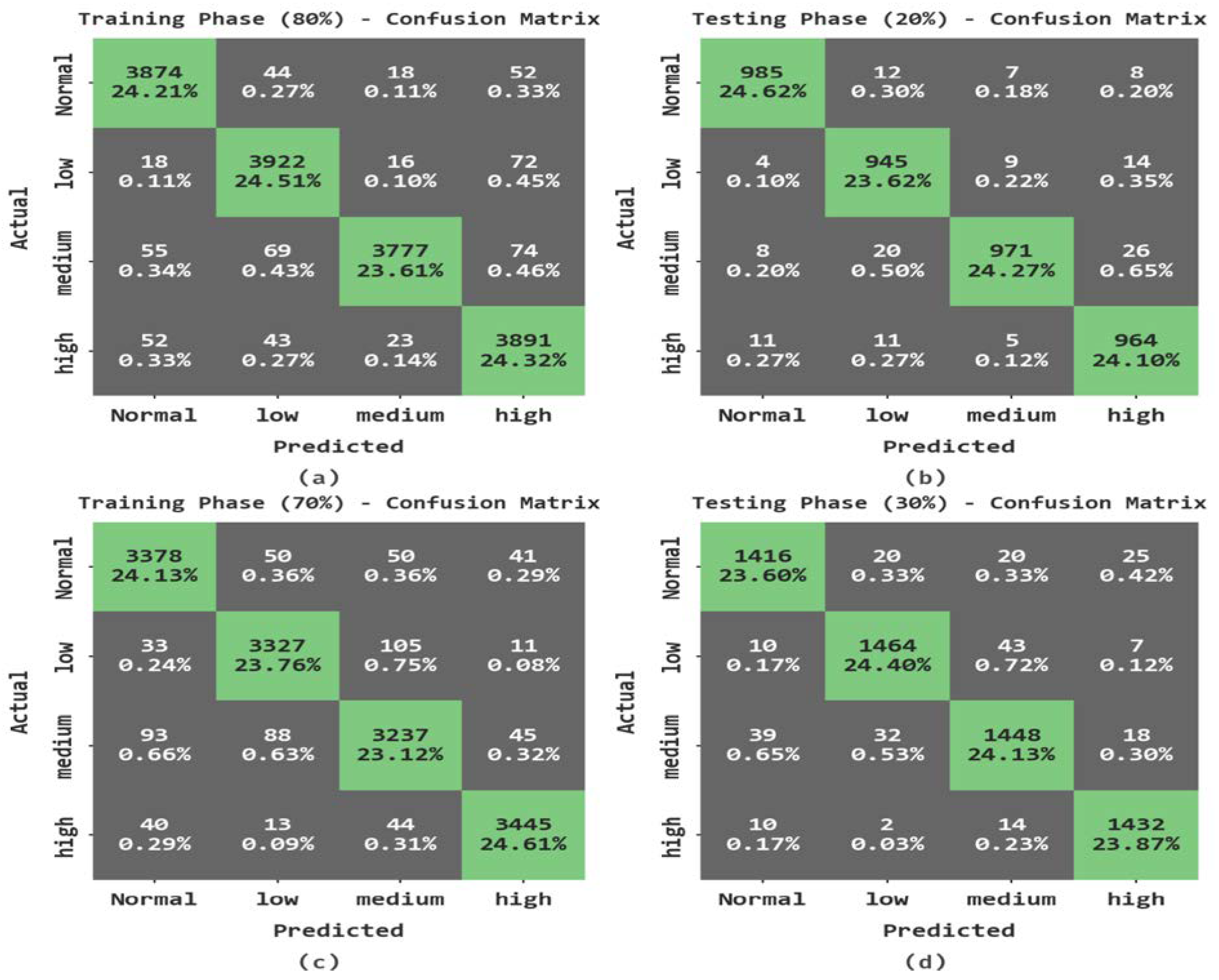

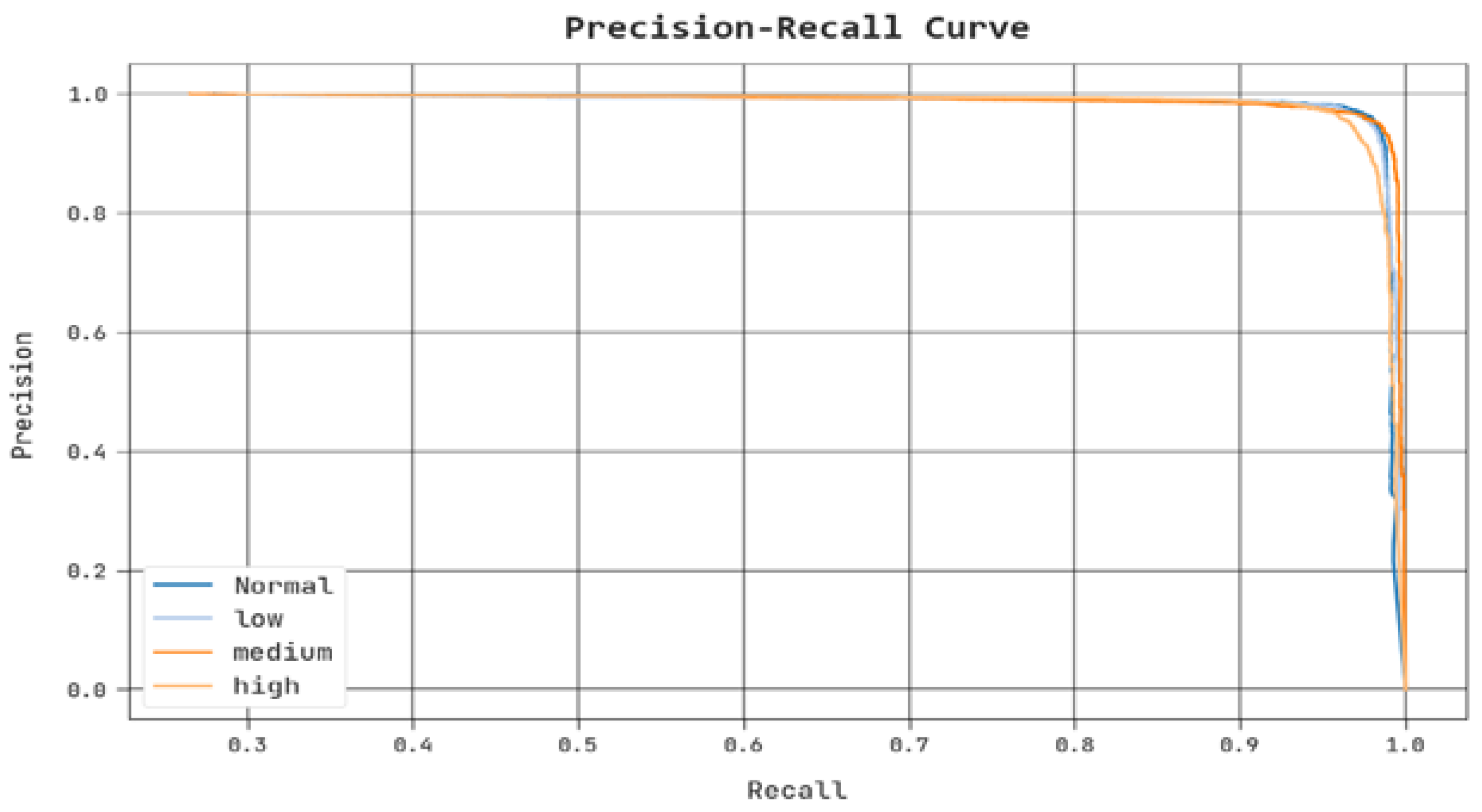

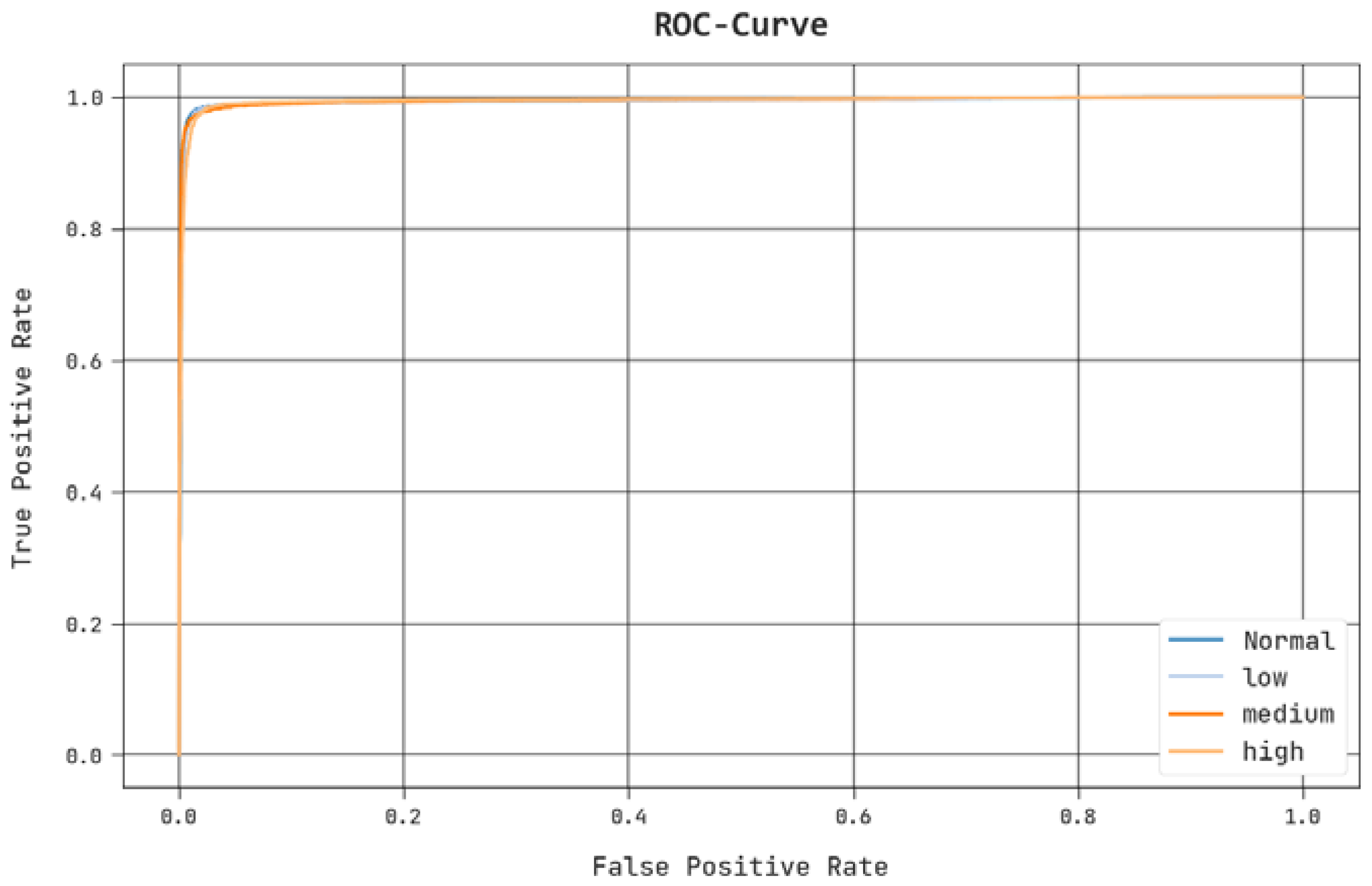

| Class | Accuracy | Precision | Recall | F-Score | MCC |
|---|---|---|---|---|---|
| Training Phase (80%) | | | | | |
| Normal | 98.51 | 96.87 | 97.14 | 97.01 | 96.01 |
| Low | 98.36 | 96.17 | 97.37 | 96.77 | 95.67 |
| Medium | 98.41 | 98.51 | 95.02 | 96.73 | 95.71 |
| High | 98.02 | 95.16 | 97.06 | 96.10 | 94.78 |
| Average | 98.32 | 96.68 | 96.65 | 96.65 | 95.54 |
| Testing Phase (20%) | | | | | |
| Normal | 98.75 | 97.72 | 97.33 | 97.52 | 96.69 |
| Low | 98.25 | 95.65 | 97.22 | 96.43 | 95.28 |
| Medium | 98.12 | 97.88 | 94.73 | 96.28 | 95.05 |
| High | 98.12 | 95.26 | 97.28 | 96.26 | 95.01 |
| Average | 98.31 | 96.63 | 96.64 | 96.62 | 95.51 |
| Training Phase (70%) | | | | | |
| Normal | 97.81 | 95.32 | 95.99 | 95.65 | 94.19 |
| Low | 97.86 | 95.66 | 95.71 | 95.69 | 94.26 |
| Medium | 96.96 | 94.21 | 93.47 | 93.84 | 91.83 |
| High | 98.61 | 97.26 | 97.26 | 97.26 | 96.33 |
| Average | 97.81 | 95.61 | 95.61 | 95.61 | 94.15 |
| Testing Phase (30%) | | | | | |
| Normal | 97.93 | 96.00 | 95.61 | 95.81 | 94.43 |
| Low | 98.10 | 96.44 | 96.06 | 96.25 | 94.98 |
| Medium | 97.23 | 94.95 | 94.21 | 94.58 | 92.72 |
| High | 98.73 | 96.63 | 98.22 | 97.41 | 96.58 |
| Average | 98.00 | 96.00 | 96.03 | 96.01 | 94.68 |
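
For reference, the per-class measures in a table of this kind can be computed from one-vs-rest confusion counts. The sketch below uses the standard definitions of accuracy, precision, recall, F-score, and the Matthews correlation coefficient (MCC). It assumes that the second to fourth numeric columns above are precision, recall, and F-score (the tabulated values are consistent with the F-score being their harmonic mean). The confusion counts in the example are made up for illustration and do not come from the article.

```python
import math


def f_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


def one_vs_rest_metrics(tp, fp, fn, tn):
    """Standard binary measures for one class against all other classes."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    mcc_denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f_score": f_score(precision, recall),
        "mcc": (tp * tn - fp * fn) / mcc_denominator,
    }


# Hypothetical confusion counts for a single class.
metrics = one_vs_rest_metrics(tp=90, fp=10, fn=5, tn=95)

# Consistency check against the "Low" row of the 80% training phase.
low_f = f_score(96.17, 97.37)  # about 96.77
```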

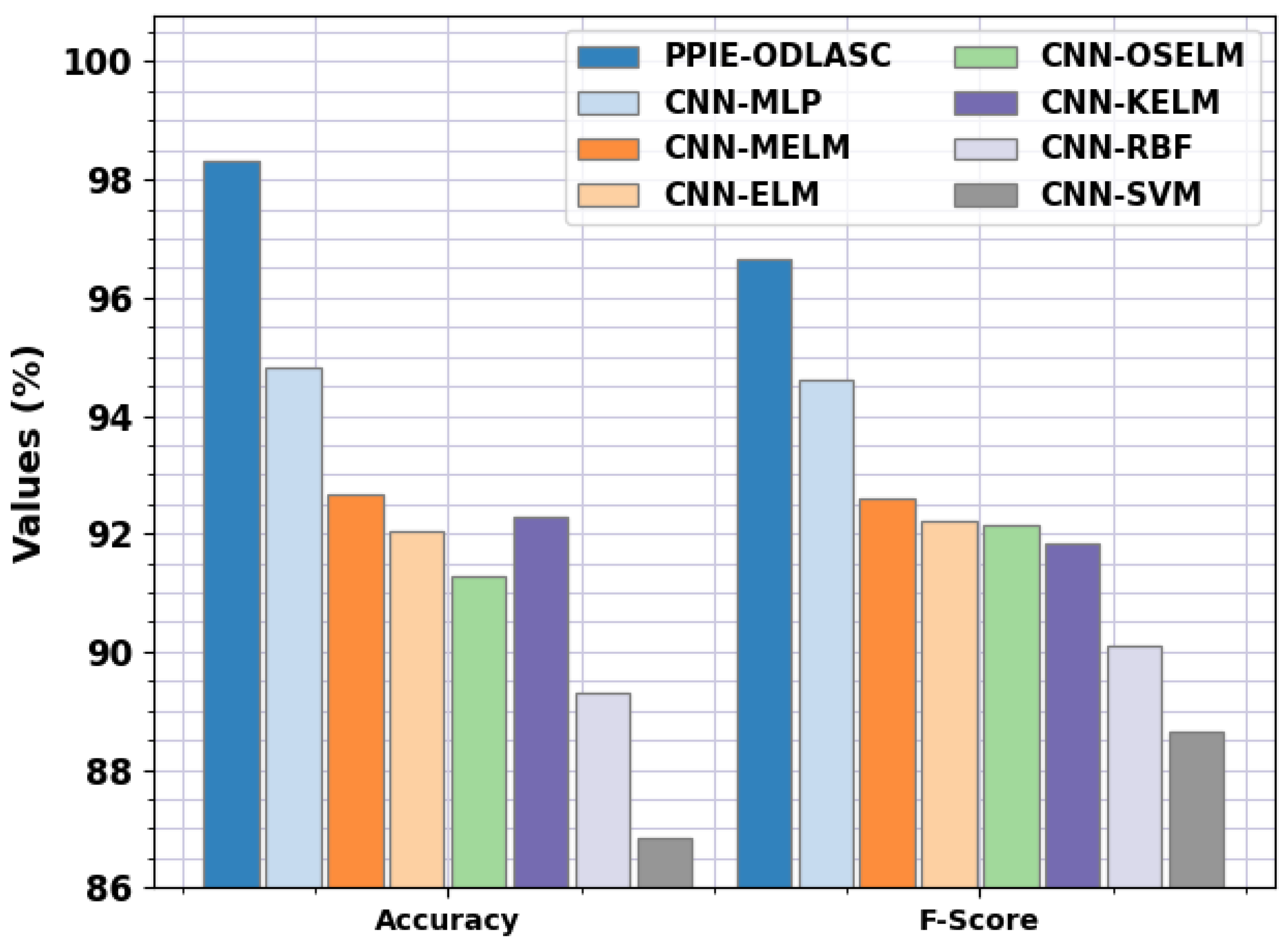

| Methods | Precision | Recall | F-Score | Accuracy | Training Time (s) |
|---|---|---|---|---|---|
| PPIE-ODLASC | 96.68 | 96.65 | 96.65 | 98.32 | 4.39 |
| CNN-MLP | 94.28 | 94.94 | 94.60 | 94.80 | 7.87 |
| CNN-MELM | 92.73 | 92.60 | 92.60 | 92.66 | 56.16 |
| CNN-ELM | 92.33 | 92.22 | 92.20 | 92.03 | 242.18 |
| CNN-OSELM | 92.16 | 92.22 | 92.13 | 91.28 | 942.86 |
| CNN-KELM | 92.05 | 91.84 | 91.84 | 92.29 | 295.14 |
| CNN-RBF | 89.40 | 89.70 | 90.10 | 89.30 | 10.94 |
| CNN-SVM | 88.66 | 89.00 | 88.66 | 86.83 | 206.74 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sirisha, U.; Chandana, B.S. Privacy Preserving Image Encryption with Optimal Deep Transfer Learning Based Accident Severity Classification Model. Sensors 2023, 23, 519. https://doi.org/10.3390/s23010519
