Siam Deep Feature KCF Method and Experimental Study for Pedestrian Tracking

Abstract

1. Introduction

2. Materials and Methods

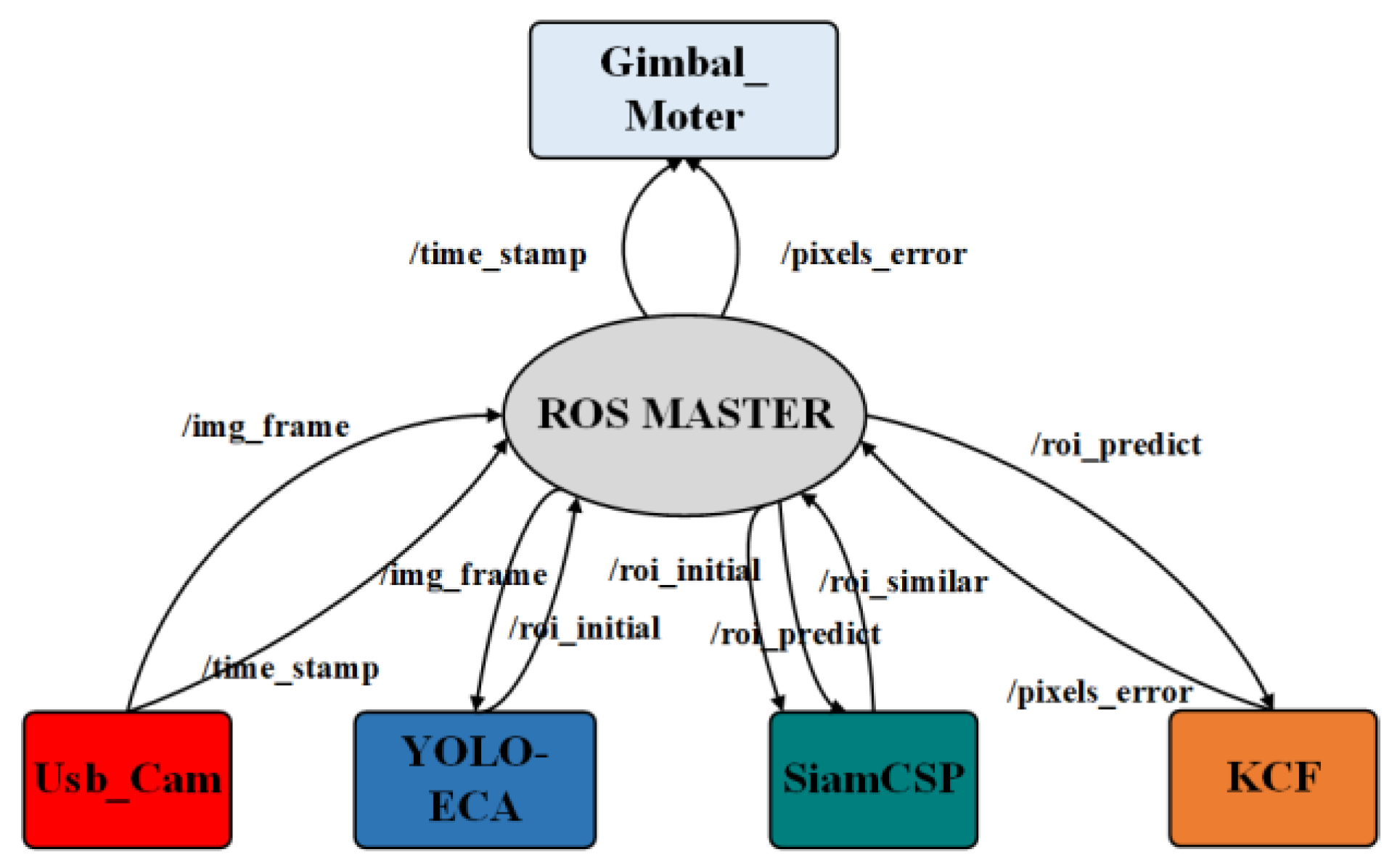

2.1. DFKCF Method Coupled with the SiamCSP

- (1) Feature extraction: The Usb_Cam node publishes each frame to the YOLO-ECA node, which detects the pedestrian in the frame and extracts the target feature (/roi_initial). Both the KCF node and the SiamCSP node receive this feature.

- (2) Feature tracking: The KCF node predicts the tracking box (/roi_predict), comprising both its size and its relative location, through correlation filtering. The location is published to the Gimbal_Motor node so that the camera can follow the pedestrian in real time. The SiamCSP node then compares the received feature (/roi_initial) with the predicted result (/roi_predict) to obtain the similarity (/similarity).

- (3) Feature re-extraction: If the image similarity (/similarity) falls below the minimum threshold, return to step (1) and recompute the correlation feature model in the KCF node. Otherwise, proceed to the next step.

- (4) Execution stage: The relative position of /roi_predict between the previous frame and the current frame is calculated and sent to the Gimbal_Motor node to perform tracking.
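The four steps above can be read as a single control loop. The following is a minimal sketch of that loop, not the authors' ROS implementation: the callables `detect`, `kcf_init`, `kcf_update`, and `similarity` are hypothetical stand-ins for the YOLO-ECA, KCF, and SiamCSP nodes, the threshold value 0.5 is an assumption, and re-detection is assumed to happen on the same frame in which the similarity drops.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h) in pixels


@dataclass
class StepResult:
    roi_predict: Box
    similarity: Optional[float]            # None on frames that start from a detection
    redetected: bool                       # True when step (1) ran on this frame
    offset: Optional[Tuple[float, float]]  # centre shift relative to the previous frame


def center(box: Box) -> Tuple[float, float]:
    x, y, w, h = box
    return (x + w / 2.0, y + h / 2.0)


def run_pipeline(frames, detect, kcf_init, kcf_update, similarity, threshold=0.5):
    """Hypothetical single-process version of steps (1)-(4)."""
    log = []
    roi_initial = kcf_state = prev_box = None
    for frame in frames:
        redetected, score = False, None
        if kcf_state is None:
            # Step (1): detect the target and build the correlation filter model.
            roi_initial = detect(frame)
            kcf_state = kcf_init(frame, roi_initial)
            roi_predict, redetected = roi_initial, True
        else:
            # Step (2): predict the box, then score it against the initial ROI.
            roi_predict = kcf_update(kcf_state, frame)
            score = similarity(roi_initial, roi_predict)
            if score < threshold:
                # Step (3): similarity too low, so go back to step (1).
                roi_initial = detect(frame)
                kcf_state = kcf_init(frame, roi_initial)
                roi_predict, redetected = roi_initial, True
        # Step (4): relative position between previous and current frame,
        # which would be handed to the gimbal controller.
        offset = None
        if prev_box is not None:
            (px, py), (cx, cy) = center(prev_box), center(roi_predict)
            offset = (cx - px, cy - py)
        prev_box = roi_predict
        log.append(StepResult(roi_predict, score, redetected, offset))
    return log


# Toy stand-ins (assumptions for illustration only).
def _detect(frame):
    return (10.0 * frame, 0.0, 4.0, 8.0)


def _kcf_init(frame, box):
    return {"box": box}


def _kcf_update(state, frame):
    x, y, w, h = state["box"]
    state["box"] = (x + 1.0, y, w, h)  # drift one pixel to the right per frame
    return state["box"]


def _similarity(a, b):
    return 1.0 - abs(a[0] - b[0]) / 2.0


log = run_pipeline(range(4), _detect, _kcf_init, _kcf_update, _similarity)
```

With these toy stand-ins the tracker drifts away from the initial ROI, so the third frame triggers a re-detection while the other frames only report the centre offset.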

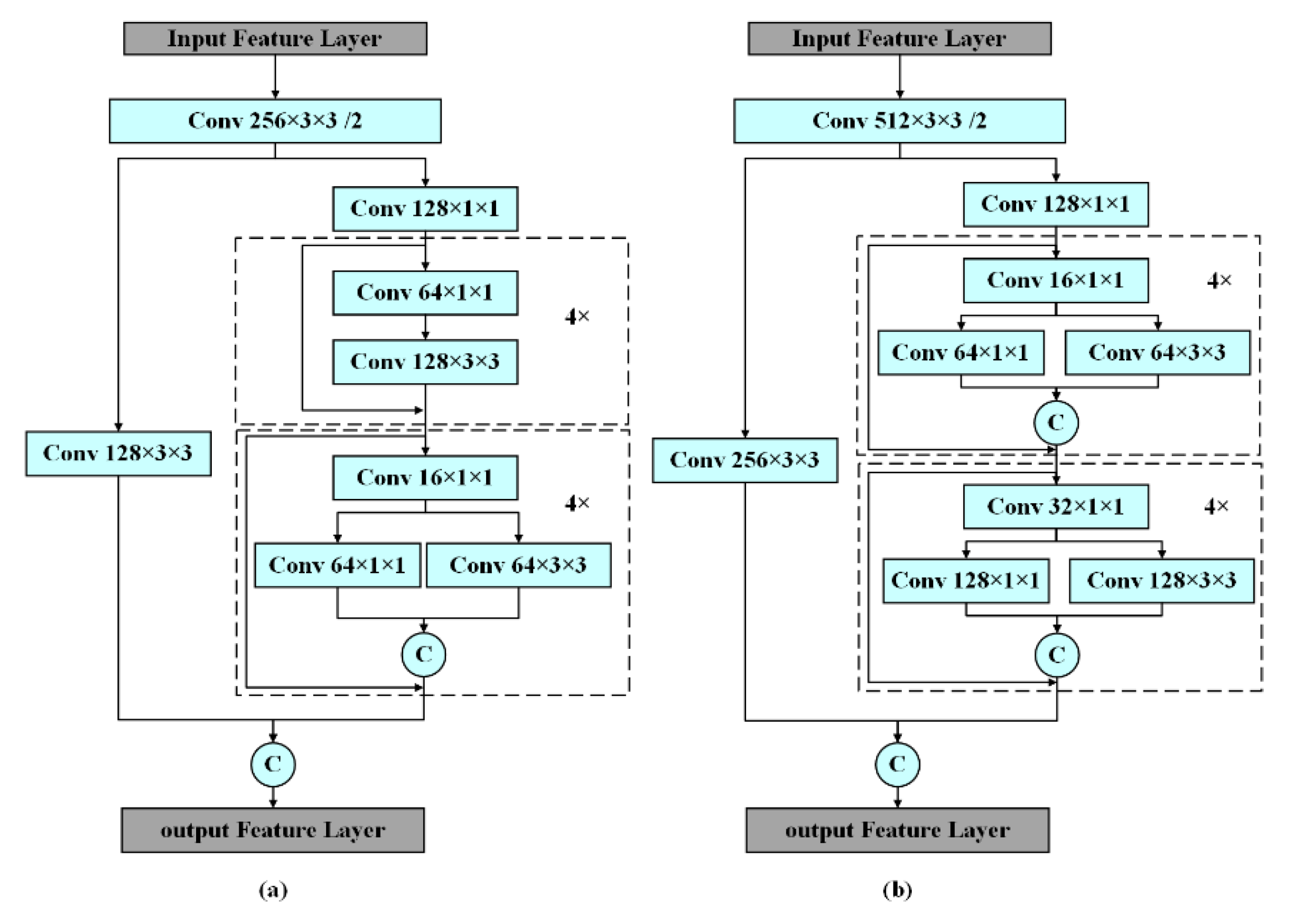

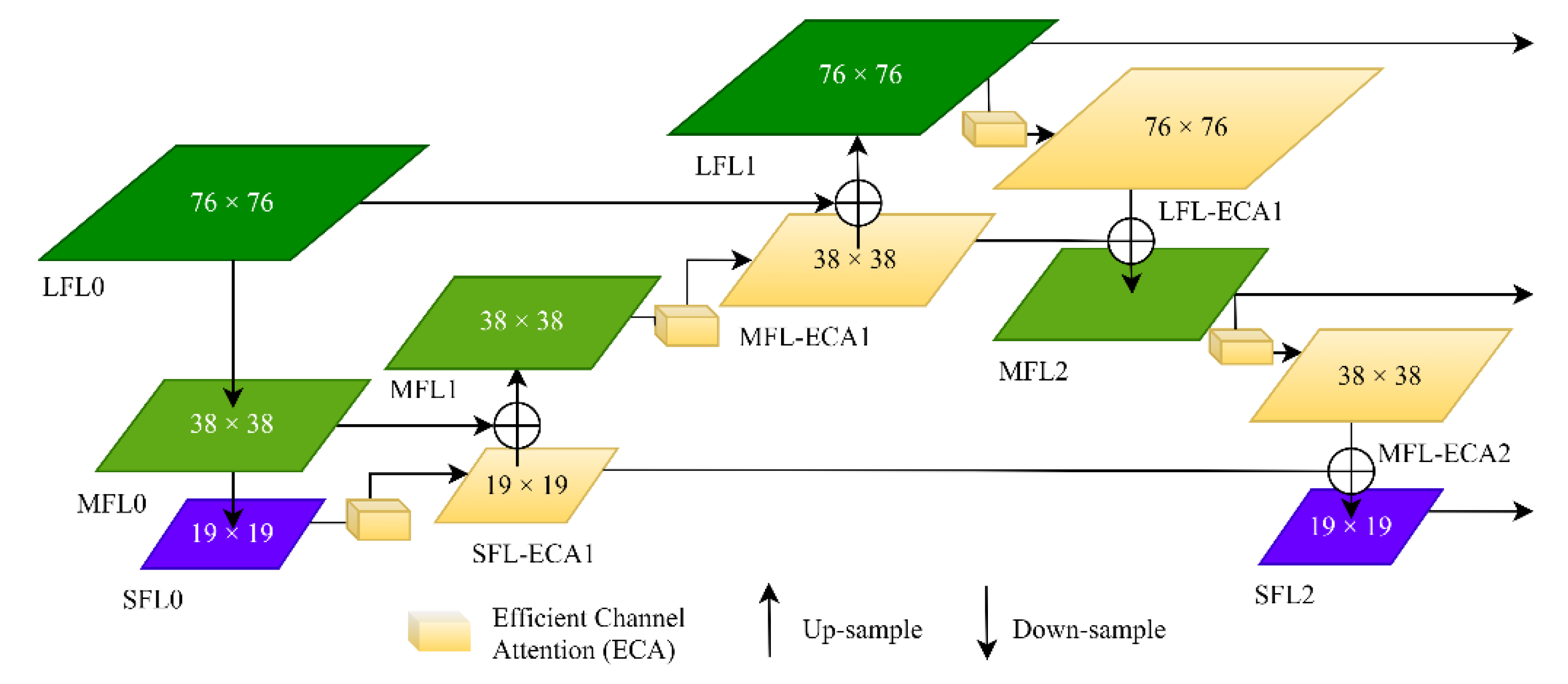

2.2. An Improved YOLOv3 Based on Efficient Channel Attention

- (1) Feature Extraction Network

- (2) Feature Pyramid Network (FPN)

- (3) Detection Network

2.3. The Siamese CNN with the Cross Stage Partial

- (1) Feature Enhancement Network

- (2) Feature Extraction Layer

- (3) Decision Layer

- (4) Loss Function

2.4. Improved Loss Function of the Siam-DFKCF Model

3. Experiments and Results

3.1. Dummy Tracking Experiment Results

3.1.1. Dataset

3.1.2. Experiment Arrangement

3.1.3. Experimental Analysis

- Anti-occlusion performance of the algorithm

- Scale adaptation performance of the algorithm

- Loss and re-tracking of targets

3.2. Pedestrian Tracking Experiment Results

3.2.1. Dataset

3.2.2. Evaluation Criterion

- (1) Distance Precision (DP)

- (2) Overlap Precision (OP)
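The two criteria can be illustrated with a short sketch. It assumes the conventional tracking-benchmark definitions, which may differ in detail from the ones used in this study: DP is taken as the fraction of frames whose centre location error is within a pixel threshold, and OP as the fraction of frames whose intersection-over-union with the ground truth exceeds a threshold. The default thresholds (20 px and 0.5) and all function names are assumptions.

```python
from math import hypot
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h) in pixels


def center_error(pred: Box, gt: Box) -> float:
    """Euclidean distance between the centres of two boxes."""
    px, py = pred[0] + pred[2] / 2.0, pred[1] + pred[3] / 2.0
    gx, gy = gt[0] + gt[2] / 2.0, gt[1] + gt[3] / 2.0
    return hypot(px - gx, py - gy)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def distance_precision(preds: Sequence[Box], gts: Sequence[Box], max_error: float = 20.0) -> float:
    """Fraction of frames with centre error <= max_error (assumed threshold)."""
    hits = sum(center_error(p, g) <= max_error for p, g in zip(preds, gts))
    return hits / len(gts)


def overlap_precision(preds: Sequence[Box], gts: Sequence[Box], min_iou: float = 0.5) -> float:
    """Fraction of frames with IoU > min_iou (assumed threshold)."""
    hits = sum(iou(p, g) > min_iou for p, g in zip(preds, gts))
    return hits / len(gts)


# Four frames with a fixed ground-truth box and increasingly poor predictions.
gts = [(0.0, 0.0, 10.0, 10.0)] * 4
preds = [(0.0, 0.0, 10.0, 10.0), (5.0, 0.0, 10.0, 10.0),
         (30.0, 0.0, 10.0, 10.0), (0.0, 2.0, 10.0, 10.0)]
dp = distance_precision(preds, gts)  # 3 of 4 centres lie within 20 px
op = overlap_precision(preds, gts)   # 2 of 4 boxes overlap by more than 0.5
```

The example shows why the two numbers can disagree: the second prediction is close enough in centre distance to count for DP but overlaps too little to count for OP.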

3.2.3. Experiment Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Type | Filters | Size | Output |
|---|---|---|---|---|
| | Conv | 32 | 3 × 3/2 | 32 × 304 × 304 |
| | Conv | 64 | 3 × 3/1 | 64 × 304 × 304 |
| | Conv | 64 | 3 × 3/2 | 64 × 152 × 152 |
| | Conv | 128 | 3 × 3/1 | 128 × 152 × 152 |
| Conv 128 × 3 × 3 | Conv | 256 | 3 × 3/2 | 256 × 76 × 76 |
| | Conv | 128 | 1 × 1/1 | |
| 4× | Conv | 64 | 1 × 1/1 | |
| | Conv | 128 | 3 × 3/1 | |
| | Residual | | | |
| 4× | Conv | 16 | 1 × 1/1 | |
| | Conv | 64 | 1 × 1/1 | |
| | Conv | 64 | 3 × 3/1 | |
| | Fire | | | |
| Conv 256 × 3 × 3 | Conv | 512 | 3 × 3/2 | 512 × 38 × 38 |
| | Conv | 128 | 1 × 1/1 | |
| 4× | Conv | 16 | 1 × 1/1 | |
| | Conv | 64 | 1 × 1/1 | |
| | Conv | 64 | 3 × 3/1 | |
| | Fire | | | |
| 4× | Conv | 32 | 1 × 1/1 | |
| | Conv | 128 | 1 × 1/1 | |
| | Conv | 128 | 3 × 3/1 | |
| | Fire | | | |
| 1× | Conv | 512 | 3 × 3/2 | 512 × 19 × 19 |
| | SPP (Direct, Conv Max 5 × 5, Conv Max 9 × 9, Conv Max 13 × 13) | | | 1024 × 19 × 19 |
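The spatial sizes in the Output column of the table above can be checked with the standard convolution output-size formula. This is only a sanity-check sketch: the 608 × 608 input resolution and the padding of 1 for 3 × 3 kernels are assumptions that are consistent with the listed sizes but are not stated in the table.

```python
def conv_out(size: int, kernel: int = 3, stride: int = 1, padding: int = 1) -> int:
    """Output width/height of a convolution: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


# Strides of the 3 x 3 convolutions that carry an Output entry in the table.
strides = (2, 1, 2, 1, 2, 2, 2)

size = 608  # assumed input resolution, not given in the table
sizes = []
for stride in strides:
    size = conv_out(size, stride=stride)
    sizes.append(size)

# A 1 x 1 convolution with stride 1 and no padding keeps the spatial size.
same_size = conv_out(76, kernel=1, stride=1, padding=0)
```

Under these assumptions each stride-2 layer halves the resolution, reproducing the 304, 152, 76, 38, and 19 entries.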

| | | Type | Filters | Size | Output |
|---|---|---|---|---|---|
| Large | 3× | Convolutional | 256 | 3 × 3/1 | 256 × 76 × 76 |
| | | BN | | | |
| | | LeakyReLU | | | |
| Medium | 3× | Convolutional | 512 | 3 × 3/1 | 512 × 38 × 38 |
| | | BN | | | |
| | | LeakyReLU | | | |
| Small | 3× | Convolutional | 1024 | 3 × 3/1 | 1024 × 19 × 19 |
| | | BN | | | |
| | | LeakyReLU | | | |

| | Type | Filters | Size | Output |
|---|---|---|---|---|
| | Resize | | | 3 × 304 × 304 |
| | POSHE | | | 3 × 304 × 304 |
| Conv 64 × 3 × 3 | Conv | 128 | 3 × 3/2 | 128 × 152 × 152 |
| | Conv | 64 | 3 × 3/1 | |
| 1× | Conv | 32 | 1 × 1/1 | |
| | Conv | 64 | 3 × 3/1 | |
| | Residual | | | |
| | Conv | 64 | 3 × 3/1 | |
| | MaxPooling | | 2 × 2 | 128 × 76 × 76 |
| Conv 128 × 3 × 3 | Conv | 128 | 3 × 3 | 256 × 38 × 38 |
| | Conv | 64 | 3 × 3/1 | |
| 1× | Conv | 32 | 1 × 1/1 | |
| | Conv | 64 | 3 × 3/1 | |
| | Residual | | | |
| | Conv | 128 | 3 × 3/1 | |
| | MaxPooling | | 2 × 2/1 | 256 × 19 × 19 |
| | Conv | 512 | 3 × 3 | 512 × 19 × 19 |
| | SPP (Direct, Conv Max 5 × 5, Conv Max 9 × 9, Conv Max 13 × 13) | | | 512 × 7 × 7 |
| | FC | | | |
| | FC&Sigmoid | | | Similarity |

| Number | Tracking Distance (m) | Linear Velocity (m/s) | Angular Velocity (rad/s) |
|---|---|---|---|
| 1 | 5 | 1 | 0 |
| 2 | 5 | 1 | |
| 3 | 5 | 2 | 0 |
| 4 | 5 | 2 | |
| 5 | 5 | 3 | 0 |
| 6 | 5 | 3 | |

| Detection Method | Difference in Size Between the Initial and Final Tracking Box | | | | | |
|---|---|---|---|---|---|---|
| | 1 | | 2 | | 3 | |
| | 0 | | 0 | | 0 | |
| Siam-DFKCF | 1.72% | 1.52% | 4.55% | 2.6% | 5.56% | 4.44% |
| Traditional KCF | 3.33% | 20.99% | 4.76% | 28.57% | 6.41% | 38.75% |

| Method | DP | OP | FPS | GPU Cost | CPU Cost |
|---|---|---|---|---|---|
| TLD | 0.653 | 0.628 | 32 | 0 | 36 |
| KCF | 0.694 | 0.645 | 241 | 0 | 33 |
| SiamRPN | 0.868 | 0.843 | 134 | 32 | 0 |
| DaSiamRPN | 0.917 | 0.892 | 117 | 34 | 0 |
| Proposed method | 0.934 | 0.909 | 93 | 23 | 18 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tang, D.; Jin, W.; Liu, D.; Che, J.; Yang, Y. Siam Deep Feature KCF Method and Experimental Study for Pedestrian Tracking. Sensors 2023, 23, 482. https://doi.org/10.3390/s23010482