Abstract

Radio signals are corrupted by noise during channel transmission, which distorts the signal. Denoising radio signals is an effective way to eliminate the impact of noise. Traditional denoising methods based on signal processing often require expert domain knowledge, whereas deep learning (DL)-based denoising reduces this dependence. To address noise reduction in radio communication signals, this paper proposes a radio communication signal denoising method based on the relativistic average generative adversarial network (RaGAN). The method combines RaGAN with the bidirectional long short-term memory (Bi-LSTM) model, which is well suited to processing time-series data, and uses a weighted loss function to construct a noise reduction model for radio communication signals, realizing end-to-end denoising. Experimental results show that, compared with existing methods, the proposed algorithm significantly improves the noise reduction effect. At low signal-to-noise ratio (SNR), the signal modulation recognition accuracy improves by about 10% after noise reduction.

1. Introduction

As a prerequisite for signal demodulation, radio signal modulation recognition is an important research topic in communications. It plays an important role in military and civil communications and is of great significance to future 6G communications [1]. However, owing to the complexity of the transmission environment and imperfections in the receiving equipment, the received signal always contains some noise, which makes modulation recognition difficult. Modulation recognition algorithms have developed rapidly in recent years, but they share a common problem: recognition accuracy is unsatisfactory in low-SNR scenarios [2]. Denoising the received signal is an effective way to address this problem. A noise reduction algorithm that suppresses noise while preserving the basic characteristics of the signal is therefore of great significance for signal modulation recognition.

Signal denoising, the extraction of clean signals from noisy ones, has long been a challenging problem in signal processing, and effective denoising is of great significance for radio communication. Most existing denoising methods are based on classical signal processing, such as wavelet transform (WT)-based methods [3,4] and empirical mode decomposition (EMD)-based methods [5]. The performance of WT-based denoising depends closely on the number of decomposition levels, the wavelet basis function, and the threshold selection. Too few decomposition levels limit the denoising effect and too many cause signal distortion, while the wavelet basis and the optimal threshold are usually chosen empirically. Although EMD-based denoising has advantages for nonlinear and nonstationary signals, it suffers from problems such as mode aliasing (mode mixing) and end effects.

In contrast, DL can extract hidden features from data relatively accurately and conveniently, and it processes data efficiently. In recent years, DL has been widely used in speech processing [6], image processing [7], natural language processing [8], and other fields, and it has also been applied to signal processing. Because signal denoising remains a difficult problem in this field, some researchers have tried to solve it with DL; these methods are reviewed in Section 2. Although these works have achieved good results, they still have some drawbacks: (i) they cannot perfectly separate signal and noise [9,10,11]; (ii) existing noise reduction methods based on the generative adversarial network (GAN) [12] still suffer from long training times and slow convergence [13,14]; (iii) they require many signal sampling points, and when the number of sampling points is too small and the waveform is not distinct, they cannot achieve a satisfactory denoising effect [15].

To solve these problems, this paper proposes a RaGAN-based radio signal noise reduction method. The method operates on the time-domain waveform of the received radio signal. It replaces the original GAN with RaGAN [16] to accelerate model convergence and builds both the generator and the discriminator around Bi-LSTM [17], which effectively extracts the temporal characteristics of the signal and retains its essential feature information after noise reduction. Compared with existing methods, this method achieves a satisfactory denoising effect and requires fewer sampling points of the target signal. In addition, we use the CNN2 [18], IQCNet [19], and IQCLNet [19] classification networks to recognize the modulation of the denoised signals; denoising improves the recognition accuracy of low-SNR signals by about 10%, which is of great significance for signal modulation recognition.

To summarize, the main contributions of our work are:

- A noise reduction algorithm based on RaGAN is proposed for time-series data of radio communication signals;

- Our method preserves the essential characteristics of the signal after noise reduction of the radio signal;

- The experimental results show that the accuracy of modulation recognition is improved by about 10% after using this method to denoise the signal with low SNR compared with the signal before noise reduction.

The remainder of this paper is organized as follows. Section 2 describes related work. Section 3 introduces the background, including the problem definition, the system model, and the theory of GAN. Section 4 describes the noise reduction model, dataset, network structure, and loss function. Section 5 presents the experiments and discusses the results. Finally, Section 6 concludes the paper and outlines future work. Table 1 lists the abbreviations used in the paper.

Table 1.

List of abbreviations.

2. Related Work

In the past few years, DL-based research has become an active topic in signal processing [20], and many researchers have proposed DL-based signal denoising methods. These methods use a DL framework to extract signal features and denoise signals in an end-to-end manner. Wang et al. [21] preprocessed signals corrupted by impulsive noise before modulation recognition. With few labeled samples, denoising and task-driven learning extracted signal features more effectively and improved the modulation recognition accuracy of underwater acoustic signals. However, this method is suitable only for underwater impulsive noise environments and not for the additive white Gaussian noise (AWGN) channel. In References [13,14], the authors built a generative adversarial network around a convolutional neural network (CNN) to denoise electrocardiogram (ECG) signals. Although they improved the model with the least-squares generative adversarial network (LSGAN) [22], the conditional generative adversarial network (CGAN) [23], and other methods, and achieved better denoising than traditional methods, problems such as slow model convergence remain. In References [9,10,11], the authors transformed signal denoising into image denoising, processed the images with CNNs, and achieved good results for seismic and transient electromagnetic (TEM) signal denoising. This approach does not introduce large deviations in amplitude, time, or phase after denoising, but it cannot perfectly separate signal from noise. Soltani et al. [15] used an artificial neural network (ANN) to denoise measured radio frequency (RF) signals emitted by a partial discharge source, converting the denoising problem into a curve-fitting problem. However, this method denoises well only when there are enough sampling points and the waveform is distinct; with too few sampling points or an indistinct waveform, its denoising effect is poor.

3. Background

In this section, we describe the problem definition and system model. In addition, since the method we use is based on GAN, we also briefly explain its basic principle and an improved training method, namely RaGAN.

3.1. Problem Definition and System Model

Signals in a wireless channel are carried by electromagnetic waves propagating through space, and the channel also contains unwanted signals, collectively called noise. Noise is a form of channel interference; because it is superimposed on the signal, it is also called additive interference. Noise adversely affects signal transmission: it limits the information transmission rate and causes signal distortion.

White Gaussian noise models the additive noise in real channels and closely approximates the characteristics of channel noise [24]. The proposed method is therefore applied to the AWGN channel, in which the output signal $y$ is the superposition of the input signal $x$ and a Gaussian noise signal $n$:

$$y = x + n,$$

where $n \sim \mathcal{N}(0, \sigma^2)$. The purpose of denoising is to remove as much noise as possible from the noise-corrupted signal, producing the denoised signal $\hat{x}$, so as to minimize the expected error between the input signal and the denoised signal:

$$\min \; \mathbb{E}\left[\lVert x - \hat{x} \rVert_2^2\right],$$

where $\mathbb{E}[\cdot]$ represents the expectation operator.
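To make the AWGN model and the expected-error objective concrete, the sketch below simulates the channel and evaluates the trivial "do nothing" estimator (output the noisy signal unchanged). Its per-sample squared error should be close to the noise variance, which is the baseline any useful denoiser has to beat. The sinusoidal test signal and the value of sigma are arbitrary illustrative choices, not taken from the paper.

```python
import math
import random

def awgn_channel(x, sigma, rng):
    """Return y = x + n, with n drawn i.i.d. from N(0, sigma^2)."""
    return [xi + rng.gauss(0.0, sigma) for xi in x]

def mse(a, b):
    """Empirical mean squared error between two equal-length sequences."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) / len(a)

rng = random.Random(0)
sigma = 0.5  # illustrative noise standard deviation
# Hypothetical clean signal: a sampled sinusoid, not an RML2016.10a sample.
x = [math.sin(2 * math.pi * k / 16) for k in range(20_000)]
y = awgn_channel(x, sigma, rng)

# The identity estimator leaves all the noise in place,
# so its per-sample error should be close to sigma^2 = 0.25.
baseline = mse(x, y)
print(f"identity-estimator MSE: {baseline:.4f}")
```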

3.2. Generative Adversarial Network

GAN was proposed by Goodfellow et al. in 2014 [12] and soon became a research hotspot. A GAN consists of two parts, a discriminator (D) and a generator (G), which play an adversarial minimax game through alternating training. D tries to distinguish whether its input is real data or data generated by G, while G tries to make the distribution of its generated data $p_g$ as close as possible to the real data distribution $p_{\text{data}}$. In this way, G learns to create samples so close to real data that D can hardly distinguish them. The objective function of GAN is:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]$$

In the above formula, $z$ is the input drawn from the random distribution $p_z$, $V(D, G)$ is the combined objective of GAN, $G(z)$ is the data generated by G, and $D(x)$ and $D(G(z))$ are the probabilities, output by D, that real data and generated data, respectively, are real.

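Although GAN has achieved great success, its training can be unstable, and RaGAN alleviates this problem. The discriminator of a standard GAN estimates the probability that an input is real, whereas the relativistic average discriminator of RaGAN estimates the probability that real data $x_r$ are, on average, more realistic than generated data $x_f$. This helps G generate higher-quality samples and accelerates model convergence. The relativistic average discriminator $D_{Ra}$ is defined as:

$$D_{Ra}(x_r, x_f) = \sigma\left(C(x_r) - \mathbb{E}_{x_f \sim \mathbb{Q}}\left[C(x_f)\right]\right), \qquad D_{Ra}(x_f, x_r) = \sigma\left(C(x_f) - \mathbb{E}_{x_r \sim \mathbb{P}}\left[C(x_r)\right]\right)$$

In the above formula, $x_r \sim \mathbb{P}$ is real data, $x_f \sim \mathbb{Q}$ is generated data, $\sigma$ is the sigmoid function, and $C(x)$ is the untransformed output of the discriminator, i.e., $D(x) = \sigma(C(x))$. The RaGAN losses of D and G, $L_D^{Ra}$ and $L_G^{Ra}$, are, respectively:

$$L_D^{Ra} = -\mathbb{E}_{x_r \sim \mathbb{P}}\left[\log D_{Ra}(x_r, x_f)\right] - \mathbb{E}_{x_f \sim \mathbb{Q}}\left[\log\left(1 - D_{Ra}(x_f, x_r)\right)\right]$$

$$L_G^{Ra} = -\mathbb{E}_{x_f \sim \mathbb{Q}}\left[\log D_{Ra}(x_f, x_r)\right] - \mathbb{E}_{x_r \sim \mathbb{P}}\left[\log\left(1 - D_{Ra}(x_r, x_f)\right)\right]$$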
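As an illustration of the RaGAN discriminator and generator losses [16], the sketch below computes both from raw (pre-sigmoid) discriminator scores C(x) for one batch. Batch means stand in for the expectations, and the log-sigmoid terms are rewritten with a numerically stable softplus. The function names and example scores are hypothetical; this is a framework-free sketch, not the authors' implementation.

```python
import math

def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def mean(values):
    return sum(values) / len(values)

def ragan_losses(c_real, c_fake):
    """Relativistic average GAN losses from raw (pre-sigmoid) outputs C(x).

    c_real: C(x_r) for a batch of real samples
    c_fake: C(x_f) for a batch of generated samples
    Batch means stand in for the expectations. Returns (loss_d, loss_g).
    """
    m_real, m_fake = mean(c_real), mean(c_fake)
    # Identities used: -log(sigmoid(z))     = softplus(-z)
    #                  -log(1 - sigmoid(z)) = softplus(z)
    loss_d = (mean([softplus(-(c - m_fake)) for c in c_real])
              + mean([softplus(c - m_real) for c in c_fake]))
    loss_g = (mean([softplus(-(c - m_real)) for c in c_fake])
              + mean([softplus(c - m_fake) for c in c_real]))
    return loss_d, loss_g

# A confident discriminator: real scores high, generated scores low.
loss_d, loss_g = ragan_losses([5.0, 4.0], [-5.0, -4.0])
print(loss_d, loss_g)  # small discriminator loss, large generator loss
```

The generator loss is the discriminator loss with the roles of real and generated data swapped, so unlike a standard GAN the generator also receives gradient through the real-data term.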

4. Signal Denoising Method Based on RaGAN

In this section, the noise reduction model and dataset details used are described, and the design of the network structure is given in detail. Finally, the design of the loss function is given.

4.1. Denoising Model

Noise in the wireless channel interferes with radio signal transmission. Reducing the noise component of the received signal helps restore the original useful signal. Separating signal from noise with traditional denoising methods usually requires considerable expert knowledge, whereas DL-based noise reduction reduces this dependence. This paper therefore proposes a RaGAN-based noise reduction algorithm for radio communication signals. The noise reduction model and its training and testing processes are shown in Figure 1.

Figure 1.

Signal noise reduction model based on RaGAN and training and testing process.

During training, the noisy signal is fed to the generator, which produces a signal by extracting features of the input. The generated signal and the corresponding clean signal are then fed to the discriminator in turn, which judges whether its input was produced by the generator or is a noise-free clean signal. The model parameters are optimized iteratively with the error backpropagation algorithm until the discriminator can no longer tell whether its input is a generated signal or a clean signal. Once training is complete, the generator has learned to map a noisy input signal to the corresponding clean signal and can be used to restore noisy signals. This is the principle of RaGAN noise reduction in this paper.

4.2. Dataset

This paper uses the public dataset RML2016.10a [25]. Because this dataset was originally designed for signal modulation recognition, we adapted it for noise reduction training: the 18 dB SNR signals of eight modulation types in RML2016.10a were denoised and smoothed with wavelets to form clean signal samples. We then added white Gaussian noise at randomly chosen SNRs to the clean samples to generate the corresponding noisy signal samples. In practice, an overly high SNR is unrealistically ideal, while at an overly low SNR the signal characteristics are completely buried in noise. The SNR of the noisy signals therefore ranges from −8 dB to 10 dB in steps of 2 dB. Example signals are shown in Figure 2. There are 80,000 signal samples in total; details of the dataset are given in Table 2.
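The text does not show how noise was injected, so the sketch below assumes the usual definition: Gaussian noise is scaled so that the ratio of the measured clean-signal power to the noise power equals the target SNR. It also enumerates the SNR grid given above. Two further assumptions are labeled in the code: the 80,000 samples are assumed to be spread evenly over eight modulations and ten SNR levels, and a real-valued cosine stands in for an RML2016.10a sample (which is really complex I/Q data).

```python
import math
import random

def add_awgn(clean, snr_db, rng):
    """Add white Gaussian noise so that P_signal / P_noise equals snr_db (in dB)."""
    p_signal = sum(s * s for s in clean) / len(clean)
    p_noise = p_signal / (10 ** (snr_db / 10))
    sigma = math.sqrt(p_noise)
    return [s + rng.gauss(0.0, sigma) for s in clean]

# SNR grid from the text: -8 dB to 10 dB in 2 dB steps (10 levels).
SNR_GRID_DB = list(range(-8, 11, 2))

# ASSUMPTION: samples are spread evenly over 8 modulations x 10 SNR levels.
samples_per_pair = 80_000 // (8 * len(SNR_GRID_DB))  # -> 1000

rng = random.Random(42)
# HYPOTHETICAL stand-in for a clean sample (long, so the SNR estimate is stable).
clean = [math.cos(2 * math.pi * k / 8) for k in range(50_000)]
snr_db = rng.choice(SNR_GRID_DB)
noisy = add_awgn(clean, snr_db, rng)

# Check: the measured SNR of the result should match the target closely.
residual = [n - c for n, c in zip(noisy, clean)]
measured = 10 * math.log10(sum(c * c for c in clean) / sum(e * e for e in residual))
print(snr_db, round(measured, 2))
```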

Figure 2.

Radio signal dataset: (a) original clean signal waveform; (b) noisy signal waveform.

Table 2.

Dataset details.

4.3. Network Structure

Extracting temporal information is a key step in radio signal processing. Bi-LSTM [17] consists of a forward long short-term memory (LSTM) [26] network and a backward LSTM, which overcomes the inability of a unidirectional LSTM to encode information from later time steps into earlier ones. It effectively extracts the temporal features of signals and performs well on time-series data, so we build both the generator and the discriminator around Bi-LSTM.
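The bidirectional idea can be seen in a toy example. The sketch below implements a standard LSTM cell with scalar input and a hidden size of 1 (the paper uses 128 hidden nodes), runs it forward over the sequence and, with separate weights, over the reversed sequence, and pairs the two states at each time step. All weights are arbitrary, untrained values chosen for illustration. Changing only the last sample leaves the forward state at the first step untouched but changes the backward state there, which is exactly the "future context" a unidirectional LSTM lacks.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_pass(xs, w):
    """Run a scalar-input, hidden-size-1 LSTM cell over xs; return all hidden states."""
    h, c, states = 0.0, 0.0, []
    for x in xs:
        i = sigmoid(w["wi"] * x + w["ui"] * h + w["bi"])    # input gate
        f = sigmoid(w["wf"] * x + w["uf"] * h + w["bf"])    # forget gate
        o = sigmoid(w["wo"] * x + w["uo"] * h + w["bo"])    # output gate
        g = math.tanh(w["wg"] * x + w["ug"] * h + w["bg"])  # candidate cell value
        c = f * c + i * g
        h = o * math.tanh(c)
        states.append(h)
    return states

def bilstm(xs, w_fwd, w_bwd):
    """Pair each forward state with the backward state for the same time step."""
    fwd = lstm_pass(xs, w_fwd)
    bwd = lstm_pass(xs[::-1], w_bwd)[::-1]  # run on reversed input, then realign
    return list(zip(fwd, bwd))

# Arbitrary illustrative weights (not trained).
W_FWD = dict(wi=0.5, ui=0.1, bi=0.0, wf=0.3, uf=0.2, bf=1.0,
             wo=0.4, uo=0.1, bo=0.0, wg=0.9, ug=0.3, bg=0.0)
W_BWD = dict(wi=0.6, ui=-0.2, bi=0.1, wf=-0.3, uf=0.1, bf=1.0,
             wo=0.2, uo=0.3, bo=0.0, wg=1.1, ug=-0.4, bg=0.0)

xs = [0.0, 0.5, -0.3, 0.8]
out_a = bilstm(xs, W_FWD, W_BWD)
out_b = bilstm(xs[:-1] + [-0.8], W_FWD, W_BWD)  # change only the last sample
# The forward state at t = 0 cannot see the change; the backward state at t = 0 can.
print(out_a[0], out_b[0])
```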

4.3.1. Generator Network Structure

As shown in Figure 3, the generator consists of two fully connected (FC) layers and two Bi-LSTM layers. The input and output dimensions of the generator are both 128. Each Bi-LSTM layer has an input dimension of 1, 128 hidden nodes, and an output dimension of 128. The FC layer outputs are passed through Dropout [27] and a Leaky ReLU nonlinearity. The generator first applies layer normalization (LN) [28] to the input signal, then extracts the waveform and temporal features of the noisy signal through the FC mapping and the Bi-LSTM layers to denoise it. Before output, it reverses the normalization to ensure the generalization ability of the model, and it finally outputs the denoised signal.
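One plausible reading of "normalize on input, restore before output" is sketched below: each sample is normalized to zero mean and unit variance, the statistics are kept, and the network output is mapped back to the input's scale with them. This is an assumption; it omits LN's learnable gain and bias, and `core` is a hypothetical placeholder for the FC and Bi-LSTM layers.

```python
import math

def normalize(x, eps=1e-8):
    """Per-sample normalization to zero mean and unit variance; also return the stats."""
    mu = sum(x) / len(x)
    var = sum((v - mu) ** 2 for v in x) / len(x)
    std = math.sqrt(var + eps)
    return [(v - mu) / std for v in x], (mu, std)

def restore(z, stats):
    """Undo normalize(): map a network output back to the input's scale."""
    mu, std = stats
    return [v * std + mu for v in z]

def denoise_with_restore(noisy, core):
    """Wrap a HYPOTHETICAL denoising core between normalization and restoration."""
    z, stats = normalize(noisy)
    return restore(core(z), stats)

x = [3.0, 5.0, 7.0, 9.0]
z, stats = normalize(x)
out = denoise_with_restore(x, lambda v: v)  # identity core: output equals input
```

Restoring the per-sample statistics lets the core operate on inputs of a fixed scale regardless of the received signal's amplitude.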

Figure 3.

Generator network structure.

4.3.2. Discriminator Network Structure

The discriminator network structure is shown in Figure 4. It also consists of two FC layers and two Bi-LSTM layers. The input dimension of the discriminator is 128, and its output dimension is 1. Each Bi-LSTM layer has an input dimension of 1, 128 hidden nodes, and an output dimension of 128. The FC layer outputs are passed through Leaky ReLU and Dropout to prevent overfitting. The discriminator first applies LN to its input signal, uses the Bi-LSTM layers to extract the waveform differences between real and generated signals, and then outputs the decision through the FC layer mapping.
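The post-FC processing described above (FC output, then Leaky ReLU, then Dropout) is sketched below in plain Python. The negative slope of 0.2 and the dropout rate of 0.5 are assumed values; the text does not state them. Dropout is written in the common "inverted" form, which rescales surviving units during training so that evaluation mode is simply the identity.

```python
import random

def linear(x, weights, bias):
    """Fully connected layer: y[j] = sum_i weights[j][i] * x[i] + bias[j]."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]

def leaky_relu(x, slope=0.2):
    """Pass positives unchanged; scale negatives by `slope` (0.2 is an assumed value)."""
    return [v if v > 0 else slope * v for v in x]

def dropout(x, p, training, rng):
    """Inverted dropout: zero each unit with probability p, rescale survivors by 1/(1-p)."""
    if not training or p == 0.0:
        return list(x)
    return [0.0 if rng.random() < p else v / (1.0 - p) for v in x]

def fc_block(x, weights, bias, p, training, rng):
    """FC -> Leaky ReLU -> Dropout, in the order given for the discriminator."""
    return dropout(leaky_relu(linear(x, weights, bias)), p, training, rng)

rng = random.Random(0)
W = [[1.0, -1.0], [0.5, 0.5]]  # illustrative 2x2 weights
b = [0.0, -2.0]
eval_out = fc_block([1.0, 2.0], W, b, p=0.5, training=False, rng=rng)   # about [-0.2, -0.1]
train_out = fc_block([3.0, 1.0], W, b, p=0.5, training=True, rng=rng)
```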

Figure 4.

Discriminator network structure.

4.4. Loss Function

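The definition of the loss function is crucial to model performance. This paper uses the relativistic discriminator. Whereas a standard discriminator judges whether the input is a clean signal $x_r$ or a generated signal $x_f$, the relativistic discriminator estimates the probability that the clean signal is more realistic than the generated signal. The discriminator loss is defined as:

$$L_D = -\mathbb{E}_{x_r}\left[\log D_{Ra}(x_r, x_f)\right] - \mathbb{E}_{x_f}\left[\log\left(1 - D_{Ra}(x_f, x_r)\right)\right]$$

The adversarial loss of the generator is expressed as:

$$L_{adv} = -\mathbb{E}_{x_f}\left[\log D_{Ra}(x_f, x_r)\right] - \mathbb{E}_{x_r}\left[\log\left(1 - D_{Ra}(x_r, x_f)\right)\right]$$

where $D_{Ra}(a, b) = \sigma\left(C(a) - \mathbb{E}_{b}\left[C(b)\right]\right)$ is the relativistic average discriminator, $C(\cdot)$ is the raw (pre-sigmoid) discriminator output, and $x_f = G(y)$ is the generator output for the noisy input $y$. Using the relativistic discriminator accelerates model convergence and enables the generator to produce higher-quality denoised signals.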

Inspired by [29,30], we express the overall generator loss as a weighted sum of a content loss and the adversarial loss, so that the generator is constrained by both:

$$L_G = L_{content} + \lambda L_{adv}$$

where $L_{content}$ represents the content loss, $L_{adv}$ represents the adversarial loss, and $\lambda$ is a coefficient balancing the two terms. Under the joint constraint of the content and adversarial losses, the generator can better restore noisy signals to clean signals $x_r$. The content loss is constructed from $L_{MSE}$ and $L_{MAE}$:

$$L_{MSE} = \frac{1}{N} \sum_{i=1}^{N} \left(x_r(i) - x_f(i)\right)^2, \qquad L_{MAE} = \frac{1}{N} \sum_{i=1}^{N} \left\lvert x_r(i) - x_f(i) \right\rvert$$

In the above formulas, $L_{MSE}$ is the mean squared error and $L_{MAE}$ is the mean absolute error between the denoised signal $x_f$ and the clean signal $x_r$, where $x_f = G(y)$ is the output of the generator for the noisy input $y$ and $N$ is the length of a single sample. The content loss $L_{content}$ combines these two terms.
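The weighted generator objective can be sketched as follows. Two points are assumptions, not taken from the paper: the content loss is taken as the unweighted sum of MSE and MAE, and the coefficient multiplies the adversarial term (as in SRGAN-style objectives [29]). The adversarial loss is passed in as a precomputed number, and the value 0.005 for the coefficient is simply one entry of the search grid in Section 5.1, not necessarily the value finally selected.

```python
def mse(x_f, x_r):
    """Mean squared error over a sample of length N."""
    return sum((a - b) ** 2 for a, b in zip(x_f, x_r)) / len(x_r)

def mae(x_f, x_r):
    """Mean absolute error over a sample of length N."""
    return sum(abs(a - b) for a, b in zip(x_f, x_r)) / len(x_r)

def generator_loss(x_f, x_r, adv_loss, lam):
    """Total generator loss: content loss plus lam times the adversarial loss.

    ASSUMPTION: the content loss is the unweighted sum MSE + MAE; the exact
    combination used in the paper is not reproduced here.
    """
    content = mse(x_f, x_r) + mae(x_f, x_r)
    return content + lam * adv_loss

x_r = [0.0, 1.0, 0.0, -1.0]   # clean sample (illustrative)
x_f = [0.1, 0.9, 0.0, -1.2]   # generator output (illustrative)
total = generator_loss(x_f, x_r, adv_loss=1.0, lam=0.005)
print(round(total, 6))  # 0.015 (MSE) + 0.1 (MAE) + 0.005 * 1.0 = 0.12
```

A small coefficient keeps the content term dominant, so the output stays close to the clean waveform while the adversarial term pushes it toward realistic signal structure.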

5. Experiments

In this section, the proposed method is applied to wireless signal noise reduction, and the training details and parameters are described. To verify the effectiveness of the proposed method, we compare it with existing algorithms and analyze the results. Finally, we analyze the effect of the Bi-LSTM configuration. The hardware and software environment of the experiments is shown in Table 3.

Table 3.

Configuration of hardware and software.

5.1. Training Details and Parameters

We split the dataset into a training set and a test set at a ratio of 4:1: the training set contains 64,000 signals and the test set 16,000. Because there are few hyperparameters, we select them by grid search. We first define the search ranges: the loss weight $\lambda \in \{0.5, 0.05, 0.005, 0.0005\}$, batch size $\in \{256, 512, 1024, 2048\}$, learning rate $\in \{0.0001, 0.001, 0.01, 0.1\}$, and optimizer $\in \{\text{Adam}\}$ [31]. We then compute the cost function for every hyperparameter combination on the validation set to obtain the optimal combination within these ranges. The number of epochs is determined by observing the convergence of the loss function. The final hyperparameters are shown in Table 4.
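An exhaustive search over the ranges above amounts to evaluating every element of their Cartesian product (4 × 4 × 4 × 1 = 64 configurations). The sketch below shows that loop. The key `lam` for the loss weight and the `toy_cost` function are hypothetical; in the paper, each configuration's cost is obtained by training the model and evaluating the loss on held-out data.

```python
import itertools

GRID = {
    "lam": [0.5, 0.05, 0.005, 0.0005],        # loss weight (hypothetical key name)
    "batch_size": [256, 512, 1024, 2048],
    "lr": [0.0001, 0.001, 0.01, 0.1],
    "optimizer": ["Adam"],
}

def grid_search(grid, evaluate):
    """Return (best_config, best_cost) minimizing evaluate(config) over the full grid."""
    names = list(grid)
    best_cfg, best_cost = None, float("inf")
    for values in itertools.product(*(grid[n] for n in names)):
        cfg = dict(zip(names, values))
        cost = evaluate(cfg)
        if cost < best_cost:
            best_cfg, best_cost = cfg, cost
    return best_cfg, best_cost

calls = []

def toy_cost(cfg):
    """HYPOTHETICAL stand-in for training and evaluating one configuration."""
    calls.append(cfg)
    return abs(cfg["lr"] - 0.001) + abs(cfg["lam"] - 0.005) + cfg["batch_size"] / 1e6

best, cost = grid_search(GRID, toy_cost)
print(len(calls), best)
```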

Table 4.

Hyperparameter of model.

The initial learning rate of both the generator and the discriminator is set to 0.001 and is halved after 50 training epochs. During training, the model is optimized iteratively by computing the loss function. After training, the final weights of the generator and discriminator are saved.
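The text does not say whether the learning rate is halved once or repeatedly. The sketch below assumes a repeating step decay (halve every 50 epochs), which matches PyTorch's `torch.optim.lr_scheduler.StepLR(optimizer, step_size=50, gamma=0.5)` when `scheduler.step()` is called once per epoch. For a single halving, replace the exponent with `min(epoch // step, 1)`.

```python
def learning_rate(epoch, base_lr=0.001, step=50, factor=0.5):
    """Step decay: multiply base_lr by `factor` once per completed block of `step` epochs."""
    return base_lr * factor ** (epoch // step)

for epoch in (0, 49, 50, 99, 100):
    print(epoch, learning_rate(epoch))
```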

5.2. Experimental Result

To verify the noise reduction performance of this method, we run the trained generator on the test set and compare its performance with WT, EMD, and standard GAN noise reduction models at different input SNRs. The output SNR is used as the metric of noise reduction performance:

$$SNR = 10 \log_{10}\left(\frac{P_s}{P_n}\right)$$

where $P_s$ and $P_n$ are the effective powers of the signal and the noise, respectively. The input SNR of the noisy test signals ranges from −8 dB to 10 dB in steps of 2 dB. Figure 5 compares the time-domain waveforms of an 8PSK signal before and after noise reduction by different methods.
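For the output SNR, a natural choice (assumed here, since the text does not define it) is to take the clean test signal as the signal and the residual between the denoised and clean signals as the noise. The sketch below computes that quantity; the denoising gain is then the output SNR minus the input SNR. The four-sample signals are illustrative only.

```python
import math

def power(v):
    """Mean power of a real-valued sequence."""
    return sum(s * s for s in v) / len(v)

def output_snr_db(denoised, clean):
    """10*log10(P_s / P_n) with P_s = power(clean), P_n = power(denoised - clean)."""
    residual = [d - c for d, c in zip(denoised, clean)]
    return 10 * math.log10(power(clean) / power(residual))

clean = [1.0, -1.0, 1.0, -1.0]
denoised = [1.1, -0.9, 1.1, -0.9]  # illustrative: residual of 0.1 at every sample
snr_out = output_snr_db(denoised, clean)  # about 20 dB
print(round(snr_out, 3))
```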

Figure 5.

Time-domain waveform comparison of 8PSK signal before and after noise reduction: (a) original clean signal waveform; (b) noisy signal waveform; (c) time-domain waveform of signal after RaGAN noise reduction; (d) time-domain waveform of signal after GAN noise reduction; (e) time-domain waveform of signal after WT noise reduction; (f) time-domain waveform of signal after EMD noise reduction.

As Figure 6 and Table 5 show, the noise reduction performance of this method is significantly better than that of the two traditional methods. The SNR improves by about 10 dB for input SNRs from −8 dB to 0 dB and by about 8 dB for input SNRs from 0 dB to 10 dB. The method also outperforms the standard GAN when the input SNR is above −2 dB. At an input SNR of 0 dB, its output SNR is about 4 dB higher than those of the WT and EMD methods. These results show that the method significantly improves signal noise reduction performance.

Figure 6.

Comparison of noise reduction performance of different methods.

Table 5.

Specific data of noise reduction performance comparison of different methods.

To verify that the model retains the original features of the signal after noise reduction, we use the RaGAN model to denoise the signals in the original RML2016.10a dataset and use the CNN2, IQCNet, and IQCLNet classification networks to recognize the modulation of the signals before and after noise reduction. Comparing the modulation recognition rates before and after RaGAN noise reduction indicates the degree to which the original signal features are retained. The hyperparameters of the classification network models are shown in Table 6.

Table 6.

Hyperparameter of the classification network model.

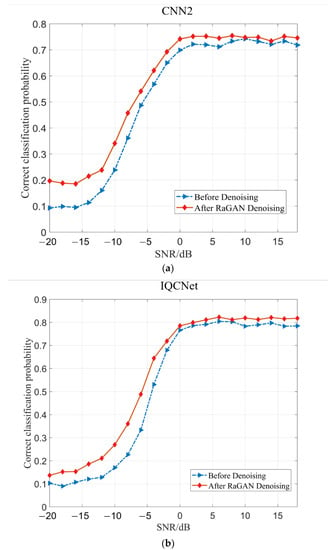

As Figure 7 shows, denoising the signal with this model effectively improves the accuracy of signal modulation recognition, especially at low SNR. In (a), the CNN2 classification network is used for modulation recognition; the recognition accuracy improves by nearly 10% for input SNRs between −20 dB and −14 dB. In (b), the IQCNet classification network is used; the accuracy improves by nearly 10% for input SNRs between −12 dB and −4 dB, and by nearly 5% between −20 dB and −12 dB. In (c), the IQCLNet classification network is used; the accuracy improves by nearly 10% for input SNRs between −14 dB and −8 dB, and by nearly 6% between −20 dB and −14 dB. At high SNR, however, the signal features are already distinct, so noise reduction enhances them less than it does for low-SNR signals, and the gain in recognition accuracy is small.

Figure 7.

Comparison of recognition rate before and after RaGAN noise reduction: (a) CNN2 recognition comparison; (b) IQCNet recognition comparison; (c) IQCLNet recognition comparison.

Because the algorithm targets communication applications, its computational complexity must also be considered. We processed a total of 1024 noisy signals with the proposed algorithm, WT, and EMD; the processing times are compared in Table 7. As Table 7 shows, the proposed algorithm takes the least time to process the same number of noisy signals, which favors its practical application.
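The paper does not describe how processing time was measured. A minimal harness for this kind of comparison is sketched below: it times one denoiser over a batch of 1024 signals with `time.perf_counter`. The moving-average filter and the dummy signals are hypothetical stand-ins, not WT, EMD, or the proposed model.

```python
import time

def time_batch(denoise, signals):
    """Wall-clock seconds for applying `denoise` to every signal in `signals`."""
    start = time.perf_counter()
    for s in signals:
        denoise(s)
    return time.perf_counter() - start

def moving_average(s, k=5):
    """HYPOTHETICAL stand-in denoiser: centered moving average with edge clipping."""
    half = k // 2
    out = []
    for i in range(len(s)):
        window = s[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out

signals = [[float((i * j) % 7) for j in range(128)] for i in range(1024)]  # 1024 x 128 dummy data
elapsed = time_batch(moving_average, signals)
print(f"{len(signals)} signals in {elapsed:.4f} s")
```

For a fair comparison with a neural network running on a GPU, batching and device synchronization (for example `torch.cuda.synchronize()` before reading the clock) can change the measured time substantially.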

Table 7.

Comparison of time spent in processing 1024 noisy signals.

5.3. Exploration of Bi-LSTM in the Model

During the experiments, we found that the number of hidden layer nodes in the Bi-LSTM layer affects the noise reduction performance of the model. To find the optimal number of hidden nodes, we experimentally compared different numbers of hidden layer nodes in Bi-LSTM.

As Figure 8 and Table 8 show, the noise reduction performance of the model is closely related to the number of hidden layer nodes in Bi-LSTM. Performance improves as the number of nodes increases and is best at 128 hidden nodes. Beyond 128 nodes, however, the model has a large number of parameters, and further increasing the number of nodes does not improve performance.
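To see how quickly the parameter count grows with the hidden size, the sketch below counts the trainable parameters of one bidirectional LSTM layer with the input dimension of 1 stated in Section 4.3. By default it assumes one bias vector per gate; PyTorch's `nn.LSTM` keeps two (`b_ih` and `b_hh`), which corresponds to `biases_per_gate=2`. The sweep values are hypothetical, since Table 8's node counts are not reproduced here.

```python
def bilstm_params(input_dim, hidden, biases_per_gate=1):
    """Trainable parameters of one bidirectional LSTM layer (both directions).

    Each direction has 4 gates; each gate has an input weight matrix
    (hidden x input_dim), a recurrent matrix (hidden x hidden) and bias(es).
    """
    per_direction = 4 * (hidden * (input_dim + hidden) + biases_per_gate * hidden)
    return 2 * per_direction

for h in (32, 64, 128, 256):  # hypothetical sweep values
    print(h, bilstm_params(1, h), bilstm_params(1, h, biases_per_gate=2))
```

Because the recurrent matrices are hidden × hidden, the count grows roughly quadratically: doubling the hidden size from 128 to 256 about quadruples the layer's parameters.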

Figure 8.

Comparison of noise reduction performance for different numbers of hidden nodes.

Table 8.

Specific data of the noise reduction performance comparison for different numbers of hidden layer nodes.

6. Conclusions

To address the degradation of radio communication signal reception quality, this paper proposes a RaGAN-based radio communication signal noise reduction model. The model builds both the generator and the discriminator around Bi-LSTM, which effectively extracts signal features and learns the temporal dependence of the data, and it is trained with a weighted loss function to achieve end-to-end denoising of radio signals. Compared with several other noise reduction methods, this method significantly improves signal noise reduction performance, and its effectiveness has been verified on the public dataset RML2016.10a.

However, in this work we processed the signal only in the time domain, which has certain limitations. In future work, we will combine deep learning with frequency-domain and other representations of the signal. In addition, our method mainly applies to signal propagation over the AWGN channel. In future research, we will apply the proposed method to Rayleigh fading channels to further verify its feasibility and improve the generalization ability of the model.

Author Contributions

Software, L.P.; data curation, M.W.; writing—original draft preparation, L.P.; writing—review and editing, Y.F.; project administration, Z.M.; funding acquisition, S.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Basic Research Projects of the Basic Strengthening Program, Grant Number 2020-JCJQ-ZD-071, and the National Key Laboratory of Science and Technology on Space Microwave, No. HTKJ2021KL504012.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Banafaa, M.; Shayea, I.; Din, J.; Azmi, M.H.; Alashbi, A.; Daradkeh, Y.I.; Alhammadi, A. 6G Mobile Communication Technology: Requirements, Targets, Applications, Challenges, Advantages, and Opportunities. Alex. Eng. J. 2022. [Google Scholar] [CrossRef]

- Xiao, W.; Luo, Z.; Hu, Q. A Review of Research on Signal Modulation Recognition Based on Deep Learning. Electronics 2022, 11, 2764. [Google Scholar] [CrossRef]

- Dautov, Ç.P.; Özerdem, M.S. Wavelet transform and signal denoising using Wavelet method. In Proceedings of the 2018 26th Signal Processing and Communications Applications Conference (SIU), Izmir, Turkey, 2–5 May 2018; pp. 1–4. [Google Scholar]

- Guo, T.; Zhang, T.; Lim, E.; Lopez-Benitez, M.; Ma, F.; Yu, L. A Review of Wavelet Analysis and Its Applications: Challenges and Opportunities. IEEE Access 2022, 10, 58869–58903. [Google Scholar] [CrossRef]

- Wei, H.; Qi, T.; Feng, G.; Jiang, H. Comparative research on noise reduction of transient electromagnetic signals based on empirical mode decomposition and variational mode decomposition. Radio Sci. 2021, 56, e2020RS007135. [Google Scholar] [CrossRef]

- Mohamed, A.; Lee, H.-y.; Borgholt, L.; Havtorn, J.D.; Edin, J.; Igel, C.; Kirchhoff, K.; Li, S.-W.; Livescu, K.; Maaløe, L. Self-Supervised Speech Representation Learning: A Review. arXiv 2022, arXiv:2205.10643. [Google Scholar] [CrossRef]

- Celard, P.; Iglesias, E.; Sorribes-Fdez, J.; Romero, R.; Vieira, A.S.; Borrajo, L. A survey on deep learning applied to medical images: From simple artificial neural networks to generative models. Neural Comput. Appl. 2022, 1–33. [Google Scholar] [CrossRef]

- Kaur, N.; Singh, P. Conventional and contemporary approaches used in text to speech synthesis: A review. Artif. Intell. Rev. 2022, 1–44. [Google Scholar] [CrossRef]

- Zhu, W.; Mousavi, S.M.; Beroza, G.C. Seismic signal denoising and decomposition using deep neural networks. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9476–9488. [Google Scholar] [CrossRef]

- Wang, M.; Lin, F.; Chen, K.; Luo, W.; Qiang, S. TEM-NLnet: A Deep Denoising Network for Transient Electromagnetic Signal with Noise Learning. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14. [Google Scholar] [CrossRef]

- Chen, K.; Pu, X.; Ren, Y.; Qiu, H.; Lin, F.; Zhang, S. TEMDNet: A novel deep denoising network for transient electromagnetic signal with signal-to-image transformation. IEEE Trans. Geosci. Remote Sens. 2020, 60, 1–18. [Google Scholar] [CrossRef]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. arXiv 2014, arXiv:1406.2661. [Google Scholar] [CrossRef]

- Singh, P.; Pradhan, G. A new ECG denoising framework using generative adversarial network. IEEE/ACM Trans. Comput. Biol. Bioinform. 2020, 18, 759–764. [Google Scholar] [CrossRef]

- Wang, X.; Chen, B.; Zeng, M.; Wang, Y.; Liu, H.; Liu, R.; Tian, L.; Lu, X. An ECG Signal Denoising Method Using Conditional Generative Adversarial Net. IEEE J. Biomed. Health Inform. 2022, 26, 2929–2940. [Google Scholar] [CrossRef]

- Soltani, A.A.; El-Hag, A. Denoising of radio frequency partial discharge signals using artificial neural network. Energies 2019, 12, 3485. [Google Scholar] [CrossRef]

- Jolicoeur-Martineau, A. The relativistic discriminator: A key element missing from standard GAN. arXiv 2018, arXiv:1807.00734. [Google Scholar] [CrossRef]

- Zhou, P.; Shi, W.; Tian, J.; Qi, Z.; Li, B.; Hao, H.; Xu, B. Attention-based bidirectional long short-term memory networks for relation classification. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), Berlin, Germany, 7–12 August 2016; pp. 207–212. [Google Scholar]

- O’Shea, T.J.; Corgan, J.; Clancy, T.C. Convolutional radio modulation recognition networks. In Proceedings of the International Conference on Engineering Applications of Neural Networks, Aberdeen, UK, 2–5 September 2016; pp. 213–226. [Google Scholar]

- Cui, T.S. A Deep Learning Method for Space-Based Electromagnetic Signal Recognition. Ph.D. Thesis, University of Chinese Academy of Sciences (National Center for Space Science, Chinese Academy of Sciences), Beijing, China, 2021. [Google Scholar]

- Senapati, R.K.; Tanna, P.J. Deep Learning-Based NOMA System for Enhancement of 5G Networks: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–15. [Google Scholar] [CrossRef]

- Wang, H.; Wang, B.; Li, Y. IAFNet: Few-Shot Learning for Modulation Recognition in Underwater Impulsive Noise. IEEE Commun. Lett. 2022, 26, 1047–1051. [Google Scholar] [CrossRef]

- Mao, X.; Li, Q.; Xie, H.; Lau, R.Y.; Wang, Z.; Paul Smolley, S. Least squares generative adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2794–2802. [Google Scholar]

- Odena, A.; Olah, C.; Shlens, J. Conditional Image Synthesis with Auxiliary Classifier GANs. In Proceedings of the 34th International Conference on Machine Learning, Proceedings of Machine Learning Research, Sydney, Australia, 6–11 August 2017; pp. 2642–2651. [Google Scholar]

- Awon, N.T.; Islam, M.; Rahman, M.; Islam, A. Effect of AWGN & Fading (Raleigh & Rician) channels on BER performance of a WiMAX communication System. arXiv 2012, arXiv:1211.4294. [Google Scholar] [CrossRef]

- O’Shea, T.J.; West, N. Radio Machine Learning Dataset Generation with GNU Radio. In Proceedings of the GNU Radio Conference, Charlotte, NC, USA, 20–24 September 2016. [Google Scholar]

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef]

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958. [Google Scholar]

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450. [Google Scholar] [CrossRef]

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690. [Google Scholar]

- Baby, D.; Verhulst, S. Sergan: Speech enhancement using relativistic generative adversarial networks with gradient penalty. In Proceedings of the ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 106–110. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).