Honeycomb Artifact Removal Using Convolutional Neural Network for Fiber Bundle Imaging

Abstract

1. Introduction

2. Materials and Methods

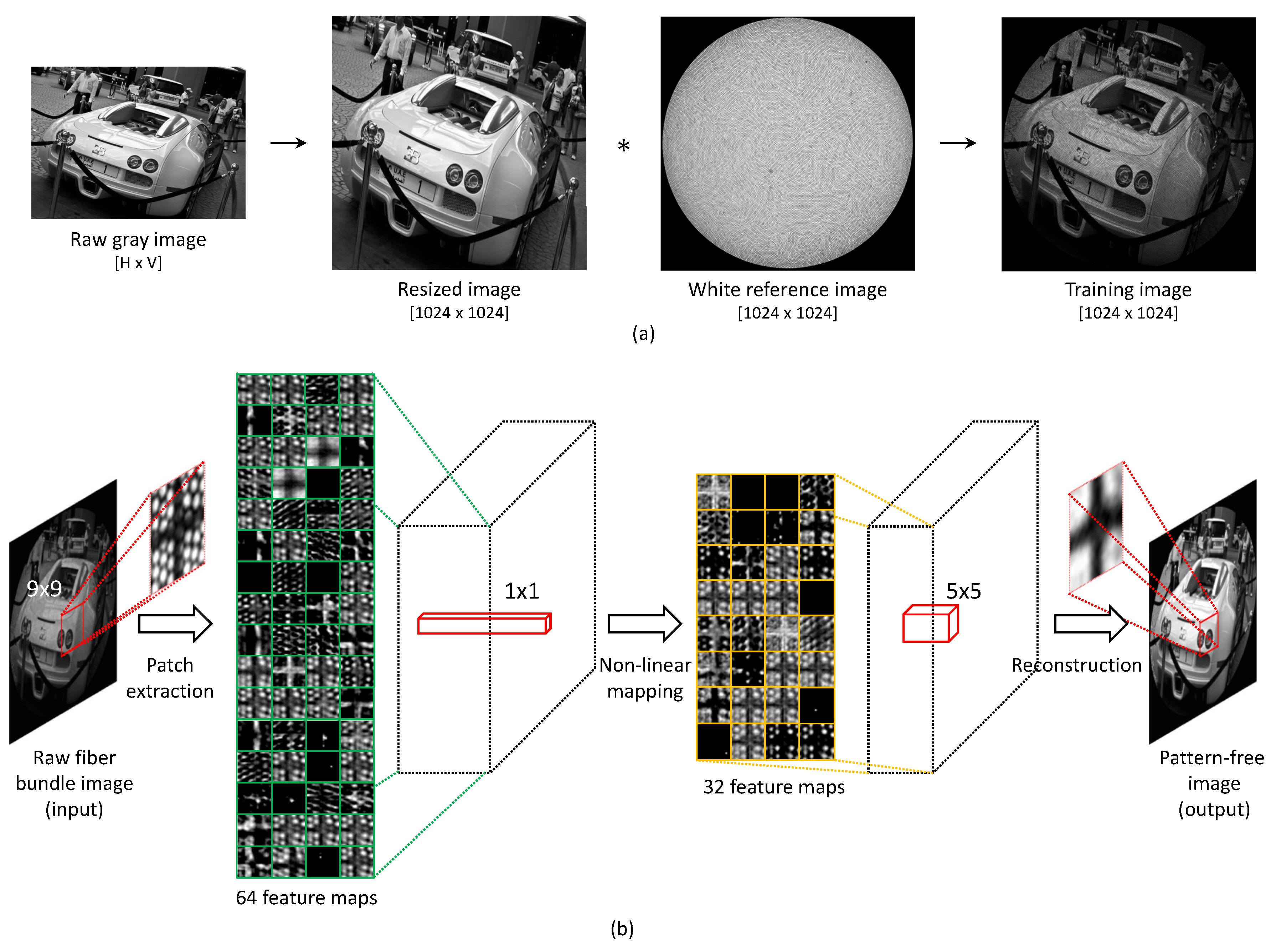

2.1. Deep Learning-Based Honeycomb Pattern Removal

2.1.1. Training Dataset Generation via Honeycomb Pattern Synthesis

2.1.2. Deep Neural Network Architecture for Honeycomb Pattern Removal

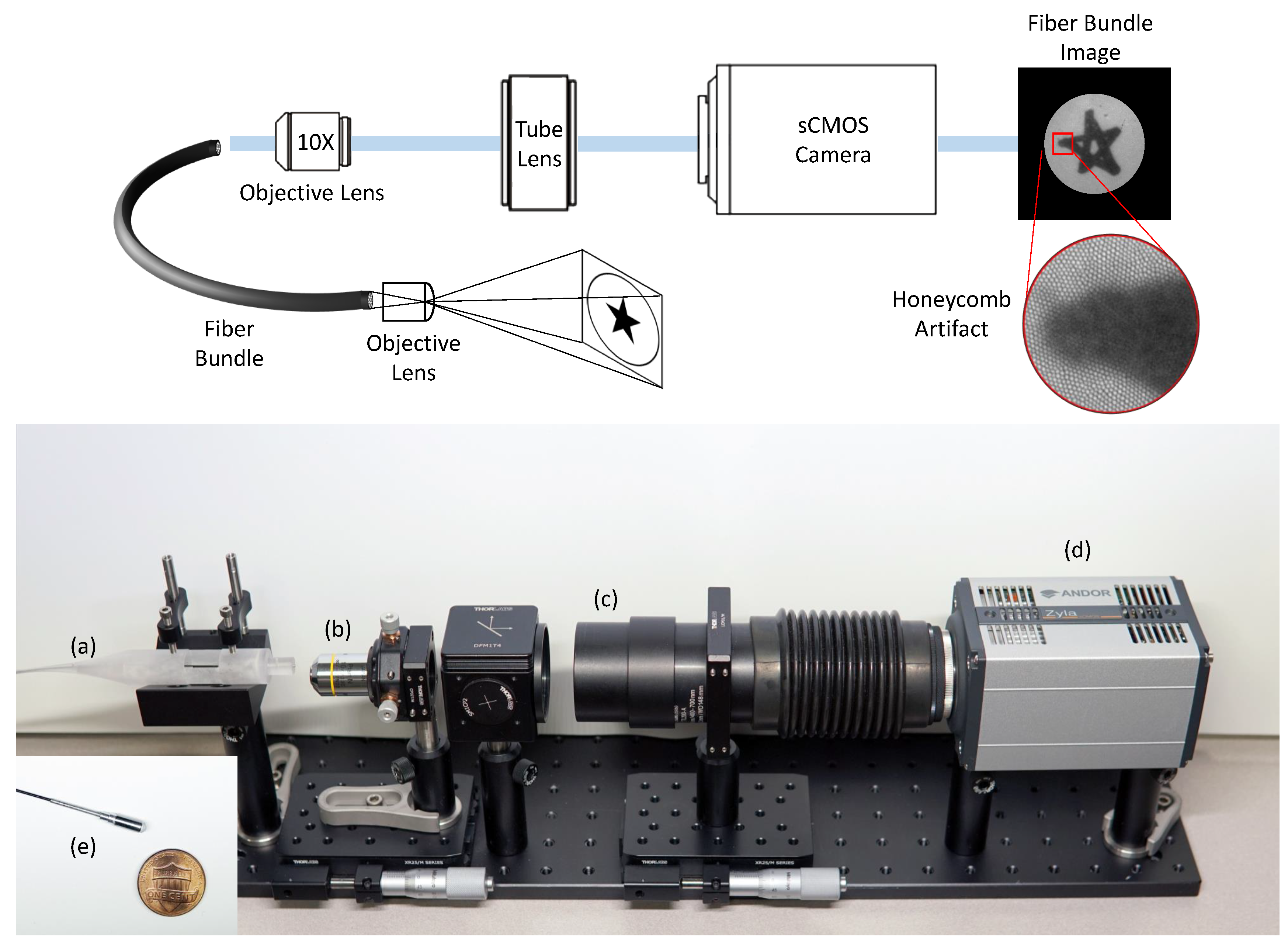

2.2. Experimental Setup

3. Results

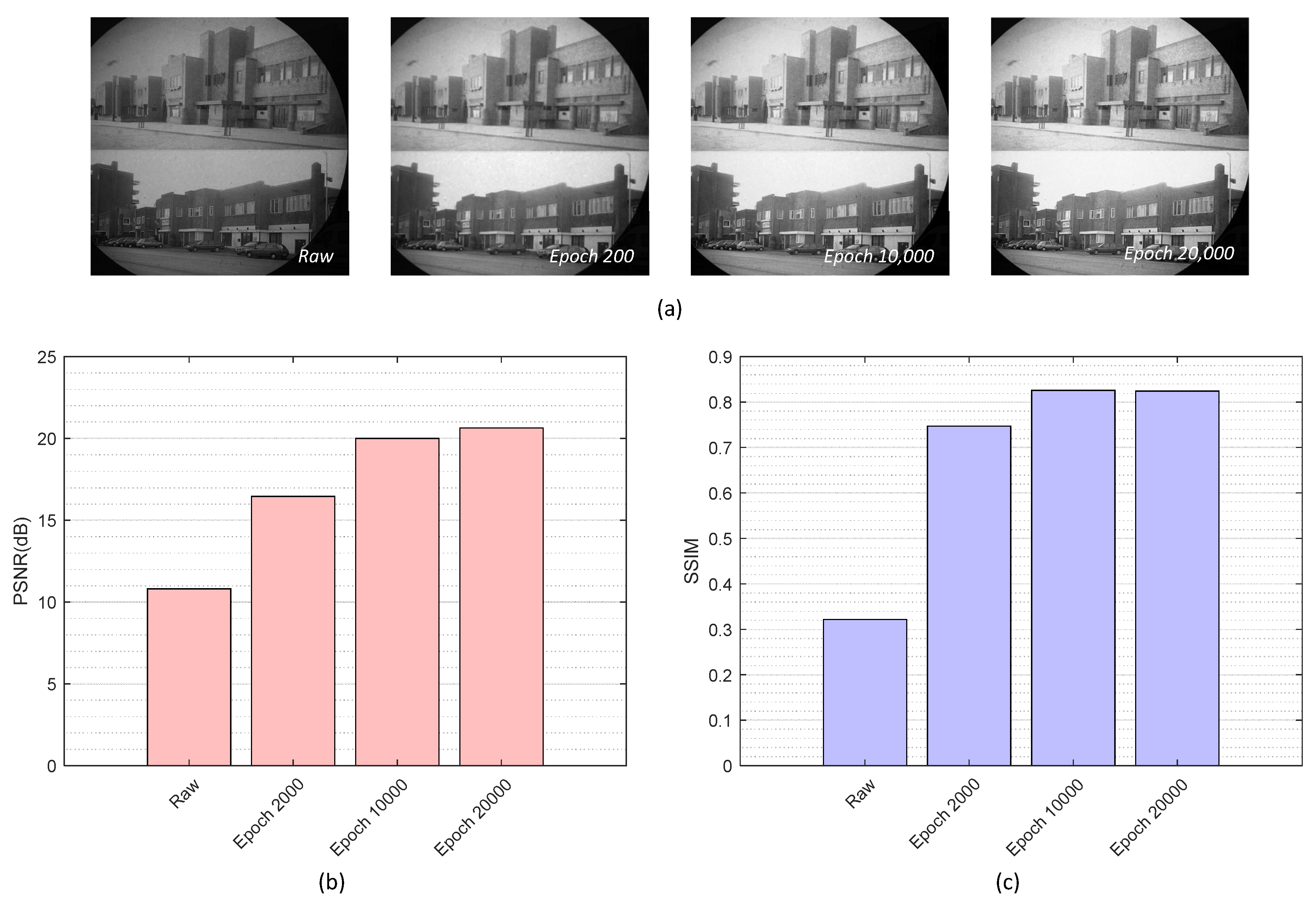

3.1. Validation of HAR-CNN on Synthetic Images

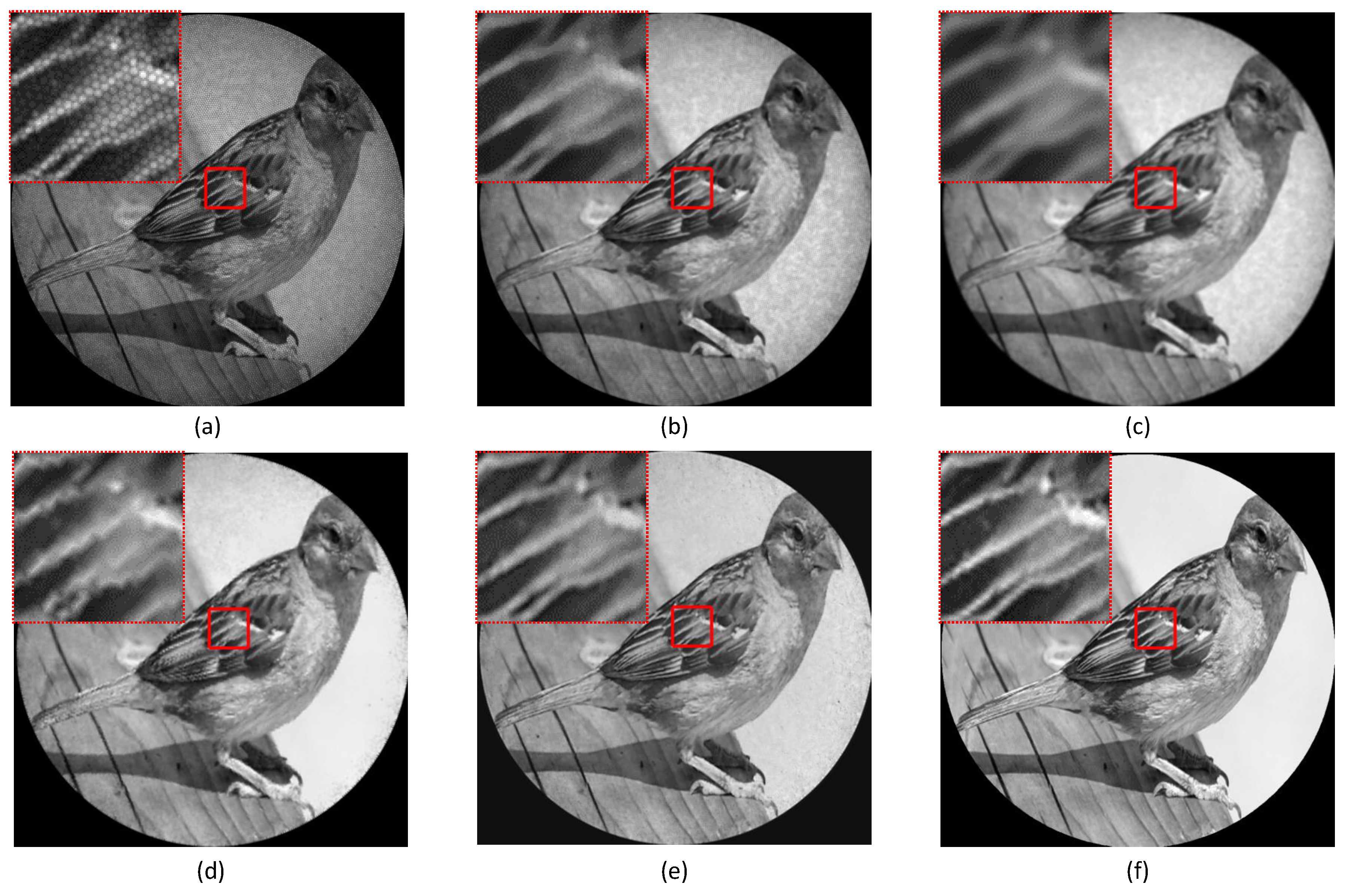

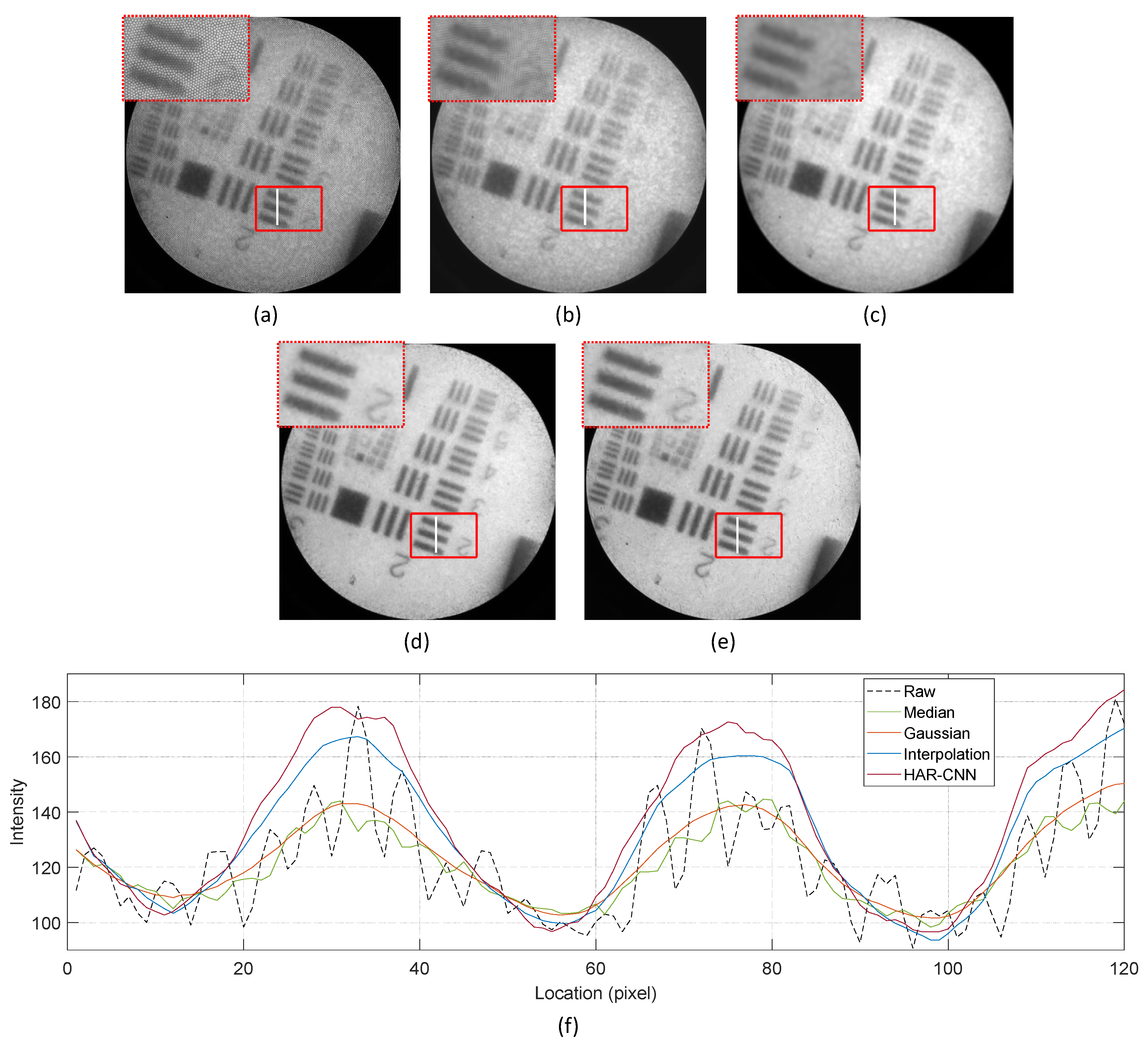

3.2. Evaluation of Honeycomb Pattern Removal on 1951 USAF Target

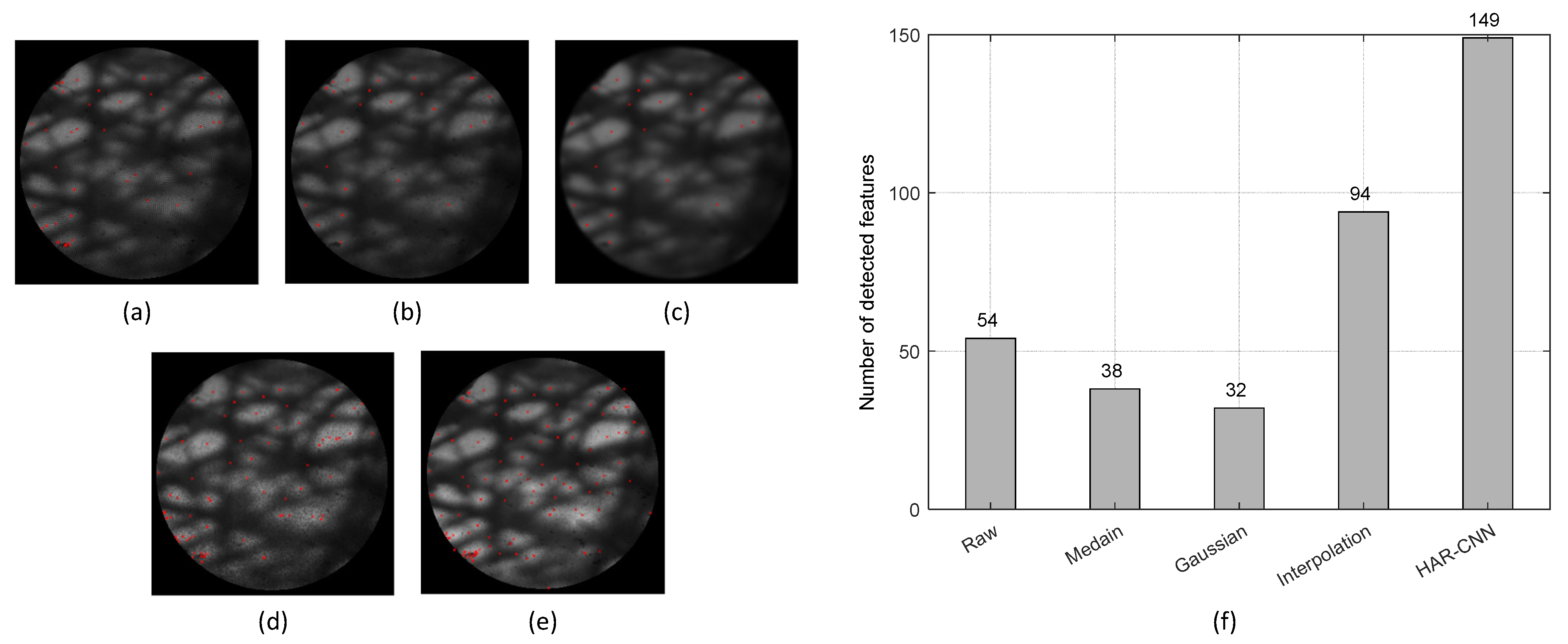

3.3. Honeycomb Pattern Removal and Image Mosaicking on Lens Tissue Sample

4. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Method | Raw | Median 1 | Gaussian 2 | Interpolation | HAR-CNN |
|---|---|---|---|---|---|
| PSNR (dB) | 20.58 | 19.74 | 18.98 | 23.66 | 26.52 |
| SSIM | 0.7301 | 0.7978 | 0.6878 | 0.7719 | 0.9119 |
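
For readers who want to reproduce a PSNR figure like those in the table above, the following is a minimal sketch of the standard PSNR definition. It assumes 8-bit grayscale images stored as nested lists and a peak value of 255. The function name `psnr` and the `peak` parameter are illustrative choices, not taken from the article, and the authors' actual evaluation code may differ.

```python
import math


def psnr(reference, restored, peak=255.0):
    """Return the peak signal-to-noise ratio in dB between two grayscale images.

    Both images are nested lists (rows of pixel values) with identical shape.
    `peak` is the maximum possible pixel value (assumed 255 for 8-bit data).
    """
    if len(reference) != len(restored) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(reference, restored)
    ):
        raise ValueError("images must have the same shape")

    pixel_count = sum(len(row) for row in reference)
    if pixel_count == 0:
        raise ValueError("images must not be empty")

    # Mean squared error over all pixels.
    squared_error = sum(
        (a - b) ** 2
        for row_a, row_b in zip(reference, restored)
        for a, b in zip(row_a, row_b)
    )
    mse = squared_error / pixel_count

    # Identical images have zero error, so PSNR is unbounded.
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    ground_truth = [[10, 20], [30, 40]]
    off_by_one = [[11, 21], [31, 41]]
    print(f"{psnr(ground_truth, off_by_one):.2f} dB")
```

A higher value means the restored image is closer to the reference. The result depends on the assumed peak value, so images normalized to the range 0 to 1 should be evaluated with `peak=1.0`.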

| Method | s1 | r2 | q3 | |
|---|---|---|---|---|
| Raw image | 0 | 0.4654 | 0.2327 | 0.0931 |
| Median | 0.6610 | 0.2880 | 0.4745 | 0.5864 |
| Gaussian | 0.7725 | 0.2821 | 0.5273 | 0.6744 |
| Interpolation | 0.7509 | 0.4044 | 0.5886 | 0.6992 |
| HAR-CNN | 0.7758 | 0.4569 | 0.6164 | 0.7120 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, E.; Kim, S.; Choi, M.; Seo, T.; Yang, S. Honeycomb Artifact Removal Using Convolutional Neural Network for Fiber Bundle Imaging. Sensors 2023, 23, 333. https://doi.org/10.3390/s23010333
