Abstract

This paper proposes a multi-line structured light measurement method that combines a single-line laser and a three-line laser and requires no precision sliding rails or displacement measurement equipment. During the measurement, the single-line structured light is projected onto the surface of the object while the three-line structured light remains fixed. As the single-line laser is moved, it intersects the three-line laser at three points. The single-line light plane is solved from the camera coordinates of these three intersection points, which completes the real-time calibration of the scanning light plane. The single-line laser can be scanned at any angle to recover the complete three-dimensional (3D) shape of the object. Experimental results show that this method overcomes the difficulty of obtaining information at certain angles and locations and can effectively recover the 3D shape of the object. The repetition error of the measurement system is under 0.16 mm, which is sufficient for measuring the 3D shapes of complex workpieces.

1. Introduction

At present, 3D measurement is widely used in fields such as heritage restoration and machinery production [1]. Methods for measuring the surface contours of 3D objects are generally divided into two types: contact and non-contact. Current contact methods, such as coordinate measuring machines [2], can largely meet accuracy requirements, but they measure slowly and can easily damage the contact surface, which limits their applicability. Non-contact techniques, including projected structured light, interferometry, and ultrasonic measurement, are used where rapid measurement is required [3,4,5]. Structured light projection 3D measurement technology, for example, is easy to use and can provide accurate real-time measurements. Within structured light projection, laser projection 3D measurement is a major research direction. It is gradually displacing contact measurement methods in a variety of industries, including car assembly [6], reverse engineering, and part quality inspection [7,8,9].

Currently, line lasers are mainly used in laser projection 3D measurement to scan and reconstruct the object under test [10,11,12]. A line structured light system emits a laser beam that forms a stripe on the surface of the object. The camera then captures the laser stripe, and the center of the stripe is extracted. Finally, the system converts the pixel coordinates of the stripe into 3D coordinates using the laser plane equation and the camera parameters, and assembles the resulting 3D points into a point cloud that represents the 3D shape of the object [13,14,15,16].

To calibrate structured light planes, Dewar [17] projected the laser onto several fine non-coplanar threads mounted in 3D space and then determined the light planes by using the bright points imaged by the camera as control points. Huang [18] presented a calibration method for line-structured light multi-vision sensors based on combined targets, in which each sensor is calibrated locally with high accuracy. For ball targets, the position of the target has no impact on the imaging of the profile features; using a single ball target, Zhou [19] proposed a technique for the quick calibration of a line-structured light system. Xie [20] used a planar target and a raising block to complete the field calibration of a line-structured light sensor. A 3D measurement method based on line-structured light can only measure the 3D contour of one cross-section of an object at a time. Therefore, motion devices are required to sweep the laser plane across the surface of the measured object to gather 3D data on the complete surface [21]. Translational and rotational scanning, using slides and rotary tables, respectively, are commonly used to build such 3D measurement systems.

To measure the dimensions of large forgings in the hot state, Zhang [22] used high-precision guides in combination with line structured light. The experimental results revealed that the procedure is effective, with an error of less than 1 mm, which meets the accuracy criteria. For remote inspection of internal delamination in wind turbine blades, Hwang [23] presented a continuous line laser scanning thermography (CLLST) method. The test results showed that an internal delamination 10 mm in diameter, located 1 mm beneath the blade surface, was successfully detected even 10 m from the target blade. The above methods usually require the scanning direction to be strictly perpendicular to the structured light plane, a positional relationship that is difficult to achieve in practical measurements. Consequently, a number of improved methods have been proposed. Wu [24] designed and manufactured a calibration device based on perspective invariance and the projective invariance of the cross-ratio. Experimental results with different test pieces demonstrated that the minimum geometric accuracy was 18 μm and the repeatability was within ±3 μm. Zeng [25] used a planar target to calibrate the translation vector, which removed the traditional need for high-precision auxiliary equipment to calibrate the precise direction of motion. Lin [26] proposed a binocular stereo vision method based on calibrating the direction cosines of the camera translation, which converts point clouds from local coordinate systems to a global coordinate system to obtain the 3D data of the object under test. When the inner surface of an object or the complete outer surface must be measured, methods using rotary tables or rotating guides have been proposed. Cai [27] combined a line laser with a motor to measure the 3D shape of potatoes, which can help farmers analyze their phenotypic characteristics and grade them.
Guo [28] used a high-precision air-floating rotary table and a line structured light sensor to rapidly obtain the 3D shape of gears. Liu [29] placed a mouse on a metal part on a motor-driven rotating platform and measured its 3D shape while modulating the average stripe width within a favorable range. Measurements on different parts show that the method improves the completeness of the measured surface. Wang [30] placed a pipe on a turntable with a line structured light measuring device in the middle of the pipe and realized 3D measurement of the inner wall by rotating the pipe. In panoramic 3D shape measurement based on a turntable, the point cloud registration accuracy is influenced by the calibration of the rotation axis, so several rotation-axis calibration methods have been proposed. Cai [31] proposed an auxiliary camera-based calibration method, and the experimental results show that it improves the calibration accuracy of the rotation axis vector. Although all of the approaches above provide reasonable measurement precision, they depend heavily on the accuracy of the equipment, both in the experimental setup and during scanning. For example, the sliding accuracy of the slide, the rotation accuracy of the rotary stage, and the displacement accuracy of the stepper motor all affect the final measurement results. Furthermore, in these methods the scanning angle of the light plane is usually fixed and cannot be adjusted during scanning, so the measurement is easily affected by noise and surface folds, resulting in missing image information in certain areas.

To reduce the inaccuracies introduced by equipment, several researchers have proposed handheld laser measurement systems. Winkelbach et al. [32] presented a low-cost system for 3D data acquisition and fast pairwise surface registration. The object is simply placed in front of a background plate of known geometry, and the laser can be held by hand to obtain the complete 3D shape of the object. However, this method often requires a background plate larger than the object being measured, which is not conducive to industrial field measurements.

In this paper, we construct a multi-line structured light 3D measurement system that combines a single-line laser and a three-line laser. The procedure is as follows. First, the structured light system, which consists of a monocular camera and a three-line laser, is calibrated. Second, during scanning, the pixel coordinates of the three intersection points formed where the single-line structured light crosses the three-line structured light are detected. Finally, the camera coordinates of these three points are solved with the calibrated three-line structured light system, and the single-line light plane is calibrated from them in real time. During the measurement, the single-line structured light can scan at any angle, so that no information is lost at particular locations on the object. This overcomes the difficulty of obtaining information at certain angles and locations when the laser travels along a fixed track, and it reduces the demand for equipment, especially precision motion equipment.

The remainder of this paper is organized as follows. Section 2 describes the principle of the multi-line structured light measurement system. Section 3 introduces the calibration of the structured light system. Section 4 describes the 3D measurement method. Finally, the experimental results and conclusions are presented in Sections 5 and 6, respectively.

2. The Principle of Multi-Line Structured Light Measurement System

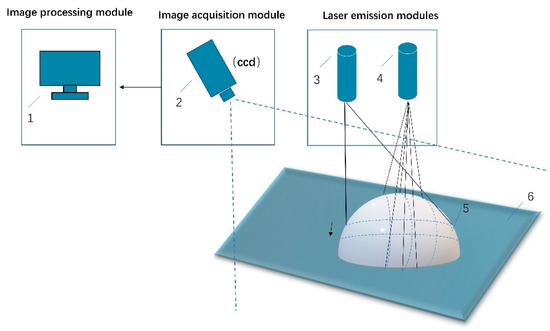

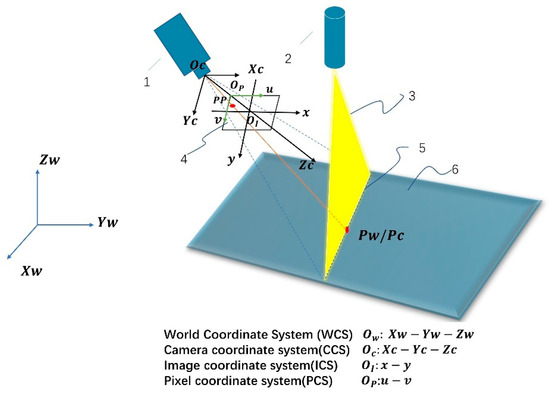

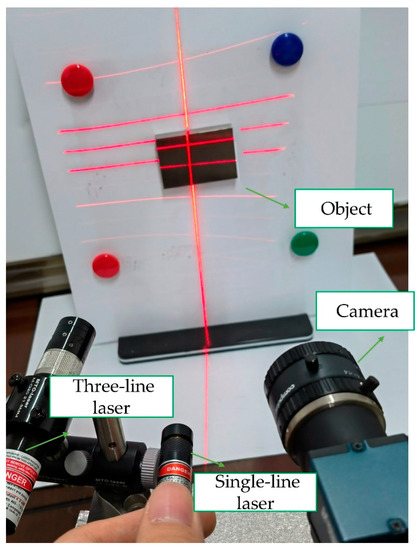

The measurement system designed in this paper consists of three main components: a laser emission module, an image acquisition module, and an image processing module, as shown in Figure 1. The laser emission module consists of a three-line laser and a single-line laser. The image acquisition module, built around a charge-coupled device (CCD) image sensor, captures images of the laser stripes, which are deformed by height variations on the surface of the object. The image processing module processes the images acquired by the CCD camera, for example, by extracting the pixel coordinates of the center of each light stripe. Using the spatial relationships among the world, camera, image, and pixel coordinate systems, the coordinates of points on the object in the camera coordinate system are obtained by coordinate transformation, which enables non-contact measurement. The coordinate relationships are shown in Figure 2.

Figure 1.

The diagram of the measurement system. (1) Computer, (2) Camera, (3) Single-line laser, (4) Three-line laser, (5) Object, (6) Experiment platform.

Figure 2.

The geometric structure of the line structured vision sensor. (1) Camera, (2) Single-line laser, (3) Laser projector, (4) Image plane, (5) Light stripe, (6) Experiment platform.

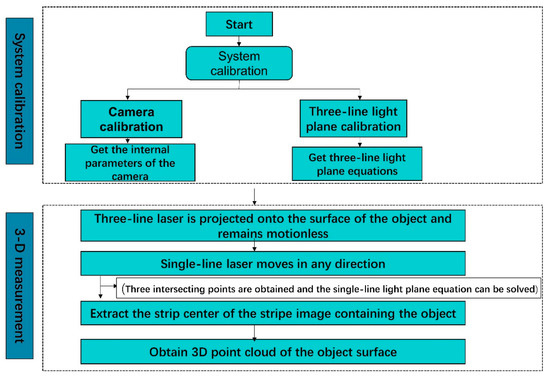

As shown in Figure 3, the measurement scheme of the system consists of two stages: system calibration and 3D measurement. System calibration includes camera calibration and three-line light plane calibration. The camera is calibrated from images of a calibration board to obtain its internal parameters. Next, the three-line light planes are calibrated using a flat target with a black square pattern. The equation of the single-line light plane is then derived from the three intersection points of the single-line light plane with the three-line light planes. During 3D measurement, the centerline of the light stripe on the object surface is extracted using the Steger algorithm [33]. The extracted pixel coordinates are then converted to 3D coordinates in the camera coordinate system by combining the camera internal parameters with the equation of the single-line light plane, which completes the 3D measurement of the object.
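Steger's detector [33] places the stripe center where the first derivative of the Gaussian-smoothed intensity vanishes along the stripe normal, which it finds from the Hessian in 2D. As a minimal sketch of the sub-pixel idea only, the code below assumes the stripe runs roughly horizontally, so that the normal can be approximated by the image column, and applies the 1D version column by column: convolve with Gaussian first and second derivative kernels, pick the most negative second-derivative response, and shift it by t = -r'/r''. The function names, `sigma`, and the column-wise simplification are illustrative assumptions, not the full 2D algorithm used in the paper.

```python
import numpy as np


def gaussian_derivative_kernels(sigma):
    """Sampled first and second derivatives of a 1D Gaussian, truncated at 3 sigma."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    g = np.exp(-x**2 / (2 * sigma**2))
    g /= g.sum()
    g1 = -x / sigma**2 * g                        # d/dx of the Gaussian
    g2 = (x**2 / sigma**4 - 1.0 / sigma**2) * g   # d^2/dx^2 of the Gaussian
    return g1, g2


def stripe_centers_columnwise(image, sigma=2.0):
    """Sub-pixel row of a bright, roughly horizontal stripe in each column.

    Returns NaN for columns where no bright ridge is found.
    """
    g1, g2 = gaussian_derivative_kernels(sigma)
    _, cols = image.shape
    centers = np.full(cols, np.nan)
    for c in range(cols):
        profile = image[:, c].astype(float)
        r1 = np.convolve(profile, g1, mode="same")   # smoothed first derivative
        r2 = np.convolve(profile, g2, mode="same")   # smoothed second derivative
        i = int(np.argmin(r2))                       # strongest bright ridge
        if r2[i] >= 0:
            continue                                 # no ridge in this column
        t = -r1[i] / r2[i]                           # Taylor step to where r1 == 0
        if abs(t) <= 0.5:                            # extremum must lie within this pixel
            centers[c] = i + t
    return centers
```

In practice, a threshold on `r2[i]` would be added to reject weak responses caused by noise; it is omitted here to keep the sketch short.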

Figure 3.

System measurement flow chart.

3. Calibration of Structured Light Systems

The calibration of the structured light system in this paper is divided into two parts. The first is camera calibration, which yields the camera internal parameters; the second is three-line light plane calibration, which yields the equations of the three light planes.

3.1. Camera Calibration

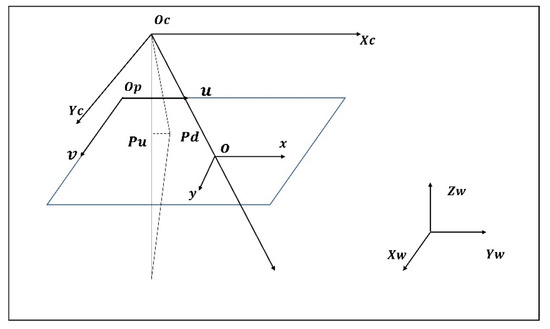

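Figure 4 shows a schematic diagram of the coordinate systems. Let $P$ be an arbitrary point in 3D space with world coordinates $(X_w, Y_w, Z_w)$ and camera coordinates $(X_c, Y_c, Z_c)$. Let $p_d$ be the actual projection of $P$ on the image plane and $p_u$ its ideal projection; their image coordinates are $(x_d, y_d)$ and $(x_u, y_u)$, respectively, and $(u, v)$ are the pixel coordinates of $p_d$. The positional relationship [34] between the world coordinate system and the camera coordinate system can be written as

$$\begin{bmatrix} X_c \\ Y_c \\ Z_c \end{bmatrix} = R\begin{bmatrix} X_w \\ Y_w \\ Z_w \end{bmatrix} + T,$$

where $R$ is a $3 \times 3$ rotation matrix and $T$ is a $3 \times 1$ translation vector.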

Figure 4.

Coordinate system of measurement system.

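To obtain more accurate camera parameters, a nonlinear imaging model of the camera is used [35]. Taking radial and tangential distortion into account, the actual image coordinates $(x_d, y_d)$ are related to the ideal (normalized) image coordinates $(x_u, y_u)$ by

$$\begin{aligned} x_d &= x_u\left(1 + k_1 r^2 + k_2 r^4\right) + 2p_1 x_u y_u + p_2\left(r^2 + 2x_u^2\right),\\ y_d &= y_u\left(1 + k_1 r^2 + k_2 r^4\right) + p_1\left(r^2 + 2y_u^2\right) + 2p_2 x_u y_u, \end{aligned} \qquad r^2 = x_u^2 + y_u^2,$$

where $k_1, k_2$ are the radial distortion coefficients and $p_1, p_2$ are the tangential distortion coefficients. Minimizing the reprojection error of the calibration-board corners over all images yields a nonlinear optimization problem, which is solved with the Levenberg–Marquardt (L–M) algorithm. Finally, the intrinsic matrix and the distortion coefficients are obtained.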
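To make the camera model concrete, the sketch below maps a world point to distorted pixel coordinates in four steps: rigid transform $(R, T)$, perspective division, distortion with two radial ($k_1, k_2$) and two tangential ($p_1, p_2$) coefficients, and the intrinsic matrix $K$. The paper does not state how many distortion coefficients it estimates, so this parameterization, the function name, and the numbers used when testing it are assumptions for illustration. Calibration then amounts to adjusting these parameters (for example, with L–M) until the projected checkerboard corners match the detected ones.

```python
import numpy as np


def project_point(Pw, R, T, K, k1=0.0, k2=0.0, p1=0.0, p2=0.0):
    """Project a world point to distorted pixel coordinates (u, v).

    Assumed model: two radial (k1, k2) and two tangential (p1, p2) coefficients.
    """
    Pc = R @ np.asarray(Pw, dtype=float) + T          # world -> camera coordinates
    x, y = Pc[0] / Pc[2], Pc[1] / Pc[2]                # ideal normalized coordinates
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    u, v, w = K @ np.array([xd, yd, 1.0])              # apply intrinsics (w == 1)
    return np.array([u / w, v / w])


# Illustrative usage with made-up intrinsics: a camera 500 mm above the target plane.
K = np.array([[1200.0, 0.0, 640.0], [0.0, 1200.0, 512.0], [0.0, 0.0, 1.0]])
uv = project_point([50.0, -25.0, 0.0], np.eye(3), np.array([0.0, 0.0, 500.0]), K, k1=0.1)
```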

3.2. The Principle of Three-Line Light Plane Calibration

Because the three-line structured light is used to locate the scanning light plane in real time, its calibration accuracy directly affects the measurement accuracy.

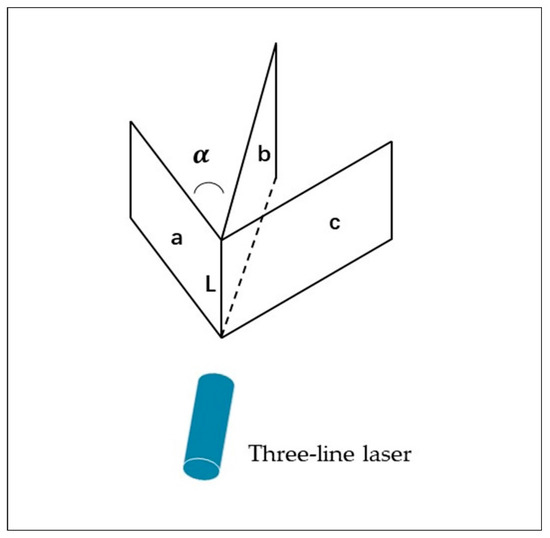

In this paper, an intersecting multi-line structured light system is used: the laser projects three light planes that intersect along a common line, and the angle between adjacent light planes is a constant α, as shown in Figure 5.

Figure 5.

Spatial geometric relationship of the structured light planes.

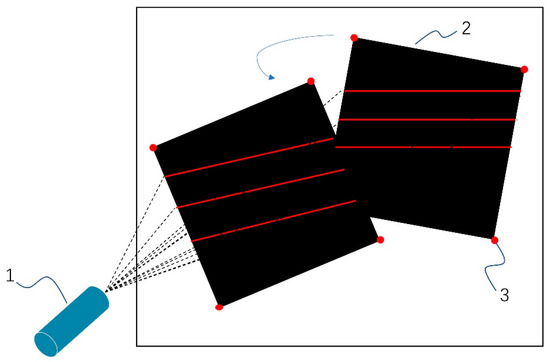

The light plane equations are obtained in three main steps. First, the external parameters of the flat target with a black square pattern are determined, as shown in Figure 6. Second, the Steger method is used to obtain the coordinates of the center points of the light stripes on the target image; these center points are then projected into the camera coordinate system using the internal parameters of the camera and the external parameters of the target, as shown in Figure 7. Third, these three sets of points are used as control points to determine the equations of the structured light planes, with the a priori positional relationship of the planes, namely the angle between adjacent light planes (the constant α = 5°), as the constraint, as shown in Figure 8. The light plane equations are as follows:

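$$s\begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = K\begin{bmatrix} X_c \\ Y_c \\ Z_c \end{bmatrix}, \qquad a_i X_c + b_i Y_c + c_i Z_c + d_i = 0, \quad i = 1, 2, 3,$$

where $a_i$, $b_i$, $c_i$, and $d_i$ are the coefficients of the $i$-th light plane equation, $K$ is the camera intrinsic matrix, $(u, v)$ and $(X_c, Y_c, Z_c)$ are the pixel and camera coordinates of a stripe center point, and $s$ is a scale factor.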
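The second calibration step lifts each stripe center on the target image into the camera frame. A minimal sketch, assuming that the target lies in its own $Z_w = 0$ plane, that lens distortion has already been removed from the pixel coordinates, and that the target pose $(R, T)$ has been estimated from the corner points: in camera coordinates the target plane has normal $n = R e_3$ (the third column of $R$) and passes through $T$, so the camera ray $\lambda K^{-1}[u, v, 1]^T$ meets it at $\lambda = n^T T / n^T K^{-1}[u, v, 1]^T$. The function name is hypothetical.

```python
import numpy as np


def stripe_points_on_target(pixels, K, R, T):
    """Lift undistorted stripe-center pixels onto the calibration target plane.

    pixels: (N, 2) array of (u, v); K: 3x3 intrinsics; R, T: target pose (target -> camera).
    Returns an (N, 3) array of points in camera coordinates.
    """
    n = R[:, 2]                                   # target Z axis = plane normal in camera frame
    uv1 = np.column_stack([pixels, np.ones(len(pixels))])
    rays = np.linalg.solve(K, uv1.T).T            # ray direction through each pixel
    lam = (n @ T) / (rays @ n)                    # scale so that n . (lam * ray - T) = 0
    return rays * lam[:, None]
```

Repeating this for every target pose accumulates, for each of the three laser lines, a set of non-coplanar-pose but coplanar-in-space points that feeds the plane fit.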

Figure 6.

Three-line light plane calibration. (1) Three-line laser, (2) A flat target with black square pattern, (3) Corner Point.

Figure 7.

Three sets of 3-D points.

Figure 8.

Three light planes in space.

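A unified objective function is established:

$$F = \sum_{i=1}^{3}\sum_{j=1}^{N_i} \frac{\left(a_i x_{ij} + b_i y_{ij} + c_i z_{ij} + d_i\right)^2}{a_i^2 + b_i^2 + c_i^2},$$

under the constraint

$$\frac{\left|\mathbf{n}_1 \cdot \mathbf{n}_2\right|}{\|\mathbf{n}_1\|\,\|\mathbf{n}_2\|} = \frac{\left|\mathbf{n}_2 \cdot \mathbf{n}_3\right|}{\|\mathbf{n}_2\|\,\|\mathbf{n}_3\|} = \cos\alpha, \qquad \mathbf{n}_i = (a_i, b_i, c_i)^T,$$

which ensures that the angle between adjacent light planes is constant. Here, $\{(x_{ij}, y_{ij}, z_{ij})\}_{j=1}^{N_i}$, $i = 1, 2, 3$, are the three sets of 3D points corresponding to the three planes, and $a_i, b_i, c_i, d_i$ are the light plane coefficients. Finally, the coefficients of Equation (6) are solved using the L–M algorithm. In this way, the a priori geometric relationship between the planes is accurately transferred into the camera coordinate frame.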
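The constrained fit can be prototyped in a few dozen lines. The sketch below is an illustration under stated assumptions rather than the paper's implementation: each plane is parameterized by a unit normal (spherical angles θ, φ) and an offset d; the angle constraint is enforced softly through a weighted penalty on |n_a · n_b| − cos α for adjacent planes (a penalty term, not an exact constraint); the initial guess comes from an unconstrained SVD plane fit of each point set; and a small hand-written Levenberg–Marquardt loop with a forward-difference Jacobian minimizes the stacked residuals. All names and the `weight` value are hypothetical.

```python
import numpy as np


def unit_normal(theta, phi):
    return np.array([np.sin(theta) * np.cos(phi), np.sin(theta) * np.sin(phi), np.cos(theta)])


def svd_plane(points):
    """Unconstrained least-squares plane n . X + d = 0 with unit normal (n_z >= 0)."""
    centroid = points.mean(axis=0)
    n = np.linalg.svd(points - centroid, full_matrices=False)[2][-1]
    if n[2] < 0:
        n = -n
    return n, -n @ centroid


def residuals(params, point_sets, alpha, weight):
    res, normals = [], []
    for i, pts in enumerate(point_sets):
        theta, phi, d = params[3 * i:3 * i + 3]
        n = unit_normal(theta, phi)
        normals.append(n)
        res.append(pts @ n + d)                        # signed point-to-plane distances
    for n_a, n_b in zip(normals[:-1], normals[1:]):    # adjacent planes only
        res.append([weight * (abs(n_a @ n_b) - np.cos(alpha))])
    return np.concatenate(res)


def fit_three_planes(point_sets, alpha, weight=1e3, iters=100):
    x = []
    for pts in point_sets:                             # SVD initial guess per plane
        n, d = svd_plane(pts)
        x += [np.arccos(np.clip(n[2], -1.0, 1.0)), np.arctan2(n[1], n[0]), d]
    x = np.array(x)

    def f(p):
        return residuals(p, point_sets, alpha, weight)

    r, lam, eps = f(x), 1e-3, 1e-7
    for _ in range(iters):
        J = np.empty((r.size, x.size))
        for k in range(x.size):                        # forward-difference Jacobian
            dx = np.zeros_like(x)
            dx[k] = eps
            J[:, k] = (f(x + dx) - r) / eps
        step = np.linalg.solve(J.T @ J + lam * np.eye(x.size), -J.T @ r)
        r_new = f(x + step)
        if r_new @ r_new < r @ r:                      # accept: move toward Gauss-Newton
            x, r, lam = x + step, r_new, lam * 0.3
        else:                                          # reject: move toward gradient descent
            lam *= 10.0
        if np.linalg.norm(step) < 1e-12:
            break
    return [(unit_normal(*x[3 * i:3 * i + 2]), x[3 * i + 2]) for i in range(len(point_sets))]
```

A larger `weight` enforces the 5° prior more strictly at the cost of a worse-conditioned problem; a library solver such as SciPy's `least_squares` could replace the hand-written loop.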

4. Method of Three-Dimensional Measurement

The measurement process in this paper is achieved by single-line structured light scanning. Therefore, real-time calibration of the single-line light plane is essential to the measurement process. The real-time calibration method is divided into three main steps:

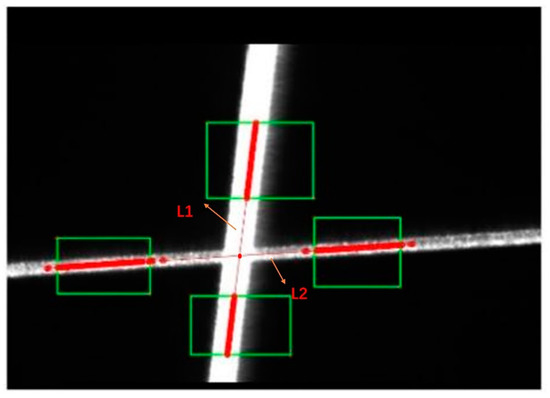

- Step 1: Detect the pixel coordinates of the intersection points

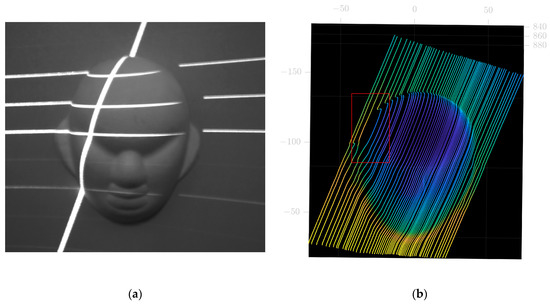

Since the single-line laser must intersect the three-line laser throughout the measurement, three intersection points are generated. As shown in Figure 9, the centerlines of the light stripes are detected in the area near each intersection point and fitted as two lines, L1 and L2; the intersection of these lines gives the pixel coordinates of the intersection point.
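Step 1 reduces to fitting two 2D lines and intersecting them. As a sketch, assuming the centerline points near an intersection have already been split into the points of L1 and those of L2, each line can be fitted by total least squares (SVD of the centered points) and the two lines intersected through the cross product of their homogeneous coordinates. The function names are hypothetical.

```python
import numpy as np


def fit_line_homogeneous(points):
    """Total-least-squares line a*u + b*v + c = 0 through (N, 2) pixel points."""
    centroid = points.mean(axis=0)
    direction = np.linalg.svd(points - centroid, full_matrices=False)[2][0]
    normal = np.array([-direction[1], direction[0]])   # perpendicular to the line
    return np.array([normal[0], normal[1], -normal @ centroid])


def intersect_lines(l1, l2):
    """Intersection of two homogeneous 2D lines; raises if they are parallel."""
    p = np.cross(l1, l2)
    if abs(p[2]) < 1e-12:
        raise ValueError("lines are (nearly) parallel")
    return p[:2] / p[2]
```

Total least squares is used instead of fitting v as a function of u, so that nearly vertical stripe segments are handled as well as horizontal ones.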

Figure 9.

Detect the pixel coordinates of the intersection point.

- Step 2: Calculate the intersection points in camera coordinates

Each of the three intersection points lies both in the single-line structured light plane and in one of the three planes of the three-line structured light. Therefore, using the internal parameters of the camera and the equations of the three-line structured light planes, the 3D coordinates of the three intersection points in the camera coordinate system can be solved.
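Each intersection point lies on the camera ray through its pixel and on one of the calibrated three-line planes, which gives a 3 × 3 linear system. The sketch assumes undistorted pixel coordinates and a plane written as $aX + bY + cZ + d = 0$ in camera coordinates; the helper name is hypothetical.

```python
import numpy as np


def intersection_point_3d(pixel, K, plane):
    """Camera coordinates of the 3D point seen at `pixel` that lies on plane (a, b, c, d)."""
    x_n, y_n, _ = np.linalg.solve(K, np.array([pixel[0], pixel[1], 1.0]))  # normalized coords
    a, b, c, d = plane
    A = np.array([[1.0, 0.0, -x_n],    # X - x_n * Z = 0  (on the camera ray)
                  [0.0, 1.0, -y_n],    # Y - y_n * Z = 0
                  [a, b, c]])          # a X + b Y + c Z = -d  (on the light plane)
    return np.linalg.solve(A, np.array([0.0, 0.0, -d]))
```

Calling it once per intersection pixel, each with the plane of the laser line it belongs to, yields the three 3D points used in Step 3.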

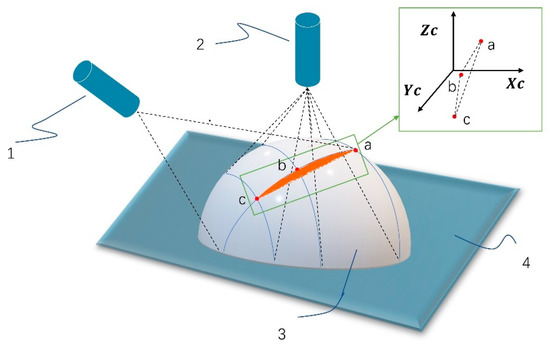

- Step 3: Fitting the space plane equation

The single-line light plane equation is then determined from the 3D coordinates of the three intersection points, as illustrated in Figure 10.
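Three non-collinear points determine the single-line light plane uniquely: its normal is the cross product of two edge vectors. A minimal sketch with a hypothetical helper name, including a guard against nearly collinear points, for which the plane would be ill-conditioned:

```python
import numpy as np


def plane_from_three_points(p1, p2, p3, tol=1e-9):
    """Plane (a, b, c, d) with unit normal such that a X + b Y + c Z + d = 0."""
    p1, p2, p3 = (np.asarray(p, dtype=float) for p in (p1, p2, p3))
    normal = np.cross(p2 - p1, p3 - p1)
    norm = np.linalg.norm(normal)
    if norm < tol:
        raise ValueError("points are (nearly) collinear")
    normal /= norm
    return np.append(normal, -normal @ p1)
```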

Figure 10.

Three points in space determine a plane. (1) Single-line laser, (2) Three-line laser, (3) Object, (4) Experiment platform.

After real-time calibration, the 3D coordinates of the stripe points lying in the single-line light plane are obtained from a single frame. Because all frames are expressed in the same camera coordinate system, the data from multiple frames can be merged to obtain the raw point cloud of the object while the single-line laser scans at any angle.
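Once a frame's single-line plane $(a, b, c, d)$ is known, every undistorted stripe-center pixel of that frame can be lifted to 3D by scaling its camera ray until it meets the plane; since the camera does not move, merging frames amounts to stacking the per-frame points. A sketch under those assumptions, with hypothetical function names:

```python
import numpy as np


def frame_to_points(stripe_pixels, K, plane):
    """Lift (N, 2) stripe-center pixels to 3D on the light plane (a, b, c, d)."""
    n, d = np.asarray(plane[:3], dtype=float), float(plane[3])
    uv1 = np.column_stack([stripe_pixels, np.ones(len(stripe_pixels))])
    rays = np.linalg.solve(K, uv1.T).T           # normalized rays (x_n, y_n, 1)
    denom = rays @ n
    valid = np.abs(denom) > 1e-12                # skip rays parallel to the light plane
    t = -d / denom[valid]                        # n . (t * ray) + d = 0
    return rays[valid] * t[:, None]


def merge_frames(frames, K):
    """frames: iterable of (stripe_pixels, plane) pairs, one per camera frame."""
    clouds = [frame_to_points(px, K, pl) for px, pl in frames]
    return np.vstack(clouds) if clouds else np.empty((0, 3))
```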

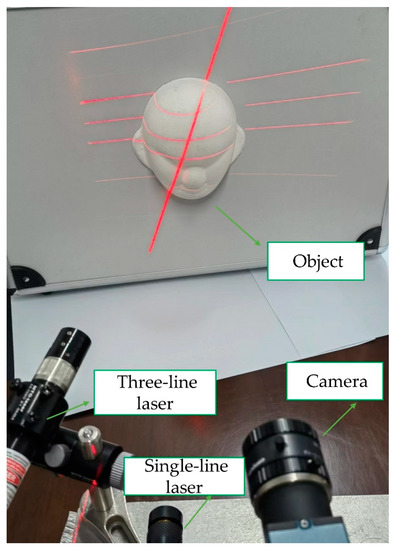

5. Experiment

The proposed method eliminates the precision sliding rails and other auxiliary equipment required by traditional methods; the 3D information of the object is obtained simply by projecting two lasers onto the surface of the object to be measured. The measurement system is shown in Figure 11. The configuration used in this study is very simple. It consists of a three-line laser (Lasiris; Coherent, Inc., Santa Clara, CA, USA) emitting a Gaussian beam at a wavelength of 660 nm with an output power of 20 mW, a single-line laser (Lei xin Yanxian-LXL65050) emitting a Gaussian beam at a wavelength of 650 nm with an output power of 50 mW, and a camera (MER-1070-14U3M; DaHeng, Beijing, China) with an 8 mm lens. The single-line laser can be moved freely during the measurement, even by hand. The experiments in this paper consist of camera calibration, three-line structured light calibration, and real-time measurement.

Figure 11.

Measurement image of the face model.

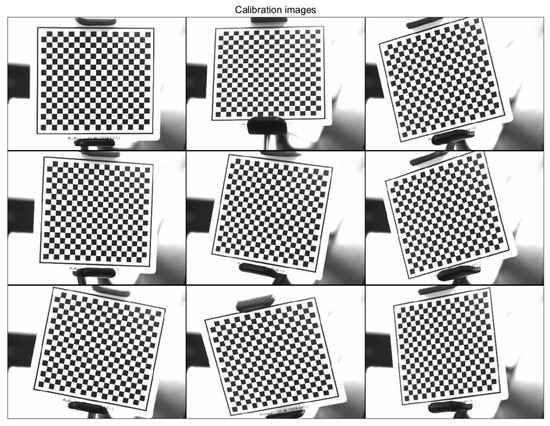

5.1. Calibration of the Camera

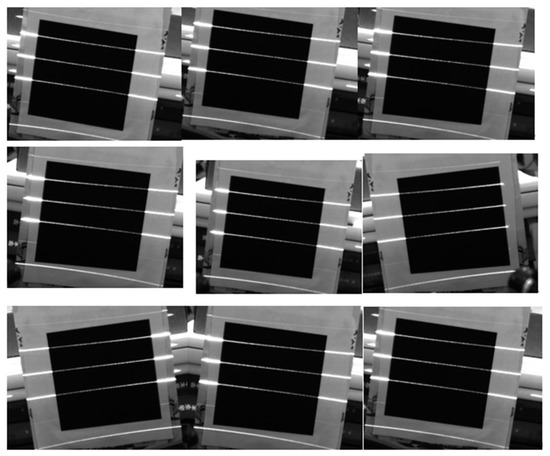

As shown in Figure 12, a checkerboard target with 4 mm × 4 mm squares is used. Four sets of calibration experiments were carried out, with nine images acquired in each set. The overall mean reprojection error (in pixels) of the calibration images was used as the criterion for evaluating the camera calibration results.

Figure 12.

Camera calibration.

Finally, a set of camera internal parameters and distortion parameters was obtained.

5.2. Calibration of Three-Line Light Plane

Nine images of the planar target at different orientations in space are acquired by the camera, with the three-line laser projected onto the target at the same time, as shown in Figure 13. Using the method proposed in Section 3.2, the three structured light planes are solved, and the equations of the planes are as follows:

Figure 13.

The image of light stripe centerline extraction with black plate.

5.3. Object Measurement in Real Time

In this experiment, a face mask model and a standard gauge block were measured separately.

5.3.1. Face Mask Model Measurement in Real Time

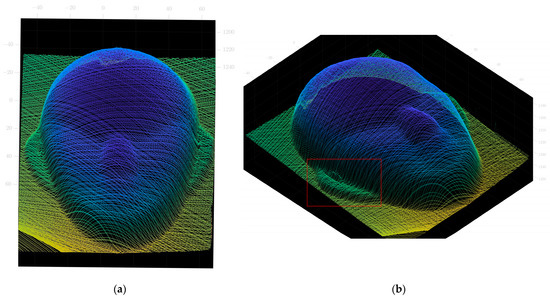

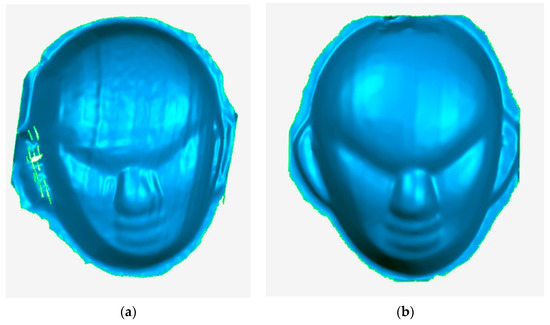

For comparison, the point cloud of the face mask model obtained by the traditional method using slide rails is shown in Figure 14. The point cloud of the face model contains relatively few data points in the ear region, because folds in the ear area cause occlusions or discontinuities in the scanned image. Unlike the traditional method, the proposed method allows the scanning direction to be controlled freely, which reduces the influence of the folded area on the image and increases the effective data in this area. The point cloud obtained with the proposed method is shown in Figure 15, and the 3D reconstructions obtained by the traditional method and the proposed method are shown in Figure 16. The results show that the measurements made with the proposed method are effective.

Figure 14.

Traditional method of measurement. (a) Face mask model from camera view. (b) Point cloud image from the traditional method.

Figure 15.

Point cloud image obtained by the method of this paper. (a) Point cloud image from the frontal perspective. (b) Point cloud image from the side perspective.

Figure 16.

3D reconstruction image. (a) Traditional method. (b) The method of this paper.

5.3.2. Standard Gauge Block Measurement in Real Time

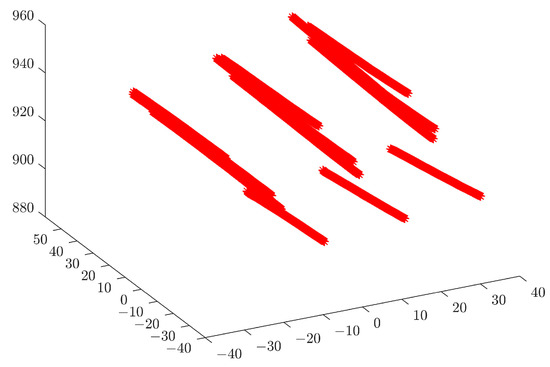

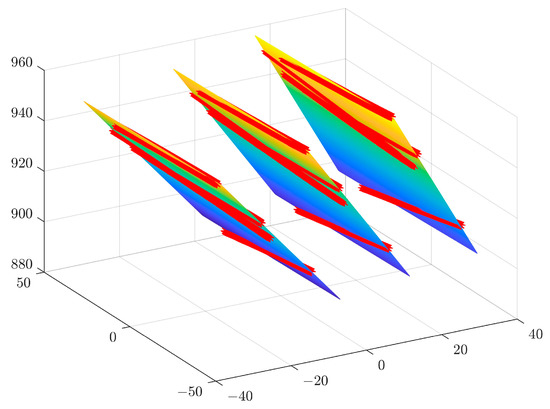

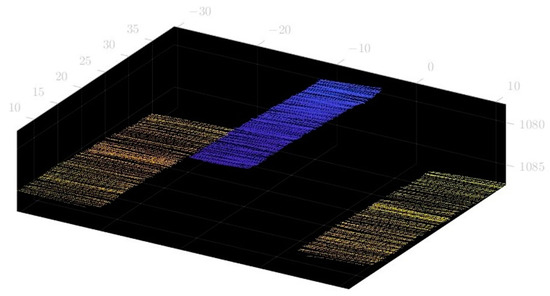

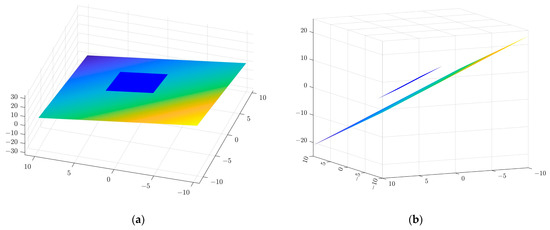

To determine the spatial measurement accuracy of the system, four gauge blocks with known thicknesses of 70 mm, 60 mm, 50 mm, and 40 mm are used. The accuracy of the standard blocks is 0.0015 mm. The real-time scan image is shown in Figure 17, and the acquired point cloud is shown in Figure 18. The point cloud of the top surface of the gauge block and the point cloud scanned at its bottom are used to fit two parallel planes, and the distance between the planes is taken as the thickness of the gauge block. The fitted spatial planes are shown in Figure 19. The measurement results are shown in Table 1.
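The thickness evaluation can be sketched as a joint least-squares fit of two parallel planes that share one normal: after subtracting each point set's own centroid, the common normal is the right singular vector with the smallest singular value of the stacked, centered points, and the thickness is the distance between the two centroids measured along that normal. The paper does not specify its fitting procedure, so this formulation and the function name are assumptions for illustration.

```python
import numpy as np


def parallel_plane_distance(top_points, bottom_points):
    """Fit two parallel planes with a shared unit normal; return (normal, distance)."""
    top_c = top_points.mean(axis=0)
    bottom_c = bottom_points.mean(axis=0)
    centered = np.vstack([top_points - top_c, bottom_points - bottom_c])
    normal = np.linalg.svd(centered, full_matrices=False)[2][-1]   # shared plane normal
    distance = abs(normal @ (top_c - bottom_c))
    return normal, distance
```

Sharing the normal enforces parallelism by construction, instead of fitting two independent planes and then averaging their normals.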

Figure 17.

Measurement image of the standard gauge block.

Figure 18.

Point cloud image of a gauge block.

Figure 19.

Fitting spatial plane images. (a) Top view. (b) Side view.

Table 1.

Measurement results of the standard gauge blocks (mm).

Here, RMSE denotes the root mean square error and STD the standard deviation. Table 2 shows that over the measurement range from 40 mm to 70 mm, both the standard deviation and the root mean square error are less than 0.11 mm, demonstrating the high stability of the measurement system. The maximum measurement error for the standard gauge blocks does not exceed 0.16 mm. Taking the maximum measurement deviation into account, the spatial measurement accuracy of the system is therefore 0.16 mm.
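For reference, the three statistics can be computed as below, assuming that RMSE is taken over the deviations of repeated measurements from the nominal thickness and that STD is the population standard deviation of the repeated measurements themselves. The paper does not state which convention it uses, and the values used to exercise the function are hypothetical, not the data behind Table 1.

```python
import numpy as np


def rmse_and_std(measured, nominal):
    """RMSE against the nominal value, STD of the repeats, and maximum absolute error."""
    measured = np.asarray(measured, dtype=float)
    errors = measured - nominal
    rmse = np.sqrt(np.mean(errors ** 2))
    std = np.std(measured)            # ddof=0; pass ddof=1 for the sample standard deviation
    max_abs_error = np.max(np.abs(errors))
    return rmse, std, max_abs_error
```

Note that the RMSE includes any systematic offset from the nominal value, whereas the STD measures only the scatter of the repeats around their own mean.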

Table 2.

Comparison of the measured value with the actual value.

6. Conclusions

This paper proposes a 3D measurement system that combines a single-line laser and a three-line laser. The experimental platform does not require equipment typically used for this technique, such as slide rails and stepper motors, which reduces the experimental error caused by sophisticated equipment or complicated procedures and makes the proposed method more convenient and straightforward. In addition, when an object is measured from multiple angles, traditional methods do not handle folded areas (such as the ear region of a human face) well; the proposed method addresses this issue. Experiments show that the proposed approach can successfully reconstruct the face mask model and the gauge blocks, highlighting its simplicity and effectiveness. Measurements of gauge blocks of known thickness show that the spatial measurement accuracy of the system is 0.16 mm when the maximum measurement deviation is considered.

Author Contributions

Conceptualization, Q.S. and Z.R.; methodology, Q.S. and Z.R.; software, Q.S. and Z.R.; validation, Q.S. and Z.R.; investigation, Q.S.; resources, Z.R.; data curation, Q.S., Z.R., M.W. and W.D.; writing—original draft preparation, Q.S. and Z.R.; project administration, Q.S. and Z.R.; supervision, funding acquisition, Q.S., J.Z. and M.S. All authors have read and agreed to the published version of the manuscript.

Funding

The work described in this paper was supported by the Foundation of Science and Technology Department of Jilin Province under Grant No. YDZJ202201ZYTS531.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are available from the first author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Li, W.; Luo, Z.; Hou, D.; Mao, X. Multi-line laser projection 3D measurement simulation system technology. Optik 2021, 231, 166390.

- Uddin, M.S.; Mak, C.Y.E.; Callary, S.A. Evaluating hip implant wear measurements by CMM technique. Wear Int. J. Sci. Technol. Frict. Lubr. Wear 2016, 364/365, 193–200.

- Rodríguez, J.A.M.; Rodríguez-Vera, R.; Asundi, A.; Campos, G.G. Shape detection using light line and Bezier approximation network. Imaging Sci. J. 2007, 55, 29–39.

- Apolinar, J.; Rodríguez, M. Three-dimensional microscope vision system based on micro laser line scanning and adaptive genetic algorithms. Opt. Commun. 2017, 385, 1–8.

- Alanis, F.; Munoz, A. Microscope vision system based on micro laser line scanning for characterizing micro scale topography. Appl. Opt. 2020, 59, D189–D200.

- Wu, Z.-F.; Fan, Q.-L.; Ming, L.; Yang, W.; Lv, K.-L.; Chang, Q.; Li, W.-Z.; Wang, C.-J.; Pan, Q.-M.; He, L.; et al. A comparative study between traditional head measurement and structured light three-dimensional scanning when measuring infant head shape. Transl. Pediatr. 2021, 10, 2897–2906.

- Tran, T.T.; Ha, C.K. Non-contact Gap and Flush Measurement Using Monocular Structured Multi-line Light Vision for Vehicle Assembly. Int. J. Control Autom. Syst. 2018, 16, 2432–2445.

- Xue-Jun, Q.U.; Zhang, L. 3D Measurement Method Based on Binocular Vision Technique. Comput. Simul. 2011, 2, 373–377.

- Wu, F.; Mao, J.; Zhou, Y.F.; Qing, L. Three-line structured light measurement system and its application in ball diameter measurement. Opt. Z. Fur Licht Und Elektronenoptik J. Light Electronoptic 2018, 157, 222–229.

- Li, W.; Fang, S.; Duan, S. 3D shape measurement based on structured light projection applying polynomial interpolation technique. Opt. Int. J. Light Electron Opt. 2013, 124, 20–27.

- Zhao, H. High-Precision 3D Reconstruction for Small-to-Medium-Sized Objects Utilizing Line-Structured Light Scanning: A Review. Remote Sens. 2021, 13, 4457.

- Zhang, G.; Liu, Z.; Sun, J.; Wei, Z. Novel calibration method for a multi-sensor visual measurement system based on structured light. Opt. Eng. 2010, 49, 43602.

- Zhang, R.; Liu, W.; Lu, Y.; Zhang, Y.; Ma, J.-W.; Jia, Z. Local high precision 3D measurement based on line laser measuring instrument. In Proceedings of the Young Scientists Forum, Shanghai, China, 24–26 November 2017.

- Bleier, M.; Lucht, J.; Nüchter, A. SCOUT3D—AN UNDERWATER LASER SCANNING SYSTEM FOR MOBILE MAPPING. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 13–18.

- Yao, L.; Liu, H. Design and Analysis of High-Accuracy Telecentric Surface Reconstruction System Based on Line Laser. Appl. Sci. 2021, 11, 488.

- Yang, G.; Wang, Y. High resolution laser fringe pattern projection based on MEMS micro-vibration mirror scanning for 3D measurement. Opt. Laser Technol. 2021, 142, 107189.

- Dewar, R.; Engineers, S.O.M. Self-Generated Targets for Spatial Calibration of Structured-Light Optical Sectioning Sensors with Respect to an External Coordinate System; Society of Manufacturing Engineers: Southfield, MI, USA, 1988.

- Huang, Y.-G.; Li, X.; Chen, P.-F. Calibration method for line-structured light multi-vision sensor based on combined target. EURASIP J. Wirel. Commun. Netw. 2013, 2013, 92.

- Zhou, F.; Zhang, G. Complete calibration of a structured light stripe vision sensor through planar target of unknown orientations. Image Vis. Comput. 2005, 23, 59–67.

- Ze-xiao, X.; Weitong, Z.; Zhiwei, Z.; Ming, J. A novel approach for the field calibration of line structured-light sensors. Measurement 2010, 43, 190–196.

- Li, W.; Li, H.; Zhang, H. Light plane calibration and accuracy analysis for multi-line structured light vision measurement system. Optik 2020, 207, 163882.

- Zhang, Y.-C.; Han, J.-X.; Fu, X.-B.; Lin, H. An online measurement method based on line laser scanning for large forgings. Int. J. Adv. Manuf. Technol. 2014, 70, 439–448.

- Hwang, S.; An, Y.-K.; Yang, J.; Sohn, H. Remote Inspection of Internal Delamination in Wind Turbine Blades using Continuous Line Laser Scanning Thermography. Int. J. Precis. Eng. Manuf. Green Technol. 2020, 7, 699–712.

- Wu, X.; Tang, N.C.; Liu, B.; Long, Z. A novel high precise laser 3D profile scanning method with flexible calibration. Opt. Lasers Eng. 2020, 132, 105938.

- Zeng, X.; Huo, J.; Wu, Q. Calibrate Method for Scanning Direction of 3D Measurement System Based on Linear-Structure Light. Zhongguo Jiguang Chin. J. Lasers 2012, 39, 108002-5.

- Lin, Z.; Wang, T.; Nan, G.; Zhang, R. Three-dimensional Data Measurement of Engine Cylinder Head Blank Based on Line Structured Light. Opto Electron. Eng. 2014, 41, 46–51.

- Cai, Z.; Jin, C.; Xu, J.; Yang, T. Measurement of Potato Volume with Laser Triangulation and Three-Dimensional Reconstruction. IEEE Access 2020, 8, 176565–176574.

- Xiaozhong, G.; Shi, Z.; Yu, B.; Zhao, B.; Ke, L.-D.; Yanqiang, S. 3D measurement of gears based on a line structured light sensor. Precis. Eng. J. Int. Soc. Precis. Eng. Nanotechnol. 2020, 61, 160–169.

- Zhou, J.; Pan, L.; Li, Y.; Liu, P.; Liu, L. Real-Time Stripe Width Computation Using Back Propagation Neural Network for Adaptive Control of Line Structured Light Sensors. Sensors 2020, 20, 2618.

- Wang, Z.; Liu, S.; Hu, J.; Zhang, W.; Huang, H.; Liu, J. Line structured light 3D measurement technology for pipeline microscratches based on telecentric lens. Opt. Eng. 2021, 60, 124108.

- Cai, X.; Zhong, K.; Fu, Y.; Chen, J.; Liu, Y.; Huang, C. Calibration method for the rotating axis in panoramic 3D shape measurement based on a turntable. Meas. Sci. Technol. 2020, 32, 35004.

- Winkelbach, S.; Molkenstruck, S.; Wahl, F.M. Low-Cost Laser Range Scanner and Fast Surface Registration Approach. DBLP 2006, 718–728.

- Steger, C. An Unbiased Detector of Curvilinear Structures. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 113–125.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Ricolfe-Viala, C.; Sánchez-Salmerón, A.-J. Correcting non-linear lens distortion in cameras without using a model. Opt. Laser Technol. 2010, 42, 628–639.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).