Research on Fast Recognition and Localization of an Electric Vehicle Charging Port Based on a Cluster Template Matching Algorithm

Abstract

1. Introduction

2. Materials and Methods

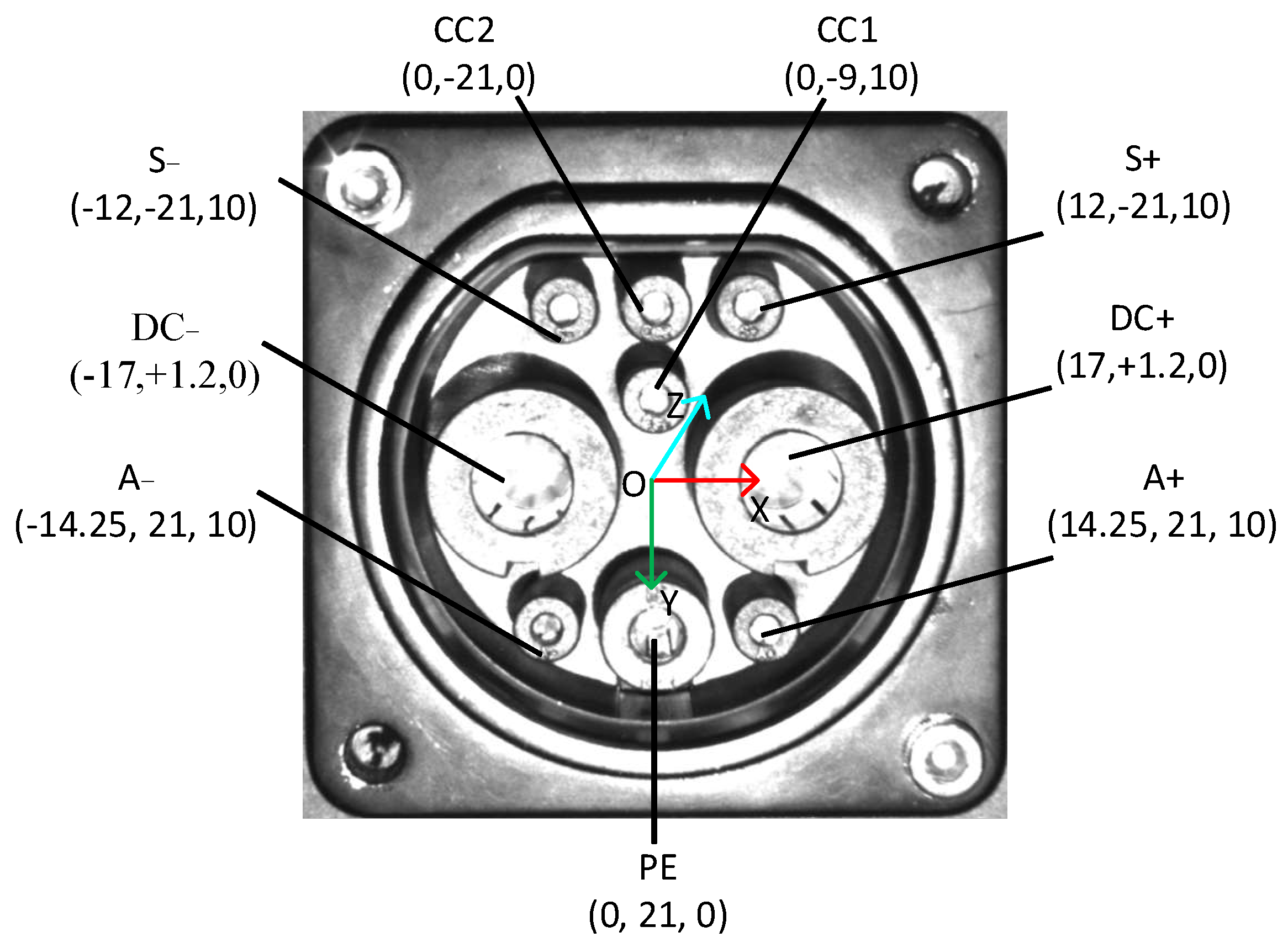

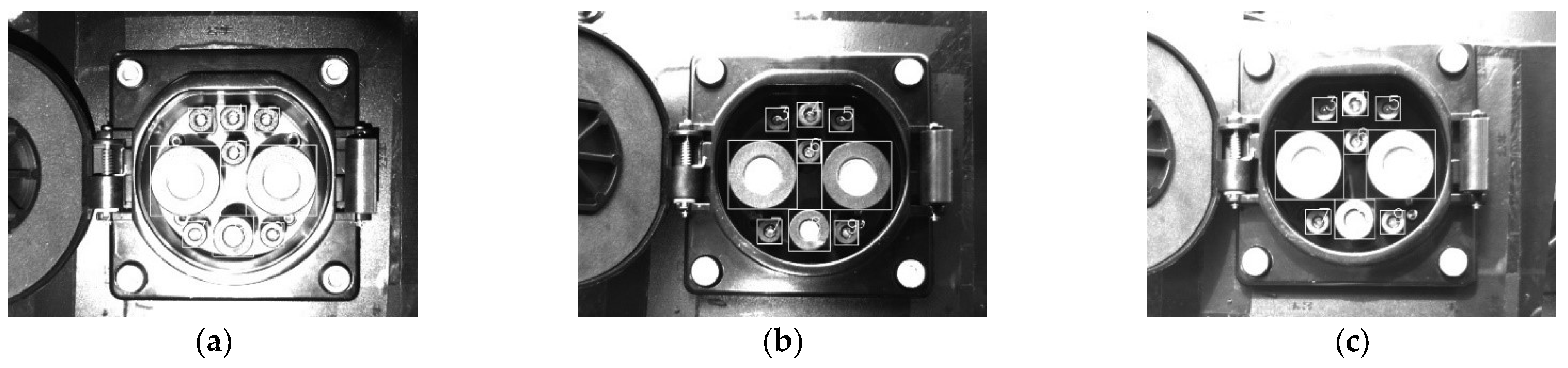

2.1. CP Structure and Complex Scene Description

2.2. Experimental Platform

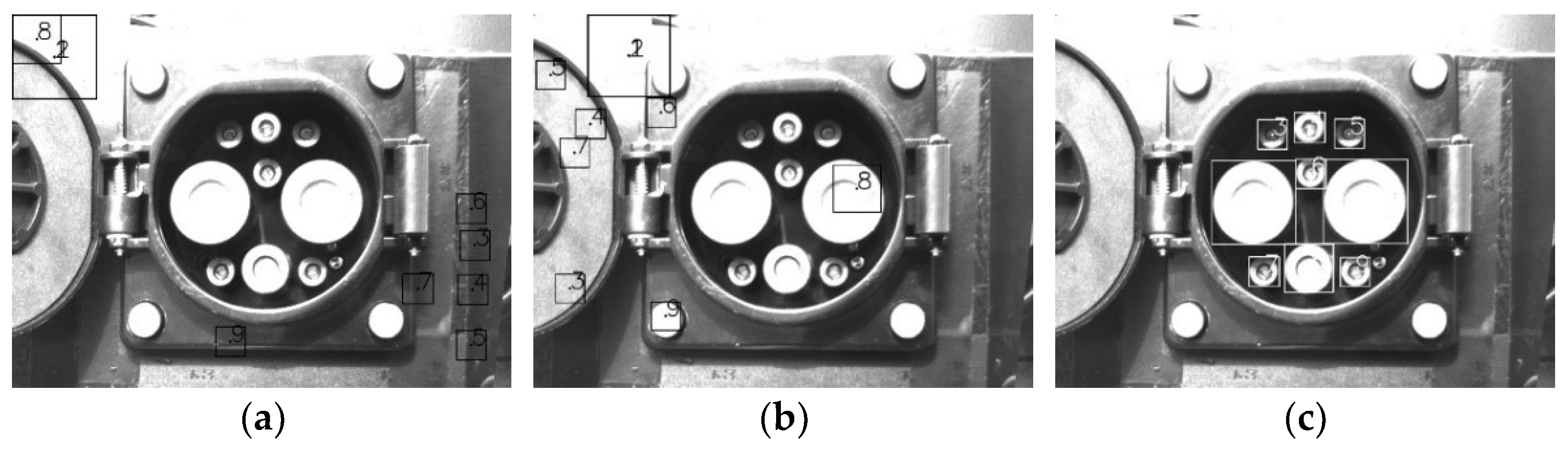

2.3. Image Data Collection

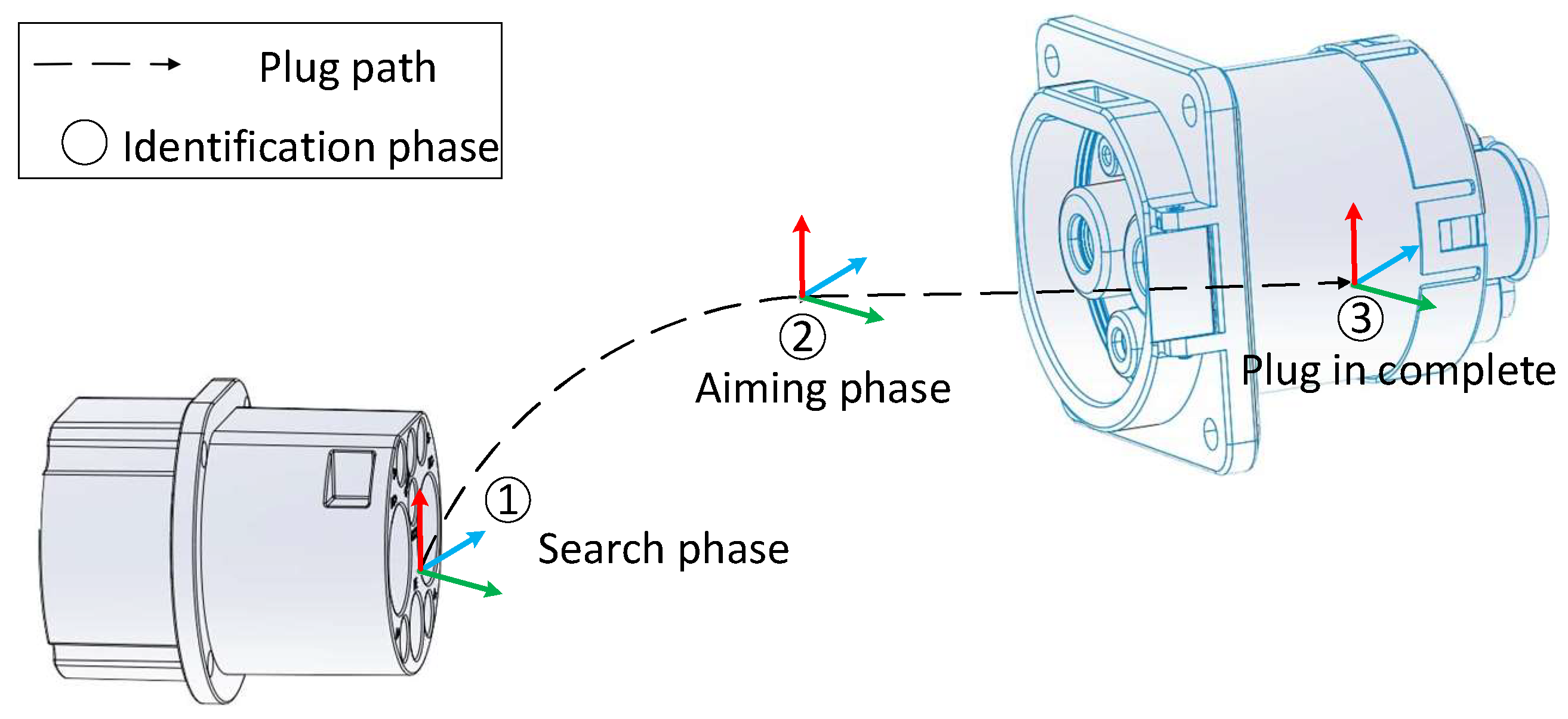

2.4. Identification and Positioning Methods

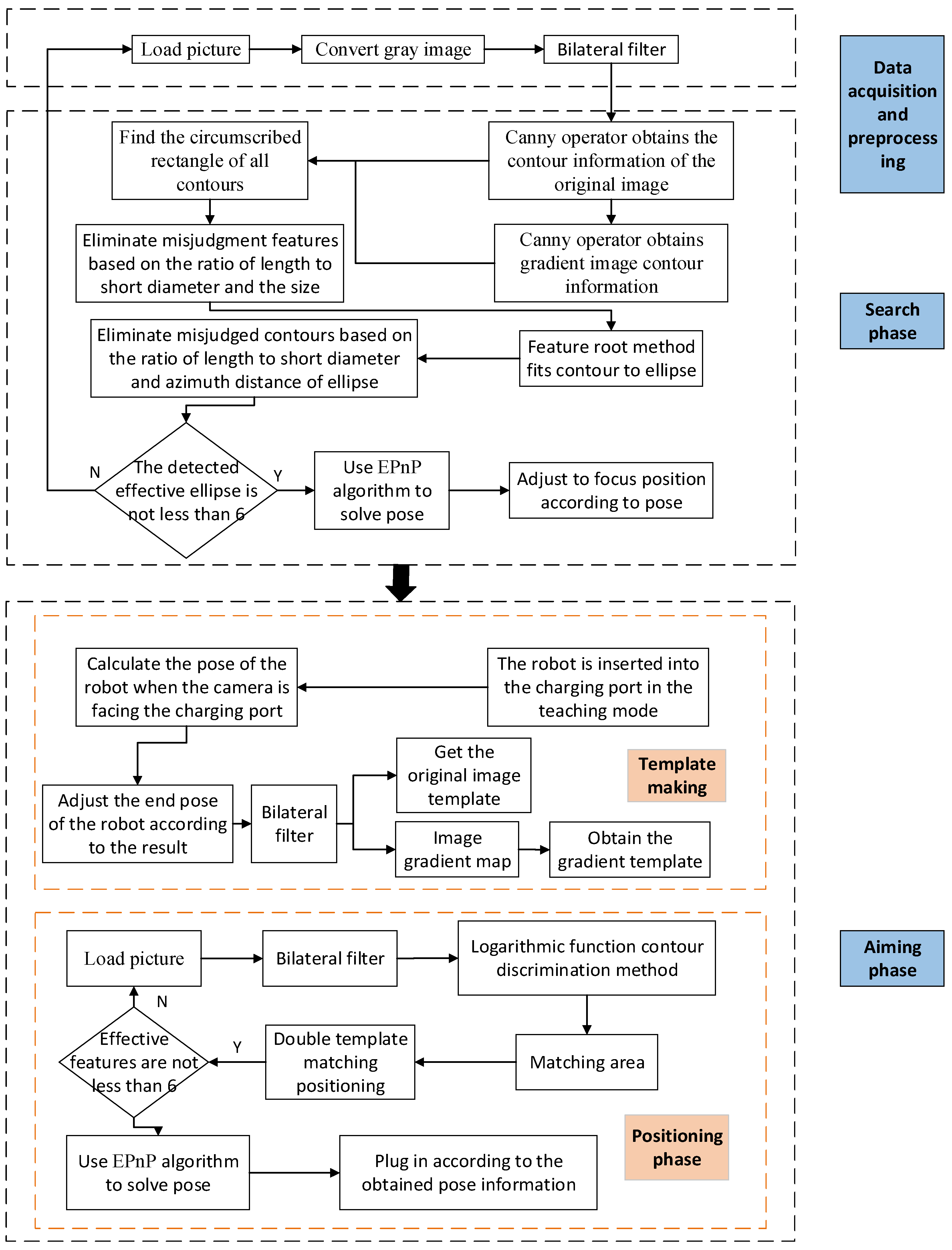

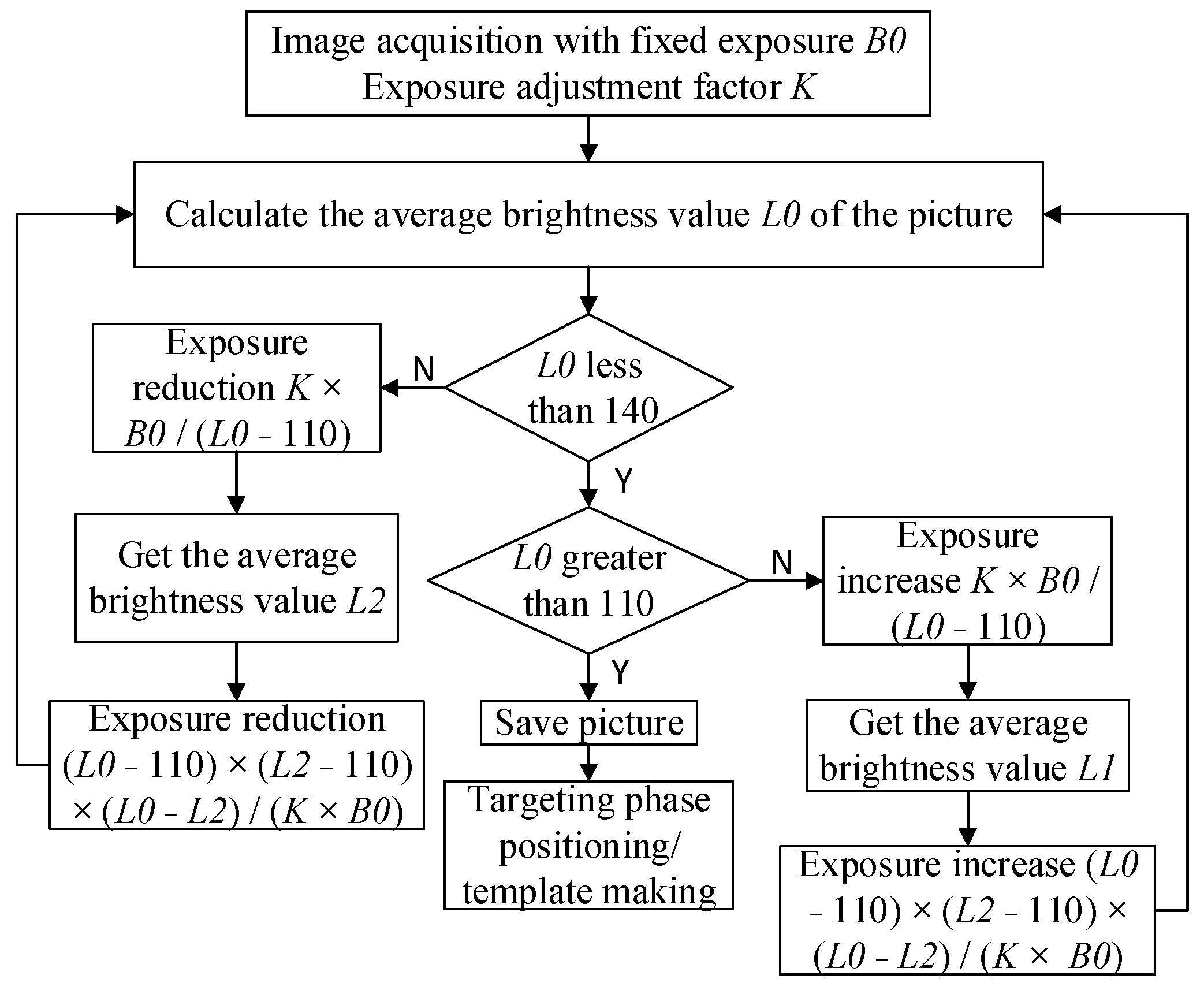

2.4.1. Technical Route

- (1) The image data are collected and converted into a grayscale image, and bilateral filtering is applied to the grayscale image.

- (2) The Canny algorithm is used to extract contours, and the smaller contours are eliminated. The remaining contours are then re-screened based on the length-to-width ratio of the minimum bounding rectangle.

- (3) The characteristic root method is used to fit each contour to an ellipse, and irrelevant ellipses are eliminated according to the discrimination conditions.

- (4) When at least six qualified feature points remain, the qualified feature information is converted into a pixel position matrix, the corresponding three-dimensional positions are assembled into a spatial position matrix, and the EPnP algorithm is used to solve for the pose of the CP relative to the camera.
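The screening in step (2) and the six-point condition in step (4) can be illustrated with a minimal sketch. The `Candidate` container, the `min_side` and `max_ratio` thresholds, and the sample values are hypothetical and chosen only for illustration; the source does not state the actual thresholds or data structures.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical record of one contour's minimum bounding rectangle."""
    cx: float      # rectangle centre, pixels
    cy: float
    width: float   # rectangle side lengths, pixels
    height: float


def screen_candidates(cands, min_side=10.0, max_ratio=1.5):
    """Drop small contours, then keep those with a length-to-width ratio near 1.

    min_side and max_ratio are assumed values, not taken from the paper.
    min_side must be positive so the ratio below never divides by zero.
    """
    kept = []
    for c in cands:
        short, long_ = sorted((c.width, c.height))
        if short < min_side:
            continue  # eliminate the smaller contours
        if long_ / short > max_ratio:
            continue  # re-screen by length-to-width ratio
        kept.append(c)
    return kept


def ready_for_pose(kept, min_points=6):
    """Pose solving proceeds only with at least six qualified features."""
    return len(kept) >= min_points


# Six plausible round features plus two contours that should be rejected.
cands = [Candidate(100 + 40 * i, 200, 30, 32) for i in range(6)]
cands.append(Candidate(50, 50, 4, 5))      # too small
cands.append(Candidate(300, 80, 20, 90))   # too elongated
kept = screen_candidates(cands)
print(len(kept), ready_for_pose(kept))
```

In a real pipeline the rectangles would come from the contour extraction stage, and the surviving candidates would then go through ellipse fitting before the pose is solved.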

- (1)

- Before the recognition, the robotic arm is inserted into the CP in the robot teaching mode, and then, according to the result of the hand–eye calibration, the robot arm is controlled to pull out the CP.

- (2)

- Images are collected in front of the CP as a template, and the developed template extraction software is used to make the feature template and gradient feature template of the CP.

- (3)

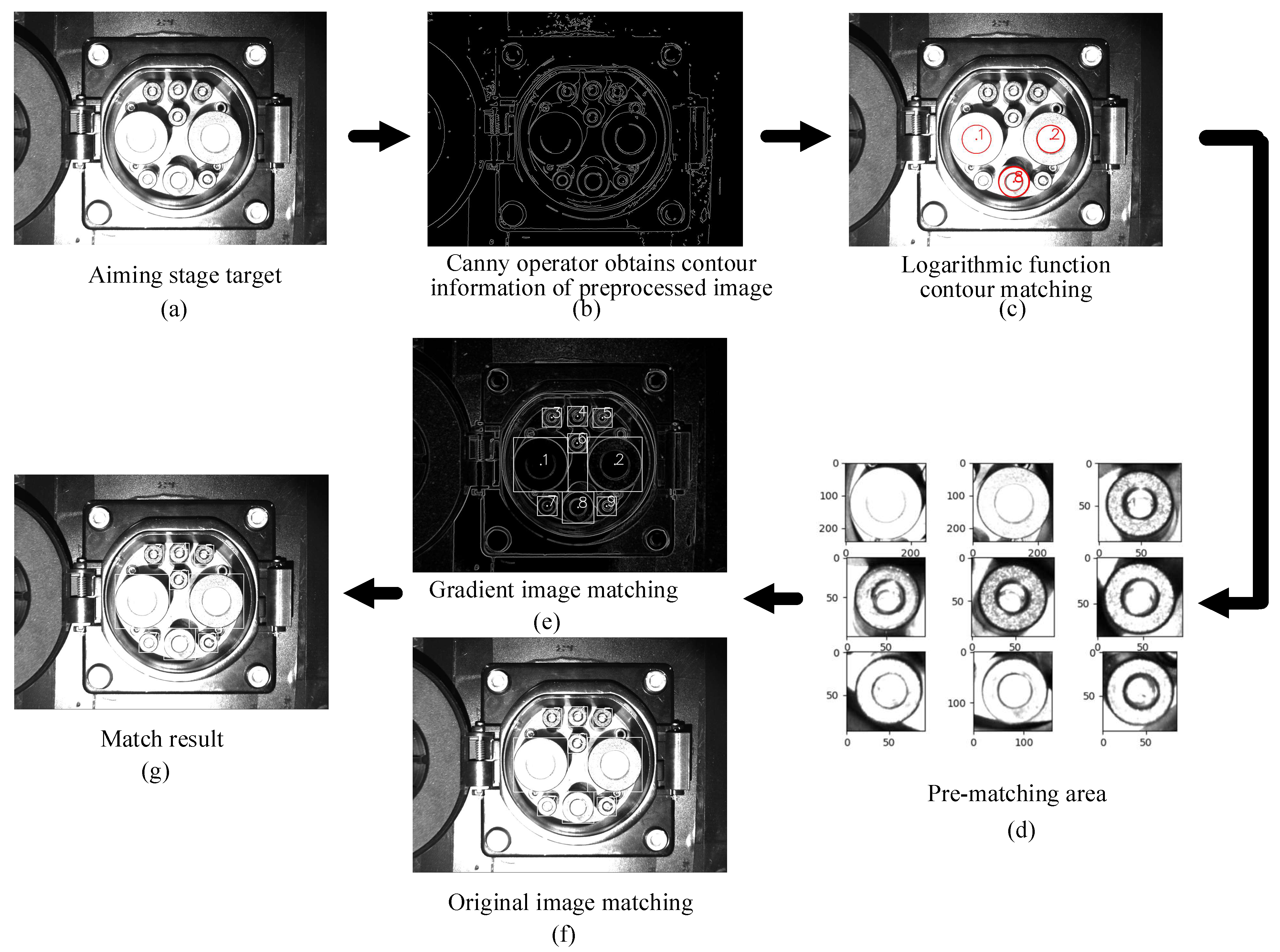

- During recognition, image information is collected at the aiming position. First, the bilateral filtering is performed on the image, and then the image contour information is extracted using the canny operator.

- (4)

- To improve the accuracy and efficiency of template matching, this paper proposes a method based on the CTMA. In the proposed method, the area of each feature point is matched according to the contour information, thereby reducing the matching time and improving the robustness of the template matching algorithm in an unstable light field environment.

- (5)

- Effective features are selected according to decision-making conditions; the effective feature center point position is converted into a pixel position matrix, and the position corresponding to the effective feature is transferred into a spatial position matrix. The EPnP algorithm is used to obtain the CP pose relative to the camera.
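The benefit described in step (4), matching each feature only within an area suggested by the contour information, can be sketched as follows. This is not the authors' CTMA: it is a toy illustration that uses a sum of absolute differences as the matching score and a hypothetical candidate position standing in for a contour-derived location. The function names and the search radius are assumptions.

```python
def sad(image, template, top, left):
    """Sum of absolute differences between the template and one image patch."""
    return sum(
        abs(image[top + r][left + c] - template[r][c])
        for r in range(len(template))
        for c in range(len(template[0]))
    )


def match_in_region(image, template, candidate, radius):
    """Search only top-left positions within +/- radius of a candidate (row, col).

    Restricting the search to a small window is what saves time compared
    with sliding the template over the whole image.
    """
    th, tw = len(template), len(template[0])
    rows, cols = len(image), len(image[0])
    cy, cx = candidate
    best = None
    for top in range(max(0, cy - radius), min(rows - th, cy + radius) + 1):
        for left in range(max(0, cx - radius), min(cols - tw, cx + radius) + 1):
            score = sad(image, template, top, left)
            if best is None or score < best[0]:
                best = (score, top, left)
    return best  # (score, top, left), or None if the window is empty


# Toy 8x8 image with a bright 2x2 blob whose top-left corner is at row 3, column 4.
image = [[0] * 8 for _ in range(8)]
for r in (3, 4):
    for c in (4, 5):
        image[r][c] = 255
template = [[255, 255], [255, 255]]

# Hypothetical candidate position, e.g. derived from a contour centroid.
result = match_in_region(image, template, candidate=(4, 4), radius=2)
print(result)  # (0, 3, 4): perfect match at row 3, column 4
```

With a radius of 2, only 25 positions are scored instead of all 49 valid positions in the toy image; on full-resolution images the saving grows with image size.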

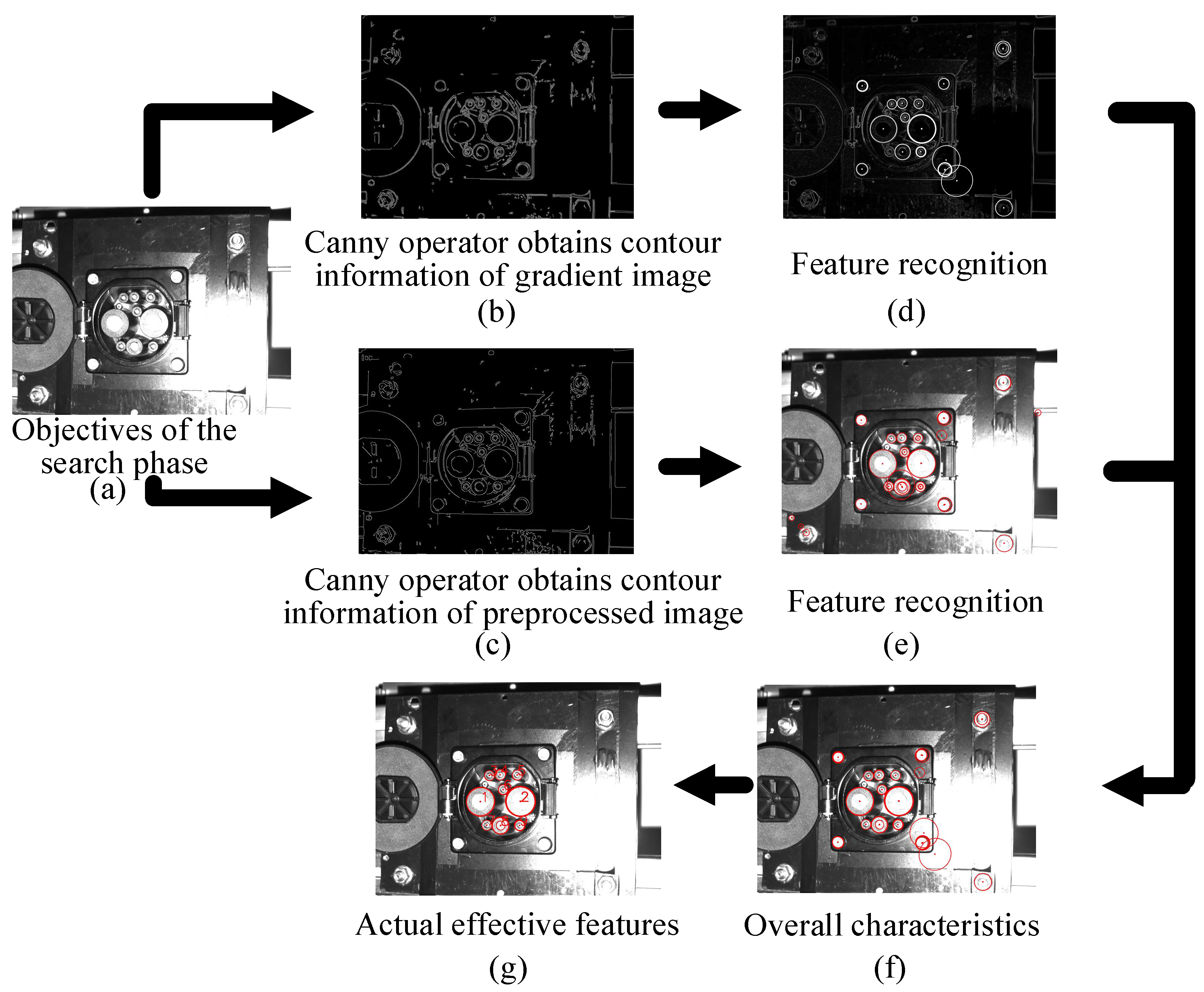

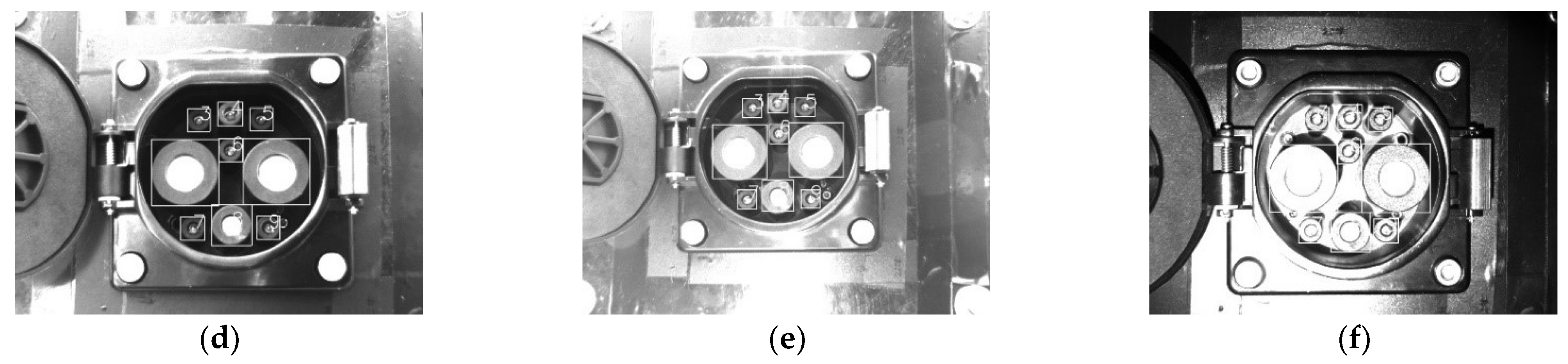

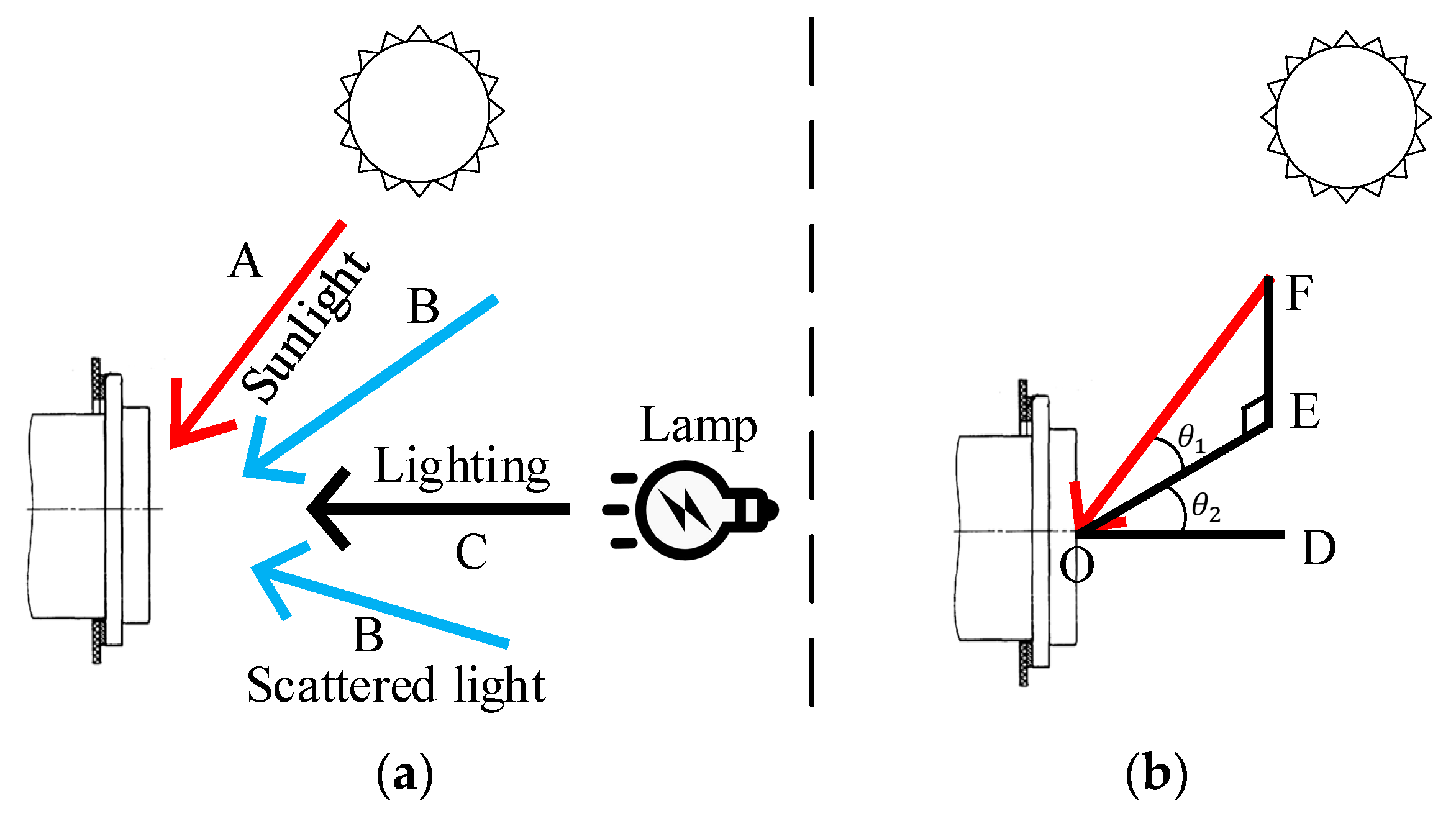

2.4.2. Feature Recognition Method of a CP in Search Phase

2.4.3. CP Pose Calculation

- (1) Select at least four feature points in the world coordinate system;

- (2) Calculate the weighting factor;

- (3) Calculate the feature points in the camera coordinate system;

- (4) Calculate the minimum error by the Gauss–Newton algorithm and define the error as follows: where is the nth feature point of in the camera coordinate system;

- (5) Obtain the three-dimensional coordinates of the feature in the camera coordinate system;

- (6) Calculate the translation vector T and rotation matrix R of the CP pose;

- (7) The x, y, and z values of the CP pose are the components of the translation vector T; and

- (8) Solve Equation (14) to obtain the rotation values of the CP pose, namely, Rx, Ry, and Rz.
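Equation (14) is not reproduced in this text, so the following is only a sketch of how step (8) might look under an assumed convention: the rotation matrix is taken to be R = Rz·Ry·Rx (rotation about x first, then y, then z). The paper's actual convention may differ, and the function names are hypothetical.

```python
import math


def rotation_from_euler(rx, ry, rz):
    """Build R = Rz(rz) @ Ry(ry) @ Rx(rx); angles in radians (assumed convention)."""
    sx, cx = math.sin(rx), math.cos(rx)
    sy, cy = math.sin(ry), math.cos(ry)
    sz, cz = math.sin(rz), math.cos(rz)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
        [-sy, cy * sx, cy * cx],
    ]


def euler_from_rotation(R):
    """Recover (Rx, Ry, Rz) in degrees; valid away from Ry = +/-90 degrees."""
    ry = math.atan2(-R[2][0], math.hypot(R[0][0], R[1][0]))
    rx = math.atan2(R[2][1], R[2][2])
    rz = math.atan2(R[1][0], R[0][0])
    return tuple(math.degrees(a) for a in (rx, ry, rz))


# Round trip with small angles similar in size to the errors reported in the tables.
R = rotation_from_euler(math.radians(2.0), math.radians(-3.0), math.radians(1.5))
angles = euler_from_rotation(R)
print(angles)
```

The translation components x, y, and z need no such conversion, since they are read directly from T as stated in step (7). In OpenCV, for instance, `cv2.solvePnP` with `flags=cv2.SOLVEPNP_EPNP` returns a rotation vector and translation vector, and `cv2.Rodrigues` converts the rotation vector into the matrix R used here.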

3. Results

3.1. CP Pose Error

- (1) The world coordinates of the robot base and the CP were kept unchanged.

- (2) With the robot in teaching mode, the charging gun was moved into the CP, and this pose was used as the robot’s zero pose.

- (3) The charging gun was moved out of the CP, and the robot moved randomly within the recognition range to obtain image information.

- (4) Based on the zero-pose information and the current pose information of the robot, the pose of the camera relative to the CP was obtained; this was taken as the actual pose of the camera relative to the CP.

- (5) The absolute value of the difference between the actual pose and the theoretical pose was used as the basis for error judgment.
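The error measure in step (5) can be sketched as a per-component absolute difference between two six-component poses (x, y, z, Rx, Ry, Rz). The function names and sample values below are hypothetical, and the sketch assumes small angles so that no angle wrap-around handling is needed.

```python
def pose_abs_error(actual, theoretical):
    """Element-wise absolute difference between two poses (x, y, z, Rx, Ry, Rz)."""
    if len(actual) != 6 or len(theoretical) != 6:
        raise ValueError("each pose needs six components")
    return tuple(abs(a - t) for a, t in zip(actual, theoretical))


def mean_abs_error(errors):
    """Average each component's error over a non-empty list of samples."""
    n = len(errors)
    return tuple(sum(e[i] for e in errors) / n for i in range(6))


# Hypothetical poses: millimetres for x, y, z and degrees for Rx, Ry, Rz.
actual = (100.5, 50.0, 300.0, 1.0, -2.0, 0.5)
theoretical = (100.0, 51.0, 298.5, 0.0, -1.5, 0.5)
err = pose_abs_error(actual, theoretical)
print(err)  # (0.5, 1.0, 1.5, 1.0, 0.5, 0.0)
print(mean_abs_error([err, (1.5, 1.0, 0.5, 1.0, 0.5, 1.0)]))
```

Averaging such per-sample errors over the 100 samples of each scene would give one row of per-axis values of the kind listed in the accuracy tables, although the source does not state which statistic those tables report.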

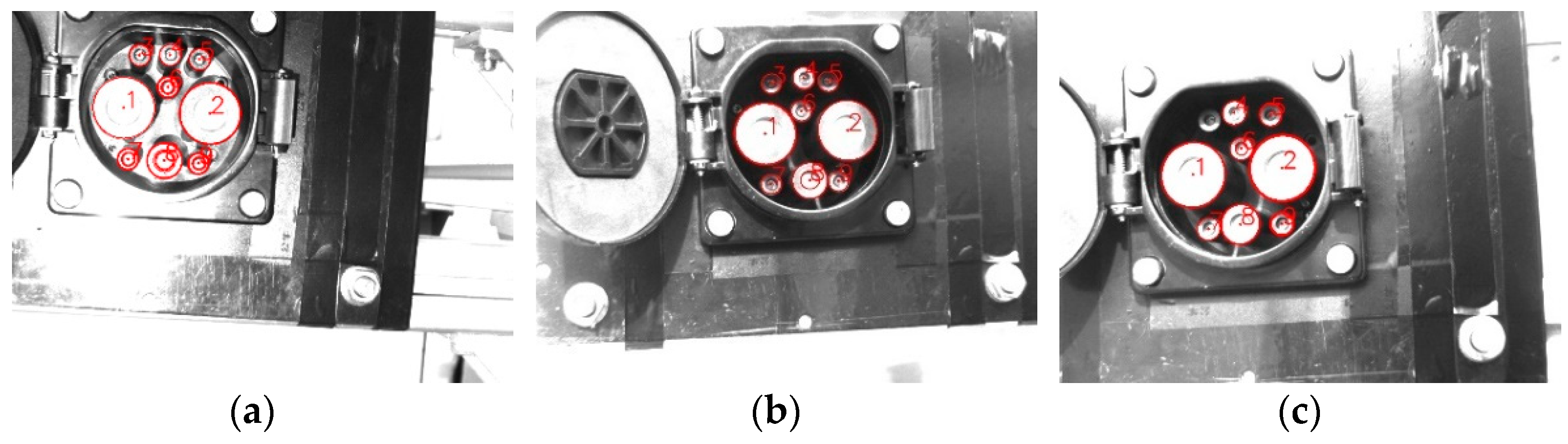

3.2. Search-Phase Pose Accuracy Test

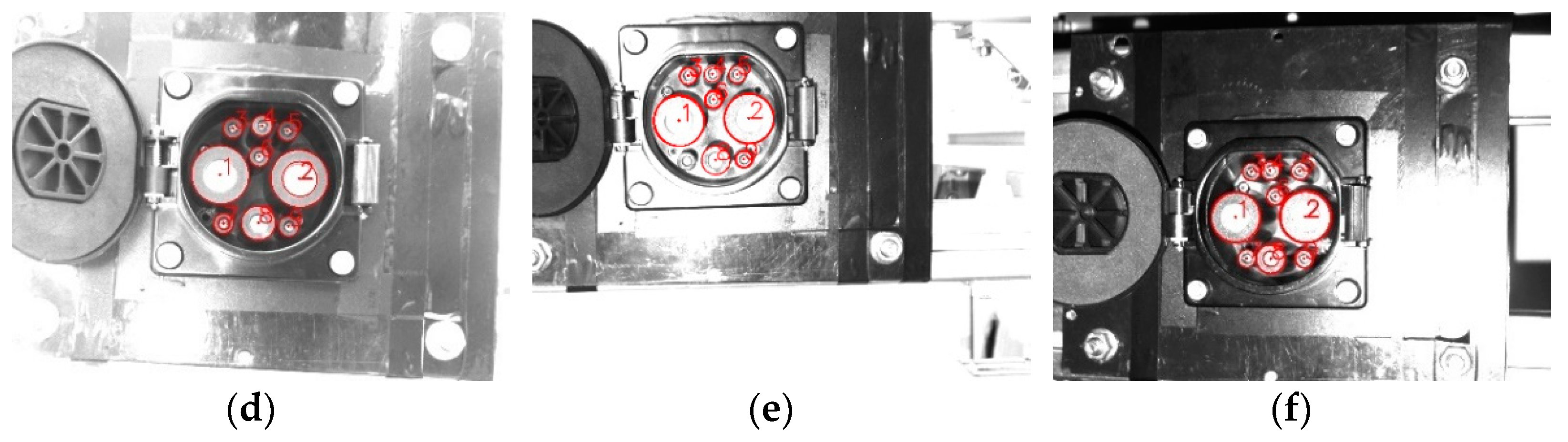

3.3. Aiming-Phase Pose Accuracy Test

3.4. Charging Gun Insertion Test Verification

4. Discussion

4.1. Results Comparison

4.2. System Error

4.3. Feature Point Recognition Deviation

4.3.1. Feature Point Recognition Deviation in Search Phase

4.3.2. Feature Point Recognition Deviation in Aiming Phase

4.4. Feature Point Pose Calculation Error

4.5. Calibration Influence on Result Accuracy

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yu, P.; Zhang, J.; Yang, D.; Lin, X.; Xu, T. The Evolution of China’s New Energy Vehicle Industry from the Perspective of a Technology–Market–Policy Framework. Sustainability 2019, 11, 1711.

- Wang, X.; Jiang, C. The Signal Effect of New Energy Vehicles Promotion on Enterprise Innovation. Complexity 2021, 2021, 9920740.

- Li, Y.; Zeng, B.; Wu, T.; Hao, H. Effects of Urban Environmental Policies on Improving Firm Efficiency: Evidence from Chinese New Energy Vehicle Firms. J. Clean. Prod. 2019, 215, 600–610.

- Zhao, D.; Ji, S.; Wang, H.; Jiang, L. How Do Government Subsidies Promote New Energy Vehicle Diffusion in the Complex Network Context? A Three-Stage Evolutionary Game Model. Energy 2021, 230, 120899.

- Khan, P.W.; Byun, Y.-C. Smart Contract Centric Inference Engine for Intelligent Electric Vehicle Transportation System. Sensors 2020, 20, 4252.

- Gao, Z.; Sun, T.; Xiao, H. Decision-Making Method for Vehicle Longitudinal Automatic Driving based on Reinforcement Q-Learning. Int. J. Adv. Robot. Syst. 2019, 16, 172988141985318.

- He, C.; Chen, J.; Feng, Q.; Yin, X.; Li, X. Safety Analysis and Solution of Electric Vehicle Charging. Distrib. Util. 2017, 34, 12–18.

- Lou, Y.; Lin, H.; Quan, P.; Wei, D.; Di, S. Robust Adaptive Control of Fully Constrained Cable-Driven Serial Manipulator with Multi-Segment Cables Using Cable Tension Sensor Measurements. Sensors 2021, 21, 1623.

- Yuan, H.; Wu, Q.; Zhou, L. Concept Design and Load Capacity Analysis of a Novel Serial-Parallel Robot for the Automatic Charging of Electric Vehicles. Electronics 2020, 9, 956.

- Zhang, C.; Ao, H.; Jiang, H.; Zhou, N. Investigations on Start-up Performances of Novel Hybrid Metal Rubber-Bump Foil Bearings. Tribol. Int. 2021, 154, 106751.

- Li, X.; Gu, J.; Sun, X.; Li, J.; Tang, S. Parameter Identification of Robot Manipulators with Unknown Payloads Using an Improved Chaotic Sparrow Search Algorithm. Appl. Intell. 2022.

- Feng, Z.; Wang, H.; Wang, C.; Sun, X.; Zhang, S. Analysis of the Influencing Factors of FDM-Supported Positions for the Compressive Strength of Printing Components. Materials 2021, 14, 4008.

- Lu, X. Research on Robotic Charging Technology for Electric Vehicles Based on Monocular Vision and Force Sensing Technology. Master’s Thesis, Harbin Institute of Technology School, Harbin, China, 2020. (In Chinese).

- Pan, M.; Sun, C.; Liu, J.; Wang, Y. Automatic Recognition and Location System for Electric Vehicle Charging Port in Complex Environment. IET Image Process. 2020, 14, 2263–2272.

- Miseikis, J.; Ruther, M.; Walzel, B.; Hirz, M.; Brunner, H. 3D Vision Guided Robotic Charging Station for Electric and Plug-in Hybrid Vehicles. arXiv 2017, arXiv:1703.05381.

- Duan, Z. Recognition and Positioning of Automatic Charging Interface of Electric Vehicle based on Image Recognition Algorithm and Its Control Method. Master’s Thesis, Xiamen University, Xiamen, China, 2017. (In Chinese).

- Yao, A.; Xu, J. Electric Vehicle Charging Hole Recognition and Positioning System Based on Binocular Vision. Sens. Microsyst. 2021, 40, 81–84.

- Yin, K. Research on the visual positioning technology of electric vehicle charging port position. Master’s Thesis, Harbin Institute of Technology School, Harbin, China, 2020. (In Chinese).

- Quan, P.; Lou, Y.; Lin, H.; Liang, Z. Research on Fast Identification and Location of Contour Features of Electric Vehicle Charging Port in Complex Scenes. IEEE Access 2021, 10, 26702–26714.

- Jin, S.; Li, X.; Yang, X.; Zhang, J.A.; Shen, D. Identification of Tropical Cyclone Centers in SAR Imagery Based on Template Matching and Particle Swarm Optimization Algorithms. IEEE Trans. Geosci. Remote Sens. 2019, 57, 598–608.

- Lu, Y.; Zhang, X.; Pang, S.; Li, H.; Zhu, B. A Robust Edge-Based Template Matching Algorithm for Displacement Measurement of Compliant Mechanisms under Scanning Electron Microscope. Rev. Sci. Instrum. 2021, 92, 033703.

- Jung, J.-H.; Lee, H.-S.; Kim, B.-G.; Park, D.-J. Fast Block Matching Algorithm Using Spatial Intensity Distribution; IEEE: Piscataway, NJ, USA, 2005; p. 185. ISBN 978-0-7695-2358-3.

- Cui, Z.; Qi, W.; Liu, Y. A Fast Image Template Matching Algorithm Based on Normalized Cross Correlation. J. Phys. Conf. Ser. 2020, 1693, 012163.

- Yang, Y.; Chen, Z.; Li, X.; Guan, W.; Zhong, D.; Xu, M. Robust Template Matching with Large Angle Localization. Neurocomputing 2020, 398, 495–504.

- Tsai, C.-Y.; Yu, C.-C. Real-Time Textureless Object Detection and Recognition Based on an Edge-Based Hierarchical Template Matching Algorithm. J. Appl. Sci. Eng. 2018, 21, 229–240.

- He, Z.; Jiang, Z.; Zhao, X.; Zhang, S.; Wu, C. Sparse Template-Based 6-D Pose Estimation of Metal Parts Using a Monocular Camera. IEEE Trans. Ind. Electron. 2020, 67, 390–401.

- Han, Y. Reliable Template Matching for Image Detection in Vision Sensor Systems. Sensors 2021, 21, 8176.

- Zhang, Z. A Flexible New Technique for Camera Calibration. IEEE Trans. Pattern Anal. Machine Intell. 2000, 22, 1330–1334.

- Pan, H.; Wang, N.L.; Qin, Y.S. A Closed-Form Solution to Eye-to-Hand Calibration towards Visual Grasping. Ind. Robot. Int. J. 2014, 41, 567–574.

- Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An Accurate O(n) Solution to the PnP Problem. Int. J. Comput. Vis. 2009, 81, 155–166.

| Scenes | Weather | Time Period | Min Light Intensity (klux) | Max Light Intensity (klux) | Number of Samples |
|---|---|---|---|---|---|
| Indoor | Sunny/Overcast | Time 1/2/3 | 3.1 | 4.8 | 100 |
| Outdoor | Sunny | Time 1/3 | 7.4 | 43.8 | 100 |
| Outdoor | Sunny | Time 2 | 12.9 | 52.3 | 100 |
| Outdoor | Overcast | Time 1/3 | 7.3 | 15.4 | 100 |
| Outdoor | Overcast | Time 2 | 5.4 | 22.8 | 100 |
| Indoor/Outdoor | Sunny/Overcast | Time 4 | 0.7 | 3.0 | 100 |

| Scenes | Weather | Time Period | Min Light Intensity (klux) | Max Light Intensity (klux) | Number of Samples |
|---|---|---|---|---|---|
| Indoor | Sunny/Overcast | Time 1/2/3 | 4.5 | 5.4 | 100 |
| Outdoor | Sunny | Time 1/3 | 8.3 | 43.5 | 100 |
| Outdoor | Sunny | Time 2 | 12.6 | 52.4 | 100 |
| Outdoor | Overcast | Time 1/3 | 6.9 | 17.1 | 100 |
| Outdoor | Overcast | Time 2 | 7.2 | 21.7 | 100 |
| Indoor/Outdoor | Sunny/Overcast | Time 4 | 2.9 | 3.5 | 100 |

| Scenes | Weather | Time Period | x (mm) | y (mm) | z (mm) | Rx (deg) | Ry (deg) | Rz (deg) |
|---|---|---|---|---|---|---|---|---|
| Indoor | Sunny/Overcast | Time 1/2/3 | 1.62 | 1.77 | 1.98 | 2.10 | 2.19 | 1.65 |
| Outdoor | Sunny | Time 1/3 | 2.12 | 2.17 | 2.45 | 2.44 | 2.48 | 1.94 |
| Outdoor | Sunny | Time 2 | 2.31 | 2.36 | 2.71 | 2.61 | 2.87 | 1.99 |
| Outdoor | Overcast | Time 1/3 | 1.73 | 1.89 | 2.11 | 2.07 | 2.16 | 1.85 |
| Outdoor | Overcast | Time 2 | 1.76 | 1.82 | 2.21 | 2.13 | 2.21 | 1.88 |
| Indoor/Outdoor | Sunny/Overcast | Time 4 | 1.51 | 1.82 | 2.05 | 1.82 | 2.15 | 1.69 |

| Scenes | Weather | Time Period | x (mm) | y (mm) | z (mm) | Rx (deg) | Ry (deg) | Rz (deg) |
|---|---|---|---|---|---|---|---|---|
| Indoor | Sunny/Overcast | Time 1/2/3 | 0.52 | 0.67 | 1.04 | 1.07 | 0.77 | 0.41 |
| Outdoor | Sunny | Time 1/3 | 0.74 | 1.05 | 1.32 | 1.21 | 1.23 | 0.7 |
| Outdoor | Sunny | Time 2 | 0.85 | 1.11 | 1.51 | 1.26 | 1.34 | 0.75 |
| Outdoor | Overcast | Time 1/3 | 0.62 | 0.75 | 1.22 | 1.04 | 0.79 | 0.44 |
| Outdoor | Overcast | Time 2 | 0.68 | 0.84 | 1.26 | 1.13 | 0.89 | 0.57 |
| Indoor/Outdoor | Sunny/Overcast | Time 4 | 0.51 | 0.64 | 1.06 | 0.94 | 0.69 | 0.43 |

| Positioning Phase | Scenes | Weather | Time Period | Min Light Intensity (klux) | Max Light Intensity (klux) | Number of Samples |
|---|---|---|---|---|---|---|
| Search phase | Indoor | Sunny/Overcast | Time 1/2/3/4 | 1.74 | 3.68 | 100 |
| Search phase | Outdoor | Sunny | Time 1/2/3 | 10.23 | 48.09 | 100 |
| Search phase | Outdoor | Overcast | Time 1/2/3 | 6.29 | 18.90 | 100 |
| Aiming phase | Indoor | Sunny/Overcast | Time 1/2/3/4 | 3.92 | 4.59 | 100 |
| Aiming phase | Outdoor | Sunny | Time 1/2/3 | 10.48 | 48.12 | 100 |
| Aiming phase | Outdoor | Overcast | Time 1/2/3 | 6.95 | 19.36 | 100 |

| Positioning Phase | Scenes | Weather | Time Period | Successfully Identified/Plugged (Times) | Successful Recognition/Plugging Rate (%) |
|---|---|---|---|---|---|
| Search/Aiming phase | Indoor | / | / | 99 | 99 |
| Search/Aiming phase | Outdoor | Sunny | AM/PM | 92 | 92 |
| Search/Aiming phase | Outdoor | Overcast | AM/PM | 94 | 94 |

| Positioning Phase | Method | x (mm) | y (mm) | z (mm) | Rx (deg) | Ry (deg) | Rz (deg) | Running Time (s) |
|---|---|---|---|---|---|---|---|---|
| Search phase | Our + AP3P | 2.24 | 2.46 | 3.15 | 6.56 | 4.21 | 2.01 | 0.27 |
| Search phase | Our + P3P | 2.23 | 2.47 | 3.14 | 5.67 | 4.11 | 1.99 | 0.27 |
| Search phase | Our + UPNP | 1.88 | 1.97 | 2.28 | 2.34 | 2.39 | 1.88 | 0.27 |
| Search phase | Our + ITERATIVE | 1.91 | 1.99 | 2.34 | 2.39 | 2.41 | 1.91 | 0.27 |
| Search phase | Our + EPNP | 1.84 | 1.97 | 2.25 | 2.20 | 2.34 | 1.83 | 0.27 |
| Search phase | Quan [18] | 2.27 | 2.53 | 2.67 | / | / | / | 1.72 |
| Search phase | Yinkai [19] | / | / | / | / | / | / | 1.14 |
| Aiming phase | Our + AP3P | 1.13 | 1.32 | 2.13 | 4.45 | 2.34 | 0.71 | 0.21 |
| Aiming phase | Our + P3P | 1.10 | 1.34 | 2.11 | 4.16 | 2.15 | 0.69 | 0.21 |
| Aiming phase | Our + UPNP | 0.66 | 0.85 | 1.26 | 1.13 | 0.98 | 0.55 | 0.21 |
| Aiming phase | Our + ITERATIVE | 0.81 | 0.89 | 1.33 | 1.25 | 1.13 | 0.61 | 0.21 |
| Aiming phase | Our + EPNP | 0.65 | 0.84 | 1.24 | 1.11 | 0.95 | 0.55 | 0.21 |
| Aiming phase | Quan [18] | 0.67 | 0.88 | 1.26 | 1.24 | 1.01 | 0.58 | 1.21 |
| Aiming phase | Yinkai [19] | 0.89 | 1.11 | 1.31 | 1.23 | 1.14 | 0.63 | 6.75 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Quan, P.; Lou, Y.; Lin, H.; Liang, Z.; Wei, D.; Di, S. Research on Fast Recognition and Localization of an Electric Vehicle Charging Port Based on a Cluster Template Matching Algorithm. Sensors 2022, 22, 3599. https://doi.org/10.3390/s22093599
