6.1. Implementation

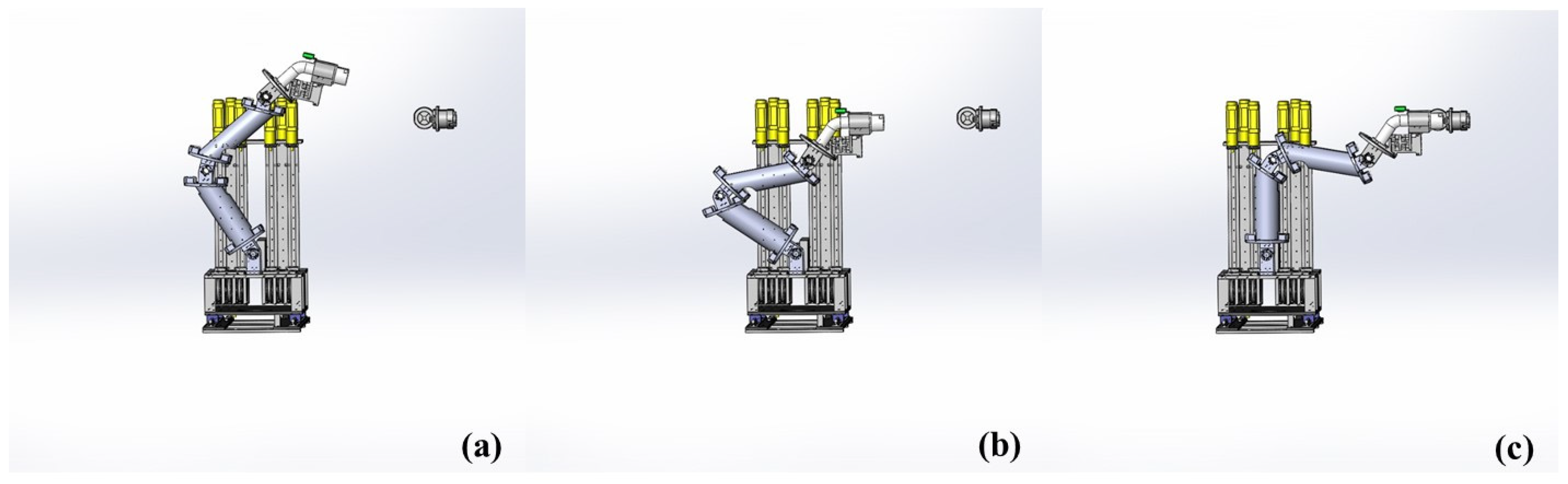

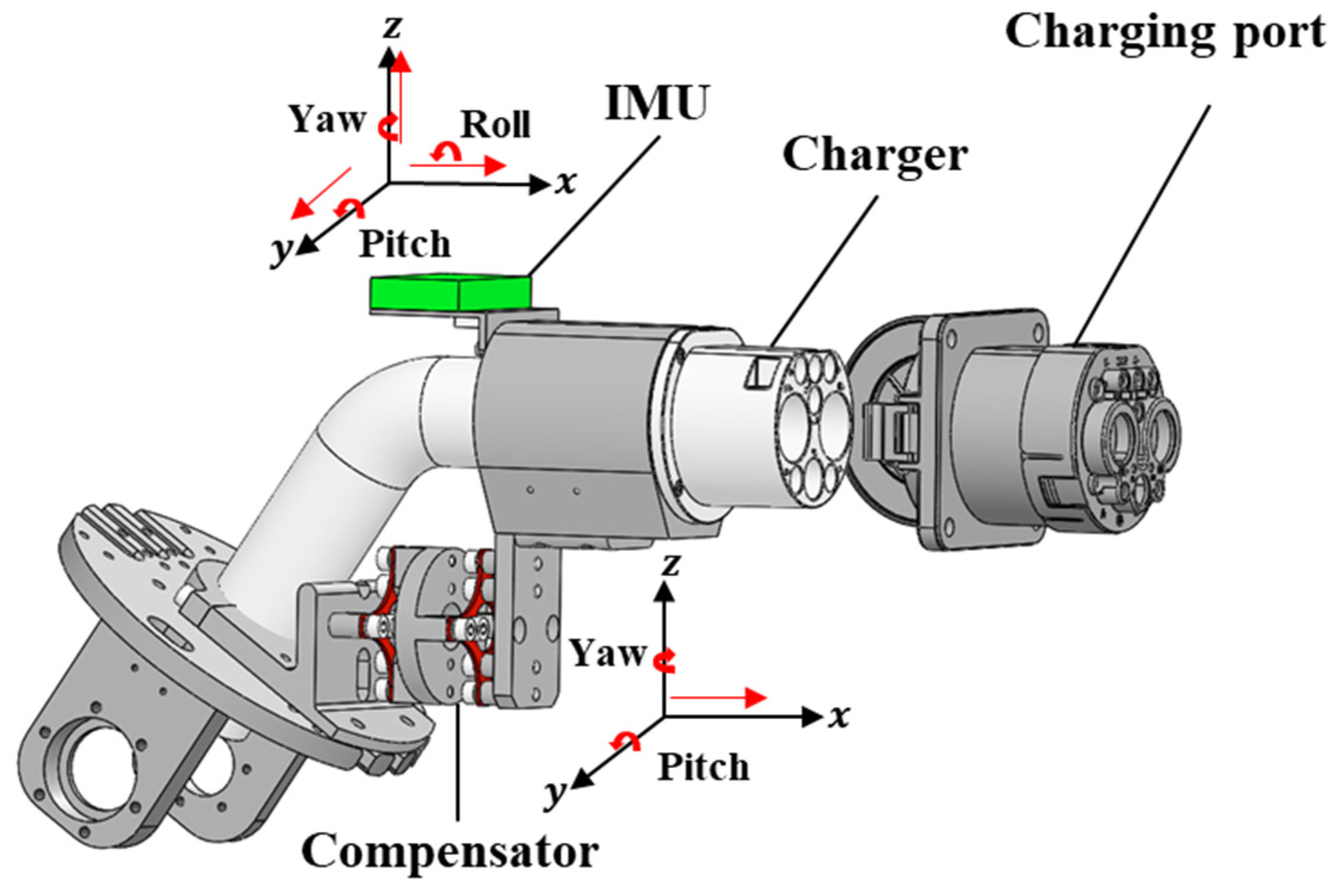

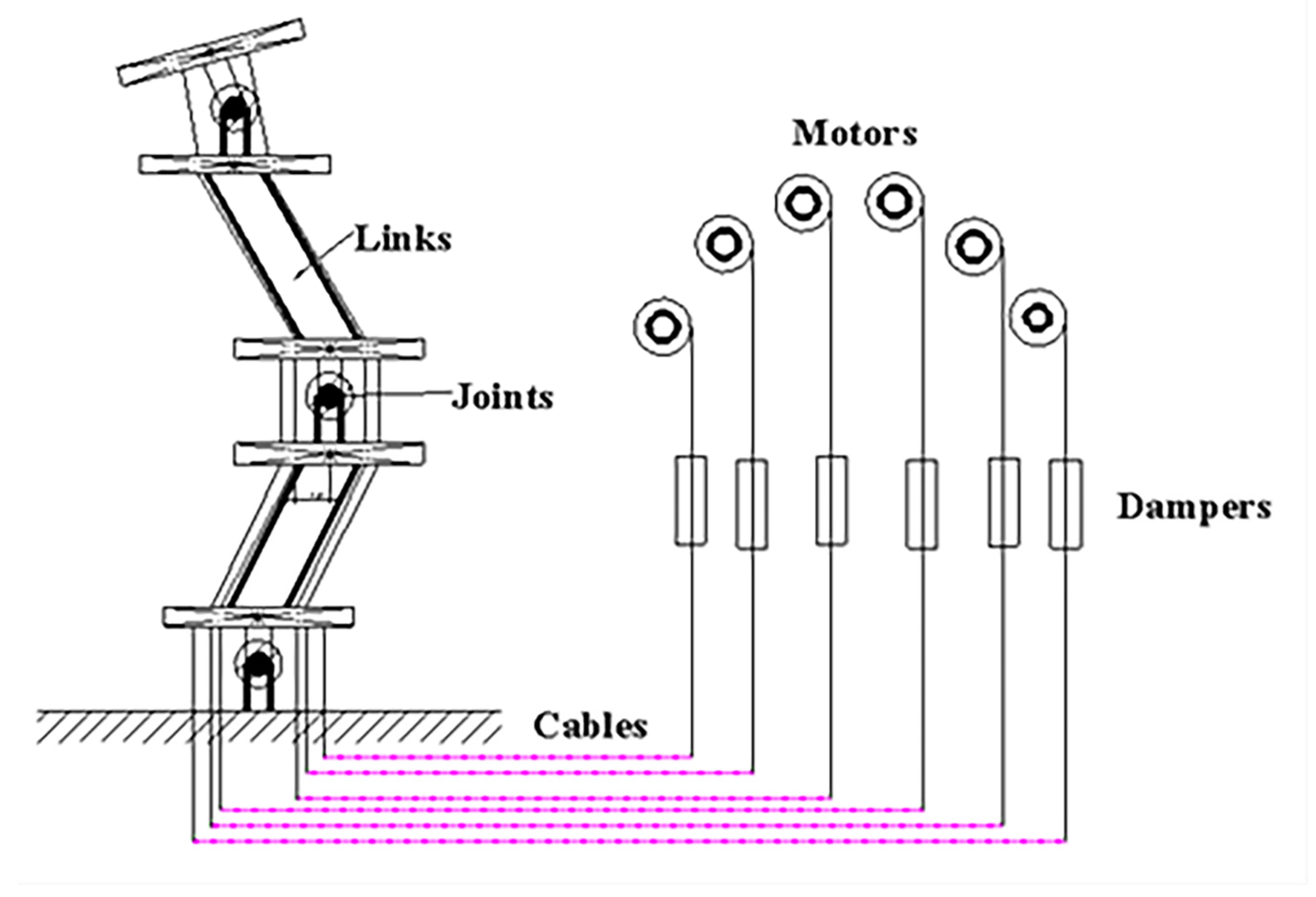

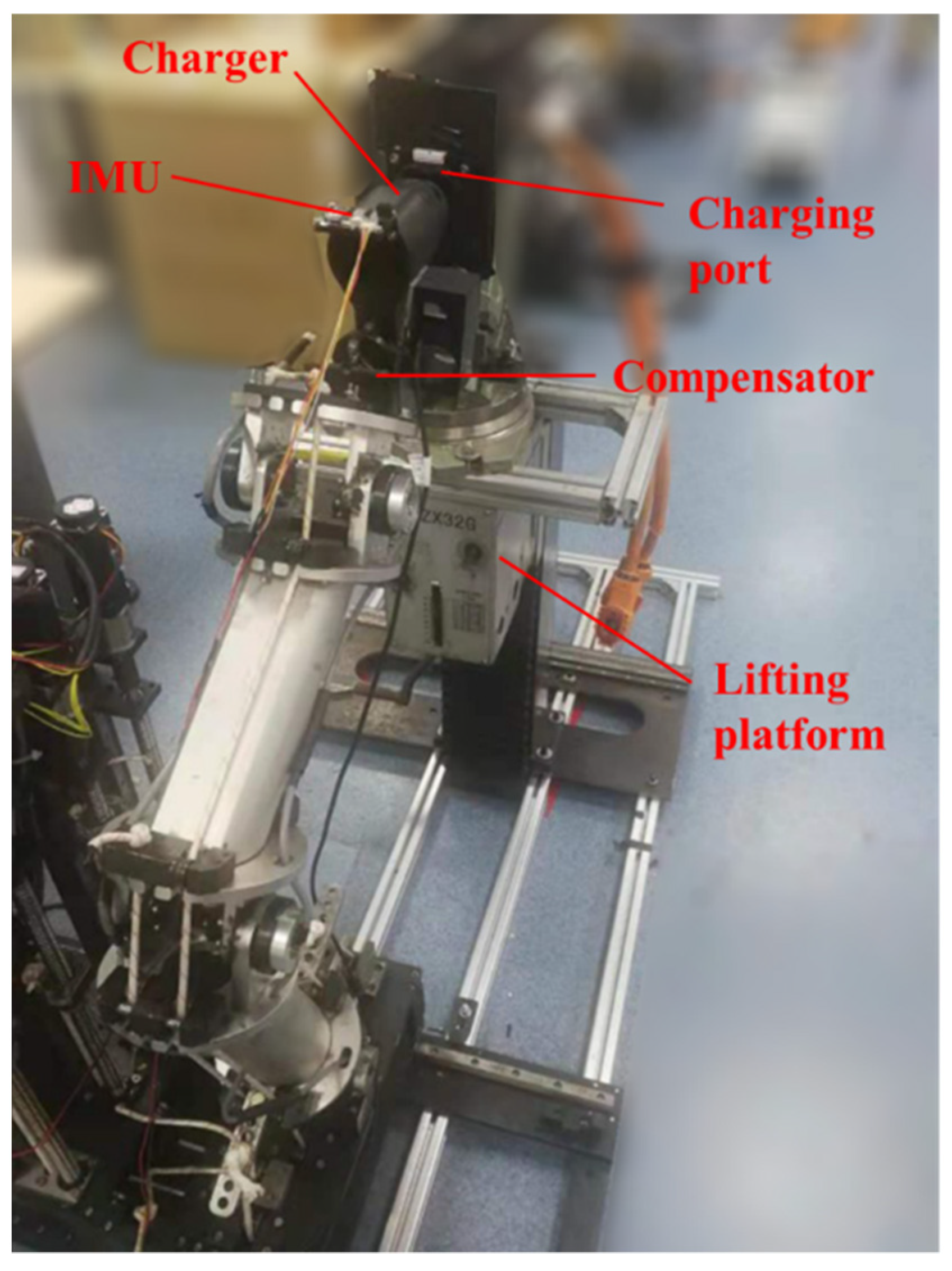

As shown in

Figure 10, in the experiment, all the data were collected using the IMU mounted on the top of the charger. The charger was connected with the cable-driven manipulator using the compensator. Due to the very small deformation of the charger after collision, the collected data mainly included the vibration information from the compensator and the cable-driven manipulator. The manipulator was controlled using the PI scheme designed in [

6], which includes two controllers controlling motors and cables, respectively. In the collision simulation experiment, the end effector of the manipulator moved in a straight line at a speed of 16.7 mm/s.

In terms of the quantity of data collection, in order to reduce the impact of repeated positioning errors of the manipulator on the results, in each group, each

normal point was collected 40 times. For the same reason, each

acceptable point and each

vulnerable point were collected 30 times, respectively. Meanwhile, due to the obvious characteristic differences between

contact and

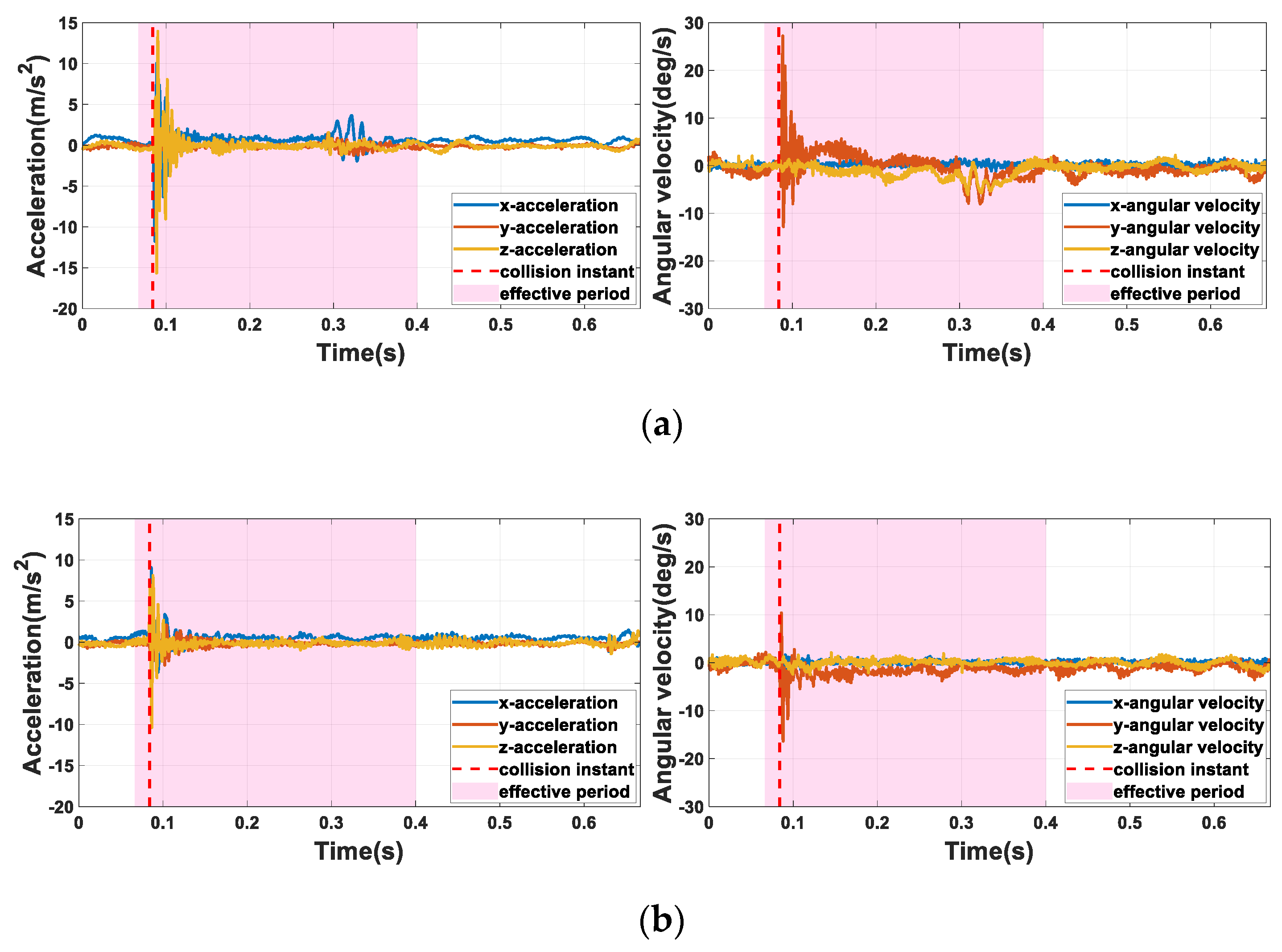

free, as shown in

Figure 6, not too many

free samples were needed. The ratio of

free and

contact samples was set as 1:2 in the experiment. The sample distribution in each group is listed in

Table 2. To explore the influence of different joint configurations on the results, one group was randomly selected as the testing data set, which is never used in a single training process. The remaining three groups were shuffled and divided into the training data set and validation data set in a ratio of 8:2.

To illustrate the effectiveness of the proposed DCNN–SVM algorithm, we compared the results with a long short-term memory (LSTM) model [

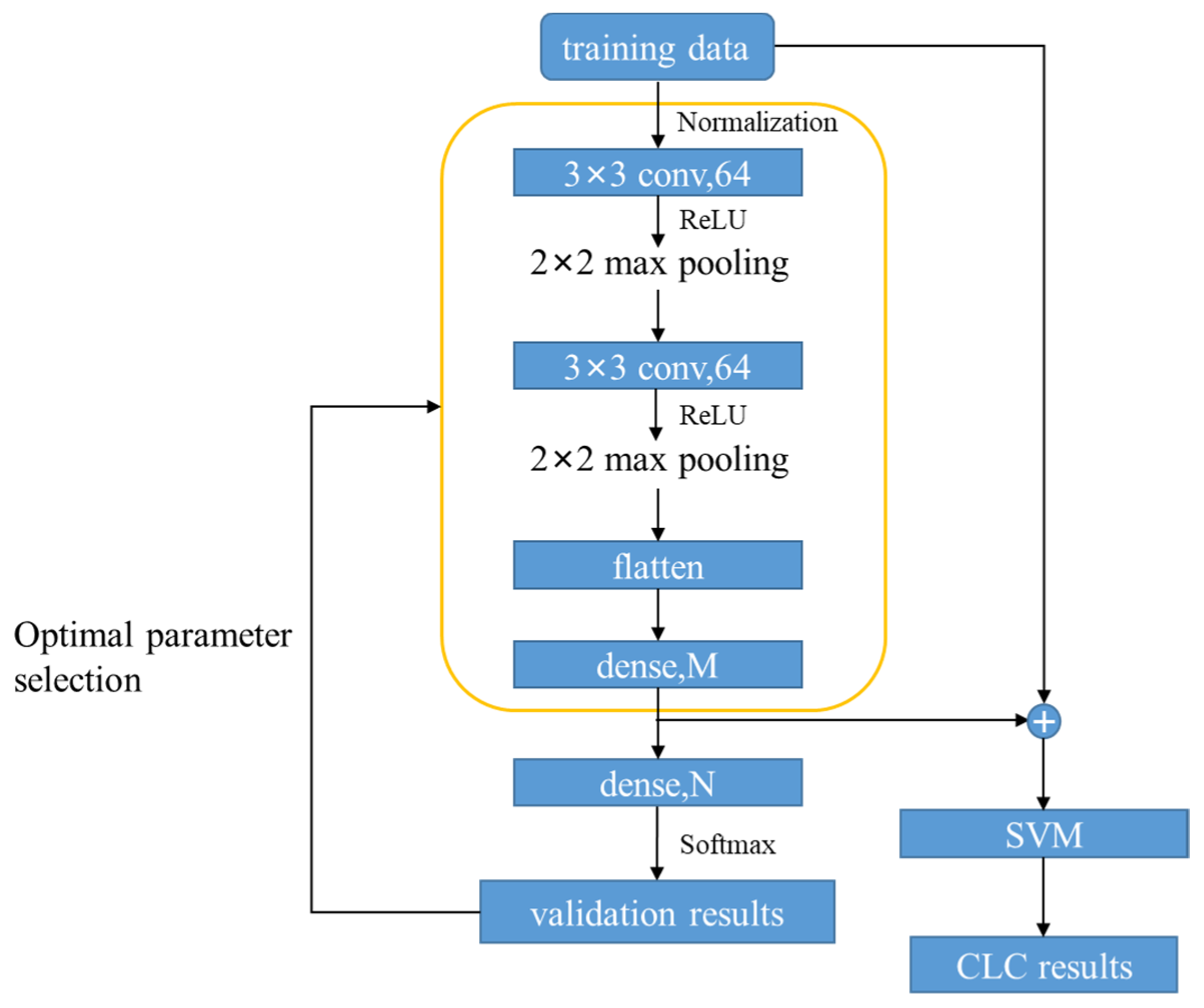

55] and the plain CNN in DCNN–SVM. These three methods were essentially automatic feature extraction methods, and the inputs were generated by normalizing the raw signals. For the DCNN–SVM, of which the structure is illustrated in

Figure 9, M was set as 1024, and N was set as equal to the total number of the classes. For maxpooling layers in DCNN–SVM, the stride was set as 2 and the padding method was set as “same”. For the SVM in DCNN–SVM, by grid search, the penalty coefficient was set as 6 and the kernel was set as “rbf”. The learning rate was set as 0.0001, and the optimizer of the extractor part was set as Adam. For the CNN model design, its parameters were set as consistent with those of the DCNN–SVM. Thus, we refer to the plain CNN as DCNN here. For the LSTM model design, the structure is composed of an LSTM layer, three fully connected layers and finally a softmax layer. The LSTM layer was set as the first layer. The number of the hidden units of the LSTM layer was set as 110. The LSTM layer converted the initial input into the high-dimensional output feature matrix. The output of the LSTM layer was flattened and then fed into three fully connected layers whose sizes were 5000-way, 500-way and N-way, respectively. N was equal to the number of the classes. The learning rate was set as 0.0001, and the optimizer was set as Adam. All these three models were trained and tested using the Tensorflow 2.0 library. Other than the above two comparison models, the results of our proposed model were also compared with SVM and k-nearest neighbors (kNN) models, which are both artificial feature extraction methods. The features selected in these two models are similar to those in [

17]. In more detail, the features are listed in

Table 3. In addition, using the grid search method, the penalty coefficient of the SVM here was set as 10. Using the same method, for kNN, the number of neighbors was set as 7, the leaf size was set as 1 and “distance” was chosen as weights. To test these two models, we used the machine learning library from scikit-learn. To clearly describe the hyper-parameters of the mentioned methods, relative settings are listed in

Table 4.

As mentioned in

Section 5.2, the computational complexity of the DCNN model can be expressed as follows:

On the other hand, the computational complexity of LSTM mainly depends on the number of weights per time step and the length of inputs. Given a number of weights

and the length of inputs

, the complexity of the LSTM layer can be expressed as

. Considering the fully connected layers, the complexity of the compared LSTM model can be expressed as

. Furthermore, given the number of training instances

and the dimensionality of training space

, the computational complexity of kNN can be expressed as

[

56].

In this experiment, we used three cross-validation to train and validate models. According to the accuracy of the validation results, we chose the best models in the DCNN–SVM, CNN and LSTM methods. For the SVM and the kNN models, we used the grid search method to obtain the optimal hyper-parameters. For each group, the process was repeated three times, and the results from each testing set were averaged. To explore the influence of on the prediction results, we also created several test sets with various values, which represented the segmented vibration signals with different proportions of . Here, we set 0.0667 s 0.3333 s and the interval was set as 0.0133 s.

6.2. Results and Discussion

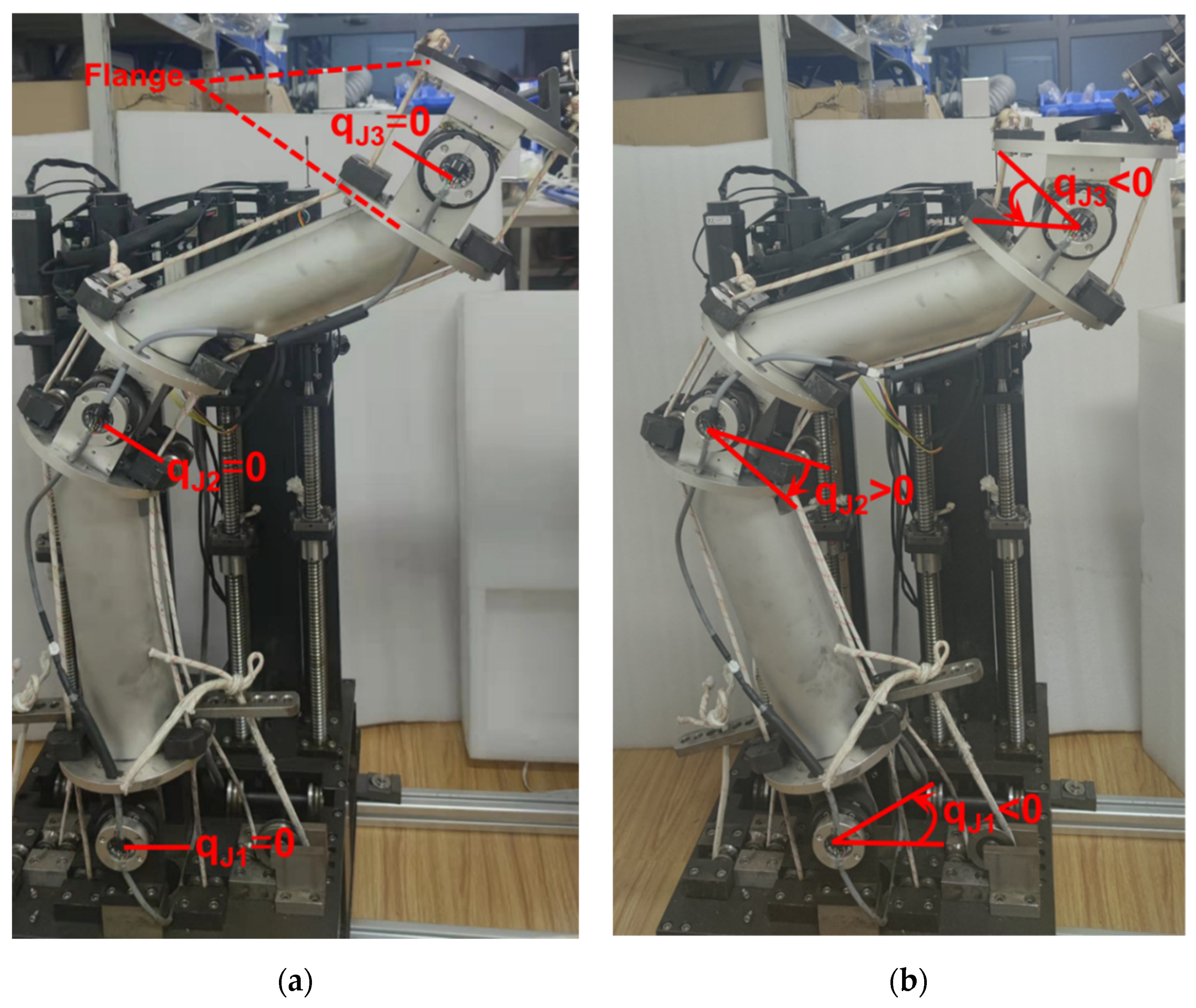

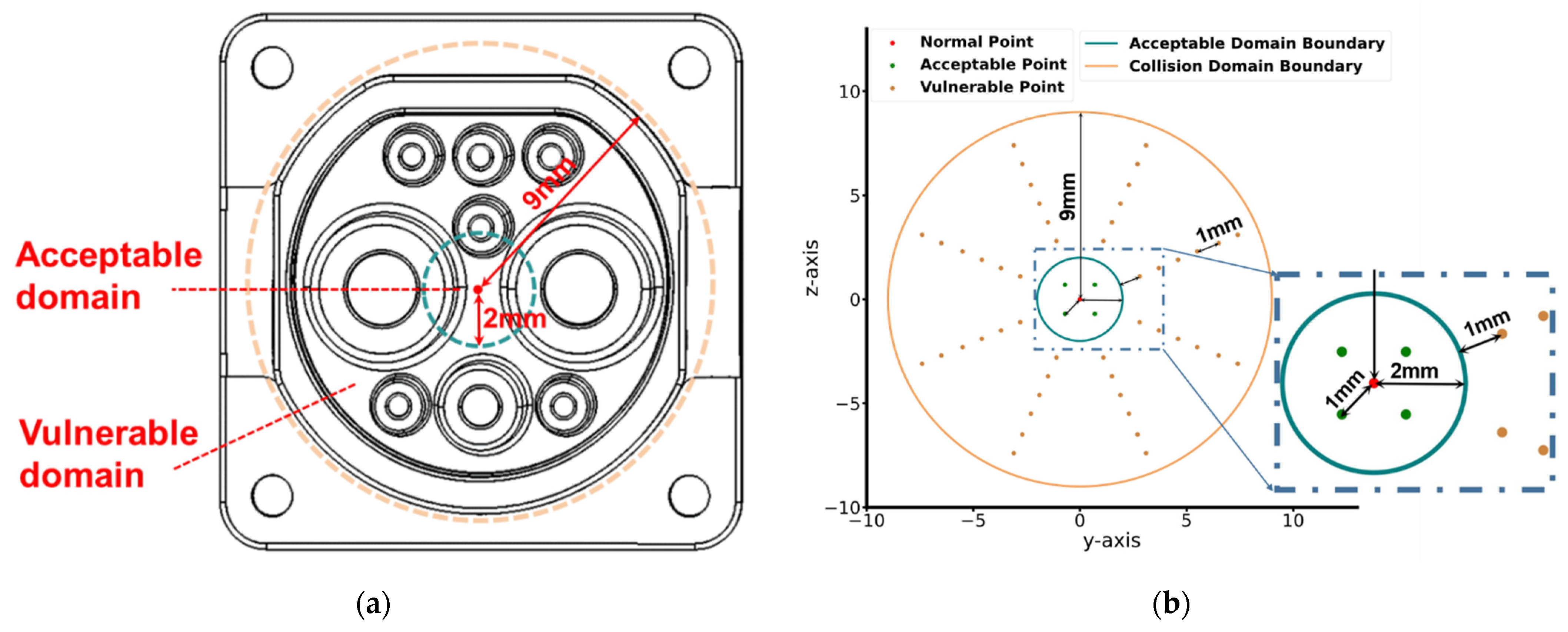

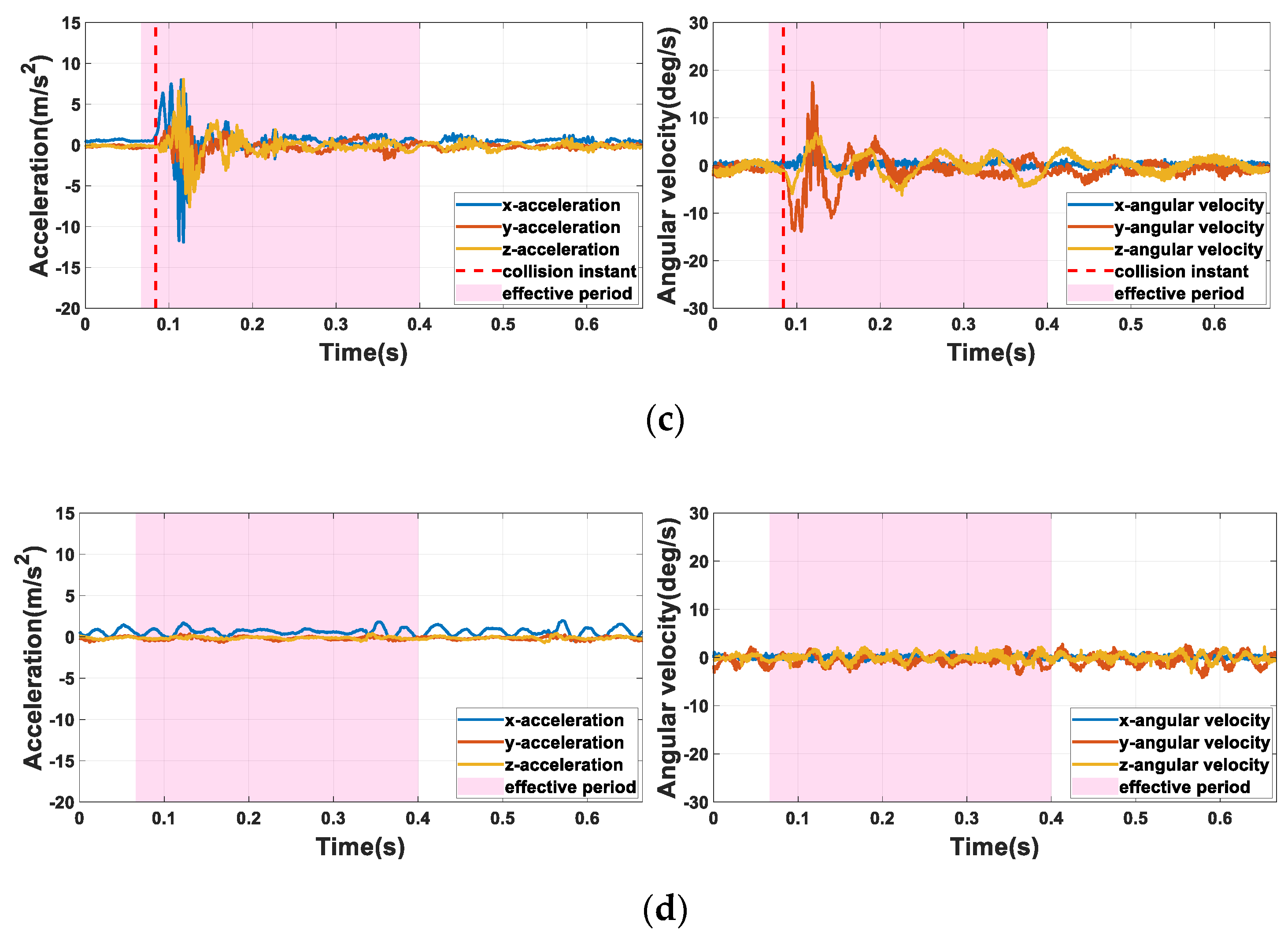

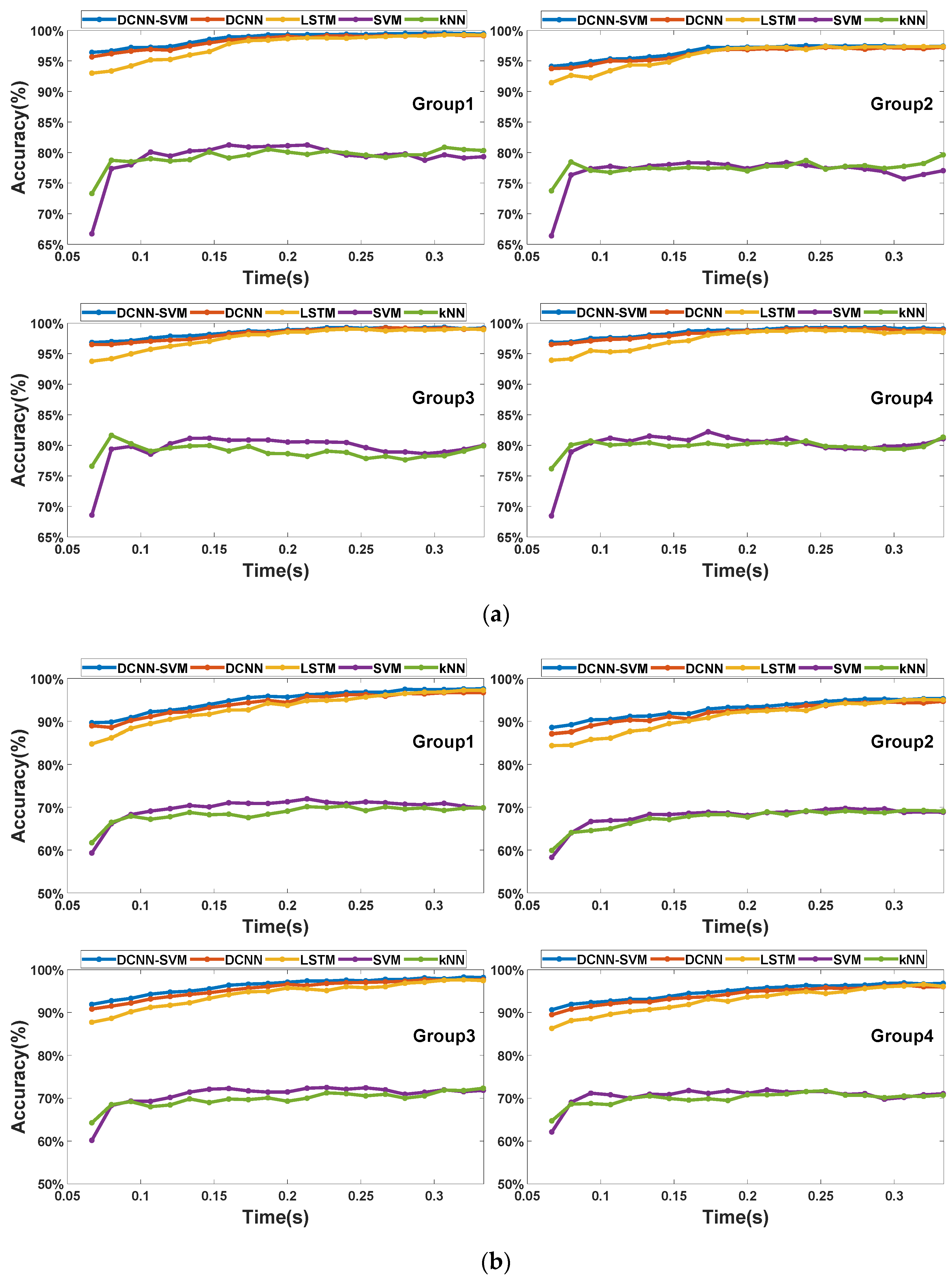

To illustrate, we define the situation shown in

Figure 7a and the situation shown in

Figure 7b as Case 1 and Case 2, respectively. The accuracy scores of the testing data sets with different

values are illustrated in

Figure 11. In both cases, the automatic feature extraction methods performed much better than the artificial feature extraction methods, including the SVM and the kNN methods. The maximum accuracy scores of the two artificial feature extraction methods were lower than 85% in Case 1 and lower than 80% in Case 2. In contrast, the minimum accuracy scores of the three feature extraction methods were higher than 90% in Case 1 and higher than 80% in Case 2. Among the three automatic feature extraction methods, the DCNN–SVM worked best with various

values. As shown in

Figure 11a, when

, the accuracy scores of the three automatic feature extraction methods increased rapidly with the increase in the

value. When

, the accuracy scores tended to be stable. As shown in

Figure 11b, when

, the accuracy scores of the three automatic feature extraction methods increased rapidly with the increase in

values. When

, the accuracy scores tended to be stable. In both cases, in the stage of rapid growth in accuracy, the LSTM model performed poorly compared with the DCNN and the DCNN–SVM models, while, in the stage of accuracy tending to be stable, the LSTM model showed a similar effect to the DCNN and the DCNN–SVM models. To some extent, this means that the feature extractor that does not pay too much attention to time features has a better effect when collision information is insufficient. The reason for the above results is that the vulnerable and acceptable domains were divided based on spatial location. In the actual collision process, the contact mode of the two domains in the early stage of the collision was highly similar, so the collision signals of the two domains were also extremely similar in a very short period after collision, as shown in

Figure 6. Therefore, it is difficult to extract sufficient effective features that can distinguish this similarity using the artificial feature extraction method, as corroborated by the results. In contrast, the features extracted by automatic feature extraction methods have higher dimensions, and therefore the possibility of extracting effective features is greater. However, it should be noted that at the early stage of collision, vibration signals have a high variation frequency, and sufficient time-related information may be not able to be obtained at the current sampling frequency. Thus, excessive attention to time-related features may make the model more sensitive to the specific features of some samples, resulting in a decrease in the generalization ability of the model. This is also related to the poorer performance of LSTM compared with the other two models when there is less collision information. Based on this point, using DCNN alone is also an alternative choice for this CLC problem.

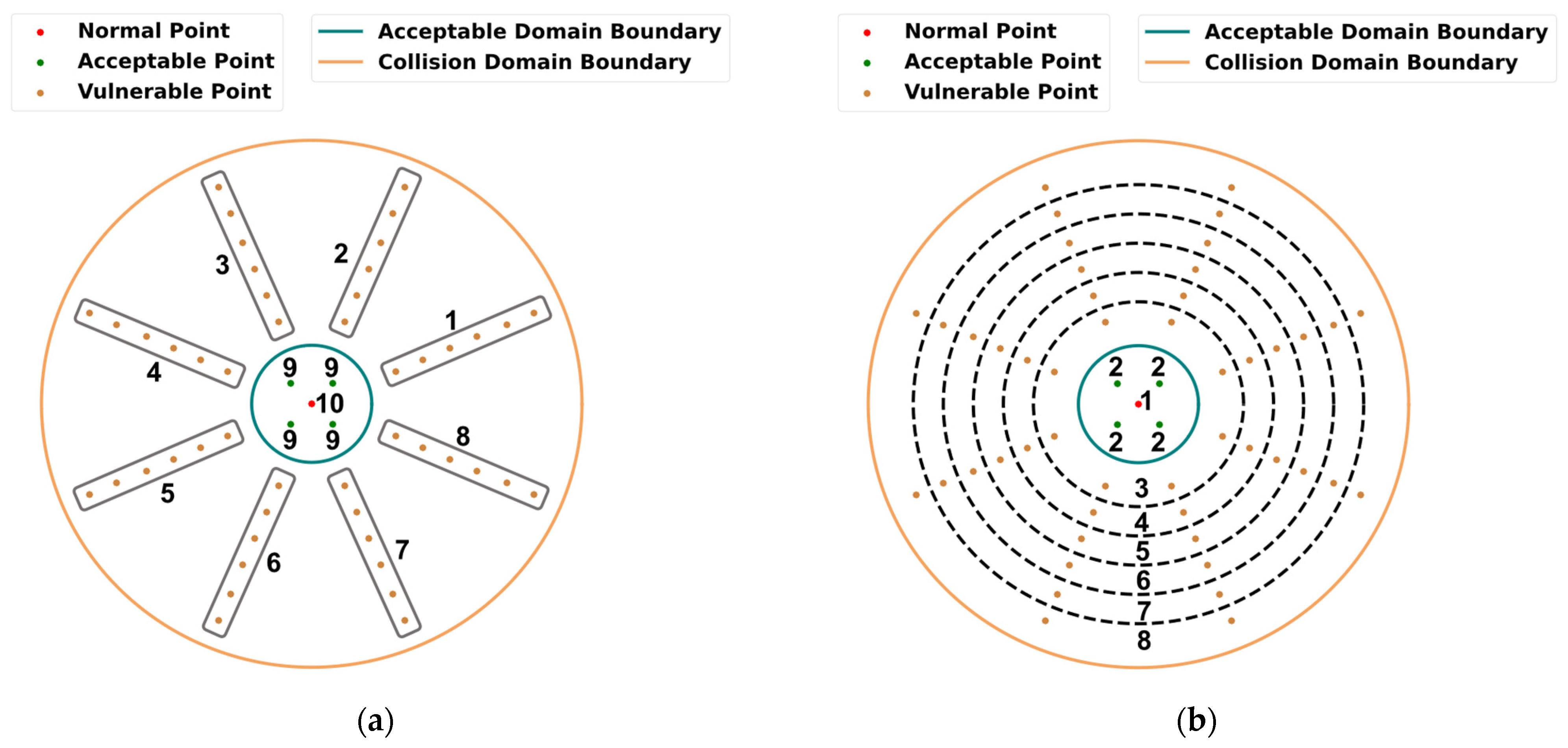

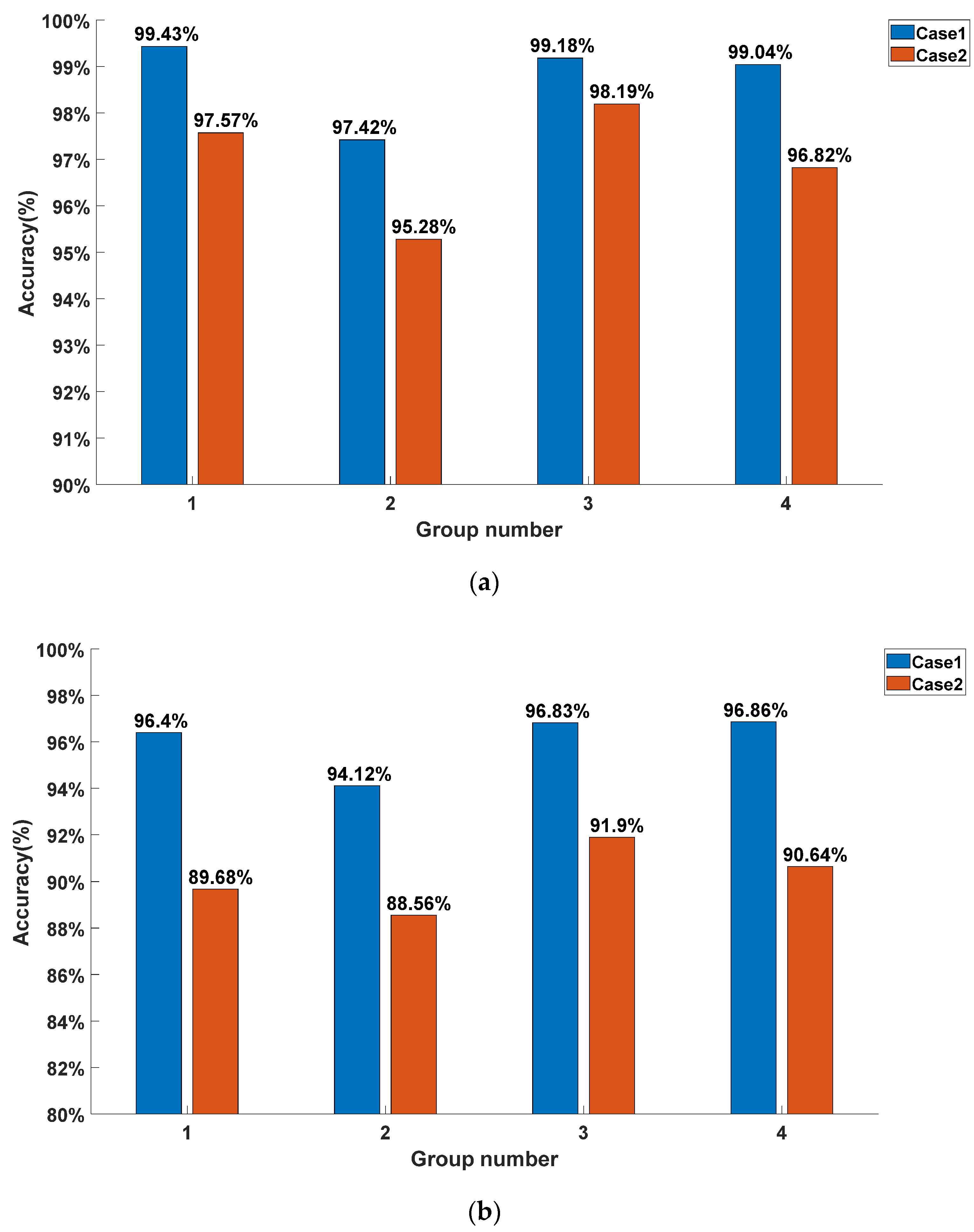

The accuracy scores in different groups achieved by the DCNN–SVM model are shown in

Figure 12. Here, we choose the situation in which

and

. As shown in

Figure 12a,b, there were significant differences in the accuracy of different groups. This means that the joint configuration has an impact on our proposed CLC method. Furthermore, calculating the standard deviation of the accuracy scores of different groups in both cases, we could deduce that when

, the standard deviations were 0.91% and 1.25%, respectively, in Cases 1 and 2, and when

, the standard deviations were 1.3% and 1.42%, respectively, in Cases 1 and 2. This indicates that our method is robust, to some extent, against the influence of different joint configurations. Moreover, the smaller the

was, the smaller the standard deviation was, which reveals that sufficient collision information reduces the influence of the joint configuration on the prediction results of the DCNN–SVM model. When

was the same, the standard deviations were also different in these two cases. This means that the robustness of the DCNN–SVM model on joint configurations varies in different CLC tasks. Furthermore, we observed that the model’s performance in Case 1 was better than that in Case 2. This may be because, when the collision occurred on the collision point in the same radial direction, the direction of the resultant torque of the elastic compensator was more similar. In contrast, the collision occurred on the collision point in the same circumferential direction, but could not contribute the same property to the collision information. From the results, the situation in which the model had a better CLC effect in the circumferential direction was Case 1, which is consistent with the actual physical situation.

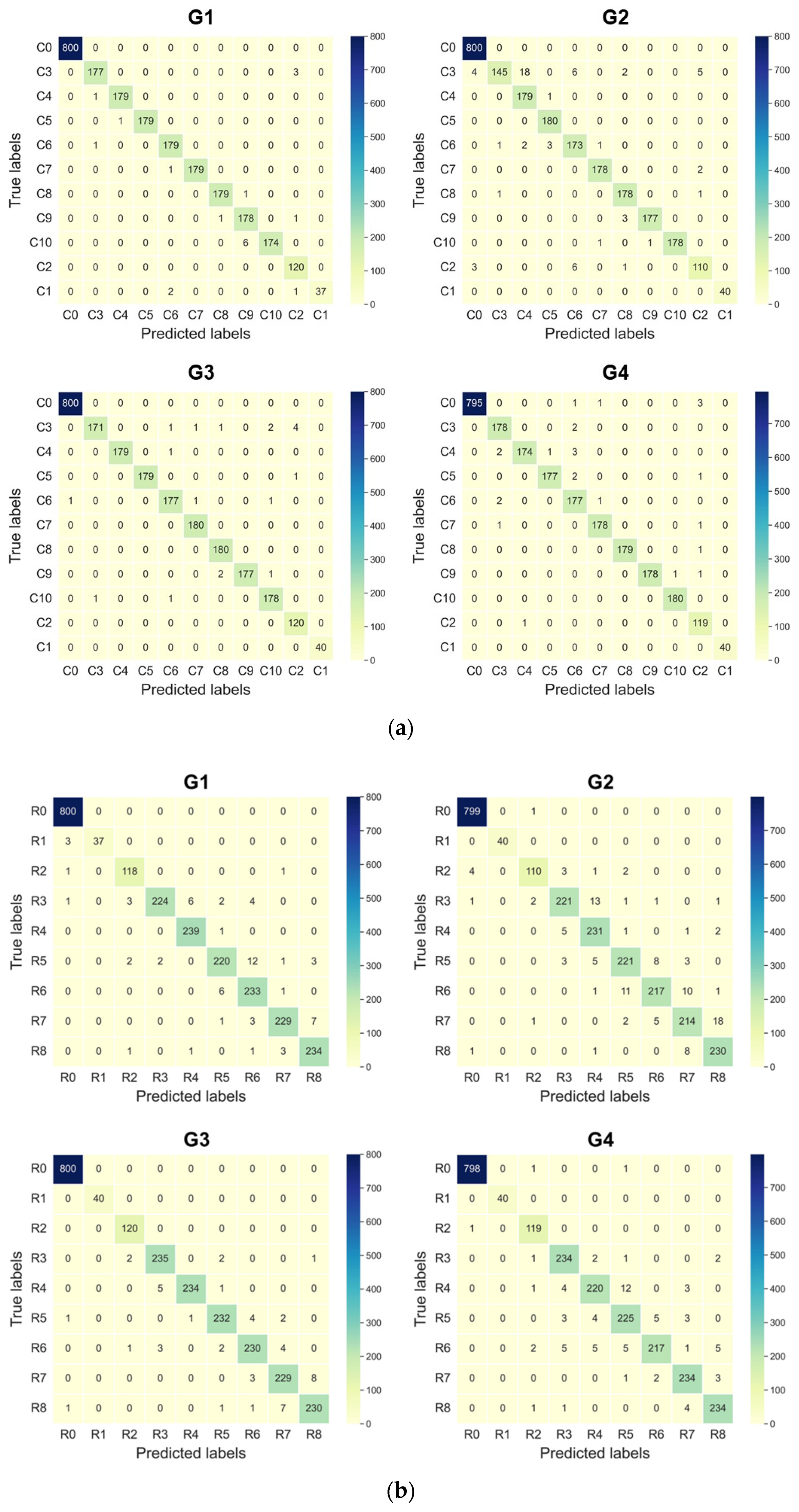

Hereinbefore, the prediction results of the collision localization and the collision classification have been discussed at the same time. Here, we conducted a more specific analysis of the collision classification and the collision localization, respectively. We chose the DCNN–SVM model with the best effect compared with other models as the analysis object. The results above were averaged, and in the process, three models needed to be trained to obtain each result. To illustrate, we chose one model from the three. The confusion matrices of the collision localization and the collision classification in Cases 1 and 2 are shown in

Figure 13a,b, respectively. In

Figure 13a, as illustrated in

Section 4, C1 and C2 represent the situation when contact occurs in the acceptable domain, C3-C10 represent the situation when collision occurs in the vulnerable domain, and C0 represents the situation when no contact occurs. In

Figure 13b, R1 and R2 represent the situation when contact occurs in the acceptable domain, R3-R8 represent the situation when collision occurs in the vulnerable domain, and R0 represents the situation when no contact occurs. The prediction precisions of the DCNN–SVM model for the three situations in both cases are listed in

Table 5. The precisions of

free and

vulnerable were higher than 99% in both cases. Low misjudgment rates of

free and

vulnerable can reduce the possibility of misstopping the manipulator. In contrast, the prediction precisions of

acceptable were lower, and the minimum precision was as low as 94.94%. From the confusion matrices in

Figure 13, we can also see that the mistake mainly occurred in mispredicting some

vulnerable instances as

acceptable instances. The reason for this result is that our

vulnerable and

acceptable domains were defined based on their geometry position, and the collision modes in these two situations were much more similar at the boundary of these two domains, in contrast to

free and

normal instances. Thus, the likelihood of misjudgment at the boundary was greater. This can also be seen from

Figure 13b, in which more R3 instances were wrongly judged as R2 instances than other instances in the rest of the vulnerable domain. This kind of mistake may cause serious damage to the manipulator. Additionally, how to improve the prediction precision of

acceptable should be the focus of our follow-up research. In collision localization, we neglected the

free instances. The prediction precision in Cases 1 and 2 is, respectively, listed in

Table 6 and

Table 7. In Case 1, the mean precision of each group was higher than or equal to 96.82%, and in Case 2, the mean precision of each group was higher than or equal to 94.12%. This means that, to some extent, our proposed method can effectively deal with the collision localization problems that occur on the end effector. Note that in Case 1, the mean precision of each group was higher than that of the same group in Case 2. This means that the DCNN–SVM model performed better in collision localization along the circumferential direction than along the radial direction. Additionally, this result is consistent with the above overall analysis of CLC.

In order to explore the influence of the kernel size of the convolutional layer on our proposed method, we selected DCNN–SVM with three different convolution kernels to conduct CLC for collision signals with

and

. Our experiments used Windows on the following system: Processor: Intel (R) Core (TM) i7-10700K CPU @ 3.80 GHz, Memory: 31.9 GiB, GPU: NVIDIA GeForce RTX 3080. The results in different cases are listed in

Table 8 and

Table 9, respectively. By comparing the accuracy of CLC with different models, we can see that the model with the

convolution kernel was slightly better than that with the

convolution kernel, while it was significantly better than the model with the

convolution kernel. In terms of run time, to predict a single sample, the time consumed by models with different convolution kernels was similar. The above results indicate that an increase in convolution kernel size is helpful to improve the performance of the model. This may be because the convolution kernel with a large size can fuse vibration signals from more dimensions together in a single sampling, which is conducive to the extraction of more effective features.