1. Introduction

Information on the spatial distribution of evapotranspiration (ET) is becoming increasingly important in agriculture and in water management with the higher frequency of droughts in many areas of the world [

1]. Satellite-based products/applications for ET mapping [

2] are already commonly available for this purpose. However, data products such as MODIS Evapotranspiration (500 m spatial resolution) or Landsat Provisional Actual Evapotranspiration (30 m spatial resolution) or Sen-ET produced ET maps by applying thermal sharpening procedure (20 m spatial resolution [

2]) have still too large pixel sizes regarding spatial accuracy for studies conducted on, e.g., evaluating small scale field plot experiments or correlating the modelled latent heat flux/evapotranspiration data to plant ecophysiological data (for example leaf/stand gas exchange or leaf area index) possibly measured on the ground. Other factors such as cloudiness or time of satellite overpass may also limit the periods when satellite imagery can be used. The effect of pixel size on ET model performance was also shown to be significant when modelling systems consisting of different plant functional types [

3], ET was accurately simulated when the pixel scale was suitably small (<5 m) to robustly discriminate between grass and tree pixels, and model uncertainty drastically increased at a spatial resolution greater than 10 m [

3]. In Nassar et al. [

4] model-measurement differences were also shown to be increasing in a vineyard (e.g., no occurrence of different plant functional types) when decreasing the spatial resolution. Moreover, Kustas et al. [

5] pointed out that when there is a significant discontinuity in surface conditions, the subpixel variability in energy fluxes will likely cause unacceptable errors in the ET prediction. These findings support the need for high spatial resolution ET mapping (e.g., from drones) to enhance the accuracy. The trade-offs between the expectations towards efficient resource use, high spatial resolution, and accuracy of thermal images led to consider solutions where main parts (basically the thermal and the RGB/multispectral cameras) can be flexibly and independently used in UAV based systems.

With the development of UAVs and imaging sensors attached to them [

6,

7] it became possible to collect information from suitably large areas and thereby to provide valuable field-scale information also for precision agriculture [

8] applications. Along with this trend, there was an increased interest in UAV based ET estimation in the scientific community. Niu et al., in [

9], summarized the studies published on this topic in the last couple of years and found that the UAV based remote sensing can be a more flexible solution in precision agriculture applications then satellite based. UAV based ET mapping was applied for various vegetation types, from grasslands through barley fields to olive orchards and vineyards (

Table 1).

Table 1 is based on Niu et al.’s [

9] summary, but it is complemented with a few more recent studies and the ground resolution of the different cameras. It must be noted that the type of UAVs and imaging sensors vary in a wide range in the early studies, while in the most recent manuscripts the DJI drones and MicaSense cameras are dominating.

Applications using two or more different proximal sensing systems on one UAV platform on the same occasion are frequent and the expectations toward such integrated systems—considering both the higher number of applications in a single flight and the geolocation accuracy—are increasing. Proximal sensing of temperature in parallel with multispectral RGB sensing is an example of that, but there are other ones including (RGB + radar, multispectral + non-imaging spectrometers). While the popularity of the off-the-shelf drones is understandably high (no need to bother with solutions/applications often difficult and time-consuming to develop), the price of these systems and flexibility/quality expectations against the applications may require investing some work for integrating different systems into one functional unit. High spatial resolution (higher than achievable by off-the-shelf solutions) of thermal cameras can be such a reason for using a thermal camera together with an RGB camera to apply the imagery as input to an energy balance model (e.g., the pyTSEB model, [

13,

14]) to create evapotranspiration maps. Emerging needs for better spatial resolution of thermal cameras are also shown for example by the observable trends of the industry to replace existing solutions of multispectral/thermal applications with ones of higher thermal resolution. Higher spatial (geolocation) accuracy is another issue when the goal is plant phenotyping in a small-scale field plot experiment setup. In such cases, commonly achievable 1–3 m accuracy as provided by onboard GNSS (Global Navigation Satellite Systems) rovers is not satisfactory. Use of ground control points (GCP) while overcomes this problem, is a tedious and time-consuming procedure, takes time from the short periods available for image acquisitions when other constraints (to have steady light conditions during flight, the time necessary for ground control measurements, the time necessary to put out and collect GCPs) should also be considered and met. Therefore, the use of RTK/PPK systems is necessary though the costs of the two approaches are highly different (RTK in most cases is more complex and therefore a more expensive solution).

The base of solutions flexible enough to incorporate a thermal camera or a chosen GNSS rover is frequently one of the Raspberry Pi microcomputers (RPi) models [

22]. RPis are widely used in different agricultural applications [

23,

24] where they are preferred for the availability of many different interfaces/ports (GPIO, UART, etc.) provided and for accessible software development kits (SDK) that can be installed on this platform. Integrated applications of component devices can be developed by (slightly) modifying the provided SDKs. It is to be noted here that, SDKs have usually passed a quality assurance process and many of the issues requiring a long time by an experienced engineer are already solved in them so that, limited programming experience may satisfy the needs of the development of a custom integrated application.

The development of such an integrated application was aimed to produce a UAV-based thermal and RGB image acquiring system that collects high spatial resolution thermal data while also enabling the user to high spatial location accuracy by applying PPK processing. The resulting imagery served as part of the input to model evapotranspiration by using the TSEB (pyTSEB) energy balance model [

15]. Modelled evapotranspiration/latent heat values from the eddy covariance (EC) footprint area were compared to the measured fluxes. Validation was carried out by EC data and modelled maps of ET using data from several flights. The application can be developed further by, e.g., including further miniature sensors (a spectrometer, or temperature/humidity/other scalars sensor) to be controlled from the same RPi or using possibilities provided by the onboard SDK. Knowledge of the spatial distribution of transpiration and evaporation is expected to have importance in evaluating small scale field plot experiments in field-scale plant phenotyping trials and generally in applications where subpixel heterogeneity may be expected to occur. Conforming to expectations towards producing ET maps of suitably large spatial coverage, resolution and accuracy at reasonable efforts may require considering use of independent parts that can flexibly used in a common UAV based system.

This study shows an example of conforming to the complex constraints of acquiring high spatial resolution thermal imagery of reasonably high spatial resolution and accuracy, and use this data in a spatial modelling context to produce evapotranspiration maps. These maps are good candidates to be used in evaluation of field scale trials on plant production, irrigation, plant phenotyping trials as ET is frequently dominated by transpiration, a single variable that is mechanistically linked to gross primary production through stomatal action. The performance of the procedure was evaluated by validation of the produced ET models against ET as measured by eddy covariance technique on a diverse dry grassland and on an arable land.

2. Materials and Methods

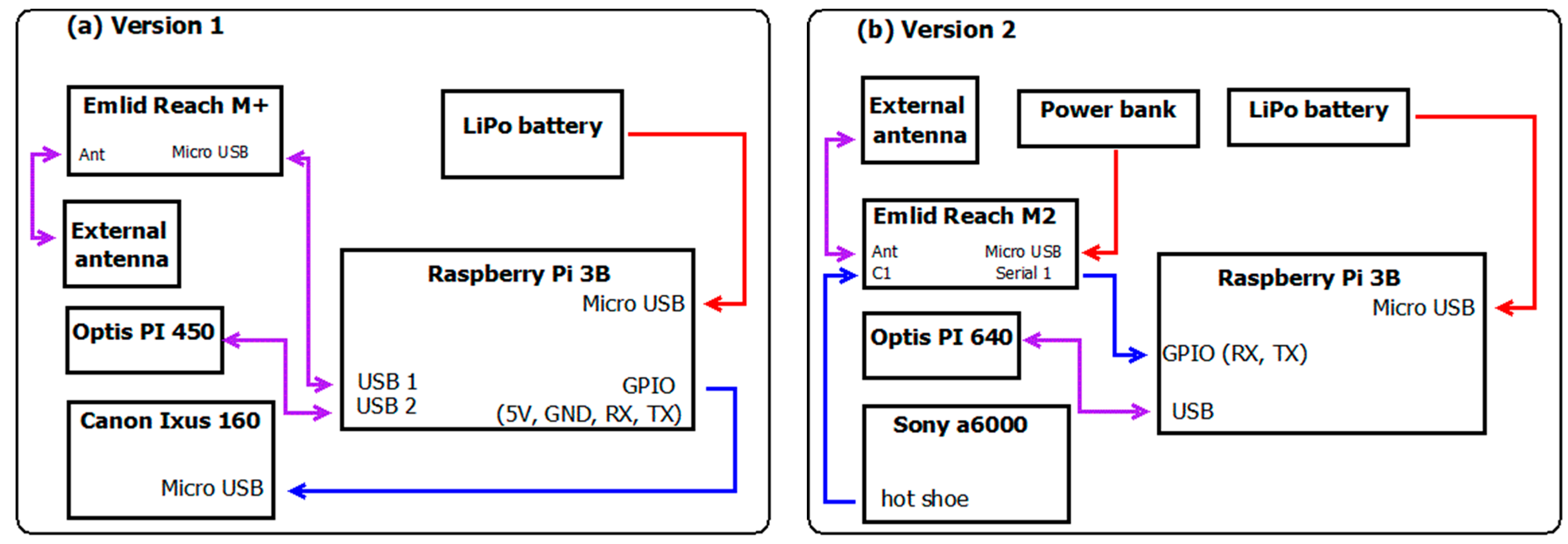

We aimed to set up a system onto the board of a UAV that can take RGB images and thermal snapshots while registering the geographical coordinates of the camera events and subsequently produce georeferenced RGB and thermal mosaics from the gained dataset to be used as inputs of a model estimating evapotranspiration. Our setup’s hardware consists of a commercially available digital camera (Canon Ixus 160/Sony a6000) a thermal camera (OPTRIS PI 400/640, Optris GmbH, Berlin, Germany), a GNSS receiver chip (M+/M2, EMLID Ltd., Hong Kong, China), while the main, controlling and data storing device, is a Raspberry PI 3B single-board microcomputer (Raspberry Ltd., Cambridge, UK). The control of the whole system was based on The Optris Software Development Kit (Evocortex GmbH, Nürnberg, Germany) installed on the RPi.

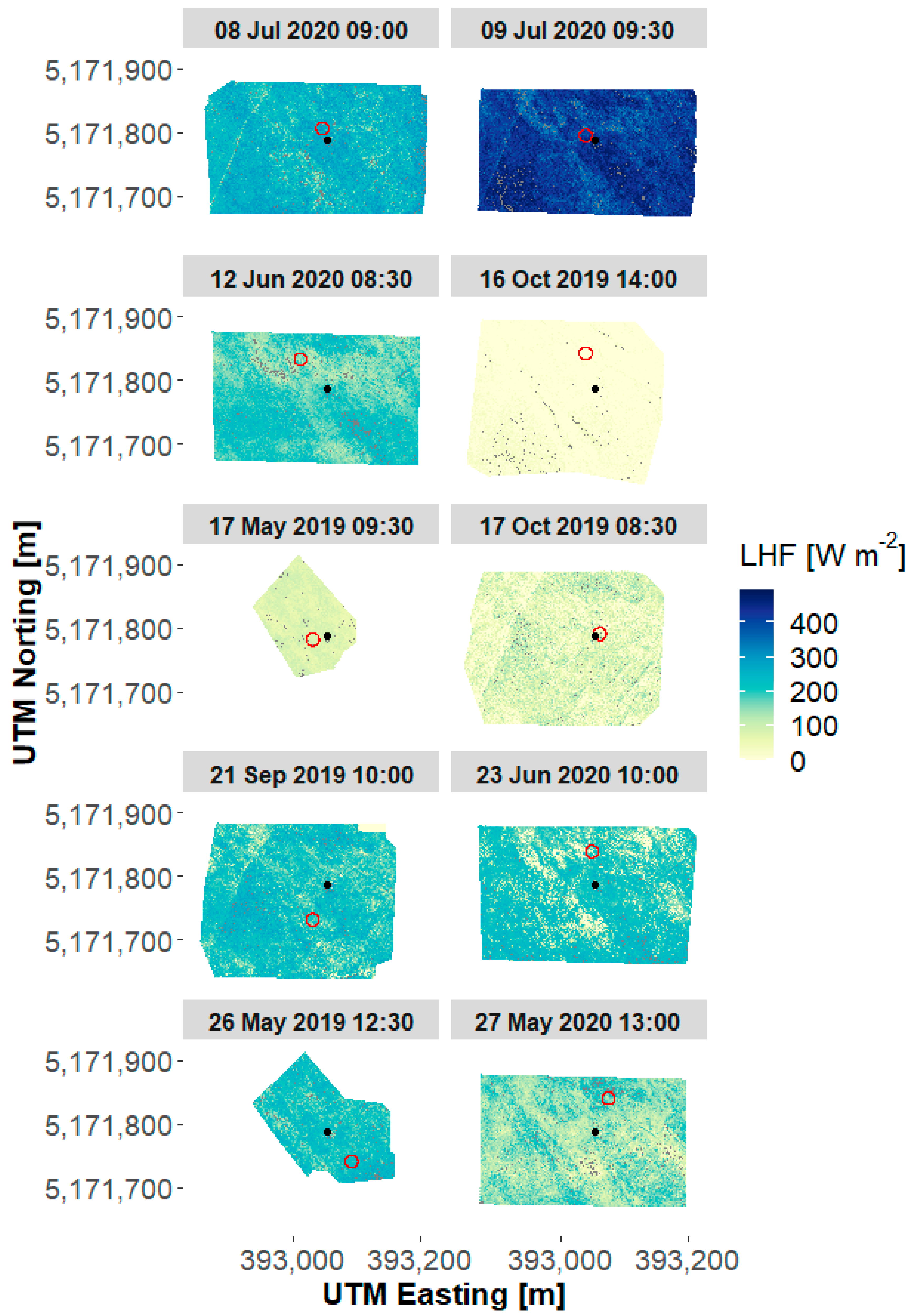

2.1. UAV Setup

The multicopter used for surveys is a DJI Matrice 600 Pro hexacopter equipped with a 2D carbon frame gimbal providing nadir view positions for the thermal and RGB cameras installed on the gimbal. The cameras have been fixed by using their ordinary main fixing screws (

Figure 1). Plastic structures were designed, and 3D printed to hold the gimbal and a plastic sheet as a platform (

Figure 1) for carrying the Raspberry Pi 3B, the M+/M2 GNSS (EMLID) receiver, the LiPo batteries (11.4 V, 3S) to supply current for the devices. All the cameras/devices (including the gimbal) obtained electric current supply independently from the drone’s power supply.

2.2. RGB and Thermal Cameras

In the initial setup, a Canon Ixus 160 digital camera was chosen considering its lightweight and high resolution (20 Mpix) and because of the possibility to be triggered through its mini-USB port. To establish the trigger a homemade printed circuit (PCB) had to be prepared (see details of the triggering later). After the first season’s flights, the Canon camera was replaced by a Sony a6000 (23.4 MP). On one hand, the Sony a6000 is a superior camera as compared to the Canon Ixus 160 (

Table 2), on the other a direct connection between the camera and the GNSS receiver could have been established through after the hot shoe cable becoming available on the market. The direct connection provides better spatial accuracy when using PPK (see sections on georeferencing and software control). Thermal images were taken by an Optris PI 400 thermal camera (replaced by an Optris PI 640 after the first year), with an optical resolution of 382 × 288 pixels (640 × 480) and spectral range of 8–14 µm. The camera connects via its USB port to the Raspberry Pi 3B.

2.3. Software Development for Control of the Devices and Data Storage

The IRImager Direct Software Development Kit (SDK, Copyright (c) 2016–2017, Optris GmbH/Evocortex GmbH, All rights reserved.) is published in C++ language. The SDK provides the users with the possibility to control (select operation mode, acquire image features, trigger etc.) the camera and save pictures from the data stream. The SDK was installed on the RPi running a Linux (Ubuntu Mate 16.04) operating system according to the instructions on the home page of the SDK (

http://documentation.evocortex.com/libirimager2/html/index.html accessed on 25 August 2017).

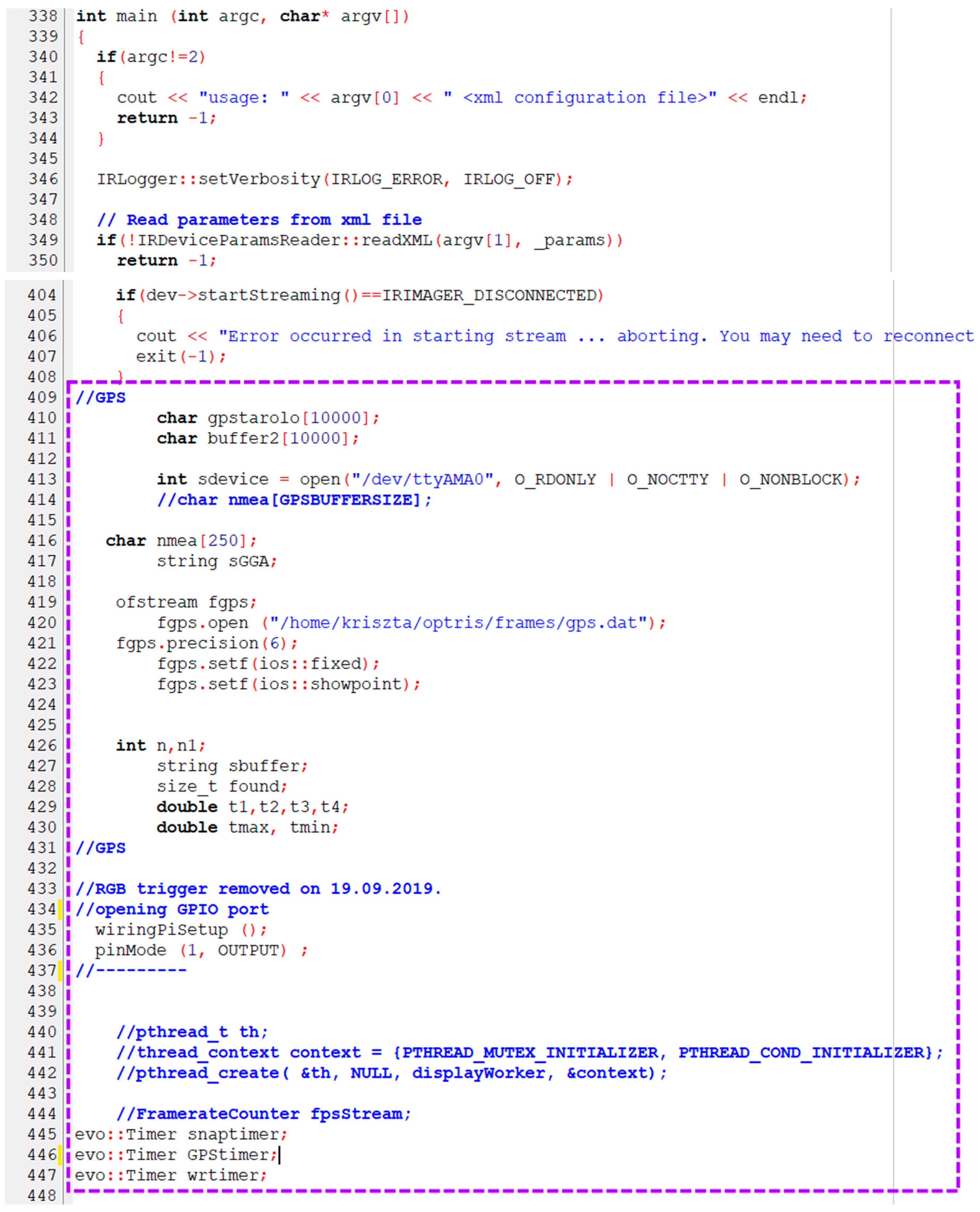

An example script (

irimagerShow.cpp) provided as part of the SDK was the base of our modified script. The main parts of the original script were kept, however parts of real-time data visualization were removed. The retained parts of the script performed the following tasks: establishing the link between the thermal camera and the RPi, initializing the operation of the thermal camera, performing calibration during operation and error handling. Modification of the code includes establishing RS232 communication with the GNSS receiver (

Appendix A Figure A2), obtaining and storing NMEA sentences, taking a thermal snapshot from the stream at regular intervals, providing a trigger signal to the RGB camera, and writing necessary (GPS and temperature) information into a .txt file (

Appendix A Figure A3). We have also put in some code lines to receive notifications if the communication between the RPi and the GNSS receiver was proper and if the M+/M2 was obtaining a proper quality (fixed) signal.

Control of the OPTRIS Thermal Imager and RGB Camera

By default, the output of the Optis PI400/640 thermal camera is a video stream from which snapshots (images/frames) can be saved by the

cbSnapshot() function of the SDK. The

cbSnapshot() function was complemented with further SDK functions (

_iBuilder.getIsothermalMin();

_iBuilder.getIsothermalMax()) to obtain the minimum and maximum temperatures for the actual frames, respectively (

Appendix A Figure A1). These data were required during the rescaling process (described in

Section 2.6.2) before mosaicking. Saving the thermal images as .ppm (Portable Pixmap Format) files to the RPi’s storage is also controlled by the

cbSnapshot () function.

Initialization of the serial communication with the GNSS receiver, opening of the. txt file storing GPS and temperature information was also added to the script (

Appendix A Figure A2). The GPIO 14 port triggering the digital camera was configured as output by the

wiringPiSetup() and

pinMode() commands of the WiringPi library (

http://wiringpi.com accessed on 29 November 2017).

A conditional (“while”) loop (called in every 1900 ms) was inserted into the inner part of the script (

Appendix A Figure A3), with all commands necessary to control the parts of the system and save collected images and information included in this loop.

The first of these commands was the one calling the cbSnapshot() function initiating the retrieval of the thermal frames.

After the cbSnaphop() function was completed, RPi GPIO 14 (UART TX) was set to high for 100 ms by the digitalWrite() command of the WiringPi library. This command triggered the Canon Ixus 160 camera via the home-made PCB connected to GND, 5V, GPIO14 and 15 ports of the RPi.

Afterwards, the serial port was polled for GPS data availability. The serial number of the actual snapshot, the corresponding maximum and minimum temperature and the GPS data were saved to a text file.

The camera controlling script slightly changed when the Canon camera was replaced with a Sony A6000 in the second half of the first study year. The Sony A6000 camera was time interval triggered from the menu and was equipped with a hot shoe connector triggering a time stamp store on the Emlid Reach GNSS receiver (

Figure 2). Using this setup meant to lose common control (triggering and taking a frame, respectively) of the thermal and the RGB camera from the same code. Geolocation accuracy of the RGB image, however, improved by using the standardized procedure applied on (hot shoe-triggered) timestamps stored in a text file on the M2 GNSS receiver (

https://emlid.com accessed on 19 March 2020).

2.4. Equipment for Georeferencing

For precise georeferencing GPS data obtained from the autopilot of the UAV is usually not enough as the accuracy of these data is typically in the order of a couple of meters. A higher (even centimeter) level of geolocation accuracy can be achieved by employing Real-Time or Post-Processed Kinematics (RTK or PPK) methods. Both methods require the operation of a base station collecting raw GNSS information or data from an NTRIP server in addition to the rover’s (on the UAV platform) GNSS data. The RTK technique requires a constant good quality airlink connection between the rover and the base. However, in the case of the PPK method the constant connection is not needed and correction of the GNSS data collected during the flight is carried out by post-processing. We have used the PPK method in this study. Raw GNSS data were collected by a STONEX S8 GNSS receiver used as a base station during the flights. The location of the base station was measured prior to the flight with high accuracy by averaging coordinates collected during two minutes. During this measurement, an NTRIP connection to a nearby server supplying the RTCM3 correction signal was used. The base station was disconnected from the NTRIP server afterwards and was recording the GNSS data during the flight for PPK processing. Some details of the applied PPK process are described in

Section 2.6.1.

An Emlid Reach M+ chip was used as the rover GNSS receiver onboard the UAV. The rover (the M+ chip) was set up by a mobile phone EMLID application to save the raw GNSS data at 5 Hz while streaming NMEA data through a USB connection to the Raspberry PI 3B. The M+ chip was replaced by an Emlid Reach M2 for faster start up and more stable operation. With M+ it might take to 20 min to have a fix signal with M2 it took 1–2 min and signal quality also improved significantly, after the exchange practically all the path was of Q1 quality (green on the application map in the RTKpost application). The Emlid M+ GNSS rover was receiving power supply from the RPi whereas the Emlid M2 from a separate USB power bank because the M2 requires larger power than available from the RPi USB. The data from M2 was sent using the separate serial connection between M2 and one of the RPI’s GPIO ports, while the micro USB port was used both for power supply and communication in the case of the M+. Setup of the GNSS receivers (GNSS networks used, recording frequency, storage, etc.) was carried out by using the mobile phone application provided by the supplier. The raw GNSS data of the rovers and later the stored coordinates of trigger events (NMEA sentences) were post-processed (PPK processing as described in the

Section 2.6).

Ground Control Points (GCP) were installed and their locations were measured with high accuracy using the Stonex S8 GNSS receiver set up as a rover in RTK mode at both the cropland and the grassland sites. The GCPs were 16x16 cm wooden squares coated with thick aluminum foil so that they could be detected on the thermal images as well. The wooden squares were fixed atop poles of height providing visibility, e.g., 40 cm height on the grassland and 90 cm height on the cropland.

2.5. Study Sites

UAV based image acquisition campaigns were conducted in 2019 and 2020 on two sites (

Table 3). One of them is a dry permanent grassland used as pasture in the Kiskunság National Park (19.6° N, 46.69° E, 104 m, Bugac, Hungary) the other is cropland (19.53° N, 47.66° E) near Kartal in the northern part of Hungary. The study sites are equipped with eddy covariance (EC) towers measuring the turbulent exchange between the vegetation and atmosphere. The measured turbulent fluxes include the sensible and the latent heat fluxes, used during the ET model validation process in the present study. The EC station was set up in 2002 at the grassland site and in 2017 at the cropland site.

The eddy covariance system consists of a CSAT3B Sonic Anemometer (Campbell Scientific Ltd., Logan, UT, USA) and an LI-7500 open path CO

2/H

2O infrared gas analyzer (LiCor Environmental, Lincoln, NE, USA) at both sites. Net radiation is measured by an NR Lite net radiometer (Campbell Scientific Ltd., Logan, UT, USA). A more detailed site description for the grassland and the setup of the eddy covariance systems valid at both sites have already been reported in [

25,

26]. The cropland site is on a productive soil of high clay content with millet and wheat sown in the two study years. The minimum fetch is about 100 m at this site, so the main devices of the EC system were installed at a variable height of 1.7–3.5 m above the ground.

2.6. Data Processing

2.6.1. GNSS Data Processing

Raw base data saved by the STONEX S8 GNSS receiver was downloaded by the STONEX RTK Assistant (ver 1.62) application in RINEX format. Rover data from the Emlid Reach M+/M2 chips was downloaded by the ReachView (Emlid Ltd., Hong Kong, China) application.

The raw GNSS data collected from the base and rover modules were post-processed by the tools provided by Emlid Ltd. These tools are based on the RTKLIB Open Source Program Package and were optimized by Emlid Ltd. to be used with data collected with GNSS receivers manufactured by Emlid. The raw GNSS data was collected in .UBX format, and as a first step it was converted (rtkconv.exe) to .nav and .obs files. In the next step, these files together with the RINEX OBS and RINEX NAV files from the base station were processed by the rtkpost.exe application. The result of this step is a .pos file containing the coordinates of the route of the UAV in 5 Hz resolution (i.e., every 0.2 s). When the Sony A6000 digital camera was connected to the Emlid Reach receiver (rover) through its hot shoe then an additional output file was generated by the application (rtkpost.exe) containing the post-processed coordinates of the camera trigger events. The output files also contain the GNSS solution quality (fix, float, single) of the coordinates. As in the mosaicking software (Agisoft Metashape) the position accuracy information was expected in a term of distance (shift), the quality codes were converted based on our arbitrary choice as follows: fix: 0.5 m; float: 2 m; single: 10 m.

In the case of the thermal images, the GPS data saved as a text file saved on the Raspberry PI 3B could not be post-processed directly by the RTKLIB applications. Instead, Post-processed GNSS data was picked from the corresponding (5 Hz) PPK output dataset containing the whole route according to the timestamps of the thermal camera trigger events saved in the .txt file on the RPi. This selection was performed in an R environment.

2.6.2. Thermal Image Processing

As the Optris PI 450/640 camera uses different temperature ranges at the different snapshots, a common temperature range for each flight had to be defined, and the images had to be re-scaled accordingly. This rescaling step is necessary before running the image mosaicking software to construct the map of surface temperatures.

Rescaling was performed in the R environment, thermal images were read with the

read.pnm() function of the pixmap package as a first step in this procedure. This function returns a 3 channel (each 8 bits) RGB pixmap object for each thermal image. The 3 × 8 bit RGB values were converted to single 24 bit values by using a linear combination of the data in the RGB channel, as

This value was the input to calculate the pixel’s temperatures using the stored maximum and minimum temperatures of the given thermal image. Based on this single value an unambiguous attribution can be made between the pixel colors and temperature values. The colorbar saved with each snapshot (thermal image) contains 239 different colors, the brightest color (highest value) corresponding to the maximum temperature of the image while the darkest color (lowest value) to the minimum temperature. Similarly, the temperature range of each snapshot was divided into 239 values and the single 24 bit values were converted into temperature based on the attribution of the colors of the colorbar and the temperature range. In the next step, colors were attributed to the common (regarding the whole flight) temperature range. Based on the temperature calculated in the first step a new color based on the common colorbar was assigned to each pixel of a given snapshot then it was converted back to RGB values also based on Equation (1). The resulting images (

Figure 3) were written with the

write.pnm() function also from the pixmap package.

2.6.3. Mosaicking of RGB and Thermal Images

The RGB images and the rescaled thermal images were mosaicked independently by the Metashape (Agisoft) software supplying the post-processed GNSS data in a separate file. The workflow was based on the tutorial published by Agisoft including—among others—camera optimalization and marking of GCPs. The latter step is supposed to enhance the spatial accuracy of the resulting maps. Four GCPs were left out of the procedure to make possible the evaluation of the spatial distortion of the geolocation. The outcome of the mosaicking process was two georeferenced 3-layered raster, one ordinary RGB raster, and one for the thermal images. In addition to the efforts made on enhancing the accuracy of GPS information by PPK, there was a slight misalignment between the two mosaics (RGB and thermal). As the GPS coordinates belonging to the RGB images were considered to be more accurate the thermal mosaic was aligned to the RGB in QGIS using the Georeferencer tool. The tool requires the marking of clearly discernible objects on the layer to be aligned and then obtaining their coordinates from the other layer. In this step, the same aluminum coated GCPs were used as in mosaicking. After the alignment, the thermal raster was converted to a 1-layer GeoTIFF containing temperature values with a procedure similar to the one described above rescaling the thermal snapshots to a single common temperature scale valid for a particular flight.

2.6.4. Quantifying Spatial Error of the Mosaics

The accuracy of mosaicking and georeferencing was also attempted to be assessed. The idea was to measure the distance of known points at the RGB mosaics and in reality. In 2019, without GCPs being set, markers of a previously established grid were used. The markers were 2 cm by 2 cm wooden squares painted in white. They were placed 40 cm above the ground and were situated mostly in the central part of the RGB. In the next season, constant GCPs placed at the edge of the area of interest before the start of the 2020 season were used for this analysis. The location of the GCPs regarding all the RGB mosaics corresponding to the flights listed in

Table 2 was marked and saved as a shapefile layer in QGIS. The distance between the location of the GCPs acquire from the RGBs and their measured coordinates were calculated by the

pointDistance() function of the geosphere library in R.

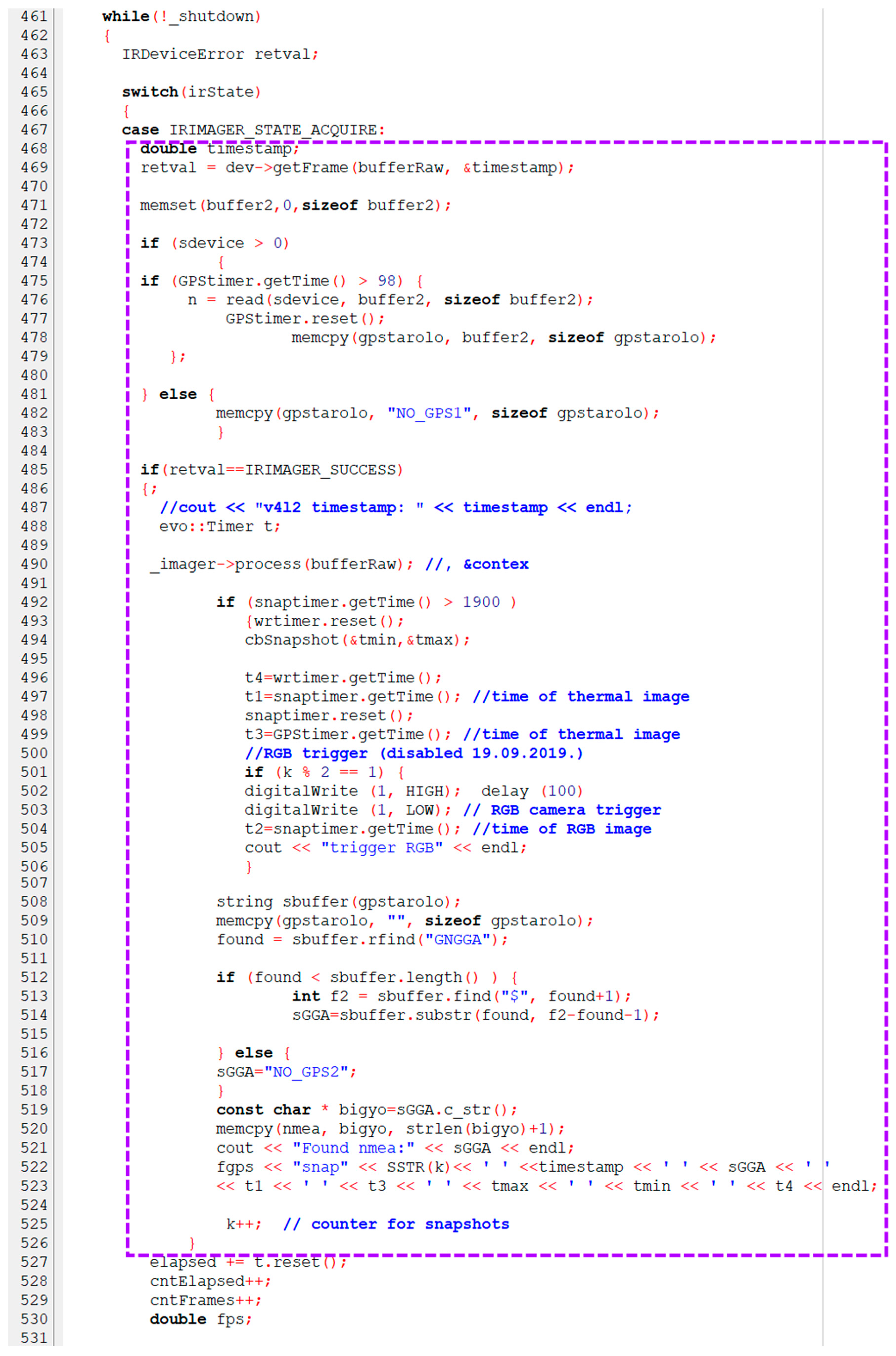

2.6.5. ET Modelling

The basic purpose of setting up the UAV system was to produce surface temperature maps and then turn them into evapotranspiration maps by the means of the pyTSEB model. The pyTSEB model is an energy balance model which calculates sensible heat flux from the temperature difference between the surface and the surrounding air, and then computes latent heat flux as the residual of the balance. The basics of the model are described in [

15,

27,

28], in present work the Python version of the model (PyTSEB) was used, which was downloaded from is its GitHub source (

https://github.com/hectornieto/pyTSEB accessed on 28 November 2019). The model is extensively used for evapotranspiration modelling both from UAV [

12,

14,

17,

18] and satellite-based thermal images [

2,

3].

In addition to its primary input—the surface temperature map/raster—there are other necessary inputs to run the pyTSEB model. These additional inputs include fractional cover (fc), green fraction (fg) and leaf area index (LAI) maps. Both, fractional cover and green fraction maps were produced based on the green layer of the RGB orthomosaic. Fractional cover of the grassland was considered as total (i.e., fc = 1), and the green fraction was estimated, while in the case of the cropland all the vegetation was assumed to be green (fg = 1), and the fractional cover was estimated from the green layer of the RGB. LAI maps were constructed using the regression between (ground) LAI estimations by a ceptometer and vegetation indexes (VI or VARI, whichever gave the higher R2) calculated from the RGB mosaic. The LAI and VI/VARI correlation were based on data from coinciding field survey activities when available. In the frame of these field surveys, LAI was measured at a spatial grid of 78 points with 30 m resolution. Without a coinciding survey, LAI was measured at 8 locations with highly different plant cover. In the latter case, after measurement, but prior to the flights, these locations were marked by 40 × 40 cm wooden squares which enabled us to extract the VI/VARI values without spatial bias.

As the last step of the data preparation, all the input rasters were resampled to the spatial resolution (~8 cm) of the thermal raster. Other surface parameters (vegetation type, vegetation structure parameters, micrometeorological variables) were compiled into a text file according to the format required by pyTSEB. The necessary micrometeorological data (air temperature, wind speed, pressure, vapor pressure, incoming solar radiation) was picked from the data measured at the eddy-covariance towers at corresponding the study site. The PyTSEB model was run from the conda command line. The main output of the pyTSEB model is a 4-layer GeoTiff image containing the net radiation (Rn), sensible heat (SHF) and latent heat flux (LHF) and ground heat flux (G), respectively.

2.6.6. Validation of ET Modelling

Validation of the latent heat flux estimated by the pyTSEB model was performed by comparing it to the LHF measured by the eddy covariance technique. As EC based turbulent fluxes refer to a much smaller area than the area covered by the UAV flights, thus the area from which the EC fluxes originated had to be selected. This selection was performed based on the source area (footprint) analysis of the EC measurements. The footprint analysis is usually part of the flux calculation procedure, and its results are included in the output. The footprint calculation [

29] in the EddyPro software outputs—among others—the main wind direction and the peak distance, i.e., the direction and distance from which the largest relative flux contribution is originating. The eddy flux footprint coinciding with the time of a given flight survey was used to find the area of interest on the ET map. We have used a rather simple representation of the footprint; it was considered as a 10 m diameter circle around the flux footprint peak. Pixels within the circle were averaged and then compared to the EC based LHF fluxes.

4. Discussion

4.1. Prospects for Thermal UAV Studies

While relatively easy access to satellite ET maps is already provided, these maps still have an insufficient spatial resolution for use in studies evaluating small plot field-scale experiments, or in plant phenotyping works. For this reason, the expectations towards high spatial resolution and accuracy of evapotranspiration maps keep the interest in UAV solutions. Moreover, regarding other practical considerations, i.e., the availability of satellite-based time series might be lower in regions with high average cloud cover [

32] such as Hungary, UAV based imagery has obvious advantage. Recent off-the-shelf solutions are also targeting higher thermal resolution imagery necessary in plant disease surveys in addition to the above-mentioned purposes. Opportunities for flexibility in UAV based thermal/RGB systems

For the above reasons, we have developed an integrated UAV based system including a high-resolution thermal imager, suitable to produce the necessary raster inputs to a TSEB model. Taking into account the trade-offs between the flexibility of such a system in terms of suitable constituents (cameras and GNSS receivers) and the efforts and resources used during development, we developed our application by relying on existing modules. These modules are of either hardware (such as a Raspberry Pi) or software type (e.g., the software development kit (SDK) provided to the thermal camera OPTRIS). The capabilities of the RPi microcomputer (in terms of different ports/interfaces, including, RS232, GPIO, Ethernet, general USB and WiFi) and the flexibility of the SDK proved to be highly adaptable in controlling the operation of the thermal and the RGB cameras and communicating with the onboard GNSS receiver. In addition, these features are a good base to configure the system as to include further component sensors.

The Emlid GNSS M+ receiver used as a rover onboard the UAV proved to be a troublesome item during the first part of the work. Later technical advancements published by the developers of the unit and the release of a new model contributed to the improved capabilities (booting up speed and later stability) of the system. The use of the hot-shoe adapter connecting directly the RGB camera (Sony A6000) to the GNSS rover increased geolocation accuracy. Comparing the solutions with the different Emlid Reach receivers, the bias of georeferencing decreased from about 1.5 m (M+) to 0.2–0.5 m (M2).

The current configuration of the system allows acquisition of 8 cm spatial resolution thermal imagery from a flight altitude of 60 m and the accuracy of the mosaicked RGB imagery is around 10 cm. In comparison, commonly available off-the-shelf combined thermal/multispectral solutions of Micasense offer 0.35 to 0.17 m thermal spatial resolution from the same height.

The application requires a few ground control measurements (leaf area index estimations using a ceptometer, for example), micrometeorological data (radiation, wind speed, air temperature, and humidity) in addition to the thermal imagery to run the pyTSEB model.

4.2. Validation of Modelled ET Data against Eddy Covariance Measured ET Fluxes

Modelled latent heat flux data have been validated against half-hourly averages of latent heat fluxes measured by eddy covariance (

Figure 6) with RMSE of 75 Wm

−2w/m

2 (RMSD 74 Wm

−2w/m

2). In the last couple of years, the TSEB model applied to UAV based thermal orthomosaic was successfully validated against EC data in several studies for various ecosystems, e.g., grasslands [

13,

14], a tree-grass mixed ecosystem [

3], an agricultural area [

12], peach and nectarine orchards [

20], olive orchard [

11], and vineyard [

10]. RMSD of modelled and measured (EC) was 40–50 Wm

−2 for grasslands [

13,

14,

18], while RMSE was 94 Wm

−2 for an agricultural area [

12].

The choice of the energy balance closure method affects the magnitude of uncertainty of the measured eddy fluxes. In the majority of recent studies validating UAV based energy fluxes against EC measured fluxes, the imbalance is fully added to the latent heat flux as suggested by [

31]. This method was found to yield the best validation result out of the considered ones (closing the balance fully at the expense of the sensible heat flux, the latent heat flux or according to the Bowen ratio) [

18]. This however cannot be considered as evidence in favor of closing the balance by increasing the latent heat flux, only. Yet, latent heat is calculated as the remainder in the two-source energy balance model after allowing for calculating sensible heat and ground heat fluxes. It is this analogy for which we used the approach of closing the energy imbalance also of the eddy covariance measurements solely by increasing the latent heat flux.