Abstract

The use of Unmanned Aerial Vehicles (UAVs) and Unmanned Aircraft Systems (UASs) for civil, scientific, and military operations is constantly increasing, particularly in environments that are very dangerous or inaccessible to humans. Many tasks are currently carried out in metropolitan areas, such as urban traffic monitoring, pollution and land monitoring, security surveillance, and delivery of small packages. Estimation of features around the flight path and surveillance of crowded areas, where there is a high number of vehicles and/or obstacles, are of extreme importance for typical UAS missions. Ensuring safety and efficiency during air traffic operations in a metropolitan area is one of the conditions for Urban Air Mobility (UAM) operations. This paper focuses on the development of a ground control system capable of monitoring crowded areas or impervious sites, identifying the UAV position and a safety area for vertical take-off and landing (VTOL) maneuvers, ensuring a high level of accuracy and robustness, even without using GNSS-derived navigation information, and with on-board terrain hazard detection and avoidance (DAA) capabilities, in particular during operations conducted Beyond Visual Line Of Sight (BVLOS). The system is composed of a mechanically rotating real-time LiDAR (Light Detection and Ranging) sensor, linked to a Raspberry Pi 3 used as SBC (Single-Board Computer), and interfaced to a GCS (Ground Control Station) by wireless connection for data management and 3-D information transfer.

1. Introduction

The use of autonomous, semi-autonomous, or remotely controlled Unmanned Aerial Vehicles (UAVs) has increased considerably in recent years, thanks to ease of use, flexibility and versatility, low price, and advances in battery endurance, motors, stabilization techniques, navigation, and onboard sensor technology [1]. UAV refers only to the vehicle, whereas the acronym UAS (Unmanned Aircraft System) considers the whole system, composed of the aircraft, a Ground Control Station (GCS), and a communication subsystem (data link) to send and receive information [2,3,4,5].

Small VTOL (Vertical Takeoff and Landing) aerial platforms are being used on a daily basis for several civil (professional, scientific, and recreational) and military applications, such as search and rescue missions, pipeline inspection, urban traffic monitoring, aerial mapping, surveillance of archaeological sites, control of the territory against environmental crimes, control of public order, and others. In the next decade, a further widespread diffusion of UAVs is expected [6]; on the other hand, their misuse can lead to anti-social, unsafe, and even criminal actions, such as privacy violation, collision hazard (with people, other UAVs, and manned aircraft), and even transport of illicit materials and/or explosives or biological agents [7]. Therefore, it is mandatory to monitor these platforms in the airspace as effectively as possible. Table 1 shows the most widespread classification of unmanned vehicles [8,9].

Table 1.

NATO Classification of UAS (AGL = Above Ground Level; BLOS = Beyond Line Of Sight).

Currently, UASs are among the most used for civil applications, in individual missions or in swarms [10]. In the framework of a UAS autonomous flight mission in Urban Air Mobility operations, it is important to identify a safe area for a landing maneuver, to avoid potential conflicts with people, other vehicles, or structures [11,12,13,14]. Developing autonomous landing systems is the greatest challenge [15]; DAA (Detect and Avoid) or SAA (Sense and Avoid) systems, which perform environment recognition during the landing phase by means of onboard sensors or by acquiring information from a ground station that can send safe paths to the vehicle, are of great importance and usefulness. Typically, environment perception is accomplished onboard the UAVs by means of real-time, small-dimension sensors (cameras, stereo cameras, Infra-Red (IR) or Time-of-Flight (ToF) cameras, ultrasound sensors, IR sensors, radar, LiDAR, etc.), easily configurable for UAV payloads [16,17,18,19]. These sensors could also be used to build small airfields equipped with GCA (Ground Controlled Approach)-like functionality. Nowadays, most Safe Landing Area Determination (SLAD) systems rely on technologies mounted on board. GNSS (Global Navigation Satellite System) technology is typically the primary source of positioning for most air and ground vehicles, and for a growing number of UASs in urban areas [20].

For optimal tracking and detection of landing areas, in urban operations, or during particular flight conditions (e.g., emergency cases, batteries with limited endurance, system failure), it is necessary to cover a large area in a short time. The high maneuverability of small UAVs makes the tracking problem more difficult, because no strong assumptions can be made about the expected UAV motion trajectories and the related equations. Moreover, being pilotless and with no relevant payload, UAVs are aerial targets with small physical size compared to conventional aircraft. Their identification and classification by High Range Resolution Radar Profiles (HRRPs) is problematic, and sub-centimeter resolution is required to capture spatial structures of targets with dimensions less than 100 cm.

In the literature, a widely used approach to safe landing area identification involves vision-based systems with image analysis and processing techniques [21,22,23,24,25]. Alternative methodologies include multi-sensor data fusion using images and GNSS position data [26,27], and point cloud reconstruction from LiDAR or radar sensors [28,29,30].

This paper exploits the ability of 2D LiDAR technology to provide three-dimensional elevation maps of a landing area and high-precision distance measurements, in order to design a safe landing identification strategy for UAS missions. A mechanically rotating, wide field-of-view 2D LiDAR is used, sensing the surroundings of the surveillance volume with high temporal resolution to detect obstacles, track objects, and support path planning. A further goal of this paper is automatic landing zone surveying through obstacle detection around the landing area, in order to perform safe landing area determination (SLAD). Obstacle detection and avoidance (DAA) systems can be installed onboard the unmanned aircraft [31,32], and research on rotating LiDARs used onboard UAVs, combined with stereo cameras for onboard landing area detection, is available in the literature [33,34]. In this paper, we propose a ground-controlled, rather than onboard-controlled, approach. The safe landing functionality, in terms of assessing the correct UAV position within a clear area, is performed by a LiDAR-equipped ground station with a communication link to the UAV, which is therefore capable of identifying clearance areas (i.e., areas with no obstacles which could impair descent and/or landing) and transmitting safe landing paths to the approaching aircraft. By mounting the LiDAR vertically on a servo motor, we combine the fast (now vertical) laser scan with the slow, controlled horizontal rotation of the motor, obtaining a three-dimensional map of the surroundings of the landing zone. With respect to onboard SLAD systems, a ground-based solution offers many advantages, such as payload reduction, improved aircraft endurance due to reduced power consumption, communication of useful auxiliary information to the aircraft, and management of more than one aircraft in the surveillance volume.
The system can identify obstacle-free areas, detect the UAS position, and indicate safe trajectories for landing maneuvers (a conceptual architecture of the system was presented by the authors in [35]).

This paper focuses on the system development, describing the theoretical and experimental phases towards the capability of creating a 3D point cloud and correcting for distortion and tilt, to obtain accurate estimates of the UAV distance to the ground and to the landing area, together with a preliminary obstacle detection strategy based on point cloud comparison. The innovative contributions of this research are:

- Promoting a new installation method for auxiliary equipment (a 2D rotating LiDAR with 3D capabilities, able to provide a point cloud of a volume in the vicinity of the landing site), aimed at improving the autonomous navigation capabilities of a small VTOL UAV (quadcopter, hexacopter, etc.) without installing additional sensors on the aircraft, therefore saving payload mass and power consumption and extending the flight time (endurance).

- Proposing the application of safe landing trajectories as a function of the dynamic identification of obstacles in the surveillance volume (humans, hazards, etc.), both in indoor and outdoor scenarios, which is a significant improvement with respect to the ordinary vertical landing path, typical of other onboard autolanding systems. In principle, the LiDAR-equipped ground station could provide safe landing information, even for missions where the UAV is supposed to land on a moving platform. This work presents the design aspects and a validation of the ground system, in terms of providing a point cloud of the 3D surveillance volume (including the landing site), detecting the UAV as it enters the landing volume, estimating its position with respect to the landing site, identifying objects which could impair the safe landing, and monitoring the descent of the flying robot.

- Proposing a ground system capable of providing a safe descent path even in the presence of onboard hardware/software errors (for example, actuator failure, loss of position estimation from a possible IMU embedded in the UAV flight control system, etc.), by providing timely autonomous identification of safe paths and assisting a controlled descent and landing. Our approach is inspired by typical landing systems for civil aviation, such as ILS (Instrument Landing System) or GCA (Ground Controlled Approach).

- Proposing a simple and cost-effective alternative to vision-based SLAD methods.

- Developing a simple, cheap and useful obstacle detection system by analyzing the point cloud characteristics obtained by the ground-based LiDAR, with a clustering algorithm based on difference “images” of two corrected point cloud scenarios, with and without the obstacle.

- Promoting a methodology of safe landing area determination in GPS-denied environments, in failure scenarios, or when the aircraft is not equipped with a precise localization system, therefore extending the application domain of UAVs/UASs, especially multirotor helicopters.

- Proposing a potential minimum landing time approach for fast-moving small/micro UAVs in a variety of environments (typical flight speed of 10 m/s and 30 min battery life).

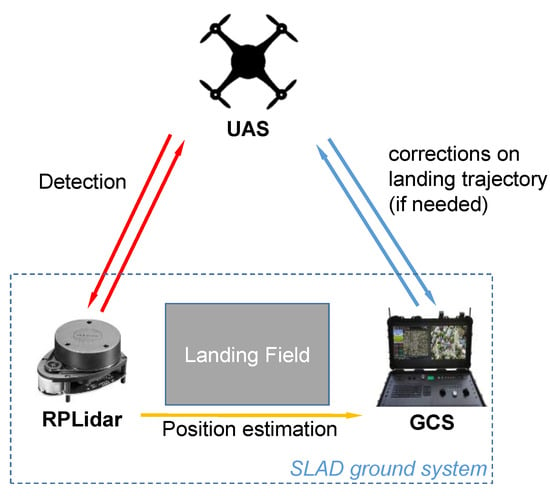

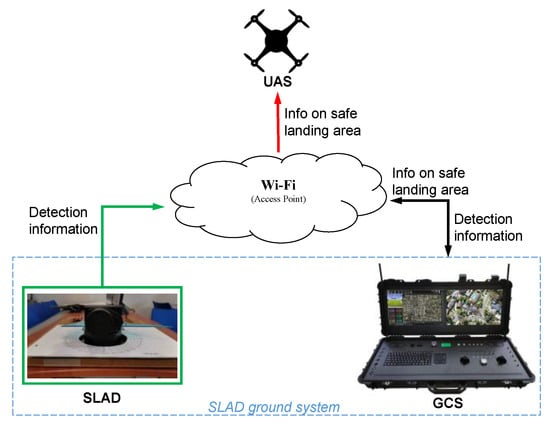

The architecture of the ground-based safe landing assessment methodology is shown in Figure 1. The Ground Control Station (GCS) establishes a data link with the UAV, to send and receive information.

Figure 1.

Architecture of the SLAD system.

The paper is organized as follows. Section 2 quickly recaps the theoretical framework and the observation geometry. In Section 3, the hardware (electronic components) and methods used for the preliminary setup of our LiDAR-based ground station are discussed in detail. Section 4 presents the point correction procedure and the obstacle detection algorithm implemented, together with experimental results from data collection campaigns which validate the feasibility of the proposed methodologies. Further developments and concluding remarks are outlined in Section 5.

2. Theoretical Framework

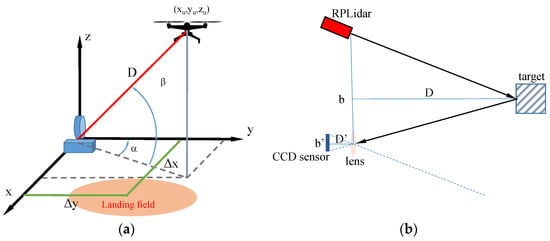

Figure 2a depicts the observation geometry. The LiDAR is at the origin of the reference frame, the UAV position is given by (x, y, z), α is the servo motor angle, β and the slant range D are estimated by the laser sensor, rotating around an axis parallel to the xy-plane, and the coordinates of the center L of the landing area are (x_L, y_L, 0). For the sake of simplicity, the landing field and the LiDAR sensor are supposed coplanar, but the generalization for z_L ≠ 0 (i.e., a difference in height between the landing field and the sensor) is straightforward.

Figure 2.

(a) UAV position estimation by LiDAR measurements; (b) laser triangulation principle.

The slant range (or LOS, Line Of Sight) of the UAV from the landing center and the corresponding ground range then follow from the measured quantities:
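One plausible way to write these relations is sketched below. It assumes that α is measured in the xy-plane from the x-axis, that β is the elevation of the laser beam above the xy-plane, and that the landing center lies at $(x_L, y_L, 0)$. The symbols $R$ (slant range from the landing center) and $R_g$ (ground range) are notation introduced here for illustration and may differ from the original equation.

```latex
\begin{aligned}
x &= D\cos\beta\cos\alpha, \qquad y = D\cos\beta\sin\alpha, \qquad z = D\sin\beta,\\
R_g &= \sqrt{(x - x_L)^2 + (y - y_L)^2},\\
R &= \sqrt{R_g^2 + z^2}.
\end{aligned}
```

With a non-coplanar landing field, $z$ in the last line would be replaced by $z - z_L$.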

Figure 2b shows the principle of laser triangulation, a typical alternative to ToF (Time-of-Flight) measurement in low-cost laser sensors. A lens with focal distance d’, placed at a distance b from the laser source, focuses the returned ray on a CCD- or CMOS-based position-sensitive device (PSD) located at the focal distance from the lens. The triangles defined by (b, d) and (b’, d’) are similar, and the distance to the object is a nonlinear function of the angle of the reflected light (i.e., the laser acceptance angle). The perpendicular distance to the object (d) is given by:

if β is the angle of the laser beam, the slant range (distance along the geometric ray) is:

From (2) and (3), the range sensitivity (or resolution) is equal to:

and grows quadratically with the distance from the object. Small values of the product bd’ allow measurement of small distances, whereas the sensor resolution is enhanced by large values of bd’.
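The relations referred to as (2), (3), and (4) can be recovered from the similar triangles of Figure 2b. The following is a reconstruction under the stated geometry, assuming that b’ denotes the offset of the imaged laser spot on the detector; the numbering follows the references in the text.

```latex
\begin{aligned}
\frac{d}{b} = \frac{d'}{b'} \;&\Rightarrow\; d = \frac{b\,d'}{b'} && (2)\\
D &= \frac{d}{\sin\beta} = \frac{b\,d'}{b'\sin\beta} && (3)\\
\frac{\partial D}{\partial b'} &= -\frac{b\,d'}{b'^{2}\sin\beta} = -\frac{D^{2}\sin\beta}{b\,d'} && (4)
\end{aligned}
```

The last equality follows by substituting $b' = b\,d'/(D\sin\beta)$ from (3), which makes the quadratic dependence on range explicit: a fixed displacement of the spot on the detector corresponds to a range change proportional to $D^2$ and inversely proportional to $b\,d'$.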

3. SLAD Ground System

The SLAD ground system is composed of:

- RPLIDAR sensor, model A1M8;

- Raspberry Pi 3 (SBC);

- 5-A Power Module;

- Servo motor with standing structure;

- PC-based Ground Control Station to manage LiDAR data and send clearance data (safe paths, safe landing zone) to the UAV;

- Communication subsystem for real-time data transfer from the sensor to the GCS and transmission of safety information to the UAV.

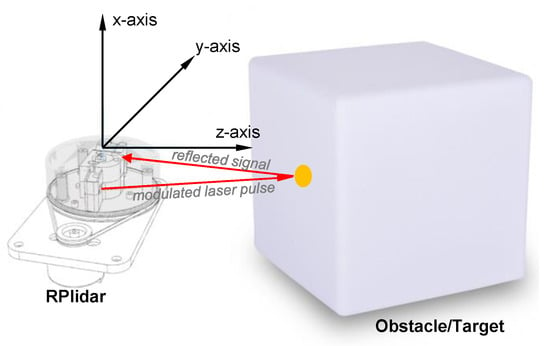

3.1. LiDAR Sensor: RPLIDAR Model A1M8

The low-cost, 360-degree FOV (Field Of View) laser range scanner RPLIDAR Model A1M8, built by Shanghai Slamtec Co., Shanghai, China [36] and shown in Figure 3, has been widely used in a variety of applications in robotics, in particular in the framework of SLAM (Simultaneous Localization and Mapping) methodologies for mobile robots [37]. It measures the distance to an object by emitting a laser beam, which is reflected by the object surface and received by a position-sensitive detector. Built-in circuitry calculates the distance to the object by triangulation, based on the position at which the reflected light hits the detector, obtaining range and angular data in the sensor coordinate system.

Figure 3.

RPLIDAR A1M8 (2D rotating laser scanner).

The active ranging device uses a low-power (<5 mW peak) infrared laser (785 nm wavelength) as its light source, transmitting 110 µs pulses, and can perform a 360-degree 2D scan within a 5 m range with an angular resolution of about 1° (360 points per scan) [36,38]. Typical distance resolution is <0.5 mm, or less than 1% of the distance. The resulting 2D point cloud data can be used in mapping, localization, and object/environment modeling, which makes the sensor suitable as a fundamental part of the SLAD system developed in this paper.

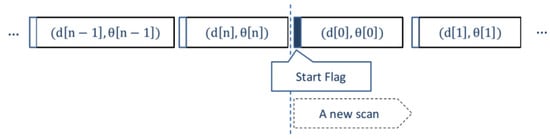

The RPLIDAR A1's scan rate is 5.5 Hz (configurable up to 10 Hz) when sampling 360 points per scan, with a typical sample frequency of 4 kHz (1 sample every 250 µs). With 5 V supplied by the power module during our tests, the sensor rotates at 468 rpm (i.e., the scan frequency is 7.8 Hz, or 128 ms per revolution), collecting (on average) 515 samples per revolution (i.e., the sample frequency is approximately 4 kHz). The assembly contains a range scanner system and a motor system with speed detection and adaptation. It performs well both indoors and outdoors, provided there is no direct sunlight. The system automatically adjusts the laser scan rate according to the motor speed and uses a UART serial port (115,200 bps) as the communication interface with host computers or controllers. After power-on, the sensor starts rotating and scanning clockwise. The acquired distance data can be stored on a PC or a microcontroller board (e.g., Raspberry Pi, Arduino) through the communication interface (USB connection). Table 2 reports the information associated with each sample point, whereas Figure 4 visualizes the formatted dataflow.
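The timing figures quoted above can be cross-checked with a few lines of arithmetic. The sketch below uses only the measured 468 rpm and 515 samples per revolution; the function name `scan_timing` is a hypothetical helper introduced for illustration.

```python
def scan_timing(rpm, samples_per_rev):
    """Derive scan and sample rates from rotation speed and samples per turn."""
    scan_hz = rpm / 60.0                      # revolutions per second
    period_ms = 1000.0 / scan_hz              # duration of one revolution
    sample_hz = samples_per_rev * scan_hz     # range samples per second
    step_deg = 360.0 / samples_per_rev        # mean angular spacing of samples
    return scan_hz, period_ms, sample_hz, step_deg


scan_hz, period_ms, sample_hz, step_deg = scan_timing(468, 515)
print(f"{scan_hz:.1f} Hz, {period_ms:.0f} ms/rev, "
      f"{sample_hz:.0f} samples/s, {step_deg:.2f} deg/sample")
```

At this rotation speed the mean angular spacing between samples is about 0.7°, slightly finer than the nominal 360 points per scan.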

Table 2.

The RPLIDAR A1 Sample point data information.

Figure 4.

Data frames from the RPLIDAR A1M8.

The RPLIDAR A1 operates by measuring the angle of the reflected light (Figure 5), using high-speed vision acquisition and processing hardware, and outputs the distance and the angle between the illuminated object and the sensor, thanks to a built-in angular encoding system. The whole system acquires more than 2000 distance measurements per second, with a high-resolution distance output (<1% of the distance). A 2 min pre-heating in start scan mode, with the sensor rotating, is recommended to obtain optimal measurement accuracy.

Figure 5.

Laser triangulation principle.

3.2. Raspberry Pi 3

The Raspberry Pi 3 microcomputer hosts a 64-bit quad-core processor running at 1.4 GHz, dual-band 2.4 GHz and 5 GHz wireless LAN, Bluetooth 4.2/BLE, faster Ethernet, and PoE (Power over Ethernet) capability via a separate PoE “hat”. The dual-band wireless LAN comes with modular compliance certification, allowing the board to be designed into end products with significantly reduced wireless LAN compliance testing, improving both cost and time to market [39]. In this paper, the Raspberry Pi 3 is used as the main controller: it collects the data acquired by the RPLIDAR and sends them via its Wi-Fi module to a PC-based GCS for storage and processing. The Raspberry Pi and the RPLIDAR are powered by a 5 VDC source through an embedded power module. The whole system is autonomous and portable, without the constraint of a fixed power supply.

3.3. Power Module

The DFR0205 Power Module (Figure 6), built by DFRobot, is based on a small size 350 kHz switching frequency PWM buck DC-to-DC Converter (GS2678 [40]). It can convert any DC voltage between 3.6 V and 25 V to a selectable voltage from 3.3 V to 25 V.

Figure 6.

DFRobot 5-A Power Module DFR0205.

It is possible to select a direct 5 V output voltage with a switch or adjust the output voltage by means of a control resistor. The OVout (Original Voltage output) interface passes the input voltage through unchanged, so that it can be used as a power source for other modules. In our research, the DFR0205 was used to convert the 7.4 V input from 2600 mAh Li-ion 18650 batteries (3.7 V each) to the 5 V output which supplies energy to the Raspberry Pi 3 and the servo motor. Laboratory tests showed that the system can work continuously for about 6 h.

3.4. Standing SLAD Structure

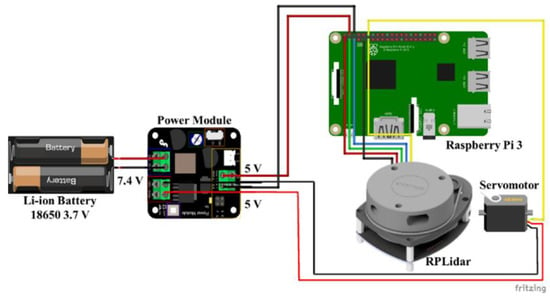

The electrical scheme of the SLAD system (Raspberry Pi, RPLIDAR, battery, servo motor, and Power Module) is shown in Figure 7. The components were mounted on a rotating structure (a disc with a series of self-lubricating bearings to reduce friction and vibrations during rotation) locked to a servo motor. The whole system is mounted on a standing structure made of ABS (Figure 8).

Figure 7.

Electric connections of the SLAD system.

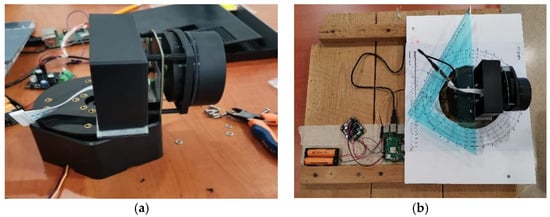

Figure 8.

(a) Servo motor with rotating disc, to allow LiDAR rotation in the plane orthogonal to the laser rotation axis; (b) The prototype with a graduated disc for reading the servo motor angular position α.

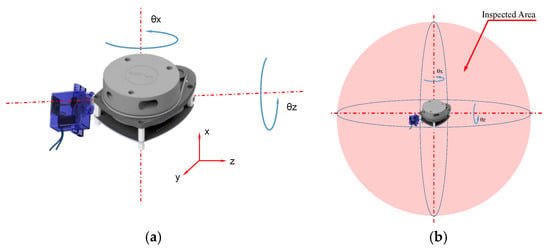

Figure 9 shows how three-dimensional information is acquired by combining the rotation of the LiDAR with that of the servo motor, together with the inspection volume of the system.

Figure 9.

(a) Functionality of the system; (b) Survey volume.
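The combination of the two rotations can be sketched as follows: each vertical LiDAR scan, taken at a fixed servo angle α, is converted to Cartesian points and the scans are stacked into one cloud. This is a minimal illustration rather than the authors' implementation. The angle conventions (α from the x-axis, β as elevation above the xy-plane), the treatment of zero distances as invalid returns, and the names `sweep_to_points` and `build_cloud` are assumptions made here.

```python
import numpy as np


def sweep_to_points(alpha_deg, beta_deg, dist_mm):
    """Convert one vertical scan at servo angle alpha to xyz points (mm)."""
    a = np.radians(alpha_deg)
    b = np.radians(np.asarray(beta_deg, dtype=float))
    d = np.asarray(dist_mm, dtype=float)
    valid = d > 0                      # assumption: zero marks an invalid return
    b, d = b[valid], d[valid]
    horiz = d * np.cos(b)              # projection of the ray on the xy-plane
    return np.column_stack((horiz * np.cos(a), horiz * np.sin(a), d * np.sin(b)))


def build_cloud(sweeps):
    """Stack scans given as (alpha_deg, beta_deg_array, dist_mm_array) tuples."""
    return np.vstack([sweep_to_points(a, b, d) for a, b, d in sweeps])


# Two toy scans: servo at 0 deg and at 90 deg.
sweeps = [
    (0.0, [0.0, 90.0], [1000.0, 2000.0]),
    (90.0, [0.0, 45.0, 10.0], [1000.0, 0.0, 500.0]),  # middle sample is invalid
]
cloud = build_cloud(sweeps)
print(cloud.shape)
```

Keeping the conversion per scan makes it easy to process data incrementally as the servo steps through its range.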

3.5. Communication Subsystem

The communication subsystem, depicted in Figure 10, is based on a common Wi-Fi network, which interconnects the SLAD system (managed by the Raspberry Pi 3) to a remote terminal (a PC-based GCS). After configuring Raspbian (the Raspberry Pi operating system, stored on an SD card) with the SSID and password of the local Wi-Fi and enabling SSH (the Secure Shell protocol, a cryptographic network protocol providing a secure channel [41]), the Raspberry Pi 3 connects automatically to the Wi-Fi network after booting. With a simple Python interface, developed by the authors, the Raspberry Pi acquires real-time data from the RPLIDAR and sends them to the remote terminal connected to the same Wi-Fi network. Data acquisition is performed at 8 kHz sampling rate.
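The authors' Python interface is not listed in the paper. As a rough sketch of how samples could be framed and moved from the Raspberry Pi to the GCS over a TCP-style socket, the example below packs (angle, distance, quality) tuples into a length-prefixed binary frame. The wire format, the function names, and the use of a local socket pair in place of the Wi-Fi link are all assumptions made for illustration.

```python
import socket
import struct

# Hypothetical wire format: angle (deg) and distance (mm) as float32, quality as uint8.
SAMPLE = struct.Struct("<ffB")


def send_samples(sock, samples):
    """Send (angle_deg, distance_mm, quality) tuples as one length-prefixed frame."""
    payload = b"".join(SAMPLE.pack(a, d, q) for a, d, q in samples)
    sock.sendall(struct.pack("<I", len(payload)) + payload)


def _recv_exact(sock, n):
    """Read exactly n bytes, since recv() may return fewer than requested."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed before the full frame arrived")
        buf.extend(chunk)
    return bytes(buf)


def recv_samples(sock):
    """Receive one frame and unpack it into a list of sample tuples."""
    (length,) = struct.unpack("<I", _recv_exact(sock, 4))
    payload = _recv_exact(sock, length)
    return [SAMPLE.unpack_from(payload, off) for off in range(0, length, SAMPLE.size)]


# Loopback demonstration: a connected socket pair stands in for the Wi-Fi link.
pi_side, gcs_side = socket.socketpair()
send_samples(pi_side, [(0.0, 1500.0, 47), (0.5, 1502.5, 47)])
received = recv_samples(gcs_side)
pi_side.close()
gcs_side.close()
print(received)
```

The length prefix lets the receiver separate consecutive frames on a stream socket, which does not preserve message boundaries by itself.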

Figure 10.

Block scheme of the communication subsystem.

4. Experimental Tests and Results

Simulation tests of the SLAD ground system have been performed at the Flight Dynamics Laboratory of the University of Naples “Parthenope”, Italy.

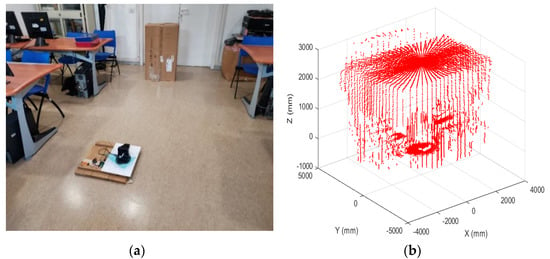

4.1. Validation of Laboratory Tests

Using open spaces in the laboratory, a simulated airfield was built (Figure 11) to test the LiDAR scanning capability in an area surrounding an assigned landing field. Every data acquisition campaign had a duration of 1 min, and data were sampled at 4 kHz. The control volume was mapped by the system, and some obstacles with known dimensions were placed to verify the obstacle detection capability of the ground station. Reference distance measurements were collected with a laser distance meter (Bosch PLR 40C [42]). Post-processed data allowed us to reconstruct the 3D flight volume and evaluate possible nonlinear distortion effects, pointing inaccuracies, and distance measurement errors.

Figure 11.

(a) Indoor experiment scenario (room dimensions: 6400 × 2890 mm); (b) 3D reconstruction from LiDAR data.

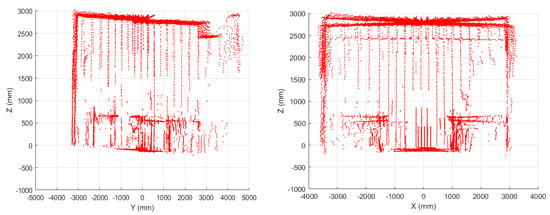

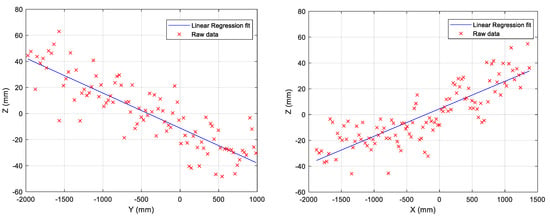

The data acquired in the yz- and xz-planes (Figure 12) revealed tilt errors and distortion effects, which motivated the calibration strategy described in the next section.

Figure 12.

2D reconstructions from LiDAR data: planes yz and xz.

4.2. SLAD System Extrinsic Calibration: Removal of Misalignment and Nonlinear Distortions

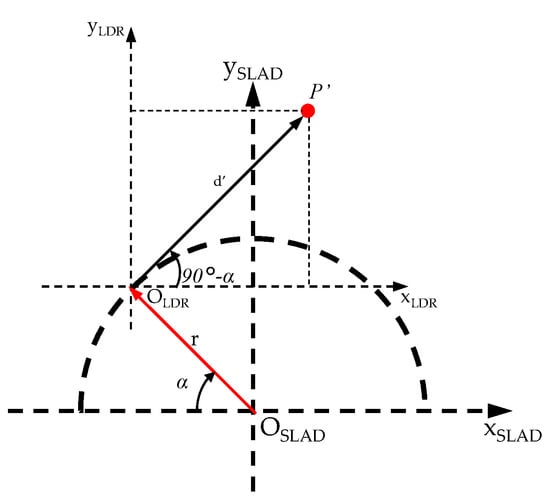

To compensate for tilt problems, related to suboptimal level of the mechanical structure, and to sensor position and installation issues, we devised a simple extrinsic calibration procedure, determining the transformation from the sensor measurements, i.e., the 3D coordinates of a point in the “image” derived from the laser data, to the 3D coordinates of the point in the sensor coordinate system. The calibration algorithm applies a translation t and a rotation R:

The rotation angles (Φ, Θ, Ψ) to be estimated are derived from a linear regression analysis in the three orthogonal planes. The angular coefficients of the regression lines are evaluated with respect to the three axes (x, y, z). As far as the translation is concerned, indicating with r the distance between the origin of the SLAD reference and the LiDAR reference (equal to 85 mm), we have (Figure 13):

Figure 13.

Coordinate frames.

To evaluate the regression line, we chose, as a reference measurement, data representing distance from horizontal and vertical objects in the “real” world, i.e., walls and ceiling, as in Figure 14a (red dotted box).

Figure 14.

(a) Reference data used for the evaluation of the regression line in the yz-plane; (b) red dotted box zoom.

The angular coefficient m and the constant term q of a generic straight line are found by minimizing the function:

and are given by:

where (x_i, y_i), i = 0, ..., n, are the coordinates of a point in the “image” and n+1 is the number of measurements. Figure 15 shows the regression lines for the yz- and xz-planes, Table 3 gives the numerical values of m and q, and Table 4 shows the corresponding angles of the regression lines.
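The fit described above is an ordinary least-squares line. A minimal sketch of the standard closed-form solution is given below, minimizing the sum of squared residuals (y_i − m·x_i − q)² over the n+1 points. Taking the tilt angle as arctan(m) is an assumption about how the angles of Table 4 are obtained, and the sample data are synthetic.

```python
import math


def fit_line(xs, ys):
    """Least-squares slope m and intercept q of y = m*x + q."""
    n_pts = len(xs)
    sx, sy = sum(xs), sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    denom = n_pts * sxx - sx * sx
    if denom == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    m = (n_pts * sxy - sx * sy) / denom
    q = (sy - m * sx) / n_pts
    return m, q


def tilt_deg(m):
    """Angle of the regression line with respect to the abscissa, in degrees."""
    return math.degrees(math.atan(m))


# Synthetic "ceiling" profile (mm): nominally flat at 2400 mm, rising 20 mm per metre.
xs = [0.0, 500.0, 1000.0, 1500.0]
ys = [2400.0, 2410.0, 2420.0, 2430.0]
m, q = fit_line(xs, ys)
print(m, q, tilt_deg(m))
```

For noisy data the same function applies unchanged; only the residual about the fitted line grows.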

Figure 15.

Regression lines for yz- and xz-planes.

Table 3.

Parameters of the regression lines in the xz- and yz-planes.

Table 4.

Tilt angles of the regression lines.

The rotation matrices:

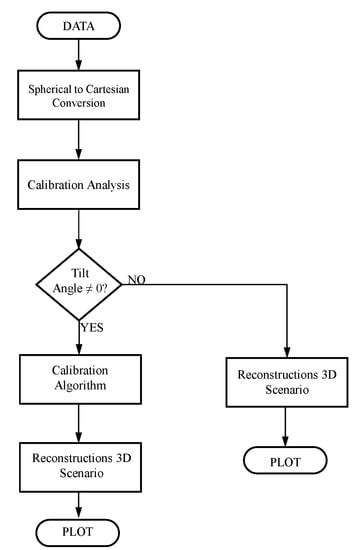

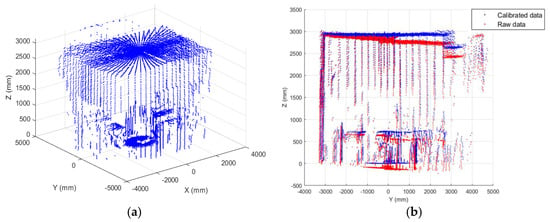

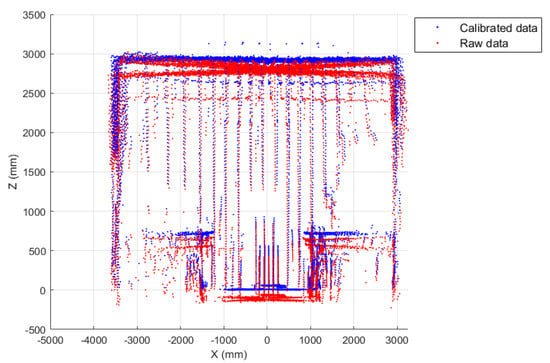

are applied to every point in the data measurement space. Figure 16 depicts a flowchart of the calibration procedure, whereas Figure 17 and Figure 18 show the results of the point correction procedure.
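Applying the correction to a whole point cloud amounts to one matrix product per cloud. The sketch below builds the three elementary rotation matrices and applies them, followed by a translation, to an N×3 array of points. The composition order (x, then y, then z), the sign convention, and the function names are assumptions made here, since the original matrices are only available as images.

```python
import numpy as np


def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def correct_cloud(points, phi_deg, theta_deg, psi_deg, t=(0.0, 0.0, 0.0)):
    """Rotate every point by R = Rz(psi) Ry(theta) Rx(phi), then translate by t."""
    phi, theta, psi = np.radians([phi_deg, theta_deg, psi_deg])
    rotation = rot_z(psi) @ rot_y(theta) @ rot_x(phi)
    return np.asarray(points, dtype=float) @ rotation.T + np.asarray(t, dtype=float)


# Synthetic ceiling profile in the yz-plane, tilted by 2 degrees about the x-axis.
y = np.linspace(0.0, 3000.0, 7)
wall = np.column_stack((np.zeros_like(y), y, 2400.0 + y * np.tan(np.radians(2.0))))
levelled = correct_cloud(wall, -2.0, 0.0, 0.0)
z_spread = levelled[:, 2].max() - levelled[:, 2].min()
print(z_spread)
```

In this toy case, rotating by the negative of the estimated tilt leaves the profile at a constant height, which is the behavior expected from the calibration step.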

Figure 16.

Flowchart of the extrinsic calibration procedure.

Figure 17.

(a) 3D reconstruction scenario from calibrated data; (b) Comparison between raw and calibrated data (yz-plane).

Figure 18.

Comparison between raw and calibrated data (xz-plane).

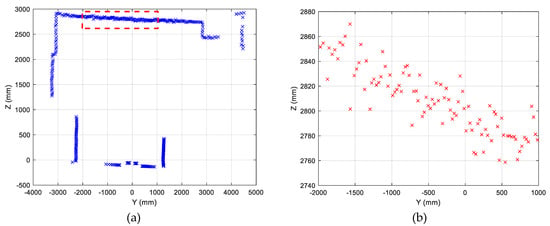

4.3. Obstacle Detection Methodology

We devised a simple and practical obstacle detection procedure that provides effective obstacle information for the approaching UAV, based on the difference between two scenarios scanned by the LiDAR and stored as point clouds (without obstacles and with obstacles in the landing area). Other techniques (for example, IMU/INS data associated with the laser scanning [43], multi-point cloud fusion [44], clustering algorithms [45], and convolutional networks [46]) are CPU-time consuming and unsuitable for UASs with low computing capabilities; we therefore followed an approach based on a simple range difference between neighboring points in scan angle [47]. The technique, developed in the MATLAB environment, is based on a co-registration between the two point clouds, obtained by linear interpolation of the single LiDAR scan for each angle value of the servo motor.
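The co-registration and differencing step can be illustrated for a single servo angle as follows. The authors worked in MATLAB; this Python sketch only mirrors the idea of resampling both scans on a common angular grid by linear interpolation and flagging large range differences. The 100 mm threshold, the function name `obstacle_mask`, and the synthetic data are assumptions made for illustration.

```python
import numpy as np


def obstacle_mask(ref_scan, new_scan, grid_deg, threshold_mm=100.0):
    """Flag grid angles where the new scan is closer than the reference.

    Each scan is a pair (angles_deg, distances_mm) with increasing angles.
    """
    ref_d = np.interp(grid_deg, ref_scan[0], ref_scan[1])
    new_d = np.interp(grid_deg, new_scan[0], new_scan[1])
    diff = ref_d - new_d               # positive where something blocks the background
    return diff > threshold_mm, diff


# Reference scan: empty field, background at 3000 mm.
ref_angles = np.arange(0.0, 91.0, 1.0)
ref_scan = (ref_angles, np.full(ref_angles.shape, 3000.0))

# Second scan: sampled at slightly different angles, obstacle at 1300 mm
# between 40 and 50 degrees.
new_angles = np.arange(0.3, 90.0, 1.0)
new_dist = np.where((new_angles >= 40.0) & (new_angles <= 50.0), 1300.0, 3000.0)
new_scan = (new_angles, new_dist)

grid = np.arange(1.0, 90.0, 1.0)
mask, diff = obstacle_mask(ref_scan, new_scan, grid)
print(grid[mask])
```

Repeating this for every servo angle yields the "difference image", whose flagged samples can then be grouped into obstacle candidates.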

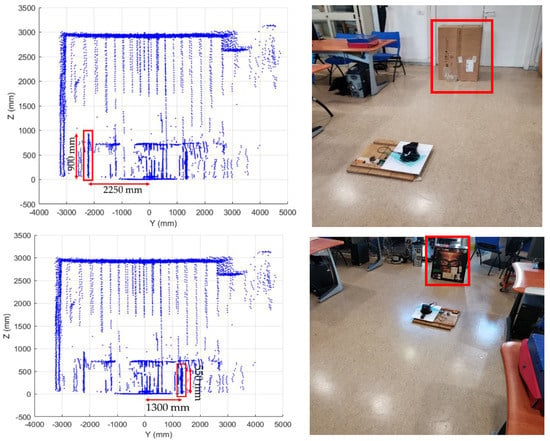

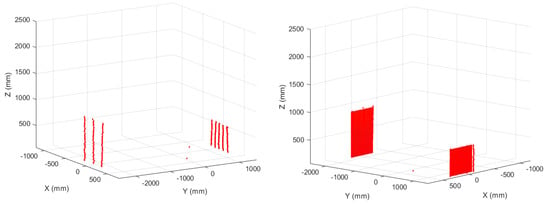

We placed two obstacles in the landing scenario, with dimensions 900 × 600 mm at a distance of 2250 mm from the SLAD system, and 550 × 550 mm at a distance of 1300 mm. Figure 19 (red boxes) shows the obstacle positions in the corrected point clouds, and Figure 20 shows the result of the “difference image” between the clean landing field and the same field with the obstacles.

Figure 19.

LiDAR mapping of the simulated landing field with two obstacles.

Figure 20.

Sample points relative to the two obstacles.

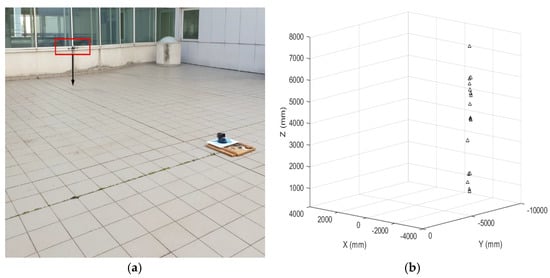

4.4. Outdoor Validation Test

In order to identify landing vehicles and map their descent trajectory, we set up a simple outdoor test by mapping a surveillance volume with the system, with the UAV approaching the landing field and executing a vertical landing. A preliminary calibration of the LiDAR was performed to verify the correctness of the alignment and the absence of geometric distortions. A sample object was used to test the calibration procedure (Figure 21). The calibration procedure described in the previous section gave the values shown in Table 5 for the inclination angles.

Figure 21.

LiDAR calibration during the outdoor tests.

Table 5.

Inclination angles of the regression line in the outdoor calibration test.

After extrinsic calibration, a UAV entered the scenario (highlighted in the red box in Figure 22a), and data were acquired from the ground system when the aircraft reached a distance from the ground of 7000 mm. Post-processed data are shown in Figure 22b.

Figure 22.

(a) UAV landing path; (b) Reconstruction from LiDAR data.

5. Conclusions and Further Work

This work presents the design and validation of a simple and cost-effective 2D LiDAR-based ground system for Safe Landing Area Determination for small UAVs, capable of providing a point cloud of the 3D surveillance volume reconstructed from 2D laser distance measurements by triangulation, using a servo motor to rotate the sensor (a low-cost RPLIDAR A1M8, developed by Slamtec Co., Shanghai, China) about the vertical axis. The system can detect the UAV as it enters the landing volume, estimate its position with respect to the landing site, and monitor the descent of the flying robot. Data collection was managed by a Raspberry Pi 3 microcomputer. A point cloud calibration procedure allowed correct reconstruction of the 3D scenario, by reducing tilt errors of the mechanical structure and geometric distortions. An obstacle detection strategy, based on simple clustering by means of differences between homologous points in a reference point cloud and in a scenario with obstacles, demonstrates the capability of the system to detect obstacles and signal them to the approaching aircraft. The application of safe landing trajectories as a function of the dynamic identification of obstacles (humans, hazards, etc.) in a surveillance volume surrounding the landing site, both in indoor and outdoor scenarios, is a significant improvement over the ordinary vertical landing path, typical of other autolanding systems onboard the aircraft. In principle, the LiDAR-equipped ground station could provide safe landing information, even for missions where the UAV is supposed to land on a moving platform. Sending landing trajectory corrections to the approaching UAV via a communication link is the main aspect to be implemented in the next stages of the research.

Ground-based safe landing area determination has the advantage of reducing the payload onboard the aircraft, which only needs a communication link to exchange information with the station located in the vicinity of the landing field. Outdoor tests verified the capability of the system to track the approaching vehicle, and derive information on the landing path and the distance from the landing field.

The detection of obstacles on the ground and/or in the surveillance volume is only a preliminary test to evaluate the system capability to de-risk the potential challenges involved in the UAV approach and landing procedure. The main objective remains to assist and give guidance to a flying robot in the terminal flight phase. Obstacle detection plays a fundamental role in the conception of the ground system, which will provide clearance signals to the approaching vehicle and transmit information about a possible safe descent.

Further developments will involve refinements of the mechanical structure (with a precision encoder for better knowledge of the angular position of the servo motor, for example) and enhancement of the calibration/reconstruction strategy, by means of filtering techniques to reduce measurement noise and possible effects of radial distortions in the LiDAR lens.

Author Contributions

Conceptualization, U.P., G.A. and S.P.; Methodology, U.P., G.A. and S.P.; Software, U.P., G.A. and A.G.; Validation, G.A., A.G. and U.P.; Formal Analysis, G.A., U.P., S.P. and A.G.; Investigation, G.A. and A.G.; Resources, G.D.C.; Data Curation, G.A., A.G. and U.P.; Writing—Original Draft Preparation, G.A., U.P. and S.P.; Writing—Review and Editing, S.P.; Supervision, Project Administration, Funding Acquisition, G.D.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the University of Naples “Parthenope” (Italy) Internal Research Project DIC (Drone Innovative Configurations, CUP I62F17000060005-DSTE 338).

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Valavanis, K.P.; Vachtsevanos, G.J. (Eds.) Handbook of Unmanned Aerial Vehicles; Springer Reference: Cham, Switzerland, 2015.

- Austin, R. Unmanned Aircraft Systems; Wiley: Hoboken, NJ, USA, 2010.

- Papa, U. Embedded Platforms for UAS Landing Path and Obstacle Detection. In Studies in Systems, Decision and Control; Springer: Cham, Switzerland, 2018; Volume 136.

- Wargo, C.A.; Church, G.C.; Glaneueski, J.; Strout, M. Unmanned Aircraft Systems (UAS) research and future analysis. In Proceedings of the 2014 IEEE Aerospace Conference, Big Sky, MT, USA, 1–8 March 2014; pp. 1–16.

- Gupta, S.G.; Ghonge, M.M.; Jawandhiya, P.M. Review of unmanned aircraft system (UAS). Int. J. Adv. Res. Comput. Eng. Technol. 2013, 2, 1646–1658.

- SESAR Joint Undertaking. European Drones Outlook Study: Technical Report; European Commission: Brussels, Belgium, 2016.

- Finn, R.L.; Wright, D. Unmanned aircraft systems: Surveillance, ethics and privacy in civil applications. Comput. Law Secur. Rev. 2012, 28, 184–194.

- NATO Standardization Agency. NATO STANAG 4670 (Edition 1) Recommended Guidance for the Training of Designated Unmanned Aerial Vehicle Operator (DUO). 2006.

- NATO Standardization Agency. NATO STANAG 4670—ATP-3.3.7, (Edition 3) Guidance for the Training of Unmanned Aircraft Systems (UAS) Operators. 2014. Available online: http://everyspec.com/NATO/NATO-STANAG/SRANAG-4670_ED-3_52054/ (accessed on 4 January 2022).

- Chapman, A.; Mesbahi, M. UAV Swarms: Models and Effective Interfaces. In Handbook of Unmanned Aerial Vehicles; Valavanis, K.P., Vachtsevanos, G.J., Eds.; Springer Reference: Cham, Switzerland, 2015; pp. 1987–2019.

- Thipphavong, D.P.; Apaza, R.; Barmore, B.; Battiste, V.; Burian, B.; Dao, Q.; Verma, S.A. Urban air mobility air-space integration concepts and considerations. In Proceedings of the 2018 Aviation Technology, Integration, and Operations Conference, Atlanta, GA, USA, 25–29 June 2018; p. 3676.

- Hasan, S. Urban Air Mobility (UAM) Market Study. 2018; p. 148. Available online: https://ntrs.nasa.gov/citations/20190026762 (accessed on 4 January 2022).

- Cotton, W.B.; Wing, D.J. Airborne trajectory management for urban air mobility. In Proceedings of the 2018 Aviation Technology, Integration, and Operations Conference, Atlanta, GA, USA, 25–29 June 2018; p. 3674.

- European Union Aviation Safety Agency (EASA). Easy Access Rules for Unmanned Aircraft Systems; © European Union: Brussels, Belgium, 2021; p. 308.

- Gautam, A.; Sujit, P.B.; Saripalli, S. A survey of autonomous landing techniques for UAVs. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 1210–1218.

- Ariante, G.; Papa, U.; Ponte, S.; Del Core, G. UAS for positioning and field mapping using LiDAR and IMU sensors data: Kalman filtering and integration. In Proceedings of the 2019 IEEE 5th International Workshop on Metrology for AeroSpace (MetroAeroSpace), Turin, Italy, 19–21 June 2019; pp. 522–527.

- Ponte, S.; Ariante, G.; Papa, U.; Del Core, G. An Embedded Platform for Positioning and Obstacle Detection for Small Unmanned Aerial Vehicles. Electronics 2020, 9, 1175.

- Ariante, G. Embedded System for Precision Positioning, Detection, and Avoidance (PODA) for Small UAS. IEEE Aerosp. Electron. Syst. Mag. 2020, 35, 38–42.

- Papa, U.; Ariante, G.; Del Core, G. UAS aided landing and obstacle detection through LIDAR-sonar data. In Proceedings of the 2018 5th IEEE International Workshop on Metrology for AeroSpace (MetroAeroSpace), Rome, Italy, 20–22 June 2018; pp. 478–483.

- Bijjahalli, S.; Sabatini, R.; Gardi, A. GNSS Performance Modelling and Augmentation for Urban Air Mobility. Sensors 2019, 19, 4209.

- Patterson, T.; McClean, S.; Morrow, P.; Parr, G.; Luo, C. Timely autonomous identification of UAV safe landing zones. Image Vis. Comput. 2014, 32, 568–578.

- Shen, Y.-F.; Rahman, Z.; Krusienski, D.; Li, J. A Vision-Based Automatic Safe Landing-Site Detection System. IEEE Trans. Aerosp. Electron. Syst. 2013, 49, 294–311.

- Kaljahi, M.A.; Shivakumara, P.; Idris, M.Y.I.; Anisi, M.H.; Lu, T.; Blumenstein, M.; Noor, N.M. An automatic zone detection system for safe landing of UAVs. Expert Syst. Appl. 2019, 122, 319–333.

- Bosch, S.; Lacroix, S.; Caballero, F. Autonomous detection of safe landing areas for an UAV from monocular images. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 5522–5527.

- Mukadam, K.; Sinh, A.; Karani, R. Detection of landing areas for unmanned aerial vehicles. In Proceedings of the 2016 International Conference on Computing Communication Control and Automation (ICCUBEA), Pune, India, 12–13 August 2016; pp. 1–5.

- Patterson, T.; McClean, S.; Morrow, P.; Parr, G. Modeling safe landing zone detection options to assist in safety critical UAV decision making. Procedia Comput. Sci. 2012, 10, 1146–1151.

- Cesetti, A.; Frontoni, E.; Mancini, A.; Zingaretti, P.; Longhi, S. A Vision-Based Guidance System for UAV Navigation and Safe Landing using Natural Landmarks. J. Intell. Robot. Syst. 2009, 57, 233–257.

- Yang, T.; Li, P.; Zhang, H.; Li, J.; Li, Z. Monocular Vision SLAM-Based UAV Autonomous Landing in Emergencies and Unknown Environments. Electronics 2018, 7, 73.

- Yan, L.; Qi, J.; Wang, M.; Wu, C.; Xin, J. A Safe Landing Site Selection Method of UAVs Based on LiDAR Point Clouds. In Proceedings of the 2020 39th Chinese Control Conference (CCC), Shenyang, China, 27–29 July 2020; pp. 6497–6502.

- Shin, Y.H.; Lee, S.; Seo, J. Autonomous safe landing-area determination for rotorcraft UAVs using multiple IR-UWB radars. Aerosp. Sci. Technol. 2017, 69, 617–624.

- Allignol, C.; Barnier, N.; Durand, N.; Blond, E. Detect and Avoid, UAV integration in the lower airspace Traffic. In Proceedings of the 7th International Conference on Research on Air Transportation (ICRAT 2016), Philadelphia, PA, USA, 15–18 June 2016; Available online: https://hal.archives-ouvertes.fr/hal-03165027 (accessed on 10 January 2022).

- Hoffmann, F.; Ritchie, M.; Fioranelli, F.; Charlish, A.; Griffiths, H. Micro-Doppler based detection and tracking of UAVs with multistatic radar. In Proceedings of the 2016 IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 1–6 May 2016; pp. 1–6.

- Blažic, A.; Kotnik, K.; Nikolovska, K.; Ožbot, M.; Pernuš, M.; Petkovic, U.; Hrušovar, N.; Verbic, M.; Ograjenšek, I.; Zdešar, A.; et al. Autonomous Landing System: Safe Landing Zone Identification. SNE Simul. Notes Eur. 2018, 28, 165–170.

- Royo, S.; Ballesta-Garcia, M. An Overview of Lidar Imaging Systems for Autonomous Vehicles. Appl. Sci. 2019, 9, 4093.

- Ariante, G.; Ponte, S.; Papa, U.; Del Core, G. Safe Landing Area Determination (SLAD) for Unmanned Aircraft Systems by using rotary LiDAR. In Proceedings of the 2021 IEEE 8th International Workshop on Metrology for AeroSpace (MetroAeroSpace), Naples, Italy, 23–25 June 2021; pp. 110–115.

- Shanghai Slamtec Co., Ltd. RPLIDAR A1. Introduction and Datasheet (Model: A1M8), Rev. 1.0. 2016. Available online: https://www.generationrobots.com/media/rplidar-a1m8-360-degree-laser-scanner-development-kit-datasheet-1.pdf (accessed on 24 January 2022).

- Debeunne, C.; Vivet, D. A Review of Visual-LiDAR Fusion based Simultaneous Localization and Mapping. Sensors 2020, 20, 2068.

- Shanghai Slamtec Co., Ltd. RPLIDAR A1. Development Kit User Manual. Rev. 1.0. 2016. Available online: http://www.dfrobot.com/image/data/DFR0315/rplidar_devkit_manual_en.pdf (accessed on 24 January 2022).

- Raspberry Pi (Trading), Ltd. Raspberry Pi Compute Module 3+. Release 1, January 2019. Available online: https://www.raspberrypi (accessed on 10 January 2022).

- DF Robot. Power Module 5A DFRobot 25 W. 2016. Available online: https://wiki.dfrobot.com/Power_Module_SKU_DFR0205_ (accessed on 10 January 2022).

- Ylonen, T. The Secure Shell (SSH) Protocol Architecture. Network Working Group, Cisco Systems, Inc. 2006. Available online: https://datatracker.ietf.org/doc/html/rfc4251 (accessed on 10 January 2022).

- Robert Bosch Power Tools GmbH. PLR 30 C/PLR 40 C Manual. 2019. Available online: https://www.libble.eu/bosch-plr-40-c/online-manual-894647/ (accessed on 24 January 2022).

- Xu, J.; Lv, J.; Pan, Z.; Liu, Y.; Chen, Y. Real-Time LiDAR Data Association Aided by IMU in High Dynamic Environment. In Proceedings of the 2018 IEEE International Conference on Real-Time Computing and Robotics (RCAR), Kandima, Maldives, 1–5 August 2018; pp. 202–205.

- Rozsa, Z.; Sziranyi, T. Obstacle Prediction for Automated Guided Vehicles Based on Point Clouds Measured by a Tilted LIDAR Sensor. IEEE Trans. Intell. Transp. Syst. 2018, 19, 2708–2720.

- Zheng, L.; Zhang, P.; Tan, J.; Li, F. The Obstacle Detection Method of UAV Based on 2D Lidar. IEEE Access 2019, 7, 163437–163448.

- Li, B. 3D fully convolutional network for vehicle detection in point cloud. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1513–1518.

- Hammer, M.; Hebel, M.; Laurenzis, M.; Arens, M. Lidar-based detection and tracking of small UAVs. In Proceedings of the SPIE 10799, Emerging Imaging and Sensing Technologies for Security and Defence III; and Unmanned Sensors, Systems, and Countermeasures, Berlin, Germany, 12 September 2018.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).