Weather Classification by Utilizing Synthetic Data

Abstract

1. Introduction

1.1. Related Work

1.2. Contributions

- Holistic Weather Classification Solution. The solution proposed in this paper is holistic in the sense that it has been built from the ground up with a focus on weather simulation;

- Simulator-based Training Dataset. Detecting the weather from a single image requires a large image dataset with varied features for training CNNs, and collecting such a dataset turns out to be a bottleneck. In this work, a synthetic dataset for training CNNs is proposed;

- Real-Time Evaluation. State-of-the-art CNNs are evaluated on real-time testing datasets to measure the accuracy of weather classification. Moreover, our classification approach differs from others in that it processes the entire image instead of only a portion of it. This allows the system to capture subtle changes in light intensity and color, which can be crucial for distinguishing between weather conditions such as cloudy and sunny.
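
To make the whole-image argument concrete, the toy sketch below works under stated assumptions: frames are tiny lists of RGB tuples, brightness is summarized with Rec. 601 luma, and `crop` stands in for a hypothetical region-of-interest crop. None of these names come from the paper. The only point is that a statistic over the full frame can separate a sunny scene from a cloudy one, while a road-only crop of the same frames cannot.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Image = List[List[Pixel]]     # rows of pixels, top row first


def mean_luminance(img: Image) -> float:
    """Average Rec. 601 luma (0.299 R + 0.587 G + 0.114 B) over all pixels."""
    total = 0.0
    count = 0
    for row in img:
        for r, g, b in row:
            total += 0.299 * r + 0.587 * g + 0.114 * b
            count += 1
    return total / count


def crop(img: Image, top: int, bottom: int) -> Image:
    """Keep rows [top, bottom): a hypothetical region-of-interest crop."""
    return img[top:bottom]


def toy_frame(sky: Pixel, road: Pixel, height: int = 4, width: int = 4) -> Image:
    """Toy frame whose upper half is sky and lower half is road."""
    half = height // 2
    return [[sky] * width if y < half else [road] * width for y in range(height)]


# Same road, different sky: a stand-in for a sunny vs. a cloudy scene.
sunny = toy_frame(sky=(200, 220, 255), road=(90, 90, 90))
cloudy = toy_frame(sky=(150, 150, 150), road=(90, 90, 90))

# Whole-frame brightness separates the two scenes...
print(mean_luminance(sunny), mean_luminance(cloudy))
# ...while a road-only crop (rows 2 and 3) sees identical pixels.
print(mean_luminance(crop(sunny, 2, 4)), mean_luminance(crop(cloudy, 2, 4)))
```

A real pipeline would instead resize the full frame to the network's input size rather than cropping it; the luma statistic here is only a proxy for the light-intensity cues the text mentions.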

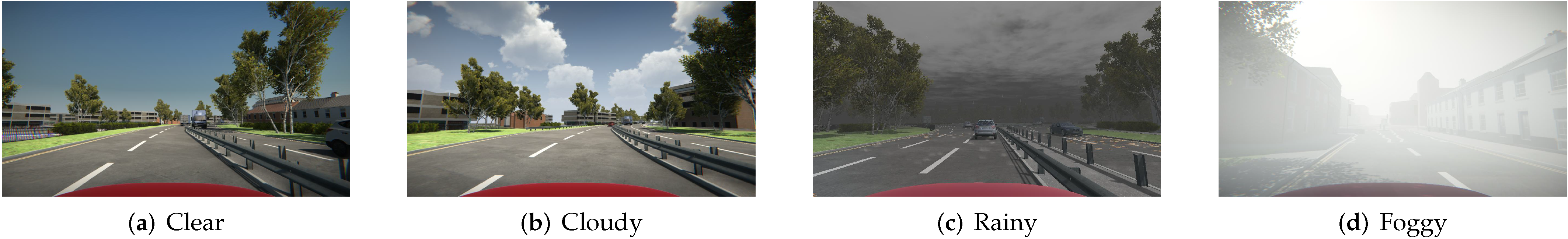

1.3. The Simulator

1.4. The Dataset

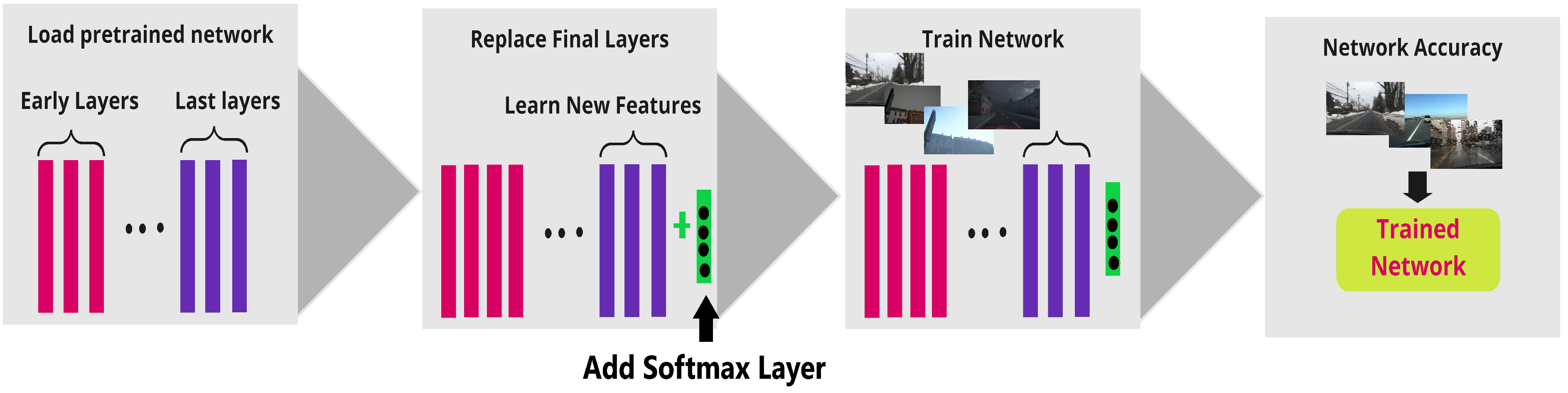

2. Weather Classification

Pretrained Models

- AlexNet. AlexNet [42] can be considered a breakthrough network that popularized deep learning over traditional machine learning approaches. With eight layers, AlexNet won the well-known ImageNet Large Scale Visual Recognition Challenge (ILSVRC) for object recognition in 2012. It is a convolutional neural network whose hidden layers comprise convolutional, pooling, fully connected, and normalization layers. A few of its standout features are the introduction of nonlinearity through ReLU activations, the use of dropout to overcome overfitting, and a network size reduced to limit overfitting.

- VGGNET. VGGNET [43], a 19-layer network, was proposed as a step forward from AlexNet and was the runner-up in the ILSVRC-2014 challenge. As an improvement, the large kernels of AlexNet's first and second convolutional layers (11 × 11 and 5 × 5) were replaced by multiple 3 × 3 kernels. These small filters allow the network to have a large number of weight layers. Nonlinearity in decision making was increased by adding 1 × 1 convolution layers.

- GoogLeNet. GoogLeNet [44], a 22-layer network, was the winner of the ILSVRC-2014 challenge. It was proposed as a variant of the Inception network to reduce the computational complexity of traditional CNNs. Its inception layers have variable receptive fields to capture sparse correlation patterns in the feature map.
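
The efficiency arguments in the VGGNET and GoogLeNet entries come down to counting convolution weights and multiply-accumulates (MACs). The sketch below does that counting with hypothetical helper names; the 64-channel width, the 28 × 28 map, and the 192 → 16 → 32 channel sizes are illustrative assumptions, and biases are ignored.

```python
def conv_weights(k: int, c_in: int, c_out: int) -> int:
    """Number of weights in one k x k convolution layer (no bias)."""
    return k * k * c_in * c_out


def conv_macs(h: int, w: int, k: int, c_in: int, c_out: int) -> int:
    """Multiply-accumulates for a stride-1, 'same'-padded k x k convolution
    that produces an h x w x c_out output."""
    return h * w * conv_weights(k, c_in, c_out)


def stacked_receptive_field(n_layers: int, k: int = 3) -> int:
    """Receptive field of n stacked stride-1 k x k convolutions."""
    return 1 + n_layers * (k - 1)


# VGG-style argument: two 3x3 layers see a 5x5 window with fewer weights.
C = 64  # illustrative channel count (assumption)
print(stacked_receptive_field(2), conv_weights(5, C, C), 2 * conv_weights(3, C, C))

# GoogLeNet-style argument: a 1x1 "reduce" layer in front of a 5x5 convolution.
# Sizes are illustrative (assumption): 28x28 map, 192 -> 32 channels, 16-channel bottleneck.
H = W = 28
direct = conv_macs(H, W, 5, 192, 32)
bottleneck = conv_macs(H, W, 1, 192, 16) + conv_macs(H, W, 5, 16, 32)
print(direct, bottleneck, round(direct / bottleneck, 1))
```

With these sizes, two stacked 3 × 3 layers cover the same 5 × 5 window with 28% fewer weights (and add an extra nonlinearity between them), while the 1 × 1 bottleneck cuts the 5 × 5 branch's MACs by close to an order of magnitude.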

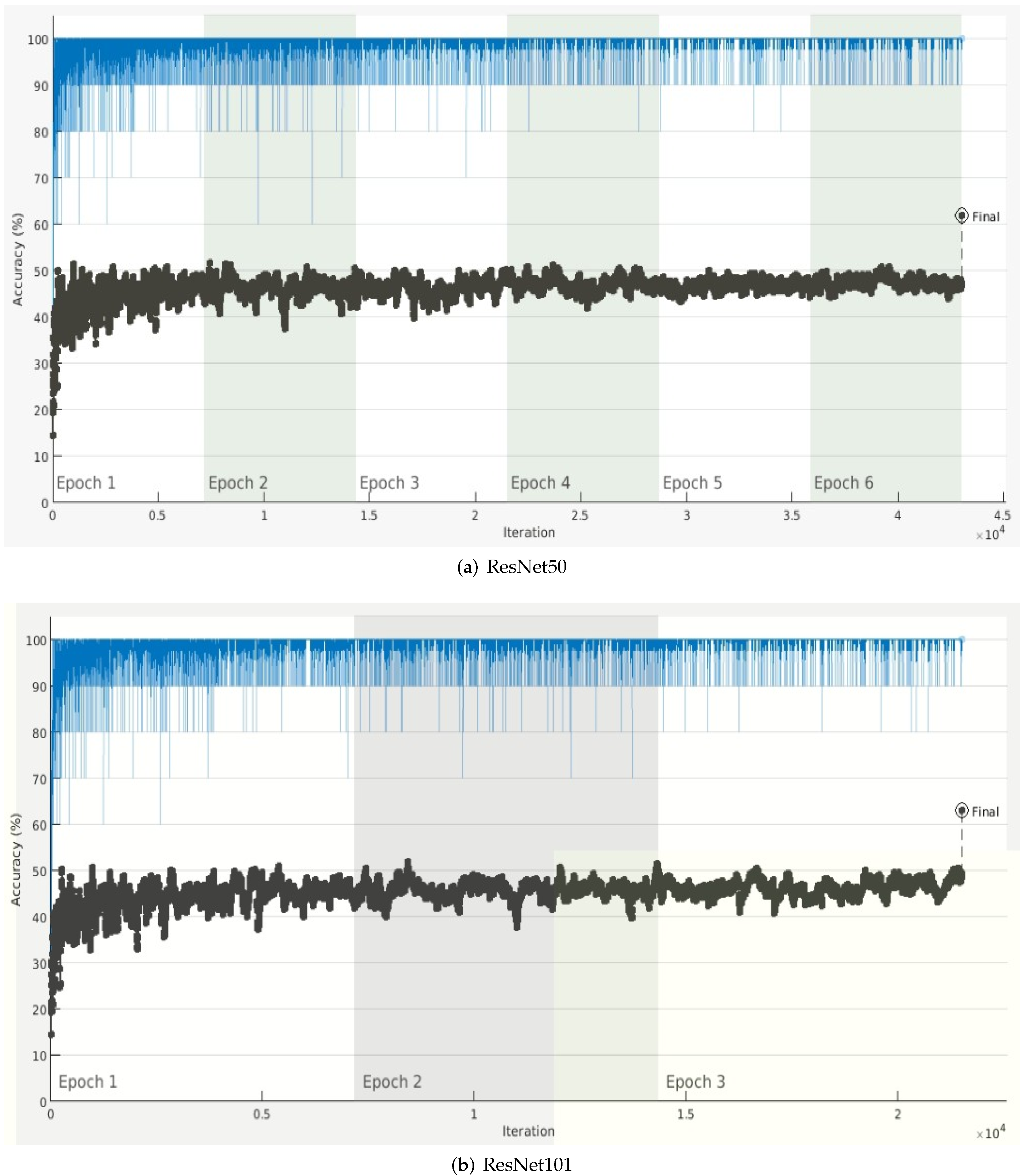

- Residual Network. Residual Network [45] was the winner of the ILSVRC-2015 challenge. It was proposed to overcome the vanishing-gradient problem in ultra-deep CNNs by introducing residual blocks. Various versions of Residual Network (ResNet) were developed by varying the number of layers: 34, 50, 101, 152, and 1202. The popular variants ResNet50 and ResNet101 were used in our experiments.
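
The residual idea can be sketched with plain Python vectors, assuming a two-layer linear/ReLU branch as a stand-in for the convolutional branch F; the helper names are hypothetical, and the post-addition ReLU of the original design is omitted for simplicity. With all branch weights at zero, the plain block outputs zeros while the residual block returns its input unchanged. A scalar model of depth (each layer multiplies by `w`) shows why the shortcut helps against vanishing gradients: the end-to-end derivative is `w ** depth` without shortcuts and `(1 + w) ** depth` with them.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


def branch(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """F(x) = W2 relu(W1 x): a stand-in for the stacked conv layers."""
    return matvec(w2, relu(matvec(w1, x)))


def plain_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Without a shortcut the block must learn the whole mapping itself."""
    return branch(x, w1, w2)


def residual_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """y = F(x) + x: the identity shortcut adds the input back."""
    return [f + xi for f, xi in zip(branch(x, w1, w2), x)]


def depth_gradient(w: float, depth: int, residual: bool) -> float:
    """d(output)/d(input) through `depth` scalar layers h -> w * h,
    optionally wrapped as h -> h + w * h (identity shortcut)."""
    per_layer = (1.0 + w) if residual else w
    return per_layer ** depth


zeros = [[0.0, 0.0], [0.0, 0.0]]
x = [0.5, -1.5]
print(plain_block(x, zeros, zeros))     # branch output only
print(residual_block(x, zeros, zeros))  # input passes through the shortcut
print(depth_gradient(0.01, 50, residual=False))  # vanishes
print(depth_gradient(0.01, 50, residual=True))   # stays of order 1
```

In a real ResNet the branch is a pair (or triple) of convolutions with batch normalization, but the shortcut arithmetic is the same elementwise addition.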

3. Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Position Paper on Road Worthiness. Available online: https://knowledge-base.connectedautomateddriving.eu/wp-content/uploads/2019/08/CARTRE-Roadworthiness-Testing-Safety-Validation-position-Paper_3_After_review.pdf (accessed on 26 January 2022).

- Cools, M.; Moons, E.; Wets, G. Assessing the impact of weather on traffic intensity. Weather Clim. Soc. 2010, 2, 60–68.

- Achari, V.P.S.; Khanam, Z.; Singh, A.K.; Jindal, A.; Prakash, A.; Kumar, N. I2UTS: An IoT based Intelligent Urban Traffic System. In Proceedings of the 2021 IEEE 22nd International Conference on High Performance Switching and Routing (HPSR), Paris, France, 7–10 June 2021; pp. 1–6.

- Kilpeläinen, M.; Summala, H. Effects of weather and weather forecasts on driver behaviour. Transp. Res. Part F Traffic Psychol. Behav. 2007, 10, 288–299.

- Lu, C.; Lin, D.; Jia, J.; Tang, C.K. Two-class weather classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3718–3725.

- Roser, M.; Moosmann, F. Classification of weather situations on single color images. IEEE Intell. Veh. Symp. 2008, 10, 798–803.

- Zhang, Z.; Ma, H. Multi-class weather classification on single images. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 4396–4400.

- Zhang, T.; Zhang, X. ShipDeNet-20: An only 20 convolution layers and <1-MB lightweight SAR ship detector. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1234–1238.

- Zhang, T.; Zhang, X.; Shi, J.; Wei, S.; Wang, J.; Li, J.; Su, H.; Zhou, Y. Balance scene learning mechanism for offshore and inshore ship detection in SAR images. IEEE Geosci. Remote Sens. Lett. 2020, 19, 4004905.

- Zhang, T.; Zhang, X.; Ke, X.; Liu, C.; Xu, X.; Zhan, X.; Wang, C.; Ahmad, I.; Zhou, Y.; Pan, D.; et al. HOG-ShipCLSNet: A novel deep learning network with hog feature fusion for SAR ship classification. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5210322.

- Zhang, T.; Zhang, X. Squeeze-and-excitation Laplacian pyramid network with dual-polarization feature fusion for ship classification in sar images. IEEE Geosci. Remote Sens. Lett. 2021, 19, 4019905.

- Zhang, T.; Zhang, X.; Ke, X. Quad-FPN: A novel quad feature pyramid network for SAR ship detection. Remote Sens. 2021, 13, 2771.

- Zhang, T.; Zhang, X. A polarization fusion network with geometric feature embedding for SAR ship classification. Pattern Recognit. 2022, 123, 108365.

- Dhiraj; Khanam, Z.; Soni, P.; Raheja, J.L. Development of 3D high definition endoscope system. In Information Systems Design and Intelligent Applications; Springer: Berlin/Heidelberg, Germany, 2016; pp. 181–189.

- Khanam, Z.; Raheja, J.L. Tracking of miniature-sized objects in 3D endoscopic vision. In Algorithms and Applications; Springer: Berlin/Heidelberg, Germany, 2018; pp. 77–88.

- Aslam, B.; Saha, S.; Khanam, Z.; Zhai, X.; Ehsan, S.; Stolkin, R.; McDonald-Maier, K. Gamma-induced degradation analysis of commercial off-the-shelf camera sensors. In Proceedings of the 2019 IEEE SENSORS, Montreal, QC, Canada, 27–30 October 2019; pp. 1–4.

- Khanam, Z.; Saha, S.; Aslam, B.; Zhai, X.; Ehsan, S.; Cazzaniga, C.; Frost, C.; Stolkin, R.; McDonald-Maier, K. Degradation measurement of kinect sensor under fast neutron beamline. In Proceedings of the 2019 IEEE Radiation Effects Data Workshop, San Antonio, TX, USA, 8–12 July 2019; pp. 1–5.

- Khanam, Z.; Aslam, B.; Saha, S.; Zhai, X.; Ehsan, S.; Stolkin, R.; McDonald-Maier, K. Gamma-Induced Image Degradation Analysis of Robot Vision Sensor for Autonomous Inspection of Nuclear Sites. IEEE Sens. J. 2021, 1.

- Gil, D.; Hernàndez-Sabaté, A.; Enconniere, J.; Asmayawati, S.; Folch, P.; Borrego-Carazo, J.; Piera, M.À. E-Pilots: A System to Predict Hard Landing During the Approach Phase of Commercial Flights. IEEE Access 2021, 10, 7489–7503.

- Hernández-Sabaté, A.; Yauri, J.; Folch, P.; Piera, M.À.; Gil, D. Recognition of the Mental Workloads of Pilots in the Cockpit Using EEG Signals. Appl. Sci. 2022, 12, 2298.

- Yousefi, A.; Amidi, Y.; Nazari, B.; Eden, U. Assessing Goodness-of-Fit in Marked Point Process Models of Neural Population Coding via Time and Rate Rescaling. Neural Comput. 2020, 32, 2145–2186.

- Azizi, A.; Tahmid, I.; Waheed, A.; Mangaokar, N.; Pu, J.; Javed, M.; Reddy, C.K.; Viswanath, B. T-Miner: A Generative Approach to Defend Against Trojan Attacks on DNN-based Text Classification. arXiv 2021, arXiv:2103.04264.

- Qian, Y.; Almazan, E.J.; Elder, J.H. Evaluating features and classifiers for road weather condition analysis. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 4403–4407.

- Minhas, S.; Hernández-Sabaté, A.; Ehsan, S.; McDonald-Maier, K.D. Effects of Non-Driving Related Tasks During Self-Driving Mode. IEEE Trans. Intell. Transp. Syst. 2020, 23, 1391–1399.

- Minhas, S.; Hernández-Sabaté, A.; Ehsan, S.; Díaz-Chito, K.; Leonardis, A.; López, A.M.; McDonald-Maier, K.D. LEE: A Photorealistic Virtual Environment for Assessing Driver-Vehicle Interactions in Self-Driving Mode. In Computer Vision—ECCV 2016 Workshops, Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Hua, G., Jégou, H., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 894–900.

- Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F.; Madhavan, V.; Darrell, T. BDD100K: A Diverse Driving Dataset for Heterogeneous Multitask Learning. arXiv 2020, arXiv:1805.04687.

- Lim, S.H.; Ryu, S.K.; Yoon, Y.H. Image Recognition of Road Surface Conditions using Polarization and Wavelet Transform. J. Korean Soc. Civ. Eng. 2007, 27, 471–477.

- Kawai, S.; Takeuchi, K.; Shibata, K.; Horita, Y. A method to distinguish road surface conditions for car-mounted camera images at night-time. In Proceedings of the 2012 12th International Conference on ITS Telecommunications, Taipei, Taiwan, 5–8 November 2012; pp. 668–672.

- Kurihata, H.; Takahashi, T.; Ide, I.; Mekada, Y.; Murase, H.; Tamatsu, Y.; Miyahara, T. Rainy weather recognition from in-vehicle camera images for driver assistance. In Proceedings of the IEEE Intelligent Vehicles Symposium, Las Vegas, NV, USA, 6–8 June 2005; pp. 205–210.

- Yan, X.; Luo, Y.; Zheng, X. Weather Recognition Based on Images Captured by Vision System in Vehicle. In Advances in Neural Networks—ISNN 2009; Yu, W., He, H., Zhang, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 390–398.

- Lu, C.; Lin, D.; Jia, J.; Tang, C. Two-Class Weather Classification. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2510–2524.

- Song, H.; Chen, Y.; Gao, Y. Weather Condition Recognition Based on Feature Extraction and K-NN. In Foundations and Practical Applications of Cognitive Systems and Information Processing; Sun, F., Hu, D., Liu, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2014; pp. 199–210.

- Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An Open Urban Driving Simulator. In Proceedings of the 1st Annual Conference on Robot Learning, Mountain View, CA, USA, 13–15 November 2017; pp. 1–16.

- Ros, G.; Sellart, L.; Materzynska, J.; Vazquez, D.; Lopez, A.M. The SYNTHIA Dataset: A Large Collection of Synthetic Images for Semantic Segmentation of Urban Scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Feng, H.; Fan, H. 3D weather simulation on 3D virtual earth. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012; pp. 543–545.

- Zhang, T.; Zhang, X. High-speed ship detection in SAR images based on a grid convolutional neural network. Remote Sens. 2019, 11, 1206.

- Zhang, T.; Zhang, X.; Shi, J.; Wei, S. HyperLi-Net: A hyper-light deep learning network for high-accurate and high-speed ship detection from synthetic aperture radar imagery. ISPRS J. Photogramm. Remote Sens. 2020, 167, 123–153.

- Zhang, T.; Zhang, X.; Shi, J.; Wei, S. Depthwise separable convolution neural network for high-speed SAR ship detection. Remote Sens. 2019, 11, 2483.

- Zhang, T.; Zhang, X.; Ke, X.; Zhan, X.; Shi, J.; Wei, S.; Pan, D.; Li, J.; Su, H.; Zhou, Y.; et al. LS-SSDD-v1.0: A deep learning dataset dedicated to small ship detection from large-scale Sentinel-1 SAR images. Remote Sens. 2020, 12, 2997.

- Zhang, T.; Zhang, X.; Liu, C.; Shi, J.; Wei, S.; Ahmad, I.; Zhan, X.; Zhou, Y.; Pan, D.; Li, J.; et al. Balance learning for ship detection from synthetic aperture radar remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2021, 182, 190–207.

- Guerra, J.C.V.; Khanam, Z.; Ehsan, S.; Stolkin, R.; McDonald-Maier, K. Weather Classification: A new multi-class dataset, data augmentation approach and comprehensive evaluations of Convolutional Neural Networks. In Proceedings of the 2018 NASA/ESA Conference on Adaptive Hardware and Systems (AHS). IEEE, Edinburgh, UK, 6–9 August 2018; pp. 305–310.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. Repvgg: Making vgg-style convnets great again. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13733–13742.

| Class | Training | Testing |
|---|---|---|
| Clear | 9613 | 1764 |
| Cloudy | 38,949 | 1677 |
| Foggy | 29,914 | 5 |
| Rainy | 29,857 | 396 |
| Total | 108,333 | 3842 |
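
The table above shows a marked class imbalance, especially in the testing split (5 foggy images out of 3842). The short sketch below quantifies it. The counts are copied from the table; the inverse-frequency weighting `N / (K * n_c)` is a common remedy for imbalance shown only as an illustration, not something this section states the authors used, and the helper names are hypothetical.

```python
from typing import Dict

# Per-class image counts copied from the dataset table.
TRAIN: Dict[str, int] = {"Clear": 9613, "Cloudy": 38949, "Foggy": 29914, "Rainy": 29857}
TEST: Dict[str, int] = {"Clear": 1764, "Cloudy": 1677, "Foggy": 5, "Rainy": 396}


def shares(counts: Dict[str, int]) -> Dict[str, float]:
    """Fraction of the split that each class makes up."""
    total = sum(counts.values())
    return {c: n / total for c, n in counts.items()}


def balanced_weights(counts: Dict[str, int]) -> Dict[str, float]:
    """Inverse-frequency weights w_c = N / (K * n_c) (illustrative assumption)."""
    total = sum(counts.values())
    k = len(counts)
    return {c: total / (k * n) for c, n in counts.items()}


for name, counts in (("train", TRAIN), ("test", TEST)):
    print(name, sum(counts.values()), {c: round(s, 3) for c, s in shares(counts).items()})
print({c: round(w, 2) for c, w in balanced_weights(TRAIN).items()})
```

With this weighting each class contributes the same total weight `N / K` to the loss, so the under-represented Clear class in the training split would count roughly four times more per image than Cloudy.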

| Architecture | mAP | Trainable Parameters | Time (min) |
|---|---|---|---|
| AlexNet | 0.6856 ± 0.012 | 61M | 986 |
| VGGNET | 0.7334 ± 0.023 | 138M | 2930 |
| GoogLeNet | 0.6034 ± 0.009 | 7M | 618 |
| ResNet50 | 0.6183 ± 0.025 | 26M | 1020 |
| ResNet101 | 0.63 ± 0.006 | 44M | 1242 |
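
The table reports mAP without defining it in this section. One common definition for multi-class classification, assumed in this sketch, is one-vs-rest average precision (the mean of precision@k over the ranks k of the positive samples) averaged over classes. Function names and the toy scores are hypothetical; ties are broken by the stable sort order, and a class with no positive test samples gets an AP of 0.0, a choice worth revisiting given only 5 foggy test images.

```python
from typing import Dict, List


def average_precision(scores: List[float], labels: List[int]) -> float:
    """Non-interpolated AP: mean of precision@k over the ranks k of positives."""
    ranked = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    hits = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


def mean_average_precision(scores: Dict[str, List[float]], y_true: List[str]) -> float:
    """One-vs-rest AP for every class, averaged over classes."""
    aps = []
    for cls, cls_scores in scores.items():
        labels = [1 if y == cls else 0 for y in y_true]
        aps.append(average_precision(cls_scores, labels))
    return sum(aps) / len(aps)


# Toy example: four images, per-class scores from a hypothetical classifier.
y_true = ["Clear", "Cloudy", "Clear", "Rainy"]
scores = {
    "Clear": [0.9, 0.7, 0.6, 0.1],
    "Cloudy": [0.05, 0.7, 0.3, 0.2],
    "Rainy": [0.05, 0.1, 0.1, 0.7],
}
print(mean_average_precision(scores, y_true))
```

Other protocols (interpolated AP, or micro-averaging over all samples) give different numbers, so the definition should be fixed before comparing the values in the table with results reported elsewhere.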

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Minhas, S.; Khanam, Z.; Ehsan, S.; McDonald-Maier, K.; Hernández-Sabaté, A. Weather Classification by Utilizing Synthetic Data. Sensors 2022, 22, 3193. https://doi.org/10.3390/s22093193
