Markerless 3D Skeleton Tracking Algorithm by Merging Multiple Inaccurate Skeleton Data from Multiple RGB-D Sensors

Abstract

1. Introduction

1.1. Research Background

1.2. Related Works

2. Materials and Methods

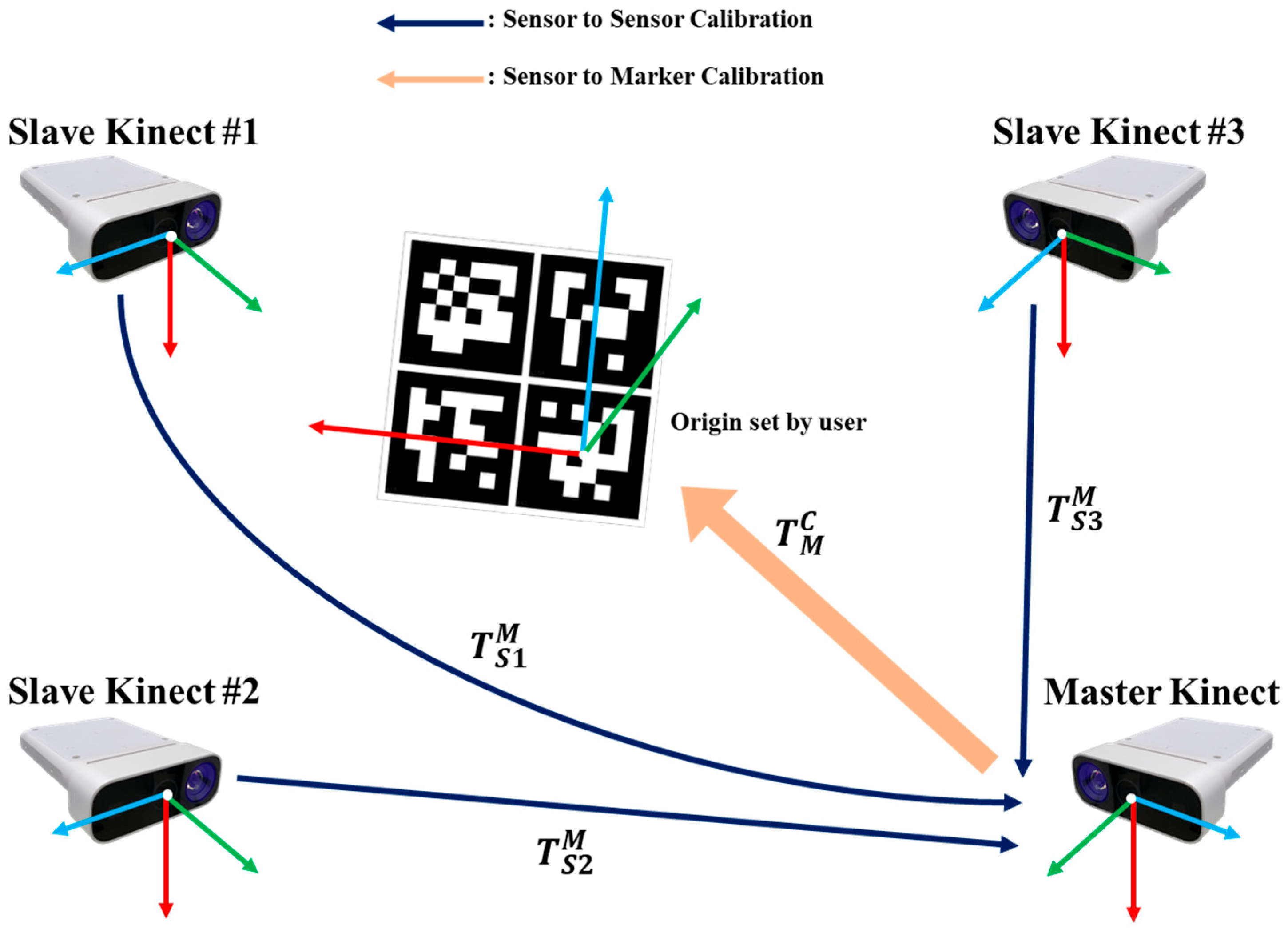

2.1. Calibration for Coordinate Systems of Sensors

2.1.1. Sensor-to-Sensor Calibration

2.1.2. Sensor-to-Marker Calibration

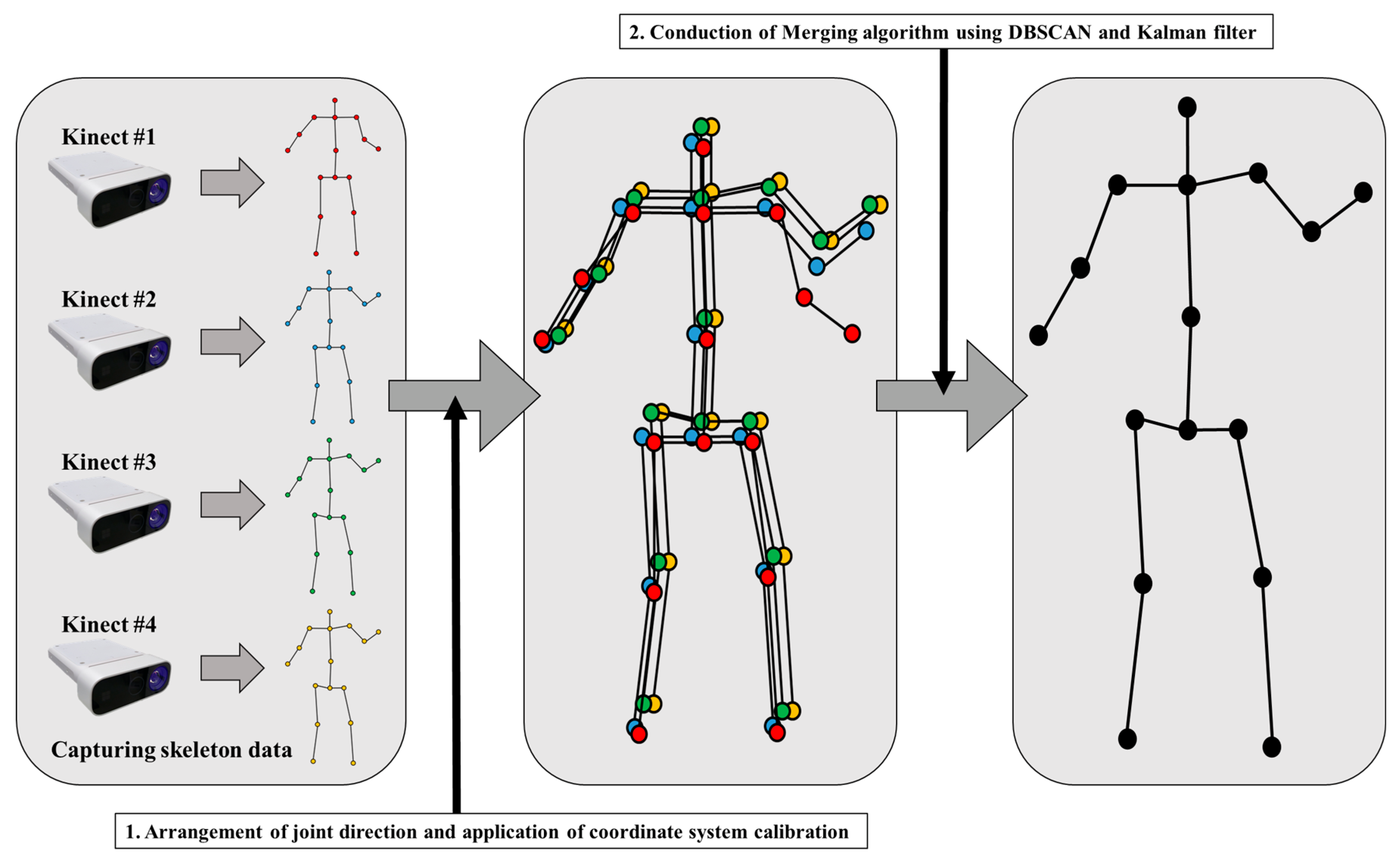

2.2. Skeleton Merging Algorithm

2.2.1. Arrangement of Skeleton to Correct the Misoriented Joints

2.2.2. Skeleton Merging and Noise Filtering Using DBSCAN

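| Algorithm 1: DBSCAN |
| :-- |
| **Input:** candidates set, searching area, minimum number of neighboring data |
| **Output:** labels |
| number of clusters ← 0 |
| **foreach** candidate *x* in the candidates set **do** |
| &emsp;label(*x*) ← UNASSIGNED |
| **end** |
| **foreach** candidate *x* in the candidates set **do** |
| &emsp;**if** label(*x*) = UNASSIGNED **then** |
| &emsp;&emsp;neighbors = SCAN(*x*, searching area) // searching neighbors of *x* |
| &emsp;&emsp;**if** number of neighbors ≥ minimum number of neighboring data **then** |
| &emsp;&emsp;&emsp;number of clusters ← number of clusters + 1 |
| &emsp;&emsp;&emsp;label(*x*) ← number of clusters |
| &emsp;&emsp;&emsp;**foreach** *y* in neighbors **do** |
| &emsp;&emsp;&emsp;&emsp;**if** label(*y*) = UNASSIGNED **then** |
| &emsp;&emsp;&emsp;&emsp;&emsp;label(*y*) ← number of clusters |
| &emsp;&emsp;&emsp;&emsp;&emsp;neighbors(*y*) = SCAN(*y*, searching area) |
| &emsp;&emsp;&emsp;&emsp;&emsp;**if** number of neighbors(*y*) ≥ minimum number of neighboring data **then** |
| &emsp;&emsp;&emsp;&emsp;&emsp;&emsp;neighbors ← neighbors ∪ neighbors(*y*) |
| &emsp;&emsp;&emsp;&emsp;&emsp;**end** |
| &emsp;&emsp;&emsp;&emsp;**end** |
| &emsp;&emsp;&emsp;**end** |
| &emsp;&emsp;**else** |
| &emsp;&emsp;&emsp;label(*x*) ← NOISE |
| &emsp;&emsp;**end** |
| &emsp;**end** |
| **end** |
| **return** labels |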

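| Algorithm 2: SCAN |
| :-- |
| **Input:** data point *x*, searching area |
| **Output:** neighbors |
| neighbors ← ∅ |
| **foreach** candidate *p* in the candidates set **do** |
| &emsp;**if** Euclidean_distance(*x*, *p*) ≤ searching area **then** |
| &emsp;&emsp;neighbors ← neighbors ∪ {*p*} |
| &emsp;**end** |
| **end** |
| **return** neighbors |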
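Algorithms 1 and 2 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `scan`, `dbscan`, and `merge_joint` are hypothetical, each joint's candidate positions from the sensors are assumed to be 3D tuples already expressed in one common coordinate system, and, as in textbook DBSCAN, a candidate first marked as noise is relabeled as a border point when a later cluster reaches it (the extracted pseudocode only shows the UNASSIGNED check). The final merge step, which averages the largest cluster, is likewise an assumption about how the surviving candidates are combined.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]

UNASSIGNED = 0  # label of a candidate that has not been visited yet
NOISE = -1      # label of a candidate that belongs to no cluster


def scan(points: Sequence[Point], x: Point, eps: float) -> List[int]:
    """SCAN: indices of all candidates within distance eps of x (x itself included)."""
    return [i for i, p in enumerate(points) if math.dist(x, p) <= eps]


def dbscan(points: Sequence[Point], eps: float, min_pts: int) -> List[int]:
    """DBSCAN: cluster label (1, 2, ...) or NOISE for every candidate."""
    labels = [UNASSIGNED] * len(points)
    n_clusters = 0
    for i, x in enumerate(points):
        if labels[i] != UNASSIGNED:
            continue
        neighbors = scan(points, x, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE
            continue
        n_clusters += 1
        labels[i] = n_clusters
        k = 0
        while k < len(neighbors):  # the list grows while core points are expanded
            j = neighbors[k]
            k += 1
            if labels[j] == NOISE:
                labels[j] = n_clusters  # border point reached from a core point
            if labels[j] != UNASSIGNED:
                continue
            labels[j] = n_clusters
            neighbors_j = scan(points, points[j], eps)
            if len(neighbors_j) >= min_pts:
                neighbors.extend(neighbors_j)  # j is a core point: keep expanding
    return labels


def merge_joint(candidates: Sequence[Point], eps: float, min_pts: int) -> Optional[Point]:
    """Hypothetical merge step: mean of the largest cluster, or None if all are noise."""
    labels = dbscan(candidates, eps, min_pts)
    cluster_ids = sorted(set(labels) - {NOISE})
    if not cluster_ids:
        return None
    largest = max(cluster_ids, key=labels.count)
    members = [p for p, lab in zip(candidates, labels) if lab == largest]
    return tuple(sum(p[a] for p in members) / len(members) for a in range(3))


# Four sensors report the same wrist; the last estimate is an outlier.
wrist = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0), (500.0, 500.0, 500.0)]
print(dbscan(wrist, eps=20.0, min_pts=2))       # [1, 1, 1, -1]
print(merge_joint(wrist, eps=20.0, min_pts=2))  # approximately (3.33, 3.33, 0.0)
```

In this sketch `min_pts` counts the query point itself, because SCAN returns every candidate within the searching area, including *x*. With only a handful of sensors per joint, a small `min_pts` is needed for any cluster to form at all.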

2.2.3. Joint Position Tracking Using Kalman Filter
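Section 2.2.3 tracks each merged joint position with a Kalman filter. The state model and noise settings are not given in this excerpt, so the following is only a minimal sketch, assuming a constant-velocity model applied independently to the x, y and z coordinates. The class names `AxisKalman` and `JointTracker`, the noise values `q` and `r`, positions in millimetres, and a 30 fps frame interval are all illustrative assumptions. A frame with no merged position (for example, when every candidate is rejected as noise) is handled by running the prediction step only.

```python
from typing import Optional, Sequence, Tuple


class AxisKalman:
    """Constant-velocity Kalman filter for one coordinate; state = [position, velocity]."""

    def __init__(self, q: float, r: float) -> None:
        self.q = q                         # process-noise intensity (assumed value)
        self.r = r                         # measurement-noise variance (assumed value)
        self.x = [0.0, 0.0]                # state estimate
        self.P = [[1e6, 0.0], [0.0, 1e6]]  # large initial uncertainty

    def predict(self, dt: float) -> None:
        p, v = self.x
        self.x = [p + dt * v, v]
        (a, b), (c, d) = self.P
        q = self.q
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]] and white-acceleration Q
        self.P = [
            [a + dt * (b + c) + dt * dt * d + q * dt**3 / 3, b + dt * d + q * dt**2 / 2],
            [c + dt * d + q * dt**2 / 2, d + q * dt],
        ]

    def update(self, z: float) -> None:
        (a, b), (c, d) = self.P
        s = a + self.r         # innovation variance (H = [1, 0])
        k0, k1 = a / s, c / s  # Kalman gain
        y = z - self.x[0]      # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]


class JointTracker:
    """Tracks one 3D joint with three independent per-axis filters."""

    def __init__(self, q: float = 500.0, r: float = 25.0) -> None:
        self.axes = [AxisKalman(q, r) for _ in range(3)]

    def step(self, z: Optional[Sequence[float]], dt: float) -> Tuple[float, ...]:
        for i, f in enumerate(self.axes):
            f.predict(dt)
            if z is not None:  # skip the update when no merged position exists
                f.update(z[i])
        return tuple(f.x[0] for f in self.axes)


tracker = JointTracker()
for _ in range(30):
    estimate = tracker.step((100.0, 200.0, 300.0), dt=1 / 30)
print(estimate)                           # should be close to (100, 200, 300)
print(tracker.step(None, dt=1 / 30))      # prediction only for a missing frame
```

A larger `q` lets the estimate follow fast motions such as wrist swings more closely, while a larger `r` smooths more aggressively; how the original work balances the two is not stated here.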

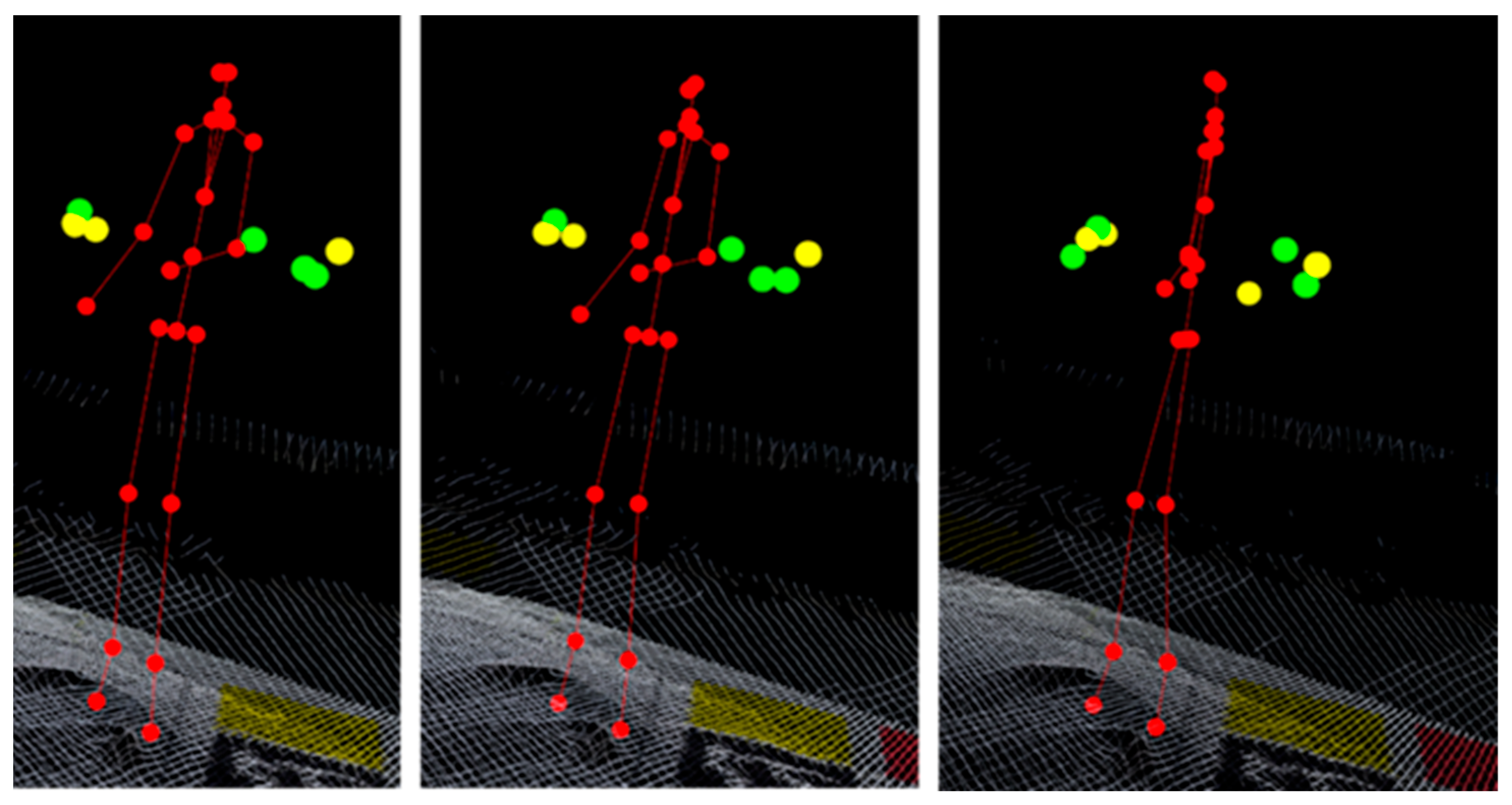

2.3. Experiment Setting

3. Experimental Results

3.1. Result of Performance Improvement in the Merging Algorithm

3.2. Result of Different Searching Areas of DBSCAN

3.3. Result According to TNOS

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Appendix A

| Group | Joint (RMSE) | A1 | A3_5 | A3_10 | A3_15 | A3_20 | A4_5 | A4_10 | A4_15 | A4_20 | A5_5 | A5_10 | A5_15 | A5_20 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| G1 | Pelvis | 30.40 | 31.09 | 31.09 | 31.09 | 31.09 | 32.27 | 30.40 | 30.40 | 30.40 | 32.05 | 30.28 | 30.28 | 30.28 |
| | Spine Navel | 45.32 | 47.72 | 47.72 | 47.72 | 47.72 | 48.08 | 45.32 | 45.32 | 45.32 | 47.91 | 45.15 | 45.16 | 45.16 |
| | Neck | 39.13 | 35.34 | 35.34 | 35.34 | 35.34 | 32.66 | 39.16 | 39.14 | 39.14 | 30.84 | 38.69 | 38.68 | 38.68 |
| | L Shoulder | 39.11 | 33.58 | 33.58 | 33.58 | 33.58 | 31.27 | 36.55 | 39.11 | 39.11 | 30.10 | 35.48 | 38.48 | 38.48 |
| | L Elbow | 53.92 | 40.58 | 40.58 | 40.58 | 40.58 | 39.46 | 39.15 | 40.94 | 43.75 | 37.09 | 37.84 | 39.66 | 42.59 |
| | L Wrist | 80.86 | 65.02 | 65.02 | 65.02 | 65.02 | 65.12 | 59.06 | 57.98 | 59.96 | 56.85 | 54.25 | 55.95 | 57.83 |
| | R Shoulder | 33.82 | 36.67 | 36.67 | 36.67 | 36.67 | 38.44 | 32.88 | 33.90 | 33.86 | 37.35 | 31.80 | 33.11 | 33.05 |
| | R Elbow | 57.42 | 41.22 | 41.22 | 41.22 | 41.22 | 40.35 | 34.60 | 35.38 | 39.37 | 36.49 | 33.29 | 33.53 | 37.51 |
| | R Wrist | 102.76 | 72.64 | 72.64 | 72.64 | 72.64 | 74.84 | 59.57 | 58.64 | 57.83 | 68.68 | 54.33 | 56.34 | 56.33 |
| | L Hip | 33.17 | 36.36 | 36.36 | 36.36 | 36.36 | 38.89 | 33.17 | 33.17 | 33.17 | 38.43 | 33.00 | 33.00 | 33.00 |
| | L Knee | 24.80 | 24.13 | 24.13 | 24.13 | 24.13 | 24.16 | 24.77 | 24.79 | 24.80 | 23.74 | 24.34 | 24.37 | 24.38 |
| | L Ankle | 31.51 | 31.67 | 31.67 | 31.67 | 31.67 | 32.18 | 31.02 | 31.30 | 31.51 | 31.49 | 30.38 | 30.61 | 30.84 |
| | R Hip | 29.36 | 31.79 | 31.79 | 31.79 | 31.79 | 31.57 | 29.35 | 29.36 | 29.36 | 31.27 | 29.17 | 29.17 | 29.17 |
| | R Knee | 22.83 | 22.91 | 22.91 | 22.91 | 22.91 | 23.16 | 22.82 | 22.82 | 22.83 | 22.85 | 22.51 | 22.51 | 22.51 |
| | R Ankle | 29.44 | 28.26 | 28.26 | 28.26 | 28.26 | 28.53 | 28.77 | 28.93 | 29.48 | 27.88 | 28.18 | 28.16 | 28.86 |
| | Head | 38.32 | 34.42 | 34.42 | 34.42 | 34.42 | 32.10 | 38.28 | 38.32 | 38.32 | 30.49 | 37.53 | 37.62 | 37.61 |
| G2 | Pelvis | 35.32 | 35.87 | 35.87 | 35.87 | 35.87 | 36.74 | 35.29 | 35.32 | 35.32 | 32.93 | 32.71 | 32.75 | 32.75 |
| | Spine Navel | 48.80 | 46.31 | 46.31 | 46.31 | 46.31 | 56.12 | 48.51 | 48.80 | 48.80 | 50.61 | 46.61 | 46.92 | 46.92 |
| | Neck | 50.75 | 49.68 | 49.68 | 49.68 | 49.68 | 51.88 | 50.33 | 50.76 | 50.76 | 47.64 | 48.06 | 48.52 | 48.52 |
| | L Shoulder | 49.58 | 45.94 | 45.94 | 45.94 | 45.94 | 47.87 | 44.89 | 49.47 | 49.57 | 42.72 | 40.78 | 46.22 | 46.34 |
| | L Elbow | 73.62 | 56.68 | 56.68 | 56.68 | 56.68 | 57.10 | 53.40 | 56.65 | 64.31 | 47.99 | 45.59 | 50.11 | 59.17 |
| | L Wrist | 114.99 | 91.85 | 91.85 | 91.85 | 91.85 | 91.57 | 82.09 | 80.16 | 85.49 | 83.13 | 69.29 | 69.07 | 75.93 |
| | R Shoulder | 46.74 | 49.71 | 49.71 | 49.71 | 49.71 | 54.02 | 45.10 | 46.73 | 46.74 | 48.23 | 41.07 | 43.33 | 43.33 |
| | R Elbow | 72.43 | 60.63 | 60.63 | 60.63 | 60.63 | 67.36 | 59.09 | 55.69 | 60.33 | 56.17 | 48.71 | 49.07 | 54.37 |
| | R Wrist | 121.59 | 95.22 | 95.22 | 95.22 | 95.22 | 98.57 | 86.13 | 81.28 | 78.73 | 95.07 | 73.30 | 70.18 | 69.41 |
| | L Hip | 37.93 | 37.87 | 37.87 | 37.87 | 37.87 | 39.95 | 37.81 | 37.93 | 37.93 | 34.94 | 34.92 | 35.08 | 35.08 |
| | L Knee | 44.43 | 44.87 | 44.87 | 44.87 | 44.87 | 45.94 | 44.31 | 44.37 | 44.42 | 40.24 | 39.32 | 39.37 | 39.41 |
| | L Ankle | 43.33 | 43.29 | 43.29 | 43.29 | 43.29 | 43.67 | 42.94 | 42.99 | 43.12 | 38.21 | 37.83 | 37.89 | 37.99 |
| | R Hip | 35.16 | 35.91 | 35.91 | 35.91 | 35.91 | 37.77 | 35.09 | 35.17 | 35.16 | 33.46 | 32.02 | 32.18 | 32.17 |
| | R Knee | 40.94 | 41.43 | 41.43 | 41.43 | 41.43 | 42.74 | 40.82 | 40.82 | 40.93 | 36.45 | 35.41 | 35.43 | 35.49 |
| | R Ankle | 40.84 | 40.18 | 40.18 | 40.18 | 40.18 | 40.44 | 39.85 | 39.96 | 40.10 | 34.67 | 34.47 | 34.59 | 34.68 |
| | Head | 52.50 | 52.72 | 52.72 | 52.72 | 52.72 | 55.28 | 52.09 | 52.48 | 52.50 | 49.92 | 49.43 | 49.91 | 49.94 |
| G3 | Pelvis | 39.07 | 39.38 | 39.38 | 39.38 | 39.38 | 39.62 | 39.07 | 39.07 | 39.07 | 38.39 | 37.86 | 37.86 | 37.86 |
| | Spine Navel | 49.01 | 49.06 | 49.06 | 49.06 | 49.06 | 50.01 | 49.00 | 49.01 | 49.01 | 49.76 | 48.22 | 48.23 | 48.23 |
| | Neck | 45.79 | 47.42 | 47.42 | 47.42 | 47.42 | 48.69 | 45.73 | 45.75 | 45.79 | 45.16 | 44.71 | 44.73 | 44.77 |
| | L Shoulder | 44.68 | 43.02 | 43.02 | 43.02 | 43.02 | 42.65 | 42.37 | 44.65 | 44.66 | 41.43 | 40.33 | 43.07 | 43.08 |
| | L Elbow | 84.43 | 47.26 | 47.26 | 47.26 | 47.26 | 44.03 | 43.43 | 50.82 | 58.81 | 41.34 | 40.99 | 48.08 | 56.36 |
| | L Wrist | 145.80 | 104.66 | 104.66 | 104.66 | 104.66 | 90.87 | 70.54 | 75.03 | 82.32 | 92.94 | 66.62 | 72.43 | 79.77 |
| | R Shoulder | 36.63 | 42.41 | 42.41 | 42.41 | 42.41 | 45.17 | 35.53 | 36.55 | 36.62 | 42.95 | 33.42 | 34.72 | 34.77 |
| | R Elbow | 78.15 | 46.72 | 46.72 | 46.72 | 46.72 | 43.49 | 40.45 | 44.93 | 51.04 | 38.73 | 36.15 | 39.05 | 45.78 |
| | R Wrist | 145.46 | 98.59 | 98.59 | 98.59 | 98.59 | 97.68 | 60.66 | 62.45 | 69.23 | 104.87 | 56.75 | 58.79 | 64.79 |
| | L Hip | 40.82 | 41.27 | 41.27 | 41.27 | 41.27 | 42.92 | 40.82 | 40.82 | 40.82 | 41.73 | 39.52 | 39.52 | 39.52 |
| | L Knee | 44.14 | 39.60 | 39.60 | 39.60 | 39.60 | 39.58 | 39.91 | 40.79 | 41.52 | 38.07 | 37.96 | 38.76 | 39.32 |
| | L Ankle | 45.07 | 41.78 | 41.78 | 41.78 | 41.78 | 42.07 | 41.21 | 41.55 | 42.22 | 39.05 | 38.22 | 38.41 | 38.78 |
| | R Hip | 38.65 | 38.92 | 38.92 | 38.92 | 38.92 | 39.70 | 38.65 | 38.65 | 38.65 | 38.33 | 37.25 | 37.25 | 37.25 |
| | R Knee | 31.63 | 31.27 | 31.27 | 31.27 | 31.27 | 31.69 | 31.54 | 31.55 | 31.59 | 29.35 | 29.14 | 29.15 | 29.18 |
| | R Ankle | 33.99 | 33.07 | 33.07 | 33.07 | 33.07 | 33.20 | 33.57 | 33.70 | 33.80 | 29.89 | 30.36 | 30.45 | 30.53 |
| | Head | 45.89 | 49.01 | 49.01 | 49.01 | 49.01 | 50.92 | 45.82 | 45.81 | 45.88 | 47.31 | 44.59 | 44.58 | 44.64 |

| Group | Joint (RMSE) | A1 | A3_5 | A3_10 | A3_15 | A3_20 | A4_5 | A4_10 | A4_15 | A4_20 | A5_5 | A5_10 | A5_15 | A5_20 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| G4 | Pelvis | 33.94 | 35.27 | 35.27 | 35.27 | 35.27 | 37.49 | 34.01 | 33.94 | 33.94 | 36.93 | 33.46 | 33.37 | 33.37 |
| | Spine Navel | 46.02 | 47.81 | 47.81 | 47.81 | 47.81 | 48.82 | 45.66 | 46.02 | 46.02 | 49.95 | 45.09 | 45.47 | 45.47 |
| | Neck | 41.99 | 38.10 | 38.10 | 38.10 | 38.10 | 39.04 | 41.75 | 41.99 | 41.99 | 39.78 | 40.72 | 40.98 | 40.98 |
| | L Shoulder | 44.50 | 39.82 | 39.82 | 39.82 | 39.82 | 42.27 | 39.69 | 43.84 | 44.29 | 41.53 | 38.12 | 42.33 | 42.75 |
| | L Elbow | 74.18 | 51.85 | 51.85 | 51.85 | 51.85 | 50.64 | 45.99 | 48.18 | 54.01 | 47.88 | 43.99 | 47.03 | 53.00 |
| | L Wrist | 109.44 | 81.04 | 81.04 | 81.04 | 81.04 | 75.88 | 65.34 | 63.65 | 67.16 | 69.52 | 59.35 | 61.87 | 66.62 |
| | R Shoulder | 38.45 | 39.96 | 39.96 | 39.96 | 39.96 | 43.66 | 37.29 | 38.08 | 38.27 | 42.65 | 35.48 | 36.32 | 36.50 |
| | R Elbow | 64.24 | 48.17 | 48.17 | 48.17 | 48.17 | 49.82 | 43.17 | 44.13 | 47.34 | 43.47 | 40.92 | 41.26 | 44.08 |
| | R Wrist | 111.15 | 78.47 | 78.47 | 78.47 | 78.47 | 77.60 | 62.44 | 60.09 | 63.11 | 76.96 | 56.12 | 56.97 | 60.64 |
| | L Hip | 37.48 | 38.75 | 38.75 | 38.75 | 38.75 | 41.67 | 36.92 | 37.47 | 37.48 | 40.56 | 36.18 | 36.69 | 36.69 |
| | L Knee | 66.81 | 42.99 | 42.99 | 42.99 | 42.99 | 43.34 | 40.91 | 42.25 | 44.54 | 42.65 | 40.54 | 41.50 | 43.36 |
| | L Ankle | 76.47 | 50.94 | 50.94 | 50.94 | 50.94 | 48.78 | 47.76 | 49.60 | 51.21 | 45.33 | 45.32 | 47.26 | 48.59 |
| | R Hip | 33.06 | 34.37 | 34.37 | 34.37 | 34.37 | 37.09 | 33.49 | 33.05 | 33.06 | 36.62 | 32.82 | 32.28 | 32.28 |
| | R Knee | 67.24 | 40.02 | 40.02 | 40.02 | 40.02 | 40.73 | 37.73 | 38.75 | 40.96 | 39.72 | 38.23 | 38.71 | 40.64 |
| | R Ankle | 85.21 | 53.90 | 53.90 | 53.90 | 53.90 | 51.48 | 49.38 | 50.65 | 52.72 | 46.93 | 47.35 | 49.19 | 50.37 |
| | Head | 42.40 | 38.29 | 38.29 | 38.29 | 38.29 | 39.30 | 42.02 | 42.39 | 42.40 | 39.23 | 40.71 | 41.07 | 41.08 |
| G5 | Pelvis | 32.73 | 34.34 | 34.34 | 34.34 | 34.34 | 34.73 | 32.73 | 32.73 | 32.73 | 32.70 | 31.84 | 31.84 | 31.84 |
| | Spine Navel | 45.16 | 44.28 | 44.28 | 44.28 | 44.28 | 45.60 | 44.99 | 45.16 | 45.16 | 49.05 | 44.61 | 44.78 | 44.78 |
| | Neck | 51.96 | 49.37 | 49.37 | 49.37 | 49.37 | 49.56 | 51.96 | 51.96 | 51.96 | 49.23 | 51.44 | 51.39 | 51.39 |
| | L Shoulder | 48.14 | 44.49 | 44.49 | 44.49 | 44.49 | 43.55 | 44.00 | 48.15 | 48.14 | 44.16 | 42.96 | 46.68 | 46.67 |
| | L Elbow | 80.28 | 52.75 | 52.75 | 52.75 | 52.75 | 53.27 | 54.56 | 61.44 | 70.22 | 48.24 | 54.03 | 57.10 | 66.28 |
| | L Wrist | 121.88 | 88.16 | 88.16 | 88.16 | 88.16 | 85.41 | 76.12 | 76.53 | 82.84 | 80.97 | 74.79 | 76.55 | 79.22 |
| | R Shoulder | 42.72 | 49.08 | 49.08 | 49.08 | 49.08 | 51.77 | 43.00 | 42.72 | 42.72 | 51.69 | 40.97 | 41.13 | 41.13 |
| | R Elbow | 65.98 | 46.88 | 46.88 | 46.88 | 46.88 | 48.98 | 44.23 | 46.66 | 52.32 | 44.32 | 41.33 | 41.96 | 47.58 |
| | R Wrist | 120.28 | 88.29 | 88.29 | 88.29 | 88.29 | 82.33 | 71.00 | 72.44 | 76.62 | 77.43 | 68.48 | 70.13 | 72.80 |
| | L Hip | 35.70 | 37.43 | 37.43 | 37.43 | 37.43 | 39.34 | 35.71 | 35.70 | 35.70 | 37.95 | 34.47 | 34.47 | 34.47 |
| | L Knee | 54.21 | 46.71 | 46.71 | 46.71 | 46.71 | 47.37 | 46.60 | 47.43 | 48.25 | 42.63 | 42.49 | 43.23 | 43.79 |
| | L Ankle | 57.21 | 52.51 | 52.51 | 52.51 | 52.51 | 52.52 | 51.53 | 51.87 | 53.02 | 47.55 | 46.92 | 47.15 | 48.12 |
| | R Hip | 33.16 | 31.73 | 31.73 | 31.73 | 31.73 | 31.16 | 32.96 | 33.16 | 33.16 | 28.79 | 31.63 | 31.86 | 31.86 |
| | R Knee | 54.25 | 45.22 | 45.22 | 45.22 | 45.22 | 47.25 | 44.90 | 45.94 | 47.42 | 42.07 | 41.61 | 42.44 | 43.65 |
| | R Ankle | 52.10 | 46.15 | 46.15 | 46.15 | 46.15 | 46.15 | 44.95 | 45.95 | 48.50 | 41.09 | 40.82 | 41.64 | 43.96 |
| | Head | 55.34 | 53.60 | 53.60 | 53.60 | 53.60 | 54.85 | 56.19 | 55.34 | 55.34 | 56.60 | 55.49 | 54.59 | 54.59 |
| G6 | Pelvis | 34.99 | 36.77 | 36.77 | 36.77 | 36.77 | 37.17 | 34.99 | 34.99 | 34.99 | 37.48 | 34.42 | 34.42 | 34.42 |
| | Spine Navel | 46.54 | 47.07 | 47.07 | 47.07 | 47.07 | 45.30 | 46.41 | 46.54 | 46.54 | 44.77 | 46.03 | 46.18 | 46.18 |
| | Neck | 42.71 | 36.52 | 36.52 | 36.52 | 36.52 | 33.19 | 42.67 | 42.71 | 42.71 | 34.14 | 42.05 | 42.11 | 42.10 |
| | L Shoulder | 44.50 | 39.47 | 39.47 | 39.47 | 39.47 | 38.80 | 40.19 | 44.37 | 44.39 | 38.36 | 38.32 | 42.94 | 42.97 |
| | L Elbow | 61.36 | 43.70 | 43.70 | 43.70 | 43.70 | 43.34 | 42.28 | 46.05 | 51.66 | 39.49 | 38.58 | 42.15 | 48.13 |
| | L Wrist | 90.33 | 67.82 | 67.82 | 67.82 | 67.82 | 65.07 | 59.62 | 61.46 | 65.48 | 57.21 | 54.08 | 55.82 | 59.87 |
| | R Shoulder | 41.48 | 42.54 | 42.54 | 42.54 | 42.54 | 44.29 | 40.50 | 41.41 | 41.40 | 42.70 | 38.70 | 39.86 | 39.86 |
| | R Elbow | 59.40 | 47.07 | 47.07 | 47.07 | 47.07 | 45.62 | 43.32 | 44.39 | 48.44 | 41.24 | 39.57 | 40.15 | 44.02 |
| | R Wrist | 93.98 | 69.09 | 69.09 | 69.09 | 69.09 | 66.93 | 60.72 | 57.65 | 59.33 | 60.77 | 50.91 | 52.09 | 53.72 |
| | L Hip | 38.45 | 39.65 | 39.65 | 39.65 | 39.65 | 41.16 | 37.55 | 38.45 | 38.45 | 41.14 | 36.65 | 37.60 | 37.60 |
| | L Knee | 33.51 | 32.00 | 32.00 | 32.00 | 32.00 | 32.18 | 33.02 | 33.19 | 33.39 | 30.63 | 31.26 | 31.41 | 31.58 |
| | L Ankle | 38.39 | 36.27 | 36.27 | 36.27 | 36.27 | 36.42 | 36.84 | 37.36 | 37.55 | 33.90 | 34.50 | 35.01 | 35.21 |
| | R Hip | 35.53 | 36.05 | 36.05 | 36.05 | 36.05 | 36.23 | 35.43 | 35.55 | 35.54 | 35.80 | 34.56 | 34.71 | 34.69 |
| | R Knee | 30.37 | 30.35 | 30.35 | 30.35 | 30.35 | 30.67 | 29.91 | 29.97 | 30.08 | 29.13 | 28.45 | 28.49 | 28.57 |
| | R Ankle | 37.43 | 35.09 | 35.09 | 35.09 | 35.09 | 34.99 | 35.50 | 35.82 | 36.06 | 32.58 | 33.13 | 33.44 | 33.60 |
| | Head | 42.75 | 36.68 | 36.68 | 36.68 | 36.68 | 35.26 | 42.73 | 42.75 | 42.74 | 35.15 | 41.88 | 41.90 | 41.90 |

| Group | Joint (STD) | A1 | A3_5 | A3_10 | A3_15 | A3_20 | A4_5 | A4_10 | A4_15 | A4_20 | A5_5 | A5_10 | A5_15 | A5_20 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| G1 | Pelvis | 3.45 | 4.45 | 4.45 | 4.45 | 4.45 | 4.43 | 3.45 | 3.45 | 3.45 | 4.08 | 3.17 | 3.17 | 3.18 |
| | Spine Navel | 6.10 | 11.24 | 11.24 | 11.24 | 11.24 | 13.81 | 6.10 | 6.09 | 6.09 | 12.52 | 5.75 | 5.74 | 5.74 |
| | Neck | 9.06 | 12.76 | 12.76 | 12.76 | 12.76 | 13.84 | 9.07 | 6.09 | 9.07 | 12.23 | 8.43 | 8.44 | 8.43 |
| | L Shoulder | 7.78 | 10.71 | 10.71 | 10.71 | 10.71 | 10.77 | 10.36 | 7.77 | 7.77 | 9.57 | 9.10 | 6.93 | 6.94 |
| | L Elbow | 23.15 | 18.92 | 18.92 | 18.92 | 18.92 | 17.38 | 14.38 | 14.87 | 15.70 | 14.72 | 12.97 | 13.39 | 14.20 |
| | L Wrist | 42.49 | 37.60 | 37.60 | 37.60 | 37.60 | 36.66 | 30.24 | 28.15 | 28.94 | 30.69 | 26.18 | 26.49 | 27.01 |
| | R Shoulder | 8.89 | 12.85 | 12.85 | 12.85 | 12.85 | 13.65 | 8.93 | 8.94 | 8.92 | 12.34 | 7.85 | 8.12 | 8.10 |
| | R Elbow | 28.68 | 22.19 | 22.19 | 22.19 | 22.19 | 20.05 | 14.53 | 15.45 | 18.10 | 16.44 | 12.98 | 13.88 | 16.60 |
| | R Wrist | 52.66 | 45.37 | 45.37 | 45.37 | 45.37 | 48.06 | 33.30 | 32.03 | 29.95 | 44.71 | 28.59 | 28.88 | 27.70 |
| | L Hip | 3.79 | 4.85 | 4.85 | 4.85 | 4.85 | 5.26 | 3.79 | 3.79 | 3.79 | 4.77 | 3.40 | 3.40 | 3.40 |
| | L Knee | 5.36 | 5.13 | 5.13 | 5.13 | 5.13 | 5.15 | 5.32 | 5.35 | 5.36 | 4.46 | 4.60 | 4.63 | 4.64 |
| | L Ankle | 7.03 | 6.85 | 6.85 | 6.85 | 6.85 | 6.92 | 6.76 | 6.93 | 7.03 | 5.91 | 5.73 | 5.84 | 5.93 |
| | R Hip | 3.98 | 5.69 | 5.69 | 5.69 | 5.69 | 6.82 | 3.99 | 3.98 | 3.98 | 6.12 | 3.59 | 3.57 | 3.57 |
| | R Knee | 4.85 | 4.91 | 4.91 | 4.91 | 4.91 | 4.96 | 4.82 | 4.83 | 4.85 | 4.37 | 4.24 | 4.24 | 4.24 |
| | R Ankle | 7.93 | 8.13 | 8.13 | 8.13 | 8.13 | 8.05 | 7.85 | 8.00 | 7.97 | 7.03 | 6.84 | 6.92 | 6.86 |
| | Head | 10.70 | 13.16 | 13.16 | 13.16 | 13.16 | 13.66 | 10.84 | 10.71 | 10.70 | 12.37 | 10.11 | 9.98 | 9.98 |
| G2 | Pelvis | 12.79 | 14.41 | 14.41 | 14.41 | 14.41 | 15.54 | 12.78 | 12.79 | 12.79 | 11.44 | 9.94 | 9.97 | 9.97 |
| | Spine Navel | 10.86 | 15.24 | 15.24 | 15.24 | 15.24 | 26.71 | 10.58 | 10.85 | 10.86 | 22.01 | 8.03 | 8.30 | 8.31 |
| | Neck | 12.80 | 16.46 | 16.46 | 16.46 | 16.46 | 23.79 | 12.59 | 12.79 | 12.79 | 19.39 | 9.81 | 10.06 | 10.06 |
| | L Shoulder | 14.74 | 18.44 | 18.44 | 18.44 | 18.44 | 21.35 | 18.79 | 14.68 | 14.74 | 15.22 | 14.14 | 10.45 | 10.55 |
| | L Elbow | 33.16 | 33.61 | 33.61 | 33.61 | 33.61 | 33.92 | 30.21 | 30.36 | 29.39 | 25.80 | 22.49 | 23.50 | 23.88 |
| | L Wrist | 51.15 | 54.68 | 54.68 | 54.68 | 54.68 | 57.65 | 48.94 | 45.46 | 46.73 | 51.72 | 37.40 | 34.74 | 37.18 |
| | R Shoulder | 16.48 | 17.89 | 17.89 | 17.89 | 17.89 | 21.06 | 17.61 | 16.48 | 16.49 | 15.42 | 13.31 | 12.50 | 12.51 |
| | R Elbow | 36.55 | 38.39 | 38.39 | 38.39 | 38.39 | 45.24 | 37.63 | 33.72 | 32.68 | 35.36 | 27.56 | 27.52 | 27.06 |
| | R Wrist | 55.10 | 60.51 | 60.51 | 60.51 | 60.51 | 67.01 | 56.81 | 51.07 | 46.69 | 66.02 | 45.89 | 41.36 | 37.84 |
| | L Hip | 13.04 | 15.37 | 15.37 | 15.37 | 15.37 | 17.08 | 13.07 | 13.04 | 13.04 | 12.08 | 9.78 | 9.78 | 9.77 |
| | L Knee | 20.11 | 21.28 | 21.28 | 21.28 | 21.28 | 22.43 | 20.02 | 20.05 | 20.10 | 17.05 | 15.43 | 15.45 | 15.50 |
| | L Ankle | 20.19 | 20.24 | 20.24 | 20.24 | 20.24 | 20.52 | 19.88 | 19.93 | 19.98 | 15.36 | 14.97 | 15.05 | 15.03 |
| | R Hip | 14.25 | 15.57 | 15.57 | 15.57 | 15.57 | 16.40 | 14.32 | 14.26 | 14.25 | 11.90 | 10.93 | 10.89 | 10.88 |
| | R Knee | 20.85 | 21.39 | 21.39 | 21.39 | 21.39 | 22.62 | 20.77 | 20.76 | 20.83 | 16.61 | 15.68 | 15.69 | 15.74 |
| | R Ankle | 21.28 | 20.80 | 20.80 | 20.80 | 20.80 | 20.89 | 20.31 | 20.32 | 20.43 | 15.44 | 15.17 | 15.182 | 15.27 |
| | Head | 14.57 | 17.65 | 17.65 | 17.65 | 17.65 | 23.13 | 14.56 | 14.55 | 14.57 | 18.81 | 11.57 | 11.67 | 11.71 |
| G3 | Pelvis | 11.40 | 12.23 | 12.23 | 12.23 | 12.23 | 12.48 | 11.40 | 11.40 | 11.40 | 10.75 | 9.76 | 9.76 | 9.76 |
| | Spine Navel | 10.46 | 13.42 | 13.42 | 13.42 | 13.42 | 17.93 | 10.46 | 10.46 | 10.46 | 18.11 | 9.22 | 9.23 | 9.23 |
| | Neck | 12.20 | 13.94 | 13.94 | 13.94 | 13.94 | 16.91 | 12.12 | 12.15 | 12.20 | 13.85 | 11.02 | 11.05 | 11.10 |
| | L Shoulder | 12.91 | 14.50 | 14.50 | 14.50 | 14.50 | 15.01 | 15.30 | 12.88 | 12.90 | 12.65 | 12.62 | 10.46 | 10.49 |
| | L Elbow | 41.10 | 26.47 | 26.47 | 26.47 | 26.47 | 22.51 | 21.61 | 25.21 | 28.33 | 18.34 | 17.56 | 20.72 | 23.43 |
| | L Wrist | 71.55 | 65.38 | 65.38 | 65.38 | 65.38 | 57.84 | 37.17 | 37.79 | 39.90 | 58.31 | 31.50 | 33.64 | 35.58 |
| | R Shoulder | 12.59 | 15.19 | 15.19 | 15.19 | 15.19 | 15.71 | 12.35 | 12.50 | 12.59 | 13.86 | 10.00 | 10.37 | 10.44 |
| | R Elbow | 41.46 | 29.33 | 29.33 | 29.33 | 29.33 | 24.94 | 21.66 | 25.70 | 29.41 | 19.26 | 16.19 | 19.14 | 23.20 |
| | R Wrist | 70.93 | 66.74 | 66.74 | 66.74 | 66.74 | 72.51 | 35.83 | 35.12 | 39.94 | 78.49 | 31.37 | 30.60 | 34.25 |
| | L Hip | 11.36 | 12.24 | 12.24 | 12.24 | 12.24 | 12.49 | 11.35 | 11.36 | 11.36 | 10.80 | 9.45 | 9.46 | 9.46 |
| | L Knee | 19.77 | 16.06 | 16.06 | 16.06 | 16.06 | 15.74 | 15.39 | 16.18 | 16.94 | 13.13 | 12.54 | 13.30 | 13.87 |
| | L Ankle | 20.15 | 17.00 | 17.00 | 17.00 | 17.00 | 17.22 | 16.70 | 16.90 | 17.48 | 13.47 | 13.00 | 13.08 | 13.27 |
| | R Hip | 12.63 | 13.83 | 13.83 | 13.83 | 13.83 | 14.03 | 12.63 | 12.63 | 12.63 | 12.06 | 10.73 | 10.73 | 10.73 |
| | R Knee | 14.64 | 14.69 | 14.69 | 14.69 | 14.69 | 15.14 | 14.59 | 14.59 | 14.61 | 12.45 | 11.92 | 11.91 | 11.91 |
| | R Ankle | 16.19 | 15.50 | 15.50 | 15.50 | 15.50 | 15.68 | 15.82 | 15.88 | 15.95 | 11.88 | 12.13 | 12.14 | 12.19 |
| | Head | 13.53 | 15.43 | 15.43 | 15.43 | 15.43 | 17.73 | 13.43 | 13.44 | 13.52 | 15.03 | 12.29 | 12.30 | 12.38 |

| Group | Joint (STD) | A1 | A3_5 | A3_10 | A3_15 | A3_20 | A4_5 | A4_10 | A4_15 | A4_20 | A5_5 | A5_10 | A5_15 | A5_20 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| G4 | Pelvis | 10.41 | 12.55 | 12.55 | 12.55 | 12.55 | 14.55 | 10.55 | 10.41 | 10.41 | 13.92 | 9.97 | 9.81 | 9.81 |
| | Spine Navel | 10.04 | 16.52 | 16.52 | 16.52 | 16.52 | 22.98 | 10.43 | 10.04 | 10.04 | 23.54 | 9.85 | 9.43 | 9.43 |
| | Neck | 12.45 | 15.17 | 15.17 | 15.17 | 15.17 | 18.27 | 12.55 | 12.45 | 12.45 | 18.03 | 11.52 | 11.39 | 11.39 |
| | L Shoulder | 14.45 | 17.30 | 17.30 | 17.30 | 17.30 | 20.23 | 15.97 | 13.80 | 14.20 | 19.37 | 14.07 | 12.00 | 12.34 |
| | L Elbow | 38.09 | 29.80 | 29.80 | 29.80 | 29.80 | 27.89 | 22.40 | 23.23 | 26.32 | 25.72 | 18.72 | 20.71 | 23.97 |
| | L Wrist | 61.16 | 53.22 | 53.22 | 53.22 | 53.22 | 50.01 | 39.65 | 36.59 | 38.05 | 45.13 | 33.78 | 34.28 | 36.44 |
| | R Shoulder | 14.44 | 16.36 | 16.36 | 16.36 | 16.36 | 18.07 | 14.46 | 14.10 | 14.28 | 17.16 | 12.82 | 12.32 | 12.53 |
| | R Elbow | 34.64 | 28.33 | 28.33 | 28.33 | 28.33 | 28.87 | 23.11 | 23.76 | 25.23 | 22.24 | 19.41 | 20.11 | 21.41 |
| | R Wrist | 61.44 | 52.08 | 52.08 | 52.08 | 52.08 | 54.26 | 38.87 | 35.24 | 37.12 | 55.49 | 32.81 | 31.15 | 33.61 |
| | L Hip | 11.21 | 13.86 | 13.86 | 13.86 | 13.86 | 15.97 | 11.42 | 11.21 | 11.21 | 15.62 | 10.52 | 10.27 | 10.27 |
| | L Knee | 35.51 | 19.96 | 19.96 | 19.96 | 19.96 | 20.00 | 17.07 | 17.75 | 19.47 | 19.28 | 16.60 | 16.89 | 18.08 |
| | L Ankle | 40.77 | 25.19 | 25.19 | 25.19 | 25.19 | 23.09 | 21.28 | 22.12 | 22.88 | 19.78 | 19.01 | 20.05 | 20.42 |
| | R Hip | 11.50 | 13.28 | 13.28 | 13.28 | 13.28 | 15.01 | 11.98 | 11.50 | 11.50 | 14.13 | 11.34 | 10.67 | 10.67 |
| | R Knee | 38.04 | 18.94 | 18.94 | 18.94 | 18.94 | 19.06 | 16.27 | 16.87 | 18.55 | 17.56 | 16.01 | 16.25 | 17.72 |
| | R Ankle | 44.79 | 28.22 | 28.22 | 28.22 | 28.22 | 26.13 | 23.20 | 23.64 | 25.33 | 21.64 | 21.09 | 22.05 | 22.83 |
| | Head | 13.98 | 15.60 | 15.60 | 15.60 | 15.60 | 17.87 | 13.98 | 13.98 | 13.98 | 17.46 | 12.78 | 12.74 | 12.74 |
| G5 | Pelvis | 8.02 | 9.84 | 9.84 | 9.84 | 9.84 | 10.11 | 8.02 | 8.02 | 8.02 | 7.97 | 6.88 | 6.88 | 6.88 |
| | Spine Navel | 6.00 | 10.46 | 10.46 | 10.46 | 10.46 | 16.43 | 6.15 | 6.00 | 6.00 | 17.35 | 5.14 | 5.04 | 5.04 |
| | Neck | 8.95 | 11.83 | 11.83 | 11.83 | 11.83 | 16.22 | 9.31 | 8.95 | 8.95 | 16.61 | 8.01 | 7.79 | 7.79 |
| | L Shoulder | 13.03 | 14.59 | 14.59 | 14.59 | 14.59 | 14.69 | 18.79 | 13.03 | 13.03 | 13.25 | 15.52 | 10.46 | 10.46 |
| | L Elbow | 29.67 | 26.78 | 26.78 | 26.78 | 26.78 | 26.10 | 26.25 | 29.80 | 30.00 | 20.88 | 23.83 | 23.31 | 24.08 |
| | L Wrist | 47.36 | 48.28 | 48.28 | 48.28 | 48.28 | 47.89 | 39.96 | 36.96 | 39.46 | 45.51 | 36.07 | 35.11 | 34.08 |
| | R Shoulder | 12.17 | 14.46 | 14.46 | 14.46 | 14.46 | 13.88 | 15.34 | 12.19 | 12.17 | 13.10 | 12.33 | 9.51 | 9.50 |
| | R Elbow | 30.25 | 25.10 | 25.10 | 25.10 | 25.10 | 25.77 | 21.84 | 23.14 | 25.62 | 21.74 | 18.74 | 18.52 | 20.89 |
| | R Wrist | 50.67 | 48.14 | 48.14 | 48.14 | 48.14 | 44.44 | 35.46 | 34.90 | 36.83 | 42.59 | 32.80 | 32.04 | 32.69 |
| | L Hip | 10.27 | 12.70 | 12.70 | 12.70 | 12.70 | 12.86 | 10.26 | 10.27 | 10.27 | 10.24 | 8.52 | 8.52 | 8.52 |
| | L Knee | 22.62 | 20.78 | 20.78 | 20.78 | 20.78 | 21.22 | 19.87 | 19.83 | 20.43 | 16.85 | 16.28 | 16.19 | 16.45 |
| | L Ankle | 23.29 | 21.50 | 21.50 | 21.50 | 21.50 | 21.52 | 20.75 | 20.91 | 21.43 | 17.09 | 16.68 | 16.76 | 17.10 |
| | R Hip | 10.18 | 10.60 | 10.60 | 10.60 | 10.60 | 10.29 | 10.10 | 10.18 | 10.18 | 8.25 | 8.22 | 8.30 | 8.30 |
| | R Knee | 26.19 | 22.05 | 22.05 | 22.05 | 22.05 | 23.78 | 21.26 | 21.85 | 22.86 | 18.94 | 18.13 | 18.67 | 19.61 |
| | R Ankle | 22.73 | 20.47 | 20.47 | 20.47 | 20.47 | 20.54 | 19.06 | 19.55 | 21.02 | 15.97 | 15.37 | 15.68 | 17.02 |
| | Head | 10.88 | 13.17 | 13.17 | 13.17 | 13.17 | 16.50 | 11.87 | 10.88 | 10.88 | 17.79 | 10.27 | 9.51 | 9.51 |
| G6 | Pelvis | 9.44 | 9.70 | 9.70 | 9.70 | 9.70 | 9.76 | 7.73 | 7.73 | 7.73 | 7.82 | 6.60 | 6.60 | 6.60 |
| | Spine Navel | 7.58 | 10.21 | 10.21 | 10.21 | 10.21 | 16.76 | 6.22 | 5.93 | 5.93 | 16.05 | 5.12 | 4.95 | 4.95 |
| | Neck | 9.94 | 11.63 | 11.63 | 11.63 | 11.63 | 15.41 | 8.53 | 8.46 | 8.46 | 14.67 | 7.28 | 7.32 | 7.32 |
| | L Shoulder | 15.42 | 15.74 | 15.74 | 15.74 | 15.74 | 15.76 | 19.43 | 14.07 | 14.07 | 13.71 | 15.65 | 10.87 | 10.87 |
| | L Elbow | 32.09 | 28.19 | 28.19 | 28.19 | 28.19 | 27.36 | 28.07 | 30.17 | 30.01 | 21.03 | 23.62 | 22.83 | 22.76 |
| | L Wrist | 52.02 | 47.37 | 47.37 | 47.37 | 47.37 | 47.48 | 40.25 | 38.60 | 40.94 | 43.70 | 35.32 | 34.64 | 33.91 |
| | R Shoulder | 14.67 | 15.11 | 15.11 | 15.11 | 15.11 | 14.73 | 15.81 | 13.14 | 13.14 | 13.81 | 12.04 | 9.82 | 9.82 |
| | R Elbow | 35.02 | 27.11 | 27.11 | 27.11 | 27.11 | 28.78 | 24.67 | 25.92 | 27.45 | 23.47 | 20.29 | 20.08 | 21.65 |
| | R Wrist | 57.06 | 49.82 | 49.82 | 49.82 | 49.82 | 46.99 | 38.69 | 37.46 | 38.60 | 41.80 | 34.96 | 33.83 | 33.92 |
| | L Hip | 11.09 | 11.87 | 11.87 | 11.87 | 11.87 | 12.39 | 9.44 | 9.44 | 9.44 | 9.59 | 7.51 | 7.51 | 7.51 |
| | L Knee | 23.70 | 19.43 | 19.43 | 19.43 | 19.43 | 19.76 | 18.66 | 18.68 | 18.92 | 15.40 | 14.75 | 14.76 | 14.92 |
| | L Ankle | 23.62 | 20.43 | 20.43 | 20.43 | 20.43 | 20.63 | 20.34 | 20.47 | 20.70 | 16.03 | 15.92 | 16.03 | 16.14 |
| | R Hip | 11.09 | 10.70 | 10.70 | 10.70 | 10.70 | 10.53 | 9.39 | 9.37 | 9.37 | 8.26 | 7.37 | 7.33 | 7.33 |
| | R Knee | 26.32 | 20.17 | 20.17 | 20.17 | 20.17 | 21.56 | 19.22 | 19.79 | 20.76 | 16.83 | 15.65 | 16.15 | 16.96 |
| | R Ankle | 22.28 | 19.23 | 19.23 | 19.23 | 19.23 | 19.22 | 18.00 | 18.57 | 19.77 | 15.03 | 14.37 | 14.64 | 15.82 |
| | Head | 11.64 | 12.77 | 12.77 | 12.77 | 12.77 | 15.84 | 10.46 | 10.27 | 10.27 | 16.32 | 8.95 | 8.91 | 8.91 |
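The tables above report, for each joint, an RMSE and a standard deviation (STD) of the tracking error. The exact error definition is not stated in this excerpt, so the sketch below rests on two assumptions: the per-frame error is the Euclidean distance between the estimated joint and a reference position (for example, marker-based reference data), and STD is the population standard deviation of those distances. The helper names `joint_errors` and `rmse_and_std` are hypothetical.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def joint_errors(estimated: Sequence[Point], reference: Sequence[Point]) -> List[float]:
    """Per-frame Euclidean error between estimated and reference joint positions."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("need two non-empty sequences of equal length")
    return [math.dist(e, r) for e, r in zip(estimated, reference)]


def rmse_and_std(estimated: Sequence[Point], reference: Sequence[Point]) -> Tuple[float, float]:
    """RMSE and population STD of the per-frame errors (both assumed definitions)."""
    errors = joint_errors(estimated, reference)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    std = statistics.pstdev(errors)  # population standard deviation
    return rmse, std


est = [(3.0, 4.0, 0.0), (0.0, 0.0, 0.0)]
ref = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
rmse, std = rmse_and_std(est, ref)
print(rmse, std)  # errors are [5.0, 0.0], so RMSE = sqrt(12.5) ≈ 3.536 and STD = 2.5
```

Under these definitions RMSE² = mean² + STD², so a joint whose RMSE is close to its STD has errors that scatter widely around a small mean, while an RMSE much larger than the STD points to a consistent offset.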

Appendix B

| | Gesture 1 | | | | Gesture 2 | | | | Gesture 3 | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| TNOS | 1 | 2 | 3 | 4 | 1 | 2 | 3 | 4 | 1 | 2 | 3 | 4 |
| Pelvis | 43.80 | 34.68 | 31.57 | 30.29 | 52.05 | 39.36 | 35.07 | 32.71 | 53.12 | 45.37 | 42.58 | 37.86 |
| Spine Navel | 64.67 | 51.10 | 46.81 | 45.15 | 74.02 | 58.48 | 51.41 | 46.61 | 71.33 | 58.81 | 53.35 | 48.22 |
| Neck | 59.53 | 45.92 | 40.68 | 38.69 | 73.43 | 58.82 | 51.61 | 48.06 | 68.22 | 54.31 | 49.55 | 44.71 |
| L Shoulder | 64.22 | 48.42 | 38.55 | 35.49 | 78.30 | 61.09 | 46.22 | 40.78 | 72.95 | 55.90 | 44.72 | 40.33 |
| L Elbow | 72.43 | 56.64 | 40.52 | 37.84 | 98.83 | 75.85 | 51.71 | 45.59 | 112.68 | 85.58 | 56.37 | 40.99 |
| L Wrist | 106.31 | 93.77 | 63.82 | 54.24 | 147.66 | 125.14 | 83.78 | 69.29 | 188.05 | 166.06 | 113.39 | 66.62 |
| R Shoulder | 60.32 | 43.48 | 35.65 | 31.80 | 75.05 | 55.99 | 46.38 | 41.07 | 66.49 | 48.14 | 39.57 | 33.42 |
| R Elbow | 92.26 | 73.08 | 41.74 | 33.28 | 110.94 | 87.90 | 57.38 | 48.71 | 115.95 | 82.46 | 53.63 | 36.15 |
| R Wrist | 142.59 | 125.17 | 74.50 | 54.34 | 168.38 | 143.93 | 91.31 | 73.30 | 194.83 | 168.88 | 109.63 | 56.75 |
| L Hip | 51.22 | 39.51 | 35.02 | 33.00 | 56.22 | 43.30 | 37.82 | 34.92 | 56.68 | 48.15 | 44.55 | 39.52 |
| L Knee | 33.52 | 27.60 | 25.42 | 24.34 | 51.04 | 43.48 | 40.73 | 39.32 | 55.77 | 44.93 | 42.21 | 37.96 |
| L Ankle | 44.44 | 35.74 | 32.21 | 30.37 | 53.54 | 41.94 | 39.25 | 37.83 | 57.24 | 43.38 | 41.04 | 38.22 |
| R Hip | 47.06 | 35.12 | 31.11 | 29.18 | 54.97 | 41.30 | 35.54 | 32.02 | 54.99 | 46.00 | 42.47 | 37.25 |
| R Knee | 33.83 | 26.74 | 24.06 | 22.50 | 49.75 | 40.37 | 37.09 | 35.41 | 44.65 | 36.37 | 33.69 | 29.14 |
| R Ankle | 42.53 | 34.90 | 29.82 | 28.17 | 51.88 | 39.20 | 35.98 | 34.47 | 42.88 | 35.18 | 32.67 | 30.36 |
| Head | 60.00 | 46.02 | 39.84 | 37.53 | 74.96 | 60.28 | 53.17 | 49.43 | 69.08 | 54.47 | 49.78 | 44.59 |

| | Gesture 4 | | | | Gesture 5 | | | | Gesture 6 | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| TNOS | 1 | 2 | 3 | 4 | 1 | 2 | 3 | 4 | 1 | 2 | 3 | 4 |
| Pelvis | 53.74 | 44.74 | 38.61 | 35.89 | 50.12 | 38.76 | 34.66 | 32.88 | 51.00 | 39.88 | 36.24 | 34.42 |
| Spine Navel | 72.33 | 60.75 | 51.25 | 48.76 | 70.53 | 59.35 | 49.60 | 44.83 | 68.07 | 55.96 | 49.13 | 46.03 |
| Neck | 66.77 | 57.90 | 46.83 | 43.37 | 73.95 | 65.24 | 55.72 | 51.73 | 62.79 | 50.67 | 44.26 | 42.05 |
| L Shoulder | 72.27 | 60.80 | 46.95 | 39.52 | 76.95 | 69.59 | 51.91 | 42.51 | 70.41 | 55.48 | 43.27 | 38.32 |
| L Elbow | 94.83 | 73.07 | 49.00 | 43.41 | 109.30 | 102.77 | 66.25 | 53.35 | 84.44 | 63.12 | 42.88 | 38.58 |
| L Wrist | 139.50 | 108.09 | 71.74 | 59.21 | 165.96 | 142.33 | 87.54 | 73.26 | 121.91 | 99.03 | 62.03 | 54.08 |
| R Shoulder | 68.83 | 55.44 | 44.88 | 38.37 | 72.69 | 57.36 | 44.66 | 40.53 | 66.66 | 52.14 | 43.09 | 38.70 |
| R Elbow | 99.57 | 76.59 | 50.43 | 42.35 | 104.31 | 99.66 | 55.31 | 43.17 | 92.59 | 69.23 | 44.77 | 39.57 |
| R Wrist | 148.02 | 118.86 | 72.61 | 56.74 | 167.31 | 146.13 | 89.96 | 71.84 | 136.60 | 102.15 | 62.22 | 50.91 |
| L Hip | 59.64 | 50.55 | 41.92 | 38.23 | 54.16 | 42.47 | 37.38 | 35.30 | 58.08 | 45.33 | 39.10 | 36.65 |
| L Knee | 98.87 | 68.87 | 46.53 | 43.14 | 60.30 | 46.47 | 42.50 | 40.93 | 43.90 | 35.24 | 32.45 | 31.26 |
| L Ankle | 121.12 | 75.00 | 50.27 | 47.06 | 61.91 | 49.23 | 46.56 | 45.40 | 49.05 | 39.00 | 35.94 | 34.50 |
| R Hip | 56.38 | 46.67 | 39.53 | 35.32 | 53.04 | 40.10 | 34.54 | 32.02 | 54.49 | 43.01 | 37.46 | 34.56 |
| R Knee | 101.79 | 90.00 | 47.80 | 40.77 | 63.03 | 53.73 | 42.61 | 39.49 | 43.09 | 32.99 | 30.04 | 28.45 |
| R Ankle | 131.88 | 100.42 | 56.18 | 48.80 | 60.99 | 46.48 | 41.85 | 40.15 | 48.95 | 37.91 | 34.61 | 33.13 |
| Head | 67.08 | 58.79 | 47.15 | 42.77 | 76.87 | 67.56 | 58.87 | 55.18 | 63.26 | 50.79 | 44.32 | 41.88 |

| | Gesture 1 | | | | Gesture 2 | | | | Gesture 3 | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| TNOS | 1 | 2 | 3 | 4 | 1 | 2 | 3 | 4 | 1 | 2 | 3 | 4 |
| Pelvis | 4.70 | 3.81 | 3.43 | 3.17 | 15.29 | 11.42 | 10.81 | 9.94 | 15.50 | 13.45 | 12.93 | 9.76 |
| Spine Navel | 8.84 | 6.80 | 6.55 | 5.74 | 14.08 | 12.82 | 12.61 | 8.03 | 15.41 | 13.74 | 12.96 | 9.22 |
| Neck | 13.17 | 9.70 | 9.08 | 8.43 | 18.46 | 14.51 | 13.13 | 9.81 | 20.01 | 15.84 | 14.72 | 11.02 |
| L Shoulder | 16.11 | 10.77 | 10.03 | 9.09 | 20.72 | 19.53 | 17.74 | 14.14 | 21.44 | 17.97 | 15.52 | 12.62 |
| L Elbow | 31.44 | 26.01 | 14.85 | 12.96 | 43.08 | 42.04 | 27.59 | 22.49 | 53.62 | 47.73 | 28.46 | 17.56 |
| L Wrist | 55.25 | 52.66 | 34.21 | 26.18 | 70.82 | 67.92 | 48.90 | 37.40 | 97.58 | 96.12 | 66.63 | 31.50 |
| R Shoulder | 16.32 | 11.46 | 9.63 | 7.85 | 21.13 | 18.36 | 16.95 | 13.31 | 19.68 | 16.12 | 13.73 | 10.00 |
| R Elbow | 42.65 | 38.43 | 19.27 | 12.98 | 50.99 | 51.77 | 33.90 | 27.56 | 53.15 | 46.10 | 28.77 | 16.19 |
| R Wrist | 73.10 | 71.78 | 45.43 | 28.59 | 80.44 | 77.89 | 58.60 | 45.89 | 95.39 | 96.91 | 69.11 | 31.37 |
| L Hip | 6.15 | 4.34 | 3.93 | 3.40 | 16.51 | 12.54 | 11.18 | 9.78 | 16.24 | 13.26 | 12.60 | 9.45 |
| L Knee | 6.55 | 5.34 | 4.77 | 4.60 | 20.31 | 17.13 | 16.15 | 15.43 | 24.39 | 15.97 | 14.57 | 12.54 |
| L Ankle | 8.70 | 6.77 | 6.02 | 5.73 | 22.90 | 16.42 | 15.57 | 14.97 | 24.66 | 14.54 | 13.65 | 13.00 |
| R Hip | 5.78 | 4.20 | 3.90 | 3.59 | 16.54 | 12.68 | 11.96 | 10.93 | 17.26 | 14.43 | 13.78 | 10.73 |
| R Knee | 5.89 | 4.79 | 4.45 | 4.24 | 20.72 | 17.72 | 16.39 | 15.68 | 17.49 | 14.73 | 13.93 | 11.92 |
| R Ankle | 9.88 | 7.85 | 7.06 | 6.84 | 24.65 | 17.25 | 15.81 | 15.17 | 17.63 | 13.77 | 13.06 | 12.13 |
| Head | 15.73 | 11.68 | 10.84 | 10.10 | 21.07 | 16.31 | 14.95 | 11.57 | 21.97 | 17.43 | 16.14 | 12.29 |

| Gesture 4 | Gesture 5 | Gesture 6 | ||||||||||

| TNOS | 1 | 2 | 3 | 4 | 1 | 2 | 3 | 4 | 1 | 2 | 3 | 4 |

| Pelvis | 16.43 | 14.48 | 12.08 | 10.21 | 9.71 | 7.00 | 6.84 | 6.60 | 12.17 | 8.57 | 8.32 | 7.59 |

| Spine Naval | 17.87 | 19.26 | 16.67 | 10.82 | 10.74 | 7.99 | 8.70 | 5.12 | 11.29 | 9.20 | 9.98 | 6.92 |

| Neck | 18.83 | 20.09 | 15.84 | 11.69 | 12.89 | 12.13 | 11.02 | 7.28 | 14.79 | 12.61 | 11.36 | 9.45 |

| L Shoulder | 24.02 | 24.58 | 20.35 | 15.03 | 15.52 | 18.38 | 18.97 | 15.65 | 19.21 | 19.38 | 16.30 | 13.70 |

| L Elbow | 52.69 | 41.61 | 24.17 | 19.71 | 55.08 | 52.91 | 33.33 | 23.62 | 43.51 | 36.56 | 22.37 | 19.31 |

| L Wrist | 85.21 | 65.07 | 43.43 | 33.24 | 93.62 | 71.66 | 47.06 | 35.32 | 69.92 | 60.10 | 35.55 | 28.42 |

| R Shoulder | 22.14 | 20.98 | 17.54 | 13.52 | 16.60 | 16.43 | 13.93 | 12.04 | 20.17 | 16.30 | 15.35 | 13.19 |

| R Elbow | 52.03 | 44.36 | 26.50 | 20.59 | 50.31 | 53.38 | 29.81 | 20.29 | 46.98 | 38.48 | 22.63 | 18.98 |

| R Wrist | 83.31 | 75.96 | 45.99 | 33.14 | 86.59 | 67.04 | 48.64 | 34.96 | 74.64 | 59.49 | 36.43 | 27.26 |

| L Hip | 19.05 | 17.81 | 13.70 | 11.36 | 11.04 | 8.27 | 7.64 | 7.51 | 13.79 | 10.91 | 10.38 | 9.22 |

| L Knee | 64.29 | 39.28 | 20.62 | 17.88 | 27.80 | 17.14 | 15.41 | 14.75 | 15.75 | 11.12 | 10.30 | 9.69 |

| L Ankle | 80.83 | 40.33 | 22.53 | 20.01 | 27.02 | 17.54 | 16.45 | 15.92 | 19.08 | 14.40 | 13.15 | 12.73 |

| R Hip | 18.55 | 17.39 | 14.57 | 11.83 | 11.60 | 8.57 | 7.94 | 7.37 | 14.71 | 11.67 | 10.61 | 9.71 |

| R Knee | 65.09 | 60.97 | 23.33 | 17.53 | 31.09 | 25.05 | 17.91 | 15.65 | 16.16 | 11.49 | 10.56 | 10.13 |

| R Ankle | 87.84 | 64.80 | 28.85 | 23.05 | 28.56 | 18.02 | 15.36 | 14.37 | 21.07 | 15.32 | 13.67 | 13.38 |

| Head | 20.60 | 21.85 | 16.89 | 12.70 | 14.98 | 13.16 | 12.24 | 8.95 | 17.36 | 14.58 | 12.95 | 10.88 |
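The TNOS trend in the Gesture 4 columns can be summarized with a few lines of arithmetic. The sketch below is illustrative only. It copies a handful of rows from the table above, treating them as the per-joint RMSE values reported in this appendix. It then computes the percentage drop from TNOS = 1 to TNOS = 4 and flags joints whose error does not decrease monotonically as TNOS grows. The helper names `relative_reduction` and `non_monotonic_joints` are hypothetical and are not part of the authors' software.

```python
# Illustrative sketch: a few Gesture 4 rows copied from the appendix table.
# Each list holds the reported values for TNOS = 1, 2, 3, 4 (in that order).
GESTURE4_RMSE = {
    "Pelvis": [16.43, 14.48, 12.08, 10.21],
    "Spine Naval": [17.87, 19.26, 16.67, 10.82],
    "Neck": [18.83, 20.09, 15.84, 11.69],
    "L Wrist": [85.21, 65.07, 43.43, 33.24],
    "R Wrist": [83.31, 75.96, 45.99, 33.14],
    "L Knee": [64.29, 39.28, 20.62, 17.88],
}


def relative_reduction(values):
    """Percentage drop from the first to the last TNOS setting (hypothetical helper)."""
    first, last = values[0], values[-1]
    return 100.0 * (first - last) / first


def non_monotonic_joints(table):
    """Joints whose value rises at least once as TNOS increases (hypothetical helper)."""
    return [
        joint
        for joint, values in table.items()
        if any(later > earlier for earlier, later in zip(values, values[1:]))
    ]


if __name__ == "__main__":
    for joint, values in GESTURE4_RMSE.items():
        print(f"{joint}: {relative_reduction(values):.1f}% lower at TNOS = 4 than at TNOS = 1")
    print("Non-monotonic:", non_monotonic_joints(GESTURE4_RMSE))
```

Among the rows copied here, the wrists and the left knee show drops of roughly 60% to 72%, while the trunk joints drop by roughly 38% to 39%. Spine Naval and Neck are flagged because their values are higher at TNOS = 2 than at TNOS = 1.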

References

- Ma, M.; Proffitt, R.; Skubic, M. Validation of a Kinect V2 based rehabilitation game. PLoS ONE 2018, 13, e0202338.

- Taha, A.; Zayed, H.H.; Khalifa, M.; El-Horbaty, E.-S.M. Skeleton-based human activity recognition for video surveillance. Int. J. Sci. Eng. Res. 2015, 6, 993–1004.

- Varshney, N.; Bakariya, B.; Kushwaha, A.K.S.; Khare, M. Rule-based multi-view human activity recognition system in real time using skeleton data from RGB-D sensor. Soft Comput. 2021, 241.

- Cippitelli, E.; Gasparrini, S.; Gambi, E.; Spinsante, S. A human activity recognition system using skeleton data from RGBD sensors. Comput. Intell. Neurosci. 2016, 2016, 4351435.

- Bari, A.H.; Gavrilova, M.L. Multi-layer perceptron architecture for Kinect-based gait recognition. In Computer Graphics International Conference; Springer: Berlin/Heidelberg, Germany, 2019; pp. 356–363.

- Yao, A.; Gall, J.; Fanelli, G.; Van Gool, L. Does human action recognition benefit from pose estimation? In Proceedings of the 22nd British Machine Vision Conference (BMVC 2011), Dundee, Scotland, 29 August–2 September 2011; BMV Press: Columbus, OH, USA, 2011.

- Schlagenhauf, F.; Sreeram, S.; Singhose, W. Comparison of Kinect and Vicon motion capture of upper-body joint angle tracking. In Proceedings of the 2018 IEEE 14th International Conference on Control and Automation (ICCA), Anchorage, AK, USA, 12–15 June 2018; IEEE: New York, NY, USA, 2018; pp. 674–679.

- Shaikh, M.B.; Chai, D. RGB-D Data-based Action Recognition: A Review. Sensors 2021, 21, 4246.

- Wang, P.; Li, W.; Ogunbona, P.; Wan, J.; Escalera, S. RGB-D-based human motion recognition with deep learning: A survey. Comput. Vis. Image Underst. 2018, 171, 118–139.

- Liu, B.; Cai, H.; Ju, Z.; Liu, H. RGB-D sensing based human action and interaction analysis: A survey. Pattern Recognit. 2019, 94, 1–12.

- Tölgyessy, M.; Dekan, M.; Chovanec, Ľ.; Hubinský, P. Evaluation of the Azure Kinect and its comparison to Kinect V1 and Kinect V2. Sensors 2021, 21, 413.

- Romeo, L.; Marani, R.; Malosio, M.; Perri, A.G.; D’Orazio, T. Performance analysis of body tracking with the Microsoft Azure Kinect. In Proceedings of the 2021 29th Mediterranean Conference on Control and Automation (MED), Puglia, Italy, 22–25 June 2021; IEEE: New York, NY, USA, 2021; pp. 572–577.

- Izadi, S.; Kim, D.; Hilliges, O.; Molyneaux, D.; Newcombe, R.; Kohli, P.; Shotton, J.; Hodges, S.; Freeman, D.; Davison, A. KinectFusion: Real-time 3D reconstruction and interaction using a moving depth camera. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, Santa Barbara, CA, USA, 16–19 October 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 559–568.

- Tölgyessy, M.; Dekan, M.; Chovanec, Ľ. Skeleton Tracking Accuracy and Precision Evaluation of Kinect V1, Kinect V2, and the Azure Kinect. Appl. Sci. 2021, 11, 5756.

- Aguileta, A.A.; Brena, R.F.; Mayora, O.; Molino-Minero-Re, E.; Trejo, L.A. Multi-sensor fusion for activity recognition—A survey. Sensors 2019, 19, 3808.

- Gravina, R.; Alinia, P.; Ghasemzadeh, H.; Fortino, G. Multi-sensor fusion in body sensor networks: State-of-the-art and research challenges. Inf. Fusion 2017, 35, 68–80.

- Yeung, L.-F.; Yang, Z.; Cheng, K.C.-C.; Du, D.; Tong, R.K.-Y. Effects of camera viewing angles on tracking kinematic gait patterns using Azure Kinect, Kinect v2 and Orbbec Astra Pro v2. Gait Posture 2021, 87, 19–26.

- Kim, Y.; Baek, S.; Bae, B.C. Motion capture of the human body using multiple depth sensors. ETRI J. 2017, 39, 181–190.

- Colombel, J.; Daney, D.; Bonnet, V.; Charpillet, F. Markerless 3D Human Pose Tracking in the Wild with fusion of Multiple Depth Cameras: Comparative Experimental Study with Kinect 2 and 3. In Activity and Behavior Computing; Springer: Berlin/Heidelberg, Germany, 2021; pp. 119–134.

- Chen, N.; Chang, Y.; Liu, H.; Huang, L.; Zhang, H. Human pose recognition based on skeleton fusion from multiple Kinects. In Proceedings of the 2018 37th Chinese Control Conference (CCC), Wuhan, China, 25–27 July 2018; IEEE: New York, NY, USA, 2018; pp. 5228–5232.

- Núñez, J.C.; Cabido, R.; Montemayor, A.S.; Pantrigo, J.J. Real-time human body tracking based on data fusion from multiple RGB-D sensors. Multimed. Tools Appl. 2017, 76, 4249–4271.

- Wu, Y.; Gao, L.; Hoermann, S.; Lindeman, R.W. Towards robust 3D skeleton tracking using data fusion from multiple depth sensors. In Proceedings of the 2018 10th International Conference on Virtual Worlds and Games for Serious Applications (VS-Games), Würzburg, Germany, 5–7 September 2018; IEEE: New York, NY, USA, 2018; pp. 1–4.

- Desai, K.; Prabhakaran, B.; Raghuraman, S. Combining skeletal poses for 3D human model generation using multiple Kinects. In Proceedings of the 9th ACM Multimedia Systems Conference, Amsterdam, The Netherlands, 12–15 June 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 40–51.

- Moon, S.; Park, Y.; Ko, D.W.; Suh, I.H. Multiple Kinect sensor fusion for human skeleton tracking using Kalman filtering. Int. J. Adv. Robot. Syst. 2016, 13, 65.

- Zhang, H.; He, X.; Liu, Y. A Human Skeleton Data Optimization Algorithm for Multi-Kinect. In Proceedings of the 2020 Asia-Pacific Conference on Image Processing, Electronics and Computers (IPEC), Dalian, China, 14–16 April 2020; IEEE: New York, NY, USA, 2020; pp. 89–95.

- Ryselis, K.; Petkus, T.; Blažauskas, T.; Maskeliūnas, R.; Damaševičius, R. Multiple Kinect based system to monitor and analyze key performance indicators of physical training. Hum. Cent. Comput. Inf. Sci. 2020, 10, 51.

- Swain, M.J.; Ballard, D.H. Indexing via color histograms. In Active Perception and Robot Vision; Springer: Berlin/Heidelberg, Germany, 1992; pp. 261–273.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Gower, J.C.; Dijksterhuis, G.B. Procrustes Problems; Oxford University Press: Oxford, UK, 2004; Volume 30.

- Arun, K.S.; Huang, T.S.; Blostein, S.D. Least-squares fitting of two 3-D point sets. IEEE Trans. Pattern Anal. Mach. Intell. 1987, PAMI-9, 698–700.

- Garrido-Jurado, S.; Muñoz-Salinas, R.; Madrid-Cuevas, F.J.; Medina-Carnicer, R. Generation of fiducial marker dictionaries using mixed integer linear programming. Pattern Recognit. 2016, 51, 481–491.

- Ester, M.; Kriegel, H.-P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining (KDD-96); AAAI: Palo Alto, CA, USA, 1996; pp. 226–231.

- Haller, E.; Scarlat, G.; Mocanu, I.; Trăscău, M. Human activity recognition based on multiple Kinects. In International Competition on Evaluating AAL Systems through Competitive Benchmarking; Springer: Berlin/Heidelberg, Germany, 2013; pp. 48–59.

- Kalman, R.E. A new approach to linear filtering and prediction problems. J. Basic Eng. 1960, 82, 35–45.

- Naeemabadi, M.; Dinesen, B.; Andersen, O.K.; Hansen, J. Influence of a marker-based motion capture system on the performance of Microsoft Kinect v2 skeleton algorithm. IEEE Sens. J. 2018, 19, 171–179.

- Naeemabadi, M.; Dinesen, B.; Andersen, O.K.; Hansen, J. Investigating the impact of a motion capture system on Microsoft Kinect v2 recordings: A caution for using the technologies together. PLoS ONE 2018, 13, e0204052.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, S.-h.; Lee, D.-W.; Jun, K.; Lee, W.; Kim, M.S. Markerless 3D Skeleton Tracking Algorithm by Merging Multiple Inaccurate Skeleton Data from Multiple RGB-D Sensors. Sensors 2022, 22, 3155. https://doi.org/10.3390/s22093155


Chicago/Turabian style: Lee, Sang-hyub, Deok-Won Lee, Kooksung Jun, Wonjun Lee, and Mun Sang Kim. 2022. "Markerless 3D Skeleton Tracking Algorithm by Merging Multiple Inaccurate Skeleton Data from Multiple RGB-D Sensors." Sensors 22, no. 9: 3155. https://doi.org/10.3390/s22093155
