Color Demosaicing of RGBW Color Filter Array Based on Laplacian Pyramid

Abstract

1. Introduction

2. Related Work

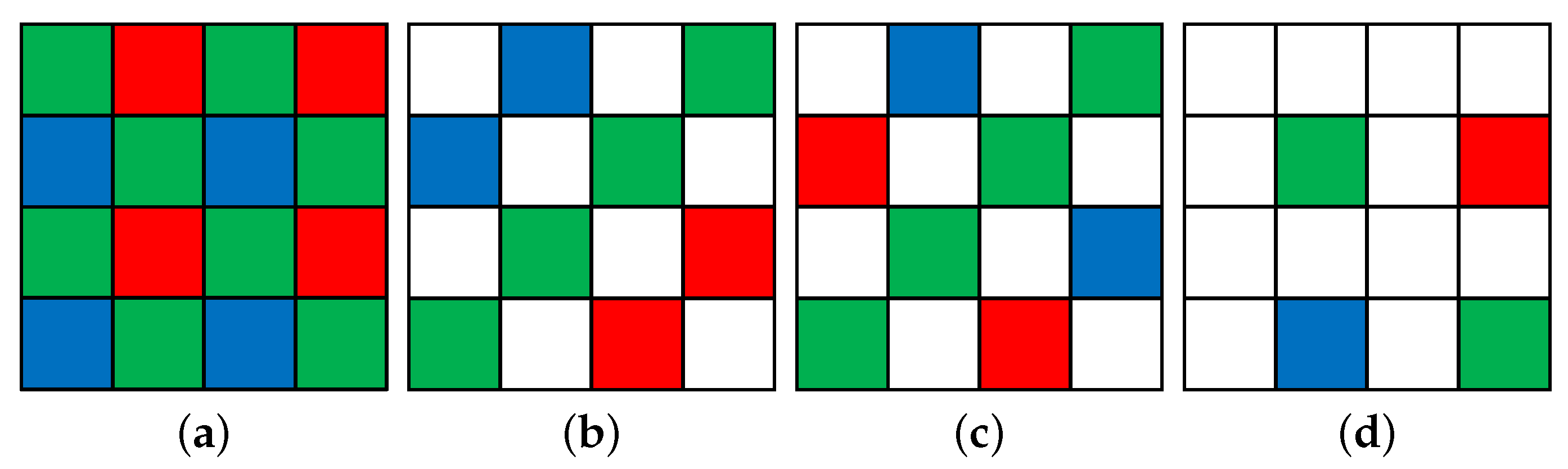

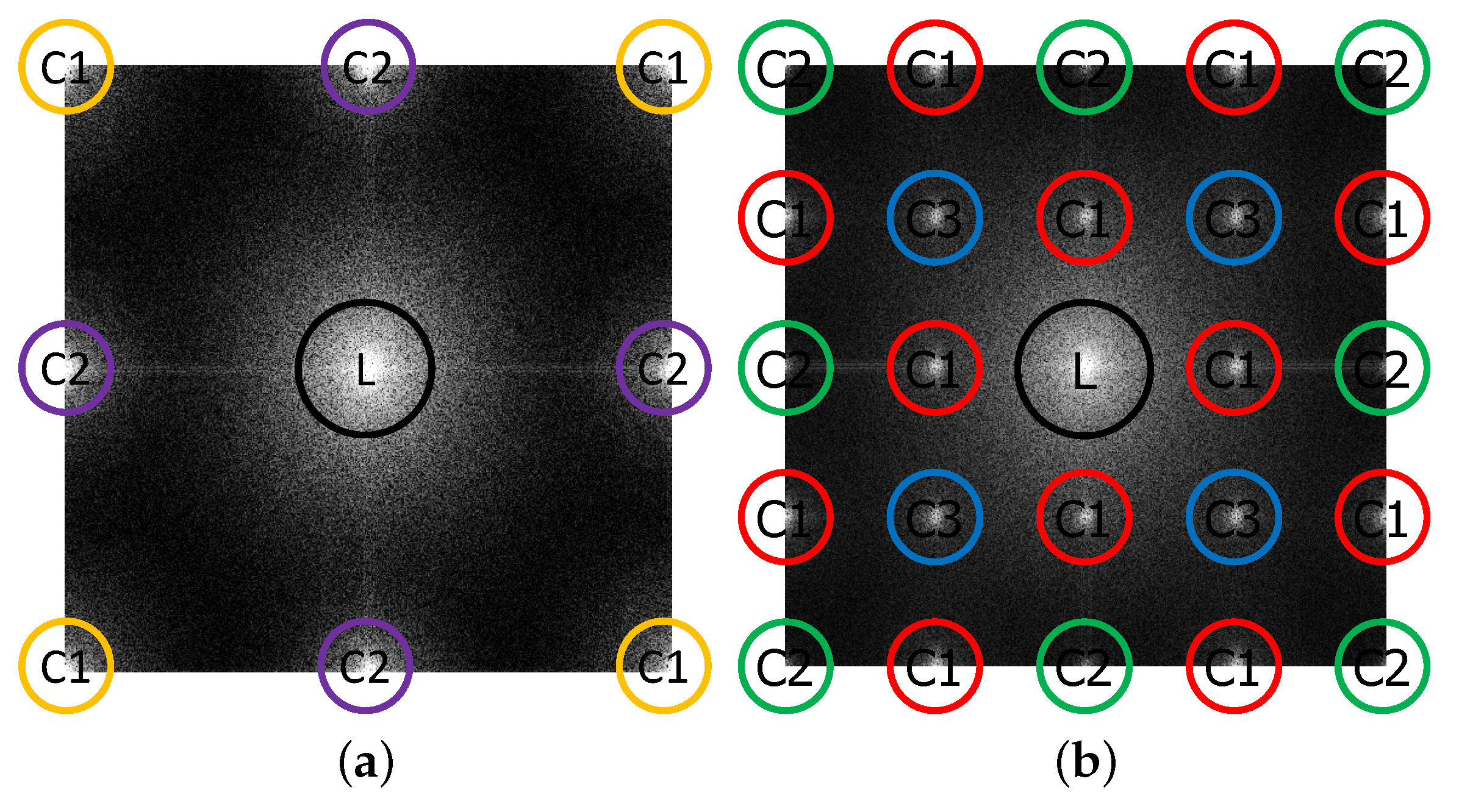

2.1. Frequency Analysis of CFAs

2.2. Traditional Methods of Color Demosaicing

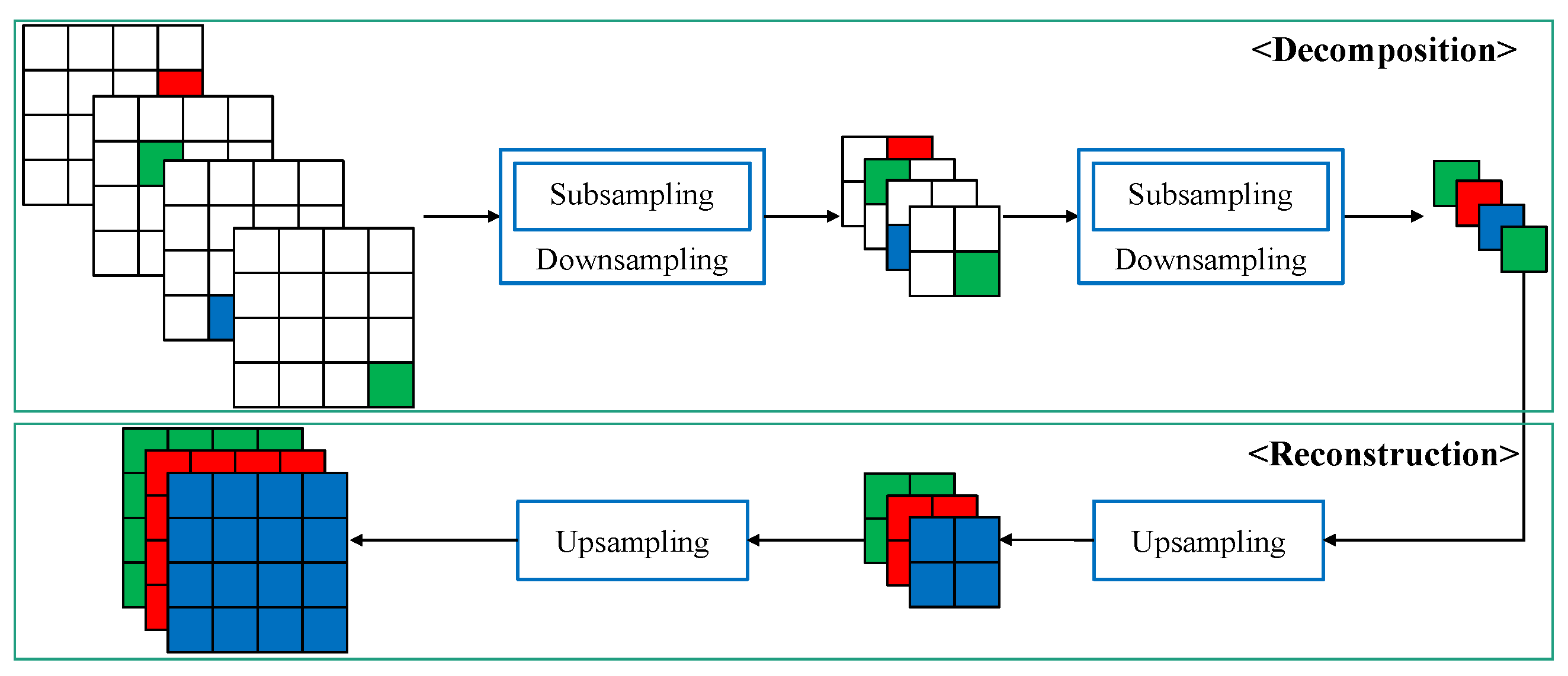

2.3. Laplacian Pyramid

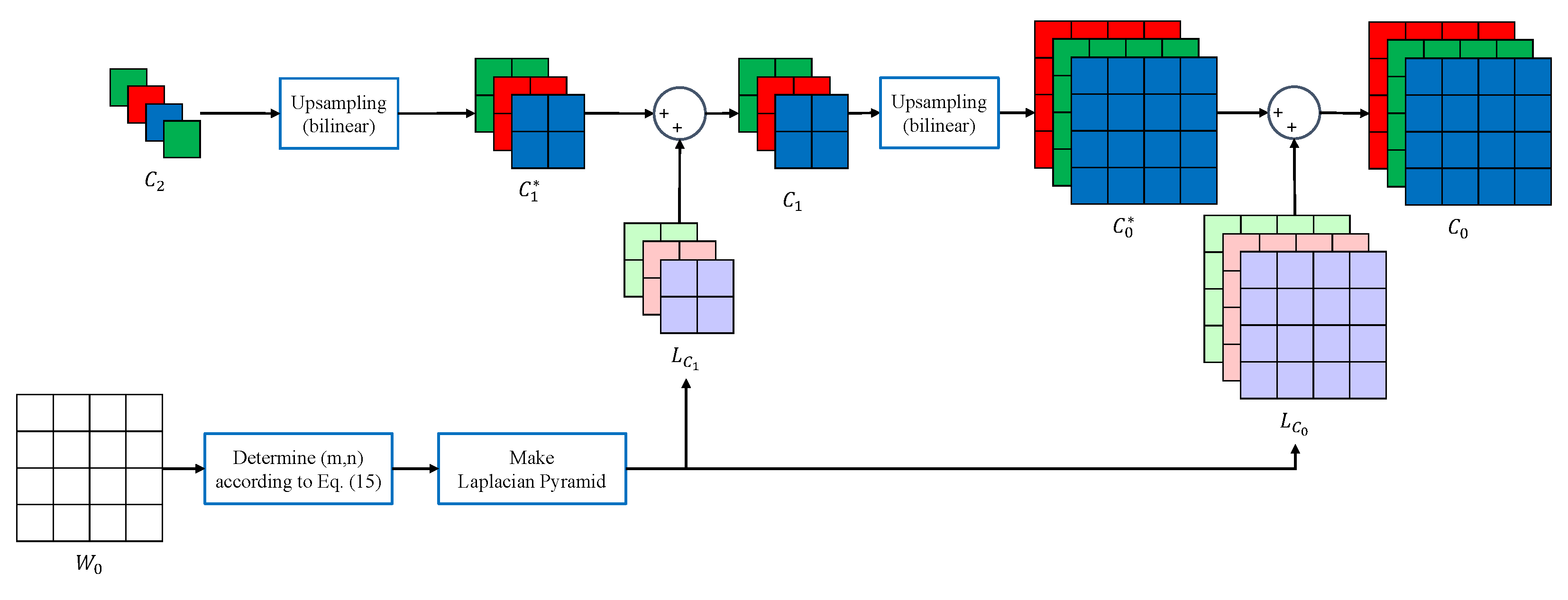

3. Proposed Algorithm

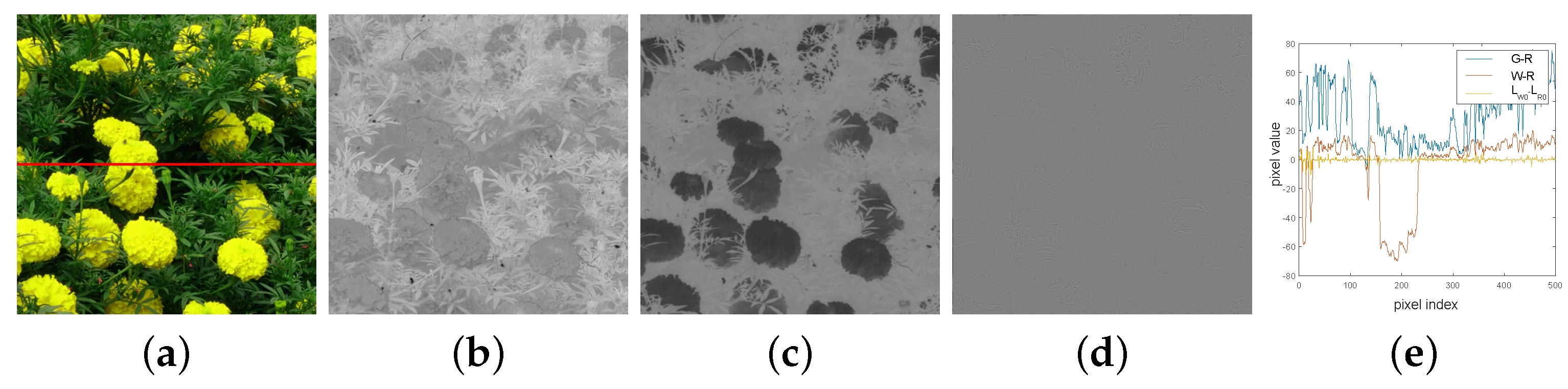

3.1. White Channel Interpolation

3.2. Red, Green, and Blue Channels Interpolation

Algorithm 1: Color Interpolation using the Laplacian Pyramid

Input: the estimated image […], the subsampled CFA […]

Output: the reconstructed color image […]

1 Decide […] according to (20)

2 Build the Laplacian pyramid of W with […] and depth level l

3 while […]

8 return […]
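
Step 2 of Algorithm 1 builds a Laplacian pyramid of W down to depth level l. As a minimal sketch of that building block only, the following NumPy code constructs and inverts a standard Burt and Adelson pyramid. It is not the authors' interpolation procedure: the 5-tap binomial kernel, the reflected borders, and the names `build_laplacian_pyramid` and `reconstruct` are assumptions made for illustration.

```python
import numpy as np

# Assumed low-pass filter: 5-tap binomial kernel (a common Burt-Adelson choice).
KERNEL = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0


def _blur(img):
    """Separable 5-tap blur with reflected borders (needs each side >= 3)."""
    h, w = img.shape
    padded = np.pad(img, 2, mode="reflect")
    # Filter horizontally, then vertically.
    rows = sum(KERNEL[k] * padded[:, k:k + w] for k in range(5))
    return sum(KERNEL[k] * rows[k:k + h, :] for k in range(5))


def _reduce(img):
    """Blur, then keep every second sample in both directions."""
    return _blur(img)[::2, ::2]


def _expand(img, shape):
    """Zero-insert up to `shape`, then blur; the factor 4 restores the gain."""
    up = np.zeros(shape, dtype=float)
    up[::2, ::2] = img
    return 4.0 * _blur(up)


def build_laplacian_pyramid(img, depth):
    """Return `depth` band-pass levels followed by the low-pass residual."""
    gaussian = np.asarray(img, dtype=float)
    pyramid = []
    for _ in range(depth):
        smaller = _reduce(gaussian)
        pyramid.append(gaussian - _expand(smaller, gaussian.shape))
        gaussian = smaller
    pyramid.append(gaussian)
    return pyramid


def reconstruct(pyramid):
    """Invert the pyramid by expanding and adding back each band."""
    img = pyramid[-1]
    for band in reversed(pyramid[:-1]):
        img = _expand(img, band.shape) + band
    return img
```

Because each band stores the difference between a level and the expanded version of the next coarser level, `reconstruct(build_laplacian_pyramid(W, l))` returns `W` up to floating-point rounding, whatever kernel is chosen.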

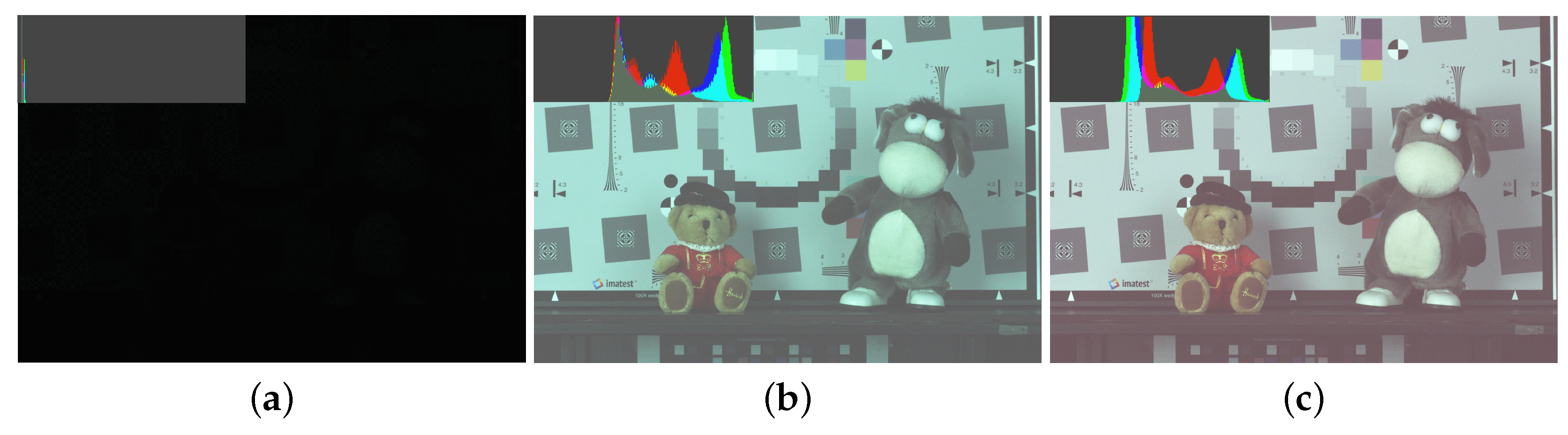

4. Experiment Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bayer, B.E. Color Imaging Array. U.S. Patent 3,971,065, 20 July 1976.

- Adams, J.; Parulski, K.; Spaulding, K. Color processing in digital cameras. IEEE Micro 1998, 18, 20–30.

- Lu, W.; Tan, Y.P. Color filter array demosaicking: New method and performance measures. IEEE Trans. Image Process. 2003, 12, 1194–1210.

- Menon, D.; Calvagno, G. Regularization approaches to demosaicking. IEEE Trans. Image Process. 2009, 18, 2209–2220.

- Alleysson, D.; Süsstrunk, S.; Hérault, J. Color demosaicing by estimating luminance and opponent chromatic signals in the Fourier domain. In Proceedings of the Color and Imaging Conference, Scottsdale, AZ, USA, 12–15 November 2002; Volume 2002, pp. 331–336.

- Dubois, E. Frequency-domain methods for demosaicking of Bayer-sampled color images. IEEE Signal Process. Lett. 2005, 12, 847–850.

- Moghadam, A.A.; Aghagolzadeh, M.; Kumar, M.; Radha, H. Compressive demosaicing. In Proceedings of the 2010 IEEE International Workshop on Multimedia Signal Processing, Saint-Malo, France, 4–6 October 2010; pp. 105–110.

- Moghadam, A.A.; Aghagolzadeh, M.; Kumar, M.; Radha, H. Compressive framework for demosaicing of natural images. IEEE Trans. Image Process. 2013, 22, 2356–2371.

- Kiku, D.; Monno, Y.; Tanaka, M.; Okutomi, M. Residual interpolation for color image demosaicking. In Proceedings of the 2013 IEEE International Conference on Image Processing, Melbourne, Australia, 15–18 September 2013; pp. 2304–2308.

- Kiku, D.; Monno, Y.; Tanaka, M.; Okutomi, M. Beyond color difference: Residual interpolation for color image demosaicking. IEEE Trans. Image Process. 2016, 25, 1288–1300.

- He, K.; Sun, J.; Tang, X. Guided image filtering. In Proceedings of the European Conference on Computer Vision, Crete, Greece, 5–11 September 2010; Springer: Berlin, Germany, 2010; pp. 1–14.

- Tan, R.; Zhang, K.; Zuo, W.; Zhang, L. Color image demosaicking via deep residual learning. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; Volume 2, p. 6.

- Gharbi, M.; Chaurasia, G.; Paris, S.; Durand, F. Deep joint demosaicking and denoising. ACM Trans. Graph. (ToG) 2016, 35, 1–12.

- Kumar, M.; Morales, E.O.; Adams, J.E.; Hao, W. New digital camera sensor architecture for low light imaging. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 2681–2684.

- Hirota, I. Solid-State Imaging Device, Method for Processing Signal of Solid-State Imaging Device, and Imaging Apparatus. U.S. Patent 8,436,925, 2 October 2013.

- Hikosaka, S. Imaging Device and Imaging System. U.S. Patent 10,567,712, 29 October 2020.

- Zhang, L.; Wu, X.; Buades, A.; Li, X. Color demosaicking by local directional interpolation and nonlocal adaptive thresholding. J. Electron. Imaging 2011, 20, 023016.

- Hao, P.; Li, Y.; Lin, Z.; Dubois, E. A geometric method for optimal design of color filter arrays. IEEE Trans. Image Process. 2010, 20, 709–722.

- Burt, P.J.; Adelson, E.H. The Laplacian pyramid as a compact image code. In Readings in Computer Vision; Elsevier: Hoboken, NJ, USA, 1987; pp. 671–679.

- Hamilton, J.F., Jr.; Adams, J.E., Jr. Adaptive Color Plan Interpolation in Single Sensor Color Electronic Camera. U.S. Patent 5,629,734, 13 May 1997.

- Pei, S.C.; Tam, I.K. Effective color interpolation in CCD color filter arrays using signal correlation. IEEE Trans. Circuits Syst. Video Technol. 2003, 13, 503–513.

- Pekkucuksen, I.; Altunbasak, Y. Gradient based threshold free color filter array interpolation. In Proceedings of the 2010 IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 137–140.

- Paris, S.; Hasinoff, S.W.; Kautz, J. Local Laplacian filters: Edge-aware image processing with a Laplacian pyramid. ACM Trans. Graph. 2011, 30, 68.

- Hirakawa, K.; Parks, T.W. Adaptive homogeneity-directed demosaicing algorithm. IEEE Trans. Image Process. 2005, 14, 360–369.

- Buchsbaum, G. A spatial processor model for object colour perception. J. Frankl. Inst. 1980, 310, 1–26.

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

- Oh, P.; Lee, S.; Kang, M.G. Colorization-based RGB-white color interpolation using color filter array with randomly sampled pattern. Sensors 2017, 17, 1523.

- Kim, H.; Lee, S.; Kang, M.G. Demosaicing of RGBW Color Filter Array Based on Rank Minimization with Colorization Constraint. Sensors 2020, 20, 4458.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

Kodak Dataset: CPSNR (dB) and SSIM per image.

| No. | CPSNR: CM1 | CPSNR: CM2 | CPSNR: CM3 | CPSNR: CM4 | CPSNR: CM5 | CPSNR: PM | SSIM: CM1 | SSIM: CM2 | SSIM: CM3 | SSIM: CM4 | SSIM: CM5 | SSIM: PM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 34.53 | 32.21 | 32.23 | 32.11 | 36.72 | 35.40 | 0.9792 | 0.9629 | 0.9634 | 0.9719 | 0.9879 | 0.9890 |
| 2 | 36.71 | 33.74 | 35.54 | 35.95 | 37.36 | 37.30 | 0.9890 | 0.9855 | 0.9921 | 0.9933 | 0.9946 | 0.9933 |
| 3 | 37.66 | 32.26 | 36.50 | 38.53 | 40.40 | 39.26 | 0.9911 | 0.9774 | 0.9885 | 0.9913 | 0.9949 | 0.9975 |
| 4 | 36.71 | 33.56 | 34.79 | 36.99 | 38.44 | 36.98 | 0.9897 | 0.9828 | 0.9891 | 0.9917 | 0.9938 | 0.9961 |
| 5 | 32.34 | 30.08 | 32.03 | 30.78 | 32.48 | 32.81 | 0.9742 | 0.9572 | 0.9726 | 0.9685 | 0.9781 | 0.9831 |
| 6 | 35.81 | 33.01 | 32.75 | 32.94 | 36.97 | 37.11 | 0.9841 | 0.9688 | 0.9691 | 0.9763 | 0.9897 | 0.9924 |
| 7 | 37.43 | 34.16 | 36.42 | 36.67 | 38.31 | 38.18 | 0.9885 | 0.9810 | 0.9893 | 0.9890 | 0.9923 | 0.9958 |
| 8 | 32.74 | 31.81 | 32.47 | 29.05 | 33.50 | 34.22 | 0.9787 | 0.9661 | 0.9736 | 0.9632 | 0.9827 | 0.9878 |
| 9 | 39.23 | 35.80 | 38.06 | 37.15 | 40.38 | 39.79 | 0.9855 | 0.9710 | 0.9770 | 0.9774 | 0.9874 | 0.9921 |
| 10 | 38.23 | 36.46 | 37.83 | 35.87 | 37.45 | 39.43 | 0.9844 | 0.9705 | 0.9785 | 0.9773 | 0.9857 | 0.9905 |
| 11 | 36.18 | 33.01 | 33.70 | 33.40 | 36.05 | 36.72 | 0.9830 | 0.9637 | 0.9720 | 0.9740 | 0.9848 | 0.9913 |
| 12 | 39.61 | 36.43 | 37.65 | 37.68 | 40.31 | 40.83 | 0.9906 | 0.9826 | 0.9858 | 0.9880 | 0.9932 | 0.9983 |
| 13 | 31.41 | 29.10 | 28.62 | 27.81 | 29.91 | 31.94 | 0.9742 | 0.9485 | 0.9473 | 0.9576 | 0.9744 | 0.9835 |
| 14 | 31.81 | 29.08 | 32.04 | 32.01 | 33.33 | 32.23 | 0.9773 | 0.9561 | 0.9677 | 0.9715 | 0.9821 | 0.9848 |
| 15 | 35.67 | 33.81 | 35.43 | 36.10 | 37.51 | 36.95 | 0.9834 | 0.9733 | 0.9826 | 0.9842 | 0.9886 | 0.9928 |
| 16 | 39.87 | 37.02 | 37.20 | 36.44 | 41.07 | 40.43 | 0.9870 | 0.9744 | 0.9767 | 0.9754 | 0.9902 | 0.9937 |
| 17 | 38.56 | 35.81 | 36.00 | 35.53 | 37.33 | 38.82 | 0.9869 | 0.9745 | 0.9771 | 0.9780 | 0.9862 | 0.9928 |
| 18 | 33.54 | 30.74 | 31.20 | 31.75 | 33.50 | 34.18 | 0.9756 | 0.9551 | 0.9616 | 0.9678 | 0.9773 | 0.9832 |
| 19 | 37.87 | 34.12 | 34.35 | 33.47 | 38.07 | 38.40 | 0.9869 | 0.9724 | 0.9747 | 0.9794 | 0.9878 | 0.9932 |
| 20 | 36.74 | 34.70 | 36.27 | 35.48 | 37.39 | 38.61 | 0.9760 | 0.9761 | 0.9801 | 0.9813 | 0.9866 | 0.9915 |
| 21 | 35.54 | 33.22 | 33.50 | 33.59 | 36.85 | 36.52 | 0.9846 | 0.9682 | 0.9742 | 0.9782 | 0.9877 | 0.9914 |
| 22 | 34.58 | 32.69 | 33.44 | 33.81 | 35.53 | 35.65 | 0.9807 | 0.9636 | 0.9706 | 0.9767 | 0.9829 | 0.9884 |
| 23 | 37.67 | 28.23 | 36.92 | 38.56 | 39.94 | 38.65 | 0.9909 | 0.9759 | 0.9899 | 0.9918 | 0.9935 | 0.9982 |
| 24 | 32.00 | 30.84 | 30.79 | 29.65 | 30.91 | 32.98 | 0.9734 | 0.9608 | 0.9653 | 0.9642 | 0.9748 | 0.9837 |
| Avg. | 35.94 | 33.00 | 34.41 | 34.22 | 36.65 | 36.81 | 0.9831 | 0.9695 | 0.9758 | 0.9778 | 0.9866 | 0.9910 |
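
The CPSNR values in the Kodak table are color peak signal-to-noise ratios in dB. As a hedged sketch of how such a figure is conventionally computed, the function below pools the squared error over all pixels and all three color channels before taking the logarithm. The name `cpsnr`, the 8-bit peak value of 255, and the absence of any border cropping are assumptions; the exact evaluation protocol behind the reported numbers is not recoverable from the table.

```python
import numpy as np


def cpsnr(reference, estimate, peak=255.0):
    """Color PSNR in dB: one MSE pooled over every pixel and color channel.

    `peak` is the assumed maximum pixel value (255 for 8-bit images).
    """
    ref = np.asarray(reference, dtype=float)
    est = np.asarray(estimate, dtype=float)
    if ref.shape != est.shape:
        raise ValueError("reference and estimate must have the same shape")
    mse = np.mean((ref - est) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak ** 2 / mse)
```

With this definition, a uniform error of one gray level on an 8-bit image gives 20·log10(255), roughly 48.13 dB, which puts the 32 to 41 dB range of the table in context.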

| Dataset | Metric | Noise Free: CM1 | Noise Free: CM2 | Noise Free: CM3 | Noise Free: CM4 | Noise Free: CM5 | Noise Free: PM | Noise: CM1 | Noise: CM2 | Noise: CM3 | Noise: CM4 | Noise: CM5 | Noise: PM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Kodak | CPSNR | 35.94 | 33.00 | 34.41 | 34.22 | 36.65 | 36.81 | 32.16 | 31.88 | 31.88 | 30.34 | 32.18 | 32.32 |
| Kodak | SSIM | 0.9831 | 0.9695 | 0.9758 | 0.9778 | 0.9866 | 0.9910 | 0.9359 | 0.9341 | 0.9385 | 0.9194 | 0.9403 | 0.9391 |
| McM | CPSNR | 32.51 | 30.14 | 33.14 | 32.71 | 33.84 | 33.17 | 30.16 | 28.20 | 31.03 | 29.53 | 30.69 | 31.28 |
| McM | SSIM | 0.9703 | 0.9517 | 0.9748 | 0.9711 | 0.9770 | 0.9733 | 0.9303 | 0.9262 | 0.9430 | 0.9215 | 0.9364 | 0.9321 |
| Kodak + McM | CPSNR | 34.47 | 31.77 | 33.87 | 33.57 | 35.45 | 35.25 | 31.30 | 30.30 | 31.52 | 30.00 | 31.54 | 31.87 |
| Kodak + McM | SSIM | 0.9776 | 0.9619 | 0.9754 | 0.9749 | 0.9825 | 0.9834 | 0.9335 | 0.9307 | 0.9404 | 0.9203 | 0.9386 | 0.9361 |

| | CM1 | CM2 | CM3 | CM4 | CM5 | PM |
|---|---|---|---|---|---|---|
| Time (s) | 0.8392 | 5.5317 | 110.01 | 1.3872 | 1.4478 | 0.6994 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jeong, K.; Kim, J.; Kang, M.G. Color Demosaicing of RGBW Color Filter Array Based on Laplacian Pyramid. Sensors 2022, 22, 2981. https://doi.org/10.3390/s22082981
