S-NER: A Concise and Efficient Span-Based Model for Named Entity Recognition

Abstract

1. Introduction

2. Related Work

2.1. Sequence Labeling-Based NER Model

2.2. Span-Based NER Model

2.3. Span-Based Model for Joint Entity and Relation Extraction

3. Model

3.1. Model Encoder

3.1.1. Span Representation

3.1.2. Contextual Representation

3.1.3. Span Length Embedding

3.1.4. Span Semantic Representation

3.2. Model Decoder

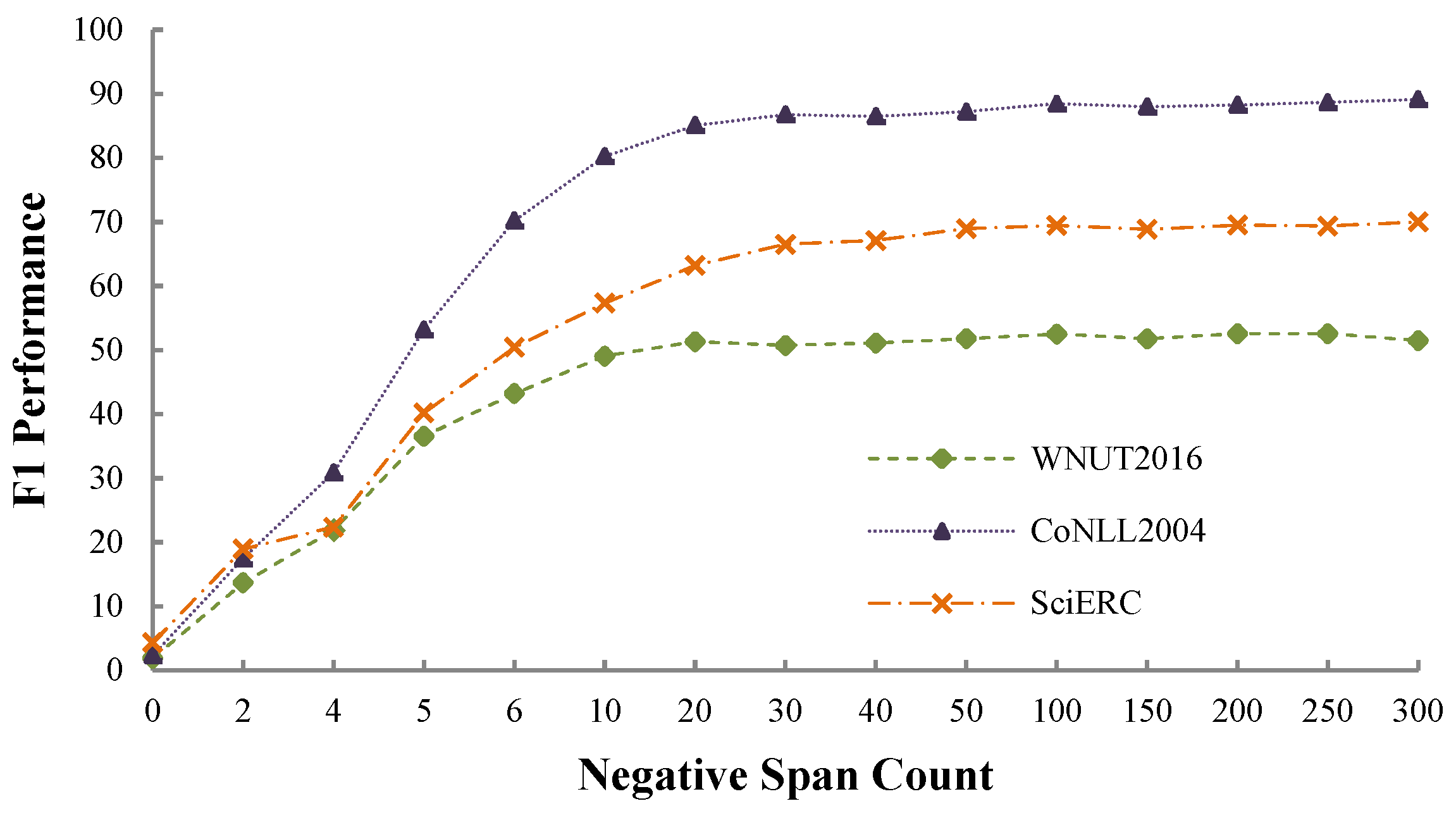

3.3. Negative Sampling Strategy

4. Experiment

4.1. Experimental Setup

4.1.1. Datasets

- The WNUT2016 dataset is constructed from Twitter. It includes ten types of entity (i.e., Geo_Loc, Facility, Movie, Company, Product, Person, Other, Sportsteam, TVShow and Musicartist). We use the same training (2934 sentences), development (3850 sentences) and test set (1000 sentences) split proposed by Nie et al. [34].

- The CoNLL2004 dataset consists of sentences from news articles. It includes four types of entity (i.e., People, Location, Organization and Other) and five types of relation (i.e., Work-For, Live-In, Kill, Organization-Based-In and Located-In). In this paper, we only use the entity annotations. We use the same training (1153 sentences) and test set (288 sentences) split proposed by Eberts and Ulges [28]. Moreover, 20% of the training set is taken as a held-out development part for hyperparameter tuning.

- The SciERC dataset is derived from 500 abstracts of AI papers and is composed of a total of 2687 sentences. It includes six types of scientific entity (i.e., Task, Material, Other-Scientific-Term, Method, Metric and Generic) and seven types of relation (Compare, Feature-Of, Part-Of, Conjunction, Evaluate-For, Used-For and Hyponym-Of). We only use the entity annotations. Moreover, we use the same training (1861 sentences), development (275 sentences) and test (551 sentences) split used by Eberts and Ulges [28].
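As a quick consistency check on the split sizes listed above, the following minimal sketch records them in a dictionary and illustrates one way to take 20% of the CoNLL2004 training set as a held-out development part. The helper `hold_out`, the random seed and the rounding rule are assumptions made for illustration; the exact hold-out procedure is not specified here.

```python
import random

# Split sizes as stated in the dataset descriptions above.
SPLITS = {
    "WNUT2016": {"train": 2934, "dev": 3850, "test": 1000},
    "CoNLL2004": {"train": 1153, "test": 288},
    "SciERC": {"train": 1861, "dev": 275, "test": 551},
}


def hold_out(sentences, dev_fraction=0.2, seed=0):
    """Shuffle and split into (train, dev).

    Hypothetical helper: the seed and the floor rounding are assumptions.
    """
    items = list(sentences)
    random.Random(seed).shuffle(items)
    n_dev = int(len(items) * dev_fraction)
    return items[n_dev:], items[:n_dev]


# The SciERC splits should add up to the stated total of 2687 sentences.
scierc_total = sum(SPLITS["SciERC"].values())

# Integer ids stand in for the 1153 CoNLL2004 training sentences.
train, dev = hold_out(range(SPLITS["CoNLL2004"]["train"]))
print(scierc_total, len(train), len(dev))
```

Under these assumptions the CoNLL2004 training set is divided into 923 training and 230 development sentences.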

4.1.2. Implementation Details

4.2. Main Results

4.3. Performance against Cascading Label Misclassification

4.4. Performance against Negative Sampling Strategy

4.5. Performance against Decoder Layers

4.6. Performance against Span Representation

4.7. Investigation of Model Training Speed

4.8. Case Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| NER | Named Entity Recognition |
| NLP | Natural Language Processing |
| S-NER | Span-Based Model for NER |
| RNN | Recurrent Neural Network |
| CNN | Convolutional Neural Network |
| MTL | Multi-Task Learning |
| CRF | Conditional Random Fields |
| FFN | Feed-Forward Network |
| BiLSTM | Bidirectional Long Short-Term Memory |
| BERT | Bidirectional Encoder Representation from Transformers |

References

1. Liu, S.; Sun, Y.; Li, B.; Wang, W.; Zhao, X. HAMNER: Headword amplified multi-span distantly supervised method for domain specific named entity recognition. AAAI Conf. Artif. Intell. 2020, 34, 8401–8408.

2. Jie, Z.; Lu, W. Dependency-Guided LSTM-CRF for Named Entity Recognition. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 3862–3872.

3. Sui, D.; Chen, Y.; Liu, K.; Zhao, J.; Liu, S. Leverage lexical knowledge for Chinese named entity recognition via collaborative graph network. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 3830–3840.

4. Yan, H.; Deng, B.; Li, X.; Qiu, X. TENER: Adapting Transformer Encoder for Named Entity Recognition. arXiv 2019, arXiv:1911.04474.

5. Huang, Z.; Xu, W.; Yu, K. Bidirectional LSTM-CRF models for sequence tagging. arXiv 2015, arXiv:1508.01991.

6. Lafferty, J.D.; McCallum, A.; Pereira, F.C. Conditional Random Fields: Probabilistic Models for Segmenting and Labeling Sequence Data. In Proceedings of the 18th International Conference on Machine Learning 2001 (ICML 2001), Williamstown, MA, USA, 28 June–1 July 2001.

7. Tan, C.; Qiu, W.; Chen, M.; Wang, R.; Huang, F. Boundary enhanced neural span classification for nested named entity recognition. AAAI Conf. Artif. Intell. 2020, 34, 9016–9023.

8. Dadas, S.; Protasiewicz, J. A bidirectional iterative algorithm for nested named entity recognition. IEEE Access 2020, 8, 135091–135102.

9. Li, F.; Lin, Z.; Zhang, M.; Ji, D. A Span-Based Model for Joint Overlapped and Discontinuous Named Entity Recognition. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, Online, 1–6 August 2021; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 4814–4828.

10. Luan, Y.; Wadden, D.; He, L.; Shah, A.; Ostendorf, M.; Hajishirzi, H. A general framework for information extraction using dynamic span graphs. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 3036–3046.

11. Dixit, K.; Al-Onaizan, Y. Span-level model for relation extraction. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 5308–5314.

12. Ouchi, H.; Suzuki, J.; Kobayashi, S.; Yokoi, S.; Kuribayashi, T.; Konno, R.; Inui, K. Instance-Based Learning of Span Representations: A Case Study through Named Entity Recognition. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 6452–6459.

13. Lin, B.Y.; Xu, F.F.; Luo, Z.; Zhu, K. Multi-channel bilstm-crf model for emerging named entity recognition in social media. In Proceedings of the 3rd Workshop on Noisy User-generated Text, Copenhagen, Denmark, 7–9 September 2017; pp. 160–165.

14. Zheng, S.; Wang, F.; Bao, H.; Hao, Y.; Zhou, P.; Xu, B. Joint Extraction of Entities and Relations Based on a Novel Tagging Scheme. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vancouver, BC, Canada, 30 July–4 August 2017; pp. 1227–1236.

15. Chen, J.; Yuan, C.; Wang, X.; Bai, Z. MrMep: Joint extraction of multiple relations and multiple entity pairs based on triplet attention. In Proceedings of the 23rd Conference on Computational Natural Language Learning (CoNLL), Hong Kong, China, 3–4 November 2019; pp. 593–602.

16. Zhou, P.; Zheng, S.; Xu, J.; Qi, Z.; Bao, H.; Xu, B. Joint extraction of multiple relations and entities by using a hybrid neural network. In Chinese Computational Linguistics and Natural Language Processing Based on Naturally Annotated Big Data; Springer: Berlin/Heidelberg, Germany, 2017; pp. 135–146.

17. Ye, H.; Zhang, N.; Deng, S.; Chen, M.; Tan, C.; Huang, F.; Chen, H. Contrastive Triple Extraction with Generative Transformer. arXiv 2020, arXiv:2009.06207.

18. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

19. Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692.

20. Fu, J.; Huang, X.; Liu, P. SpanNER: Named Entity Re-/Recognition as Span Prediction. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 1–6 August 2021; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 7183–7195.

21. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

22. Guo, Z.; Zhang, Y.; Lu, W. Attention Guided Graph Convolutional Networks for Relation Extraction. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 241–251.

23. Yu, J.; Bohnet, B.; Poesio, M. Named Entity Recognition as Dependency Parsing. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 6470–6476.

24. Nguyen, D.Q.; Verspoor, K. End-to-end neural relation extraction using deep biaffine attention. In Proceedings of the European Conference on Information Retrieval; Springer: Berlin/Heidelberg, Germany, 2019; pp. 729–738.

25. Luan, Y.; He, L.; Ostendorf, M.; Hajishirzi, H. Multi-Task Identification of Entities, Relations, and Coreference for Scientific Knowledge Graph Construction. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 3219–3232.

26. Ilic, S.; Marrese-Taylor, E.; Balazs, J.; Matsuo, Y. Deep contextualized word representations for detecting sarcasm and irony. In Proceedings of the 9th Workshop on Computational Approaches to Subjectivity, Sentiment and Social Media Analysis, Brussels, Belgium, 31 October–2 November 2018; pp. 2–7.

27. Wadden, D.; Wennberg, U.; Luan, Y.; Hajishirzi, H. Entity, Relation, and Event Extraction with Contextualized Span Representations. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 5784–5789.

28. Eberts, M.; Ulges, A. Span-based Joint Entity and Relation Extraction with Transformer Pre-training. In Proceedings of the 24th European Conference on Artificial Intelligence, Santiago De Compostela, Spain, 29 August–8 September 2020.

29. Wu, Y.; Schuster, M.; Chen, Z.; Le, Q.V.; Norouzi, M. Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation. arXiv 2016, arXiv:1609.08144.

30. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

31. Ji, B.; Yu, J.; Li, S.; Ma, J.; Wu, Q.; Tan, Y.; Liu, H. Span-based joint entity and relation extraction with attention-based span-specific and contextual semantic representations. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 88–99.

32. Strauss, B.; Toma, B.; Ritter, A.; De Marneffe, M.C.; Xu, W. Results of the wnut16 named entity recognition shared task. In Proceedings of the 2nd Workshop on Noisy User-generated Text (WNUT), Osaka, Japan, 11–12 December 2016; pp. 138–144.

33. Roth, D.; Yih, W.t. A Linear Programming Formulation for Global Inference in Natural Language Tasks. In Proceedings of the Eighth Conference on Computational Natural Language Learning (CoNLL-2004) at HLT-NAACL 2004, Boston, MA, USA, 6–7 May 2004; pp. 1–8.

34. Nie, Y.; Tian, Y.; Wan, X.; Song, Y.; Dai, B. Named Entity Recognition for Social Media Texts with Semantic Augmentation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 1383–1391.

35. Beltagy, I.; Lo, K.; Cohan, A. SciBERT: A Pretrained Language Model for Scientific Text. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 3615–3620.

36. Nguyen, D.Q.; Vu, T.; Nguyen, A.T. BERTweet: A pre-trained language model for English Tweets. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online, 16–20 November 2020; pp. 9–14.

37. Zhou, J.T.; Zhang, H.; Jin, D.; Zhu, H.; Fang, M.; Goh, R.S.M.; Kwok, K. Dual adversarial neural transfer for low-resource named entity recognition. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 3461–3471.

38. Shahzad, M.; Amin, A.; Esteves, D.; Ngomo, A.C.N. InferNER: An attentive model leveraging the sentence-level information for Named Entity Recognition in Microblogs. In Proceedings of the International FLAIRS Conference, North Miami Beach, FL, USA, 17–19 May 2021; Volume 34.

39. Wang, X.; Jiang, Y.; Bach, N.; Wang, T.; Huang, Z.; Huang, F.; Tu, K. Improving Named Entity Recognition by External Context Retrieving and Cooperative Learning. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 1–6 August 2021; pp. 1800–1812.

40. Yan, Z.; Zhang, C.; Fu, J.; Zhang, Q.; Wei, Z. A Partition Filter Network for Joint Entity and Relation Extraction. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Online, 7–11 November 2021; pp. 185–197.

41. Zhong, Z.; Chen, D. A Frustratingly Easy Approach for Entity and Relation Extraction. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 50–61.

42. Yu, H.; Mao, X.L.; Chi, Z.; Wei, W.; Huang, H. A robust and domain-adaptive approach for low-resource named entity recognition. In Proceedings of the 2020 IEEE International Conference on Knowledge Graph (ICKG), Nanjing, China, 9–11 August 2020; pp. 297–304.

43. Bekoulis, G.; Deleu, J.; Demeester, T.; Develder, C. Joint entity recognition and relation extraction as a multi-head selection problem. Expert Syst. Appl. 2018, 114, 34–45.

44. Tran, T.; Kavuluru, R. Neural metric learning for fast end-to-end relation extraction. arXiv 2019, arXiv:1905.07458.

45. Zhang, M.; Zhang, Y.; Fu, G. End-to-end neural relation extraction with global optimization. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 1730–1740.

46. Li, X.; Yin, F.; Sun, Z.; Li, X.; Yuan, A.; Chai, D.; Zhou, M.; Li, J. Entity-Relation Extraction as Multi-Turn Question Answering. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 1340–1350.

47. Crone, P. Deeper Task-Specificity Improves Joint Entity and Relation Extraction. arXiv 2020, arXiv:2002.06424.

48. Wang, J.; Lu, W. Two are Better than One: Joint Entity and Relation Extraction with Table-Sequence Encoders. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 1706–1721.

| Dataset | Model | Multi-Task Learning | Micro F1-Score | Macro F1-Score |
|---|---|---|---|---|
| WNUT2016 | BERTweet [36] | - | 52.10 | - |
| | DATNet [37] | - | 53.43 | - |
| | InferNER [38] | - | 53.48 | - |
| | TENER [4] | - | 54.06 | - |
| | SANER [34] | - | 55.01 | - |
| | SpanNER [20] | - | 56.27 | - |
| | CL-KL [39] ♠ | - | 58.98 | - |
| | S-NER | - | 60.12 (+1.14) | 46.77 (new) |
| SciERC | SciE [25] | ✓ | 64.20 | - |
| | SciBERT [35] | - | 65.50 | - |
| | DyGIE++ [27] | ✓ | 67.50 | - |
| | SpERT [28] | ✓ | 67.62 | - |
| | PFN [40] | ✓ | 66.80 | - |
| | PURE [41] | ✓ | 68.90 | - |
| | RDANER [42] ♠ | - | 68.96 | - |
| | SpERT (SciBERT) [28] | ✓ | 70.33 | - |
| | S-NER | - | 69.46 (+0.50) | 69.47 (new) |
| | S-NER (SciBERT) | - | 70.55 (+0.22) | 70.71 (new) |
| CoNLL2004 | Multi-head [43] | ✓ | - | 83.90 |
| | Relation-metric [44] | ✓ | - | 84.15 |
| | Biaffine [24] | ✓ | - | 86.20 |
| | Global [45] | ✓ | 85.60 | - |
| | Multi-turn QA [46] | ✓ | 87.80 | - |
| | SpERT [28] | ✓ | 88.94 | 86.25 |
| | Deeper [47] | ✓ | 89.78 | 87.00 |
| | Table-sequence [48] | ✓ | 90.10 | 86.90 |
| | S-NER | - | 90.36 (+0.26) | 88.41 (+1.41) |
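The table above reports both micro- and macro-averaged F1-scores. The following minimal sketch shows how the two averages differ on span-level predictions. The function names and the toy spans are hypothetical. Averaging the macro score over every entity type that appears in the gold or predicted set is an assumption, since the exact evaluation protocol is not given here.

```python
from collections import Counter


def f1(tp, fp, fn):
    """F1 from raw counts; returns 0.0 when undefined."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return 2 * p * r / (p + r) if p + r else 0.0


def micro_macro_f1(gold, pred):
    """gold, pred: sets of (sentence_id, start, end, entity_type) tuples."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for span in pred:
        # An exact match on boundaries and type counts as a true positive.
        (tp if span in gold else fp)[span[3]] += 1
    for span in gold - pred:
        fn[span[3]] += 1
    types = sorted({s[3] for s in gold | pred})
    # Micro: pool the counts over all types, then compute one F1.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    # Macro: compute F1 per type, then take the unweighted mean.
    macro = sum(f1(tp[t], fp[t], fn[t]) for t in types) / len(types) if types else 0.0
    return micro, macro


# Toy example: three Person spans are correct, one Location boundary is wrong.
gold = {(0, 0, 2, "Person"), (0, 5, 9, "Location"), (1, 0, 1, "Person"), (1, 3, 4, "Person")}
pred = {(0, 0, 2, "Person"), (0, 5, 7, "Location"), (1, 0, 1, "Person"), (1, 3, 4, "Person")}
micro, macro = micro_macro_f1(gold, pred)
print(micro, macro)  # 0.75 0.5
```

In this toy case the rare Location type pulls the macro average well below the micro average, which is one reason the two columns can differ considerably on datasets with skewed type distributions.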

| Model | WNUT2016 (F1-Score) | Model | CoNLL2004 (F1-Score) | Model | SciERC (F1-Score) |
|---|---|---|---|---|---|
| CL-KL | 16.79 | Table-sequence | 27.44 | RDANER | 21.57 |
| S-NER | 53.63 | S-NER | 73.46 | S-NER | 44.89 |

| Model | WNUT2016 (F1-Score) | CoNLL2004 (F1-Score) | SciERC (F1-Score) |
|---|---|---|---|
| S-NER w/ negative sampling strategy | 53.77 | 87.36 | 68.87 |
| S-NER w/o negative sampling strategy | 54.17 | 87.12 | 69.46 |

| Model | WNUT2016 (Spans/Sent) | CoNLL2004 (Spans/Sent) | SciERC (Spans/Sent) |
|---|---|---|---|
| S-NER w/ negative sampling strategy | 81 | 87 | 96 |
| S-NER w/o negative sampling strategy | 167 | 246 | 198 |
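To make the spans-per-sentence figures more concrete, the sketch below enumerates all candidate spans of a sentence and then keeps the gold spans plus a random subset of non-entity spans. This is a generic random negative sampling scheme in the style of earlier span-based models, assumed for illustration. The sampling rule, the maximum span length `max_len` and the cap `n_neg` are hypothetical and are not taken from S-NER.

```python
import random


def enumerate_spans(n_tokens, max_len):
    """All (start, end) spans with end exclusive and length <= max_len."""
    return [
        (i, j)
        for i in range(n_tokens)
        for j in range(i + 1, min(i + max_len, n_tokens) + 1)
    ]


def sample_training_spans(n_tokens, gold_spans, max_len=10, n_neg=100, seed=0):
    """Keep every gold span plus at most n_neg randomly chosen non-entity spans.

    max_len, n_neg and seed are illustrative values, not the paper's settings.
    """
    gold = set(gold_spans)
    negatives = [s for s in enumerate_spans(n_tokens, max_len) if s not in gold]
    rng = random.Random(seed)
    sampled = rng.sample(negatives, min(n_neg, len(negatives)))
    return sorted(gold) + sampled


# A 10-token sentence with two gold entity spans at tokens 0-1 and 5-8.
gold = [(0, 2), (5, 9)]
all_spans = enumerate_spans(10, max_len=10)
kept = sample_training_spans(10, gold, max_len=10, n_neg=20)
print(len(all_spans), len(kept))  # 55 22
```

Without any length limit a sentence of n tokens yields n(n+1)/2 candidate spans, so capping the number of negatives reduces the number of spans the model has to classify during training.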

| Model | WNUT2016 (Sents/s) | CoNLL2004 (Sents/s) | SciERC (Sents/s) |
|---|---|---|---|
| S-NER w/ negative sampling strategy | 44 | 67 | 53 |
| S-NER w/o negative sampling strategy | 32 | 31 | 34 |

| Model | WNUT2016 (F1-Score) | CoNLL2004 (F1-Score) | SciERC (F1-Score) |
|---|---|---|---|
| S-NER + decoder with 1 FFN layer | 53.77 | 87.36 | 68.87 |
| S-NER + decoder with 2 FFN layers | 51.66 | 87.54 | 68.12 |
| S-NER + decoder with 3 FFN layers | 51.79 | 88.71 | 67.49 |

| Model | WNUT2016 (F1-Score) | CoNLL2004 (F1-Score) | SciERC (F1-Score) |
|---|---|---|---|
| S-NER + max-pooling | 53.77 | 87.36 | 68.87 |
| S-NER + average-pooling | 52.71 | 86.45 | 67.43 |
| S-NER + boundary | 52.23 | 87.21 | 66.99 |
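The three span representations compared above can be sketched on plain Python lists as follows. The function names are hypothetical, and treating "Boundary" as the concatenation of the first and last token embeddings of the span is an assumption based on common practice; the exact definition is not given here.

```python
def max_pool(vectors):
    """Element-wise maximum over the token vectors of a span."""
    return [max(dim) for dim in zip(*vectors)]


def avg_pool(vectors):
    """Element-wise mean over the token vectors of a span."""
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def boundary(vectors):
    """Concatenation of the first and last token vectors (assumed definition)."""
    return vectors[0] + vectors[-1]


def span_repr(token_vectors, start, end, mode="max"):
    """Representation of the span [start, end) under the chosen mode."""
    span = token_vectors[start:end]
    return {"max": max_pool, "avg": avg_pool, "boundary": boundary}[mode](span)


# Toy 2-dimensional token embeddings for a 4-token sentence.
tokens = [[1.0, -2.0], [3.0, 0.0], [-1.0, 4.0], [0.5, 0.5]]
print(span_repr(tokens, 0, 3, "max"))       # [3.0, 4.0]
print(span_repr(tokens, 0, 3, "avg"))
print(span_repr(tokens, 0, 3, "boundary"))  # [1.0, -2.0, -1.0, 4.0]
```

Note that the pooled representations keep the embedding dimension, whereas the boundary variant doubles it and ignores the tokens inside the span.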

| Model | WNUT2016 (Sents/s) | Model | CoNLL2004 (Sents/s) | Model | SciERC (Sents/s) |
|---|---|---|---|---|---|
| CL-KL | 36 | Table-sequence | 32 | RDANER | 37 |
| S-NER | 44 | S-NER | 67 | S-NER | 53 |

| Case/Model | Field | Content |
|---|---|---|
| WNUT2016 | | |
| Case 1 | Text | Kern Valley will play the San Diego Jewish Academy tomorrow |
| | Label | B-SpSt I-SpSt O O O B-GeoLoc I-GeoLoc I-GeoLoc I-GeoLoc O |
| | Entity | (Kern Valley); (San Diego Jewish Academy) |
| CL-KL | Label | B-SpSt I-SpSt O O O B-Loc I-Loc B-Org I-Org O |
| | Entity | (Kern Valley); (San Diego); (Jewish Academy) |
| S-NER | Entity | (Kern Valley); (San Diego Jewish Academy) |
| SciERC | | |
| Case 2 | Text | The model is evaluated on English and Czech newspaper texts |
| | Label | O B-Generic O O O B-Mat I-Mat I-Mat I-Mat I-Mat |
| | Entity | (model); (English and Czech newspaper texts) |
| RDANER | Label | O B-Generic O O O B-Mat O B-Mat I-Mat I-Mat |
| | Entity | (model); (English); (Czech newspaper texts) |
| S-NER | Entity | (model); (English and Czech newspaper texts) |
| CoNLL2004 | | |
| Case 3 | Text | Paul Fournier, a spokesman for the state Department of Inland Fisheries and Wildlife |
| | Label | B-Per I-Per O O O O O B-Org I-Org I-Org I-Org I-Org I-Org |
| | Entity | (Paul Fournier); (Department of Inland Fisheries and Wildlife) |
| Table-sequence | Label | I-Per I-Per O O O O B-Org I-Org O B-Other I-Other I-Other I-Other |
| | Entity | (Paul Fournier); [state Department]; [Inland Fisheries and Wildlife] |
| S-NER | Entity | (Paul Fournier); (Department of Inland Fisheries and Wildlife) |
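The cases above show how a single wrong token label changes the entities recovered from a label sequence. The minimal sketch below decodes BIO labels into entities and reproduces Case 2. The decoder `bio_to_entities` is a generic, lenient BIO decoder written for illustration and is not the decoding procedure of any of the compared models.

```python
def bio_to_entities(tokens, labels):
    """Group B-/I- labelled tokens into (entity_text, entity_type) pairs."""
    entities, current, current_type = [], [], None
    for token, label in zip(tokens, labels):
        starts_new = label.startswith("B-") or (
            label.startswith("I-") and current_type != label[2:]
        )
        if starts_new:
            # Close any open entity and start a new one.
            if current:
                entities.append((" ".join(current), current_type))
            current, current_type = [token], label[2:]
        elif label.startswith("I-"):
            current.append(token)
        else:
            # An "O" label closes any open entity.
            if current:
                entities.append((" ".join(current), current_type))
            current, current_type = [], None
    if current:
        entities.append((" ".join(current), current_type))
    return entities


tokens = "The model is evaluated on English and Czech newspaper texts".split()
gold = "O B-Generic O O O B-Mat I-Mat I-Mat I-Mat I-Mat".split()
pred = "O B-Generic O O O B-Mat O B-Mat I-Mat I-Mat".split()

print(bio_to_entities(tokens, gold))
# [('model', 'Generic'), ('English and Czech newspaper texts', 'Mat')]
print(bio_to_entities(tokens, pred))
# [('model', 'Generic'), ('English', 'Mat'), ('Czech newspaper texts', 'Mat')]
```

Labelling the token "and" as O splits one Material entity into two incorrect ones. A span-based model avoids this failure mode by classifying each candidate span as a whole.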

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yu, J.; Ji, B.; Li, S.; Ma, J.; Liu, H.; Xu, H. S-NER: A Concise and Efficient Span-Based Model for Named Entity Recognition. Sensors 2022, 22, 2852. https://doi.org/10.3390/s22082852