1. Introduction

The personalized production paradigm that characterizes Industry 4.0 allows customers to order unique products with characteristics that they specify themselves. The linear, sequential, and standardized production lines that emphasized the high productivity and product flow required by the previous (third) industrial revolution no longer satisfy consumers’ current needs and demands for personalization. Industry, however, is not yet fully prepared to deal with this paradigm [1,2,3].

While following this new trend and managing the demand for innovative products, industries are expected to maintain high production levels without decreasing product quality in order to remain relevant in a competitive market. To do so, a high level of production flexibility, decentralized decision-making, and intelligent use of available resources are required to allow customized mass production without significantly increasing production costs [1,2,4]. Industrial automation alone is no longer enough to fulfill these new requirements, and traditional robotic systems alone are no longer suitable for every task that each production process requires. This need paved the way for the emergence of new technologies, such as collaborative robots and augmented reality, and of concepts, such as human–robot collaboration and the smart operator or Operator 4.0.

Even though factories are increasingly employing smarter and connected technologies, human workers still have a dominant role in the production process. Combinations of humans and machines have the potential to outperform either working alone, since their capabilities complement each other. For example, humans can handle uncertainties that demand cognitive knowledge and dexterity, while robots supply greater physical strength and precision. This complementarity suggests an ideal system in which humans and robots combine their strengths, support each other without being limited by their individual constraints, and work towards a common goal. This way of working is referred to as Human–Robot Collaboration (HRC) [5].

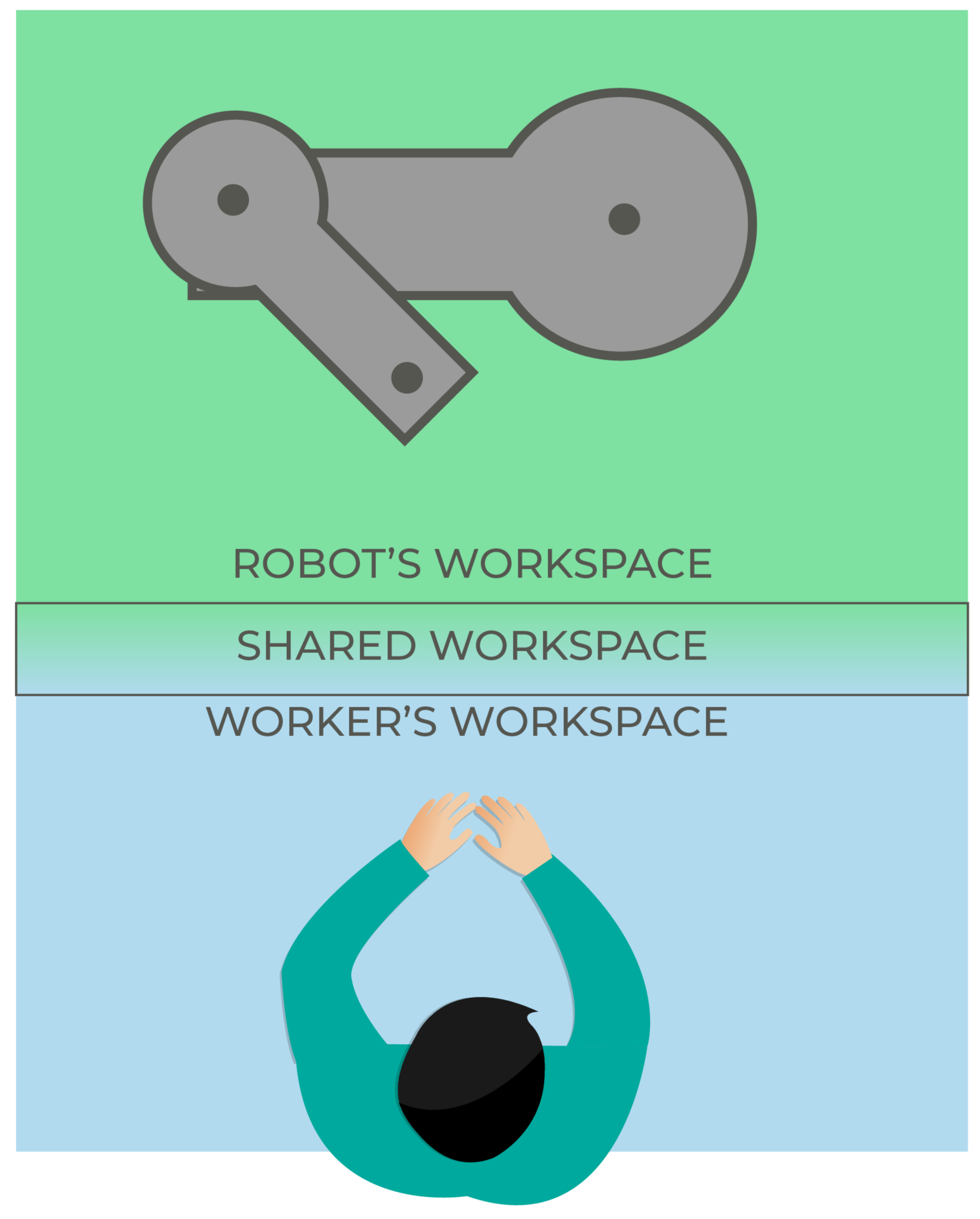

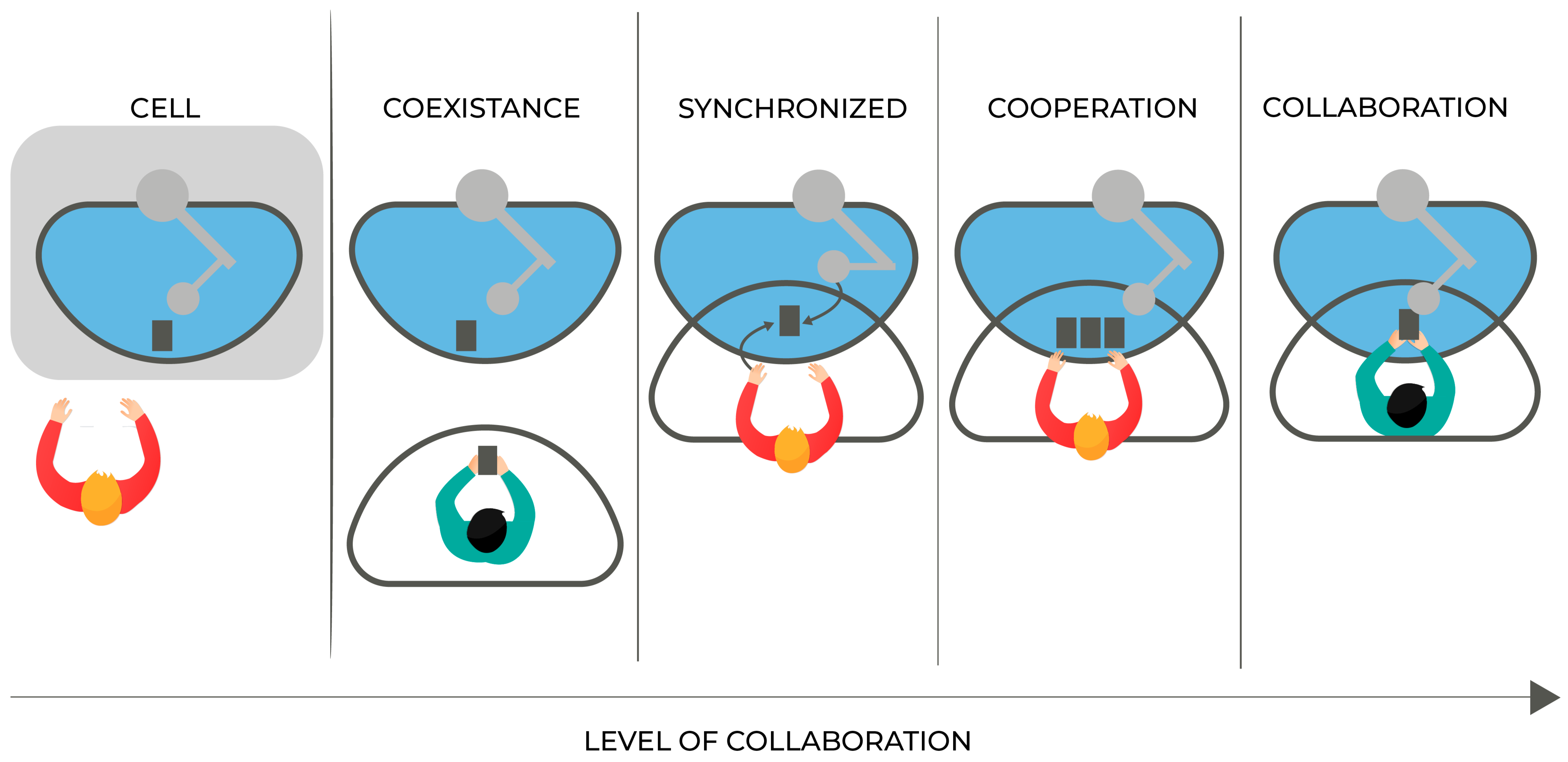

To work safely alongside human operators, industrial robots operating in mechanized, repetitive production lines are now being replaced by collaborative robots, or cobots. The ISO 10218-2:2011 standard defines collaborative robots as robots designed for direct interaction with a human within a limited collaborative workspace. The same standard defines a collaborative workspace as a common area within the safeguarded space where both humans and robots can perform tasks simultaneously during production operations. Hentout et al. [6] present a comparison between traditional industrial robots and industrial collaborative robots, in which the main differences can be summarized in three major points: the ability to interact safely with humans in a shared workspace, allocation flexibility (lightweight structure, smaller size), and ease of programming.

It is worth mentioning that robots are not collaborative per se. Instead, applications can be rendered collaborative by adopting adequate safety features. Moreover, the term “collaborative robots” suggests the erroneous idea that the robot is intrinsically safe to work with the operator in all situations [7]. For example, a robot with a sharp-edged end effector remains dangerous even when working at low speed and with limited torque.

There are many collaborative robot manufacturers currently on the market, for example, ABB [8], KUKA [9], Rethink Robotics [10], Universal Robots [11], COMAU [12], Franka Emika [13], Yaskawa [14], and TECHMAN [15], among many others. Villani et al. [16] compiled and compared the specifications of several models in their work. Collaborative robots can be used in numerous industrial applications, such as Assembly (screwdriving, part insertion, etc.) [17]; Dispensing (gluing, sealing, painting, etc.) [18]; Finishing (sanding, polishing, etc.) [19]; Machine Tending (CNC, injection molding, etc.) [20]; Material Handling (packaging, palletizing, etc.) [21]; Material Removal (grinding, deburring, milling, etc.) [22]; Quality Inspection (testing, measuring, etc.) [23]; Welding [24]; and many more.

Recognizing that the transition from purely manual or automated production to a human–robot cooperative scenario must benefit not only the manufacturing industry (shorter processing times, higher quality, and better control of production processes) but also the operators (improved working conditions, skills, and capabilities), Romero et al. [25,26] address the “smart operator” topic from a human-centered perspective of Industry 4.0. The authors define Operator 4.0 as an intelligent and skilled operator who performs work aided by machines if and as needed. Romero et al. [26] also describe the typologies of Operator 4.0, which were later compiled by Zolotová et al. [27] into the following definition:

Smart operator: an operator who benefits from technology to understand production through context-sensitive information. A smart operator uses equipment capable of enriching the real world with virtual and augmented reality, uses a personal assistant and social networks, analyzes acquired data, wears trackers, and works with robots to obtain additional advantages.

According to Milgram and Kishino [28], Augmented Reality (AR) lies between the real environment and virtual reality (VR) and is therefore considered a mixed reality (MR) technology. They define AR as a real environment “augmented” by means of virtual (computer graphic) objects. Azuma [29] defined AR as any system that has the following properties, a definition that avoids limiting AR to specific technologies, such as head-mounted displays, as previous authors had done:

Combines real and virtual objects;

Is interactive in real time;

Is registered in three dimensions.

This definition can thus be restated as follows: AR is a technology capable of enhancing a person’s perception of the physical environment through interactive digital information virtually superimposed on the real world.

AR is not yet fully mature for every industrial application. On the hardware side, ergonomic aspects such as weight and movement restraint limit the number of components and features that visualization equipment can have; on the software side, tracking and recognition technologies still have limitations, and there is a lack of standards on application development and on which information should be displayed to the user, and how; moreover, as a new technology, AR is yet to be fully integrated into enterprise resource planning (ERP) and manufacturing execution systems (MES) [30,31]. Nevertheless, AR has been used in many different industrial applications, such as: Assembly support [32]; Maintenance support [33]; Remote assistance [34]; Logistics [35]; Training [36]; Quality Control [37]; Data visualization/feedback [38]; Welding [39]; Product design/authoring [40]; and Safety [41], among other applications, including Human–Robot Collaboration, which is discussed next. These applications show that AR is a promising tool to improve the operator’s performance and safety conditions, speed up task execution, decrease error rates, rework, and redundant inspection, and reduce mental workload.

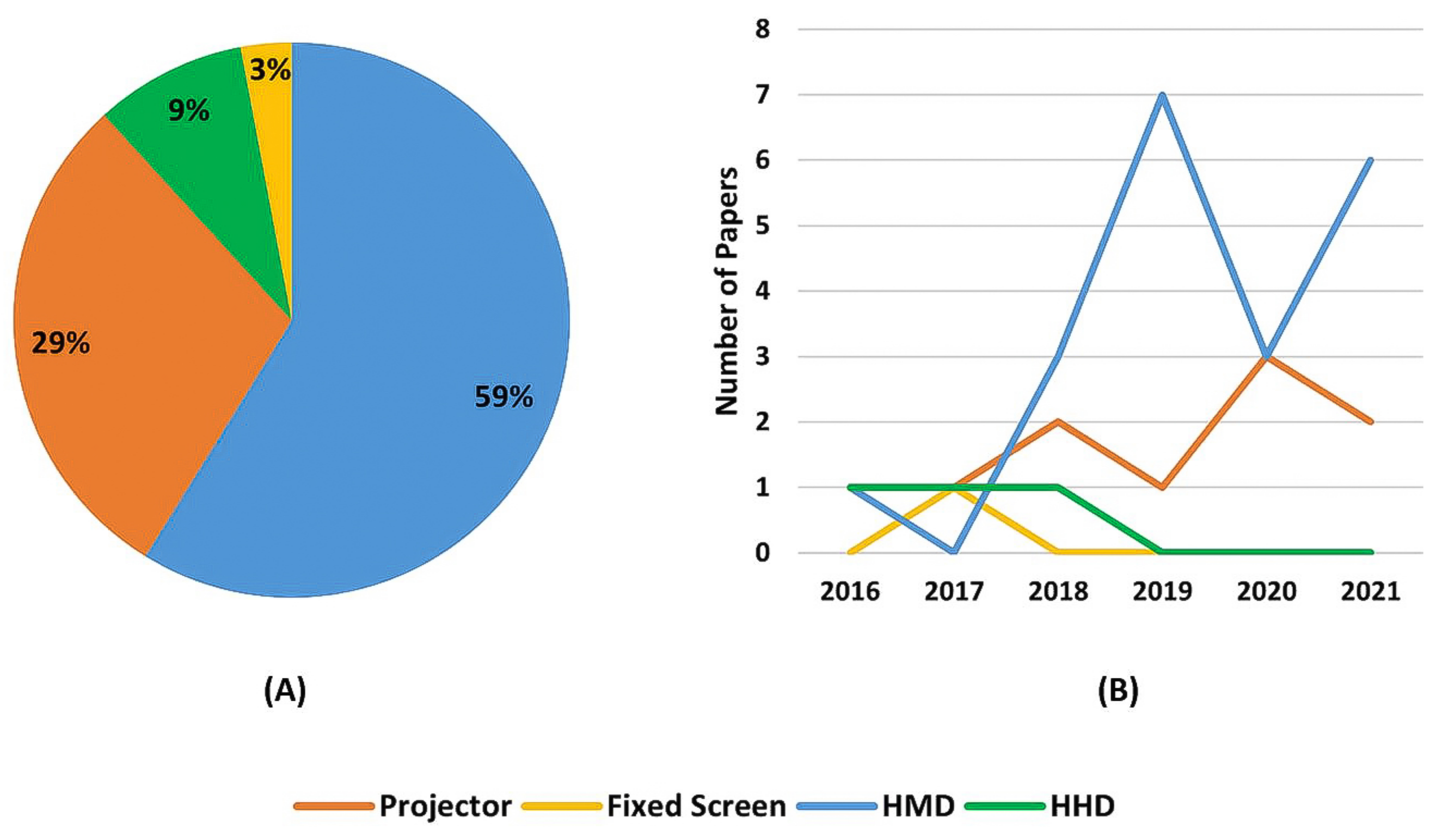

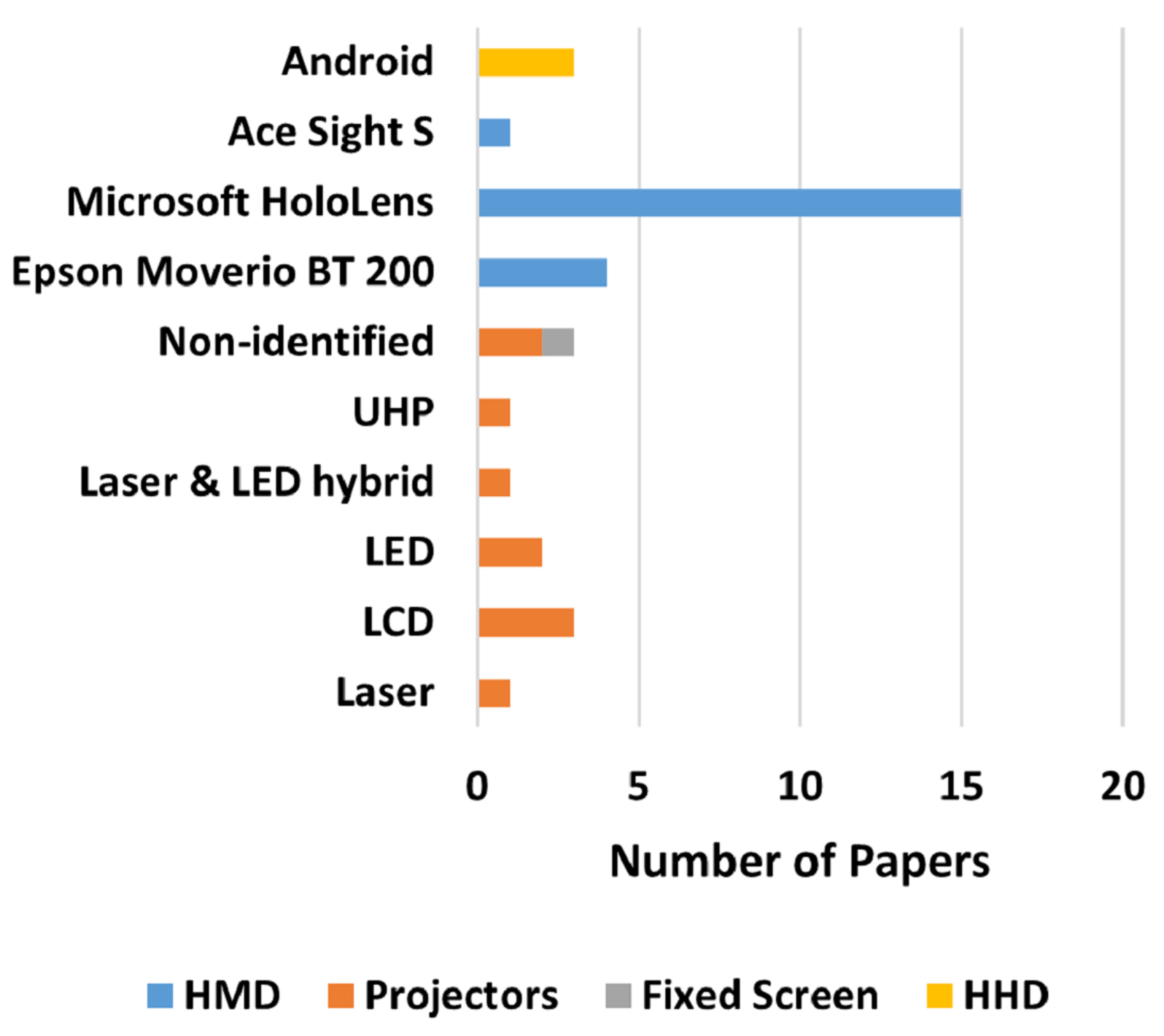

AR visualization technologies can be classified into four main categories, as shown in Table 1 [30,31,42,43,44].

Another important requirement of AR applications is the activation and tracking method, which can be divided into two main categories: marker-based and markerless systems. As the names imply, the main difference between the two is that the former relies on physical fiducial markers, such as QR codes, placed where the virtual information is to be anchored and displayed to the user. Although this method tends to be easier to implement, it can suffer in industrial environments due to dirt, corrosion, and poor lighting conditions. In contrast, markerless systems do not depend on fiducial markers; instead, they rely on tracking natural features of objects, such as edges and colors. This requires much higher processing capacity because the detection algorithms are more complex and computationally intensive, and markerless systems can also be affected by poor lighting. For example, a markerless tracking system could continuously scan the environment and compare each frame to a CAD model to find a matching pattern or a gesture, whereas a marker-based tracking system scans for a single predefined pattern that triggers the rendering of the virtual information shown to the user [45,46]. Moreover, tracking systems are important to ensure the accuracy and repeatability of mixed reality systems, which are indispensable for some applications, such as programming by demonstration [47].
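To make the idea of anchoring concrete: once a marker-based tracker has estimated a marker's pose relative to the camera, virtual content defined relative to that marker can be placed by composing homogeneous transforms. The following is a minimal sketch under stated assumptions, not the method of any reviewed paper: poses are restricted to a rotation about the z-axis plus a translation, and the helper names `pose`, `compose`, and `transform_point` are hypothetical.

```python
from __future__ import annotations

import math


def pose(yaw_rad: float, tx: float, ty: float, tz: float) -> list[list[float]]:
    """4x4 homogeneous transform: rotation about z by yaw_rad, then translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def compose(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Matrix product a @ b: if a maps frame B to A and b maps C to B, the result maps C to A."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform_point(t: list[list[float]], p: tuple[float, float, float]) -> tuple[float, ...]:
    """Apply a homogeneous transform to a 3-D point."""
    v = (*p, 1.0)
    return tuple(sum(t[i][k] * v[k] for k in range(4)) for i in range(3))


# Hypothetical numbers: a marker detected 0.5 m in front of the camera and a
# virtual label anchored 0.1 m along the marker's x-axis.
camera_T_marker = pose(0.0, 0.0, 0.0, 0.5)
marker_T_label = pose(0.0, 0.1, 0.0, 0.0)
camera_T_label = compose(camera_T_marker, marker_T_label)
print(transform_point(camera_T_label, (0.0, 0.0, 0.0)))
```

When the tracker reports a new marker pose in a later frame, only `camera_T_marker` changes, and the label follows the marker because its offset is expressed in the marker's frame.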

With the continuously growing use of industrial collaborative robots, the need for seamless human–robot interaction has also increased. Seamless cooperation between a human operator and a robot agent is a key factor in reaching a more flexible, effective, and efficient production line: it reduces the number of errors and the time required to finish a task while improving the operator’s ergonomics at work, lowering their cognitive load, enabling decentralized decisions, and making better use of available resources.

As a promising tool for helping human operators understand robots’ intentions and interact with them, Augmented Reality is expected to fill this gap and solve the “human in the loop” integration problem by properly overlaying information onto the operators’ field of view and feeding their inputs into the cyber-physical system. AR has been shown to be effective for Human–Robot Interaction in numerous fields of application, such as: Assembly guidance [48,49]; Data visualization/feedback [50]; Safety [51]; Programming/authoring [52,53], and so on. The growing market expectations for both technologies [54,55] are consistent with the upward trend in the number of research papers in the field of augmented reality for human–robot collaboration.

Table 1.

AR visualization technologies and their characteristics.


| Visualization Technology | Examples | Characteristics |
|---|---|---|
| Head-Mounted Displays (HMDs) | Smart glasses and helmet devices, such as Microsoft HoloLens [56] and Epson MOVERIO [57] | Head-Mounted Displays mainly use optical see-through technology, in which the virtual content is superimposed on the operator’s field of view through a transparent display in front of the eyes, allowing the user to see both the real world and the digital information. This technology is portable and, unlike Hand-Held Displays, hands-free. Its drawbacks are bulkiness and eyestrain, which make it somewhat cumbersome to use for long periods. |
| Hand-Held Displays (HHDs) | Devices such as tablets and smartphones | Hand-Held Displays use video see-through, in which the device’s built-in camera records frames of the real world that are then augmented with virtual content. These devices are widely used due to their low cost, easy deployment, usability, and portability, which means that they are not restricted to stationary applications. However, they have the most significant drawback of all: the operator must hold them during use. Because they are not hands-free, they are not ideal for manual tasks. In addition, they require the operator to shift some attention away from the task. |
| Fixed or Static Screens | Devices such as televisions and computer screens | Fixed screens display an augmented view of the real world on a stationary monitor. They need a combination of external image-capturing devices and processing tools to generate and display the augmented content. Their drawbacks are that they require the operator to shift attention away from the task and that they are limited to stationary applications, since a large screen is not easily portable. |
| Spatial Displays | Projection equipment | Projectors display augmented content directly over real objects and, like fixed screens, need to be combined with external image-capturing devices and processing tools. Unlike fixed screens, they do not require the user to shift attention away from the task, although they are also limited to stationary applications. Both fixed screens and spatial displays facilitate collaborative tasks among users since, unlike HMDs and HHDs, they are not associated with a single user. One possible drawback of this modality is that, when a single projector is used, it can suffer from occlusion problems. This modality is also known as Spatial Augmented Reality (SAR). |

Previous reviews have compiled the state of the art of Augmented Reality for industrial applications and highlighted the advancements and current challenges in its implementation in production systems. Danielsson et al. [58] assessed the current maturity level of Augmented Reality Smart Glasses (ARSG) for industrial assembly operations and highlighted the efforts required before large-scale implementation, from both a technological and an engineering perspective. Makhataeva and Varol [59] compiled several AR applications from 2015 to 2019 in medical applications, motion planning and control, human–robot interaction, and multi-agent systems, providing an overview of AR, VR, and MR research trends in each category. Hentout et al. [6] presented a literature review of works on human–robot interaction with industrial collaborative robots published between 2008 and 2017. Bottani and Vignali [60] reviewed the scientific literature on AR applied to the manufacturing industry from 2006 to early 2017, organizing 174 papers by publication year, publication country, keywords, application field, industrial sector, technological devices, tracking technology, user tests, and results. De Pace et al. [4] brought together papers on augmented reality interfaces for collaborative industrial robots from 2001 to 2019 and analyzed them to identify the main uses and typologies of AR for collaborative industrial robots, the most frequently analyzed objective and subjective data for evaluating applications, and the most adopted questionnaires for obtaining subjective data. They also identified the main strengths and weaknesses of AR for these applications and pointed out future developments regarding AR interfaces for collaborative industrial robots.

Unlike the reviews mentioned above, this systematic literature review addresses AR applications specifically applied to human–robot cooperative and collaborative work. To be considered, a paper must include an application in which humans and robots work together, either on the same task or on different tasks simultaneously, while sharing a common workspace.

The purpose of this review is to categorize the recent literature on Augmented Reality for Human–Robot Collaboration, published from early 2016 to late 2021, to examine the evolution of this research field, identify the main areas and sectors where it is currently deployed, describe the adopted technological solutions, as well as highlight the key benefits that can be achieved with this technology.

The remainder of this article is organized as follows.

Section 2 presents the definitions of terms adopted in this work.

Section 3 describes the research methodology adopted for the literature survey and presents some preliminary information about the studies analyzed.

Section 4 details the survey results and includes not only descriptive statistics on the sample of papers reviewed but also their categorization and detailed analysis. Finally,

Section 5 summarizes the key findings of the review, discusses the related scientific and practical implications, indicates potential future research, and presents this work’s final considerations.

3. Methodology

To assess the current use of Augmented Reality in industrial human–robot collaborative and cooperative operations, a Systematic Literature Review (SLR) approach was adopted. Fink [73] defined SLR as a “systematic, explicit, and reproducible method for identifying, evaluating, and synthesizing the existing body of completed and recorded work produced by researchers, scholars, and practitioners”. It aims to collect all related publications and documents that fit predefined inclusion criteria in order to answer a specific research question, using unambiguous and systematic procedures to minimize bias during the searching, appraisal, synthesis, and analysis of studies [74]. This sequence of steps is also known as the SALSA (Search, AppraisaL, Synthesis and Analysis) framework. Based on De Pace et al.’s [4] rearrangement of Grant and Booth’s [75] SALSA framework, the procedure presented in Table 2 was adopted to guide the execution of this systematic review. This framework ensures the replicability and transparency of this study [76]. For this research, Parsifal [77], an online tool that supports the design and conduct of systematic literature reviews, was used.

3.1. Protocol

The Population, Intervention, Comparison, Outcome, and Context (PICOC) framework is a useful strategy for determining the research scope: it helps to formulate research questions, determine the research boundaries, and identify the proper research method [4,74]. Other similar works have also applied this methodology, such as Fernández del Amo et al. [78] and Khamaisi et al. [79]. This paper’s PICOC framework is presented in Table 3.

The resulting objectives are presented in the form of research questions in the list below:

What are the main AR visualization technologies used in the industrial Human–Robot collaboration and cooperation context?

What are the main fields of application of AR in the industrial Human–Robot collaboration and cooperation context?

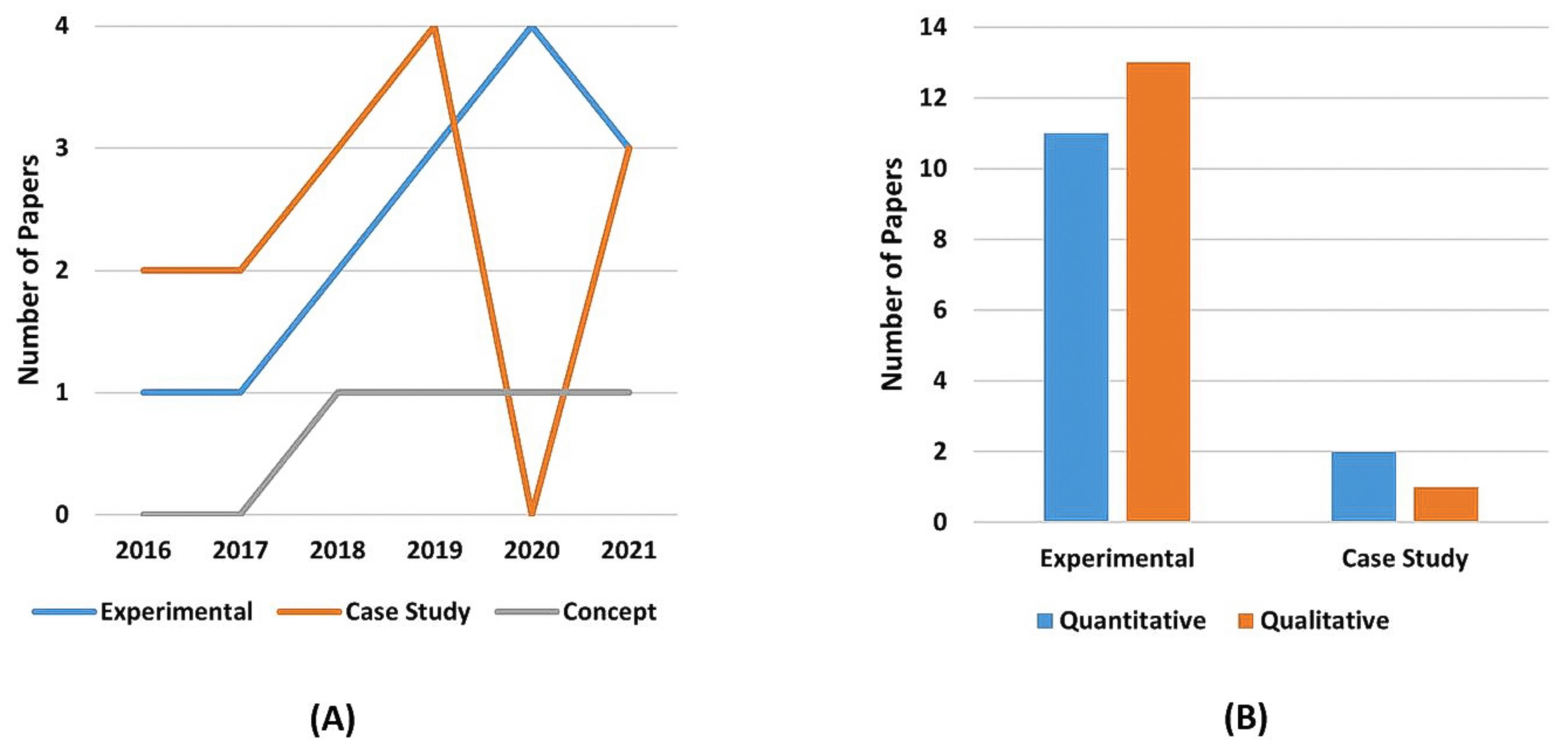

What is the current state of the art of AR applications for Human–Robot collaboration and cooperation? Is research focusing on experimental or conceptual applications? What are the most used assessment techniques and indicators? What are the research gaps in AR for the industrial Human–Robot collaboration and cooperation context?

Having defined the questions to be answered by this SLR, the following subsections describe the procedure adopted throughout this research.

3.2. Search

The search phase consists of identifying sources of information that could be relevant to this research and defining the search string. Even though some search engines, such as Scopus and Web of Science, encompass overlapping publication venues [80], multiple databases were used in order to assess their relevance for this specific field of study by comparing how many of the selected papers could be found in each one. The selected search engines were therefore: ACM Digital Library [81], Dimensions [82], IEEE Xplore [83], Web of Science [84], ScienceDirect [85], Scopus [86], and EBSCO [87].

During the search phase, the aforementioned plurality of interpretations of some terms relevant to this research became apparent. Thus, based on combinations of the main keywords (Augmented Reality, Mixed Reality, Cooperation, Collaboration), multiple strings were created to address this issue and cover the largest number of papers, preventing any relevant article from being left out. These strings are presented in general form below; minor modifications were needed for specific search engines that do not support wildcards and to restrict the search to the Title, Abstract, and Keywords fields. They are:

((“Augmented reality” OR “AR”) AND (“human–machine collaborati*” OR “HMC” OR “human–robot collaborati*” OR “HRC”))

((“Mixed reality” OR “MR”) AND (“human–machine collaborati*” OR “human–robot collaborati*”))

((“Augmented reality” OR “AR”) AND (“human–machine cooperati*” OR “human–robot cooperati*”))

((“Mixed reality” OR “MR”) AND (“human–machine cooperati*” OR “human–robot cooperati*”))

It should be noted that abbreviations were used only in the first search string. After the first search, it became clear that these abbreviations did not retrieve relevant articles; instead, they returned a large number of papers outside the scope of this research. Therefore, they were discarded from the subsequent search strings. The search was carried out on 3 January 2022.
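The way such a boolean string filters records, and why short abbreviations attract off-topic papers, can be illustrated with a small matcher. This is a hypothetical sketch, not how Scopus or any other engine evaluates queries: it assumes a query is an AND of OR-groups, that a trailing `*` matches any word ending, that matching is case-insensitive, and it uses ASCII hyphens instead of en dashes. The names `term`, `matches`, `STRING_1`, and `STRING_1_NO_HMC_HRC` are illustrative.

```python
from __future__ import annotations

import re


def term(pattern: str) -> re.Pattern:
    """Compile one quoted term; a trailing '*' acts as a wildcard for any word ending."""
    stem = re.escape(pattern.rstrip("*"))
    ending = r"\w*" if pattern.endswith("*") else r"\b"
    return re.compile(r"\b" + stem + ending, re.IGNORECASE)


def matches(query: list[list[str]], text: str) -> bool:
    """A query is an AND of OR-groups, mirroring (A OR B) AND (C OR D)."""
    return all(any(term(t).search(text) for t in group) for group in query)


# First search string, written with ASCII hyphens (an assumption).
STRING_1 = [
    ["augmented reality", "AR"],
    ["human-machine collaborati*", "HMC", "human-robot collaborati*", "HRC"],
]
# The same query without the HMC/HRC abbreviations.
STRING_1_NO_HMC_HRC = [
    ["augmented reality", "AR"],
    ["human-machine collaborati*", "human-robot collaborati*"],
]

# A made-up off-topic title: "AR" (anti-reflective) and "HRC" (Rockwell hardness).
off_topic = "Wear of an AR coating on tool steel hardened to 60 HRC"
print(matches(STRING_1, off_topic), matches(STRING_1_NO_HMC_HRC, off_topic))
```

The made-up title satisfies the abbreviated query only because "AR" and "HRC" also have unrelated meanings, which mirrors the kind of noise that led to dropping abbreviations from later strings.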

3.3. Appraisal

The appraisal step comprises the filtering and quality assessment of the search results. The main goal of a quality assessment procedure is to provide a paper selection criterion in case two or more studies present similar approaches or conflicting ideas or results [4]. The study selection consisted of screening the search results to select the papers relevant to this review. To make the selection process repeatable, a set of predetermined exclusion criteria was defined; these criteria are presented in Table 4.

Based on similar reviews, such as De Pace et al. [4], and using the proposed search strings on the Scopus database, it can be inferred that, although its first papers were published around 2004, the topic of augmented reality for Human–Robot Collaboration and Cooperation started gaining relevance in 2016, when the number of publications began to consistently exceed two papers per year. Therefore, 2016 was chosen as the starting year of this review.
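The cut-off rule in the paragraph above ("consistently more than two papers per year") can be stated as a small function. This is a sketch under assumptions: years absent from the data are treated as having zero publications, "consistently" means every year up to the latest one in the data, and the counts below are invented for illustration; they are not the actual Scopus figures.

```python
from __future__ import annotations


def relevance_start_year(counts: dict[int, int], threshold: int = 2) -> int | None:
    """Earliest year from which every later year has MORE than `threshold` papers.

    Years missing from `counts` are treated as having zero publications.
    """
    start = None
    for year in range(min(counts), max(counts) + 1):
        if counts.get(year, 0) > threshold:
            if start is None:
                start = year
        else:
            start = None  # a dip breaks the "consistently above" run
    return start


# Hypothetical yearly publication counts, NOT the figures from this review.
counts = {2004: 1, 2012: 2, 2014: 3, 2015: 1, 2016: 4, 2017: 5, 2018: 7}
print(relevance_start_year(counts))
```

Resetting the run on any dip is what distinguishes "consistently above" from "first above": in the invented data, 2014 exceeds the threshold but is followed by a dip in 2015.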

Search engines offer several filters capable of narrowing the search scope at the source. Year and research-field filters were applied to every database according to the respective criteria described in Table 4, reducing the results to 378 articles. After all exclusion criteria were applied to these 378 papers, the references of the remaining papers were manually searched and compared against the exclusion criteria in a second search phase. This second phase returned nine potential papers, bringing the total to the 387 papers presented in Table 5.

A two-step selection process was applied to select the relevant papers. In the first step, the title, abstract, results, and conclusions of each article were analyzed, which left a total of 42 papers. Then, a second, more thorough reading of these 42 papers was carried out to check whether any of them met the exclusion criteria in sections that had not been analyzed previously. After this second step, 32 papers remained.
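The selection funnel can be checked with simple arithmetic. The sketch below uses only the counts reported in the text and assumes that both selection steps operate on the 387 candidates of Table 5; the constant and function names are illustrative.

```python
# Counts reported in the text for the selection process.
DATABASE_RESULTS = 378   # after year and research-field filters
REFERENCE_SEARCH = 9     # added by manually searching the references
AFTER_FIRST_STEP = 42    # title, abstract, results, and conclusions screened
AFTER_SECOND_STEP = 32   # in-depth reading of the remaining papers


def retained(part: int, whole: int) -> float:
    """Percentage of `whole` that survives, rounded to one decimal place."""
    return round(100 * part / whole, 1)


candidates = DATABASE_RESULTS + REFERENCE_SEARCH
print(
    candidates,
    retained(AFTER_FIRST_STEP, candidates),
    retained(AFTER_SECOND_STEP, AFTER_FIRST_STEP),
    retained(AFTER_SECOND_STEP, candidates),
)
```

Under that assumption, roughly one in twelve candidates survives to the final sample.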

Afterward, a quality assessment procedure was applied to evaluate the selected papers. According to Fernández del Amo et al. [78], if biases or contradictory ideas appear when analyzing the selected papers, quality assessment results can be used to provide more transparent and repeatable findings. Ten Quality Criteria (QC), presented in Table 6, were chosen for the quality assessment procedure. QC1 and QC2 are quantitative metrics extracted from the databases and represent the normalized number of citations per year and the normalized impact factor, respectively.

The Normalized number of citations per year (QC1) is defined by the equation:

$$QC1 = \frac{C}{C_{\max}}$$

where $C$ is the paper’s number of citations and $C_{\max}$ is the largest number of citations among the selected papers within the same publication year. This was used as a metric to compare the relevance of each paper with that of others published in the same year.
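QC1 divides a paper's citation count by the largest citation count among the selected papers published in the same year. A minimal sketch of that computation, assuming records of the form `(paper_id, year, citations)`; the zero-citation rule (a year in which every paper has zero citations scores 0.0) is an assumption, since the text does not cover that case, and the sample records are invented.

```python
from __future__ import annotations

from collections import defaultdict


def qc1(papers: list[tuple[str, int, int]]) -> dict[str, float]:
    """Citations divided by the max citations of selected papers from the same year.

    If every paper of a year has zero citations, their score is 0.0 (an assumption).
    """
    best: dict[int, int] = defaultdict(int)
    for _, year, cites in papers:
        best[year] = max(best[year], cites)
    return {
        pid: (cites / best[year] if best[year] else 0.0)
        for pid, year, cites in papers
    }


# Hypothetical papers: (id, publication year, citations).
sample = [("A", 2019, 40), ("B", 2019, 10), ("C", 2021, 5), ("D", 2021, 0), ("E", 2022, 0)]
print(qc1(sample))
```

Normalizing within each year keeps recent papers, which have had less time to be cited, from being penalized against older ones.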

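In addition, the Normalized Impact Factor (QC2) is defined by the equation:

$$QC2 = \frac{\mathrm{SJR} + \mathrm{JIF} + \mathrm{CS}}{\left(\mathrm{SJR} + \mathrm{JIF} + \mathrm{CS}\right)_{\max}}$$

where $\mathrm{SJR}$ is the impact factor from Scimago Journal Rank, $\mathrm{JIF}$ is the impact factor from Clarivate Analytics Journal Citation Reports, $\mathrm{CS}$ (CiteScore) is the impact factor from Elsevier, and $\left(\mathrm{SJR} + \mathrm{JIF} + \mathrm{CS}\right)_{\max}$ is the highest value obtained from the sum of those three impact factors. This was used as a measure to compare the relevance of each journal.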
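QC2 sums three venue indicators (Scimago Journal Rank, the Clarivate Analytics JCR impact factor, and Elsevier's CiteScore) and divides by the largest such sum. A minimal sketch, assuming each venue is given as a tuple of the three values; passing 0.0 for a venue that lacks an indicator is an assumption, and the venue names and values are invented.

```python
from __future__ import annotations


def qc2(venues: dict[str, tuple[float, float, float]]) -> dict[str, float]:
    """(SJR + JIF + CiteScore) divided by the largest such sum among the venues.

    A venue lacking one indicator can pass 0.0 for it (an assumption).
    """
    sums = {venue: sum(factors) for venue, factors in venues.items()}
    top = max(sums.values())
    return {venue: total / top for venue, total in sums.items()}


# Hypothetical venues and indicator values, for illustration only.
print(qc2({"Journal X": (1.0, 2.0, 3.0), "Conference Y": (0.5, 0.0, 2.5)}))
```

Because the three indicators use different scales, a plain sum weights them unequally; the sketch reproduces the sum as described rather than rescaling each indicator.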

QC3 to QC10, also presented in Table 6, are subjective metrics, and their scores are derived from the authors’ analysis of the selected papers. All 32 selected papers were evaluated against each criterion with a score of 0 (no compliance), 0.5 (partial compliance), or 1 (full compliance).

Table 7 presents the country of the main author and the publication year of each paper. The quality assessment of the selected papers is presented in Table 8, which details the score attributed to each paper for both the quantitative and qualitative criteria. It is important to note that these results were not used further in this review, since no bias or contradictions were found in the analysis of the 32 papers. Nevertheless, according to Fernández del Amo et al. [78], quality assessment is itself a process prone to bias; therefore, the mean and standard deviation of each criterion are provided as a tool for the numerical validation of potential author bias in the assessment process. Not considering the quantitative criteria (QC1 and QC2), the papers perceived as having the highest subjective quality were Kalpagam et al. [49] and Chan et al. [88], followed by Materna et al. [89], Hietanen et al. [51], and Tsamis et al. [90], with scores of 7.5 out of 8 for the first two and 7 out of 8 for the following three. If the quantitative criteria are considered, the order of relevance changes to Hietanen et al. [51], Kalpagam et al. [49], Chan et al. [88], Materna et al. [89], and Michalos et al. [91], with Michalos et al. [91] taking fifth place in the top five instead of Tsamis et al. [90] by a small score difference of 0.025 points. This change in fifth place, as well as the positions of other papers, may shift over time because of the quantitative criteria, since newer papers will eventually accumulate more citations in future works.
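The per-criterion mean and standard deviation mentioned above, and a paper's subjective total out of 8, can be computed as follows. This is a sketch under assumptions: the text does not say whether a sample or population standard deviation was used, so the sample version (`statistics.stdev`) is an assumption, and the scores in the usage line are invented.

```python
from __future__ import annotations

import statistics

ALLOWED = {0.0, 0.5, 1.0}  # no, partial, and full compliance


def criterion_stats(scores: dict[str, list[float]]) -> dict[str, tuple[float, float]]:
    """Mean and sample standard deviation of each criterion across all papers."""
    out = {}
    for criterion, values in scores.items():
        if any(v not in ALLOWED for v in values):
            raise ValueError(f"{criterion}: scores must be 0, 0.5 or 1")
        out[criterion] = (statistics.mean(values), statistics.stdev(values))
    return out


def subjective_total(row: list[float]) -> float:
    """Sum of one paper's QC3 to QC10 scores, i.e., a value out of 8."""
    if len(row) != 8:
        raise ValueError("expected eight subjective criteria (QC3 to QC10)")
    return sum(row)


# Hypothetical QC3 scores for four papers, for illustration only.
print(criterion_stats({"QC3": [1.0, 1.0, 0.5, 0.0]}))
```

A criterion whose scores cluster tightly (low standard deviation) across very different papers may hint at a lenient or uniform judgment, which is the kind of bias check the text describes.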

In Table 9, the papers that have no database listed under “Found by the search string” were found by searching the references of the selected papers and were added manually. Moreover, special attention must be paid to the Terminology columns: the “String Combination” column represents the combination of keywords in the search string that found the paper, and the third column classifies each paper as a cooperative or collaborative application according to the definitions presented in Section 2. Since the definition of mixed reality used in this work encompasses augmented reality, there is no point in classifying the papers according to reality-enhancement terminology.

3.4. Synthesis and Analysis

The synthesis and analysis phase consisted of a thorough examination of the selected papers to extract and classify data that could then be organized to highlight information relevant to the research scope. To do so, the following topics were identified for analysis: Application field, Robot type (collaborative or non-collaborative), and User interaction mechanisms; Visualization and Tracking methods; Research type and Evaluation techniques; Demographics (Authors, Country, and Publication year); and, finally, Database, Publication venues, and Associated terminology.

The evaluation of these synthesized data is expected to prompt discussions and conclusions that answer the proposed research questions. It also aims to map how each theme was covered by each paper, find correlations between themes, and identify the main research poles, the field’s evolution over time, and the search mechanisms.

Table 10 presents some of the information extracted from the selected papers. Note that, in the Robot column, Lee et al. [97] do not mention which robot was used; where only the manufacturer is listed, as for Andersen et al. [93], Liu and Wang [94], Mueller et al. [102], Bolano et al. [106], Lotsaris et al. [111], and Andronas et al. [113], the authors do not specify which robot was used, but the manufacturer could be identified from their figures.

4. Systematic Literature Review Analysis

4.1. Database, Publication Venues and Terminology

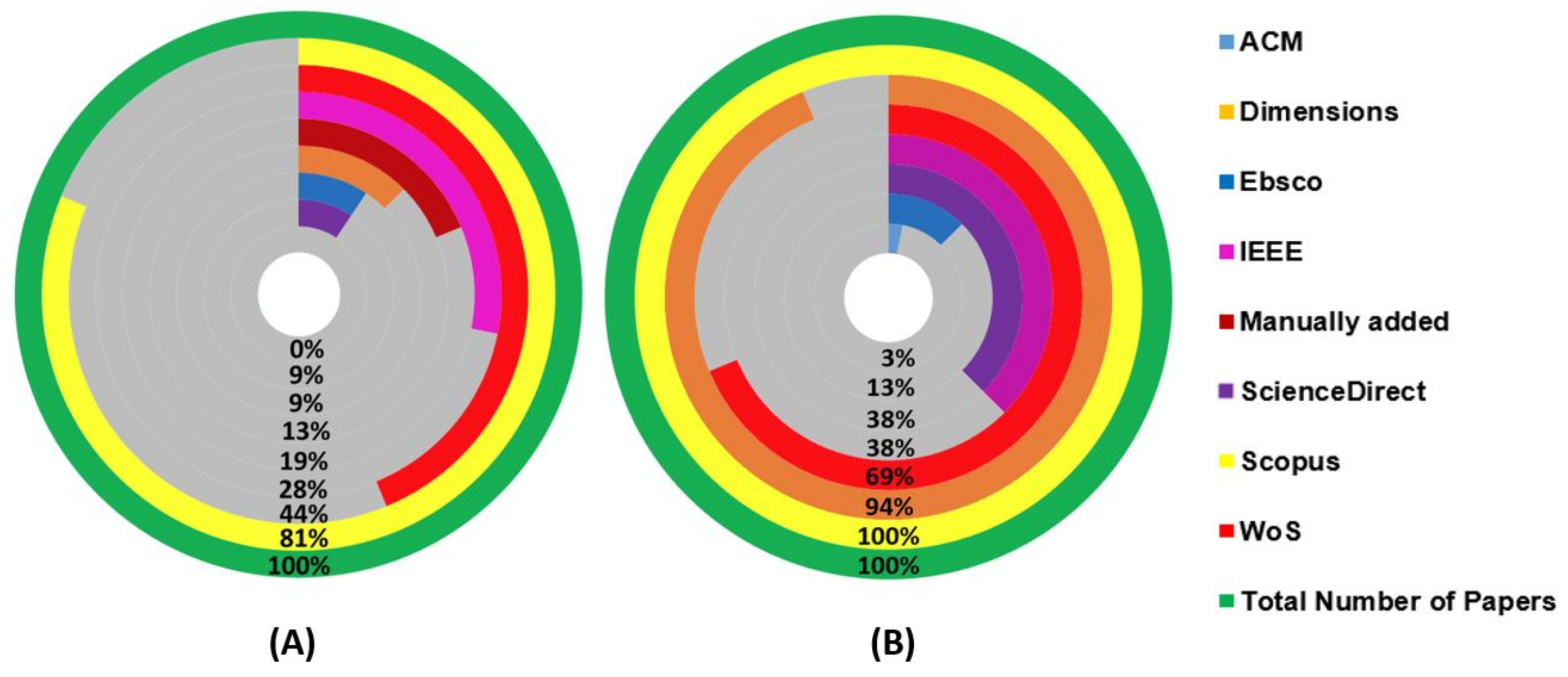

As can be seen in Table 9, several papers were found in multiple databases, indicating some overlap between them. Of the 32 selected papers, 26 were found by the search strings, and all 26 could be found with the Scopus search engine. Web of Science found the second-largest number of papers, followed by IEEE Xplore (Table 9).

Using the title of each selected paper, a manual search was carried out in every database to verify whether it contained selected papers that the search string had not found.

Figure 4A shows the percentage of papers found by the search string in each database, while Figure 4B shows the number of selected papers that could have been found in each database. Figure 5 shows the overlap between the databases, which indicates that using a single database does not guarantee that every paper on a subject will be covered.
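The coverage and overlap comparisons behind these figures reduce to set operations on paper identifiers. A minimal sketch with hypothetical identifiers `P1` to `P6` (not the reviewed papers): each database maps to the set of selected papers it returned, and the functions compute per-database coverage, papers that no database returned (e.g., those added from reference lists), and papers returned by only one database.

```python
from __future__ import annotations


def coverage(found: dict[str, set[str]], selected: set[str]) -> dict[str, float]:
    """Percentage of the selected papers returned by each database."""
    return {db: round(100 * len(ids & selected) / len(selected), 1) for db, ids in found.items()}


def missed_by_all(found: dict[str, set[str]], selected: set[str]) -> set[str]:
    """Selected papers that no database returned (e.g., added from references)."""
    return selected - set().union(*found.values())


def unique_to(found: dict[str, set[str]], db: str) -> set[str]:
    """Papers that only `db` returned."""
    others = set().union(*(ids for name, ids in found.items() if name != db))
    return found[db] - others


# Hypothetical results, for illustration only.
selected = {"P1", "P2", "P3", "P4", "P5", "P6"}
found = {
    "Scopus": {"P1", "P2", "P3", "P4", "P5"},
    "Web of Science": {"P1", "P2", "P5"},
    "IEEE Xplore": {"P2", "P3"},
}
print(coverage(found, selected), missed_by_all(found, selected), unique_to(found, "Scopus"))
```

`unique_to` is the quantity that decides whether a database can be dropped without losing papers: if it is empty for a database, every paper it found was also found elsewhere.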

One possible reason why the databases did not find all the selected papers is that some of the manually added papers did not contain the search keywords in the searched fields (title, keywords, and abstract), probably because the searched terms were not the focus of those works. Moreover, one of them was indexed under the Human–Robot Interaction keyword, which, if searched, would have returned a large number of papers outside the scope of this research.

From these figures, it can be concluded that, for this specific field of study, searching for relevant articles in multiple databases is not necessary, since most of them can be found using the Scopus search engine. Although other databases, such as Dimensions, also index a large number of papers, their search engines could not find as many papers as Scopus using the same search string. There are two possible reasons for this: either the search string is too restrictive, or the Dimensions search engine is not as flexible as those of Scopus and Web of Science.

Since Dimensions, Scopus, and Web of Science are indexing services, they were expected to find most of the papers; as mentioned before, this did not happen with Dimensions when using the search string. Among the other databases, which provide archival services, IEEE Xplore returned the most papers, both with the proposed search strings and with the manual search.

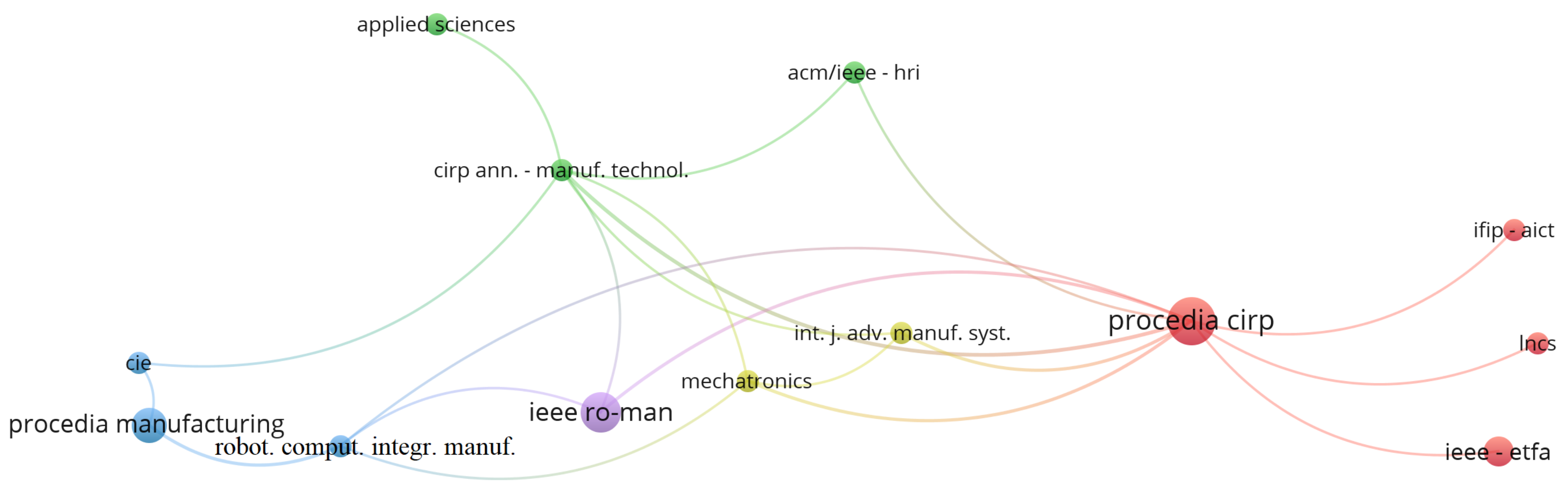

With respect to publication venues, the venue with the most papers was Procedia CIRP, followed by the IEEE International Conference on Robot & Human Interactive Communication (RO-MAN) and Procedia Manufacturing. Details can be seen in Table 7 and in Figure 6.

The String Combination column (second column) in Table 9 presents the combination of terms in the search string that found each paper.

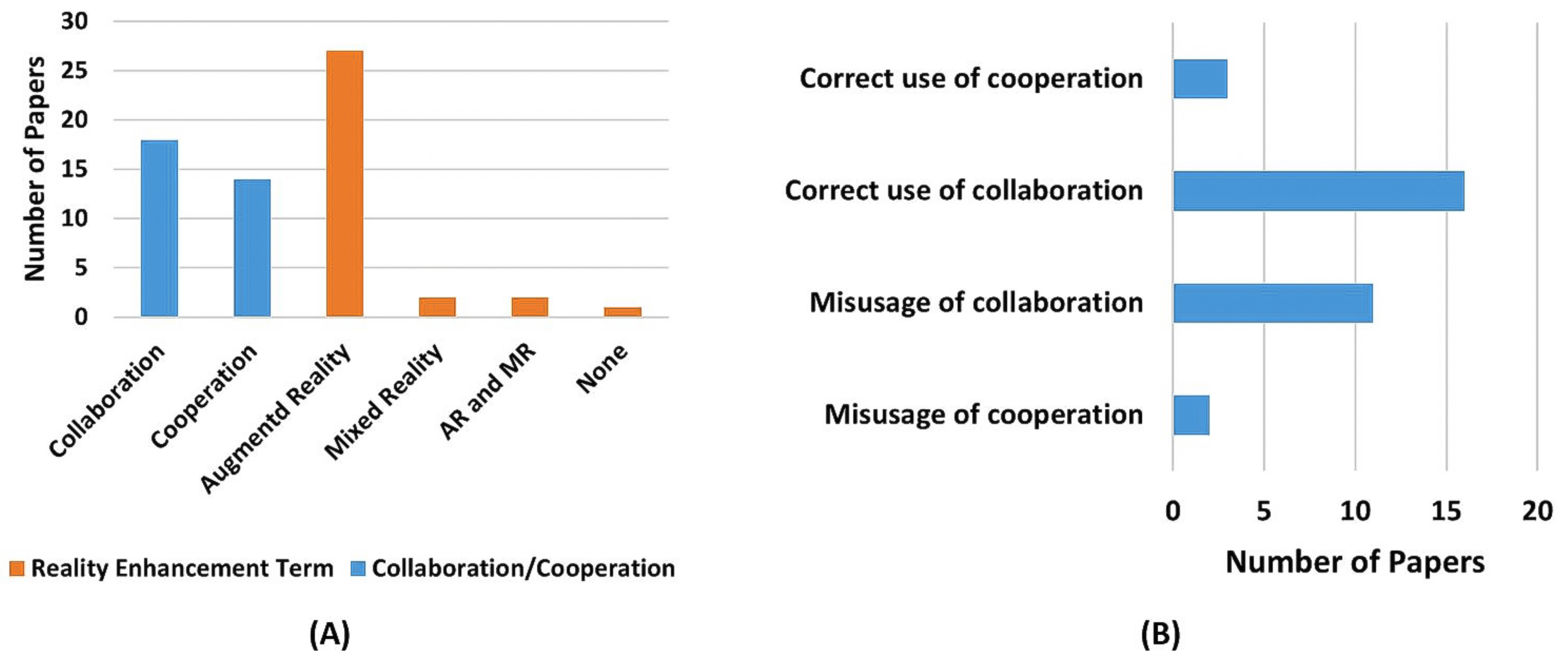

Section 2 definition, third column, presents the classification of the papers application as cooperative or collaborative according to the definitions presented in

Section 2. As can be noticed in

Figure 7A, the most commonly used terms for each category are Augmented Reality and Collaboration.

It also shows that, although the standard definitions remain blurry [67], the term AR is more widely used than the term MR to refer to reality-enhancement technology. On the other hand, Figure 7B corroborates Vicentini’s [7] statement that the terms cooperation and collaboration are prone to misuse.

Figure 7B was obtained by comparing the term (cooperation or collaboration) that each selected paper used to describe its activity against the definitions presented in Section 2.

In the end, by evaluating their applications against the definitions presented in Section 2, 18 papers were classified as collaboration and 14 as cooperation, as can be seen in Table 9.

4.2. Demographic

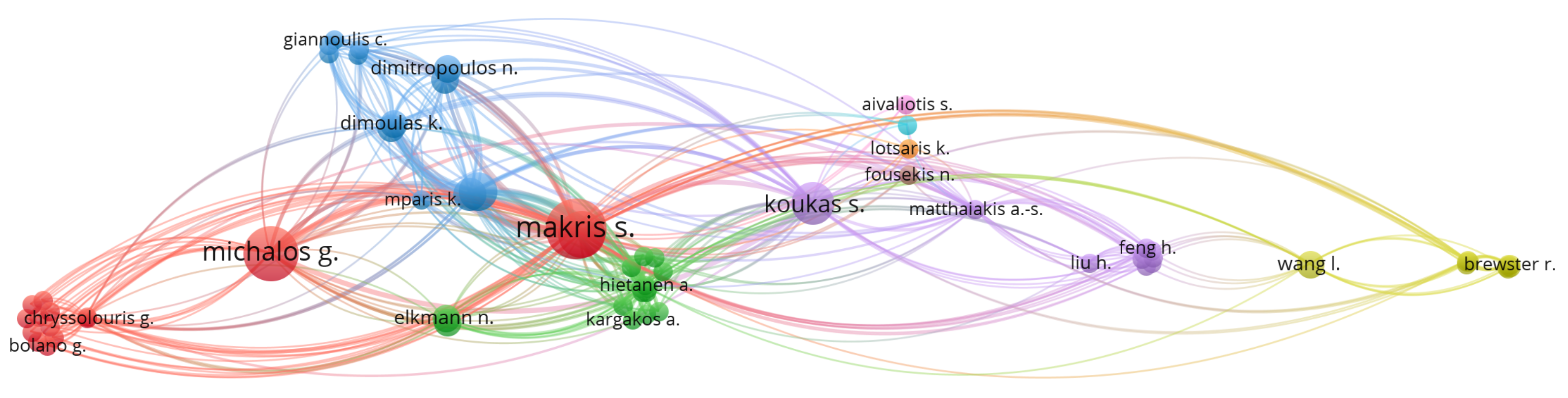

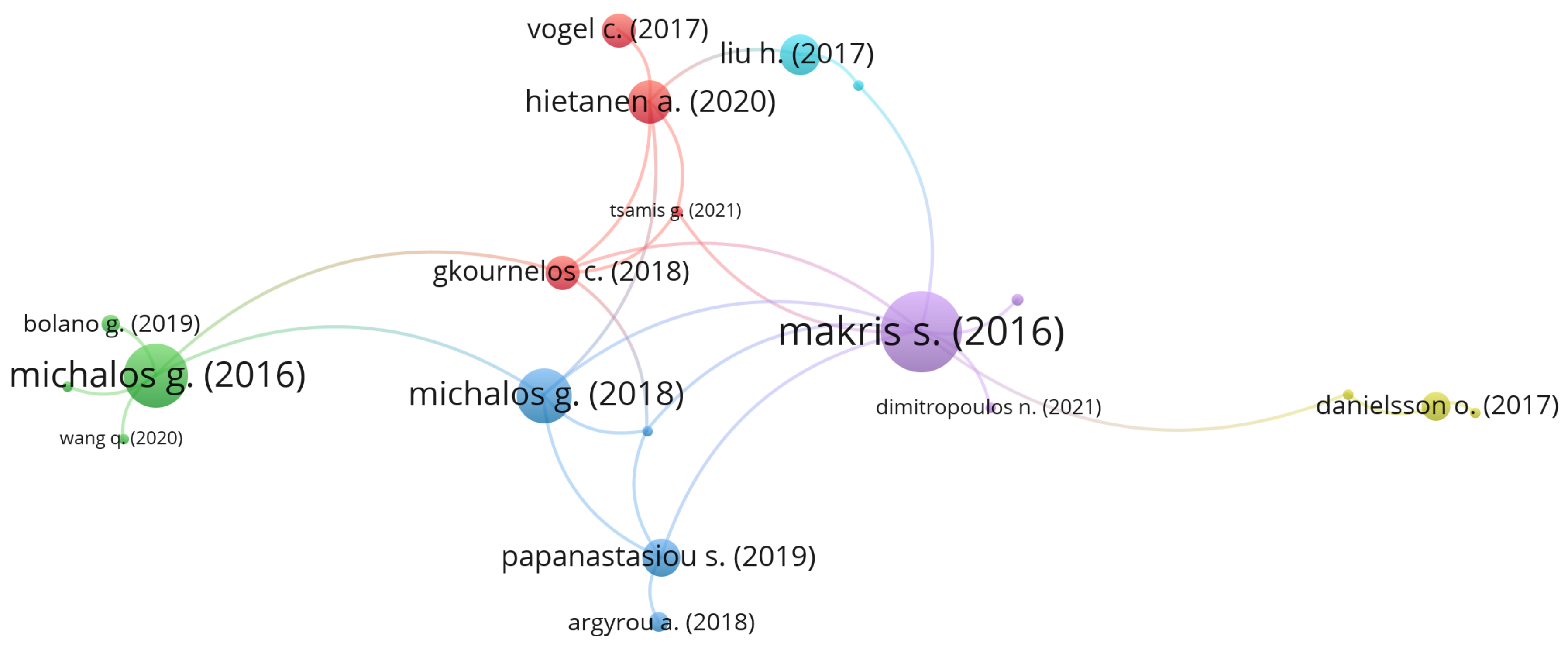

The VOSviewer [116] software tool was used to extract bibliometric networks. These networks may include, for instance, publication venues, researchers, or individual publications, and they can be constructed from citation, bibliographic coupling, co-citation, or co-authorship relations. Some interesting conclusions can be drawn from co-occurrence analysis, where the relatedness of items is determined by the number of documents in which they occur together, and from citation analysis, where the relatedness of items is determined by the number of times they cite each other.
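The co-occurrence criterion described above can be illustrated with a minimal Python sketch that counts, for every pair of keywords, the number of documents in which both occur. The keyword lists are hypothetical and are not the data behind Figure 8; VOSviewer additionally normalizes such raw counts (by default with the association strength measure) before mapping and clustering them, which this sketch does not attempt.

```python
from collections import Counter
from itertools import combinations


def keyword_cooccurrence(documents):
    """Return a Counter mapping each unordered keyword pair to the number of
    documents in which both keywords appear."""
    counts = Counter()
    for keywords in documents:
        # Normalize case and remove duplicates so that a pair is counted at
        # most once per document; sorting makes each pair order-independent.
        unique = sorted({k.strip().lower() for k in keywords})
        for pair in combinations(unique, 2):
            counts[pair] += 1
    return counts


# Hypothetical author-keyword lists (illustrative only, not the review's data).
docs = [
    ["Augmented Reality", "Human-Robot Collaboration", "Assembly"],
    ["Augmented Reality", "Human-Robot Collaboration", "Safety"],
    ["Mixed Reality", "Human-Robot Cooperation", "Assembly"],
]

cooc = keyword_cooccurrence(docs)
for (a, b), n in cooc.most_common(3):
    print(f"{a} <-> {b}: {n}")
```

In this toy input, augmented reality and human-robot collaboration form the only pair that co-occurs in two documents, so it is listed first; in a network view it would be drawn with the thickest link.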

Figure 8, which describes the co-occurrence of the keywords used by the authors, shows that, as expected, the terms Augmented Reality and Human–Robot Collaboration have the largest number of co-occurrences, whereas the terms Human–Robot Cooperation and Mixed Reality are considerably less prominent. Another conclusion that can be drawn from this figure is that Assembly and Safety applications concentrate the greatest amount of research on AR in the human–robot collaboration and cooperation field.

Regarding

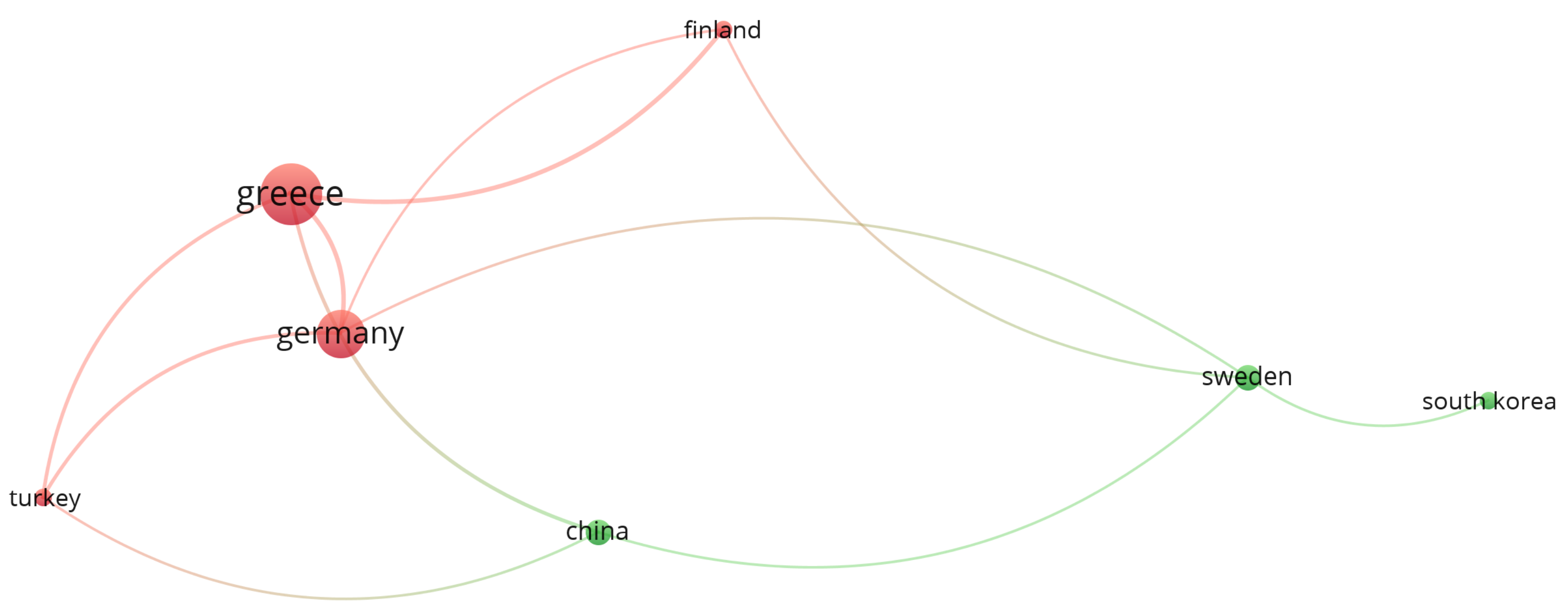

Figure 9, which presents the author citation correlation between the selected papers, it is possible to identify that Makris, Michalos, and Karagianis are the most referenced authors, among the selected papers. This is because the three of them are co-authors on very cited papers, such as Makris et al. [

92] (171 citations) and Michalos et al. [

91] (143 citations), as can be seen in

Figure 10 and the authors’ correlation in

Figure 11. This influence can also be seen in

Figure 12, where Greece is the most cited country followed by Germany. Another fact that corroborates with this last statement is the number of papers found by country, which can be seen in

Table 11, where Greece and Germany have the largest number of selected papers followed by the United States and China. If related works were counted as one, Greece’s number of papers would be reduced to 6, shortening the distance between the countries but not changing the current ranking. In the future, when a larger sample might be available for analysis, the country with the most published papers might alternate according to the willingness of researchers of each country to further study the augmented reality for human–robot collaboration and cooperation topics.

With respect to publication venues, the most cited one was Procedia CIRP, followed by IEEE RO-MAN and Procedia Manufacturing. This was to be expected, since they are the three venues with the most selected papers and also have a high impact factor, as can be seen in Figure 13.

Finally, Figure 14 presents the number of papers per year. Its trend line suggests that this is not yet a fast-growing research field: it is slowly attracting researchers’ interest and cannot yet be considered mature.

4.3. Robot Type

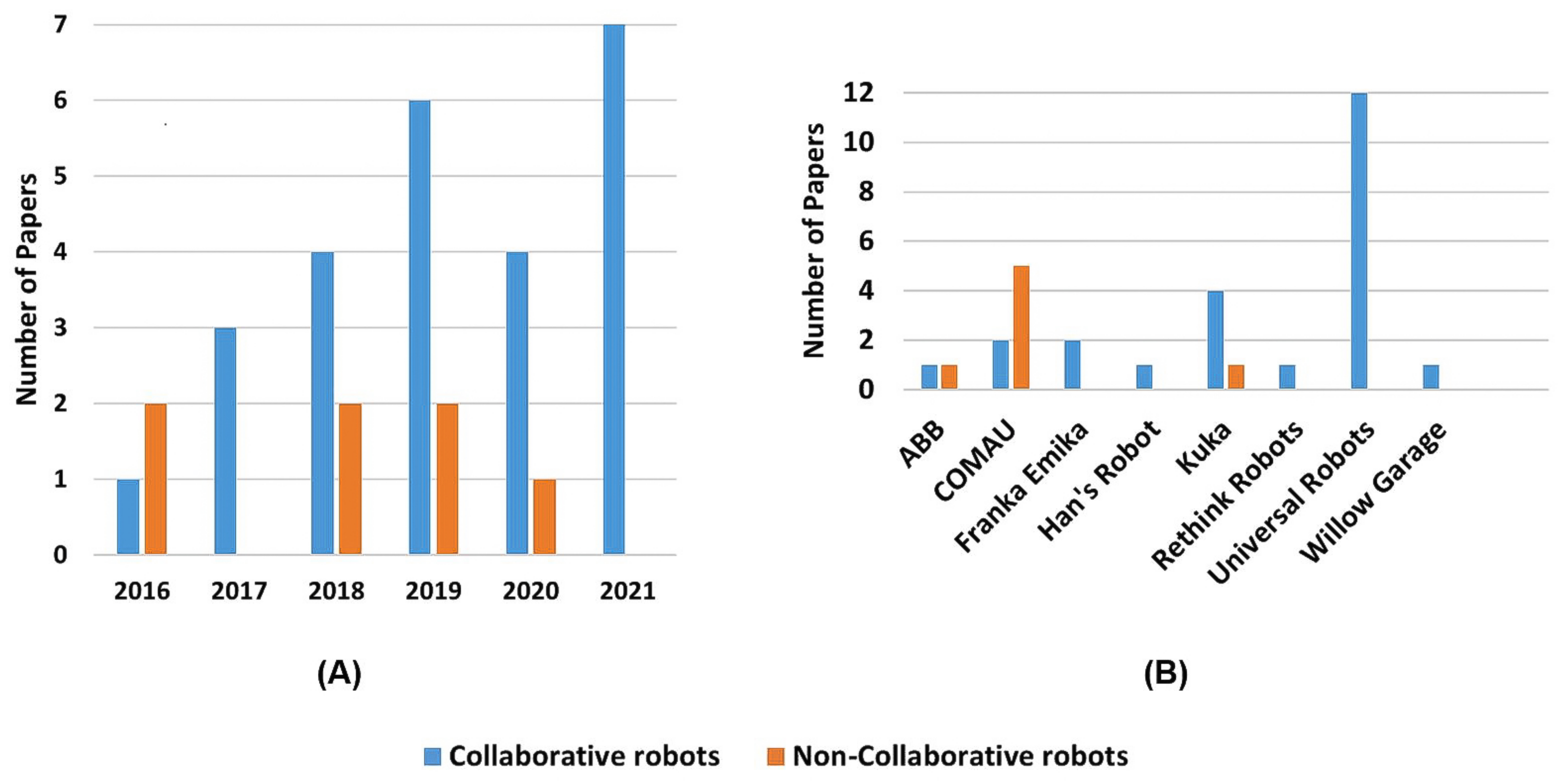

Figure 15A clearly shows that research on augmented reality for human–robot collaboration and cooperation is increasingly focused on collaborative robots, with less interest in the cooperative use of non-collaborative robots. Of the selected papers, 78% use collaborative robots, while only 22% use non-collaborative ones.

Even though non-collaborative robots can be used in cooperative applications if correctly configured with features that ensure safe interaction, Figure 15A suggests that researchers prefer to keep non-collaborative industrial robots on repetitive, physically demanding mechanized tasks where no human intervention is needed. In addition, most high-payload non-collaborative robots are large, which makes them cumbersome for collaborative tasks in small workspaces and restricts them to larger applications.

Figure 15B shows that researchers clearly prefer Universal Robots (UR) cobots for collaborative applications. This may be because UR is one of the main manufacturers of collaborative robots and because open-source application programming interfaces (APIs) are available for controlling and simulating robot actions, as well as for establishing remote or local communication with the robot. As for non-collaborative robots, COMAU is the manufacturer most used in research on human–robot collaboration and cooperation.

4.3.1. Non-Collaborative Robots

Makris et al. [92], Gkournelos et al. [98], and Michalos et al. [99] use a non-collaborative COMAU NJ 130 robot to help the operator load a car axle and a rear-wheel group onto fixtures, where the human operator then performs the necessary tasks. Similarly, Michalos et al. [91] uses a COMAU NJ 370 for the same application of handling a rear-wheel group onto fixtures. Gkournelos et al. [98] also uses a COMAU Racer 7 non-collaborative robot to apply sealant to specific parts of a refrigerator in an assembly operation, as does Papanastasiou et al. [105]. All of these papers are related, being extensions of the same work.

On the other hand, Ji et al. [101] uses an ABB IRB 1200 non-collaborative robot to assemble smaller machined parts in the same assembly station, while Vogel et al. [109] uses a Kuka KR60L45 non-collaborative robot to help the operator transfer workpieces from one station to another.

4.3.2. Collaborative Robots

Andersen et al. [93] used a UR cobot for a pick-and-place task in which the robot and the operator take turns changing the position of a box on a table. Tsamis et al. [90] also uses a UR manipulator for a pick-and-place task, but to transfer parts from one workstation to another. Liu and Wang [94], Argyrou et al. [96], and Hietanen et al. [51] use a cobot to aid the operator in a car engine assembly process, handling and mounting parts. Similarly, Danielsson et al. [48] uses a UR3 to assist the operator in a wooden toy car assembly task. Along the same lines, Kalpagam et al. [49] uses a UR5 robot to support the operator in a car door assembly task. Mueller et al. [102] uses a UR cobot for an aircraft riveting process, where the robot positions the anvil while the operator handles the riveting hammer. Bolano et al. [106] uses one to screw bolts into a metallic part while the operator places the bolts on the next part. Wang et al. [107] uses a cobot to aid the operator in an automotive gearbox assembly. Lotsaris et al. [111] uses cobots to aid in assembling the front suspensions of a passenger vehicle by moving and lifting heavy parts. Finally, Andronas et al. [113] uses a UR manipulator to assist the operator in assembling a powertrain component, lifting heavy parts and performing fastening operations.

Vogel et al. [95], Paletta et al. [103], Kyjanek et al. [104], and Chan et al. [88] made use of Kuka collaborative robots. Vogel et al. [95] uses a Kuka LBR iiwa 14 to assist the operator in screwing mounting plates to a ground plate in a cooperative assembly task, while Paletta et al. [103] uses a Kuka LBR iiwa 7 in a toy problem, where the cobot assists the operator in a pick-and-place task whose goal is to assemble a tangram puzzle. Kyjanek et al. [104] employs a Kuka cobot to support the human operator with a non-standard wooden construction system, where the cobot positions and holds the parts in the correct location while the operator attaches them. Finally, Chan et al. [88] works with a Kuka manipulator on a large-scale manufacturing task, where the robot and the human operator work together on an aircraft pleating task.

Lamon et al. [100] and De Franco et al. [50] employed Franka Emika’s Panda cobot in two different applications. Both use the cobot to assist the operator in the assembly of aluminium profiles, holding the piece in the right location while the operator attaches it. Moreover, De Franco et al. [50] also presents a second application, a collaborative surface polishing task, in which the operator guides the manipulator, which carries a polishing tool as its end effector, and controls the amount of force applied to the surface. These papers are related, so they can be considered extensions of each other regarding the aluminium profile assembly operation.

Dimitropoulos et al. [114] and Dimitropoulos et al. [115] use two different COMAU collaborative robots. Both papers use the high-payload collaborative robot COMAU AURA to assist the human operator in mounting an elevator, more specifically its cab door panel hangers, where the robot lifts the heavy parts and holds them in an ergonomic position for the operator to perform the necessary tasks. Dimitropoulos et al. [115] also uses a low-payload collaborative robot, the COMAU Racer 5, to aid the operator in assembling large aluminium panels; in this case, the robot does not lift anything but performs riveting actions around the panel. These papers are related, so they can be considered extensions of each other regarding the elevator assembly operation.

Materna et al. [89] uses Willow Garage’s PR2 robot to assist the operator in a stool assembly process, where the robot picks the next part to be assembled from a storage bin and places it on the mounting table. Hald et al. [108] uses Rethink Robotics’ Sawyer cobot in a drawing application (toy problem) whose objective was to assess operators’ trust in the robot as the manipulator speed changes. Finally, Luipers and Richert [112] use an ABB YuMi for handover tasks.

4.4. Application Field

Although one may argue that the term assembly operation comprises smaller activities, such as riveting, gluing, material and tool handling, or part placement (pick-and-place), this paper treats a combination of these smaller activities as a single assembly application. Likewise, the pick-and-place category in this paper covers only applications that consist exclusively of pick-and-place or handling activities.

Therefore, a quick analysis of Table 12 reveals a strong dominance of research on assembly applications over pick-and-place and handover tasks. This was to be expected, since most applications involving human operators and cobots working together in industrial environments aim at optimizing the production line, with the robot taking care of repetitive and non-ergonomic tasks while the operator focuses on more dexterous and cognitive activities. Of the 27 assembly applications presented, 74% employed collaborative robots, while 22% used non-collaborative robots. Of the five pick-and-place applications presented, four (80%) employed collaborative robots, while only one (20%) used a non-collaborative robot. The “others” column refers to the polishing activity of De Franco et al. [50] and the drawing activity of Hald et al. [108], both of which used collaborative robots. Note that some papers presented more than one application and were therefore counted once for each application.

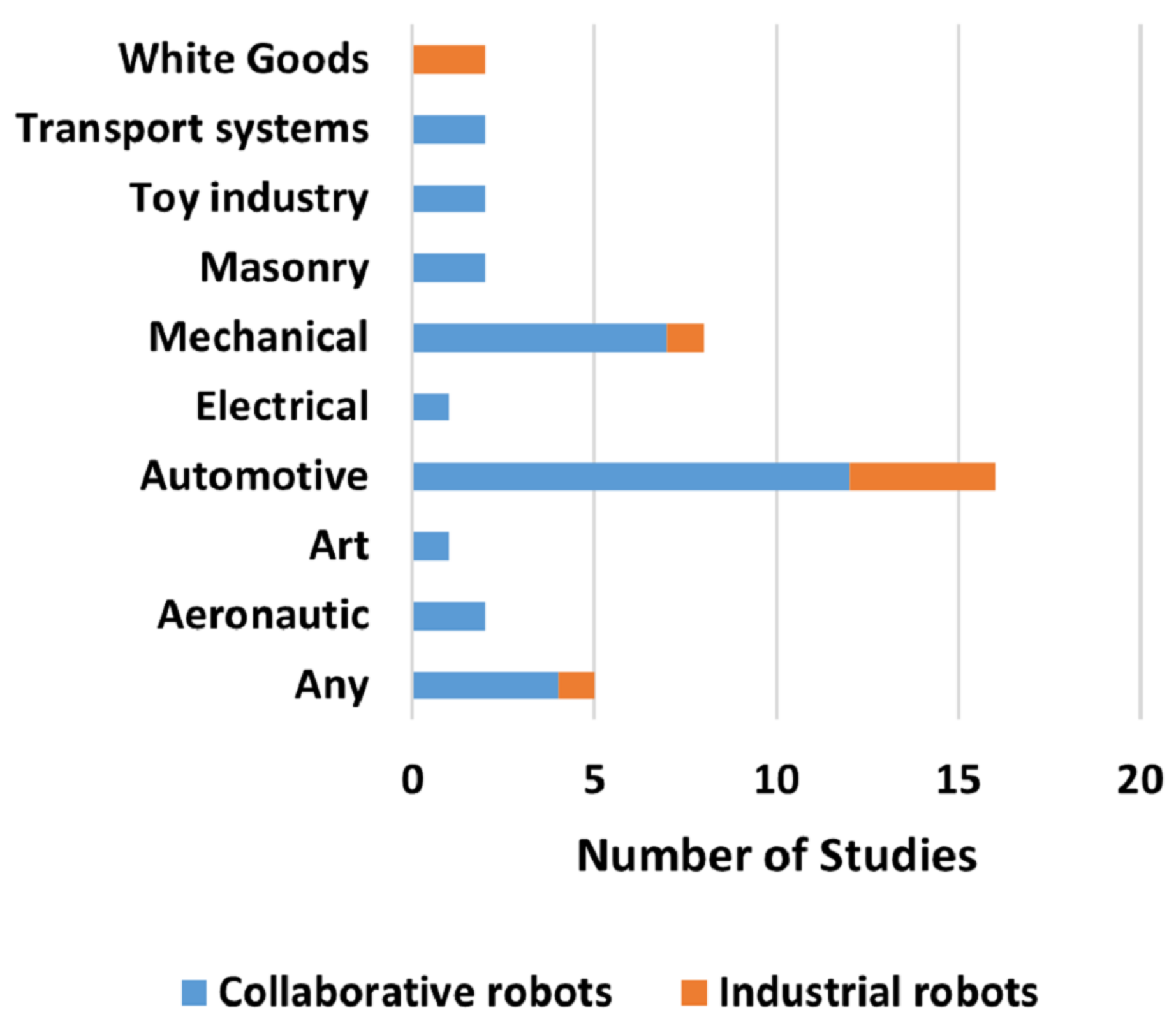

Moreover, Figure 16 shows a greater research interest in automotive industry applications. This may be because the automotive industry has long been a pioneer in testing emerging technologies and, especially, because it presents numerous challenges that are well suited for evaluating different applications, from small to large scale. The term “any” in Figure 16 indicates that the application does not target a specific industry.

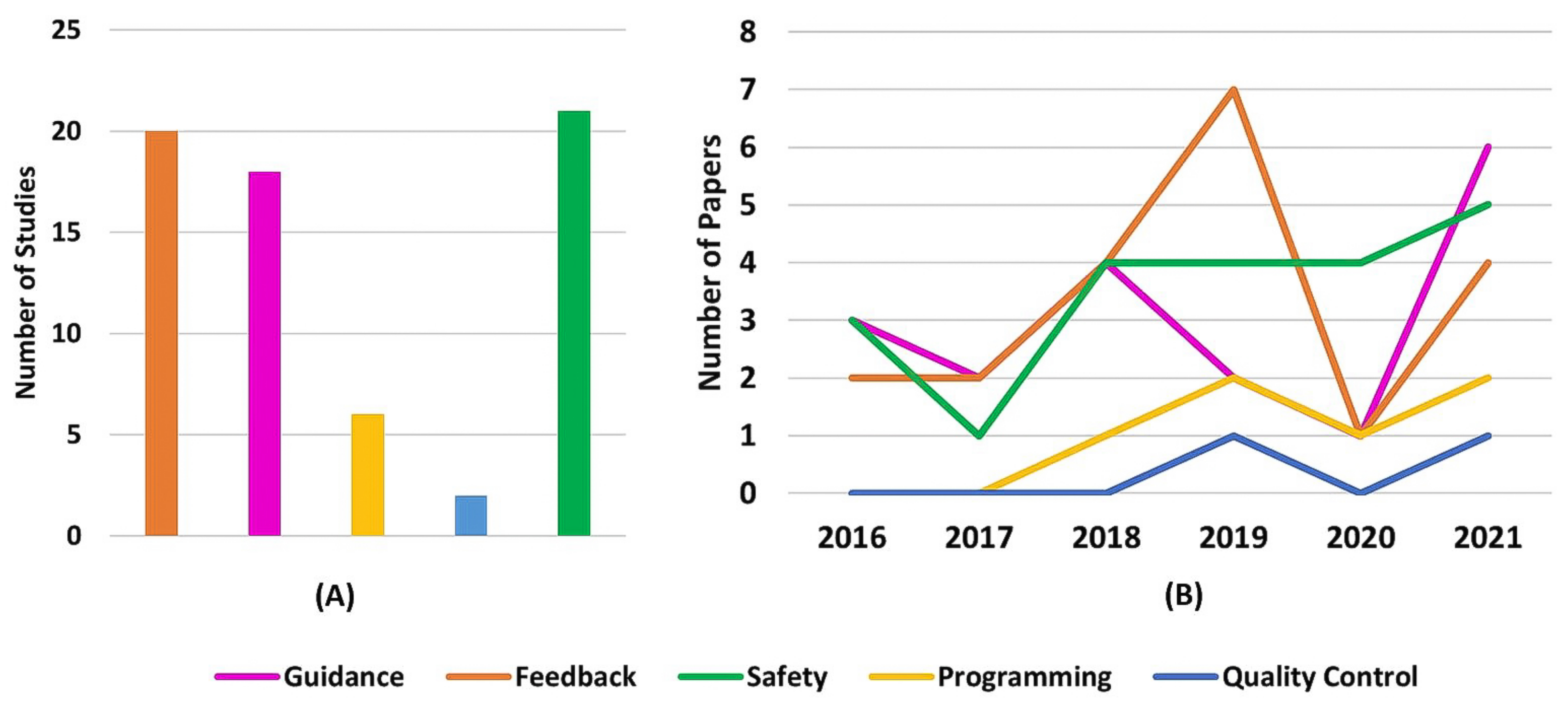

Regarding AR applications, three major areas of interest can be identified in Table 13 and Figure 17A. Safety attracts the most research interest, with researchers using augmented reality to improve, among other factors, the operator’s awareness. In second place is the use of augmented reality to keep the human operator in the loop by feeding back relevant production- and process-related data. In third place, guidance applications highlight the interest in using AR as a tool to support operators throughout their tasks.

Furthermore, since Augmented Reality for Human–Robot Collaboration is a growing research field, it is reasonable to expect all categories to show growing trends, some steeper than others. Figure 17B confirms this.

Interestingly, Figure 17B shows a steep decline in the feedback category in 2020, contradicting the general upward trend. This counter-trend arises because the feedback category was defined as an application’s capability to provide extra information that does not fit any of the other categories. After analyzing the features and augmented information of each paper selected from 2020, most were classified as Guidance, Safety, Programming, or Quality Control instead. In 2021, the feedback category resumed its upward trend. Therefore, the 2020 decline can be considered sampling noise.

Andersen et al. [93], Gkournelos et al. [98], and Dimitropoulos et al. [114] present more than one application and industry; therefore, they were counted multiple times in Figure 17B and Figure 18, once for each application and industry.

4.4.1. Applications—Safety

This subsection describes the use of Augmented Reality to enhance operators’ safety in human–robot collaboration tasks. To enhance the operator’s safety, Makris et al. [92], Michalos et al. [91], Gkournelos et al. [98], Michalos et al. [99], and Papanastasiou et al. [105] use AR to project visual alerts, show the robot’s end-effector trajectory, and visualize the workspace. The visual alerts can be, for example, a warning that the robot has started or stopped moving, a production line emergency stop, or any other general alert. The workspace visualization consists of two holographic cubic safety volumes: a green one, indicating the area where the human operator can safely act, and a red one, indicating the area where the robot will act and where collisions may occur if the operator enters it. These visual cues increase the operator’s awareness and may help avoid accidents on the shop floor. Note that these functionalities are purely informational: the AR system does not detect whether the operator breaches the unsafe space and therefore does not trigger any protective stop or robot speed reduction. In these applications, operator safety is ensured by an external certified system based on the COMAU C5G controller with double encoders. In addition, Gkournelos et al. [98] and Michalos et al. [99] use the PILZ Safety Eye [117] 3D camera system in the automotive application, while Gkournelos et al. [98] and Papanastasiou et al. [105] use AIRSKIN [118] safety sensors in the white goods application.

Kalpagam et al. [49] enhances the operator’s safety perception by projecting the robot’s intention onto the workpiece, using warning signs and highlighting the segment the robot will act upon, thereby increasing the operator’s awareness of the robot’s intentions. Kalpagam et al. [49] also projects warning signs and safety zones on the ground, highlighting the robot workspace in red, a caution zone in yellow, and, in green, a safe zone where there is no risk of immediate harm to the operator; Vogel et al. [109] does the same. The difference between the two is that, unlike Kalpagam et al. [49], Vogel et al. [109] projects a dynamically changing safety zone and covers a larger area, since its application uses a large non-collaborative robot. Another important difference is that Vogel et al. [109] can detect, using KEYENCE safety laser scanners [119], whether the caution/warning zone or the robot/danger zone is breached, and halts the robotic manipulator’s motion if so.
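The three-colored floor zones described above, and the difference between only displaying them and also halting the robot on a breach, can be sketched as follows. The sketch assumes circular zones centered on the robot base with hypothetical radii; the reviewed systems may use other zone shapes, and a dynamically changing zone would simply recompute the radii as the robot moves.

```python
import math
from enum import Enum


class Zone(Enum):
    DANGER = "red"      # robot workspace
    CAUTION = "yellow"  # caution zone
    SAFE = "green"      # no risk of immediate harm


def classify_zone(operator_xy, robot_base_xy, danger_radius, caution_radius):
    """Classify the operator's floor position into one of three concentric
    circular zones (a simplifying assumption) around the robot base."""
    d = math.dist(operator_xy, robot_base_xy)
    if d <= danger_radius:
        return Zone.DANGER
    if d <= caution_radius:
        return Zone.CAUTION
    return Zone.SAFE


def should_halt(zone, breach_detection_enabled):
    """A display-only system never halts; a system with breach detection
    halts whenever the caution or danger zone is entered."""
    return breach_detection_enabled and zone in (Zone.DANGER, Zone.CAUTION)


# Hypothetical radii in metres.
zone = classify_zone((1.2, 0.5), (0.0, 0.0), danger_radius=1.0, caution_radius=2.0)
print(zone.value, should_halt(zone, breach_detection_enabled=True))  # yellow True
```

With `breach_detection_enabled=False`, the same zones are still classified and can be projected, but the robot is never stopped, which mirrors the display-only behavior.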

Using a projector-camera safety detection system, Vogel et al. [95] projects dynamic robot working volumes onto the collaborative workspace. The projector highlights the area that will be occupied by the robot with a striped outline; when the human operator breaches the safety borders, the camera detects a deviation of the projected lines, which triggers a robot motion stop and changes the outline to solid red. This increases the operator’s awareness of the robot’s working area and may help avoid potential hazards during operation. Argyrou et al. [96] displays warning signs in the operator’s field of view and on a graphical user interface on the operator’s smartwatch, and also triggers safety protocols based on the input of the HRC monitoring system developed for the application.
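The projector-camera principle of Vogel et al. [95], in which an intrusion shows up as a deviation of the projected lines, can be illustrated with a hedged sketch. It assumes that calibration already provides, for sample points along the projected outline, their expected image positions and the positions actually detected by the camera (None when a point is not found); the tolerance value and the function names are hypothetical.

```python
import math


def outline_breached(expected_pts, detected_pts, tolerance_px=3.0):
    """Return True if any sampled outline point is missing or deviates from
    its expected image position by more than tolerance_px pixels."""
    if len(expected_pts) != len(detected_pts):
        raise ValueError("expected and detected point lists must align")
    for (ex, ey), detected in zip(expected_pts, detected_pts):
        if detected is None:  # line not found at this point, e.g. occluded
            return True
        dx, dy = detected[0] - ex, detected[1] - ey
        if math.hypot(dx, dy) > tolerance_px:  # line displaced by an object
            return True
    return False


def outline_style(breached):
    """Striped outline while the border is intact, solid red after a breach."""
    return "solid red" if breached else "striped"


expected = [(100, 100), (200, 100), (300, 100)]
detected = [(101, 100), (200, 112), (300, 101)]  # hypothetical camera output
breach = outline_breached(expected, detected)
print(breach, outline_style(breach))  # True solid red
```

In the described system, a breach also stops the robot motion; that interface to the robot controller is omitted here.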

Combining a depth camera-based safety monitoring system with augmented reality, Ji et al. [101] feeds back to the operator their distance from the robot, together with warning messages whose color depends on the safety level, and sets the robot speed accordingly, bringing the robot to a full stop if necessary. Visual safety alerts and the robot end-effector trajectory can also be shown to the operator. The application also allows the operator to edit the robot’s trajectory and preview the motion by watching the robot’s digital twin execute the planned trajectory, so that the operator can set a safer path for the robot and avoid possible collisions. Kyjanek et al. [104] also shows the robot’s end-effector trajectory to the user and allows the user to plan trajectories. Chan et al. [88], on the other hand, does not show the robot’s path after planning but keeps the operator-set waypoints visible, giving the user at least a basic awareness of the robot’s working area, and displays a digital twin of the robot so that the user can preview its motion before execution.
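A distance-dependent speed reduction with color-coded warnings, of the kind described for Ji et al. [101], can be sketched as a linear ramp between a stop distance and a full-speed distance. The thresholds and messages below are hypothetical, and the ramp is a simplification: it is neither the protective separation distance formulation of ISO/TS 15066 speed and separation monitoring nor necessarily the rule used by Ji et al.

```python
def speed_scale(distance_m, stop_dist=0.5, full_speed_dist=1.5):
    """Speed override in [0, 1]: 0 at or below stop_dist, 1 at or beyond
    full_speed_dist, linear in between (hypothetical thresholds in metres)."""
    if distance_m <= stop_dist:
        return 0.0
    if distance_m >= full_speed_dist:
        return 1.0
    return (distance_m - stop_dist) / (full_speed_dist - stop_dist)


def warning(distance_m, stop_dist=0.5, full_speed_dist=1.5):
    """Color-coded message shown to the operator for a given distance."""
    if distance_m <= stop_dist:
        return "red", f"STOP: {distance_m:.2f} m from the robot"
    if distance_m < full_speed_dist:
        return "yellow", f"Caution: {distance_m:.2f} m, robot slowed down"
    return "green", f"Safe: {distance_m:.2f} m"


for d in (2.0, 1.0, 0.4):
    color, msg = warning(d)
    print(color, msg, speed_scale(d))
```

Keeping the speed override and the displayed warning on the same thresholds ensures that what the operator sees always matches what the robot is doing.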

To enhance the operator’s awareness and safety during operation, Bolano et al. [106] projects onto the working table a green outline around the piece the robot is currently working on, as well as the specific screw it will tighten. It can also render in 2D either the swept volume of the robot’s planned trajectory or the end effector’s planned trajectory, according to the user’s preference, so that the operator is aware of possible collision areas. In addition, the system can detect whether the operator or another obstacle obstructs the robot’s planned motion; in that case, it casts a red marker on the detected spot, displays a warning text explaining the task’s current state, and stops the robot.

To monitor whether the operator breaches the dynamically changing safety zones during robot operation, Hietanen et al. [51] developed a safety monitoring system based on a depth camera. Two approaches were adopted to visualize the safety zones. The first uses a projector to render the boundaries of the safety zone in blue over the workstation. The second uses AR glasses, through which the safety zone boundaries are rendered as a solid red virtual fence of predetermined height. In case of any boundary violation, the robot is brought to a full stop. The paper also uses a virtual dead man’s switch to ensure the operator’s safety when allowing the robot to perform its next actions.
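In its simplest form, a boundary-violation check like the one described above reduces to testing whether tracked operator points lie inside the robot’s zone. The sketch below substitutes a 2D ray-casting point-in-polygon test on the table plane, an assumption made purely for illustration; the original system works on depth-camera data. For a dynamic zone, a freshly computed polygon would be passed in each frame.

```python
def point_in_polygon(pt, polygon):
    """Ray-casting test: True if pt lies inside the polygon given as a list of
    (x, y) vertices in order."""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray from pt towards +x cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def zone_violated(tracked_points, robot_zone):
    """True if any tracked operator point (e.g. a hand position projected onto
    the table) lies inside the robot zone polygon."""
    return any(point_in_polygon(p, robot_zone) for p in tracked_points)


# Hypothetical robot zone (metres, table coordinates) and tracked hand points.
robot_zone = [(0.0, 0.0), (0.8, 0.0), (0.8, 0.6), (0.0, 0.6)]
hands = [(1.0, 0.3), (0.7, 0.2)]
if zone_violated(hands, robot_zone):
    print("Boundary violated: stop the robot")
```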

Hald et al. [108] projects onto the paper the lines that the robot is going to draw. Similarly, Luipers and Richert [112] aim to show the user the cobot’s planned path so that the user is aware of the robot’s intended motion. Schmitt et al. [110] projects colored areas onto the workstation: green and red for the human and robot workspaces, respectively, and yellow for collaborative tasks, where both share the same working area.

To make the user aware of potential danger, Lotsaris et al. [111] displays augmented notifications when the manipulator platform starts moving. In addition, the operator can visualize planar safety fields around the mobile platform. If the laser scanners detect a violation of any danger zone, the robot is immediately brought to a complete stop. Moreover, through the HMD, the user can see the digital twin of the mobile platform and of the robotic arms at the trajectory’s final position, as well as a line indicating the path to be followed.

Tsamis et al. [90] also has a robotic manipulator mounted on a mobile platform. The augmented reality application enables the user to visualize the planned trajectories of the mobile platform and the manipulator through holographic green 3D spheres interpolated along the calculated waypoints. The manipulator’s maximum workspace is also shown to the user as a semi-transparent red sphere, always centered on the cobot base. Through virtual collision detection, the application can identify whether the operator is inside or outside a virtual geometric shape; this feature is used to detect whether the operator breaches the robot’s workspace, in which case the robot motion is halted and a potential collision warning is shown to the user. Similar to Chan et al. [88], the application also renders a digital twin of the robot with which the operator can preview the planned motion but, unlike Chan et al. [88], the user cannot edit the planned path.
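The two geometric operations described for Tsamis et al. [90], placing marker spheres along the planned trajectory and testing whether the operator is inside the spherical maximum workspace, can be sketched as below. Linear interpolation, the marker spacing, and the reach value are assumptions chosen for illustration.

```python
import math


def interpolate_waypoints(waypoints, spacing=0.05):
    """Place marker positions along straight segments between consecutive 3D
    waypoints so that neighbouring markers are at most `spacing` apart."""
    markers = [tuple(waypoints[0])]
    for a, b in zip(waypoints, waypoints[1:]):
        n = max(1, math.ceil(math.dist(a, b) / spacing))
        for i in range(1, n + 1):
            t = i / n
            markers.append(tuple(a[k] + t * (b[k] - a[k]) for k in range(3)))
    return markers


def inside_workspace(operator_pos, cobot_base, max_reach):
    """Sphere test: True if the operator is within max_reach of the cobot base.
    The base position must be updated as the mobile platform moves."""
    return math.dist(operator_pos, cobot_base) <= max_reach


markers = interpolate_waypoints([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)], spacing=0.25)
print(len(markers))  # 5

# Hypothetical reach of 0.85 m around a base at the origin.
if inside_workspace((0.5, 0.5, 0.0), (0.0, 0.0, 0.0), max_reach=0.85):
    print("Potential collision: robot motion halted")
```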

Last but not least, Andronas et al. [113] uses augmented reality to show the user the robot trajectories and safety zones. Unlike the other applications, however, it complements visual warning messages with audio notifications.

Some other papers mention safety features but do not integrate them into augmented reality. Liu and Wang [94] use a depth sensor to monitor the distance between the robot and the operator, but no AR cues are displayed to the user. Paletta et al. [103] tracks the user’s head and hands with a motion capture system and computes the distance to the nearest robot part; based on that distance and on the participant’s stress level, the robot arm’s speed is reduced, potentially down to a full stop. In addition, Dimitropoulos et al. [114] uses the vibration feature of a smartwatch as a cue for safety alerts.

It is important to note that, although some AR-based approaches can detect whether the operator breaches an unsafe working area and act upon the robot by reducing its speed or stopping it, most of the above-mentioned AR safety functionalities are not certified; they can nevertheless serve as an extra layer of protection.

Moreover, the presented approaches can be classified according to whether they act as active or passive agents in ensuring the user’s safety. Approaches that cannot monitor the current state of the operation (either by identifying a breach of the safety zones or by anticipating a possible collision through monitoring or predicting the operator’s movements and comparing them against the robot’s currently planned trajectory), and that therefore do not act to prevent an accident by stopping the robot, reducing its speed, or changing its trajectory on the fly, can be considered passive approaches. Approaches that can act upon the system to prevent accidents can be considered active approaches.
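The active/passive criterion can be stated compactly in code. The capability flags and example entries below are hypothetical and do not describe any specific reviewed system. The point is that rich visual feedback alone never makes an approach active, whereas monitoring combined with intervention on the robot does.

```python
from dataclasses import dataclass


@dataclass
class SafetyApproach:
    name: str
    shows_safety_info: bool  # zones, trajectories, warnings rendered in AR
    monitors_state: bool     # detects zone breaches or predicts collisions
    acts_on_robot: bool      # stops, slows down, or re-plans the robot


def classify(approach: SafetyApproach) -> str:
    """Active only if the approach both monitors the operation and acts on
    the robot; otherwise it is passive, however much it displays."""
    return "active" if approach.monitors_state and approach.acts_on_robot else "passive"


examples = [
    SafetyApproach("zones displayed only", True, False, False),
    SafetyApproach("zones with breach-triggered stop", True, True, True),
]
for a in examples:
    print(f"{a.name}: {classify(a)}")
```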

Most of the papers, including Makris et al. [92], Michalos et al. [91], Gkournelos et al. [98], Michalos et al. [99], Papanastasiou et al. [105], Andersen et al. [93], Kalpagam et al. [49], Kyjanek et al. [104], Chan et al. [88], Hald et al. [108], Luipers and Richert [112], Schmitt et al. [110], and Andronas et al. [113], present only informational safety content and do not act directly to prevent accidents or collisions. Therefore, they can be classified as passive approaches. On the other hand, as discussed above, Vogel et al. [109], Vogel et al. [95], Argyrou et al. [96], Ji et al. [101], Bolano et al. [106], Lotsaris et al. [111], and Tsamis et al. [90] present active approaches to ensuring the operator’s safety.

4.4.2. Applications—Guidance

This subsection describes the use of Augmented Reality as a guidance tool to assist the operator in a human–robot collaborative task. Makris et al. [92], Michalos et al. [91], Gkournelos et al. [98], Michalos et al. [99], and Papanastasiou et al. [105] used Augmented Reality to display textual instructions and 3D holographic models to the user. Through this feature, the operator can see all the parts, tools, and components needed for the corresponding task. The 3D holographic models of the components show the operator exactly where and how each part should fit or be used. Gkournelos et al. [98] does not use an AR guidance system for the sealing application.

To instruct the user on how to handle a tool or the assembly item itself, Andersen et al. [93] developed a system that projects this information directly onto the workpiece. It shows whether the operator should rotate the item, in which direction, and how much rotation is still needed, or whether the operator should move it, highlighting the final destination on the table and showing the remaining distance. The displayed values are updated in real time according to the operator’s manipulation of the item.
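The real-time “how much is left” feedback described above can be sketched as computing, from the tracked pose of the item, the smallest signed rotation and the straight-line distance still needed. The function names, tolerances, the counter-clockwise-positive convention, and the choice to prompt rotation before translation are assumptions for illustration; each new pose estimate from the tracker would simply call the function again.

```python
import math


def remaining_rotation_deg(current_deg, target_deg):
    """Smallest signed rotation still needed, in (-180, 180]; positive means
    counter-clockwise (a convention assumed here)."""
    diff = (target_deg - current_deg) % 360.0
    if diff > 180.0:
        diff -= 360.0
    return diff


def instruction(current_xy, current_deg, target_xy, target_deg,
                pos_tol_m=0.01, ang_tol_deg=2.0):
    """Text to project onto the workpiece for the current tracked pose."""
    rot = remaining_rotation_deg(current_deg, target_deg)
    if abs(rot) > ang_tol_deg:
        direction = "counter-clockwise" if rot > 0 else "clockwise"
        return f"Rotate {abs(rot):.0f} deg {direction}"
    dist = math.dist(current_xy, target_xy)
    if dist > pos_tol_m:
        return f"Move {dist * 100:.0f} cm to the highlighted target"
    return "Done"


print(instruction((0.0, 0.0), 350.0, (0.3, 0.4), 10.0))  # Rotate 20 deg counter-clockwise
print(instruction((0.0, 0.0), 10.0, (0.3, 0.4), 10.0))   # Move 50 cm to the highlighted target
```

Wrapping the angle difference into (-180, 180] avoids telling the operator to turn the item 340 degrees one way when 20 degrees the other way suffices.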

Using a large screen, Liu and Wang [94] display assembly text instructions to the operator. Moreover, their system uses feature recognition to identify the parts and tools available in the workstation and, if one matches a stored CAD file, renders a 3D label over it to help the user recognize it. The instructions projected to the operator also depend on the identified item. The application renders other visual cues as well, such as a red arrow over the next piece to be manipulated by the cobot.

Danielsson et al. [48] aims to assist the operator through an assembly process using augmented text instructions and 3D holographic representations of the items to be assembled, which highlight the locations and parts of interest in each step. The instructions are presented in the upper right corner so as not to cover the main part of the screen. The highlighted parts or locations can either blink or be static.

To aid the operator, Argyrou et al. [96] uses augmented reality to present textual information on a virtual slate and colored arrows that highlight areas of interest, walking the operator through an engine assembly process. The application also uses the developed monitoring system to determine the status of the current task and display the appropriate instructions.

Kalpagam et al. [49] projects instructions onto the workpiece and the ground to guide the operator through a door assembly process. For example, the application shows how much the operator needs to move or rotate a part and how much remains for the interaction to be completed, with the values updated in real time according to the operator’s manipulation of the item. It also shows the operator where to place specific parts and which ones to join. Moreover, it highlights the required tools and the locations the operator should move to during the task. The application also uses a projection mapping detection algorithm to identify when a task has been completed correctly, in which case it projects a confirmation sign to the user and automatically advances to the next step.

Ji et al. [101] retrieves stored production instructions from a database and presents them to the user as a combination of colored 3D arrows and text pointing at the location or item on which the operator or robot should act. Each text has the same color as its arrow, and the instructions describe the action, the agent, and the step order.

Hietanen et al. [51] communicates with the user through images and detailed text instructions describing the task being performed and the robot’s next actions, projected onto the table in the projector approach or shown on a virtual slate in the augmented reality head-mounted display approach. Similarly, Andronas et al. [113] presents task information and detailed instructions to the operator on a virtual slate. Moreover, it also presents a 3D holographic visualization of the part being assembled, highlighting where it should be mounted. The same concept is presented by Luipers and Richert [112], who intend to use an AR feature to guide the user through an assembly operation by projecting a textual description of the current step. Lotsaris et al. [111], in turn, uses multiple workbenches, so its application indicates, in addition to the textual description of the task to be performed, the workbench on which the task should be performed.

For the first application, Dimitropoulos et al. [114] projects textual instructions and task descriptions onto the aluminium panel being worked on, along with illustrations of the parts and procedures that the operator has to execute. In addition, arrows and highlighted shapes indicate where each component should be assembled. The second application uses an HMD device to display animated 3D models and auxiliary panels with information about the procedure being executed. It also indicates where each part should be assembled and which tools are required for the task. With the HMD approach, the user can switch between two levels of assistance: a higher one, with detailed information and 3D holographic animations, and a basic one that only renders the contour of the part where it should be assembled. At the highest level of assistance, the system can identify whether the operator is close to the hologram and then disables the graphics superimposed on the real part to improve the operator’s view. Furthermore, the controller developed by Dimitropoulos et al. [114] can also detect the operator’s actions and send notifications if the operator manipulates the wrong objects or performs wrong actions. When the operator performs the correct actions, the system recognizes them and moves to the next step.
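Two behaviors described for Dimitropoulos et al. [114] can be sketched as a small state machine: hiding the superimposed graphics when the operator approaches the hologram at the high assistance level, and either advancing or issuing a warning depending on the detected action. The class name, the action labels, and the proximity threshold are hypothetical, and action detection itself (performed in the original work by the developed controller) is assumed to be available as input.

```python
import math
from dataclasses import dataclass, field


@dataclass
class AssistanceSession:
    steps: list                  # expected action label per step (hypothetical)
    level: str = "high"          # "high" (animations) or "basic" (contour only)
    hide_radius_m: float = 0.15  # hypothetical proximity threshold
    current: int = 0
    notifications: list = field(default_factory=list)

    def overlay_visible(self, hand_pos, hologram_pos):
        """At the high level, hide the graphics when the hand nears the hologram
        so the real part stays visible."""
        near = math.dist(hand_pos, hologram_pos) < self.hide_radius_m
        return not (self.level == "high" and near)

    def on_action(self, detected_action):
        """Advance on the expected action; otherwise record a notification."""
        if self.current >= len(self.steps):
            return "finished"
        expected = self.steps[self.current]
        if detected_action != expected:
            self.notifications.append(f"Wrong action: expected {expected!r}")
            return "notify"
        self.current += 1
        return "finished" if self.current == len(self.steps) else "next step"


session = AssistanceSession(steps=["pick hanger", "mount hanger"])
print(session.overlay_visible((0.0, 0.0, 0.0), (0.1, 0.0, 0.0)))  # False
print(session.on_action("mount hanger"), session.on_action("pick hanger"))
```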

Lastly, Dimitropoulos et al. [115] mentions the use of AR instructions but does not describe how they are shown to the user. In this work, the HMD device is also used as an image capturing system that attempts to identify the step the operator intends to execute and show the corresponding instructions, making the user interaction more seamless.

4.4.3. Applications—Feedback

This section describes the use of Augmented Reality for feeding back useful information to the operator in a human–robot collaborative task, apart from the guidance and safety data mentioned previously. Makris et al. [92], Michalos et al. [91], Gkournelos et al. [98] and Michalos et al. [99] provide shop floor status and production data upon user request. This feedback includes the current and the next product to be assembled, the average remaining cycle time to complete the current operation, and the number of completed operations versus the target.
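The production figures listed above reduce to simple arithmetic. The sketch below is not taken from any of the cited systems: it assumes, hypothetically, that the remaining cycle time is estimated as the mean of previously recorded cycle times minus the time already spent on the current operation, and that the values arrive from some shop-floor data source that is not modeled here. The class and field names are illustrative.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ShopFloorStatus:
    """Hypothetical container for the production data shown on user request."""
    current_product: str
    next_product: str
    past_cycle_times_s: list  # recorded durations of earlier cycles of this operation
    elapsed_s: float          # time already spent on the current operation
    completed_ops: int
    target_ops: int

    def remaining_time_s(self) -> float:
        # Assumed estimate: average cycle time minus elapsed time, never negative
        return max(0.0, mean(self.past_cycle_times_s) - self.elapsed_s)

    def summary(self) -> str:
        # One-line text such as an AR panel might display
        return (f"Now: {self.current_product} | Next: {self.next_product} | "
                f"~{self.remaining_time_s():.0f} s left | "
                f"{self.completed_ops}/{self.target_ops} operations")
```

For example, with past cycles of 60, 80 and 70 s and 25 s already elapsed, the estimate is 70 − 25 = 45 s.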

In turn, the system developed in Danielsson et al. [48] shows the user the overall progress of the assembly process, indicating the current step and the total number of steps. It also shows the operator which voice commands are available at each step. A command’s text is highlighted in green if the system recognized it successfully, or in red if the system was unsure whether the command had been spoken, in which case no function is triggered.
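The green/red highlighting described above amounts to thresholding the recognizer’s confidence. The following minimal sketch assumes a speech recognizer that returns a text hypothesis and a confidence score; the 0.8 threshold, the function name and the command names are hypothetical and not reported by Danielsson et al.

```python
from enum import Enum
from typing import Callable, Dict


class Highlight(Enum):
    NONE = "none"    # command available but not spoken
    GREEN = "green"  # recognized with enough confidence; action triggered
    RED = "red"      # recognizer unsure; nothing is triggered


CONFIDENCE_THRESHOLD = 0.8  # hypothetical value, not reported by Danielsson et al.


def handle_utterance(text: str, confidence: float,
                     actions: Dict[str, Callable[[], None]]) -> Dict[str, Highlight]:
    """Map one recognizer result to highlight colors for the commands of this step."""
    highlights = {command: Highlight.NONE for command in actions}
    if text in actions:
        if confidence >= CONFIDENCE_THRESHOLD:
            highlights[text] = Highlight.GREEN
            actions[text]()  # trigger the function bound to the command
        else:
            highlights[text] = Highlight.RED  # shown in red, function not called
    return highlights
```

Keeping the available commands as the keys of `actions` means the per-step list shown to the operator and the set of accepted commands cannot drift apart.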

Using three projected auxiliary areas, one at the bottom and one on each side of the workspace, the approach of Vogel et al. [95] projects textual messages and notifications to the user in case of errors, together with fault diagnoses and textual instructions on how to handle them. It also shows the user the current state of the robot and the progress of the task.

The AR application developed in Lee et al. [97] provides the user with real-time process data for a specific product, such as its assembly blueprint and its current production status. Because this blueprint is a general overview of the process rather than step-by-step guidance throughout the operation, it was classified as feedback and not as guidance.

Taking a more human-centered approach, the AR application in Paletta et al. [103] projects the user’s current mental load and concentration level into his field of view as a virtual yellow bar and orange bar, respectively. It also shows the user’s gaze, represented by a small blue sphere. Similarly, Andronas et al. [113], besides the information presented as guidance or safety features, gives feedback on the operator’s ergonomics by coloring the body parts of a small virtual manikin on a green, yellow and red scale. The ergonomic analysis is performed individually for each body part.

During task execution in Materna et al. [89], the user can see the current state of the system, error messages, and the currently available actions in a notification bar near the edge of the table. Error messages are additionally accompanied by a sound as audio feedback. Moreover, for every part detected and picked by the robot, an outline and an ID are displayed at the location where the part will be placed.

With one of the simplest user interfaces among all selected papers, Lamon et al. [100] and De Franco et al. [50] show the operator only a text and a small sphere. By changing the color of the sphere and the displayed text, the interface indicates the activity the robot is performing, whether it has grasped an item, or whether it is idle, waiting for user input. Furthermore, De Franco et al. [50] presents a similar interface for the polishing task. In this interface, the text is replaced by numbers that report the magnitude of the force being applied to the surface, and the small sphere is replaced by an arrow whose size increases or decreases with the applied force (the larger the arrow, the higher the force). The arrow also follows a green, yellow and red color code that represents how far the applied force is from the desired amount. Both Lamon et al. [100] and De Franco et al. [50] play a beep to alert the operator that the robot is moving.
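The polishing interface combines two mappings from a single force reading: arrow size proportional to the applied force, and a traffic-light color based on the deviation from the desired force. A minimal sketch follows; the force range, arrow length and the 10% and 25% deviation thresholds are illustrative assumptions, not values reported by De Franco et al.

```python
def force_feedback(force_n: float, desired_n: float,
                   max_force_n: float = 50.0, max_arrow_m: float = 0.2,
                   yellow_tol: float = 0.10, red_tol: float = 0.25):
    """Return (arrow length, arrow color, label) for one force reading.

    All numeric defaults are illustrative assumptions.
    """
    # Arrow length grows linearly with the measured force, clamped to [0, max_arrow_m]
    clamped = min(max(force_n, 0.0), max_force_n)
    length_m = max_arrow_m * clamped / max_force_n
    # Color encodes the relative deviation from the desired force
    deviation = abs(force_n - desired_n) / desired_n
    if deviation <= yellow_tol:
        color = "green"
    elif deviation <= red_tol:
        color = "yellow"
    else:
        color = "red"
    return length_m, color, f"{force_n:.1f} N"
```

With a desired force of 20 N, a reading of 23 N (15% off) would be shown as a yellow arrow, while 30 N (50% off) would turn it red.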

Luipers and Richert [112] present a concept for an AR feature that gives the user feedback on the assembly status, that is, which steps have been concluded and which have not, as well as on the overall progress of the process. Ji et al. [101] goes a step further by displaying production-related information, such as the running time of the task, the expected time remaining to conclude it, which task is being performed, the current and total number of steps, and how many components are available to be assembled.

Recognizing that a lack of coordination between the agents in an aircraft riveting operation can severely damage the fuselage, the Augmented Reality application in Mueller et al. [102] lets the user see the robot’s current position on the other side of the fuselage during the riveting process. Along similar lines, Wang et al. [107] lets the user visualize the robot motion during a collaborative automobile gearbox assembly simulation, so that the user can train, learn the assembly process, and get used to working with a cobot.

Kyjanek et al. [104] gives the user access to general production-related information, such as the current assembly step, the percentage of the task completed, the piece ID, how many parts have already been placed, and how many layers have already been built, using the communication capability of the augmented reality HMD to obtain this information through ROS messages. Moreover, the user can visualize the robot joint velocities, the exact location where the robot will place the part being manipulated, and the positions where the remaining parts should be assembled.

In Bolano et al. [106], items within the workspace are outlined according to their current status. If an item is ready and available to be worked on, it is outlined in purple. If the robot is currently working on an item, that item is outlined in green. Lastly, if a possible collision is detected, a red outline is projected. As an additional feedback feature, the application projects a representation of the workspace on the left side of the work table, so the operator has a quick overview of it even when the robot occupies and occludes the workspace. It also casts textual information about the robot’s current state and error descriptions onto the table. This feedback makes the operator aware of the current status of the vision system, so the operator can instantly detect whether the robot has problems localizing the parts placed in the workspace.
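The outline colors described above can be read as a status-to-color mapping applied to each tracked item before projection. In the minimal sketch below, the data structure and the precedence order (a collision warning overriding the other states) are assumptions; Bolano et al. do not state how overlapping states are resolved.

```python
from dataclasses import dataclass


@dataclass
class WorkspaceItem:
    item_id: str
    robot_working_on_it: bool = False
    collision_risk: bool = False


def outline_color(item: WorkspaceItem) -> str:
    """Color of the projected outline; the precedence order is an assumption."""
    if item.collision_risk:
        return "red"     # possible collision detected
    if item.robot_working_on_it:
        return "green"   # robot currently handling this item
    return "purple"      # ready and available to be worked on


def outlines(items):
    """Map every tracked item to the color of its projected outline."""
    return {item.item_id: outline_color(item) for item in items}
```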

In cases of failure, emergency, or exception handling in Lotsaris et al. [111], the operator is informed through pop-up notifications that describe the type of emergency and give specific instructions on how to overcome it. The application also notifies the user of the current status of the activity. Similarly, in Tsamis et al. [90], the robot’s current status is fed back to the user, indicating whether motion planning or execution has failed or succeeded.

4.4.4. Applications—Programming

This section describes the use of Augmented Reality for programming or re-planning the robot trajectory during a human–robot collaborative task. Materna et al. [89] uses a touch-enabled table combined with a projection system to let the user program the robot at a more abstract level. It proposes pre-constructed instruction blocks that can be connected according to the success or failure of the previous command, allowing simple branching and cycling of the program. The blocks were given simple, straightforward names (e.g., “pick from feeder”), and their parameters have to be set individually. Instructions whose parameters were all set were colored green, those that still had parameters to be set were colored red, and those without parameters were colored gray. Robot parameters are set by kinesthetic teaching, and the final positions of the items by dragging the respective outlines over the table. Using a Wizard of Oz approach, the authors also simulated a user-monitoring system for two specific instructions (“wait for the user to finish” and “wait for user”). These instructions were expected to make the robot wait for the user to finish some activity, or to return to the center of the table, respectively. When the program is executed, all instructions become gray and non-interactable.
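The block-based programming model described above can be sketched as a small graph of instruction blocks with success and failure links, which is enough to express both branching and loops. This is an illustrative reconstruction, not the implementation of Materna et al.: the class, the `run` callbacks, the `max_steps` guard and the block names used in the example are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class InstructionBlock:
    name: str                                  # e.g. "pick from feeder"
    params: Dict[str, object]                  # parameter name -> value, None while unset
    run: Callable[[Dict[str, object]], bool]   # True on success, False on failure
    on_success: Optional[str] = None           # key of the block to run next on success
    on_failure: Optional[str] = None           # key of the block to run next on failure

    def color(self) -> str:
        # Editing-time color code: gray = no parameters, green = all set, red = some unset
        if not self.params:
            return "gray"
        return "green" if all(v is not None for v in self.params.values()) else "red"


def execute(blocks: Dict[str, InstructionBlock], start: str,
            max_steps: int = 100) -> List[Tuple[str, bool]]:
    """Follow success/failure links from `start` until a link is None."""
    trace: List[Tuple[str, bool]] = []
    current: Optional[str] = start
    while current is not None and len(trace) < max_steps:
        block = blocks[current]
        if block.color() == "red":
            raise ValueError(f"'{block.name}' still has unset parameters")
        ok = block.run(block.params)
        trace.append((block.name, ok))
        current = block.on_success if ok else block.on_failure
    return trace
```

For example, linking a “pick from feeder” block to a placing block on success, and the placing block back to picking, yields a loop that ends when picking fails because the feeder is empty.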

In Ji et al. [101], the planned trajectory of the robot’s end-effector is represented by evenly spaced waypoints shown as small white spheres. The user can edit the initially planned path by grabbing a waypoint with a pinch gesture and dragging it to the desired location; each time the user does so, the path is updated to reflect the modification. Using voice commands, the user can preview the planned trajectory executed by the robot’s digital twin and, if satisfied, confirm it with another voice command.
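Placing evenly spaced waypoints along a planned path is typically done by resampling the path by arc length. The sketch below shows that idea for a piecewise-linear path together with a simple pinch-and-drag edit that moves one waypoint. Ji et al. do not describe their computation here, so the resampling scheme, the spacing parameter and the move-one-waypoint update are assumptions; a real system would also re-plan and re-validate the robot motion after each edit.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def resample(path: List[Point], spacing: float) -> List[Point]:
    """Return waypoints spaced `spacing` apart (arc length) along a polyline."""
    waypoints = [path[0]]
    to_next = spacing  # arc length still needed before the next waypoint
    for a, b in zip(path, path[1:]):
        seg_len = math.dist(a, b)
        pos = 0.0  # arc length already consumed on segment a -> b
        while seg_len - pos >= to_next:
            pos += to_next
            t = pos / seg_len
            waypoints.append(tuple(p + t * (q - p) for p, q in zip(a, b)))
            to_next = spacing
        to_next -= seg_len - pos  # carry the leftover distance into the next segment
    if math.dist(waypoints[-1], path[-1]) > 1e-9:
        waypoints.append(path[-1])  # always keep the goal pose
    return waypoints


def drag_waypoint(waypoints: List[Point], index: int, new_pos: Point) -> List[Point]:
    """Return a copy of the waypoint list with one waypoint moved (hypothetical edit)."""
    edited = list(waypoints)
    edited[index] = new_pos
    return edited
```

For instance, resampling an L-shaped path of two 1 m segments with a 0.5 m spacing yields five waypoints, including the corner and both endpoints.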

Sparing the user from planning trajectories, Kyjanek et al. [104] uses the ROS-based planning framework MoveIt! [120] to generate the robot’s trajectory. Pre-programmed robot behaviors and spatial limitations are combined with the known position and shape of the already assembled structure, so that pressing the plan button triggers the generation and preview of a valid, collision-free robot motion. After the user confirms it, the planned path is sent to the robot controller for execution as a set of joint values.

To create a trajectory for the robot in Chan et al. [88], the user wearing the HMD looks at a point on the work surface and uses the voice command “set point” to set a waypoint. A virtual sphere is rendered at that position, with an arrow indicating the surface normal. At any moment, the user can say “reset path” to clear all waypoints set so far. When done planning, the operator uses the “lock path” command, and the waypoints are sent to a control computer running ROS, which computes the robot path and starts the robot motion.
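The three voice commands described above form a small state machine over a list of gaze-selected waypoints. The minimal sketch below assumes that gaze ray casting already yields a surface point and its normal, and it abstracts the hand-off to the ROS control computer as returning the waypoint list; the class and method names are hypothetical and do not come from Chan et al.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class GazeWaypointPlanner:
    """Collects gaze-selected waypoints from voice commands (illustrative only)."""
    waypoints: List[Tuple[Vec3, Vec3]] = field(default_factory=list)  # (point, surface normal)
    locked: bool = False

    def on_voice_command(self, command: str,
                         gaze_hit: Optional[Tuple[Vec3, Vec3]] = None):
        """Apply one command; returns the waypoint list when the path is locked."""
        if self.locked:
            return None  # path already handed off; ignore further edits
        if command == "set point" and gaze_hit is not None:
            self.waypoints.append(gaze_hit)  # a sphere and normal arrow would be rendered here
        elif command == "reset path":
            self.waypoints.clear()
        elif command == "lock path":
            self.locked = True
            return list(self.waypoints)  # would be sent to the ROS control computer
        return None
```

Ignoring commands after “lock path” is a design assumption that prevents the displayed path from diverging from the one already sent to the robot.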

The programming feature presented in Lotsaris et al. [111] enables the user to command the robot to move towards a specific location or workstation inside the warehouse. For workstations, holographic buttons with pre-defined coordinates are displayed over each workstation, so the robot is always sent to the same location, whereas other locations inside the warehouse can be chosen by clicking any point on the shop floor. In both cases, the digital twin of the mobile platform and the robot arm appears in the user’s field of view at the designated final position, together with a line indicating the robot’s planned path.

Lastly, Dimitropoulos et al. [115] developed two programming approaches. The first is direct movement of the end-effector through manual guidance: the operator grabs dedicated handles installed on the robot’s gripper and moves the end-effector to the preferred position. The second is an indirect, AR-based control of the end-effector position, in which the operator places a virtual 3D holographic end-effector at the position the physical end-effector should move to.

4.4.5. Applications—Quality Control

This section describes the use of Augmented Reality as a means of quality assurance in a human–robot collaborative task. In Bolano et al. [106], the system can identify defects on the manipulated parts, which then need to be inspected by the operator. To signal that human intervention is needed, the projection system casts a blinking marker over the respective piece. In Schmitt et al. [110], the system can identify whether the parts are correctly positioned and whether all screws have been inserted, and signals this to the user: if either condition is not met, a notification is projected in the same area used for instructions.

4.5. User Interaction Modality

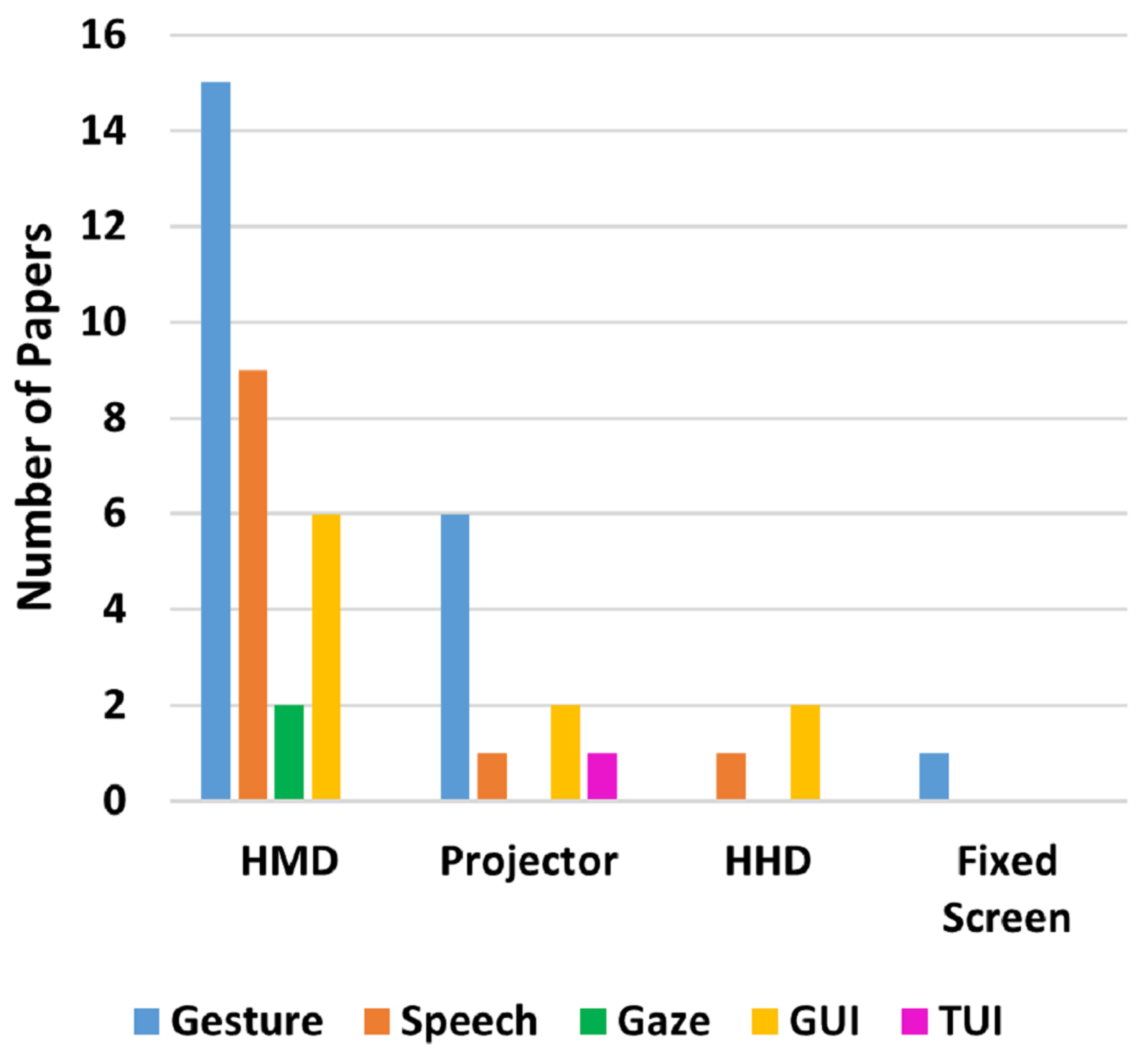

Most of the selected papers use gestures as the main interaction modality, as shown in Table 14 and Figure 18. This is because most systems used to superimpose the interface onto the user’s field of view can also track and recognize gestures, offering an easy and intuitive way to manipulate the augmented environment while maintaining focus on the task.

Gesture recognition is the process of recognizing meaningful human movements that contain useful information in human–human or human–robot interaction. Gestures can be performed by different body parts and combinations thereof, such as the hands, head, legs, full body, or upper body. Hand gestures have received particular attention in gesture recognition, because hand movement allows for a wide range of meaningful gestures, which is convenient for conveying information quickly and naturally in vision-based interfaces [121].

Speech recognition is the process of recognizing and appropriately responding to the meaning embedded in human speech. By allowing data and commands to be entered into a computer without typing, computer understanding of naturally spoken language frees human hands for other tasks [122].

Gaze-based interaction can be split into four categories, namely: Diagnostic, normally used to assess a person’s attention to a task, which is mainly performed offline on data recorded during the task; Active, normally used for command effects, which uses real-time data provided by eye trackers such as

x and