Vision-Based Defect Inspection and Condition Assessment for Sewer Pipes: A Comprehensive Survey

Abstract

1. Introduction

1.1. Background

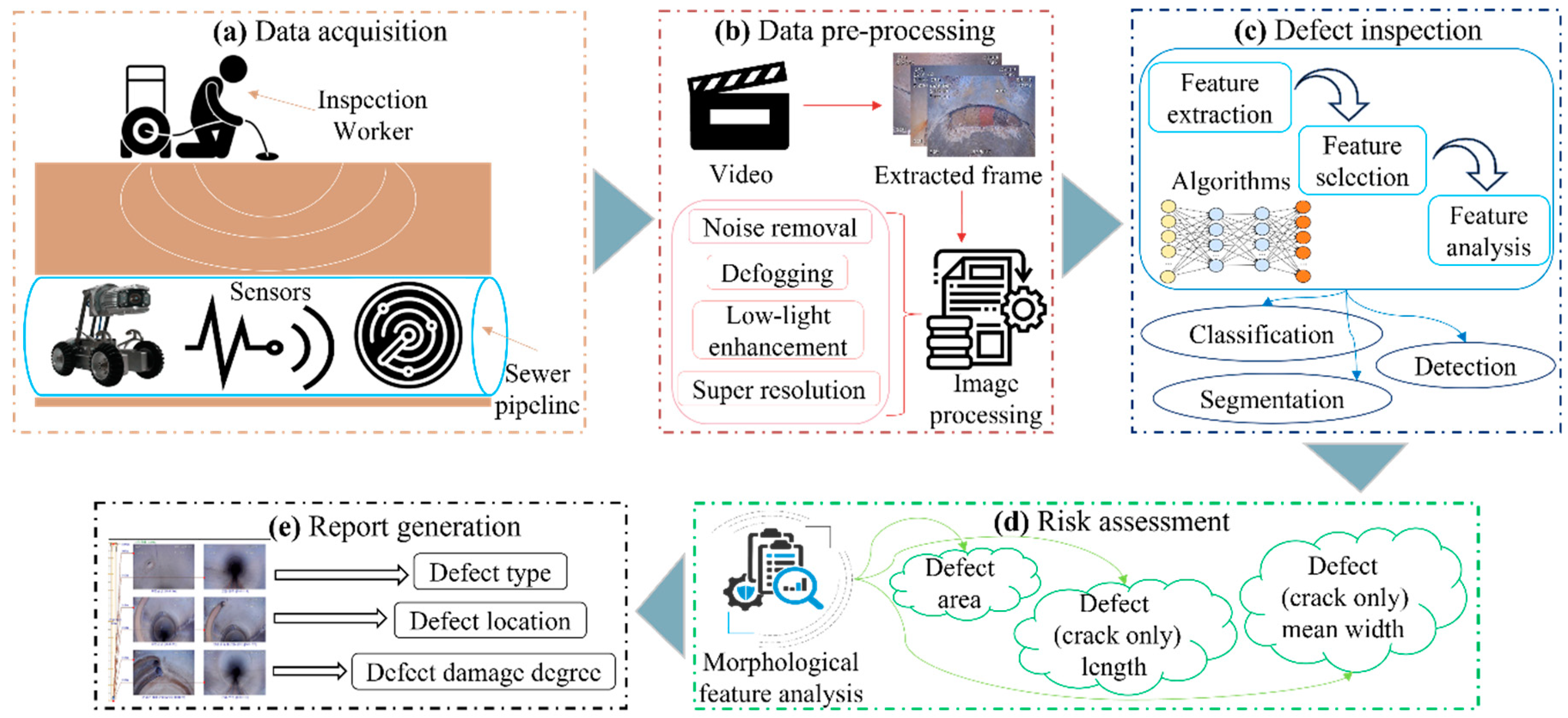

1.2. Defect Inspection Framework

1.3. Previous Survey Papers

1.4. Contributions

2. Survey Methodology

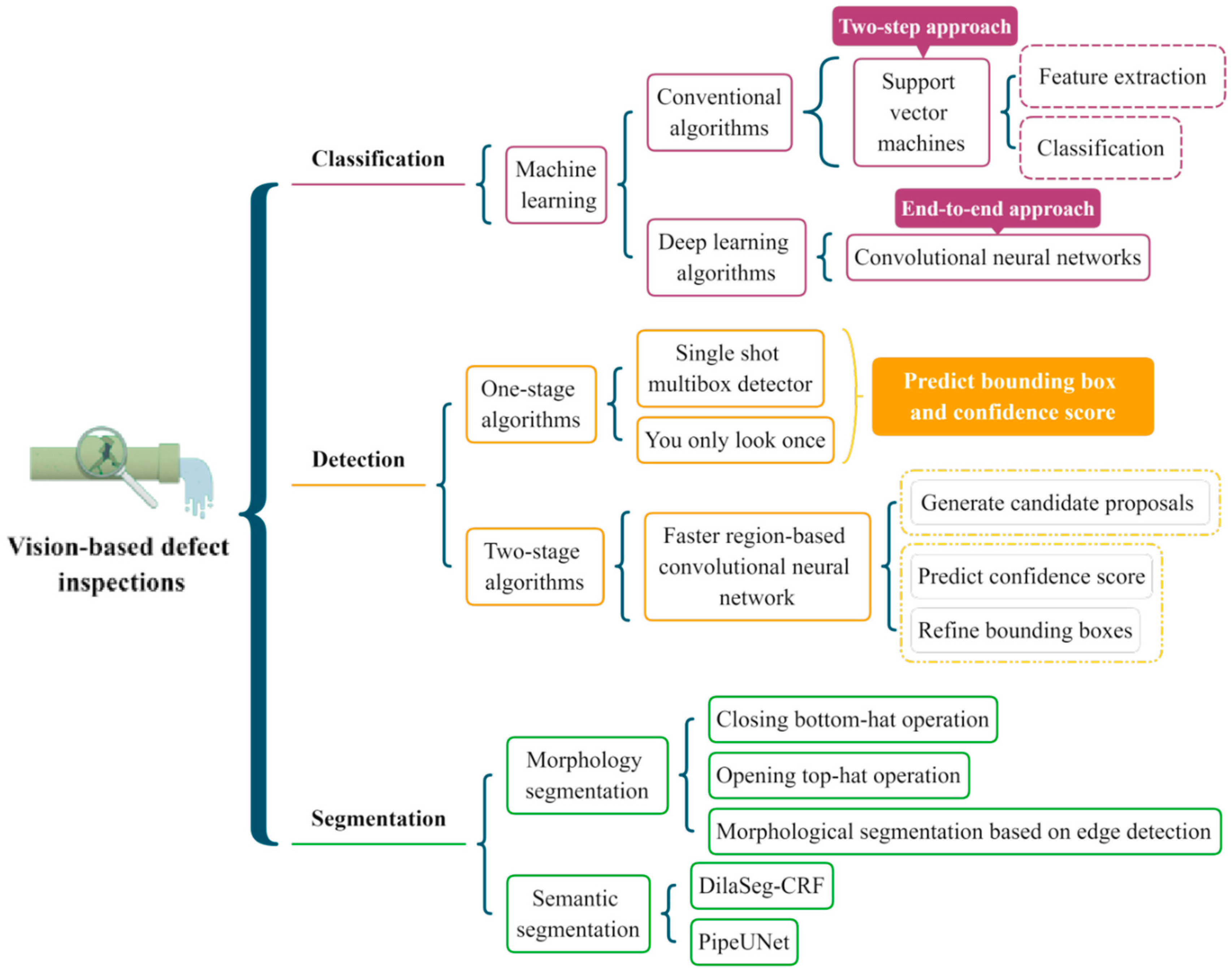

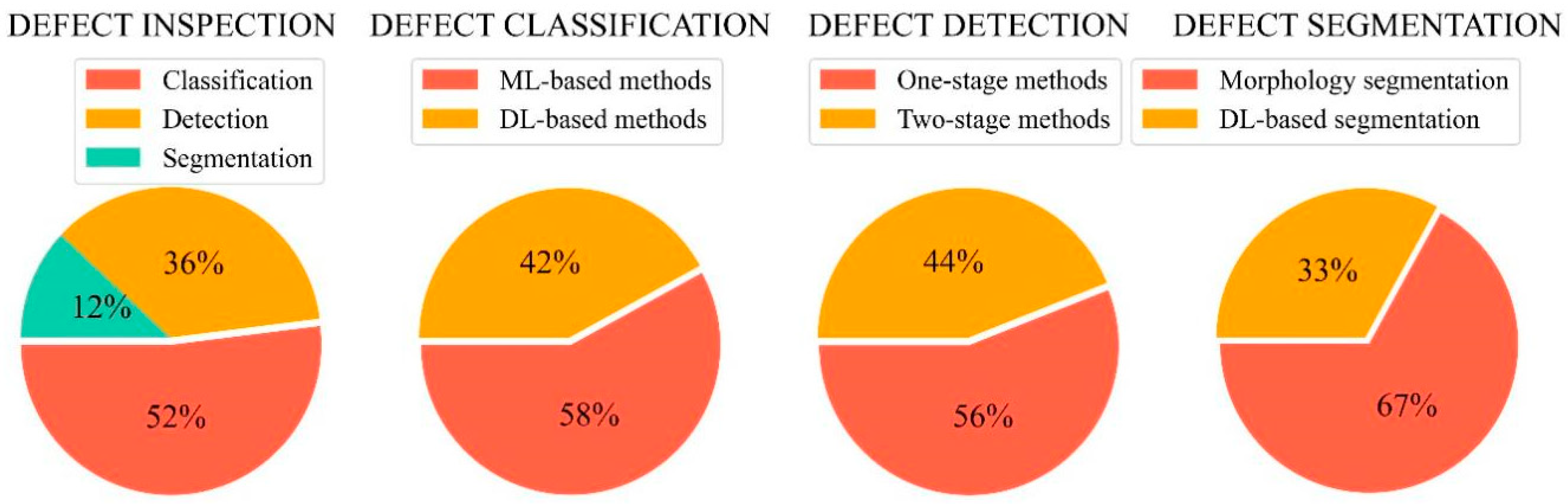

3. Defect Inspection

3.1. Defect Classification

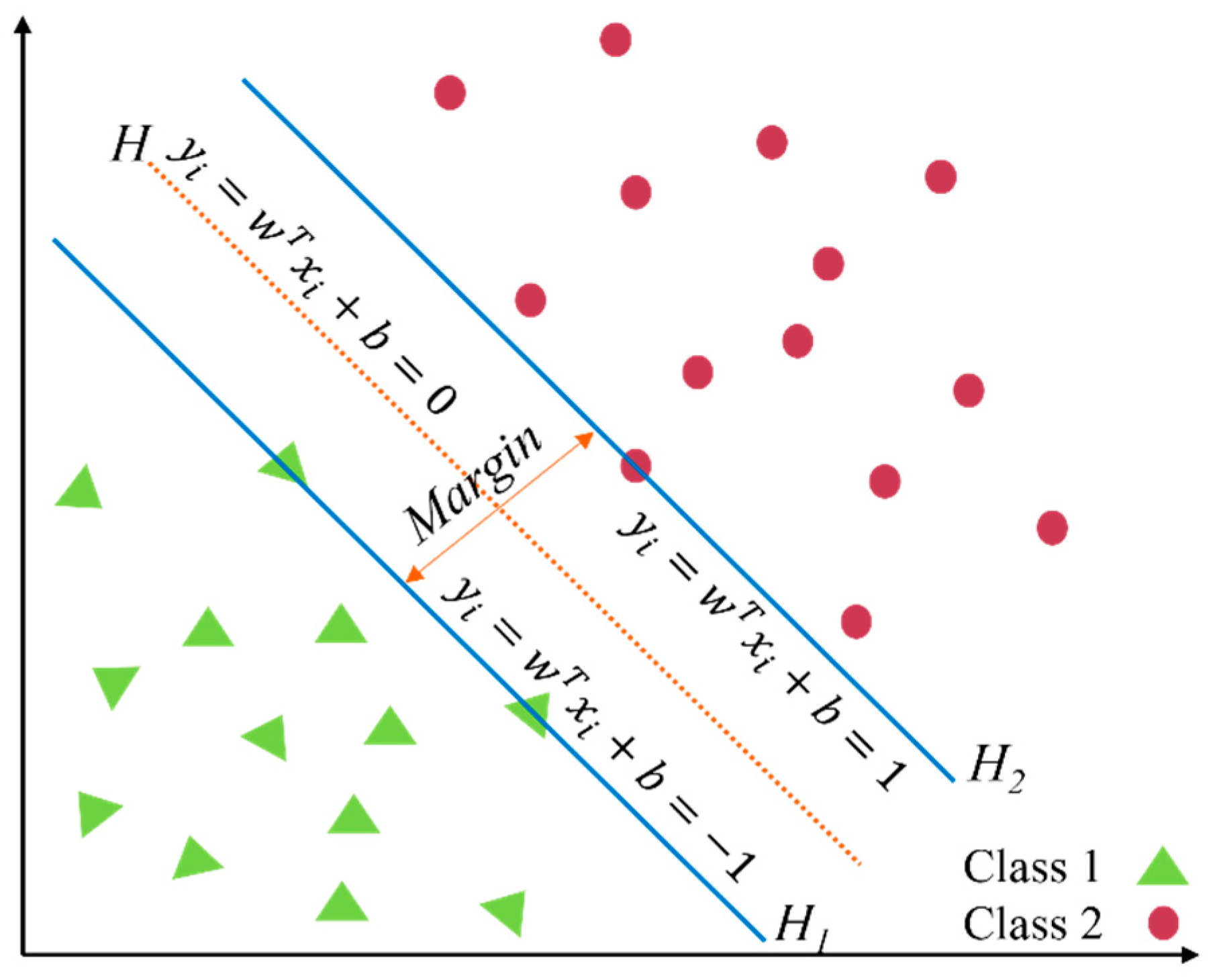

3.1.1. Support Vector Machines (SVMs)

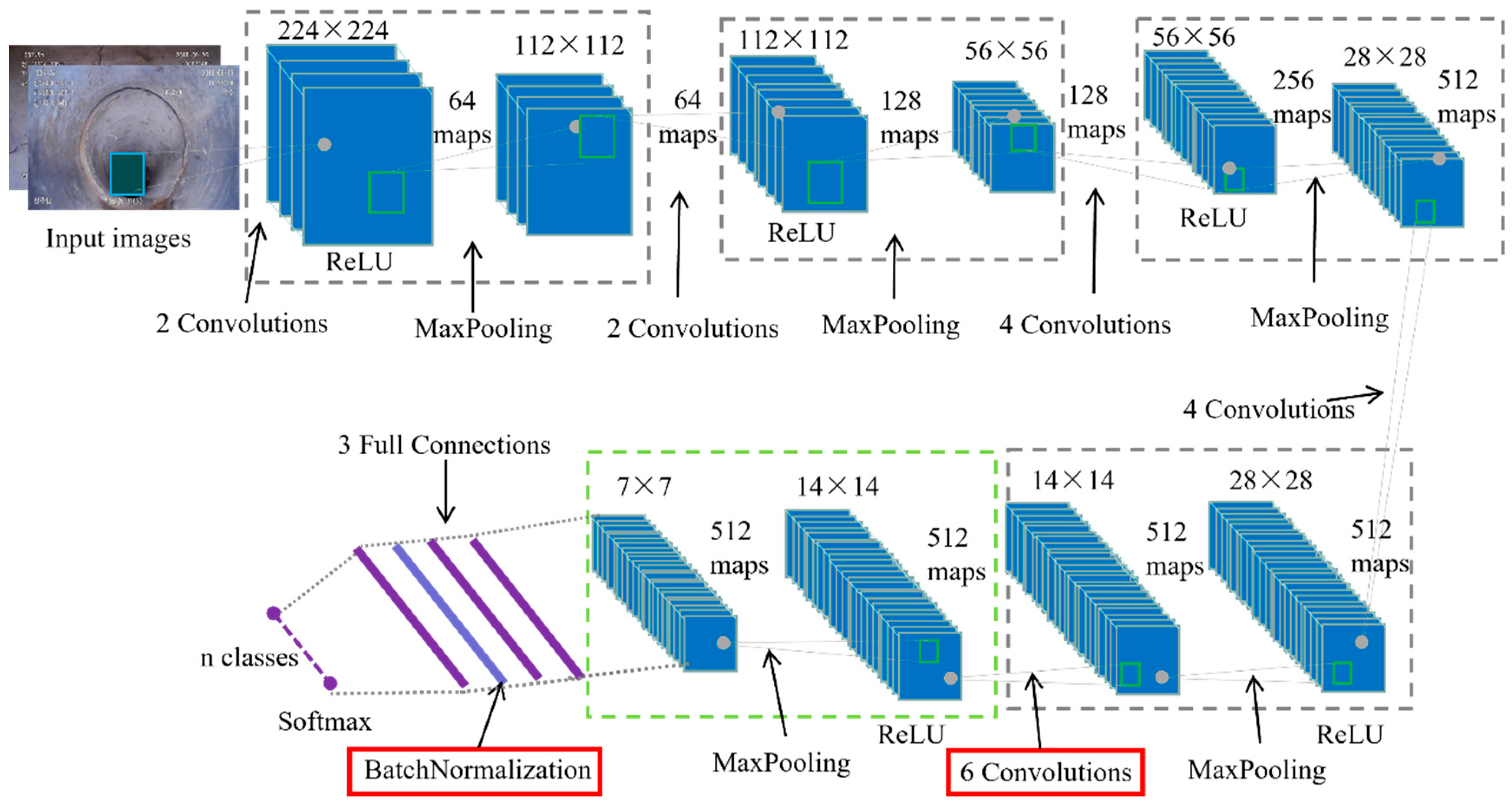

3.1.2. Convolutional Neural Networks (CNNs)

| Year | Methodology | Advantage | Disadvantage | Ref. |
|---|---|---|---|---|
| 2000 | Back-propagation algorithm | Performs well for classification | Slow learning speed | [54] |
| 2002 | Neuro-fuzzy algorithm | Good classification efficiency | Weak feature extraction scheme | [55] |
| 2006 | Neuro-fuzzy classifier | ● Combines neural network and fuzzy logic concepts ● Screens data before network training to improve efficiency | Not an end-to-end model | [56] |
| 2009 | Rule-based classifier | Recognizes defects under realistic sewer conditions | No real-time recognition | [57] |
| 2009 | Rule-based classifier | Addresses realistic defect detection and recognition | Unsatisfactory classification result | [58] |
| 2009 | Radial basis network (RBN) | Overall classification accuracy is high | Heavily relies on the pre-engineered results | [59] |
| 2012 | Self-organizing map (SOM) | Suitable for large-scale real applications | High computational complexity | [60] |
| 2013 | Ensemble classifiers | ● High practicability ● Reliable classification result | Feature extraction and classification are separately implemented | [61] |
| 2016 | Random forests | Dramatically reduces the processing time | Processing speed can be improved | [62] |
| 2017 | Random forest classifier | Automated fault classification | Poor performance | [63] |
| 2017 | Hidden Markov model (HMM) | ● Efficient for numerous patterns of defects ● Real time | Low classification accuracy | [64] |
| 2018 | One-class SVM (OCSVM) | Available for both still images and video sequences | Cannot achieve a standard performance | [65] |
| 2018 | Multi-class random forest | Real-time prediction | Poor classification accuracy | [66] |
| 2018 | Multiple binary CNNs | ● Good generalization capability ● Can be easily re-trained | ● Does not support sub-defect classification ● Cannot localize defects in the pipeline | [48] |
| 2018 | CNN | ● High detection accuracy ● Strong scene adaptability in realistic scenes | Poor performance for the unnoticeable defects | [67] |
| 2018 | HMM and CNN | Automatic defect detection and classification in videos | Poor performance | [68] |
| 2019 | Single CNN | ● Outperforms the SOTA ● Allows multi-label classification | Weak performance for fully automatic classification | [49] |
| 2019 | Two-level hierarchical CNNs | Can classify sewer images into different classes | Cannot classify multiple defects in the same image simultaneously | [69] |
| 2019 | Deep CNN | ● Classifies defects at different levels ● Performs well in classifying most classes | Suffers from an extremely imbalanced data problem (IDP) | [70] |
| 2019 | CNN | Accurate recognition and localization for each defect | Classifies only one defect with the highest probability in an image | [71] |
| 2019 | SVM | Reveals the relationship between training data and accuracy | Requires various steps for feature extraction | [41] |
| 2020 | SVM | ● Classifies cracks at a sub-category level ● High recall and fast processing speed | Limited to only three crack patterns | [11] |
| 2020 | CNN | ● Image pre-processing used for noise removal and image enhancement ● High classification accuracy | Can classify one defect only | [72] |
| 2020 | CNN | Shows great ability for defect classification under various conditions | Limited in recognizing tiny and narrow cracks | [73] |
| 2021 | CNN | Is robust against the IDP and noisy factors in sewer images | No multi-label classification | [52] |
| 2021 | CNN | Covers defect classification, detection, and segmentation | Weak classification results | [74] |

3.2. Defect Detection

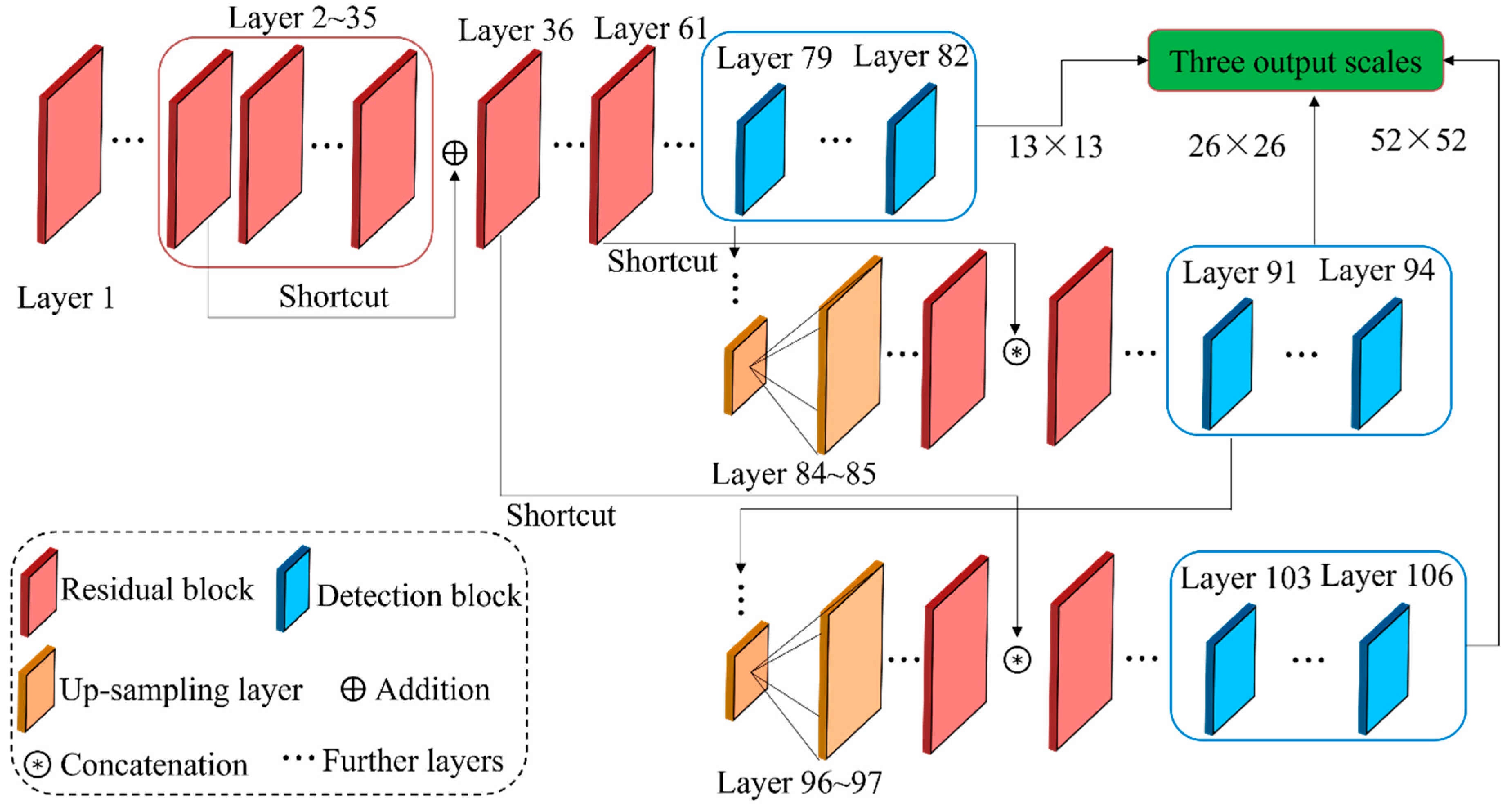

3.2.1. You Only Look Once (YOLO)

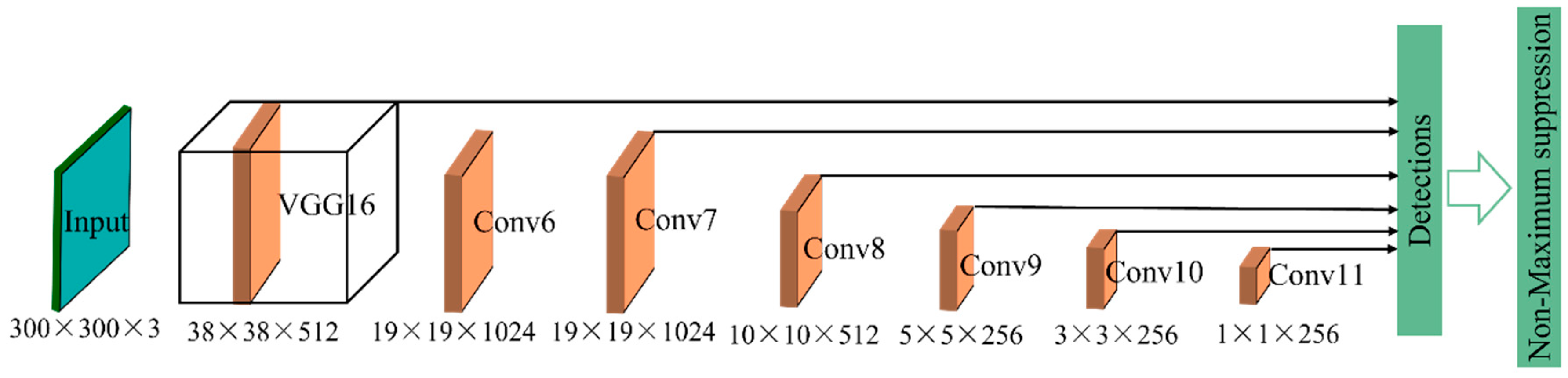

3.2.2. Single Shot Multibox Detector (SSD)

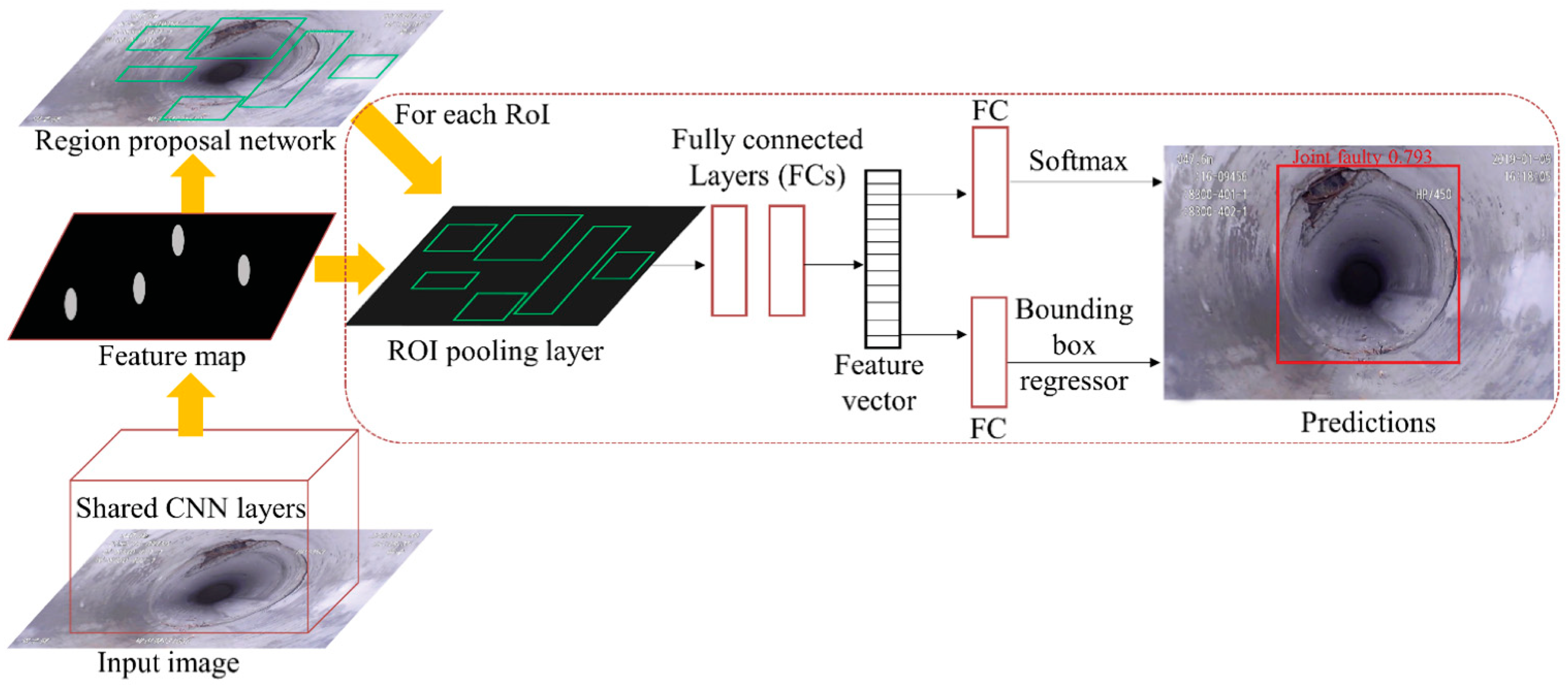

3.2.3. Faster Region-Based CNN (Faster R-CNN)

| Year | Methodology | Advantage | Disadvantage | Ref. |
|---|---|---|---|---|
| 2004 | Genetic algorithm (GA) and CNN | High average detection rate | Can only detect one type of defect | [100] |
| 2014 | Histograms of oriented gradients (HOG) and SVM | Viable and robust algorithm | Complicated image processing steps before detecting defective regions | [101] |
| 2018 | Faster R-CNN | ● Explores the influence of several factors on model performance ● Provides references for applying DL in autonomous construction | Limited to still images | [98] |
| 2018 | Faster R-CNN | Addresses similar object detection problems in industry | Long training time and slow detection speed | [102] |
| 2018 | Rule-based detection algorithm | ● Based on image processing techniques ● No training process needed ● Requires fewer images | Low detection performance | [103] |
| 2019 | YOLO | End-to-end detection workflow | Cannot detect defects at the sub-class level | [104] |
| 2019 | YOLOv3 | ● High detection rate ● Real-time defect detection ● Efficient input data manipulation process | Weak function of output frames | [9] |
| 2019 | SSD, YOLOv3, and Faster R-CNN | Automatic detection for the operational defects | Cannot detect the structural defects | [105] |
| 2019 | Rule-based detection algorithm | Performs well on low-resolution images | Requires multiple digital image processing steps | [106] |
| 2019 | Kernel-based detector | Promising and reliable results for anomaly detection | Cannot get the true position inside pipelines | [107] |
| 2019 | CNN and YOLO | Obtained a considerable reduction in processing time | Can detect only one type of structural defect | [108] |
| 2020 | Faster R-CNN | Can assess the defect severity as well as the pipe condition | Cannot run in real time | [97] |
| 2020 | Faster R-CNN | ● Can obtain the number of defects ● First work for sewer defect tracking | Requires training two models separately, not an end-to-end framework | [99] |
| 2020 | SSD, YOLOv3, and Faster R-CNN | Automated defect detection | Structural defect detection and severity classification are not available | [105] |
| 2021 | YOLOv3 | ● Covers defect detection, video interpretation, and text recognition ● Detects defects in real time | The ground truths (GTs) are not convincing | [94] |
| 2021 | CNN and non-overlapping windows | Outperformed existing models in terms of detection accuracy | Deeper CNN model with better performance requires longer inference time | [109] |
| 2021 | Strengthened region proposal network (SRPN) | ● Effectively locates defects ● Accurately assesses the defect grade | ● Cannot be applied for online processing ● Cannot identify if the defect is mirrored | [110] |
| 2021 | YOLOv2 | Covers defect classification, detection, and segmentation | Weak detection results | [74] |
| 2022 | Transformer-based defect detection (DefectTR) | ● Does not require prior knowledge ● Can generalize well with limited parameters | The robustness and efficiency can be improved for real-world applications | [111] |

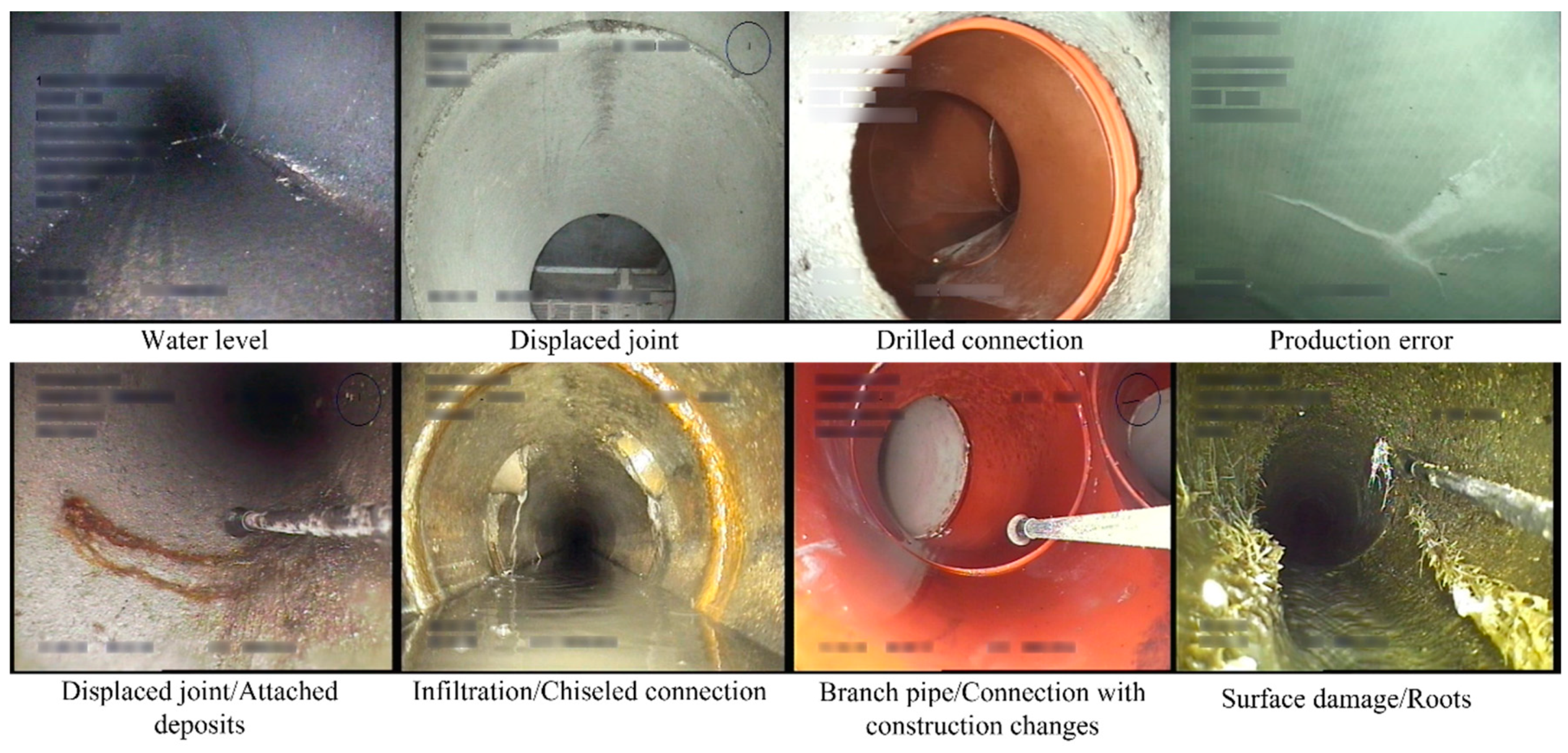
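
The detectors compared above (YOLO, SSD, and Faster R-CNN) typically emit many overlapping candidate boxes per defect, which are pruned by non-maximum suppression (NMS). The following is a minimal sketch of greedy NMS, not the implementation used by any of the surveyed works. The `(x1, y1, x2, y2)` corner format, the function names, and the 0.5 threshold are illustrative assumptions.

```python
from typing import List, Tuple

# Assumed box format (hypothetical): corner coordinates (x1, y1, x2, y2).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: List[Box], scores: List[float],
               iou_threshold: float = 0.5) -> List[int]:
    """Return indices of the boxes kept by greedy NMS, highest score first."""
    # Visit candidates from the most to the least confident.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    candidates = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    confidences = [0.9, 0.8, 0.7]
    print(greedy_nms(candidates, confidences))
```

In this toy input the second box overlaps the first with an IoU of 81/119 (about 0.68), so it is suppressed, while the third box is disjoint and kept. The quadratic loop is acceptable for a sketch; production detectors use vectorized or GPU versions.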

3.3. Defect Segmentation

3.3.1. Morphology Segmentation

3.3.2. Semantic Segmentation

| Time | Methodology | Advantage | Disadvantage | Ref. |

|---|---|---|---|---|

| 2005 | Mathematical morphology-based Segmentation | ● Automated segmentation based on geometry image modeling ● Perform well under various environments | ● Can only segment cracks ● Complicated and multiple steps | [123] |

| 2014 | Mathematical morphology-based Segmentation | Requires less data and computing resources to achieve a decent performance | ● Challenging to detect cracks ● Various processing steps | [113] |

| 2019 | DL-based semantic segmentation (DilaSeg-CRF) | ● End-to-end trainable model ● Fair inference speed | Long training time | [116] |

| 2020 | DilaSeg-CRF | ● Promising segmentation accuracy ● The defect severity grade is presented | Complicated workflow | [23] |

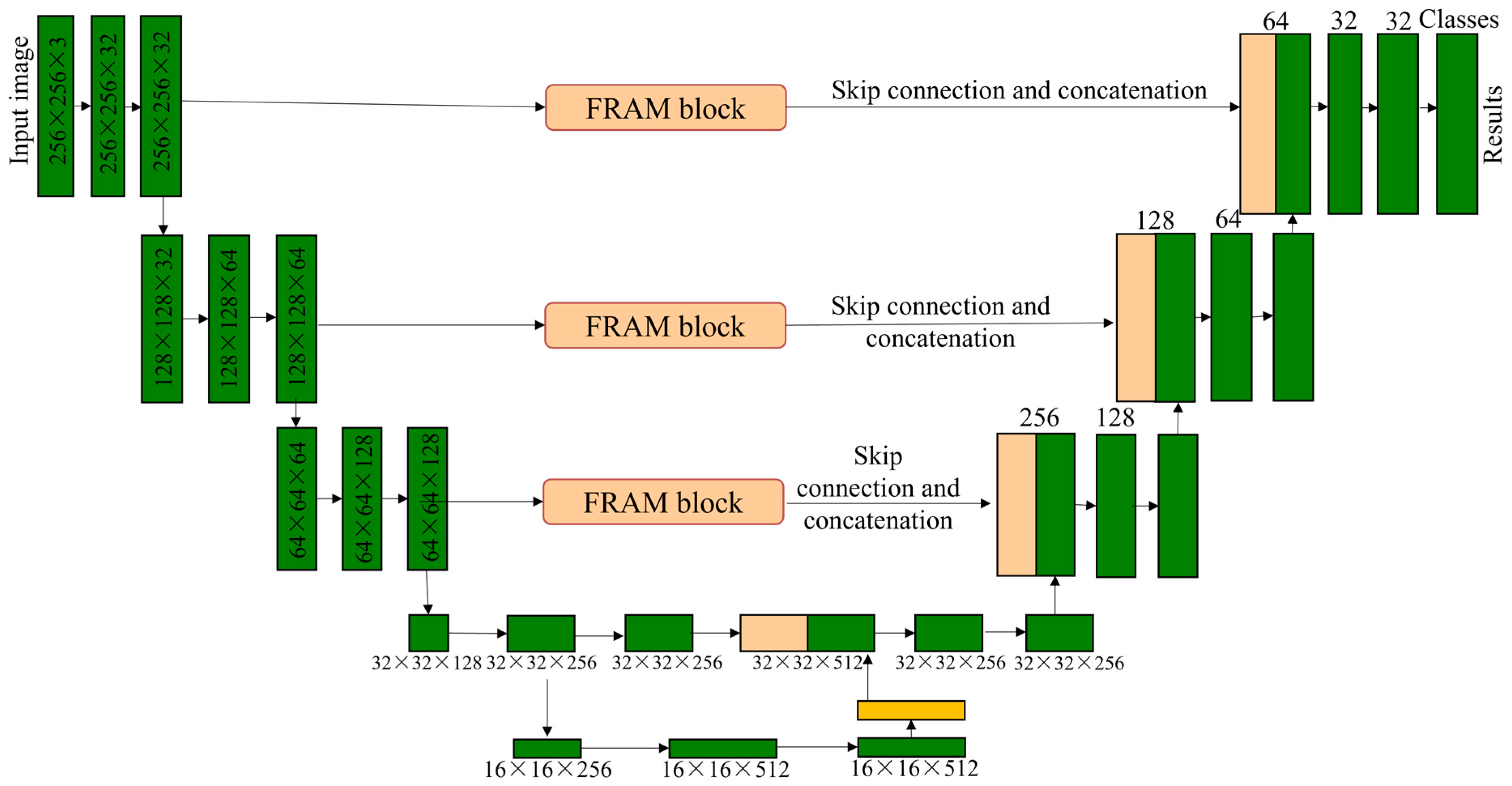

| 2020 | DL-based semantic segmentation (PipeUNet) | ● Enhances the feature extraction capability ● Resolves semantic feature differences ● Solves the IDP | Still exists negative segmentation results | [122] |

| 2021 | Feature pyramid networks (FPN) and CNN | Covers defect classification, detection, and segmentation | Weak segmentation results | [74] |

| 2022 | DL-based defect segmentation (Pipe-SOLO) | ● Can segment defect at the instance level ● Is robust against various noises from natural scenes | Only suitable for still sewer images | [124] |

4. Dataset and Evaluation Metric

4.1. Dataset

4.1.1. Dataset Collection

4.1.2. Benchmarked Dataset

4.2. Evaluation Metric
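
As a minimal sketch, assuming the usual confusion-matrix metrics (precision, recall, F1-score, and accuracy) are the ones of interest for binary defect classification, they can be computed from raw counts as follows. The function name and the example counts are hypothetical and are not taken from any surveyed work.

```python
from typing import Dict


def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Compute precision, recall, F1-score, and accuracy from confusion counts.

    tp/fp/fn/tn are true positives, false positives, false negatives, and
    true negatives. A metric with an empty denominator is reported as 0.0.
    """
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    accuracy = (tp + tn) / total if total else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": accuracy,
    }


if __name__ == "__main__":
    # Hypothetical imbalanced test set: 10 defective and 90 normal images.
    for name, value in classification_metrics(tp=8, fp=2, fn=2, tn=88).items():
        print(f"{name}: {value:.2f}")
```

With these illustrative counts, accuracy is 0.96 while precision and recall are both 0.80. This gap is one reason accuracy alone can be misleading under the imbalanced data problem (IDP) noted in the tables above.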

5. Challenges and Future Work

5.1. Data Analysis

5.2. Model Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- The 2019 Canadian Infrastructure Report Card (CIRC). 2019. Available online: http://canadianinfrastructure.ca/downloads/canadian-infrastructure-report-card-2019.pdf (accessed on 20 February 2022).

- Tscheikner-Gratl, F.; Caradot, N.; Cherqui, F.; Leitão, J.P.; Ahmadi, M.; Langeveld, J.G.; Le Gat, Y.; Scholten, L.; Roghani, B.; Rodríguez, J.P.; et al. Sewer asset management–state of the art and research needs. Urban Water J. 2019, 16, 662–675.

- 2021 Report Card for America’s Infrastructure 2021 Wastewater. Available online: https://infrastructurereportcard.org/wp-content/uploads/2020/12/Wastewater-2021.pdf (accessed on 20 February 2022).

- Spencer, B.F., Jr.; Hoskere, V.; Narazaki, Y. Advances in computer vision-based civil infrastructure inspection and monitoring. Engineering 2019, 5, 199–222.

- Duran, O.; Althoefer, K.; Seneviratne, L.D. State of the art in sensor technologies for sewer inspection. IEEE Sens. J. 2002, 2, 73–81.

- 2021 Global Green Growth Institute. 2021. Available online: http://gggi.org/site/assets/uploads/2019/01/Wastewater-System-Operation-and-Maintenance-Guideline-1.pdf (accessed on 20 February 2022).

- Haurum, J.B.; Moeslund, T.B. A Survey on image-based automation of CCTV and SSET sewer inspections. Autom. Constr. 2020, 111, 103061.

- Mostafa, K.; Hegazy, T. Review of image-based analysis and applications in construction. Autom. Constr. 2021, 122, 103516.

- Yin, X.; Chen, Y.; Bouferguene, A.; Zaman, H.; Al-Hussein, M.; Kurach, L. A deep learning-based framework for an automated defect detection system for sewer pipes. Autom. Constr. 2020, 109, 102967.

- Czimmermann, T.; Ciuti, G.; Milazzo, M.; Chiurazzi, M.; Roccella, S.; Oddo, C.M.; Dario, P. Visual-based defect detection and classification approaches for industrial applications—A survey. Sensors 2020, 20, 1459.

- Zuo, X.; Dai, B.; Shan, Y.; Shen, J.; Hu, C.; Huang, S. Classifying cracks at sub-class level in closed circuit television sewer inspection videos. Autom. Constr. 2020, 118, 103289.

- Dang, L.M.; Hassan, S.I.; Im, S.; Mehmood, I.; Moon, H. Utilizing text recognition for the defects extraction in sewers CCTV inspection videos. Comput. Ind. 2018, 99, 96–109.

- Li, C.; Lan, H.-Q.; Sun, Y.-N.; Wang, J.-Q. Detection algorithm of defects on polyethylene gas pipe using image recognition. Int. J. Press. Vessel. Pip. 2021, 191, 104381.

- Sinha, S.K.; Fieguth, P.W. Segmentation of buried concrete pipe images. Autom. Constr. 2006, 15, 47–57.

- Yang, M.-D.; Su, T.-C. Automated diagnosis of sewer pipe defects based on machine learning approaches. Expert Syst. Appl. 2008, 35, 1327–1337.

- McKim, R.A.; Sinha, S.K. Condition assessment of underground sewer pipes using a modified digital image processing paradigm. Tunn. Undergr. Space Technol. 1999, 14, 29–37.

- Dirksen, J.; Clemens, F.; Korving, H.; Cherqui, F.; Le Gauffre, P.; Ertl, T.; Plihal, H.; Müller, K.; Snaterse, C. The consistency of visual sewer inspection data. Struct. Infrastruct. Eng. 2013, 9, 214–228.

- van der Steen, A.J.; Dirksen, J.; Clemens, F.H. Visual sewer inspection: Detail of coding system versus data quality? Struct. Infrastruct. Eng. 2014, 10, 1385–1393.

- Hsieh, Y.-A.; Tsai, Y.J. Machine learning for crack detection: Review and model performance comparison. J. Comput. Civ. Eng. 2020, 34, 04020038.

- Hawari, A.; Alkadour, F.; Elmasry, M.; Zayed, T. A state of the art review on condition assessment models developed for sewer pipelines. Eng. Appl. Artif. Intell. 2020, 93, 103721.

- Yang, X.; Li, H.; Yu, Y.; Luo, X.; Huang, T.; Yang, X. Automatic pixel-level crack detection and measurement using fully convolutional network. Comput.-Aided Civ. Infrastruct. Eng. 2018, 33, 1090–1109.

- Li, G.; Ma, B.; He, S.; Ren, X.; Liu, Q. Automatic tunnel crack detection based on U-Net and a convolutional neural network with alternately updated clique. Sensors 2020, 20, 717.

- Wang, M.; Luo, H.; Cheng, J.C. Severity Assessment of Sewer Pipe Defects in Closed-Circuit Television (CCTV) Images Using Computer Vision Techniques. In Proceedings of the Construction Research Congress 2020: Infrastructure Systems and Sustainability, Tempe, AZ, USA, 8–10 March 2020; American Society of Civil Engineers: Reston, VA, USA; pp. 942–950.

- Moradi, S.; Zayed, T.; Golkhoo, F. Review on computer aided sewer pipeline defect detection and condition assessment. Infrastructures 2019, 4, 10.

- Yang, M.-D.; Su, T.-C.; Pan, N.-F.; Yang, Y.-F. Systematic image quality assessment for sewer inspection. Expert Syst. Appl. 2011, 38, 1766–1776.

- Henriksen, K.S.; Lynge, M.S.; Jeppesen, M.D.; Allahham, M.M.; Nikolov, I.A.; Haurum, J.B.; Moeslund, T.B. Generating synthetic point clouds of sewer networks: An initial investigation. In Proceedings of the International Conference on Augmented Reality, Virtual Reality and Computer Graphics, Lecce, Italy, 7–10 September 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 364–373.

- Alejo, D.; Caballero, F.; Merino, L. RGBD-based robot localization in sewer networks. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 4070–4076.

- Lepot, M.; Stanić, N.; Clemens, F.H. A technology for sewer pipe inspection (Part 2): Experimental assessment of a new laser profiler for sewer defect detection and quantification. Autom. Constr. 2017, 73, 1–11.

- Duran, O.; Althoefer, K.; Seneviratne, L.D. Automated pipe defect detection and categorization using camera/laser-based profiler and artificial neural network. IEEE Trans. Autom. Sci. Eng. 2007, 4, 118–126.

- Khan, M.S.; Patil, R. Acoustic characterization of PVC sewer pipes for crack detection using frequency domain analysis. In Proceedings of the 2018 IEEE International Smart Cities Conference (ISC2), Kansas City, MO, USA, 16–19 September 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–5.

- Iyer, S.; Sinha, S.K.; Pedrick, M.K.; Tittmann, B.R. Evaluation of ultrasonic inspection and imaging systems for concrete pipes. Autom. Constr. 2012, 22, 149–164.

- Li, Y.; Wang, H.; Dang, L.M.; Sadeghi-Niaraki, A.; Moon, H. Crop pest recognition in natural scenes using convolutional neural networks. Comput. Electron. Agric. 2020, 169, 105174.

- Wang, H.; Li, Y.; Dang, L.M.; Ko, J.; Han, D.; Moon, H. Smartphone-based bulky waste classification using convolutional neural networks. Multimed. Tools Appl. 2020, 79, 29411–29431.

- Hassan, S.I.; Dang, L.-M.; Im, S.-H.; Min, K.-B.; Nam, J.-Y.; Moon, H.-J. Damage Detection and Classification System for Sewer Inspection using Convolutional Neural Networks based on Deep Learning. J. Korea Inst. Inf. Commun. Eng. 2018, 22, 451–457.

- Scholkopf, B. Support vector machines: A practical consequence of learning theory. IEEE Intell. Syst. 1998, 13, 4.

- Li, X.; Wang, L.; Sung, E. A study of AdaBoost with SVM based weak learners. In Proceedings of the 2005 IEEE International Joint Conference on Neural Networks, Montreal, QC, Canada, 31 July–4 August 2005; IEEE: Piscataway, NJ, USA, 2005; pp. 196–201.

- Han, H.; Jiang, X. Overcome support vector machine diagnosis overfitting. Cancer Inform. 2014, 13, 145–158.

- Shao, Y.-H.; Chen, W.-J.; Deng, N.-Y. Nonparallel hyperplane support vector machine for binary classification problems. Inf. Sci. 2014, 263, 22–35.

- Cervantes, J.; Garcia-Lamont, F.; Rodríguez-Mazahua, L.; Lopez, A. A comprehensive survey on support vector machine classification: Applications, challenges and trends. Neurocomputing 2020, 408, 189–215.

- Xu, Y.; Yang, J.; Zhao, S.; Wu, H.; Sawan, M. An end-to-end deep learning approach for epileptic seizure prediction. In Proceedings of the 2020 2nd IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), Genoa, Italy, 31 August–2 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 266–270.

- Ye, X.; Zuo, J.; Li, R.; Wang, Y.; Gan, L.; Yu, Z.; Hu, X. Diagnosis of sewer pipe defects on image recognition of multi-features and support vector machine in a southern Chinese city. Front. Environ. Sci. Eng. 2019, 13, 17.

- Hu, M.-K. Visual pattern recognition by moment invariants. IRE Trans. Inf. Theory 1962, 8, 179–187.

- Quiroga, J.A.; Crespo, D.; Bernabeu, E. Fourier transform method for automatic processing of moiré deflectograms. Opt. Eng. 1999, 38, 974–982.

- Tomasi, C.; Manduchi, R. Bilateral filtering for gray and color images. In Proceedings of the Sixth International Conference on Computer Vision (IEEE Cat. No. 98CH36271), Bombay, India, 7 January 1998; IEEE: Piscataway, NJ, USA, 1998; pp. 839–846.

- Gavaskar, R.G.; Chaudhury, K.N. Fast adaptive bilateral filtering. IEEE Trans. Image Process. 2018, 28, 779–790.

- Hubel, D.H.; Wiesel, T.N. Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J. Physiol. 1962, 160, 106–154.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Kumar, S.S.; Abraham, D.M.; Jahanshahi, M.R.; Iseley, T.; Starr, J. Automated defect classification in sewer closed circuit television inspections using deep convolutional neural networks. Autom. Constr. 2018, 91, 273–283.

- Meijer, D.; Scholten, L.; Clemens, F.; Knobbe, A. A defect classification methodology for sewer image sets with convolutional neural networks. Autom. Constr. 2019, 104, 281–298.

- Cheng, H.-D.; Shi, X. A simple and effective histogram equalization approach to image enhancement. Digit. Signal Process. 2004, 14, 158–170.

- Salazar-Colores, S.; Ramos-Arreguín, J.-M.; Echeverri, C.J.O.; Cabal-Yepez, E.; Pedraza-Ortega, J.-C.; Rodriguez-Resendiz, J. Image dehazing using morphological opening, dilation and Gaussian filtering. Signal Image Video Process. 2018, 12, 1329–1335.

- Dang, L.M.; Kyeong, S.; Li, Y.; Wang, H.; Nguyen, T.N.; Moon, H. Deep Learning-based Sewer Defect Classification for Highly Imbalanced Dataset. Comput. Ind. Eng. 2021, 161, 107630.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Moselhi, O.; Shehab-Eldeen, T. Classification of defects in sewer pipes using neural networks. J. Infrastruct. Syst. 2000, 6, 97–104.

- Sinha, S.K.; Karray, F. Classification of underground pipe scanned images using feature extraction and neuro-fuzzy algorithm. IEEE Trans. Neural Netw. 2002, 13, 393–401.

- Sinha, S.K.; Fieguth, P.W. Neuro-fuzzy network for the classification of buried pipe defects. Autom. Constr. 2006, 15, 73–83.

- Guo, W.; Soibelman, L.; Garrett, J., Jr. Visual pattern recognition supporting defect reporting and condition assessment of wastewater collection systems. J. Comput. Civ. Eng. 2009, 23, 160–169.

- Guo, W.; Soibelman, L.; Garrett, J., Jr. Automated defect detection for sewer pipeline inspection and condition assessment. Autom. Constr. 2009, 18, 587–596.

- Yang, M.-D.; Su, T.-C. Segmenting ideal morphologies of sewer pipe defects on CCTV images for automated diagnosis. Expert Syst. Appl. 2009, 36, 3562–3573.

- Ganegedara, H.; Alahakoon, D.; Mashford, J.; Paplinski, A.; Müller, K.; Deserno, T.M. Self organising map based region of interest labelling for automated defect identification in large sewer pipe image collections. In Proceedings of the 2012 International Joint Conference on Neural Networks (IJCNN), Brisbane, Australia, 10–15 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1–8.

- Wu, W.; Liu, Z.; He, Y. Classification of defects with ensemble methods in the automated visual inspection of sewer pipes. Pattern Anal. Appl. 2015, 18, 263–276.

- Myrans, J.; Kapelan, Z.; Everson, R. Automated detection of faults in wastewater pipes from CCTV footage by using random forests. Procedia Eng. 2016, 154, 36–41.

- Myrans, J.; Kapelan, Z.; Everson, R. Automatic detection of sewer faults using continuous CCTV footage. Computing & Control for the Water Industry. 2017. Available online: https://easychair.org/publications/open/ZQH3 (accessed on 20 February 2022).

- Moradi, S.; Zayed, T. Real-time defect detection in sewer closed circuit television inspection videos. In Pipelines 2017; ASCE: Reston, VA, USA, 2017; pp. 295–307.

- Myrans, J.; Kapelan, Z.; Everson, R. Using automatic anomaly detection to identify faults in sewers. WDSA/CCWI Joint Conference Proceedings. 2018. Available online: https://ojs.library.queensu.ca/index.php/wdsa-ccw/article/view/12030 (accessed on 20 February 2022).

- Myrans, J.; Kapelan, Z.; Everson, R. Automatic identification of sewer fault types using CCTV footage. EPiC Ser. Eng. 2018, 3, 1478–1485.

- Chen, K.; Hu, H.; Chen, C.; Chen, L.; He, C. An intelligent sewer defect detection method based on convolutional neural network. In Proceedings of the 2018 IEEE International Conference on Information and Automation (ICIA), Wuyishan, China, 11–13 August 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1301–1306.

- Moradi, S.; Zayed, T.; Golkhoo, F. Automated sewer pipeline inspection using computer vision techniques. In Pipelines 2018: Condition Assessment, Construction, and Rehabilitation; American Society of Civil Engineers: Reston, VA, USA, 2018; pp. 582–587.

- Xie, Q.; Li, D.; Xu, J.; Yu, Z.; Wang, J. Automatic detection and classification of sewer defects via hierarchical deep learning. IEEE Trans. Autom. Sci. Eng. 2019, 16, 1836–1847.

- Li, D.; Cong, A.; Guo, S. Sewer damage detection from imbalanced CCTV inspection data using deep convolutional neural networks with hierarchical classification. Autom. Constr. 2019, 101, 199–208.

- Hassan, S.I.; Dang, L.M.; Mehmood, I.; Im, S.; Choi, C.; Kang, J.; Park, Y.-S.; Moon, H. Underground sewer pipe condition assessment based on convolutional neural networks. Autom. Constr. 2019, 106, 102849.

- Khan, S.M.; Haider, S.A.; Unwala, I. A Deep Learning Based Classifier for Crack Detection with Robots in Underground Pipes. In Proceedings of the 2020 IEEE 17th International Conference on Smart Communities: Improving Quality of Life Using ICT, IoT and AI (HONET), Charlotte, NC, USA, 14–16 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 78–81.

- Chow, J.K.; Su, Z.; Wu, J.; Li, Z.; Tan, P.S.; Liu, K.-f.; Mao, X.; Wang, Y.-H. Artificial intelligence-empowered pipeline for image-based inspection of concrete structures. Autom. Constr. 2020, 120, 103372.

- Klusek, M.; Szydlo, T. Supporting the Process of Sewer Pipes Inspection Using Machine Learning on Embedded Devices. In Proceedings of the International Conference on Computational Science, Krakow, Poland, 16–18 June 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 347–360.

- Sumalee, A.; Ho, H.W. Smarter and more connected: Future intelligent transportation system. IATSS Res. 2018, 42, 67–71.

- Veres, M.; Moussa, M. Deep learning for intelligent transportation systems: A survey of emerging trends. IEEE Trans. Intell. Transp. Syst. 2019, 21, 3152–3168.

- Li, Y.; Wang, H.; Dang, L.M.; Nguyen, T.N.; Han, D.; Lee, A.; Jang, I.; Moon, H. A deep learning-based hybrid framework for object detection and recognition in autonomous driving. IEEE Access 2020, 8, 194228–194239.

- Yahata, S.; Onishi, T.; Yamaguchi, K.; Ozawa, S.; Kitazono, J.; Ohkawa, T.; Yoshida, T.; Murakami, N.; Tsuji, H. A hybrid machine learning approach to automatic plant phenotyping for smart agriculture. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1787–1793.

- Boukhris, L.; Abderrazak, J.B.; Besbes, H. Tailored Deep Learning based Architecture for Smart Agriculture. In Proceedings of the 2020 International Wireless Communications and Mobile Computing (IWCMC), Limassol, Cyprus, 15–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 964–969.

- Wu, D.; Lv, S.; Jiang, M.; Song, H. Using channel pruning-based YOLO v4 deep learning algorithm for the real-time and accurate detection of apple flowers in natural environments. Comput. Electron. Agric. 2020, 178, 105742.

- Melenbrink, N.; Rinderspacher, K.; Menges, A.; Werfel, J. Autonomous anchoring for robotic construction. Autom. Constr. 2020, 120, 103391.

- Lee, D.; Kim, M. Autonomous construction hoist system based on deep reinforcement learning in high-rise building construction. Autom. Constr. 2021, 128, 103737.

- Tan, Y.; Cai, R.; Li, J.; Chen, P.; Wang, M. Automatic detection of sewer defects based on improved you only look once algorithm. Autom. Constr. 2021, 131, 103912.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Law, H.; Deng, J. CornerNet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object detection via region-based fully convolutional networks. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 379–387.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Won, J.-H.; Lee, D.-H.; Lee, K.-M.; Lin, C.-H. An improved YOLOv3-based neural network for de-identification technology. In Proceedings of the 2019 34th International Technical Conference on Circuits/Systems, Computers and Communications (ITC-CSCC), Jeju, Korea, 23–26 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–2.

- Zhao, L.; Li, S. Object detection algorithm based on improved YOLOv3. Electronics 2020, 9, 537.

- Yin, X.; Ma, T.; Bouferguene, A.; Al-Hussein, M. Automation for sewer pipe assessment: CCTV video interpretation algorithm and sewer pipe video assessment (SPVA) system development. Autom. Constr. 2021, 125, 103622.

- Murugan, P. Feed forward and backward run in deep convolution neural network. arXiv 2017, arXiv:1711.03278.

- Neubeck, A.; Van Gool, L. Efficient non-maximum suppression. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; IEEE: Piscataway, NJ, USA, 2006; pp. 850–855.

- Wang, M.; Luo, H.; Cheng, J.C. Towards an automated condition assessment framework of underground sewer pipes based on closed-circuit television (CCTV) images. Tunn. Undergr. Space Technol. 2021, 110, 103840.

- Cheng, J.C.; Wang, M. Automated detection of sewer pipe defects in closed-circuit television images using deep learning techniques. Autom. Constr. 2018, 95, 155–171.

- Wang, M.; Kumar, S.S.; Cheng, J.C. Automated sewer pipe defect tracking in CCTV videos based on defect detection and metric learning. Autom. Constr. 2021, 121, 103438.

- Oullette, R.; Browne, M.; Hirasawa, K. Genetic algorithm optimization of a convolutional neural network for autonomous crack detection. In Proceedings of the 2004 Congress on Evolutionary Computation (IEEE Cat. No. 04TH8753), Portland, OR, USA, 19–23 June 2004; IEEE: Piscataway, NJ, USA, 2004; pp. 516–521.

- Halfawy, M.R.; Hengmeechai, J. Automated defect detection in sewer closed circuit television images using histograms of oriented gradients and support vector machine. Autom. Constr. 2014, 38, 1–13.

- Wang, M.; Cheng, J.C. Development and improvement of deep learning based automated defect detection for sewer pipe inspection using faster R-CNN. In Workshop of the European Group for Intelligent Computing in Engineering; Springer: Berlin/Heidelberg, Germany, 2018; pp. 171–192.

- Hawari, A.; Alamin, M.; Alkadour, F.; Elmasry, M.; Zayed, T. Automated defect detection tool for closed circuit television (CCTV) inspected sewer pipelines. Autom. Constr. 2018, 89, 99–109.

- Yin, X.; Chen, Y.; Zhang, Q.; Bouferguene, A.; Zaman, H.; Al-Hussein, M.; Russell, R.; Kurach, L. A neural network-based application for automated defect detection for sewer pipes. In Proceedings of the 2019 Canadian Society for Civil Engineering Annual Conference, CSCE 2019, Montreal, QC, Canada, 12–15 June 2019.

- Kumar, S.S.; Wang, M.; Abraham, D.M.; Jahanshahi, M.R.; Iseley, T.; Cheng, J.C. Deep learning–based automated detection of sewer defects in CCTV videos. J. Comput. Civ. Eng. 2020, 34, 04019047.

- Heo, G.; Jeon, J.; Son, B. Crack automatic detection of CCTV video of sewer inspection with low resolution. KSCE J. Civ. Eng. 2019, 23, 1219–1227.

- Piciarelli, C.; Avola, D.; Pannone, D.; Foresti, G.L. A vision-based system for internal pipeline inspection. IEEE Trans. Ind. Inform. 2018, 15, 3289–3299.

- Kumar, S.S.; Abraham, D.M. A deep learning based automated structural defect detection system for sewer pipelines. In Computing in Civil Engineering 2019: Smart Cities, Sustainability, and Resilience; American Society of Civil Engineers: Reston, VA, USA, 2019; pp. 226–233.

- Rao, A.S.; Nguyen, T.; Palaniswami, M.; Ngo, T. Vision-based automated crack detection using convolutional neural networks for condition assessment of infrastructure. Struct. Health Monit. 2021, 20, 2124–2142.

- Li, D.; Xie, Q.; Yu, Z.; Wu, Q.; Zhou, J.; Wang, J. Sewer pipe defect detection via deep learning with local and global feature fusion. Autom. Constr. 2021, 129, 103823.

- Dang, L.M.; Wang, H.; Li, Y.; Nguyen, T.N.; Moon, H. DefectTR: End-to-end defect detection for sewage networks using a transformer. Constr. Build. Mater. 2022, 325, 126584.

- Su, T.-C.; Yang, M.-D.; Wu, T.-C.; Lin, J.-Y. Morphological segmentation based on edge detection for sewer pipe defects on CCTV images. Expert Syst. Appl. 2011, 38, 13094–13114.

- Su, T.-C.; Yang, M.-D. Application of morphological segmentation to leaking defect detection in sewer pipelines. Sensors 2014, 14, 8686–8704.

- Wang, M.; Cheng, J. Semantic segmentation of sewer pipe defects using deep dilated convolutional neural network. In Proceedings of the International Symposium on Automation and Robotics in Construction, Banff, AB, Canada, 21–24 May 2019; IAARC Publications. 2019; pp. 586–594. Available online: https://www.proquest.com/openview/9641dc9ed4c7e5712f417dcb2b380f20/1 (accessed on 20 February 2022).

- Zhang, L.; Wang, L.; Zhang, X.; Shen, P.; Bennamoun, M.; Zhu, G.; Shah, S.A.A.; Song, J. Semantic scene completion with dense CRF from a single depth image. Neurocomputing 2018, 318, 182–195.

- Wang, M.; Cheng, J.C. A unified convolutional neural network integrated with conditional random field for pipe defect segmentation. Comput.-Aided Civ. Infrastruct. Eng. 2020, 35, 162–177.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Fayyaz, M.; Saffar, M.H.; Sabokrou, M.; Fathy, M.; Klette, R.; Huang, F. STFCN: Spatio-temporal FCN for semantic video segmentation. arXiv 2016, arXiv:1608.05971.

- Bi, L.; Feng, D.; Kim, J. Dual-path adversarial learning for fully convolutional network (FCN)-based medical image segmentation. Vis. Comput. 2018, 34, 1043–1052.

- Lu, Y.; Chen, Y.; Zhao, D.; Chen, J. Graph-FCN for image semantic segmentation. In Proceedings of the International Symposium on Neural Networks, Moscow, Russia, 10–12 July 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 97–105.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Pan, G.; Zheng, Y.; Guo, S.; Lv, Y. Automatic sewer pipe defect semantic segmentation based on improved U-Net. Autom. Constr. 2020, 119, 103383.

- Iyer, S.; Sinha, S.K. A robust approach for automatic detection and segmentation of cracks in underground pipeline images. Image Vis. Comput. 2005, 23, 921–933.

- Li, Y.; Wang, H.; Dang, L.M.; Piran, M.J.; Moon, H. A Robust Instance Segmentation Framework for Underground Sewer Defect Detection. Measurement 2022, 190, 110727.

- Haurum, J.B.; Moeslund, T.B. Sewer-ML: A Multi-Label Sewer Defect Classification Dataset and Benchmark. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 13456–13467.

- Mashford, J.; Rahilly, M.; Davis, P.; Burn, S. A morphological approach to pipe image interpretation based on segmentation by support vector machine. Autom. Constr. 2010, 19, 875–883.

- Iyer, S.; Sinha, S.K.; Tittmann, B.R.; Pedrick, M.K. Ultrasonic signal processing methods for detection of defects in concrete pipes. Autom. Constr. 2012, 22, 135–148.

- Lei, Y.; Zheng, Z.-P. Review of physical based monitoring techniques for condition assessment of corrosion in reinforced concrete. Math. Probl. Eng. 2013, 2013, 953930.

- Makar, J. Diagnostic techniques for sewer systems. J. Infrastruct. Syst. 1999, 5, 69–78.

- Myrans, J.; Everson, R.; Kapelan, Z. Automated detection of fault types in CCTV sewer surveys. J. Hydroinform. 2019, 21, 153–163.

- Guo, W.; Soibelman, L.; Garrett, J.H. Imagery enhancement and interpretation for remote visual inspection of aging civil infrastructure. Tsinghua Sci. Technol. 2008, 13, 375–380.

| ID | Ref. | Time | Defect Inspection | Condition Assessment | Contributions |

|---|---|---|---|---|---|

| 1 | [24] | 2019 | √ | √ | |

| 2 | [10] | 2020 | √ | × | |

| 3 | [7] | 2020 | √ | × | |

| 4 | [20] | 2020 | × | √ | |

| 5 | [8] | 2021 | √ | √ | |

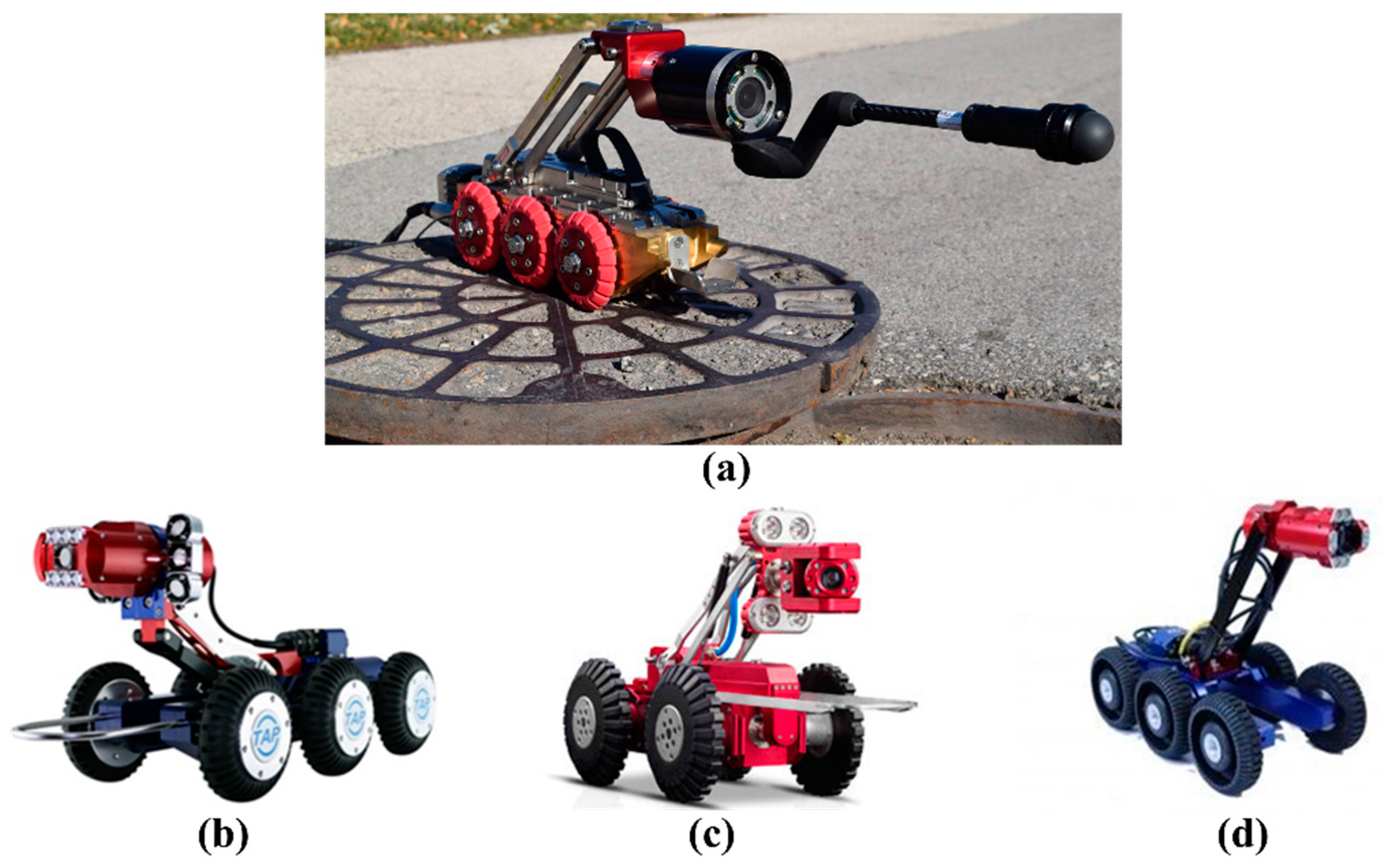

| Name | Company | Pipe Diameter | Camera Feature | Country | Strong Point |

|---|---|---|---|---|---|

| CAM160 (https://goolnk.com/YrYQob accessed on 20 February 2022) | Sewer Robotics | 200–500 mm | NA | USA | ● Auto horizon adjustment ● Intensity adjustable LED lighting ● Multifunctional |

| LETS 6.0 (https://ariesindustries.com/products/ accessed on 20 February 2022) | ARIES INDUSTRIES | 150 mm or larger | Self-leveling lateral camera or a pan-and-tilt camera | USA | ● Slim tractor profile ● Superior lateral camera ● Simultaneously acquire mainline and lateral videos |

| wolverine® 2.0 | ARIES INDUSTRIES | 150–450 mm | NA | USA | ● Powerful crawler to maneuver obstacles ● Minimum setup time ● Camera with lens cleaning technique |

| X5-HS (https://goolnk.com/Rym02W accessed on 20 February 2022) | EASY-SIGHT | 300–3000 mm | ≥2 million pixels | China | ● High-definition ● Freely choose wireless and wired connection and control ● Display and save videos in real time |

| Robocam 6 (https://goolnk.com/43pdGA accessed on 20 February 2022) | TAP Electronics | 600 mm or more | Sony 1.3-megapixel Exmor 1/3-inch CMOS | Korea | ● High-resolution ● All-in-one subtitle system |

| RoboCam Innovation | TAP Electronics | 600 mm or more | Sony 1.3-megapixel Exmor 1/3-inch CMOS | Korea | ● Best digital record performance ● Super white LED lighting ● Cableless |

| Robocam 3000 | TAP Electronics’ Japanese subsidiary | 250–3000 mm | Sony 1.3-megapixel Exmor CMOS color | Japan | ● Can be utilized in huge pipelines ● Optical 10X zoom performance |

| ID | Defect Type | Image Resolution | Equipment | Number of Images | Country | Ref. |

|---|---|---|---|---|---|---|

| 1 | Broken, crack, deposit, fracture, hole, root, tap | NA | NA | 4056 | Canada | [9] |

| 2 | Connection, crack, debris, deposit, infiltration, material change, normal, root | 1440 × 720–320 × 256 | RedZone® Solo CCTV crawler | 12,000 | USA | [48] |

| 3 | Attached deposit, defective connection, displaced joint, fissure, infiltration, ingress, intruding connection, porous, root, sealing, settled deposit, surface | 1040 × 1040 | Front-facing and back-facing camera with a 185° wide lens | 2,202,582 | The Netherlands | [49] |

| 4 | Dataset 1: defective, normal | NA | NA | 40,000 | China | [69] |

| | Dataset 2: barrier, deposit, disjunction, fracture, stagger, water | | | 15,000 | | |

| 5 | Broken, deformation, deposit, other, joint offset, normal, obstacle, water | 1435 × 1054–296 × 166 | NA | 18,333 | China | [70] |

| 6 | Attached deposits, collapse, deformation, displaced joint, infiltration, joint damage, settled deposit | NA | NA | 1045 | China | [41] |

| 7 | Circumferential crack, longitudinal crack, multiple crack | 320 × 240 | NA | 335 | USA | [11] |

| 8 | Debris, joint faulty, joint open, longitudinal, protruding, surface | NA | Robo Cam 6 with a 1/3-in. SONY Exmor CMOS camera | 48,274 | South Korea | [71] |

| 9 | Broken, crack, debris, joint faulty, joint open, normal, protruding, surface | 1280 × 720 | Robo Cam 6 with a megapixel Exmor CMOS sensor | 115,170 | South Korea | [52] |

| 10 | Crack, deposit, else, infiltration, joint, root, surface | NA | Remote cameras | 2424 | UK | [66] |

| 11 | Broken, crack, deposit, fracture, hole, root, tap | NA | NA | 1451 | Canada | [104] |

| 12 | Crack, deposit, infiltration, root | 1440 × 720–320 × 256 | RedZone® Solo CCTV crawler | 3000 | USA | [98] |

| 13 | Connection, fracture, root | 1507 × 720–720 × 576 | Front facing CCTV cameras | 3600 | USA | [99] |

| 14 | Crack, deposit, root | 928 × 576–352 × 256 | NA | 3000 | USA | [97] |

| 15 | Crack, deposit, root | 512 × 256 | NA | 1880 | USA | [116] |

| 16 | Crack, infiltration, joint, protruding | 1073 × 749–296 × 237 | NA | 1106 | China | [122] |

| 17 | Crack, non-crack | 64 × 64 | NA | 40,810 | Australia | [109] |

| 18 | Crack, normal, spalling | 4000 × 46,000–3168 × 4752 | Canon EOS. Tripods and stabilizers | 294 | China | [73] |

| 19 | Collapse, crack, root | NA | SSET system | 239 | USA | [61] |

| 20 | Clean pipe, collapsed pipe, eroded joint, eroded lateral, misaligned joint, perfect joint, perfect lateral | NA | SSET system | 500 | USA | [56] |

| 21 | Cracks, joint, reduction, spalling | 512 × 512 | CCTV or Aqua Zoom camera | 1096 | Canada | [54] |

| 22 | Defective, normal | NA | CCTV (Fisheye) | 192 | USA | [57] |

| 23 | Deposits, normal, root | 1507 × 720–720 × 576 | Front-facing CCTV cameras | 3800 | USA | [72] |

| 24 | Crack, non-crack | 240 × 320 | CCTV | 200 | South Korea | [106] |

| 25 | Faulty, normal | NA | CCTV | 8000 | UK | [65] |

| 26 | Blur, deposition, intrusion, obstacle | NA | CCTV | 12,000 | NA | [67] |

| 27 | Crack, deposit, displaced joint, ovality | NA | CCTV (Fisheye) | 32 | Qatar | [103] |

| 29 | Crack, non-crack | 320 × 240–20 × 20 | CCTV | 100 | NA | [100] |

| 30 | Barrier, deposition, distortion, fraction, inserted | 600 × 480 | CCTV and quick-view (QV) cameras | 10,000 | China | [110] |

| 31 | Fracture | NA | CCTV | 2100 | USA | [105] |

| 32 | Broken, crack, fracture, joint open | NA | CCTV | 291 | China | [59] |

| Metric | Description | Ref. |

|---|---|---|

| Precision | The proportion of true positive samples among all samples predicted as positive | [9] |

| Recall | The proportion of true positive samples among all actual positive samples | [48] |

| Accuracy | The proportion of correct predictions among all predictions | [48] |

| F1-score | Harmonic mean of precision and recall | [69] |

| FAR | False alarm rate in all prediction samples | [57] |

| True accuracy | The proportion of all predictions excluding the missed defective images among the entire actual images | [58] |

| AUROC | Area under the receiver operating characteristic (ROC) curve | [49] |

| AUPR | Area under the precision-recall curve | [49] |

| mAP | Mean average precision: the average precision over different recall values is computed for each class and then averaged over all classes | [9] |

| Detection rate | The ratio of the number of the detected defects to total number of defects | [106] |

| Error rate | The ratio of the number of mistakenly detected defects to the number of non-defects | [106] |

| PA | Pixel accuracy calculating the overall accuracy of all pixels in the image | [116] |

| mPA | The average of pixel accuracy for all categories | [116] |

| mIoU | The ratio of intersection to union between predictions and ground truths (GTs), averaged over all classes | [116] |

| fwIoU | Frequency-weighted IoU: the per-class IoU weighted by the pixel frequency of each class | [116] |
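
The classification and segmentation metrics listed above can be illustrated with a minimal sketch. The Python code below follows the commonly used definitions, which may differ in detail from those adopted in individual cited studies. The function names `binary_metrics` and `segmentation_metrics` and the example counts are hypothetical and are not taken from any surveyed paper.

```python
def binary_metrics(tp, fp, fn, tn):
    """Precision, recall, accuracy and F1-score from binary confusion counts.

    tp/fp/fn/tn are the numbers of true positives, false positives,
    false negatives and true negatives (illustrative inputs).
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    # F1-score is the harmonic mean of precision and recall.
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"precision": precision, "recall": recall,
            "accuracy": accuracy, "f1": f1}


def segmentation_metrics(conf):
    """PA, mPA, mIoU and fwIoU from a K x K pixel confusion matrix.

    conf[i][j] is the number of pixels whose ground-truth class is i and
    whose predicted class is j. Assumption: every class occurs at least
    once in the ground truth, so no denominator is zero.
    """
    k = len(conf)
    total = sum(sum(row) for row in conf)
    tp = [conf[c][c] for c in range(k)]                          # correct pixels per class
    gt = [sum(conf[c]) for c in range(k)]                        # ground-truth pixels per class
    pred = [sum(conf[i][c] for i in range(k)) for c in range(k)]  # predicted pixels per class
    iou = [tp[c] / (gt[c] + pred[c] - tp[c]) for c in range(k)]
    return {
        "PA": sum(tp) / total,                                   # overall pixel accuracy
        "mPA": sum(tp[c] / gt[c] for c in range(k)) / k,         # mean per-class accuracy
        "mIoU": sum(iou) / k,                                    # mean intersection over union
        "fwIoU": sum(gt[c] / total * iou[c] for c in range(k)),  # frequency-weighted IoU
    }


if __name__ == "__main__":
    # Hypothetical counts for a defective/normal classifier.
    print(binary_metrics(tp=8, fp=2, fn=2, tn=8))
    # Hypothetical two-class (background, defect) pixel confusion matrix.
    print(segmentation_metrics([[3, 1], [1, 5]]))
```

Working from a confusion matrix keeps all four segmentation metrics consistent with one another, since each is derived from the same per-class pixel counts.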

| ID | Number of Classes | Algorithm | Task | Performance: Accuracy (%) | Performance: Processing Speed | Ref. |

|---|---|---|---|---|---|---|

| 1 | 3 classes | Multiple binary CNNs | Classification | Accuracy: 86.2 Precision: 87.7 Recall: 90.6 | NA | [48] |

| 2 | 12 classes | Single CNN | Classification | AUROC: 87.1 AUPR: 6.8 | NA | [49] |

| 3 | Dataset 1: 2 classes | Two-level hierarchical CNNs | Classification | Accuracy: 94.5 Precision: 96.84 Recall: 92 F1-score: 94.36 | 1.109 h for 200 videos | [69] |

| | Dataset 2: 6 classes | | | Accuracy: 94.96 Precision: 85.13 Recall: 84.61 F1-score: 84.86 | | |

| 4 | 8 classes | Deep CNN | Classification | Accuracy: 64.8 | NA | [70] |

| 5 | 6 classes | CNN | Classification | Accuracy: 96.58 | NA | [71] |

| 6 | 8 classes | CNN | Classification | Accuracy: 97.6 | 0.15 s/image | [52] |

| 7 | 7 classes | Multi-class random forest | Classification | Accuracy: 71 | 25 FPS | [66] |

| 8 | 7 classes | SVM | Classification | Accuracy: 84.1 | NA | [41] |

| 9 | 3 classes | SVM | Classification | Recall: 90.3 Precision: 90.3 | 10 FPS | [11] |

| 10 | 3 classes | CNN | Classification | Accuracy: 96.7 Precision: 99.8 Recall: 93.6 F1-score: 96.6 | 15 min for 30 images | [73] |

| 11 | 3 classes | RotBoost and statistical feature vector | Classification | Accuracy: 89.96 | 1.5 s/image | [61] |

| 12 | 7 classes | Neuro-fuzzy classifier | Classification | Accuracy: 91.36 | NA | [56] |

| 13 | 4 classes | Multi-layer perceptrons | Classification | Accuracy: 98.2 | NA | [54] |

| 14 | 2 classes | Rule-based classifier | Classification | Accuracy: 87 FAR: 18 Recall: 89 | NA | [57] |

| 15 | 2 classes | OCSVM | Classification | Accuracy: 75 | NA | [65] |

| 16 | 4 classes | CNN | Classification | Recall: 88 Precision: 84 Accuracy: 85 | NA | [67] |

| 17 | 2 classes | Rule-based classifier | Classification | Accuracy: 84 FAR: 21 True accuracy: 95 | NA | [58] |

| 18 | 4 classes | RBN | Classification | Accuracy: 95 | NA | [59] |

| 19 | 7 classes | YOLOv3 | Detection | mAP: 85.37 | 33 FPS | [9] |

| 20 | 4 classes | Faster R-CNN | Detection | mAP: 83 | 9 FPS | [98] |

| 21 | 3 classes | Faster R-CNN | Detection | mAP: 77 | 110 ms/image | [99] |

| 22 | 3 classes | Faster R-CNN | Detection | Precision: 88.99 Recall: 87.96 F1-score: 88.21 | 110 ms/image | [97] |

| 23 | 2 classes | CNN | Detection | Accuracy: 96 Precision: 90 | 0.2782 s/image | [109] |

| 24 | 3 classes | Faster R-CNN | Detection | mAP: 71.8 | 110 ms/image | [105] |

| | | SSD | | mAP: 69.5 | 57 ms/image | |

| | | YOLOv3 | | mAP: 53 | 33 ms/image | |

| 25 | 2 classes | Rule-based detector | Detection | Detection rate: 89.2 Error rate: 4.44 | 1 FPS | [106] |

| 26 | 2 classes | GA and CNN | Detection | Detection rate: 92.3 | NA | [100] |

| 27 | 5 classes | SRPN | Detection | mAP: 50.8 Recall: 82.4 | 153 ms/image | [110] |

| 28 | 1 class | CNN and YOLOv3 | Detection | AP: 71 | 65 ms/image | [108] |

| 29 | 3 classes | DilaSeg-CRF | Segmentation | PA: 98.69 mPA: 91.57 mIoU: 84.85 fwIoU: 97.47 | 107 ms/image | [116] |

| 30 | 4 classes | PipeUNet | Segmentation | mIoU: 76.37 | 32 FPS | [122] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Wang, H.; Dang, L.M.; Song, H.-K.; Moon, H. Vision-Based Defect Inspection and Condition Assessment for Sewer Pipes: A Comprehensive Survey. Sensors 2022, 22, 2722. https://doi.org/10.3390/s22072722
