Abstract

Space-time adaptive processing (STAP) plays an essential role in clutter suppression and moving target detection in airborne radar systems. The main difficulty is that independent and identically distributed (i.i.d.) training samples may be insufficient to guarantee performance in heterogeneous clutter environments. Most current sparse recovery/representation (SR) techniques that reduce the training-sample requirement still suffer from high computational complexity. To remedy this problem, a fast group sparse Bayesian learning approach is proposed. Instead of employing all the dictionary atoms, the proposed algorithm identifies the support space of the data and then employs only the support space in the sparse Bayesian learning (SBL) algorithm. Moreover, to extend the modified hierarchical model, which applies only to real-valued signals, the real and imaginary components of the complex-valued signals are treated as two independent real-valued variables. The efficiency of the proposed algorithm is demonstrated with both simulated and measured data.

1. Introduction

Space-time adaptive processing (STAP) can detect slow-moving targets that might otherwise be masked by strong sidelobe clutter. The performance of a STAP filter depends on the accuracy of the estimated clutter plus noise covariance matrix (CNCM) of the cell under test (CUT) [1]. According to the well-known Reed–Mallett–Brennan (RMB) rule [2], keeping the average performance loss within about 3 dB requires the number of independent and identically distributed (i.i.d.) secondary range cells used to estimate the CNCM to be at least twice the system degrees of freedom (DOF). However, it is hard to obtain enough i.i.d. samples in practice because of the array geometry, nonhomogeneous clutter environments and so on [1]. Improving the performance of STAP with limited samples therefore remains an active research topic.
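For reference, the RMB analysis [2] gives the expected normalized output SINR of sample matrix inversion with $K$ i.i.d. training snapshots and $D$ DOF as $(K-D+2)/(K+1)$. The short sketch below evaluates that expression and searches for the smallest $K$ with at most 3 dB expected loss; it lands at $K = 2D-3$, the origin of the "twice the DOF" rule of thumb. The function names and the example dimensions are illustrative only, not taken from this paper.

```python
def rmb_expected_loss(K: int, D: int) -> float:
    """Expected normalized SINR (linear, <= 1) of SMI with K i.i.d. samples and D DOF."""
    if K < D:
        raise ValueError("need at least D samples for an invertible sample covariance")
    return (K - D + 2) / (K + 1)


def samples_for_3db(D: int) -> int:
    """Smallest K whose expected normalized SINR is at least 0.5 (about 3 dB loss)."""
    K = D
    while rmb_expected_loss(K, D) < 0.5:
        K += 1
    return K


# Example: N = 8 elements and M = 8 pulses give D = NM = 64 DOF.
D = 64
print(samples_for_3db(D), 2 * D)  # prints: 125 128  (K = 2D - 3)
```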

Reduced-dimension (RD) [3] and reduced-rank (RR) [4] algorithms have been proposed to improve the performance of STAP with limited secondary samples. Although they are easy to implement, their performance degrades when the number of secondary samples is smaller than twice the clutter rank [5].

Apart from RD and RR algorithms, other algorithms [6,7,8,9,10] have been proposed that succeed in suppressing clutter in theory but face disadvantages in practice. Specifically, (1) the direct data domain (DDD) algorithms in [6], which obtain training samples from the CUT itself, suffer from aperture loss; and (2) the knowledge-aided (KA) algorithms in [7,8,9,10] require accurate prior knowledge in advance. Since accurate prior knowledge is expensive to acquire and changes with time, KA algorithms are not widely used in practical applications.

Over the past twenty years, sparse recovery/representation (SR) algorithms have received continuous attention in STAP [11,12,13,14,15] because they have great potential for estimating the clutter spectrum with limited samples. Sparse Bayesian learning (SBL)-type algorithms are robust and have attracted particular attention in the last five years.

The SBL algorithm was proposed by Tipping [16]. Wipf and Rao extended it to the single measurement vector (SMV) case [17] and then to the multiple measurement vector (MMV) case [18]. Owing to the excellent performance of SBL, the fast marginal likelihood maximization (FMLM) algorithm [19], the Bayesian compressive sensing (BCS) algorithm [20], the multitask BCS (M-BCS) algorithm [21] and other Bayesian algorithms [22,23,24] were proposed in the following years. Duan first introduced SBL with MMV into STAP to estimate the CNCM, yielding the M-SBL-STAP algorithm [25], and Wang improved its convergence speed [26]. SBL has also been used to address common problems in STAP over the last five years, such as off-grid effects [27] and discrete interference [28]. However, the aforementioned Bayesian algorithms have one or more of the following disadvantages: (a) high computational cost, (b) inaccurate estimation of the noise variance and (c) inapplicability to complex-valued signals.

In this paper, to improve the computational efficiency of the M-SBL-STAP algorithm, we incorporate the FMLM algorithm into the conventional M-SBL-STAP algorithm. The real and imaginary components of the complex-valued signals are treated as two independent real-valued variables in order to extend the modified hierarchical model. Experiments with both simulated data and the measured Mountain-Top data demonstrate that the proposed algorithm achieves high computational efficiency and good performance.

The main contributions of this paper can be listed as follows:

- We extend the FMLM algorithm into M-SBL-STAP to identify the support space of the data, i.e., the atoms whose corresponding hyper-parameters are non-zero. After support space identification, the dimension of the effective problem is drastically reduced owing to sparsity, which lowers the computational complexity and the memory requirements.

- As shown in [18], the noise variance cannot be estimated accurately. Instead of estimating the noise variance, we extend the modified hierarchical model, introduced in Section 4, to the SBL framework.

- Although the hierarchical models apply to real-valued signals, they cannot be extended directly to complex-valued signals [29,30]. Since all the data processed in STAP are complex-valued, we transform sparse complex-valued signals into group sparse real-valued signals to solve this problem.

Notation: In this article, scalar quantities are denoted with italic typeface. Boldface lowercase letters are reserved for vectors, and boldface capital letters are reserved for matrices. The $i$th entry of a vector $\mathbf{x}$ is denoted by $x_i$, while $\mathbf{A}_{i\cdot}$ and $A_{ij}$ denote the $i$th row and the $(i,j)$th element of a matrix $\mathbf{A}$, respectively. The symbols $(\cdot)^T$ and $(\cdot)^H$ denote the matrix transpose and conjugate transpose, respectively. The symbols $\|\cdot\|_1$, $\|\cdot\|_2$ and $\|\cdot\|_F$ are reserved for the $\ell_1$, $\ell_2$ and Frobenius ($F$) norms, respectively. $\|\cdot\|_0$ is reserved for the $\ell_0$ pseudo-norm. $\|\cdot\|_{2,0}$ stands for a mixed norm defined as the number of non-zero elements of the vector formed by the $\ell_2$ norm of each row vector. The symbol $|\cdot|$ is reserved for the determinant. The notations $\mathbf{I}$, $\mathbf{0}$ and $\mathbf{1}$ represent the identity matrix, the all-zero matrix and the all-one matrix, respectively. The expectation of a random variable is denoted as $E(\cdot)$.

2. Background and Problem Formulation

2.1. STAP Signal Model for Airborne Radar

Consider an airborne pulsed-Doppler radar system with a side-looking uniform linear array (ULA) consisting of $N$ elements. A coherent burst of $M$ pulses is transmitted at a constant pulse repetition frequency (PRF) in a coherent processing interval (CPI). The complex sample at the CUT from the $n$th element and the $m$th pulse is denoted as $x_{n,m}$, and the data for the CUT can be written as an $NM \times 1$ vector $\mathbf{x}$, termed a space-time snapshot.

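Radar systems need to ascertain whether targets are present in the CUT; thus, target detection is formulated as a binary hypothesis problem, where the hypothesis $H_0$ represents target absence and the hypothesis $H_1$ represents target presence:

$$H_0:\ \mathbf{x} = \mathbf{x}_c + \mathbf{n}, \qquad H_1:\ \mathbf{x} = \alpha_t\mathbf{s}_t + \mathbf{x}_c + \mathbf{n}$$

where $\alpha_t$ is the target amplitude and $\mathbf{s}_t$ is the space-time steering vector of the target. The vector $\mathbf{n}$ is the thermal noise, which is uncorrelated both spatially and temporally. A general model for the space-time clutter $\mathbf{x}_c$ is

$$\mathbf{x}_c = \sum_{i=1}^{N_c}\alpha_i\,\mathbf{v}(f_{d,i},f_{s,i}), \qquad \mathbf{v}(f_d,f_s) = \mathbf{v}_d(f_d)\otimes\mathbf{v}_s(f_s)$$

$$\mathbf{v}_d(f_d) = \left[1, e^{j2\pi f_d}, \dots, e^{j2\pi(M-1)f_d}\right]^T, \qquad \mathbf{v}_s(f_s) = \left[1, e^{j2\pi f_s}, \dots, e^{j2\pi(N-1)f_s}\right]^T$$

where $\alpha_i$ denotes the random amplitude of the echo from the corresponding clutter patch; $N_c$ denotes the number of clutter patches in a clutter ring; $\mathbf{v}$, $\mathbf{v}_d$ and $\mathbf{v}_s$ denote the space-time, temporal and spatial steering vectors, respectively; and $f_{d,i}$ and $f_{s,i}$ denote the corresponding normalized temporal (Doppler) frequency and spatial frequency, respectively.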
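The clutter model can be simulated directly. The sketch below is a minimal illustration, assuming the Kronecker ordering $\mathbf{v}_d\otimes\mathbf{v}_s$ (pulse-major), a side-looking array whose patches lie on the ridge $f_d = \beta f_s$, and spatial frequencies spread uniformly for simplicity; the function names and parameter values are hypothetical.

```python
import numpy as np


def steering(f_d, f_s, N, M):
    """Space-time steering vector v = v_d(f_d) kron v_s(f_s) of length N*M."""
    v_d = np.exp(2j * np.pi * f_d * np.arange(M))  # temporal: M pulses
    v_s = np.exp(2j * np.pi * f_s * np.arange(N))  # spatial: N elements
    return np.kron(v_d, v_s)


def clutter_snapshot(N, M, Nc, beta, rng):
    """x_c = sum_i alpha_i v(f_d,i, f_s,i) for one clutter ring of Nc patches.

    Assumes patch i lies on the clutter ridge f_d = beta * f_s, with spatial
    frequencies spread uniformly over [-0.5, 0.5) (a simplification).
    """
    f_s = np.linspace(-0.5, 0.5, Nc, endpoint=False)
    alpha = (rng.standard_normal(Nc) + 1j * rng.standard_normal(Nc)) / np.sqrt(2)
    V = np.stack([steering(beta * fs, fs, N, M) for fs in f_s], axis=1)
    return V @ alpha


rng = np.random.default_rng(0)
x_c = clutter_snapshot(N=8, M=8, Nc=360, beta=1.0, rng=rng)
```

With $\beta = 1$ the patches fall on the diagonal of the angle-Doppler plane, matching the unit clutter-ridge slope used in the simulations of Section 6.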

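According to the linearly constrained minimum variance (LCMV) criterion, the optimal STAP weight vector is

$$\mathbf{w}_{\mathrm{opt}} = \frac{\mathbf{R}^{-1}\mathbf{s}_t}{\mathbf{s}_t^H\mathbf{R}^{-1}\mathbf{s}_t} \tag{7}$$

where $\mathbf{s}_t$ is the target space-time steering vector and the CNCM $\mathbf{R}$ is expressed as

$$\mathbf{R} = E\left[(\mathbf{x}_c+\mathbf{n})(\mathbf{x}_c+\mathbf{n})^H\right]$$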
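The weight in (7) is usually computed with a linear solve rather than an explicit inverse. The sketch below applies it to a toy CNCM made of three 20 dB clutter patches on the ridge $f_d = f_s$ plus unit-power white noise; these values, the steering-vector ordering and the function names are illustrative assumptions. It shows the distortionless response toward the target and the deep null on a clutter patch.

```python
import numpy as np


def stap_weight(R, s):
    """Weight (7): w = R^{-1} s / (s^H R^{-1} s), computed with a linear solve."""
    Rinv_s = np.linalg.solve(R, s)
    return Rinv_s / (s.conj() @ Rinv_s)


def steering(f_d, f_s, N, M):
    """Space-time steering vector v_d(f_d) kron v_s(f_s) (ordering assumed)."""
    return np.kron(np.exp(2j * np.pi * f_d * np.arange(M)),
                   np.exp(2j * np.pi * f_s * np.arange(N)))


# Toy CNCM: three 20 dB clutter patches plus unit-power white noise.
N, M = 4, 4
R = np.eye(N * M, dtype=complex)
for f in (-0.2, 0.0, 0.2):
    v = steering(f, f, N, M)
    R += 100.0 * np.outer(v, v.conj())

s_t = steering(0.3, 0.0, N, M)  # target at broadside, normalized Doppler 0.3
w = stap_weight(R, s_t)
print(abs(w.conj() @ s_t))                       # distortionless response (about 1)
print(abs(w.conj() @ steering(0.0, 0.0, N, M)))  # response on a clutter patch (near 0)
```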

2.2. SR-STAP Model and Principle

Discretize the space-time plane uniformly into $N_sN_d$ grids, where $N_s$ denotes the number of normalized spatial frequency bins and $N_d$ denotes the number of normalized Doppler frequency bins. Each grid corresponds to a space-time steering vector $\mathbf{v}(f_{d,i},f_{s,j})$. The dictionary used in STAP, $\mathbf{\Phi}\in\mathbb{C}^{NM\times N_sN_d}$, is the collection of the space-time steering vectors of all grids.
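The dictionary can be built in one step as a Kronecker product of the temporal and spatial steering matrices. This is a minimal sketch assuming normalized frequencies sampled on $[-0.5, 0.5)$ and a Doppler-major column ordering; `stap_dictionary` is a hypothetical name.

```python
import numpy as np


def stap_dictionary(N, M, Ns, Nd):
    """Dictionary Phi (NM x Ns*Nd) of space-time steering vectors on a uniform grid.

    Column j*Ns + i equals v_d(f_d[j]) kron v_s(f_s[i]).
    """
    f_s = np.arange(Ns) / Ns - 0.5  # normalized spatial frequency bins
    f_d = np.arange(Nd) / Nd - 0.5  # normalized Doppler frequency bins
    Vs = np.exp(2j * np.pi * np.outer(np.arange(N), f_s))  # N x Ns spatial steering vectors
    Vd = np.exp(2j * np.pi * np.outer(np.arange(M), f_d))  # M x Nd temporal steering vectors
    return np.kron(Vd, Vs)


Phi = stap_dictionary(N=4, M=3, Ns=8, Nd=6)  # 12 x 48
```

A common convention, assumed here rather than stated by the paper, is to choose $N_s$ and $N_d$ as integer multiples (resolution scales) of $N$ and $M$, which is why the dictionary is usually much wider than it is tall.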

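The signal model in STAP can be cast in the following form

$$\mathbf{X} = \mathbf{\Phi}\mathbf{A} + \mathbf{N}$$

where $\mathbf{X} = [\mathbf{x}_1,\dots,\mathbf{x}_L]\in\mathbb{C}^{NM\times L}$ collects the snapshots and $\mathbf{\Phi}$ is the space-time dictionary; $\mathbf{A}\in\mathbb{C}^{N_sN_d\times L}$ denotes the sparse coefficient matrix, whose non-zero elements indicate the presence of clutter on the space-time profile; $L$ denotes the number of range gates in the data; and $\mathbf{N}$ denotes zero-mean Gaussian noise.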

In sparse signal recovery with MMV, our goal is to represent the noise-contaminated measurement $\mathbf{X}$ as a linear combination of as few dictionary atoms as possible. Therefore, the objective function is expressed as

$$\min_{\mathbf{A}}\ \|\mathbf{A}\|_{2,0}\quad \text{s.t.}\quad \|\mathbf{X}-\mathbf{\Phi}\mathbf{A}\|_F\le\epsilon$$

where $\epsilon$ is the noise error allowance.
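The $\ell_{2,0}$ problem is combinatorial, so it is usually attacked greedily or through relaxations. As one illustration, the sketch below implements simultaneous orthogonal matching pursuit (SOMP), the greedy MMV idea behind baselines such as M-OMP-STAP [32]. It is a generic textbook version with a hypothetical function name `somp`, not the implementation used in [32]; it stops when the residual meets the allowance $\epsilon$ or a maximum number of atoms is reached.

```python
import numpy as np


def somp(X, Phi, eps, max_atoms):
    """Greedy approximation of min ||A||_{2,0} s.t. ||X - Phi A||_F <= eps."""
    D = Phi.shape[1]
    A = np.zeros((D, X.shape[1]), dtype=complex)
    support = []
    resid = X.copy()
    col_norms = np.linalg.norm(Phi, axis=0)
    while np.linalg.norm(resid, "fro") > eps and len(support) < max_atoms:
        # Score each atom by the l2 norm of its correlations with all residual columns.
        scores = np.linalg.norm(Phi.conj().T @ resid, axis=1) / col_norms
        scores[support] = -np.inf  # never pick the same atom twice
        support.append(int(np.argmax(scores)))
        # Least-squares fit on the selected atoms, then update the residual.
        Phi_S = Phi[:, support]
        A_S, *_ = np.linalg.lstsq(Phi_S, X, rcond=None)
        resid = X - Phi_S @ A_S
    if support:
        A[support] = A_S  # rows of A_S follow the selection order in `support`
    return A, sorted(support)
```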

3. M-SBL-STAP Algorithm

3.1. Sparse Bayesian Learning Formulation

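In the SBL framework, the columns $\mathbf{x}_1,\dots,\mathbf{x}_L$ of $\mathbf{X}$ are assumed to be i.i.d. training snapshots. The noise follows a white complex Gaussian distribution with unknown power $\sigma^2$. The likelihood function of the measurement vectors can be written as

$$p(\mathbf{X}|\mathbf{A};\sigma^2) = (\pi\sigma^2)^{-NML}\exp\left(-\frac{1}{\sigma^2}\|\mathbf{X}-\mathbf{\Phi}\mathbf{A}\|_F^2\right)$$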

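Since the training snapshots are i.i.d., the columns $\mathbf{a}_l$ ($l = 1,\dots,L$) of $\mathbf{A}$ are independent and share the same covariance matrix. Each column is assigned a zero-mean Gaussian prior

$$p(\mathbf{a}_l;\boldsymbol{\gamma}) = \mathcal{CN}(\mathbf{a}_l;\mathbf{0},\boldsymbol{\Gamma})$$

where $\mathbf{0}$ is a zero vector and $\boldsymbol{\Gamma} = \mathrm{diag}(\boldsymbol{\gamma})$. The elements of $\boldsymbol{\gamma} = [\gamma_1,\dots,\gamma_{N_sN_d}]^T$ are unknown variance parameters corresponding to the rows of the sparse coefficient matrix. The prior of $\mathbf{A}$ can be expressed as

$$p(\mathbf{A};\boldsymbol{\gamma}) = \prod_{l=1}^{L}\mathcal{CN}(\mathbf{a}_l;\mathbf{0},\boldsymbol{\Gamma})$$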

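Combining the likelihood with the prior, the posterior density of $\mathbf{A}$ can be expressed as

$$p(\mathbf{A}|\mathbf{X};\boldsymbol{\gamma},\sigma^2) = \frac{p(\mathbf{X}|\mathbf{A};\sigma^2)\,p(\mathbf{A};\boldsymbol{\gamma})}{\int p(\mathbf{X}|\mathbf{A};\sigma^2)\,p(\mathbf{A};\boldsymbol{\gamma})\,d\mathbf{A}}$$

which is modulated by the hyper-parameters $\boldsymbol{\gamma}$ and $\sigma^2$. The most common method for finding hyper-parameters accurate enough to estimate the CNCM is the expectation maximization (EM) algorithm, which alternates between an E-step and an M-step. Let $k$ denote the index of the current iteration. At the E-step, $\mathbf{A}$ is treated as hidden data, and its posterior density is described with the current hyper-parameters $\boldsymbol{\gamma}^{(k)}$ and $\sigma^{2(k)}$:

$$p(\mathbf{A}|\mathbf{X};\boldsymbol{\gamma}^{(k)},\sigma^{2(k)}) = \prod_{l=1}^{L}\mathcal{CN}\left(\mathbf{a}_l;\boldsymbol{\mu}_l^{(k)},\boldsymbol{\Sigma}^{(k)}\right)$$

with covariance and mean given by

$$\boldsymbol{\Sigma}^{(k)} = \left(\frac{1}{\sigma^{2(k)}}\mathbf{\Phi}^H\mathbf{\Phi} + \left(\boldsymbol{\Gamma}^{(k)}\right)^{-1}\right)^{-1} \tag{17}$$

$$\mathbf{M}^{(k)} = \left[\boldsymbol{\mu}_1^{(k)},\dots,\boldsymbol{\mu}_L^{(k)}\right] = \frac{1}{\sigma^{2(k)}}\boldsymbol{\Sigma}^{(k)}\mathbf{\Phi}^H\mathbf{X} \tag{18}$$

where $\boldsymbol{\Gamma}^{(k)} = \mathrm{diag}(\boldsymbol{\gamma}^{(k)})$.

At the M-step, we update the hyper-parameters by maximizing the expectation of $\ln p(\mathbf{X},\mathbf{A};\boldsymbol{\gamma},\sigma^2)$ with respect to the posterior obtained at the E-step, which gives

$$\gamma_i^{(k+1)} = \frac{1}{L}\left\|\mathbf{M}^{(k)}_{i\cdot}\right\|_2^2 + \Sigma^{(k)}_{ii} \tag{22}$$

$$\sigma^{2(k+1)} = \frac{\frac{1}{L}\left\|\mathbf{X}-\mathbf{\Phi}\mathbf{M}^{(k)}\right\|_F^2}{NM - N_sN_d + \sum_{i=1}^{N_sN_d}\Sigma^{(k)}_{ii}/\gamma_i^{(k)}} \tag{23}$$

where $\mathbf{M}^{(k)}_{i\cdot}$ denotes the $i$th row of $\mathbf{M}^{(k)}$ and $\Sigma^{(k)}_{ii}$ denotes the $i$th diagonal element of $\boldsymbol{\Sigma}^{(k)}$.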

The M-SBL-STAP algorithm is shown in Algorithm 1.

| Algorithm 1: Pseudocode for the M-SBL-STAP algorithm. |

| Step 1: Input: the clutter data X, the dictionary |

| Step 2: Initialization: initialize the values of and . |

| Step 3: E-step: update the posterior moments and using (17) and (18). |

| Step 4: M-step: update and using (22) and (23). |

| Step 5: Repeat step 3 and step 4 until a stopping criterion is satisfied. |

| Step 6: Estimate the CNCM by the formula where is a load factor and the symbol * represents the stopping criterion. |

| Step 7: Compute the space-time adaptive weight using (7). |

| Step 8: The output of the M-SBL-STAP algorithm is . |
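Steps 2 to 5 of Algorithm 1 can be sketched in NumPy as follows. This is a minimal illustration assuming the standard M-SBL updates of [18]: $\boldsymbol\Sigma = (\sigma^{-2}\mathbf{\Phi}^H\mathbf{\Phi} + \boldsymbol\Gamma^{-1})^{-1}$ for (17), $\mathbf{M} = \sigma^{-2}\boldsymbol\Sigma\mathbf{\Phi}^H\mathbf{X}$ for (18), $\gamma_i = \|\mathbf{M}_{i\cdot}\|_2^2/L + \Sigma_{ii}$ for (22) and the fixed-point noise update for (23). The function name `msbl_em`, the initial values, the fixed iteration count and the numerical floor are assumptions; the CNCM and weight steps are omitted.

```python
import numpy as np


def msbl_em(X, Phi, n_iter=300, gamma0=1.0, sigma2_0=0.1, floor=1e-10):
    """EM iterations of M-SBL. X: NM x L snapshots, Phi: NM x D dictionary.

    Returns the hyper-parameters gamma, the noise variance and the posterior mean.
    """
    NM, D = Phi.shape
    L = X.shape[1]
    gamma = np.full(D, gamma0)
    sigma2 = sigma2_0
    G = Phi.conj().T @ Phi    # Phi^H Phi, computed once
    PhX = Phi.conj().T @ X    # Phi^H X, computed once
    for _ in range(n_iter):
        # E-step (17): inverting this D x D matrix is the O(D^3) bottleneck.
        Sigma = np.linalg.inv(G / sigma2 + np.diag(1.0 / gamma))
        # E-step (18): posterior mean for all L snapshots at once.
        Mu = Sigma @ PhX / sigma2
        d = np.real(np.diag(Sigma))
        # M-step (23), using gamma from the current iteration.
        resid = np.linalg.norm(X - Phi @ Mu, "fro") ** 2 / L
        sigma2 = max(resid / (NM - D + np.sum(d / gamma)), floor)
        # M-step (22); the floor keeps 1/gamma finite for pruned atoms.
        gamma = np.maximum(np.sum(np.abs(Mu) ** 2, axis=1) / L + d, floor)
    return gamma, sigma2, Mu
```

On a synthetic problem with a few active rows, the largest entries of the returned `gamma` mark the clutter support; every iteration, however, pays for a full $D\times D$ inversion, which is the cost the proposed algorithm avoids.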

3.2. Problem Statement of the M-SBL-STAP Algorithm

At the E-step in each iteration, the inversion of an $N_sN_d\times N_sN_d$ matrix, whose computational complexity is in the order of $O\left((N_sN_d)^3\right)$, is required to update the covariance (17). Here, $N_sN_d$ is the number of atoms in the dictionary, and its value is usually large. Avoiding this matrix inversion can therefore reduce the computational complexity substantially.

At the M-step in each iteration, the noise variance $\sigma^2$ needs to be estimated. However, it has been demonstrated in [18] that $\sigma^2$ estimated by (23) can be extremely inaccurate when the dictionary is structured. As noted in [21], $\sigma^2$ is a nuisance parameter in the iterative procedure, and an inappropriate value may degrade the convergence of the algorithm.

We extend a modified hierarchical model to the SBL framework, which integrates $\sigma^2$ out instead of estimating it. However, the modified model, which applies to real-valued signals, cannot be directly extended to complex-valued signals.

In the following sections, we introduce the proposed algorithm, termed the M-FMLM-STAP algorithm, to solve the above problems.

4. The Proposed M-FMLM-STAP Algorithm

4.1. Modified Hierarchical Model

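In [16], Tipping defines the following hierarchical hyperpriors over the precisions $\alpha_i = \gamma_i^{-1}$, as well as over the noise precision $\beta = \sigma^{-2}$:

$$p(\boldsymbol{\alpha}) = \prod_{i}\mathrm{Gamma}(\alpha_i|a,b),\qquad p(\beta) = \mathrm{Gamma}(\beta|c,d)$$

where $\mathrm{Gamma}(\alpha|a,b) = \Gamma(a)^{-1}b^a\alpha^{a-1}e^{-b\alpha}$ and the 'gamma function' $\Gamma(a) = \int_0^\infty t^{a-1}e^{-t}\,dt$. It has been demonstrated in [16] that an appropriate choice of the shape parameter $a$ and the scale parameter $b$ encourages sparsity of the coefficient matrix.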

In the STAP framework, CNCM can be expressed as

The STAP weight vector can be rewritten in the form

where and are constants.

The above analysis shows that is equivalent to in the performance of clutter suppression. Thus, including in the prior of , each component of is defined as a zero-mean Gaussian distribution and the modified hierarchical model follows (28) and (29).

where .
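The equivalence argued above rests on the fact that a positive scaling of the CNCM, for example normalizing it by the noise power, leaves the weight in (7) unchanged, because the scale cancels between the numerator and the denominator. A quick numerical check, with an arbitrary positive-definite matrix standing in for the CNCM (all values are illustrative):

```python
import numpy as np


def mvdr(R, s):
    """Weight (7) computed with a linear solve."""
    x = np.linalg.solve(R, s)
    return x / (s.conj() @ x)


rng = np.random.default_rng(2)
n = 12
B = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
R = B @ B.conj().T + np.eye(n)        # generic Hermitian positive-definite CNCM
s = np.exp(2j * np.pi * 0.1 * np.arange(n))
sigma2 = 0.37                          # arbitrary positive scale
w1 = mvdr(R, s)
w2 = mvdr(R / sigma2, s)               # CNCM normalized by the noise power
print(np.allclose(w1, w2))             # True: the scale cancels in (7)
```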

4.2. Application of the Modified Hierarchical Model to Complex-Valued Signals

Although both the original and the modified models apply to real-valued signals, they cannot be directly extended to complex-valued signals. To solve this problem, the real and imaginary components of the complex-valued signals are treated as two independent real-valued variables.

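The new signal model is expressed as

$$\tilde{\mathbf{X}} = \tilde{\mathbf{\Phi}}\tilde{\mathbf{A}} + \tilde{\mathbf{N}},\qquad \tilde{\mathbf{X}} = \begin{bmatrix}\mathrm{Re}(\mathbf{X})\\ \mathrm{Im}(\mathbf{X})\end{bmatrix},\quad \tilde{\mathbf{A}} = \begin{bmatrix}\mathrm{Re}(\mathbf{A})\\ \mathrm{Im}(\mathbf{A})\end{bmatrix},\quad \tilde{\mathbf{N}} = \begin{bmatrix}\mathrm{Re}(\mathbf{N})\\ \mathrm{Im}(\mathbf{N})\end{bmatrix}$$

where $\tilde{\mathbf{X}}$ is the new data matrix, $\tilde{\mathbf{A}}$ is the new sparse coefficient matrix and $\tilde{\mathbf{N}}$ is the noise matrix in the new model. The new dictionary is expressed as

$$\tilde{\mathbf{\Phi}} = \begin{bmatrix}\mathrm{Re}(\mathbf{\Phi}) & -\mathrm{Im}(\mathbf{\Phi})\\ \mathrm{Im}(\mathbf{\Phi}) & \mathrm{Re}(\mathbf{\Phi})\end{bmatrix}$$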

while the new covariance, mean and hyper-parameter in the modified model are expressed as

where . The signal is group sparse because the entries in each group, say , are either zero or non-zero simultaneously.
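A minimal NumPy sketch of the real-valued reformulation, assuming the common block ordering in which atom $i$ of a complex dictionary with $D$ atoms maps to real columns $i$ and $i+D$; the paper may order the real columns differently, which only permutes the groups. The function names are hypothetical. The check confirms that the real model reproduces $\mathbf{\Phi}\mathbf{A}$ exactly and that each (Re, Im) pair vanishes or survives together.

```python
import numpy as np


def real_embedding(Phi, X):
    """Real-valued version of X = Phi A + N: stack real and imaginary parts.

    Phi_r = [[Re Phi, -Im Phi], [Im Phi, Re Phi]],  X_r = [Re X; Im X].
    """
    Phi_r = np.block([[Phi.real, -Phi.imag], [Phi.imag, Phi.real]])
    X_r = np.vstack([X.real, X.imag])
    return Phi_r, X_r


def to_real_coeffs(A):
    """A_r = [Re A; Im A], so that Phi_r @ A_r = [Re(Phi A); Im(Phi A)]."""
    return np.vstack([A.real, A.imag])


def group_norms(A_r, D):
    """l2 norm of each (Re, Im) group over all snapshots; zero iff atom i is inactive."""
    return np.sqrt(np.sum(A_r[:D] ** 2 + A_r[D:] ** 2, axis=1))


rng = np.random.default_rng(4)
Phi = rng.standard_normal((6, 10)) + 1j * rng.standard_normal((6, 10))
A = np.zeros((10, 3), dtype=complex)
A[[2, 7]] = rng.standard_normal((2, 3)) + 1j * rng.standard_normal((2, 3))
Phi_r, X_r = real_embedding(Phi, Phi @ A)
A_r = to_real_coeffs(A)
```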

In order to avoid calculating in (33) and (34), we extend the modified hierarchical model to the SBL framework. The new real-valued likelihood function can be expressed as

and the new prior of can be expressed as

Combining the new likelihood and prior, the new posterior density of can be expressed as

Integrating out the noise variance, the posterior density function of the coefficient matrix is

where

Equation (39) shows that the modified formulation induces a heavy-tailed Student-t distribution on the residual noise, which improves the robustness of the algorithm.
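The heavy-tail effect can be seen in one dimension. If $x\,|\,\beta \sim \mathcal{N}(0, 1/\beta)$ and the precision $\beta$ has a Gamma prior with shape $a$ and rate $b$, integrating $\beta$ out gives a Student-t density with $\nu = 2a$ degrees of freedom and scale $\sqrt{b/a}$. The sketch below is a scalar illustration of this mechanism, not the paper's matrix derivation; it checks the closed form by numerical integration, and the values $a = 3$, $b = 2$ and the function names are assumptions.

```python
import math

import numpy as np


def gauss_gamma_marginal(x, a, b, beta_max=80.0, n=200_001):
    """Integrate N(x; 0, 1/beta) * Gamma(beta; shape a, rate b) over beta (trapezoidal rule)."""
    beta = np.linspace(1e-12, beta_max, n)
    log_prior = a * math.log(b) + (a - 1) * np.log(beta) - b * beta - math.lgamma(a)
    likelihood = np.sqrt(beta / (2 * np.pi)) * np.exp(-0.5 * beta * x**2)
    f = likelihood * np.exp(log_prior)
    h = beta[1] - beta[0]
    return h * (f.sum() - 0.5 * (f[0] + f[-1]))


def student_t_pdf(x, nu, scale):
    """Student-t density with nu degrees of freedom and the given scale."""
    c = math.exp(math.lgamma((nu + 1) / 2) - math.lgamma(nu / 2)) / (math.sqrt(nu * math.pi) * scale)
    return c * (1 + (x / scale) ** 2 / nu) ** (-(nu + 1) / 2)


a, b = 3.0, 2.0
for x in (0.0, 1.0, 4.0):
    print(x, gauss_gamma_marginal(x, a, b), student_t_pdf(x, 2 * a, math.sqrt(b / a)))
```

With these values the Student-t has unit variance, yet at $x = 4$ its density is roughly an order of magnitude larger than that of a unit-variance Gaussian, which is what makes the residual model tolerant of outliers.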

The logarithm of the marginal likelihood function is

with

Since the signal is group sparse, we define

and then

where .

4.3. Maximization of to Estimate

The hyper-parameters can be point-estimated via a type-II maximum likelihood procedure, that is, by maximizing the marginal likelihood function, or equivalently its logarithm (42), with respect to the hyper-parameters. In what follows, the FMLM algorithm is applied to maximize (42). Unlike the EM algorithm, the FMLM algorithm reduces the computational complexity by identifying the support space of the data, i.e., the dictionary atoms whose corresponding hyper-parameters are non-zero.

For notational convenience, we omit the symbol . and in what follows all represent and , respectively.

Define as the set of the non-zero values in and as the support space of data.

where

and is the number of non-zeros in .

At the beginning of the FMLM algorithm, we initialize , namely, and . Then, the support space of the data is identified in each iteration until it converges to a steady point.

The matrix can be decomposed into two parts.

The first term contains all terms that are independent of , and the second term includes all the terms related to .

Using the Woodbury matrix identity, the matrix inversion and matrix determinant lemmas are expressed as

Then, (42) can be expressed as

where contains all terms that are independent of and includes all the terms related to .
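The two lemmas can be checked numerically for a single group. The sketch assumes, for illustration, that group $i$ enters the covariance as $\gamma_i\boldsymbol\Phi_i\boldsymbol\Phi_i^T$, where $\boldsymbol\Phi_i$ holds the two real columns of the group and they share one hyper-parameter (the group sparse real-valued model); the paper's exact scaling of these terms may differ, and all names and values are hypothetical. Beyond the part independent of group $i$, only a $2\times 2$ matrix has to be inverted.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 10
B = rng.standard_normal((n, n))
C_minus = B @ B.T + np.eye(n)       # all terms independent of group i (generic SPD here)
Phi_i = rng.standard_normal((n, 2))  # the two real columns of group i
gamma_i = 0.8                        # hyper-parameter shared by the group
C = C_minus + gamma_i * Phi_i @ Phi_i.T

Cm_inv = np.linalg.inv(C_minus)
# Matrix inversion lemma: only a 2 x 2 matrix is inverted beyond C_minus^{-1}.
K = np.linalg.inv(np.eye(2) / gamma_i + Phi_i.T @ Cm_inv @ Phi_i)
C_inv_fast = Cm_inv - Cm_inv @ Phi_i @ K @ Phi_i.T @ Cm_inv
# Matrix determinant lemma: |C| = |C_minus| * |I + gamma_i Phi_i^T C_minus^{-1} Phi_i|.
logdet_fast = (np.linalg.slogdet(C_minus)[1]
               + np.linalg.slogdet(np.eye(2) + gamma_i * Phi_i.T @ Cm_inv @ Phi_i)[1])

print(np.allclose(C_inv_fast, np.linalg.inv(C)))         # True
print(np.isclose(logdet_fast, np.linalg.slogdet(C)[1]))  # True
```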

Define

Then, can be expressed as

The eigenvalue decomposition (EVD) of

where and denote the eigenvalue and eigenvector of respectively. is the eigen-matrix of .

Define , and the formula (55) can then be expressed as

The next step is to maximize (57) to estimate the hyper-parameters, and we choose only the one candidate from that maximizes (53).

Differentiating with respect to and setting the result to zero gives

which is equivalent to solving the following polynomial equation

where

It has at most three roots, which can be found by standard root-finding algorithms. Considering that and the corresponding is not in the support space when , the set of the positive roots is

The optimal is given by

If , it means the set has no contribution to , that is to say, . If , we need to set because .
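The selection rule can be sketched generically: find the roots of the polynomial, keep the positive real ones, and pick the candidate that maximizes the objective, with zero standing for "not in the support space". The sketch assumes the caller supplies the polynomial coefficients and the objective, since both depend on the quantities defined above; `best_nonnegative_root` and the toy objectives in the examples are hypothetical.

```python
import numpy as np


def best_nonnegative_root(coeffs, objective, tol=1e-12):
    """Maximize `objective` over {0} and the positive real roots of a polynomial.

    coeffs: polynomial coefficients, highest degree first (a cubic has 4).
    objective: callable returning the likelihood contribution of a candidate value.
    """
    roots = np.roots(coeffs)
    # Keep real roots (tiny imaginary parts are round-off), then keep the positive ones.
    is_real = np.abs(roots.imag) <= 1e-9 * np.maximum(1.0, np.abs(roots))
    candidates = [0.0] + [float(r) for r in roots[is_real].real if r > tol]
    values = [objective(g) for g in candidates]
    return candidates[int(np.argmax(values))]


# (g - 1)(g - 2)(g + 3): positive roots 1 and 2; the toy objective prefers 2.
print(best_nonnegative_root([1, 0, -7, 6], lambda g: -(g - 1.8) ** 2))
# (g + 1)(g + 2)(g + 3): no positive root, so the group is left out (value 0).
print(best_nonnegative_root([1, 6, 11, 6], lambda g: -(g - 1.0) ** 2))
```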

In the iteration, we choose only the one that maximizes (53), while fixing the other . Its index is expressed as

To avoid calculating , we define

Since in the iteration is a fixed constant, the index can also be expressed as

The next step is to change the value of while fixing the other .

If and , add to (i.e., is not in , , ); if and (i.e., is already in , ), replace with in ; if and , delete from and delete from ; and if and (i.e., ), stop the iteration because the procedure has converged to a steady state. Finally, is the exact support space of the data and is the set of the non-zero values in the exact , where the symbol * represents the stopping criterion.
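The bookkeeping for the update cases can be expressed compactly. In this hypothetical sketch the support space is a Python dict mapping a group index to its hyper-parameter; the updates of the posterior statistics in Appendix A are not shown, and the convergence test on the likelihood increment is left to the caller.

```python
def fmlm_action(gamma_opt, in_support):
    """Decide the FMLM step for the chosen group from its optimal hyper-parameter."""
    if gamma_opt > 0 and not in_support:
        return "add"
    if gamma_opt > 0 and in_support:
        return "re-estimate"
    if gamma_opt == 0 and in_support:
        return "delete"
    return "skip"  # gamma_opt == 0 and the group is not in the support: nothing changes


def apply_action(support, i, gamma_opt):
    """Update the support dict {group index: hyper-parameter} in place; return the action."""
    action = fmlm_action(gamma_opt, i in support)
    if action in ("add", "re-estimate"):
        support[i] = gamma_opt
    elif action == "delete":
        del support[i]
    return action


support = {}
print(apply_action(support, 3, 0.5), support)  # add {3: 0.5}
print(apply_action(support, 3, 0.9), support)  # re-estimate {3: 0.9}
print(apply_action(support, 3, 0.0), support)  # delete {}
```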

4.4. Fast Computation of

In each iteration, we need to calculate the matrix inversion of all when updating . To reduce the computational complexity, a fast means of updating is needed.

Define

If we can calculate with , the computational complexity can be reduced substantially because we only need to calculate the matrix inversion of .

Substituting (51) into (68), we can arrive at the following formula:

From (56) and (69), we can conclude that the eigen-matrix of is the same as that of . The EVD of can also be expressed as

where denotes the eigenvalue of .

Thus, can be obtained via the EVD of

and can also be computed with and , respectively, via the EVD of .

Thus,

Similarly,

Using (74), we can obtain

Therefore, can be obtained from using (72), (74) and (76).

Compared with (68), there is another approach that requires less computation to calculate . The formula (43) is equivalent to

With the matrix inversion lemma and (77), it is faster and more convenient to update with the following formulae than with (68).

where herein represents the covariance of the non-zeros in .

Additionally, the mean of the non-zeros in herein is expressed as

The computational complexity is measured in terms of the number of multiplications. Assume that the dimension of is ; is not fixed across iterations but satisfies the condition because the measurements are sparse. When we calculate with (68), the computational complexity is in the order of . With (78), the computational complexity of calculating is in the order of . The latter operation is therefore faster and more convenient.

Since the measurements are sparse, the dimension of the matrix in (79) is far smaller than that of in (41). By identifying the support space of the data, the proposed M-FMLM-STAP algorithm drastically reduces the effective problem dimension. Therefore, the proposed algorithm is promising for real-time operation.

The proposed M-FMLM-STAP algorithm is shown in Algorithm 2. The detailed update formulae are given in Appendix A.

| Algorithm 2: Pseudocode for M-FMLM-STAP algorithm. |

| Step 1: Input: the original data X, the original dictionary and . |

| Step 2: and . |

| Step 3: Initialize: and . |

| Step 4: while not converged do Choose only one candidate and find optimal using (65). If and , then , and . Otherwise, if and , then , and replace with . Otherwise, if and , then delete from , and delete from . end Update ,,, and referring to Appendix A. end while |

| Step 5: Estimate the CNCM by where the vector is the column of , is the number of non-zeros in and is a load factor. The symbol * represents the stopping criterion. |

| Step 6: Compute the space-time adaptive weight using (7). |

| Step 7: The output of M-FMLM-STAP is . |

The main symbols aforementioned are listed in Table 1.

Table 1.

The main symbols.

5. Complexity Analysis and Convergence Analysis

5.1. Complexity Analysis

Here, we compare the computational complexities of the proposed M-FMLM-STAP algorithm and the M-SBL-STAP algorithm for a single iteration. The computational complexities are measured in terms of the number of multiplications.

First, we analyze the computational complexity of the M-SBL-STAP algorithm. Its main cost lies in (17) and (18). Noting that in (17) is a diagonal matrix, the computational complexity of (17) is in the order of . The computational complexity of (18) is in the order of . Thus, the computational complexity of the M-SBL-STAP algorithm is in the order of .

Second, we analyze the computational complexity of the M-FMLM-STAP algorithm. Noting that the dimension of is , the cost of the EVD of is small enough to be ignored; that is, the cost of calculating with can be ignored. Meanwhile, the costs of solving the polynomial equation (59) and finding the index (65) are proportional to , and are also negligible. Thus, the main computational cost of the M-FMLM-STAP algorithm lies in updating . Assume that the dimension of is ; is not fixed across iterations, but the condition is satisfied because the measurements are sparse. The computational complexity of the term is in the order of . Thus, the computational complexity of and in (78) is in the order of . The computational complexities of (79) and (80) are in the order of and , respectively. Ignoring the lower-order terms, the computational complexity of the M-FMLM-STAP algorithm for a single iteration is in the order of .

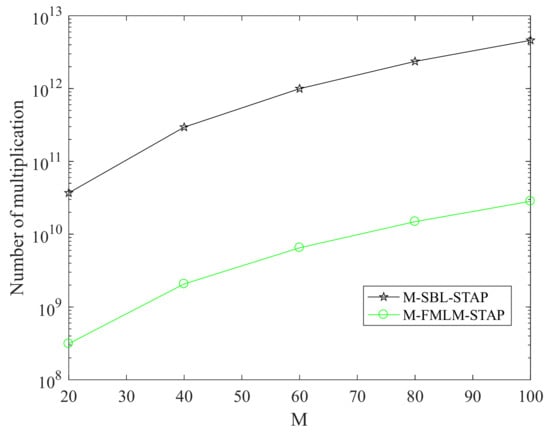

Figure 1 illustrates the computational complexity of the two algorithms for a single iteration versus the number of pulses. Suppose that , and . Although the value is unknown and varies across iterations, we assume that it is twice the clutter rank in the current iteration. The computational complexity of the M-SBL-STAP algorithm is far higher than that of the M-FMLM-STAP algorithm for a single iteration.

Figure 1.

Computational complexity versus the number of pulses for a single iteration.

5.2. Convergence Analysis

The logarithm of the marginal likelihood function has an upper bound [31]. From (62), in the iteration, is the maximal value of , that is, . Therefore, by (65), the condition is guaranteed in each iteration, so the objective never decreases. Since a non-decreasing sequence that is bounded above converges, the convergence of the proposed algorithm is guaranteed.

6. Performance Assessment

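In this section, we first evaluate the loaded sample matrix inversion (LSMI) algorithm, the multiple orthogonal matching pursuit (M-OMP-STAP) algorithm [32], the M-SBL-STAP algorithm and the proposed M-FMLM-STAP algorithm with simulated data from a side-looking ULA in the ideal case and in the presence of array errors. The first two algorithms are common approaches in STAP. We then assess the M-SBL-STAP algorithm and the proposed M-FMLM-STAP algorithm with the Mountain-Top data. The improvement factor (IF) and the signal to interference plus noise ratio (SINR) loss are used as performance metrics:

$$\mathrm{IF} = \frac{|\mathbf{w}^H\mathbf{s}_t|^2\,\mathrm{tr}(\mathbf{R})}{\mathbf{w}^H\mathbf{R}\mathbf{w}\;\mathbf{s}_t^H\mathbf{s}_t},\qquad L_{\mathrm{SINR}} = \frac{\sigma^2}{NM}\,\frac{|\mathbf{w}^H\mathbf{s}_t|^2}{\mathbf{w}^H\mathbf{R}\mathbf{w}}$$

where $\mathbf{w}$ is the STAP weight vector of the algorithm under test, $\mathbf{s}_t$ is the target steering vector, $\sigma^2$ is the noise power and $\mathbf{R}$ is the exact CNCM of the CUT.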
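Both metrics can be computed directly from a weight vector and the exact CNCM. This is a minimal sketch assuming the standard definitions IF $= |\mathbf{w}^H\mathbf{s}_t|^2\,\mathrm{tr}(\mathbf{R})/(\mathbf{w}^H\mathbf{R}\mathbf{w}\;\mathbf{s}_t^H\mathbf{s}_t)$ and SINR loss $= (\sigma^2/NM)\,|\mathbf{w}^H\mathbf{s}_t|^2/(\mathbf{w}^H\mathbf{R}\mathbf{w})$; the function names are illustrative. In white noise the optimal weight gives an SINR loss of 1 (0 dB) and an IF equal to $NM$, which makes a convenient sanity check.

```python
import numpy as np


def improvement_factor(w, s, R):
    """IF = |w^H s|^2 tr(R) / (w^H R w * s^H s), evaluated with the exact CNCM R."""
    num = np.abs(w.conj() @ s) ** 2 * np.trace(R).real
    den = (w.conj() @ R @ w).real * (s.conj() @ s).real
    return num / den


def sinr_loss(w, s, R, sigma2):
    """SINR loss = sigma^2 / (NM) * |w^H s|^2 / (w^H R w), on a linear scale."""
    NM = s.size
    return sigma2 / NM * np.abs(w.conj() @ s) ** 2 / (w.conj() @ R @ w).real


# Sanity check in white noise: optimal weight, 16 DOF.
sigma2 = 2.0
R = sigma2 * np.eye(16)
s = np.exp(2j * np.pi * 0.25 * np.arange(16))
w = np.linalg.solve(R, s)
print(sinr_loss(w, s, R, sigma2), improvement_factor(w, s, R))  # about 1.0 and 16.0
```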

6.1. Simulated Data

The parameters of the side-looking phased array radar are listed in Table 2. The 600th range gate is chosen as the CUT. According to the parameters of the radar system, the slope of the clutter ridge is 1. We set the resolution scales and . A total of six training samples are used for the two algorithms, and the parameters .

Table 2.

Radar system parameters.

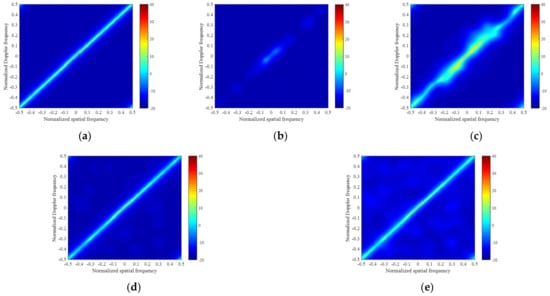

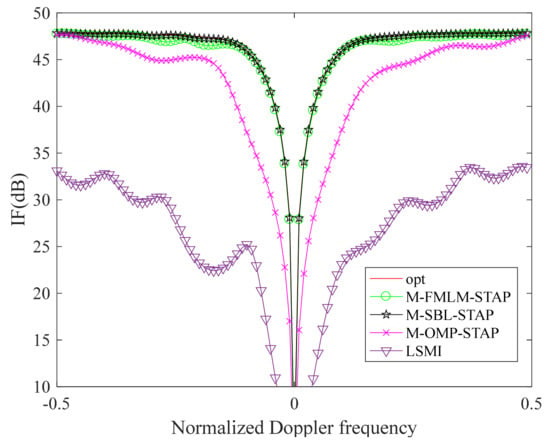

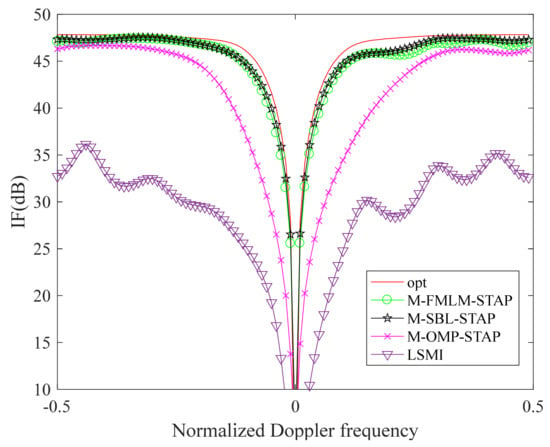

Figure 2 shows five clutter power spectra for the side-looking ULA in the ideal case. Figure 2a–e show the clutter power spectra estimated by the exact CNCM, the LSMI algorithm, the M-OMP-STAP algorithm, the M-SBL-STAP algorithm and the M-FMLM-STAP algorithm, respectively. In the ideal case, the clutter spectra obtained by the M-SBL-STAP and M-FMLM-STAP algorithms are closer to the exact spectrum in both location and power than those of the other algorithms. As shown in Figure 3, the IF curve achieved by the M-SBL-STAP algorithm is slightly better than that achieved by the M-FMLM-STAP algorithm because the latter is sensitive to noise fluctuation. However, the latter requires far less computation, which offsets this small loss in performance. On a computer equipped with two Intel(R) Xeon(R) Gold 6140 CPU @ 2.30 GHz processors, the former takes more than 40 s on average and the latter less than 4 s. The larger the value of , the bigger the gap in computational complexity between the M-SBL-STAP algorithm and the M-FMLM-STAP algorithm.

Figure 2.

Angle-Doppler clutter power spectrums in the ideal case: (a) exact spectrum calculated by the ideal CNCM; (b) sparse spectrum estimated by LSMI; (c) sparse spectrum estimated by M-OMP-STAP; (d) sparse spectrum estimated by M-SBL-STAP; and (e) sparse spectrum estimated by M-FMLM-STAP.

Figure 3.

IF curves in the ideal case.

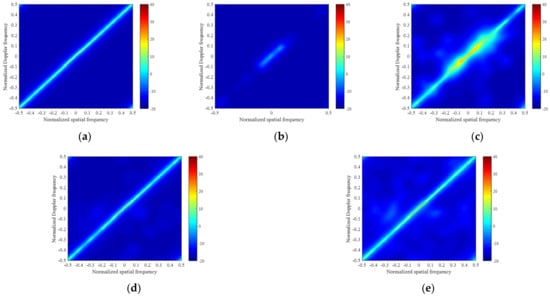

In Figure 4, the four approaches are applied in the non-ideal case with Gaussian amplitude errors (standard deviation 0.03) and random phase errors (standard deviation ). The errors are the same in all directions. Figure 4a–e show the clutter power spectra estimated by the exact CNCM, the LSMI algorithm, the M-OMP-STAP algorithm, the M-SBL-STAP algorithm and the M-FMLM-STAP algorithm, respectively. In the non-ideal case, the clutter spectra obtained by the M-SBL-STAP and M-FMLM-STAP algorithms are much closer to the exact spectrum in both location and power. As shown in Figure 5, the notch of the IF curve achieved by the M-SBL-STAP algorithm is close to that achieved by the M-FMLM-STAP algorithm, which means that the two algorithms perform about the same in the non-ideal case. However, the running time of the latter is much shorter than that of the former.

Figure 4.

Angle-Doppler clutter power spectrums in the non-ideal case with array errors: (a) exact spectrum calculated by the exact CNCM; (b) sparse spectrum estimated by LSMI; (c) sparse spectrum estimated by M-OMP-STAP; (d) sparse spectrum estimated by M-SBL-STAP; and (e) sparse spectrum estimated by M-FMLM-STAP.

Figure 5.

IF curves in the non-ideal case.

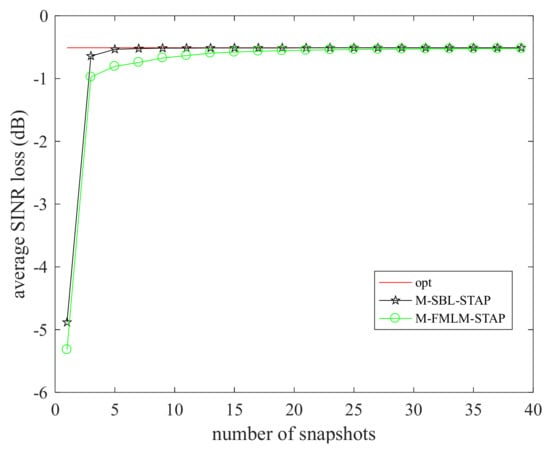

Figure 6 presents the average SINR loss, defined as the mean of the SINR loss over the entire normalized Doppler frequency range, versus the number of training samples for the M-SBL-STAP and M-FMLM-STAP algorithms. The average SINR loss achieved by the M-SBL-STAP algorithm is slightly better than that achieved by the M-FMLM-STAP algorithm, by less than 0.5 dB, which is negligible in practice. Meanwhile, the gap in computational complexity between the two algorithms widens as the DOF of the radar system increases. All presented results are averaged over 100 independent Monte Carlo runs.
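The average SINR loss of a covariance estimate can be sketched by sweeping the target Doppler frequency, designing the weight with the estimated CNCM and evaluating it with the exact one. The fixed broadside spatial frequency, the 101-point Doppler grid and averaging on the linear scale before converting to dB are assumptions made for illustration; `average_sinr_loss_db` is a hypothetical name.

```python
import numpy as np


def average_sinr_loss_db(R_est, R_true, sigma2, N, M, f_s=0.0, n_doppler=101):
    """Mean SINR loss (dB) over normalized Doppler in [-0.5, 0.5] at a fixed spatial frequency."""
    v_s = np.exp(2j * np.pi * f_s * np.arange(N))
    losses = []
    for f_d in np.linspace(-0.5, 0.5, n_doppler):
        s = np.kron(np.exp(2j * np.pi * f_d * np.arange(M)), v_s)
        w = np.linalg.solve(R_est, s)  # weight from the estimated CNCM (scale is irrelevant)
        loss = sigma2 / (N * M) * np.abs(w.conj() @ s) ** 2 / (w.conj() @ R_true @ w).real
        losses.append(loss)
    return 10 * np.log10(np.mean(losses))


# An ignorant estimate (identity) against a CNCM with one strong clutter patch at f_d = f_s = 0.
N, M = 4, 4
v = np.ones(N * M)
R_true = np.eye(N * M) + 100.0 * np.outer(v, v)
print(average_sinr_loss_db(np.eye(N * M), R_true, 1.0, N, M))  # negative: clutter is not nulled
```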

Figure 6.

Average SINR loss versus the number of training samples.

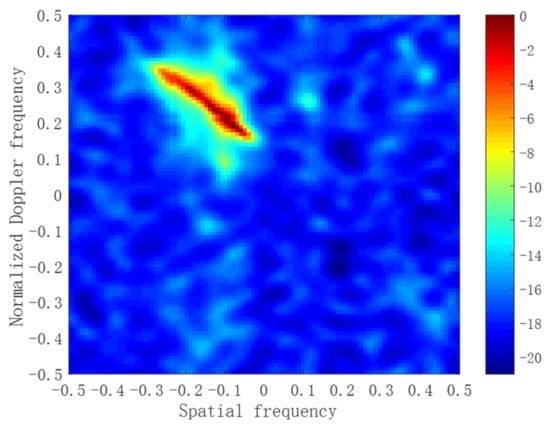

6.2. Measured Data

We apply the M-SBL-STAP and M-FMLM-STAP algorithms to the publicly available Mountain-Top dataset, i.e., t38pre01v1 CPI6 [33]. In this data file, the numbers of array elements and coherent pulses are 14 and 16, respectively. There are 403 range cells, and the clutter is located around 245° relative to true North. The target is located in the 147th range cell at an azimuth of 275° relative to true North, with a normalized Doppler frequency of 0.25. The clutter Capon spectrum estimated with all 403 range cells is shown in Figure 7.

Figure 7.

Estimated clutter Capon spectrum with all samples.

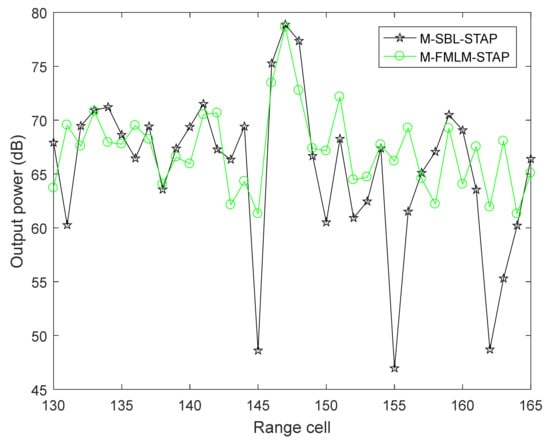

Figure 8 depicts the STAP output power of the M-SBL-STAP and M-FMLM-STAP algorithms over range cells 130 to 165, where 10 of the 20 range cells adjacent to the CUT are selected as training samples. The target in the 147th range cell is detected by both algorithms, and the detection performance of the proposed M-FMLM-STAP algorithm is close to that of the M-SBL-STAP algorithm. However, the former runs much faster than the latter. Therefore, the proposed algorithm is applicable in practice.

Figure 8.

STAP output power versus range cell for the two algorithms.

7. Conclusions

In this paper, we have extended the real-valued multitask compressive sensing technique to suppress complex-valued heterogeneous clutter for airborne radar. Unlike the conventional M-SBL-STAP algorithm, the proposed algorithm avoids inverting, at each iteration, a matrix whose size equals the number of dictionary atoms, which makes real-time operation feasible. We integrate the noise variance out instead of estimating it, which overcomes the problem that its value cannot be obtained accurately. Because the modified hierarchical model is not suitable for complex-valued signals, the complex-valued multitask clutter data are transformed into group sparse real-valued signals. Finally, results with simulated and measured data demonstrate the high computational efficiency and good performance of the proposed algorithm.

Author Contributions

Conceptualization, C.L. and T.W.; methodology, C.L. and T.W.; software, C.L.; validation, C.L., S.Z. and B.R.; writing—original draft preparation, C.L.; writing—review and editing, S.Z.; visualization, C.L.; supervision, T.W.; project administration, T.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Key R&D Program of China, grant number 2021YFA1000400.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

For notational convenience, we ignore the symbol in the iteration and use to represent the iteration.

Appendix A.1. Adding a New Basis Group Function ()

When , .

where and .

Appendix A.2. Re-Estimating a Basis Group Function ()

represents the index of the group in the whole dictionary matrix, and represents the position index of the above-mentioned group in the used basis matrix . is the hyper-parameter re-estimated, , , and .

Appendix A.3. Deleting a Basis Group Function ()

represents the index of the group in the whole dictionary matrix, and represents the position index of the above-mentioned group, which is removed in the used basis matrix .

References

- Ward, J. Space-Time Adaptive Processing for Airborne Radar; Technical Report; MIT Lincoln Laboratory: Lexington, MA, USA, 1998.

- Reed, I.S.; Mallett, J.D.; Brennan, L.E. Rapid convergence rate in adaptive arrays. IEEE Trans. Aerosp. Electron. Syst. 1974, AES-10, 853–863.

- DiPietro, R.C. Extended factored space-time processing for airborne radar systems. In Proceedings of the 26th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 26–28 October 1992; pp. 425–430.

- Haimovich, A. The eigencanceler: Adaptive radar by eigenanalysis methods. IEEE Trans. Aerosp. Electron. Syst. 1996, 32, 532–542.

- Melvin, W.L. A STAP overview. IEEE Aerosp. Electron. Syst. Mag. 2004, 19, 19–35.

- Sarkar, T.K.; Park, S.; Koh, J. A deterministic least squares approach to space time adaptive processing. Digit. Signal Process. 1996, 6, 185–194.

- Capraro, C.T.; Capraro, G.T.; Bradaric, I.; Weiner, D.D.; Wicks, M.C.; Baldygo, W.J. Implementing digital terrain data in knowledge-aided space-time adaptive processing. IEEE Trans. Aerosp. Electron. Syst. 2006, 42, 1080–1097.

- Capraro, C.T.; Capraro, G.T.; Weiner, D.D.; Wicks, M.C.; Baldygo, W.J. Improved STAP performance using knowledge-aided secondary data selection. In Proceedings of the 2004 IEEE Radar Conference, Philadelphia, PA, USA, 29 April 2004.

- Melvin, W.; Wicks, M.; Antonik, P.; Salama, Y.; Li, P.; Schuman, H. Knowledge-based space-time adaptive processing for airborne early warning radar. IEEE Aerosp. Electron. Syst. Mag. 1998, 13, 37–42.

- Bergin, J.S.; Teixeira, C.M.; Techau, P.M. Improved clutter mitigation performance using knowledge-aided space-time adaptive processing. IEEE Trans. Aerosp. Electron. Syst. 2006, 42, 997–1009.

- Donoho, D.L.; Elad, M.; Temlyakov, V.N. Stable recovery of sparse overcomplete representations in the presence of noise. IEEE Trans. Inf. Theory 2006, 52, 6–18.

- Sun, K.; Zhang, H.; Li, G.; Meng, H.D.; Wang, X.Q. A novel STAP algorithm using sparse recovery technique. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Cape Town, South Africa, 12–17 July 2009; Volume 1, pp. 3761–3764.

- Yang, Z.C.; Li, X.; Wang, H.Q.; Jiang, W.D. On clutter sparsity analysis in space-time adaptive processing airborne radar. IEEE Geosci. Remote Sens. Lett. 2013, 10, 1214–1218.

- Yang, Z.C.; de Lamare, R.C.; Li, X. L1-regularized STAP algorithm with a generalized sidelobe canceler architecture for airborne radar. IEEE Trans. Signal Process. 2012, 60, 674–686.

- Sen, S. Low-rank matrix decomposition and spatio-temporal sparse recovery for STAP radar. IEEE J. Sel. Top. Signal Process. 2015, 9, 1510–1523.

- Tipping, M.E. Sparse Bayesian learning and the relevance vector machine. J. Mach. Learn. Res. 2001, 1, 211–244.

- Wipf, D.P.; Rao, B.D. Sparse Bayesian learning for basis selection. IEEE Trans. Signal Process. 2004, 52, 2153–2164.

- Wipf, D.P.; Rao, B.D. An empirical Bayesian strategy for solving the simultaneous sparse approximation problem. IEEE Trans. Signal Process. 2007, 55, 3704–3716.

- Tipping, M.E.; Faul, A.C. Fast marginal likelihood maximization for sparse Bayesian models. In Proceedings of the Ninth International Workshop on Artificial Intelligence and Statistics, Key West, FL, USA, 3–6 January 2003; pp. 3–6.

- Ji, S.H.; Xue, Y.; Carin, L. Bayesian compressive sensing. IEEE Trans. Signal Process. 2008, 56, 2346–2356.

- Ji, S.H.; Dunson, D.; Carin, L. Multi-task compressive sensing. IEEE Trans. Signal Process. 2009, 57, 92–106.

- Wu, Q.S.; Zhang, Y.M.; Amin, M.G.; Himed, B. Complex multitask Bayesian compressive sensing. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing, Florence, Italy, 4–9 May 2014.

- Serra, J.G.; Testa, M.; Katsaggelos, A.K. Bayesian K-SVD using fast variational inference. IEEE Trans. Image Process. 2017, 26, 3344–3359.

- Ma, Z.Q.; Dai, W.; Liu, Y.M.; Wang, X.Q. Group sparse Bayesian learning via exact and fast marginal likelihood maximization. IEEE Trans. Signal Process. 2017, 65, 2741–2753.

- Duan, K.Q.; Wang, Z.T.; Xie, W.C.; Chen, H.; Wang, Y.L. Sparsity-based STAP algorithm with multiple measurement vectors via sparse Bayesian learning strategy for airborne radar. IET Signal Process. 2017, 11, 544–553.

- Wang, Z.T.; Xie, W.C.; Duan, K.Q. Clutter suppression algorithm based on fast converging sparse Bayesian learning for airborne radar. Signal Process. 2017, 130, 159–168.

- Yuan, H.D.; Xu, H.; Duan, K.Q.; Xie, W.C.; Liu, W.J.; Wang, Y.L. Sparse Bayesian learning-based space-time adaptive processing with off-grid self-calibration for airborne radar. IEEE Access 2018, 6, 47296–47307.

- Yang, X.P.; Sun, Y.Z.; Yang, J.; Long, T.; Sarkar, T.K. Discrete interference suppression method based on robust sparse Bayesian learning for STAP. IEEE Access 2019, 7, 26740–26751.

- Bai, Z.L.; Shi, L.M.; Sun, J.W.; Christensen, M.G. Complex sparse signal recovery with adaptive Laplace priors. arXiv 2020, arXiv:2006.16720v1.

- Babacan, S.D.; Molina, R.; Katsaggelos, A.K. Bayesian compressive sensing using Laplace priors. IEEE Trans. Image Process. 2010, 19, 53–63.

- Wipf, D.; Nagarajan, S. A new view of automatic relevance determination. Adv. Neural Inf. Process. Syst. 2008, 20, 1–9.

- Tropp, J.A.; Gilbert, A.C.; Strauss, M.J. Algorithms for simultaneous sparse approximation, part I: Greedy pursuit. Signal Process. 2006, 86, 572–588.

- Titi, G.W.; Marshall, D.F. The ARPA/NAVY mountaintop program: Adaptive signal processing for airborne early warning radar. In Proceedings of the 1996 IEEE International Conference on Acoustics, Speech, and Signal Processing, Atlanta, GA, USA, 9 May 1996.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).