Resolving Multi-Path Interference in Compressive Time-of-Flight Depth Imaging with a Multi-Tap Macro-Pixel Computational CMOS Image Sensor

Abstract

1. Introduction

2. Temporally Compressive Time-of-Flight Depth Imaging

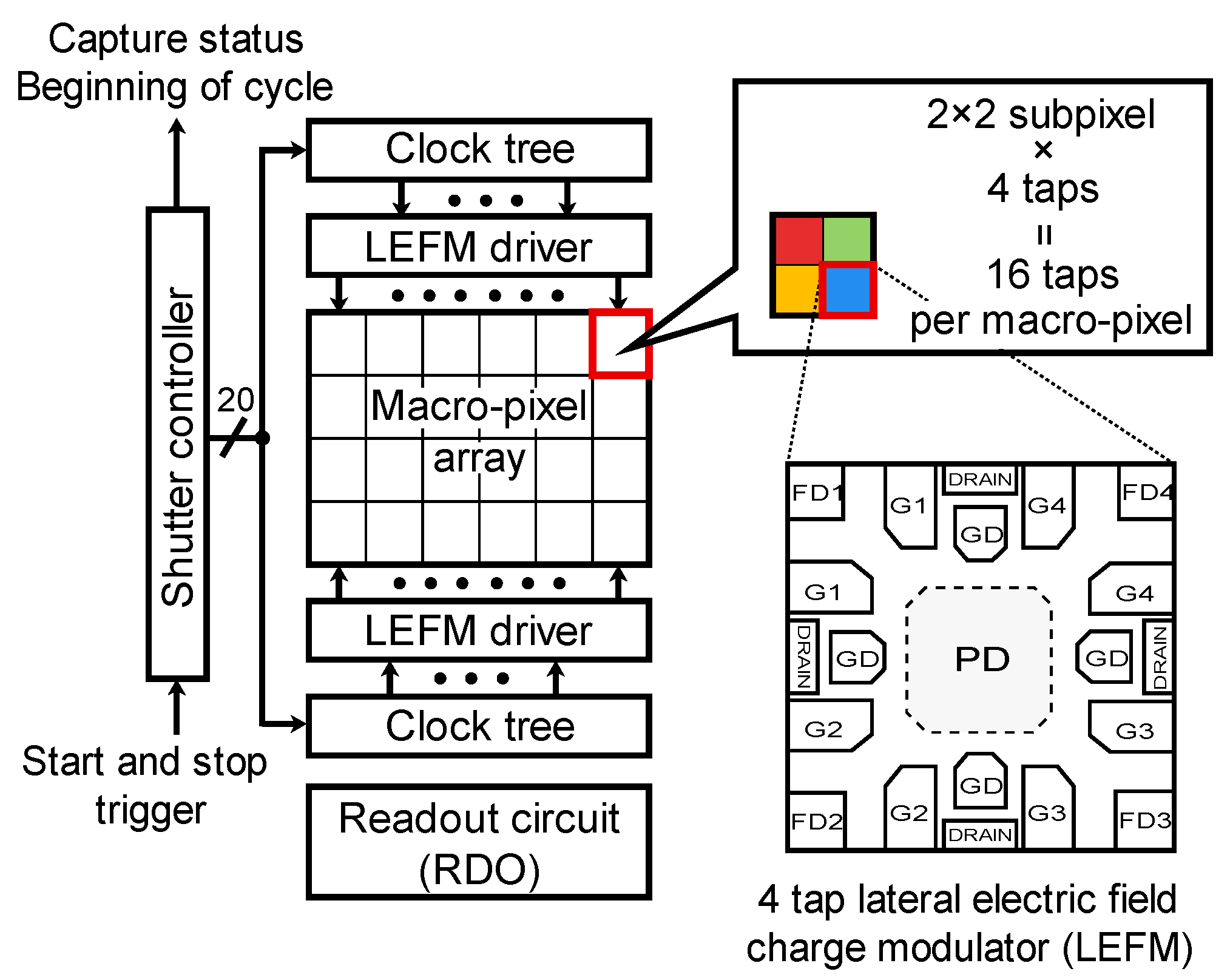

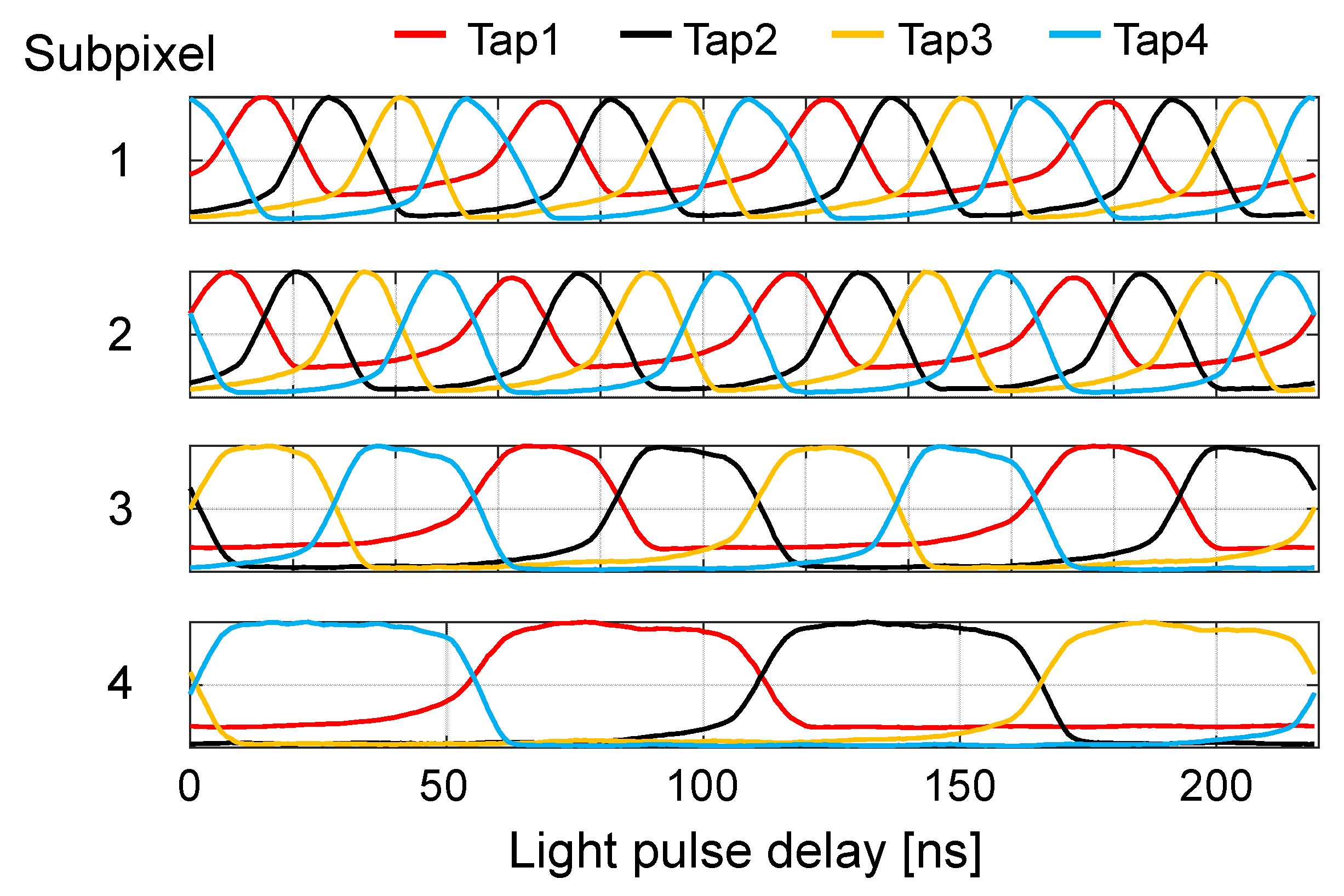

2.1. Multi-Tap Macro-Pixel Computational CMOS Image Sensor

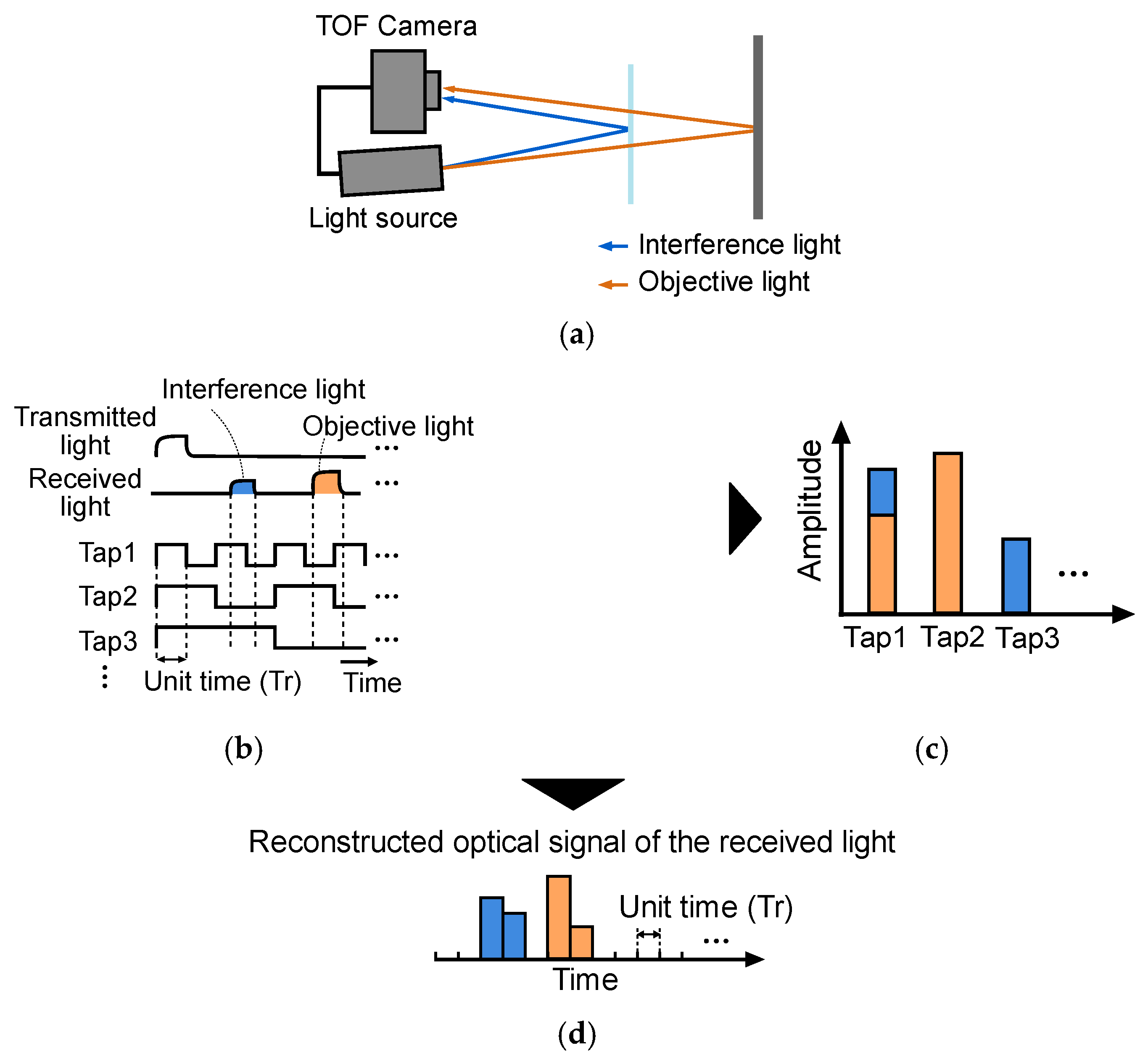
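As a rough illustration of the macro-pixel idea, the Python sketch below builds exposure (shutter) patterns for one macro-pixel and computes its noise-free tap outputs. The counts follow the sensor specification table (2 × 2 subpixels with 4 taps each, so 16 measurements per macro-pixel), with a 32-bit shutter as one example length. Everything else is an assumption made for illustration:

- The patterns are random, not the ones selected in Section 2.3.
- All four subpixels see the same temporal photon profile.
- In each time window, each subpixel routes its charge to at most one tap or to a drain.

The function names are hypothetical.

```python
import random

# Counts from the sensor specification table; NUM_BITS is one example shutter length.
SUBPIXELS = 4            # 2 x 2 subpixels per macro-pixel
TAPS_PER_SUBPIXEL = 4
NUM_BITS = 32            # shutter length in bits (one bit per time window)


def random_shutter_patterns(seed=0):
    """Hypothetical random patterns: returns 16 rows (one per tap) of NUM_BITS bits.

    Assumption: in every time window each subpixel sends its charge to exactly
    one of its 4 taps or to a drain (-1), so taps of one subpixel never overlap.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(SUBPIXELS):
        # owner[t] in {-1, 0, 1, 2, 3}: -1 means drained, otherwise the tap index
        owner = [rng.randrange(-1, TAPS_PER_SUBPIXEL) for _ in range(NUM_BITS)]
        for tap in range(TAPS_PER_SUBPIXEL):
            rows.append([1 if o == tap else 0 for o in owner])
    return rows


def measure(rows, profile):
    """Noise-free tap values: y[k] = sum over windows t of rows[k][t] * profile[t]."""
    return [sum(s * x for s, x in zip(row, profile)) for row in rows]


if __name__ == "__main__":
    S = random_shutter_patterns(seed=1)
    x = [0.0] * NUM_BITS
    x[5], x[12] = 100.0, 30.0   # hypothetical direct and interfering returns (electrons)
    y = measure(S, x)
    print(len(y), "tap values for", NUM_BITS, "unknown time windows")
```

With 16 tap values and 32 unknown time windows, the linear system is underdetermined. Recovering the profile therefore relies on the profile being sparse (one return per path), which is the compressive-sensing premise behind temporally compressive ToF.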

2.2. Modeling of Multi-Path Interference in ToF

2.3. Selection of Exposure Patterns

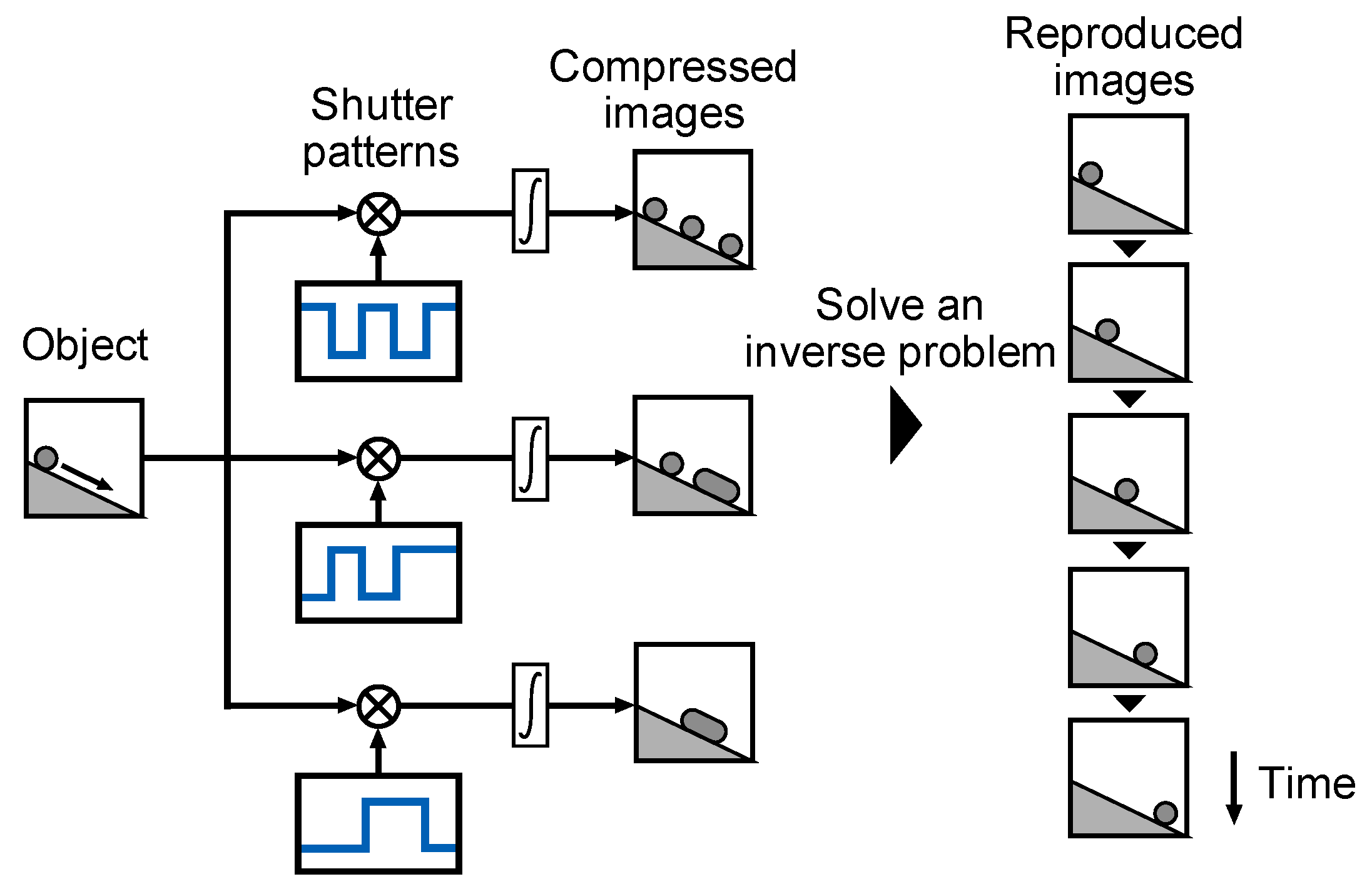

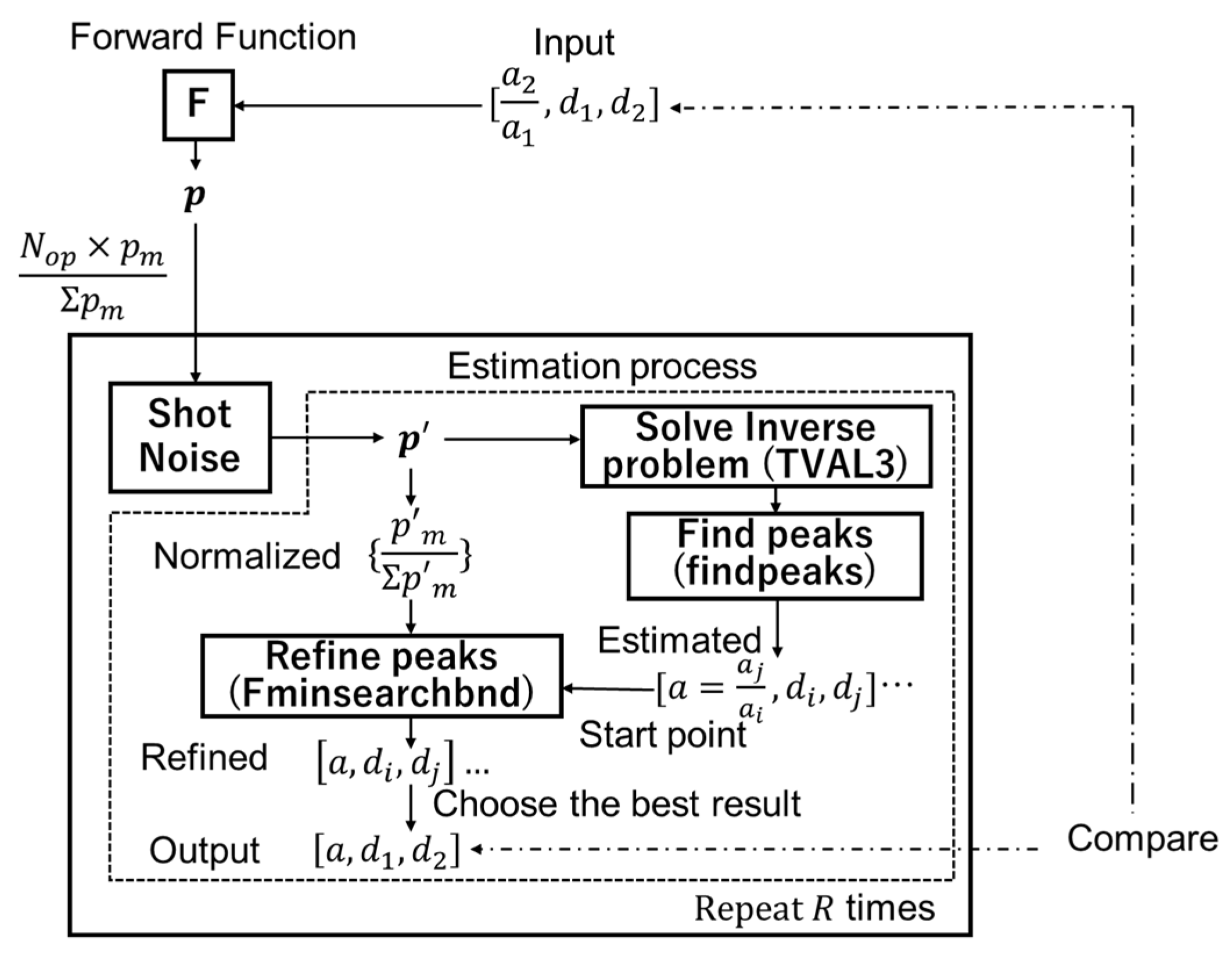

2.4. Solving the Inverse Problem and Depth Refinement

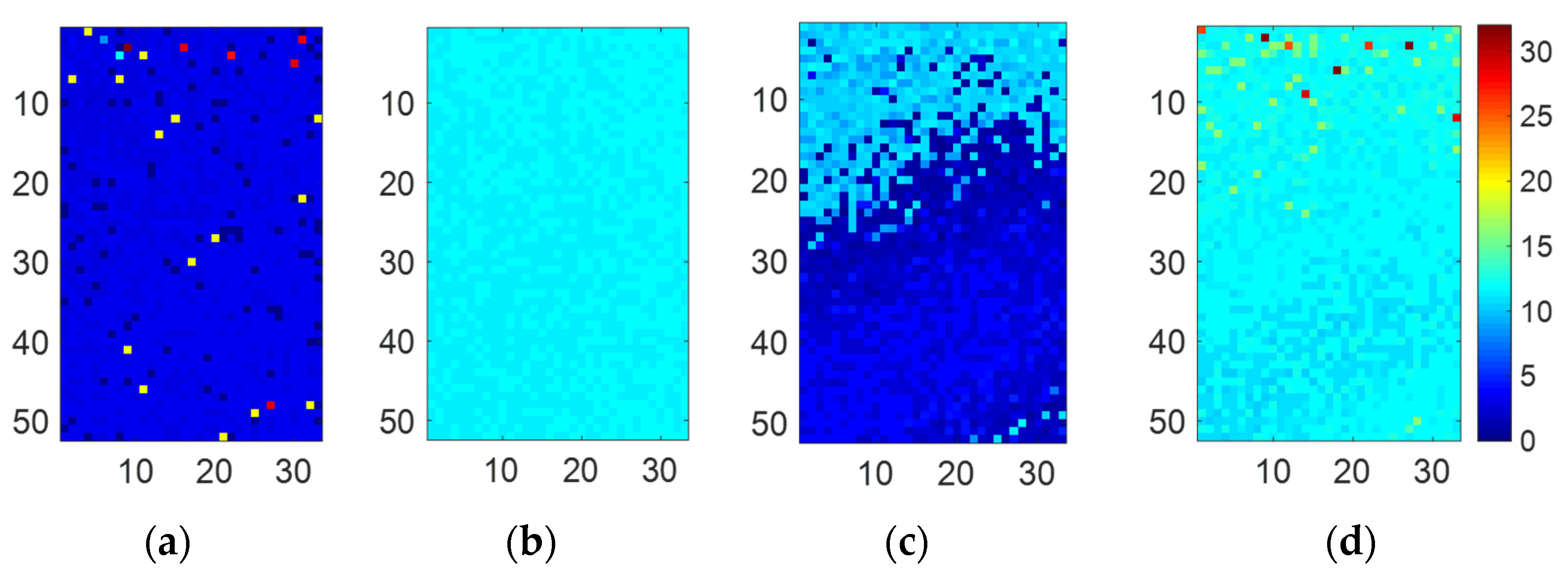
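A deliberately simple solver can illustrate the inverse problem for the dual-path case only. This sketch is not the authors' method. It substitutes an exhaustive search over pairs of time windows, with closed-form least-squares amplitudes, for whatever solver the paper uses. It also assumes:

- noise-free measurements;
- returns that fall on whole time windows (no sub-window depth refinement);
- a hypothetical deterministic exposure code, `coded_patterns`.

The code gives every window a distinct 16-entry column, so two returns with different amplitudes are identifiable.

```python
import itertools

NUM_BITS = 32              # time windows (shutter bits)
SUBPIXELS, TAPS = 4, 4     # 2 x 2 subpixels x 4 taps = 16 measurements


def coded_patterns():
    """Hypothetical code: in subpixel s, window t goes to the tap given by
    base-4 digit s of (7*t + 3). Codes are distinct for t < 32, so every
    window has a unique column, and each column holds exactly four 1s."""
    rows = [[0] * NUM_BITS for _ in range(SUBPIXELS * TAPS)]
    for t in range(NUM_BITS):
        code = (7 * t + 3) % 256
        for s in range(SUBPIXELS):
            tap = (code // 4 ** s) % 4
            rows[s * TAPS + tap][t] = 1
    return rows


def measure(S, profile):
    """Noise-free tap values y = S @ profile."""
    return [sum(a * b for a, b in zip(row, profile)) for row in S]


def fit_two_paths(S, y):
    """Search all window pairs (k1 < k2) and solve the 2x2 normal equations
    for the amplitudes; return (residual, k1, k2, a1, a2) of the best fit."""
    cols = [list(c) for c in zip(*S)]          # column t of S
    best = None
    for k1, k2 in itertools.combinations(range(NUM_BITS), 2):
        c1, c2 = cols[k1], cols[k2]
        g11 = sum(v * v for v in c1)
        g22 = sum(v * v for v in c2)
        g12 = sum(u * v for u, v in zip(c1, c2))
        b1 = sum(u * v for u, v in zip(c1, y))
        b2 = sum(u * v for u, v in zip(c2, y))
        det = g11 * g22 - g12 * g12
        if det == 0:
            continue                            # columns not independent
        a1 = (g22 * b1 - g12 * b2) / det        # Cramer's rule
        a2 = (g11 * b2 - g12 * b1) / det
        if a1 < 0 or a2 < 0:
            continue                            # reflected intensities are non-negative
        res = sum((yi - a1 * u - a2 * v) ** 2 for yi, u, v in zip(y, c1, c2))
        if best is None or res < best[0]:
            best = (res, k1, k2, a1, a2)
    return best


if __name__ == "__main__":
    S = coded_patterns()
    x = [0.0] * NUM_BITS
    x[4], x[11] = 100.0, 30.0   # hypothetical objective and interference returns
    res, k1, k2, a1, a2 = fit_two_paths(S, measure(S, x))
    print(k1, k2, a1, a2, res)
```

For 32 windows, the exhaustive search evaluates 496 pairs, which is cheap per macro-pixel. The cost grows quadratically with shutter length, however, and the search does not model noise or sub-window delays. A sparse-recovery solver followed by a continuous refinement step avoids both limitations.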

3. Simulation

3.1. Simulation Method

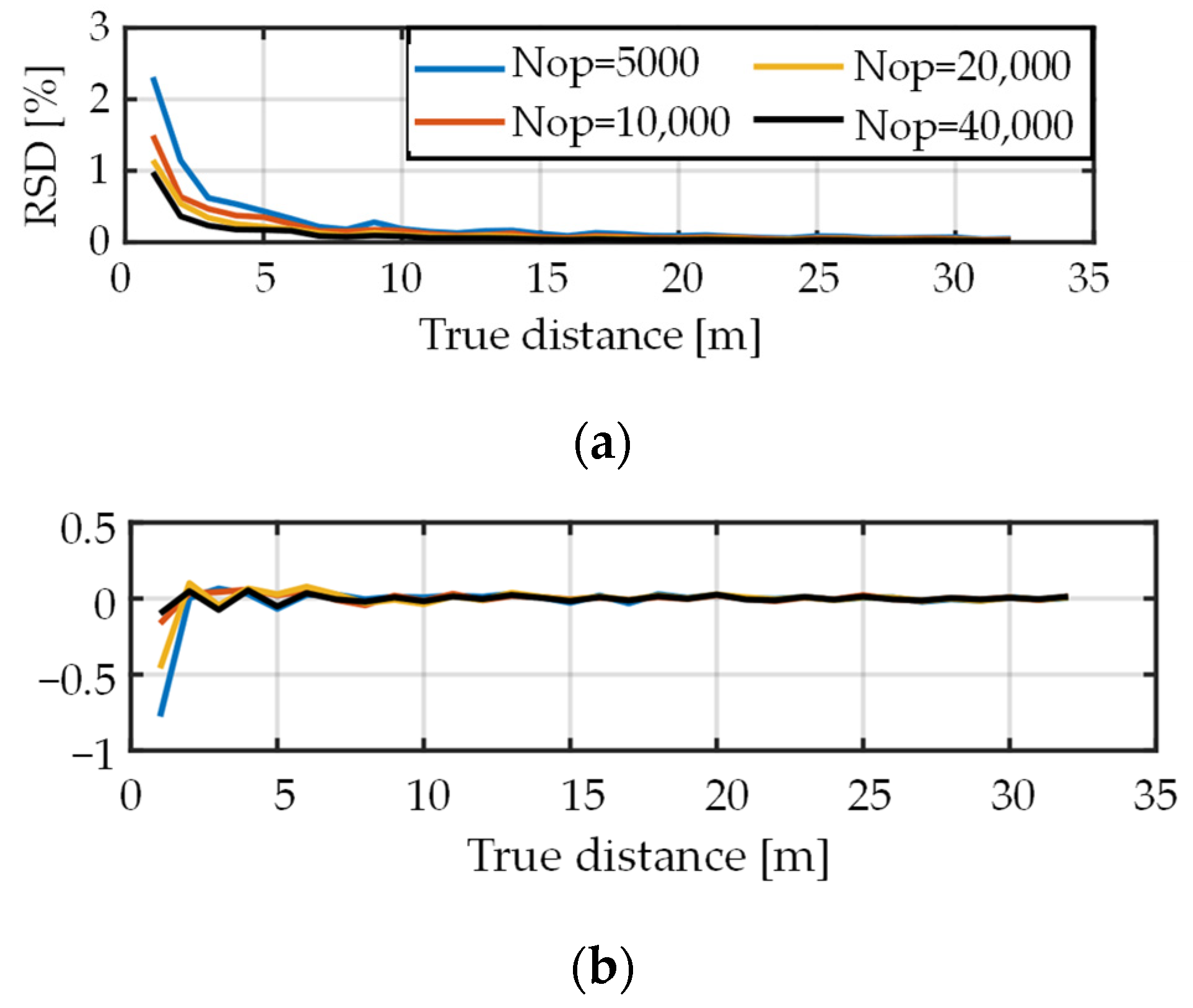

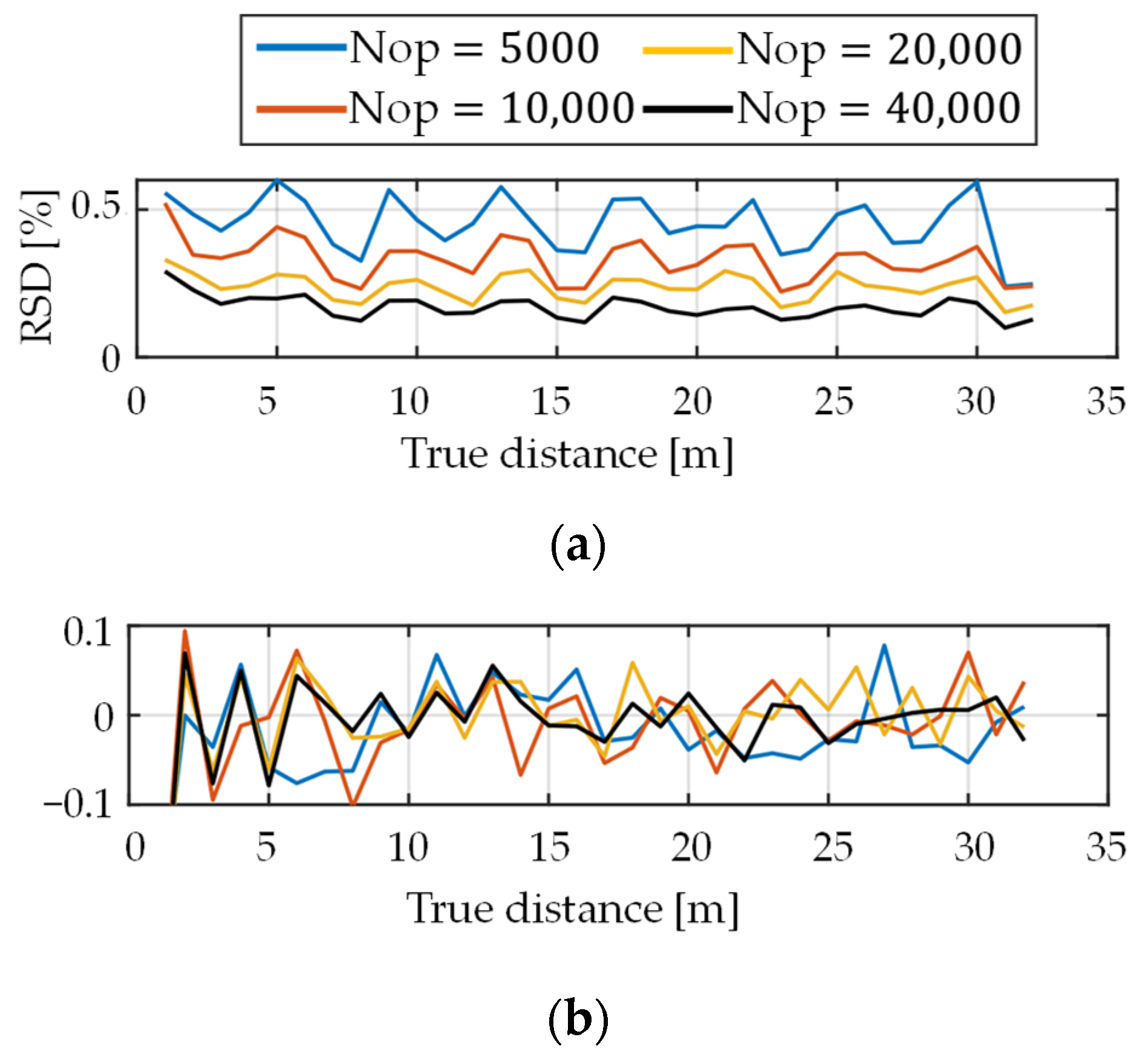

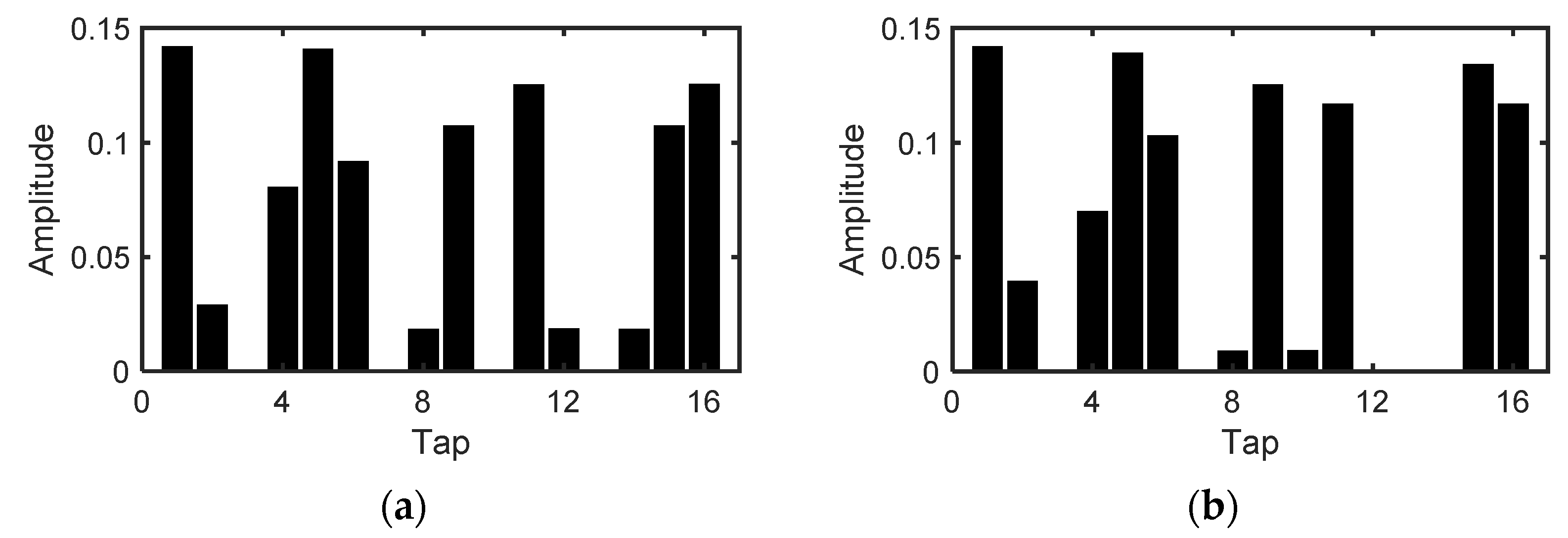

3.2. Single Path

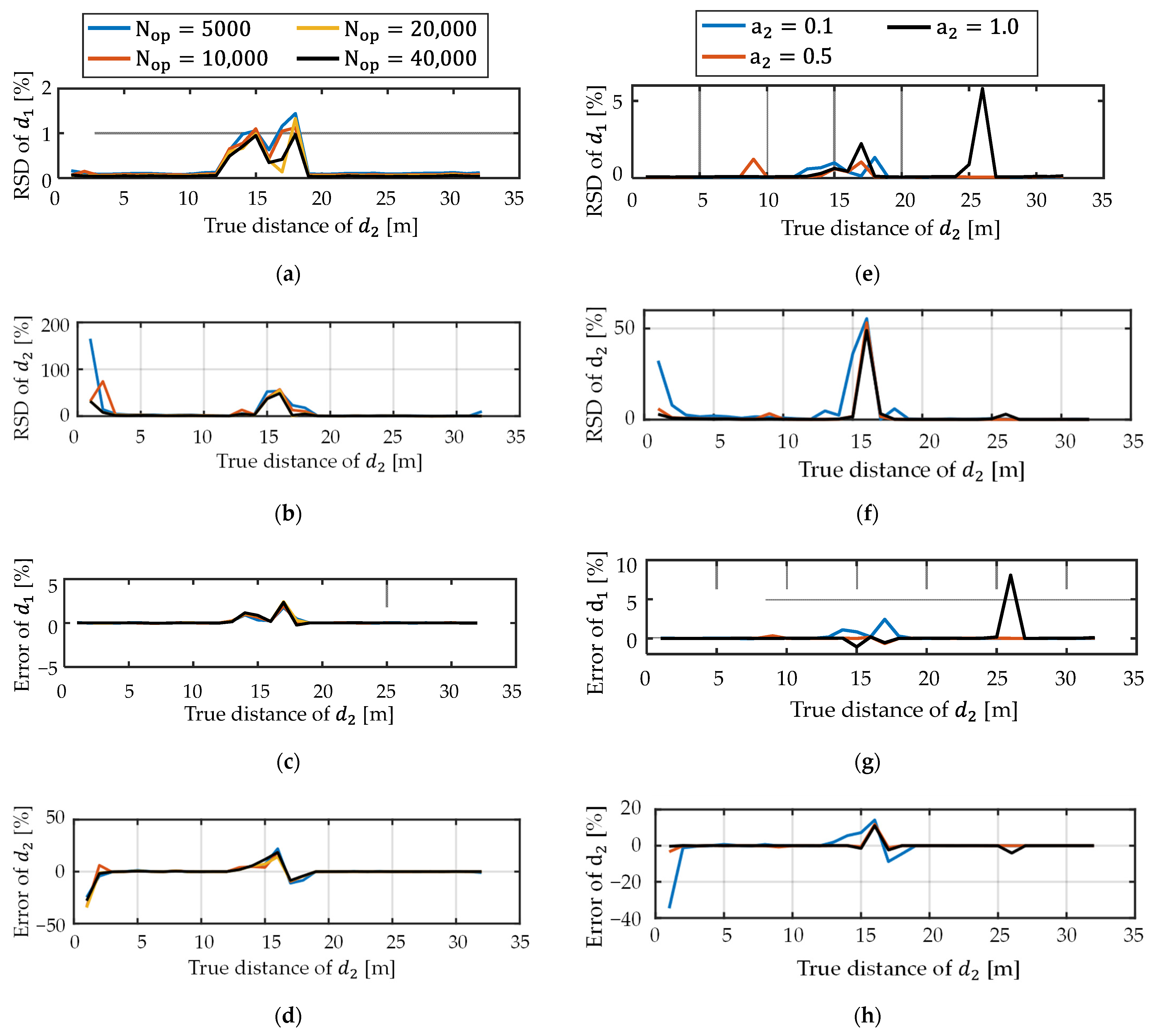

3.3. Dual-Path

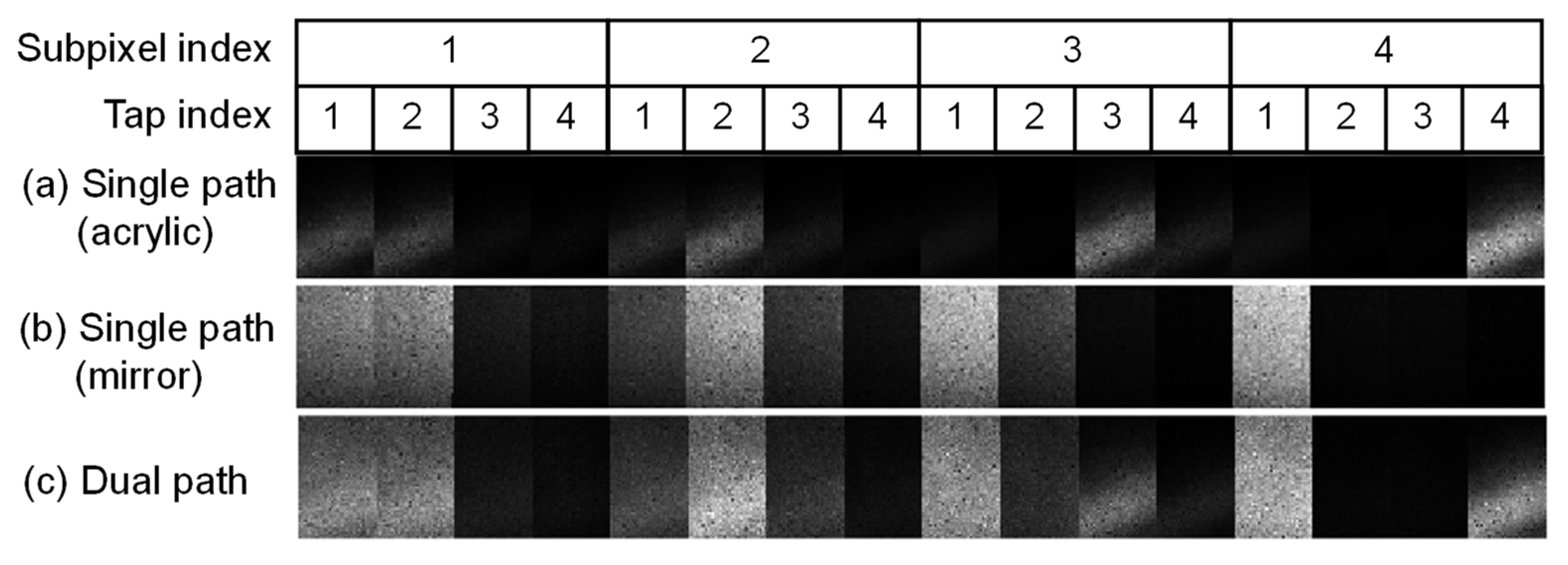

4. Experiment Using a Multi-Tap Macro-Pixel Computational CMOS Image Sensor

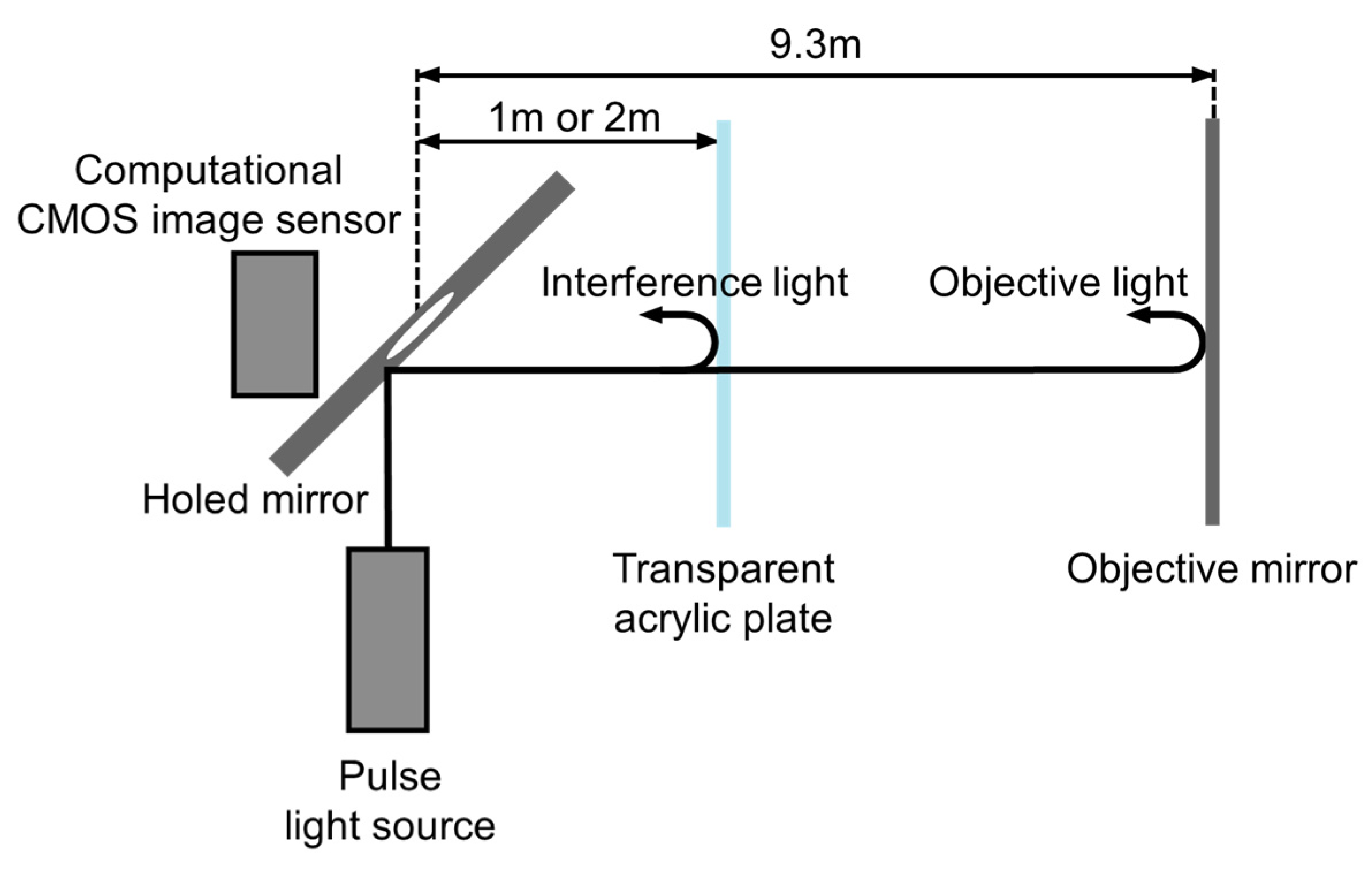

4.1. Experimental System

4.2. Measured and Processed Results

5. Limitations

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| | dToF | iToF |
|---|---|---|
| Detector | SPAD | Charge modulator |
| Pixel size | Relatively large | Small |
| Pixel readout circuits | Time-to-digital converter and histogram builder | The same as ordinary CMOS image sensors (pixel source followers, column correlated double sampling circuits, and analog-to-digital converters) |
| Immunity to multi-path interference | Good | No |
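The table's last row can be made concrete with the textbook phasor model of a continuous-wave iToF pixel. The sketch assumes sinusoidal (continuous-wave) modulation. The multi-tap sensor in this paper uses pulsed exposure windows instead, so the sketch only illustrates why a single phase measurement mixes two returns.

In this model, each path contributes a phasor whose angle is proportional to its depth, and the pixel observes only their sum. The reported depth therefore falls between the two true depths instead of resolving both. A dToF histogram with enough timing resolution would instead show two separate peaks. The function name is hypothetical.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def measured_depth(paths, f_mod):
    """Depth reported by an idealized continuous-wave iToF pixel.

    paths: list of (amplitude, equivalent_depth_m), where the equivalent depth
    is half the round-trip path length. Each return adds the phasor
    a * exp(j * 4*pi*f_mod*d / c); the pixel only sees their sum.
    """
    total = sum(a * cmath.exp(1j * 4 * math.pi * f_mod * d / C) for a, d in paths)
    phase = cmath.phase(total) % (2 * math.pi)   # wrap into [0, 2*pi)
    return phase * C / (4 * math.pi * f_mod)


if __name__ == "__main__":
    f = 10e6  # hypothetical 10 MHz modulation (unambiguous range about 15 m)
    print("single path:", measured_depth([(1.0, 2.0)], f))
    print("two paths:  ", measured_depth([(1.0, 2.0), (0.5, 3.0)], f))
```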

| Parameter | Value |
|---|---|
| Technology | 0.11 µm CMOS image sensor process |
| Chip size | 7.0 mm × 9.3 mm |
| Valid subpixels | 134 × 110 |
| Subpixel pitch | 22.4 µm × 22.4 µm |
| Subpixel count per macro-pixel | 2 × 2 |
| Tap count per subpixel | 4 |
| Shutter length per tap | 8 to 256 bits in 8-bit steps |
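The specification table implies a few derived quantities that help when reading the later results. The arithmetic below rests on two assumptions, neither stated in the table: macro-pixels tile the valid subpixel array without overlap, and the table lists the column count first. If each macro-pixel is treated as one depth pixel, the depth map would be 67 × 55 samples, each reconstructed from 16 tap values.

```python
# Values from the sensor specification table.
SUB_COLS, SUB_ROWS = 134, 110     # valid subpixels (orientation assumed)
SUBPIXEL_PITCH_UM = 22.4
MACRO = 2                         # 2 x 2 subpixels per macro-pixel
TAPS_PER_SUBPIXEL = 4

# Assumption: macro-pixels tile the subpixel array without overlap.
macro_cols, macro_rows = SUB_COLS // MACRO, SUB_ROWS // MACRO
macro_pitch_um = MACRO * SUBPIXEL_PITCH_UM
taps_per_macro = MACRO * MACRO * TAPS_PER_SUBPIXEL

# Allowed shutter lengths: 8 to 256 bits in 8-bit steps.
shutter_lengths = list(range(8, 257, 8))

if __name__ == "__main__":
    print(macro_cols, "x", macro_rows, "macro-pixels at", macro_pitch_um, "um pitch")
    print(taps_per_macro, "taps per macro-pixel;", len(shutter_lengths), "shutter lengths")
```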

| | Type A (Variable Total Photons) | Type B (Variable Interference Reflection Amplitude) |
|---|---|---|
| Shutter length | 32 bits | 32 bits |
| Minimal time window duration | 13.7 ns | 13.7 ns |
| Light source pulse width | 13.7 ns | 13.7 ns |
| Total number of taps per macro-pixel | 16 | 16 |
| Total number of electrons (N_op) | 5000, 10,000, 20,000, 40,000 | 20,000 |
| Amplitude (objective) | | |
| Amplitude (interference) | | |
| Depth (objective) | | |
| Depth (interference) | | |
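The simulation conditions above can be translated into distances with the round-trip relation, depth = c·t/2. The sketch rests on three assumptions:

- The 32 windows are contiguous and start at zero delay.
- Both the pulse and the windows are rectangular.
- Any sensor-specific timing offsets can be ignored.

`window_split` and `depth_from_split` are hypothetical helpers. Because the pulse width equals the window width, a return generally straddles two adjacent windows, and the ratio between those two windows locates the delay within a window.

```python
import math

C = 299_792_458.0      # speed of light in vacuum, m/s
WINDOW_S = 13.7e-9     # minimal time window duration (simulation conditions)
SHUTTER_BITS = 32      # shutter length in bits (one bit = one window)

# Round-trip relation: a return delayed by t comes from depth c * t / 2.
depth_per_window_m = C * WINDOW_S / 2
total_window_s = SHUTTER_BITS * WINDOW_S
max_depth_m = C * total_window_s / 2    # assumes windows start at zero delay


def window_split(depth_m):
    """Fractions of a rectangular pulse (width = one window) collected by
    window k and window k + 1; returns (k, fraction_in_k, fraction_in_k_plus_1)."""
    pos = (2 * depth_m / C) / WINDOW_S  # delay measured in windows
    k = math.floor(pos)
    frac = pos - k
    return k, 1.0 - frac, frac


def depth_from_split(k, frac_next):
    """Invert window_split: window index plus the fraction in the next window."""
    return (k + frac_next) * depth_per_window_m


if __name__ == "__main__":
    print(f"{depth_per_window_m:.4f} m per window, {total_window_s * 1e9:.1f} ns total, "
          f"{max_depth_m:.2f} m max depth")
    k, f0, f1 = window_split(5.0)       # hypothetical target at 5 m
    print(k, round(f0, 3), round(f1, 3), depth_from_split(k, f1))
```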

| | Acrylic Plate at 1 m | Acrylic Plate at 2 m | Mirror at 9.3 m |
|---|---|---|---|
| Single path | −8.5172 | −7.2929 | 0 |
| Dual path | −8.3404 | −7.3693 | −0.1826 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Horio, M.; Feng, Y.; Kokado, T.; Takasawa, T.; Yasutomi, K.; Kawahito, S.; Komuro, T.; Nagahara, H.; Kagawa, K. Resolving Multi-Path Interference in Compressive Time-of-Flight Depth Imaging with a Multi-Tap Macro-Pixel Computational CMOS Image Sensor. Sensors 2022, 22, 2442. https://doi.org/10.3390/s22072442
