On-Machine Detection of Sub-Microscale Defects in Diamond Tool Grinding during the Manufacturing Process Based on DToolnet

Abstract

1. Introduction

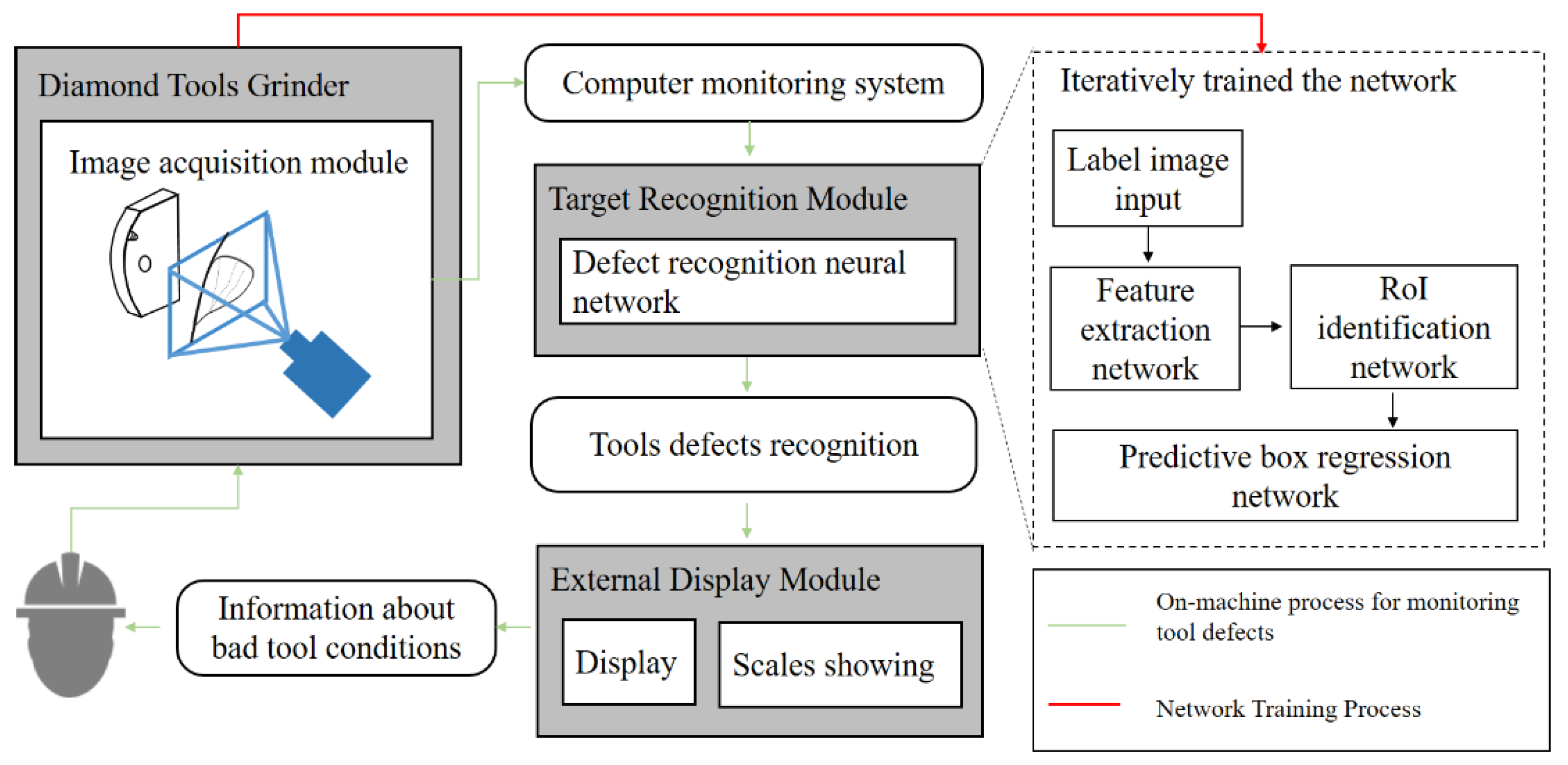

2. Structure and Principle of the DToolnet

2.1. An Overview of DToolnet

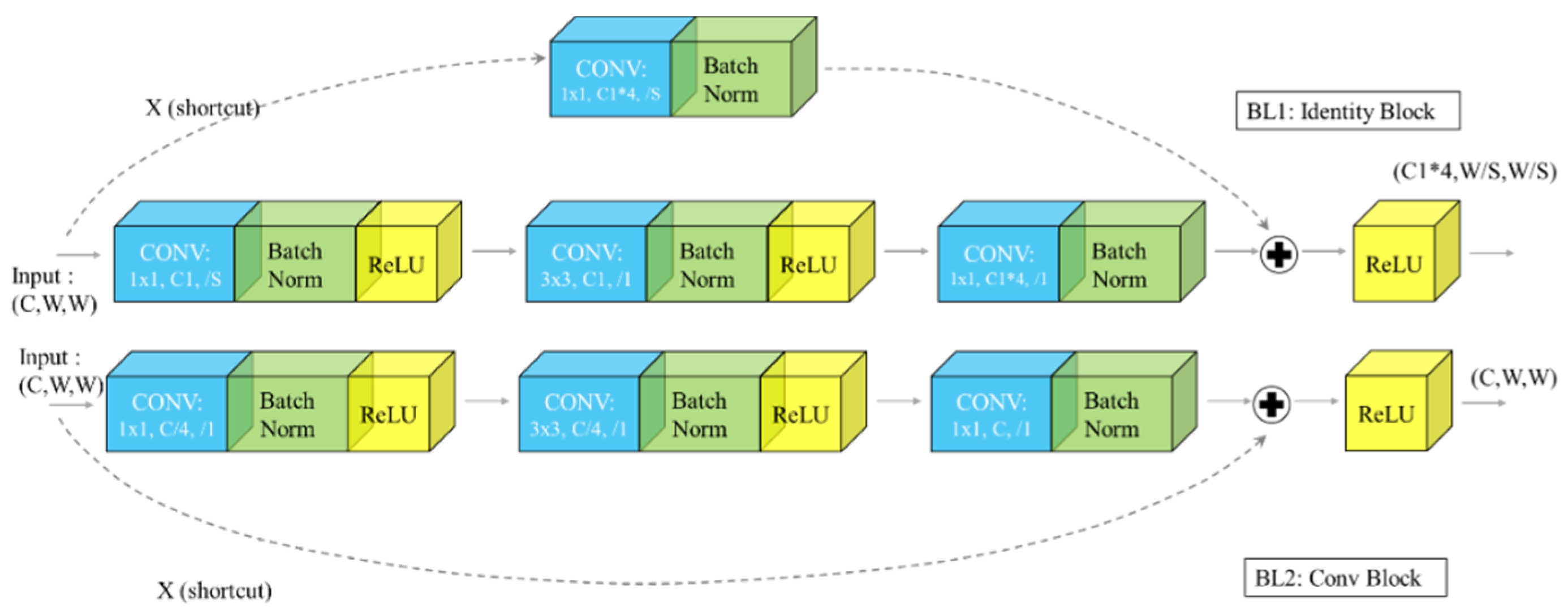

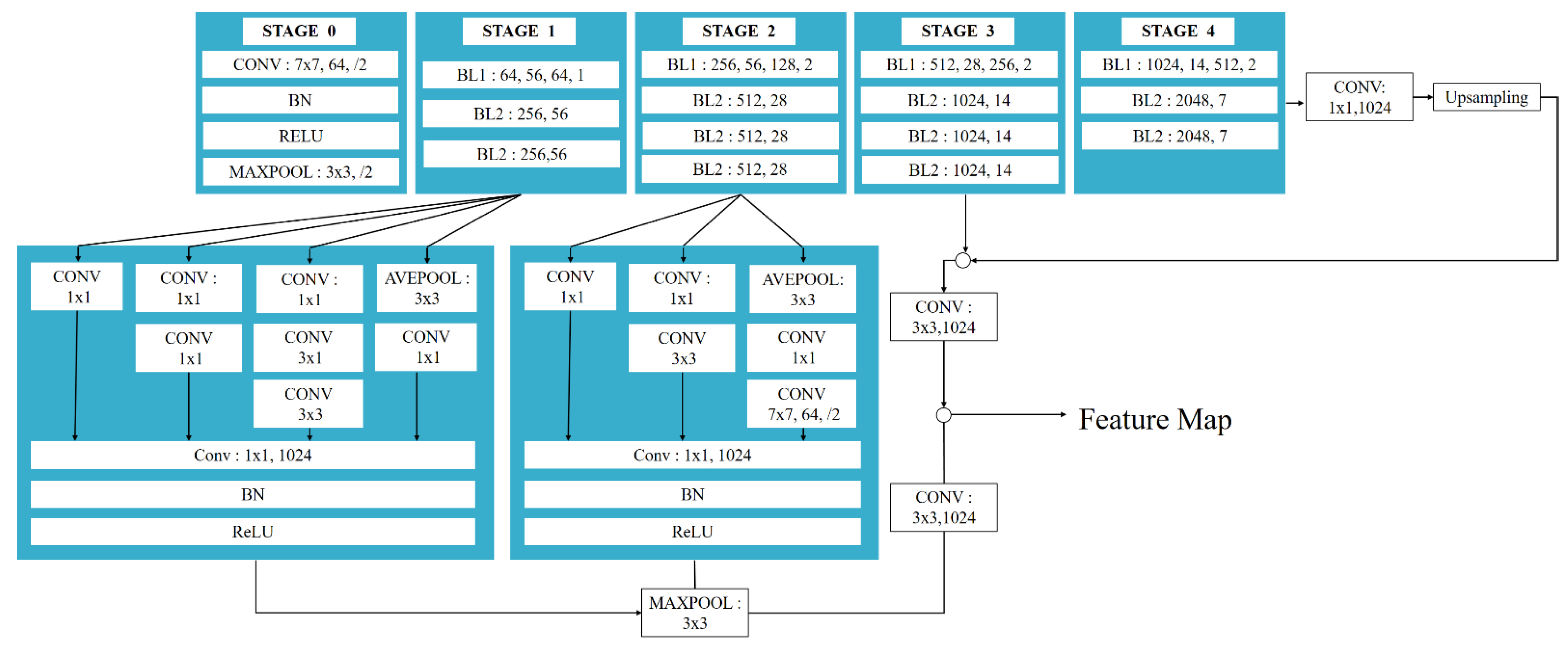

2.2. The DToolnet Feature Extraction Network

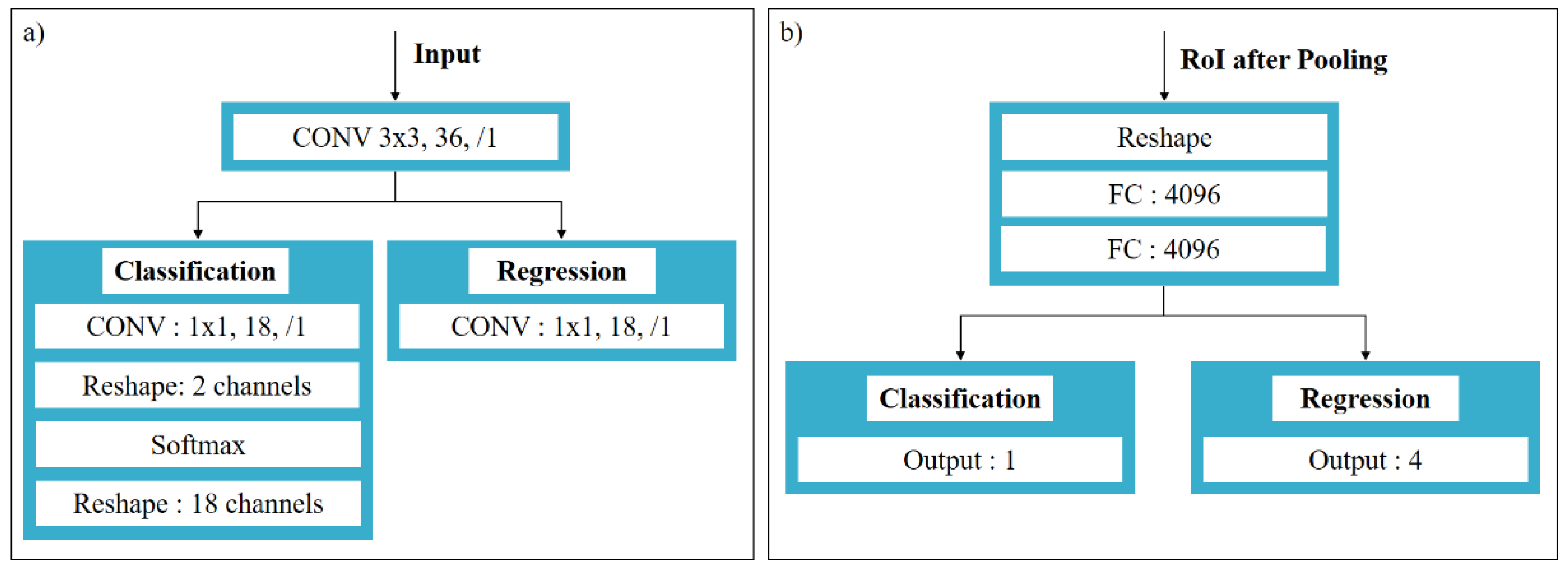
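
The feature extraction network produces the shared feature map that the RPN and ROI pooling modules work on. The sketch below estimates that map's size for a full camera frame. It assumes a VGG16-style backbone (VGG16 is among the networks cited in the references) whose last shared layer, conv5_3, follows four 2×2 max-pooling layers, as in the original Faster R-CNN. It also assumes the 2448 × 2048 px frame is fed without resizing, which is not confirmed as DToolnet's preprocessing. The helper name `feature_map_size` is hypothetical.

```python
from typing import Tuple


def feature_map_size(height: int, width: int, num_pools: int = 4) -> Tuple[int, int]:
    """Spatial size of a VGG16-style feature map after `num_pools` pooling layers.

    Assumption: 3x3 convolutions with padding 1 keep the spatial size, and each
    2x2 / stride-2 max-pool halves it with floor rounding, so conv5_3 has stride 16.
    """
    h, w = height, width
    for _ in range(num_pools):
        h, w = h // 2, w // 2
    return h, w


# Full-resolution frame of the camera listed in the hardware table (height, width).
print(feature_map_size(2048, 2448))
```

Under these assumptions, each feature-map cell corresponds to a 16 × 16 px patch of the input image.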

2.3. RPN Module
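
The RPN slides a small network over the shared feature map. At every position it scores a fixed set of reference boxes (anchors) as object or background and regresses offsets for them. The sketch below generates those anchors using the defaults of the original Faster R-CNN paper: stride 16, scales of 128, 256 and 512 px, and aspect ratios 0.5, 1 and 2. These values are assumptions, and DToolnet may use different anchors for sub-microscale defects. `generate_anchors` is a hypothetical helper, not part of any library.

```python
import numpy as np


def generate_anchors(feat_h, feat_w, stride=16,
                     scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return (feat_h * feat_w * len(ratios) * len(scales), 4) anchors as (x1, y1, x2, y2).

    Hypothetical helper; the defaults are the original Faster R-CNN settings,
    not confirmed DToolnet parameters.
    """
    base = []
    for r in ratios:        # r = height / width
        for s in scales:    # s = side of a square with the same area, in input pixels
            w = s / np.sqrt(r)
            h = s * np.sqrt(r)
            base.append([-w / 2, -h / 2, w / 2, h / 2])
    base = np.array(base)   # (A, 4) anchors centred at the origin

    # Centre of every feature-map cell, mapped back to input-image pixels.
    cx = (np.arange(feat_w) + 0.5) * stride
    cy = (np.arange(feat_h) + 0.5) * stride
    cx, cy = np.meshgrid(cx, cy)
    shifts = np.stack([cx.ravel(), cy.ravel(), cx.ravel(), cy.ravel()], axis=1)  # (K, 4)

    # Place every base anchor at every cell centre: (K, A, 4) -> (K * A, 4).
    return (shifts[:, None, :] + base[None, :, :]).reshape(-1, 4)


anchors = generate_anchors(128, 153)
print(anchors.shape)  # (176256, 4)
```

A 128 × 153 feature map yields 128 × 153 × 9 = 176,256 anchors. In Faster R-CNN, only a small subset of them, sampled by their IoU with the ground truth, is used in each training step of the RPN.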

2.4. The ROI Pooling Module
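
The ROI pooling module converts each region proposal, whatever its size, into a fixed-size feature grid, so that the fully connected classification and regression layers can follow. The NumPy sketch below implements the classic max ROI pooling of Fast R-CNN for a single ROI: coordinates are rounded to feature-map cells, and each bin is max-pooled. Several choices here are assumptions: the 7 × 7 output and 1/16 scale (the VGG16 settings of Fast R-CNN), and the use of max ROI pooling rather than ROI Align (Mask R-CNN). `roi_max_pool` is a hypothetical name.

```python
import numpy as np


def roi_max_pool(feature, roi, output_size=(7, 7), spatial_scale=1 / 16):
    """Max-pool one ROI of a (C, H, W) feature map into a (C, out_h, out_w) grid.

    roi is (x1, y1, x2, y2) in input-image pixels; spatial_scale maps it onto
    feature-map cells (1/16 assumes a stride-16 backbone).
    """
    _, h, w = feature.shape
    out_h, out_w = output_size

    # Quantize the ROI to feature-map cells and clamp it inside the map.
    x1, y1, x2, y2 = (int(round(v * spatial_scale)) for v in roi)
    x1, y1 = min(max(x1, 0), w - 1), min(max(y1, 0), h - 1)
    x2, y2 = min(max(x2, x1), w - 1), min(max(y2, y1), h - 1)
    roi_w, roi_h = x2 - x1 + 1, y2 - y1 + 1

    out = np.empty((feature.shape[0], out_h, out_w), dtype=feature.dtype)
    for i in range(out_h):
        # Floor the start and ceil the end so bins are non-empty and cover the ROI.
        ys = y1 + int(np.floor(i * roi_h / out_h))
        ye = y1 + int(np.ceil((i + 1) * roi_h / out_h))
        for j in range(out_w):
            xs = x1 + int(np.floor(j * roi_w / out_w))
            xe = x1 + int(np.ceil((j + 1) * roi_w / out_w))
            out[:, i, j] = feature[:, ys:ye, xs:xe].max(axis=(1, 2))
    return out


feature = np.random.rand(256, 128, 153).astype(np.float32)
pooled = roi_max_pool(feature, (400.0, 300.0, 720.0, 560.0))
print(pooled.shape)  # (256, 7, 7)
```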

2.5. Classification and Regression Module

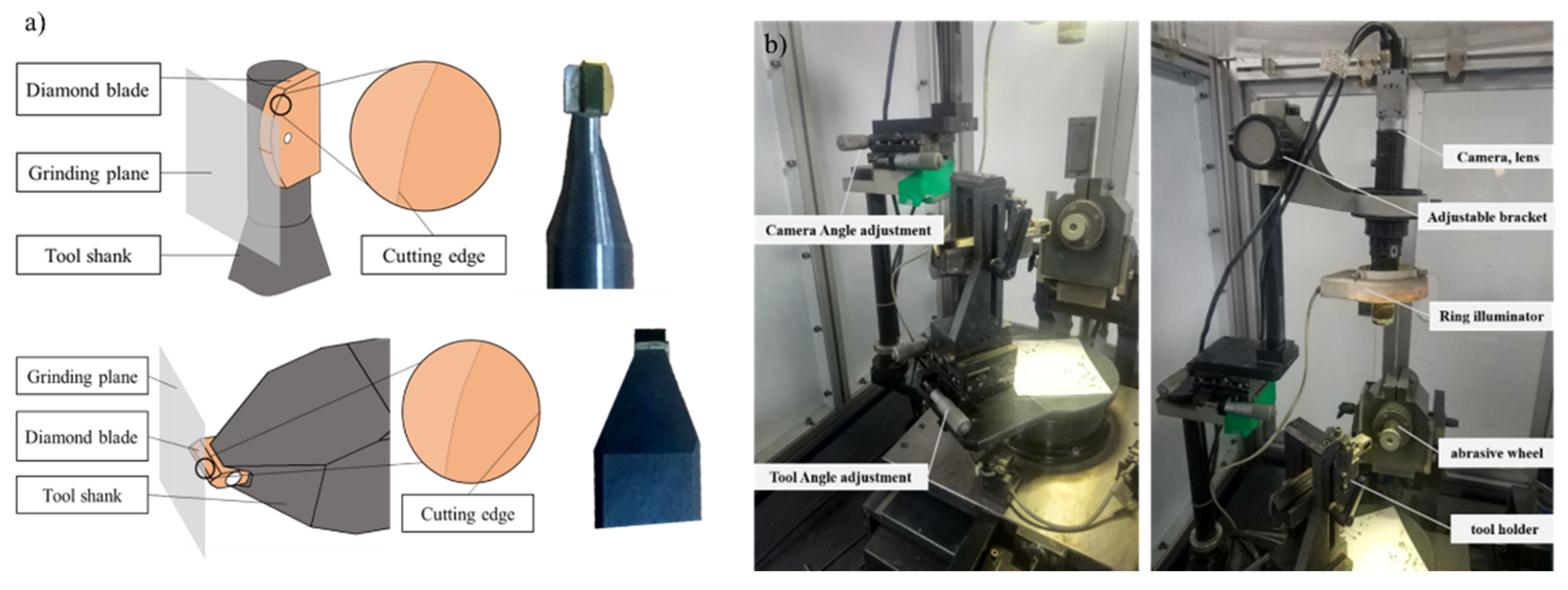
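
For each pooled ROI, the classification and regression module outputs class scores and box offsets. The sketch below shows how such outputs are usually turned into final detections in the Faster R-CNN family cited in the references. It assumes DToolnet uses the same parameterization:

- the centre shift is scaled by the proposal size;
- width and height are scaled in log space;
- class logits are normalized with a softmax.

The names `decode_boxes` and `softmax` are hypothetical helpers.

```python
import numpy as np


def decode_boxes(proposals, deltas, image_hw=None):
    """Apply (dx, dy, dw, dh) deltas to (x1, y1, x2, y2) proposals, Faster R-CNN style."""
    w = proposals[:, 2] - proposals[:, 0]
    h = proposals[:, 3] - proposals[:, 1]
    cx = proposals[:, 0] + 0.5 * w
    cy = proposals[:, 1] + 0.5 * h

    dx, dy, dw, dh = deltas.T
    pred_cx = cx + dx * w           # centre shift is relative to the box size
    pred_cy = cy + dy * h
    pred_w = w * np.exp(dw)         # width/height are scaled in log space
    pred_h = h * np.exp(dh)

    boxes = np.stack([pred_cx - 0.5 * pred_w, pred_cy - 0.5 * pred_h,
                      pred_cx + 0.5 * pred_w, pred_cy + 0.5 * pred_h], axis=1)
    if image_hw is not None:        # optionally clip boxes to the image
        img_h, img_w = image_hw
        boxes[:, [0, 2]] = boxes[:, [0, 2]].clip(0, img_w)
        boxes[:, [1, 3]] = boxes[:, [1, 3]].clip(0, img_h)
    return boxes


def softmax(logits):
    """Row-wise softmax turning class logits into probabilities."""
    z = logits - logits.max(axis=1, keepdims=True)  # subtract max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


proposals = np.array([[10.0, 20.0, 50.0, 60.0]])
deltas = np.array([[0.1, 0.0, np.log(2.0), 0.0]])
print(decode_boxes(proposals, deltas))
```

In this example the box is shifted right by 10% of its width and doubled in width, giving approximately (-6, 20, 74, 60).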

3. Experimental Setup

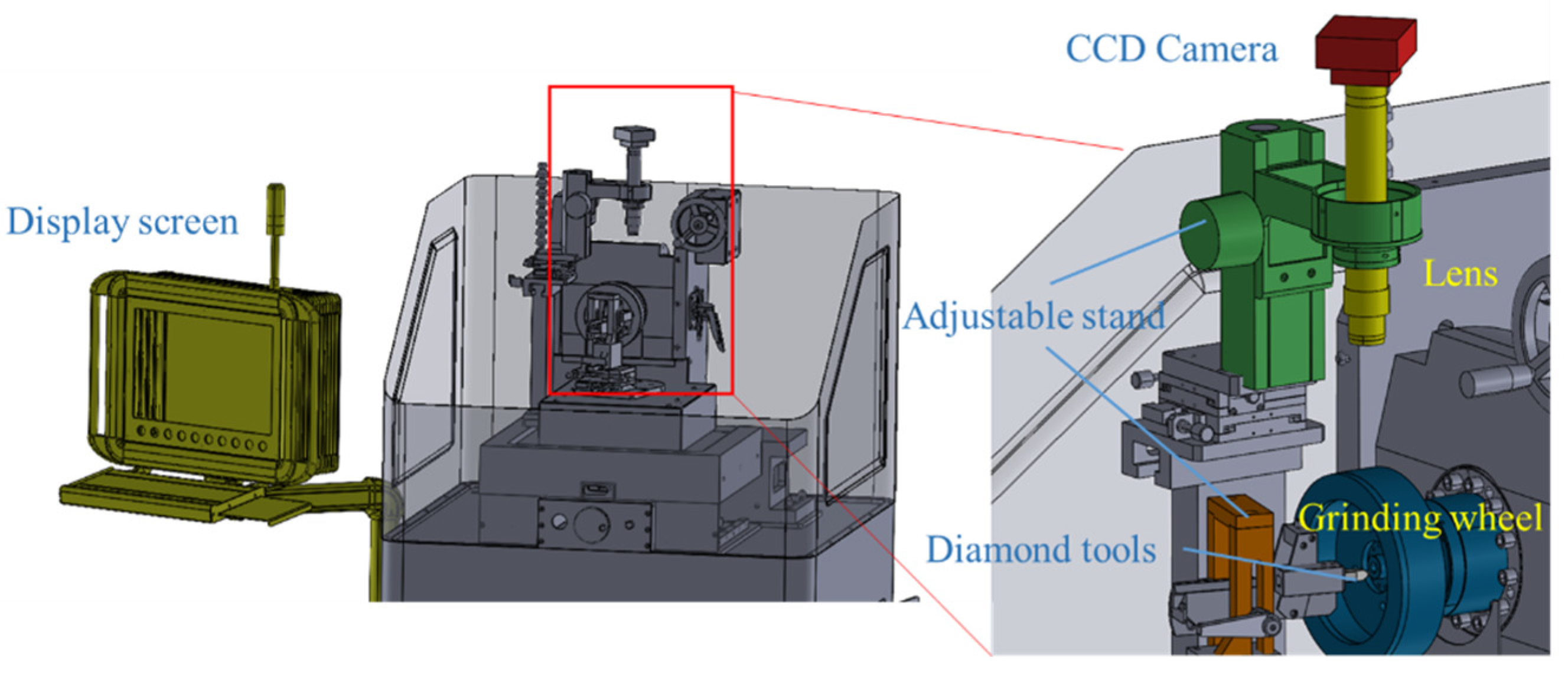

3.1. Hardware and Software

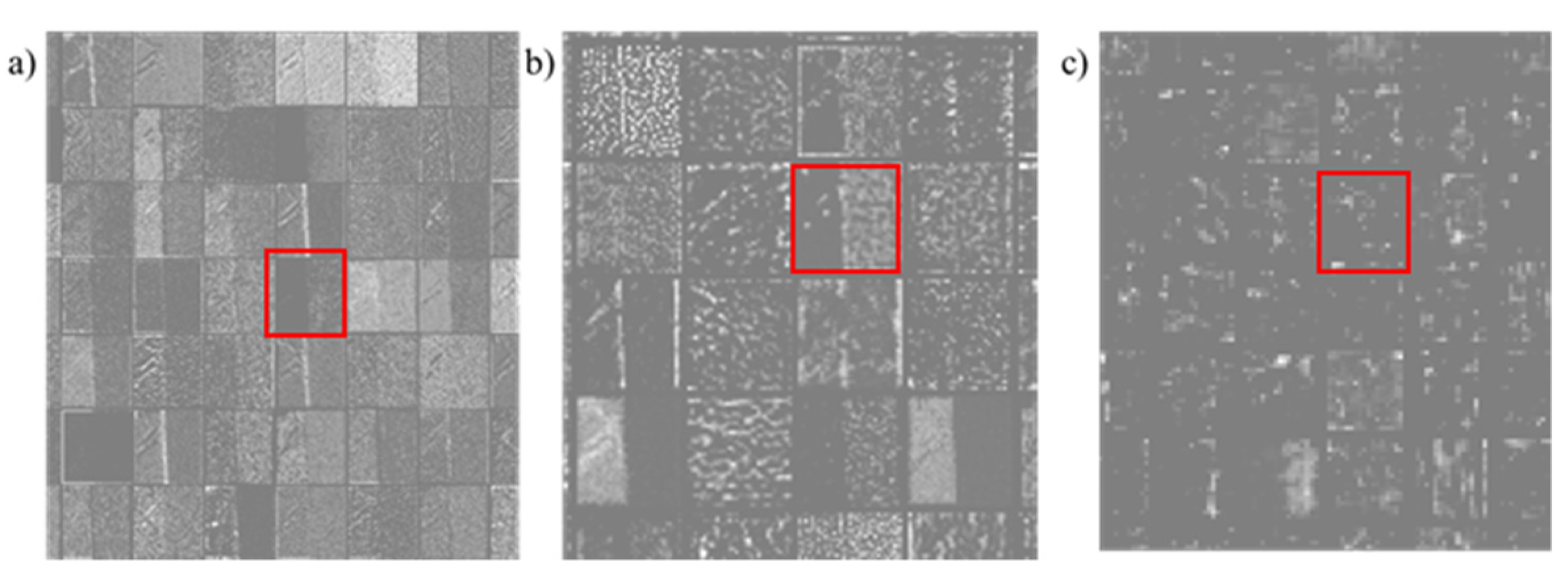

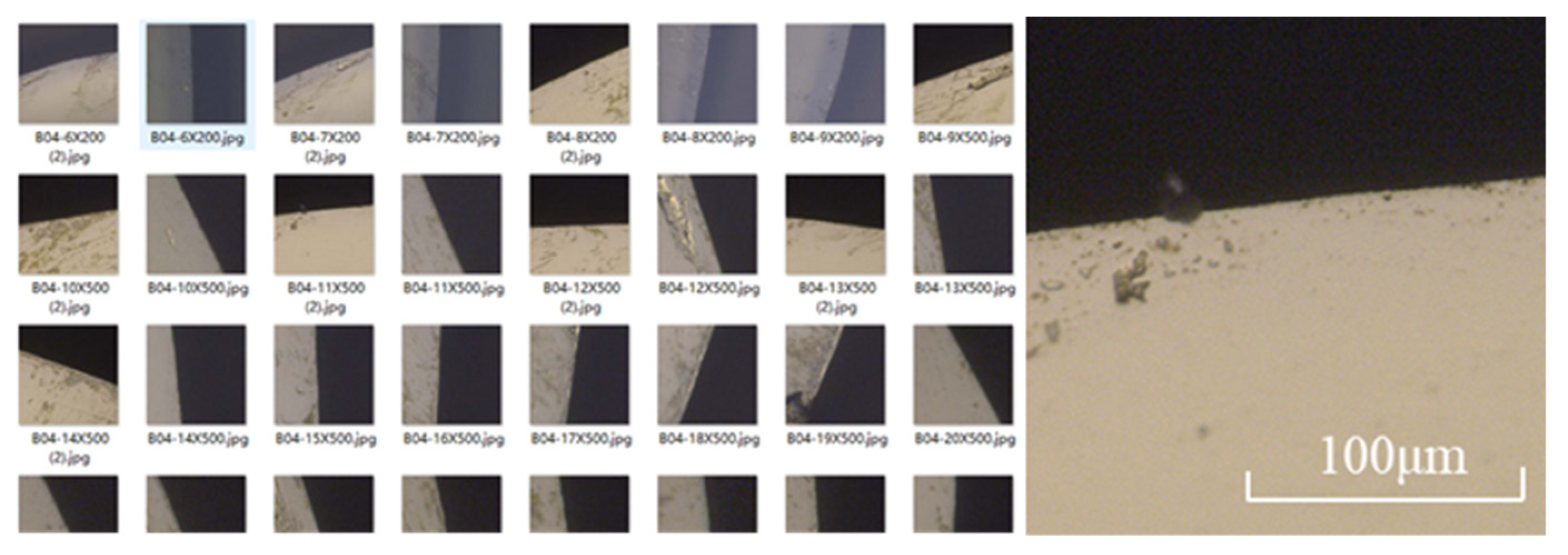

3.2. Database

3.3. Experimental Material and Platform

4. Results and Discussion

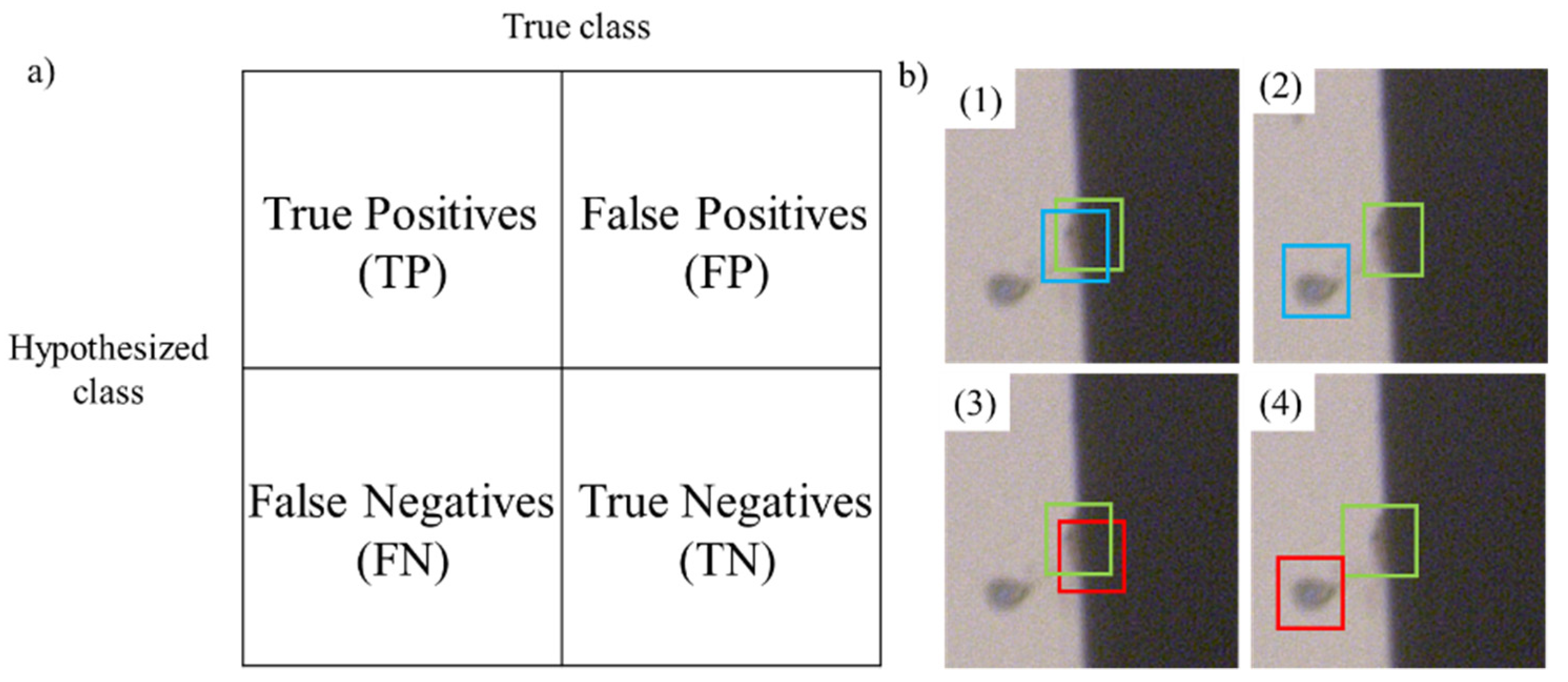

4.1. Evaluation Metrics

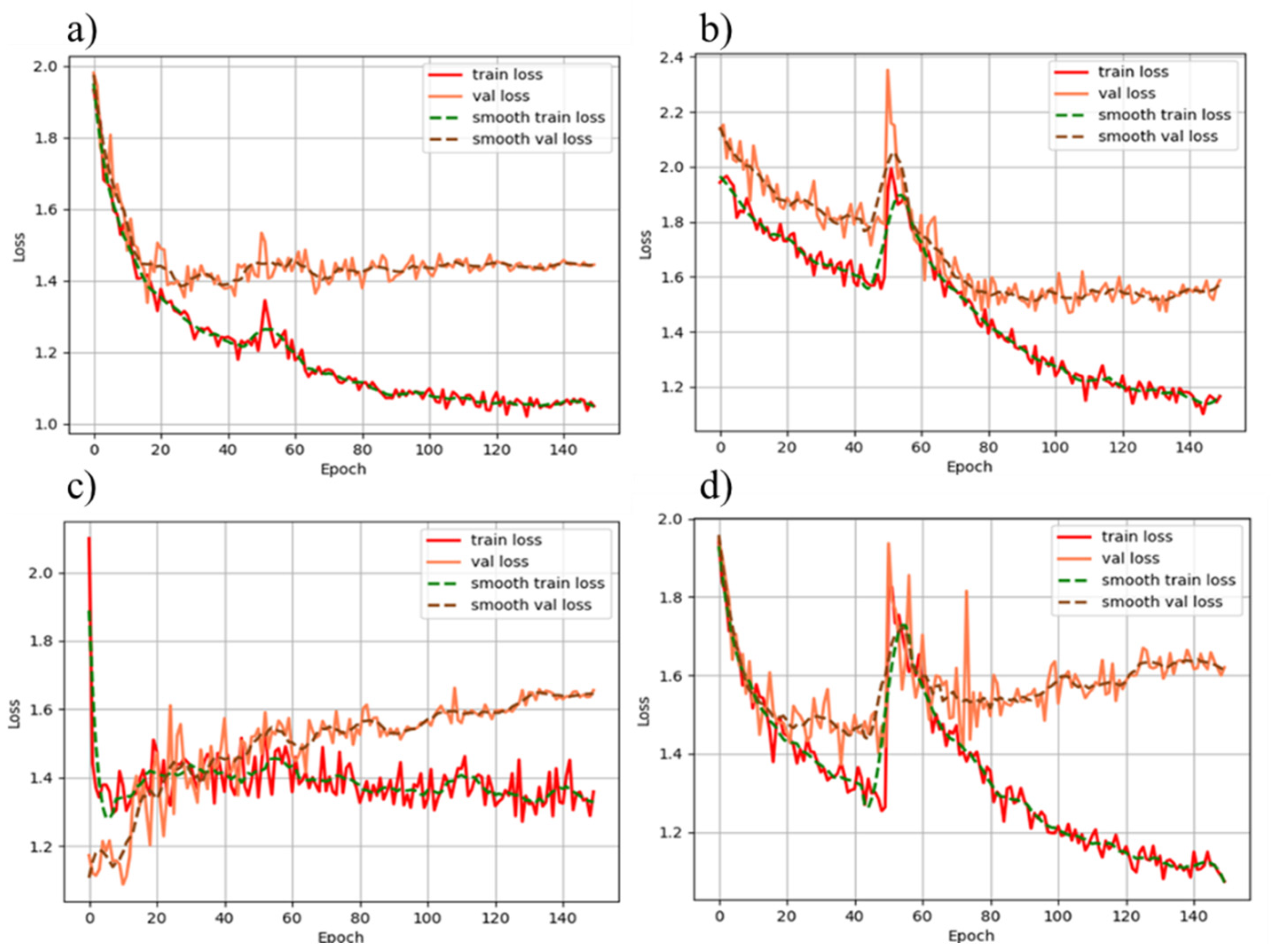

4.1.1. Loss during Training

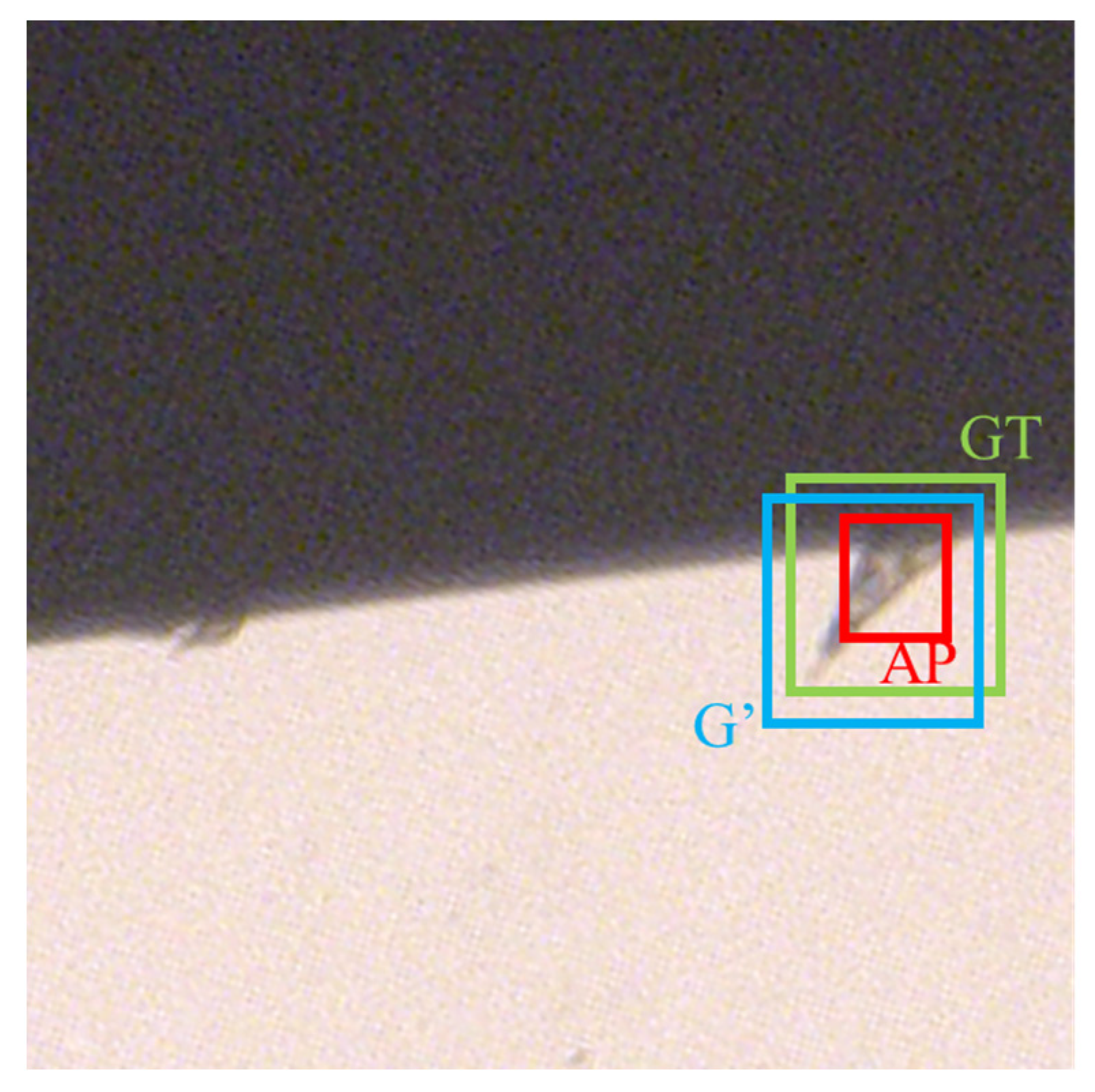

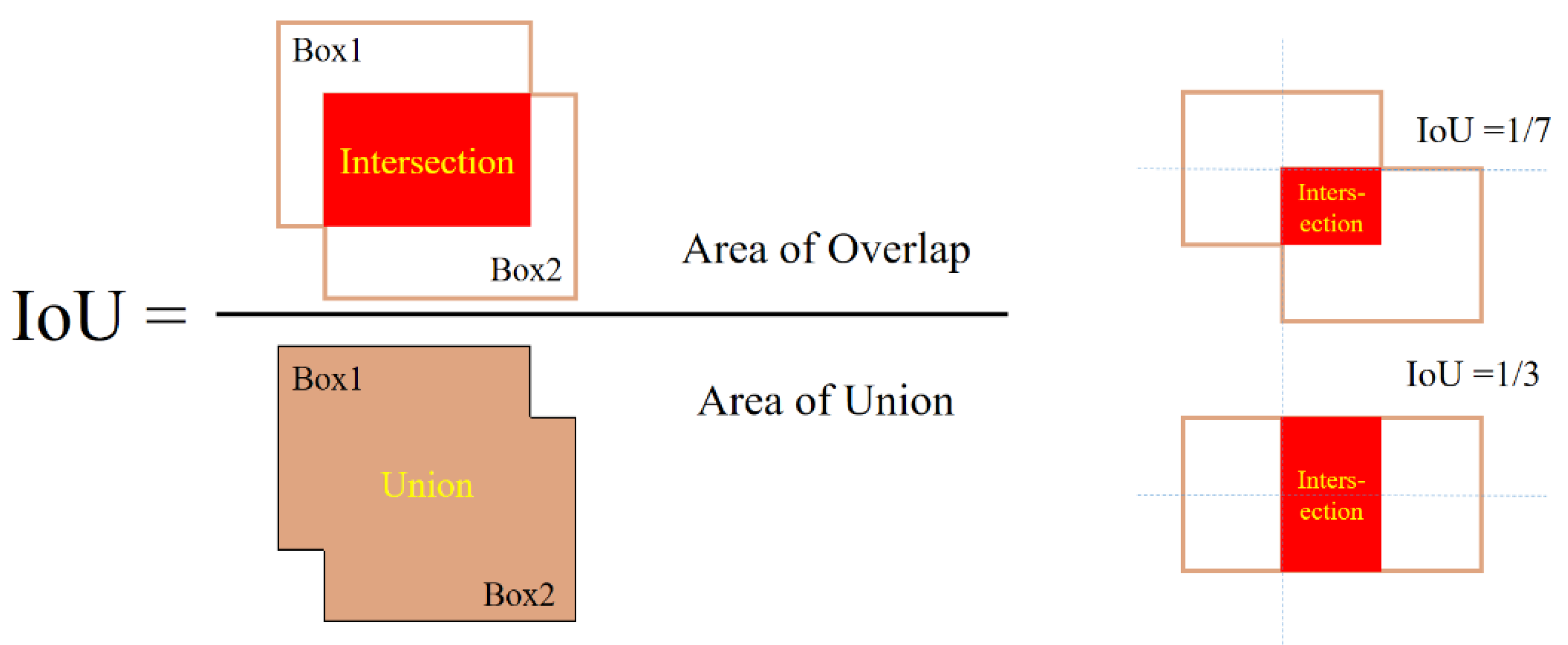
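
The sketch below shows the kind of training loss these curves track. It assumes DToolnet uses the Fast/Faster R-CNN multi-task loss:

- a log loss on the class probabilities of all sampled ROIs;
- plus λ times a smooth L1 loss on the box deltas of foreground ROIs only.

In Faster R-CNN, the total training loss adds this loss for the RPN (two classes, object vs. background) to the same loss for the detection head. The weight λ = 1 and the normalization by the number of sampled ROIs are assumptions. `detection_loss` is a hypothetical name.

```python
import numpy as np


def smooth_l1(x, beta=1.0):
    """Smooth L1: quadratic for |x| < beta, linear beyond (beta = 1 in Fast R-CNN)."""
    ax = np.abs(x)
    return np.where(ax < beta, 0.5 * ax ** 2 / beta, ax - 0.5 * beta)


def detection_loss(cls_probs, labels, box_pred, box_target, lam=1.0):
    """Multi-task loss = mean log loss + lam * smooth-L1 on foreground samples.

    cls_probs:  (N, K) softmax class probabilities
    labels:     (N,) integer class labels, 0 = background
    box_pred, box_target: (N, 4) regression deltas
    Returns (total, classification term, regression term).
    """
    n = len(labels)
    l_cls = -np.log(cls_probs[np.arange(n), labels] + 1e-12).mean()
    fg = labels > 0  # background samples carry no box-regression loss
    l_reg = smooth_l1(box_pred[fg] - box_target[fg]).sum() / max(n, 1)
    return l_cls + lam * l_reg, l_cls, l_reg


probs = np.array([[0.9, 0.1], [0.2, 0.8]])
labels = np.array([0, 1])
pred = np.zeros((2, 4))
target = np.array([[0.0, 0.0, 0.0, 0.0], [0.5, 0.0, 0.0, 2.0]])
total, l_cls, l_reg = detection_loss(probs, labels, pred, target)
```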

4.1.2. Intersection over Union

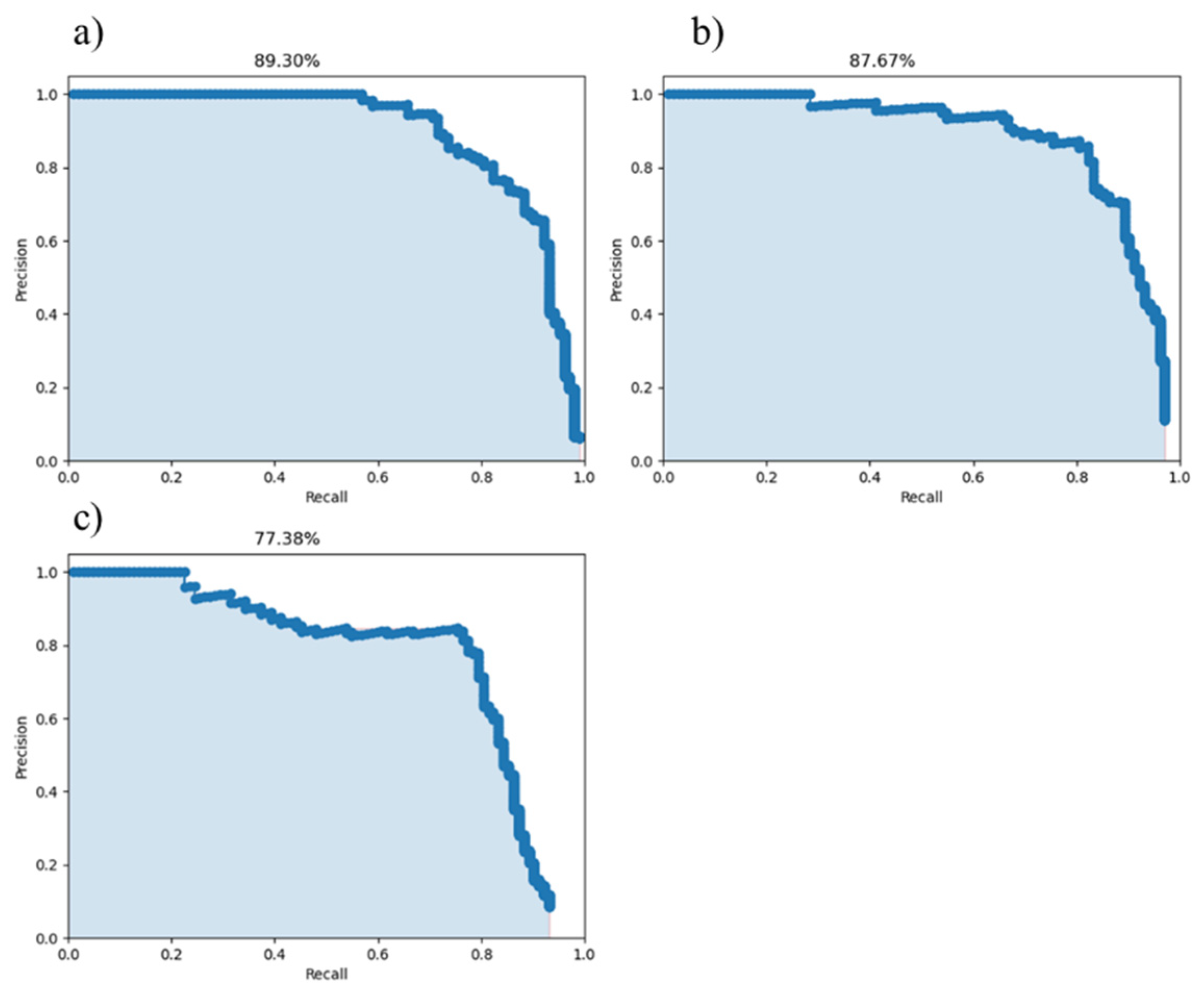
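
IoU measures how well a predicted box overlaps a labelled defect. It is the area of their intersection divided by the area of their union, and ranges from 0 (no overlap) to 1 (identical boxes). Below is a minimal Python sketch for axis-aligned boxes; the (x1, y1, x2, y2) coordinate convention is an assumption.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned (x1, y1, x2, y2) boxes."""
    # Overlap rectangle; width/height clamp to 0 when the boxes are disjoint.
    iw = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    ih = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = iw * ih
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 10, 10), (5, 5, 15, 15)))  # 25 / 175, about 0.143
```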

4.1.3. Performance of DToolnet on the Test Set
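
Detector performance on a test set is commonly summarized by precision and recall. A detection counts as a true positive when its IoU with a not-yet-matched ground-truth defect reaches a threshold. The sketch below assumes a threshold of 0.5 and greedy matching in descending score order, a common convention. The metrics and threshold actually used to evaluate DToolnet may differ, and all function names are hypothetical.

```python
def iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_matches(preds, gts, iou_thr=0.5):
    """Return (TP, FP, FN) for one image.

    preds: list of (score, box); gts: list of boxes. Predictions are visited in
    descending score order and each ground-truth defect can be matched only once.
    """
    matched = set()
    tp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = None, iou_thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            v = iou(box, gt)
            if v >= best_iou:
                best_j, best_iou = j, v
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    return tp, len(preds) - tp, len(gts) - tp


def precision_recall(per_image, iou_thr=0.5):
    """Aggregate TP/FP/FN over (preds, gts) pairs, one pair per test image."""
    tp = fp = fn = 0
    for preds, gts in per_image:
        t, f, n = count_matches(preds, gts, iou_thr)
        tp, fp, fn = tp + t, fp + f, fn + n
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```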

4.2. Results and Discussion

4.2.1. Result of the Loss during Training

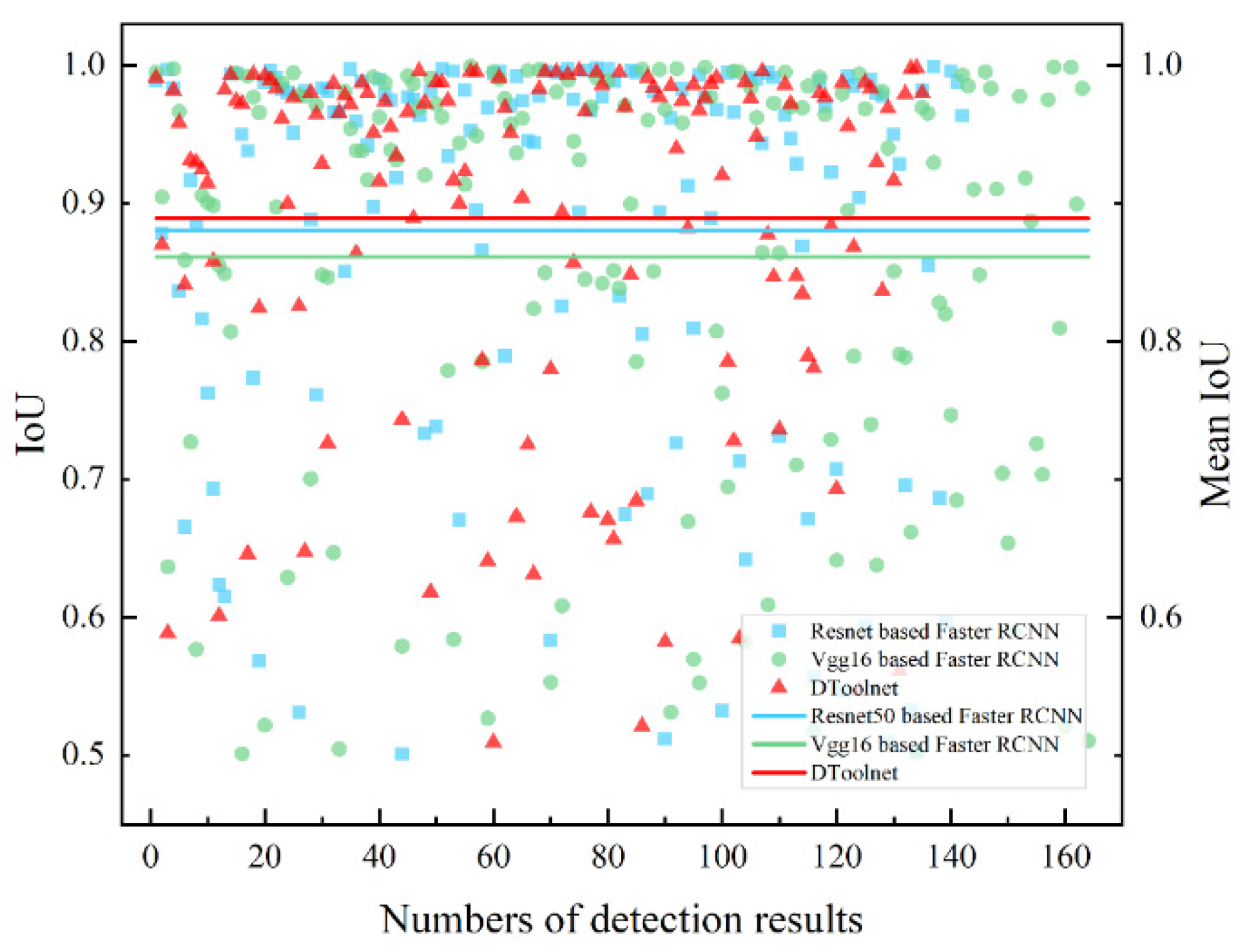

4.2.2. Comparison of the IoU and the Mean IoU

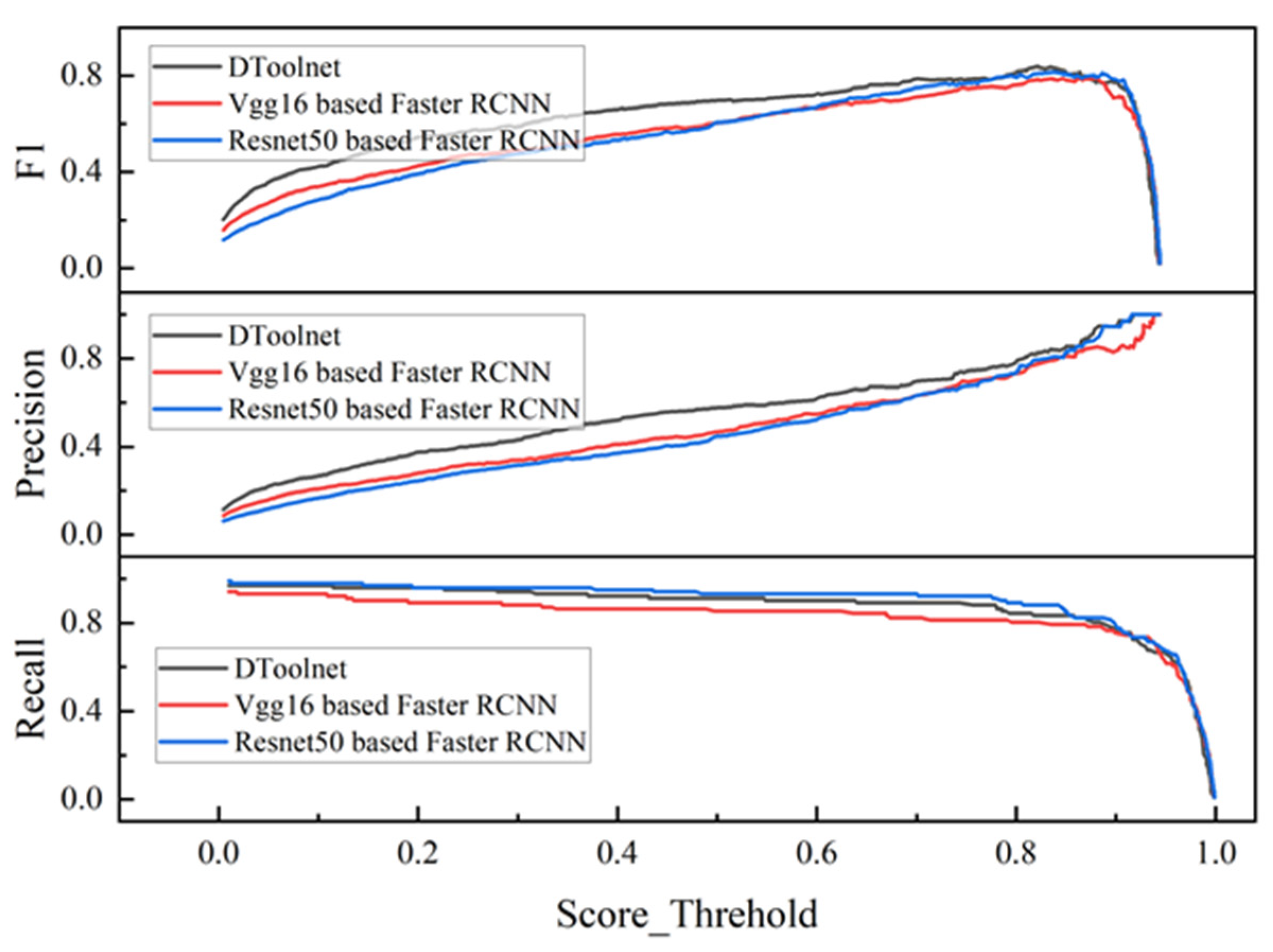
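
The mean IoU aggregates per-defect IoU values over the test set, and definitions vary. The sketch below assumes one common definition:

- each ground-truth defect is scored by the IoU of its best-overlapping prediction;
- a defect in an image without predictions scores 0;
- the mean is taken over all defects.

`mean_best_iou` is a hypothetical name.

```python
def iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def mean_best_iou(dataset):
    """dataset: list of (pred_boxes, gt_boxes), one entry per test image."""
    scores = []
    for preds, gts in dataset:
        for gt in gts:
            # Best overlap any prediction achieves with this defect (0 if none).
            scores.append(max((iou(p, gt) for p in preds), default=0.0))
    return sum(scores) / len(scores) if scores else 0.0


dataset = [
    ([(0, 0, 10, 10)], [(0, 0, 10, 10)]),  # perfect hit, IoU = 1
    ([(5, 5, 15, 15)], [(0, 0, 10, 10)]),  # partial overlap, IoU = 1/7
    ([], [(0, 0, 1, 1)]),                  # missed defect, IoU = 0
]
print(mean_best_iou(dataset))
```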

4.2.3. Comparison Results of Different Networks on the Test Set

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Vagnorius, Z.; Rausand, M.; Sørby, K. Determining optimal replacement time for metal cutting tools. Eur. J. Oper. Res. 2010, 206, 407–416.

- Lins, R.G.; Guerreiro, B.; Araujo, P.R.M.; Schmitt, R. In-process tool wear measurement system based on image analysis for CNC drilling machines. IEEE Trans. Instrum. Meas. 2019, 69, 5579–5588.

- Rehorn, A.G.; Jiang, J.; Orban, P.E. State-of-the-art methods and results in tool condition monitoring: A review. Int. J. Adv. Manuf. Technol. 2005, 26, 693–710.

- Zhang, X.; Han, C.; Luo, M.; Zhang, D. Tool wear monitoring for complex part milling based on deep learning. Appl. Sci. 2020, 10, 6916.

- Kuntoğlu, M.; Aslan, A.; Pimenov, D.Y.; Usca, Ü.A.; Salur, E.; Gupta, M.K.; Mikolajczyk, T.; Giasin, K.; Kapłonek, W.; Sharma, S. A review of indirect tool condition monitoring systems and decision-making methods in turning: Critical analysis and trends. Sensors 2021, 21, 108.

- Zhou, Y.; Xue, W. A multisensor fusion method for tool condition monitoring in milling. Sensors 2018, 18, 3866.

- Kuntoğlu, M.; Sağlam, H. Investigation of signal behaviors for sensor fusion with tool condition monitoring system in turning. Measurement 2021, 173, 108582.

- Zhou, Y.; Sun, B.; Sun, W. A tool condition monitoring method based on two-layer angle kernel extreme learning machine and binary differential evolution for milling. Measurement 2020, 166, 108186.

- Zhu, K. Big data oriented smart tool condition monitoring system. In Smart Machining Systems; Springer: Cham, Switzerland, 2022; pp. 361–381.

- Sortino, M. Application of statistical filtering for optical detection of tool wear. Int. J. Mach. Tools Manuf. 2003, 43, 493–497.

- Yang, N.; Huang, W.; Lei, D. Diamond tool cutting edge measurement in consideration of the dilation induced by AFM probe tip. Measurement 2019, 139, 403–410.

- Cheng, K.; Luo, X.; Ward, R.; Holt, R. Modeling and simulation of the tool wear in nanometric cutting. Wear 2003, 255, 1427–1432.

- El Gomayel, J.; Bregger, K. On-line tool wear sensing for turning operations. J. Eng. Ind. 1986, 108, 44–47.

- Tondon, A.; Singh, M.; Sandhu, B.; Singh, B. Importance of voxel size in defect localization using gamma-ray scattering. Nucl. Sci. Eng. 2019, 193, 1265–1275.

- Iwai, T.; Tsuchida, H. In situ positron beam Doppler broadening measurement of ion-irradiated metals–Current status and potential. Nucl. Instrum. Methods Phys. Res. Sect. B Beam Interact. Mater. At. 2012, 285, 18–23.

- Jun, M.B.; Ozdoganlar, O.B.; DeVor, R.E.; Kapoor, S.G.; Kirchheim, A.; Schaffner, G. Evaluation of a spindle-based force sensor for monitoring and fault diagnosis of machining operations. Int. J. Mach. Tools Manuf. 2002, 42, 741–751.

- Kuntoğlu, M.; Aslan, A.; Sağlam, H.; Pimenov, D.Y.; Giasin, K.; Mikolajczyk, T. Optimization and analysis of surface roughness, flank wear and 5 different sensorial data via tool condition monitoring system in turning of AISI 5140. Sensors 2020, 20, 4377.

- Kuntoğlu, M.; Aslan, A.; Pimenov, D.Y.; Giasin, K.; Mikolajczyk, T.; Sharma, S. Modeling of cutting parameters and tool geometry for multi-criteria optimization of surface roughness and vibration via response surface methodology in turning of AISI 5140 steel. Materials 2020, 13, 4242.

- Abouelatta, O.; Madl, J. Surface roughness prediction based on cutting parameters and tool vibrations in turning operations. J. Mater. Process. Technol. 2001, 118, 269–277.

- Rao, K.V.; Murthy, B.; Rao, N.M. Cutting tool condition monitoring by analyzing surface roughness, work piece vibration and volume of metal removed for AISI 1040 steel in boring. Measurement 2013, 46, 4075–4084.

- Yao, C.; Chien, Y. A diagnosis method of wear and tool life for an endmill by ultrasonic detection. J. Manuf. Syst. 2014, 33, 129–138.

- Ambhore, N.; Kamble, D.; Chinchanikar, S.; Wayal, V. Tool condition monitoring system: A review. Mater. Today Proc. 2015, 2, 3419–3428.

- Jossa, I.; Marschner, U.; Fischer, W.-J. Application of the FSOM to machine vibration monitoring. In Fuzzy Control. Advances in Soft Computing; Physica: Heidelberg, Germany, 2000; Volume 6, pp. 397–405.

- Scheffer, C.; Heyns, P. Wear monitoring in turning operations using vibration and strain measurements. Mech. Syst. Signal Process. 2001, 15, 1185–1202.

- Ning, Y.; Rahman, M.; Wong, Y. Monitoring of chatter in high speed endmilling using audio signals method. In Proceedings of the 33rd International MATADOR Conference; Springer: London, UK, 2000; pp. 421–426.

- Konrad, H.; Isermann, R.; Oette, H. Supervision of tool wear and surface quality during end milling operations. IFAC Proc. Vol. 1994, 27, 507–512.

- Salgado, D.; Alonso, F. An approach based on current and sound signals for in-process tool wear monitoring. Int. J. Mach. Tools Manuf. 2007, 47, 2140–2152.

- Hayashi, S.; Thomas, C.; Wildes, D.; Tlusty, G. Tool break detection by monitoring ultrasonic vibrations. CIRP Ann. 1988, 37, 61–64.

- Tingbin, F.; Huadong, Z.; Zhenwei, Z.; Yong, L. Analysis of Current Signal of Grinding Wheel Spindle Motor. In Proceedings of the 2021 4th International Conference on Electron Device and Mechanical Engineering (ICEDME), Guangzhou, China, 19–21 March 2021; pp. 63–66.

- Thakre, A.A.; Lad, A.V.; Mala, K. Measurements of tool wear parameters using machine vision system. Model. Simul. Eng. 2019, 2019, 1876489.

- Asai, S.; Taguchi, Y.; Horio, K.; Kasai, T.; Kobayashi, A. Measuring the very small cutting-edge radius for a diamond tool using a new kind of SEM having two detectors. CIRP Ann. 1990, 39, 85–88.

- Denkena, B.; Köhler, J.; Ventura, C. Customized cutting edge preparation by means of grinding. Precis. Eng. 2013, 37, 590–598.

- Zhang, K.; Shimizu, Y.; Matsukuma, H.; Cai, Y.; Gao, W. An application of the edge reversal method for accurate reconstruction of the three-dimensional profile of a single-point diamond tool obtained by an atomic force microscope. Int. J. Adv. Manuf. Technol. 2021, 117, 2883–2893.

- Zhao, C.; Cheung, C.F.; Xu, P. Optical nanoscale positioning measurement with a feature-based method. Opt. Lasers Eng. 2020, 134, 106225.

- Zhao, C.; Cheung, C.; Liu, M. Integrated polar microstructure and template-matching method for optical position measurement. Opt. Express 2018, 26, 4330–4345.

- Zhao, C.; Li, Y.; Yao, Y.; Deng, D. Random residual neural network–based nanoscale positioning measurement. Opt. Express 2020, 28, 13125–13130.

- Zhang, T.; Zhang, C.; Wang, Y.; Zou, X.; Hu, T. A vision-based fusion method for defect detection of milling cutter spiral cutting edge. Measurement 2021, 177, 109248.

- Brili, N.; Ficko, M.; Klančnik, S. Tool condition monitoring of the cutting capability of a turning tool based on thermography. Sensors 2021, 21, 6687.

- Kuric, I.; Klarák, J.; Bulej, V.; Sága, M.; Kandera, M.; Hajdučík, A.; Tucki, K. Approach to Automated Visual Inspection of Objects Based on Artificial Intelligence. Appl. Sci. 2022, 12, 864.

- Xian, Y.; Liu, G.; Fan, J.; Yu, Y.; Wang, Z. YOT-Net: YOLOv3 Combined Triplet Loss Network for Copper Elbow Surface Defect Detection. Sensors 2021, 21, 7260.

- Ren, R.; Hung, T.; Tan, K.C. A generic deep-learning-based approach for automated surface inspection. IEEE Trans. Cybern. 2017, 48, 929–940.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. Available online: https://arxiv.org/abs/1409.1556 (accessed on 10 February 2022).

| Method | Technique | Sensors/Instruments |
|---|---|---|
| Direct | Optical image | Optical sensor [10] |
| | Contact | Probe [11,12], magnetic gap sensor [13] |
| | Radiometric technique | Radioactive element [14,15] |
| Indirect | Cutting temperature | Thermocouple [16], infrared temperature sensor [17] |
| | Surface roughness | Surface roughness measuring instrument [18,19,20] |
| | Ultrasonic | Ultrasonic heat generator and receiver [21] |
| | Vibration | Accelerometer [22], vibration sensor [23] |
| | Cutting force | Strain sensor [24], voltage power sensor [25] |
| | Power | Power sensor [26] |
| | Current | Current sensor [27] |
| | Acoustic emission | Acoustic emission sensor [28] |

| Camera | Basler acA2440-75uc | | |
|---|---|---|---|
| Photosensitive chip supplier | Sony | Photosensitive chip | IMX250 |
| Shutter | Global shutter | Chip size | 2/3 inch |
| Photosensitive chip type | CMOS | Photosensitive chip size | 8.4 mm × 7.1 mm |
| Horizontal/vertical resolution | 2448 px × 2048 px | Resolution | 5 MP |
| Horizontal/vertical pixel size | 3.45 µm × 3.45 µm | Frame rate | 75 fps |
| Mono/color | Color | Interface | USB 3.0 |

| Lens | POMEAS VP-LZL-12105 | | |
|---|---|---|---|
| Working distance | 77 ± 2 mm | Interface | C-Mount |
| Optical magnification | 0.58× to 7.5× | | |
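
The camera and lens parameters fix the optical sampling on the tool. The sketch below assumes two standard relations: sensor size = pixel count × pixel pitch, and object-side pixel size = pixel pitch / magnification. As a check, it reproduces the 8.4 mm × 7.1 mm chip size listed above. It then computes the field of view at both ends of the 0.58× to 7.5× zoom range. At 7.5×, one pixel corresponds to about 0.46 µm on the tool. This is only the sampling interval: the achievable optical resolution also depends on the lens's numerical aperture, which the table does not list. The function and constant names are hypothetical.

```python
PIXEL_UM = 3.45            # pixel pitch (µm), from the camera table
RES_PX = (2448, 2048)      # horizontal x vertical resolution (px)
MAG_RANGE = (0.58, 7.5)    # lens optical magnification range


def sensor_size_mm(res_px=RES_PX, pixel_um=PIXEL_UM):
    """Active sensor width and height in mm (pixel count x pixel pitch)."""
    return tuple(n * pixel_um / 1000 for n in res_px)


def object_space(magnification, res_px=RES_PX, pixel_um=PIXEL_UM):
    """Field of view (mm) and object-side sampling (µm/pixel) at a magnification."""
    fov = tuple(s / magnification for s in sensor_size_mm(res_px, pixel_um))
    return fov, pixel_um / magnification


for m in MAG_RANGE:
    (fov_w, fov_h), sampling = object_space(m)
    print(f"{m}x: FOV {fov_w:.2f} mm x {fov_h:.2f} mm, {sampling:.2f} um/pixel")
```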

| Platform | GPU | CPU |
|---|---|---|
| Training platform | NVIDIA GeForce RTX 2060i | Intel(R) Core i7-10700 |
| Testing platform | NVIDIA GeForce RTX 2060i | Intel(R) Core i7-10700 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xue, W.; Zhao, C.; Fu, W.; Du, J.; Yao, Y. On-Machine Detection of Sub-Microscale Defects in Diamond Tool Grinding during the Manufacturing Process Based on DToolnet. Sensors 2022, 22, 2426. https://doi.org/10.3390/s22072426
