Abstract

Accurate kinematic modelling is pivotal to the safe and reliable execution of both contact and non-contact robotic applications. The kinematic models provided by robot manufacturers are valid only under ideal conditions, and it is necessary to account for manufacturing errors, particularly the joint offsets introduced during the assembly stage, which have been identified as the underlying cause of position inaccuracy in more than 90% of cases. This work was motivated by a very practical need, namely the discrepancy in end-effector kinematics as computed by the factory-calibrated internal controller and by the nominal kinematic model given in the robot datasheet. Even though the problem of robot calibration is not new, the focus is generally on the deployment of external measurement devices (for open-loop calibration) or mechanical fixtures (for closed-loop calibration). Instead, we use the factory-calibrated controller as an ‘oracle’ for our fast re-calibration approach. This allows the extraction of calibrated intrinsic parameters (e.g., link lengths) that are otherwise not directly available from the ‘oracle’, for use in ad-hoc control strategies. In this process, we minimize the kinematic mismatch between the ideal and the factory-calibrated robot models for a Kinova Gen3 ultra-lightweight robot by compensating for the joint zero position errors and possible variations in the link lengths. An experimental analysis is presented to validate the proposed method, followed by an error comparison between the calibrated and un-calibrated models over training and test sets.

1. Introduction

Robots are being widely adopted on the industrial floor, with the aim of automating more and more manufacturing processes for increased efficiency and production. In industrial robotic applications, position accuracy and repeatability are the most fundamental attributes for automating flexible manufacturing/assembly tasks [1]. When a robot operates in position control mode to trace a mathematically described trajectory, repeatability alone is not sufficient to ensure that it follows that path; it is also necessary to measure how accurately the robot moves along the generated path [2].

Positional accuracy can be defined as the difference between the position of a commanded pose and the barycentre of the attained positions [3]. Most manufacturing processes involve tight tolerances between components, thereby requiring high positional accuracy. Errors larger than a couple of millimetres may result in wear or damage to the parts/objects involved. In such scenarios, accuracy is an important indicator of performance. Other examples of robotic applications that require high absolute positioning accuracy include offline programming, visual servoing and laser cutting [4].
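Following this definition, positional accuracy can be computed as the distance between a commanded position and the mean (barycentre) of the positions actually reached over repeated attempts. The Python sketch below is only an illustration: the function name `positional_accuracy` and the sample values are hypothetical.

```python
import numpy as np

def positional_accuracy(commanded, attained):
    """Distance between a commanded position and the barycentre of attained positions.

    commanded: (3,) commanded position; attained: (K, 3) positions reached over K repeats.
    """
    commanded = np.asarray(commanded, dtype=float)
    attained = np.asarray(attained, dtype=float)
    barycentre = attained.mean(axis=0)  # mean of the repeated attained positions
    return float(np.linalg.norm(barycentre - commanded))

# Hypothetical data (metres): three repeated attempts to reach the same commanded point
cmd = [0.5, 0.0, 0.3]
att = [[0.501, 0.000, 0.300],
       [0.503, 0.001, 0.299],
       [0.502, -0.001, 0.301]]
print(round(positional_accuracy(cmd, att), 6))  # -> 0.002
```

Note that the spread of the attained positions around their barycentre (repeatability) does not enter this number, which is why accuracy and repeatability are treated as separate attributes.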

Inaccuracies in robots are caused by several factors, which can be divided into geometric and non-geometric sources of error. The most common sources are geometric in nature, such as minor misalignments of the axes with respect to the model that arise during production, and errors in joint positions and joint angles [5]. Robots are usually manufactured to tight tolerances to ensure a highly precise geometric configuration of the joint axes and of the transmission mechanisms that actuate the joints. However, after all the parts are assembled, it often becomes challenging to measure these specifications accurately, which can result in deviations from the model. Apart from manufacturing errors, robots operating in flexible industrial assembly systems also suffer abrasive wear of transmission parts due to very tight tolerances [6]. Such wear may occasionally require repairs, which can introduce further errors with respect to the model; to compensate for these errors, a re-calibration of the robot may be needed [7].

The non-geometric factors contributing to accuracy errors consist of structural deformations such as backlash [8] and clearance in the transmission system, as well as link flexibility, joint flexibility, stick-slip phenomena and thermal expansion [5]. However, these errors are considered to be much smaller than those originating from geometric factors [7,9]. Therefore, a reliable method for calibrating the geometric parameters of robots is essential to ensure position accuracy at all times.

The kinematic calibration technique presented in this paper was motivated by a very practical need, namely the discrepancy in end-effector kinematics as computed by the factory-calibrated internal controller and by the nominal kinematic model given in the robot datasheet. The proposed approach focuses on the static open-loop re-calibration of the Kinova Gen3 robot arm; however, the procedure is generic enough to be applied to other serial manipulators.

Even though the problem of robot calibration is not new, most of the existing methodologies require external measuring instruments, such as laser trackers, or some kind of mechanical fixture. This not only makes calibration a laborious procedure in terms of the experimental setup and of establishing data synchronization across multiple devices, but also introduces challenges when the robot workspace is limited (such as on the factory floor).

While many methods address the calibration problem by introducing corrections to the final end-effector pose, our approach focuses on correcting the kinematic model itself (i.e., in terms of the joint and the link offsets). As a result, the differentials (and hence the Jacobian) are easily obtained, which are otherwise not available from the factory-calibrated feedback. Calibrated models are essential in the dynamic control of robots, where the computation of both the forward and the inverse dynamics depends heavily on the underlying model.

Hence, the contribution of our work can be summarized as (i) a fast calibration approach without the use of external measuring devices or mechanical fixtures but simply utilizing the factory calibrated kinematics as the ground truth and (ii) a way to calibrate the kinematic robot model itself rather than just the corrected end-effector pose.

The rest of the paper is organized as follows. In Section 2, we discuss the relevant robot calibration approaches, followed by Section 3, where the kinematic modelling of the robot is reviewed. In Section 4, the calibration methodology is discussed. Section 5 outlines the experimental validation of the proposed approach and Section 6 presents a calibration targeted at the operating space of the robot, followed by the discussion and conclusions in Section 7 and Section 8, respectively.

2. Existing Methodologies for Kinematic Calibration

Robot calibration is performed to improve the positional accuracy of the end-effector by accurately calibrating the kinematic parameters, which have been shown to be major contributors to the positioning error [6,10]. Kinematic calibration of robots can be done in two different ways, namely model-based and model-less. Model-based methods are more commonly used for robot calibration [6]; however, some studies have also implemented model-less calibration techniques [11,12,13,14]. Such techniques involve building a relation between the position errors of the robot and the workspace or joint space [6].

The model-based calibration procedure generally involves developing a model whose parameters accurately represent those of the actual robot, then accurately measuring specific features of the real robot, and finally computing the parameter values for which the model reflects the measurements made [7]. Kinematic (or Level 2) calibration is known to improve robot accuracy across the entire volume of its configuration space. In model-based kinematic calibration, the geometric factors are used to identify the model parameters. Non-geometric error sources can be minimized through Level 3 calibration. However, since these non-geometric errors account for a rather small percentage of the total error, considering geometric errors alone is usually sufficient for a simplified calibration model, as they account for a considerable proportion of the end-effector pose error [7,15]. Therefore, in this study we propose a quick re-calibration (Level 2) procedure for serial robot arms that uses the geometric factors for parameter identification. Non-geometric calibration is outside the scope of the current work.

Kinematic calibration is usually carried out in the following four steps [4,6,16]: (i) modelling, (ii) measurement, (iii) identification and (iv) compensation/correction. Before the measurements can be made, the kinematic error model of the robot must be transformed into an identification model, which represents the mapping from the pose errors of the end-effector to the unknown geometric errors. The actual pose errors are then measured for different configurations using measurement devices and input to the identification model, wherein the geometric errors are computed using numerical methods before being compensated for in hardware or software [17].
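The four steps can be illustrated with a deliberately small example. The Python sketch below is a toy, not the method of this paper: it models a hypothetical planar two-link arm whose only unknowns are the joint zero offsets, simulates the measurements, identifies the offsets by repeated linear least squares on the identification model, and checks that the compensated model reproduces the measurements. The link lengths, offsets and function names are all hypothetical.

```python
import numpy as np

# Hypothetical planar 2R arm used only to illustrate the four calibration steps
LINK1, LINK2 = 0.4, 0.3  # link lengths (m), assumed known exactly here

def fk(q, dq):
    """(i) Modelling: end-effector position with joint zero offsets dq."""
    a1 = q[0] + dq[0]
    a12 = a1 + q[1] + dq[1]
    return np.array([LINK1 * np.cos(a1) + LINK2 * np.cos(a12),
                     LINK1 * np.sin(a1) + LINK2 * np.sin(a12)])

def identification_jacobian(q, dq):
    """Partial derivatives of fk with respect to the offsets dq."""
    a1 = q[0] + dq[0]
    a12 = a1 + q[1] + dq[1]
    return np.array([[-LINK1 * np.sin(a1) - LINK2 * np.sin(a12), -LINK2 * np.sin(a12)],
                     [LINK1 * np.cos(a1) + LINK2 * np.cos(a12), LINK2 * np.cos(a12)]])

rng = np.random.default_rng(0)
true_dq = np.array([0.02, -0.015])                  # unknown in practice (rad)
Q = rng.uniform(-np.pi / 2, np.pi / 2, size=(30, 2))

# (ii) Measurement: simulated here; in practice an external device or the controller
P_meas = np.array([fk(q, true_dq) for q in Q])

# (iii) Identification: repeated linear least-squares (Gauss-Newton) steps
dq_hat = np.zeros(2)
for _ in range(10):
    A = np.vstack([identification_jacobian(q, dq_hat) for q in Q])     # (2M, 2)
    b = np.concatenate([p - fk(q, dq_hat) for q, p in zip(Q, P_meas)])  # (2M,)
    step, *_ = np.linalg.lstsq(A, b, rcond=None)
    dq_hat += step

# (iv) Compensation: use the identified offsets in the model (or in the joint commands)
max_residual = max(np.linalg.norm(p - fk(q, dq_hat)) for q, p in zip(Q, P_meas))
print(dq_hat, max_residual)
```

With noise-free simulated data the identified offsets match the simulated ones; with real measurements the residual would instead reflect noise and unmodelled effects.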

Several studies have been conducted so far on the kinematic calibration of industrial robots. Denavit–Hartenberg (DH) modelling is the most popular kinematic modelling technique for serial robot arms; however, it suffers from a singularity when two adjacent joint axes are parallel. Ref. [10] presents a modified DH model (MDH) that introduces a new rotation parameter to overcome this difficulty. Many other works have dealt with the singularity problem through Product-of-Exponentials (POE) based modelling [18,19,20].

Most calibration methods compare the taught point positions of a robot with measurements relating the end-effector to an external 3D measuring device such as a laser tracker [21,22,23,24,25,26], theodolite measurement devices [27] or coordinate measuring machines (CMM) [28,29]. These are known as open-loop calibration techniques [30]. Based on the measurements made, the kinematic parameters of the mathematical model of the robot are then corrected to minimize the difference between the positions where the robot thinks it is and its actual position in the workspace [1]. Ref. [28] performed online pose measurement with an optical CMM and used that as a feedback to steer the end-effector of the robot accurately, to the desired pose. Their approach increased positional accuracy of a robot independent of its kinematic parameters. Ref. [31] presented a method for calibration using distance and sphere constraints to improve robot accuracy in a specific workspace. Spheres with precisely known distances from each other were probed by the robot end-effector multiple times and the measured pose values were then compared with the poses calculated from the kinematic model in an iterative process until the root mean square (RMS) error between the iterations dropped below the specified threshold. Ref. [32] calibrated the robot parameters by controlling six-axis industrial robot arms to get to the same location in different poses. Different identification and compensation methods were proposed that could be mixed and matched to obtain optimal solutions depending on the operational environment.

Table 1 reviews the model-based calibration techniques implemented in some recent works on kinematic calibration, together with the proposed method for comparison. It can be observed that most of these techniques cater to the experimental environment, requiring external measuring instruments such as laser trackers. This makes calibration a very time-consuming procedure that is suitable only for laboratory environments and not for industrial settings such as automated assembly lines [16]. Thus, to address the research gap on fast calibration methods, this work focuses on fast open-loop re-calibration. The ground truth for the end-effector pose was not an external measuring device but instead the kinematic feedback provided by the robot controller, into which the calibration parameters are implicitly modelled. This calibrated forward kinematics (FK) model was not explicitly available to us; however, the feedback was considered accurate and was used to validate our experiments. A robot kinematic model is characterized by a non-linear function that relates the link geometric parameters and joint variables to the robot end-effector pose [5]. To fit this model to experimental data, it needs to be linearized and solved. For parameter identification, different authors have implemented different methods of linearizing the kinematic models of their robots. Ref. [33] used least-squares minimization (LSM) and singular value decomposition (SVD) to calibrate parameter errors. Ref. [34] also performed the parameter identification procedure by solving a linearized least-squares problem, for which a ballbar measurement device was used. Linear least-squares algorithms are usually applied owing to their quick convergence rates [7].
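To make the LSM/SVD remark concrete, the sketch below solves a linearized identification system $\mathbf{A}\mathbf{x} \approx \mathbf{b}$ through a truncated SVD. Discarding near-zero singular values yields the minimum-norm solution when some parameters have indistinguishable effects, a situation that can arise in kinematic calibration. The helper name `svd_least_squares` and its tolerance are illustrative assumptions; the comparison uses NumPy's `np.linalg.lstsq`.

```python
import numpy as np

def svd_least_squares(A, b, rel_tol=1e-10):
    """Minimum-norm least-squares solution of A x ~= b via a truncated SVD."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    keep = s > rel_tol * s[0]          # s is sorted in descending order
    # x = V_r diag(1/s_r) U_r^T b over the retained singular directions only
    return Vt[keep].T @ ((U[:, keep].T @ b) / s[keep])

rng = np.random.default_rng(1)

# Well-posed case: agrees with NumPy's own least-squares solver
A = rng.normal(size=(20, 3))
x_true = np.array([0.5, -1.0, 2.0])
b = A @ x_true
x_svd = svd_least_squares(A, b)
x_ref, *_ = np.linalg.lstsq(A, b, rcond=None)

# Rank-deficient case: two identical columns (two parameters with the same effect)
a, c = rng.normal(size=20), rng.normal(size=20)
A2 = np.column_stack([a, a, c])
b2 = A2 @ np.array([2.0, 0.0, 2.0])
x2 = svd_least_squares(A2, b2)  # the shared effect is split evenly: [1, 1, 2]
print(np.allclose(x_svd, x_true), np.allclose(x_svd, x_ref))  # -> True True
```

In the rank-deficient case any split of the shared effect fits the data equally well; the SVD simply picks the smallest-norm one.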

Table 1.

Brief comparison of kinematic calibration techniques.

Even though the problem of robot calibration is widely explored, the focus is generally on the deployment of external measurement devices (for open-loop calibration [9,28]) or mechanical fixtures (for closed-loop calibration [35,36]). In the approach presented in this paper, a model-based fast kinematic re-calibration is devised, whereby we use the factory-calibrated controller as an ‘oracle’. This allows the extraction of calibrated intrinsic parameters (e.g., link lengths) that are otherwise not directly available from the ‘oracle’, for use in ad-hoc control strategies. Here, we account for small variations in both the zero joint offsets and the link offsets by minimizing the mismatch between the nominal kinematic model given in the robot datasheet and the ‘oracle’. While the problem can be formulated mathematically as a non-linear regression problem, by assuming that the end-effector orientation is unaffected by variations in the link dimensions, it is possible to obtain these offsets by solving two separate linear regression problems, which simplifies the computation. Concretely, we proceed by first solving for the zero joint offsets and then identifying the link offsets.

3. Kinematic Modelling

The first step towards a typical robot calibration is modelling the end-effector/tool pose (i.e., the forward kinematics) with respect to a reference frame, which in general is the robot base frame itself. This can be done in several ways, of which the 4-parameter Denavit–Hartenberg (DH) convention is the most widely employed. In this paper, the robot platform used for experimental validation is the Kinova Gen3 ultra-lightweight robot arm, and hence we employ the DH representation to adhere to the convention followed by the datasheet in building the ideal forward kinematic model.

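For a robot manipulator with $N$ joints in the configuration $\mathbf{q} = [q_1, q_2, \ldots, q_N]^{T}$, the forward kinematics can be expressed as a homogeneous transformation between the end-effector and base coordinate systems of the robot as [38]

$$
{}^{0}\mathbf{T}_{E}(\mathbf{q}) = \begin{bmatrix} \mathbf{R}(\mathbf{q}) & \mathbf{p}(\mathbf{q}) \\ \mathbf{0}^{T} & 1 \end{bmatrix}
$$

where $\mathbf{R}(\mathbf{q}) \in SO(3)$ and $\mathbf{p}(\mathbf{q}) \in \mathbb{R}^{3}$ represent the end-effector orientation and position with respect to the robot base frame, and

$$
{}^{0}\mathbf{T}_{E}(\mathbf{q}) = {}^{0}\mathbf{T}_{1}(q_1)\,{}^{1}\mathbf{T}_{2}(q_2)\cdots{}^{N-1}\mathbf{T}_{N}(q_N)\,{}^{N}\mathbf{T}_{E}, \qquad {}^{n-1}\mathbf{T}_{n}(q_n) = \begin{bmatrix} {}^{n-1}\mathbf{R}_{n}(q_n) & {}^{n-1}\mathbf{p}_{n} \\ \mathbf{0}^{T} & 1 \end{bmatrix}
$$

Here, $\{0\}$ and $\{E\}$ respectively represent the robot base frame and the end-effector frame. ${}^{n-1}\mathbf{R}_{n}$ and ${}^{n-1}\mathbf{p}_{n}$ represent the rotation and the translation between the $(n-1)$th and the $n$th frame, respectively. Note that for the $n$th joint, the homogeneous transformation depends only on the joint angle $q_n$.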

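We can now define the body Jacobian matrix for the manipulator as [38,39]

$$
\mathbf{J}_{b}(\mathbf{q}) = \begin{bmatrix} \mathrm{Ad}_{{}^{E}\mathbf{T}_{1}}\,\boldsymbol{\xi}_{1} & \mathrm{Ad}_{{}^{E}\mathbf{T}_{2}}\,\boldsymbol{\xi}_{2} & \cdots & \mathrm{Ad}_{{}^{E}\mathbf{T}_{N}}\,\boldsymbol{\xi}_{N} \end{bmatrix}
$$

where $\boldsymbol{\xi}_{n}$ is the unit twist of the $n$th joint expressed in its own frame (for a revolute joint rotating about its local $z$-axis, $\boldsymbol{\xi}_{n} = [0\;0\;0\;0\;0\;1]^{T}$) and $\mathrm{Ad}_{{}^{E}\mathbf{T}_{n}}$ represents the adjoint matrix (the adjoint of a homogeneous transformation matrix $\mathbf{T} = (\mathbf{R}, \mathbf{p})$ is obtained as $\mathrm{Ad}_{\mathbf{T}} = \begin{bmatrix} \mathbf{R} & \hat{\mathbf{p}}\mathbf{R} \\ \mathbf{0} & \mathbf{R} \end{bmatrix}$, where $\hat{(\cdot)}$ is used to denote the skew-symmetric matrix representation) for the homogeneous transformation ${}^{E}\mathbf{T}_{n}$ of the end-effector frame to the $n$th joint frame.

The body Jacobian can be re-written as

$$
\mathbf{J}_{b}(\mathbf{q}) = \begin{bmatrix} \mathbf{J}_{v}(\mathbf{q}) \\ \mathbf{J}_{\omega}(\mathbf{q}) \end{bmatrix}
$$

where $\mathbf{J}_{v}$ and $\mathbf{J}_{\omega}$ (each $3 \times N$) are, respectively, the top and bottom submatrices.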
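A minimal Python sketch of the forward kinematics and the body Jacobian above is given below. As assumptions for illustration, each ${}^{n-1}\mathbf{T}_{n}$ is taken as a fixed rotation followed by a rotation $q_n$ about the local $z$-axis with a constant translation, and twists are ordered as $[\mathbf{v}; \boldsymbol{\omega}]$. The three-joint parameters are hypothetical and are not the Kinova Gen3 values of Table 2. A central finite-difference check compares the Jacobian columns with $\mathbf{R}^{T}\dot{\mathbf{p}}$ and $(\mathbf{R}^{T}\dot{\mathbf{R}})^{\vee}$.

```python
import numpy as np

def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def skew(v):
    """Hat operator: 3-vector -> skew-symmetric matrix."""
    return np.array([[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]])

def adjoint(T):
    """Ad_T = [[R, p^ R], [0, R]] for twists ordered as [v; w]."""
    R, p = T[:3, :3], T[:3, 3]
    Ad = np.zeros((6, 6))
    Ad[:3, :3] = R
    Ad[:3, 3:] = skew(p) @ R
    Ad[3:, 3:] = R
    return Ad

# Hypothetical 3-joint chain (NOT Kinova Gen3 data)
R_FIXED = [np.eye(3), rot_x(-np.pi / 2), rot_x(np.pi / 2)]
P_NOM = [np.array([0.0, 0.0, 0.15]), np.array([0.0, 0.0, 0.1]), np.array([0.0, -0.2, 0.0])]
T_TOOL = np.eye(4)
T_TOOL[2, 3] = 0.1

def joint_frames(q):
    """Return [^0T_1, ..., ^0T_N] and ^0T_E for configuration q."""
    T, frames = np.eye(4), []
    for q_n, R_n, p_n in zip(q, R_FIXED, P_NOM):
        T_n = np.eye(4)
        T_n[:3, :3] = R_n @ rot_z(q_n)  # joint n rotates about its local z-axis
        T_n[:3, 3] = p_n                # translation independent of q_n
        T = T @ T_n
        frames.append(T)
    return frames, T @ T_TOOL

def body_jacobian(q):
    frames, T_E = joint_frames(q)
    T_E_inv = np.linalg.inv(T_E)
    xi = np.array([0.0, 0.0, 0.0, 0.0, 0.0, 1.0])  # unit twist of a z-axis revolute joint
    return np.column_stack([adjoint(T_E_inv @ T_0n) @ xi for T_0n in frames])

# Central finite-difference check of J_b = [J_v; J_w]
q0 = np.array([0.3, -0.7, 1.1])
J = body_jacobian(q0)
_, T0 = joint_frames(q0)
h, max_fd_error = 1e-6, 0.0
for n in range(len(q0)):
    step = np.zeros_like(q0)
    step[n] = h
    _, Tp = joint_frames(q0 + step)
    _, Tm = joint_frames(q0 - step)
    v = T0[:3, :3].T @ (Tp[:3, 3] - Tm[:3, 3]) / (2 * h)    # body linear velocity
    W = T0[:3, :3].T @ (Tp[:3, :3] - Tm[:3, :3]) / (2 * h)  # approximately skew(w)
    w = np.array([W[2, 1], W[0, 2], W[1, 0]])
    max_fd_error = max(max_fd_error, np.abs(np.concatenate([v, w]) - J[:, n]).max())
print(max_fd_error)
```

The bottom three rows of `J` are the $\mathbf{J}_{\omega}$ block used later for the angular-offset regression.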

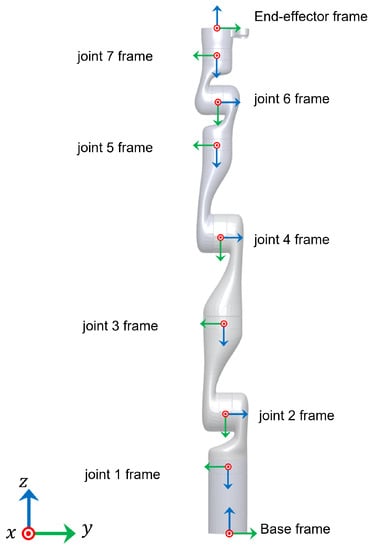

With reference to Figure 1, the homogeneous transformation matrices between the joint frames for a Kinova Gen3 ultra-lightweight robot at zero configuration are as shown in Table 2.

Figure 1.

Kinova Gen3 joint frames at zero configuration.

Table 2.

Homogeneous transformation matrices for Kinova Gen3 7DoF robot (provided by manufacturer).

4. Parameter Identification and Compensation

The kinematic model available from the manufacturer (for example, the one shown in Table 2) is ideal and does not represent the actual kinematics of the robot after the manufacturing and the assembling stages. In this section, we perform a fast kinematic calibration to account for this discrepancy.

To perform the calibration, we consider a Kinova Gen3 ultra-lightweight robot with 7 degrees of freedom, with no end-effector mounting. However, the methodology is general enough to be adapted to other industrial robots with an arbitrary number of degrees of freedom.

Our approach is based on the assumption that the actual forward kinematics measurements are known. In some cases, the calibrated parameters are incorporated implicitly in the robot controller (e.g., Kinova Gen3) to provide accurate feedback of the tool pose; in other instances, one can always make use of external measurement systems calibrated to the robot base frame to identify the actual forward kinematics. To facilitate a fast kinematic calibration, instead of using an external measuring device to set a ground truth for the tool pose, we consider the feedback tool pose from the (factory-calibrated) robot controller itself as the ground truth.

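For an $N$-joint serial manipulator, consider the forward kinematics as given by the uncorrected (i.e., the ideal) model from Equation (3)

$$
\mathbf{T}_{\mathrm{nom}}(\mathbf{q}) = {}^{0}\mathbf{T}_{1}(q_1)\,{}^{1}\mathbf{T}_{2}(q_2)\cdots{}^{N-1}\mathbf{T}_{N}(q_N)\,{}^{N}\mathbf{T}_{E}
$$

(Note: The subscript $\mathrm{nom}$ indicates that the forward kinematics is defined for the nominal geometric (link) lengths, possibly from CAD models.)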

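However, as discussed in the previous section, the specified transformations hold true only under ideal conditions, as variations are introduced during the manufacturing and assembling stages. In this paper, we compensate for the zero joint offsets generated in the assembly stage, which henceforth are called angular offsets ($\delta\mathbf{q} = [\delta q_1, \ldots, \delta q_N]^{T}$), and also for possible deviations of the link dimensions from their CAD models due to manufacturing errors (linear offsets $\delta\mathbf{p}_{n}$, where $n = 1, \ldots, N$). To account for these variations, the transformation between two joint frames can be re-defined as

$$
{}^{n-1}\mathbf{T}_{n}(q_n + \delta q_n, \delta\mathbf{p}_{n}) = \begin{bmatrix} {}^{n-1}\mathbf{R}_{n}(q_n + \delta q_n) & {}^{n-1}\mathbf{p}_{n} + \delta\mathbf{p}_{n} \\ \mathbf{0}^{T} & 1 \end{bmatrix}
$$

where ${}^{n-1}\mathbf{p}_{n}$ is the position vector (say, given by the CAD model) of joint frame $n$ with respect to joint frame $n-1$ and $\delta\mathbf{p}_{n}$ is the vector of linear offsets for the $n$th link.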

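Substituting Equation (2) into Equation (3) gives the corrected forward kinematics, which can be denoted as

$$
\mathbf{T}(\mathbf{q}, \delta\mathbf{q}, \delta\mathbf{p}) = {}^{0}\mathbf{T}_{1}(q_1 + \delta q_1, \delta\mathbf{p}_{1})\cdots{}^{N-1}\mathbf{T}_{N}(q_N + \delta q_N, \delta\mathbf{p}_{N})\,{}^{N}\mathbf{T}_{E}
$$

Assuming that the factory-calibrated tool pose available as feedback from the controller is a sufficiently accurate representation of the actual forward kinematics, the ground truth can be established as

$$
\mathbf{T}_{m}(\mathbf{q}) = \begin{bmatrix} \mathbf{R}_{m} & \mathbf{p}_{m} \\ \mathbf{0}^{T} & 1 \end{bmatrix}
$$

Hence, our goal is to identify the small angular ($\delta\mathbf{q}$) and linear ($\delta\mathbf{p}$) adjustments that minimize, in some sense, the difference between $\mathbf{T}(\mathbf{q}, \delta\mathbf{q}, \delta\mathbf{p})$ and $\mathbf{T}_{m}(\mathbf{q})$.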
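The corrected joint transformation can be sketched in Python as follows. The chain parameters and offsets are hypothetical, and the structure of ${}^{n-1}\mathbf{T}_{n}$ (a fixed rotation times a rotation about the local $z$-axis) is an assumption made for illustration. The example also shows that, in this parameterization, the linear offsets change the end-effector position but leave its orientation unchanged, which is what allows the angular and linear offsets to be identified separately.

```python
import numpy as np

def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

# Hypothetical nominal ("CAD") parameters of a 3-joint chain (not Kinova data)
R_FIXED = [np.eye(3), rot_x(-np.pi / 2), rot_x(np.pi / 2)]
P_NOM = [np.array([0.0, 0.0, 0.15]), np.array([0.0, 0.0, 0.1]), np.array([0.0, -0.2, 0.0])]
P_TOOL = np.array([0.0, 0.0, 0.1])

def corrected_joint_transform(R_fixed, p_nom, q_n, dq_n, dp_n):
    """^{n-1}T_n(q_n + dq_n, dp_n)."""
    T = np.eye(4)
    T[:3, :3] = R_fixed @ rot_z(q_n + dq_n)  # angular offset shifts the joint zero
    T[:3, 3] = p_nom + dp_n                  # linear offset corrects the CAD translation
    return T

def corrected_fk(q, dq, dp):
    """T(q, dq, dp); with dq = 0 and dp = 0 this is the nominal model."""
    T = np.eye(4)
    for R_n, p_n, q_n, dq_n, dp_n in zip(R_FIXED, P_NOM, q, dq, dp):
        T = T @ corrected_joint_transform(R_n, p_n, q_n, dq_n, dp_n)
    T_tool = np.eye(4)
    T_tool[:3, 3] = P_TOOL
    return T @ T_tool

q = np.array([0.3, -0.7, 1.1])
no_dq, no_dp = np.zeros(3), np.zeros((3, 3))
dp = np.zeros((3, 3))
dp[1] = [0.002, 0.0, 0.0]  # hypothetical 2 mm offset on link 2

T_nom = corrected_fk(q, no_dq, no_dp)                       # nominal model
T_lin = corrected_fk(q, no_dq, dp)                          # linear offset only
T_ang = corrected_fk(q, np.array([0.01, 0.0, 0.0]), no_dp)  # angular offset only

same_rotation = np.allclose(T_nom[:3, :3], T_lin[:3, :3])     # orientation untouched
position_shift = np.linalg.norm(T_lin[:3, 3] - T_nom[:3, 3])  # about 0.002 m
angular_changes_rotation = not np.allclose(T_nom[:3, :3], T_ang[:3, :3])
print(same_rotation, position_shift, angular_changes_rotation)
```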

4.1. Identification of Angular Offsets ($\delta\mathbf{q}$)

The calibration problem requires us to compensate for both the linear and the angular offsets, which results in a non-linear regression problem. However, for small enough angular offsets, and under the assumption that the task-space orientation of the robot depends only on the angular offsets, the non-linear regression problem can be turned into two separate linear regression problems for determining the angular and the linear offsets, as outlined below.

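Let $\mathbf{R}(\mathbf{q} + \delta\mathbf{q})$ be the rotation matrix with joint offset correction and $\mathbf{R}_{m}$ be the known rotation matrix.

To first order, the vicinity of $\mathbf{R}(\mathbf{q} + \delta\mathbf{q})$ and $\mathbf{R}(\mathbf{q})$ can be expressed as

$$
\mathbf{R}(\mathbf{q} + \delta\mathbf{q}) \approx \mathbf{R}(\mathbf{q}) + \sum_{n=1}^{N} \frac{\partial \mathbf{R}(\mathbf{q})}{\partial q_n}\,\delta q_n
$$

Recall that the Cartesian angular velocity (in the body frame) satisfies $\hat{\boldsymbol{\omega}} = \mathbf{R}^{T}\dot{\mathbf{R}}$ and that $\boldsymbol{\omega} = \mathbf{J}_{\omega}(\mathbf{q})\,\dot{\mathbf{q}}$; therefore

$$
\mathbf{R}^{T}(\mathbf{q}) \sum_{n=1}^{N} \frac{\partial \mathbf{R}(\mathbf{q})}{\partial q_n}\,\delta q_n = \widehat{\mathbf{J}_{\omega}(\mathbf{q})\,\delta\mathbf{q}}
$$

Hence Equation (4) can be re-written as

$$
\left(\mathbf{R}^{T}(\mathbf{q})\,\mathbf{R}(\mathbf{q} + \delta\mathbf{q}) - \mathbf{I}_{3}\right)^{\vee} \approx \mathbf{J}_{\omega}(\mathbf{q})\,\delta\mathbf{q}
$$

where the $(\cdot)^{\vee}$ operator turns skew-symmetric matrices into the corresponding 3D vectors.

Given $M$ robot joint configurations $\mathbf{q}_i$, $i = 1, \ldots, M$, we determine an optimal estimate $\delta\hat{\mathbf{q}}$ via linear regression for the following system

$$
\mathbf{A}\,\delta\mathbf{q} = \mathbf{b}
$$

where $\mathbf{A} = \begin{bmatrix} \mathbf{J}_{\omega}(\mathbf{q}_1) \\ \vdots \\ \mathbf{J}_{\omega}(\mathbf{q}_M) \end{bmatrix} \in \mathbb{R}^{3M \times N}$ and $\mathbf{b} = \begin{bmatrix} \left(\mathbf{R}^{T}(\mathbf{q}_1)\,\mathbf{R}_{m,1} - \mathbf{I}_{3}\right)^{\vee} \\ \vdots \\ \left(\mathbf{R}^{T}(\mathbf{q}_M)\,\mathbf{R}_{m,M} - \mathbf{I}_{3}\right)^{\vee} \end{bmatrix} \in \mathbb{R}^{3M}$.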
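A Python sketch of this angular-offset regression follows, using a hypothetical three-joint chain in which the controller feedback is simulated from known offsets. Here $\mathbf{J}_{\omega}$ is computed as the joint axes expressed in the end-effector frame (the bottom rows of the body Jacobian), and $(\cdot)^{\vee}$ is applied to the skew-symmetric part of $\mathbf{R}^{T}\mathbf{R}_{m} - \mathbf{I}_{3}$, which is only approximately skew-symmetric. With `n_iter=1` the function performs the single linear regression described above; re-linearizing a few times is a Gauss-Newton refinement added here for illustration and is not part of the described method.

```python
import numpy as np

def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

# Hypothetical 3-joint chain; translations do not affect orientation, so they are omitted
R_FIXED = [np.eye(3), rot_x(-np.pi / 2), rot_x(np.pi / 2)]

def rotation_and_axes(q):
    """End-effector rotation R(q) and the base-frame z-axis of each joint frame."""
    R, axes = np.eye(3), []
    for q_n, R_n in zip(q, R_FIXED):
        R = R @ R_n @ rot_z(q_n)
        axes.append(R[:, 2])  # joint n rotates about this axis
    return R, np.column_stack(axes)

def angular_jacobian(q):
    """Body-frame angular Jacobian J_w(q): joint axes expressed in the end-effector frame."""
    R, Z = rotation_and_axes(q)
    return R.T @ Z

def vee_skew(E):
    """(.)^vee of the skew-symmetric part of E."""
    S = 0.5 * (E - E.T)
    return np.array([S[2, 1], S[0, 2], S[1, 0]])

def identify_angular_offsets(Q, R_meas, n_iter=1):
    """Least-squares solution of A dq = b; n_iter > 1 re-linearizes about the estimate."""
    dq = np.zeros(Q.shape[1])
    for _ in range(n_iter):
        A = np.vstack([angular_jacobian(q + dq) for q in Q])                  # (3M, N)
        b = np.concatenate([vee_skew(rotation_and_axes(q + dq)[0].T @ R_m - np.eye(3))
                            for q, R_m in zip(Q, R_meas)])                    # (3M,)
        step, *_ = np.linalg.lstsq(A, b, rcond=None)
        dq = dq + step
    return dq

rng = np.random.default_rng(2)
dq_true = np.array([0.01, -0.02, 0.015])                  # hypothetical offsets (rad)
Q = rng.uniform(-np.pi, np.pi, size=(50, 3))
R_meas = [rotation_and_axes(q + dq_true)[0] for q in Q]   # stands in for controller feedback
dq_single = identify_angular_offsets(Q, R_meas)           # one linear regression
dq_refined = identify_angular_offsets(Q, R_meas, n_iter=5)
print(dq_single, dq_refined)
```

The single-step estimate carries a small second-order error from the linearization; for offsets of a few hundredths of a radian, as here, that error is much smaller than the offsets themselves.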

4.2. Identification of Linear Offsets ($\delta\mathbf{p}$)

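Once the calibration for the joint offsets is done, the angular adjustments can be taken into account by rewriting

$$
\mathbf{q} \leftarrow \mathbf{q} + \delta\hat{\mathbf{q}}
$$

Now, the linear offsets are accommodated into the robot kinematics by re-writing the homogeneous transformation between the joint frames as

$$
{}^{n-1}\mathbf{T}_{n}(q_n + \delta\hat{q}_n, \delta\mathbf{p}_{n}) = \begin{bmatrix} {}^{n-1}\mathbf{R}_{n}(q_n + \delta\hat{q}_n) & {}^{n-1}\mathbf{p}_{n} + \delta\mathbf{p}_{n} \\ \mathbf{0}^{T} & 1 \end{bmatrix}
$$

For the same $M$ measurements, we find the linear offsets $\delta\mathbf{p} = [\delta\mathbf{p}_{1}^{T}, \ldots, \delta\mathbf{p}_{N}^{T}]^{T}$ that minimize the difference

$$
\sum_{i=1}^{M} \left\| \mathbf{p}(\mathbf{q}_i + \delta\hat{\mathbf{q}}, \delta\mathbf{p}) - \mathbf{p}_{m,i} \right\|^{2}
$$

where $\mathbf{p}(\mathbf{q}_i + \delta\hat{\mathbf{q}}, \delta\mathbf{p})$ is the corrected end-effector position and $\mathbf{p}_{m,i}$ is the known robot position.

The first-order approximation of the error can be written as

$$
\mathbf{p}_{m,i} - \mathbf{p}(\mathbf{q}_i + \delta\hat{\mathbf{q}}, \mathbf{0}) \approx \sum_{n=1}^{N} {}^{0}\mathbf{R}_{n-1}(\mathbf{q}_i + \delta\hat{\mathbf{q}})\,\delta\mathbf{p}_{n}
$$

Therefore, the optimal estimate $\delta\hat{\mathbf{p}}$ can be computed as a linear regression of the following (linear) system

$$
\mathbf{C}\,\delta\mathbf{p} = \mathbf{d}, \quad \mathbf{C} = \begin{bmatrix} \mathbf{I}_{3} & {}^{0}\mathbf{R}_{1}(\mathbf{q}_1 + \delta\hat{\mathbf{q}}) & \cdots & {}^{0}\mathbf{R}_{N-1}(\mathbf{q}_1 + \delta\hat{\mathbf{q}}) \\ \vdots & \vdots & & \vdots \\ \mathbf{I}_{3} & {}^{0}\mathbf{R}_{1}(\mathbf{q}_M + \delta\hat{\mathbf{q}}) & \cdots & {}^{0}\mathbf{R}_{N-1}(\mathbf{q}_M + \delta\hat{\mathbf{q}}) \end{bmatrix}, \quad \mathbf{d} = \begin{bmatrix} \mathbf{p}_{m,1} - \mathbf{p}(\mathbf{q}_1 + \delta\hat{\mathbf{q}}, \mathbf{0}) \\ \vdots \\ \mathbf{p}_{m,M} - \mathbf{p}(\mathbf{q}_M + \delta\hat{\mathbf{q}}, \mathbf{0}) \end{bmatrix}
$$

The solution to the above equation gives the linear adjustments to be made, which can be taken into account as

$$
{}^{n-1}\hat{\mathbf{p}}_{n} = {}^{n-1}\mathbf{p}_{n} + \delta\hat{\mathbf{p}}_{n}, \quad n = 1, \ldots, N
$$

Hence, for a given joint configuration $\mathbf{q}$, the calibrated forward kinematic model can be represented as

$$
\mathbf{T}_{\mathrm{cal}}(\mathbf{q}) = \prod_{n=1}^{N} \begin{bmatrix} {}^{n-1}\mathbf{R}_{n}(q_n + \delta\hat{q}_n) & {}^{n-1}\hat{\mathbf{p}}_{n} \\ \mathbf{0}^{T} & 1 \end{bmatrix} \; {}^{N}\mathbf{T}_{E}
$$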
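The linear-offset regression can be sketched in Python as below, again with hypothetical chain parameters and simulated feedback, and assuming the identified angular offsets have already been added to the joint angles. Because the offsets enter only the translations, the end-effector position in this parameterization is affine in $\delta\mathbf{p}$, so the first-order model is exact. Some offset components have identical effects on the position and cannot be separated: for example, a shift of $\delta\mathbf{p}_{2}$ along the axis of joint 1 acts exactly like a constant shift of $\delta\mathbf{p}_{1}$. `np.linalg.lstsq` then returns the minimum-norm solution, which still reproduces the measured positions.

```python
import numpy as np

def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

# Hypothetical nominal ("CAD") parameters of a 3-joint chain (not Kinova data)
R_FIXED = [np.eye(3), rot_x(-np.pi / 2), rot_x(np.pi / 2)]
P_NOM = [np.array([0.0, 0.0, 0.15]), np.array([0.0, 0.0, 0.1]), np.array([0.0, -0.2, 0.0])]
P_TOOL = np.array([0.0, 0.0, 0.1])

def position_and_rotations(q, dp):
    """End-effector position p(q, dp) and the rotations ^0R_{n-1} multiplying each dp_n.

    q is assumed to already include the identified angular offsets.
    """
    R, p, rotations = np.eye(3), np.zeros(3), []
    for q_n, R_n, p_n, dp_n in zip(q, R_FIXED, P_NOM, dp):
        rotations.append(R)           # ^0R_{n-1}
        p = p + R @ (p_n + dp_n)      # corrected translation of ^{n-1}T_n
        R = R @ R_n @ rot_z(q_n)
    return p + R @ P_TOOL, rotations

def identify_linear_offsets(Q, P_meas):
    """Least-squares solution of C dp = d; returns an (N, 3) array of link offsets."""
    N = Q.shape[1]
    C_rows, d_rows = [], []
    for q, p_m in zip(Q, P_meas):
        p_nom, rotations = position_and_rotations(q, np.zeros((N, 3)))
        C_rows.append(np.hstack(rotations))  # 3 x 3N block row
        d_rows.append(p_m - p_nom)
    dp, *_ = np.linalg.lstsq(np.vstack(C_rows), np.concatenate(d_rows), rcond=None)
    return dp.reshape(N, 3)

rng = np.random.default_rng(3)
dp_true = rng.normal(scale=1e-3, size=(3, 3))   # hypothetical link offsets (m)
Q = rng.uniform(-np.pi, np.pi, size=(60, 3))
P_meas = [position_and_rotations(q, dp_true)[0] for q in Q]
dp_hat = identify_linear_offsets(Q, P_meas)

# Calibrated model: nominal translations plus identified offsets
max_residual = max(np.linalg.norm(p_m - position_and_rotations(q, dp_hat)[0])
                   for q, p_m in zip(Q, P_meas))
print(max_residual)
```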

5. Experimental Validation

5.1. Parameter Identification from the Training Dataset

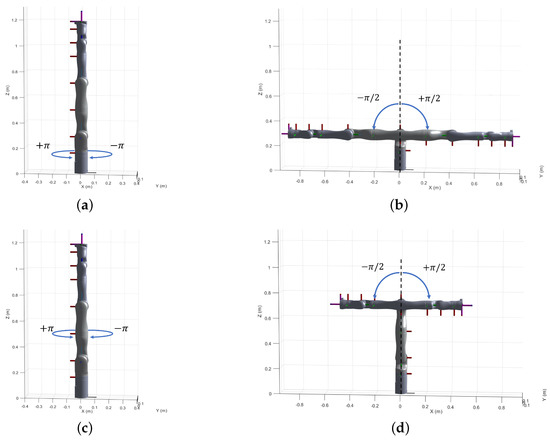

To validate the proposed approach, we collected a set of training data samples by executing the robot motion in the following manner. Joints with odd indices were commanded to move from 0 to rad followed by a motion from 0 to rad, and the joints with even indices were moved from 0 to rad followed by a motion from 0 to rad (due to self-collision constraints). Each joint was moved independently while the rest of the joints were held at their respective zero positions, i.e., for the $i$th joint, $q_j = 0$ for all $j \neq i$ (see Figure 2). The end-effector positions and orientations (i.e., the feedback from the controller) along with the joint configurations were logged during the robot motion. Once the samples were collected, the angular and the linear offsets were identified (Table 3) with the help of Equations (6) and (10), respectively, to obtain the corrected kinematic model, which we denote as Model 1.

Figure 2.

Commanded robot joint motion to generate the training dataset. (a) Joint 1. (b) Joint 2. (c) Joint 3. (d) Joint 4. (e) Joint 5. (f) Joint 6. (g) Joint 7.

Table 3.

Calibration parameters for Model 1.

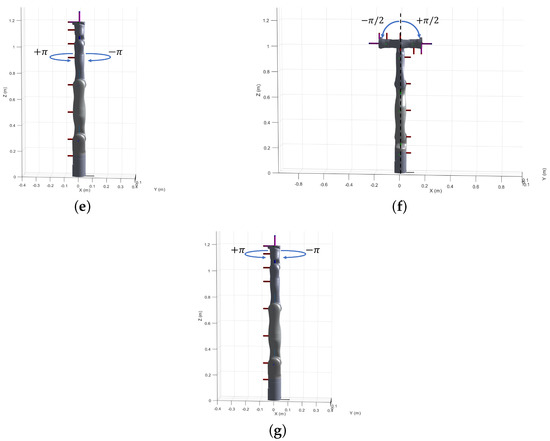

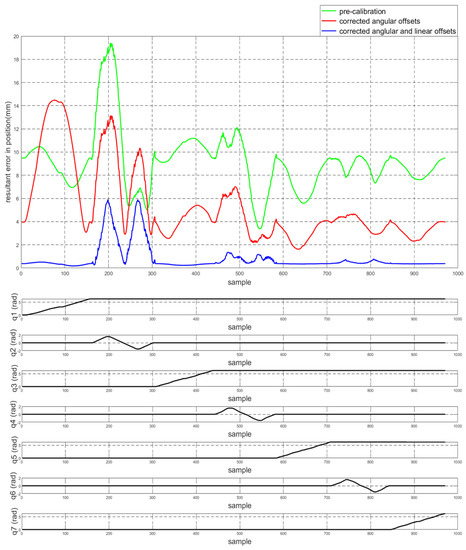

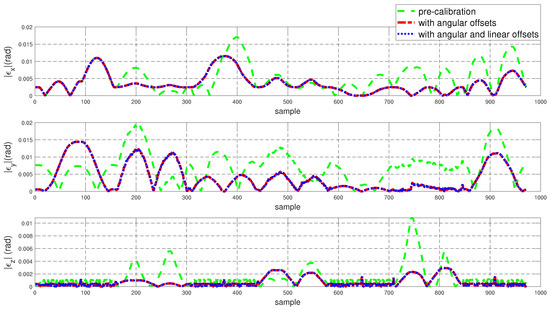

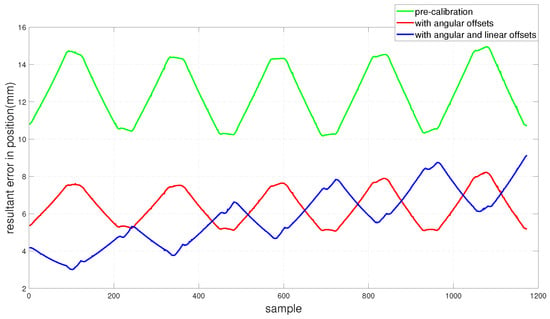

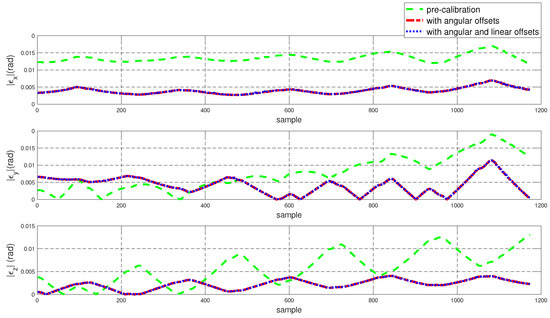

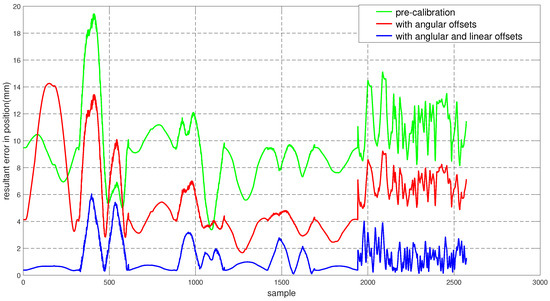

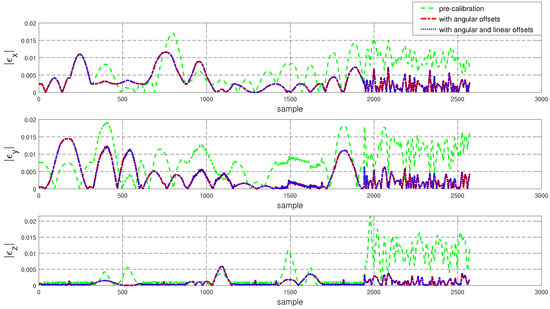

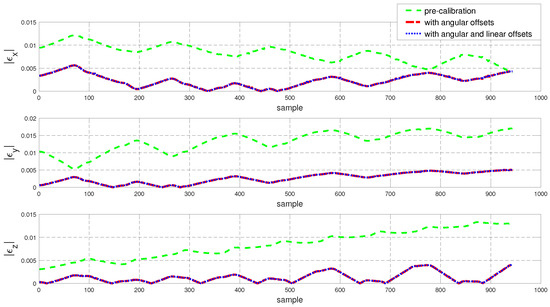

5.2. Validation on the Training Dataset

To assess the performance of the calibrated model on the training dataset, both the position and orientation errors have been computed before (Equation (1)) and after (Equation (11)) the calibration. Figure 3 depicts the absolute end-effector position error without calibration, with angular offset calibration only, and with both linear and angular offset calibration. For better insight, the robot joint angle profile during the training data collection is also shown. Qualitatively, the figure shows that the calibration improves the resultant position accuracy. From Table 4, it can be observed that our calibrated model brought down the maximum error by 69.59% and the mean error by 91.29%. The orientation error is computed and plotted in Figure 4. With reference to Table 5, by compensating for both the angular and linear offsets, the maximum error for each of the x, y and z dimensions is brought down by 32.75%, 25.77% and 73.15%, whereas the mean errors came down by 31.37%, 44.29% and 53.3%, respectively. The orientation error does not change when the linear offsets are introduced, which is in line with our assumption that the link lengths do not affect the orientation of the robot.
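The percentages reported in this section appear to be relative reductions; assuming the definition $(e_{\text{before}} - e_{\text{after}})/e_{\text{before}} \times 100$, they can be computed as in the following Python sketch. The helper names and the numbers are hypothetical and do not reproduce the paper's data.

```python
import numpy as np

def position_error_stats(p_model, p_feedback):
    """Max and mean of the resultant (Euclidean) position error over all samples."""
    e = np.linalg.norm(np.asarray(p_model, float) - np.asarray(p_feedback, float), axis=1)
    return e.max(), e.mean()

def percent_reduction(before, after):
    """Relative reduction (before - after) / before, in percent."""
    return 100.0 * (before - after) / before

# Hypothetical data (not from Tables 4 to 7): feedback at the origin for three samples
p_feedback = np.zeros((3, 3))
p_uncal = np.array([[3.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 5.0]])  # errors 3, 4, 5
p_cal = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])    # errors 1, 1, 1
max_before, mean_before = position_error_stats(p_uncal, p_feedback)
max_after, mean_after = position_error_stats(p_cal, p_feedback)
print(percent_reduction(max_before, max_after), percent_reduction(mean_before, mean_after))  # -> 80.0 75.0
```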

Figure 3.

The plot for the resultant end-effector position error for the training set before and after calibration (top) and the motion of each of the robot joints during the training set generation (bottom).

Table 4.

Resultant position error before and after calibration.

Figure 4.

Error in end-effector orientation for the training dataset before and after calibration (where $\mathbf{R}$ and $\mathbf{R}_{m}$ are the end-effector rotation matrices obtained using the kinematic model and the feedback, respectively).

Table 5.

Resultant orientation error before and after calibration.

5.3. Validation on the Test Dataset

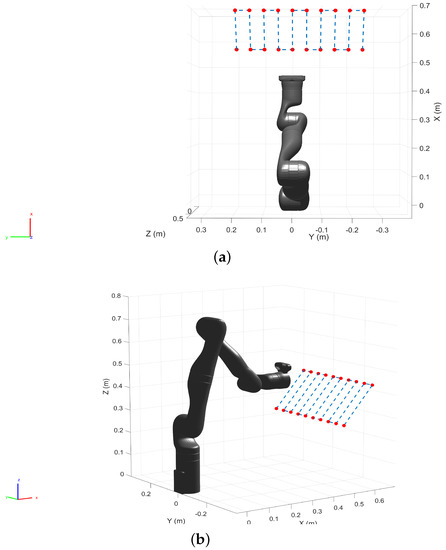

To validate the proposed approach on a test set, we generated a set of spatial locations spanning the first two quadrants of the robot base frame (Figure 5). The robot was commanded in position control mode to move to these locations, resulting in a raster motion from left to right, while the end-effector position and orientation were logged.

Figure 5.

Spatial data points are generated to collect test dataset. While the robot moves to each of the locations with a raster motion, the position and the orientation of the end-effector along with the joint angle are logged. (a) Top view. (b) Perspective view.

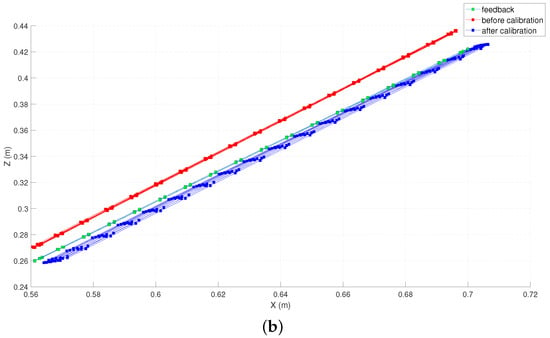

We computed the end-effector trajectory using both the un-calibrated and the calibrated models and superimposed the results on the commanded trajectory for comparison in Figure 6.

Figure 6.

Estimated end-effector position before and after calibration (down-sampled by 5) plotted along with the feedback positions. (a) Top view (X-Y plane). (b) Side view (X-Z plane).

The position and orientation error plots before and after the calibration are shown in Figure 7 and Figure 8 respectively. Despite the fact that the test data was generated mostly from a different subspace of the workspace compared to the training dataset, the calibrated model managed to bring down the resultant position error as can be observed in Figure 7. By compensating for the angular offsets alone, the maximum and the mean position errors were brought down by 44.97% and 48.8% respectively. With reference to Table 6, by accommodating both the angular and the linear offsets, the maximum position error is reduced by 38.93% whereas the mean error came down by 53.6%. Table 7 shows that, by compensating for both the angular and linear offsets, the maximum orientation error for each of the x, y and z dimensions is brought down by 59.17%, 39.47% and 68.70% whereas the mean errors are lowered by 70.30%, 45.60% and 61.40% respectively.

Figure 7.

Resultant end-effector position error for the test set before and after calibration.

Figure 8.

Error in end-effector orientation for the test dataset before and after calibration.

Table 6.

Resultant position error before and after calibration.

Table 7.

Resultant orientation error before and after calibration.

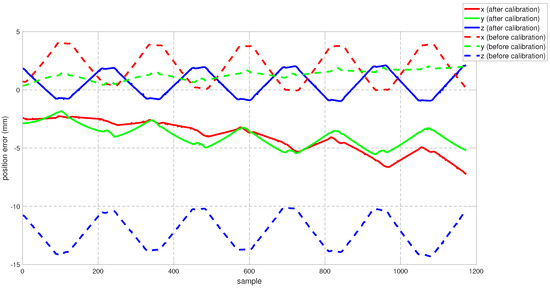

During the validation on the test set, although our approach succeeded in bringing down the resultant kinematic error, the corrected model did not perform well along the X and Y axes individually (see Figure 9). However, X-Y accuracy is often highly desirable, for instance in planar tasks where the task space is simply the X-Y plane. We hypothesise that the error in the X-Y plane arises because very few training data samples were collected from the desired subspace of the robot configuration space during the training set generation (see Figure 2). To validate this hypothesis, we devised a second experiment in which we collected more training data samples from the desired subspace of the task space, so that the calibrated model is more meaningful for the task to be performed by the robot.

Figure 9.

Test set position error along individual axes.

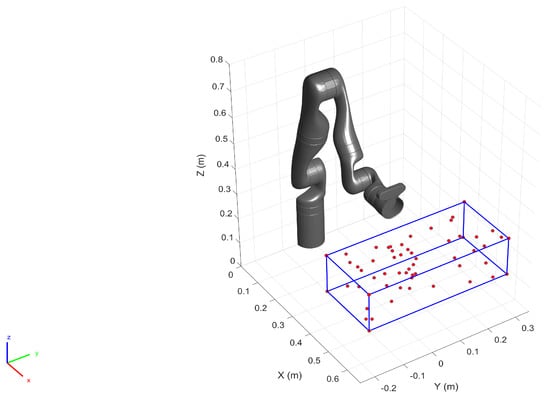

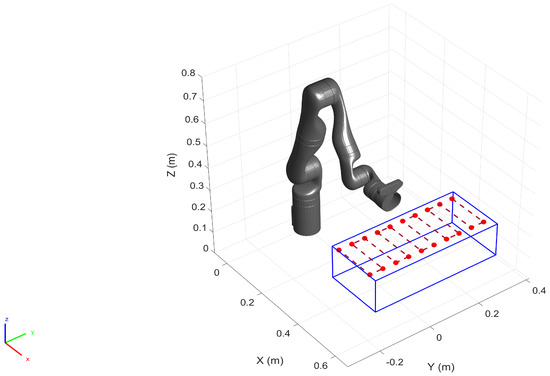

6. Operation Space Targeted Calibration

In this section, we perform the calibration by collecting additional training data samples from the pre-defined operating space of the robot. To that end, we first defined a work volume within which we assume the robot performs a given task (see Figure 10). We then generated a total of 58 spatial locations within the work volume (including the vertices) as goal positions for the robot. To populate the training dataset, we commanded the robot (in position control mode) to move to each of the generated goal positions while logging the position and the orientation of the end-effector along with the joint configuration. The robot was commanded to maintain a constant orientation throughout the motion. The additional training data samples, together with the original data samples (see Figure 3), form the final training dataset for calibration; almost 25% of this training set is composed of the additional data samples.

Figure 10.

For localized calibration, a work volume is defined (formed by the blue lines), within which 58 spatial points (red circles) were generated. The robot moves to each of the 3D points, and the simultaneous logging of the robot position and orientation populates the training set.

The calibration performed on the final training dataset yielded the set of calibration parameters tabulated in Table 8, and the corrected kinematic model is referred to as Model 2.

Table 8.

Calibration parameters for Model 2.

6.1. Validation on the Training Set

To evaluate the performance of Model 2 on the training set, both the position and the orientation errors have been computed and plotted in Figure 11 and Figure 12 respectively. The statistical analysis for the error data is carried out and outlined in Table 9 and Table 10.

Figure 11.

Resultant end-effector position error for the training set before and after calibration. The error corresponding to the additional training data starts at sample number 1940.

Figure 12.

Error in end-effector orientation for the training dataset before and after calibration.

Table 9.

Resultant position error before and after calibration.

Table 10.

Resultant orientation error before and after calibration.

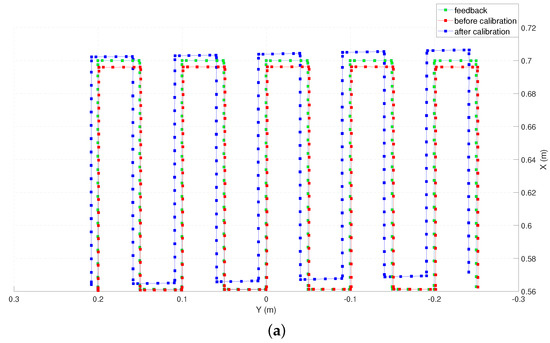

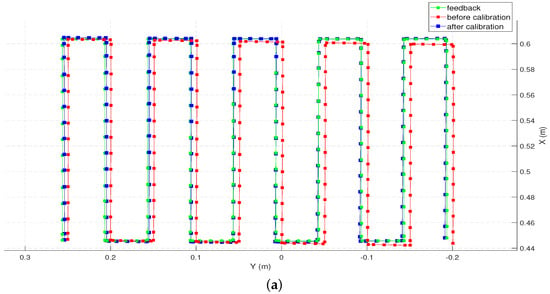

6.2. Validation on the Test Set

To populate the test dataset, we generated 20 spatial points in the pre-defined work volume as shown in Figure 13. The robot (in position control mode) was commanded to move to each of these locations by performing a raster motion maintaining a constant orientation as illustrated in Figure 13. The Cartesian position and the orientation of the end-effector were logged together with the robot joint configuration.

Figure 13.

Spatial data points are created within the pre-defined work volume and the test data samples are collected as the robot moves to each of the locations.

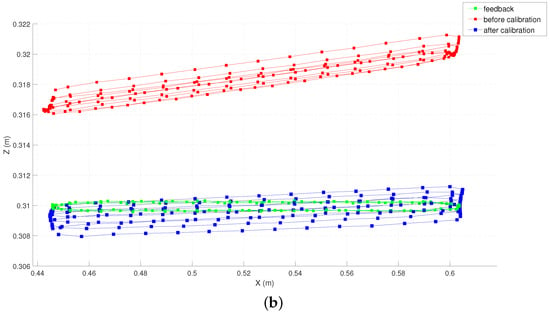

To evaluate the accuracy along individual axes, the commanded trajectory for the robot, the factory-calibrated feedback from the controller, and the forward kinematics given by the calibrated and the un-calibrated models are plotted on the X-Y and the X-Z planes as shown in Figure 14 (the data points representing the position before and after calibration are down-sampled by 10 for clarity of illustration). Qualitatively, it can be observed that the end-effector position computed by the calibrated model is closer to the feedback than the one computed by the un-calibrated model along all three axes.

Figure 14.

Estimated end-effector position before and after calibration (down-sampled by 10) plotted along with the feedback positions. (a) Top view (X-Y plane). (b) Side view (X-Z plane).

The performance of the calibrated model on the test data is evaluated and both the position and the orientation error are plotted in Figure 15 and Figure 16 respectively. In addition, the statistical analysis was performed and tabulated in Table 11 and Table 12.

Figure 15.

Resultant end-effector position error for the test set before and after calibration.

Figure 16.

Resultant error in end-effector orientation for the test dataset before and after calibration.

Table 11.

Resultant position error before and after calibration.

Table 12.

Resultant orientation error before and after calibration.

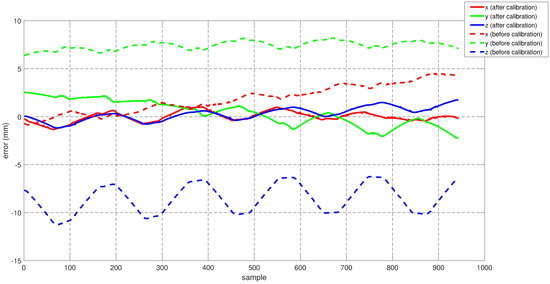

To perform a quantitative analysis, the error values for each of the axes are plotted in Figure 17. From the plot, it can be noticed that the calibrated model performs significantly better in comparison to its un-calibrated counterpart.

Figure 17.

Test set position error along individual axes.
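A per-axis comparison of this kind, whether between the calibrated and un-calibrated models or between two differently trained models, reduces to a component-wise error against the same feedback. The sketch below assumes absolute errors averaged over the test samples (a signed error would additionally reveal bias); the function names and toy data are hypothetical.

```python
import numpy as np

def per_axis_abs_error(p_model, p_feedback):
    """Absolute error along X, Y and Z for every sample; returns shape (N, 3)."""
    return np.abs(np.asarray(p_model, dtype=float) - np.asarray(p_feedback, dtype=float))

def mean_axis_errors(p_feedback, **models):
    """Mean absolute X/Y/Z error of each named model against the same feedback."""
    return {name: per_axis_abs_error(p, p_feedback).mean(axis=0)
            for name, p in models.items()}

# Toy data (metres): one model biased along X and Y, the other slightly off in Z only.
p_feedback = np.array([[0.40, 0.10, 0.30], [0.45, -0.05, 0.32]])
model_a = p_feedback + np.array([0.004, -0.003, 0.001])
model_b = p_feedback + np.array([0.0, 0.0, 0.0005])

for name, err in mean_axis_errors(p_feedback, model_1=model_a, model_2=model_b).items():
    print(name, np.round(err * 1000, 2), "mm")
```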

To test our hypothesis regarding the error in the X-Y plane for Model 1, Figure 18 compares the errors along the X, Y and Z axes for Model 1 and Model 2. On the same test dataset, Model 2 (i.e., the model trained on samples collected from the operating space of the robot) performs better than Model 1. This is consistent with our hypothesis that the increase in the X-Y errors observed in Section 5.3 (Figure 9) arises because very few training samples were collected from the relevant subspace of the robot configuration space.

Figure 18.

Comparison of the error along the coordinate axes for Model 1 and Model 2.

7. Discussion

The work presented in this paper focuses on compensating for the linear and angular offsets that adversely affect the position accuracy of the robot. However, we do not account for factors such as the compliance of the links or of the joint transmission systems. These effects become significant in the presence of a payload, or even under gravity alone, particularly in configurations that impose relatively high torques on the links and joints. We observed that, while the training dataset was being logged, the robot passed through a total of six such configurations (Figure 2b,d,f), in which the extension of the distal bodies imposes higher gravitational torques. Corresponding to these configurations, the resulting error plots (see Figure 3) show three pairs of closely matching peaks, which diminish in magnitude as the motion progresses to the distal joints (where the gravitational loading is also lower). We therefore infer that the larger error peaks are caused by link deflection under the increased gravitational loading, which was not accounted for during calibration.
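A short static calculation illustrates why extended configurations load the joints more heavily and why the loading falls off toward the distal joints. This is a sketch under simplifying assumptions that are not taken from the paper: a planar three-link arm moving in a vertical plane, rigid links with their masses lumped at the link midpoints, and hypothetical link lengths and masses rather than Kinova Gen3 values.

```python
import numpy as np

def gravity_torques(q, lengths, masses, g=9.81):
    """Static gravity moment (N*m) about each joint of a planar serial arm
    moving in a vertical plane, with each link's mass lumped at its midpoint.
    q[0] is measured from the horizontal; later angles are relative."""
    angles = np.cumsum(q)                                   # absolute link angles
    dx = np.asarray(lengths, dtype=float) * np.cos(angles)  # horizontal extent of each link
    x_joints = np.concatenate(([0.0], np.cumsum(dx)[:-1]))  # x of the joint at the base of each link
    x_coms = x_joints + 0.5 * dx                            # x of each link's centre of mass
    m = np.asarray(masses, dtype=float)
    # Joint k carries the weight of links k..n-1; the moment arm is the horizontal
    # distance from the joint to each distal centre of mass.
    return np.array([g * np.sum(m[k:] * (x_coms[k:] - x_joints[k])) for k in range(len(q))])

lengths = [0.40, 0.40, 0.20]  # hypothetical link lengths (m)
masses = [1.5, 1.2, 0.8]      # hypothetical link masses (kg)

extended = gravity_torques([0.0, 0.0, 0.0], lengths, masses)      # arm stretched out horizontally
folded = gravity_torques([np.pi / 2, 0.0, 0.0], lengths, masses)  # arm pointing straight up
print(np.round(extended, 3))  # largest at the proximal joint, shrinking distally
print(np.round(folded, 3))    # close to zero
```

Under these assumptions the proximal joint of the stretched-out arm carries roughly three times the moment of the next joint, while the upright arm carries almost none, which matches the qualitative pattern attributed to the error peaks.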

8. Conclusions

Robot calibration is necessary for planning and executing both contact and non-contact tasks reliably and safely. This paper presents a fast re-calibration method that improves the robot position and orientation accuracy by compensating for the joint offset error as well as for discrepancies in the link dimensions. Our approach assumes that the actual forward kinematics is available with sufficient accuracy, e.g., through the factory-calibrated feedback from the controller. A set of parameters accounting for the linear and angular discrepancies is identified by minimising the mismatch between this feedback and the computed forward kinematics. Experimental results show that the proposed approach substantially improves the accuracy of the forward kinematics compared with the un-calibrated model.
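The identification step summarised above can be illustrated on a toy problem. This is a minimal sketch, not the authors' implementation: it assumes a planar three-joint arm instead of the 7-DoF Kinova Gen3, noise-free synthetic "oracle" poses standing in for the controller's factory-calibrated feedback, unweighted stacking of position (m) and angle (rad) residuals, and a plain Gauss-Newton solver with a forward-difference Jacobian. The functions `fk_planar`, `residuals` and `calibrate` and all numerical values are hypothetical.

```python
import numpy as np

def fk_planar(q, offsets, lengths):
    """End-effector pose (x, y, phi) of a planar serial arm.
    q: (N, n) joint readings; offsets: (n,) joint zero offsets; lengths: (n,)."""
    angles = np.cumsum(q + offsets, axis=1)  # absolute angle of each link
    x = np.sum(lengths * np.cos(angles), axis=1)
    y = np.sum(lengths * np.sin(angles), axis=1)
    return np.column_stack([x, y, angles[:, -1]])

def residuals(params, q, pose_oracle):
    """Stacked mismatch between the parametrised model and the oracle poses."""
    n = q.shape[1]
    r = fk_planar(q, params[:n], params[n:]) - pose_oracle
    r[:, 2] = np.arctan2(np.sin(r[:, 2]), np.cos(r[:, 2]))  # wrap angle error to (-pi, pi]
    return r.ravel()

def calibrate(q, pose_oracle, nominal_lengths, iters=20, h=1e-7):
    """Gauss-Newton identification of joint offsets and link lengths,
    starting from zero offsets and the nominal (datasheet) lengths."""
    n = q.shape[1]
    params = np.concatenate([np.zeros(n), np.asarray(nominal_lengths, dtype=float)])
    for _ in range(iters):
        r = residuals(params, q, pose_oracle)
        # Forward-difference Jacobian of the residual vector w.r.t. the parameters.
        J = np.empty((r.size, params.size))
        for k in range(params.size):
            dp = np.zeros_like(params)
            dp[k] = h
            J[:, k] = (residuals(params + dp, q, pose_oracle) - r) / h
        step = np.linalg.lstsq(J, -r, rcond=None)[0]
        params = params + step
        if np.linalg.norm(step) < 1e-12:
            break
    return params[:n], params[n:]

# Synthetic "oracle": the same arm with small, hypothetical offset and length errors.
rng = np.random.default_rng(0)
q = rng.uniform(-np.pi, np.pi, size=(50, 3))
nominal = np.array([0.40, 0.30, 0.20])
true_offsets = np.array([0.010, -0.020, 0.015])
true_lengths = nominal + np.array([0.002, -0.001, 0.0015])
pose_oracle = fk_planar(q, true_offsets, true_lengths)

offsets, lengths = calibrate(q, pose_oracle, nominal)
```

With noise-free data and joint readings spread over the full range, the offsets and lengths are recovered essentially exactly; with real controller feedback, the residual left after convergence would reflect unmodelled effects such as the link compliance discussed in Section 7.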

Author Contributions

Conceptualization, S.K., J.G. and D.C.; methodology, S.K., J.G., D.C. and S.H.T.; software, S.K. and V.R.; data curation, S.K., J.G. and V.R.; validation, S.K.; formal analysis, S.K. and D.C.; investigation, S.K. and J.G.; resources, D.C.; writing—original draft preparation, S.K. and J.G.; writing—review and editing, S.K., J.G., V.R., S.H.T., M.Z.A. and D.C.; supervision, D.C.; project administration, J.G., D.C. and M.Z.A.; funding acquisition, D.C. All authors have read and agreed to the published version of the manuscript.

Funding

The work was conducted within the Delta-NTU Corporate Lab for Cyber-Physical Systems with funding support from Delta Electronics Inc. and the National Research Foundation (NRF) Singapore under the Corp Lab @ University Scheme, and was partly supported by the National Research Foundation, Singapore, under the NRF Medium Sized Centre scheme (CARTIN).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Disclaimer

Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not reflect the views of the National Research Foundation, Singapore.

References

- Conrad Kevin, L.; Yih, T.C. Robotic Calibration Issues: Accuracy, Repeatability and Calibration. In Proceedings of the 8th Mediterranean Conference on Control & Automation, Rio, Greece, 17–19 July 2000.

- Judd, A.; Knasinski, R. A technique to calibrate industrial robots with experimental verification. IEEE Trans. Robot. Autom. 1990, 6, 20–30.

- ISO 9283:1998(R2015); Manipulating Industrial Robots—Performance Criteria and Related Test Methods. Standard, ISO Technical Committee 299 Robotics, BSI: London, UK, 1998.

- Gan, Y.; Duan, J.; Dai, X. A calibration method of robot kinematic parameters by drawstring displacement sensor. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419883072.

- Motta, J.M.S.; Llanos-Quintero, C.H.; Coral Sampaio, R. Inverse Kinematics and Model Calibration Optimization of a Five-D.O.F. Robot for Repairing the Surface Profiles of Hydraulic Turbine Blades. Int. J. Adv. Robot. Syst. 2016, 13, 114.

- Wang, W.; Wang, G.; Yun, C. A calibration method of kinematic parameters for serial industrial robots. Ind. Robot. 2014, 41, 157–165.

- Elatta, A.Y.; Gen, L.P.; Zhi, F.L.; Daoyuan, Y.; Fei, L. An Overview of Robot Calibration. Inf. Technol. J. 2004, 3, 74–78.

- Slamani, M.; Nubiola, A.; Bonev, I.A. Modeling and assessment of the backlash error of an industrial robot. Robotica 2012, 30, 1167–1175.

- Lattanzi, L.; Cristalli, C.; Massa, D.; Boria, S.; Lépine, P.; Pellicciari, M. Geometrical calibration of a 6-axis robotic arm for high accuracy manufacturing task. Int. J. Adv. Manuf. Technol. 2020, 111, 1813–1829.

- Hayati, S.A. Robot arm geometric link parameter estimation. In Proceedings of the 22nd IEEE Conference on Decision and Control, San Antonio, TX, USA, 14–16 December 1983; pp. 1477–1483.

- Cai, Y.; Yuan, P.; Chen, D. A flexible calibration method connecting the joint space and the working space of industrial robots. Ind. Robot. Int. J. 2018, 45, 407–415.

- Zeng, Y.; Tian, W.; Li, D.; He, X.; Liao, W. An error-similarity-based robot positional accuracy improvement method for a robotic drilling and riveting system. Int. J. Adv. Manuf. Technol. 2017, 88, 2745–2755.

- Messay, T.; Chen, C.; Ordóñez, R.; Taha, T.M. GPGPU acceleration of a novel calibration method for industrial robots. In Proceedings of the 2011 IEEE National Aerospace and Electronics Conference (NAECON), Dayton, OH, USA, 20–22 July 2011; pp. 124–129.

- Zeng, Y.; Tian, W.; Liao, W. Positional error similarity analysis for error compensation of industrial robots. Robot. Comput.-Integr. Manuf. 2016, 42, 113–120.

- Chen, G.; Wang, H.; Lin, Z. Determination of the identifiable parameters in robot calibration based on the POE formula. IEEE Trans. Robot. 2014, 30, 1066–1077.

- Li, Z.; Li, S.; Luo, X. An overview of calibration technology of industrial robots. IEEE/CAA J. Autom. Sin. 2021, 8, 23–36.

- Jiang, Z.; Huang, M.; Tang, X.; Song, B.; Guo, Y. Observability index optimization of robot calibration based on multiple identification spaces. Auton. Robot. 2020, 44, 1029–1046.

- Li, C.; Wu, Y.; Löwe, H.; Li, Z. POE-based robot kinematic calibration using axis configuration space and the adjoint error model. IEEE Trans. Robot. 2016, 32, 1264–1279.

- Liu, H.; Zhu, W.; Dong, H.; Ke, Y. An improved kinematic model for serial robot calibration based on local POE formula using position measurement. Ind. Robot. Int. J. 2018, 45, 573–584.

- Yang, X.; Wu, L.; Li, J.; Chen, K. A minimal kinematic model for serial robot calibration using POE formula. Robot. Comput.-Integr. Manuf. 2014, 30, 326–334.

- Jin, Z.; Yu, C.; Li, J.; Ke, Y. A robot assisted assembly system for small components in aircraft assembly. Ind. Robot. Int. J. 2014, 41, 413–420.

- Lertpiriyasuwat, V.; Berg, M.C. Adaptive real-time estimation of end-effector position and orientation using precise measurements of end-effector position. IEEE/ASME Trans. Mechatron. 2006, 11, 304–319.

- Posada, J.D.; Schneider, U.; Pidan, S.; Gerav, M.; Stelzer, P.; Verl, A. High accurate robotic drilling with external sensor and compliance model-based compensation. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 3901–3907.

- Nguyen, H.-N.; Zhou, J.; Kang, H.-J. A new full pose measurement method for robot calibration. Sensors 2013, 13, 9132–9147.

- Jiang, Y.; Yu, L.; Jia, H.; Zhao, H.; Xia, H. Absolute positioning accuracy improvement in an industrial robot. Sensors 2020, 20, 4354.

- Chen, T.; Lin, J.; Wu, D.; Wu, H. Research of Calibration Method for Industrial Robot Based on Error Model of Position. Appl. Sci. 2021, 11, 1287.

- Whitney, D.E.; Lozinski, C.A.; Rourke, J.M. Industrial robot forward calibration method and results. J. Dyn. Syst. Meas. Control. 1986, 108, 1–8.

- Gharaaty, S.; Shu, T.; Joubair, A.; Xie, W.F.; Bonev, I.A. Online pose correction of an industrial robot using an optical coordinate measure machine system. Int. J. Adv. Robot. Syst. 2018, 15, 1729881418787915.

- Hsiao, T.; Huang, P.-H. Iterative learning control for trajectory tracking of robot manipulators. Int. J. Autom. Smart Technol. 2017, 7, 133–139.

- Siciliano, B.; Khatib, O.; Kröger, T. Springer Handbook of Robotics; Springer: Berlin/Heidelberg, Germany, 2016; Volume 200.

- Joubair, A.; Bonev, I.A. Kinematic calibration of a six-axis serial robot using distance and sphere constraints. Int. J. Adv. Manuf. Technol. 2015, 77, 515–523.

- He, S.; Ma, L.; Yan, C.; Lee, C.-H.; Hu, P. Multiple location constraints based industrial robot kinematic parameter calibration and accuracy assessment. Int. J. Adv. Manuf. Technol. 2019, 102, 1037–1050.

- Press, W.H.; Teukolsky, S.A.; Vetterling, W.T.; Flannery, B.P. Numerical recipes: The art of scientific computing. Phys. Today 1987, 40, 120.

- Nubiola, A.; Bonev, I.A. Absolute robot calibration with a single telescoping ballbar. Precis. Eng. 2014, 38, 472–480.

- Nadeau, N.A.; Bonev, I.A.; Joubair, A. Impedance control self-calibration of a collaborative robot using kinematic coupling. Robotics 2019, 8, 33.

- Gaudreault, M.; Joubair, A.; Bonev, I. Self-calibration of an industrial robot using a novel affordable 3D measuring device. Sensors 2018, 18, 3380.

- Li, G.; Zhang, F.; Fu, Y.; Wang, S. Kinematic calibration of serial robot using dual quaternions. Ind. Robot. Int. J. Robot. Res. Appl. 2019, 46, 247–258.

- Lynch, K.M.; Park, F.C. Modern Robotics; Cambridge University Press: Cambridge, UK, 2017.

- Murray, R.M.; Li, Z.; Sastry, S.S. A Mathematical Introduction to Robotic Manipulation; CRC Press: Boca Raton, FL, USA, 2017.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).