1. Introduction

Underwater target classification has been an active research topic because of its broad range of important applications. However, complicated and variable marine environments, volatile modes of target-radiated noise, and a lack of training samples make the classification of underwater targets challenging. In particular, the radiated noise of an underwater target received by a hydrophone is affected by the navigational status of the target, the marine environment, and other factors, which leads to low classification accuracy.

Convolutional neural networks (CNNs) provide an efficient way to classify targets in the field of image processing through local connections and weight sharing. A CNN combines feature extraction and classifier design. Compared with traditional methods, a CNN can avoid feature loss and the curse of dimensionality, and improve the efficiency and accuracy of classification [1]. When applying a CNN to underwater target classification, the usual approach is to transform the target data into image data. The image data are then preprocessed and fed into the CNN to classify the target.

Ref. [1] proposed a competitive deep-belief network (CDBN) to learn underwater acoustic target features with more discriminative information from both labeled and unlabeled samples. By stacking the proposed competitive restricted Boltzmann machine, the network could adjust the activation level of the grouped hidden units by competitive learning. Ref. [2] presented an automatic target recognition approach for sonar onboard unmanned underwater vehicles (UUVs). Target features were extracted by a convolutional neural network (CNN) operating on sonar images, and then classified by a support vector machine (SVM) that was trained on manually labeled data. Ref. [3] developed a new subband-based classification scheme to classify underwater mines and mine-like targets from acoustic backscattered signals. The system consisted of a feature extractor using wavelet packets in conjunction with linear predictive coding (LPC), a feature selection scheme, and a backpropagation neural-network classifier. Ref. [4] used the idea of transfer learning to pre-train the neural network on the ImageNet dataset, and improved fish recognition performance correspondingly. Ref. [5] utilized a CNN to learn deep and robust features of underwater targets and then removed the fully connected layers. An extreme learning machine (ELM) fed with the CNN features was used as the classifier. Experiments on an actual dataset of civil ships obtained a recognition rate up to . Ref. [6] used a sparse autoencoder (SAE) to obtain spectral numbers from data of underwater targets, in combination with a softmax classifier. Ref. [7] proposed a classification and recognition method based on a time-domain second-order pooled CNN with a time–frequency joint attention mechanism.

In general, sample sizes of underwater targets are small, and the different categories are imbalanced. Both issues cause serious problems when applying deep learning algorithms, yet they are not considered in the above investigations. With a small sample size, a deep learning model may be too complex for the available data, involving too many parameters and a prohibitive computational burden. Imbalanced categories may seriously interfere with the training of the model and bias the classification towards the class that dominates the dataset.

In this paper, we investigate underwater target classification based on a deep learning algorithm. To tackle the problems of small sample size and imbalanced categories in underwater target data, we propose a modified DCGAN model that augments the underwater target dataset by generating "fake" data with high quality and diversity from real target data. We also propose the S-ResNet model for underwater target classification, which combines a CNN with SqueezeNet, a popular lightweight neural network. We find that the proposed model obtains good classification accuracy while significantly reducing the complexity of the model.

We summarize the contributions of this paper as follows:

We have proposed a modified DCGAN model to augment data for underwater targets, which could improve the quality and training stability for underwater targets with a small sample size.

We have proposed an S-ResNet model to obtain good classification accuracy while significantly reducing the complexity of the model.

Field experiments have been carried out with five different types of underwater targets, verifying the effectiveness of the proposed models.

The structure of the paper is as follows. Section 2 introduces related works in the fields of data augmentation and classification models. Section 3 presents the materials and models of our proposed method, including the framework, the modified DCGAN model, and the S-ResNet classification model. Section 4 illustrates the performance of our proposed models with experimental data. Finally, Section 5 draws conclusions and discusses future work.

3. Proposed Underwater Target Classification Models

3.1. Framework

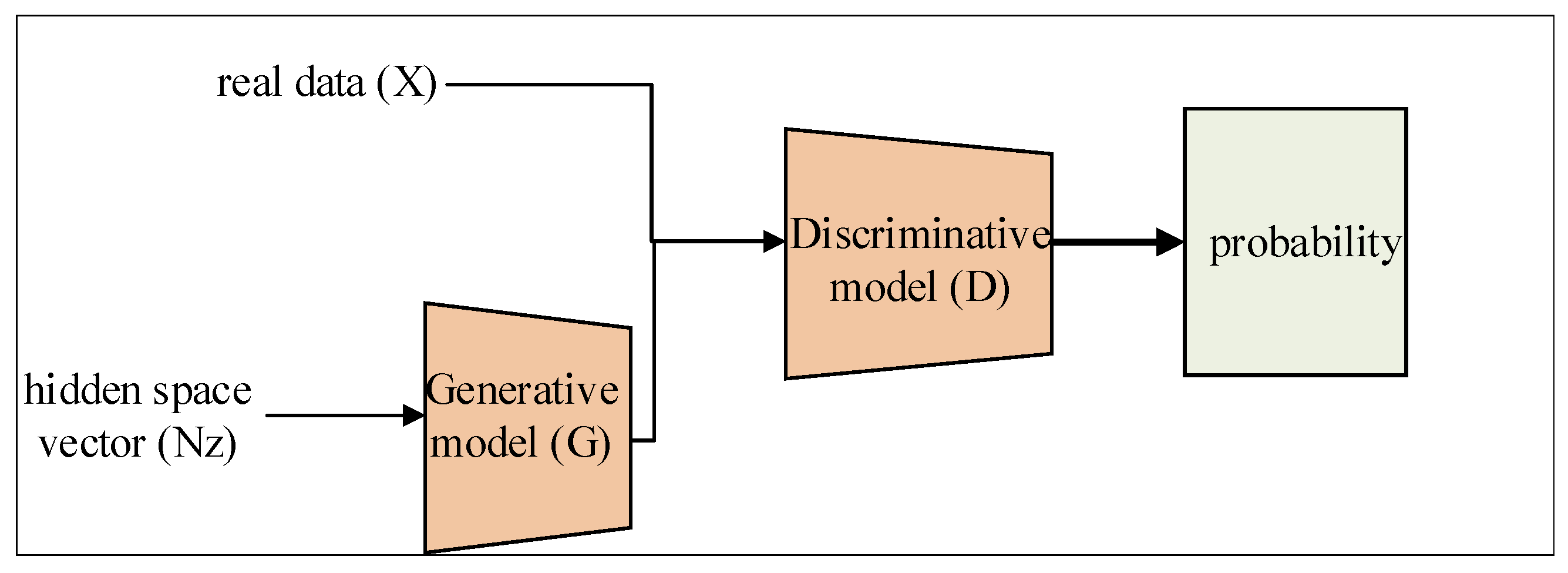

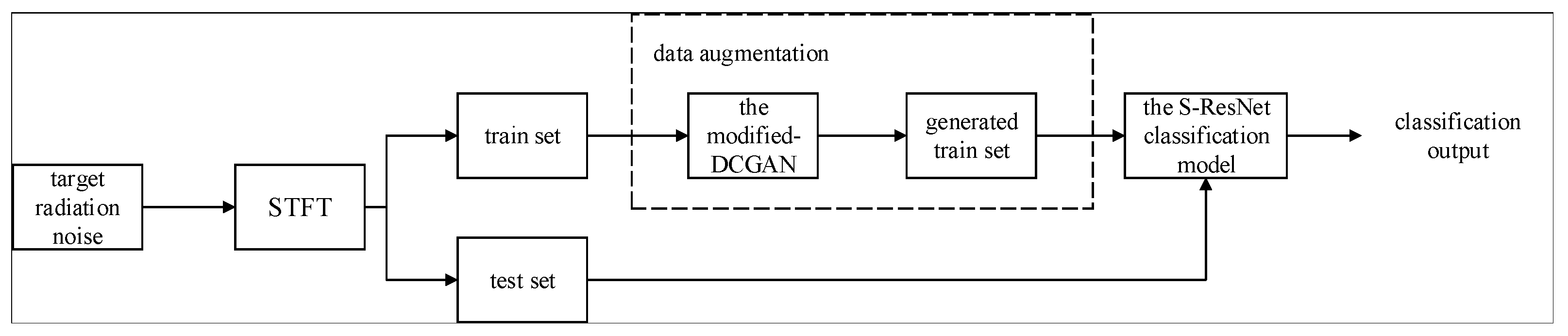

The block diagram of the proposed underwater target classification framework is shown in Figure 2. The collected radiated noise of a target is preprocessed by the short-time Fourier transform (STFT), which depicts the characteristics of different underwater targets in both the time and frequency domains. The two-dimensional STFT results can be viewed as images, and the distinct STFT characteristics of different underwater targets are captured by different images. Thus, CNN-based classification models can be used to classify underwater targets.
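As an illustration of the preprocessing step described above, the following minimal sketch computes a magnitude STFT with NumPy. The frame length, hop size, sampling rate, and the synthetic test signal are all assumptions chosen for the example; they are not the settings used in the experiments.

```python
import numpy as np

def stft_magnitude(signal, frame_len=256, hop=128):
    """Return a (frequency x time) magnitude spectrogram of a 1-D signal."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([
        signal[i * hop : i * hop + frame_len] * window
        for i in range(n_frames)
    ])
    # rfft keeps the non-negative frequencies: frame_len // 2 + 1 bins.
    return np.abs(np.fft.rfft(frames, axis=1)).T

# Synthetic stand-in for radiated noise: a 50 Hz tone plus weak noise.
fs = 1000          # sampling rate in Hz (hypothetical)
frame_len = 256    # samples per frame (hypothetical)
t = np.arange(2 * fs) / fs
rng = np.random.default_rng(0)
x = np.sin(2 * np.pi * 50 * t) + 0.1 * rng.standard_normal(t.size)

spec = stft_magnitude(x, frame_len=frame_len, hop=128)

# The strongest frequency bin, averaged over time, should sit near 50 Hz.
peak_bin = int(spec.mean(axis=1).argmax())
peak_hz = peak_bin * fs / frame_len
```

The resulting array `spec` can be treated as a single-channel image in which a stable tonal component of the target appears as a horizontal line.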

We follow this methodology and randomly divide the target data, in the form of time–frequency images, into training and test sets. The problem, however, is that given the cost of obtaining data samples for different underwater targets, one may lack sufficient training data, especially for some types of targets. Because we are dealing with time–frequency images, a natural way to handle this problem is data augmentation with generative models such as GAN and DCGAN, which generate new data for the target. Unfortunately, we find that neither GAN nor DCGAN is effective for underwater targets. In this paper, we propose a modified DCGAN model to augment the training data for targets with limited samples. The augmented data are used to train and optimize the subsequent classification model. To classify underwater targets more efficiently, we also propose the S-ResNet classification model.

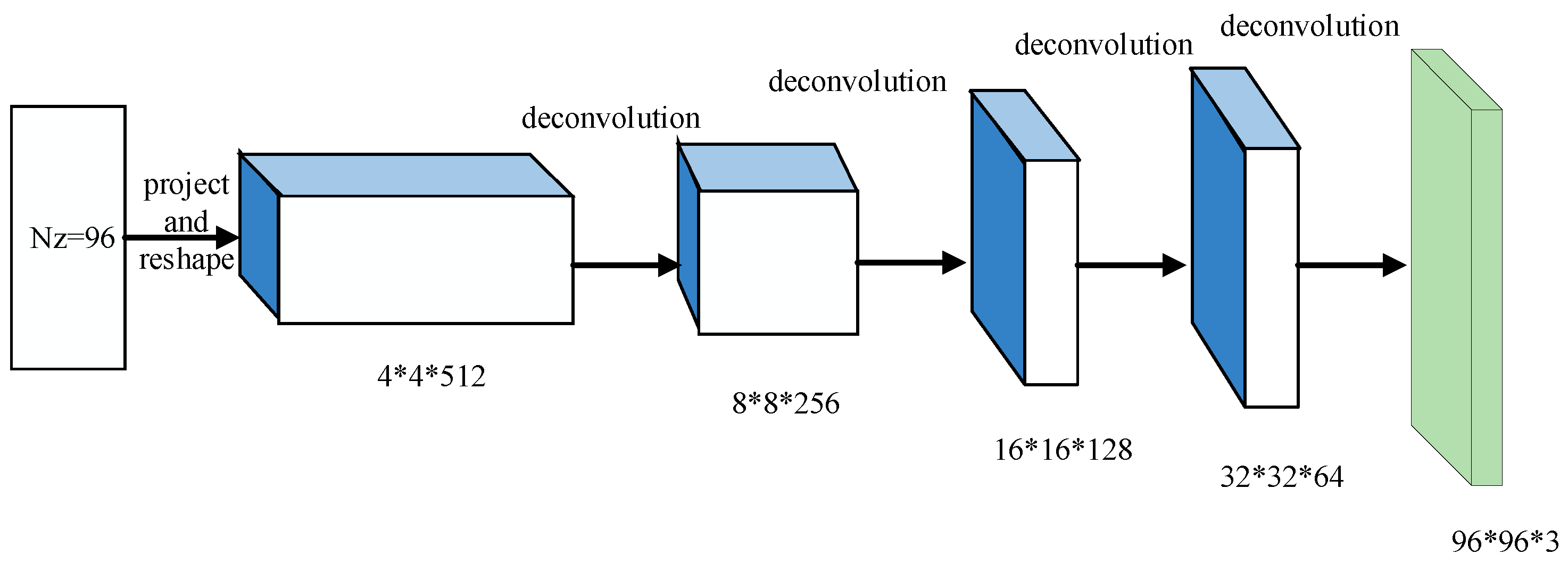

3.2. The Modified DCGAN

Although DCGAN has a powerful ability to generate new data with a distribution similar to that of real data, its optimization may be disturbed by failure modes that arise during learning and training, including mode collapse and checkerboard artifacts. Mode collapse is essentially a GAN training optimization problem. In this paper, we tackle mode collapse by modifying the network architecture and optimizing the hyperparameters. Specifically, we modify the architecture of DCGAN by tuning the convolution kernel of the last layer in the generative model. In the generative model, deconvolution is used for spatial upsampling instead of a pooling layer. Time–frequency images of underwater targets are reconstructed using a set of convolution kernels and features. Through step-by-step deconvolution, the size of the images continuously expands in both length and width, whereas the depth continuously decreases, until the required size is achieved. Batch normalization (BN) is used to ensure unobstructed gradient flow and to reduce sensitivity to the initialization of the weight parameters, which improves training performance. To avoid partial gradient saturation and to make the model more stable, the proposed generative model uses the ReLU activation function internally and the Tanh activation function in the data output layer. Furthermore, we find that the quality of the generated data can be improved by optimizing the hyperparameters of the adaptive moment estimation (Adam) optimization algorithm, which we select for both the generative and discriminative models to improve stability. Specifically, we can prevent oscillation and instability by changing the parameter from to in the Adam optimization algorithm.
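To make the role of the Adam hyperparameters concrete, the sketch below implements a single scalar Adam update and compares two values of the first-moment decay rate `beta1`. Which parameter was tuned in the modified DCGAN, and to what values, is not recoverable from the text, so the values 0.9 and 0.5 are purely illustrative assumptions, as are the learning rate and the toy gradient sequence.

```python
import math

def adam_step(param, grad, m, v, t, lr=2e-4, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1.0 - beta1) * grad          # first-moment (momentum) estimate
    v = beta2 * v + (1.0 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)                # bias correction
    v_hat = v / (1.0 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v

def run(grads, beta1):
    """Apply Adam to a gradient sequence; return the final parameter and momentum."""
    param, m, v = 0.0, 0.0, 0.0
    for t, g in enumerate(grads, start=1):
        param, m, v = adam_step(param, g, m, v, t, beta1=beta1)
    return param, m

# Toy gradient sequence whose sign flips after 20 steps (hypothetical).
grads = [1.0] * 20 + [-1.0] * 5

_, m_slow = run(grads, beta1=0.9)  # momentum still points in the old direction
_, m_fast = run(grads, beta1=0.5)  # momentum has already followed the sign flip
```

With the larger `beta1`, the momentum term lags behind a change in gradient direction, which is one way an adversarial training loop can overshoot and oscillate; a smaller value reacts faster at the cost of less smoothing.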

In our modified DCGAN, the convolution kernel size and stride of the deconvolution operations are optimized to reduce the checkerboard effect. After extensive training and optimization, the size of the convolution kernel and the stride are set as and 2 for deconvolution in the generative model, respectively, whereas the corresponding values are set as and 3 in the last layer of the generative model to reduce the checkerboard effect. The generative model of the proposed modified DCGAN is shown in Figure 3, and the specific structure is illustrated in Table 1.
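The relation between kernel size, stride, and output size in a deconvolution (transposed convolution) layer can be sketched as follows. The kernel size, padding, and starting resolution below are hypothetical values chosen for illustration, since the actual kernel sizes are not recoverable from the text. The helper `overlap_is_uniform` encodes the commonly cited rule of thumb that checkerboard artifacts are reduced when the kernel size is a multiple of the stride, so that neighboring kernel footprints overlap evenly.

```python
def deconv_output_size(size, kernel, stride, padding=0, output_padding=0):
    """Spatial size after one transposed convolution (no dilation)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding

def overlap_is_uniform(kernel, stride):
    """True when every output pixel receives the same number of kernel taps."""
    return kernel % stride == 0

# Hypothetical generator: start at 4 x 4 and double the size four times.
size = 4
sizes = []
for _ in range(4):
    size = deconv_output_size(size, kernel=4, stride=2, padding=1)
    sizes.append(size)

even = overlap_is_uniform(kernel=4, stride=2)    # True: even overlap
uneven = overlap_is_uniform(kernel=5, stride=2)  # False: uneven overlap
```

Under these assumed settings, each layer doubles the length and width of the feature map, matching the step-by-step expansion described for the generative model.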

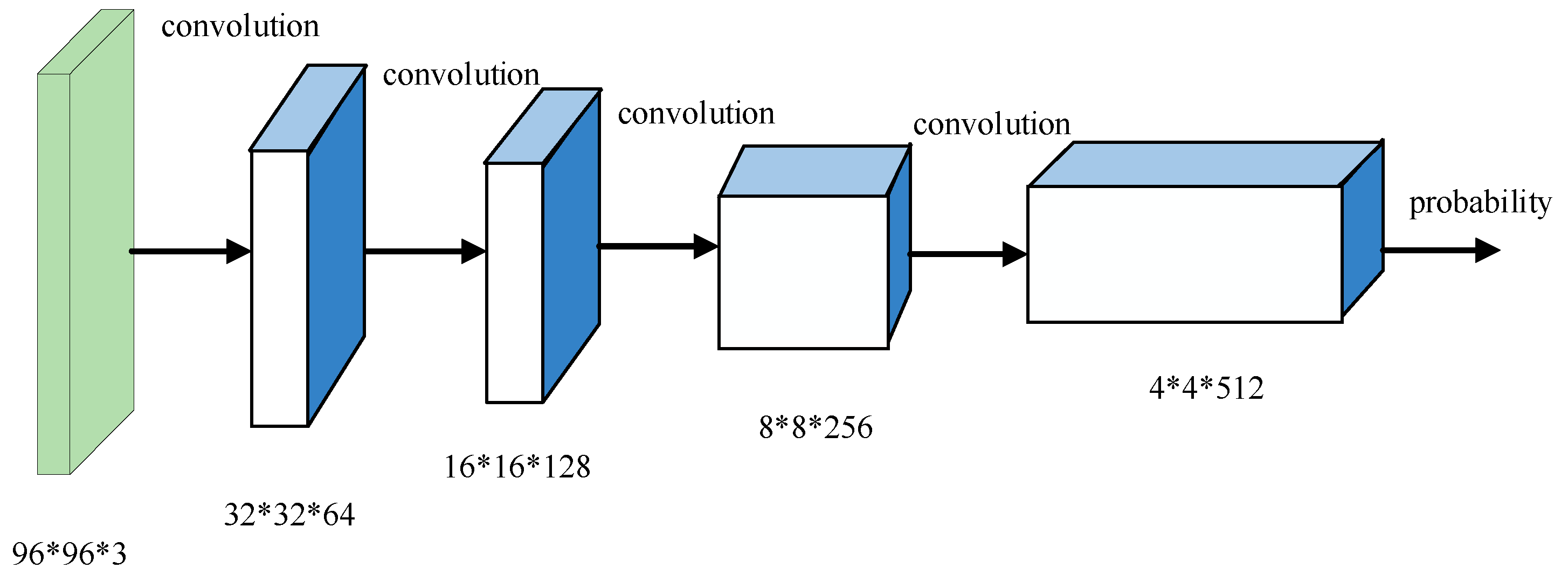

In our discriminative model, a convolutional layer with a stride greater than 1 replaces the pooling layer for spatial subsampling. The last layer is flattened and sent to the output layer to preserve position information as much as possible. Similar to the generative model, the discriminative model uses the Leaky ReLU activation function internally to retain as much information from the previous layer as possible and to keep updating on negative gradient information. The sigmoid activation function is used only in the data output layer. BN is used to stabilize the learning process. The discriminative model used in this paper is shown in Figure 4, and Table 2 shows the specific structure of the proposed discriminative model.
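The two design choices above, strided convolution for subsampling and Leaky ReLU for the hidden layers, can be illustrated with a few scalar helpers. The kernel size, padding, input resolution, and the negative slope of 0.2 are assumptions made for the example and are not taken from Table 2.

```python
import math

def conv_output_size(size, kernel, stride, padding=0):
    """Spatial size after one strided convolution (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1

def relu(x):
    return max(0.0, x)

def leaky_relu(x, negative_slope=0.2):
    """Unlike ReLU, a negative input keeps a small non-zero output and gradient."""
    return x if x >= 0 else negative_slope * x

def sigmoid(x):
    """Maps the final score to a probability that the input is real."""
    return 1.0 / (1.0 + math.exp(-x))

# Hypothetical discriminator: halve a 64 x 64 input four times with stride 2.
size = 64
sizes = []
for _ in range(4):
    size = conv_output_size(size, kernel=4, stride=2, padding=1)
    sizes.append(size)
```

For a negative pre-activation such as -1.0, `relu` returns 0 while `leaky_relu` returns -0.2, which is why the latter keeps passing gradient information for negative inputs.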

3.3. The Classification Model

In this paper, we have proposed the S-ResNet model for underwater target classification by combining a CNN model with a lightweight neural network. The proposed model is expected to effectively reduce the number of network parameters and the computational complexity without degrading the performance of underwater target classification.

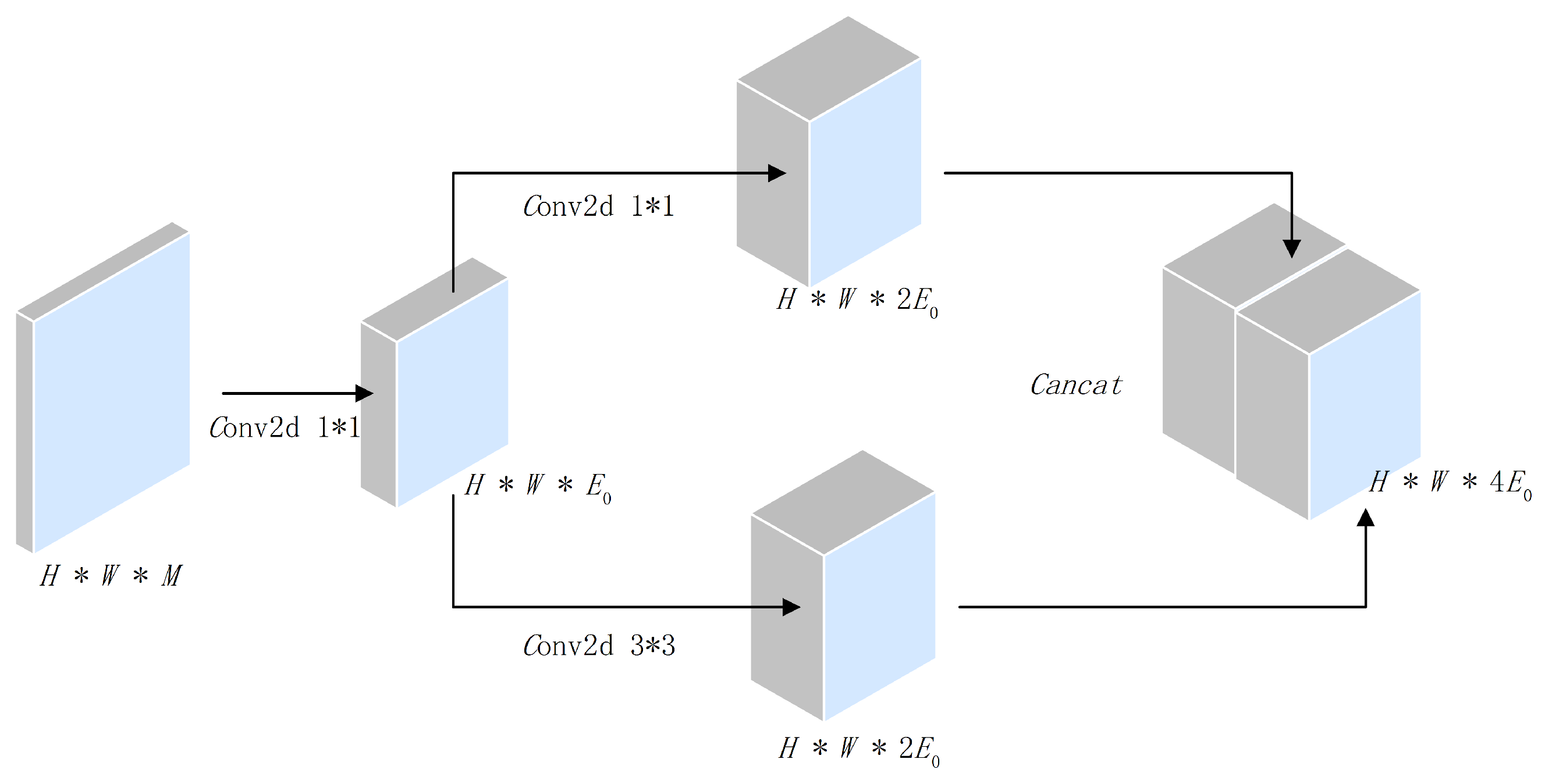

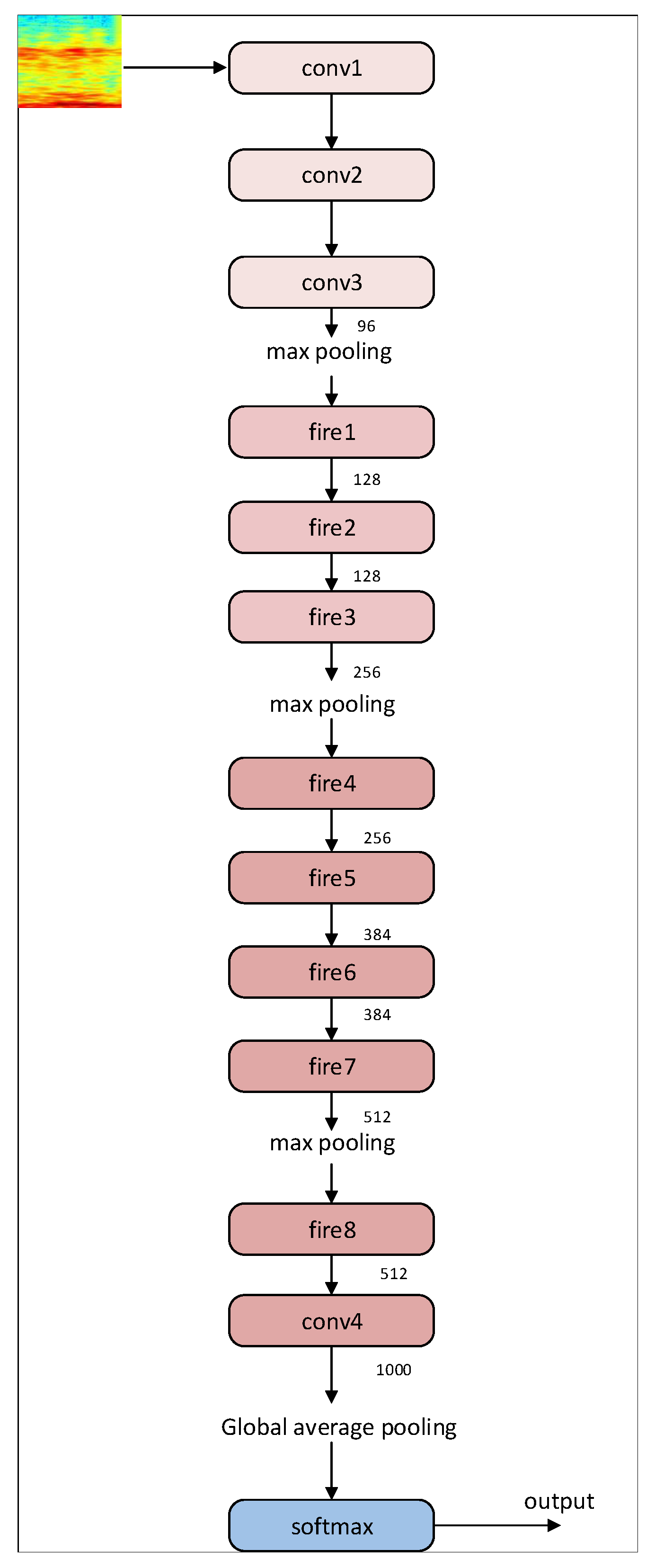

The specific structure of the proposed S-ResNet classification model is shown in Figure 5 and detailed as follows. The convolution layer in the first layer of the SqueezeNet network model is decomposed into convolution layers, by which the number of model parameters can be significantly reduced without sacrificing classification performance. Inspired by the fire module in SqueezeNet, we have designed a new fire module as the building block of the S-ResNet classification model, as shown in Figure 6. In the designed fire module, the input size is H × W × M, in which H, W, and M represent the length, width, and number of channels of the input sample data, respectively, and the output feature map is , in which denotes the number of convolution kernels (the number of convolution kernels with and are both ). Note that, in our S-ResNet model, we have . The compression ratio parameter in the fire module in this section is set as .

Note that the number of convolution kernels in the proposed S-ResNet model is reduced compared with SqueezeNet; thus, the computational complexity is also reduced. Furthermore, the ratio of the squeeze layer to the expand layer in the S-ResNet model is 1:4, whereas in the original SqueezeNet it is 1:8. By increasing the ratio, we obtain a better tradeoff between classification performance and computational complexity. The ratio of the number of convolution kernels in the expanded layer to the total number of convolution kernels is a hyperparameter, which is set as 0.25 in our model and controls the tradeoff between the performance and complexity of the model.
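The effect of the squeeze ratio on model size can be estimated with a simple parameter count. The sketch below assumes the standard SqueezeNet fire-module layout (a 1 × 1 squeeze layer followed by parallel 1 × 1 and 3 × 3 expand layers with equal numbers of kernels), which may differ in detail from the modified fire module of S-ResNet. The channel counts are hypothetical.

```python
def conv_params(in_ch, out_ch, kernel, bias=True):
    """Number of trainable parameters in one 2-D convolution layer."""
    return in_ch * out_ch * kernel * kernel + (out_ch if bias else 0)

def fire_params(in_ch, expand_ch, squeeze_ratio):
    """Parameters of a fire module with expand_ch output channels in total."""
    squeeze_ch = int(expand_ch * squeeze_ratio)
    half = expand_ch // 2  # equal split between 1x1 and 3x3 expand kernels
    return (conv_params(in_ch, squeeze_ch, 1)   # squeeze layer
            + conv_params(squeeze_ch, half, 1)  # 1x1 expand branch
            + conv_params(squeeze_ch, half, 3)) # 3x3 expand branch

in_ch, out_ch = 128, 128  # hypothetical channel counts

plain = conv_params(in_ch, out_ch, 3)           # ordinary 3x3 convolution
fire_1_to_4 = fire_params(in_ch, out_ch, 0.25)  # squeeze:expand = 1:4
fire_1_to_8 = fire_params(in_ch, out_ch, 0.125) # squeeze:expand = 1:8
```

Under these assumptions, a 1:4 module uses roughly twice the parameters of a 1:8 module but still about one sixth of a plain 3 × 3 convolution with the same input and output channels, which is the kind of tradeoff the ratio hyperparameter controls.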

Through convolution kernel decomposition and the compression ratio hyperparameter, the S-ResNet classification model can further improve the performance of the lightweight neural network without increasing the number of CNN parameters. Compared with classical convolutional neural networks, the S-ResNet classification model has the advantage of fewer parameters.

The cross-entropy loss function is used in conjunction with softmax in our model. The specific structure of the S-ResNet classification model is shown in Table 3.
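For reference, the combination of softmax and cross-entropy loss for a single sample can be written in a few lines. This is a generic sketch in plain Python; the five-class logits are made-up values, not model outputs.

```python
import math

def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target):
    """Negative log-probability assigned to the true class index."""
    return -math.log(softmax(logits)[target])

# Hypothetical logits for the five target classes; the true class is index 4.
uniform_loss = cross_entropy([0.0, 0.0, 0.0, 0.0, 0.0], target=4)
confident_loss = cross_entropy([0.1, -0.3, 0.2, 0.0, 4.0], target=4)
```

An uninformative prediction over five classes gives a loss of ln 5, and the loss decreases towards zero as the probability of the true class approaches 1.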

4. Experimental Results

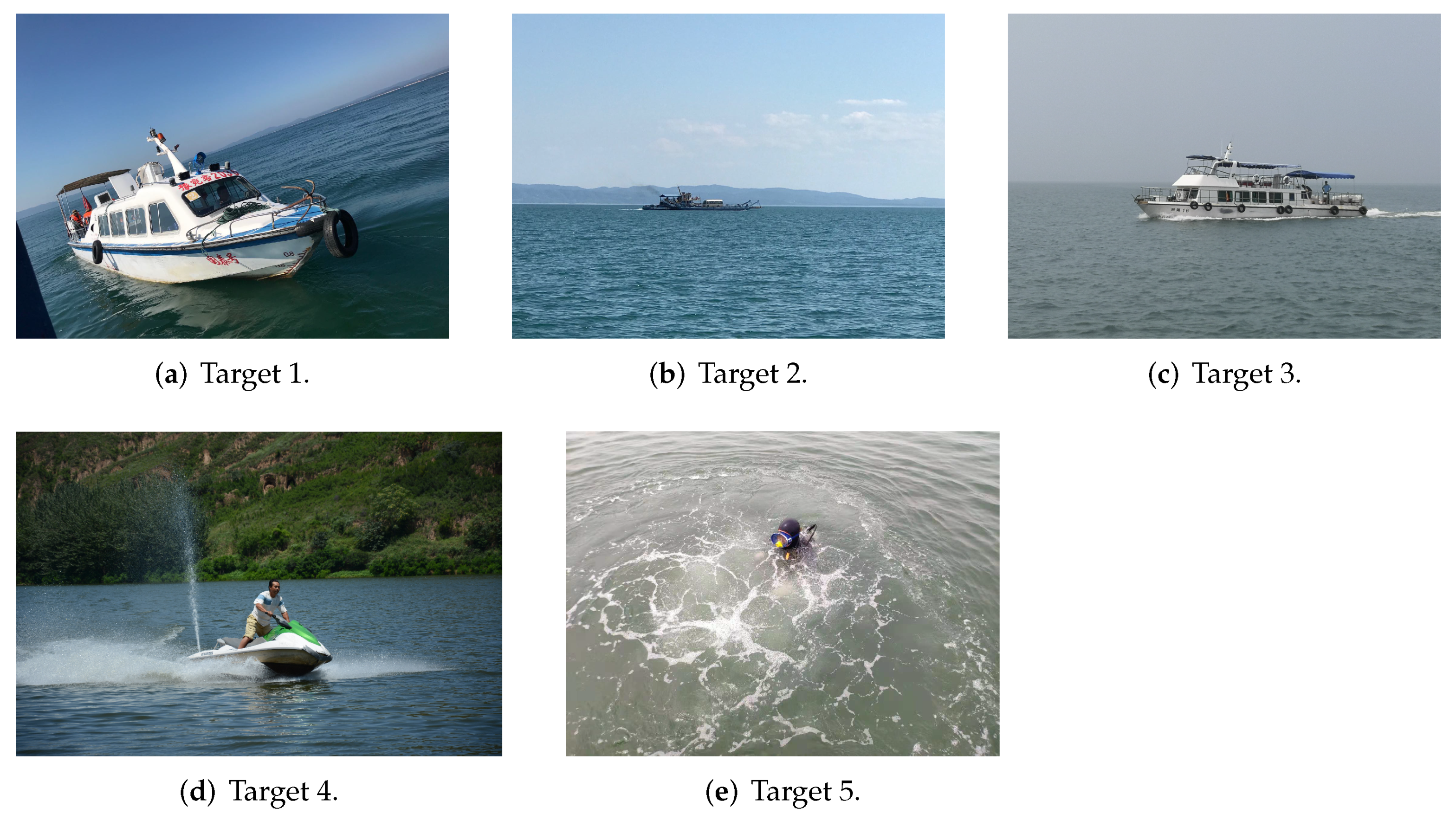

The experiments were conducted at three different locations in China, namely the Danjiangkou Reservoir in Henan Province, the Yangjiahe Reservoir in Shaanxi Province, and Jiao Zhou Bay in Shandong Province. The collected data correspond to five different types of targets, namely a speedboat, two different types of ferries, a motorboat, and a frogman, as shown in Figure 7.

The collected data of each type of target are processed with the short-time Fourier transform (STFT). The resulting time–frequency diagrams form dataset S, which is randomly divided into a training set and a test set with a preset ratio of 7:3. The original resolution of the generated time–frequency diagram is , and is reduced to . This is the standard resolution of classic CNN-based models, and the computational complexity can be reduced with lower resolution.
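A random 7:3 split of the kind described above can be sketched as follows. The function name, the fixed seed, and the use of integer placeholders instead of time–frequency images are assumptions made for the example.

```python
import random

def split_dataset(samples, train_ratio=0.7, seed=0):
    """Shuffle the samples and split them into train and test lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    cut = round(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]

# Integers stand in for 100 time-frequency images of one target type.
train_set, test_set = split_dataset(range(100))
```

Applying the split separately to each target type keeps the 7:3 ratio within every class, which matters when some classes, such as the frogman, have very few samples.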

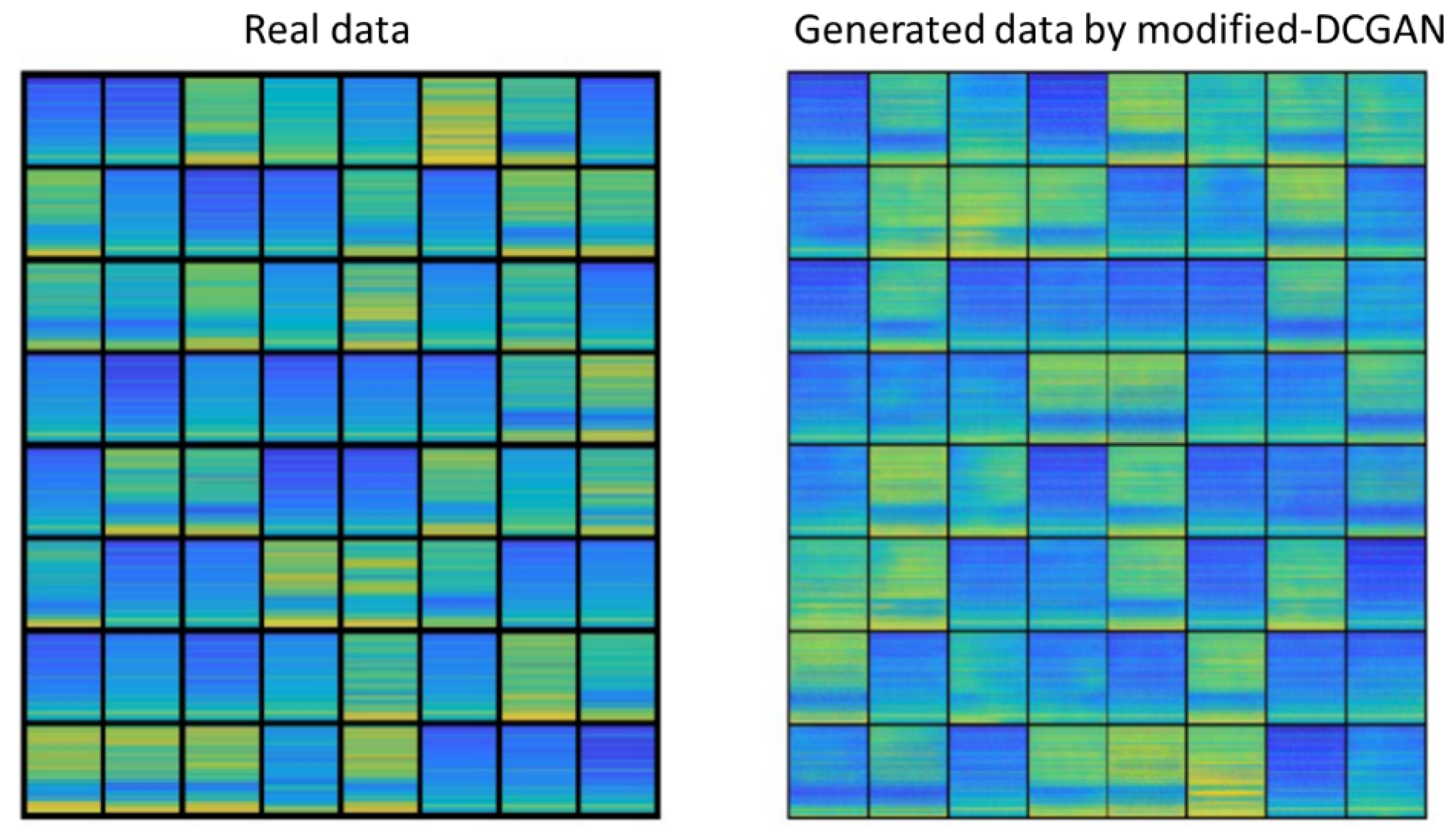

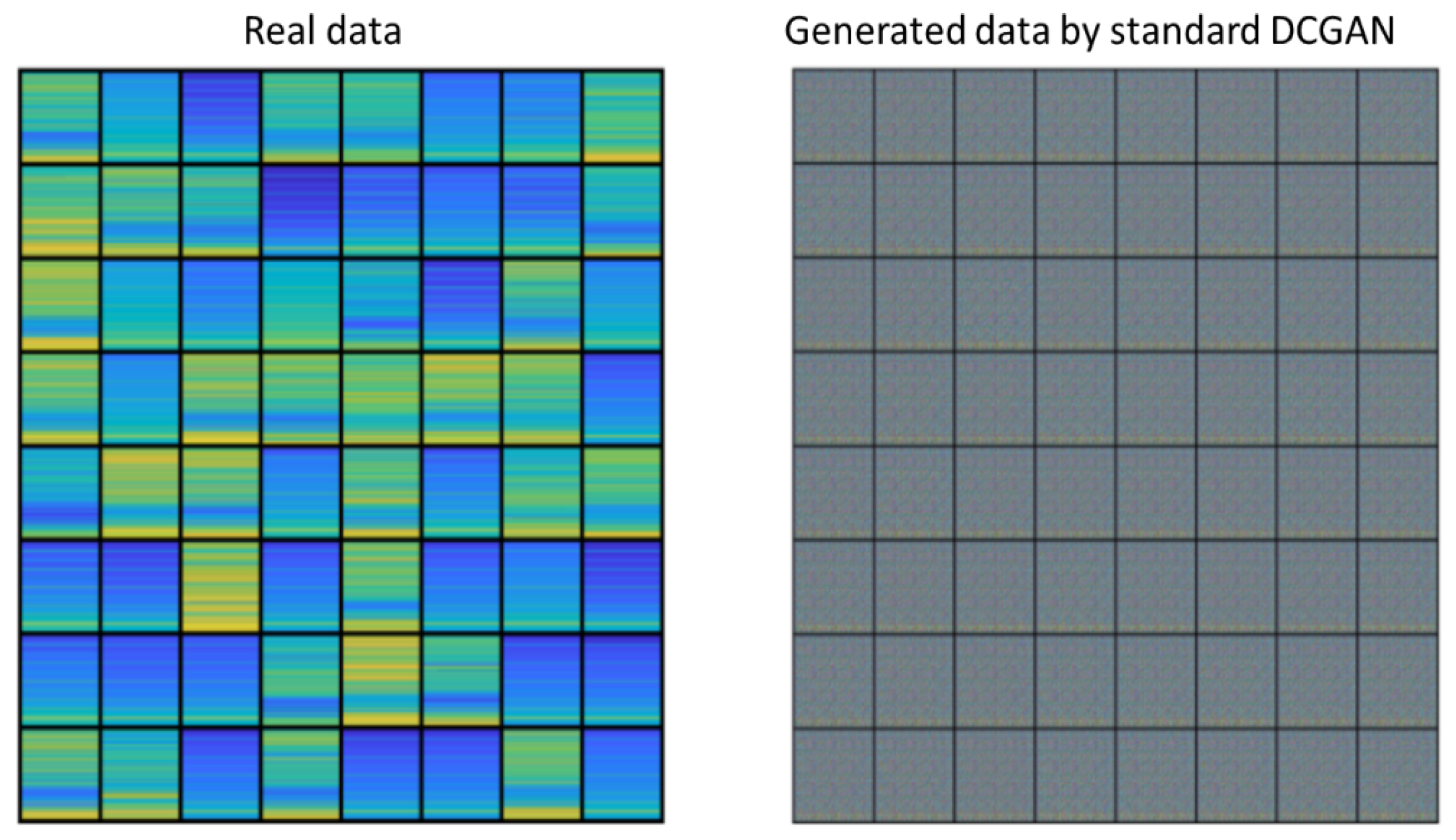

As the collected data from the frogman are very limited, we test our proposed modified DCGAN and the standard DCGAN model on the frogman dataset. The parameter settings of both models are shown in Table 4. The graphs from real data and the graphs generated by the proposed modified DCGAN with the resolution are shown in Figure 8, whereas the graphs generated by the standard DCGAN with the resolution are shown in Figure 9. We put the 64 generated graphs together for comparison.

As Figure 9 shows, the standard DCGAN model fails to generate effective data: all generated samples are the same, indicating mode collapse, and they exhibit checkerboard artifacts. These problems may be caused by insufficient feature learning of the standard DCGAN model when generating data. On the other hand, it can be seen from Figure 8 that the positions of the spectral lines are approximately correct. Furthermore, the graphs generated by the proposed modified DCGAN model look very similar to the real data, indicating high quality and good diversity of the generated data.

Next, we quantitatively evaluate our model through two commonly used indicators, namely the Fréchet inception distance (FID) and the inception score (IS), in Table 5. Essentially, FID measures the difference between the real data and the generated data, whereas IS indicates the quality and diversity of the generated data. As shown in Table 5, the smaller FID value indicates that the data generated by our modified DCGAN model are closer to the real data, whereas the larger IS value suggests better quality and diversity.
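Both indicators can be written compactly once feature vectors and class probabilities are available. In practice these come from a pretrained Inception network; the sketch below assumes they are already given as NumPy arrays and uses random and hand-made arrays as stand-ins, so it illustrates the formulas only and does not reproduce the values in Table 5.

```python
import numpy as np

def frechet_distance(feat_real, feat_fake):
    """Frechet distance between Gaussians fitted to two feature sets (rows = samples)."""
    mu_r, mu_f = feat_real.mean(axis=0), feat_fake.mean(axis=0)
    cov_r = np.cov(feat_real, rowvar=False)
    cov_f = np.cov(feat_fake, rowvar=False)
    # Tr((cov_r cov_f)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of the product, which are real and non-negative in theory.
    eigvals = np.linalg.eigvals(cov_r @ cov_f)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    return float(np.sum((mu_r - mu_f) ** 2)
                 + np.trace(cov_r) + np.trace(cov_f) - 2.0 * trace_sqrt)

def inception_score(probs, eps=1e-12):
    """exp of the mean KL divergence between p(y|x) and the marginal p(y)."""
    marginal = probs.mean(axis=0, keepdims=True)
    kl = np.sum(probs * (np.log(probs + eps) - np.log(marginal + eps)), axis=1)
    return float(np.exp(kl.mean()))

rng = np.random.default_rng(0)
real = rng.standard_normal((200, 4))  # stand-in feature vectors
fid_same = frechet_distance(real, real)
fid_shifted = frechet_distance(real, real + 3.0)  # mean shifted by 3 in 4 dims

is_uniform = inception_score(np.full((10, 5), 0.2))    # no class information
is_diverse = inception_score(np.tile(np.eye(5), (2, 1)))  # confident and balanced
```

Identical feature sets give a distance of zero, and shifting the mean by 3 in each of four dimensions gives 4 × 3² = 36. For the score, uninformative predictions give 1, while confident predictions spread evenly over five classes give the maximum of 5.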

We augmented data corresponding to the frogman by 200 extra samples with the proposed modified DCGAN model. The numbers of data samples with augmentation for different targets are shown in

Table 6. The original train set

is supplemented by generated data, and proposed S-ResNet classification model is tested on test set. Parameter settings of proposed S-ResNet classification model are shown in

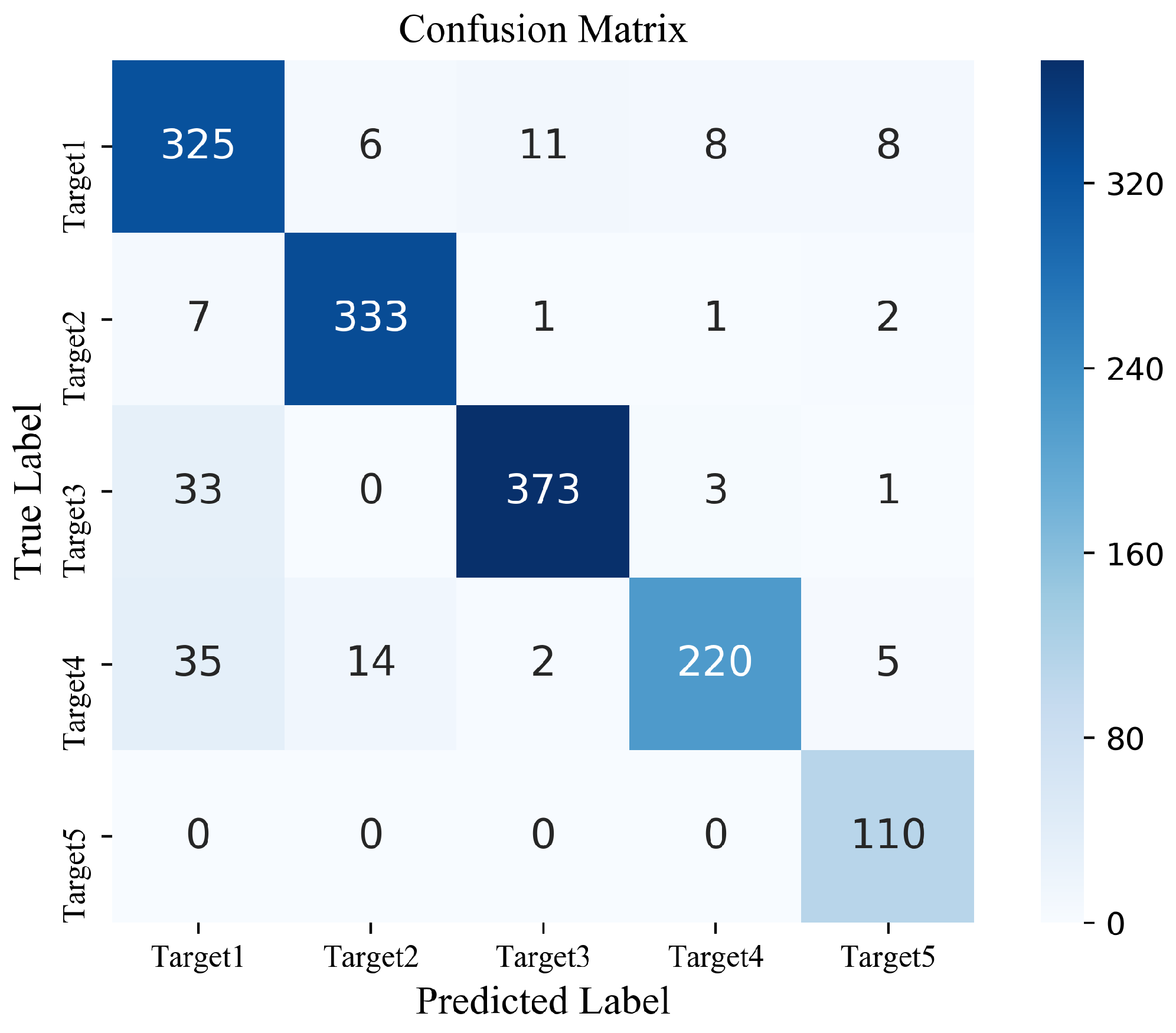

Table 7. The obtained confusion matrix is shown in

Figure 10, and the classification performance is shown in

Table 8.
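A confusion matrix and the per-class accuracies derived from it can be computed as in the following sketch. The label lists are toy values for three classes and are unrelated to the experimental results.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """matrix[t][p] counts samples of true class t predicted as class p."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix

def per_class_accuracy(matrix):
    """Fraction of each true class that was predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0
            for i, row in enumerate(matrix)]

def overall_accuracy(matrix):
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total

# Toy labels for three classes (hypothetical).
y_true = [0, 0, 1, 1, 2, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0, 2]

cm = confusion_matrix(y_true, y_pred, n_classes=3)
class_acc = per_class_accuracy(cm)
total_acc = overall_accuracy(cm)
```

Reporting the per-class values alongside the overall accuracy is useful for imbalanced data, because a minority class with poor accuracy has little effect on the overall figure.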

For comparison, we provide the classification performance without data augmentation in Table 9. Clearly, classification performance is improved by using the modified DCGAN model, indicating the effectiveness of the proposed model. Note that, although we only augment the dataset corresponding to the frogman, the overall classification performance is improved.

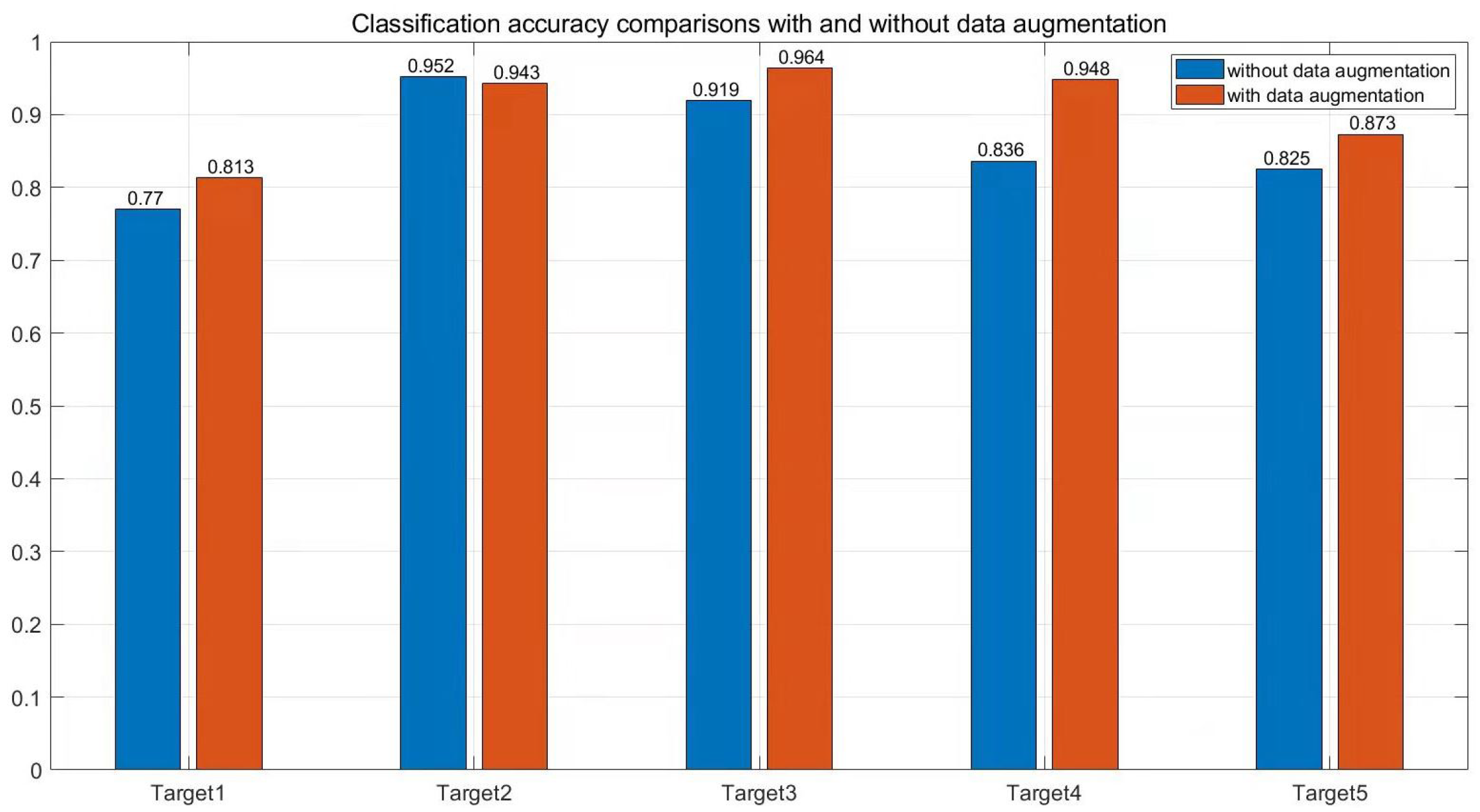

The effect of data augmentation for each type can be observed more clearly in Figure 11, where we compare the classification accuracy of the five different targets without and with data augmentation through the modified DCGAN model. Naturally, the classification accuracy of the frogman is significantly improved from 83.6% to 94.8% with more generated training data. Furthermore, the classification accuracies of the other targets are increased by 4.3–4.8% or maintained, although no extra training data are generated for these targets.

Then, we compare our S-ResNet model with other classification algorithms. Specifically, we test four classical machine learning classification algorithms, namely decision tree, KNN, random forest, and multi-class SVM, using the datasets of five types of underwater targets obtained from the experiments. Classification comparisons of the different classification algorithms are shown in Table 10.

As shown in Table 10, our S-ResNet model outperforms the other algorithms in terms of the classification accuracy of each target. The overall classification accuracy is increased by 6.9–10.5%.

Furthermore, we have tested the up-to-date CNN-based models, including ResNet-18, ResNet-34, ResNet-50, VGG-16, DenseNet, AlexNet, and SqueezeNet, on the datasets of five types of underwater targets obtained from the experiments. We have compared our model with these models in terms of accuracy, number of parameters, FLOPs, and number of epochs. The comparisons of classification performance with different models are shown in Table 11.

Clearly, the proposed model improves classification accuracy compared with SqueezeNet, which is a lightweight CNN classification model, while maintaining the low complexity of the model. On the other hand, the proposed model significantly reduces complexity compared with the other classification models, as indicated by the number of parameters, FLOPs, and number of epochs, while keeping excellent classification accuracy. These results show that the proposed model achieves a good tradeoff between classification accuracy and complexity.