1. Introduction

Mutual Intelligent Transport Systems (MITS) are transportation systems in which two or more ITS sub-systems (personal, vehicle, roadside, and centralized) cooperate to deliver an ITS solution with higher quality and service level than any one of the sub-systems could achieve alone. MITS will employ sophisticated ad hoc short-range communication technologies (such as ETSI ITS G5) as well as complementary wide-area communication technologies (such as 3G, 4G, and 5G) to allow road vehicles to communicate with other vehicles, traffic signals, roadside infrastructure, and other road users. Vehicle-to-vehicle (V2V), vehicle-to-infrastructure (V2I), and vehicle-to-person (V2P) interactions are collectively referred to as cooperative V2X systems.

Nowadays, data amalgamation in MITS has proven extremely effective in making transport systems easier to use and safer. By minimizing traffic jams, offering smart transport techniques, drastically decreasing the frequency of road accidents, and eventually enabling autonomous vehicles, the data-based technologies implemented in MITS promise to profoundly transform people's driving experience [1,2,3].

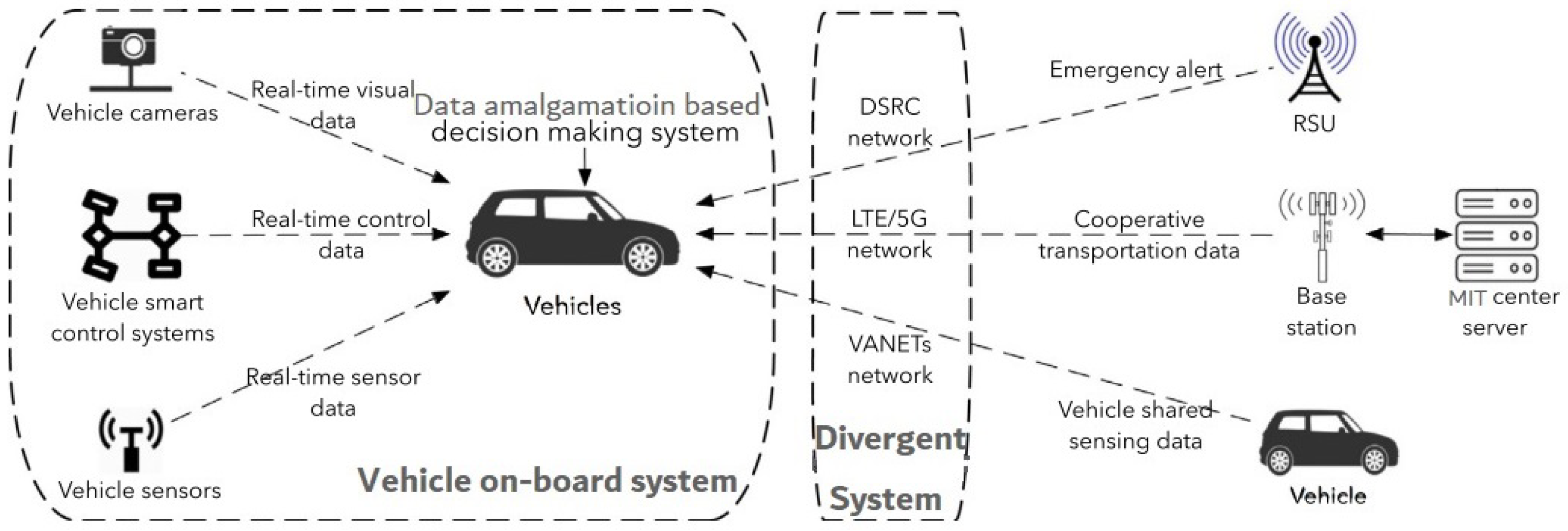

Owing to advances in software and hardware, data can be gathered and amalgamated on many MITS modules. Thanks to transportation-based communication networks, people are living in the age of big data. Vehicles are equipped with smart data-gathering modules, such as vehicular cameras, detectors, and advanced control technologies, as depicted in Figure 1. As a result, the variety and quantity of data acquired by these systems in both physical and cyber settings is rising quickly [4]. On the other hand, data blending and analysis techniques, particularly Artificial Intelligence (AI) techniques, have improved to satisfy the actual data amalgamation requirements of MITS channels.

Wireless communication between vehicles and other devices in MITS channels is another essential technique for data blending in MITS infrastructure. IEEE 802.11p-based [5] and cellular-based [6] technologies are the two primary kinds of wireless transmission technologies for V2X data transmission. For IEEE 802.11p-based transmission, there are the DSRC (Dedicated Short-Range Communications) guidelines in the United States [5] and the ITS-G5 guidelines in Europe [5]. IEEE 802.11p can fulfill the most rigorous performance requirements of most Vehicle-to-Everything (V2X) applications. Mobile connectivity technologies, such as LTE and 5G, can also be employed to create uninterrupted and reliable network links, such as the connection between vehicles and remote servers.

Figure 1 shows an example of how several transmission techniques are implemented in a heterogeneous system for information sharing and fusion-based intelligent decision-making.

V2X transmission has been developed and categorized according to sender and receiver [7] for exchanging data in this heterogeneous data transmission system: Vehicle-to-Infrastructure (V2I), Vehicle-to-Vehicle (V2V), Vehicle-to-Network (V2N), and Vehicle-to-Pedestrian (V2P). In essence, this categorization is also used to classify the gathered information at the application level in order to implement information fusion-based intelligent decision-making processes on a single vehicle. Among the four forms of V2X data transmission, V2I and V2V are by far the most promising information transfer methods for upcoming MITS systems [7]. The most effective smart decisions can be derived via data amalgamation and analysis based on information gathered from smart sensors (cameras, sensor systems, radars, and controllers) and obtained from heterogeneous V2X network systems. These decisions, made on each vehicle, can subsequently be transmitted to other parts of the MITS network to improve the traffic system. Mutual information fusion can give drivers a clearer view of a junction and help them identify other vehicles or pedestrians that they might otherwise miss owing to complicated driving situations such as obstacles, interference, or adverse weather. Data amalgamation-based technologies on heterogeneous V2X networks will aid the deployment of autonomous vehicles on highways in the future.

Data interchange between vehicles and other elements in MITS has become more difficult with the advent of data amalgamation in V2X data transmission systems, as multiple data types are exchanged over heterogeneous networks. Many of these data types are crucial to transportation and security systems, particularly in the context of driverless driving. As a result, one key question is whether the data acquired via V2X systems can be trusted, particularly when they are used for safety-related decision-making. An intruder may, for instance, compromise the sending system and broadcast fraudulent or incorrect data to corrupt the entire MITS system. Furthermore, data carried via the V2X system may be tampered with by an intruder to deceive vehicles, possibly resulting in road accidents. An intruder can also capture the periodically broadcast Cooperative Awareness Messages (CAMs) [8], which include the vehicle's location, speed, and certain confidential material, enabling eavesdropping and unlawful tracking.

To create the security framework for data transferred in V2X systems, solutions such as security and data privacy protection systems have been developed. In related research, a Public Key Infrastructure (PKI) [9] has been used to share ephemeral keys to ensure data transfer safety and confidentiality in V2X platforms. However, security methods such as key distribution, as well as other security computations such as procedures that provide information source authentication, information content integrity, and information encryption, add significant delay. Because safety-related applications demand ultra-low delay, V2X has little delay tolerance (e.g., pre-crash warnings usually require a total delay of less than 50 ms [6]). As a result, establishing an effective data amalgamation trust mechanism for data sharing in heterogeneous V2X systems is a critical first step in advancing data amalgamation in MITS. Because communication applications rely on group communication, group-based security features are crucial. The authenticity and security of the information that flows among group members should be assured, which can be accomplished by Group Key Agreement (GKA). GKA can accommodate both dynamic and static groups. GKA approaches fall into three categories: distributed, contributory, and centralized.

For systems that require several users to communicate across open networks, security is a major concern, and group key management is among the most important components of protecting group communication. Most group key distribution research is motivated by the main premise of the original Diffie–Hellman (DH) key agreement technique. The main disadvantage of generalizing DH to multiple parties is the number of costly exponentiations that must be performed. Communication rounds and computing costs are the two elements that determine the capacity of a GKA method. In this article, the idea of neural cryptography, which is built on the cooperative training of Tree Parity Machines (TPMs), is extended to group key agreement. The group key is created by a participatory group key establishment mechanism that verifies participants and enables individuals to construct their key pairs. It was also found that neural network-based GKA methods achieve key validity and key secrecy. While one method relies on a more accurate link between quantity, volume, and price, another method concentrates on quantity demand and commitment to innovation.

The most important challenges are:

Despite the different ideas of data amalgamation security in previous research, a universal data amalgamation security framework is still required.

The delay caused by key distribution in the current PKI-based method of providing security functionalities for V2X data authentication must be minimized.

Because security-related computations on in-vehicle embedded systems are always computationally intensive, owing to the data source verification and data integrity checking needed to reach the third degree of trust for data amalgamation, optimizing the computation delay has higher priority.

A position-based key agreement method that can considerably reduce the delay caused by current key distribution is required.

A fast computing solution is required that fully utilizes the vehicles' onboard GPUs for security computation.

To improve confidentiality and effectiveness, a method for coordinating the neural group key exchange process should be developed.

The main contribution of this paper is a data fusion security architecture with variable levels of trust. This research also provides an efficient and effective information fusion security solution for sharing multiple types of data from various sources in V2X heterogeneous networks. For rapid group key exchange based on artificial neural synchronization, an area-based PKI infrastructure accelerated by a Graphics Processing Unit (GPU) is offered. To confirm that the suggested data fusion trust solution fulfills the rigorous delay requirements of V2X systems, a parametric test is performed. This article discusses the benefits of implementing an area-based key pre-distribution scheme. First, by lowering request volumes, the area-based key distribution strategy can minimize the mean delay, and the mean delay is reduced further when the route prediction accuracy is high.

Second, key distribution is a type of information exchange procedure that involves both data transmission and the essential security computations, and key requests/responses are one of the data types communicated in V2X heterogeneous networks.

Third, GPU acceleration reduces the time spent on security-related computation. Therefore, the information exchange delay for key requests/responses in V2X networks involving cars and RSUs is significantly lowered.

The paper's main contributions are summarized as follows:

This paper offers four levels of trust for the data amalgamation trust system: (i) no security features; (ii) the data source is verified; (iii) the authenticity and integrity of the data source are verified; (iv) the data source is confirmed, the data integrity is checked, and the data content is encrypted.

To achieve the third degree of trust for information fusion in V2X systems, a key exchange system and security computation operations are implemented. The extra delay of these security methods must be taken into account, because the delay criteria of this data amalgamation process are stringent when safety is a top priority.

The delay is decreased by minimizing key update requests with a position-based key distribution network.

In this research, the key exchange process is developed by synchronizing a group of Triple Layer Tree Parity Machines (TLTPMs). Rather than synchronizing each TLTPM individually, the cluster members can obtain the identical key through cooperation among a few selected leader TLTPMs in logarithmic time.

The secret key is created by exchanging very few parameters over an unprotected link while both sides run the neural synchronization procedure.

The proposed technique's synchronization time for various learning rules is substantially shorter than that of existing techniques.
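To make the "logarithmic time" claim concrete, the following minimal sketch counts rounds in a generic pairwise-merging schedule: in each round the leaders of adjacent groups synchronize, after which each merged group shares one key. This merge model and the helper `pairwise_merge_rounds` are illustrative assumptions, not the TLTPM protocol itself.

```python
import math


def pairwise_merge_rounds(m: int) -> list[list[tuple[int, int]]]:
    """Round-by-round schedule of which group leaders synchronize (hypothetical model).

    Each of m parties starts as its own group. In every round adjacent groups
    pair up and their leaders synchronize; an unpaired group waits a round.
    """
    if m < 1:
        raise ValueError("need at least one party")
    leaders = list(range(m))  # leader index of each current group
    schedule = []
    while len(leaders) > 1:
        pairs = [(leaders[i], leaders[i + 1]) for i in range(0, len(leaders) - 1, 2)]
        schedule.append(pairs)
        merged = [a for a, _ in pairs]  # first leader represents the merged group
        if len(leaders) % 2 == 1:
            merged.append(leaders[-1])  # odd group out carries over
        leaders = merged
    return schedule


for m in (2, 5, 8, 100):
    rounds = len(pairwise_merge_rounds(m))
    assert rounds == math.ceil(math.log2(m))
    print(m, "parties ->", rounds, "rounds")
```

Under this assumed model, a cluster of 100 members needs 7 synchronization rounds instead of 99 sequential pairwise runs.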

The key exchange strategies described in [10,11,12,13,14] were investigated in the present study, with a focus on their weaknesses. To overcome these problems, this article proposes a TLTPM synchronization-based key agreement technique that yields a secret key of flexible size.

Section 2 delves into related work.

Section 3 contains the suggested approach.

Section 4 deals with results and discussions. A conclusion and recommendations for future work are presented in Section 5.

2. Related Work

The first degree of trust is used in some models [15], but it appears to be ineffective. PKI was used in certain models to share keys for identity verification, achieving the second degree of trust. The third degree of trust should be used for routine V2X data transfer, with the fourth degree of trust available as a backup strategy for critical data transfer.
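The four degrees of trust and the recommended usage above can be encoded as an ordered type. In this minimal sketch, the names `TrustLevel`, `trust_level`, and `required_level` are hypothetical, and it assumes each level requires every check of the levels below it.

```python
from enum import IntEnum


class TrustLevel(IntEnum):
    """The four degrees of trust for V2X data fusion described in this paper."""
    NONE = 1                  # (i) no security features
    SOURCE_VERIFIED = 2       # (ii) data source authenticated
    SOURCE_AND_INTEGRITY = 3  # (iii) source authenticated and integrity checked
    FULL = 4                  # (iv) source, integrity, and encrypted content


def trust_level(source_ok: bool, integrity_ok: bool, encrypted: bool) -> TrustLevel:
    """Map the checks a message passed to its degree of trust.

    Assumption: levels are cumulative, so e.g. an encrypted message whose
    source was not verified still sits at level 1.
    """
    if not source_ok:
        return TrustLevel.NONE
    if not integrity_ok:
        return TrustLevel.SOURCE_VERIFIED
    if not encrypted:
        return TrustLevel.SOURCE_AND_INTEGRITY
    return TrustLevel.FULL


def required_level(critical: bool) -> TrustLevel:
    # Routine V2X traffic targets level 3; critical transfers may use level 4.
    return TrustLevel.FULL if critical else TrustLevel.SOURCE_AND_INTEGRITY
```

Using `IntEnum` lets a receiver compare levels directly, e.g. `trust_level(...) >= required_level(False)`.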

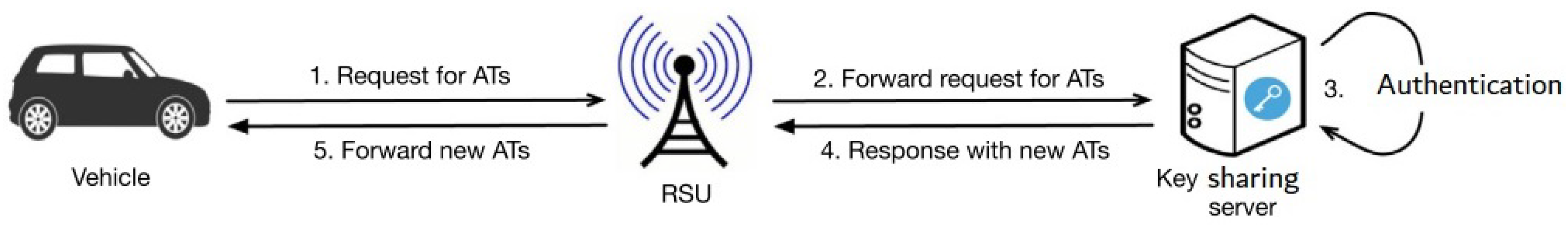

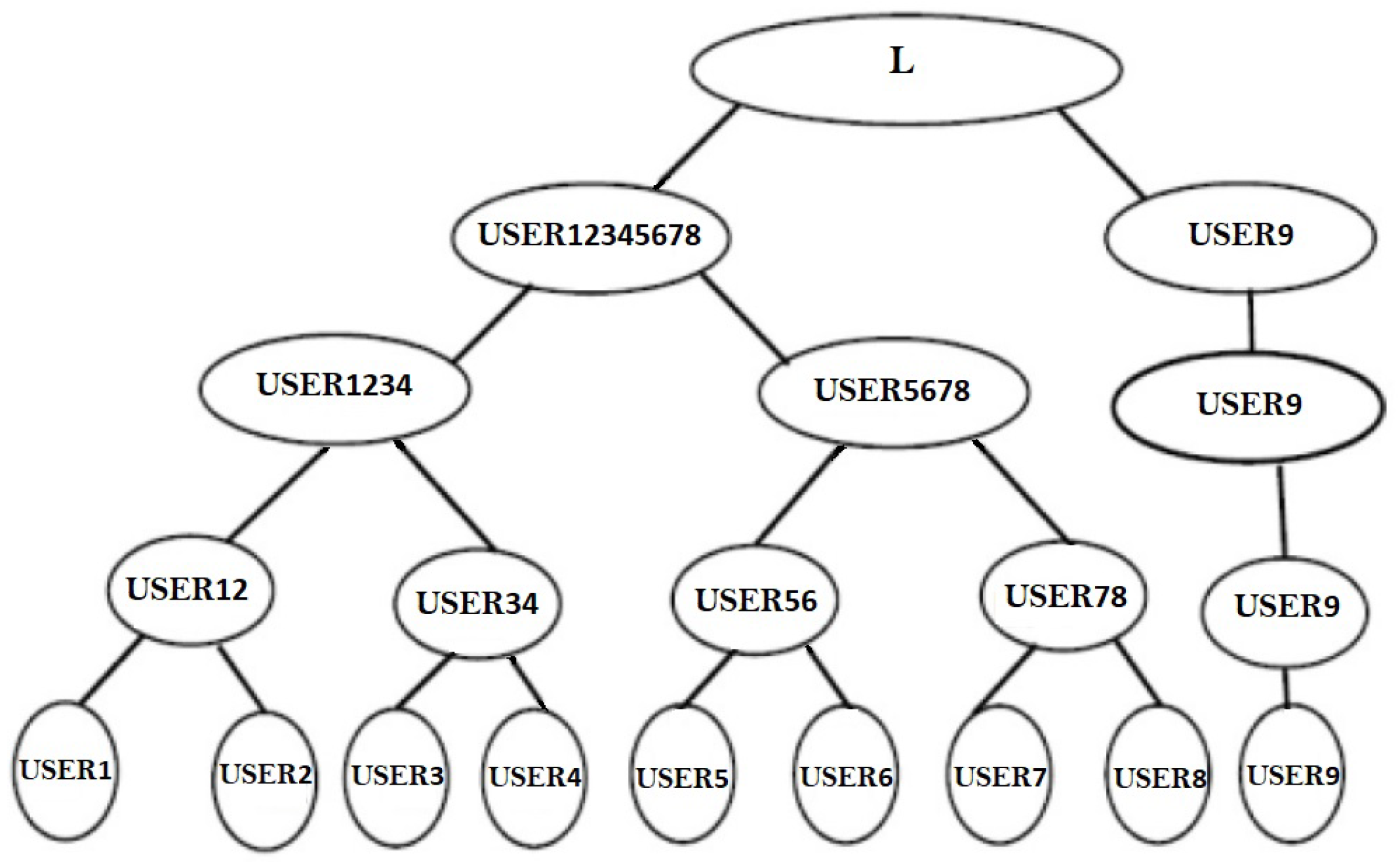

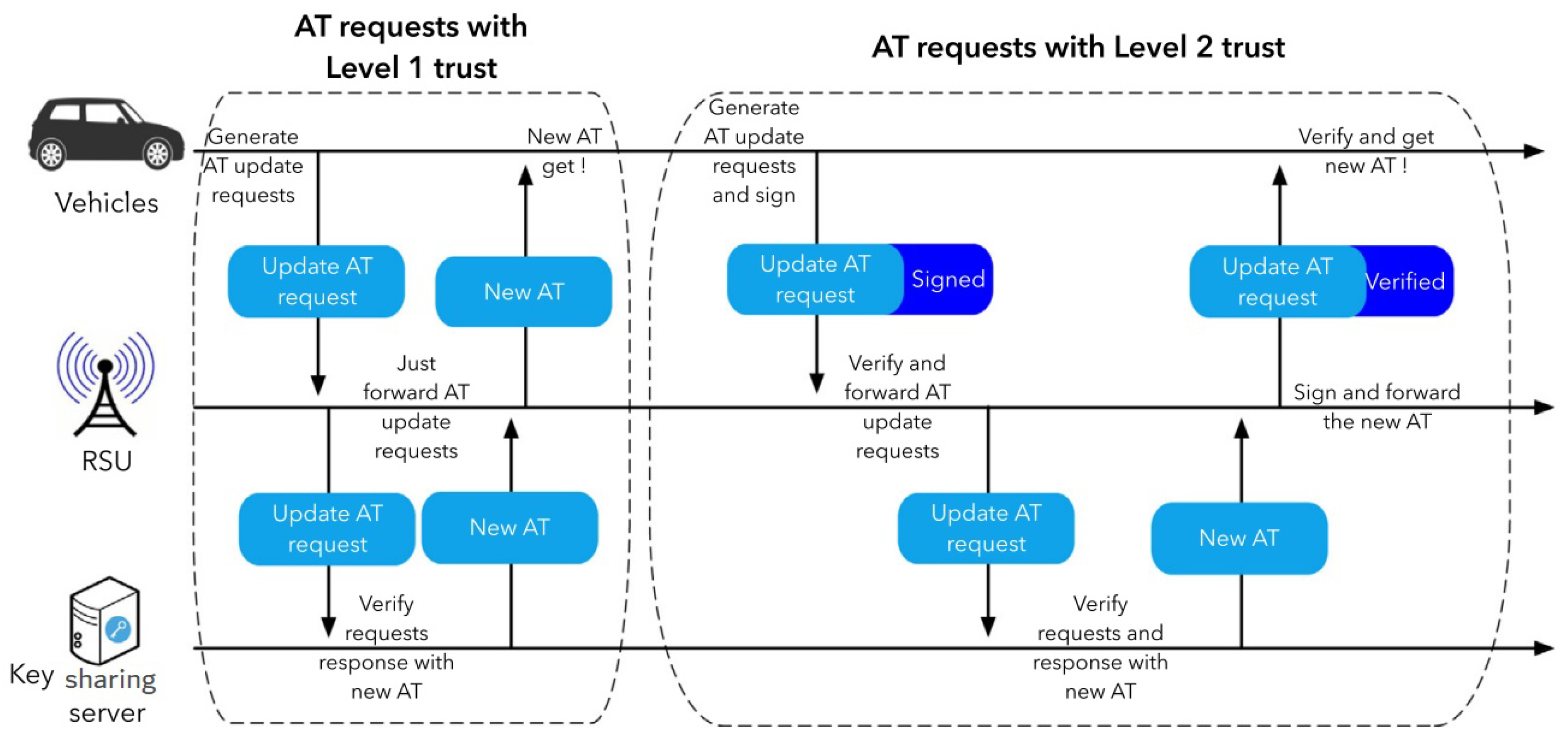

The shared keys for vehicle transmissions in this network are temporary keys known as Activation Tokens (ATs). ATs are redistributed at short intervals (e.g., every 10 min). As depicted in Figure 2, the procedure begins with a vehicle's request to RSUs to update its ATs; the request is forwarded to a remote key exchange platform, which validates the identity and replies. The vehicles and RSUs remain idle until the key sharing server responds. If the vehicle's request is genuine, the RSUs transfer fresh ATs to the vehicle for use in the following time frame. In a different application scenario described in [9], the RSU verifies the digital certificates of the AT update requests and forwards them only if they are legitimate, which inevitably results in longer delays on the RSU side. Even without accounting for the delay between RSUs and PKI servers, the overall delay for an AT update may exceed 400 ms. Furthermore, this delay recurs every 10 min, potentially affecting V2X transmission, particularly safety messages, which have a 50 ms time limit.
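The cost of this periodic scheme follows from simple arithmetic. The sketch below assumes one update per interval and a fixed per-update delay, using the 10 min interval and the 400 ms figure from the text (the latter is a lower bound, since RSU-to-PKI latency is excluded); `at_update_overhead` is a hypothetical helper.

```python
from datetime import timedelta


def at_update_overhead(interval: timedelta, update_delay_ms: float,
                       period: timedelta = timedelta(hours=24)) -> tuple[int, float]:
    """Return (number of AT updates, total update delay in seconds) over `period`.

    Assumes exactly one update per `interval` and a constant per-update delay.
    """
    updates = int(period / interval)
    return updates, updates * update_delay_ms / 1000.0


updates, total_s = at_update_overhead(timedelta(minutes=10), 400.0)
print(updates, total_s)  # 144 57.6
print(400.0 / 50.0)      # one update spans several 50 ms safety-message budgets
```

Under these assumptions a vehicle performs 144 updates per day, and each one is at least eight times longer than the 50 ms budget for safety messages.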

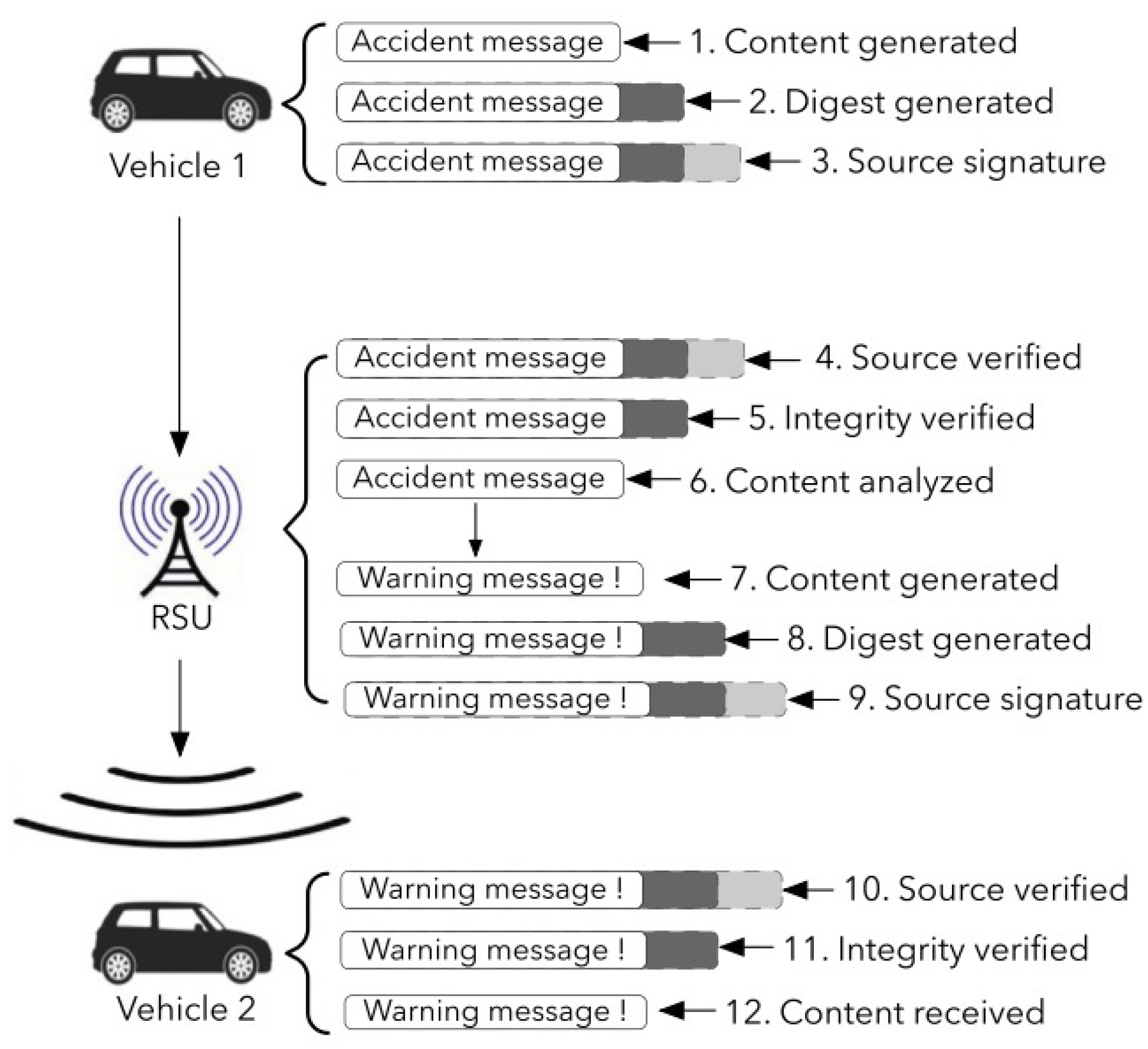

It is assumed that V2X data transmission uses a public-key cryptosystem to validate V2X messages; the content of the V2X messages is not encrypted in this case. Suppose Vehicle 1 wishes to send safety information about an urgent situation, such as a vehicle breakdown, as depicted in Figure 3. This message is created and structured according to the V2X protocol, which includes both the payload and the security portions of the V2X message. A message-digest algorithm, such as a hash function, is used to create the fingerprint for integrity checking of this V2X message, and the message is then signed with a digital signature. When a neighboring RSU receives the V2X message, it first verifies the authenticity of the source and the integrity of the message before accessing its content. After all security-related parts have been validated, the message is processed and a warning message is sent to all surrounding cars. To transmit this warning, the RSU must again execute the hash and digital signature operations. Before the payload is processed, the source and integrity of the warning must be examined and validated by the cars in the vicinity.
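The hash-then-sign-then-verify flow above can be traced with a minimal sketch. The text uses ECDSA; to stay within the Python standard library, this sketch substitutes HMAC-SHA256 with a shared key as a stand-in. HMAC is a symmetric message authentication code, not a digital signature, so only the order of operations carries over. The key and function names are hypothetical.

```python
import hashlib
import hmac
import os

# Stand-in for the ECDSA key pair in the text: a shared HMAC key.
# HMAC is NOT a digital signature; it is used only so the sketch runs
# with the standard library.
SHARED_KEY = os.urandom(32)


def build_message(payload: bytes, key: bytes = SHARED_KEY) -> dict:
    digest = hashlib.sha256(payload).digest()             # integrity fingerprint
    tag = hmac.new(key, digest, hashlib.sha256).digest()  # "signature" over the digest
    return {"payload": payload, "digest": digest, "tag": tag}


def verify_message(msg: dict, key: bytes = SHARED_KEY) -> bool:
    # 1) source authenticity: recompute the tag over the received digest
    expected = hmac.new(key, msg["digest"], hashlib.sha256).digest()
    if not hmac.compare_digest(expected, msg["tag"]):
        return False
    # 2) integrity: the payload must hash to the authenticated digest
    return hmac.compare_digest(hashlib.sha256(msg["payload"]).digest(), msg["digest"])


alert = build_message(b"vehicle 1: breakdown in lane 2")  # vehicle hashes + signs
assert verify_message(alert)                              # RSU hashes + verifies
warning = build_message(b"RSU warning: breakdown ahead")  # RSU hashes + signs again
tampered = dict(warning, payload=b"RSU warning: road clear")
assert verify_message(warning) and not verify_message(tampered)  # cars verify
```

One vehicle-to-RSU-to-vehicles exchange in this flow performs four hash computations, two signing steps, and two verification steps, which matches the operation count used in the delay estimate that follows.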

In this scenario, the delay caused by security operations comes from four hash computations and two ECDSA signature and verification procedures run on the vehicles and the RSU. In [16], the researchers report measurements on real-world V2X systems. According to the results of [16], ECDSA signing takes between 5.5 ms and 7.2 ms per operation with 224-bit and 256-bit keys. For verification, ECDSA takes … ms with a 224-bit key and … ms with a 256-bit key.

Performance on ARM9 devices may be substantially worse, as the authors highlighted, with a single verification taking 150 ms.

Many prior studies [17] on both the hardware and software sides have developed and improved hash algorithms, notably the SHA-2 functions. Yet the energy and computing capacity of the embedded CPUs in cars has always been restricted. Measurements of SHA-2 performance on ARM-series CPUs can be used to make an estimate: a single SHA-2 computation takes less than 1 ms. According to [18], depending on the physical device and the length of the input, SHA-3 might be 2 to 3 times slower.

Ingemarsson et al. [19] present the very first multi-user key management system, a Diffie–Hellman modification based on the concept of symmetric functions. Members are connected in a ring, with $U_i$ accepting information only from $U_{i-1}$ and transmitting only to $U_{i+1}$. Member $U_i$ is able to calculate the secret key after $m-1$ rounds. However, this method was found to be vulnerable to a passive attacker who can monitor the communication paths between members. Steiner et al. [20] present modifications of the Ingemarsson protocol in which the last member of the chain can calculate the secret key after completing the first stage. In stage 2, the last member transmits messages back along the chain; each member raises the last element of the received message to its own secret random exponent to create the secret key and then sends the remaining part of the message to its predecessor. Compared with the Ingemarsson et al. method, this technique requires lower computing costs from the principal but takes twice as many rounds. In this method, no message can be sent until the preceding message has been received. Steiner et al. also proposed a different method to lower each user's average computation. Additionally, separate rules for members joining and leaving are included in [21].

The Burmester-Desmedt (BD) key agreement [22] also uses a circular method in which members are connected in a ring. The secret key is computed in two steps. In the first step, every adjacent pair of principals $U_i$ and $U_{i+1}$ completes a basic DH key exchange. Instead of using these pairwise keys directly, client $U_i$ computes the ratio $X_i$ of the two DH keys it shares with its neighbors. In step 2, every client broadcasts its $X_i$ value, allowing every client to calculate the group secret key. When users are organized in a logical line instead of a circle, the secret key changes slightly and comprises only the exponent pairs of adjacent users along the line. The computation of the secret key then depends on the user's position on the line, and this variant is much more efficient than [22], especially when the number of users is high. Perrig [23] proposed a GKA technique in which users are arranged as leaves of a B-Tree. The advantage of Perrig's technique over prior techniques is that the number of required synchronization steps is logarithmic in the total number of users; however, the technique's restriction is that it allows only … users. Kim et al. [24] demonstrated that tree-based key establishment is particularly useful when the group's arrangement has to be changed without restarting the entire technique, and that members can easily be added and removed by re-configuring the key tree. Asymmetric GKA was described by Wu et al. [25] and Zhang et al. [26], while Gu et al. [27] suggested a consolidated GKA technique to reduce encryption time. For ad hoc networks, Konstantinou [28] proposed an ID-based GKA mechanism with a constant number of rounds. Jarecki et al. [29] created a robust GKA technique in 2011 that allows a group of people to establish a shared secret key despite network problems. Many of the proposed group key establishment techniques can be executed in one round, but they do not provide forward secrecy [30]. Most of the DH generalizations presented to date need at least two rounds to create the mutually agreed key. The Joux [31] technique is a GKA technique that can be executed in a single round while still maintaining forward secrecy; however, it only works with three members.

The researchers of [10] proposed the CVTPM, which uses complex numbers for all control parameters. It is unclear whether the CVTPM can resist a majority attack, because they only examined the geometric attack. Jeong et al. [12] proposed a VVTPM system; however, this approach does not provide an accurate synchronization assessment. Teodoro et al. [13] suggested implementing the TPM structure on an FPGA to perform key exchange by mutual training of these machines. Aliev et al. [32] presented a safe, lightweight, and scalable group key distribution and message encryption framework to protect the secrecy of vehicle-to-vehicle (V2V) broadcasting. Leveraging scalable rekeying algorithms, the described group key management approach can handle diverse circumstances such as a node joining or leaving the group. Han et al. [33] developed a LoRa-based physical-layer key generation technique for protecting V2V/V2I interactions. The communication is based on the Long Range (LoRa) protocol, which can use the Received Signal Strength Indicator (RSSI) to produce secure keys over long distances. Liu et al. [34] introduced recent theoretical results on the global synchronization of coupled fractional-order recurrent neural networks. The synchronization delay is quite long, so it is not an effective strategy for group synchronization.

Karakaya et al. [35] presented a memristive chaotic circuit-based true random bit generator and its realization on an embedded system; the genuine randomness of the generated numbers is not tested with the NIST test suite in that article. Dolecki and Kozera [14] investigated the synchronization times achieved for network weights drawn at random from either a uniform or a Gaussian distribution with varying standard deviations. The network's synchronization time is studied as a function of the number of inputs and of weights drawn from intervals of different widths. Networks with various weight intervals can be compared in this way; the standard deviation of the Gaussian distribution is chosen based on the interval size, which is also a novel way of determining the distribution's parameters. Patidar et al. [36] proposed a chaotic logistic map-based pseudo-random bit generator (PRBG); the genuine randomness of the generated numbers is not examined in that work with the 15 tests of the NIST suite. Liu et al. [37] developed a chaotic PRBG based on a non-stationary logistic map. The researchers devise a dynamic approach to convert a non-random parameter sequence into a random-like sequence. The changing parameters disrupt the system's phase space, allowing it to withstand phase space reconstruction attacks.
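The ring-based scheme of Ingemarsson et al. described above can be illustrated with a toy simulation. It uses a deliberately small, unvetted group (the Mersenne prime $2^{127}-1$ with base 3) purely for readability, models no attacker, and `ing_group_key` is a hypothetical helper; it shows only why every member ends up with the same key after $m-1$ rounds.

```python
import secrets

# Toy group: Mersenne prime 2**127 - 1 with base 3. Illustrative only;
# not a vetted Diffie-Hellman group and far too small for real use.
P = 2**127 - 1
G = 3


def ing_group_key(m: int) -> list[int]:
    """Simulate the Ingemarsson et al. ring key agreement for m members.

    Member i only receives from member i-1 and only sends to member i+1
    (indices mod m). Each round, every member raises the value it just
    received to its own secret exponent and forwards the result.
    Returns the key computed by each member (all equal on success).
    """
    if m < 2:
        raise ValueError("need at least two members")
    exps = [secrets.randbelow(P - 2) + 1 for _ in range(m)]  # private exponents
    sent = [pow(G, x, P) for x in exps]                       # round 1: g^{x_i}
    for _ in range(m - 2):                                    # rounds 2 .. m-1
        received = [sent[(i - 1) % m] for i in range(m)]
        sent = [pow(received[i], exps[i], P) for i in range(m)]
    # After round m-1, the value arriving at member i lacks only x_i.
    last = [sent[(i - 1) % m] for i in range(m)]
    return [pow(last[i], exps[i], P) for i in range(m)]


keys = ing_group_key(5)
print(len(set(keys)) == 1)  # every member derived g^(x_1 x_2 ... x_m) mod P
```

Each member performs $m$ modular exponentiations, which illustrates the exponentiation cost of multi-party DH generalizations noted in the introduction.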

In conclusion, the overall delay in Figure 3 caused only by security computations can be approximated at 30–40 ms. Other delays, such as data communication, data processing, and data generation delays, are not included in this estimate. This implementation and hardware configuration therefore appear insufficient for V2X data transmission networks to share safety-related data within the 50 ms delay budget. As a result, security functions will need to be accelerated as well.
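The budget argument above can be checked with a small calculation. This minimal sketch counts only security operations, as the estimate does; it takes the hash time as the quoted 1 ms upper bound for SHA-2 and the signing time as the upper end (7.2 ms) of the quoted ECDSA range. Because the verification times are not available here, the sketch solves for the largest per-verification time that still fits in 50 ms instead of assuming a value. Function names are hypothetical.

```python
def security_delay_ms(n_hash: int, t_hash: float, n_sign: int, t_sign: float,
                      n_verify: int, t_verify: float) -> float:
    """Delay (ms) from security operations alone; communication, processing,
    and message-generation delays are ignored, as in the estimate in the text."""
    return n_hash * t_hash + n_sign * t_sign + n_verify * t_verify


def max_verify_time_ms(budget_ms: float, n_hash: int, t_hash: float,
                       n_sign: int, t_sign: float, n_verify: int) -> float:
    """Largest per-verification time that keeps the security work within budget_ms."""
    return (budget_ms - n_hash * t_hash - n_sign * t_sign) / n_verify


BUDGET_MS = 50.0  # pre-crash warning requirement cited in the text
# Figure 3 scenario: 4 hashes, 2 signatures, 2 verifications.
# t_hash = 1.0 ms (quoted SHA-2 upper bound), t_sign = 7.2 ms (quoted ECDSA upper end).
limit = max_verify_time_ms(BUDGET_MS, 4, 1.0, 2, 7.2, 2)
print(round(limit, 2))
```

Under these assumptions each verification may take at most about 15.8 ms before the security work alone consumes the whole budget, with nothing left for transmission or processing. On ARM9-class hardware, where one verification can take 150 ms, the budget is exceeded many times over.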

3. Proposed Methodology

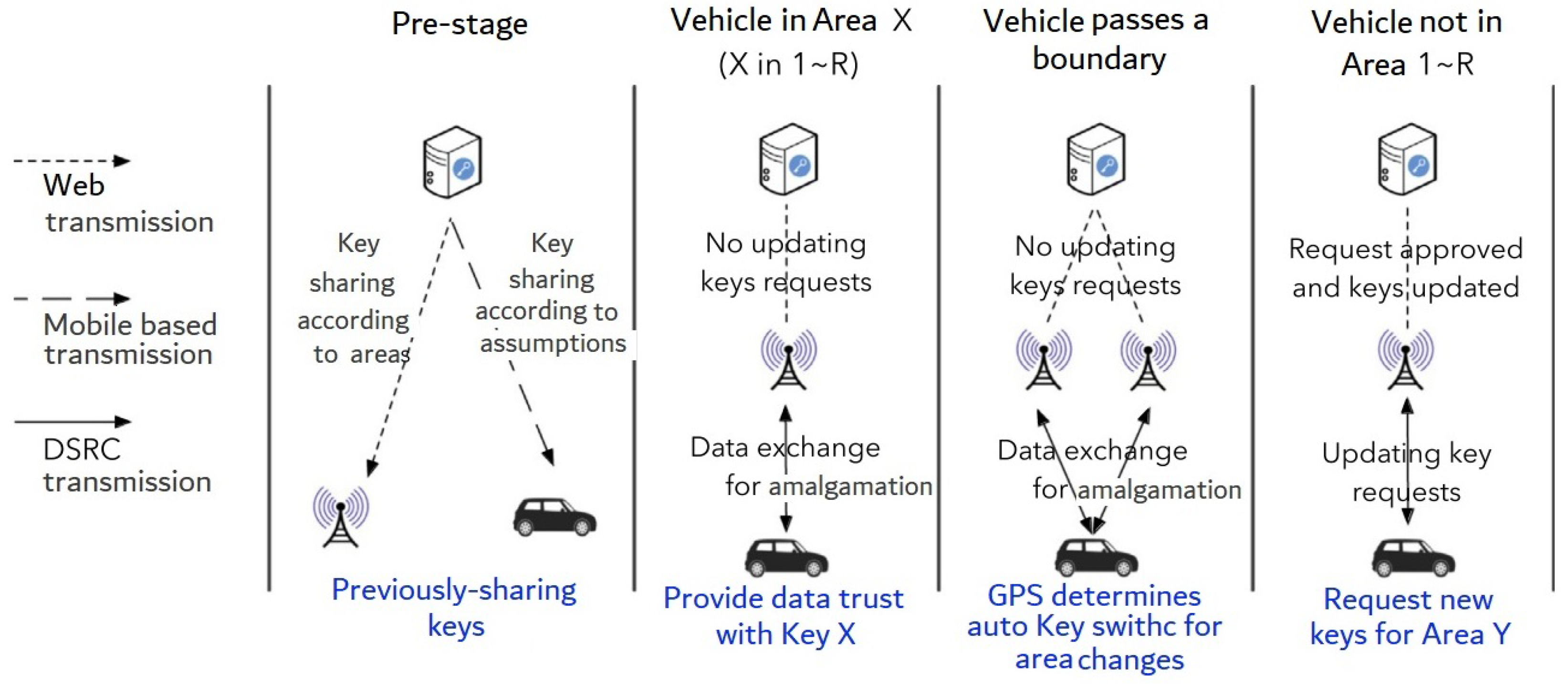

In this section, this paper proposes an area-based key sharing system for cars and RSUs with pre-computed and pre-shared key pair pools. In this system, the temporary keys are updated depending on the positions of the vehicles rather than on a fixed time frame. Interim keys (ATs) are pre-shared keys that are distributed to vehicles and RSUs for a limited period (e.g., 24 h). When a vehicle moves to a new region, its interim keys are updated to match the new location. Key update requests can be avoided as long as the cars remain in regions with pre-shared keys. Keys are updated during off-peak hours (e.g., midnight) for use in the upcoming time frame. A smart route prediction system can help estimate the areas a vehicle is likely to traverse, so that the corresponding keys can be shared in advance.


The original concept of the proposed architecture is to share keys depending on the location of each car rather than at predetermined time intervals. The key pairs used for V2X transmission are changed based on the vehicle's position information.

Here is an example. Assume the V2X transmission system is set up in a broad region with a single key sharing center, several RSUs, and a large number of vehicles. To begin, this region is divided into N zones, each of which corresponds to one of N secret key stores. RSUs are classified into zones depending on their location and are then assigned the key sets that correspond to their zone. After that, R sets of keys (one key set for each of R zones) are pre-shared to a car whose identity has been properly confirmed. This part, seen in Figure 4, is known as the pre-stage, and it is carried out at the start of each valid time frame (e.g., 24 h). If a vehicle remains in region X and X is one of the R zones whose key sets it holds, the key set for X can be used for V2X transmission. Consequently, as long as a car stays inside regions with pre-shared keys, it does not need to change keys within the permitted time frame. When a vehicle reaches a zone for which it has not received pre-shared keys, it requests the related keys from the PKI servers via RSUs, just as in the current V2X PKI network. By lowering the number of key update requests in this way, the average key sharing delay can be decreased.
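The vehicle-side lookup in this example can be sketched as a cache of pre-shared key sets with a PKI fallback on a miss. This is a minimal sketch under stated assumptions: the `Vehicle` class and `request_from_pki` are hypothetical placeholders for the RSU-to-PKI exchange, and keys requested on demand are assumed to stay valid for the rest of the time frame.

```python
from dataclasses import dataclass, field


def request_from_pki(zone: int) -> str:
    # Hypothetical placeholder for the RSU -> PKI request/response exchange.
    return f"K{zone}-requested"


@dataclass
class Vehicle:
    """Hypothetical vehicle state for the area-based pre-distribution scheme."""
    preshared: dict[int, str]  # zone id -> key set received in the pre-stage
    requested: dict[int, str] = field(default_factory=dict)
    pki_requests: int = 0

    def key_for_zone(self, zone: int) -> str:
        """Return the key set for `zone`, requesting it via an RSU only on a miss."""
        if zone in self.preshared:
            return self.preshared[zone]  # no request, no PKI round trip
        if zone not in self.requested:
            self.pki_requests += 1       # fallback: ordinary AT request
            self.requested[zone] = request_from_pki(zone)
        return self.requested[zone]


car = Vehicle(preshared={z: f"K{z}" for z in (1, 2, 3)})  # R = 3 zones pre-shared
for zone in [1, 2, 2, 3, 5, 5, 1]:
    car.key_for_zone(zone)
print(car.pki_requests)  # 1: only zone 5 was not pre-shared
```

Only the single zone outside the pre-shared set triggers a request, which is the source of the lower average key sharing delay.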

In the first stage of this network, the very first step is to share the keys. Because this phase requires data transmission between vehicles/RSUs and PKI servers, a cellular-based transmission network should be used. As previously mentioned, one suitable time period is 24 h, and the sharing step should take place during off-peak hours, such as late at night. The pre-stage in Figure 4 can be configured to last one hour, while the keys from the previous time session remain in effect. During this pre-stage, RSUs receive fresh keys and related data via internet connections. Most vehicles on the streets at that moment cannot download new pre-authenticated keys over cellular networks such as LTE or 5G; meanwhile, vehicles still on the road continue to connect using the old keys. Once the pre-stage is complete, cars rely on individually requested keys as a backup mechanism until they can establish a robust link with the PKI server through mobile communication.

If a vehicle arrives in a region without pre-shared keys, it requests ATs as a complement to the key sharing mechanism. RSUs forward the request to the key sharing unit, and if authentication succeeds, new ATs are returned, as shown in Figure 4. In this scenario, the delay is still present, exactly as in Figure 2. Still, with the proposed architecture, the average number of key update requests can be greatly decreased, resulting in a lower average key update delay. Driving path prediction can be used to forecast the regions each vehicle will pass through in the following time frame, which can help optimize this architecture.

When this design is first deployed, there is no way of knowing which key pools each vehicle should download. Because a vehicle's onboard computer has limited memory and cannot hold every key pool, requests for ATs related to non-pre-shared zones cannot be eliminated.

The proposed key sharing method must be used in collaboration with the present request/response-based key sharing system, whose queries to PKI servers for key updates serve as the supplemental mechanism. Let $R$ be the set of zones with pre-shared keys and $M$ the set of zones a car actually crosses. If $M \subseteq R$, the car has keys for all of the zones it passes through, and no key update requests are made. If $M \not\subseteq R$ but $M \cap R \neq \emptyset$, the car needs the old key update mechanism as a backup for acquiring new ATs in the zones $M \setminus R$ where pre-shared keys are not available; delay cannot be prevented in this situation, but requests are still reduced. If $M \cap R = \emptyset$, the vehicle has to request AT updates in every area it passes through. In that case, if the vehicle passes through an area in less than 10 min, there may be even more update requests than under the periodic scheme.
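The three cases above reduce to set operations on $R$ and $M$. In this minimal sketch, `classify_trip` is a hypothetical helper, and it assumes one request per crossed zone without pre-shared keys (a zone re-entered within the same time frame is not requested twice).

```python
def classify_trip(preshared: set[int], crossed: set[int]) -> tuple[str, int]:
    """Classify a trip by the relation between pre-shared zones R and crossed zones M.

    Returns the case and the number of AT update requests, assuming one
    request per crossed zone that lacks pre-shared keys.
    """
    missing = crossed - preshared  # M \ R
    if not missing:
        case = "M subset of R: no requests"
    elif missing == crossed:
        case = "M and R disjoint: request in every zone"
    else:
        case = "partial overlap: requests only for M minus R"
    return case, len(missing)


print(classify_trip({1, 2, 3}, {1, 2}))  # no requests
print(classify_trip({1, 2}, {2, 4, 5}))  # partial overlap, 2 requests
print(classify_trip({1}, {4, 5}))        # disjoint, 2 requests
```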

To summarize, to decrease the number of AT requests, it is critical to predict each vehicle's travel areas and pre-share the appropriate keys. The potential travel areas of a vehicle in the following time span can then be estimated using a destination and path prediction method.

Because this article is not about smart path or destination prediction, this paper assumes a variable success ratio for path prediction in Section 5 and examines the resulting efficiency assessment outcomes.
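A simple model shows how the prediction success ratio drives the request count. This sketch assumes each crossed zone was predicted correctly (and its keys pre-shared) independently with probability $p$, and that every mispredicted zone costs one AT request of the 400 ms quoted earlier. The model and function names are illustrative assumptions, not the evaluation method used in this paper.

```python
import random

D_REQUEST_MS = 400.0  # per-request AT update delay quoted earlier (a lower bound)


def expected_requests(p: float, zones_crossed: int) -> float:
    """Expected AT requests if each crossed zone was predicted correctly
    (keys pre-shared) independently with probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    return (1.0 - p) * zones_crossed


def mean_update_delay_ms(p: float, d_request_ms: float = D_REQUEST_MS) -> float:
    """Mean key-update delay per zone entry: only mispredicted zones pay the delay."""
    return (1.0 - p) * d_request_ms


def simulate_requests(p: float, zones_crossed: int, trials: int = 20_000,
                      seed: int = 0) -> float:
    """Monte Carlo estimate of the mean request count under the same assumption."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        total += sum(rng.random() >= p for _ in range(zones_crossed))
    return total / trials


for p in (0.5, 0.8, 0.95):
    print(p, expected_requests(p, 10), round(simulate_requests(p, 10), 2),
          mean_update_delay_ms(p))
```

Under this model the mean delay falls linearly with $p$, which is consistent with the claim that higher prediction accuracy reduces the mean delay.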

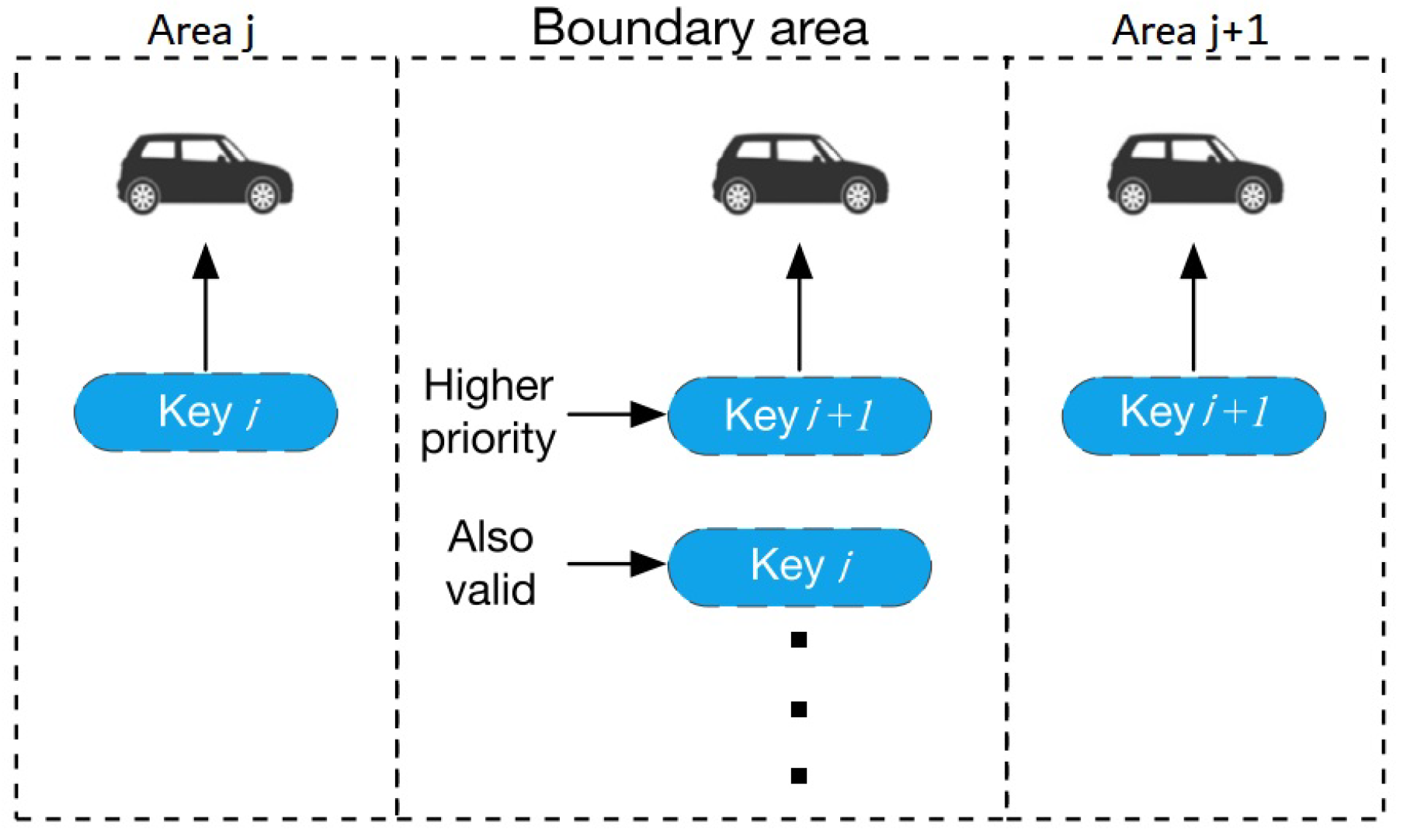

Because the design is based on geography, there will always be borders between neighboring regions. As a vehicle crosses the boundary between two neighboring areas, the key pair currently in use must be updated according to the GPS position, and connectivity would be unavailable during the key changeover. To avoid this, this architecture defines a border zone between two neighboring regions in which both keys may be used by cars and RSUs. When a vehicle travels from region $j$ to region $j+1$, there is a region where both keys are available, as shown in Figure 5. If the navigation system knows which area the car will enter next, the keys for that area take precedence. For example, if the vehicle is traveling from Area $j$ to Area $j+1$, Key $j+1$ has greater priority than Key $j$, as illustrated in Figure 5. Keys pertaining to preceding regions have a smaller impact, because path prediction defines no priority for them. The primary purpose of border zones is to maintain connectivity by minimizing the time delays caused by key switching.
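The border-zone rule can be sketched on a one-dimensional road. The geometry (areas as equal-length intervals, a fixed border width) and both helper functions are hypothetical simplifications; the sketch only shows that both keys are valid near a boundary and that a predicted next area moves its key to the front.

```python
from typing import Optional


def areas_in_range(x_m: float, area_len_m: float, border_m: float) -> list[int]:
    """Areas whose keys are valid at position x_m on a 1-D road (toy geometry).

    Area k covers [k * area_len_m, (k + 1) * area_len_m); within border_m of a
    shared boundary, the neighboring area's key is also valid.
    """
    k = int(x_m // area_len_m)
    areas = [k]
    offset = x_m - k * area_len_m
    if offset < border_m and k > 0:
        areas.append(k - 1)  # still near the boundary with the previous area
    if area_len_m - offset < border_m:
        areas.append(k + 1)  # approaching the boundary with the next area
    return areas


def key_priority(areas: list[int], predicted_next: Optional[int]) -> list[int]:
    """Order valid area keys: the predicted next area first; without a
    prediction the order is left unchanged (sorted() is stable)."""
    return sorted(areas, key=lambda a: 0 if a == predicted_next else 1)


print(key_priority(areas_in_range(995.0, 1000.0, 20.0), predicted_next=1))  # [1, 0]
print(key_priority(areas_in_range(500.0, 1000.0, 20.0), predicted_next=1))  # [0]
```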

This design relies on validation at the start of each time slot. After their genuine IDs have been validated, cars and RSUs receive the necessary shared keys from the key sharing hub. During each time frame, a monitoring mechanism should be in place to detect abuse of the keys or other unusual behavior, such as sending misinformation to the MITS in one region. Any further misuse of the ATs results in the ID being re-verified, ensuring that an intruder without a genuine authenticated ID cannot acquire the keys within a single time period. As a result, once an attack has been observed, the attackers are penalized in the following time period. To summarize, risks persist, but the difficulty of attacking this security framework increases, and attackers' actual identities are easier to trace for subsequent action.

Another advantage of this area-based PKI is the ability to update keys more often. Because the keys for V2X communications must be changed to prevent unlawful tracking, an area-based PKI can provide several key pairs for a single block, allowing cars to change keys more often.

The definitions of the symbols used are given in Table 1.

Establishing secret keys over public networks through mutual learning of neural networks is a suitable alternative to key exchange methods based on number theory. Two ANNs (Artificial Neural Networks) are trained on identical inputs until their weight vectors coincide, and the weights obtained from this synchronization serve as the key. This technique has been used to create cryptographic keys over public networks. Using identical, well-chosen input vectors makes the ANNs synchronize faster; such inputs not only speed up synchronization but also reduce the probability of a successful attack. Key generation with this technique is efficient enough that, if a new key is required for every communication, it can be created without storing any information. The efficiency of obtaining a session key over a transmission medium can be improved further by increasing the number of neurons in the input or hidden layer. In this article, the idea of neural cryptography is extended to produce a group key, in which the secret key consists of the synchronized weights obtained from the ANNs. The ANN technique for generating keys for two-party communication is thus extended to generate keys for users communicating in groups. Every user has his or her own ANN. The ANNs start from their own initial weight vectors, and synchronization is reached when all ANNs in the cluster arrive at identical weight vectors. The following subsections describe two forms of group key agreement (GKA) based on this primary setup.

Sections 3.1, 3.2, and 3.3 discuss synchronization using a ring framework, synchronization using a tree framework, and the security assessment of the proposed methodology, respectively.

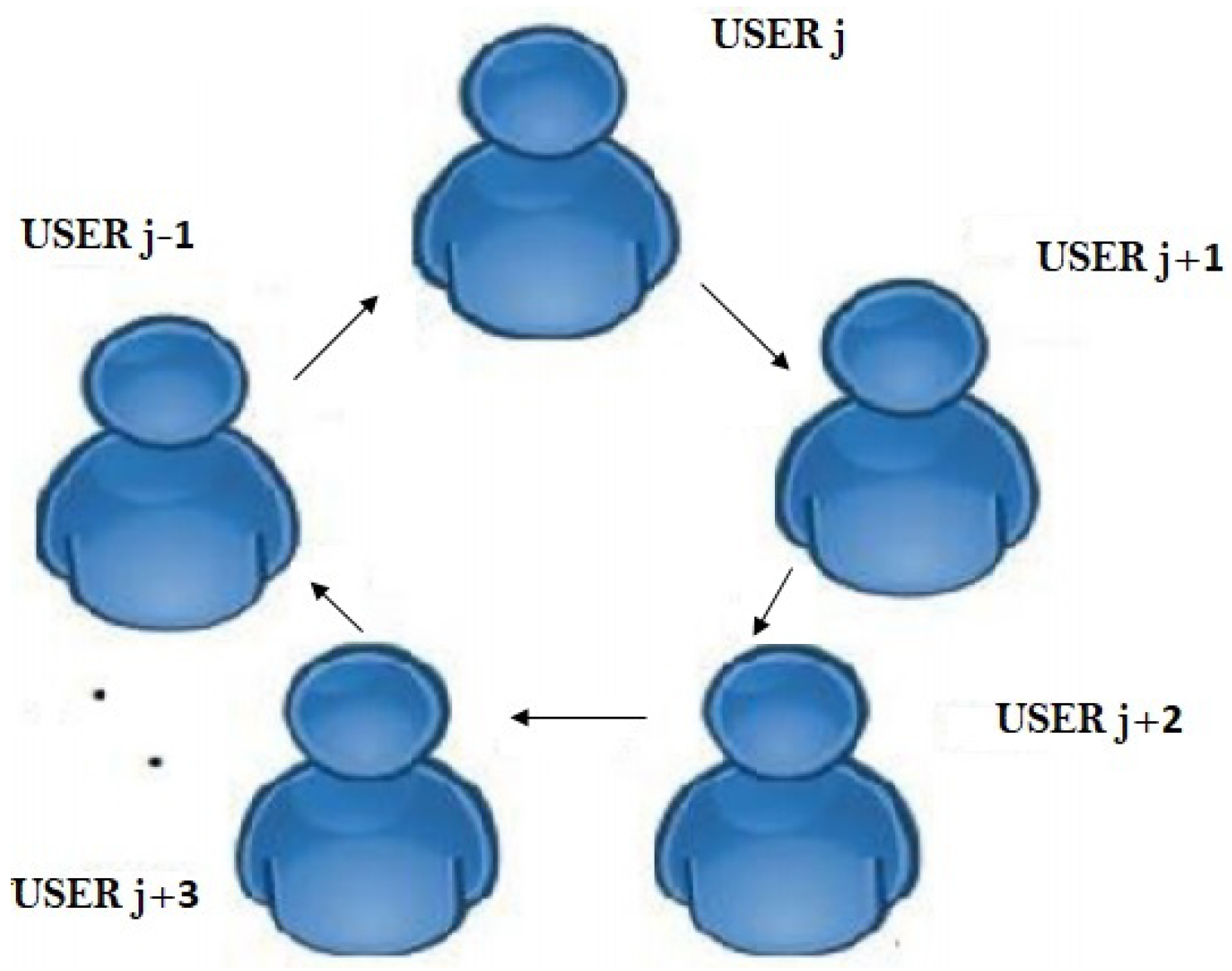

3.1. Synchronization Using Ring Framework

As illustrated in Figure 6, the $m$ users involved in the communication are organized in a ring, so that each user receives messages only from its predecessor and sends messages only to its successor. The initial input vector and the weight range are distributed to all users. A flag $g$ indicates whether the outputs of the different users’ TLTPMs are the same: if the outputs of two TLTPMs are identical, the flag is set to 1; otherwise, it is set to 0.

Phase One: Initialization

1. Each member has his or her own TLTPM with its own initial weights.

2. Each member sets the flag value and initializes its TLTPM with the shared input.

Phase Two: Key Generation

3. Until synchronization is reached, every principal carries out the following procedure.

(i) Compute the values of the hidden neurons and the output neuron. Each principal transmits its output and flag value to its successor in the ring, which compares its own output with the received one. If the two outputs are the same, the flag value is kept; otherwise, the flag is reset to 0 before being passed on to the next principal.

(ii) If the flag is reset to 0 at any point during this computation, the subsequent users keep propagating it to the remaining principals until it has travelled around the whole ring, so that the updated flag value reaches every participating user.

(a) If the flag is 0, go to step 2.

(b) If the flag is 1, each user updates its weight vector according to the learning rule in Equation (1), which uses the Heaviside step function.

The synchronized weights serve as the key, which can now be used for encryption and decryption. After the group members have agreed on the neural key, users may need to join or leave the group in the ring structure. One approach is simply to rerun the procedure to generate a new key for the modified group.
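The ring procedure can be simulated in a single process to see the flag logic at work. This is a sketch under stated assumptions, not the TLTPM itself: because Equation (1) is not reproduced here, a standard two-layer tree parity machine with the usual Hebbian rule stands in for the TLTPM, and the sizes `K`, `N`, `L`, the `sign(0) = -1` convention, the seed, and all function names are illustrative choices.

```python
import random
from typing import List, Tuple

Weights = List[List[int]]


def tpm_output(w: Weights, x: List[List[int]]) -> Tuple[List[int], int]:
    """Hidden outputs (sign of the local field, sign(0) taken as -1) and their product."""
    sigma = [1 if sum(wi * xi for wi, xi in zip(wk, xk)) > 0 else -1
             for wk, xk in zip(w, x)]
    tau = 1
    for s in sigma:
        tau *= s
    return sigma, tau


def hebbian(w: Weights, x: List[List[int]], sigma: List[int], tau: int, L: int) -> None:
    """Hebbian step on hidden units that agree with the output, clipped to [-L, L]."""
    for k in range(len(w)):
        if sigma[k] == tau:
            w[k] = [max(-L, min(L, wi + xi * tau)) for wi, xi in zip(w[k], x[k])]


def ring_sync(m: int, K: int = 3, N: int = 4, L: int = 3, seed: int = 1,
              max_rounds: int = 200_000) -> Tuple[Weights, int]:
    rng = random.Random(seed)
    # Phase one: every member draws its own random weights.
    members = [[[rng.randint(-L, L) for _ in range(N)] for _ in range(K)]
               for _ in range(m)]
    for rnd in range(1, max_rounds + 1):
        x = [[rng.choice((-1, 1)) for _ in range(N)] for _ in range(K)]  # shared input
        outs = [tpm_output(w, x) for w in members]
        # The flag travels once around the ring: member i compares its output with
        # the one received from member i-1 and resets the flag to 0 on a mismatch.
        flag = 1
        for i in range(1, m):
            if outs[i][1] != outs[i - 1][1]:
                flag = 0
        if flag == 1:  # step (b): all outputs agree, so every member learns
            for w, (sigma, tau) in zip(members, outs):
                hebbian(w, x, sigma, tau, L)
        if all(w == members[0] for w in members[1:]):
            return members[0], rnd
    raise RuntimeError("ring did not synchronize within max_rounds")


key, rounds = ring_sync(m=3)
print(f"3 members synchronized after {rounds} rounds")
```

Only the outputs and the flag cross the ring; the weights never leave a member, which is what keeps the resulting key private.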

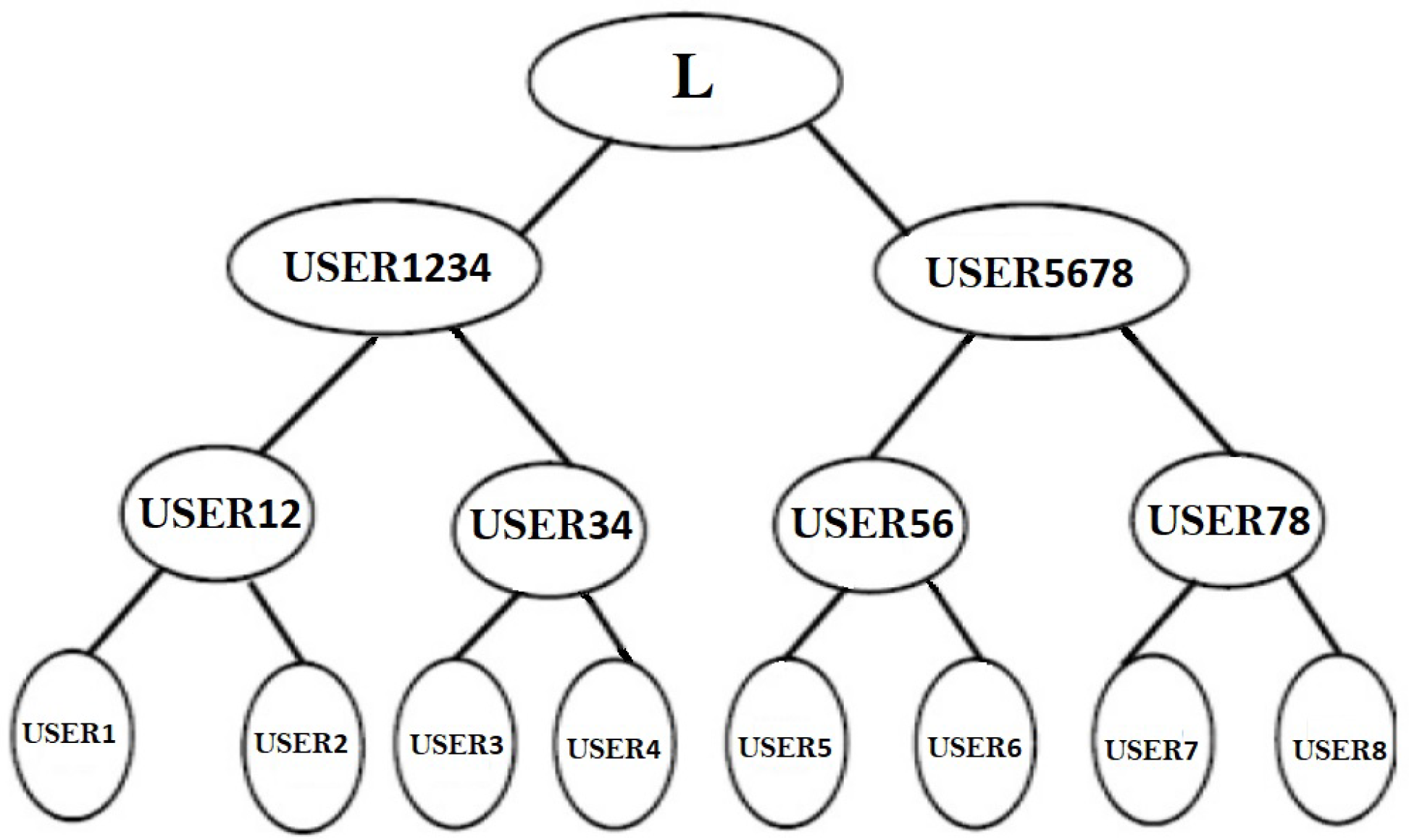

3.2. Synchronization Using B-Tree Framework

The leaf nodes of the tree in Figure 7 represent the principals in the group. Neighboring principals form a pair and start from a shared initial input. If the number of nodes is even, there are half as many pairs as nodes. Figure 7 considers eight users, who form four pairs of neighbors.

Each pair runs its TLTPMs to obtain synchronized weights, which then become the pair’s initial weights for the next level; the other pairs likewise obtain their own synchronized weights. In the following round, adjacent pairs combine into new pairs, run their TLTPMs, and produce new keys. This merging and synchronization procedure is repeated until all users have merged into a single group, at which point every member holds an identical weight vector that forms the neural group key.

For an odd number of members, as illustrated in Figure 8, all members except the last merge to an identical neural key as explained above, and the final member then synchronizes its TLTPM with the TLTPM of the merged group to obtain a mutually agreed key. The number of levels required for key exchange is determined by the number of users in the cluster: for $2^{m-1} < n \le 2^{m}$ users, $m$ levels are required. For example, for $m = 3$ the group may have 5, 6, 7, or 8 members, and the number of levels required for key creation is the same in all of these cases.
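The level count can be checked with a small pairing schedule. This sketch only computes which groups merge at each level; it does not run any synchronization. It assumes that neighbors pair up in order and that a group left without a partner is carried up unchanged to the next level, which is one reading of the odd case that keeps the count at $\lceil \log_2 n \rceil$. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Group = Tuple[str, ...]


def pairing_schedule(members: Sequence[str]) -> List[List[Group]]:
    """Groups formed at each level when neighbors pair up; an unpaired group waits a level."""
    groups: List[Group] = [(m,) for m in members]
    levels: List[List[Group]] = []
    while len(groups) > 1:
        merged = [groups[i] + groups[i + 1] for i in range(0, len(groups) - 1, 2)]
        if len(groups) % 2 == 1:
            merged.append(groups[-1])  # odd one out is carried up unchanged
        levels.append(merged)
        groups = merged
    return levels


def levels_needed(n: int) -> int:
    """Smallest m with n <= 2**m, i.e. ceil(log2 n), for n >= 1 members."""
    if n < 1:
        raise ValueError("a group needs at least one member")
    return (n - 1).bit_length()


users = [f"U{i}" for i in range(1, 9)]
for level, groups in enumerate(pairing_schedule(users)):
    print(level, groups)  # three levels (0, 1, 2) for eight users
```

With a ninth member, `levels_needed(9)` becomes 4, matching the extra level 3 used when a new user joins at the root.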

Algorithm 1 describes the complete TLTPM synchronization mechanism.

Algorithm 1: The complete TLTPM synchronization mechanism.

Input: random initial weight vectors and an identical input vector.

Output: sender and recipient hold synchronized TLTPMs with the identical key.

Step 1: Initialize the weight matrix with random values. Repeat Steps 2 to 5 until full synchronization is achieved.

Step 2: Compute the output of every hidden neuron from its weights and the current inputs. The result of the first hidden layer is given by Equation (2), and the result of the v-th hidden neuron by Equation (3). This value is then mapped to a binary output according to Equation (4), which determines whether the hidden neuron is active or inactive.

Step 3: Compute the final TLTPM output by multiplying the outputs of the hidden neurons in the last layer (Equation (5)); Equation (6) gives its magnitude. If the TLTPM has only one hidden neuron, its output is simply that neuron’s output.

Step 4: If the outputs of the two TLTPMs G and D agree, update the weights with one of the learning rules; with the Hebbian rule, both TLTPMs learn from each other as given in Equation (7). If the weights of both TLTPMs are identical, go to Step 6.

Step 5: If the outputs of the two TLTPMs differ, the weights are not changed; return to Step 2.

Step 6: Use the synchronized weights as the cryptographic key.
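Algorithm 1 can be illustrated with the classic two-party tree parity machine. This is a sketch, not the TLTPM: Equations (2) to (7) are not reproduced in this section, so a standard two-layer TPM (`K` hidden units with `N` inputs each, weights in `[-L, L]`) and the standard Hebbian rule stand in for them, and the sizes, seed, and function names are illustrative assumptions. The comments map each part to the steps above.

```python
import random
from typing import List, Tuple

K, N, L = 3, 4, 3  # hidden units, inputs per hidden unit, weight range (illustrative)
Matrix = List[List[int]]


def tpm_output(w: Matrix, x: Matrix) -> Tuple[List[int], int]:
    """Steps 2-3: hidden outputs (sign of local field, sign(0) -> -1) and their product."""
    sigma = [1 if sum(wi * xi for wi, xi in zip(wk, xk)) > 0 else -1
             for wk, xk in zip(w, x)]
    tau = 1
    for s in sigma:
        tau *= s
    return sigma, tau


def hebbian_update(w: Matrix, x: Matrix, sigma: List[int], tau: int) -> None:
    """Step 4: Hebbian rule on hidden units that agree with tau, clipped to [-L, L]."""
    for k in range(K):
        if sigma[k] == tau:
            w[k] = [max(-L, min(L, wi + xi * tau)) for wi, xi in zip(w[k], x[k])]


def synchronize(seed: int = 0, max_steps: int = 100_000) -> Tuple[Matrix, int]:
    rng = random.Random(seed)
    # Step 1: each party draws its own random weights in [-L, L].
    wa = [[rng.randint(-L, L) for _ in range(N)] for _ in range(K)]
    wb = [[rng.randint(-L, L) for _ in range(N)] for _ in range(K)]
    for step in range(1, max_steps + 1):
        x = [[rng.choice((-1, 1)) for _ in range(N)] for _ in range(K)]  # public input
        sa, ta = tpm_output(wa, x)
        sb, tb = tpm_output(wb, x)
        if ta == tb:  # Step 4: outputs agree, so both parties learn
            hebbian_update(wa, x, sa, ta)
            hebbian_update(wb, x, sb, tb)
        # Step 5: if the outputs differ, the weights stay unchanged.
        if wa == wb:  # Step 6: identical weights become the shared key
            return wa, step
    raise RuntimeError("no synchronization within max_steps")


key, steps = synchronize(seed=0)
print(f"synchronized after {steps} steps; key = {key}")
```

Only the inputs `x` and outputs `tau` would be exchanged in a real deployment; in this sketch both parties run in one process for clarity.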

The procedure handles members joining and leaving the group as follows.

- (i) Leaving the group

Figure 9 depicts a situation in which a member wishes to leave the group at level 2, after the lower-level keys have been obtained. Removing this member does not change the synchronized weights obtained by the pairs it did not belong to, so those users do not need to run their TLTPMs again to regenerate a synchronized weight vector. The departing member’s weights do affect the key of its own pair, although the members of the other level-1 groups can keep their synchronized weight vectors. Its former partner, now a single node, starts its TLTPM with an initial weight vector and synchronizes with the neighboring group to obtain a pair of synchronized keys. The resulting groups then run their separate TLTPMs to converge to the same weight vector, which becomes the principals’ neural group key.
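Which members must re-synchronize after a departure can be computed directly from the tree layout. This is a hypothetical helper, not part of the article: it assumes the in-order pairing of consecutive members, so that the group containing member `i` at level `l` is the block of `2**(l+1)` consecutive members around it, and it lists, per level, the remaining members of the groups that contained the leaver. Every other group keeps its synchronized weights.

```python
from typing import List


def resync_plan(n_members: int, leaver: int) -> List[List[int]]:
    """Members (0-based) that must re-synchronize at each level after `leaver` departs."""
    if not 0 <= leaver < n_members:
        raise ValueError("leaver must be a valid member index")
    plan = []
    level = 0
    while (1 << level) < n_members:
        span = 1 << (level + 1)  # size of a full group at this level
        start = (leaver // span) * span
        block = range(start, min(start + span, n_members))
        plan.append([m for m in block if m != leaver])
        level += 1
    return plan


# Eight members U1..U8 (indices 0..7); U3 (index 2) leaves.
for level, members in enumerate(resync_plan(8, leaver=2)):
    print(level, [f"U{m + 1}" for m in members])
```

At level 0 only the former partner U4 remains, so it becomes a single node; the pair U1-U2 and the group U5-U8 are untouched until they are merged again at higher levels.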

- (ii)

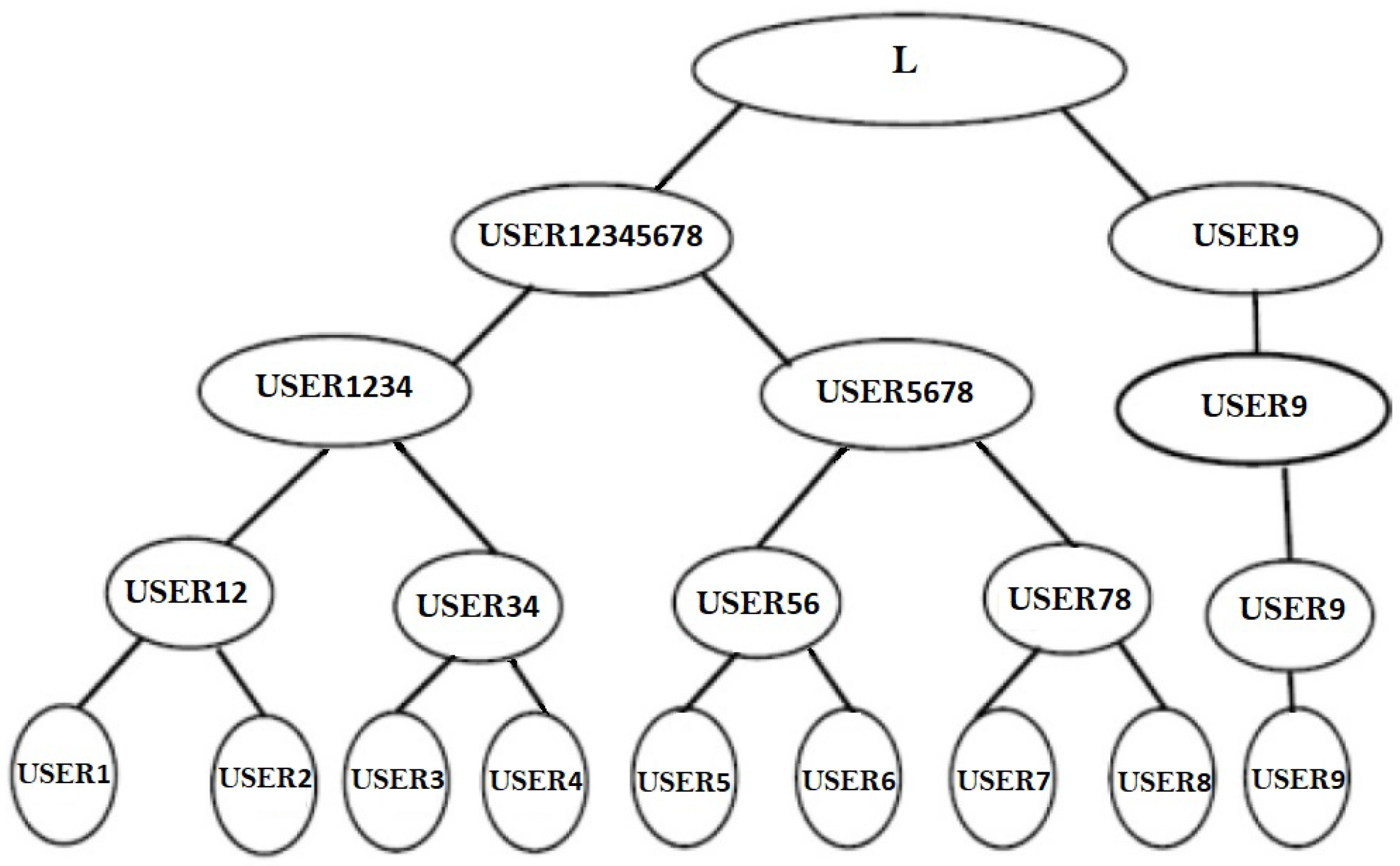

Joining to the group

If any user

wishes to participate in the group, such as a fresh member

,

get linked with the root of the tree, as illustrated in

Figure 10. Previously, with the eight members, two levels are needed to construct the key, namely

and

instruct their networks in simultaneously at 0th, and then in 1st level

and

will instruct their networks for synchronization, and in level 2

will instruct their TLTPM’s and merge to an identical weight vector. Now, there are 9 participants in the group

. After level 2

instructed their network system and synced weight values, a new joining USER 9 in level 3

will develop as a pair and instruct their network systems for weight synchronization, as illustrated in

Figure 10.

3.3. Assessment of Security

Implementing security computations can also increase the delay in V2X communication systems, as shown in Section 2. This section reviews the security computations according to the required level of trust and then presents a GPU-based architecture, whose efficiency is compared with that of conventional systems.

Existing V2X communication uses public-key cryptography to authenticate transmissions. Despite various differences between the US stack (IEEE 1609) and the EU stack (ETSI ITS G5) [5], the security techniques used are almost the same, according to [38]. This paper categorizes the security computations by requirement: for the most common use cases, V2X communication relies on three primary types of security features, namely authentication of the information source, integrity of the information content, and encryption of the information.

3.3.1. Authentication of the Information Source

To prevent problems caused by fraudulent or incorrect data from spoofing attacks, an authentication mechanism ensures that information is transmitted from a genuine source. The most practical approach is to use digital signatures: the sender signs each message and the recipient verifies it. The standards chose elliptic-curve cryptography (ECC) because of its smaller signature and key sizes [39]. Signatures are generated with ECDSA using 256-bit or 224-bit keys [38].

3.3.2. Integrity of Information Content

Integrity of the data content is achieved by computing a hash that is always sent with the data, so that the recipient can detect any modification. The most commonly used hash functions belong to the SHA-2 family, typically SHA-256 and SHA-512. According to the most recent ETSI publication, the TUAK algorithm set [40], a more recently developed second algorithm set for authentication and key generation, will be used in V2X communications.
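Appending and checking a digest can be sketched with Python’s standard `hashlib`. This is a minimal illustration, and the message framing (payload followed by a 32-byte SHA-256 digest) is an assumption made for the example. An unkeyed hash only reveals tampering if the digest itself is protected, for instance by the ECDSA signature described in Section 3.3.1; otherwise an attacker can simply recompute it.

```python
import hashlib
import hmac

DIGEST_LEN = hashlib.sha256().digest_size  # 32 bytes


def attach_digest(payload: bytes) -> bytes:
    """Append the SHA-256 digest of the payload."""
    return payload + hashlib.sha256(payload).digest()


def check_digest(message: bytes) -> bool:
    """Recompute the digest of the payload part and compare it with the appended one."""
    if len(message) < DIGEST_LEN:
        return False
    payload, digest = message[:-DIGEST_LEN], message[-DIGEST_LEN:]
    # compare_digest performs a constant-time comparison.
    return hmac.compare_digest(hashlib.sha256(payload).digest(), digest)


framed = attach_digest(b"hypothetical V2X payload")
print(check_digest(framed))                 # True
print(check_digest(b"X" + framed[1:]))      # False: first payload byte changed
```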

3.3.3. Encryption of Information

Encrypting every message is the most thorough way to prevent eavesdropping and leakage of confidential data in V2X communications. Most existing communication systems use key exchange techniques to establish authentication between the two parties and then protect the message contents with symmetric encryption. However, encrypting all transactions is computationally costly, and, as mentioned in Section 1, V2X networks have limited tolerance for processing overhead. A more feasible architecture therefore uses encryption as a supplementary technique, applied only when confidential data is transferred.

3.3.4. GPU Computing-Based Acceleration

In practice, additional acceleration techniques for ECDSA and hash functions are used in V2X networks to achieve higher efficiency. According to [38], a specialized hardware accelerator can perform 1500 ECDSA signature generations and 2000 signature verifications per second. The author of [41] implements ECDSA on an Intel i7 CPU and reports the time required for a single signing or verification operation. However, because the performance of these computations depends heavily on the hardware configuration, a viable solution should be based on realistic hardware assumptions. For example, equipping every vehicle with a specialized high-performance security processor is expensive, and, for architectural or power-consumption reasons, an Intel i7 CPU is unlikely to be used in cars. The architecture should therefore take into account the hardware resources available in today’s vehicles. One viable option is to run the computations on System-on-Chip (SoC) platforms, which are common in embedded systems such as smartphones. Indeed, SoC designs are already used in today’s automobiles: vehicles with Nvidia Drive systems (based on the Nvidia Tegra X1 [42]) have used them to accelerate AI algorithms [43].

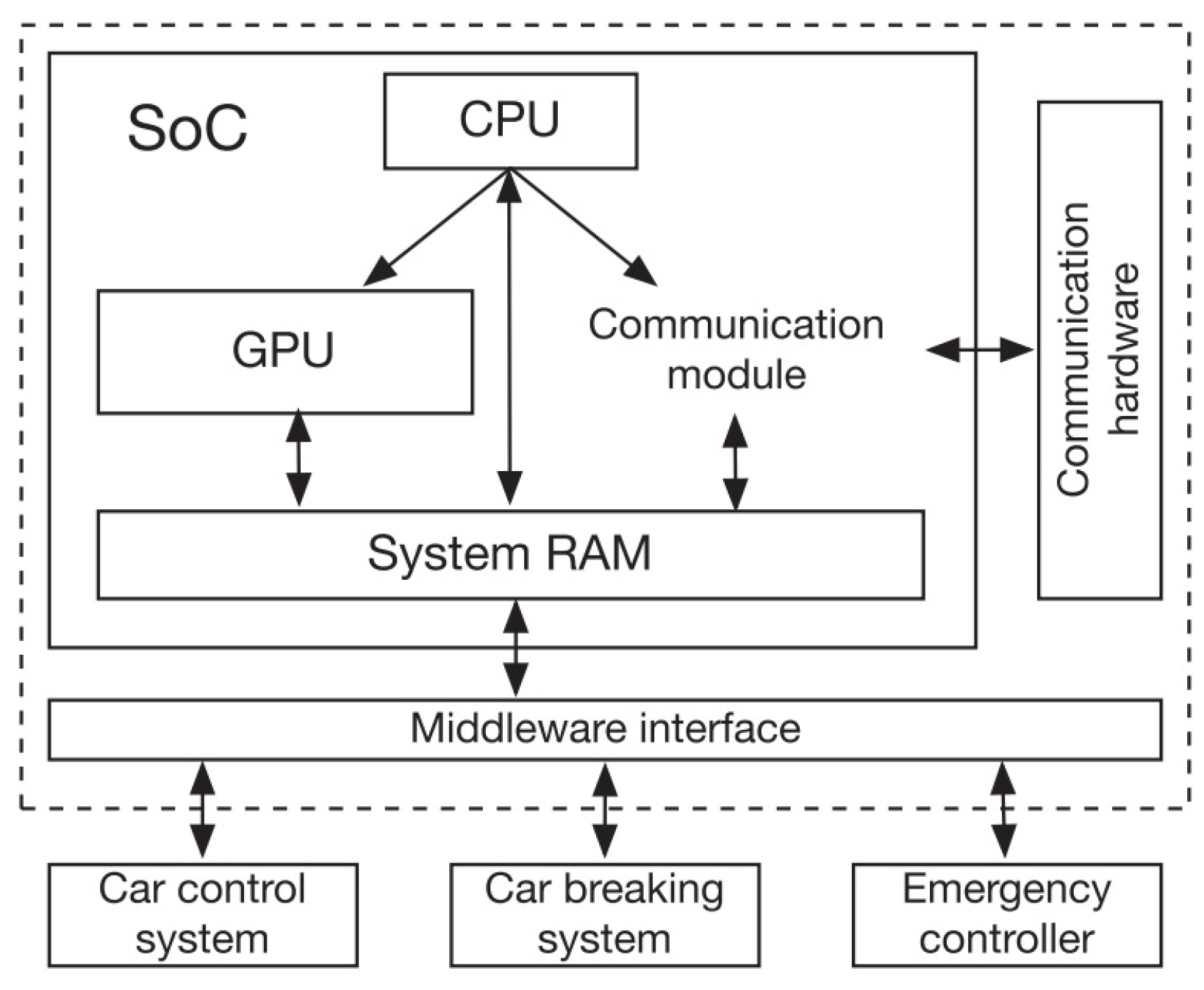

The Nvidia Tegra X1 is a typical SoC design that includes a GPU, a video encoder/decoder, a DSP, and a display controller, as well as a shared system RAM and a CPU that acts as controller and coordinator. As illustrated in Figure 11, this article builds the solution for V2X communication systems on a minimal SoC structure containing only the essential components. The CPU is a computing core that also controls the other components, such as the GPU and the communication modules. Data in the shared system RAM can be processed by the GPU and accessed by the CPU and the communication units. Complex workloads can then be split into small computation tasks assigned to different units, so that their processing times overlap and overall performance improves.

3.3.5. ECDSA and Hash Computation on GPU

Nvidia offers a number of driving platforms that are ready to be installed in automobiles. According to [44], the GPU on Nvidia SoC systems can be used to accelerate security computations. Singla et al. [44] provide an ECDSA benchmark on both the GPU and the CPU of the Nvidia Tegra X1 system. In offline mode, the Tegra CPU performs one signing operation in about 10 ms, and the Tegra GPU speeds up this operation by a factor of three. For verification, the Tegra CPU performs one operation in less than 1 ms, which is faster than the GPU. The experiments in [45] demonstrated that the SHA-512 and SHA-3 hash algorithms can be accelerated on the Tegra architecture and that their performance meets the delay requirements.

Consequently, a CPU-only implementation on Tegra may be only just sufficient for security applications. With GPU acceleration, the Tegra SoC platform performs security-related computations considerably faster than the results reported in [16] and meets the delay requirements of security applications. Furthermore, distributing the computing jobs for a large number of inputs across such SoC architectures may yield even higher speeds.

Forward secrecy, backward secrecy, and key independence are the security objectives for dynamic groups described by Kim et al. [24]. Forward secrecy ensures that an attacker who holds a set of group keys cannot deduce any subsequent group key; in other words, when a principal leaves the group, the keys it leaves behind have no bearing on the construction of later group keys. Backward secrecy is ensured by generating a new key whenever a member joins. In the ring protocol, the participants start from a random input and synchronize to produce a new key value. In the tree-based protocol, backward secrecy is likewise ensured by generating a new key when a principal joins: the new group key is produced when the principal joins the current node, as illustrated in Figure 7. Key independence is also ensured, since an attacker who holds a set of group keys cannot derive any other group key: each session depends entirely on the randomness of the input. The number of learning steps grows with the weight range $M$, which slows synchronization; increasing $M$ together with the number of hidden neurons, however, eventually lowers an attack’s success rate. The protocol is therefore resistant to these attacks, although it remains important to specify which parties are trusted to protect the data. For integrity verification, a secret key agreed upon by both participants can be used to compute a fixed-length keyed hash of a message. This keyed hash acts as a data integrity certificate that protects the message’s authenticity and is transmitted with the message to protect it against unauthorized modification. The messages are not hidden from third parties, but their integrity is guaranteed.
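The keyed-hash integrity check can be sketched with HMAC-SHA256 from Python’s standard library. Two parts are assumptions for illustration only: the hypothetical `key_from_weights` helper, which hashes the synchronized weight vector into a 32-byte key (a dedicated key-derivation function such as HKDF, RFC 5869, would be the more standard choice), and the example weights. As the text notes, the message itself travels in the clear; only its integrity is protected.

```python
import hashlib
import hmac
from typing import List


def key_from_weights(weights: List[int]) -> bytes:
    """Hypothetical derivation: hash the synchronized weight vector into a 32-byte key."""
    encoded = ",".join(str(w) for w in weights).encode("ascii")
    return hashlib.sha256(encoded).digest()


def make_tag(key: bytes, message: bytes) -> bytes:
    """Fixed-length (32-byte) HMAC-SHA256 tag sent alongside the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_tag(key: bytes, message: bytes, tag: bytes) -> bool:
    # compare_digest avoids leaking the position of the first mismatch through timing.
    return hmac.compare_digest(make_tag(key, message), tag)


shared = key_from_weights([2, -1, 0, 3, -3, 1])  # both parties hold identical weights
msg = b"hypothetical V2X payload"
tag = make_tag(shared, msg)
print(verify_tag(shared, msg, tag))         # True
print(verify_tag(shared, msg + b"!", tag))  # False
```

Because both participants derive the same key from the same synchronized weights, no key material is transmitted, while an outsider without the weights cannot forge a valid tag.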

4. Results and Discussions

This section presents the performance analysis of the trust structure for information amalgamation. A heterogeneous V2X system was simulated with the NS-3 simulator; it includes a short-range communication infrastructure between vehicles and RSUs, and Internet protocols between the RSUs and the key sharing hub. The mean key sharing delay is computed for the area-based key distribution structure under different prediction accuracy ratios.


In the existing evaluation of the conventional key sharing system [9], there are two types of key update process, corresponding to two distinct trust levels. They are shown in Figure 12 as AT requests with tier 1 trust and AT requests with tier 2 trust. AT requests with tier 1 trust are unprotected: RSUs simply forward whatever they receive from the vehicles or the key sharing servers. For AT requests with tier 2 trust, both vehicles and RSUs verify the source of the data, and every request and response is checked.

As mentioned in Section 3, when vehicles enter an unpredicted area, this approach falls back on existing key sharing techniques. In this part, the average key sharing delay is estimated for the area-based key sharing technique, with the existing technique as the fallback.

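As shown in Figure 12, the overall key sharing delay for a single key update consists of four components: the vehicle-side delay, the RSU-side delay, the delay on the key sharing server, and the delay on the heterogeneous network.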


The vehicle-side delay (Equation (8)) is the time the vehicle needs to generate the request message, sign it, receive the reply from the key sharing server, and verify the reply.

The RSU-side delay (Equation (9)) is the time the RSU needs to verify the key update request, forward it, receive the reply from the key sharing server, and sign it.

The delay on the key sharing server is accounted for by Equation (10); in this study, the server procedure is referred to as verification and response. The key sharing server itself is not the focus of this work.

The network delay (Equation (11)) is the delay on the heterogeneous network. It consists of the delay on the DSRC network between vehicles and RSUs, the delay on the Internet between RSUs and key sharing servers, and a random delay on the link between the RSUs and the key sharing center (which is ignored in [9]).

No random delay is assumed on the link between RSUs and vehicles, since it follows the DSRC specifications [9]. The link between the RSUs and the key sharing server, on the other hand, relies on current Internet protocols and, like other web applications, may suffer from delay [44]. In this simulation, this Web delay is modelled as a random delay that can occur over a time span, expressed as additional communication time (0 to 30% extra).

Then, as described in Section 3, the ratio of correct predictions is used to determine the expected key sharing delay. When a vehicle enters an area for which it already holds the pre-distributed keys, the delay is only the key-switching time, which arises from network latency and is expected to be very small. Key requests are made, using the process shown in Figure 12, only when the prediction fails.

The overall delay of area-based key sharing is estimated with Equation (12), where the request delay equals the sum of Equations (8)–(11).

When the prediction ratio in Equation (12) is 0, every vehicle must send key update requests to the RSU, so the result coincides with that of [9] under the same assumption: each vehicle remains in a single zone for the duration of an AT (for example, 10 min).

Another important point is that the study in [9] did not account for accelerating security operations such as message signing and verification on vehicles and RSUs. According to [9], the average time spent on signing and verification is 50 ms on a vehicle (in Equation (8)) and 200 ms on an RSU (in Equation (9)). However, as shown in Section 3.3, this time can be reduced on platforms with hardware acceleration, such as Nvidia Tegra systems. This part of the evaluation therefore simulates two scenarios: one in which both vehicles and RSUs are equipped with Nvidia Tegra platforms, and one in which neither has such acceleration.

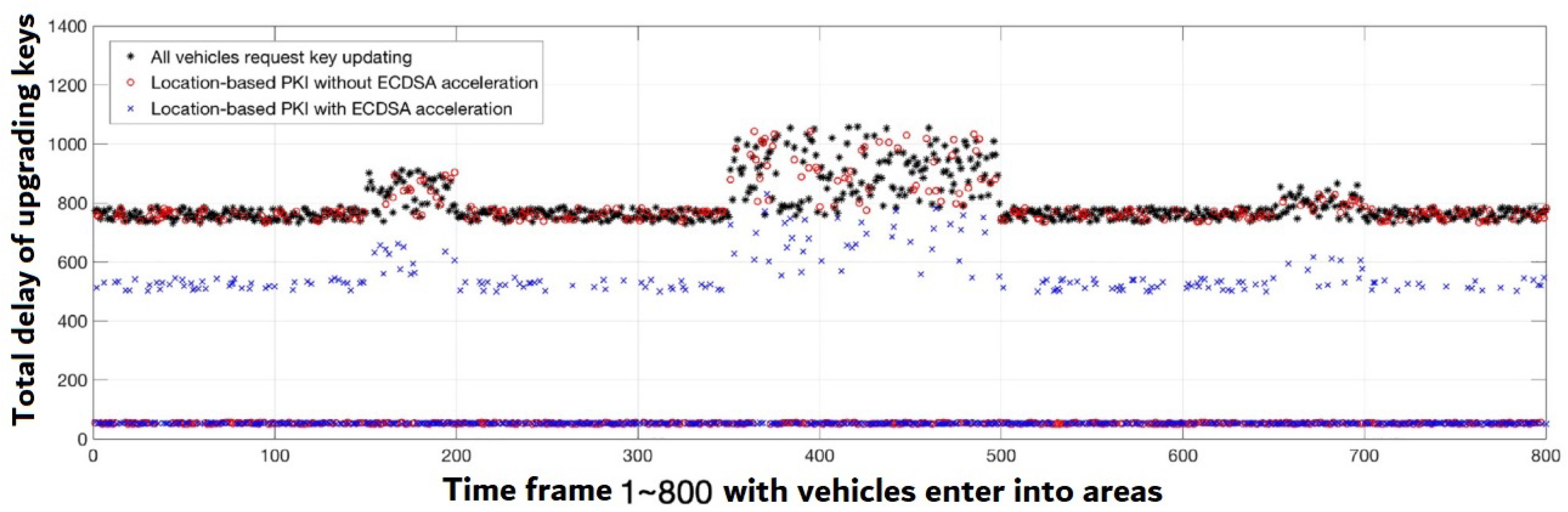

The simulation is set up so that at least one vehicle enters an area in every short time interval and must update its communication key. Some vehicles already hold the required keys, so only a key switch is needed; others do not and must request a new key through the RSU. The prediction success ratios are set to 0.3, 0.5, and 0.7.
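The expected delay can be sketched as a prediction-weighted average. This assumes Equation (12) has the form $D = r\,T_{\mathrm{switch}} + (1 - r)\,T_{\mathrm{req}}$, where $r$ is the prediction success ratio and $T_{\mathrm{req}}$ is the sum of Equations (8)–(11); the exact equation is not reproduced here. The 50 ms and 200 ms figures echo the security-computation averages reported in [9] (so the vehicle and RSU terms are lower bounds), while the server, network, and switching times are placeholders, and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RequestDelay:
    """Components of a full key-update request, in milliseconds (illustrative values)."""
    vehicle_ms: float  # Eq. (8): generate, sign, receive reply, verify
    rsu_ms: float      # Eq. (9): verify, forward, receive reply, sign
    server_ms: float   # Eq. (10): verification and response at the key sharing server
    network_ms: float  # Eq. (11): DSRC + Internet + random delay

    def total(self) -> float:
        return self.vehicle_ms + self.rsu_ms + self.server_ms + self.network_ms


def expected_delay(ratio: float, switch_ms: float, request: RequestDelay) -> float:
    """Assumed form of Eq. (12): key switch on a correct prediction, full request otherwise."""
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must lie in [0, 1]")
    return ratio * switch_ms + (1.0 - ratio) * request.total()


req = RequestDelay(vehicle_ms=50.0, rsu_ms=200.0, server_ms=10.0, network_ms=40.0)
for r in (0.3, 0.5, 0.7):
    print(f"ratio {r}: {expected_delay(r, switch_ms=1.0, request=req):.1f} ms")
```

Setting the ratio to 0 reproduces the conventional case in which every vehicle sends a request, and GPU acceleration would enter through smaller `vehicle_ms` and `rsu_ms` values.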

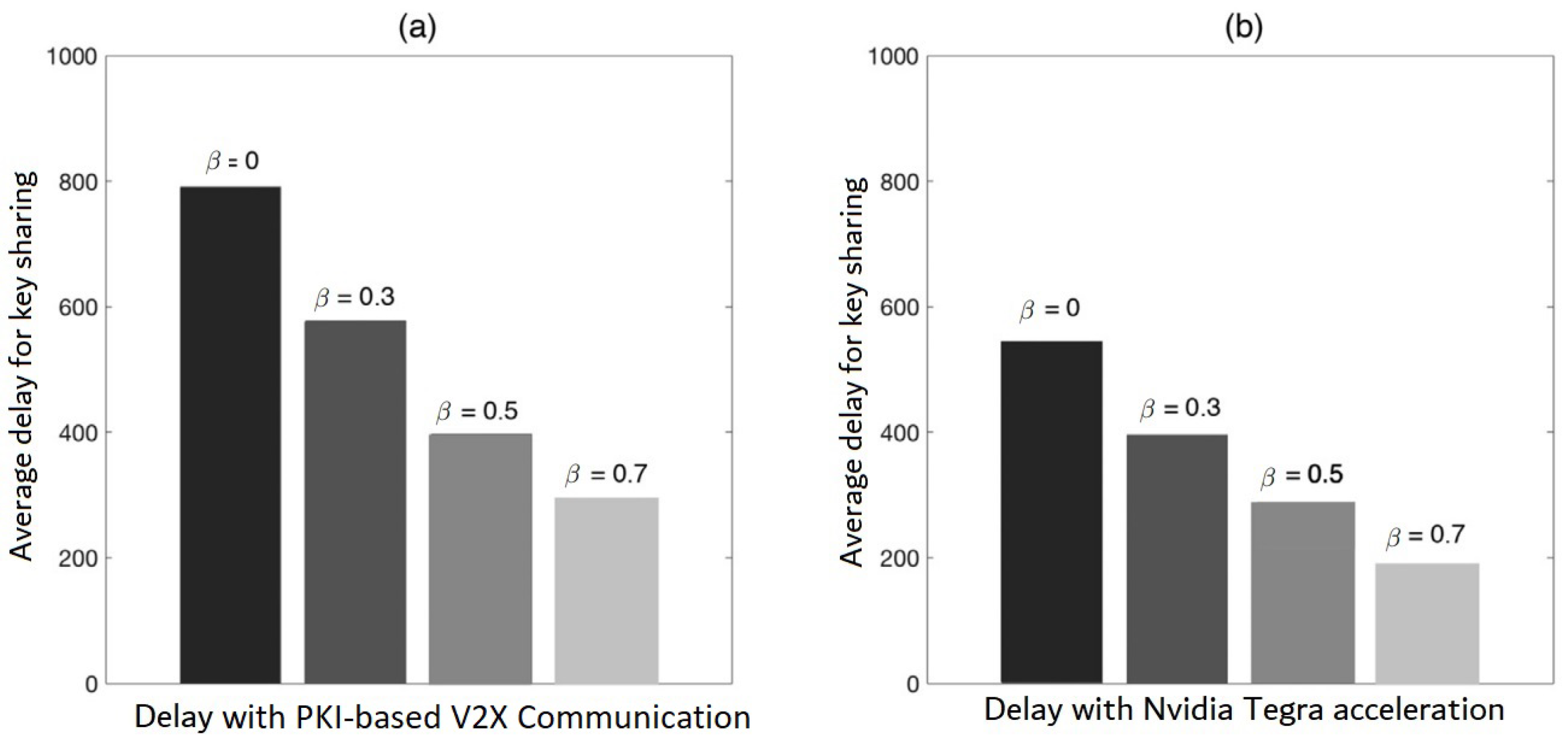

Figure 13 depicts the distribution of the key sharing delay for the simulated prediction ratio. The results on the Nvidia Tegra platform are compared with results for other hardware implementations, based on the time estimates in [9]. When the prediction ratio is set to a high value, indicating accurate predictions, most vehicles do not need to send key update requests, and their latency equals the key-switching time.

Furthermore, as the number of update requests decreases, the average key sharing delay decreases. Vehicles that do not hold pre-shared keys for the area send requests that are processed with tier 2 trust, as illustrated in Figure 12. In this case, the acceleration provided by the Nvidia Tegra platform reduces ECDSA signing and verification time, lowering the key sharing delay.

Figure 14 shows the average key sharing delay. A higher prediction accuracy ratio yields lower key sharing latency, and for the same ratio, the speed-up provided by the Nvidia Tegra SoC platform is clearly visible.

This simulation demonstrates the benefits of adopting an area-based key pre-distribution scheme. First, by reducing the number of requests, the area-based key sharing network lowers the average delay, and a high prediction accuracy ratio reduces it further.

Second, key sharing is an information exchange process that involves both data communication and the necessary security computations, with key requests and responses being one type of message carried over the heterogeneous V2X system. As the results in Figure 14 show, GPU acceleration reduces the time spent on security computations. Consequently, the information exchange latency between vehicles and RSUs in V2X systems is significantly reduced for critical requests and responses.

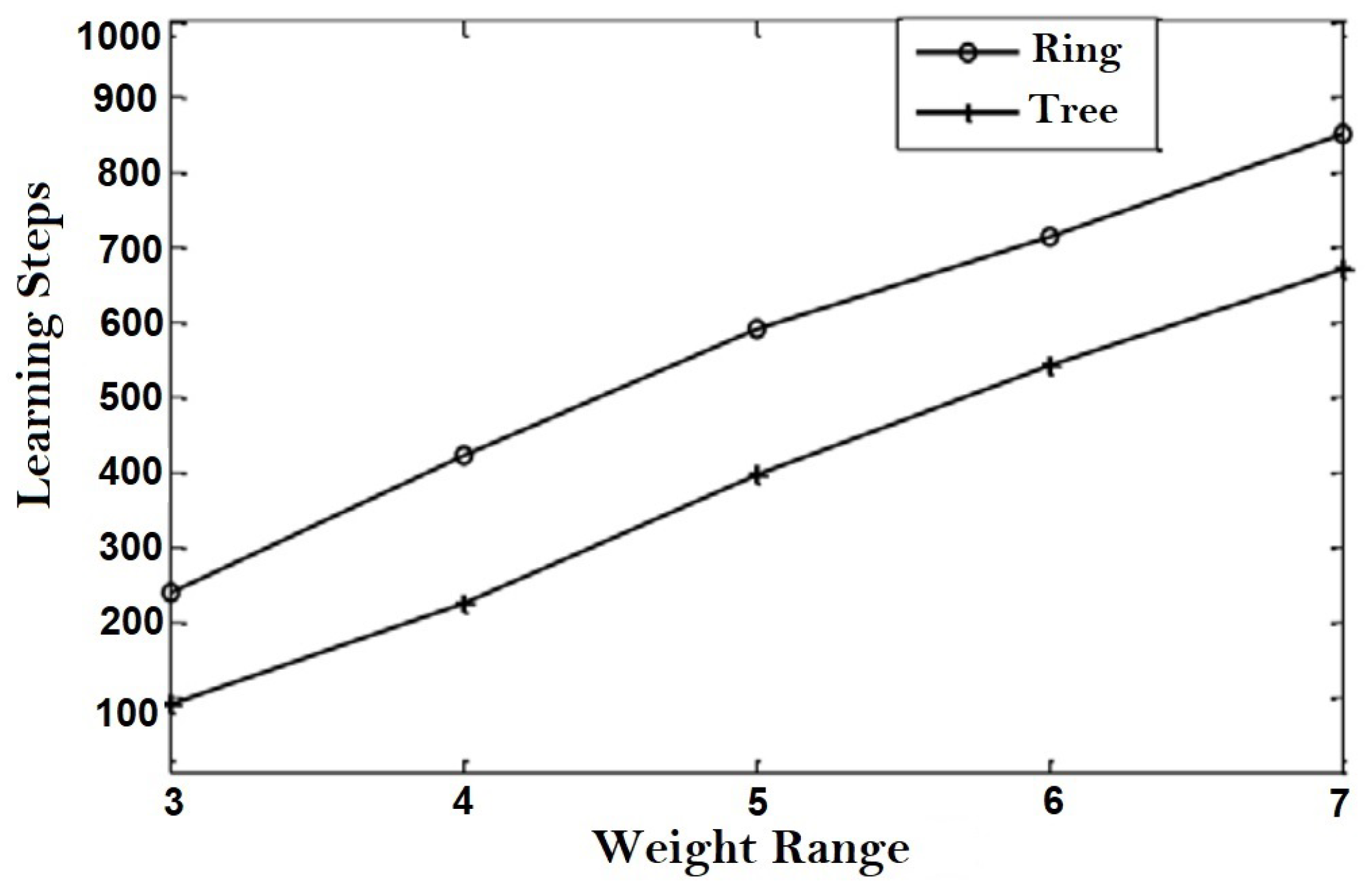

The TLTPM system was constructed for a given input vector, and TLTPM synchronization was first carried out with weight ranges from 3 to 7. Figure 15 shows the average number of learning steps required to reach weight synchronization for different weight ranges, for both the ring and tree topologies, with a fixed number of members.

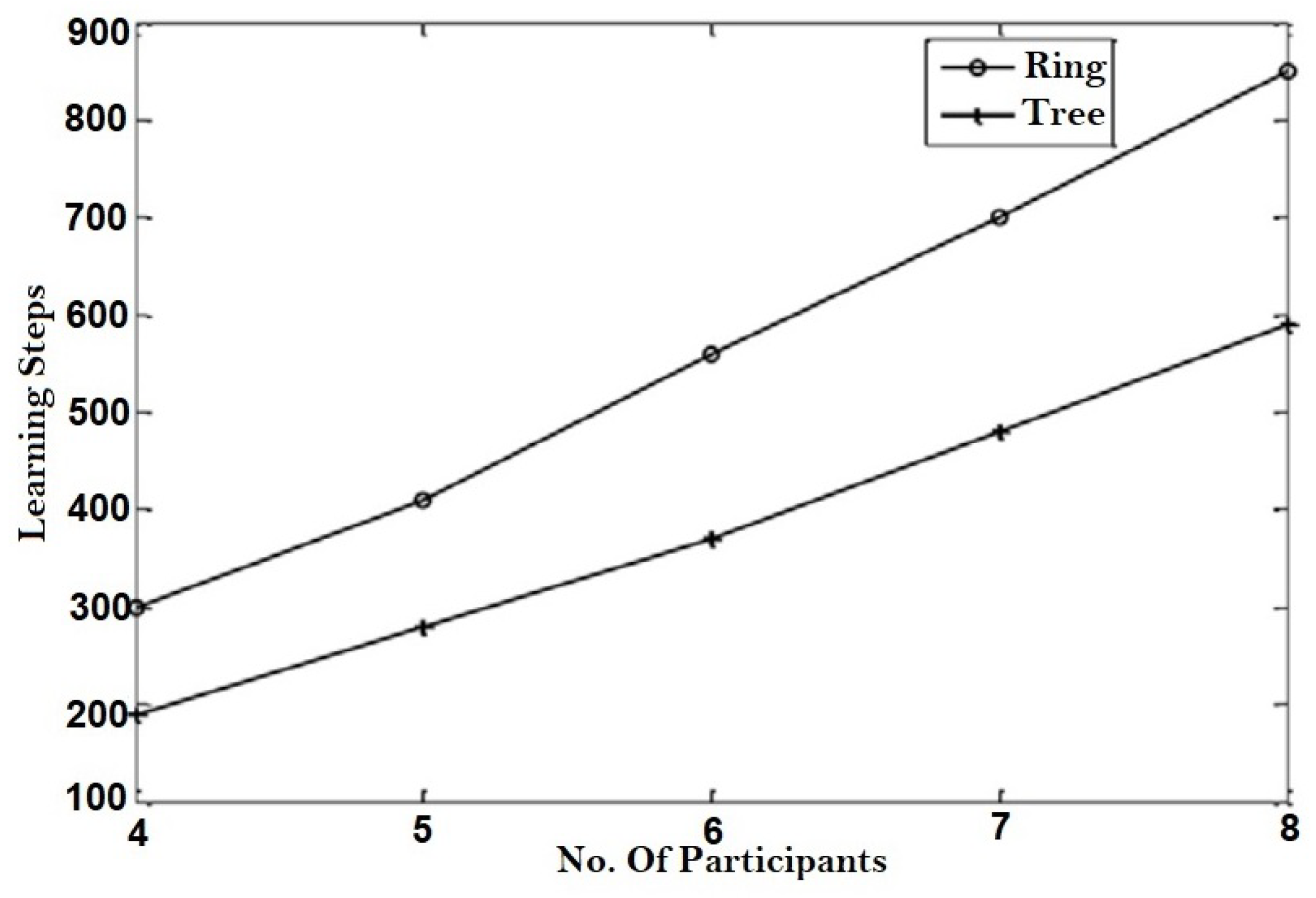

For a constant weight range of 7, Figure 16 plots the average number of learning steps against the number of users. As expected, the synchronization time increases with both the weight range and the number of members. The tree structure clearly requires fewer training steps to generate a key than the ring structure.

The following issues are commonly considered when designing group key protocols:

The efficiency of a group key exchange method may vary with the size of the group. A method’s scalability refers to its ability to be extended to large groups. In terms of security, scalability concerns the distribution and management of keys, as well as other security policies, as the group grows or shrinks. For fixed groups, the group neural key created at the start of the connection can be used throughout the session. In dynamic groups, however, where users join and leave regularly, the initial group key is not sufficient. Rekeying is required to provide both backward access control, which prevents a new member from reading earlier messages, and forward access control, which prevents a departing user from reading later communications. Whenever a new member joins or an existing member leaves, the group key must be regenerated; this technique is called individual rekeying. Group key protocols for dynamic communication groups should therefore allow users to join or leave immediately without compromising forward secrecy, backward secrecy, or key independence.

Because the “join” procedure must guarantee full backward secrecy, a joining principal must not be able to obtain any previous key, so every key must be changed. In the proposed ring-structure protocol, the “join” step regenerates a fresh neural key by restarting TLTPM synchronization. In the tree structure, if the new member joins at an existing node, it combines with its neighbor and establishes a new set of synchronized weights before moving on to the next level; if it enters as an additional user, it keeps its own weight vector until the final level and then trains its network with the adjacent cluster for weight synchronization.

Similarly, the “leave” procedure must guarantee perfect forward secrecy, so every key the departing member may know must be changed. In the ring-structure protocol, this is achieved by rerunning the procedure whenever a principal is added or removed. In the tree framework, when a member leaves, the member that was paired with it generates new weights and links with the adjacent group; this is repeated until the top level is reached. As a result, key freshness and key independence are preserved.

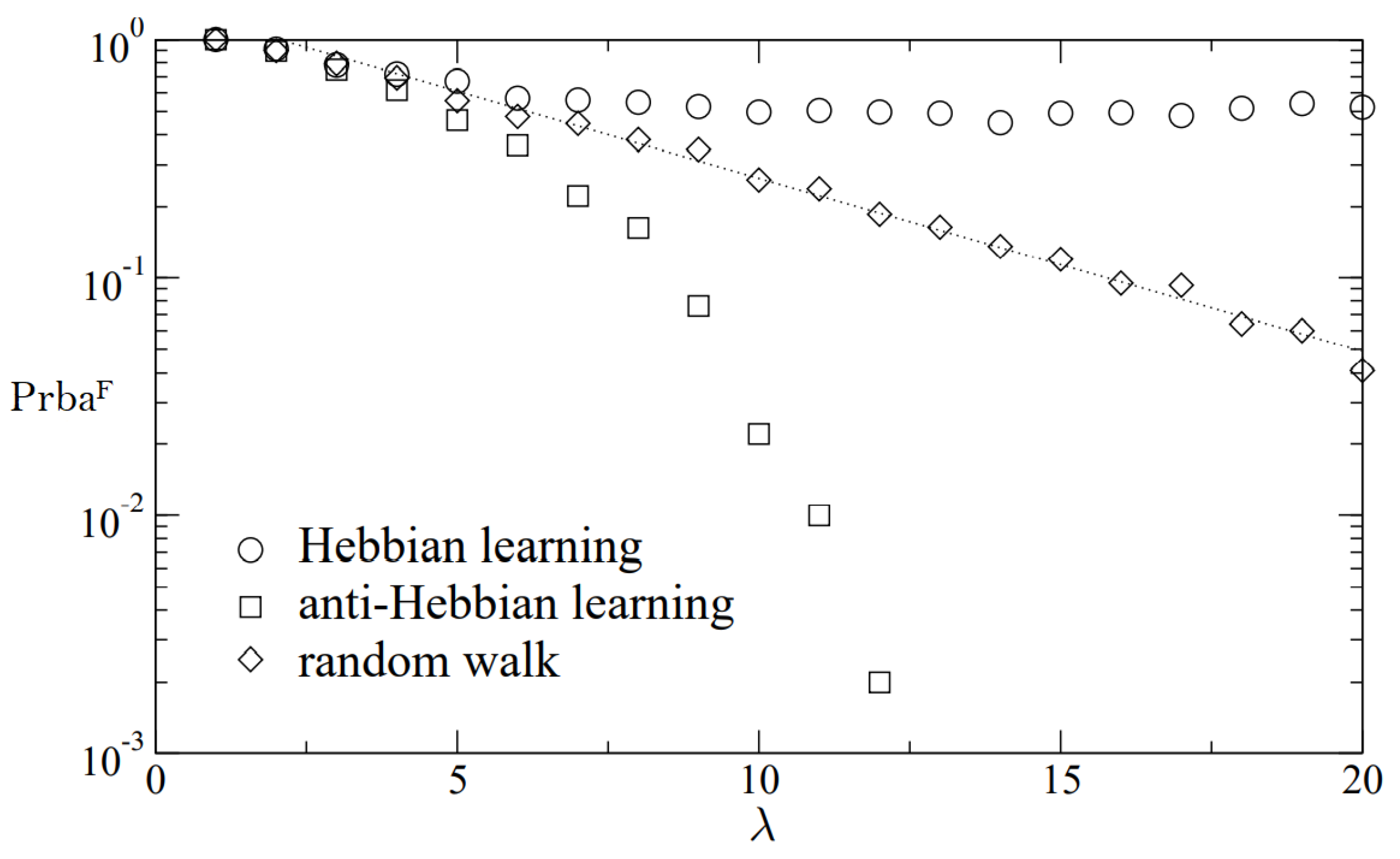

Figure 17 illustrates the success probability of a majority attack using 100 attacker networks, computed over 10,000 iterations.

Table 2 shows the results of the NIST test [46] on the synchronized neural key. The p-value evaluation is carried out for the TLTPM, CVTPM [10], Dolecki and Kozera [14], Liu et al. [34], VVTPM [12], and GAN-DLTPM [11] techniques. The TLTPM’s p-value compares favorably with those of the other techniques.

The frequency test on the synchronized neural key checks the proportion of ones and zeros. The TLTPM obtains a p-value of 0.701496, higher than 0.512374 in [10], 0.537924 in [14], 0.516357 in [34], 0.538632 in [12], 0.584326 in [11], 0.1329 in [35], 0.632558 in [36], and 0.629806 in [37].
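The frequency (monobit) test from NIST SP 800-22 can be sketched in a few lines: each 1 counts as +1 and each 0 as -1, the absolute sum is normalized by the square root of the sequence length, and the complementary error function turns it into a p-value. At the usual significance level of 0.01, a sequence passes when p ≥ 0.01. How the synchronized weights are turned into bits is not specified in this section, so `weights_to_bits` (a fixed-width offset-binary code per weight) is a hypothetical encoding.

```python
import math
from typing import List


def frequency_test(bits: str) -> float:
    """NIST SP 800-22 frequency (monobit) test; returns the p-value."""
    if not bits or set(bits) - {"0", "1"}:
        raise ValueError("bits must be a non-empty string of '0' and '1'")
    s_n = sum(1 if b == "1" else -1 for b in bits)  # +1 per one, -1 per zero
    s_obs = abs(s_n) / math.sqrt(len(bits))
    return math.erfc(s_obs / math.sqrt(2))


def weights_to_bits(weights: List[int], L: int) -> str:
    """Hypothetical encoding: shift each weight from [-L, L] to [0, 2L] and write it in binary."""
    width = (2 * L).bit_length()
    return "".join(format(w + L, f"0{width}b") for w in weights)


print(round(frequency_test("1011010101"), 6))  # 0.527089, the worked example in SP 800-22
```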

Table 3 compares the p-values of the frequency test.

Table 4 shows the results for different TLTPM parameter values, with two columns giving the minimum and maximum synchronization times. Attacker synchronization evaluates an adversary’s ability to imitate the behavior of the legitimate TLTPMs.

As shown in Table 4, the configurations (8-8-8-16-8) and (8-8-8-8-128) provide the best protection against the attacking network: in all 700,000 trials, the attacker E never synchronized with the TLTPMs of A and B. Table 5 lists the most cost-effective choices.

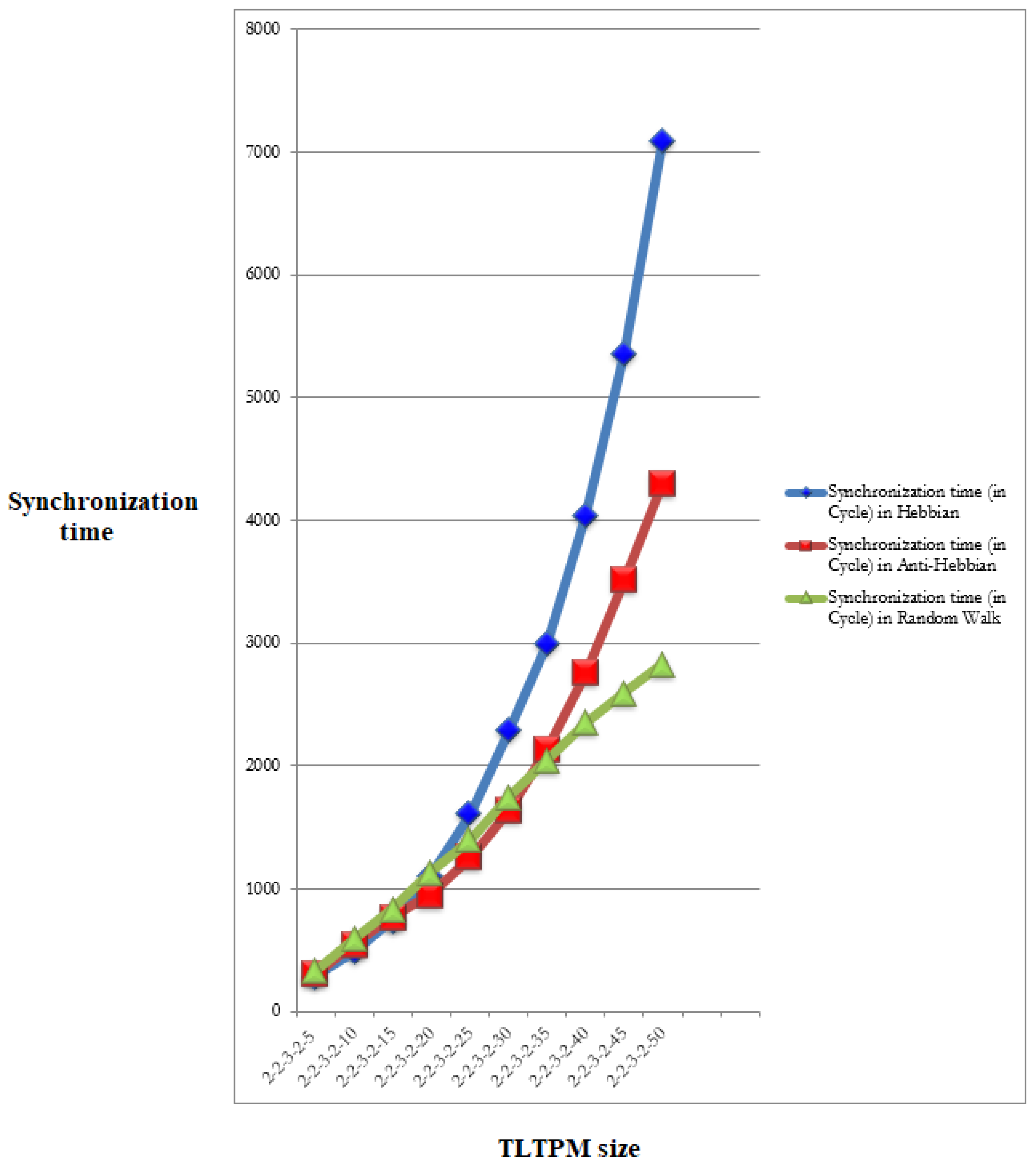

Table 6 compares the synchronization times of the TLTPM and TPM-FPGA methods for different learning rules and weight ranges at a fixed TLTPM size. For all three rules, the number of synchronization steps tends to increase with the weight range. For weight ranges from 5 to 15, the Hebbian rule requires fewer synchronization steps than the other two rules, but as the weight range grows further, it requires more steps than the others.

Table 7 compares the anti-Hebbian synchronization times of the TLTPM and CVTPM approaches. As the table shows, the TLTPM’s synchronization time is noticeably shorter than the CVTPM’s. Compared with the other rules, the anti-Hebbian rule requires less effort.

Figure 18 shows the synchronization time required to generate a 512-bit key for different synaptic ranges. For large networks, the Random Walk rule outperforms the other learning rules.

Table 8 compares the synchronization times of the TLTPM and VVTPM approaches using the Random Walk rule. With the Random Walk learning rule, the TLTPM synchronizes much faster than the VVTPM.
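For reference, the three learning rules compared in Tables 6 to 8 are commonly written for a standard tree parity machine as follows: Hebbian adds $x\tau$, anti-Hebbian subtracts it, and Random Walk adds $x$, each applied only to hidden units whose output $\sigma_k$ equals $\tau$, only when the parties’ outputs agree, and with clipping to $[-L, L]$. This sketch uses those textbook forms; the TLTPM’s own equations may differ, and the function name and rule labels are hypothetical.

```python
from typing import List

RULES = ("hebbian", "anti_hebbian", "random_walk")


def update_weights(w: List[List[int]], x: List[List[int]], sigma: List[int],
                   tau: int, rule: str, L: int) -> List[List[int]]:
    """One learning step, called only when the parties' outputs agree; mutates and returns w."""
    if rule not in RULES:
        raise ValueError(f"unknown rule: {rule}")
    for k in range(len(w)):
        if sigma[k] != tau:
            continue  # hidden unit disagrees with the output: no update
        for j in range(len(w[k])):
            if rule == "hebbian":
                delta = x[k][j] * tau
            elif rule == "anti_hebbian":
                delta = -x[k][j] * tau
            else:  # random_walk
                delta = x[k][j]
            w[k][j] = max(-L, min(L, w[k][j] + delta))  # keep weights in [-L, L]
    return w


print(update_weights([[0, 0], [1, -1]], [[1, -1], [1, 1]], [-1, 1], -1, "hebbian", L=3))
```

Only the sign convention of the update differs between the rules, which is why their synchronization times can be compared on otherwise identical machines.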