Multi-Agent Reinforcement Learning for Joint Cooperative Spectrum Sensing and Channel Access in Cognitive UAV Networks

Abstract

:1. Introduction

- •

- To coordinate the behaviors of various CUAVs for efficient utilization of idle spectrum resources of PUs, a CUAV channel exploration and utilization protocol framework based on sensing–fusion–transmission is proposed.

- •

- A problem maximizing the expected cumulative weighted rewards of CUAVs is formulated. Considering the practical constraints, i.e., the lack of prior knowledge about the dynamics of PU activities and the lack of a centralized access coordinator, the original one-shot optimization problem is reformulated into a Markov game (MG). A weighted composite reward function combining both the cost and utility for spectrum sensing and channel access is designed to transform the considered problem into a competition and cooperation hybrid multi-agent reinforcement learning (CCH-MARL) problem.

- •

- To tackle the CCH-MARL problem through a decentralized approach, UCB-Hoeffding (UCB-H) strategy searching and the independent learner (IL) based Q-learning scheme are introduced. More specifically, UCB-H is introduced to achieve a trade-off between exploration and exploitation during the process of Q-value updating. Two decentralized algorithms with limited information exchange among the CUAVs, namely, the IL-based Q-learning with UCB-H (IL-Q-UCB-H) and the double deep Q-learning with UCB-H (IL-DDQN-UCB-H), are proposed. The numerical simulation results indicate that the proposed algorithms are able to improve network performance in terms of both the sensing accuracy and channel utilization.

2. System Model

2.1. Network Model

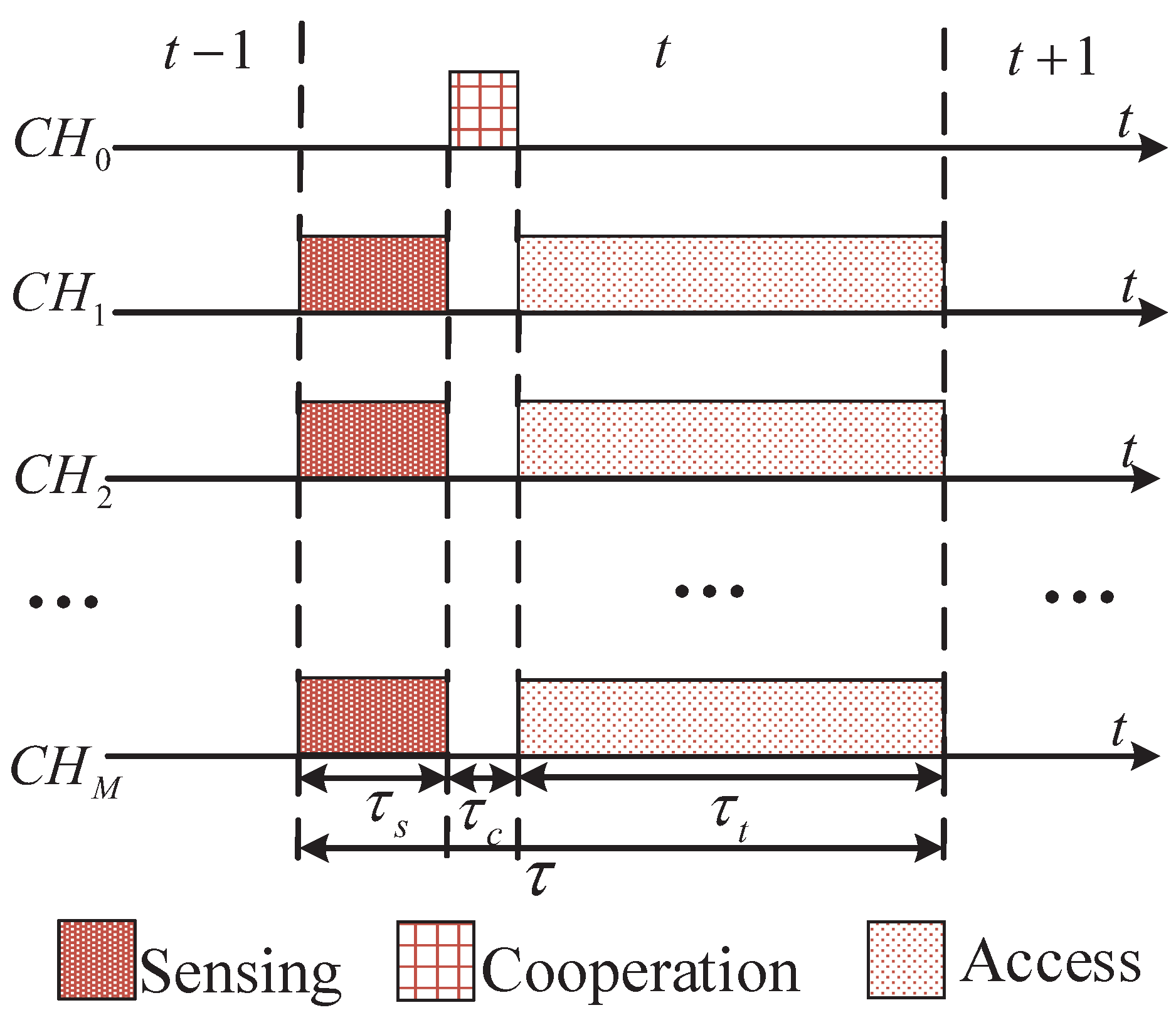

2.2. Framework of Channel Sensing and Access

2.3. Problem Formulation

3. Problem Modeling Based on MARL

3.1. Markov Game-Based Problem Formulation

- is the set of agents.

- is the state space observed consistently by all agents.

- is the action space of agent n, and the joint action space of all the agents is .

- is the transition probability from any state to any state for any given joint action .

- The reward function determines the instant reward received by agent n in the controlled Markov process from to .

- is the reward discount factor.

- Agent Set consists of the N CUAVs (agents), i.e., .

- State space of the MG is defined aswhere is the number of CUVAs that select PU channel m to sense and access in the previous time slot. In particular, is the number of CUVAs that do not select any PU channel. Since each CUAV can select at most one single PU channel for sensing-and-access, . is the observed occupancy state of PU channel m in the previous time slot. Following (2), the size of the state space is .

- Action space for CUAV n is defined as . Let denote the PU channel selected by agent n at time slot t, indicates that no channel is selected. The joint action space can be defined as the Cartesian product of all the CUAVs, and the joint action at time slot t is .

- State transition probability consists of the transition maps for all , and . Note that for the elements of transition , the transition probability is determined by the two-state Markov process shown in Figure 2.

- Reward function of CUAV n, is observed at time slot after the CUAVs taking a joint action . The details of the reward are presented in the next subsection.

3.2. Definition of CUAVs’ Reward Function

- (i)

- If PU channel m is busy, and the sensing fusion result is the same, i.e., , the reward of CUAV n is solely determined by the spectrum sensing cost .

- (ii)

- If PU channel m is busy but the sensing fusion result leads to a missed detection, i.e., , CUAV n’s reward is determined by the sum of spectrum sensing cost and the cost due to the failed data transmission, .

- (iii)

- If PU channel m is idle and the sensing fusion result is the same, i.e., , CUAV n’s reward is determined by the weighted sum of the sensing cost, , the cost for data transmission, , and the utility of successful transmission, .

- (iv)

- If PU channel m is idle but the fusion result leads to a false alarm, i.e., , the reward of CUAV n is determined by the weighted sum of spectrum sensing cost and the lost transmission utility .

- (v)

- If CUAV n does not select any PU channel, i.e., , the reward is 0.

- Spectrum sensing cost for CUAV n at time slot is defined as the energy consumed for spectrum sensing, namely, a function proportional to the working voltage of the receiver, the bandwidth of the sensed channel B, and the sensing duration [31]:

- Data transmission cost for CUAV n in time slot is defined as the energy consumed for data transmission during the time slot,where and are the data transmission duration and transmit power, respectively. , , and are assumed to be the same for all the CUAVs, i.e., .

- Transmission utility for CUAV n in time slot of (cf. Cases iii and iv) is measured as the amount of data transmitted over the time slot. We consider that the quality of transmission is evaluated based on the throughput over a given channel under the co-channel interference:where is the received signal-to-interference-to-noise ratio (SINR) for CUAV n over its selected PU channel m. can be expressed aswhere is noise power. is the channel gain of CUAV n on PU channel m and is the channel gain between CUAV j and CUAV n on PU channel m. As mentioned earlier, with platooning of the CUAV cluster, the channel gains among the CUAVs could be considered as quasi-static over the period of interest. is the co-channel interference from the other CUAVs sharing the same PU channel m. Since the spatial positions and the transmitting–receiving relationship of the CUAVs over the same channel are not necessarily the same, the channel gains between different CUAVs are different, and thus the SINR of the received signals of each CUAV are different.

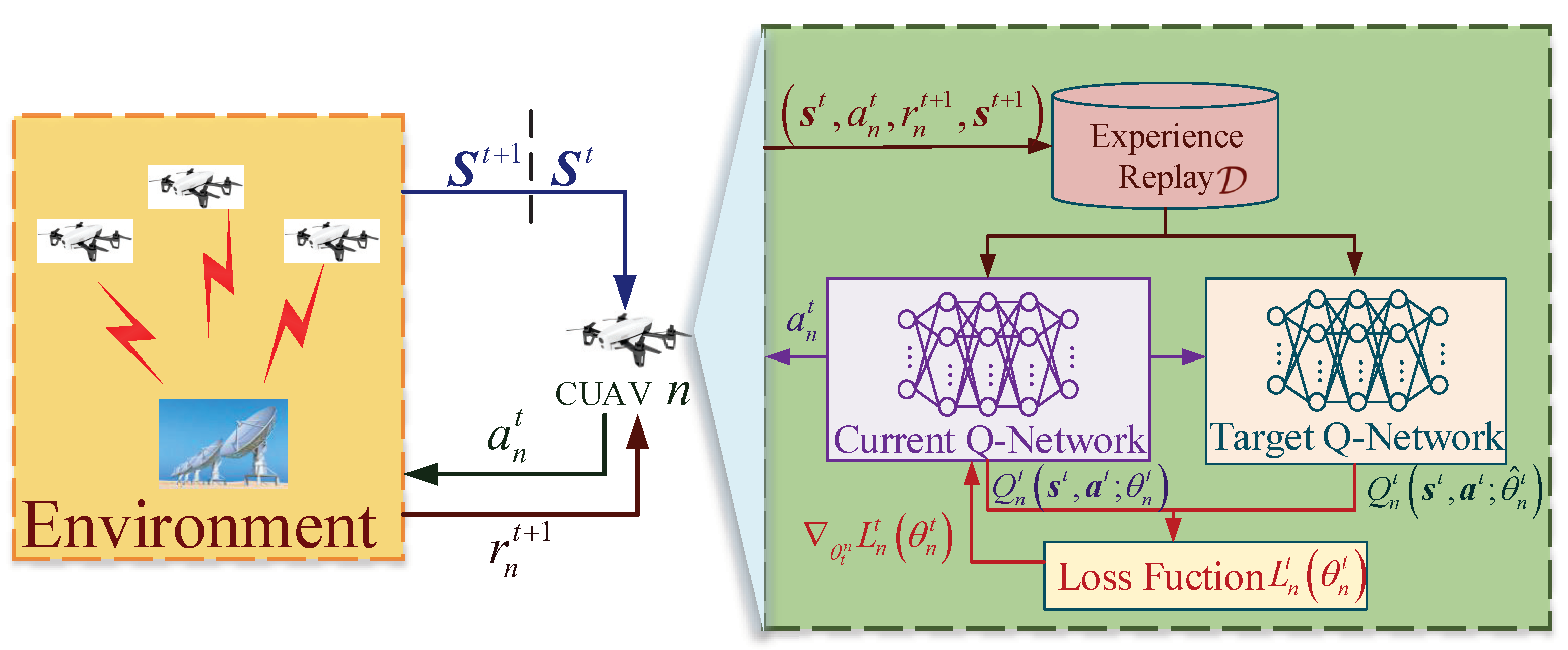

3.3. MARL Algorithm Framework

4. Algorithm Design Based on Independent Learner

4.1. UCB-H Strategy

4.2. IL-Q-UCB-H Algorithm

| Algorithm 1: IL-Q-UCB-H algorithm. |

|

4.3. IL-DDQN-UCB-H Algorithm

| Algorithm 2: IL-DDQN-UCB-H Algorithm. |

|

4.4. Algorithm Complexity Analysis

- IL-Q-UCB-H algorithm: Since each CUAV executes the IL-Q-UCB-H algorithm independently, its information interaction overhead is mainly caused by broadcasting its own sensing decision information. The amount of information interaction increases linearly with the increase of CUAVs. For algorithm execution, each CUAV needs to store a Q-table of size according to the number of states and actions. It increases exponentially with the numbers of CUAVs and PU channels. The computational cost for each CUAV is dominated by the linear update of the Q-table and the search for the optimal action, which are both of constant time complexity.

- IL-DDQN-UCB-H algorithm: The cost of information exchange is the same as the IL-Q-UCB-H algorithm. For algorithm execution, since a deep neural network is used to fit the Q-values, the storage cost mainly depends on the structure of the deep neural network. Since the IL-DDQN-UCB-H algorithm involves updating two Q-networks, the computational complexity is dependent of the neural network structure (i.e., the network parameters) at the training stage.

5. Simulation and Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ye, L.; Zhang, Y.; Li, Y.; Han, S. A Dynamic Cluster Head Selecting Algorithm for UAV Ad Hoc Networks. In Proceedings of the 2020 International Wireless Communications and Mobile Computing (IWCMC), Limassol, Cyprus, 15–19 June 2020; pp. 225–228. [Google Scholar]

- Wu, F.; Zhang, H.; Wu, J.; Song, L. Cellular UAV-to-device communications: Trajectory design and mode selection by multi-agent deep reinforcement learning. IEEE Trans. Commun. 2020, 68, 4175–4189. [Google Scholar] [CrossRef] [Green Version]

- Ma, Z.; Ai, B.; He, R.; Wang, G.; Niu, Y.; Yang, M.; Wang, J.; Li, Y.; Zhong, Z. Impact of UAV rotation on MIMO channel characterization for air-to-ground communication systems. IEEE Trans. Veh. Technol. 2020, 69, 12418–12431. [Google Scholar] [CrossRef]

- Jingnan, L.; Pengfei, L.; Kai, L. Research on UAV communication network topology based on small world network model. In Proceedings of the 2017 IEEE International Conference on Unmanned Systems (ICUS), Beijing, China, 27–29 October 2017; pp. 444–447. [Google Scholar]

- Liu, X.; Sun, C.; Zhou, M.; Wu, C.; Peng, B.; Li, P. Reinforcement learning-based multislot double-threshold spectrum sensing with Bayesian fusion for industrial big spectrum data. IEEE Trans. Ind. Inform. 2020, 17, 3391–3400. [Google Scholar] [CrossRef]

- Ning, W.; Huang, X.; Yang, K.; Wu, F.; Leng, S. Reinforcement learning enabled cooperative spectrum sensing in cognitive radio networks. J. Commun. Netw. 2020, 22, 12–22. [Google Scholar] [CrossRef]

- Xu, W.; Wang, S.; Yan, S.; He, J. An efficient wideband spectrum sensing algorithm for unmanned aerial vehicle communication networks. IEEE Internet Things J. 2018, 6, 1768–1780. [Google Scholar] [CrossRef] [Green Version]

- Shen, F.; Ding, G.; Wang, Z.; Wu, Q. UAV-based 3D spectrum sensing in spectrum-heterogeneous networks. IEEE Trans. Veh. Technol. 2019, 68, 5711–5722. [Google Scholar] [CrossRef]

- Nie, R.; Xu, W.; Zhang, Z.; Zhang, P.; Pan, M.; Lin, J. Max-min distance clustering based distributed cooperative spectrum sensing in cognitive UAV networks. In Proceedings of the ICC 2019-2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–6. [Google Scholar]

- Feng, P.; Bai, Y.; Huang, J.; Wang, W.; Gu, Y.; Liu, S. CogMOR-MAC: A cognitive multi-channel opportunistic reservation MAC for multi-UAVs ad hoc networks. Comput. Commun. 2019, 136, 30–42. [Google Scholar] [CrossRef]

- Liang, X.; Xu, W.; Gao, H.; Pan, M.; Lin, J.; Deng, Q.; Zhang, P. Throughput optimization for cognitive UAV networks: A three-dimensional-location-aware approach. IEEE Wirel. Commun. Lett. 2020, 9, 948–952. [Google Scholar] [CrossRef]

- Zhu, Q.; Wang, Y.; Jiang, K.; Chen, X.; Zhong, W.; Ahmed, N. 3D non-stationary geometry-based multi-input multi-output channel model for UAV-ground communication systems. IET Microw. Antennas Propag. 2019, 13, 1104–1112. [Google Scholar] [CrossRef]

- Khawaja, W.; Ozdemir, O.; Erden, F.; Guvenc, I.; Matolak, D.W. Ultra-Wideband Air-to-Ground Propagation Channel Characterization in an Open Area. IEEE Trans. Aerosp. Electron. Syst. 2020, 56, 4533–4555. [Google Scholar] [CrossRef]

- Lunden, J.; Kulkarni, S.R.; Koivunen, V.; Poor, H.V. Multiagent reinforcement learning based spectrum sensing policies for cognitive radio networks. IEEE J. Sel. Top. Signal Process. 2013, 7, 858–868. [Google Scholar] [CrossRef]

- Chen, H.; Zhou, M.; Xie, L.; Wang, K.; Li, J. Joint spectrum sensing and resource allocation scheme in cognitive radio networks with spectrum sensing data falsification attack. IEEE Trans. Veh. Technol. 2016, 65, 9181–9191. [Google Scholar] [CrossRef]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Y.; Cai, P.; Pan, C.; Zhang, S. Multi-agent deep reinforcement learning-based cooperative spectrum sensing with upper confidence bound exploration. IEEE Access 2019, 7, 118898–118906. [Google Scholar] [CrossRef]

- Li, Y.; Zhang, W.; Wang, C.X.; Sun, J.; Liu, Y. Deep reinforcement learning for dynamic spectrum sensing and aggregation in multi-channel wireless networks. IEEE Trans. Cogn. Commun. Netw. 2020, 6, 464–475. [Google Scholar] [CrossRef]

- Cai, P.; Zhang, Y.; Pan, C. Coordination Graph-Based Deep Reinforcement Learning for Cooperative Spectrum Sensing under Correlated Fading. IEEE Wirel. Commun. Lett. 2020, 9, 1778–1781. [Google Scholar] [CrossRef]

- Lo, B.F.; Akyildiz, I.F. Reinforcement learning for cooperative sensing gain in cognitive radio ad hoc networks. Wirel. Netw. 2013, 19, 1237–1250. [Google Scholar] [CrossRef]

- Zhang, M.; Wang, L.; Feng, Y. Distributed cooperative spectrum sensing based on reinforcement learning in cognitive radio networks. AEU-Int. J. Electron. Commun. 2018, 94, 359–366. [Google Scholar] [CrossRef]

- Kaur, A.; Kumar, K. Energy-efficient resource allocation in cognitive radio networks under cooperative multi-agent model-free reinforcement learning schemes. IEEE Trans. Netw. Serv. Manag. 2020, 17, 1337–1348. [Google Scholar] [CrossRef]

- Nobar, S.K.; Ahmed, M.H.; Morgan, Y.; Mahmoud, S. Resource Allocation in Cognitive Radio-Enabled UAV Communication. IEEE Trans. Cogn. Commun. Netw. 2021; in press. [Google Scholar]

- Cui, J.; Liu, Y.; Nallanathan, A. Multi-agent reinforcement learning-based resource allocation for UAV networks. IEEE Trans. Wirel. Commun. 2019, 19, 729–743. [Google Scholar] [CrossRef] [Green Version]

- Chandrasekharan, S.; Gomez, K.; Al-Hourani, A.; Kandeepan, S.; Rasheed, T.; Goratti, L.; Reynaud, L.; Grace, D.; Bucaille, I.; Wirth, T.; et al. Designing and implementing future aerial communication networks. IEEE Commun. Mag. 2016, 54, 26–34. [Google Scholar] [CrossRef] [Green Version]

- Chen, Z.; Qiu, R.C. Cooperative spectrum sensing using q-learning with experimental validation. In Proceedings of the 2011 Proceedings of IEEE Southeastcon, Nashville, TN, USA, 17–20 March 2011; pp. 405–408. [Google Scholar]

- Han, W.; Li, J.; Tian, Z.; Zhang, Y. Efficient cooperative spectrum sensing with minimum overhead in cognitive radio. IEEE Trans. Wirel. Commun. 2010, 9, 3006–3011. [Google Scholar] [CrossRef]

- Abdi, N.; Yazdian, E.; Hoseini, A.M.D. Optimum number of secondary users in cooperative spectrum sensing methods based on random matrix theory. In Proceedings of the 2015 5th International Conference on Computer and Knowledge Engineering (ICCKE), Mashhad, Iran, 29 October 2015; pp. 290–294. [Google Scholar]

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018. [Google Scholar]

- Zhang, K.; Yang, Z.; Başar, T. Multi-agent reinforcement learning: A selective overview of theories and algorithms. In Handbook of Reinforcement Learning and Control; Springer: Berlin/Heidelberg, Germany, 2021; pp. 321–384. [Google Scholar]

- Zhang, X.; Shin, K.G. E-MiLi: Energy-minimizing idle listening in wireless networks. IEEE Trans. Mob. Comput. 2012, 11, 1441–1454. [Google Scholar] [CrossRef]

- Watkins, C.J.; Dayan, P. Q-learning. Mach. Learn. 1992, 8, 279–292. [Google Scholar] [CrossRef]

- Filar, J.; Vrieze, K. Competitive Markov Decision Processes; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012. [Google Scholar]

- Jin, C.; Allen-Zhu, Z.; Bubeck, S.; Jordan, M.I. Is Q-learning provably efficient? arXiv 2018, arXiv:1807.03765. [Google Scholar]

- Van Hasselt, H.; Guez, A.; Silver, D. Deep reinforcement learning with double q-learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar]

| Parameters | Value |

|---|---|

| PU channels M | 5 |

| CUAV number N | 4, 5, 10 |

| Channel bandwidth | 50∼100 MHz |

| False alarm probability | 0.1 [17] |

| Detection probability | 0.9 |

| Transmission power | 23 dBm [24] |

| Sensing time | 0.1 ms |

| Transmission time | 0.5 ms |

| Weights of sensing/access cost | 0.01, 0.05 |

| Hyper-Parameters | Value |

|---|---|

| Greedy rate | 0.1 |

| Discount factor | 0.9 |

| Parameters of the learning rate | 0.5, 0.8 [24] |

| Parameters of UCB-H | 0.01, 2 [17] |

| Parameters of CNN | (2, 2, 10) |

| Activation function | ReLu [19] |

| Optimizer | Adam [36] |

| Batch size B | 64 |

| Target Q-Network update period F | 100 |

| Experience replay size C | 20,000 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jiang, W.; Yu, W.; Wang, W.; Huang, T. Multi-Agent Reinforcement Learning for Joint Cooperative Spectrum Sensing and Channel Access in Cognitive UAV Networks. Sensors 2022, 22, 1651. https://doi.org/10.3390/s22041651

Jiang W, Yu W, Wang W, Huang T. Multi-Agent Reinforcement Learning for Joint Cooperative Spectrum Sensing and Channel Access in Cognitive UAV Networks. Sensors. 2022; 22(4):1651. https://doi.org/10.3390/s22041651

Chicago/Turabian StyleJiang, Weiheng, Wanxin Yu, Wenbo Wang, and Tiancong Huang. 2022. "Multi-Agent Reinforcement Learning for Joint Cooperative Spectrum Sensing and Channel Access in Cognitive UAV Networks" Sensors 22, no. 4: 1651. https://doi.org/10.3390/s22041651

APA StyleJiang, W., Yu, W., Wang, W., & Huang, T. (2022). Multi-Agent Reinforcement Learning for Joint Cooperative Spectrum Sensing and Channel Access in Cognitive UAV Networks. Sensors, 22(4), 1651. https://doi.org/10.3390/s22041651